Abstract

With the rapid development and wide adoption of mobile communication technology, a great variety of mobile communication devices have emerged. Targeting application software for electrical energy metering that runs on such devices, this paper studies an intelligent human–computer interaction method based on deep learning and embedded Linux. First, the architecture and working principle of application software for electrical energy metering are briefly analyzed. Second, the basic principles of neural networks based on deep learning algorithms are analyzed, and the process by which the electric energy metering application software achieves intelligent human–computer interaction through neural network deep learning is studied in detail. Finally, a simulation example is given. The theoretical and simulation results show that the method offers simple, real-time, accurate, and intelligent human–computer interaction.


Keywords

- Electrical energy

- Metering

- Application software

- Human–computer interaction

- Deep learning algorithm

- Embedded Linux

1 Introduction

With the rapid development of power systems and of science and technology, electric energy metering management is constantly being renewed, and remote intelligent management of electric energy metering through mobile communication equipment has emerged.

Literature [1] points out the current problems of traditionally managed energy metering. First, because most traditional metering data are collected on site by employees of power supply enterprises, the data can be inaccurate, and some users inevitably exploit this loophole to steal electricity, causing losses for the power supply enterprises. Second, equipment for energy measurement and verification has fallen significantly behind the state of the art, which introduces errors into the measurement data. Managing energy metering through mobile communication equipment has the advantage that metering data can be viewed on the device, electrical appliances can be controlled through human–computer interaction on the device, and electricity consumption can be checked more quickly and conveniently. Literature [2] discusses the advantages and disadvantages of current human–computer interaction on mobile communication devices. As instant-messaging devices, they are portable and offer wireless communication, and they support a variety of interaction methods, such as fingerprint, face, voice, touch screen, and buttons. Literature [3] points out the advantages and disadvantages of common electric energy metering applications (APPs) currently on the market. Although most energy metering APPs, such as the “Online State Grid” APP [4], already let users apply online for most of the metering services provided by power supply enterprises, problems remain, such as opaque business data, slow response, an insufficient level of intelligence, and an inability to realize human–computer interaction, all of which seriously affect the customer experience.

This paper proposes a deep learning method for human–computer interaction in an energy metering APP based on embedded Linux. It first introduces the system architecture of embedded Linux [5] and the composition and functions of an intelligent energy metering APP, and then analyzes the basic principles of deep learning algorithms based on neural networks. The process by which human–computer interaction is achieved through neural network deep learning is studied in detail. On this basis, the paper further studies how neural-network-based deep learning is implemented in the energy metering application software on mobile communication equipment, together with the principle of intelligent human–computer interaction. A simulation of intelligent human–computer interaction by fingerprint identification shows that the proposed deep-learning-based method is feasible.

2 Composition and Function of Energy Metering APP

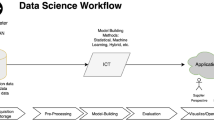

A complete energy metering APP consists of two parts, the server and the client, as shown in Fig. 12.1. The server reads the energy data of each power user from the energy meter and stores it in a database; it then retrieves the data, applies the necessary business logic, and provides the results to the client. Databases and human–computer interfaces are therefore an essential part of it.

The client lets users access the server, view their electricity consumption information, and perform operations such as payment and starting or stopping electrical equipment. A good display interface and human–computer interaction interface are therefore essential. Taking residential users as an example, the APP on the mobile communication device has functional modules such as electricity analysis, electricity billing, payment, and data download. The electricity bill module presents the residential user's monthly electricity charges and consumption, including tiered (ladder) electricity usage, meter code, historical consumption, and payment records. The electricity analysis module provides multi-dimensional analysis in the form of histograms and line charts, which clearly show the user's tiered electricity usage and the consumption trend over the past two years. In addition, there are recharge records, manual services, cloud systems, self-service payment, rich data categories, high-precision smart metering, and anti-theft functions. The highlight of this APP is that, after fingerprint recognition, power information can be analyzed visually, so residents can see their electricity use clearly and transparently; in particular, when the user's remaining electricity balance is insufficient, the APP issues an alarm to remind the user to pay in time. For example, when a user says “Check my electricity balance”, the APP responds quickly and displays the real-time balance once fingerprint verification is complete. In the future, the smart assistant will continue to focus on user needs and will forecast electricity charges for the coming months from users' recent electricity expenses and weather changes, thereby reminding users to pre-deposit electricity bills.

Human–computer interaction is therefore an important part of a smart energy metering APP. The following section examines its main human–computer interaction methods.

3 Main Methods of Man–Machine Interaction of Energy Metering APP

Several main human–computer interaction modes of mobile communication devices are introduced, and their advantages and disadvantages are analyzed [6].

3.1 Classical Human–Computer Interaction Method

Classical human–computer interaction methods mainly include the keypad, mechanical keyboard, software keyboard, and touch screen. Keys still play an irreplaceable role today.

3.2 Intelligent Human–Computer Interaction Method

Keys and Software Keyboard

Key-based interaction is mainly achieved by pressing the keys on a mobile communication device with the fingers. The software keyboard is a virtual keyboard implemented in software on the touch screen. Although physical keys are a more traditional method and are gradually being replaced by software keyboards, they still play an irreplaceable role: even though face recognition and fingerprint recognition technologies are now very mature, mobile communication devices still retain physical switches and volume control keys.

Touch

Touch interaction mainly refers to contact between the fingers and the mobile communication device. Fingerprint recognition is the most widely used form, followed by handwritten character recognition. By recognizing the fingerprint a user presents, the device or an application can be unlocked, which achieves human–computer interaction with good confidentiality. Touch interaction also has unique advantages, such as short reaction time, small space requirements, and convenience, so it has become mainstream and is widely accepted by the public.

Visual

Visual interaction mainly refers to interaction between the eyes and the camera of the mobile communication device. Face recognition is the most widely used form. After the facial features have been enrolled, the camera tracks subtle changes around the eyes and then unlocks the device or issues other instructions, which achieves human–computer interaction with good confidentiality.

Voice

Speech recognition interaction mainly refers to interacting with a mobile phone by giving spoken instructions [6]. Its advantage is simple, direct, and highly efficient communication with the machine. It also offers good confidentiality, so public acceptance is high.

Expression and Awareness

Interaction based on expression and awareness (consciousness) is still at the conceptual stage and has not yet been put into practice.

The composition of the human–computer interaction system of the smart energy metering APP is shown in Fig. 12.2. The principle of human–computer interaction through keys and software keyboards is relatively simple, whereas the recognition principles for fingerprints, handwriting, faces, voices, and other methods are relatively complex and require in-depth research. Taking fingerprint recognition as an example, this paper focuses on a deep-learning-based human–computer interaction method for the APP that is suitable for fingerprint, handwriting, face, speech, and other forms of recognition.

4 Intelligent Human–Computer Interaction Principle of APP Based on Deep Learning

4.1 Neural Network Structure Types of Deep Learning

Deep learning is a special form of neural network. A classical neural network consists of three layers: an input layer, an intermediate layer, and an output layer [7]. Complex problems may require multiple intermediate layers, and a neural network with multiple intermediate layers is called a deep learning network.

In a deep learning network, features do not need to be extracted manually; the network automatically extracts features and feature combinations from different pictures and finds the effective ones among them.

The neural network based on deep learning studied in this paper is composed of an input layer, multiple intermediate layers, and an output layer. Stacking intermediate layers allows the model to realize complicated functions that would otherwise be difficult to implement. In this paper, a neural network with four intermediate layers is adopted:

- Layer 1: Extract the most basic underlying features of the object

- Layer 2: Arrange and combine the features of layer 1, and find useful combination information

- Layer 3: Arrange and combine the features of layer 2, and find useful combination information

- Layer 4: Arrange and combine the features of layer 3, and find useful combination information.
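The layered structure described above can be sketched as a forward pass through four intermediate layers followed by an output layer. This is only a minimal illustration: the layer sizes, the random weights, the ReLU activation, and the names `init_layer` and `forward` are assumptions made for the example and are not taken from the paper.

```python
import random

def relu(values):
    """Element-wise ReLU activation (an assumed choice of activation)."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """Fully connected layer: one output per row of the weight matrix."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def init_layer(n_in, n_out, rng):
    """Create small random weights and zero biases for one layer."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(inputs, layers):
    """Feed the input through every intermediate layer, then a linear output layer."""
    activations = inputs
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    out_weights, out_biases = layers[-1]
    return dense(activations, out_weights, out_biases)

rng = random.Random(0)
# Input of 8 values, four intermediate layers, 2 outputs (all sizes are illustrative).
sizes = [8, 16, 12, 8, 6, 2]
layers = [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
scores = forward([0.1 * i for i in range(8)], layers)
```

Each intermediate layer consumes the output of the previous one, which is how later layers can combine the features found by earlier layers.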

4.2 Deep Learning Principle Based on Convolutional Neural Network

The neural network used in this paper is a deep learning network based on the convolutional neural network, which is a feed-forward neural network [7]. It is called feed-forward because the input signal is propagated forward through the network. Structurally, a convolutional neural network is a mapping network that can learn the relationship between output and input without complex and precise mathematical formulas. The structure of the convolutional neural network is shown in Fig. 12.3.

As Fig. 12.3 shows, the convolutional neural network is a multi-layer network; each layer has multiple two-dimensional planes, and each plane contains many neurons. In this paper, a shallow convolutional neural network with four 2D convolution layers is established.

The principle of deep learning based on the convolutional neural network [8] is as follows:

Convolution

The core of a convolutional neural network is the convolutional layer. The basic idea of the convolution operation is to take the inner product of the filter matrix with the data in each sliding window [8]. Through the convolution operation, the convolutional layer obtains a feature map of the input data, highlights some of its features, and filters out some noise.

Each pixel of the image is numbered, so the image is represented as a series of numbers, with \(x_{i,j}\) denoting the data in the i-th row and j-th column. Each weight in the filter is numbered in the same way: \(w_{m,n}\) denotes the weight in the m-th row and n-th column, and the bias term of the filter is denoted \(w_{b}\). Each element of the feature map is also numbered, with \(a_{i,j}\) denoting the data in the i-th row and j-th column of the feature map.
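With these symbols, a single-channel convolution with stride 1 is commonly written as \(a_{i,j} = f\left( \sum_{m} \sum_{n} w_{m,n} x_{i+m,j+n} + w_{b} \right)\), where \(f\) is an activation function. The sketch below implements that standard form; the ReLU activation, the function name `conv2d_single`, and the sample image and filter are assumptions for illustration only.

```python
def conv2d_single(x, w, w_b, activation=lambda v: max(0.0, v)):
    """Single-channel 2D convolution with stride 1 and no padding.

    x   -- input image as a list of rows (x[i][j])
    w   -- filter weights as a list of rows (w[m][n])
    w_b -- bias term of the filter
    The default activation is ReLU, which is an assumption of this sketch.
    """
    f_h, f_w = len(w), len(w[0])
    out_h = len(x) - f_h + 1
    out_w = len(x[0]) - f_w + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Inner product of the filter with the window whose top-left corner is (i, j).
            total = w_b
            for m in range(f_h):
                for n in range(f_w):
                    total += w[m][n] * x[i + m][j + n]
            row.append(activation(total))
        feature_map.append(row)
    return feature_map

x = [
    [1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 0, 0],
]
w = [
    [1, 0, 1],
    [0, 1, 0],
    [1, 0, 1],
]
feature_map = conv2d_single(x, w, w_b=0.0)
# feature_map == [[4.0, 3.0, 4.0], [2.0, 4.0, 3.0], [2.0, 3.0, 4.0]]
```

A 5 × 5 image and a 3 × 3 filter give a 3 × 3 feature map, since the window can take three positions along each axis.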

In (12.1) the convolution stride is 1 and the input has a single channel. Because the stride affects the size of the resulting feature map, that size is computed from the quantities defined below.

- \(W_{1}\): the width of the graph before convolution;

- \(W_{2}\): the width of the graph after convolution;

- \(F\): the width of the filter;

- \(P\): the number of rings of zeros padded around the original image;

- \(S\): the stride (step size);

- \(H_{1}\): the height of the graph before convolution;

- \(H_{2}\): the height of the graph after convolution.
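The symbols listed above match the usual relation between input size, filter size, padding, and stride, namely \(W_{2} = (W_{1} - F + 2P)/S + 1\) and \(H_{2} = (H_{1} - F + 2P)/S + 1\). The following sketch assumes that standard relation and uses integer division when the stride does not divide evenly; the function name `conv_output_size` is hypothetical.

```python
def conv_output_size(size_in, filter_size, padding, stride):
    """Feature-map width or height after convolution: (size_in - F + 2P) / S + 1."""
    if stride <= 0:
        raise ValueError("stride must be positive")
    span = size_in - filter_size + 2 * padding
    if span < 0:
        raise ValueError("filter is larger than the padded input")
    # Integer division: positions where the window would run off the edge are dropped.
    return span // stride + 1

w2 = conv_output_size(size_in=5, filter_size=3, padding=0, stride=1)  # 3
h2 = conv_output_size(size_in=7, filter_size=3, padding=1, stride=2)  # 4
```

With a stride of 1 and no padding, a 5-pixel-wide input and a 3-pixel-wide filter give a width of 3; doubling the stride roughly halves the output size.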

In practice, however, most networks use multi-channel convolution.

- \(D\): depth;

- \(F\): height or width of the filter;

- \(w\): weight;

- \(a\): pixels.
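For a multi-channel input, the single-channel sum is usually extended over the depth \(D\): \(a_{i,j} = f\left( \sum_{d=0}^{D-1} \sum_{m=0}^{F-1} \sum_{n=0}^{F-1} w_{d,m,n} x_{d,i+m,j+n} + w_{b} \right)\). The sketch below assumes this standard form with stride 1, no padding, a square \(F \times F\) filter, and a ReLU activation; `conv2d_multi` and the sample data are hypothetical.

```python
def conv2d_multi(x, w, w_b, activation=lambda v: max(0.0, v)):
    """Multi-channel 2D convolution with stride 1 and no padding.

    x   -- input indexed as x[d][i][j] (depth, row, column)
    w   -- one square F x F filter slice per channel, indexed as w[d][m][n]
    w_b -- single bias term shared by all channels of this filter
    """
    depth = len(x)
    f = len(w[0])  # filter height and width (square filter assumed)
    out_h = len(x[0]) - f + 1
    out_w = len(x[0][0]) - f + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = w_b
            # Sum the windowed inner products over every channel.
            for d in range(depth):
                for m in range(f):
                    for n in range(f):
                        total += w[d][m][n] * x[d][i + m][j + n]
            row.append(activation(total))
        feature_map.append(row)
    return feature_map

x = [
    [[1, 2, 3], [4, 5, 6], [7, 8, 9]],   # channel 0
    [[1, 1, 1], [1, 1, 1], [1, 1, 1]],   # channel 1
]
w = [
    [[1, 0], [0, 1]],                    # filter slice for channel 0
    [[1, 1], [1, 1]],                    # filter slice for channel 1
]
feature_map = conv2d_multi(x, w, w_b=-1.0)
# feature_map == [[9.0, 11.0], [15.0, 17.0]]
```

However many channels the input has, one filter produces a single two-dimensional feature map, because the contributions of all channels are summed.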

Pooling

The convolution operation of the convolutional layer produces the basic feature map of the input image. However, convolution also generates some interfering parameters, so the dimension of the resulting feature map is high. The pooling layer further reduces the dimension of the feature map obtained from convolution while retaining as many features as possible.
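The paper does not state which pooling operation is used. As one common choice, the sketch below shows max pooling, which keeps the largest value in each window; the window size, stride, and function name `max_pool` are assumptions.

```python
def max_pool(feature_map, size=2, stride=2):
    """Max pooling: keep the largest value in each size x size window."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [
        [
            max(
                feature_map[i * stride + m][j * stride + n]
                for m in range(size)
                for n in range(size)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

feature_map = [
    [1, 3, 2, 4],
    [5, 6, 7, 8],
    [3, 2, 1, 0],
    [1, 2, 3, 4],
]
pooled = max_pool(feature_map)
# pooled == [[6, 8], [3, 4]]
```

The 4 × 4 map shrinks to 2 × 2, a fourfold reduction in size, while the strongest response in each region is preserved.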

Full Connection

Full connection is a spatial transformation of the feature matrix: it integrates the feature map data obtained from the convolutional and pooling layers and reduces the dimension of the high-dimensional feature map data.
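A minimal sketch of this step is given below: the pooled feature maps are flattened into one vector, which a fully connected layer maps to a small number of scores. The softmax at the end, the weight values, and all function names are illustrative assumptions rather than details from the paper.

```python
import math

def flatten(feature_maps):
    """Concatenate every value of every 2D feature map into one vector."""
    return [value for fmap in feature_maps for row in fmap for value in row]

def fully_connected(vector, weights, biases):
    """One output per weight row: weighted sum of the whole input vector plus a bias."""
    return [sum(w * v for w, v in zip(row, vector)) + b
            for row, b in zip(weights, biases)]

def softmax(scores):
    """Turn raw scores into values that sum to 1 (assumed output stage)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

pooled_maps = [
    [[6.0, 8.0], [3.0, 4.0]],
    [[1.0, 0.0], [2.0, 5.0]],
]
vector = flatten(pooled_maps)  # 8 values
weights = [
    [0.1, 0.0, -0.1, 0.2, 0.0, 0.1, 0.0, -0.2],
    [-0.1, 0.1, 0.2, 0.0, 0.1, 0.0, -0.1, 0.1],
]
biases = [0.0, 0.0]
scores = fully_connected(vector, weights, biases)  # 8 values reduced to 2
probabilities = softmax(scores)
```

Here eight feature values are reduced to two scores, which illustrates the dimension reduction performed by the fully connected stage.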

4.3 Implementation Process of Deep Learning Algorithm Based on Convolutional Neural Network

Assume a series of image inputs I and a system T designed with n layers. By adjusting the parameters of each layer in T so that the output still equals the input I, various features of I can be obtained. In deep learning neural networks, the fundamental idea is to stack multiple intermediate layers between the input layer and the output layer, with the output of each intermediate layer serving as the input signal of the next [9]. This realizes a hierarchical representation of the input information.

The above assumes that the output is strictly equal to the input. Considering data loss and deviation during transmission, this requirement can be relaxed slightly: it is enough that the difference between input and output is as small as possible. This is the basic idea of deep learning.
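The relaxed requirement can be expressed as a reconstruction error that training tries to minimize. The sketch below uses the mean squared error as one possible measure of the difference between input and output; the measure, the tolerance value, and the function names are assumptions for illustration.

```python
def reconstruction_error(original, reconstructed):
    """Mean squared difference between the input and the network output."""
    if len(original) != len(reconstructed):
        raise ValueError("input and output must have the same length")
    return sum((o - r) ** 2 for o, r in zip(original, reconstructed)) / len(original)

def is_acceptable(original, reconstructed, tolerance=0.01):
    """Accept the output when it is close enough to the input (tolerance is illustrative)."""
    return reconstruction_error(original, reconstructed) <= tolerance

signal_in = [0.2, 0.8, 0.5]
signal_out = [0.25, 0.7, 0.5]
error = reconstruction_error(signal_in, signal_out)  # about 0.0042
close_enough = is_acceptable(signal_in, signal_out)
```

The output is not identical to the input, but the error is small, which is the condition the text describes.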

Figure 12.4 shows the flowchart of the deep learning algorithm based on the convolutional neural network. The signal of the electric energy metering device is collected and stored; the fingerprint stored in the device is extracted through the convolutional neural network and saved in the fingerprint database as the stored template. When a fingerprint is presented again, it is extracted in the same way on the same device and verified one-to-one against the fingerprint already recorded in the database to confirm the user's identity.
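The enroll-then-verify flow can be sketched as follows. The feature vectors stand in for the output of the convolutional neural network, and the cosine similarity measure, the 0.9 threshold, the class name `FingerprintDatabase`, and the user identifier are all hypothetical choices, since the paper does not specify how features are compared.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two feature vectors, 1.0 meaning identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

class FingerprintDatabase:
    """Stores one feature template per user and verifies new samples one-to-one."""

    def __init__(self, threshold=0.9):
        self._templates = {}
        self.threshold = threshold  # illustrative acceptance threshold

    def enroll(self, user_id, features):
        """Save the extracted features as the stored template."""
        self._templates[user_id] = list(features)

    def verify(self, user_id, features):
        """Compare a new sample only against the template of the claimed user."""
        template = self._templates.get(user_id)
        if template is None:
            return False
        return cosine_similarity(template, features) >= self.threshold

database = FingerprintDatabase()
database.enroll("user-001", [0.9, 0.1, 0.4])
same_finger = database.verify("user-001", [0.88, 0.12, 0.41])
other_finger = database.verify("user-001", [0.1, 0.9, 0.2])
```

Verification is one-to-one: the new sample is compared only with the template of the claimed user, not with every template in the database.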

5 Principle of APP Fingerprint Recognition Human–Computer Interaction Based on Deep Learning

5.1 Deep Learning of Fingerprint Recognition Principle

This section proposes a multi-task full-depth convolutional neural network that extracts detail points (minutiae) from non-contact fingerprints and jointly learns to detect their positions and calculate their directions [10]. An attention mechanism is used to make the network focus on the detail points for direction regression.

A detail feature (minutia) is a special pattern in the interleaved flow of ridges and valleys and is widely used in fingerprint recognition. There are several types of detail points, such as ridge endings and bifurcations. In this work, all minutiae are treated as points of interest regardless of type. Detail point extraction consists of two tasks: position detection and orientation calculation. A detail point can thus be represented as a triplet \(\left( {x_{i} , y_{i} , \theta_{i} } \right)\), where \(\left( {x_{i} , y_{i} } \right)\) represents its position and \(\theta_{i} \in \left[ {0, 2\pi } \right]\) represents its direction. A fingerprint image typically contains dozens of detail points.
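The triplet representation maps naturally onto a small record type. The sketch below is one possible encoding; the class name `Minutia` and the helper `direction_difference` are hypothetical and only illustrate how the angle range \(\left[ {0, 2\pi } \right]\) is handled.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Minutia:
    """A detail point: pixel position (x, y) and direction theta in radians."""
    x: int
    y: int
    theta: float

    def __post_init__(self):
        if not 0.0 <= self.theta <= 2 * math.pi:
            raise ValueError("theta must lie in [0, 2*pi]")

def direction_difference(a, b):
    """Smallest angle between two directions, accounting for wrap-around at 2*pi."""
    diff = abs(a.theta - b.theta) % (2 * math.pi)
    return min(diff, 2 * math.pi - diff)

first = Minutia(x=120, y=85, theta=0.1)
second = Minutia(x=122, y=86, theta=2 * math.pi - 0.1)
gap = direction_difference(first, second)  # about 0.2 radians
```

Because directions wrap around at \(2\pi\), two angles near 0 and near \(2\pi\) are close to each other, which a plain subtraction would miss.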

Detail extraction is often treated as a form of object detection in which every detail point is a target to be detected. In the past few years, deep CNNs have been widely used for object detection. Detail extraction based on deep CNNs is therefore usually divided into two steps: detecting the detail points and then calculating their directions [11]. Training two deep networks separately in this two-step approach is time-consuming and does not make full use of the features shared by the position detection and direction calculation tasks.

To solve these problems, a non-contact fingerprint detail extraction method based on a multi-task full-depth convolutional neural network is proposed. Since detail position detection and orientation calculation are two related tasks, a multi-task deep network learns them together and shares their computation and representations. Unlike two-stage algorithms based on image patches, this algorithm operates on the full-size fingerprint image, locates detail points at pixel level, and simultaneously calculates their orientations. The method comprises an offline training phase and an online testing phase.

In the offline stage [10,11,12], training data are used to train the multi-task full-depth convolutional neural network. The training data consist of full-size non-contact fingerprints and their corresponding detail ground truth, which serve as the input and output of the network, respectively. Because no publicly available contactless fingerprint database with labeled detail coordinates and directions currently exists, and labeling details from scratch is time-consuming and labor-intensive, commercial fingerprint recognition software is used to extract candidate details, which are then checked manually to generate the ground truth. First, the commercial off-the-shelf (COTS) Verifinger SDK is used to extract detail positions and orientations. A GUI tool was then developed to correct detail points and orientations in three ways: (a) adding details that Verifinger missed; (b) removing false details; and (c) modifying coordinates and orientations that were marked incorrectly. Finally, the manually verified positions and directions are used as the ground truth of the training images.

In the online phase [13, 14], a raw, full-size fingerprint is simply fed into the trained network to generate two heat maps: one for detecting detail locations and the other for calculating their orientations. Local peaks on the location map are taken as detail points, and the values of the orientation map at the detected points are taken as the detail point directions.
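The post-processing of the two heat maps can be sketched as a local-peak search. The score threshold, the 3 × 3 neighbourhood, the function name `extract_minutiae`, and the sample maps below are assumptions for illustration; the paper does not give these details.

```python
def extract_minutiae(location_map, orientation_map, threshold=0.5):
    """Return (x, y, theta) for every local peak of the location heat map.

    A pixel counts as a peak when its score reaches the threshold and is
    strictly larger than all of its neighbours in a 3 x 3 window.
    """
    height, width = len(location_map), len(location_map[0])
    minutiae = []
    for y in range(height):
        for x in range(width):
            score = location_map[y][x]
            if score < threshold:
                continue
            neighbours = [
                location_map[ny][nx]
                for ny in range(max(0, y - 1), min(height, y + 2))
                for nx in range(max(0, x - 1), min(width, x + 2))
                if (ny, nx) != (y, x)
            ]
            if all(score > n for n in neighbours):
                # The direction is read from the orientation map at the same pixel.
                minutiae.append((x, y, orientation_map[y][x]))
    return minutiae

location_map = [
    [0.1, 0.2, 0.1, 0.0],
    [0.2, 0.9, 0.3, 0.1],
    [0.1, 0.3, 0.2, 0.6],
    [0.0, 0.1, 0.4, 0.8],
]
orientation_map = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 1.57, 0.0, 0.0],
    [0.0, 0.0, 0.0, 2.0],
    [0.0, 0.0, 0.0, 3.14],
]
points = extract_minutiae(location_map, orientation_map)
# points == [(1, 1, 1.57), (3, 3, 3.14)]
```

The pixel with score 0.6 passes the threshold but is not reported, because its neighbour with score 0.8 is higher; only true local peaks become detail points.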

6 Results of Fingerprint Recognition Simulation Based on Deep Learning

6.1 Fingerprint Identification Simulation


The fingerprint that a user has enrolled in an energy metering device is extracted, and a second fingerprint sample from the same person is then captured separately. The enrolled fingerprint is compared with the newly captured one. The original figure and comparison figure are shown in Fig. 12.5.

Through the convolutional neural network algorithm, we can extract the feature map of the fingerprint, as shown in Fig. 12.6.

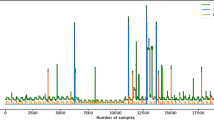

The feature map can be compared with the original map to improve the fingerprint recognition rate of the power metering equipment. Figure 12.7 shows the fingerprint recognition rate obtained with the convolutional neural network algorithm.

To provide a baseline for comparison, the simulation is repeated without the convolutional neural network algorithm, and the results are shown in Fig. 12.8.

The simulation results show that the fingerprint recognition algorithm based on the deep learning convolutional neural network achieves higher accuracy in one-to-one fingerprint recognition than the traditional point pattern matching algorithm. When classifying different fingerprints, the accuracy of the proposed algorithm is also greatly improved.

7 Summary

In today's era of big data, a new human–computer interaction algorithm is proposed to achieve rapid recognition in human–computer interaction: a recognition system based on a deep learning neural network [13]. The algorithm improves the recognition accuracy of human–computer interaction. Taking fingerprint recognition as an example, the experiment shows that traditional fingerprint recognition methods have low fault tolerance. With the deep learning approach, using the convolutional neural network model, the fingerprint recognition rate of human–computer interaction improves, with an error rate of about 4%.

The simulation comparison shows that the recognition rate of the traditional point pattern algorithm is lower than that of the convolutional neural network algorithm. However, a convolutional neural network is difficult to train, and a large amount of experimental data is needed to support training. The experimental results nevertheless indicate that human–computer interaction based on deep learning neural networks is promising and that deep learning algorithms will be used more widely in this field, although the recognition accuracy still needs to be improved further.

References

Shi, S., Peng, L., Cheng, L.: Current situation and prospect of electric energy measurement technology in China. Sci. Technol. Innov. (05), 44–45 (2019)

Xi, J.: Discussion on power measurement management problem and optimization strategy. Sci. Technol. Wind (18), 176 (2018)

Ding, F., Jiang, Z.: Research on human-computer interaction design in mobile devices. Packaging Eng. (16), 35 (2014)

Meng, F.: Improve the function of the “Online State Grid” app improve the service level of power measurement. Agric. Power Manage. 04, 37–38 (2021)

Wu, X.: Design and implementation of embedded linux file system. Comput. Eng. Appl. (09), 111–112 (2005)

Tao, J., Wu, Y., Yu, C., Weng, D., Li, G., Han, T., Wang, Y., Liu, B.: Overview of multi-mode human-computer interaction. Chin. J. Image Graphics 27(06), 1956–1987 (2022)

Li, B., Liu, K., Gu, J., Jiang, W.: A review of convolutional neural network research. Comput. Age (04), 8–12 (2021)

Nan, Z., Xinyu, O.: Development of convolutional neural network. J. Liaoning Univ. Sci. Technol. 44(05), 349–356 (2021)

Li, B., Liu, K., Gu, J., Jiang, W.: A review of convolutional neural network research. Comput. Age (04), 8–12 (2021)

Zhang, Y.: Design and implementation of radio frequency fingerprint identification system based on deep learning. University of Chinese Academy of Sciences (National Space Science Center of Chinese Academy of Sciences), 000024 (2021)

Zeng, Y., Chen, X., Lin, Y., Hao, X., Xu, X., Wang, L.: Research status and trend of rf fingerprint identification. J. Radio Wave Sci. 35(03), 305–315 (2020)

Chen, H., Li, X., Zheng, Y., Yuan, Shao, N., Yang, L., Liu, L.: Research on fingerprint recognition method based on deep learning. Intell. Comput. Appl. (03), 64–69 (2018)

Zhang, L.: Design of big data fingerprint identification system based on ARM and deep learning. J. Hunan Univ. Sci. Technol. (Nat. Sci. Edn.) 34(01), 77–84 (2019)

Lv, Z., Huang, Z.: Fingerprint identification method and its application. Comput. Syst. Appl. (01), 34–36 (2014)

Acknowledgements

Supported by the State Grid Technology Project (5700-202055484A-0-0-00).


Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Zeng, W. et al. (2024). The Intelligent Human–Computer Interaction Method for Application Software of Electrical Energy Metering Based on Deep Learning Algorithm. In: Kountchev, R., Patnaik, S., Nakamatsu, K., Kountcheva, R. (eds) Proceedings of International Conference on Artificial Intelligence and Communication Technologies (ICAICT 2023). ICAICT 2023. Smart Innovation, Systems and Technologies, vol 368. Springer, Singapore. https://doi.org/10.1007/978-981-99-6641-7_12


DOI: https://doi.org/10.1007/978-981-99-6641-7_12


Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-6640-0

Online ISBN: 978-981-99-6641-7

eBook Packages: Engineering, Engineering (R0)