Abstract

This paper proposes a novel method of designing a correlation filter for frequency domain pattern recognition. The proposed filter is designed using linear regression and is termed the linear regression correlation filter (LRCF). Its design methodology differs completely from standard correlation filter design techniques: the LRCF is estimated, or predicted, from a linear subspace of weak classifiers. The proposed filter is evaluated on a standard benchmark database, and promising results are reported.

1 Introduction

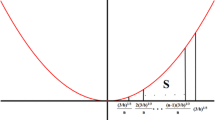

Applications of correlation filters in pattern recognition are reported in several research papers with promising results [6, 9, 11, 13, 17, 19]. In [1], a generalized regression network based correlation filtering technique is proposed for face recognition under poor illumination conditions. A preferential digital optical correlator is proposed in [2], where the trade-off parameters of the correlation filter are optimized for robust pattern recognition. A class-specific subspace based nonlinear correlation filtering technique is introduced in [3]. In basic frequency domain pattern (face) recognition, a correlation filter cross-correlates the Fourier transform of a test face image with a synthesized template or filter, generated from the Fourier transforms of training face images, and the resulting correlation output is processed via the inverse Fourier transform. The process of correlation pattern recognition is shown pictorially in Fig. 1 and can be summarized mathematically as follows. Let \(\mathbf F _x \) and \(\mathbf F _h \) denote the 2D discrete Fourier transforms (DFTs) of the 2D image x and the 2D filter h in the spatial domain, respectively, and let \( \mathbf F _y \) be the Fourier-transformed probe image y. The correlation output g in the spatial domain in response to y for the filter \(\mathbf F _h\) can then be expressed as the inverse 2D DFT of the frequency domain conjugate product as

$$\begin{aligned} g = \mathrm{FFT}^{-1}\left( \mathbf F _y \circ \mathbf F _h^{*} \right) \end{aligned}$$

where \( \circ \) represents element-wise array multiplication, \(^*\) denotes complex conjugation, and FFT is an efficient algorithm for computing the DFT. As shown in Fig. 1, N training images from the kth face class (\(k \in C\)), out of a total of C face classes in a given database, are Fourier transformed to form the design input for the kth correlation filter. In the ideal case, a correlation peak with a high peak-to-sidelobe ratio (PSR) [8] is obtained when any Fourier-transformed test face image of the kth class is correlated with the kth correlation filter, indicating authentication. In response to impostor faces, however, no such peak is found, as shown in Fig. 1 for the jth correlation plane.
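As a rough illustration of the correlation step and of the PSR metric, the following NumPy sketch correlates a probe with a filter in the frequency domain and measures the sharpness of the resulting peak. The function names `correlate_fft` and `psr` are hypothetical, and the sidelobe window (about 20 pixels wide) and the 5 x 5 central mask are assumptions commonly used in the correlation filter literature, not values stated in this paper.

```python
import numpy as np

def correlate_fft(y, h):
    """Correlation plane g = IFFT2(FFT2(y) * conj(FFT2(h))), zero shift centered."""
    F_y = np.fft.fft2(y)
    F_h = np.fft.fft2(h)
    g = np.fft.ifft2(F_y * np.conj(F_h))
    # For real inputs the plane is real up to rounding; center the zero-shift term.
    return np.fft.fftshift(np.real(g))

def psr(plane, sidelobe=20, mask=5):
    """Peak-to-sidelobe ratio: (peak - sidelobe mean) / sidelobe std.

    The sidelobe region is a window around the peak with a small central
    mask removed. Window and mask sizes are assumptions of this sketch.
    """
    r, c = np.unravel_index(np.argmax(plane), plane.shape)
    half, m_half = sidelobe // 2, mask // 2
    # Circular indexing, since FFT-based correlation is itself circular.
    rows = np.arange(r - half, r + half + 1) % plane.shape[0]
    cols = np.arange(c - half, c + half + 1) % plane.shape[1]
    window = plane[np.ix_(rows, cols)]
    keep = np.ones(window.shape, dtype=bool)
    keep[half - m_half:half + m_half + 1, half - m_half:half + m_half + 1] = False
    side = window[keep]
    return (plane[r, c] - side.mean()) / side.std()

# Synthetic stand-ins for images (random arrays, not face data).
rng = np.random.default_rng(0)
x = rng.standard_normal((64, 64))
other = rng.standard_normal((64, 64))
print("matching PSR:    ", psr(correlate_fft(x, x)))
print("non-matching PSR:", psr(correlate_fft(x, other)))
```

With matching inputs the plane has a single sharp peak at zero shift and a large PSR; with unrelated inputs no dominant peak appears and the PSR stays small.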

Traditional correlation filters used for pattern recognition are commonly categorized into constrained and unconstrained filters. Because unconstrained correlation filters offer several advantages over constrained filters [12], pattern recognition tasks are generally carried out with the former. Several correlation filters have been developed for pattern recognition, including the unconstrained minimum average correlation energy (UMACE) filter [12], the maximum average correlation height (MACH) filter [12], the optimal trade-off synthetic discriminant function (OTSDF) [16], and the optimal trade-off MACH (OTMACH) filter [7]. The basic building blocks for designing these filters are minimization of the average correlation energy (ACE) of the correlation plane, minimization of the average similarity measure (ASM), and maximization of the correlation peak height. Detailed design techniques for traditional correlation filters can be found in [14].

Apart from the traditional design methods for correlation filters, this paper proposes a linear regression based correlation filter estimated from the span of a subspace of similar objects. The basic concept used in designing the linear regression correlation filter (LRCF) is that images from the same class lie on a linear subspace. The proposed method does not use characteristics of the correlation plane such as ASM, ACE, or peak height. Instead, the design depends solely on weak classifiers, known as matched filters, obtained from the Fourier-transformed training images of a specific class.

Proposed design technique of LRCF: In this paper, a simple and efficient linear regression based correlation filter for face recognition is proposed. Sample images from a specific class are known to lie on a subspace [4]. Using this concept, class-specific models of correlation filters are developed from sample gallery images, thereby casting correlation filter design as a linear regression problem [5]. The gallery images are Fourier transformed, which yields matched filters. These matched filters act as weak classifiers, one for each gallery image. The design of the proposed filter assumes that the matched filters of a specific class lie in a linear subspace. The probe image is also Fourier transformed, which results in a probe-matched filter. Least-squares estimation is used to estimate the parameter vector for a given probe-matched filter against every class model. The decision rule is based on frequency domain correlation: the probe image is assigned to the class with the most precise estimate.

The proposed LRCF is tested on a standard database, and promising results are given. The rest of the paper is organized as follows. In Sect. 2, the mathematical formulation and design algorithm of the LRCF are discussed. Extensive experimental results are reported in Sect. 3. The paper concludes in Sect. 4.

Nomenclature used in this paper:

C : total number of classes,

N : total number of images in each class,

\( x^{(k)(i)} \in \mathfrak {R}^{d_1\times d_2}\): spatial domain ith image of kth class,

\( \mathbf F _x \in \mathfrak {R}^{d_1\times d_2}\): DFT of x,

\(y\in \mathfrak {R}^{d_1\times d_2}\): spatial domain probe image,

\(\mathbf F _y \in \mathfrak {R}^{d_1\times d_2}\): DFT of y,

\( h^{(k)} \in \mathfrak {R}^{d_1\times d_2}\): spatial domain kth class correlation filter,

\( \mathbf F ^{(k)}_h \in \mathfrak {R}^{d_1\times d_2}\): DFT of \(h^{(k)}\),

\(g^{(i)} \in \mathfrak {R}^{d_1\times d_2}\): spatial domain correlation plane in response to ith image,

\(\mathbf F _g^{(i)} \in \mathfrak {R}^{d_1\times d_2}\): DFT of \(g^{(i)}\),

\(m = d_1\times d_2\),

\( \mathbf X ^{(k)}\in \mathfrak {R}^{m\times N}\): kth class design matrix,

\(\mathbf f _x,\mathbf f _y \in \mathfrak {R}^{m\times 1}\): vector representations of \(\mathbf F _x\) and \(\mathbf F _y\), respectively,

\( \theta ^{(k)} \in \mathfrak {R}^{N\times 1}\): parameter vector of kth class,

DFT : Discrete Fourier Transform.

2 Mathematical Formulation of LRCF

Consider C distinct classes with \( N^{(k)}\) training images from the kth class, \(k=1,2,\ldots ,C\). Each grayscale image \( x^{(k)(i)}\) is Fourier transformed, and the resulting \( \mathbf F _x^{(k)(i)}\) is a simple matched filter generated from \(x^{(k)(i)}\). These \(N^{(k)}\) matched filters can be regarded as weak classifiers for the kth class. Using the concept that patterns from the same class lie in a linear subspace, a class-specific model \(\mathbf X ^{(k)}\), known as the design matrix, is built from the weak classifiers of the kth class. Each column of \(\mathbf X ^{(k)}\) contains \( \mathbf f _x^{(k)(i)}\), the lexicographically ordered version of \(\mathbf F _x^{(k)(i)}\). The design matrix is expressed mathematically as

$$\begin{aligned} \mathbf X ^{(k)} = \left[ \mathbf f _x^{(k)(1)}, \mathbf f _x^{(k)(2)}, \ldots , \mathbf f _x^{(k)(N)} \right] \in \mathfrak {R}^{m\times N}, \quad k=1,2,\ldots ,C \end{aligned}$$

The Fourier-transformed vectors \( \mathbf f _x^{(k)(i)}\), \(i=1,2,\ldots ,N\), span a subspace of \(\mathfrak {R}^{m}\), also called the column space of \(\mathbf X ^{(k)}\). At the training stage, each class k is thus represented by a vector subspace \(\mathbf X ^{(k)}\), which can be called the predictor or regressor for class k.
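The construction of the design matrix can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the helper names `matched_filter_vector` and `design_matrix` are hypothetical, the lexicographic ordering is taken to be row-major, and random arrays stand in for the training images of one class.

```python
import numpy as np

def matched_filter_vector(image):
    """2D DFT of an image, flattened row by row into a length-m vector."""
    return np.fft.fft2(image).reshape(-1)

def design_matrix(images):
    """Stack the N matched-filter vectors of one class as columns (m x N)."""
    return np.stack([matched_filter_vector(x) for x in images], axis=1)

# Placeholder data: 20 random 64 x 64 arrays standing in for one class.
rng = np.random.default_rng(0)
class_images = rng.random((20, 64, 64))
X_k = design_matrix(class_images)
print(X_k.shape)  # (4096, 20)
```

Note that the entries of `X_k` are complex, because the DFT of a real image is complex valued in general.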

Let y be the probe image to be classified into one of the classes \(k=1,2,\ldots ,C\). The Fourier transform of y is evaluated as \(\mathbf F _y\) and lexicographically ordered to obtain \(\mathbf f _y\). If \(\mathbf f _y\) belongs to the kth class, it should be representable as a linear combination of the weak classifiers (matched filters) of that class, lying in the same subspace, i.e.,

$$\begin{aligned} \mathbf f _y = \mathbf X ^{(k)}\theta ^{(k)}, \quad k=1,2,\ldots ,C \end{aligned}$$

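where \(\theta ^{(k)}\in \mathfrak {R}^{N\times 1}\) is the vector of parameters. For each class, the parameter vector can be estimated by the least-squares method [18]. The objective function of the quadratic minimization problem can be written as

$$\begin{aligned} J(\theta ^{(k)}) = \left\| \mathbf f _y - \mathbf X ^{(k)}\theta ^{(k)} \right\| _2^2 \end{aligned}$$

Setting

$$\begin{aligned} \frac{\partial J(\theta ^{(k)})}{\partial \theta ^{(k)}} = 0 \end{aligned}$$

gives

$$\begin{aligned} \theta ^{(k)}= (\mathbf X {^{(k)}}^{T}{} \mathbf X ^{(k)})^{-1}{} \mathbf X {^{(k)}}^T\mathbf f _y \end{aligned}$$

The estimated parameter vector \(\theta ^{(k)}\), together with the predictors \(\mathbf X ^{(k)}\), is used to predict the correlation filter in response to the probe-matched filter \(\mathbf f _y\) for each class k. The correlation filter predicted by linear regression is termed the linear regression correlation filter and has the following mathematical expression:

$$\begin{aligned} \hat{\mathbf{f }}^{(k)}_y = \mathbf X ^{(k)}\theta ^{(k)} = \mathbf X ^{(k)}(\mathbf X {^{(k)}}^{T}{} \mathbf X ^{(k)})^{-1}{} \mathbf X {^{(k)}}^T\mathbf f _y = \mathbf H ^{(k)}\mathbf f _y \end{aligned}$$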

Class-specific \(\mathbf H ^{(k)}\), known as hat matrix [15], is used to map the probe-matched filter \(\mathbf f _y\) into a class of estimated LRCFs \(\hat{\mathbf{f }}^{(k)}_y, k=1,2,\ldots ,C\).
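The least-squares estimate and the hat matrix can be checked numerically with a small sketch. Two assumptions are made here that go beyond the text: random complex matrices stand in for real matched filters, and the conjugate (Hermitian) transpose is used in place of the plain transpose because DFT coefficients are complex. The sketch compares the closed-form normal-equation solution with `np.linalg.lstsq` and verifies that the hat matrix is a projection.

```python
import numpy as np

rng = np.random.default_rng(1)
m, N = 256, 8  # small sizes chosen only to keep the example fast

# Random complex stand-ins for a design matrix X^(k) and a probe vector f_y.
X = rng.standard_normal((m, N)) + 1j * rng.standard_normal((m, N))
f_y = rng.standard_normal(m) + 1j * rng.standard_normal(m)

# Closed form: theta = (X^H X)^{-1} X^H f_y (Hermitian transpose assumed).
XH = X.conj().T
theta_normal = np.linalg.solve(XH @ X, XH @ f_y)

# The same estimate from a numerically more robust least-squares solver.
theta_lstsq, *_ = np.linalg.lstsq(X, f_y, rcond=None)

# Hat matrix H = X (X^H X)^{-1} X^H maps f_y onto the column space of X.
H = X @ np.linalg.solve(XH @ X, XH)
f_hat = H @ f_y

print(np.allclose(theta_normal, theta_lstsq))
print(np.allclose(H @ H, H))
print(np.allclose(f_hat, X @ theta_lstsq))
```

In practice, forming the m x m hat matrix explicitly is unnecessary when only the prediction is needed; multiplying the design matrix by the estimated parameter vector gives the same result at much lower cost.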

2.1 Design Algorithm of LRCF

Algorithm: Linear Regression Correlation Filter (LRCF).

Inputs: Class model matched filters design matrix \(\mathbf{X ^{(k)}}\in \mathfrak {R}^{m\times N}, k =1,2,\ldots ,C\), and probe-matched filter \(\mathbf f _y\in \mathfrak {R}^{m\times 1}\).

Output: kth class of y

1. \( \theta ^{(k)}\in \mathfrak {R}^{N\times 1}, k=1,2,\ldots ,C\), is evaluated against each class model:

$$\begin{aligned} \theta ^{(k)}= (\mathbf X {^{(k)}}^{T}{} \mathbf X ^{(k)})^{-1}{} \mathbf X {^{(k)}}^T\mathbf f _y, k=1,2,\ldots ,C \end{aligned}$$

2. The estimated probe-matched filter is computed for each parameter vector \( \theta ^{(k)}\) in response to the probe-matched filter \(\mathbf f _y\) as

$$\begin{aligned} \hat{\mathbf{f }}^{(k)}_y=\mathbf X ^{(k)}\theta ^{(k)}, k=1,2,\ldots ,C \end{aligned}$$

3. Frequency domain correlation:

$$\begin{aligned} \hat{\mathbf{F }}^{(k)}_y \otimes \mathbf F _y, k=1,2,\ldots ,C \end{aligned}$$

4. The PSR metric is evaluated for each class, so that C PSR values are obtained.

5. y is assigned to the kth class if (see Note 1)

$$\begin{aligned} {PSR}^{(k)}= \max \{{PSRs}\}\ge thr,k=1,2,\ldots ,C \end{aligned}$$

Decision-making: Probe-matched filter \(\mathbf f _y\) is correlated with C numbers of estimated LRCFs \(\hat{\mathbf{f }}^{(k)}_y, k=1,2,\ldots ,C\) and highest PSR value is searched from C correlation planes. The maximum PSR obtained is further tested with a preset threshold and the probe image is classified in class k for which the above conditions are satisfied.
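The five steps of the algorithm can be put together in a short NumPy sketch. It is a minimal illustration under several assumptions rather than the authors' implementation: the names `psr` and `lrcf_classify` are hypothetical, the PSR window and mask sizes are assumed, the least-squares step uses a complex solver, the real part of the correlation plane is used, and the classes are synthetic (each class is a random mixture of its own basis images, so that class members lie near a linear subspace).

```python
import numpy as np

def psr(plane, sidelobe=20, mask=5):
    """Peak-to-sidelobe ratio in a window around the peak (sizes are assumed)."""
    r, c = np.unravel_index(np.argmax(plane), plane.shape)
    half, m_half = sidelobe // 2, mask // 2
    rows = np.arange(r - half, r + half + 1) % plane.shape[0]
    cols = np.arange(c - half, c + half + 1) % plane.shape[1]
    window = plane[np.ix_(rows, cols)]
    keep = np.ones(window.shape, dtype=bool)
    keep[half - m_half:half + m_half + 1, half - m_half:half + m_half + 1] = False
    side = window[keep]
    return (plane[r, c] - side.mean()) / side.std()

def lrcf_classify(X_by_class, y, thr):
    """Return (class index, or None if rejected) and the list of C PSR values."""
    F_y = np.fft.fft2(y)
    f_y = F_y.reshape(-1)
    psrs = []
    for X in X_by_class:
        theta, *_ = np.linalg.lstsq(X, f_y, rcond=None)       # step 1
        F_hat = (X @ theta).reshape(y.shape)                  # step 2
        plane = np.real(np.fft.ifft2(F_y * np.conj(F_hat)))   # step 3
        psrs.append(psr(plane))                               # step 4
    best = int(np.argmax(psrs))
    return (best if psrs[best] >= thr else None), psrs        # step 5

# Synthetic data: C classes, each spanned by 4 random basis images.
rng = np.random.default_rng(0)
d, C, N = 32, 3, 6
bases = rng.standard_normal((C, 4, d, d))

def sample(k):
    """Synthetic image of class k: random mix of its bases plus small noise."""
    coeffs = rng.standard_normal(4)
    return np.tensordot(coeffs, bases[k], axes=1) + 0.01 * rng.standard_normal((d, d))

X_by_class = [
    np.stack([np.fft.fft2(sample(k)).reshape(-1) for _ in range(N)], axis=1)
    for k in range(C)
]
label, psrs = lrcf_classify(X_by_class, sample(1), thr=10.0)
print(label, np.round(psrs, 2))
```

The threshold of 10 used here is arbitrary and suits only this synthetic setup; on real data it would have to be selected empirically, as stated in Note 1.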

3 Experimental Results

3.1 Database and Preparation

The Extended Yale Face Database B [10] contains 38 individuals under 64 different illumination conditions with 9 poses. Only frontal face images are used in the experiments. Cropped frontal face images are readily available from the database website (see Note 2). All grayscale images are downsampled to \(64\times 64\) for the experiments.

Each class of the Extended Yale B database contains 64 images. In the training stage, 20 of the 64 images are chosen at random, and this selection is repeated five times, so that five sets of \(\mathbf X ^{(k)}\) are designed. For each set, the size of the design matrix \(\mathbf X ^{(k)}\) is therefore \(4096\times 20\). Hence, for the kth class, five such predictors \(\mathbf X \) are formed, each containing a different set of 20 weak classifiers or matched filters (Tables 1 and 2).
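The repeated random selection of training images can be sketched as follows. The function name `random_splits` and the seed are hypothetical; the sketch only assumes that each of the five draws picks 20 of the 64 image indices without replacement and leaves the other 44 for testing.

```python
import numpy as np

def random_splits(n_images=64, n_train=20, n_sets=5, seed=0):
    """Return n_sets pairs (train_idx, test_idx) with no overlap inside a pair."""
    rng = np.random.default_rng(seed)
    splits = []
    for _ in range(n_sets):
        perm = rng.permutation(n_images)
        # First n_train shuffled indices train, the remainder test.
        splits.append((np.sort(perm[:n_train]), np.sort(perm[n_train:])))
    return splits

splits = random_splits()
for train_idx, test_idx in splits:
    print(len(train_idx), len(test_idx))
```

Each training index set then selects the 20 columns of one \(4096\times 20\) design matrix, and the remaining 44 indices give the probe images for that set.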

In the testing stage, the remaining images are used, i.e., the training and test sets do not overlap. A probe image of size \(64\times 64\) is taken and Fourier transformed. The lexicographic version \(\mathbf f _y\) of \(\mathbf F _y\) is then used to estimate the LRCFs \(\hat{\mathbf{f }}^{(k)}_y,k=1,2,\ldots , C\) using the parameter vector \(\theta ^{(k)}\) and the predictor \(\mathbf X ^{(k)}\) of each class.

3.2 Performance of LRCF

Correlation plane: In frequency domain correlation pattern recognition, an authentic image should give a distinct peak in the correlation plane, whereas an impostor should not. To verify this, one probe image from class 1 is tested against both the class 1 and the class 2 LRCF. Figure 2 shows that the proposed method works well for authentication: when the probe image from class 1 is correlated with the estimated LRCF originating from the \(\mathbf X ^{(1)}\) subspace and the \(\theta ^{(1)}\) parameters, the correlation plane shows a distinct peak with a high PSR value of 66.65. When the same probe image is tested with the LRCF originating from \(\mathbf X ^{(2)}\) and \(\theta ^{(2)}\), no such peak appears, and the PSR value of the correlation plane is 6.15.

PSR distribution: This phase of the experiment is carried out in two ways: (1) a single probe image is taken from a specific class and tested on the LRCFs of all classes, and (2) all probe images from all classes are tested with the LRCF of a specific class. As five sets of predictor class models are available for each class, one estimated LRCF \(\hat{\mathbf{f }}^{(k)}_y\) is evaluated for each model. A single probe image (matched filter) from a specific class is then correlated with the five estimated LRCFs of each class. The five resulting PSR values are averaged for each class, so that 38 PSR values are obtained. Figure 3 shows the PSR distribution of a randomly selected single probe image taken from classes 10, 24, 15, and 20. It is evident from Fig. 3 that a probe image gives a high PSR value when tested with the LRCF of its own class and low PSR values in all other cases.

Another experiment has been performed in which all 44 probe images are taken from each of the 38 classes and the response to a specific class is observed. Classes 5, 10, 25, and 35 are taken for testing. PSR values are averaged for each class, as five sets of training and test sets are available. Figure 4 shows high PSR values when probe images from classes 5, 10, 25, and 35 are taken. A clear demarcation is observed between the authentic and impostor PSR values in all four cases.

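F1-score and Confusion Matrix: Another experiment is performed to measure the performance of the proposed LRCF by evaluating precision, recall, and F1-score. Precision and recall are mathematically expressed as

$$\begin{aligned} \text {precision} = \frac{tp}{tp+fp}, \qquad \text {recall} = \frac{tp}{tp+fn} \end{aligned}$$

where tp: true positive, fp: false positive, and fn: false negative. F1-score is further measured as the harmonic mean of precision and recall as

$$\begin{aligned} F_1 = 2\cdot \frac{\text {precision}\cdot \text {recall}}{\text {precision}+\text {recall}} \end{aligned}$$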

It is observed from Fig. 5a that both high precision and high recall are obtained with the proposed method, which indicates that the LRCF classifier performs well in classification. Precision can be seen as a measure of exactness, whereas recall is a measure of completeness. The high precision obtained in the experiments indicates that the proposed filter (LRCF) returns substantially more relevant results than irrelevant ones, while the high recall indicates that the LRCF returns most of the relevant results. The highest F1-score, 0.9663, is obtained at a PSR value of 17, as observed from Fig. 5b. Two confusion matrices are constructed for 10 randomly chosen classes out of 38, with a hard PSR threshold of 17 as obtained from the F1-score curve (Fig. 6).
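For reference, precision, recall, and F1-score follow directly from the three counts. The short Python sketch below uses a hypothetical helper name and made-up counts that are unrelated to the reported experiments; the zero-division guards are an added convention, not part of the paper.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and their harmonic mean from raw counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1

# Made-up counts for illustration only.
p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=2)
print(p, r, f1)
```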

Comparative study: A comparative performance analysis of the proposed filter is performed against traditional state-of-the-art correlation filters such as UMACE and OTMACH. Each filter is trained with four sets of training images and tested with four test sets. In each case, the grayscale images are downsampled to \(64\times 64\). UMACE, OTMACH, and the proposed LRCF are evaluated on 38 classes for PSR threshold values varying from 5 to 20 in increments of 0.5. From the set of PSR values, the confusion matrix, precision, and recall are calculated, and the F1-score is determined to analyze the performance of the filters. Figure 7 shows the comparative F1 curves for the four sets of training and testing images. It is observed from Fig. 7 that a comparatively higher F1-score is achieved with the proposed LRCF. The high F1-score is also obtained at high PSR values, which agrees with the PSR distribution results (see Fig. 4).
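The threshold sweep behind an F1 curve can be sketched as follows. The function name `f1_curve` is hypothetical, the PSR values are invented for illustration and are not the paper's results, and the sketch assumes a verification-style rule in which a probe is accepted when its PSR is at least the threshold. F1 is computed as 2tp / (2tp + fp + fn), which is algebraically the same as the harmonic mean of precision and recall.

```python
import numpy as np

def f1_curve(authentic_psr, impostor_psr, thresholds):
    """F1-score at each PSR threshold; accept a probe when PSR >= threshold."""
    authentic_psr = np.asarray(authentic_psr, dtype=float)
    impostor_psr = np.asarray(impostor_psr, dtype=float)
    scores = []
    for thr in thresholds:
        tp = np.sum(authentic_psr >= thr)  # authentic probes accepted
        fn = np.sum(authentic_psr < thr)   # authentic probes rejected
        fp = np.sum(impostor_psr >= thr)   # impostor probes accepted
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return np.array(scores)

# PSR thresholds from 5 to 20 in steps of 0.5, as in the comparative study.
thresholds = np.arange(5.0, 20.5, 0.5)

# Invented PSR values, for illustration only.
authentic = [30.0, 25.0, 18.0, 12.0]
impostor = [6.0, 7.0, 9.0, 15.0]

scores = f1_curve(authentic, impostor, thresholds)
best = int(np.argmax(scores))
print(thresholds[best], scores[best])
```

Plotting `scores` against `thresholds` for each filter and each training set would produce curves of the kind compared in the study.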

4 Conclusion

A new design method for correlation filters is proposed. Linear regression is used to estimate the correlation filter for a given probe image and a subspace of training matched filters. The estimated LRCF is correlated with the probe-matched filter for classification. The proposed method is tested on a standard database, and promising results with high precision and high recall are reported. The high F1-score obtained with the proposed LRCF indicates the robustness of the classifier in face recognition applications. This paper deals only with frontal faces under different illumination conditions. Extending the proposed filter to pose variation, noisy conditions, and other object recognition tasks needs further research.

Notes

- 1. thr: hard threshold selected empirically.

- 2.

References

1. Banerjee, P.K., Datta, A.K.: Generalized regression neural network trained preprocessing of frequency domain correlation filter for improved face recognition and its optical implementation. Opt. Laser Technol. 45, 217–227 (2013)

2. Banerjee, P.K., Datta, A.K.: A preferential digital-optical correlator optimized by particle swarm technique for multi-class face recognition. Opt. Laser Technol. 50, 33–42 (2013)

3. Banerjee, P.K., Datta, A.K.: Class specific subspace dependent nonlinear correlation filtering for illumination tolerant face recognition. Pattern Recognit. Lett. 36, 177–185 (2014)

4. Belhumeur, P.N., Hespanha, J.P., Kriegman, D.J.: Eigenfaces versus fisherfaces: recognition using class specific linear projection. IEEE Trans. Pattern Anal. Mach. Intell. 19(7), 711–720 (1997)

5. Hastie, T., Tibshirani, R., Friedman, J.: The Elements of Statistical Learning. Springer, Berlin (2009)

6. Jeong, K., Liu, W., Han, S., Hasanbelliu, E., Principe, J.: The correntropy MACE filter. Pattern Recognit. 42(9), 871–885 (2009)

7. Johnson, O.C., Edens, W., Lu, T.T., Chao, T.H.: Optimization of OT-MACH filter generation for target recognition. In: Proceedings of the SPIE 7340, Optical Pattern Recognition, vol. 7340, pp. 734008–734009 (2009)

8. Kumar, B., Savvides, M., Xie, C., Venkataramani, K., Thornton, J., Mahalanobis, A.: Biometric verification with correlation filters. Appl. Opt. 43(2), 391–402 (2004)

9. Lai, H., Ramanathan, V., Wechsler, H.: Reliable face recognition using adaptive and robust correlation filters. Comput. Vis. Image Underst. 111, 329–350 (2008)

10. Lee, K., Ho, J., Kriegman, D.: Acquiring linear subspaces for face recognition under variable lighting. IEEE Trans. Pattern Anal. Mach. Intell. 27(5), 684–698 (2005)

11. Maddah, M., Mozaffari, S.: Face verification using local binary pattern-unconstrained minimum average correlation energy correlation filters. J. Opt. Soc. Am. A 29(8), 1717–1721 (2012)

12. Mahalanobis, A., Kumar, B., Song, S., Sims, S., Epperson, J.: Unconstrained correlation filter. Appl. Opt. 33, 3751–3759 (1994)

13. Levine, M., Yu, Y.: Face recognition subject to variations in facial expression, illumination and pose using correlation filters. Comput. Vis. Image Underst. 104(1), 1–15 (2006)

14. Alam, M.S., Bhuiyan, S.: Trends in correlation-based pattern recognition and tracking in forward-looking infrared imagery. Sensors (Basel, Switzerland) 14(8), 13437–13475 (2014)

15. Naseem, I., Togneri, R., Bennamoun, M.: Linear regression for face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 32(11), 2106–2112 (2010)

16. Refregier, Ph.: Filter design for optical pattern recognition: multi-criteria optimization approach. Opt. Lett. 15, 854–856 (1990)

17. Rodriguez, A., Boddeti, V., Kumar, B., Mahalanobis, A.: Maximum margin correlation filter: a new approach for localization and classification. IEEE Trans. Image Process. 22(2), 631–643 (2013)

18. Seber, G.: Linear Regression Analysis. Wiley-Interscience (2003)

19. Yan, Y., Zhang, Y.: 1D correlation filter based class-dependence feature analysis for face recognition. Pattern Recognit. 41, 3834–3841 (2008)

Copyright information

© 2020 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Ghosh, T., Banerjee, P.K. (2020). Linear Regression Correlation Filter: An Application to Face Recognition. In: Chaudhuri, B., Nakagawa, M., Khanna, P., Kumar, S. (eds) Proceedings of 3rd International Conference on Computer Vision and Image Processing. Advances in Intelligent Systems and Computing, vol 1022. Springer, Singapore. https://doi.org/10.1007/978-981-32-9088-4_32

DOI: https://doi.org/10.1007/978-981-32-9088-4_32

Publisher Name: Springer, Singapore

Print ISBN: 978-981-32-9087-7

Online ISBN: 978-981-32-9088-4

eBook Packages: Engineering, Engineering (R0)