Abstract

The aim of the present study is to develop an iterative scheme of high convergence order with minimal computational cost. With this objective, a three-step method is designed that uses only two Jacobian matrices, a single matrix inversion, and three function evaluations per iteration. Under standard assumptions, the proposed method is shown to possess sixth-order convergence. Iterative schemes with these characteristics are rarely found in the literature. The computational efficiency of the proposed method is analyzed and compared with the efficiencies of existing methods. In addition, numerical experiments are performed by applying the method to some practical nonlinear problems. The entire analysis favors the new technique over its existing counterparts in terms of computational efficiency, stability, and CPU time elapsed during execution.

1 Introduction

Systems of nonlinear equations arise in the modeling of most physical processes and practical situations. The resulting models are generally expressed in mathematical form as

\[F(x) = O, \tag{1}\]

where \(O\in \mathbb {R}^m \) represents the zero vector, \(F:\varOmega \subseteq \mathbb {R}^m \rightarrow \mathbb {R}^m\) is a nonlinear mapping which is commonly represented as \((f_1(x),f_2(x),\ldots ,f_m(x))^T\), \(x = (x_1,\ldots ,x_m)^T \in \varOmega \), and \(f_i: \mathbb {R}^m \rightarrow \mathbb {R} \ (i=1,\ldots ,m)\) are nonlinear scalar functions.

Knowledge about the solution of the constructed nonlinear model plays an important role in forecasting the future development of the corresponding physical problem. However, obtaining analytical solutions of nonlinear systems is generally not feasible. To deal with this challenge, iterative methods [8, 13] offer a numerical solution up to the desired precision. The working process of an iterative method is based on fixed point iteration theory, under which it locates the solution, \(x^* \in \varOmega \), of the given system (1), as a fixed point of a mapping \(\phi : \mathbb {R}^m \rightarrow \mathbb {R}^m\), so that

\[x^{(k+1)} = \phi (x^{(k)}), \quad k = 0, 1, 2, \ldots ,\]

where \(x^{(0)}\) is the initial estimate of the solution, and the mapping \(\phi \) is constrained to satisfy some prescribed assumptions.

The most widely applied iterative procedure for solving nonlinear equations is Newton’s method

\[x^{(k+1)} = x^{(k)} - F'(x^{(k)})^{-1}F(x^{(k)}), \quad k = 0, 1, 2, \ldots , \tag{2}\]

where F(x) is continuously differentiable in some neighborhood of its solution, and \(F'(x) \in \mathcal {L}(\mathbb {R}^m,\mathbb {R}^m)\) is a linear operator which is generally represented as the Jacobian matrix \(\left[ \frac{\partial f_i}{\partial x_j}\right] _{m \times m}\). This method approximates a simple solution of (1) with a quadratic rate of convergence. To improve the convergence rate of the method (2), numerous iterative schemes have been presented in the literature (see [2, 4,5,6, 10,11,12, 14] and references therein). Newton’s scheme requires the evaluation of one function (F), one Jacobian matrix \((F')\), and one matrix inversion \((F'^{-1})\) per iteration. An attempt to increase the rate of convergence of an iterative method generally leads to a technique that involves one or more additional evaluations per iteration than its predecessor. For instance, the Potra and Pták method [9], having cubic convergence, is one of the simplest improvements of the method (2), which is expressed as follows:

\[\begin{aligned} y^{(k)} &= x^{(k)} - F'(x^{(k)})^{-1}F(x^{(k)}),\\ x^{(k+1)} &= y^{(k)} - F'(x^{(k)})^{-1}F(y^{(k)}). \end{aligned} \tag{3}\]

Clearly, the above-presented two-step scheme utilizes an additional function evaluation over Newton’s method.
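To make the difference between the two schemes concrete, the following Python sketch applies a Newton step and a Potra and Pták step to a small test system. The test system, the helper names (`solve`, `jacobian`, `run`, `error`), and the starting point are illustrative assumptions and are not taken from the paper. The dense solver repeats the elimination for brevity; a practical implementation would factorize the Jacobian once and reuse the factors in both substeps.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def F(x):
    # Hypothetical test system with a simple root at (1, 1).
    return [x[0] ** 2 + x[1] ** 2 - 2.0, x[0] - x[1]]


def jacobian(x):
    return [[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]]


def newton_step(x):
    # One function evaluation, one Jacobian, one linear solve.
    delta = solve(jacobian(x), F(x))
    return [xi - di for xi, di in zip(x, delta)]


def potra_ptak_step(x):
    # Same Jacobian for both substeps; one extra function evaluation F(y).
    Jx = jacobian(x)
    y = [xi - di for xi, di in zip(x, solve(Jx, F(x)))]
    return [yi - di for yi, di in zip(y, solve(Jx, F(y)))]


def run(step, x0, iterations):
    x = list(x0)
    for _ in range(iterations):
        x = step(x)
    return x


def error(x):
    # Distance to the known root (1, 1) in the maximum norm.
    return max(abs(x[0] - 1.0), abs(x[1] - 1.0))


x_newton = run(newton_step, [1.5, 0.8], 3)
x_pp = run(potra_ptak_step, [1.5, 0.8], 3)
print("Newton error after 3 steps:     ", error(x_newton))
print("Potra-Ptak error after 3 steps: ", error(x_pp))
```

For the same number of iterations, the two-step scheme reaches a noticeably smaller error on this example, at the price of one additional evaluation of F per iteration.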

Designing an iterative scheme with additional evaluations accelerates the convergence order, but it also increases the computational cost per iteration in terms of mathematical operations. Balancing the computational cost against the gain in convergence speed leads to the construction of computationally efficient techniques. The measure of efficiency is formulated in [8, 13] to analyze and compare the efficiencies of iterative techniques. In addition, the necessary parameters have been introduced in [11] for a thorough investigation of this concept.

Taking into account the above discussion, in the next section, we shall present a simple and efficient iterative method showing the sixth order of convergence. The computational efficiency of the developed method is determined, analyzed, and compared with the efficiencies of existing methods in Sect. 3. Numerical performance is investigated in Sect. 4, and concluding remarks are given in Sect. 5.

2 Development of Method

The primary objective here is to design an iterative scheme that improves the convergence speed of the Potra and Pták method (3) without utilizing any additional inverse operator. In what follows, we shall present a three-step iterative method involving undetermined parameters, which are to be chosen in order to maximize the convergence order. In view of this, we consider the iterative scheme of type,

where a, b, and c are the parameters.

Before proceeding to the convergence analysis, a preliminary result (see [7]) is stated below, which will be followed by the main theorem to show the sixth-order convergence for scheme (4).

Lemma 1

Assume that the mapping \(F:\varOmega \subseteq \mathbb {R}^m \rightarrow \mathbb {R}^m \) is n-times Fréchet differentiable in a convex neighborhood \(\varOmega \subseteq \mathbb {R}^m\), and let \(x,\ t \in \varOmega \). Then the following expansion holds:

where \(t^i=(t,{\mathop {\ldots \ldots }\limits ^{i-times}},t)\), \(F^{(i)}(x) \in \mathcal {L}(\mathbb {R}^m \times {\mathop {\ldots \ldots }\limits ^{i-times}} \times \mathbb {R}^m, \mathbb {R}^m)\) for each \(i=1,2,\ldots \), and

Theorem 1

Assume that a nonlinear mapping, \(F:\varOmega \subseteq \mathbb {R}^m \rightarrow \mathbb {R}^m \), is continuously differentiable a sufficient number of times in some neighborhood of its simple zero \(x^*\), contained in an open convex region \(\varOmega \). Further, suppose that \(F'(x)\) is non-singular and continuous in that neighborhood, and the initial approximation \(x^{(0)}\) is sufficiently close to \(x^*\). Then, the sequence of iterates generated by the method (4) converges to \(x^*\) with the sixth order of convergence, provided \(a=\frac{7}{2}\), \(b=-4\), and \(c=\frac{3}{2}\).

Proof

Let \(e^{(k)} = x^{(k)}-x^*\) be the error obtained at the \(k{\textrm{th}}\) iteration of (4). Then, as a consequence of Lemma 1, and the fact that \(F(x^*)=O\), Taylor expansions of \(F(x^{(k)})\) and \(F'(x^{(k)})\), about \(x^*\), are developed as

where \(e^{(k)^i}=(e^{(k)},{\mathop {\ldots \ldots }\limits ^{i-times}},e^{(k)})\), and \(A_i = \frac{1}{i!} F'(x^*)^{-1}F^{(i)}(x^*),\ \ i=2,3,\ldots ,\) and consequently,

where \(B_1=-2A_2, B_2=-3A_3+4A_2^2, B_3=-4A_4+6A_2A_3+6A_3A_2-8A_2^3, B_4=-5A_5+ 8A_2A_4+9A_3^2+8A_4A_2-12A_2^2A_3-12A_2A_3A_2-12A_3A_2^2+16A_2^4, \text {and} B_5=-6A_6+10A_2A_5+12A_3A_4+12A_4A_3+10A_5A_2-16A_2^2A_4-18A_2A_3^2-16A_2A_4A_2-18A_3A_2A_3-18A_3^2A_2-16A_4A_2^2+24A_2^3A_3+24A_2^2A_3A_2+24A_2A_3A_2^2+24A_3A_2^3-32A_2^5\).

Denoting \(e_y^{(k)}=y^{(k)}-x^*\) as the error at the first step of method (4), and using Eqs. (5)–(7), we have that

where \(C_1= A_2,\ C_2=2(A_3-A_2^2),\ C_3=3A_4-4A_2A_3-3A_3A_2+4A_2^3, \ C_4=4A_5-6A_2 A_4-6A_3^2-4A_4A_2+8A_2^2A_3+6A_2A_3A_2+6A_3A_2^2-8A_2^4, \ \text {and } C_5=5A_6-8A_2A_5-9A_3A_4- 8A_4A_3-5A_5A_2+12A_2^2A_4+12A_2A_3^2+8A_2A_4A_2+12A_3A_2A_3+9A_3^2A_2+8A_4A_2^2-16A_2^3 A_3-12A_2^2A_3A_2-12A_2A_3A_2^2-12A_3A_2^3+16A_2^5\).

Using the expression (8), the Taylor developments of \(F(y^{(k)})\) and \(F'(y^{(k)})\), about \(x^*\), are given by

where \(K_1= A_2, K_2=2(A_3- A_2^2), K_3=3A_4-4A_2A_3-3A_3A_2+5A_2^3, K_4=4A_5- 6A_2A_4- 6A_3^2-4A_4A_2+10A_2^2A_3+8A_2A_3A_2+6A_3A_2^2-12A_2^4, K_5= 5A_6-8A_2A_5-9A_3A_4-8A_4 A_3-5A_5A_2+15A_2^2A_4+16A_2A_3^2+11A_2A_4A_2+12A_3A_2A_3+9A_3^2A_2+8A_4A_2^2-24A_2^3A_3-19A_2^2A_3A_2-19A_2A_3A_2^2-11A_3A_2^3+28A_2^5,\ L_1=2A_2^2,\ L_2=4(A_2A_3-A_2^3),\ L_3=6A_2A_4-8A_2^2A_3-6A_2A_3A_2+3A_3A_2^2+8A_2^4,\ \text {and}\ L_4=8A_2A_5-12A_2^2A_4-12A_2A_3^2-8A_2A_4A_2+6A_3A_2A_3+6A_3^2A_2+16A_2^3A_3+12A_2^2A_3A_2+12A_2A_3A_2^2-12A_3A_2^3-16A_2^5.\)

Let us denote \(e_z^{(k)}=z^{(k)}-x^*\), then using Eqs. (7)–(9), the second step of method (4) yields

where \(M_1=2A_2^2,\ M_2=4A_2A_3+3A_3A_2-9A_2^3,\ M_3=6A_2A_4+6A_3^2+4A_4A_2-18A_2^2A_3-14A_2A_3A_2-12A_3A_2^2+30A_2^4, \ \text {and}\ M_4=8A_2A_5+9A_3A_4+8A_4A_3+5A_5A_2-27A_2^2A_4-28A_2A_3^2-19A_2A_4A_2-24A_3A_2A_3-18A_3^2A_2-16A_4A_2^2+60A_2^3A_3+47A_2^2A_3A_2+43A_2A_3A_2^2+38A_3A_2^3-88A_2^5.\)

Taylor expansion of \(F(z^{(k)})\), using Eq. (11), is established as

where \(P_1=2A_2^2,\ P_2=4A_2A_3+3A_3A_2-9A_2^3,\ P_3=6A_2A_4+6A_3^2+4A_4A_2-18A_2^2A_3-14A_2A_3A_2-12A_3A_2^2+30A_2^4, \ \text {and} \ P_4=8A_2A_5+9A_3A_4+8A_4A_3+5A_5A_2-27A_2^2A_4-28A_2A_3^2-19A_2A_4A_2-24A_3A_2A_3-18A_3^2A_2-16A_4A_2^2+60A_2^3A_3+47A_2^2A_3A_2+43A_2A_3A_2^2+38A_3A_2^3-84A_2^5.\)

Consequently, the error equation at the \((k+1)^{th}\) iteration is derived by substituting the expressions of (7), (10), (11), and (12) in the final step of method (4), which is given by the expression

where \(Q_1=2(1-a-b-c)A_2^2,\ Q_2=(1-a-b-c)(4A_2A_3+3A_3A_2)-(9-13a-17b-21c)A_2^3, \ Q_3=(1-a-b-c)(6A_2A_4+6A_3^2+4A_4A_2)-2(9-13a-17b-21c)A_2^2A_3-2(7-10a-13b-16c)A_2A_3A_2-6(2-3a-4b-5c)A_3A_2^2+2(15-28a-47b-70c)A_2^4,\) and the expression of \(Q_4\), being lengthy, is not shown explicitly here.

Ultimately, there should be an optimum selection of parameters’ values so as to achieve the maximum possible convergence speed for the proposed scheme. In that sense, if we choose \(a=\frac{7}{2}\), \(b=-4\), and \(c=\frac{3}{2}\), then the coefficients \(Q_1\), \(Q_2\), and \(Q_3\) in Eq. (13) vanish. Further, the error equation is reduced to

Hence, the sixth order of convergence is proved for the iterative method (4). \(\square \)

The proposed sixth-order iterative method is finally presented below.

Clearly, the proposed method utilizes three function evaluations, two Jacobian matrices, and one Jacobian inversion per iteration. For further reference in this study, the technique (14) is denoted as \(\boldsymbol{\phi }_1\).
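The display of scheme (14) does not appear in this text, so the following Python sketch rests on an assumed form: two Potra and Pták substeps followed by a third step that applies the matrix polynomial \(aI + bX + cX^2\), with \(X = F'(x^{(k)})^{-1}F'(y^{(k)})\), to \(F'(x^{(k)})^{-1}F(z^{(k)})\), using \(a=\frac{7}{2}\), \(b=-4\), \(c=\frac{3}{2}\). This form agrees with the stated evaluation counts and with the leading error coefficients \(Q_1\) and \(Q_2\) quoted in the proof, but it is a reconstruction and should not be read as the authors' exact formula. The test system and all helper names are hypothetical.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def matvec(A, v):
    return [sum(a * vj for a, vj in zip(row, v)) for row in A]


def F(x):
    # Hypothetical test system with a simple root at (1, 1).
    return [x[0] ** 2 + x[1] ** 2 - 2.0, x[0] - x[1]]


def jacobian(x):
    return [[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]]


def sixth_order_step(x, a=3.5, b=-4.0, c=1.5):
    """One iteration of the ASSUMED three-step form described above."""
    Jx = jacobian(x)                       # first Jacobian, F'(x)
    y = [xi - di for xi, di in zip(x, solve(Jx, F(x)))]
    z = [yi - di for yi, di in zip(y, solve(Jx, F(y)))]
    Jy = jacobian(y)                       # second Jacobian, F'(y)
    w0 = solve(Jx, F(z))                   # F'(x)^{-1} F(z)
    w1 = solve(Jx, matvec(Jy, w0))         # X w0
    w2 = solve(Jx, matvec(Jy, w1))         # X^2 w0
    # Every linear system above has the same matrix Jx, so a real
    # implementation would factorize Jx once and reuse the factors.
    return [zi - (a * u0 + b * u1 + c * u2)
            for zi, u0, u1, u2 in zip(z, w0, w1, w2)]


def error(x):
    return max(abs(x[0] - 1.0), abs(x[1] - 1.0))


first = sixth_order_step([1.5, 0.8])
x = [1.5, 0.8]
for _ in range(3):
    x = sixth_order_step(x)
print("error after 1 step: ", error(first))
print("error after 3 steps:", error(x))
```

Double precision cannot resolve a sixth-order error decay over many iterations, so the sketch only illustrates the structure of the step and its rapid convergence on a small example.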

3 Computational Efficiency

Solving nonlinear systems using iterative procedures involves a large number of mathematical operations. Apart from achieving a high convergence order, an iterative algorithm should also be evaluated on the basis of its computational aspects. The term computational efficiency refers to how much computing resource an algorithm uses during its implementation. In what follows, the concept of computational efficiency is examined, and the new iterative method is compared with its existing counterparts in this context.

For locating the solution of a nonlinear system using an iterative method, initially, an approximation is selected in the neighborhood of the solution. Then, the iterative process is terminated using a specific criterion, which is generally prescribed as

where ‘k’ is the iteration index, ‘\(\epsilon \)’ is the desired precision, and ‘d’ is the number of significant decimal digits of the obtained approximation. To estimate the number of iterations required to achieve the desired accuracy, it is assumed that \(||x^{(0)} -x^* || \approx 10^{-1}\). Since a method of order r then has an error of about \(10^{-r^k}\) after ‘k’ iterative steps, we have the approximation \(10^{-d} \approx 10^{-r^k}\), which provides the required estimate \(k \approx \log d/\log r\), where r is the convergence order. Further, let the computational cost per iteration be represented by ‘C’; then the completed iterative process has a total computational cost equal to ‘kC’. The measure of computational efficiency, conventionally known as the efficiency index, is formulated in various ways in the literature. Ostrowski in [8] and Traub in [13] have independently provided this measure in different ways. However it is defined, the efficiency index is always reciprocally related to the cost of computation. Therefore, taking this reciprocal relationship into consideration, the efficiency index is evaluated as

Consider an m-dimensional function, \(F:\mathbb {R}^m \rightarrow \mathbb {R}^m\), \(F(x)=(f_1(x),..., f_m(x))^T\), where \(x=(x_1, x_2,..., x_m)^T\), then the estimation of computational cost per iteration is given by the formulation,

where \(N_0(m)\) and \(N_1(m)\) represent the number of evaluations of scalar functions in the computation of F and \(F'\), respectively, and \(N(m,\mu )\) stands for the number of product or quotient evaluations per iteration. The ratios \(\eta _0>0\) and \(\eta _1>0\), which interrelate the costs of products and functional evaluations, and a ratio \(\mu >1\), interrelating costs of products and quotients, are the necessary parameters in order to express \(C(m,\eta _0,\eta _1,\mu )\) in terms of product units. Let us note that evaluations of m and \(m^2\) scalar functions are required, respectively, to compute a function F and a derivative \(F'\). Additionally, to compute an inverse linear operator, and eventually to evaluate \(F'^{-1}F\), the technique of LU decomposition is employed that involves \(m(m-1)(2m-1)/6\) products and \(m(m-1)/2\) quotients, which is followed by the resolution of two triangular linear systems requiring \(m(m-1)\) products and m quotients. Further, m products for scalar-vector multiplication and \(m^2\) products for matrix-vector multiplication must be taken into account.
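The operation counts quoted above for the LU decomposition and the two triangular solves can be checked empirically. The Python sketch below runs an LU factorization without pivoting on a diagonally dominant test matrix, counts products and quotients, and compares them with the closed-form expressions. The matrix, the function names, and the choice to skip pivoting are assumptions made only for this illustration.

```python
def lu_solve_counting(A, b):
    """Solve A x = b by LU without pivoting; return x and (products, quotients)
    for the factorization and for the two triangular solves."""
    m = len(b)
    U = [row[:] for row in A]
    L = [[0.0] * m for _ in range(m)]
    products = quotients = 0
    # Factorization: eliminate below the diagonal, storing multipliers in L.
    for k in range(m - 1):
        for i in range(k + 1, m):
            L[i][k] = U[i][k] / U[k][k]
            quotients += 1
            for j in range(k + 1, m):
                U[i][j] -= L[i][k] * U[k][j]
                products += 1
    factor_counts = (products, quotients)
    # Forward substitution with the unit lower triangular factor.
    y = [0.0] * m
    for i in range(m):
        s = b[i]
        for j in range(i):
            s -= L[i][j] * y[j]
            products += 1
        y[i] = s
    # Back substitution with the upper triangular factor.
    x = [0.0] * m
    for i in range(m - 1, -1, -1):
        s = y[i]
        for j in range(i + 1, m):
            s -= U[i][j] * x[j]
            products += 1
        x[i] = s / U[i][i]
        quotients += 1
    solve_counts = (products - factor_counts[0], quotients - factor_counts[1])
    return x, factor_counts, solve_counts


def lu_cost_in_products(m, mu):
    """Cost of one LU solve in product units, with a quotient costing mu products."""
    prods = m * (m - 1) * (2 * m - 1) // 6 + m * (m - 1)
    quots = m * (m - 1) // 2 + m
    return prods + mu * quots


m = 6
# Diagonally dominant test matrix, so elimination without pivoting is safe.
A = [[(m + 1.0) if i == j else 1.0 / (1 + abs(i - j)) for j in range(m)]
     for i in range(m)]
b = [sum(row) for row in A]          # exact solution is the vector of ones
x, factor_counts, solve_counts = lu_solve_counting(A, b)
print("factorization (products, quotients):", factor_counts)
print("triangular solves (products, quotients):", solve_counts)
print("cost in product units for mu = 3:", lu_cost_in_products(m, 3))
```

For m = 6 the measured counts coincide with \(m(m-1)(2m-1)/6\) and \(m(m-1)/2\) for the factorization and with \(m(m-1)\) and m for the triangular solves.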

To analyze and compare the efficiency of the developed method, we have included the existing sixth-order methods developed by Bahl et al. [2], Cordero et al. [4], Esmaeili and Ahmadi [5], Lotfi et al. [6], Soleymani et al. [12], and Wang et al. [14]. For ready reference, these methods are expressed below and are denoted by \(\phi _i\), where \(i=2,3,\ldots ,7\).

Method by Bahl et al. (\(\phi _2\)):

Method by Cordero et al. (\(\phi _3\)):

Method by Esmaeili and Ahmadi (\(\phi _4\)):

Method by Lotfi et al. (\(\phi _5\)):

Method by Soleymani et al. (\(\phi _6\)):

Method by Wang et al. (\(\phi _7\)):

Denoting the computational costs and the efficiency indices, respectively, by \(C_i\) and \(E_i\), \(i=1,2, \ldots ,7\), and then taking into account the mathematical operations or computations described above, the computational costs and the corresponding efficiency indices are expressed as follows:

Here \(D = \log d.\)
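The iteration estimate \(k \approx \log d/\log r\) and the reciprocal dependence of efficiency on total cost can be illustrated with a few lines of Python. Because the displayed efficiency formula is not reproduced in this text, the sketch assumes the form \(E = 1/(kC) = \log r/(D\,C)\), which follows from the stated total cost kC and from \(D=\log d\); the function names and the sample costs are hypothetical.

```python
import math


def iterations_needed(d, r):
    """Estimated iterations to reach d significant digits with order r,
    assuming an initial error of about 10**-1."""
    return math.log(d) / math.log(r)


def efficiency_index(r, cost, d):
    """ASSUMED form E = 1 / (k * C) with k = log d / log r."""
    return 1.0 / (iterations_needed(d, r) * cost)


# A second-order method needs about 4 iterations for 16 digits,
# a sixth-order method about 2 iterations for 36 digits.
k_newton = iterations_needed(16, 2)
k_sixth = iterations_needed(36, 6)

# Hypothetical costs: the sixth-order method is taken to cost twice as
# much per iteration as the second-order one.
e_second = efficiency_index(2, 1.0, 100)
e_sixth = efficiency_index(6, 2.0, 100)
print(k_newton, k_sixth, e_second, e_sixth)
```

Under this assumed form, a sixth-order method remains more efficient than a second-order one as long as its cost per iteration is less than \(\log 6/\log 2 \approx 2.58\) times larger.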

3.1 Comparison of Efficiencies

Consider a ratio, for the comparison of iterative methods, say \(\phi _i\) versus \(\phi _j\), which is defined as

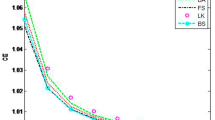

where \(r_i\) and \(r_j\), respectively, are the orders of convergence of the methods \(\phi _i\) and \(\phi _j\). Clearly, if \(\varPi _j^i>1\) holds, then \(\phi _i\) will be more efficient than \(\phi _j\), and we symbolize it as \(\phi _i\gtrdot \phi _j\). The proposed method, \(\boldsymbol{\phi }_1\), shall be compared analytically as well as geometrically with the existing methods, \(\phi _i \ (i=2,3,\ldots ,7)\), which are already presented above. The analytical way of comparison is the resolution of inequality \(\varPi _i^1>1\) for each \(i=2,3,\ldots ,7\), and the results obtained are presented geometrically by projecting the boundary lines \(\varPi _i^1=1\), in \((\eta _1,\eta _0)\)-plane, corresponding to the special cases of \(m=5,\ 10,\ 25,\ \text {and} \ 50\), and fixing \(\mu =3\) in each case. Let us note here that each line will divide the plane into two parts, where \(\boldsymbol{\phi }_1\gtrdot \phi _i\) on one side, whereas \(\phi _i\gtrdot \boldsymbol{\phi }_1\) on the other.

In view of the above discussion, we now present the comparison analysis through the following theorem:

Theorem 2

For all \(\eta _0>0\), \(\eta _1>0\), and \(\mu >1\), we have that

(i) \(E_1>E_2\), for \(\eta _0<\frac{1}{6}(2m^2-3m+19+3\mu (m-3))\).

(ii) \(E_1>E_3\) for \(m \ge 7\), and \(E_1<E_3\) for \(m=2,3\), but otherwise the comparison depends on the value of \(\mu \).

(iii) \(E_1>E_4\), for \(\eta _0<\frac{1}{6}(2m^2-15m+13+3\mu (m-3))\).

(iv) \(E_1>E_5\), for \(\eta _0<\frac{1}{6}(2m^2-3m+7+3\mu (m-1))\).

(v) \(E_1>E_6\), for \(\eta _0< \frac{1}{6}(2m^2+9m+1+3\mu (m-1))\).

(vi) \(E_1>E_7\), for \(\eta _0< \frac{1}{3}(2m^2-6m+7+3\mu (m-2))\).

Proof

\(\boldsymbol{\phi }_1\) versus \(\phi _2\) case:

The ratio in this case is

By resolving the inequality \(\varPi _2^1>1\), it is straightforward to deduce that \(\eta _0<\frac{1}{6}(2m^2-3m+19+3\mu (m-3))\), which concludes (i). The boundary lines \(\varPi _2^1=1\), in the \((\eta _1,\eta _0)\)-plane, are displayed in Fig. 1, where \(\boldsymbol{\phi }_1\gtrdot \phi _2\) in the region below the line for each particular case of m.

\(\boldsymbol{\phi }_1\) versus \(\phi _3\) case:

The ratio in this case is

It is easy to verify that, for \(\eta _0>0,\ \eta _1>0\), and \(\mu >1\), the inequality \(\varPi _3^1>1\) holds for \(m \ge 7\), and \(\varPi _3^1<1\) holds only for \(m=2,3\). For \(4\le m\le 6\), the inequality \(\varPi _3^1>1\) holds when \(\mu >\frac{2m^2-15m-5}{9-3m}\), and this eventually proves (ii). So, we conclude here that \(\boldsymbol{\phi }_1\gtrdot \phi _3\) for all \(m \ge 7\), whereas \(\boldsymbol{\phi }_1\lessdot \phi _3\) for \(m=2,3\), but otherwise, comparison depends on the value of \(\mu \).

\(\boldsymbol{\phi }_1\) versus \(\phi _4\) case:

The ratio in this case is

Resolution of the inequality \(\varPi _4^1>1\) results in \(\eta _0<\frac{1}{6}(2m^2-15m+13+3\mu (m-3))\), which concludes (iii). The boundary lines for this comparison, in the \((\eta _1,\eta _0)\)-plane, are shown in Fig. 2, where \(\boldsymbol{\phi }_1\gtrdot \phi _4\) in the region below the line for each case of m.

\(\boldsymbol{\phi }_1\) versus \(\phi _5\) case:

The ratio in this case is

The inequality \(\varPi _5^1>1\) resolves into the relation \(\eta _0<\frac{1}{6}(2m^2-3m+7+3\mu (m-1))\), and this proves (iv). In this comparison, the boundary lines are displayed in Fig. 3, where \(\boldsymbol{\phi }_1\gtrdot \phi _5\) holds in the region below the line for each particular case.

\(\boldsymbol{\phi }_1\) versus \(\phi _6\) case:

The ratio in this case is

It is straightforward to establish the relation \(\eta _0< \frac{1}{6}(2m^2+9m+1+3\mu (m-1))\) by resolving \(\varPi _6^1>1\), which eventually proves (v). The boundary lines, in this case, are presented in Fig. 4 with \(\boldsymbol{\phi }_1\gtrdot \phi _6\) on the lower side of each line.

\(\boldsymbol{\phi }_1\) versus \(\phi _7\) case:

The ratio in this case is

Resolution of the inequality \(\varPi _7^1>1\) results in the relation \(\eta _0< \frac{1}{3}(2m^2-6m+7+3\mu (m-2))\). This concludes (vi), and the boundary lines for this case are shown in Fig. 5, where \(\boldsymbol{\phi }_1\gtrdot \phi _7\) in the region which is below each boundary line. \(\square \)

From the above comparison analysis, it can be observed that the region of parameters in which the proposed iterative method is more efficient widens as m increases, since every bound on \(\eta _0\) in Theorem 2 grows quadratically with m. We conclude this section by noting that the larger the system, the more clearly the proposed sixth-order method outperforms the existing methods in terms of computational efficiency.
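The bounds of Theorem 2 are easy to tabulate. The Python sketch below evaluates the upper bounds on \(\eta _0\) from parts (i) and (iii)–(vi) and the lower bound on \(\mu \) from the proof of part (ii), for the values \(m=5,\ 10,\ 25,\ 50\) and \(\mu =3\) used in the geometric comparison. The function names are hypothetical; the formulas are copied from the theorem and its proof.

```python
def eta0_bounds(m, mu):
    """Upper bounds on eta_0 below which phi_1 beats phi_i (Theorem 2),
    keyed by the index i of the competing method."""
    return {
        2: (2 * m * m - 3 * m + 19 + 3 * mu * (m - 3)) / 6.0,
        4: (2 * m * m - 15 * m + 13 + 3 * mu * (m - 3)) / 6.0,
        5: (2 * m * m - 3 * m + 7 + 3 * mu * (m - 1)) / 6.0,
        6: (2 * m * m + 9 * m + 1 + 3 * mu * (m - 1)) / 6.0,
        7: (2 * m * m - 6 * m + 7 + 3 * mu * (m - 2)) / 3.0,
    }


def mu_bound_phi3(m):
    """Lower bound on mu for phi_1 to beat phi_3; meaningful for 4 <= m <= 6."""
    return (2 * m * m - 15 * m - 5) / (9.0 - 3 * m)


for m in (5, 10, 25, 50):
    bounds = eta0_bounds(m, 3.0)
    row = ", ".join(f"phi_{i}: {v:.2f}" for i, v in sorted(bounds.items()))
    print(f"m = {m:2d} -> {row}")

for m in (4, 5, 6):
    print(f"m = {m}: phi_1 beats phi_3 when mu > {mu_bound_phi3(m):.3f}")
```

A negative bound means that no positive \(\eta _0\) satisfies the inequality; for instance, the bound in part (iii) is negative for \(m=3\), so the comparison with \(\phi _4\) favors the proposed method only for larger systems.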

4 Numerical Experimentation

In this section, numerical experiments are carried out to assess the performance of the developed method. Nonlinear problems arising in different physical situations have been selected for this purpose. Moreover, to arrive at a valid conclusion, the outcomes of this testing are analyzed and compared with the corresponding results of the existing methods. Two of the most significant factors which contribute toward the numerical performance of an iterative technique are (i) stability and (ii) CPU time elapsed during its execution. All the numerical computations are executed using the software Mathematica [15] on a computer with an Intel(R) Core(TM) i5-9300H processor and the Windows 10 operating system.

In what follows, the comparison analysis shall be illustrated by locating the solutions of nonlinear problems, and for the termination of iterations, the stopping criterion being employed is described as follows:

In addition, the approximated computational order of convergence (ACOC) is required to validate the convergence order established by analytical means, which is computed by the formula (see [5]),

To connect the computational efficiency with the observed performance of a technique, it is necessary to estimate the parameters \(\eta _0\), \(\eta _1\), and \(\mu \), as defined in Sect. 3. These parameters are essential to express the mathematical operations and functional evaluations in terms of product units. To this end, Table 1 displays the CPU time elapsed during the execution of elementary mathematical operations and their estimated cost of computation in units of products. Note that the estimated cost of a division is approximately three times the cost of a product.
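As an illustration of how an approximated computational order of convergence can be obtained from iterates alone, the Python sketch below uses one common definition based on the norms of three consecutive differences of iterates. The formula displayed in the paper is not reproduced in this text, so this particular form is an assumption, as are the function names and the synthetic sequence used to exercise it.

```python
import math


def norm(v):
    return math.sqrt(sum(c * c for c in v))


def diff(u, v):
    return [a - b for a, b in zip(u, v)]


def acoc(x0, x1, x2, x3):
    """ACOC from four consecutive iterates (assumed, commonly used form):
    ln(|x3 - x2| / |x2 - x1|) / ln(|x2 - x1| / |x1 - x0|)."""
    d1 = norm(diff(x1, x0))
    d2 = norm(diff(x2, x1))
    d3 = norm(diff(x3, x2))
    return math.log(d3 / d2) / math.log(d2 / d1)


# Synthetic iterates approaching (1, 2) with errors 1e-1, 1e-2, 1e-4, 1e-8,
# that is, with the error squared at every step (second-order behavior).
root = [1.0, 2.0]
iterates = [[root[0] + e, root[1] + e] for e in (1e-1, 1e-2, 1e-4, 1e-8)]
order = acoc(*iterates)
print("estimated order:", order)
```

On this synthetic second-order sequence the estimate is close to 2; the approximation sharpens as the iterates approach the solution.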

Now, we consider the following nonlinear problems to demonstrate the performance analysis and display results in respect of the following: (i) Number of iterations (k), (ii) ACOC, (iii) Computational cost (\(C_i\)), (iv) Efficiency index (\(E_i\)), and (v) Elapsed CPU time (in seconds). To illustrate the efficiency indices of techniques, we have conveniently chosen \(D=10^{-5}\) for each of the problems.

Problem 1

Starting with the system of three nonlinear equations:

the initial estimate is taken as \(\left( -\frac{3}{2},-\frac{3}{2},-\frac{3}{2}\right) ^T\) to locate the particular solution,

For this particular problem, the parameters used in the equation (16) are estimated as \((m,\eta _0,\eta _1,\mu )=(3,2.33,0.67,2.81)\). Numerical results for the comparison are displayed in Table 2.

Problem 2

Consider the nonlinear integral equation (see [1]),

where \(t \in [0,1]\), and \(u \in C[0,1]\), with C[0, 1] being a space of all continuous functions defined on the interval [0, 1].

The given integral equation can be transformed into a finite-dimensional problem by partitioning the given interval [0, 1] uniformly as follows:

where \(h=1/k\) is the sub-interval length. Approximating the integral, appearing in the equation (18), using the trapezoidal rule of integration, and denoting \(u(t_i)=u_i\) for each i, we obtain the system of nonlinear equations as

where \(t_i=s_i=i/k\) for each i.

We solve this problem in particular by taking \(k=10\). Setting the initial approximation as \((\frac{1}{2},{\mathop {\cdots \cdots }\limits ^{10}},\frac{1}{2})^T\), the approximate numerical solution of the reduced system (19) is obtained as,

The numerical solution, so obtained, is compared graphically with the exact solution in Fig. 6, and further, numerical results are depicted in Table 3. Moreover, the parameters of Eq. (16) are estimated as \((m,\eta _0,\eta _1,\mu )\) = (10, 3, 1, 2.81).

Problem 3

Consider the boundary value problem (see [3]), which models the finite deflections of an elastic string under the transverse load, and it is presented as follows:

where ‘a’ is a parameter. The exact solution of the given problem is \(u(t)=\frac{1}{a^2}\ln \left( \frac{\cos (a(t-1/2))}{\cos (a/2)}\right) \). We intend to remodel the problem (20) into a finite-dimensional problem by considering the partition of [0, 1], with equal sub-interval length \(h=1/k\), as

Denoting \(u(t_i)=u_{i}\) for each \( i=1,2,\ldots ,k-1\), and approximating the derivatives involved in (20) by the second-order divided differences,

the system of \(k-1\) nonlinear equations in \(k-1\) variables is obtained as

where \(u_0=0\) and \(u_k=0\) are the transformed boundary conditions. In particular, setting \(k=51\), the above system reduces to 50 nonlinear equations. Further, choosing \(a=2\), and selecting the initial approximation as \((-1,{\mathop {\cdots \cdots }\limits ^{50}},-1)^T\), the approximate numerical solution so obtained, along with the exact solution, is plotted in Fig. 7. Further, the numerical performance of the methods is displayed in Table 4. The estimated values of parameters, used in Eq. (16), are given as \((m,\eta _0,\eta _1,\mu ) = (50,2,0.078,2.81).\)
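The discretization of Problem 3 can be sanity-checked against the exact solution. The displays of Eq. (20) and of the discretized system are not reproduced in this text, so the Python sketch below assumes the boundary value problem \(u''+a^2(u')^2+1=0\), \(u(0)=u(1)=0\), which the stated exact solution satisfies, together with central second-order differences for both derivatives. The function names are hypothetical.

```python
import math


def exact_u(t, a):
    """Exact solution quoted in the text."""
    return math.log(math.cos(a * (t - 0.5)) / math.cos(a / 2.0)) / a ** 2


def residual(u, a):
    """Residual of the ASSUMED discretized system at interior values
    u = (u_1, ..., u_{k-1}), with u_0 = u_k = 0 and h = 1/k."""
    k = len(u) + 1
    h = 1.0 / k
    full = [0.0] + list(u) + [0.0]
    res = []
    for i in range(1, k):
        second = (full[i - 1] - 2.0 * full[i] + full[i + 1]) / h ** 2
        first = (full[i + 1] - full[i - 1]) / (2.0 * h)
        res.append(second + a ** 2 * first ** 2 + 1.0)
    return res


k, a = 51, 2.0
u_exact = [exact_u(i / k, a) for i in range(1, k)]
u_start = [-1.0] * (k - 1)          # initial approximation from the text

res_exact = max(abs(r) for r in residual(u_exact, a))
res_start = max(abs(r) for r in residual(u_start, a))
print("system size:", len(u_exact))
print("max residual at exact solution:", res_exact)
print("max residual at initial guess: ", res_start)
```

The residual at the sampled exact solution is small but nonzero because it reflects the second-order truncation error of the finite differences, whereas the residual at the initial approximation is large, as expected before any iteration.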

Problem 4

Now let us take a system of nonlinear equations as follows:

By taking \(m=100\), we select the initial approximation \((1,{\mathop {\cdots \cdots }\limits ^{100}},1)^T\) to obtain the particular solution,

The estimated values of the parameters in this problem are \((m, \eta _0,\eta _1,\mu )\) = \((100, 175.24, 0.048,2.81)\). Further, Table 5 exhibits the comparison of the performance of methods.

Problem 5

At last, we consider a large system of equations:

where \(m=500\). The above given system has a particular solution,

and to obtain this solution, the initial estimate is taken as \(\left( \frac{1}{10},{\mathop {\cdots \cdots }\limits ^{500}},\frac{1}{10}\right) ^T\). Numerical results for the performance of methods are depicted in Table 6. Further, the values of parameters are estimated as

The findings of numerical experimentation signify the efficient and stable nature of the proposed sixth-order method. The results are remarkable with respect to the efficiency index and CPU time, and certainly favor the new method over its existing counterparts. Furthermore, computation of ACOC validates the theoretically established convergence order.

5 Conclusions

A three-step iterative technique, involving some undetermined parameters, has been designed for the solution of nonlinear equations. The design is driven by the objective of accelerating the convergence rate of the well-known third-order Potra-Pták scheme. The convergence analysis establishes the sixth order of convergence for a particular set of parameter values. Using only a single Jacobian inversion per iteration, the proposed iterative method is highly economical when analyzed in the context of computational complexity. This is affirmed by comparing the computational efficiency of the new method, analytically as well as visually, with the efficiencies of existing methods. Further, numerical performance is examined by locating the solutions of some selected nonlinear problems. The findings of this testing indicate the superiority of the proposed technique over its existing counterparts, especially for large-scale nonlinear systems.

References

1. Avazzadeh, Z., Heydari, M., Loghmani, G.B.: Numerical solution of Fredholm integral equations of the second kind by using integral mean value theorem. Appl. Math. Model. 35, 2374–2383 (2011). https://doi.org/10.1016/j.apm.2010.11.056

2. Bahl, A., Cordero, A., Sharma, R., Torregrosa, J.R.: A novel bi-parametric sixth order iterative scheme for solving nonlinear systems and its dynamics. Appl. Math. Comput. 357, 147–166 (2019). https://doi.org/10.1016/j.amc.2019.04.003

3. Cordero, A., Hueso, J.L., Martínez, E., Torregrosa, J.R.: Efficient high-order methods based on golden ratio for nonlinear systems. Appl. Math. Comput. 217, 4548–4556 (2011). https://doi.org/10.1016/j.amc.2010.11.006

4. Cordero, A., Hueso, J.L., Martínez, E., Torregrosa, J.R.: Increasing the convergence order of an iterative method for nonlinear systems. Appl. Math. Lett. 25, 2369–2374 (2012). https://doi.org/10.1016/j.aml.2012.07.005

5. Esmaeili, H., Ahmadi, M.: An efficient three-step method to solve system of nonlinear equations. Appl. Math. Comput. 266, 1093–1101 (2015). https://doi.org/10.1016/j.amc.2015.05.076

6. Lotfi, T., Bakhtiari, P., Cordero, A., Mahdiani, K., Torregrosa, J.R.: Some new efficient multipoint iterative methods for solving nonlinear systems of equations. Int. J. Comput. Math. 92, 1921–1934 (2014). https://doi.org/10.1080/00207160.2014.946412

7. Ortega, J.M., Rheinboldt, W.C.: Iterative Solution of Nonlinear Equations in Several Variables. Academic Press, New York (1970)

8. Ostrowski, A.M.: Solution of Equations and Systems of Equations. Academic Press, New York (1960)

9. Potra, F.A., Pták, V.: On a class of modified Newton processes. Numer. Funct. Anal. Optim. 2, 107–120 (1980). https://doi.org/10.1080/01630568008816049

10. Sharma, J.R., Gupta, P.: An efficient fifth order method for solving systems of nonlinear equations. Comput. Math. Appl. 67, 591–601 (2014). https://doi.org/10.1016/j.camwa.2013.12.004

11. Sharma, R., Sharma, J.R., Kalra, N.: A modified Newton-Özban composition for solving nonlinear systems. Int. J. Comput. Methods 17, 1950047 (2020). https://doi.org/10.1142/S0219876219500476

12. Soleymani, F., Lotfi, T., Bakhtiari, P.: A multi-step class of iterative methods for nonlinear systems. Optim. Lett. 8, 1001–1015 (2014). https://doi.org/10.1007/s11590-013-0617-6

13. Traub, J.F.: Iterative Methods for the Solution of Equations. Chelsea Publishing Company, New York (1982)

14. Wang, X., Kou, J., Gu, C.: Semilocal convergence of a sixth-order Jarratt method in Banach spaces. Numer. Algor. 57, 441–456 (2011). https://doi.org/10.1007/s11075-010-9438-1

15. Wolfram, S.: The Mathematica Book. Wolfram Media, USA (2003)

© 2023 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

Cite this paper

Sharma, J.R., Singh, H. (2023). A Computationally Efficient Sixth-Order Method for Nonlinear Models. In: Sharma, R.K., Pareschi, L., Atangana, A., Sahoo, B., Kukreja, V.K. (eds) Frontiers in Industrial and Applied Mathematics. FIAM 2021. Springer Proceedings in Mathematics & Statistics, vol 410. Springer, Singapore. https://doi.org/10.1007/978-981-19-7272-0_39

DOI: https://doi.org/10.1007/978-981-19-7272-0_39

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-7271-3

Online ISBN: 978-981-19-7272-0

eBook Packages: Mathematics and Statistics (R0)