Abstract

This paper deals with the estimation and classification of two logistic populations with a common scale and different location parameters. Utilizing the Metropolis–Hastings method, we compute the Bayes estimators of the associated unknown parameters. For this purpose, we consider gamma priors for the common scale parameter and normal priors for two location parameters. These Bayes estimators are compared with some of the existing estimators in terms of their bias and the mean squared error numerically. Moreover, utilizing these estimators for the associated parameters, we construct some classification rules in order to classify a single observation into one of the two logistic populations under the same model. The performances of each of the classification rules are evaluated through expected probability of misclassification, numerically. Finally, two real-life data sets have been considered in order to show the potential application of the model problem.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Bayes estimator

- Classification rules

- Expected probability of misclassification

- Metropolis–Hastings procedure

- Numerical comparison

1 Introduction

Suppose we have random samples from two logistic populations with a common scale parameter \(\sigma \) and possibly distinct and unknown location parameters \(\mu _{1}\) and \(\mu _{2}.\) Specifically, let  and

and  be m and n independent random samples taken from two logistic populations Logistic\((\mu _{1},\sigma )\) and Logistic\((\mu _{2},\sigma )\) respectively. Here we denote Logistic\((\mu _{i}, \sigma );\) \(i=1,2\) as the logistic population having the probability density function

be m and n independent random samples taken from two logistic populations Logistic\((\mu _{1},\sigma )\) and Logistic\((\mu _{2},\sigma )\) respectively. Here we denote Logistic\((\mu _{i}, \sigma );\) \(i=1,2\) as the logistic population having the probability density function

The problem is to classify a new observation into one of the two logistic populations using some of the estimators of the common scale parameter and two different location parameters. First, we will derive certain estimators of the associated parameters such as \(\sigma ,\) and the other two nuisance parameters \(\mu _{1}\) and \(\mu _{2}\) from a Bayesian perspective. Using these estimators, we will construct some classification rules to classify a new observation into one of the two logistic populations using training samples. The performances of all the estimators will be evaluated through their bias

and the mean squared error (MSE)

where ‘d’ is an estimator for estimating the parameter \(\theta .\)

In a general framework, the problem of classification is to classify a new observation, say z into one of the several populations/groups, say \(\pi _1,\) \(\pi _2,\) \(\ldots ,\) \(\pi _k,\) using certain methodologies and training data. The classification problem can be seen in machine learning for image classification, text classification, electrical science for signal classification, military surveillance for recognizing speech, in biomedical sciences for taxonomic study. It also has an application in computer science for classifying the object using receiver operating characteristic (ROC) curve.

Researchers in the past have considered the problem of classification under the equality assumption of parameters. For example, [12] considered the classification problem under equality assumption on the location parameters using training samples from several shifted exponential populations. Utilizing some well-known estimators of the common location parameter, the authors proposed several classification rules. The performances of all the rules have been evaluated using the expected probability of misclassification. Further, [13] considered the classification rules for the two Gaussian populations with a common mean. It is noted that the classification problem was first considered by Fisher in the year 1936 in a general framework. However, under multivariate normal model, the problem of classification was considered by [1, 2, 16]. Basu [5] proposed the classification rules for the two shifted exponential distribution. Note that [2] considered the classification problem under multivariate normal set up with a common co-variance matrix. This model reduces the problem under common standard deviation (scale) in the case of univariate normal.

Contrary to the above, significantly less attention has been paid to constructing classification rules and studying their performances when the distribution is not normal and exponential. However, there are many practical scenarios where the emerging data sets satisfactorily modeled by logistic distribution. In view of this, we take two logistic populations having a common scale parameter (which is also the standard error) and two different location parameters and construct several classification rules. In this regard, we have considered two real-life data sets from civil engineering science, and the other is related to food science, which has been satisfactorily modeled by using logistic distribution. The details of which have been discussed in Sect. 5. Using the estimators under the same model problem, we construct specific classification rules that may classify a new observation. The applications of logistic distribution are seen in several fields of study. Logistic distribution is use to describe the modeling agriculture production data, growth model in a demographic study (see [4]), analysis of biomedical data, life testing data and bio-assay (see [14, 21]).

Several authors have considered the problem of estimating parameters under equality restrictions in the past. For recent detailed and updates literature on estimating parameters under equality restriction under the various probabilistic model, we refer to [18,19,20, 23,24,25,26]. Recently, [20] studied the estimation of common scale parameter (\(\sigma \)) of two logistic populations with the unknown and unequal location parameters (\(\mu _{i}\)) from Bayesian point of view. They derived the MLEs and individual approximate Bayes estimators of the parameter using non-informative and informative priors, and using the Monte Carlo simulation study; they compare the performances of all the proposed Bayes estimators numerically.

The researchers studied the point and interval estimation of logistic population parameters, namely the location and scale parameters, from classical and Bayesian points of view. The first attempt to estimate the logistic distribution parameters was made by [9], where they derived the linear unbiased estimators based on sample quantiles and some approximate unbiased linear estimators and compared their efficiencies numerically. One may refer to [3, 7, 11, 17] for results on estimating scale and location parameters of a logistic distribution.

The remaining contribution of the current research work can be described as follows. In Sect. 2, we discuss the Bayes classification rule in a general framework and then obtained the classification rule for our proposed model set up. Consequently, using the maximum likelihood estimators (MLEs) of the associated parameters, we construct a classification rule. In Sect. 3, we consider Bayes estimators of the associated parameters using Markov chain Monte Carlo methods. Using these estimators, classification rule also has been constructed. In Sect. 4, we carry out a detailed simulation study and compute the bias and the mean squared error of the proposed estimators. Moreover, these estimators are compared with some of the existing estimators previously proposed by Nagamani [20] for the associated parameters. Further classification rules are constructed using the estimators proposed by Nagamani [20] and compared with the rules using MLEs and the MCMC method. In Sect. 5, we present two real-life data sets, which has been satisfactorily modeled by logistic distributions with common scale parameter. A new observation has been classified into one of the data sets, using the proposed classification rules. Concluding remarks are given in Sect. 6.

2 Classification Rule Using the MLE for Two Logistic Populations

In this section, we construct a classification rule using the MLEs of the associated parameters, \(\sigma ,\) \(\mu _{1}\) and \(\mu _{2}\) to the model (1.1).

Suppose we have training samples  and

and  from two Logistic populations \(\varPi _{1}\) and \(\varPi _{2}\) respectively, where \(\varPi _{i}\) is the population having density \(f_{i}(x;\mu _i,\sigma ),\) \(i=1,2.\) Now a new observation, say z is classified into the population \(\varPi _{1}\) if

from two Logistic populations \(\varPi _{1}\) and \(\varPi _{2}\) respectively, where \(\varPi _{i}\) is the population having density \(f_{i}(x;\mu _i,\sigma ),\) \(i=1,2.\) Now a new observation, say z is classified into the population \(\varPi _{1}\) if

and into the population \(\varPi _{2}\) if

Here C(i|j) is the cost of misclassification when an observation is from \(\varPi _j\) and is misclassified into the population \(\varPi _i,\) \(i\ne j,\) \(i,j=1,2.\) If we assume that \(C(2|1)=C(1|2)\) and \(q_{2}= q_{1},\) where \(q_{i}\) is the prior probability associated to the population \(\varPi _{i}\) then, the classification function for classifying an observation z into Logistic\((\mu _{1},\sigma )\) or Logistic\((\mu _{2},\sigma )\) obtained as

When the parameters are unknown, a natural approach is to replace the parameters by their respective estimators and obtain the classification rule. Thus utilizing the classification function W(z), we propose classification Rule R as: classify z into the population \(\varPi _{1}\) if \(W(z)\ge 0,\) else classify z into the population \(\varPi _{2}.\)

Let us denote P(i|j, R), (\(i\ne j,\) \(i,j=1,2\)) as the probability of misclassification for an observation from \(\varPi _{j}\) when it is misclassified into the population \(\varPi _{i}.\) The new observation z is classified into one of the two populations, such that the probability of misclassification becomes the minimum. We refer to Anderson [1], for the details of the classification rules using prior information.

In order to obtain the MLEs of the associated parameters, let us consider the log-likelihood function under the current model setup which is given by

where \(-\infty<\mu _i<\infty (i=1,2);\) \(\sigma >0.\)

Note that, under the same model setup the MLEs of the parameters \(\sigma ,\) \(\mu _{1}\) and \(\mu _{2}\) have been numerically obtained by Nagamani [20]. Let us denote the MLEs of the parameters \(\sigma ,\) \(\mu _{1}\) and \(\mu _{2}\) respectively by \(\hat{\sigma }_{ml},\) \(\hat{\mu }_{1ml}\) and \(\hat{\mu }_{2ml}.\) For computing the MLEs, using Newton–Raphson method, we have taken the initial guess as suggested by Nagamani [20].

Now using the MLEs of the parameters \(\mu _{1},\) \(\mu _{2}\) and \(\sigma ,\) we define a classification function, say \(W_{ML}(z)\) as,

Using this classification function \(W_{ML}(z),\) we propose a classification rule, say \(R_{ML}\) as: classify z into \(\varPi _{1}\) if \(W_{ML}(z) \ge 0,\) else classify z into the \(\varPi _{2}.\)

3 Bayesian Estimation of Parameters Using MCMC Approach and Classification Rule

It is noted that certain Bayes estimators of parameters using Lindley’s approximation method and different types of priors with respect to the squared error loss function and the LINUX loss function have been obtained by Nagamani [20]. Here, we consider Bayes estimators of the associated parameters using MCMC method along with the Metropolis–Hastings algorithm. Moreover, utilizing these estimators we propose a classification rule. Metropolis–Hastings algorithm is an important class of MCMC method, which is applied to get the samples from the posterior distribution effectively in a systematic manner.

A special case of Metropolis–Hastings method is the symmetric random walk Metropolis (RWM) algorithm. In this method, we take the Markov chain described by c alone to be a simple symmetric random walk, so that \(c(\delta , \delta ^{*}) =c(\delta ^{*}-\delta )\) that is the probability density c is a symmetric function. Suppose that a target distribution has probability density function \(\pi .\) Thus for given \(\delta _n,\) (at \(n^{th}\) state) we generate \(\delta ^{*}_{n+1}\) from a specified probability density, say \(q(\delta _{n},\delta ^{*})\) and is then accepted with probability \(\alpha (\delta _{n}, \delta ^{*}_{n+1})\) which is given by

If the proposed value is accepted, we set \(\delta _{n+1} = \delta ^{*}_{n+1},\) otherwise we reject it and assign \(\delta _{n+1}=\delta _{n}.\) The function \(\alpha (\delta , \delta ^{*})\) is chosen precisely to ensure that the Markov chain \(\delta _0, \delta _1, \dotsc , \delta _n\) is reversible with respect to the target probability density \(\pi (\delta ^{*}),\) in the sense that the target density is stationary for the chain. We refer to [6, 8, 10] for details of the procedure of the Metropolis–Hastings algorithm.

In order to estimate the parameters using Metropolis–Hastings algorithm, in the current model, we need to specify the prior distributions for the unknown parameters \(\sigma ,\) \(\mu _{1}\) and \(\mu _{2}.\) We assume that the three parameters are independent having the prior probability densities respectively as

where \(N(a_{i}, b_{i}^{2})\) denotes the normal distribution having mean \(a_{i}\) and variance \(b_{i}^{2}\) and \(\varGamma (c,d)\) is the gamma distribution having c as shape and d as scale parameters, respectively. These hyperparameters are known. The posterior probability density of the parameters is seen to be of the following forms.

where we denote \(g(\mu _{1},\sigma _{1},x_{i}){=}\exp \{-(\frac{x_{i}-\mu _{1}}{\sigma })\},\) \(h(\mu _{2},\sigma ,y_{j})=\exp \{-(\frac{y_{j}-\mu _{2}}{\sigma })\},\) \(K_{1}=\sum _{i=1}^{m}(x_{i}-\mu ),\) and \(K_{2}=\sum _{j=1}^{n}(y_{j}-\mu ).\) Note that, the posterior densities of \(\mu _{1},\) \(\mu _{2}\) and \(\sigma \) do not have any known distributional form. Hence, to generate samples from the posterior probability distributions of \(\mu _{1},\) \(\mu _{2}\) and \(\sigma ,\) we use the well-known random walk Metropolis–Hastings algorithm (RWM) which is a MCMC procedure. The details of the computational steps to generate \(\mu _{1},\) \(\mu _{2}\) and \(\sigma \) simultaneously using Metropolis–Hastings algorithm can be described as follows.

- Step-1::

-

Let the \(k^{th}\) state of Markov chain consists of \((\mu _{1}^{(k-1)}, \mu _{2}^{(k-1)}, \sigma ^{(k-1)}).\)

- Step-2::

-

In order to update the parameters using random walk Metropolis–Hastings algorithm (RWM), we generate \(\varepsilon _{1} \sim N(0,\sigma _{\mu _{1}}^{2}),\) \(\varepsilon _{2} \sim N(0,\sigma _{\mu _{2}}^{2})\) and \(\varepsilon _{3} \sim N(0,\sigma _{\sigma }^{2}).\) Set \(\mu _{1}^{(*)}=\mu _{1}^{(k-1)}+\varepsilon _{1},\) \(\mu _{2}^{(*)}=\mu _{2}^{(k-1)}+\varepsilon _{2},\) \(\sigma ^{(*)}=\sigma ^{(k-1)}+\varepsilon _{3}.\)

- Step-3::

-

Calculate the term \(\alpha (\mu _{1}^{(*)},\mu _{2}^{(*)},\sigma ^{(*)})\) as given (3.1).

- Step-4::

-

Define a term \(S=\min (1, \alpha (\mu _{1}^{(*)},\mu _{2}^{(*)},\) \(\sigma ^{(*)})).\) Generate a random number \(v \sim U(0,1).\) If \( v \le S,\) accept \((\mu _{1}^{(*)},\mu _{2}^{(*)},\) \(\sigma ^{*})\) and update the parameters as \(\mu _{1}^{(k)}= \mu _{1}^{(*)},\) \(\mu _{2}^{(k)}=\mu _{2}^{(*)}\) and \(\sigma ^{(k)}=\sigma ^{(*)},\) otherwise reject \(\mu _{1}^{(*)},\) \(\mu _{2}^{(*)}\) and \(\sigma ^{(*)}\) and set \(\mu _{1}^{(k)}=\mu _{1}^{(k-1)},\) \(\mu _{2}^{(k)}=\mu _{2}^{(k-1)}\) and \(\sigma ^{(k)}=\sigma ^{(k-1)}.\) Repeat this step for \(k=1,2,\ldots , K,\) where K is a large number suitably fixed.

In this computational method, the most important step is to choose the values of \(\sigma _{\mu _{1}}^{2},\) \(\sigma _{\mu _{2}}^{2}\) and \(\sigma _{\sigma }^{2}.\) One may refer to [6] regarding choice of these variances. The values of \(\sigma _{\mu _{1}}^{2},\) \(\sigma _{\mu _{2}}^{2}\) and \(\sigma _{\sigma }^{2}\) are chosen in such a way that a acceptance ratio should lie within the range from 20% to 30%. Now using these MCMC samples, we estimate the parameters \(\mu ,\) \(\sigma _{1}\) and \(\sigma _{2}\) respectively as

where \(M_{0}\) is the burn-in period for the RWM method.

Utilizing these Bayes estimators of the parameters \(\mu _{1},\) \(\mu _{2}\) and \(\sigma ,\) we construct a classification function, say \(W_{MC}(z)\) as

Using this classification function \(W_{MC}(z),\) we propose a classification rule, say \(R_{MC}\) as: classify z into \(\varPi _{1}\) if \(W_{MC}(z) \ge 0,\) else classify z into the \(\varPi _{2}.\)

Remark 1

We note that, all the existing estimators such as the MLE, Bayes estimators using different priors for the current model are compared with the Bayes estimator using MCMC approach in terms of MSE and bias numerically in Sect. 4. It has been noticed that the MLEs and the Bayes estimators using MCMC approach outperform the earlier estimators for all sample sizes. Hence, in the numerical comparison section, we only consider classification rules for comparison purpose based on the MLEs and the Bayes estimators using the MCMC approach.

4 Numerical Comparison of Classification Rules

In this section, we will evaluate and compare the performances of all the classification rules in terms of probability of misclassification, numerically. Note that none of the estimators, and hence the classification rules, could be obtained in closed form expressions. It is not possible to compare their performances analytically. However, utilizing the advanced computational facilities available nowadays, we have compared the performances of all the proposed rules numerically, which will be handy in certain practical applications.

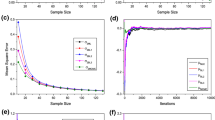

In Sect. 2, we have proposed the classification rule \(R_{ML}\) using the MLEs of the associated parameters for the two logistic populations. In Sect. 3, we have proposed the classification rule \({R}_{MC}\) utilizing the Bayes estimators through MCMC approach. In our simulation study, we have also included estimators proposed by Nagamani [20] and compared their MSE and bias with the Bayes estimator that uses MCMC approach (our proposed estimator). It has been noticed that the MLEs as well as the proposed Bayes estimator through MCMC approach always have minimum MSE and bias. Hence, we have not considered the classification rules based on the Bayes estimators proposed by Nagamani [20], for numerical comparison in terms of probability of misclassification.

In order to compute the probability of misclassification of the classification rules \(R_{ML}\) and \({R}_{MC},\) we proceed in the following manner.

- Step-1::

-

Generate training samples of size m from the logistic population Logistic\((\mu _{1},\sigma ).\) Then, similarly, we generate training samples of size n from another logistic population Logistic\((\mu _{2},\sigma ).\) Utilizing these training samples, we estimate the parameters \(\mu _{1},\) \(\mu _{2}\) and \(\sigma .\) In particular, we compute the MLEs and the Bayes estimators using MCMC approach that is we compute \(\hat{\mu }_{1ml},\) \(\hat{\mu }_{2ml},\) \(\hat{\sigma }_{ml},\) \(\hat{\mu }_{1mc},\) \(\hat{\mu }_{2mc},\) \(\hat{\sigma }_{mc},\) which are involved in the classification functions \(W_{ML}(z)\) and \(W_{MC}(z).\)

- Step-2::

-

Generate a new observation, say z from the logistic population Logistic\((\mu _{1},\sigma )\) and substitute it in the classification rules \(R_{ML}\) and \(R_{MC},\) then we check whether it belongs to the population \(\varPi _{1}\) or not. Similarly, we generate a new sample z from the logistic population \(\varPi _{2},\) and substitute it in the classification rules, then check whether it belongs to the population \(\varPi _{2}\) or not.

The above procedure is carried out using the well-known Monte Carlo simulation method with 20, 000 replications. A high level of accuracy has been achieved in the sense that the standard error in simulation is of the order of \(10^{-4}.\) . The probability of misclassification P(1|2) and P(2|1) using the probability frequency approach has been computed. In the computation of Bayes estimator using MCMC approach, the hyperparameters involved in the conjugate prior have been suitably taken as \(c=2.5,\) \(d=1\) (for the common scale parameter), \(a_1=2.5,\) \(b_1=3,\) \(a_2=2,\) \(b_2=1\) (in the case of location parameters). The computation also has been done using some other choices of hyperparameters; however, the probability of misclassification of \(R_{MC}\) changes insignificantly. In the MCMC method that uses Metropolis–Hastings algorithm, we have generated 50, 000 MCMC samples from the posterior density of \(\sigma ,\) \(\mu _{1}\) and \(\mu _{2}\) with burn-in period \(M_{0}=5000.\) In the simulation study, we have seen that for some rules, the P(1|2) may be small but P(2|1) may be high. In view of this and to have a better picture of the performance, we also define the expected probability of misclassification (EPM), given by

along with the probability of misclassifications, for comparing the rules. We consider the cost and the prior probabilities are equal, that is \(C(1|2)=C(2|1)\) and \(q_1=q_2,\) for convenience.

The simulation study has been carried out by considering various combinations of sample sizes and different choices of parameters. However, for illustration purpose we present the expected probability of misclassification of the rules \(R_{ML}\) and \({R}_{MC}\) for some specific choices of sample sizes and parameters. In Table 1, we have presented the EPM of the rules \(R_{ML}\) and \(R_{MC}\) for equal and unequal sample sizes, for fixed values of \(\mu _{1}\) and \(\mu _{2}\) with variation in scale parameter \(\sigma .\) The following observations were made from our simulation study as well as the Table 1, regarding the performances of the classification rules.

-

The expected probability of misclassification for the proposed rules decrease as the sample sizes increase. It is also noticed that for different location points (\(\mu _{i}\)) and fixing the scale parameter \(\sigma ,\) the EPM remains almost same. It seems that the rules are location invariant.

-

The rule \({R}_{MC}\) based on the MCMC procedure always performs better than the rule \(R_{ML}\) based on MLE in terms of expected probability of misclassification. It is further noticed that when \(\sigma \) is close to 4, the maximum values for EPM is 0.5 for both the rules.

-

Considering the EPM as measure of performance, the classification rule \({R}_{MC}\) has the lowest EPM value among all the proposed rules. Hence, we recommend to use the classification rule \({R}_{MC}\) for classifying a new observation into one of the two logistic populations.

5 Application with Real-Life Data Sets

In this section, we consider two real-life data sets which are related to compressive strength of bricks and dietary fiber content in food. It has been shown that the logistic distribution is a reasonable fit to these data sets. Further, Levene’s test with significance level 0.05 indicates that the equality of scale parameters cannot be rejected (see [20]).

Example 1

Nagamani [20] considered the data sets related to compressive strength (MPa) of clay bricks and fly ash bricks. The experiment was conducted by Teja [22] to determine the mechanical properties, such as initial rate of absorption (IRA), water absorption (WA), dry density (DD), and compressive strength (CS) of 50 brick units from each type. The compressive strength (MPa) of clay bricks and fly ash bricks is given as follows.

Clay Brick: 8.02, 7.31, 7.31, 7.87, 7.09, 9.75, 5.90, 6.76, 6.58, 7.70, 5.70, 6.56, 8.14, 7.28, 5.70, 7.38, 7.02, 5.95, 5.90, 5.67, 7.22, 6.76, 7.73, 8.65, 8.08, 7.98, 5.60, 8.66, 9.67, 8.18, 8.70, 4.86, 9.33, 7.77, 6.21, 7.74, 11.31, 9.13, 8.28, 7.09, 5.62, 11.88, 5.73, 9.21, 7.03, 9.07, 7.81, 6.70, 9.97, 8.85

Fly Ash Brick: 3.62, 4.74, 9.88, 5.93, 6.09, 6.94, 6.32, 5.30, 5.14, 4.55, 4.03, 7.36, 3.57, 3.98, 4.03, 4.74, 7.32, 3.23, 5.38, 7.18, 6.07, 3.62, 6.64, 5.58, 5.23, 3.95, 5.86, 5.58, 6.97, 5.05, 4.35, 4.55, 4.79, 4.03, 4.74, 7.58, 3.62, 6.01, 3.99, 6.04, 4.74, 7.21, 3.61, 5.69, 7.21, 6.40, 3.55, 8.70, 4.35, 7.51.

It is our interest to classify a new observation, say z into Clay Brick or Fly Ash Brick. So, we compute the estimators for the parameters \((\mu _{1},\mu _{2},\sigma )\) as \((\hat{\mu }_{1ml},\hat{\mu }_{2ml},\hat{\sigma }_{ml})=(7.53694,5.35400,0.84857)\) and \((\hat{\mu }_{1mc},\hat{\mu }_{2mc},\hat{\sigma }_{mc})=(7.02195,4.86868,0.78183).\) Utilizing these estimators, we compute the classification functions \(W_{ML}(z)\) and \(W_{MC}(z).\)

Suppose we have a new observation, say \(z=6.5\) and we want to classify this observation into one of the two types of bricks using our proposed classification rules. The values of classification functions are computed as \(W_{ML}= 0.07285\) and \(W_{MC}=-0.91663.\) The rule \(R_{ML}\) classifies \(z=6.5\) into the clay brick, whereas the rule \(R_{MC}\) classifies it into the fly ash brick.

Example 2

These data sets are related to the percentage of total dietary fiber (TDF) content in foods, such as fruits, vegetables, and purified polysaccharides. Li and Cardozo [15] conducted an inter laboratory (nine laboratories involved) study using a procedure as described by Association of Official Agricultural Chemists (AOAC) international to determine the percentage of total dietary fiber (TDF) content in food samples. The experiment was conducted for six different types of food samples, such as (a) apples, (b) apricots, (c) cabbage, (d) carrots, (e) onions, and (f) FIBRIM 1450 (soy fiber). The percentage of TDF using non-enzymatic-gravimetric method from nine laboratories for apples and carrots is given as, Apple: 12.44, 12.87, 12.21, 12.82, 13.18, 12.31, 13.11, 14.29, 12.08; Carrots: 29.71, 29.38, 31.26, 29.41, 30.11, 27.02, 30.06, 31.30, 28.37.

Nagamani [20] shown that logistic distribution fits these two data sets reasonably well. Their Levene’s test also confirms the equality of the scale parameters with level of significance 0.05. In order to classify an observation into these two types of foods, we compute the estimators of the parameters \((\mu _{1},\mu _{2},\sigma )\) as \((\hat{\mu }_{1ml},\hat{\mu }_{2ml},\hat{\sigma }_{ml})=(12.765,29.6497,0.5604)\) and \((\hat{\mu }_{1mc},\hat{\mu }_{2mc},\hat{\sigma }_{mc})=(12.65066,29.47925,0.63596).\) Utilizing these estimators, we compute the classification functions \(W_{ML}(z)\) and \(W_{MC}(z).\)

Suppose we have a new observation, say \(z=21.2\) and we want to classify this observation into one of the two types of foods using our proposed classification rules. The values of classification functions are computed as \(W_{ML}= 0.026588\) and \(W_{MC}=-0.314322.\) The rule \(R_{ML}\) classifies \(z=21.2\) into Apples, whereas the rule \(R_{MC}\) classifies it into the Carrots.

6 Concluding Remarks

In this note, we have considered the problem of classification into one of the two logistic populations with a common scale parameter and possibly different location parameters. It is worth mentioning that the same model was previously considered by Nagamani [20] and estimated the associated parameters. Specifically the authors had derived certain Bayes estimators using different types of priors and Lindley’s approximations. Utilizing their proposed estimators, and the one we have proposed (Bayes estimators using MCMC approach), we have constructed several classification rules. It has been seen that the proposed rule \(R_{MC}\) outperforms all other rules in terms of expected probability of misclassification. The application of our model problem has been explained using two real-life data sets.

References

Anderson TW (1951) Classification by multivariate analysis. Psychometrika 16(1):31–50

Classification into two multivariate normal distributions with different covariance matrices. Ann Math Stat 33(2):420–431

Asgharzadeh A, Valiollahi R, Abdi M (2016) Point and interval estimation for the logistic distribution based on record data. SORT: Stat Oper Res Trans 40(1):0089–112

Balakrishnan N (1991) Handbook of the logistic distribution. CRC Press, Dekker, New York

Basu AP, Gupta AK (1976) Classification rules for exponential populations: two parameter case. Theor Appl Reliab Emph Bayesian Non parametric Methods 1:507–525

Chib S, Greenberg E (1995) Understanding the Metropolis-Hastings algorithm. Am Stat 49(4):327–335

Eubank RL (1981) Estimation of the parameters and quantiles of the logistic distribution by linear functions of sample quantiles. Scandinavian Actuarial J 1981(4):229–236

Gilks WR (1996) Introducing Markov chain Monte Carlo. Markov chain Monte Carlo in practice, Chapman and Hall, London

Gupta SS, Gnanadesikan M (1966) Estimation of the parameters of the logistic distribution. Biometrika 53(3–4):565–570

Hastings WK (1970) Monte Carlo sampling methods using Markov chains and their applications. Biometrika 57(1):97–109

Howlader H, Weiss G (1989) Bayes estimators of the reliability of the logistic distribution. Commun Stat-Theor Methods 18(4):1339–1355

Jana N, Kumar S (2016) Classification into two-parameter exponential populations with a common guarantee time. Am J Math Manage Sci 35(1):36–54

Jana N, Kumar S (2017) Classification into two normal populations with a common mean and unequal variances. Commun Stat-Simul Comput 46(1):546–558

Kotz S, Balakrishnan N, Johnson NL (2004) Continuous multivariate distributions, Volume 1: models and applications. Wiley, New York

Li BW, Cardozo MS (1994) Determination of total dietary fiber in foods and products with little or no starch, nonenzymatic-gravimetric method: collaborative study. J AOAC Int 77(3):687–689

Long T, Gupta RD (1998) Alternative linear classification rules under order restrictions. Commun Stat-Theor Methods 27(3):559–575

Muttlak HA, Abu-Dayyeh W, Al-Sawi E, Al-Momani M (2011) Confidence interval estimation of the location and scale parameters of the logistic distribution using pivotal method. J Stat Comput Simul 81(4):391–409

Nagamani N, Tripathy MR (2017) Estimating common scale parameter of two gamma populations: a simulation study. Am J Math Manage Sci 36(4):346–362

Nagamani N, Tripathy MR (2018) Estimating common dispersion parameter of several inverse Gaussian populations: a simulation study. J Stat Manage Syst 21(7):1357–1389

Nagamani N, Tripathy MR, Kumar S (2020) Estimating common scale parameter of two logistic populations: a Bayesian study. Am J Math Manage Sci. https://doi.org/10.1080/01966324.2020.1833794

Rashad A, Mahmoud M, Yusuf M (2016) Bayes estimation of the logistic distribution parameters based on progressive sampling. Appl Math Inf Sci 10(6):2293–2301

Teja PRR (2015) Studies on mechanical properties of brick masonry. M. Tech. thesis, Department of Civil Engineering, National Institute of Technology Rourkela

Tripathy MR, Kumar S (2015) Equivariant estimation of common mean of several normal populations. J Stat Comput Simul 85(18):3679–3699

Tripathy MR, Kumar S, Misra N (2014) Estimating the common location of two exponential populations under order restricted failure rates. Am J Math Manage Sci 33(2):125–146

Tripathy MR, Nagamani N (2017) Estimating common shape parameter of two gamma populations: a simulation study. J Stat Manage Syst 20(3):369–398

Yang Z, Lin DK (2007) Improved maximum-likelihood estimation for the common shape parameter of several Weibull populations. Appl Stochastic Models Bus Industry 23(5):373–383

Acknowledgements

The authors would like to express gratitude to the two anonymous reviewers for valuable comments that led to improvements in the presentation of this article.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Kumar, P., Tripathy, M.R. (2022). Estimation and Classification Using Samples from Two Logistic Populations with a Common Scale Parameter. In: Ray, S.S., Jafari, H., Sekhar, T.R., Kayal, S. (eds) Applied Analysis, Computation and Mathematical Modelling in Engineering. AACMME 2021. Lecture Notes in Electrical Engineering, vol 897. Springer, Singapore. https://doi.org/10.1007/978-981-19-1824-7_14

Download citation

DOI: https://doi.org/10.1007/978-981-19-1824-7_14

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-1823-0

Online ISBN: 978-981-19-1824-7

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)