Abstract

Bionics is fundamentally based on the development of projects in engineering, design, architecture, and other fields that are inspired by the characteristics of a biological model organism. Bionics is essentially transdisciplinary: teams are composed of researchers trained in a variety of disciplines, who aim to find characteristics in nature and adapt them into innovative solutions. One of the key steps in a bioinspired project is the comprehensive study and analysis of biological samples, so that the desired features are correctly understood before they are applied. Among the natural elements most sought after as a basis for projects, plants are a major source of inspiration for bionic designs of structures and products, owing to their natural efficiency and high mechanical performance at the microscopic level, which is reflected in their functional morphology. Examining their microstructure is therefore crucial for adapting them into bioinspired solutions. In recent years, several new technologies for materials characterization and analysis have been developed, such as X-ray microtomography (µCT) and Finite Element Analysis (FEA), opening new possibilities for visualizing the fine structure of plants. Combining these technologies also allows plant material to be investigated virtually, simulating environmental conditions of interest and revealing intrinsic properties of its internal organization. Contrary to the expected flow of a conventional bionics methodology (from nature to project), these technologies, besides contributing to the development of innovative designs, also play an important role in plant science research. This chapter addresses how investigations of plant samples using these technologies for bionic purposes are generating new knowledge about the biological material itself.
An overview of the use of µCT and FEA in recent bionic research is presented, as well as how they are leading to new discoveries in plant anatomy and morphology. The techniques are described, highlighting their potential for biology and bionic studies, and case studies from the literature are shown. Finally, we present future directions in which new technologies may bridge the gap between project sciences and biodiversity so that both fields benefit.

Keywords

- Biomimetics

- Biomimicry

- X-ray microcomputed tomography

- Finite element analysis

- Monocotyledons

- Bamboo

- Bromeliaceae

- Micro-CT

1 Introduction

Drawing on billions of years of evolution by natural selection, bionics takes advantage of the attributes that have made each species successful to this day. This field of applied sciences is fundamentally associated with the development of bioinspired solutions based on a certain aspect or characteristic extracted from the natural world. Such solutions can be applied in projects from a variety of fields [6]: from product design [60, 92], architecture [61, 70, 101], engineering [20, 95], and materials science [31, 144, 145] to biomedicine [53, 128], management [105], and robotics [100]. Originally focused on military applications, most notably the development of SONAR, bionics can be defined, according to [136], “as the study of living and life-like systems with the goal to discover new principles, techniques, and processes to be applied in man-made technology”. One of the forerunners of this field was then-Major Jack Ellwood Steele of the US Air Force, who coined the term “bionics” in 1958 from the Greek βίος (“life”) and the suffix -ῐκός (“related to”, also “pertaining to” or “in the manner of”) in order to promote it as a new science [42]. The same suffix is found, for example, in “mechanics” (related to machines), “mathematics” (related to knowledge or learning), “dynamics” (related to power), and “aesthetics” (related to sensory perception). The term “biomimetics” (what is “related to the imitation of life”) was also proposed in the late 1950s, by the polymath Otto Herbert Schmitt [135]. Whatever the preference, both terms refer to the same connection between bioinspiration and applications aimed at benefiting society:

Let us consider what bionics has come to mean operationally and what it or some word like it (I prefer bio-mimetics) ought to mean in order to make good use of the technical skills of scientists specializing, or, more accurately, despecializing into this area of research. Presumably our common interest is in examining biological phenomenology in the hope of gaining insight and inspiration for developing physical or composite bio-physical systems in the image of life. [114]

From then on, several scientific events sought to disseminate these new areas of research, such as the first Bionics Symposium, “Living Prototypes—the Key to New Technology”, held in Dayton (OH, USA) in 1960 [38]. More recently, besides the appearance of these terms in popular media, further terms have gained attention, particularly in research papers; biomimicry and bioinspiration are notable examples. Regardless of terminology, all of them share the same goal: the technical analysis of natural elements with a view to applying them through multiple technologies for innovative results. Scientific publications show remarkable growth in bionics-related research. Figure 1 shows the annual number of published papers containing some of these “bio*-related” terms, including bionics, biomimetics, biomimicry, and bioinspiration, from 1990 to 2020. Notably, over the past decade the annual number of bio*-related papers has grown from around 3,000 to 10,000, according to the Web of Science™ platform. Bio*-related work is also evident beyond scientific publications. According to patent data retrieved from the same platform, approximately 0.002% of all patents published worldwide in 1990 were related to bionics; by 2020, that share had risen to about 0.074%. While the annual number of published patents increased about 14 times from 1990 to 2020, the annual number of bionic-related patents increased 395 times; i.e., over the past three decades, patents that use nature as a source of inspiration grew over 26 times faster than patents as a whole. This emphasizes the impact of R&D in bionics on innovation.
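The “over 26 times” comparison follows from dividing the two growth factors quoted above. The snippet below is a minimal check that uses only the rounded figures given in the text; the function and constant names are illustrative.

```python
def relative_growth(subset_factor: float, total_factor: float) -> float:
    """Return how many times faster a subset grew than the whole.

    Both arguments are growth factors over the same period (final count / initial count).
    """
    if total_factor <= 0:
        raise ValueError("total_factor must be positive")
    return subset_factor / total_factor


# Growth factors for 1990-2020, as rounded in the text (Web of Science data).
ALL_PATENTS_GROWTH = 14.0      # all published patents: "about 14 times"
BIONIC_PATENTS_GROWTH = 395.0  # bionic-related patents: "395 times"

ratio = relative_growth(BIONIC_PATENTS_GROWTH, ALL_PATENTS_GROWTH)
print(f"Bionic-related patents grew {ratio:.1f} times faster than patents overall")
```

With these rounded inputs the script prints a ratio of 28.2, consistent with the “over 26 times” stated; the exact figure depends on the unrounded patent counts.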

From trabeculated bone tissue and the biomechanics of animals to the cellular arrangement and seed dispersal of plants, nature has evolved a wide range of strategies for protection from predators, attracting pollinators, withstanding weather conditions, moving efficiently, and saving energy, among many others. The characteristics that make biological compounds, materials, structures, arrangements, or systems so interesting for applications can be found at all scales: from the biochemical composition of biopolymers (e.g., collagen, melanin, suberin, cellulose, lignin) [37, 131] to the complex equilibrium of entire ecosystems [110]. Hence, there are equally many ways to investigate a given natural characteristic, and, depending on the level at which it occurs or at which inspiration is sought, particular approaches must be adopted, in terms of techniques, equipment, and research protocols, to analyze it properly. If researchers choose wrong or incomplete investigation procedures, vital details of the desired characteristic may remain unresolved or unexplored, impairing the correct understanding of the “hows” and “whys” of the examined biological sample and, consequently, its correct application in a bioinspired project. Another key aspect of this type of investigation is counting on a transdisciplinary team. Even when the correct approaches and techniques are followed, the natural object of study still requires interpretation by a biologist. Beyond helping to explain a specific characteristic, a biologist can also suggest new directions in which the research can proceed to address the issue at hand. Therefore, finding the most adequate methods and specialists is as important as the objects of study themselves.

Plants are among the most fascinating biological objects of study, owing to their complexity across many levels of hierarchical organization. They are fantastic examples of how an organism can adapt to adverse external conditions in order to prosper in its environment, for instance, by efficiently depositing and shaping biopolymers, cells, and tissues where they are most mechanically needed. In his work “Plant Biomechanics: An Engineering Approach to Plant Form and Function”, Karl Niklas [82] emphasized that “plants are the ideal organisms in which to study form-function relations”. Their shape is primarily determined by the direction and rate of their growth [59], which is in turn directly related to the characteristics of each type of biological component and to how these components are arranged throughout the individual. This arrangement influences several physiological features of plants, from molecular and chemical to physical and mechanical, and it is the complexity of this organization that gives plants their fascinating attributes as materials. Mechanically, for instance, the hierarchical levels of plants, from the cell wall to the organization of supporting tissues, are the foundation of their properties [112]. The gradient distribution of material in plant stems can also be found at multiple scales, from the macroscopic to the microscopic and molecular [120]. One of the main examples of plants with outstanding and highly efficient structural properties is bamboo. Macroscopically, the hollow stem of this monocot is periodically divided by solid cross sections, called diaphragms, at the nodal regions. These perpendicular reinforcements act like ring stiffeners, increasing the overall flexural strength of the plant by preventing the stem from failing through ovalization of its cross section during buckling or bending [138].
When a ductile, thin-walled cylindrical structure is bent, it usually fails by buckling, when part of its length collapses because the initially circular section becomes elliptical and unstable [129]; i.e., the longitudinal tensile and compressive stresses in the tube tend to ovalize its cross section, which reduces its flexural stiffness and precipitates failure, a phenomenon known as the “Brazier effect” [10]. Galileo Galilei (1564–1642) demonstrated in 1638 that material placed at the periphery, rather than at the center, of a structure provides more resistance to bending, using the hollow stalks of grasses to illustrate it [83], in line with the effect described almost 300 years later. Microscopically, in the stem’s cross section, the scattered distribution of vascular bundles in monocots, named atactostele [29], in which the bundles are larger and sparser toward the center and smaller and more clustered toward the periphery, concentrates more material, with a higher relative density, in the regions subject to the greatest stresses [68, 92]. The higher the relative density, the higher the elastic modulus; the stem is therefore stiffer on its outer side, which increases its flexural rigidity (the product of the elastic modulus and the second moment of area) [37]. Although the sclerenchyma bundles are scattered, the parenchyma ground tissue can act as a matrix, in a composite analogy, distributing stresses throughout the plant even when a local pressure is applied [92]. Furthermore, by combining the sclerenchyma bundles with the low-density, foam-like parenchymatous ground tissue [22], this gradient distribution across the section also contributes to the plant’s high efficiency, i.e., considerable stiffness and strength at reduced weight. Even the geometry of each sclerenchyma bundle has been shown to play a role in the performance of the stem, by increasing the local compressive strength of the fibers [95].
Although cylindrical structures are considered more efficient in bending when the direction of the lateral load is unknown [37], the different shapes of bamboo’s vascular bundles [69] can be particularly efficient owing to their radial orientation in the culm. Because individual fiber bundles tend to bend locally toward the center, they micro-stiffen the structure during global bending of the stem [95]. Most failures observed in bamboo culms under bending or compression are due to fiber splitting [117], when the sclerenchyma detaches longitudinally from the parenchyma [41]. Even this failure mode is partly mitigated by the arrangement of the vascular bundles in the nodal region, where they run horizontally and interlace with the longitudinal bundles when connecting to new branches or leaves, thus preventing them from detaching completely [96, 99, 143]. All these features offer possibilities for bioinspired solutions. Once studied, they can be explored, individually or combined, as a source of inspiration for analogous bionic projects, such as bamboo-inspired designs of beams, columns, and thin-walled structures [18, 33, 57, 71, 146]. As previously mentioned, before the characteristics of a biological material are applied in a bionic or bioinspired design, the sample first has to be investigated through observation, analysis, or simulation. To benefit from such complex plant features, engineers and designers have to plan the investigation carefully, selecting the appropriate equipment and including a specialist from the biological sciences; in most bamboo-inspired design cases, a botanist was involved.
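The stiffness arguments above (material placed at the periphery, and stiffer tissue concentrated toward the outside of the stem) can be checked with a short calculation. The sketch below integrates the bending stiffness EI of a hollow circular section whose elastic modulus varies with radius. It then compares a radially graded wall with a uniform wall of the same area-weighted mean modulus, and a tube with a solid rod of equal cross-sectional area. All radii and moduli are hypothetical, normalized values chosen for illustration, not measurements of bamboo. The model also ignores the discrete bundle arrangement, anisotropy, and ovalization.

```python
import math


def flexural_rigidity(E_of_r, r_in, r_out, n=2000):
    """Bending stiffness EI of a hollow circular section with a radially varying modulus.

    EI = integral of E(r) * y**2 over the annulus = pi * integral_{r_in}^{r_out} E(r) r**3 dr,
    approximated with the midpoint rule on n thin rings.
    """
    dr = (r_out - r_in) / n
    total = 0.0
    for i in range(n):
        r = r_in + (i + 0.5) * dr
        total += E_of_r(r) * r**3 * dr
    return math.pi * total


def area_weighted_mean_modulus(E_of_r, r_in, r_out, n=2000):
    """Average modulus over the cross section, each ring weighted by its area 2*pi*r*dr."""
    dr = (r_out - r_in) / n
    total = 0.0
    for i in range(n):
        r = r_in + (i + 0.5) * dr
        total += E_of_r(r) * 2.0 * math.pi * r * dr
    return total / (math.pi * (r_out**2 - r_in**2))


# Hypothetical, normalized geometry (not measured bamboo data).
R_IN, R_OUT = 0.8, 1.0


def graded(r):
    """Hypothetical modulus rising linearly from 5 GPa (inner wall) to 25 GPa (outer wall)."""
    return 5.0 + 20.0 * (r - R_IN) / (R_OUT - R_IN)


E_mean = area_weighted_mean_modulus(graded, R_IN, R_OUT)
EI_graded = flexural_rigidity(graded, R_IN, R_OUT)
# Same area-weighted mean modulus, but spread uniformly across the wall.
EI_uniform = flexural_rigidity(lambda r: E_mean, R_IN, R_OUT)

# Galileo's point: a tube vs a solid rod with the same cross-sectional area.
r_solid = math.sqrt(R_OUT**2 - R_IN**2)
I_tube = math.pi * (R_OUT**4 - R_IN**4) / 4
I_solid = math.pi * r_solid**4 / 4

print(f"graded / uniform EI: {EI_graded / EI_uniform:.3f}")
print(f"tube / solid second moment of area: {I_tube / I_solid:.2f}")
```

With these inputs the graded wall comes out roughly 5% stiffer in bending than the uniform one, and the tube’s second moment of area is about 4.6 times that of the solid rod. In this simple model, the graded-wall gain grows with the modulus contrast across the wall.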

The growth of knowledge in plant anatomy and plant morphology is closely linked to technological advances in biological imaging (bioimaging). Plant anatomy is considered of primary importance for all lines of research in plant sciences [29], and its first investigations date back to the seventeenth century [27]. In the middle of that century, with the development of early microscopes, Robert Hooke (1635–1703) introduced the term “cell” in his book “Micrographia” [54] (from the Latin “cella”, a small enclosed space [37, 141]) when observing cork samples, in reference to the small cavities surrounded by walls [27]. A few years later, Nehemiah Grew (1641–1712) first described plant tissues in his book “The Anatomy of Plants” [40], published in English in 1682 [64]. While writing it, Grew sought Hooke’s advice on how cells expand and why some tissues are stiffer and stronger than others [83]. Grew also recognized that plant tissues needed to be conceptualized in 3D because they were composed of microscopic structures with distinct spatial relationships [13]. In 1838, botanist Matthias Schleiden (1804–1881) expanded the understanding of cells by reporting that all plant tissues consist of organized masses of cells and that these basic units do not differ fundamentally in structure; this was later extended by zoologist Theodor Schwann (1810–1882) to all animal tissues. The cell theory of Schleiden and Schwann was thus proposed for all forms of life [28, 64, 137], which also marked the formal birth of “cell biology” as a new and still unexplored field of science [1].
The next major step, understanding the cell’s basic principles and functions, came when advances in staining, lighting techniques, and optics increased the contrast and resolution with which the cell’s internal structures could be viewed, especially after the introduction of transmission electron microscopy (TEM) at the beginning of the 1940s [1]. The evolution of photomicrography followed the studies of Thomas Wedgwood (1771–1805), William Talbot (1800–1877), and others, in which images were captured with solar microscopes [88]. In modern times, digital acquisition and image processing have overcome limitations of the human eye, such as its poor sensitivity at low brightness and to small differences in light intensity against a luminous background, so that CCD (charge-coupled device) and CMOS (complementary metal-oxide semiconductor) sensors have become an integral part of the microscope system. Moreover, because images are acquired in digital format, software can correct brightness, contrast, and noise, reducing artifacts and the limitations of the optical system and the human eye. More recently, advances in bioimaging technologies have pushed the boundaries of interpretation regarding the structure and morphology of biological samples. In the modern timeline, a milestone is the discovery of X-rays in 1895 by the German engineer and physicist Wilhelm Röntgen (1845–1923) [109], which rapidly led to the proliferation of X-ray equipment in medicine, as it allowed a clear visual separation between soft and hard tissues [4]. Later, physicist Ernst Ruska (1906–1988) and electrical engineer Max Knoll (1897–1969) presented the first full design of the electron microscope [63], after years of development of electron-based imaging [39].
In 1936, the first commercial transmission electron microscope was presented in the UK, although it was not completely functional, and TEMs only became commercially available after the end of World War II [139]. In 1935, Knoll also presented a prototype of the first scanning electron microscope (SEM), which was improved by Manfred von Ardenne in 1938 and made functional by Vladimir K. Zworykin and his research group at the Radio Corporation of America in 1942 [72]. Even though both TEM and SEM require specific sample preparation protocols [2], they became some of the most important pieces of scientific equipment for the study of materials in plant sciences and many other fields, up to the present day [15]. However, reconstructing important microstructural details from TEM and SEM images and visualizing the observed specimen volumetrically in 3D still required trained eyes [50]. Later, preliminary works employing atomic force microscopy (AFM) were published in the mid-1990s, mainly focusing on the topography of pollen grains [19, 111]. Although the authors highlighted the high resolution, the 3D representation of the surface, and the absence of specific preparation methods, they stated that AFM was generally still limited by its depth of field. Marvin Minsky’s search for the three-dimensional structure of neural connections led him to propose the principle of confocality in 1957, and its basic principles have been used in all confocal microscopes since then. Modern confocal laser scanning microscopy has been a powerful tool for elucidating complex 3D internal structures of cells and tissues, and it allows dynamic processes, such as rearrangements of the cytoskeleton and chromosomes, to be visualized in living cells. Despite its benefits, the technique has a limited penetration depth, ranging from 150 to 250 µm [23, 89].
The choice of methodology is therefore an important step for the research team, since each technique has advantages and limitations regarding image resolution, sample size, and physical preparation, including the need for sectioning [48]. In parallel, 3D imaging progressed, and mathematical models were critical in allowing a better comprehension of the complexity of the biological world. In 1917, Austrian mathematician Johann Radon (1887–1956) proved [106] that an object in an \(N\)-dimensional space can be reconstructed from the complete set of its \((N-1)\)-dimensional projections [116]. These mathematical and physical foundations, especially through the work of English electrical engineer Godfrey Hounsfield (1919–2004) and South African physicist Allan Cormack (1924–1998), led to the first feasible X-ray computed tomography (CT) scanners in the early 1970s [56].
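For reference, the 2D case of Radon’s result, which CT applies slice by slice, is usually written as follows. This is the standard textbook formulation for parallel-beam projections in our own notation (f is the attenuation map, p_θ its projection at angle θ, and P_θ the 1D Fourier transform of p_θ), not a transcription of [106]:

```latex
% Radon transform of a 2D attenuation map f(x, y): line integrals at angle \theta, offset s
p_\theta(s) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty}
    f(x, y)\,\delta\!\left(x\cos\theta + y\sin\theta - s\right)\,\mathrm{d}x\,\mathrm{d}y

% Inversion by filtered back-projection, with P_\theta(\omega) = \int p_\theta(s)\,e^{-2\pi i \omega s}\,\mathrm{d}s
q_\theta(s) = \int_{-\infty}^{\infty} |\omega|\,P_\theta(\omega)\,e^{2\pi i \omega s}\,\mathrm{d}\omega,
\qquad
f(x, y) = \int_{0}^{\pi} q_\theta\!\left(x\cos\theta + y\sin\theta\right)\mathrm{d}\theta
```

Because the inversion integrates over all angles 0 ≤ θ < π, the specimen has to be imaged over at least half a rotation, in many small angular steps.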

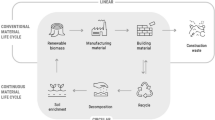

In addition to the findings in biomedical sciences, the dissemination of new 3D observation technologies has added new levels of comprehension to many disciplines, including traditional fields such as plant sciences. Consequently, once enough information about a given biological sample is gathered, improvements and entirely new concepts for bionic designs can be proposed. However, although the “biology–technology–bionics” direction is well established, its orientation can be questioned. This chapter addresses how technological advances are contributing not only to innovation in bioinspired design but also how bionics, as a multidisciplinary approach, is acting as a driving force behind discoveries in plant anatomy and morphology. First, state-of-the-art 3D technologies for analysis and simulation are presented, with a focus on X-ray microtomography (µCT) and Finite Element Analysis (FEA), including examples of recent studies that benefit from both multidisciplinary teams and technological advances. Second, the role of biomedical imaging in botanical investigation is discussed, showing the importance of 3D technologies for new insights and how they are improving knowledge of biodiversity as a whole. Finally, the last topic gives an overview of upcoming technologies and the outlook for future studies in plant sciences and bionics.

2 3D Technologies: Analyses and Simulations

As awareness of the inherent complexity of biological materials has increased, particularly regarding plants, the need for 3D technologies to observe and analyze samples has become more evident. In the pioneering studies of plant anatomy by Hooke and Grew, higher optical magnification was needed to visualize small structures such as tissues and cells. In recent decades, however, this demand has expanded to understanding the 3D hierarchy and organization of those fine details, leading to a new comprehension of plant anatomy and morphology. In addition, in-depth functional analyses and simulations have led to insights into plant physiology, as well as into mechanical and physical responses to external stimuli.

Technological advances are making these tools more accessible to researchers from many fields, which contributes directly to findings in many areas. Plant materials generally have a fine, detailed architecture, a complex arrangement of structures, multiple levels of hierarchy, and a combination of delicate, soft cells, tissues, and organs with stiff, hard ones. One of the main ways of documenting, analyzing, and transmitting knowledge in botany is through illustrations, which are found in most of the specialized literature [27]. Using instruments such as microtomes and microscopes, anatomists observe thin sections that reveal valuable information about the constituent tissues and cells. Each section can be recorded manually or digitally in order to understand the entire structure. Traditionally, however, the volumetric reconstruction of the general aspect of the biological material relies on the researcher’s deduction, through interpretation of the observed serial sections [115]. Therefore, the ability to investigate the biological material with a high-resolution, non-destructive technique is key not just to ensuring the integrity of the sample but also to understanding its true morphology [93]. In this section, we cover two of the main 3D technologies for investigating plants, for both qualitative and quantitative analyses, along with numerical simulations.

2.1 X-Ray Microtomography (µCT)

Since the first developments of 3D X-ray imaging with computed tomography (CT) equipment in the mid-1970s [55], the technique has become a well-established, routinely employed modality in diagnostic radiology in hospitals worldwide [45]. Soon after its popularization, CT imaging evolved toward higher-resolution scans, giving rise to what is now called X-ray microtomography (or microcomputed tomography), µCT. Stock [126] highlights that µCT technology has developed more slowly than clinical CT for clear economic reasons: investments in more expensive technologies are far more acceptable in hospitals, where the benefits to patients are straightforward, than in research centers, where µCT is most commonly employed. However, the need to investigate small animals for the study of human diseases led biomedical researchers to emphasize higher-resolution CT, prompting investment in commercial µCT equipment in the mid-to-late 1990s [126]. The development of more affordable lab-scale µCT was also a crucial step toward democratizing the technology. Once restricted to a small number of large synchrotron-based research facilities, high-resolution X-ray scanners have attracted the attention of major manufacturers, such as Bruker™ (Kontich, Belgium), Zeiss™ (Pleasanton, CA, USA), North Star Imaging™ (Rogers, MN, USA), General Electric™ (Wunstorf, Germany), and others.

The number of research papers that use X-ray microtomography as the primary investigation method has been growing recently in a variety of fields [91]. Assessing them is rather difficult, though, owing to the numerous names used for the technique (e.g., X-ray microcomputed tomography, X-ray microtomography, high-resolution computed tomography, X-ray nanotomography, X-ray microfocus, to name a few) and its several abbreviations: µCT, micro-CT, nCT, HRCT, HRXCT, and others. Even among µCT manufacturers the terminology varies: micro-CT (Bruker™), X-ray microscopy (Zeiss™), X-ray system (North Star Imaging™), microfocus CT (General Electric™), etc. Still, the advantages of employing the technique in plant science research workflows are vast. First, a biological sample can be digitized volumetrically rather than only at its surface, as in other observation techniques such as scanning electron microscopy and atomic force microscopy. This “bulk”, volumetric scanning is particularly important to avoid multiple passes of surface digitization of the same sample (as with SEM or AFM), each performed after removing some material. Second, unlike conventional clinical CT, µCT uses a smaller X-ray focal spot and higher-resolution detectors, among other differences, achieving a much higher level of detail in the resulting virtual model. Because the number of detector pixels is finite, and to keep bioimaging datasets manageable, the higher the chosen spatial resolution, the smaller the field of view must be. Third, as with clinical CT, µCT allows the 3D model to be sectioned digitally in virtually any plane, so the researcher can investigate the sample without destroying it.
When working with fragile or delicate specimens, like many plant materials, this is essential to ensure the integrity of cells, tissues, and structures. Fourth, besides morphological analysis, binarization and segmentation procedures can divide the 3D model into regions of interest (ROIs), allowing quantitative data to be collected and assessed, such as counts (number of cells) and sizes (volumes, lengths, surface areas), using a number of proprietary software suites or open-source alternatives such as Fiji, a distribution of ImageJ [113]. Many µCT systems also offer in situ micro- and nanomechanical testing (including compression, tension, and indentation), so that the sample can be digitized throughout the experiment. Fifth, unlike many lab-based techniques used in plant sciences, µCT often requires little or no sample preparation prior to scanning, such as fixation, embedding, staining, or metal coating. Contrast agents (e.g., phosphotungstate, osmium tetroxide, bismuth tartrate) are sometimes used; however, with up-to-date µCT features such as phase contrast and dark-field imaging, soft tissues can be distinguished more easily in untreated samples.
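The binarization, segmentation, and counting/sizing steps described above can be illustrated with a deliberately small pure-Python sketch. It applies a global threshold, labels 6-connected regions by breadth-first search, and converts voxel counts into physical volumes. The threshold, synthetic volume, field of view, and detector width are hypothetical, and voxel size is assumed to be simply field of view divided by detector pixels. The function names are illustrative, not the API of Fiji or any commercial suite. Real scans usually need denoising and more robust thresholding (e.g., Otsu or local methods) first.

```python
from collections import deque


def binarize(volume, threshold):
    """Global threshold: voxels brighter than `threshold` become foreground (1), others 0."""
    return [[[1 if v > threshold else 0 for v in row] for row in plane] for plane in volume]


def label_components(mask):
    """Label 6-connected foreground regions by breadth-first search.

    Returns (labels, sizes): labels has the shape of mask (0 = background) and
    sizes maps each region label (1, 2, ...) to its voxel count.
    """
    nz, ny, nx = len(mask), len(mask[0]), len(mask[0][0])
    labels = [[[0] * nx for _ in range(ny)] for _ in range(nz)]
    sizes = {}
    steps = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))
    current = 0
    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                if not mask[z][y][x] or labels[z][y][x]:
                    continue  # background, or already part of a labeled region
                current += 1
                labels[z][y][x] = current
                queue, count = deque([(z, y, x)]), 0
                while queue:
                    cz, cy, cx = queue.popleft()
                    count += 1
                    for dz, dy, dx in steps:
                        zz, yy, xx = cz + dz, cy + dy, cx + dx
                        if (0 <= zz < nz and 0 <= yy < ny and 0 <= xx < nx
                                and mask[zz][yy][xx] and not labels[zz][yy][xx]):
                            labels[zz][yy][xx] = current
                            queue.append((zz, yy, xx))
                sizes[current] = count
    return labels, sizes


# Hypothetical acquisition: a 20 mm field of view imaged onto 2000 detector pixels.
FOV_MM, DETECTOR_PX = 20.0, 2000
voxel_um = FOV_MM * 1000.0 / DETECTOR_PX  # isotropic voxel edge; a smaller FOV gives finer voxels

# Tiny synthetic grayscale volume (z, y, x): dim background with two bright "bundles" along z.
NZ, NY, NX = 4, 6, 6
volume = [[[40] * NX for _ in range(NY)] for _ in range(NZ)]
for z in range(NZ):
    for y, x in [(1, 1), (1, 2), (2, 1), (2, 2)]:
        volume[z][y][x] = 200  # 2 x 2-voxel bundle
    volume[z][4][4] = 180      # 1-voxel bundle

mask = binarize(volume, threshold=100)
labels, sizes = label_components(mask)
volumes_um3 = {k: n * voxel_um**3 for k, n in sizes.items()}
print(f"voxel size: {voxel_um} µm; regions: {len(sizes)}; volumes (µm³): {volumes_um3}")
```

For this toy volume the sketch finds two regions of 16 and 4 voxels. Halving the field of view at the same detector width would halve the voxel edge and shrink each voxel’s volume eightfold, which is the resolution versus field-of-view trade-off mentioned above.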

µCT scanning relies on the interaction of X-rays with matter, and the digitization procedure is often misunderstood by researchers. Fundamentally, the sample is irradiated by the X-ray source; the beam is attenuated to different degrees by regions of different local density and is captured by a detector (a scintillator coupled to a CCD sensor), forming a 2D projection of the sample on a plane perpendicular to the X-ray beam. The sample is then rotated step by step, and a new 2D projection is captured at each angle. After a full (or half) rotation, all projections are combined by a reconstruction algorithm, typically filtered back-projection or, for cone-beam lab systems, its Feldkamp–Davis–Kress (FDK) variant, which recovers the spatial distribution of X-ray attenuation as a 3D volume. Finally, this volume can be exported as a stack of grayscale slices, in which the darker a pixel, the lower the attenuation (broadly, the density) at that location; the stack can then be post-processed, rendered in 3D (with each slice pixel treated as a voxel), and used for qualitative and quantitative analyses. In the X-ray tube, the higher the potential difference (voltage) between the cathode and anode, the higher the kinetic energy of the electrons and, consequently, of the emitted photons. When striking the target (typically tungsten, owing to its high atomic number and melting point), the electrons decelerate, producing Bremsstrahlung (a continuous spectrum) and characteristic radiation (discrete peaks). Besides the tube voltage, the tube current (the electron flow between cathode and anode) and the exposure time per projection are the main µCT parameters to consider.
In short, the higher the product of current and exposure time, the more photons reach the sample, which may reduce noise but can also decrease the effective resolution due to scattering. Likewise, the higher the tube voltage, the higher the X-ray energy and the broader its spectrum, which suits larger samples but may reduce contrast due to detector saturation. As a rule of thumb, for plant samples it may be advisable to use relatively low voltage (~70–80 kV) and current (~90–130 µA), adjusted to the size of the specimen, given the generally low density of the material. Moreover, smaller samples are preferable for obtaining higher resolution, less noise, and higher contrast during digitization. Further physical principles of µCT are beyond the scope of this chapter and can be found in [8, 45, 87, 126].
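The acquisition and reconstruction steps described above can be sketched in 2D. The example simulates a scan of a uniform disk and applies the Beer–Lambert law to obtain detector intensities. It then converts them back into line integrals with p = −ln(I/I₀) and reconstructs the slice by filtered back-projection with a Ram-Lak ramp filter. This is a simplified sketch that assumes parallel-beam geometry and noise-free, monochromatic X-rays. I0, RADIUS, MU, the detector sampling, and the number of views are hypothetical values. Lab µCT systems use cone-beam geometry, polychromatic spectra, and calibration steps that are not modeled here.

```python
import math


def disk_projection(s, radius, mu):
    """Exact line integral through a uniform disk of attenuation mu centred at the origin."""
    return 2.0 * mu * math.sqrt(radius**2 - s**2) if abs(s) < radius else 0.0


def ram_lak(n):
    """Spatial-domain ramp (Ram-Lak) filter kernel for unit detector spacing."""
    if n == 0:
        return 0.25
    if n % 2 == 0:
        return 0.0
    return -1.0 / (math.pi * n) ** 2


def filtered_back_projection(sinogram, offsets, angles, grid):
    """Parallel-beam FBP: ramp-filter each projection, then smear it back over the image."""
    n_det = len(offsets)
    s0 = offsets[0]  # detector bins are assumed evenly spaced, 1 pixel apart
    filtered = [
        [sum(ram_lak(i - k) * proj[k] for k in range(n_det)) for i in range(n_det)]
        for proj in sinogram
    ]
    image = []
    for y in grid:
        row = []
        for x in grid:
            acc = 0.0
            for q, theta in zip(filtered, angles):
                t = x * math.cos(theta) + y * math.sin(theta) - s0  # position in bin units
                i = math.floor(t)
                if 0 <= i < n_det - 1:  # linear interpolation between neighbouring bins
                    w = t - i
                    acc += (1.0 - w) * q[i] + w * q[i + 1]
            row.append(acc * math.pi / len(angles))
        image.append(row)
    return image


# Hypothetical scan: a uniform disk, 90 views over half a rotation, 65 detector bins.
I0 = 1.0e5                 # unattenuated intensity per detector bin (arbitrary units)
RADIUS, MU = 20.0, 0.02    # disk radius (pixels) and attenuation coefficient (1/pixel)
offsets = [float(k) for k in range(-32, 33)]
angles = [math.pi * j / 90 for j in range(90)]

sinogram = []
for _ in angles:  # a centred disk gives the same projection at every angle
    intensities = [I0 * math.exp(-disk_projection(s, RADIUS, MU)) for s in offsets]  # Beer-Lambert
    sinogram.append([-math.log(I / I0) for I in intensities])  # back to line integrals

grid = [float(v) for v in range(-30, 31, 6)]  # coarse 11 x 11 reconstruction grid
image = filtered_back_projection(sinogram, offsets, angles, grid)
print(f"centre: {image[5][5]:.4f}  outside disk: {image[9][5]:.4f}  (true: {MU} and 0)")
```

The reconstructed value at the center should come out close to MU and the value outside the disk close to zero. Adding more views and finer detector sampling generally reduces the residual errors.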

Many recent papers in plant sciences have applied µCT as an innovative method for discovering or better understanding features of a variety of samples. Notably, just as in bionic-related works, most research groups include professionals with backgrounds such as engineering, physics, and design to assist throughout the research workflow, from setting the acquisition parameters to post-processing and analysis. For instance, [84, 86] studied the 3D architecture of congested inflorescences in Bromeliaceae that accumulate different amounts of water, in multiple Nidularioid genera (subfamily Bromelioideae). The authors segmented those regions in high-resolution µCT images and described their morphology, volume, and orientation in individual plants. This enabled a typology-based comparative interpretation of inflorescence development and branching patterns in a group whose morphology is obscure when studied with traditional methods. Because of the congested morphology of these inflorescences, investigating their anatomical features and taking precise measurements by manual sectioning would be rather difficult. Pandoli et al. [97, 98] explored ways of preventing microbial and fungal proliferation in bamboo using silver nanoparticles. The authors used µCT to analyze the distribution of the antimicrobial particles in the vascular system of the plant, which contributed not only to better protection of the natural material but also to a better understanding of particle distribution within its vascular anatomy. Brodersen et al. [11, 12] investigated the 3D xylem network of grapevine stems (Vitis vinifera) and found that features of vessel connectivity could contribute to disease and embolism resistance in some species.
The authors mentioned the benefits of applying µCT: “selected vessels or the entire network are easily visualized by freely rotating the volume renderings in 3D space or viewing serial slices in any plane or orientation” and that the automated method generated “orders of magnitude more data in a fraction of the time” compared to the manual one. Teixeira-Costa and Ceccantini [130] analyzed the parasite–host interface of the mistletoe Phoradendron perrottetii (Santalaceae) growing on branches of the host tree Tapirira guianensis (Anacardiaceae through high-resolution µCT imaging. Authors also highlighted the difficulties in “imbedding and cutting lignified and large materials”, therefore manually performing such complex 3D investigation would require a “huge series of sequential anatomical sections”; the authors even mentioned the previous work of [17], where the anatomy of the endophytic system of Phoradendron flavescens required hundreds of anatomical sections. Investigating the intricate distribution of fine arrangements in 3D is one of the main advantages of employing µCT for the study of plants. More recently, for example, Palombini et al. [96] studied the complex morphology of the vascular system of the nodal region of bamboo with the technique. When comparing their findings with previous serial sections based on illustrations (Fig. 2) it is clear that the scattered arrangement of the atactostele distribution of monocots is hard to be spatially understood in 3D by using just anatomical sections (Fig. 2a). Even if the segmentation procedure after µCT imaging acquisition can also be labor intensive—authors had to manually select each one of the hundreds of vascular bundles, separating them into the corresponding axis (Fig. 2b)—due to the difficulties in automatizing the process, at the very least the obtained virtual morphology remained intact and undamaged, allowing it to be anatomically accurate represented.
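Once a stack has been thresholded into a binary mask, the automated part of such workflows can be illustrated with a minimal sketch. Connected-component labelling separates individual structures, such as vessels or bundles, and counts their voxels, and a voxel size then converts those counts into volumes. The nested-list mask format, the 6-connectivity choice, and the voxel size are assumptions for illustration, not the pipeline used in the cited studies. The sketch also shows why manual work can remain necessary: structures that touch in the image merge into a single component, so touching bundles may have to be separated by hand.

```python
from collections import deque

# 6-connectivity: voxels are connected only through shared faces.
NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def label_components(mask):
    """Label 6-connected foreground regions of a binary mask indexed as mask[z][y][x].

    Returns (labels, sizes): labels has the mask's shape (0 = background) and
    sizes maps each component label (1, 2, ...) to its voxel count.
    """
    nz, ny, nx = len(mask), len(mask[0]), len(mask[0][0])
    labels = [[[0] * nx for _ in range(ny)] for _ in range(nz)]
    sizes = {}
    current = 0
    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                if not mask[z][y][x] or labels[z][y][x]:
                    continue
                current += 1  # unvisited foreground voxel: start a new component
                labels[z][y][x] = current
                queue = deque([(z, y, x)])
                count = 0
                while queue:  # breadth-first flood fill
                    cz, cy, cx = queue.popleft()
                    count += 1
                    for dz, dy, dx in NEIGHBOURS:
                        qz, qy, qx = cz + dz, cy + dy, cx + dx
                        if (0 <= qz < nz and 0 <= qy < ny and 0 <= qx < nx
                                and mask[qz][qy][qx] and not labels[qz][qy][qx]):
                            labels[qz][qy][qx] = current
                            queue.append((qz, qy, qx))
                sizes[current] = count
    return labels, sizes


def component_volumes(sizes, voxel_size_um):
    """Convert voxel counts to volumes in cubic micrometres, assuming isotropic voxels."""
    return {label: n * voxel_size_um ** 3 for label, n in sizes.items()}


if __name__ == "__main__":
    # Two-slice toy mask containing two separate structures.
    mask = [
        [[1, 1, 0, 0],
         [0, 0, 0, 1]],
        [[1, 0, 0, 0],
         [0, 0, 0, 1]],
    ]
    _, sizes = label_components(mask)
    print(sizes)                           # {1: 3, 2: 2}
    print(component_volumes(sizes, 5.0))   # {1: 375.0, 2: 250.0}
```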

Technological improvements for detailing the complex anatomy of vascular bundles of the nodal region of bamboo: a hand illustration of nodal reconstruction based on serial cuts by Ding and Liese [21], b actual morphology based on X-ray microtomography of the nodal region, where the bundles of each secondary axis are shown with a different color, and the ones of the primary axis are faded

2.2 Finite Element Analysis (FEA)

As seen before, segmentation in a µCT-based investigation allows a specific ROI of a sample to be visualized in 3D and analyzed qualitatively and quantitatively with morphological accuracy. Once the region of interest has a segmented mask, it is considered binarized. It is then no longer represented by a grayscale image, in which the contour of a region gradually fades as it approaches its physical boundary, as in an unprocessed µCT stack. Instead, it is represented as a set of black and white voxels. This means that a region in a binarized stack can be spatially defined to the precision of one voxel: voxels belonging to the masked region are white, and all others are black, like the background in µCT. This strict division between black and white voxels makes a region discrete, allowing further calculations and analyses to take place. Furthermore, since the masked region is spatially, i.e., mathematically, defined, it can be exported for other purposes. One of the main file formats used in µCT post-processing is STL, a surface mesh format with nearly universal compatibility. For instance, a µCT-based STL mesh can be 3D printed for educational use [93], so that students can physically handle a greatly magnified, morphologically accurate model of a microscopic region. Like most biological materials, plants exhibit an intrinsic and complex hierarchy of elements, arrangements, shapes, and materials that extends from the macro- to the nanoscale. Although natural materials are built from a small number of basic components, the way these components are assembled gives them their remarkable properties. This makes them desirable both as building materials in their own right and as sources of bioinspiration [3, 123].
However, their heterogeneity and intricacy also make biological materials difficult to investigate accurately, for instance when predicting their mechanical performance. This heterogeneity is also the main reason for their natural variability in properties, so a sufficiently large number of individuals is often required for the sampled data to be meaningful [46]. Another major application of 3D biological models obtained via µCT is therefore their use in numerical analyses.
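The path from a grayscale stack to an exportable surface can be illustrated with a minimal sketch. It assumes a single global threshold, whereas real workflows often rely on local or manual segmentation. It also assumes a stack stored as nested lists indexed [z][y][x]. The exported surface is simply the "staircase" of voxel faces that separate white from black voxels, written in the ASCII variant of STL. It omits the smoothing, for example with marching cubes, that post-processing software typically applies.

```python
def binarize(stack, threshold):
    """Global threshold: grey values >= threshold become 1 (white), all others 0 (black)."""
    return [[[1 if v >= threshold else 0 for v in row] for row in sl] for sl in stack]


# For each outward direction (dx, dy, dz): the unit-cube corners of that face, ordered
# counter-clockwise as seen from outside, so the right-hand rule gives the outward normal.
FACES = {
    (1, 0, 0): ((1, 0, 0), (1, 1, 0), (1, 1, 1), (1, 0, 1)),
    (-1, 0, 0): ((0, 0, 0), (0, 0, 1), (0, 1, 1), (0, 1, 0)),
    (0, 1, 0): ((0, 1, 0), (0, 1, 1), (1, 1, 1), (1, 1, 0)),
    (0, -1, 0): ((0, 0, 0), (1, 0, 0), (1, 0, 1), (0, 0, 1)),
    (0, 0, 1): ((0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)),
    (0, 0, -1): ((0, 0, 0), (0, 1, 0), (1, 1, 0), (1, 0, 0)),
}


def mask_to_ascii_stl(mask, voxel_size=1.0, name="roi"):
    """Write every white-voxel face that borders a black voxel (or the stack edge) as two triangles."""
    nz, ny, nx = len(mask), len(mask[0]), len(mask[0][0])

    def filled(x, y, z):
        return 0 <= x < nx and 0 <= y < ny and 0 <= z < nz and mask[z][y][x] == 1

    lines = [f"solid {name}"]
    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                if not filled(x, y, z):
                    continue
                for (dx, dy, dz), corners in FACES.items():
                    if filled(x + dx, y + dy, z + dz):
                        continue  # face shared by two white voxels: interior, not surface
                    pts = [((x + cx) * voxel_size, (y + cy) * voxel_size, (z + cz) * voxel_size)
                           for cx, cy, cz in corners]
                    for tri in ((pts[0], pts[1], pts[2]), (pts[0], pts[2], pts[3])):
                        lines.append(f"  facet normal {dx} {dy} {dz}")
                        lines.append("    outer loop")
                        for px, py, pz in tri:
                            lines.append(f"      vertex {px:.6e} {py:.6e} {pz:.6e}")
                        lines.append("    endloop")
                        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical 8-bit grey values: two bright voxels side by side, then one dark voxel.
    stack = [[[210, 190, 30]]]
    mask = binarize(stack, threshold=128)
    stl_text = mask_to_ascii_stl(mask, voxel_size=0.005)  # e.g. 5 um voxels expressed in mm
    print(mask, stl_text.count("facet normal"))  # [[[1, 1, 0]]] 20
```

The returned string can be written to a `.stl` file and opened in common mesh viewers or slicing software. Because the surface follows voxel faces exactly, it preserves the voxel-level precision of the mask described above.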

An important characteristic of scientific reasoning is dividing a large, complex problem into smaller, simplified components, which can be solved separately more easily and, once combined, provide an approximate solution to the original problem. If a problem, or a numerical model, is described by a finite number of components, it is called “discrete”. If, on the other hand, a model is so complex that it could be subdivided indefinitely, it must be defined in terms of its infinitesimal parts, which leads to differential equations, and it is then called “continuous” [147]. This is the essence of the Finite Element Method (FEM). In the 1940s, mathematicians, physicists, and particularly engineers developed techniques for analyzing problems in structural mechanics and later applied them to many other types of problems [5]. Put simply, the procedure divides a continuous problem into a finite number of elements, a process called discretization, and the resulting analysis is known as Finite Element Analysis (FEA). Desk calculators were already available in the 1930s, but FEA only saw its most significant improvements with the rise of digital computing. Only then could the set of governing algebraic equations be established and solved efficiently, giving the method real applicability [5]. The origins of subdividing continuum problems into sets of finite elements are, however, debatable.
The brachistochrone problem asks for the curve of fastest descent between two points at different heights that do not lie on the same vertical line. Johann Bernoulli (1667–1748) proposed it, and solutions were offered by his brother Jacob Bernoulli (1655–1705) and others, with related work by figures such as Galileo Galilei (1564–1642), Gottfried Leibniz (1646–1716), and later Leonhard Euler (1707–1783). These solutions showed that the variational problem had to be reduced to a finite number of equidistant subproblems [125]. Gadala [35] points to even older possible roots of discretization in early civilizations. Examples include Archimedes’ (ca. 287–212 BC) attempts to measure geometrical objects by dividing them into simpler ones, and the Babylonians’ and Egyptians’ search for the ratio of a circle’s circumference to its diameter, i.e., \(\pi\). Today, numerous commercial software packages are available, including Abaqus™ (Dassault Systèmes™, Vélizy-Villacoublay, France), ANSYS™ (Canonsburg, PA, USA), COMSOL™ (Stockholm, Sweden), Nastran™, and others. They cover areas ranging from structural mechanics to fluid dynamics, electromagnetics, and heat transfer.
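Discretization can be made concrete with the simplest possible case. The minimal sketch below assumes a straight linear-elastic bar that is fixed at one end, loaded axially at the other, and split into equal two-node elements. Dimensions, modulus, and load are hypothetical values. Each element contributes a 2×2 stiffness matrix (EA/h)·[[1, −1], [−1, 1]]. Assembling these matrices gives the set of algebraic equations K u = f, exactly the kind of system whose solution became practical with digital computing. A dense Gaussian elimination is used here for transparency. Real solvers exploit sparsity.

```python
def solve_linear_system(a, b):
    """Gaussian elimination with partial pivoting (works on copies of a and b)."""
    n = len(b)
    a = [row[:] for row in a]
    b = b[:]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
            b[r] -= factor * b[col]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(a[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (b[r] - s) / a[r][r]
    return x


def axial_bar_fem(length, area, moduli, tip_load):
    """Split a bar fixed at x = 0 into len(moduli) equal elements and solve K u = f.

    moduli[e] is the Young's modulus of element e, so a heterogeneous bar can be modelled.
    Returns all nodal displacements, including the fixed node (u = 0).
    """
    n_el = len(moduli)
    h = length / n_el
    n_nodes = n_el + 1
    k_global = [[0.0] * n_nodes for _ in range(n_nodes)]
    for e, modulus in enumerate(moduli):
        k = modulus * area / h  # element stiffness EA/h
        i, j = e, e + 1
        k_global[i][i] += k
        k_global[i][j] -= k
        k_global[j][i] -= k
        k_global[j][j] += k
    f = [0.0] * n_nodes
    f[-1] = tip_load
    # Boundary condition u_0 = 0: remove the first row and column before solving.
    k_reduced = [row[1:] for row in k_global[1:]]
    return [0.0] + solve_linear_system(k_reduced, f[1:])


if __name__ == "__main__":
    # Hypothetical bar: 100 mm long, 1 cm^2 cross-section, E = 10 GPa, 100 N tip load.
    L, A, F = 0.1, 1e-4, 100.0
    u = axial_bar_fem(L, A, [10e9] * 4, F)
    print(u[-1], F * L / (10e9 * A))  # FEM tip displacement vs exact F*L/(E*A)
```

For this load case, linear elements reproduce the exact solution u(L) = FL/(EA), so the two printed values agree up to rounding. Supplying different moduli per element shows how a heterogeneous material changes the result.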

Finite element analyses can be applied in many fields, from problems on well-defined CAD-based geometries to simulations of specific, more abstract scenarios that would otherwise be much more difficult to study. Conducting an FEA basically requires defining three essential sets of variables: geometry, constitutive properties, and boundary conditions. The first relates to the shape, dimensions, and relative organization of the 3D model. As mentioned, the geometry can be obtained with CAD software or, for biological samples, via digitalization. µCT-based models have been increasingly used in FEA in many fields of biomedical research, allowing organic and complex geometries to be investigated and simulated in silico. Discretization (Fig. 3) converts the original µCT image stack (Fig. 3a) into a mesh suitable for FEA, and two basic methods can be followed: voxel based and geometry based [9, 94]. The first is the most straightforward, since the image stack voxels are converted directly into elements (Fig. 3b). In the geometry-based method, the selected regions must first be segmented and then transformed into an FEA mesh (Fig. 3c). The main difference is that voxel-based meshing requires no segmentation, but the resulting mesh tends to be much larger, depending on the number of µCT slices in the stack and the resolution of each slice. The greater the stack size and resolution, the greater the number of voxels and elements in the mesh. In the geometry-based method, even when the mesh is generated automatically, users must balance refinement against performance: a finer mesh represents the original geometry more accurately but consumes more computational resources.
Geometry-based meshes are also supported by a wider variety of FEA solvers, which allows a wider range of analysis types to be carried out.
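Voxel-based meshing can be sketched in a few lines. In the minimal sketch below, every white voxel becomes one 8-node hexahedral element, and corners shared by neighbouring voxels are merged into single nodes so that the elements are connected. The nested-list mask format and the node ordering are illustrative assumptions. Writing an input file for a particular solver would require that solver's own conventions. The `upsample` helper is a hypothetical stand-in for scanning the same region at a finer voxel size.

```python
def voxel_hex_mesh(mask, voxel_size=1.0):
    """Voxel-based meshing: every foreground voxel becomes one 8-node hexahedral element.

    Returns (nodes, elements): nodes is a list of (x, y, z) coordinates and each element
    is a tuple of 8 node indices (bottom face counter-clockwise, then top face).
    """
    node_index = {}
    nodes = []
    elements = []

    def node_id(i, j, k):
        """Return the index of grid corner (i, j, k), creating the node only once."""
        key = (i, j, k)
        if key not in node_index:
            node_index[key] = len(nodes)
            nodes.append((i * voxel_size, j * voxel_size, k * voxel_size))
        return node_index[key]

    for z, sl in enumerate(mask):
        for y, row in enumerate(sl):
            for x, value in enumerate(row):
                if not value:
                    continue  # background voxels produce no element
                corners = [(x, y, z), (x + 1, y, z), (x + 1, y + 1, z), (x, y + 1, z),
                           (x, y, z + 1), (x + 1, y, z + 1), (x + 1, y + 1, z + 1), (x, y + 1, z + 1)]
                elements.append(tuple(node_id(*c) for c in corners))
    return nodes, elements


def upsample(mask, factor):
    """Nearest-neighbour upsampling: mimics imaging the same region with a finer voxel size."""
    nz, ny, nx = len(mask), len(mask[0]), len(mask[0][0])
    return [[[mask[z // factor][y // factor][x // factor]
              for x in range(nx * factor)]
             for y in range(ny * factor)]
            for z in range(nz * factor)]


if __name__ == "__main__":
    mask = [[[1, 1]]]  # two neighbouring voxels
    for factor in (1, 2, 4):
        nodes, elements = voxel_hex_mesh(upsample(mask, factor), voxel_size=1.0 / factor)
        print(factor, len(elements), len(nodes))
```

For the same physical object, halving the voxel size multiplies the element count by eight (2, 16, and 128 elements above). This is the growth in mesh size with stack resolution described for the voxel-based method.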

The second basic set of parameters required in any FEA is the constitutive properties. These describe the physical characteristics of the material, including its mechanical (elastic modulus, yield strength, dynamic viscosity…), thermal (thermal conductivity, specific heat capacity…), and electromagnetic (electrical conductivity, magnetic permeability…) properties, among others. µCT images are grayscale, with whiter voxels corresponding to denser regions (Fig. 3a). The constitutive properties of a voxel-based analysis can therefore be extracted directly from the images, as in Fig. 3b, where warmer colors represent denser material, i.e., higher values of mechanical properties were assigned. In the geometry-based method, each segmented region is treated as homogeneous, so its properties must also be homogenized for the analysis to be accurate [94]. The last main set of parameters is the boundary conditions, i.e., all external factors that affect the analyzed geometry, such as loads (including pressures, forces, temperatures, electric potentials…), displacements, and constraints. Once all parameters are defined, the analysis can be solved. FEA is a complex and comprehensive field of research, and many other fundamentals and parameters should be considered. These include non-linearities (geometric, constitutive, and boundary conditions), types of analyses (implicit, explicit, thermal, modal, buckling, vibration…), and types of mesh elements (tetrahedra, hexahedra…). They are beyond the scope of this chapter, and in-depth information can be found in [5, 65, 107, 147].
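Assigning properties from grey values, and the homogenization step, can be illustrated with a minimal sketch. The grey-to-density calibration and the power-law density-to-modulus relation are hypothetical placeholders. In practice, both require calibration for the scanner and material at hand. For a homogenized region, the sketch computes the classical Voigt (equal strain, parallel) and Reuss (equal stress, series) bounds, assuming equal volume fractions of the voxels. Any single homogenized modulus lies between these bounds, so choosing a value within them is itself a modelling assumption.

```python
def grey_to_density(grey, slope, intercept):
    """Hypothetical linear calibration from grey value to apparent density (kg/m^3)."""
    return max(0.0, slope * grey + intercept)


def density_to_modulus(rho, coeff, exponent):
    """Hypothetical power-law relation E = coeff * rho**exponent (Pa)."""
    return coeff * rho ** exponent


def voigt_reuss_bounds(moduli):
    """Upper (Voigt, arithmetic mean) and lower (Reuss, harmonic mean) bounds on a
    homogenized modulus, assuming every phase occupies the same volume fraction."""
    if any(e <= 0 for e in moduli):
        raise ValueError("all moduli must be positive")
    n = len(moduli)
    voigt = sum(moduli) / n
    reuss = n / sum(1.0 / e for e in moduli)
    return voigt, reuss


if __name__ == "__main__":
    grey_values = [40, 120, 220]  # hypothetical voxel grey levels
    moduli = [density_to_modulus(grey_to_density(g, slope=2.0, intercept=0.0),
                                 coeff=2.0e4, exponent=2.0) for g in grey_values]
    upper, lower = voigt_reuss_bounds(moduli)
    print([f"{e:.2e}" for e in moduli], f"Voigt {upper:.2e} Pa, Reuss {lower:.2e} Pa")
```

A voxel-based analysis would keep the per-voxel moduli. A geometry-based analysis would replace them with one value for the region, and the gap between the two bounds indicates how much that choice can matter.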

Although less common in the plant sciences, numerical analysis still appears in many important studies, particularly when combined with biomedical imaging. As discussed before, µCT-based FEA in plants is most useful in two situations: (i) when an accurate morphology is needed, and (ii) when experimental analysis with real samples is difficult or impossible. The latter can result from small sample size, lack of control over boundary conditions, or difficulty performing experiments in which variables are quickly modified. As with applying the resulting knowledge in bionics, µCT-based FEA itself benefits from a team with multiple backgrounds, as the following examples show. For instance, the authors of [92] used µCT and FEA to assess the structural role of parenchyma as an important tissue in bamboo. In a composite analogy, this ground tissue behaves like a matrix, distributing local stress over a larger region so that the sclerenchyma bundles can act as reinforcements for greater stiffness and strength. Using the same set of techniques [96], the authors also observed that bamboo tends to spread compression stresses into secondary branches in order to preserve the main axis. Given the fine scale of the fibers and the complexity of the vascular arrangement in the plant, only µCT-based FEA could be used for this type of analysis. Nogueira et al. [85] presented the first known application in the literature of a heat-transfer FEA based on µCT of a biological material. The authors used high-resolution scans of a bromeliad inflorescence to investigate the role of the water accumulated in its tank. They found that the water acts as a thermal mass, absorbing heat from the surroundings and preventing internal structures from overheating.
This protects the inflorescence and flower components, preventing injuries and necrosis in bracts and leaves. The authors also used an experimental procedure to complement the numerical findings. Still, the in silico tests were crucial for finely assessing the temperatures of the inflorescence’s internal structures and for replicating the tests while modifying multiple variables. Forell et al. [30] explored ways to prevent lodging in bioenergy maize stalks by altering morphological characteristics. Using µCT-based FEA, the authors could digitally modify individual stalk variables, such as diameter and rind thickness, and assess the resulting changes in performance. Highlighting the benefits of using non-traditional, engineering-related techniques like FEA in plant analyses, the authors state that “collaborations between plant scientists and biomechanical engineers promise to provide many new insights into plant form and function”.
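The kind of parametric "what-if" study described for maize stalks can be illustrated, in a much cruder form, with a closed-form beam model. This sketch is not the model of Forell et al. [30]. It assumes a straight stalk with a circular cross-section made of a stiff rind around a compliant pith, linear elastic isotropic layers, perfect bonding, and Euler-Bernoulli bending. It ignores the real cross-sectional shape, nodes, anisotropy, and ovalization, which are precisely what image-based FEA can capture. All moduli and dimensions are hypothetical.

```python
import math


def second_moment_disk(radius):
    """Second moment of area of a solid circle about a diameter: pi * r^4 / 4."""
    return math.pi * radius ** 4 / 4.0


def stalk_flexural_rigidity(diameter, rind_thickness, e_rind, e_pith):
    """Flexural rigidity EI of a concentric two-material circular section (rind around pith).

    EI = E_rind * (I_outer - I_inner) + E_pith * I_inner, valid for concentric layers
    bent about a diameter under the Euler-Bernoulli assumptions.
    """
    r_out = diameter / 2.0
    r_in = r_out - rind_thickness
    if not 0.0 <= r_in < r_out:
        raise ValueError("rind thickness must be positive and no larger than the radius")
    i_rind = second_moment_disk(r_out) - second_moment_disk(r_in)
    i_pith = second_moment_disk(r_in)
    return e_rind * i_rind + e_pith * i_pith


if __name__ == "__main__":
    # Hypothetical values: rind 10 GPa, pith 0.1 GPa, dimensions in metres.
    for diameter in (0.018, 0.022):
        for rind in (0.0008, 0.0012):
            ei = stalk_flexural_rigidity(diameter, rind, e_rind=10e9, e_pith=0.1e9)
            print(f"d = {diameter * 1e3:.0f} mm, rind = {rind * 1e3:.1f} mm, EI = {ei:.2f} N m^2")
```

Because the second moment of area grows with the fourth power of the radius, diameter dominates the result in this idealization. Rind thickness matters mainly because the rind is much stiffer than the pith.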

3 Bionics: From Design to Biodiversity

As seen before, bionics or biomimetics is fundamentally based on the exchange of knowledge between natural and applied sciences, and technology is what makes this exchange possible. Unusual techniques of observation and analysis give a better understanding of organisms and biological materials. Interdisciplinary teams in bionics or biomimetics are also promoting more sustainable development [79], which in turn benefits biodiversity as a whole. Techniques once considered almost exclusive to engineering, design, and architecture are finding new applications in the biological world. Examples range from unraveling the signals flowers use to attract pollinators [102] to assessing how parenchyma wall thickness affects the mechanical properties of bamboo [22]. Likewise, technologies originally intended for developing new bioinspired products can also yield unexpected insights into the natural world [121]. This makes the path a two-way street: from nature to project and from project to nature. This concept is also known as “reverse biomimetics”, which was defined in a guideline on biomimetics from the Association of German Engineers (VDI, Verein Deutscher Ingenieure):

Over the last few years, it has become apparent that knowledge gained through the implementation of biologically inspired principles of operation in innovative biomimetic products and technologies can contribute to a better understanding of the biological systems. This relatively recently discovered transfer process from biomimetics to biology can be referred to as “reverse biomimetics”. [134]

By adopting a “two-way bionics” philosophy, research groups can discover new tools, techniques, equipment, and methodologies that might otherwise never be considered for scientific inquiries in a variety of disciplines. An interdisciplinary approach interconnects disciplines. A transdisciplinary approach goes further and removes the boundaries between them entirely [81], treating science as a uniform, homogeneous macro-science in which no method is unique and immutable. With digital technologies, exchanging knowledge between scientific fields is becoming more accessible than ever, particularly in bionics and biomimetics [62]. 3D technologies and numerical simulations are becoming more “integrated” and more “integrative” in the scientific problem-solving workflow. They iteratively question both the object of study and the object to which the natural characteristic is to be applied. “Within this so-called process of reverse biomimetics, the functional principles and abstracted models (typically including finite element modeling) are repeatedly evaluated in iterative feedback loops and compared with the biological models leading to a considerable gain in knowledge” [122].

An organism, whether animal or plant, is a package of its adaptive history, written in its genes and expressed in its phenotype. Each carries its own ecological history, reproductive strategies, responses to the environment, and characteristics that make it successful today. Molecular systematics has expanded our knowledge of relationships in the tree of life. In the same way, new technologies such as confocal laser scanning microscopy, nuclear magnetic resonance imaging, and microcomputed tomography have improved our understanding of biological morphology. Knowledge about biodiversity is not a one-way street, however, and related or even distant areas end up benefiting from technological advances in different fields. For example, several successful extant lineages have a morphological feature associated with their evolutionary success. Such a morphological adaptation or phenotype is also called a “key innovation” [58]. This “new” feature can arise in a specific lineage, like the tank habit in Bromeliaceae [118]. It can also be recovered for a larger group, as with the diversification of flower morphology among angiosperm lineages [26, 142]. Recovering the origins of these traits is one of the main challenges in reconstructing the life history of different groups. The fossil record is essential to understanding the evolution and diversification of these features, especially in groups with poor preservation or without completely preserved specimens, like the monocotyledons [74, 119]. µCT has been changing fossil analysis, not only in plants but across biodiversity research, because it reveals delicate internal features without destructive or invasive preparation [51, 127].
Using this approach, a new fossil plant genus, Tanispermum, related to the extant Austrobaileyales and Nymphaeales, was proposed based on particular features of its seeds [32]. Similarly, the authors of [36] analyzed a fossil inflorescence of Fagales and described several distinct features of the flower components, the inflorescence arrangement, and the stem. These features help explain the diversification of the stem, the evolution of pollination modes, and other character evolution in Fagales. In another area of biodiversity research, Bronzati et al. [14] studied the semicircular canals of the endosseous labyrinth of living and fossil Archosauria, represented today by birds and crocodylians. Their aim was to understand several aspects of locomotor diversification and whether shape variation in this structure could be related to locomotor changes such as flight, semi-aquatic locomotion, and bipedalism. The authors showed high divergence in this trait and found that differences in the semicircular canals are related to spatial constraints among the analyzed lineages. They also found that the greatest divergence in this structure arose early in the bird–crocodylian divergence. The most interesting aspect of these discoveries made with 3D technologies like µCT is how important three-dimensional analysis is for understanding each group. Individuals and their structures are three-dimensional, and their form, function, physiological processes, size, strain limitations, and growth form are difficult to infer from 2D images alone.

Indeed, images are everything, especially nowadays, and all the images generated by these new technologies are in digital format. This raises another topic relevant to three-dimensional approaches: the “Digitalization of the Biodiversity Collections” and how 3D technologies could support the storage, sharing, and popularization of these collections. Natural history collections worldwide hold billions of specimens, together with information such as taxonomic position, geographic location, and ecological and temporal data [90]. In recent years, many institutes and initiatives have concentrated efforts on digitalizing specimens of natural collections. Their reasons are diverse, such as understanding taxon distribution through time, climate change, and the dynamics of invasive species [75, 90]. One of these efforts is the “REFLORA” program, coordinated by the Rio de Janeiro Botanical Garden in partnership with CNPq, Brazil (National Council for Scientific and Technological Development). Its goal is the historical rescue, or repatriation, of specimens of the Brazilian flora deposited in foreign herbaria. This is done through high-resolution digital images used to build the “Virtual Herbaria Reflora”. The program also digitalizes Brazilian herbaria, so that researchers worldwide can work on this platform as they would in the physical collections [108]. This stage, which includes the digital image of the specimen and associated metadata such as taxonomic position and geographic location, is the first step in digitalizing a natural collection.
However, with improved technologies such as computed tomography, digitalized specimens could provide much more detail to complement these digital images. Specimen data obtained via µCT, for example, can be virtually analyzed, dissected, and manipulated by researchers worldwide [47]. Furthermore, µCT-digitalized models could be 3D printed, either as a didactic tool or for sharing these specimens. Recently, Bolton and Cora [7] proposed a 3D model equivalent to a real and unique object, called VERO (“Virtual Equivalent of a Real Object”), as a type of non-fungible token (NFT). In this model, the VERO and the NFT are interlinked, i.e., through this system, the VERO becomes a type of NFT. According to the authors, “VEROs can be produced by museums to enable recreational collectors to own NFTs that represent virtual versions of the objects housed within museum collections”. Digitalizing natural collections as high-resolution 3D morphology thus also creates opportunities to popularize the specimens held in these collections. This applies especially to specimens at risk of degradation, such as fossils and unique, delicate specimens. Another major contribution of VEROs is the possibility of raising funds to maintain vulnerable or under-resourced collections, museums, and institutions. Because VEROs are NFTs based on blockchain technology, buyers benefit in two ways. (i) They can be assured of authenticity, since forgeries are prevented and ownership can be traced back to the issuing institution. (ii) Since VEROs are digital objects, their trade avoids the complicated copyright issues that are common with physical objects [7].

4 Conclusions and Future Directions

Bionics and biomimetics should be considered a means of exchanging knowledge between the “project” and the “object” of study. Blurring the lines between the work of scientists in classical fields and that of design engineers opens new ways to promote more sustainable development. This can happen (i) by better understanding the functioning and requirements of the natural world, as a way to reduce human impact on nature and foster healthier biodiversity, and (ii) by creating more environmentally sound projects, including more efficient materials and structures and more ecologically integrated organizational systems. Increasing access to state-of-the-art technologies is a way to ensure a more transdisciplinary approach between sciences, favoring unified teaching and research [91]. µCT has proven to be a versatile tool in plant research, owing to its non-invasive character and, in most cases, its minimal sample preparation requirements. Its advantages include high morphological resolution of complex and delicate internal structures, such as the vascular system in the nodal region of bamboo [96], detailed 3D architecture, and the modeling of gas exchange in leaves [24, 52, 73]. In vivo measurements and experiments are also possible [25]. However, image acquisition usually takes a long time, and the ionizing radiation dose makes it difficult to repeat acquisition on some delicate specimens. Moreover, some plant tissues, such as meristems, have low density, which reduces attenuation-based X-ray contrast and therefore requires sample preparation to increase contrast [124].

Available methodologies for three-dimensional imaging of samples with different compositions include confocal imaging, Magnetic Resonance Imaging (MRI), Optical Projection Tomography (OPT), Macro-Projection Tomography (M-OPT) [66, 67, 104, 132], and others reviewed in [103]. The choice of imaging method in biodiversity research is a matter of scale and resolution. Researchers need to know in advance the limitations of each technique and the trade-offs between the benefits and limitations of the chosen methodology. For example, confocal microscopy and OPT allow imaging of live plants, but with restrictions on sample size [66, 104]. M-OPT allows imaging of larger samples and provides 3D digitalization of their morphology, including visualization of gene activity and of histochemically marked internal structures [67]. However, it is limited by its field of view and by processing artifacts that affect morphology due to the partial clearing of tissues [43]. MRI allows non-invasive and non-destructive analysis of intact, living plants [132], despite limitations in resolution. The technique is used to study different physiological processes, such as measuring and quantifying fluid movement in the xylem and phloem [140] and the development of embolism in vessels [34, 76]. It also allows repeated monitoring of plant organ development, revealing details of morphology and anatomy [49, 78, 80, 133]. The main drawback of conventional MRI, its resolution, can be overcome by newer high-resolution magnetic resonance imaging techniques (µMRI). However, the higher the intended resolution, the more powerful (and expensive) the magnet must be, which raises the cost of the procedure. Even then, µMRI does not reach the spatial resolutions of X-ray nanotomography (nCT) systems [43].
Some recent studies combine approaches to compare sample size, resolution, and procedures, showing that the strengths of one technique can offset the limitations of another [16, 44, 77]. Overall, no single technique is perfect for every investigation, sample, or analysis, so it is up to the scientist to choose the most suitable method or combination of methods to reach the research goals. Furthermore, a broader diversity of training backgrounds within a research group should be seen as an opportunity for more in-depth analyses. This diversity benefits the whole process of knowledge transfer between nature and project and contributes to a better relationship between humans and the environment.

References

Alberts B, Johnson A, Lewis J, Morgan D, Raff M, Roberts K, Walter P (2017) Molecular biology of the cell. W.W. Norton & Company

Anderson TF (1951) Techniques for the preservation of three-dimensional structure in preparing specimens for the electron microscope. Trans N Y Acad Sci 13:130–134. https://doi.org/10.1111/j.2164-0947.1951.tb01007.x

Ashby MF, Ferreira PJSG, Schodek DL (2009) Nanomaterials, nanotechnologies and design: an introduction for engineers and architects. Butterworth-Heinemann, Burlington, USA

Batchelor PG, Edwards PJ, King AP (2012) 3D medical imaging. In: 3D imaging, analysis and applications. Springer, London, pp 445–495

Bathe K-J (1996) Finite element procedures. Prentice Hall, Englewood Cliffs, N.J.

Bhushan B (2009) Biomimetics: lessons from nature—An overview. Philos Trans R Soc A Math Phys Eng Sci 367:1445–1486. https://doi.org/10.1098/rsta.2009.0011

Bolton SJ, Cora JR (2021) Virtual equivalents of real objects (VEROs): a type of non-fungible token (NFT) that can help fund the 3D digitization of natural history collections. Megataxa 6. https://doi.org/10.11646/megataxa.6.2.2

Boyd SK (2009) Micro-computed tomography. In: Advanced imaging in biology and medicine. Springer, Berlin, Heidelberg, pp 3–25

Boyd SK (2009) Image-based finite element analysis. In: Advanced imaging in biology and medicine. Springer, Berlin, Heidelberg, pp 301–318

Brazier LG (1927) On the flexure of thin cylindrical shells and other “thin” sections. Proc R Soc London Ser A, Contain Pap Math Phys Character 116:104–114. https://doi.org/10.1098/rspa.1927.0125

Brodersen CR, Choat B, Chatelet DS, Shackel KA, Matthews MA, McElrone AJ (2013) Xylem vessel relays contribute to radial connectivity in grapevine stems (Vitis vinifera and V. arizonica; Vitaceae). Am J Bot 100:314–321. https://doi.org/10.3732/ajb.1100606

Brodersen CR, Lee EF, Choat B, Jansen S, Phillips RJ, Shackel KA, McElrone AJ, Matthews MA (2011) Automated analysis of three-dimensional xylem networks using high-resolution computed tomography. New Phytol 191:1168–1179. https://doi.org/10.1111/j.1469-8137.2011.03754.x

Brodersen CR, Roddy AB (2016) New frontiers in the three-dimensional visualization of plant structure and function. Am J Bot 103:184–188. https://doi.org/10.3732/ajb.1500532

Bronzati M, Benson RBJ, Evers SW, Ezcurra MD, Cabreira SF, Choiniere J, Dollman KN, Paulina-Carabajal A, Radermacher VJ, Roberto-da-Silva L, Sobral G, Stocker MR, Witmer LM, Langer MC, Nesbitt SJ (2021) Deep evolutionary diversification of semicircular canals in archosaurs. Curr Biol 31:2520-2529.e6. https://doi.org/10.1016/j.cub.2021.03.086

Callister WD, Rethwisch DG (2012) Fundamentals of materials science and engineering: an integrated approach, 4th edn. Wiley, New York

Calo CM, Rizzutto MA, Carmello-Guerreiro SM, Dias CSB, Watling J, Shock MP, Zimpel CA, Furquim LP, Pugliese F, Neves EG (2020) A correlation analysis of light microscopy and X-ray MicroCT imaging methods applied to archaeological plant remains’ morphological attributes visualization. Sci Rep 10:15105. https://doi.org/10.1038/s41598-020-71726-z

Calvin CL (1967) Anatomy of the endophytic system of the mistletoe, Phoradendron flavescens. Bot Gaz 128:117–137. https://doi.org/10.1086/336388

Chen BC, Zou M, Liu GM, Song JF, Wang HX (2018) Experimental study on energy absorption of bionic tubes inspired by bamboo structures under axial crushing. Int J Impact Eng 115:48–57. https://doi.org/10.1016/J.IJIMPENG.2018.01.005

Demanet CM, Sankar KV (1996) Atomic force microscopy images of a pollen grain: a preliminary study. South African J Bot 62:221–223. https://doi.org/10.1016/S0254-6299(15)30640-2

Dickinson MH (1999) Bionics: biological insight into mechanical design. Proc Natl Acad Sci 96:14208–14209. https://doi.org/10.1073/pnas.96.25.14208

Ding Y, Liese W (1997) Anatomical investigations on the nodes of bamboos. In: Chapman G (ed) The bamboos. Academic Press, London, pp 265–279

Dixon PG, Muth JT, Xiao X, Skylar-Scott MA, Lewis JA, Gibson LJ (2018) 3D printed structures for modeling the Young’s modulus of bamboo parenchyma. Acta Biomater 68:90–98. https://doi.org/10.1016/j.actbio.2017.12.036

Dubos T, Poulet A, Gonthier-Gueret C, Mougeot G, Vanrobays E, Li Y, Tutois S, Pery E, Chausse F, Probst AV, Tatout C, Desset S (2020) Automated 3D bio-imaging analysis of nuclear organization by NucleusJ 2.0. Nucleus 11:315–329. https://doi.org/10.1080/19491034.2020.1845012

Earles JM, Buckley TN, Brodersen CR, Busch FA, Cano FJ, Choat B, Evans JR, Farquhar GD, Harwood R, Huynh M, John GP, Miller ML, Rockwell FE, Sack L, Scoffoni C, Struik PC, Wu A, Yin X, Barbour MM (2019) Embracing 3D complexity in leaf carbon-water exchange. Trends Plant Sci 24:15–24. https://doi.org/10.1016/j.tplants.2018.09.005

Earles JM, Knipfer T, Tixier A, Orozco J, Reyes C, Zwieniecki MA, Brodersen CR, McElrone AJ (2018) In vivo quantification of plant starch reserves at micrometer resolution using X-ray microCT imaging and machine learning. New Phytol 218:1260–1269. https://doi.org/10.1111/nph.15068

Endress PK (2001) Origins of flower morphology. J Exp Zool 291:105–115. https://doi.org/10.1002/jez.1063

Evert RF, Eichhorn SE (2006) Esau’s plant anatomy: meristems, cells, and tissues of the plant body: their structure, function, and development, 3rd edn. Wiley, New Jersey

Evert RF, Eichhorn SE (2013) Raven biology of plants, 8th edn. W. H. Freeman, New York

Fahn A (1990) Plant anatomy, 4th edn. Pergamon Press, Oxford

Von Forell G, Robertson D, Lee SY, Cook DD (2015) Preventing lodging in bioenergy crops: a biomechanical analysis of maize stalks suggests a new approach. J Exp Bot 66:4367–4371. https://doi.org/10.1093/jxb/erv108

Fratzl P (2007) Biomimetic materials research: what can we really learn from nature’s structural materials? J R Soc Interface 4:637–642. https://doi.org/10.1098/rsif.2007.0218

Friis EM, Crane PR, Pedersen KR (2018) Tanispermum, a new genus of hemi-orthotropous to hemi-anatropous angiosperm seeds from the Early Cretaceous of eastern North America. Am J Bot 105:1369–1388. https://doi.org/10.1002/ajb2.1124

Fu J, Liu Q, Liufu K, Deng Y, Fang J, Li Q (2019) Design of bionic-bamboo thin-walled structures for energy absorption. Thin-Walled Struct 135:400–413. https://doi.org/10.1016/j.tws.2018.10.003

Fukuda K, Kawaguchi D, Aihara T, Ogasa MY, Miki NH, Haishi T, Umebayashi T (2015) Vulnerability to cavitation differs between current-year and older xylem: non-destructive observation with a compact magnetic resonance imaging system of two deciduous diffuse-porous species. Plant Cell Environ 38:2508–2518. https://doi.org/10.1111/pce.12510

Gadala M (2020) Finite elements for engineers with ANSYS applications. Cambridge University Press, Cambridge

Gandolfo MA, Nixon KC, Crepet WL, Grimaldi DA (2018) A Late Cretaceous fagalean inflorescence preserved in amber from New Jersey. Am J Bot 105:1424–1435. https://doi.org/10.1002/ajb2.1103

Gibson LJ, Ashby MF, Harley BA (2010) Cellular materials in nature and medicine. Cambridge University Press, Cambridge, UK

Gierke HE von (1970) Bionics and bioengineering in aerospace research. In: Oestreicher HL, von Gierke HE, Keidel WD (eds) Principles and practice of bionics. Technivision Services, Slough, England, pp 19–42

Goldstein J, Newbury DE, Joy DC, Lyman CE, Echlin P, Lifshin E, Sawyer L, Michael JR (2003) Scanning electron microscopy and X-ray microanalysis, 3rd edn. Springer Science & Business Media, New York

Grew N (1682) The anatomy of plants. W. Rawlins, London

Habibi MK, Lu Y (2015) Crack propagation in bamboo’s hierarchical cellular structure. Sci Rep 4:5598. https://doi.org/10.1038/srep05598

Halacy DS (1965) Bionics: the science of “living” machines. Holiday House, New York

Hallgrimsson B, Percival CJ, Green R, Young NM, Mio W, Marcucio R (2015) Morphometrics, 3D imaging, and craniofacial development. In: Chai Y (ed) Current topics in developmental biology. Academic Press, pp 561–597

Handschuh S, Baeumler N, Schwaha T, Ruthensteiner B (2013) A correlative approach for combining microCT, light and transmission electron microscopy in a single 3D scenario. Front Zool 10:44. https://doi.org/10.1186/1742-9994-10-44

Hanke R, Fuchs T, Salamon M, Zabler S (2016) X-ray microtomography for materials characterization. In: Hübschen G, Altpeter I, Tschuncky R, Herrmann H-G (eds) Materials characterization using nondestructive evaluation (NDE) methods. Woodhead, Duxford, UK, pp 45–79

Harwood J, Harwood R (2012) Testing of natural textile fibres. In: Kozłowski RM (ed) Handbook of natural fibres. Elsevier, pp 345–390

Hedrick BP, Heberling JM, Meineke EK, Turner KG, Grassa CJ, Park DS, Kennedy J, Clarke JA, Cook JA, Blackburn DC, Edwards SV, Davis CC (2020) Digitization and the future of natural history collections. Bioscience 70:243–251. https://doi.org/10.1093/biosci/biz163

Hesse L, Bunk K, Leupold J, Speck T, Masselter T (2019) Structural and functional imaging of large and opaque plant specimens. J Exp Bot 70:3659–3678. https://doi.org/10.1093/jxb/erz186

Hesse L, Leupold J, Speck T, Masselter T (2018) A qualitative analysis of the bud ontogeny of Dracaena marginata using high-resolution magnetic resonance imaging. Sci Rep 8:9881. https://doi.org/10.1038/s41598-018-27823-1

Heywood VH (1969) Scanning electron microscopy in the study of plant materials. Micron 1:1–14. https://doi.org/10.1016/0047-7206(69)90002-8

Hipsley CA, Aguilar R, Black JR, Hocknull SA (2020) High-throughput microCT scanning of small specimens: preparation, packing, parameters and post-processing. Sci Rep 10:13863. https://doi.org/10.1038/s41598-020-70970-7

Ho QT, Berghuijs HNC, Watté R, Verboven P, Herremans E, Yin X, Retta MA, Aernouts B, Saeys W, Helfen L, Farquhar GD, Struik PC, Nicolaï BM (2016) Three-dimensional microscale modelling of CO2 transport and light propagation in tomato leaves enlightens photosynthesis. Plant Cell Environ 39:50–61. https://doi.org/10.1111/pce.12590

Holzwarth JM, Ma PX (2011) Biomimetic nanofibrous scaffolds for bone tissue engineering. Biomaterials 32:9622–9629. https://doi.org/10.1016/j.biomaterials.2011.09.009

Hooke R (1665) Micrographia: or some physiological descriptions of minute bodies made by magnifying glasses with observations and inquiries thereupon. Jo. Martyn and Ja. Allestry, printers to the Royal Society, London

Hounsfield GN (1973) Computerized transverse axial scanning (tomography): part 1. Description of system. Br J Radiol 46:1016–1022. https://doi.org/10.1259/0007-1285-46-552-1016

Hsieh J (2014) History of X-ray computed tomography. In: Shaw CC (ed) Cone beam computed tomography. CRC Press, Boca Raton, Florida, USA, pp 3–7

Hu D, Wang Y, Song B, Dang L, Zhang Z (2019) Energy-absorption characteristics of a bionic honeycomb tubular nested structure inspired by bamboo under axial crushing. Compos Part B Eng 162:21–32. https://doi.org/10.1016/j.compositesb.2018.10.095

Hunter JP (1998) Key innovations and the ecology of macroevolution. Trends Ecol Evol 13:31–36. https://doi.org/10.1016/S0169-5347(97)01273-1

Kasprowicz A, Smolarkiewicz M, Wierzchowiecka M, Michalak M, Wojtaszek P (2011) Introduction: tensegral world of plants. In: Wojtaszek P (ed) Mechanical integration of plant cells and plants. Springer, Berlin, Heidelberg, pp 1–25

Kindlein Júnior W, Guanabara AS (2005) Methodology for product design based on the study of bionics. Mater Des 26:149–155. https://doi.org/10.1016/j.matdes.2004.05.009

Knippers J, Schmid U, Speck T (eds) (2019) Biomimetics for architecture. De Gruyter

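Knippers J, Speck T, Nickel KG (2016) Biomimetic research: a dialogue between the disciplines. In: Knippers J, Nickel KG, Speck T (eds) Biomimetic research for architecture and building construction. Springer, Cham, pp 1–5. https://doi.org/10.1007/978-3-319-46374-2_1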

Knoll M, Ruska E (1932) Das Elektronenmikroskop. Zeitschrift für Phys 78:318–339. https://doi.org/10.1007/BF01342199

Kraus JE, Louro RP, Estelita MEM, Arduin M (2006) A Célula vegetal. In: Appezzato-da-Glória B, Carmello-Guerreiro SM (eds) Anatomia vegetal, 2nd edn. UFV, Viçosa, pp 31–86

Kurowski PM (2004) Finite element analysis for design engineers. SAE International, Warrendale, PA

Lee K, Avondo J, Morrison H, Blot L, Stark M, Sharpe J, Bangham A, Coen E (2006) Visualizing plant development and gene expression in three dimensions using optical projection tomography. Plant Cell 18:2145–2156. https://doi.org/10.1105/tpc.106.043042

Lee KJI, Calder GM, Hindle CR, Newman JL, Robinson SN, Avondo JJHY, Coen ES (2016) Macro optical projection tomography for large scale 3D imaging of plant structures and gene activity. J Exp Bot erw452. https://doi.org/10.1093/jxb/erw452

Li H, Shen S (2011) The mechanical properties of bamboo and vascular bundles. J Mater Res 26:2749–2756. https://doi.org/10.1557/jmr.2011.314

Liese W (1998) The anatomy of bamboo culms. Brill, Beijing

López M, Rubio R, Martín S, Croxford B (2017) How plants inspire façades. From plants to architecture: biomimetic principles for the development of adaptive architectural envelopes. Renew Sustain Energy Rev 67:692–703. https://doi.org/10.1016/j.rser.2016.09.018

Ma J, Chen W, Zhao L, Zhao D (2008) Elastic buckling of bionic cylindrical shells based on bamboo. J Bionic Eng 5:231–238. https://doi.org/10.1016/S1672-6529(08)60029-3

Masters BR (2009) History of the electron microscope in cell biology. In: Encyclopedia of life sciences. Wiley, Chichester, UK

Mathers AW, Hepworth C, Baillie AL, Sloan J, Jones H, Lundgren M, Fleming AJ, Mooney SJ, Sturrock CJ (2018) Investigating the microstructure of plant leaves in 3D with lab-based X-ray computed tomography. Plant Methods 14:99. https://doi.org/10.1186/s13007-018-0367-7

Matsunaga KKS, Manchester SR, Srivastava R, Kapgate DK, Smith SY (2019) Fossil palm fruits from India indicate a Cretaceous origin of Arecaceae tribe Borasseae. Bot J Linn Soc 190:260–280. https://doi.org/10.1093/botlinnean/boz019

Meineke EK, Davies TJ, Daru BH, Davis CC (2019) Biological collections for understanding biodiversity in the Anthropocene. Philos Trans R Soc B Biol Sci 374:20170386. https://doi.org/10.1098/rstb.2017.0386

Meixner M, Foerst P, Windt CW (2021) Reduced spatial resolution MRI suffices to image and quantify drought induced embolism formation in trees. Plant Methods 17:38. https://doi.org/10.1186/s13007-021-00732-7

Metzner R, Eggert A, van Dusschoten D, Pflugfelder D, Gerth S, Schurr U, Uhlmann N, Jahnke S (2015) Direct comparison of MRI and X-ray CT technologies for 3D imaging of root systems in soil: potential and challenges for root trait quantification. Plant Methods 11:17. https://doi.org/10.1186/s13007-015-0060-z

Metzner R, van Dusschoten D, Bühler J, Schurr U, Jahnke S (2014) Belowground plant development measured with magnetic resonance imaging (MRI): exploiting the potential for non-invasive trait quantification using sugar beet as a proxy. Front Plant Sci 5:469. https://doi.org/10.3389/fpls.2014.00469

Möller M, Höfele P, Kiesel A, Speck O (2021) Reactions of sciences to the anthropocene. Elem Sci Anthr 9. https://doi.org/10.1525/elementa.2021.035

Morozov D, Tal I, Pisanty O, Shani E, Cohen Y (2017) Studying microstructure and microstructural changes in plant tissues by advanced diffusion magnetic resonance imaging techniques. J Exp Bot 68:2245–2257. https://doi.org/10.1093/jxb/erx106

Nicolescu B (2010) Methodology of transdisciplinarity—Levels of reality, logic of the included middle and complexity. Transdiscip J Eng Sci 1:19–38

Niklas KJ (1992) Plant biomechanics: an engineering approach to plant form and function. University of Chicago Press, Chicago, USA

Niklas KJ, Spatz H-C (2012) Plant physics. University of Chicago Press, Chicago

Nogueira FM, Kuhn SA, Palombini FL, Rua GH, Andrello AC, Appoloni CR, Mariath JEA (2017) Tank-inflorescence in Nidularium innocentii (Bromeliaceae): three-dimensional model and development. Bot J Linn Soc 185:413–424. https://doi.org/10.1093/botlinnean/box059

Nogueira FM, Palombini FL, Kuhn SA, Oliveira BF, Mariath JEA (2019) Heat transfer in the tank-inflorescence of Nidularium innocentii (Bromeliaceae): experimental and finite element analysis based on X-ray microtomography. Micron 124:102714. https://doi.org/10.1016/j.micron.2019.102714

Nogueira FM, Palombini FL, Kuhn SA, Rua GH, Mariath JEA (2021) The inflorescence architecture in Nidularioid genera: understanding the structure of congested inflorescences in Bromeliaceae. Flora 284:151934. https://doi.org/10.1016/j.flora.2021.151934

Orhan K (ed) (2020) Micro-computed tomography (micro-CT) in medicine and engineering. Springer International Publishing, Cham

Overney N, Overney G (2011) The history of photomicrography

Paddock SW (2000) Principles and practices of laser scanning confocal microscopy. Mol Biotechnol 16:127–150. https://doi.org/10.1385/MB:16:2:127