Abstract

Shot boundary detection is the first and most crucial step towards video content management applications, including indexing, retrieval and summarisation. In this paper, an abrupt transition detection algorithm is proposed based on the phase congruency (PC) feature of the frames. The PC feature is insensitive to illumination variation and to changes in contrast and scale. In addition, it captures the edges, corners and structural information of the frames. Motivated by this, a PC-based similarity measure is proposed for illumination insensitive video cut detection. The proposed approach is experimentally validated against standard algorithms available in the literature using the TRECVid data set and other publicly available videos. The favourable results are in agreement with the proposed model.

1 Introduction

The development of multimedia technology has made plenty of high-performance, easy-to-operate video capturing devices available to the general public at affordable cost. As a result, a huge volume of video data is created every day, and videos from different sources are uploaded, downloaded and viewed at a very high rate. This has led to an escalation of digital video information in cyberspace, and this huge volume of video data is also easily accessible to the general public. There is therefore a substantial need for efficient management of video information, from video indexing, retrieval and classification to summarisation [1]. The hierarchical levels of video structure, from bottom to top, are frames, shots, scenes and stories. A combination of shots forms a scene, a combination of scenes forms a story, and stories in turn combine to produce a video. Shot boundary detection is the first and most crucial step towards this goal. It divides the video data into groups of semantically related frames sharing the same content. The boundary between two consecutive shots is generally a hard cut or a gradual transition (GT). Hard cuts, also called cut transitions, are observed to be more predominant in videos than gradual transitions [2].

2 Review of Literature

Many algorithms have been developed in the literature for automatic detection of the type and location of transitions. However, sudden illumination changes such as flashlights and stage effects, as well as high-speed object or camera motion, can be mistaken for a shot change, leading to false detections. Sudden changes in illumination and high-motion scenes are common in fantasy and thriller movies, news reports and sports videos, where they are intentionally included to make the video attractive to the audience. It is therefore crucial to eliminate the influence of these effects on shot change detection. To ensure this, a suitable feature needs to be extracted, followed by formulation of a similarity measure and shot boundary detection (SBD). Many contributions on video SBD can be found in the literature [3,4,5]. Features deployed for SBD include pixel intensity [6], histogram [7], edge, SURF and SIFT features [8]. Intensity-based features are found to be more sensitive to large motion and light variation. Histogram-based algorithms handle motion and illumination variation comparatively better than intensity-based approaches. Besides these, various edge and gradient-based features can be found in the literature. Edge-based approaches are insensitive to small variations in light; however, in videos with large illumination variations the edge features tend to be destroyed, leading to false transitions. To improve efficiency, some researchers combined multiple features [9,10,11] for transition detection. Many authors proposed shot boundary techniques based on new feature spaces. LBPHF [12], LBP [13], CSLBP [14] and LDP [15], deployed for shot boundary detection, are efficient in handling videos with sudden changes in lighting conditions. As an improvement on the LBP-based method, Chakraborty et al. [16] proposed an LTP-based approach in the Lab colour space for SBD under high object and camera motion, with lower sensitivity to noise and illumination.

In the current work, the illumination insensitivity of phase congruency (PC) is explored to develop a new feature similarity (FS) measure, i.e. PCFS. The proposed PCFS outperforms histogram and LBP-based feature similarity measures. This paper is arranged as follows: Sect. 2 describes the related work. Section 3 introduces image feature extraction using phase congruency and its significance for the current problem. Section 4 presents the algorithm for developing the similarity measure for transition detection. Section 5 discusses the simulation results that support the effectiveness of the extracted feature and the algorithm, followed by conclusions in Sect. 6.

3 Phase Congruency

Phase congruency is derived from the phase information of a signal in its frequency domain representation. The literature indicates that phase is a crucial parameter for the perception of visual features [17]. Further, evidence given by Morrone [18] indicates that the human visual system responds strongly to image locations with highly ordered phase information. Thus, a human tends to sketch an image precisely through the edges and interest points perceived in a scene, which are the points of highest phase order in a frequency-based image representation. The locations in the image where the Fourier components have the highest phase correlation emphasize visually differentiable image features such as edges, lines and Mach bands. This indicates that more informative features can be captured at points of high PC. Thus, the PC model defines features as points in an image with high phase order and uniquely defines the image luminance function. The PC value lies between 0 and 1. It is a dimensionless quantity and is invariant to the scale, illumination and contrast of an image [19, 20]. It allows a wide range of feature types to be detected within a single framework. PC captures edge, corner, structure and contour information of objects. Gradient-based edge detection operators are sensitive to illumination variations and do not localize features accurately and consistently, a limitation that PC-based features overcome. Moreover, PC mimics the response of human visual perception to contours. Yu et al. [21] showed that it is well capable of distinguishing the structural information content of a scene.

PC can be defined through the frequency response of the log Gabor filter, which is given by the following transfer function
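As a hedged reconstruction, a commonly used polar form of the 2D log Gabor transfer function is sketched below. It is not necessarily the authors' exact expression, and the radial and angular bandwidth parameters \(\sigma _r\) and \(\sigma _\theta \) are symbols assumed here for illustration.

```latex
\[
G(\omega ,\theta ) =
\exp\!\left(-\frac{\left(\log (\omega /\omega _0)\right)^{2}}{2\sigma _r^{2}}\right)
\cdot
\exp\!\left(-\frac{(\theta -\theta _0)^{2}}{2\sigma _\theta ^{2}}\right)
\tag{1}
\]
```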

where \(\omega _0\) is the centre frequency of the filter and \(\theta _0\) is the orientation of the filter.

For an image f(x, y) with \(M_{so}^{ev}\) and \(M_{so}^{od}\) as the even symmetric and odd symmetric components of the log Gabor filter at scale \(\mathbf {s}\) and orientation \(\mathbf {o}\), the responses of the two quadrature pair filters are given by \(ev_{so}(x,y)\) and \(od_{so}(x,y)\), respectively, in (2)

The amplitude at scale \(\mathbf {s}\) and orientation \(\mathbf {o}\) is given by (3)

Hence, the phase congruency representation of an image f(x, y) in the simplest form without considering weight and noise component is given by (4)
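For reference, the standard forms that match the symbols defined above are sketched here. They follow the usual log Gabor formulation of PC and are offered as an assumption about (2) to (4), not as a verbatim restatement; the symbol \(*\) denotes 2D convolution.

```latex
\[
\left[ ev_{so}(x,y),\; od_{so}(x,y) \right]
  = \left[ f(x,y) * M_{so}^{ev},\; f(x,y) * M_{so}^{od} \right]
\tag{2}
\]
\[
A_{so}(x,y) = \sqrt{ ev_{so}(x,y)^{2} + od_{so}(x,y)^{2} }
\tag{3}
\]
\[
PC(x,y) =
\frac{\sum_{o} \sqrt{ \left( \sum_{s} ev_{so}(x,y) \right)^{2}
                    + \left( \sum_{s} od_{so}(x,y) \right)^{2} }}
     {\epsilon + \sum_{o} \sum_{s} A_{so}(x,y)}
\tag{4}
\]
```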

Here, \(\mathbf {\epsilon }\) is a small constant that avoids a zero denominator in (4). The phase congruency (PC) representation is a frequency-based model of visual information. It supposes that, instead of processing visual data spatially, the visual system can do similar processing via the phase and amplitude of the individual frequency components in a signal. In PC evaluation, frequency domain processing is achieved through the Fourier transform. Kovesi [22] showed that corners and edges are well detected using PC. Kovesi formulated PC via a log Gabor filter function. In contrast to the Gabor function, it maintains zero DC for arbitrarily large bandwidth. Moreover, the log Gabor function has an extended tail in the higher-frequency region, which preserves the high-frequency details in the image [23]. The problem of detecting abrupt shot transitions in videos has not been addressed earlier using phase congruency features.

To show the features captured by PC, we consider a synthetic image with prominent edges and corners, given in Fig. 1a. The corresponding edge strength, corner strength and complete PC map are illustrated in Fig. 1b, c and d, respectively. Edges and corners are well captured through PC. To illustrate the illumination insensitivity of the PC feature map, we consider two consecutive frames, the 165th and 166th frames of the video “Littlemiss sunshine”, of which the 166th frame is exposed to a flashlight. The original frames and the corresponding PC feature frames are given in Fig. 2a and b, respectively. It is clearly visible from the figure that the PC feature frames of frames 165 and 166 are very similar and little affected by the illumination variation caused by the flashlight. PC is therefore suitable for developing an illumination insensitive similarity measure for abrupt transition detection.

Motivated by these characteristics of the PC feature, we use it for the shot boundary detection problem. Towards this goal, this paper introduces PC-based feature extraction for representing the frame content of a video and for illumination insensitive shot boundary detection.
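The illumination insensitivity described in this section can be illustrated on a one-dimensional signal. The following is a minimal sketch, not the authors' implementation: it evaluates the simplified, unweighted PC ratio for a 1D signal with a single orientation, using a naive DFT. The function names, the bandwidth `sigma_r`, the number of scales and the wavelength settings are all assumptions chosen for illustration.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform; adequate for short signals."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Naive inverse DFT returning complex samples."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def log_gabor(n, omega0, sigma_r=0.6):
    """Radial log Gabor gain on n DFT bins, positive frequencies only.

    The DC bin stays at zero (the zero-DC property of the log Gabor filter).
    sigma_r is an assumed bandwidth parameter.
    """
    gain = [0.0] * n
    for k in range(1, n // 2):
        omega = k / n  # normalised frequency in cycles per sample
        gain[k] = math.exp(-(math.log(omega / omega0) ** 2) / (2 * sigma_r ** 2))
    return gain


def phase_congruency_1d(signal, n_scales=4, min_wavelength=4, mult=2.0, eps=1e-4):
    """Unweighted PC: |sum of complex responses| / (eps + sum of amplitudes)."""
    n = len(signal)
    spectrum = dft(signal)
    sum_ev, sum_od, sum_amp = [0.0] * n, [0.0] * n, [0.0] * n
    for s in range(n_scales):
        omega0 = 1.0 / (min_wavelength * mult ** s)  # centre frequency at scale s
        gain = log_gabor(n, omega0)
        # One-sided filtering: real part = even response, imaginary part = odd response.
        response = idft([2 * gain[k] * spectrum[k] for k in range(n)])
        for x, r in enumerate(response):
            sum_ev[x] += r.real
            sum_od[x] += r.imag
            sum_amp[x] += abs(r)
    return [math.hypot(sum_ev[x], sum_od[x]) / (eps + sum_amp[x]) for x in range(n)]


step = [0.0] * 32 + [1.0] * 32           # dark-to-bright edge at sample 32
bright = [3.0 * v + 5.0 for v in step]   # same edge under a hypothetical gain and offset
pc_step = phase_congruency_1d(step)
pc_bright = phase_congruency_1d(bright)
print(round(pc_step[32], 3), round(pc_bright[32], 3))
```

The two printed values should be nearly identical, because a gain scales the numerator and denominator equally and an offset only affects the DC bin, which the filter suppresses. Since the weighting and noise terms are omitted, values far from the edge are not meaningful in this sketch.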

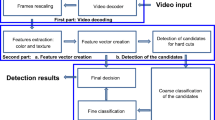

4 Proposed PC-Based CUT Detection

In this section, the proposed PC-based abrupt transition detection algorithm is explained. The PC feature-based similarity (PCFS) between consecutive PC feature frames is given by (5).

Here, \(PCFS(t,t+1)\) is the PC-based feature similarity measure between the tth and \((t+1)\)th frames, \(PC_t\) and \(PC_{t+1}\) are the PC feature frames of the tth and \((t+1)\)th frames, respectively, and Row and Col are the numbers of rows and columns in the image. For abrupt transition (AT) detection, the PC-based similarity is compared with a threshold Th as given by Zhang et al. [7].

where \(\beta \) is a constant whose value lies between 4 and 8, and \(\mu _s\) and \( \sigma _{s} \) are the average and standard deviation of the PC feature-based similarity values.
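The thresholding step can be sketched as follows, under loudly stated assumptions. The function `pcfs` uses a hypothetical pixelwise ratio averaged over Row × Col as a stand-in for the similarity in (5), and `detect_cuts` assumes a mean-plus-\(\beta \)-standard-deviations rule applied to the dissimilarity 1 − PCFS. Neither form is confirmed as the authors' exact definition, and the constant `c` and both function names are illustrative.

```python
from statistics import mean, pstdev


def pcfs(pc_a, pc_b, c=0.01):
    """Assumed similarity between two PC maps given as 2D lists of floats.

    Each pixel contributes (2ab + c) / (a^2 + b^2 + c), which equals 1 when
    a == b; the result is the average over all Row x Col pixels.
    """
    rows, cols = len(pc_a), len(pc_a[0])
    total = 0.0
    for r in range(rows):
        for col in range(cols):
            a, b = pc_a[r][col], pc_b[r][col]
            total += (2 * a * b + c) / (a * a + b * b + c)
    return total / (rows * cols)


def detect_cuts(similarities, beta=4.0):
    """Return indices t where frames t and t+1 are declared a cut.

    Assumed rule: dissimilarity d = 1 - similarity, and a cut is declared
    when d exceeds mean(d) + beta * std(d) over the whole sequence.
    """
    dissim = [1.0 - s for s in similarities]
    threshold = mean(dissim) + beta * pstdev(dissim)
    return [t for t, d in enumerate(dissim) if d > threshold]


# Hypothetical similarity sequence: stable content with one abrupt drop.
sims = [0.98] * 40
sims[20] = 0.2
print(detect_cuts(sims))
```

A single outlier among n values can lie at most about \(\sqrt{n-1}\) standard deviations from the mean, so larger values of \(\beta \) need correspondingly longer sequences before an isolated cut can exceed the threshold.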

5 Simulations and Result Discussions

To validate the proposed PC-based similarity measure, we considered ten videos of different genres, such as English movies, sitcom, soccer, cartoon and documentary videos, comprising 78,447 frames and 437 cuts in total, collected from [24] and the Internet. Detailed information on the test videos is given in Table 1. The proposed PCFS is compared with the histogram-based similarity approach (ASHD) [7] and the LBP-based similarity approach [14]. Recall (Rec), precision (Pr) and F1-measure are used to evaluate the SBD methods. Table 2 shows that the proposed PCFS achieves the highest average Rec, Pr and F1-measure.
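The three evaluation measures follow their standard definitions: Rec = TP / (TP + FN), Pr = TP / (TP + FP) and F1 = 2 · Pr · Rec / (Pr + Rec). A minimal sketch is given below; the function name is hypothetical, and it assumes that a detection counts as correct only when its frame index matches a ground-truth cut exactly, which may differ from the matching rule used in the experiments.

```python
def evaluate_cuts(detected, ground_truth):
    """Return (recall, precision, f1) for detected versus true cut positions."""
    detected, ground_truth = set(detected), set(ground_truth)
    tp = len(detected & ground_truth)   # correctly detected cuts
    fp = len(detected - ground_truth)   # false detections
    fn = len(ground_truth - detected)   # missed cuts
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    if precision + recall == 0:
        return recall, precision, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return recall, precision, f1


# Hypothetical example: two of four true cuts found, plus two false detections.
rec, pr, f1 = evaluate_cuts([10, 50, 91, 200], [10, 50, 90, 130])
print(rec, pr, f1)
```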

6 Conclusions

In this article, an illumination insensitive abrupt transition detection algorithm based on the phase congruency feature is proposed. Besides illumination insensitivity, this feature is also robust against contrast and scale changes. The performance of the proposed model is validated on publicly available videos and the standard benchmark TRECVid data set. The limitations of using PC are the computational complexity of its evaluation, the large number of parameters that must be set to suit the application, and its sensitivity to image noise. Noise reduction prior to PC evaluation may further improve the results. In future, the PC-based feature can be integrated with other features for efficient detection of gradual transitions.

References

1. Ejaz N, Mehmood I, Baik SW (2014) Feature aggregation based visual attention model for video summarization. Comput Electrical Eng 40(3):993–1005

2. Smeaton AF, Over P, Doherty AR (2010) Video shot boundary detection: seven years of TRECVid activity. Comput Vis Image Understand 114(4):411–418

3. Abdulhussain SH, Ramli AR, Saripan MI, Mahmmod BM, Al-Haddad SAR, Jassim WA (2018) Methods and challenges in shot boundary detection: a review. Entropy 20(4)

4. Pal G, Rudrapaul D, Acharjee S, Ray R, Chakraborty S, Dey N (2015) Video shot boundary detection: a review. In: Emerging ICT for bridging the future, proceedings of the 49th annual convention of the computer society of India (CSI), vol 338, pp 119–127

5. SenGupta A, Singh KM, Thounaojam DM, Roy S (2015) Video shot boundary detection: a review. In: IEEE international conference on electrical, computer and communication technologies (ICECCT)

6. Lu Z, Shi Y (2013) Fast video shot boundary detection based on SVD and pattern matching. IEEE Trans Image Process 22(12):5136–5145

7. Zhang HJ, Kankanhalli A, Smoliar SW (1993) Automatic partitioning of full motion video. Multimed Syst 1(1):10–28

8. Birinci M, Kiranyaz S (2014) A perceptual scheme for fully automatic video shot boundary detection. Signal Process Image Commun 29(3):410–423

9. Lakshmi Priya GG, Domnic S (2014) Walsh-Hadamard transform kernel-based feature vector for shot boundary detection. IEEE Trans Image Process 23(12):5187–5197

10. Chakraborty S, Thounaojam DM (2021) SBD-Duo: a dual stage shot boundary detection technique robust to motion and illumination effect. Multimed Tools Appl 80:3071–3087

11. Duan FF, Meng F (2020) Video shot boundary detection based on feature fusion and clustering technique. IEEE Access 8:214633–214645

12. Singh A, Thounaojam DM, Chakraborty S (2019) A novel automatic shot boundary detection algorithm: robust to illumination and motion effect. Signal Image Video Process

13. Kar T, Kanungo P (2015) A texture based method for scene change detection. In: 2015 IEEE power, communication and information technology conference (PCITC), pp 72–77, 15–17 October 2015

14. Kar T, Kanungo P (2015) Cut detection using block based centre symmetric local binary pattern. In: 2015 international conference on man and machine interfacing (MAMI), pp 1–5, 17–19 December 2015

15. Kar T, Kanungo P (2019) Abrupt scene change detection using block based local directional pattern. In: Data management, analytics and innovation proceedings of ICDMAI, vol 2, pp 191–203, January 18–20 2019

16. Chakraborty S, Thounaojam DM, Singh A (2021) A novel bifold-stage shot boundary detection algorithm: invariant to motion and illumination. Visual Comput

17. Xiao Z, Hou Z (2004) Phase based feature detector consistent with human visual system characteristics. Pattern Recogn Lett 25(10):1115–1121

18. Morrone MC, Burr DC (1988) Feature detection in human vision: a phase dependent energy model. In: Proceedings of the royal society of London, biological sciences, vol 235 of B. The Royal society, pp 221–245

19. Kovesi P (1999) Image features from phase congruency. Videre J Comput Vis Res 1:1–26

20. Yu G, Zhao S (2020) A new feature descriptor for multimodal image registration using phase congruency. Sensors

21. Yu J, Sato Y (2015) Structure-preserving image smoothing via phase congruency-aware weighted least square. In: Stam J, Mitra NJ, Xu K (eds) Proceedings Pacific graphics short papers. The Eurographics Association

22. Kovesi P (2003) Phase congruency detects corners and edges. In: Sun T, Ourselin, Adriaansen (eds) Digital image computing: techniques and applications. Sydney, Australia edn, vol 1. CSIRO Publishing, Victoria, pp 309–318

23. Morrone MC, Owens RA (1987) Feature detection from local energy. Pattern Recognit Lett 6(5):303–313

24. The open video project. [online] Available at: http://www.open-video.org

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

Cite this paper

Kar, T., Kanungo, P., Jha, V. (2022). Illumination Insensitive Video Cut Detection Using Phase Congruency. In: Mandal, J.K., Hsiung, PA., Sankar Dhar, R. (eds) Topical Drifts in Intelligent Computing. ICCTA 2021. Lecture Notes in Networks and Systems, vol 426. Springer, Singapore. https://doi.org/10.1007/978-981-19-0745-6_34

DOI: https://doi.org/10.1007/978-981-19-0745-6_34

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-0744-9

Online ISBN: 978-981-19-0745-6

eBook Packages: Engineering, Engineering (R0)