Abstract

Diagnosing skin cancer at an early stage remains a major challenge even in the twenty-first century, because the diagnostic techniques currently in use are complex and expensive. Furthermore, traditional detection techniques depend heavily on human interpretation. For fatal diseases such as melanoma, early detection plays a vital role in determining the probability of a cure. Several techniques, such as dermoscopy, thermography and sonography, are used for skin cancer detection, but each has its own limitations. It is also not feasible for every suspected patient to receive intensive screening by dermatologists. These limitations point to the need for a simpler, cheaper, minimally invasive and accurate methodology for skin cancer detection that does not depend on human intervention. Advances in computer vision algorithms have led to their extensive use in bioinformatics. This paper therefore aims to address the early detection of skin cancer with higher accuracy than existing methodologies using computer vision.

1 Introduction

In recent years, skin cancer has become one of the most dangerous diseases affecting human beings. According to the World Health Organization, total fatalities amounted to nearly 1 million in 2018. Furthermore, human exposure to ultraviolet rays has increased due to climate change and ozone layer depletion, and this exposure is one of the major causes of the surge in skin cancer cases.

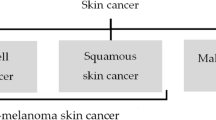

Skin cancer is broadly classified into basal cell carcinoma, squamous cell carcinoma and melanoma. Basal cell carcinoma is the least hazardous of these types, as it does not spread from the skin to other parts of the body, and early diagnosis and treatment can cure it in most cases. Squamous cell carcinoma and melanoma, by contrast, are life threatening. Although melanoma is less common than the other types of skin cancer, its tendency to spread through the body poses a greater threat and mostly results in death. Melanoma mainly occurs on the skin, but it can also originate in the eyes, brain or lymph nodes (Fig. 1).

It is necessary to detect skin cancer at an early stage, as early detection improves a person's chances of survival during treatment. Staging helps determine whether the cancer has spread to other parts of the body. As cancer cells grow deeper into the layers of the skin, the disease can become life threatening.

There are many conventional techniques for detecting skin cancer, such as biopsy, Raman spectroscopy, tape stripping and dermoscopy, but each has its own limitations and drawbacks. For instance, dermoscopy requires a high-resolution camera and years of experience. Similarly, Raman spectroscopy uses complex algorithms and involves a series of processing stages, which consumes considerable time and resources. The tape stripping technique needs a large number of genetic profiles to differentiate melanoma from non-melanoma cases, and it may also cause irritation to patients. Biopsy, on the other hand, requires surgical intervention. All of these conventional techniques depend heavily on dermatologists or expert technicians for their results, which raises the chance of human error and makes early detection difficult, leading to a higher mortality rate. Hence, there is a need for accurate and reliable techniques for early detection, which will not only help clinicians but also prove to be a vital factor in the treatment of skin cancer.

One such advanced method for the detection of skin cancer is computer vision. Computers can analyze the large amounts of data available from dermoscopic images. When this data is fed into a computer vision algorithm, an appreciably accurate prediction can be made as to whether a skin mole is malignant or benign. Therefore, this research work proposes a computer-aided, non-invasive diagnostic technique for early detection of skin cancer using computer vision.

2 Literature Review

Many researchers have used computer vision techniques in an effort to build precise and accurate models for skin cancer detection.

Nahata et al. [1] developed multiple convolutional neural network (CNN)-based computer vision models using architectures such as ResNet50, MobileNet, VGG16, Inception V3 and Inception Resnet to classify the type of skin cancer and detect it early. The accuracy of these models ranges from 85% to 91%.

Furthermore, Hameed et al. [2] performed multi-class classification on skin lesion images to categorize them as healthy, acne, eczema, benign or malignant. The classifier combines traditional machine learning and deep learning, using two algorithms: an error-correcting output code (ECOC) support vector machine (SVM) and a deep convolutional neural network. The proposed methodology achieved an accuracy of about 86%. It was evaluated on 2742 classified images collected from different sources, but the technique is cumbersome.

Kaur et al. [3] built a CNN-based model with k-fold cross-validation for a multi-class classification problem covering seven different types of dermoscopic lesion images. The model reached 80% accuracy after 150 epochs.

Similarly, Nasr et al. [4] proposed a convolutional neural network-based architecture to predict whether a given clinical image is malignant or benign. The input non-dermoscopic images are first preprocessed with image processing techniques to remove illumination and other artifacts. The algorithm attained an overall accuracy of 81%.

However, the accuracies reported above suggest that a more efficient model with higher accuracy than these existing works can still be developed.

3 Experimentation and Methodology

This work explores a family of convolutional neural networks named EfficientNet, with fine-tuned hyperparameters and rectified Adam [5] optimization, with the aim of reaching state-of-the-art accuracy in image classification. EfficientNet models outperform conventional convolutional neural network models in both accuracy and efficiency across a range of scales.

3.1 Dataset

The project uses a dataset from the Kaggle repository contributed by Fanconi [6], which consists of 3297 medical images of skin moles preprocessed using Ben and Cropping Technique. The dataset is split into training and test sets by manual categorical sorting: the training set consists of 2637 images and the test set of 660 images.
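As a quick sanity check on the figures above, the following minimal sketch confirms that the two subsets add up to the full dataset and reports the resulting split ratio. The constant and function names are illustrative and do not come from the original project.

```python
# Image counts as reported in Sect. 3.1 (names are illustrative).
TOTAL_IMAGES = 3297
TRAIN_IMAGES = 2637
TEST_IMAGES = 660


def split_fractions(n_train, n_test):
    """Return the (train, test) fractions of the combined dataset."""
    total = n_train + n_test
    return n_train / total, n_test / total


train_frac, test_frac = split_fractions(TRAIN_IMAGES, TEST_IMAGES)
print(f"train: {train_frac:.1%}, test: {test_frac:.1%}")
# prints "train: 80.0%, test: 20.0%"
```

The split is therefore close to the common 80/20 convention.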

3.2 IDE and Tools Used

This project was created in Python using the Jupyter Notebook Integrated Development Environment (IDE). Jupyter Notebook is a free, open-source, web-based application for creating documents that combine live code, images, mathematical equations and text. The primary Python libraries used in this project are NumPy, TensorFlow, scikit-learn, Keras and pandas.

3.3 EfficientNetB0 CNN Model and Rectified Adam Optimizer

EfficientNetB0 model. The proposed algorithm is based on the EfficientNetB0 model, which is developed by a multi-objective neural architecture search that optimizes both the network's accuracy and its efficiency, measured in floating-point operations (FLOPS). The baseline network is created using the AutoML MNAS framework to execute this search. The resultant architecture uses mobile inverted bottleneck convolution (MBConv), similar to MobileNetV2 and MnasNet. This baseline network is then scaled up to obtain a family of models named EfficientNets (Fig. 2).

Fig. 2 EfficientNet architecture. Source: Tan et al. [7]

Rectified Adam Optimizer. A major disadvantage of conventional adaptive optimizers such as Adam and RMSProp is that they risk converging to poor local optima when used without a warm-up method. The need for warm-up arises because the adaptive learning rate has too much variance, especially in the early stages of training, so the optimizer makes overly large leaps based on insufficient training data and ends up in poor local optima.

However, since the degree of warm-up required is unknown and varies from dataset to dataset, rectified Adam introduces an algorithm for dynamic variance reduction. The result is a rectifier that allows the adaptive momentum to build up slowly but steadily to full expression as the underlying variance settles. Depending on the divergence of the variance, rectified Adam activates or deactivates the adaptive learning rate in real time. In short, it provides a dynamic warm-up with no tunable parameters. In addition, rectified Adam has been shown to be more robust to learning rate variations and to deliver better training accuracy and generalization on a range of datasets and across a variety of AI architectures (Fig. 3).

Fig. 3 Performance of the Adam optimizer with and without warm-up. Source: Wright et al. [8]
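The rectifier described above can be illustrated with a minimal sketch of the variance rectification term from Liu et al. [5]. The function name is illustrative, the default `beta2 = 0.999` is the usual Adam default rather than a value stated in this paper, and the threshold of 4 follows the formulation in [5] (some library implementations use a slightly different cut-off).

```python
import math


def radam_rectification(step, beta2=0.999):
    """Return the rectification factor r_t for a 1-based training step.

    Returns None when the variance of the adaptive learning rate is not
    yet tractable, in which case RAdam falls back to an un-adapted
    (momentum-only) update for that step.
    """
    # Maximum length of the approximated simple moving average.
    rho_inf = 2.0 / (1.0 - beta2) - 1.0
    beta2_t = beta2 ** step
    # Length of the approximated simple moving average at this step.
    rho_t = rho_inf - 2.0 * step * beta2_t / (1.0 - beta2_t)
    if rho_t <= 4.0:
        return None  # adaptive learning rate deactivated
    numerator = (rho_t - 4.0) * (rho_t - 2.0) * rho_inf
    denominator = (rho_inf - 4.0) * (rho_inf - 2.0) * rho_t
    return math.sqrt(numerator / denominator)


for step in (1, 10, 100, 1000, 10000):
    print(step, radam_rectification(step))
```

For the first few steps the function returns `None`, which corresponds to the adaptive learning rate being switched off. Afterwards the factor grows from a small value towards 1, which acts as an automatic warm-up.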

3.4 Procedure

1. Importing the images from a local machine and converting them into an equivalent array of RGB values using a NumPy function.
2. Defining the target variable and one hot encoding it.
3. Performing image augmentation and normalization of RGB values.
4. Defining the build model function (Global Average 2D Pooling, Dropout = 0.5).
5. Defining the rectified Adam (RAdam) optimizer with learning rate = 1e-3, min learning rate = 1e-7, and warm-up proportion = 0.15.
6. Building the EfficientNetB0 model with ‘RAdam’ as optimizer, evaluation metrics being ‘accuracy’ and ‘categorical cross entropy’ as the loss function.
7. Defining a learning rate reducer and fitting the model on training dataset with a validation split of 20%.
8. Making prediction on test data and evaluation of accuracy with generation of classification report.
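Steps 2, 3 and 5 of the procedure above can be sketched in plain NumPy as follows. This is a minimal illustration under stated assumptions, not the original project code: the label convention (0 = benign, 1 = malignant), the rescaling to [0, 1], and the linear warm-up followed by linear decay are assumptions. The schedule mirrors how some open-source RAdam implementations interpret a learning rate, a minimum learning rate and a warm-up proportion.

```python
import numpy as np


def one_hot(labels, num_classes=2):
    """Step 2: map integer labels to one-hot rows.

    Assumed convention: 0 = benign, 1 = malignant.
    """
    labels = np.asarray(labels, dtype=int)
    return np.eye(num_classes, dtype=np.float32)[labels]


def normalize_rgb(images):
    """Step 3: rescale 8-bit RGB values from [0, 255] to [0.0, 1.0] (assumed range)."""
    return np.asarray(images, dtype=np.float32) / 255.0


def warmup_lr(step, total_steps, lr=1e-3, min_lr=1e-7, warmup_proportion=0.15):
    """Step 5: linear warm-up to lr, then linear decay to min_lr (assumed schedule)."""
    warmup_steps = total_steps * warmup_proportion
    if step <= warmup_steps:
        return lr * step / warmup_steps
    decay_steps = max(total_steps - warmup_steps, 1)
    progress = min(step - warmup_steps, decay_steps) / decay_steps
    return lr + (min_lr - lr) * progress


print(one_hot([0, 1, 1]))
print(normalize_rgb(np.array([[0, 128, 255]], dtype=np.uint8)))
print([warmup_lr(s, total_steps=1000) for s in (0, 75, 150, 1000)])
```

With a warm-up proportion of 0.15 and 1000 total steps, the learning rate rises linearly over the first 150 steps and then decays towards the minimum learning rate.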

4 Results and Observations

Training of the model reveals that the accuracy increases, while the validation loss decreases, as the number of epochs increases. Figure 4 shows the variation of training accuracy and validation accuracy with the number of epochs. The accuracy keeps increasing and becomes roughly constant after the 25th epoch.

A computer vision model should be evaluated not only on accuracy but also on specificity and sensitivity, especially if the model is meant to be used for clinical purposes. In a clinical setting, we are concerned not only with the number of positive cases detected but also with how many actual positive patients were classified as negative and vice versa. High sensitivity implies that the true positive rate is high and the false negative rate is low, i.e., very few cancer patients go undetected. Similarly, high specificity implies that the true negative rate is high and the false positive rate is low, i.e., there is very little chance of a healthy individual being falsely detected as a cancer patient.
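The two quantities can be computed directly from the confusion-matrix counts. The sketch below uses a small set of made-up labels purely for illustration; they are not results from this study, and the function name is hypothetical.

```python
def sensitivity_specificity(y_true, y_pred, positive=1):
    """Return (sensitivity, specificity) for binary labels."""
    tp = fn = tn = fp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == positive:
            if pred == positive:
                tp += 1  # cancer case correctly detected
            else:
                fn += 1  # cancer case missed
        else:
            if pred == positive:
                fp += 1  # healthy case falsely flagged
            else:
                tn += 1  # healthy case correctly cleared
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


# Toy labels (1 = malignant, 0 = benign), for illustration only.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
s, sp = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity={s:.2f}, specificity={sp:.2f}")
# prints "sensitivity=0.75, specificity=0.83"
```

In this toy example one of four malignant cases is missed (sensitivity 0.75) and one of six benign cases is falsely flagged (specificity 5/6).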

The receiver operating characteristic (ROC) curve is one such evaluation tool; it depicts the trade-off between the true positive rate and the false positive rate. The area under the ROC curve (AUC) indicates whether the model under test is useful for a given problem. In general, an AUC greater than 0.7 makes a model acceptable, while a value below 0.5 is considered worthless. An AUC of 0.8–0.9 is considered excellent, and a value greater than 0.9 is considered outstanding. Figure 5 shows the ROC curve of our proposed algorithm. Since the area under the curve is greater than 0.9, the model can be rated as outstanding for skin cancer detection. The appreciably high precision and recall values shown in Fig. 6 confirm the same.
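For readers who want to reproduce an AUC value, the sketch below computes it with the pairwise-ranking formulation, which is equivalent to the area under the ROC curve: the AUC is the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one, with ties counted as one half. The function name and the toy scores are illustrative and are not outputs of the proposed model.

```python
import numpy as np


def roc_auc(y_true, scores):
    """Area under the ROC curve via pairwise comparison of scores."""
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos = scores[y_true]
    neg = scores[~y_true]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("both classes must be present to compute AUC")
    # Compare every positive score with every negative score.
    diff = pos[:, None] - neg[None, :]
    wins = np.sum(diff > 0) + 0.5 * np.sum(diff == 0)
    return float(wins / (pos.size * neg.size))


# Toy example: three of the four positive/negative pairs are ranked correctly.
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
# prints 0.75
```

The pairwise comparison needs memory proportional to the number of positive cases times the number of negative cases, which is acceptable for a test set of a few hundred images.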

Table 1 compares our work with prior studies in the same subject. We found from the table that our algorithm provides good accuracy.

Figure 7 shows the actual case vs. the predicted result of our model. It can be seen clearly that the predicted results match with the actual results.

5 Conclusion and Further Discussion

Our research indicates that the EfficientNetB0 model, when optimized using the rectified Adam optimizer, performs extremely well for skin cancer detection. Moreover, higher accuracy is achieved in fewer epochs than with existing computer vision methodologies implemented for the same goal. Furthermore, it is a non-invasive technique that requires only an image of the patient's skin mole, and the result is displayed within a few seconds, overcoming the limitations of traditional testing procedures. Therefore, it is recommended to use this model in clinical testing setups wherever feasible, after more rigorous training on a larger dataset, which is expected to enhance its accuracy further.

References

1. Nahata H, Singh SP (2020) Deep learning solutions for skin cancer detection and diagnosis. In: Jain V, Chatterjee J (eds) Machine learning with health care perspective. Learning and analytics in intelligent systems, vol 13. Springer, Cham. https://doi.org/10.1007/978-3-030-40850-3_8

2. Hameed N, Shabut AM, Hossain MA (2018) Multi-class skin diseases classification using deep convolutional neural network and support vector machine. In: 2018 12th international conference on software, knowledge, information management and applications (SKIMA), pp 1–7. https://doi.org/10.1109/SKIMA.2018.8631525

3. Kaur K, Boddu L, Fiaidhi J (2020) Computer vision for skin cancer detection and diagnosis. TechRxiv. Preprint. https://doi.org/10.36227/techrxiv.12089514.v1

4. Nasr Esfahani E, Samavi S, Karimi N, Soroushmehr SMR, Jafari MH, Ward K, Najarian K (2016) Melanoma detection by analysis of clinical images using convolutional neural network. In: Annual international conference of the IEEE engineering in medicine and biology society (EMBC). https://doi.org/10.1109/EMBC.2016.7590963

5. Liu L et al (2019) On the variance of the adaptive learning rate and beyond. arXiv:1908.03265

6. Fanconi C (2021) Skin cancer: malignant vs. benign. Kaggle. https://www.kaggle.com/fanconic/skin-cancer-malignant-vs-benign

7. Tan M, Le QV (2019, May 29) EfficientNet: improving accuracy and efficiency through AutoML and model scaling. Google AI Blog. https://ai.googleblog.com/2019/05/efficientnet-improving-accuracy-and.html

8. Wright L (2019, Aug 20) New state of the art AI optimizer: rectified Adam (RAdam). Medium. https://lessw.medium.com/new-state-of-the-art-ai-optimizer-rectified-adam-radam-5d854730807b

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Khan, Z., Shubham, T., Arya, R.K. (2022). Skin Cancer Detection Using Computer Vision. In: Mandal, J.K., Hsiung, PA., Sankar Dhar, R. (eds) Topical Drifts in Intelligent Computing. ICCTA 2021. Lecture Notes in Networks and Systems, vol 426. Springer, Singapore. https://doi.org/10.1007/978-981-19-0745-6_1

DOI: https://doi.org/10.1007/978-981-19-0745-6_1

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-0744-9

Online ISBN: 978-981-19-0745-6

eBook Packages: Engineering, Engineering (R0)