Abstract

Due to the proliferation of smart devices with high-speed data communication, video streaming is becoming the dominant application on the Internet. Upcoming requirements for 5G wireless include Quality of Experience (QoE) for streaming and other multimedia applications. Recently, HTTP adaptive streaming (HAS) has gained popularity for streaming applications. However, many challenges still need to be addressed in order to adapt HAS to upcoming 5G wireless networks. In this paper, we explore video streaming. In day-to-day life, we consume an enormous number of videos across various platforms, so understanding the main mechanisms behind the technology is essential for identifying the difficulties that can arise when its components are not fitted together properly. The main objective of this work is to compare the best algorithms or codecs available for video streaming; the primary aim is to test the efficiency of well-known algorithms that are currently in use (MPEG, H.264).


1 Introduction

In today's world, video streaming and video sharing platforms play a very significant role. They serve both as a source for learning new things and as a channel for entertainment. It is remarkable that an ordinary person now has access to such vast resources, largely thanks to these giant technology platforms. Today, anyone can share their views and perspectives on almost any matter without hesitation, and video sharing and streaming platforms play a vital role in this. For the average person, it is easier to understand an idea through a video than through a written document; it saves time and gives better clarity. Large streaming services such as Netflix and Amazon, and video uploading platforms such as Google's YouTube, mostly use H.264 and VP9 (which is open source); these codecs are widely used today and have gradually replaced older formats such as MP4 and AAC [1]. Apart from these mainstream video codecs, there are many less widely used ones, such as the MP4 family of codecs, H.263, HEVC, MPEG-4, and 3GP.

In the beginning, websites had a simple design and layout. In this age of 5G and fiber, a high-speed internet connection makes it easy to watch and upload high-quality videos from anywhere on the globe. In general, streaming is the continuous transmission of audio and/or video files from a source to a destination, where the source and destination follow a client–server model. Like other data, video data is broken down into small packets. Each packet contains a small part of the file, and the video decoder/player on the client side interprets them. In simple terms, the YouTube video you watch or the Netflix series you binge are examples of video streaming [2]. The media is transmitted from the servers to your device over your internet connection. Previously, files had to be downloaded and stored on the device before the user could access them. The downside of this method is that the user has to wait for the download to complete and a copy is stored on the local drive, which is very time consuming. How these large platforms work so efficiently is therefore worth exploring, and a clear understanding can be gained by focusing on the finer details [3].
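The packetization step described above can be sketched in a few lines of Python. This is a minimal illustration only, assuming a hypothetical fixed payload size of 1200 bytes and ignoring headers, loss, and retransmission; real transport protocols define their own packet formats. The sequence number is what lets the receiver restore the original order before the player decodes the data.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    seq: int        # sequence number, used by the receiver to restore order
    payload: bytes  # a small slice of the media file


def packetize(data: bytes, packet_size: int = 1200) -> list[Packet]:
    """Split a media byte stream into fixed-size packets (the last may be shorter).

    packet_size=1200 is a hypothetical value chosen for illustration.
    """
    if packet_size <= 0:
        raise ValueError("packet_size must be positive")
    return [
        Packet(seq=i, payload=data[off:off + packet_size])
        for i, off in enumerate(range(0, len(data), packet_size))
    ]


def reassemble(packets: list[Packet]) -> bytes:
    """Sort packets by sequence number and join their payloads."""
    return b"".join(p.payload for p in sorted(packets, key=lambda p: p.seq))


if __name__ == "__main__":
    media = bytes(range(256)) * 20      # 5120 bytes of stand-in "video" data
    pkts = packetize(media, packet_size=1200)
    print(len(pkts))                     # 5: four 1200-byte packets and one 320-byte packet
    shuffled = pkts[::-1]                # simulate out-of-order arrival
    assert reassemble(shuffled) == media
```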

HTTP (Hypertext Transfer Protocol) Live Streaming, or HLS, is the most widely used streaming protocol in the world. It is widely used for video hosting and live streaming [3]. HLS works by breaking a video into small files and delivering them over the HTTP protocol. The receiving device downloads these files and plays them back as video. HTTP is the application-layer protocol for transferring information between sender and receiver devices connected to a network [4]. Data transfer over HTTP follows the traditional request–response model: almost every HTTP message is either a request or a response to a request.
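To make the HLS delivery model concrete, the sketch below parses a small HLS media playlist (an .m3u8 file) into the list of segment files a player would then request one by one with HTTP GET. The tags #EXTM3U, #EXT-X-TARGETDURATION, #EXTINF, and #EXT-X-ENDLIST come from the HLS specification (RFC 8216); the playlist contents and segment names are hypothetical, and this simplified parser ignores features such as byte ranges, encryption, and master playlists.

```python
# Hypothetical media playlist with three segments.
SAMPLE_PLAYLIST = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:6
#EXT-X-MEDIA-SEQUENCE:0
#EXTINF:6.0,
segment0.ts
#EXTINF:6.0,
segment1.ts
#EXTINF:4.5,
segment2.ts
#EXT-X-ENDLIST
"""


def parse_media_playlist(text: str) -> list[tuple[str, float]]:
    """Return (segment URI, duration in seconds) pairs from an HLS media playlist."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines or lines[0] != "#EXTM3U":
        raise ValueError("not an M3U8 playlist")
    segments = []
    pending_duration = None
    for ln in lines[1:]:
        if ln.startswith("#EXTINF:"):
            # "#EXTINF:<duration>,[<title>]" describes the URI on the next line
            pending_duration = float(ln[len("#EXTINF:"):].split(",", 1)[0])
        elif not ln.startswith("#") and pending_duration is not None:
            segments.append((ln, pending_duration))
            pending_duration = None
    return segments


if __name__ == "__main__":
    for uri, dur in parse_media_playlist(SAMPLE_PLAYLIST):
        # each segment would be fetched with its own HTTP request/response
        print(f"GET {uri}  ({dur} s)")
```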

One of the primary advantages of HLS streaming is that virtually every internet-connected device supports HTTP, which makes HLS very simple to implement. Another advantage of HLS is that the video quality can be increased or decreased depending on the internet speed, so that the video plays without interruption. This feature is known as "adaptive bitrate streaming". Without it, a slow internet connection can prevent the video from starting [5]. HLS was developed by Apple for its own products, but it is now used on a wide range of devices [6].
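Adaptive bitrate selection can be illustrated with an HLS master playlist, which lists the same video at several bitrates through #EXT-X-STREAM-INF tags carrying a BANDWIDTH attribute in bits per second. The following is a simplified sketch, not Apple's or any particular player's algorithm: it assumes the client already has a throughput estimate and applies a hypothetical safety factor of 0.8 before picking the highest variant that fits. The variant URIs and bandwidth values are illustrative.

```python
import re

# Hypothetical master playlist offering three renditions of the same video.
SAMPLE_MASTER = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
low/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2500000,RESOLUTION=1280x720
mid/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080
high/index.m3u8
"""

# Match BANDWIDTH but not AVERAGE-BANDWIDTH.
_BANDWIDTH = re.compile(r"(?<![-A-Z])BANDWIDTH=(\d+)")


def parse_variants(text: str) -> list[tuple[int, str]]:
    """Return (bandwidth in bit/s, variant playlist URI) pairs, lowest first."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    variants = []
    for tag, uri in zip(lines, lines[1:]):
        if tag.startswith("#EXT-X-STREAM-INF:") and not uri.startswith("#"):
            match = _BANDWIDTH.search(tag)
            if match:
                variants.append((int(match.group(1)), uri))
    return sorted(variants)


def choose_variant(variants: list[tuple[int, str]], throughput_bps: float,
                   safety: float = 0.8) -> str:
    """Pick the highest-bandwidth variant that fits within safety * throughput."""
    budget = throughput_bps * safety
    fitting = [uri for bw, uri in variants if bw <= budget]
    # Fall back to the lowest rendition when even that exceeds the budget.
    return fitting[-1] if fitting else variants[0][1]


if __name__ == "__main__":
    variants = parse_variants(SAMPLE_MASTER)
    print(choose_variant(variants, throughput_bps=4_000_000))  # mid/index.m3u8
```

With an estimated throughput of 4 Mbit/s the budget is 3.2 Mbit/s, so the 2.5 Mbit/s variant is chosen; the safety margin leaves headroom for throughput fluctuations, which is what helps keep playback from stalling.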

1.1 Video Encoding

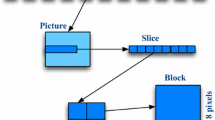

Video encoding is necessary for the efficient transmission of data from one place to another. The sending device encodes the data, and the receiving device on which the user views the file decodes it. Publicly available standards exist so that a diverse range of devices can interpret data encoded according to them. Captured video is stored on the server as segments at different quality levels to support adaptive streaming. Streaming protocols such as HLS or MPEG-DASH break videos into smaller segments [7]. The video is then encoded using available video coding standards such as H.264 or H.265.
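The segmentation step can be sketched as computing segment boundaries on the video timeline once and reusing them for every rendition. This assumes a hypothetical 6-second target segment length; the rendition names are the resolutions used in Section 4, and the file names are made up for illustration.

```python
import math

# Resolutions used in the experiments (Section 4).
RENDITIONS = ["240p", "360p", "480p", "720p", "1080p"]


def segment_boundaries(duration_s: float, target_s: float = 6.0) -> list[tuple[float, float]]:
    """Split a video timeline into consecutive segments of at most target_s seconds.

    target_s=6.0 is a hypothetical choice; the last segment may be shorter.
    """
    count = math.ceil(duration_s / target_s)
    return [(i * target_s, min((i + 1) * target_s, duration_s)) for i in range(count)]


def segment_names(duration_s: float, target_s: float = 6.0) -> dict[str, list[str]]:
    """Map each rendition to hypothetical per-segment file names sharing one timeline."""
    n = len(segment_boundaries(duration_s, target_s))
    return {r: [f"{r}/seg{i}.ts" for i in range(n)] for r in RENDITIONS}


if __name__ == "__main__":
    print(segment_boundaries(20.0))  # [(0.0, 6.0), (6.0, 12.0), (12.0, 18.0), (18.0, 20.0)]
    print(segment_names(20.0)["720p"])
```

Keeping the boundaries identical across renditions is the design choice that matters: because segment k covers the same time span at 240p and at 1080p, the player can switch quality at any segment boundary without gaps or overlaps.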

The streaming process compresses the video, thereby reducing redundant information. The encoded videos are sent to the client machine through a content delivery network (CDN). A codec is used to compress and decompress the data so that it can be easily delivered and received by different applications. Codecs may use lossy or lossless compression. In lossy compression, some parts of the video are discarded while the essential data is kept. Lossless compression retains the original quality of the video file by preserving every bit of data exactly. Both approaches have their pros and cons: lossy compression yields smaller files at lower video quality, whereas lossless compression is used when better video quality is required, at the cost of larger files.
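The difference between lossy and lossless compression can be shown with a toy example. In this sketch, zlib (a general-purpose lossless compressor in the Python standard library) stands in for the lossless path, and simple scalar quantization of 8-bit samples with a hypothetical step of 16 stands in for the lossy stage of a real video codec; codecs such as H.264 quantize transform coefficients rather than raw samples, so this is an analogy, not their actual method.

```python
import zlib


def lossless_roundtrip(samples: bytes) -> tuple[int, bytes]:
    """Compress with zlib (lossless); return (compressed size, decoded bytes)."""
    packed = zlib.compress(samples, 9)
    return len(packed), zlib.decompress(packed)


def lossy_roundtrip(samples: bytes, step: int = 16) -> tuple[int, bytes]:
    """Quantize each 8-bit sample down to a multiple of `step`, then compress.

    Quantization discards information that cannot be recovered, which is what
    makes this path lossy; zlib afterwards only removes redundancy.
    """
    quantized = bytes((s // step) * step for s in samples)
    packed = zlib.compress(quantized, 9)
    return len(packed), zlib.decompress(packed)


if __name__ == "__main__":
    samples = bytes((i * 37) % 251 for i in range(10_000))  # synthetic test signal
    size_ll, decoded_ll = lossless_roundtrip(samples)
    size_ly, decoded_ly = lossy_roundtrip(samples)
    max_err = max(abs(a - b) for a, b in zip(samples, decoded_ly))
    print("lossless:", size_ll, "bytes, exact =", decoded_ll == samples)
    print("lossy:   ", size_ly, "bytes, max error =", max_err)
```

The lossless round trip reproduces the input exactly, while the quantized version differs from the original by at most step - 1 per sample; in exchange, quantization leaves fewer distinct values, which usually lets the compressor produce a smaller output.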

2 Existing Works

Due to the revolution in the smartphone industry, data consumption has increased significantly in recent years. Recent forecasts show that video accounts for 80% of this traffic, and in the near future the rise of 5G will raise the bar even further. Video compression will therefore play a vital role in fulfilling these promises. Baig et al. [6] state that the compression ratios achieved by successive standards are growing exponentially, a trend further supported by the evolution of artificial intelligence. Their work concentrates mainly on developing next-generation codecs that can work more efficiently than the current generation. Another line of work concerns video streaming over wireless networks. Since the wide deployment of 5G, the focus on wireless transmission of video has changed drastically. Streaming video over wireless networks has a wide range of applications in today's society, but unpredictable network conditions are a major hindrance to ground-level implementation. The papers written by Zhu and Girod mainly propose solutions for removing these barriers; their work revolves around improving cross-layer design for resource allocation. There have been numerous comparisons of streaming processes. In this work, we try to capture the data saved over a wireless network by MPEG and H.264.

3 Video Encoding Algorithms

Any client-side browser (application) can use a codec to compress and decompress the data irrespective of the device hardware.

Dynamic Adaptive Streaming over HTTP (DASH), also known as MPEG-DASH, is a popular streaming method. With this method, the client can start playing a video before the buffer is fully loaded. A similar method is HTTP Live Streaming (HLS). HLS breaks a video into small chunks, and the chunks are encoded at different quality levels so that the video can be sent according to network conditions.
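The point that a DASH or HLS client can start playing before the whole video has arrived can be shown with a small timing model. The sketch assumes, hypothetically, 2-second segments downloaded back to back and a startup threshold of 4 seconds of buffered media; the download times in the example are made-up numbers.

```python
def startup_delay(download_times_s: list[float], segment_s: float = 2.0,
                  startup_buffer_s: float = 4.0) -> float:
    """Time until playback can begin, i.e. until startup_buffer_s seconds of media are buffered.

    Segments are assumed (hypothetically) to download one after another,
    each carrying segment_s seconds of video.
    """
    elapsed = 0.0
    buffered = 0.0
    for t in download_times_s:
        elapsed += t
        buffered += segment_s
        if buffered >= startup_buffer_s:
            return elapsed
    return elapsed  # very short video: start once everything has arrived


if __name__ == "__main__":
    times = [1.0, 1.5, 1.2, 0.8, 1.1]  # hypothetical per-segment download times (s)
    print("playback starts after", startup_delay(times), "s")  # 2.5 s
    print(f"full download takes {sum(times):.1f} s")
```

With these numbers, playback starts once the first two segments (4 s of video) have arrived, well before the last segment is downloaded; the remaining segments are fetched while the first ones play.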

Streaming process: The video file is broken into smaller pieces, which may be of different lengths. These pieces are then encoded with any available standard encoder. As the user starts watching the video, the encoded segments are transferred to the client system. In most cases, a CDN is used to distribute the stream more efficiently [8]. When the receiver plays the video, the encoded segments are decoded and the video is played on the client device. The key benefit is that the player reduces the quality of the stream when network conditions degrade.
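The per-segment loop described above can be sketched as follows. Before each request, the client estimates throughput from the segments it has already downloaded and picks the highest rendition that fits. The estimator (harmonic mean of the last three samples, which is pulled down by low values and so reacts cautiously to short throughput spikes), the 0.8 safety factor, and the bitrate ladder are all hypothetical choices for illustration; they are not the values used in this paper's experiments.

```python
from statistics import harmonic_mean

# Hypothetical bitrate ladder in kbit/s (illustrative only, not the paper's values).
LADDER = [(400, "240p"), (800, "360p"), (1400, "480p"), (2800, "720p"), (5000, "1080p")]


def pick_rendition(estimate_kbps: float, safety: float = 0.8) -> str:
    """Highest rendition whose bitrate fits within safety * estimated throughput."""
    budget = estimate_kbps * safety
    chosen = LADDER[0][1]  # lowest rendition as the fallback
    for kbps, name in LADDER:
        if kbps <= budget:
            chosen = name
    return chosen


def adapt(throughput_trace_kbps: list[float], window: int = 3) -> list[str]:
    """For each segment, choose a rendition from recent throughput measurements."""
    choices = []
    history = []
    for measured in throughput_trace_kbps:
        # No measurements yet: start conservatively at the lowest bitrate.
        estimate = harmonic_mean(history[-window:]) if history else LADDER[0][0]
        choices.append(pick_rendition(estimate))
        history.append(measured)  # throughput observed while fetching this segment
    return choices


if __name__ == "__main__":
    trace = [6000, 6000, 6000, 1000, 1000, 1000]  # hypothetical throughput (kbit/s)
    print(adapt(trace))
```

For the trace above the sketch returns ['240p', '720p', '720p', '720p', '480p', '360p']: it starts conservatively, climbs once it has measurements, and steps down gradually when the network degrades.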

H.264, also known as Advanced Video Coding (AVC), is currently one of the most widely used video codecs, and its advanced coding features make it stand out from the crowd.

3.1 Performance Metrics

The Peak Signal-to-Noise Ratio (PSNR) is a commonly used objective video quality metric. PSNR is calculated as

$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{L^{2}}{\mathrm{MSE}}\right)$$

where L represents the dynamic range of the pixel values (255 for 8-bit video) and MSE is the mean squared error. MSE is calculated as

$$\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N}\left(x_i - y_i\right)^{2}$$

where

- N: the number of pixels of the received frame/video,
- x_i: the i-th pixel of the source frame/video,
- y_i: the i-th pixel of the compressed frame/video.
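The two formulas translate directly into code. This sketch assumes 8-bit samples, so L = 255, and represents a frame as a flat list of pixel values; the pixel values in the example are hypothetical.

```python
import math


def mse(source: list[float], received: list[float]) -> float:
    """Mean squared error between source pixels x_i and received pixels y_i."""
    if len(source) != len(received) or not source:
        raise ValueError("frames must be non-empty and of the same size")
    return sum((x - y) ** 2 for x, y in zip(source, received)) / len(source)


def psnr(source: list[float], received: list[float], peak: float = 255) -> float:
    """PSNR in dB; `peak` plays the role of L (255 for 8-bit samples)."""
    error = mse(source, received)
    if error == 0:
        return math.inf  # identical frames: no noise at all
    return 10 * math.log10(peak ** 2 / error)


if __name__ == "__main__":
    x = [52, 55, 61, 59]  # hypothetical source pixels
    y = [52, 55, 61, 61]  # one pixel off by 2, so MSE = 4 / 4 = 1
    print(round(psnr(x, y), 2))  # 48.13
```

A higher PSNR means less noise relative to the peak signal; with MSE = 1 the result is simply 20 log10(255), about 48.13 dB.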

4 Experimental Results and Discussions

We implemented MPEG and H.264 in MATLAB under different network conditions with a packet loss rate of 0.03% [9, 10]. We used a real player with a few real video parameters. MPEG-DASH and H.264 were evaluated under different conditions, and the average values are presented in the results below. Different bitrate levels were used, corresponding to video resolutions of 240p, 360p, 480p, 720p, and 1080p.
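The experiments were run in MATLAB; as a language-neutral illustration of the loss condition, the Python sketch below draws independent (Bernoulli) packet losses at the stated 0.03% rate. It is not the authors' implementation: the independence assumption, the seed, the 500-packet segment size, and the rule that a segment counts as intact only if all of its packets arrive are hypothetical simplifications, and real wireless losses are often bursty.

```python
import random


def simulate_loss(num_packets: int, loss_rate: float = 0.0003, seed: int = 1) -> list[bool]:
    """Return a delivery mask: True if the packet arrived, False if it was lost.

    Each packet is lost independently with probability loss_rate
    (0.0003 = 0.03%). The seed is an arbitrary choice for reproducibility.
    """
    rng = random.Random(seed)
    return [rng.random() >= loss_rate for _ in range(num_packets)]


def intact_segments(mask: list[bool], packets_per_segment: int) -> int:
    """Count segments in which every packet arrived (hypothetical 'no concealment' rule)."""
    return sum(
        all(mask[i:i + packets_per_segment])
        for i in range(0, len(mask), packets_per_segment)
    )


if __name__ == "__main__":
    n = 1_000_000
    mask = simulate_loss(n)
    print("lost packets:", mask.count(False), "expected about", round(n * 0.0003))
    print("intact segments:", intact_segments(mask, 500), "of", n // 500)
```

Even at 0.03%, a segment made of many packets has a noticeably higher chance of being damaged than any single packet, which is why small loss rates still matter for streaming quality.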

Figure 1 shows the amount of noise in the signal at each bitrate used. At every bitrate, the MPEG-DASH signal contains more noise than the H.264 signal, and at higher bitrates H.264 performs better than MPEG. Figure 2 shows that H.264 saves more data than MPEG, which further indicates that H.264 is more efficient than MPEG.

5 Conclusion and Future Scope

In this work, we studied MPEG-DASH and H.264 and found that H.264 is more efficient and faster than MPEG-DASH, which this newer encoder has outdated. This is also reflected in the commercial adoption of H.264 by large video hosting sites such as Netflix and Amazon Prime because, unlike MPEG-DASH, H.264 is a very fast encoder without significant downsides. H.264 also saves a lot of data on lossy wireless networks. In the future, we will extend this work to highly lossy wireless channels and evaluate the quality of experience.

References

Bach M (2002) Freiburg visual acuity, contrast & vernier test ('FrACT'), http://www.michaelbach.de/fract/index.html

CS MSU Graphics & Media Lab Video Group (2006) MOS codecs comparison

CS MSU Graphics & Media Lab Video Group (2007) Video MPEG-4 AVC/H.264 codecs comparison

Rohaly AM, et al. (2000) Video quality experts group: current results and future directions. In: Ngan KN, Sikora T, Sun M-T (eds) Visual communications and image processing 2000. Proceedings of SPIE, vol 4067. SPIE, pp 742–753

Barzilay MAJ, et al. (2007) Subjective quality analysis of bit rate exchange between temporal and SNR scalability in the MPEG4 SVC extension. In: International conference on image processing, pp II: 285–288

Baig Y, Lai EM, Punchihewa A (2012) Distributed video coding based on compressed sensing, pp 325–330

Kooij RE, Kamal A, Brunnström K (2006) Perceived quality of channel zapping. In: Proc. CSN, Palma de Mallorca, Spain. pp 155–158

Singh KD, Aoul YH, Rubino G (2012) Quality of experience estimation for adaptive HTTP/TCP video streaming using H.264/AVC. In: IEEE Consumer Communications and Networking Conference, pp 127–131

Bankoski J, et al. (2013) Towards a next generation open-source video codec. In: Proc. IST/SPIE Electron. Imag.—Vis. Inf. Process. Commun. IV, pp 606–866

Egger S, et al. (2012) Time is bandwidth? Narrowing the gap between subjective time perception and quality of experience. In: Proc. IEEE ICC Canada


Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Mohapatra, K.P., Muduli, S., Biswal, A.B., Sahu, B.J.R. (2022). Performance Analysis of Video Streaming Methods. In: Mallick, P.K., Bhoi, A.K., González-Briones, A., Pattnaik, P.K. (eds) Electronic Systems and Intelligent Computing. Lecture Notes in Electrical Engineering, vol 860. Springer, Singapore. https://doi.org/10.1007/978-981-16-9488-2_32


DOI: https://doi.org/10.1007/978-981-16-9488-2_32


Publisher Name: Springer, Singapore

Print ISBN: 978-981-16-9487-5

Online ISBN: 978-981-16-9488-2

eBook Packages: Engineering, Engineering (R0)