Abstract

Rather than replacing humans with robots in industrial workplaces, human–robot collaboration (HRC) focuses on humans and robots working together in a shared workspace. Robots can work accurately, repetitively and quickly, but they lack the flexibility and adaptability of humans. In HRC, robots are programmed to make decisions about their motions and operations for a specific task, so they often need to change those motions and operations to collaborate with humans. However, industrial robots are pre-programmed with rigid code and cannot support HRC. Computer vision-guided systems can be used to address this limitation. This paper gives an overview of computer vision-guided human–robot collaboration for Industry 4.0 and highlights future research directions. It reviews several aspects of computer vision-guided HRC: an introduction to HRC, gesture recognition, computer vision as a sensor technology, and human safety.

Keywords

1 Introduction

Robots can work accurately, repetitively and quickly, but they lack the flexibility and adaptability of humans. Hence, human–robot collaboration (HRC) is a recent trend in robotics research that aims to combine the capabilities of both humans and robots in industry (Wang et al. 2019). In the manufacturing industry, HRC focuses on humans and robots working simultaneously in the same workspace (Wang et al. 2017).

2 Human–Robot Collaboration (HRC)

The relationship between humans and robots differs across scenarios such as coexistence, cooperation, interaction and collaboration. It can be evaluated based on the following criteria (Wang et al. 2019):

- Workspace: The working area.
- Contact: Direct physical contact with each other.
- Working task: The same operation towards the same working objective.
- Resources: The available equipment and machines.
- Simultaneous process: Working at the same time but on different tasks.
- Sequential process: Tasks are carried out one after another, with no overlap.

Based on the above criteria, different human–robot relationships can be defined as follows (Wang et al. 2020) (Table 1):

Fig. 1 shows the possible cases and roles in human–robot collaboration.

Possible cases and roles in human–robot collaboration (Wang et al. 2020)

A human–robot collaborative system should have the following characteristics:

- Compliance with safety standards and operational regulations
- Flexibility and adaptability for specific functions
- Potential to improve productivity and product quality
- Support, assistance and collaboration with humans in hazardous, tedious, non-ergonomic and repetitive tasks.

3 Gesture Recognition in Human–Robot Collaboration (HRC)

In robot-based manufacturing, industrial robots are pre-programmed with rigid code, and reprogramming them is tedious and time consuming (Liu et al. 2018). Programming by demonstration has made it easier to reprogram robots for a different task, but rigid code-based robot control still cannot support human–robot collaboration. To solve this problem, intuitive programming through communication channels such as gesture, posture, voice and haptics can be used in human–robot collaboration.

As shown in Fig. 2, gesture recognition for human–robot collaboration can consist of sensor data collection, gesture identification, gesture tracking, gesture classification and gesture mapping (Liu and Wang 2018).

Model for human–robot collaboration (Liu and Wang 2018)
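
The five stages listed above can be illustrated with a minimal sketch. It is not the model of Liu and Wang (2018); it only shows how the stages chain together. The frame format (one hand position per frame, with the y axis pointing up), the gesture labels, the threshold and the robot commands are all hypothetical and chosen for illustration.

```python
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]
# Assumed sensor output: one (x, y) hand position per frame, or None when
# no hand is visible. A real system would extract this from camera images.
Frame = Optional[Point]

# Hypothetical mapping from gesture labels to robot commands.
GESTURE_TO_COMMAND: Dict[str, str] = {
    "swipe_right": "move_right",
    "swipe_left": "move_left",
    "swipe_up": "raise_arm",
    "swipe_down": "lower_arm",
    "hold": "stop",
}


def identify(frames: List[Frame]) -> List[Point]:
    """Gesture identification: keep only frames in which a hand was found."""
    return [f for f in frames if f is not None]


def track(points: List[Point]) -> Point:
    """Gesture tracking: net displacement of the hand over the sequence."""
    if len(points) < 2:
        return (0.0, 0.0)
    (x0, y0), (x1, y1) = points[0], points[-1]
    return (x1 - x0, y1 - y0)


def classify(displacement: Point, threshold: float = 0.1) -> str:
    """Gesture classification: label the motion by its dominant direction."""
    dx, dy = displacement
    if max(abs(dx), abs(dy)) < threshold:
        return "hold"
    if abs(dx) >= abs(dy):
        return "swipe_right" if dx > 0 else "swipe_left"
    return "swipe_up" if dy > 0 else "swipe_down"


def map_to_command(gesture: str) -> str:
    """Gesture mapping: translate the gesture label into a robot command."""
    return GESTURE_TO_COMMAND[gesture]


def recognise(frames: List[Frame]) -> str:
    """Run the stages in order on already collected sensor data."""
    return map_to_command(classify(track(identify(frames))))


# Sensor data collection is represented here by a hard-coded sequence.
frames = [None, (0.0, 0.0), (0.2, 0.05), (0.5, 0.1)]
print(recognise(frames))
```

In practice each stage would be replaced by a learned or model-based component; the rule-based classifier here stands in only to keep the example short.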

There are three types of gesture that can be used as a communication channel as follows:

- Head and face gesture
- Hand and arm gesture
- Full body gesture.

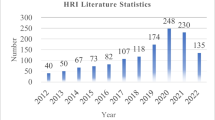

Hongyi Liu and Lihui Wang reviewed gesture recognition for human–robot collaboration and identified the following research trends (Liu and Wang 2018) (Fig. 3).

Past work and future trends in gesture recognition (Liu and Wang 2018)

4 Computer Vision as a Sensor Technology in Human–Robot Collaboration

To implement human–robot collaboration, a suitable communication channel must be established, and computer vision-based communication channels can serve this purpose effectively (Liu and Wang 2018). Gesture recognition requires sensor data collection, for which various sensors can be used, as shown in Fig. 4 (Liu and Wang 2018).

Different types of sensors for gesture recognition (Liu and Wang 2018)

Among image-based sensors, single cameras and depth cameras are widely used in recent gesture recognition research. A depth camera provides depth information in addition to RGB data. The depth and visual information provided by a depth sensor (such as the Kinect sensor) can be used for object tracking and recognition, human activity analysis, hand gesture analysis and indoor semantic segmentation in human–robot collaboration (Papadopoulos et al. 2014; Pham et al. 2016). Fig. 5 shows the basic structure and working of depth sensors such as the Kinect (Han et al. 2013).

Basic structure and working of depth sensors like Kinect (Han et al. 2013)
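
To make the use of depth data concrete, the sketch below converts one depth pixel into a 3D point in the camera frame using the standard pinhole camera model. It is an illustration only and is not taken from the cited works; the intrinsic parameters (fx, fy, cx, cy) are hypothetical values, and a real sensor would supply its own calibration.

```python
from typing import NamedTuple, Tuple


class Intrinsics(NamedTuple):
    """Pinhole camera parameters in pixels (hypothetical example values)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point, x coordinate
    cy: float  # principal point, y coordinate


def back_project(u: float, v: float, depth_m: float,
                 k: Intrinsics) -> Tuple[float, float, float]:
    """Map pixel (u, v) with depth in metres to a 3D point (x, y, z)."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


# Assumed intrinsics for a 640 x 480 depth image.
camera = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)

# A pixel 100 columns to the right of the image centre, seen 2 m away.
print(back_project(420.0, 240.0, 2.0, camera))
```

Applying this to every pixel that belongs to the human or the robot yields the point sets used for tracking and distance calculations.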

For effective human–robot collaboration, the robot must understand human gestures correctly, so human gestures should be represented mathematically (Nakamura 2018). In an HRC system, mathematical modelling of the sequence of human operations and motions is very important.

Liu and Wang (2017) modelled the sequence of human motions in an assembly line using existing motion recognition techniques and applied a hidden Markov model (HMM) to predict human motion by generating a motion transition probability matrix. An example of task-level representation in an assembly line for a case study is shown in Fig. 6.

Example of task-level representation in an assembly line (Liu and Wang 2017)
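
The idea of a motion transition probability matrix can be sketched as follows. This is a simplified illustration, not the method of Liu and Wang (2017): it estimates a first-order Markov chain directly over observed motion labels by counting, without the hidden states and emission probabilities of a full HMM. The motion labels and sequences are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional

Matrix = Dict[str, Dict[str, float]]


def transition_matrix(sequences: List[List[str]]) -> Matrix:
    """Estimate P(next motion | current motion) from observed sequences."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for seq in sequences:
        # Count every consecutive pair of motions in the sequence.
        for current, nxt in zip(seq, seq[1:]):
            counts[current][nxt] += 1
    matrix: Matrix = {}
    for current, next_counts in counts.items():
        total = sum(next_counts.values())
        matrix[current] = {m: c / total for m, c in next_counts.items()}
    return matrix


def predict_next(matrix: Matrix, current: str) -> Optional[str]:
    """Return the most probable next motion, or None if none was observed."""
    row = matrix.get(current)
    if not row:
        return None
    return max(row, key=row.get)


# Hypothetical recorded assembly sequences.
observed = [
    ["reach", "grasp", "place"],
    ["reach", "grasp", "place"],
    ["reach", "grasp", "screw"],
]
matrix = transition_matrix(observed)
print(matrix["grasp"])
print(predict_next(matrix, "grasp"))
```

A robot controller could use the predicted next motion to prepare the matching assistive action before the human starts it.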

Liu and co-authors have also applied a recurrent neural network (RNN) to predict human motion trajectories in collaborative assembly (Zhang et al. 2020). An overview of the RNN for motion prediction in human–robot collaboration (HRC) is shown in Fig. 7.

Overview of RNN for motion prediction (Zhang et al. 2020)

Mainprice and Berenson (2013) proposed a framework based on early prediction of human motion. The framework generates a prediction of human workspace occupancy by computing the swept volume of learned human motion trajectories. Fig. 8 illustrates human workspace occupancy with an example.

Example of human occupancy in human–robot collaboration (Mainprice and Berenson 2013)
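
A rough way to picture workspace occupancy from swept volume is to discretize the workspace into voxels and mark every voxel that a learned trajectory passes through. The sketch below does this for trajectories given as sampled 3D points. It is a simplification under stated assumptions and not the implementation of Mainprice and Berenson (2013): the human is reduced to sampled points rather than full body geometry, and the voxel size, trajectories and function names are hypothetical.

```python
import math
from typing import List, Set, Tuple

Point3 = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def voxel_of(point: Point3, voxel_size: float) -> Voxel:
    """Index of the voxel that contains the given point."""
    x, y, z = point
    return (math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size))


def swept_voxels(trajectories: List[List[Point3]],
                 voxel_size: float) -> Set[Voxel]:
    """Union of all voxels visited by any sampled trajectory point."""
    occupied: Set[Voxel] = set()
    for trajectory in trajectories:
        for point in trajectory:
            occupied.add(voxel_of(point, voxel_size))
    return occupied


def is_waypoint_free(waypoint: Point3, occupied: Set[Voxel],
                     voxel_size: float) -> bool:
    """True if a robot waypoint lies outside the predicted occupancy."""
    return voxel_of(waypoint, voxel_size) not in occupied


# Hypothetical learned hand trajectories, coordinates in metres.
learned = [
    [(0.05, 0.05, 0.05), (0.15, 0.05, 0.05)],
    [(0.25, 0.05, 0.05)],
]
occupancy = swept_voxels(learned, voxel_size=0.1)
print(sorted(occupancy))
print(is_waypoint_free((0.12, 0.01, 0.02), occupancy, 0.1))
```

A motion planner could then penalize or reject robot waypoints that fall inside the predicted occupancy.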

In recent years, multimodal approaches have also been widely investigated. They are multidisciplinary, drawing on fields such as artificial intelligence (AI), image processing, automation control, sensor networks and path planning (Liu et al. 2018). Fig. 9 shows multimodal programming for human–robot collaboration using a deep learning approach.

Multimodal conceptual framework for human–robot collaboration (Liu et al. 2018)

5 Human Safety in Human–Robot Collaboration (HRC)

When implementing human–robot collaboration (HRC) in industry, human safety is the most important requirement. The seven elements of a collision event are pre-collision, detection, isolation, identification, classification, reaction and collision avoidance (Haddadin et al. 2017). The possible causes of collision can be grouped into three categories, as shown in Fig. 10.

Possible causes of collision (Haddadin et al. 2017)

In computer vision-based human–robot collaboration (HRC), some research, such as that of Schmidt and co-authors, implements a depth camera-based method for collision detection (Mohammed et al. 2017).

A depth camera (such as a Kinect sensor) can be used to calculate the relative distance between human and robot for collision detection, as shown in Fig. 11.

Depth camera-based collision avoidance system (Mohammed et al. 2017)
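
The distance check behind such a system can be sketched in a few lines. The example below computes the minimum distance between a set of 3D points on the human (for instance from a depth camera) and a set of points on the robot, and maps it to a reaction. It is not the system of Mohammed et al. (2017); the point sets, the thresholds and the reaction names are hypothetical and are not taken from any safety standard.

```python
import math
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def min_distance(human_points: List[Point3],
                 robot_points: List[Point3]) -> float:
    """Smallest Euclidean distance between any human and robot point."""
    return min(math.dist(h, r) for h in human_points for r in robot_points)


def safety_action(distance_m: float, stop_at: float = 0.3,
                  slow_at: float = 1.0) -> str:
    """Choose a reaction from the distance (thresholds are assumptions)."""
    if distance_m <= stop_at:
        return "stop"
    if distance_m <= slow_at:
        return "slow_down"
    return "continue"


# Hypothetical points in a common coordinate frame, in metres.
human = [(0.0, 0.0, 0.0), (0.0, 0.0, 1.0)]
robot = [(0.3, 0.4, 0.0), (3.0, 0.0, 0.0)]

distance = min_distance(human, robot)
print(distance, safety_action(distance))
```

The brute-force comparison is quadratic in the number of points; a real-time system would typically downsample the point cloud or use a spatial index.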

6 Conclusion

This paper briefly reviews research in computer vision-guided human–robot collaboration for Industry 4.0. After the introduction, Section 2 explained the different types of human–robot relationships and roles. Section 3 studied gesture recognition as the most important element of human–robot collaboration systems. Section 4 discussed sensor data collection, identified computer vision-based gesture recognition as the most suitable method, and reviewed recent research in computer vision-based human–robot collaboration. Section 5 reviewed human safety in human–robot collaboration.

References

Haddadin S, De Luca A, Albu-Schäffer A (2017) Robot collisions: a survey on detection, isolation, and identification. IEEE Trans Rob 33(6):1292–1312

Han J, Shao L, Xu D, Shotton J (2013) Enhanced computer vision with Microsoft Kinect sensor: a review. IEEE Trans Cybern 43(5):1318–1334

Liu H, Fang T, Zhou T, Wang L (2018) Towards robust human-robot collaborative manufacturing: multimodal fusion. IEEE Access 6:74762–74771

Liu H, Wang L (2017) Human motion prediction for human-robot collaboration. J Manuf Syst 44:287–294

Liu H, Wang L (2018) Gesture recognition for human-robot collaboration: a review. Int J Ind Ergon 68:355–367

Mainprice J, Berenson D (2013) Human-robot collaborative manipulation planning using early prediction of human motion. In: 2013 IEEE/RSJ international conference on intelligent robots and systems. IEEE, pp 299–306

Mohammed A, Schmidt B, Wang L (2017) Active collision avoidance for human–robot collaboration driven by vision sensors. Int J Comput Integr Manuf 30(9):970–980

Nakamura Y (2018) Classification of multi-class daily human motion using discriminative body parts and sentence descriptions

Papadopoulos GT, Axenopoulos A, Daras P (2014) Real-time skeleton-tracking-based human action recognition using Kinect data. In: International conference on multimedia modeling. Springer, Cham, pp 473–483

Pham TTD, Nguyen HT, Lee S, Won CS (2016) Moving object detection with Kinect v2. In: 2016 IEEE international conference on consumer electronics-Asia (ICCE-Asia). IEEE, pp 1–4

Wang L, Gao R, Váncza J, Krüger J, Wang XV, Makris S, Chryssolouris G (2019) Symbiotic human-robot collaborative assembly. CIRP Ann 68(2):701–726

Wang XV, Kemény Z, Váncza J, Wang L (2017) Human–robot collaborative assembly in cyber-physical production: classification framework and implementation. CIRP Ann 66(1):5–8

Wang L, Liu S, Liu H, Wang XV (2020) Overview of human-robot collaboration in manufacturing. In: Proceedings of 5th international conference on the industry 4.0 model for advanced manufacturing. Springer, Cham, pp 15–58

Zhang J, Liu H, Chang Q, Wang L, Gao RX (2020) Recurrent neural network for motion trajectory prediction in human-robot collaborative assembly. CIRP Ann

© 2022 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

Cite this paper

Pandya, J.G., Maniar, N.P. (2022). Computer Vision-Guided Human–Robot Collaboration for Industry 4.0: A Review. In: Parwani, A.K., Ramkumar, P., Abhishek, K., Yadav, S.K. (eds) Recent Advances in Mechanical Infrastructure. Lecture Notes in Intelligent Transportation and Infrastructure. Springer, Singapore. https://doi.org/10.1007/978-981-16-7660-4_13

Publisher Name: Springer, Singapore

Print ISBN: 978-981-16-7659-8

Online ISBN: 978-981-16-7660-4
