Abstract

Image restoration is a well-known ill-posed inverse problem whose aim is to recover a sharp clean image from the corresponding blur- and noise-corrupted observation. Variational methods penalize solutions deemed undesirable by incorporating regularization techniques. A popular strategy relies on sparsity-promoting regularizers; it is well known that, in general, nonconvex regularizers hold the potential for promoting sparsity more effectively than convex regularizers. Recently, a new class of convex non-convex (CNC) variational models has been proposed which includes a general parametric nonconvex nonseparable regularizer. However, the performance of this approach depends critically on the regularization parameter. In this paper we propose to use a parametric CNC variational restoration model within a bilevel framework, where the parameter is tuned by minimizing a measure of the restoration residual whiteness. Preliminary numerical experiments indicate the effectiveness of the proposal.

Dedicated to Raymond H. Chan on the occasion of his 60th birthday.



Keywords

- Sparsity-inducing regularization

- Variational methods

- Ill-posed problems

- Non-convex non-smooth regularization

- Optimization

- Additive white Gaussian noise

1 Introduction

In this paper, we consider the problem of restoring 2-D gray-scale images corrupted by blur and additive white Gaussian noise (AWGN).

These images can be represented by the discretization of a real-valued function defined on a 2-D compact rectangular domain. Let \(x \in {\mathbb R}^{n}\), with \(n=n_1 n_2\), be the unknown \(n_1 \times n_2\) clean image concatenated into an n-vector, \(A \in {\mathbb R}^{n \times n}\) be a known blurring operator and \(\epsilon \in {\mathbb R}^{n}\) be an unknown realization of the noise process, which we assume to be white Gaussian with zero mean and standard deviation \(\sigma \). The discrete imaging model of the degradation process, which relates the observed degraded image \(b \in {\mathbb R}^{n}\) to x, can be expressed as follows:

\[ b = A\,x + \epsilon . \qquad (1) \]

Given A and b, our goal is to solve the inverse problem of recovering an accurate estimate of x, which is known as deconvolution or deblurring. When A is the identity operator, recovering x is referred to as denoising.
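The observation model (1) is easy to simulate. The following is a minimal pure-Python sketch, assuming that the blur A is a space-invariant 2-D convolution with periodic boundary conditions (an assumption made here for simplicity, not something the text prescribes for A); the helper names `circular_blur` and `degrade` are hypothetical.

```python
import random


def circular_blur(x, kernel):
    """Apply a space-invariant blur A to the image x (a list of rows) by 2-D
    circular convolution, i.e. with periodic boundary conditions."""
    n1, n2 = len(x), len(x[0])
    k1, k2 = len(kernel), len(kernel[0])
    c1, c2 = k1 // 2, k2 // 2  # index of the kernel centre
    out = [[0.0] * n2 for _ in range(n1)]
    for i in range(n1):
        for j in range(n2):
            acc = 0.0
            for u in range(k1):
                for v in range(k2):
                    # (A x)(i, j) = sum_{u,v} k(u, v) x(i - u + c1, j - v + c2), indices mod n
                    acc += kernel[u][v] * x[(i - u + c1) % n1][(j - v + c2) % n2]
            out[i][j] = acc
    return out


def degrade(x, kernel, sigma, seed=0):
    """Return b = A x + eps, where eps is i.i.d. zero-mean Gaussian noise with
    standard deviation sigma (a realization of white Gaussian noise)."""
    rng = random.Random(seed)
    ax = circular_blur(x, kernel)
    return [[v + rng.gauss(0.0, sigma) for v in row] for row in ax]
```

Passing the 1 × 1 kernel `[[1.0]]` makes A the identity, which corresponds to the pure denoising case.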

Image deblurring is a discrete ill-posed problem; as such, further a priori assumptions on the solution can help to determine a meaningful approximation of x. Assuming the image is corrupted by AWGN, an estimate \(x^*_{\lambda }\) of x can be obtained as a solution, i.e., a global minimizer, of the following variational model, which is the sum of a convex smooth (quadratic) fidelity term and a regularization term:

\[ x^*_{\lambda} \in \arg\min_{x \in {\mathbb R}^n} \left\{ \frac{1}{2} \Vert A x - b \Vert_2^2 + \lambda\, \mathcal{R}(x) \right\}, \qquad (2) \]

where \(\Vert v\Vert _2\) denotes the \(\ell _2\) norm of vector v and \(\lambda \) represents the classical regularization parameter which controls the trade-off between data-fidelity and regularization.

The regularizer \(\mathcal {R}(x)\) encodes a priori knowledge on the solution. Focusing on the recovery of images characterized by some sparsity property, we consider the general class of sparsity-inducing variational models described in [15], which determine solutions \(x^*_{\lambda }\) that are close to the data b according to the observation model and, at the same time, whose transformed vector \(y^*_{\lambda } = G(Lx^*_{\lambda })\) is sparse, with \(L \in {\mathbb R}^{r \times n}\) a linear operator and \(G :{\mathbb R}^r \rightarrow {\mathbb R}^s\) a possibly nonlinear vector-valued function.

The drawback of the proposal in [15], which is briefly illustrated in Sect. 3, is that it requires tuning the regularization parameter \(\lambda \) by trial and error, together with a manually chosen stopping criterion. This is a crucial aspect of variational restoration methods and has been the subject of several research works.

We propose an automatic criterion for adjusting the regularization parameter \(\lambda \). More precisely, our proposal is based on the key idea that if the restored image is a good estimate of the target clean image, then the residual image must resemble the realization of the noise process, thus being spectrally white.

Hence, starting from a sufficiently small \(\lambda \) value, we iteratively increase \(\lambda \) until a suitable whiteness maximality criterion is satisfied.

In Sect. 2 we review some related works on the choice of the regularization parameter. The class of CNC variational models introduced in [15] is briefly illustrated in Sect. 3. In Sect. 4 we define the residual whiteness strategy, and Sect. 5 is devoted to the description of the proposed algorithmic framework. Numerical results are presented in Sect. 6. Conclusions are drawn in Sect. 7.

2 Related Work

A crucial issue in the regularization of ill-posed inverse problems is the choice of the regularization parameter. The quality of the solution is affected by the value of \(\lambda \): a too large value of \(\lambda \) gives an over-smoothed solution that lacks details that the desired original solution may have, while a too small value of \(\lambda \) yields a computed solution that is unnecessarily, and possibly severely, contaminated by propagated error that stems from the error \(\epsilon \) in b.

The discrepancy principle (DP) [22] chooses the regularization parameter so that the variance of the residual equals that of the noise; the DP thus requires an accurate estimate of the noise variance and is known to yield overregularized estimates [7]. The sensitivity of \(\lambda \) and of the computed solution to inaccuracies in an available estimate of \(\Vert \epsilon \Vert \) has been investigated by Hämarik et al. [6], who proposed alternatives to the discrepancy principle when only a poor estimate of \(\Vert \epsilon \Vert \) is known. Automatic procedures for selecting the parameter \(\lambda \) based on the DP have been proposed in the literature; see, e.g., [9].

Parameter choice methods when no estimate of \(\Vert \epsilon \Vert \) is available are commonly referred to as “heuristic”, because they may fail in certain situations; see [5].

A large number of heuristic parameter choice methods have been proposed in the literature due to the importance of being able to determine a suitable value of the regularization parameter when the DP cannot be used; see, e.g., [3, 7, 22]. These methods include the L-curve criterion and generalized cross-validation [3].

These methods are outperformed by more recent criteria based on Stein's unbiased risk estimate (SURE) [4, 18]. SURE provides an estimate of the mean squared error (MSE), assuming knowledge of the noise distribution and requiring an accurate estimate of its variance [23].

Recently, the fact that the additive noise is the realization of a white random process, and hence that the restoration residual image must be uncorrelated, has been used not only as an a posteriori performance evaluation criterion (see, e.g., [21]), but also as a key idea in the design of new fidelity terms [11, 12, 14]. In particular, by evaluating how closely the residual image resembles a white noise realization, one can check, to some extent, the quality of the restored image. In [1, 8, 20, 21], measures of residual spectral whiteness have been exploited for adjusting the regularization parameter and/or the number of iterations of algorithms for deconvolution problems. Comparisons among several state-of-the-art methods have been documented in [2].

3 The Class of CNC Variational Models

The considered class of CNC variational models proposed in [15] relies on a general strategy for constructing non-convex non-separable regularizers starting from any convex regularizer \(\mathcal {R}: {\mathbb R}^n \rightarrow {\mathbb R}\) of the form

\[ \mathcal{R}(x) = \Phi\big( G(Lx) \big), \qquad (3) \]

with \(L \in {\mathbb R}^{r \times n}\), \(G :{\mathbb R}^r \rightarrow {\mathbb R}^s\) a possibly nonlinear vector-valued function with \(g_i :{\mathbb R}^r \rightarrow {\mathbb R}\), \(i = 1, \ldots , s \), representing its scalar-valued components, and \(\Phi :{\mathbb R}^s \rightarrow {\mathbb R}\) a sparsity-promoting penalty function [10, 13, 17]. The CNC model associated with the regularizer \(\mathcal {R}\) is as follows:

\[ x^*_{\lambda} \in \arg\min_{x \in {\mathbb R}^n} \left\{ \mathcal{J}_B(x;\lambda) := \frac{1}{2} \Vert A x - b \Vert_2^2 + \lambda\, \mathcal{R}_B(x) \right\}, \qquad (4) \]

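with the parameterized non-convex non-separable regularizer \(\mathcal {R}_B\) defined by

\[ \mathcal{R}_B(x) = \mathcal{R}(x) - \min_{v \in {\mathbb R}^n} \left\{ \mathcal{R}(v) + \frac{1}{2} \Vert B(x - v) \Vert_2^2 \right\} = \mathcal{R}(x) - \Big( \mathcal{R} \boxdot \tfrac{1}{2} \Vert B \,\cdot\, \Vert_2^2 \Big)(x), \qquad (5) \]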

where \(\boxdot \) denotes the infimal convolution operator and \(B \in {\mathbb R}^{q \times n}\) is a matrix of parameters.
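To see concretely why \(\mathcal {R}_B\) is non-convex even though \(\mathcal {R}\) is convex, consider the scalar special case \(n = q = 1\) with \(\mathcal {R}(x) = |x|\) (L and G the identity, \(\Phi = |\cdot|\)) and \(B = \beta > 0\). Assuming (5) has the generalized Moreau-envelope form \(\mathcal {R}_B(x) = \mathcal {R}(x) - \min_v \{ \mathcal {R}(v) + \frac12 (B(x-v))^2 \}\), the infimal convolution is a Huber function and \(\mathcal {R}_B\) becomes a minimax-concave-type penalty. The sketch below (function names hypothetical) evaluates this scalar \(\mathcal {R}_B\) both by brute-force minimization over v and in closed form.

```python
def r_b_bruteforce(x, beta, half_width=5.0, step=1e-3):
    """Scalar R_B(x) = |x| - min_v { |v| + (beta^2 / 2) (x - v)^2 }, with the
    infimal convolution evaluated by brute-force search over a grid of v."""
    n_steps = int(round(2.0 * half_width / step))
    best = float("inf")
    for k in range(n_steps + 1):
        v = -half_width + k * step
        best = min(best, abs(v) + 0.5 * beta ** 2 * (x - v) ** 2)
    return abs(x) - best


def r_b_closed_form(x, beta):
    """Closed form of the same scalar penalty: the infimal convolution is a
    Huber function, so R_B is |x| - beta^2 x^2 / 2 near 0 and constant
    1 / (2 beta^2) for |x| > 1 / beta^2."""
    t = 1.0 / beta ** 2
    if abs(x) <= t:
        return abs(x) - 0.5 * beta ** 2 * x ** 2
    return 0.5 * t
```

For \(\beta = 1\), for example, \(\mathcal {R}_B(1) = 0.5\) exceeds the chord value \((\mathcal {R}_B(0) + \mathcal {R}_B(2))/2 = 0.25\), so the penalty is not convex; convexity of the overall functional must then come from the quadratic fidelity term, which is what the condition in Proposition 8 of [15] controls.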

According to Proposition 8 in [15], a sufficient condition for \(\mathcal {J}_B\) to be strongly convex—hence, for the variational model in (4) to admit a unique solution—is that the matrix B satisfies

A simple yet effective strategy for constructing a matrix \(B^{\mathsf {T}}\! B \in {\mathbb R}^{n \times n}\) satisfying the convexity condition in (6) has been presented in [15]. Since the matrix \(\,A^{\mathsf {T}}\! A \in {\mathbb R}^{n \times n}\) is symmetric and positive semidefinite, it admits the eigenvalue decomposition

\[ A^{\mathsf T} A = V E V^{\mathsf T}, \qquad E = \mathrm{diag}(e_1, \ldots, e_n), \qquad V^{\mathsf T} V = V V^{\mathsf T} = I, \qquad (7) \]

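with \( e_i \), \( i=1,\ldots ,n \), indicating the real non-negative eigenvalues of \(A^{\mathsf {T}}\! A\). By setting

\[ B^{\mathsf T} B = \frac{1}{\lambda}\, V \Gamma E V^{\mathsf T}, \qquad \Gamma = \mathrm{diag}(\gamma_1, \ldots, \gamma_n), \qquad \gamma_i \in [0,1), \qquad (8) \]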

then (6) is clearly satisfied. A special case is to set a unique parameter \(\gamma = \gamma _i \in \, [0,1) \, \forall \, i\), which corresponds to setting \(B = \sqrt{ \gamma / \lambda \, } A\).
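Where such a condition comes from can be seen with a short derivation. It is only an illustration, not the proof in [15], and it assumes that the CNC functional in (4) reads \(\mathcal {J}_B(x) = \frac12 \Vert Ax - b\Vert_2^2 + \lambda\, \mathcal {R}_B(x)\) with \(\mathcal {R}_B(x) = \mathcal {R}(x) - \min_v \{ \mathcal {R}(v) + \frac12 \Vert B(x-v)\Vert_2^2 \}\). Expanding \(\frac12 \Vert B(x-v)\Vert_2^2\) and using \(-\min = \max(-\,\cdot\,)\) gives

\[
\mathcal{J}_B(x) = \frac{1}{2}\, x^{\mathsf T} \big( A^{\mathsf T} A - \lambda B^{\mathsf T} B \big)\, x - b^{\mathsf T} A x + \frac{1}{2} \Vert b \Vert_2^2 + \lambda\, \mathcal{R}(x) + \lambda \max_{v \in {\mathbb R}^n} \Big\{ v^{\mathsf T} B^{\mathsf T} B\, x - \mathcal{R}(v) - \frac{1}{2} \Vert B v \Vert_2^2 \Big\}.
\]

The last term is a pointwise maximum of affine functions of x, hence convex, and \(\lambda \mathcal {R}\) is convex, so \(\mathcal {J}_B\) is convex whenever \(A^{\mathsf T} A - \lambda B^{\mathsf T} B \succeq 0\). For the special choice \(B = \sqrt{\gamma / \lambda}\, A\),

\[
A^{\mathsf T} A - \lambda B^{\mathsf T} B = A^{\mathsf T} A - \lambda\, \frac{\gamma}{\lambda}\, A^{\mathsf T} A = (1 - \gamma)\, A^{\mathsf T} A \succeq 0 \qquad \text{for } \gamma \in [0,1),
\]

and the quadratic part is strictly convex when, in addition, \(A^{\mathsf T} A\) is nonsingular.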

In the present work, which addresses the specific problem of image restoration, we first consider as regularization function \(\mathcal {R}\) in (3) the popular Total Variation (TV) semi-norm [19]. In this case \(\Phi \) is the \(\ell _1\) norm and \(L = [D_h^{\mathsf {T}}, D_v^{\mathsf {T}}]^{\mathsf {T}}\), with \(D_h,D_v \in {\mathbb R}^{n \times n}\) representing finite difference approximations of the first-order partial derivatives along the horizontal and vertical directions, respectively, so that

\[ \mathcal{R}(x) = \mathrm{TV}(x) = \sum_{i=1}^{n} \sqrt{ (D_h x)_i^2 + (D_v x)_i^2 } . \qquad (9) \]
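The TV semi-norm can be evaluated directly from its definition. The sketch below assumes the isotropic discretization with forward differences for \(D_h, D_v\) and periodic boundary conditions; these discretization choices are assumptions for illustration, and the function name is hypothetical.

```python
import math


def total_variation(x):
    """Isotropic discrete TV of a 2-D image given as a list of rows: the sum over
    pixels of the Euclidean norm of the forward-difference gradient, with
    periodic boundary conditions."""
    n1, n2 = len(x), len(x[0])
    tv = 0.0
    for i in range(n1):
        for j in range(n2):
            dh = x[i][(j + 1) % n2] - x[i][j]  # (D_h x) at pixel (i, j)
            dv = x[(i + 1) % n1][j] - x[i][j]  # (D_v x) at pixel (i, j)
            tv += math.hypot(dh, dv)
    return tv
```

For a 4 × 4 image whose left half is 0 and right half is 1, the function returns 8: each row contributes one unit jump at the edge and one at the periodic wrap-around, while a constant image has zero TV. This sparsity of the gradient magnitude is exactly what the \(\ell _1\) penalty \(\Phi \) promotes.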

It is well known that TV-based reconstructions favor piecewise-constant solutions but present staircase effects in the restoration of smooth parts of the images. To avoid this artifact in the reconstruction of piecewise-affine solutions, a second-order extension of the TV regularizer can be considered, which promotes sparsity of the Hessian Schatten norms instead of the gradient norms. That is, the sum of the Schatten p-norms of the Hessian matrices computed at every pixel of the image is minimized [16], where, we recall, the Schatten p-norm \(\Vert M \Vert _{\mathcal {S}_p}\) of a matrix \(M \in {\mathbb R}^{ z \times z}\) is defined by

\[ \Vert M \Vert_{\mathcal{S}_p} = \left( \sum_{i=1}^{z} \sigma_i(M)^p \right)^{1/p}, \qquad 1 \le p < +\infty, \qquad (10) \]

with \(\sigma _i(M)\) indicating the i-th singular value of matrix M. Let \(L = [D_{hh}^{\mathsf {T}}, D_{vv}^{\mathsf {T}}, D_{hv}^{\mathsf {T}}]^{\mathsf {T}}\) with \(D_{hh},D_{vv},D_{hv} \in {\mathbb R}^{n \times n}\) representing finite difference approximations of second-order derivatives along the horizontal, vertical and mixed horizontal/vertical directions, respectively. Then the Hessian Schatten p-norm regularizer is defined by

\[ \mathcal{S}_pH(x) = \sum_{i=1}^{n} \left\Vert \begin{bmatrix} (D_{hh} x)_i & (D_{hv} x)_i \\ (D_{hv} x)_i & (D_{vv} x)_i \end{bmatrix} \right\Vert_{\mathcal{S}_p} . \qquad (11) \]

We recall that the Schatten p-norm reduces to the nuclear norm when \(p = 1\).
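Because each per-pixel Hessian in (11) is a symmetric 2 × 2 matrix, its singular values are the absolute values of its two eigenvalues, which have a closed form. The sketch below uses that fact; the particular finite differences (centered second differences, forward mixed difference) and the periodic boundaries are assumptions for illustration, and the function names are hypothetical.

```python
import math


def schatten_norm_sym2(a, b, c, p):
    """Schatten p-norm of the symmetric 2x2 matrix [[a, b], [b, c]]. For a
    symmetric matrix the singular values are the absolute eigenvalues
    (a + c) / 2 +/- sqrt(((a - c) / 2)^2 + b^2)."""
    mean = 0.5 * (a + c)
    rad = math.hypot(0.5 * (a - c), b)
    s1, s2 = abs(mean + rad), abs(mean - rad)
    if math.isinf(p):
        return max(s1, s2)  # spectral norm as the p -> infinity limit
    return (s1 ** p + s2 ** p) ** (1.0 / p)


def hessian_schatten(x, p=1.0):
    """Sum over pixels of the Schatten p-norm of the discrete Hessian of the
    image x (a list of rows), with periodic boundary conditions."""
    n1, n2 = len(x), len(x[0])
    total = 0.0
    for i in range(n1):
        for j in range(n2):
            ip, im = (i + 1) % n1, (i - 1) % n1
            jp, jm = (j + 1) % n2, (j - 1) % n2
            dhh = x[i][jp] - 2.0 * x[i][j] + x[i][jm]  # horizontal 2nd difference
            dvv = x[ip][j] - 2.0 * x[i][j] + x[im][j]  # vertical 2nd difference
            dhv = x[ip][jp] - x[ip][j] - x[i][jp] + x[i][j]  # mixed difference
            total += schatten_norm_sym2(dhh, dhv, dvv, p)
    return total
```

With the default p = 1 the per-pixel term is the nuclear norm, which corresponds to the \(\mathcal {S}_1H\) regularizer used in the experiments.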

4 Residual Whiteness

Given a realization \(\epsilon := \{\, \epsilon (i,j) \in {\mathbb R}:\, (i,j) \in \mathrm {\Omega }\,\}\), \(\Omega = \{ 1,2,\ldots ,n_1 \} \times \{ 1,2,\ldots ,n_2 \}\), of a 2-D \(n_1 \times n_2\) random noise process, that is, the set of noise values corrupting the particular observed image according to the deterministic degradation model in (1), the sample auto-correlation of \(\epsilon \) is the function \(a_{\epsilon }\) mapping each lag \((l,m) \in \mathrm {\Theta } = \{ -(n_1 -1) , \,\ldots \, , n_1 - 1 \} \times \{ -(n_2 -1) , \,\ldots \, , n_2 - 1 \}\) to the scalar value

\[ a_{\epsilon}(l,m) = \frac{1}{n} \sum_{(i,j) \in \Omega} \epsilon(i,j)\, \epsilon(i+l,\, j+m) = \frac{1}{n}\, (\epsilon \star \epsilon)(l,m) = \frac{1}{n}\, (\epsilon * \epsilon^{\prime})(l,m), \qquad (12) \]

where \(\,\star \,\) and \(\,*\,\) denote the 2-D discrete correlation and convolution operators, respectively, and \(\epsilon ^{\prime }(i,j) = \epsilon (-i,-j)\). For (12) to be defined for all lags \((l,m) \in \mathrm {\Theta }\), the noise realization \(\epsilon \) must be padded with at least \(n_1\) samples in the vertical direction and \(n_2\) samples in the horizontal direction. We assume here periodic boundary conditions for \(\epsilon \), so that \(\,\star \,\) and \(\,*\,\) in (12) denote circular correlation and convolution, respectively. If the noise process \(\epsilon \) is white, it is well known that the auto-correlation \(a_\epsilon \) satisfies the following asymptotic property:

\[ \lim_{n \to +\infty} a_{\epsilon}(l,m) = \begin{cases} \sigma^2 & \text{for } (l,m) = (0,0), \\ 0 & \text{for } (l,m) \in \mathrm{\Theta} \setminus \{(0,0)\}. \end{cases} \qquad (13) \]

For noise corruptions affecting images of finite dimensions—namely, \(n < +\infty \)—we can say that the auto-correlation values for all non-zero lags are small. Some important examples of distributions of additive white noises are the uniform, the Gaussian, the Laplacian and the Cauchy [14].
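Definition (12) with periodic boundary conditions can be implemented directly. The pure-Python sketch below (function name hypothetical) costs O(n²) operations, so it is only meant for small arrays; for realistic image sizes the circular auto-correlation would be computed via the FFT, since circular correlation becomes a pointwise product in the Fourier domain. Under periodicity a negative lag \(-l\) coincides with the lag \(n_1 - l\), so only non-negative lags are stored.

```python
def autocorrelation(e):
    """Sample auto-correlation (12) of a 2-D array e (a list of rows):
    a_e(l, m) = (1/n) * sum_{i,j} e(i, j) * e(i + l, j + m), with indices taken
    modulo the image size (periodic boundary conditions). Returns a dict that
    maps each lag (l, m), 0 <= l < n1, 0 <= m < n2, to its value."""
    n1, n2 = len(e), len(e[0])
    n = n1 * n2
    a = {}
    for l in range(n1):
        for m in range(n2):
            s = 0.0
            for i in range(n1):
                row, shifted = e[i], e[(i + l) % n1]
                for j in range(n2):
                    s += row[j] * shifted[(j + m) % n2]
            a[(l, m)] = s / n
    return a
```

For a 16 × 16 Gaussian noise realization with standard deviation 2, the value at lag (0, 0) is the mean of the squared samples (close to 4), while the non-zero lags are of order \(\sigma ^2/\sqrt{n}\), small but not exactly zero, in line with the finite-n remark above.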

Intuitively, the closer the restored image \(x^*_{\lambda }\) is to the uncorrupted image, the closer the residual image \(r^*_{\lambda } = b - A \, x^*_{\lambda }\) is to the realization \(\epsilon \) in (1) of a white noise process.

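We propose to seek the regularization parameter value \(\lambda ^*\) yielding the whitest restoration residual, which can be formally defined as follows:

\[ \lambda^* \in \arg\min_{\lambda > 0} W(\lambda), \qquad W(\lambda) := \mathcal{W}\big( r^*_{\lambda} \big), \qquad (14) \]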

with \(\mathcal {W}: {\mathbb R}^n \rightarrow {\mathbb R}\) one of the two following residual whiteness measures:

We note that, according to definition (12), the term \(a_{r^*_{\lambda }}(0,0)\) is nothing but the sample variance of the residual image \(r^*_{\lambda }\).
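The two measures \(\mathcal {W}_1\), \(\mathcal {W}_2\) in (15) are not reproduced in this text. As a purely illustrative stand-in (a hypothetical choice, not necessarily either of the paper's measures), one can normalize the sample auto-correlation by \(a_r(0,0)\), the sample variance noted above, and sum the squares over the small non-zero lags, \(\sum_{(l,m) \neq (0,0),\, |l|,|m| \le K} \big( a_r(l,m) / a_r(0,0) \big)^2\):

```python
def whiteness(r, max_lag=2):
    """Illustrative residual whiteness measure (hypothetical, not the paper's
    W1 or W2): sum of squared auto-correlations normalized by a_r(0, 0), over
    the non-zero lags with |l|, |m| <= max_lag, using periodic indexing.
    Smaller values indicate a whiter residual r (a list of rows)."""
    n1, n2 = len(r), len(r[0])

    def a(l, m):
        # sample auto-correlation a_r(l, m) as in (12)
        return sum(r[i][j] * r[(i + l) % n1][(j + m) % n2]
                   for i in range(n1) for j in range(n2)) / (n1 * n2)

    a0 = a(0, 0)
    return sum((a(l, m) / a0) ** 2
               for l in range(-max_lag, max_lag + 1)
               for m in range(-max_lag, max_lag + 1)
               if (l, m) != (0, 0))
```

For a white residual the normalized correlations are of order \(1/\sqrt{n}\), so the measure is close to zero; a residual containing colored noise or leftover image structure, for instance white noise smoothed by a 3-tap horizontal moving average, gives a markedly larger value.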

5 The Proposed Algorithmic Framework

The proposed bilevel framework consists of an iterative procedure for computing an approximate solution \(x^*_{\lambda ^*}\) of the class of CNC models proposed in [15] and defined in (4), or also of the associated purely convex models in (2), with \(\mathcal {R}\) any sparsity-promoting convex regularizer of the form in (3) and \(\lambda ^*\) satisfying the whiteness maximality criterion in (14).

In Algorithm 1 we report the main computational steps of the overall proposed bilevel framework for image restoration.

The algorithm starts with a sufficiently small value of the parameter \(\lambda \), yielding a large value of the residual whiteness measure W in (14)–(15); at each iteration, \(\lambda \) is increased by a multiplicative factor \(\theta >1\) to strengthen the effect of the regularization term. The iterative procedure is terminated as soon as the residual whiteness measure stops decreasing, at a certain value \(\lambda ^*\). Such a scheme relies on the assumption that the residual whiteness function \(W(\lambda )\) in (14) is monotonically decreasing on the interval between 0 and its minimizer \(\lambda ^*\). This property is hard to prove theoretically, but we verified it empirically; evidence of this behavior is reported in Sect. 6.

At each (outer) iteration h of Algorithm 1, the restored image \(x^{(h)}\) is computed—that is, the corresponding optimization problem is solved—by using the Primal-Dual Forward-Backward (PDFB) algorithm described in [15] for the CNC models and the Alternating Direction Method of Multipliers (ADMM) for the associated purely convex models. We remark that, for any given \(\lambda \) value, the considered variational models are strongly convex—hence they admit a unique global minimizer—and the PDFB and ADMM minimization algorithms are guaranteed to converge towards such minimizer.

For efficiency, we adopt the so-called warm-starting strategy: each inner optimization is initialized with the solution estimated at the previous outer iteration.

The considered CNC variational approach requires the design of a matrix B satisfying the convexity condition (6) for the functional \(\mathcal {J}_B\). Many such matrices B exist; see [15]. In the following experiments, we set \( \Gamma \) to be a two-dimensional dc-notch filter defined by \( \Gamma = I - H \), where H is a two-dimensional low-pass filter with unit dc gain and \( H \preceq I \). We set \( \gamma = 0.98 \) and \( H = H_0^{\mathsf {T}}\! H_0 \), where \( H_0 \) is the most basic two-dimensional low-pass filter: the moving-average filter with square support.
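The filter construction can be checked numerically. The sketch below assumes periodic boundary conditions and a 3 × 3 support for \(H_0\) (the text does not give the support size, so `k=3` is a hypothetical choice); for an odd window the moving average is self-adjoint, so \(H = H_0^{\mathsf T} H_0\) amounts to applying \(H_0\) twice. Function names are hypothetical.

```python
def box_filter(x, k):
    """H0: k x k moving-average filter with periodic boundary conditions.
    For odd k the window is symmetric, so H0 is self-adjoint (H0^T = H0)."""
    if k % 2 != 1:
        raise ValueError("k must be odd so that H0^T = H0")
    n1, n2 = len(x), len(x[0])
    c = k // 2
    out = [[0.0] * n2 for _ in range(n1)]
    for i in range(n1):
        for j in range(n2):
            s = 0.0
            for u in range(-c, c + 1):
                for v in range(-c, c + 1):
                    s += x[(i + u) % n1][(j + v) % n2]
            out[i][j] = s / (k * k)
    return out


def dc_notch(x, k=3):
    """Gamma x = (I - H) x with H = H0^T H0, i.e. H0 applied twice here."""
    hx = box_filter(box_filter(x, k), k)
    return [[xi - hi for xi, hi in zip(row_x, row_h)] for row_x, row_h in zip(x, hx)]
```

Because H has unit dc gain, \(\Gamma \) maps any constant image to zero; and since \(\langle z, Hz \rangle = \Vert H_0 z\Vert_2^2 \le \Vert z\Vert_2^2\), the quadratic form \(\langle z, \Gamma z\rangle \) is non-negative, which is the property \(H \preceq I\).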

6 Numerical Examples

In this section, we report some experimental results aimed at assessing the effectiveness of the automatic parameter selection procedure illustrated in Sects. 4 and 5 for image restoration by using the CNC variational models recently proposed in [15] and briefly outlined in Sect. 3.

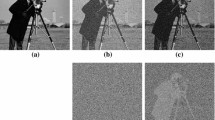

We consider the three test images shown in the first row of Fig. 1: qrcode, which belongs to the class of piecewise constant images, roof, which is a piecewise affine image, and the popular photographic cameraman image. The test images have been synthetically corrupted by space-invariant Gaussian blur generated by the Matlab command fspecial('gaussian',band,sigma) with parameters (band,sigma) = (5,1.5), and by AWGN of standard deviation \(\sigma = 40\), so as to obtain the three degraded images shown in the second row of Fig. 1. The qrcode and roof images are characterized by very sparse first- and second-order derivatives, respectively; hence the convex TV and Schatten 1-norm regularization terms in (9)–(11) and their non-convex non-separable counterparts defined according to (5) are well suited to obtaining good restorations.
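For readers without MATLAB, the blur kernel can be approximated as follows. As far as we know, fspecial('gaussian', hsize, sigma) samples \(\exp(-(u^2+v^2)/(2\,\mathrm{sigma}^2))\) on a grid centred at the kernel midpoint and normalizes the result to unit sum (it also zeroes entries below machine epsilon times the peak, which does not matter for a 5 × 5 kernel with sigma = 1.5). The sketch below mirrors that description; `gaussian_kernel` is a hypothetical name, and note that its sigma is the blur width, unrelated to the noise level \(\sigma = 40\).

```python
import math


def gaussian_kernel(size, sigma):
    """size x size Gaussian kernel sampled on a grid centred at the kernel
    midpoint and normalized to unit sum, in the spirit of
    fspecial('gaussian', size, sigma)."""
    c = (size - 1) / 2.0
    k = [[math.exp(-((i - c) ** 2 + (j - c) ** 2) / (2.0 * sigma ** 2))
          for j in range(size)] for i in range(size)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]
```

`gaussian_kernel(5, 1.5)` is then the kernel corresponding to (band,sigma) = (5,1.5); being normalized to unit sum, the resulting blur preserves the mean intensity of the image.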

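Hence, in the experiments we apply the proposed bilevel framework outlined in Algorithm 1 to the two purely convex variational models \(\mathrm {TV}{-}\ell _2\) and \(\mathcal {S}_1H{-}\ell _2\), i.e., model (2) with \(\mathcal {R}\) the TV regularizer in (9) and the Hessian Schatten 1-norm regularizer in (11), respectively, and to the two associated CNC counterparts \(\mathrm {CNC}{-}\mathrm {TV}{-}\ell _2\) and \(\mathrm {CNC}{-}\mathcal {S}_1H{-}\ell _2\), i.e., model (4) with \(\mathcal {R}_B\) constructed from these regularizers according to (5).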

For all the tests, i.e. for all images and all restoration models, the bilevel framework outlined in Algorithm 1 has been used in order to automatically select the regularization parameter \(\lambda ^*\) yielding the whitest restoration residual according to both the whiteness measures \(\mathcal {W}_1\), \(\mathcal {W}_2\) defined in (15); we denote by \(\lambda _1^*\), \(\lambda _2^*\) such two optimal values and by \(W_1^*:=\mathcal {W}_1\left( \lambda _1^*\right) \), \(W_2^*:=\mathcal {W}_2\left( \lambda _2^*\right) \) the associated (minimum) whiteness measure values.

The (inner) iterations of the minimization algorithms used to determine the restored image for any given \(\lambda \) value—namely, ADMM for the \(\mathrm {TV}{-}\ell _2\) and \(\mathcal {S}_1H{-}\ell _2\) models, PDFB for the \(\mathrm {CNC}{-}\mathrm {TV}{-}\ell _2\) and \(\mathrm {CNC}{-}\mathcal {S}_1H{-}\ell _2\) models—are terminated as soon as two successive iterates satisfy

The quality of the obtained restorations is evaluated by means of both the Signal-to-Noise Ratio (SNR) and the Structural Similarity Index (SSIM). We indicate by SNR\(_1^*\), SNR\(_2^*\) and SSIM\(_1^*\), SSIM\(_2^*\) the SNR and SSIM values of the restored images associated with the optimal values \(\lambda _1^*\), \(\lambda _2^*\). In order to quantitatively evaluate the ability of the proposed approach to automatically select \(\lambda \) values yielding restored images of good quality, we also introduce, and compute for each test, the following quantities:

where \(\overline{\mathrm {Q}}\) denotes the maximum value of the quality measure (SNR or SSIM) achievable by letting \(\lambda \) vary over its domain. These quantities represent the percentage loss of restoration quality yielded by the proposed automatic selection procedure with respect to the maximum achievable.
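The formulas for SNR and for the quality losses are not spelled out in this text, so the sketch below rests on two labelled assumptions: it uses the common definition \(\mathrm{SNR} = 10 \log_{10} \big( \Vert x - \mathrm{mean}(x) \Vert_2^2 / \Vert x - x^* \Vert_2^2 \big)\) dB, and it reads the loss as \(100\,(\overline{\mathrm {Q}} - \mathrm{Q}^*_j)/\overline{\mathrm {Q}}\), which matches the verbal description above but is our reading rather than the paper's formula. Images are flattened to lists and the function names are hypothetical.

```python
import math


def snr_db(x_true, x_hat):
    """SNR in dB under one common definition (an assumption here):
    10 log10( ||x - mean(x)||^2 / ||x - x_hat||^2 ), images as flat lists."""
    mean = sum(x_true) / len(x_true)
    signal = sum((v - mean) ** 2 for v in x_true)
    error = sum((v - w) ** 2 for v, w in zip(x_true, x_hat))
    return 10.0 * math.log10(signal / error)


def quality_loss_percent(q_selected, q_best):
    """Hypothetical percentage loss of the automatically selected result with
    respect to the best value over lambda: 100 (q_best - q_selected) / q_best."""
    return 100.0 * (q_best - q_selected) / q_best
```

For example, an automatically selected restoration with SNR 18 dB, when the best SNR over all \(\lambda \) is 20 dB, corresponds to a 10% loss under this reading.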

In Table 1 we report all the obtained quantitative results, whereas in Figs. 2, 3, 4 and 5 we show some visual and graphical results related to the restoration of the cameraman test image by the four variational models considered. In particular, the results obtained by using the whiteness measure \(\mathcal {W}_1\) are shown in Figs. 2 and 3, and those obtained by using \(\mathcal {W}_2\) in Figs. 4 and 5. Each column in these figures corresponds to a different restoration model. In the third, fourth and fifth rows we report the plots of the SNR and SSIM values of the restored image and the plot of the whiteness measure of the restoration residual as functions of the regularization parameter \(\lambda \), respectively. The dashed vertical red lines indicate the “optimal” regularization parameter values, namely those yielding the smallest residual whiteness measures. It is worth noticing that, for all reported tests and for both choices of \(\mathcal {W}\) introduced in (15), the residual whiteness function \(W(\lambda )\) (shown in the last row of Figs. 2, 3, 4 and 5) is monotonically decreasing on the \(\lambda \) interval between 0 and its minimizer \(\lambda ^*\).

In the first and second rows of Figs. 2, 3, 4 and 5 we show the restored images obtained by using such optimal \(\lambda \) values and the associated absolute error images, respectively.

In Table 1 the best results are marked in boldface. The results obtained by hand-tuning \(\lambda \) (labeled as \(\overline{\mathrm {Q}}\)) indicate that, as expected, TV-based models perform better on the piecewise constant image qrcode and on cameraman, whereas \(\mathcal {S}_1H\)-based models outperform TV-based models on the piecewise affine image roof. Moreover, the CNC models perform better than their purely convex counterparts, which can be attributed to the stronger sparsity-promoting effect of non-convex regularization.

Regarding the optimal residual whiteness measures W\(_1^*\) and W\(_2^*\) reported in the last two columns of Table 1, it is worth observing that the lowest values (in boldface) are obtained for the best-performing model in each restoration test. In principle, this should allow the proposed strategy to be used also for automatically selecting the best regularization term for each problem.

Finally, for any given model, the proposed automatic parameter selection strategy seems to perform very well as indicated by the small values of the quality losses \(\mathrm {LQ}^*_j, j=1,2\), reported in Table 1 and visually supported by the plots in the figures.

Visual inspection and comparison of the restored images are consistent with the results in Table 1.

7 Conclusions

We presented a bilevel framework aimed at equipping the class of CNC variational models for image restoration proposed in [15] with an effective strategy for automatically selecting the regularization parameter based on maximizing the residual whiteness. The idea behind our proposal is that if the recovered image is well estimated, the residual image is spectrally white; conversely, a poorly restored image exhibits structured artifacts which yield spectrally colored residual images. Numerical results on images characterized by some sparsity properties indicate that the considered class of CNC models, equipped with the proposed automatic parameter selection strategy, outperforms classical convex models with non-smooth but convex regularizers. The proposed parameter selection strategy makes the considered class of CNC models automatic, in the sense that the regularization parameter is set without requiring any knowledge of the noise variance.

References

[1] M. Almeida, M. Figueiredo, Parameter estimation for blind and non-blind deblurring using residual whiteness measures. IEEE Transactions on Image Processing 22(7), 2751–2763 (2013)

[2] F. Bauer, M.A. Lukas, Comparing parameter choice methods for regularization of ill-posed problems. Mathematics and Computers in Simulation 81(9), 1795–1841 (2011)

[3] D. Calvetti, S. Morigi, L. Reichel, F. Sgallari, Tikhonov regularization and the L-curve for large discrete ill-posed problems. Journal of Computational and Applied Mathematics 123(1–2), 423–446 (2000)

[4] Y.C. Eldar, Generalized SURE for exponential families: Applications to regularization. IEEE Transactions on Signal Processing 57(2), 471–481 (2009)

[5] H.W. Engl, M. Hanke, A. Neubauer, Regularization of Inverse Problems (Kluwer, Dordrecht, 1996)

[6] U. Hämarik, R. Palm, T. Raus, A family of rules for parameter choice in Tikhonov regularization of ill-posed problems with inexact noise level. Journal of Computational and Applied Mathematics 236(8), 2146–2157 (2012)

[7] P.C. Hansen, D.P. O’Leary, The use of the L-curve in the regularization of discrete ill-posed problems. SIAM Journal on Scientific Computing 14(6), 1487–1503 (1993)

[8] P.C. Hansen, M.E. Kilmer, R.H. Kjeldsen, Exploiting residual information in the parameter choice for discrete ill-posed problems. BIT Numerical Mathematics 46(1), 41–59 (2006)

[9] C. He, C. Hu, W. Zhang, B. Shi, A fast adaptive parameter estimation for total variation image restoration. IEEE Transactions on Image Processing 23(12), 4954–4967 (2014)

[10] A. Lanza, S. Morigi, F. Sgallari, Convex image denoising via non-convex regularization. Lecture Notes in Computer Science 9087, 666–677 (2015)

[11] A. Lanza, S. Morigi, F. Sgallari, A.J. Yezzi, Variational image denoising based on autocorrelation whiteness. SIAM Journal on Imaging Sciences 6(4), 1931–1955 (2013)

[12] A. Lanza, S. Morigi, F. Sgallari, Variational image restoration with constraints on noise whiteness. Journal of Mathematical Imaging and Vision 53(1), 61–77 (2015)

[13] A. Lanza, S. Morigi, F. Sgallari, Convex image denoising via non-convex regularization with parameter selection. Journal of Mathematical Imaging and Vision 56(2), 195–220 (2016)

[14] A. Lanza, S. Morigi, F. Sciacchitano, F. Sgallari, Whiteness constraints in a unified variational framework for image restoration. Journal of Mathematical Imaging and Vision 60(9), 1503–1526 (2018)

[15] A. Lanza, S. Morigi, I.W. Selesnick, F. Sgallari, Sparsity-inducing nonconvex nonseparable regularization for convex image processing. SIAM Journal on Imaging Sciences 12(2), 1099–1134 (2019)

[16] S. Lefkimmiatis, J.P. Ward, M. Unser, Hessian Schatten-norm regularization for linear inverse problems. IEEE Transactions on Image Processing 22(5), 1873–1888 (2013)

[17] A. Parekh, I.W. Selesnick, Convex denoising using non-convex tight frame regularization. IEEE Signal Processing Letters 22(10), 1786–1790 (2015)

[18] S. Ramani, Z. Liu, J. Rosen, J. Nielsen, J.A. Fessler, Regularization parameter selection for nonlinear iterative image restoration and MRI reconstruction using GCV and SURE-based methods. IEEE Transactions on Image Processing 21(8), 3659–3672 (2012)

[19] L.I. Rudin, S. Osher, E. Fatemi, Nonlinear total variation based noise removal algorithms. Physica D: Nonlinear Phenomena 60(1–4), 259–268 (1992)

[20] B.W. Rust, D.P. O’Leary, Residual periodograms for choosing regularization parameters for ill-posed problems. Inverse Problems 24(3) (2008)

[21] B.W. Rust, Parameter selection for constrained solutions to ill-posed problems. Computing Science and Statistics 32, 333–347 (2000)

[22] A.M. Thompson, J.C. Brown, J.W. Kay, D.M. Titterington, A study of methods of choosing the smoothing parameter in image restoration by regularization. IEEE Transactions on Pattern Analysis and Machine Intelligence 13(4), 326–339 (1991)

[23] X. Zhu, P. Milanfar, Automatic parameter selection for denoising algorithms using a no-reference measure of image content. IEEE Transactions on Image Processing 19(12), 3116–3132 (2010)

Acknowledgements

We would like to thank the referees for comments that led to improvements of the presentation. This research was supported in part by the National Group for Scientific Computation (GNCS-INDAM), Research Projects 2019.


Copyright information

© 2021 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Lanza, A., Morigi, S., Sgallari, F. (2021). Automatic Parameter Selection Based on Residual Whiteness for Convex Non-convex Variational Restoration. In: Tai, XC., Wei, S., Liu, H. (eds) Mathematical Methods in Image Processing and Inverse Problems. IPIP 2018. Springer Proceedings in Mathematics & Statistics, vol 360. Springer, Singapore. https://doi.org/10.1007/978-981-16-2701-9_6




Publisher Name: Springer, Singapore

Print ISBN: 978-981-16-2700-2

Online ISBN: 978-981-16-2701-9

eBook Packages: Mathematics and Statistics (R0)