Abstract

In this chapter, a gradient-free method is proposed for solving the multi-objective optimization problem in higher dimensions. The concept is developed as a modification of the Nelder-Mead simplex technique for the single-objective case. The proposed algorithm is verified on a set of test problems and compared with existing methods.

2.1 Introduction

A general multi-objective optimization problem is stated as

$$(MOP)\qquad \min _{x\in \mathbb {R}^n} F(x),$$

where \(F(x)=(f_1(x),f_2(x),\ldots ,f_m(x))^T\), \(f_j:\mathbb {R}^n\rightarrow \mathbb {R}\), \(j=1,2,\ldots ,m\), \(m\ge 2\). In practice, (MOP) involves several conflicting and non-commensurate objective functions which have to be optimized simultaneously over \(\mathbb {R}^n\). If \(x^*\in \mathbb {R}^n\) minimizes all the objective functions simultaneously, then an ideal solution is achieved. In general, however, improvement in one criterion results in loss in another, so an ideal solution is unlikely to exist. For this reason one has to look for the “best” compromise solution, which is known as an efficient or Pareto optimal solution. The concept of efficiency arises from a pre-specified partial ordering on \(\mathbb {R}^m\). The points satisfying the necessary condition for efficiency are known as critical points. Applications of such problems are found in engineering design, statistics, management science, etc.

Classical methods for solving (MOP) are either scalarization methods or heuristic methods. Scalarization methods reduce the main problem to a single-objective optimization problem using predetermined parameters. A widely used scalarization method is due to Geoffrion [15], which computes proper efficient solutions. Geoffrion’s approach has been further developed by several researchers in several directions. Other parameter-free techniques use the concept of order of importance of the objective functions, which has to be specified in advance. Another widely used general solution strategy for multi-objective optimization problems is the \(\epsilon \)-constrained method [10, 21]. All the above methods are summarized in [1, 8, 19, 23]. These scalarization methods are user-dependent and often have difficulties in finding an approximation to the Pareto front. Heuristic methods [7] do not guarantee convergence but usually provide an approximate Pareto front. Some well-known heuristic methods are genetic algorithms, particle swarm optimization, etc. NSGA-II [7] is a well-known genetic algorithm.

Recently, many researchers have developed line search methods for (MOP), which differ from both the scalarization process and the heuristic approach. These numerical techniques extend gradient-based line search techniques for the single-objective optimization problem to the multi-objective case. In every gradient-based line search method, the descent direction at an iterate x is determined by solving a subproblem at x, and a suitable step length \(\alpha \) in this direction is obtained using an Armijo type condition with respect to each objective function to ensure \(f_j(x+\alpha d)<f_j(x)\). A descent sequence is thus generated, which can converge to a critical point. Some recent developments in this direction are summarized below.

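The steepest descent method, which is the first line search approach for (MOP), was developed by Fliege and Svaiter [12] in 2000 to find a critical point of (MOP). In this method, the descent direction d at every iterate x is the solution of the following subproblem,

$$\min _{d\in \mathbb {R}^n}\ \max _{j=1,2,\ldots ,m}\nabla f_j(x)^Td+\frac{1}{2}\Vert d\Vert ^2,$$

which is the same as

$$\min _{(t,d)\in \mathbb {R}\times \mathbb {R}^n}\ t+\frac{1}{2}\Vert d\Vert ^2\quad \text {subject to}\quad \nabla f_j(x)^Td\le t,\quad j=1,2,\ldots ,m.$$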
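For two objectives, the steepest descent subproblem of Fliege and Svaiter [12] can be solved in closed form through its dual: the direction is the negative of the minimum-norm convex combination of the two gradients. The following Python sketch illustrates this special case only. The function name `steepest_descent_direction` and the sample gradients are hypothetical and are not taken from [12].

```python
def steepest_descent_direction(g1, g2):
    """Common descent direction for m = 2 objectives (illustrative sketch).

    g1, g2 are the gradients of f_1 and f_2 at the current point.
    The direction is d = -(lam*g1 + (1-lam)*g2), where lam in [0, 1]
    minimizes the norm of the convex combination of the gradients.
    """
    diff = [a - b for a, b in zip(g1, g2)]
    denom = sum(c * c for c in diff)
    if denom == 0.0:
        lam = 0.5  # gradients coincide, any weight gives the same direction
    else:
        # Unconstrained minimizer of ||g2 + lam*(g1 - g2)||^2, clipped to [0, 1].
        lam = sum((b - a) * b for a, b in zip(g1, g2)) / denom
        lam = min(1.0, max(0.0, lam))
    return [-(lam * a + (1.0 - lam) * b) for a, b in zip(g1, g2)]


# Hypothetical example: f1 = x1^2 + x2^2, f2 = (x1 - 1)^2 + x2^2 at x = (0.5, 1).
d = steepest_descent_direction([1.0, 2.0], [-1.0, 2.0])
# Both directional derivatives g1.d and g2.d are negative, so d is a descent
# direction for both objectives.
```

A zero direction signals a critical point: no direction decreases both objectives to first order.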

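The Newton method for the single-objective optimization problem is extended to (MOP) by Fliege et al. [11] in 2009 under convexity assumptions. The Newton direction for (MOP) at x is obtained by solving the following min-max problem, which involves the quadratic approximation of all the objective functions.

$$\min _{d\in \mathbb {R}^n}\ \max _{j=1,2,\ldots ,m}\nabla f_j(x)^Td+\frac{1}{2}d^T\nabla ^2f_j(x)d$$

This is equivalent to the following subproblem.

$$\min _{(t,d)\in \mathbb {R}\times \mathbb {R}^n}\ t\quad \text {subject to}\quad \nabla f_j(x)^Td+\frac{1}{2}d^T\nabla ^2f_j(x)d\le t,\quad j=1,2,\ldots ,m.$$

If every \(f_j\) is a strictly convex function, then the above subproblem is a convex programming problem. Using the Karush-Kuhn-Tucker (KKT) optimality condition, the solution of this subproblem becomes the Newton direction \(d_N(x)\) as

$$d_N(x)=-\Big [\sum _{j=1}^{m}\lambda _j(x)\nabla ^2f_j(x)\Big ]^{-1}\sum _{j=1}^{m}\lambda _j(x)\nabla f_j(x),$$

where \(\lambda _{j}(x)\) are Lagrange multipliers. This iterative process is locally quadratically convergent when the second derivatives of the objective functions are Lipschitz continuous.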

An extension of the quasi-Newton method to (MOP) is studied by Qu et al. [27] in 2011 to compute critical points without convexity assumptions. The method uses an approximate Hessian of every objective function. The subproblem in Qu et al. [27] is

$$\min _{d\in \mathbb {R}^n}\ \max _{j=1,2,\ldots ,m}\nabla f_j(x)^Td+\frac{1}{2}d^TB_j(x)d,$$

where \(B_j(x)\) is the approximation of \(\nabla ^2 f_j(x)\).

These individual \(B_j(x)\) are replaced by a common positive definite matrix in Ansary and Panda [2] to reduce the complexity of the algorithm. In [2], the descent direction at every iterate x is determined by solving the following subproblem, which involves the linear approximation of every objective function along with the common positive definite matrix B(x) in place of the individual matrices \(B_j(x)\).

$$\min _{d\in \mathbb {R}^n}\ \max _{j=1,2,\ldots ,m}\nabla f_j(x)^Td+\frac{1}{2}d^TB(x)d$$

Here, a sequence of positive definite matrices is generated during the iterative process like the quasi-Newton method for the single-objective case. The Armijo-Wolfe type line search technique is used to determine the step length. A descent sequence is generated whose accumulation point is a critical point of (MOP) under some reasonable assumptions.

The above line search techniques are restricted to unconstrained multi-objective programming problems. They have been further extended to the general constrained multi-objective optimization problem, denoted \((MOP_C)\), in which F is minimized subject to constraints.

The concepts of line search methods for single-objective constrained optimization problems are extended to the multi-objective case in some recent papers; see [3, 4, 13]. A variant of the sequential quadratic programming (SQP) method is developed for inequality constrained \((MOP_C)\) by Ansary and Panda [4], in the light of the SQP method for the single-objective case. At every iterate x, a quadratic subproblem involving linear approximations of all functions is solved to obtain a feasible descent direction.

The same authors consider a different subproblem in [3], which involves quadratic approximations of all the functions, and use the sequential quadratically constrained quadratic programming (SQCQP) technique to develop a descent sequence.

With these subproblems, a non-differentiable penalty function is used to restrict constraint violations. To obtain a feasible descent direction, the penalty function is considered as a merit function with a penalty parameter. The Armijo type line search technique is used to find a suitable step length. Global convergence of these methods is discussed under the Slater constraint qualification.

The above iterative schemes are free from the burden of selecting parameters in advance, and also have the convergence property. These iterative schemes are gradient-based methods, and large-scale problems can be solved efficiently only if the gradient information of the functions is available. Some optimization software packages perform finite difference gradient evaluation internally, but this is inappropriate when function evaluations are costly and noisy. Hence there is a growing demand for derivative-free optimization methods which neither require derivative information nor approximate the derivatives. The reader may refer to the book by Conn et al. [5] for recent developments on derivative-free methods for the single-objective optimization problem.

Coordinate search is the simplest derivative-free method for the unconstrained single-objective optimization problem. It evaluates the objective function of n variables at 2n points around the current iterate, defined by displacements along the coordinate directions and their negatives with a suitable step length. The set of these directions forms a positive basis. The method is slow, but it can handle noise and is guaranteed to converge globally. The implicit filtering algorithm is a derivative-free line search algorithm that imposes sufficient decrease along a quasi-Newton direction in which the true gradient is replaced by the simplex gradient. Trust region-based derivative-free methods are also used to address noisy functions; here, quadratic subproblems are formulated from polynomial interpolation or regression. Implicit filtering is less efficient than the trust region approach but copes better with noise.
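The coordinate search described at the start of the paragraph above can be sketched in a few lines of Python. This is a minimal illustration under assumed choices that the text does not specify: the step length is halved after an unsuccessful poll, the best of the 2n poll points is accepted, and the function name, tolerance, and test function are hypothetical.

```python
def coordinate_search(f, x0, step=1.0, tol=1e-8, max_iter=10000):
    """Minimize f by polling the 2n points x +/- step * e_i (illustrative sketch)."""
    x, fx = list(x0), f(x0)
    for _ in range(max_iter):
        if step < tol:
            break
        # Poll along every coordinate direction and its negative.
        candidates = []
        for i in range(len(x)):
            for sign in (1.0, -1.0):
                trial = list(x)
                trial[i] += sign * step
                candidates.append((f(trial), trial))
        best_f, best_x = min(candidates, key=lambda c: c[0])
        if best_f < fx:
            x, fx = best_x, best_f   # successful poll: move to the best point
        else:
            step *= 0.5              # unsuccessful poll: refine the step (assumed rule)
    return x, fx


# Hypothetical test function with minimizer (1, -2).
x_min, f_min = coordinate_search(lambda x: (x[0] - 1) ** 2 + (x[1] + 2) ** 2, [0.0, 0.0])
```

No derivative of `f` is used anywhere; only function values are compared.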

The next choice is the widely cited Nelder-Mead method [24], a direct search iterative scheme for single-objective optimization problems. Every iteration evaluates the objective function at a finite number of points and works with the function values at the vertices of a simplex \(\{y^0,y^1,...,y^n\}\) in n dimensions, ordered by increasing values of the objective function to be minimized. Action is taken based on simplex operations such as reflections, expansions, and contractions (inside or outside) at every iteration. The Nelder-Mead method attempts to replace the simplex vertex that has the worst function value. In such iterations, the worst vertex \(y^n\) is replaced by a point on the line that connects \(y^n\) and \(y^c\),

$$y=y^c+\delta (y^c-y^n),\quad \delta \in \mathbb {R},$$

where \(y^c=\sum \limits _{i=0}^{n-1}\frac{y^i}{n}\) is the centroid of the best n vertices.

\(\delta =1\) indicates a reflection, \(\delta =2\) an expansion, \(\delta =1/2\) an outside contraction, and \(\delta =-1/2\) an inside contraction. Nelder-Mead can also perform a shrink. Except for the shrinks, the emphasis is on replacing the worst vertex rather than improving the best. The simplices generated by Nelder-Mead may adapt well to the curvature of the function.

In this chapter, a derivative-free iterative scheme is developed for (MOP). The idea of the Nelder-Mead simplex method is applied in a modified form, using the non-dominated sorting algorithm, to solve (MOP). The algorithm is coded in MATLAB (2019) to generate the Pareto front. The efficiency of the algorithm is assessed on a set of test problems, and a comparison with a scalarization method and NSGA-II is provided in terms of the number of iterations and CPU time.

2.2 Notations and Preliminaries

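Consider that \(\mathbb {R}^m\) is partially ordered by a binary relation induced by \(\mathbb {R}^m_+\), the non-negative orthant of \(\mathbb {R}^m\). For \(p,q\in {\mathbb {R}^m}\),

$$p\preceq _{\mathbb {R}_{+}^{m}}q\iff q-p\in \mathbb {R}_{+}^{m}\setminus \{0\},\qquad p\prec _{\mathbb {R}_{+}^{m}}q\iff q-p\in int(\mathbb {R}_{+}^{m}).$$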

Definition 2.1

A point \(x^* \in S\) is called a weakly efficient solution of (MOP) if there does not exist \(x\in S\) such that \(F(x)\prec _{\mathbb {R}_{+}^{m}} F(x^*)\). In other words, \(F(x)-F(x^*)\notin -int(\mathbb {R}_{+}^{m})\) for every \(x\in S\). In set notation, this becomes \((F(S)-F(x^*))\cap (-int(\mathbb {R}_{+}^{m}))=\emptyset \).

Definition 2.2

A point \(x^*\in S\) is called an efficient solution of (MOP) if there does not exist \(x\in S\) such that \(F(x)\preceq _{\mathbb {R}_{+}^{m}}F(x^*)\). In other words, \(F(x)-F(x^*)\notin -(\mathbb {R}_{+}^{m}\setminus \{0\})\) for every \(x\in S\). In set notation, this becomes \((F(S)-F(x^*))\cap (-(\mathbb {R}_{+}^{m}\setminus \{0\}))=\emptyset \). This solution is also known as a Pareto optimal or non-inferior solution. If \(X^*\) is the set of all efficient solutions, then the set \(F(X^*)\) is called the Pareto front for (MOP).

Definition 2.3

For \(x_1,~x_2\in \mathbb {R}^n\), \(x_1\) is said to dominate \(x_2\) if and only if \(F(x_1)\preceq _{\mathbb {R}_{+}^{m}} F(x_2)\), that is, \(f_j(x_1)\le f_j(x_2)\) for all j and \(F(x_1)\ne F(x_2)\). \(x_1\) weakly dominates \(x_2\) if and only if \(F(x_1)\prec _{\mathbb {R}_{+}^{m}} F(x_2)\), that is, \(f_j(x_1)< f_j(x_2)\) for all j. A point \(x_1\in \mathbb {R}^n\) is said to be non-dominated if there does not exist any \(x_2\) such that \(x_2\) dominates \(x_1\).

This concept can also be extended to find a non-dominated set of solutions of a multi-objective programming problem. Consider a set of N points \(\{x_1,x_2,...,x_N\}\), each having \(m (>1)\) objective function values. So \(F(x_i)=(f_1(x_i),f_2(x_i),..., f_m(x_i))\). The following algorithm from Deb [6] can be used to find the non-dominated set of points. This algorithm is used in the next section to order the objective values at every vertex of the simplex.

Algorithm 1 [6]

Step 0 : Begin with \(i=1\).

Step 1 : For all \(j\ne {i}\), compare solutions \(x_i\) and \(x_j\) for domination using Definition 2.3 for all m objectives.

Step 2 : If for any j, \(x_i\) is dominated by \(x_j\), mark \(x_i\) as “dominated”.

Step 3 : If all solutions (that is, when \(i=N\) is reached) in the set are considered, go to Step 4, else increment i by one and go to Step 1.

Step 4 : All solutions that are not marked “dominated” are non-dominated solutions.
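Steps 0 to 4 of Algorithm 1, together with the dominance test of Definition 2.3, can be sketched in Python as follows. The function names and the sample bi-objective function are illustrative assumptions and are not part of [6].

```python
def dominates(Fa, Fb):
    """Definition 2.3: Fa <= Fb in every objective and Fa != Fb."""
    return (all(a <= b for a, b in zip(Fa, Fb))
            and any(a < b for a, b in zip(Fa, Fb)))


def non_dominated(points, F):
    """Return the points of the given list that are not dominated by any other point."""
    values = [F(x) for x in points]           # evaluate all m objectives once
    dominated = [False] * len(points)
    for i in range(len(points)):              # Steps 0 and 3: loop over i = 1, ..., N
        for j in range(len(points)):          # Step 1: compare x_i with every x_j, j != i
            if j != i and dominates(values[j], values[i]):
                dominated[i] = True           # Step 2: mark x_i as "dominated"
                break
    # Step 4: keep the points that were never marked.
    return [x for x, d in zip(points, dominated) if not d]


# Hypothetical example with f1(x) = x^2 and f2(x) = (x - 2)^2.
front = non_dominated([-1, 0, 1, 2, 3], lambda x: (x ** 2, (x - 2) ** 2))
```

The double loop performs at most N(N-1) pairwise comparisons of m objective values each, which is acceptable for the n+1 vertices of a simplex.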

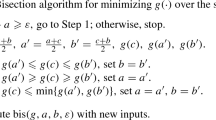

2.3 Gradient-Free Method for MOP

In this section, we develop a gradient-free algorithm as an extension of the Nelder-Mead simplex method, which is a widely used algorithm for the single-objective case. The dominance relation used in Algorithm 1 makes it possible to compare the values \(F(x)\in \mathbb {R}^m\) at different points.

Consider a simplex of \(n+1\) vertices in \(\mathbb {R}^n\) as \(Y=\{y^0,y^1,...,y^n\}\) ordered by component-wise increasing values of F. To order the vertices component-wise, one can use the “Non-dominated Sorting Algorithm 1”. The most common Nelder-Mead iterations for the single-objective case perform a reflection, an expansion, or a contraction (the latter can be inside or outside the simplex). In such iterations, the worst vertex \(y^n\) is replaced by a point on the line that connects \(y^n\) and \(y^c\),

$$y=y^c+\delta (y^c-y^n),\quad \delta \in \mathbb {R},$$

where \(y^c=\sum \limits _{i=0}^{n-1}\frac{y^i}{n}\) is the centroid of the best n vertices. The value of \(\delta \) indicates the type of iteration. For instance, when \(\delta =1\) we have a (genuine or isometric) reflection, when \(\delta =2\) an expansion, when \(\delta =\frac{1}{2}\) an outside contraction, and when \(\delta =-\frac{1}{2}\) an inside contraction. A Nelder-Mead iteration can also perform a simplex shrink, which rarely occurs in practice. When a shrink is performed, all the vertices in Y are thrown away except the best one \(y^0\). Then n new vertices are computed by shrinking the simplex at \(y^0\), that is, by computing, for instance, \(y^0+\frac{1}{2}(y^i-y^0)\), \(i=1,2,...,n\). Note that the “shape” of the resulting simplices can change by being stretched or contracted, unless a shrink occurs.

2.3.1 Modified Nelder-Mead Algorithm

Choose an initial simplex with vertices \(Y_0=\{y_{0}^{0},y_{0}^{1},...,y_{0}^{n}\}\). Evaluate F at the points in \(Y_0\). Choose constants:

$$0<\gamma ^s<1,\qquad -1<\delta ^{ic}<0<\delta ^{oc}<\delta ^r<\delta ^e.$$

For \(k=0,1,2,...\), set \(Y=Y_k\).

1. Order the \(n+1\) vertices of \(Y=\{y^0,y^1,...,y^n\}\) using Algorithm 1 so that

$$F(y^0)\preceq _{\mathbb {R}_{+}^{m}} F(y^1)\preceq _{\mathbb {R}_{+}^{m}}{...}\preceq _{\mathbb {R}_{+}^{m}} F(y^n).$$

Denote \(F(y^t)=F^t\), \(t=0,1,\ldots ,n\).

2. Reflect the worst vertex \(y^n\) over the centroid \(y^c= \sum \limits _{i=0}^{n-1}\frac{y^i}{n}\) of the remaining n vertices:

$$y^r=y^c+\delta ^r(y^c-y^n).$$

Evaluate \(F^r=F(y^r)\). If \(F^0\) dominates \(F^r\) and \(F^r\) weakly dominates \(F^{n-1}\), then replace \(y^n\) by the reflected point \(y^r\) and terminate the iteration:

$$Y_{k+1}=\{y^0,y^1,...,y^r\}.$$

3. If \(F^r\) weakly dominates \(F^0\), then calculate the expansion point

$$y^e=y^c+\delta ^e(y^c - y^n)$$

and evaluate \(F^e=F(y^e)\). If \(F^e\) dominates \(F^r\), replace \(y^n\) by the expansion point \(y^e\) and terminate the iteration:

$$Y_{k+1}=\{y^0,y^1,...,y^e\}.$$

Otherwise, replace \(y^n\) by the reflected point \(y^r\) and terminate the iteration:

$$Y_{k+1}=\{y^0,y^1,...,y^r\}.$$

4. If \(F^{n-1}\) dominates \(F^r\), then a contraction is performed between the best of \(y^r\) and \(y^n\).

(a) If \(F^r\) weakly dominates \(F^n\), perform an outside contraction

$$y^{oc}=y^c+\delta ^{oc}(y^c-y^n)$$

and evaluate \(F^{oc}=F(y^{oc})\). If \(F^{oc}\) dominates \(F^r\), then replace \(y^n\) by the outside contraction point \(y^{oc}\) and terminate the iteration:

$$Y_{k+1}=\{y^0,y^1,...,y^{oc}\}.$$

Otherwise, perform a shrink.

(b) If \(F^n\) dominates \(F^r\), perform an inside contraction

$$y^{ic}=y^c+\delta ^{ic}(y^c-y^n)$$

and evaluate \(F^{ic}=F(y^{ic})\). If \(F^{ic}\) weakly dominates \(F^n\), then replace \(y^n\) by the inside contraction point \(y^{ic}\) and terminate the iteration:

$$Y_{k+1}=\{y^0,y^1,...,y^{ic}\}.$$

Otherwise, perform a shrink.

5. Perform a shrink: evaluate F at the n points \(y^0+\gamma ^{s}(y^i-y^0)\), \(i=1,...,n\), and replace \(y^1,...,y^n\) by these points, terminating the iteration:

$$Y_{k+1}=\{y^0+\gamma ^s(y^i-y^0),\ i=0,...,n\}.$$
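One iteration of the scheme above can be sketched in Python as follows. This is an illustration, not the authors' MATLAB code, and it rests on three assumptions. First, the vertices are ordered by successive non-dominated fronts, since a set of vectors cannot always be totally ordered by dominance. Second, the constants take the values 1, 2, 1/2, -1/2 for reflection, expansion, outside and inside contraction, and 1/2 for the shrink. Third, a shrink is performed whenever none of the conditions in steps 2 to 4 applies. All function and parameter names are hypothetical.

```python
def dominates(a, b):
    """a <= b in every objective and a != b (Definition 2.3)."""
    return all(u <= v for u, v in zip(a, b)) and any(u < v for u, v in zip(a, b))


def weakly_dominates(a, b):
    """a < b in every objective (the chapter's 'weakly dominates')."""
    return all(u < v for u, v in zip(a, b))


def order_by_fronts(Y, FY):
    """Step 1 (assumed reading): sort vertices by successive non-dominated fronts."""
    remaining, order = list(range(len(Y))), []
    while remaining:
        front = [i for i in remaining
                 if not any(dominates(FY[j], FY[i]) for j in remaining if j != i)]
        order.extend(front)
        remaining = [i for i in remaining if i not in front]
    return [Y[i] for i in order], [FY[i] for i in order]


def nm_step(F, Y, d_r=1.0, d_e=2.0, d_oc=0.5, d_ic=-0.5, g_s=0.5):
    """Return the simplex Y_{k+1} computed from Y_k = Y (F is re-evaluated for simplicity)."""
    Y, FY = order_by_fronts(Y, [F(y) for y in Y])
    n = len(Y) - 1
    yc = [sum(y[k] for y in Y[:n]) / n for k in range(len(Y[0]))]  # centroid of best n

    def point(delta):
        # Trial point y^c + delta * (y^c - y^n) on the line through y^n and y^c.
        return [c + delta * (c - w) for c, w in zip(yc, Y[n])]

    yr = point(d_r)
    Fr = F(yr)
    if dominates(FY[0], Fr) and weakly_dominates(Fr, FY[n - 1]):   # step 2: reflection
        return Y[:n] + [yr]
    if weakly_dominates(Fr, FY[0]):                                # step 3: expansion
        ye = point(d_e)
        return Y[:n] + [ye if dominates(F(ye), Fr) else yr]
    if dominates(FY[n - 1], Fr):                                   # step 4: contraction
        if weakly_dominates(Fr, FY[n]):                            # 4(a): outside
            yoc = point(d_oc)
            if dominates(F(yoc), Fr):
                return Y[:n] + [yoc]
        elif dominates(FY[n], Fr):                                 # 4(b): inside
            yic = point(d_ic)
            if weakly_dominates(F(yic), FY[n]):
                return Y[:n] + [yic]
    # Step 5: shrink towards the best vertex (also the assumed fallback).
    return [Y[0]] + [[a + g_s * (b - a) for a, b in zip(Y[0], y)] for y in Y[1:]]


# Hypothetical bi-objective test function and starting simplex in R^2.
F = lambda x: (x[0] ** 2 + x[1] ** 2, (x[0] - 1) ** 2 + x[1] ** 2)
Y1 = nm_step(F, [[0.0, 0.5], [1.0, 0.5], [0.5, 2.0]])
```

Repeated calls of `nm_step` from many random starting simplices would produce a set of points whose non-dominated subset approximates the Pareto front; a stopping rule, for instance on the simplex diameter, is left out of this sketch.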

2.4 Numerical Illustrations and Performance Assessment

In this section, the algorithm is executed on a set of test problems collected from different sources and summarized in Table 2.1 (see Appendix). The results obtained by Algorithm 2.3.1 are compared with two existing methods: the weighted sum scalarization method and NSGA-II. All three methods are implemented in MATLAB (R2017b). The comparison is provided in Table 2.2 (see Appendix), where “Iter” denotes the number of iterations and “CPU time” denotes the execution time of each algorithm. The algorithms are abbreviated as follows:

Algorithm 2.3.1: NMSM

Weighted Sum Method: WSM

NSGA-II

Pareto front: To generate the Pareto front by Algorithm 2.3.1, the RAND strategy is considered for selecting the initial point. 500 uniformly distributed random initial points between lower bound and upper bound are selected. Every test problem is executed 10 times with random initial points. The Pareto front of the test problem “BK1” in NSGA-II, NMSM, and WSM is provided in Fig. 2.1 with red, green, and blue stars, respectively.

2.5 Performance Profile

A performance profile is defined by a cumulative function \(\rho (\tau )\) representing a performance ratio with respect to a given metric, for a given set of solvers. Given a set of solvers S and a set of problems P, let \(\zeta _{p,s}\) be the performance of solver s on solving problem p. The performance ratio is then defined as \(r_{p,s}=\zeta _{p,s}/\underset{s\in S}{\min }\zeta _{p,s}\). The cumulative function \(\rho _s(\tau )~(s\in S)\) is defined as

$$\rho _s(\tau )=\frac{|\{p\in P:\ r_{p,s}\le \tau \}|}{|P|}.$$

It is observed that the performance profile is sensitive to the number and types of algorithms considered in the comparison; see [16]. So the algorithms are compared pairwise. In this chapter, the performance profiles are computed for the purity metric and for the \(\Gamma \) and \(\Delta \) spread metrics.
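The cumulative function \(\rho _s(\tau )\) is the fraction of problems on which the performance ratio of solver s does not exceed \(\tau \). A minimal Python sketch, assuming the performance values are stored in a nested dictionary `perf[s][p]` with positive entries where smaller is better (the data layout and the sample numbers are hypothetical):

```python
def performance_profile(perf, tau):
    """Return {solver: rho_s(tau)} for performance data perf[solver][problem] > 0."""
    solvers = list(perf)
    problems = list(perf[solvers[0]])
    rho = {}
    for s in solvers:
        solved = 0
        for p in problems:
            best = min(perf[t][p] for t in solvers)   # best performance on problem p
            if perf[s][p] / best <= tau:              # performance ratio r_{p,s}
                solved += 1
        rho[s] = solved / len(problems)
    return rho


# Hypothetical performance values for two solvers on two problems.
perf = {"A": {"p1": 1.0, "p2": 4.0}, "B": {"p1": 2.0, "p2": 2.0}}
profile_at_1 = performance_profile(perf, 1.0)   # share of problems where each solver is best
```

The value \(\rho _s(1)\) is the share of problems on which solver s attains the best performance, and \(\rho _s(\tau )\) for large \(\tau \) indicates robustness.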

Purity metric: Let \(P_{p,s}\) be the approximated Pareto front of problem p obtained by method s. Then an approximation to the true Pareto front \(P_p\) can be built by considering \(\underset{s\in S}{\cup }P_{p,s}\) first and removing the dominated points. The purity metric for algorithm s and problem p is defined by the ratio

$$\bar{t}_{p,s}=\frac{|P_{p,s}\cap P_p|}{|P_{p,s}|}.$$

Clearly, \(\bar{t}_{p,s}\in [0,1]\). When computing the performance profiles of the algorithms for the purity metric, it is required to set \(t_{p,s}'=1/\bar{t}_{p,s}\), since smaller values of a performance measure indicate better performance. \(\bar{t}_{p,s}=0\) implies that the algorithm is unable to solve p.
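The purity computation can be sketched in Python as follows: the reference front is built from the union of all approximated fronts by removing dominated points, and the purity of a solver is the fraction of its points that survive. The data layout (a dictionary of lists of objective vectors) and the sample fronts are assumptions for illustration.

```python
def dominates(a, b):
    """a <= b in every objective and a != b."""
    return all(u <= v for u, v in zip(a, b)) and any(u < v for u, v in zip(a, b))


def purity(fronts, s):
    """Purity of solver s, where fronts[solver] is a list of objective vectors."""
    union = {tuple(v) for front in fronts.values() for v in front}
    # Reference front: non-dominated points of the union over all solvers.
    reference = {v for v in union if not any(dominates(u, v) for u in union)}
    own = {tuple(v) for v in fronts[s]}
    return len(own & reference) / len(own)   # assumes solver s returned at least one point


# Hypothetical approximated fronts of two solvers on one problem.
fronts = {"A": [(0.0, 1.0), (1.0, 0.0)], "B": [(0.5, 0.5), (1.0, 1.0)]}
purity_A = purity(fronts, "A")
purity_B = purity(fronts, "B")
```

In this example every point of solver A lies on the reference front, while the point (1, 1) of solver B is dominated by (0.5, 0.5) and is discarded.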

Spread metrics: Two types of spread metrics (\(\Gamma \) and \(\Delta \)) are used in order to analyze whether the points generated by an algorithm are well-distributed in the approximated Pareto front of a given problem. Let \(x_1,~x_2,\ldots ,x_N\) be the set of points obtained by a solver s for problem p and let these points be sorted by objective function j, that is, \(f_j(x_i)\le f_j(x_{i+1})~(i=1,2,\ldots , N-1)\). Suppose \(x_0\) is the best known approximation of the global minimum of \(f_j\) and \(x_{N+1}\) is the best known approximation of the global maximum of \(f_j\), computed over all approximated Pareto fronts obtained by different solvers. Define

$$\delta _{i,j}=f_j(x_{i+1})-f_j(x_i),\quad i=0,1,\ldots ,N.$$

Then the \(\Gamma \) spread metric is defined by

$$\Gamma _{p,s}=\max _{j\in \{1,\ldots ,m\}}\ \max _{i\in \{0,1,\ldots ,N\}}\delta _{i,j}.$$

Define \(\bar{\delta }_j\) to be the average of the distances \(\delta _{i,j},i=1,2,\ldots ,N-1\). For an algorithm s and a problem p, the spread metric \(\Delta \) is

$$\Delta _{p,s}=\max _{j\in \{1,\ldots ,m\}}\frac{\delta _{0,j}+\delta _{N,j}+\sum _{i=1}^{N-1}|\delta _{i,j}-\bar{\delta }_j|}{\delta _{0,j}+\delta _{N,j}+(N-1)\bar{\delta }_j}.$$
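Both spread metrics can be computed from the sorted objective values of a front. The Python sketch below assumes the commonly used definitions: \(\Gamma \) is the largest gap between consecutive sorted values, including the gaps to the best known extremes, and \(\Delta \) measures how much the interior gaps deviate from their average. The function name, the argument layout, and the sample front are hypothetical.

```python
def spread_metrics(front, extremes):
    """Return (Gamma, Delta) for a list of objective vectors.

    extremes[j] = (best known minimum, best known maximum) of objective j,
    taken over the fronts of all solvers.
    """
    gamma, delta = 0.0, 0.0
    for j in range(len(front[0])):
        lo, hi = extremes[j]
        v = [lo] + sorted(f[j] for f in front) + [hi]
        gaps = [v[i + 1] - v[i] for i in range(len(v) - 1)]   # delta_{0,j}, ..., delta_{N,j}
        gamma = max(gamma, max(gaps))
        inner = gaps[1:-1]                                    # delta_{1,j}, ..., delta_{N-1,j}
        mean = sum(inner) / len(inner) if inner else 0.0
        num = gaps[0] + gaps[-1] + sum(abs(g - mean) for g in inner)
        den = gaps[0] + gaps[-1] + len(inner) * mean
        delta = max(delta, num / den if den > 0 else 0.0)
    return gamma, delta


# Hypothetical front with three points and extremes 0 and 1 in both objectives.
gamma, delta = spread_metrics([(0.0, 1.0), (0.25, 0.75), (1.0, 0.0)], [(0.0, 1.0), (0.0, 1.0)])
```

Smaller values of both metrics indicate a more evenly distributed front; an evenly spaced front that reaches both extremes gives \(\Delta =0\).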

Result Analysis: Figs. 2.2, 2.3, and 2.4 indicate the advantage of the proposed method (NMSM) over the existing methods WSM and NSGA-II. With the RAND strategy, NMSM has a better performance ratio than WSM and NSGA-II in the \(\Gamma \) metric, and a better performance ratio than NSGA-II in the purity and \(\Delta \) metrics, in most of the test problems. The tables of computational details also show that NMSM requires fewer iterations and less CPU time than WSM and NSGA-II in most of the test problems.

2.6 Conclusions

In this chapter, a Nelder-Mead simplex method is developed for solving unconstrained multi-objective optimization problems. The method is a modification of the existing Nelder-Mead simplex method for single-objective optimization problems. The iterative process is justified through numerical computations. Extending the method to constrained multi-objective programming problems and developing a better spreading technique to generate the Pareto points are left as future work.

References

Ansari, Q.H., Köbis, E., Yao, J.C.: Vector Variational Inequalities and Vector Optimization. Springer (2018)

Ansary, M.A.T., Panda, G.: A modified quasi-newton method for vector optimization problem. Optimization 64, 2289–2306 (2015)

Ansary, M.A.T., Panda, G.: A sequential quadratically constrained quadratic programming technique for a multi-objective optimization problem. Eng. Optim. 51(1), 22–41 (2019). https://doi.org/10.1080/0305215X.2018.1437154

Ansary, M.A.T., Panda, G.: A sequential quadratic programming method for constrained multi-objective optimization problems. J. Appl. Math. Comput. (2020). https://doi.org/10.1007/s12190-020-01359-y

Conn, A.R., Scheinberg, K., Vicente, L.N.: Introduction to Derivative-Free Optimization. SIAM (2009)

Deb, K.: Multi-Objective Genetic Algorithms: Problem Difficulties and Construction of Test Problems. Wiley India Pvt. Ltd., New Delhi, India (2003)

Deb, K.: Multi-objective Optimization Using Evolutionary Algorithms. Wiley India Pvt. Ltd., New Delhi, India (2003)

Ehrgott, M.: Multicriteria Optimization. Springer Publication, Berlin (2005)

Eichfelder, G.: An adaptive scalarization method in multiobjective optimization. SIAM J. Optim. 19(4), 1694–1718 (2009)

Engau, A., Wiecek, M.M.: Generating \(\epsilon \)-efficient solutions in multiobjective programming. Eur. J. Oper. Res. 177, 1566–1579 (2007)

Fliege, J., Graña Drummond, L.M., Svaiter, B.F.: Newton’s method for multiobjective optimization. SIAM J. Optim. 20(2), 602–626 (2009)

Fliege, J., Svaiter, B.F.: Steepest descent methods for multicriteria optimization. Math. Methods Oper. Res. 51(3), 479–494 (2000)

Fliege, J., Vaz, A.I.F.: A method for constrained multiobjective optimization based on SQP techniques. SIAM J. Optim. 26(4), 2091–2119 (2016)

Fonseca, C.M., Fleming, P.J.: Multiobjective optimization and multiple constraint handling with evolutionary algorithms. i. a unified formulation. IEEE Trans. Syst. Man Cybern. Part A: Syst. Hum. 28(1), 26–37 (1998)

Geoffrion, A.M.: Proper efficiency and the theory of vector maximization. J. Math. Anal. Appl. 22, 618–630 (1968)

Gould, N., Scott, J.: A note on performance profiles for benchmarking software. ACM Trans. Math. Softw. 43, 15 (2016)

Huband, S., Hingston, P., Barone, L., While, L.: A review of multiobjective test problems and a scalable test problem toolkit. IEEE Trans. Evol. Comput. 10(5), 477–506 (2006)

Hwang, C.L., Yoon, K.: Multiple Attribute Decision Making: Methods and Applications a State-of-the-Art Survey. Springer Science and Business Media (1981)

Jahn, J.: Vector Optimization. Theory, Applications, and Extensions. Springer, Berlin (2004)

Jin, Y., Olhofer, M., Sendhoff, B.: Dynamic weighted aggregation for evolutionary multi-objective optimization: Why does it work and how? pp. 1042–1049 (2001)

Li, Z., Wang, S.: \(\epsilon \)-approximate solutions in multiobjective optimization. Optimization 34, 161–174 (1998)

Lovison, A.: Singular continuation: generating piecewise linear approximations to pareto sets via global analysis. SIAM J. Optim. 21(2), 463–490 (2011)

Miettinen, K.M.: Nonlinear Multiobjective Optimization. Kluwer, Boston (1999)

Nelder, J.A., Mead, R.: A simplex method for function minimization. Comput. J. 7(4), 308–313 (1965). https://doi.org/10.1093/comjnl/7.4.308

Okabe, T., Jin, Y., Olhofer, M., Sendhoff, B.: On test functions for evolutionary multi-objective optimization. In: International Conference on Parallel Problem Solving from Nature, pp. 792–802. Springer (2004)

Preuss, M., Naujoks, B., Rudolph, G.: Pareto set and EMOA behavior for simple multimodal multiobjective functions, pp. 513–522 (2006)

Qu, S., Goh, M., Chan, F.T.S.: Quasi-newton methods for solving multiobjective optimization. Oper. Res. Lett. 39, 397–399 (2011)

Zitzler, E., Deb, K., Thiele, L.: Comparison of multiobjective evolutionary algorithms: empirical results. Evol. Comput. 8(2), 173–195 (2000)

Acknowledgements

We thank the anonymous reviewers for the valuable comments that greatly helped to improve the content of this chapter.

Appendix

© 2021 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Bisui, N.K., Mazumder, S., Panda, G. (2021). A Gradient-Free Method for Multi-objective Optimization Problem. In: Laha, V., Maréchal, P., Mishra, S.K. (eds) Optimization, Variational Analysis and Applications. IFSOVAA 2020. Springer Proceedings in Mathematics & Statistics, vol 355. Springer, Singapore. https://doi.org/10.1007/978-981-16-1819-2_2

DOI: https://doi.org/10.1007/978-981-16-1819-2_2

Publisher Name: Springer, Singapore

Print ISBN: 978-981-16-1818-5

Online ISBN: 978-981-16-1819-2

eBook Packages: Mathematics and Statistics (R0)