Abstract

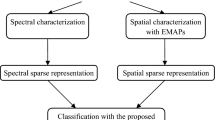

We propose a novel framework for the classification of hyperspectral data corrupted by severe degradation. The framework solves an optimization problem to extract discriminative features from noisy hyperspectral data; these features are then passed to a simple classifier which exploits both the spatial and spectral correlations in the data. Instead of directly extracting the features from the noisy input data, we learn a basis matrix from the underlying clean data using a combination of non-negative matrix factorization and nuclear-norm minimization. The input degraded data are then projected onto the basis vectors to obtain features. We use structural incoherence to maximize the discriminative ability of the features; to the best of our knowledge, this is its first use in the hyperspectral image processing literature. We show how the performance of classification algorithms deteriorates with the progressive addition of noise, while demonstrating the robustness of our algorithm. We evaluate our algorithm on three well-known datasets, namely Botswana, Salinas and Indian Pines, obtaining state-of-the-art results that validate its efficacy.


Keywords

- Hyperspectral image classification

- Structural incoherence

- Non-negative matrix factorization

- Nuclear-norm minimization

1 Introduction

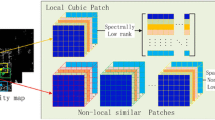

Hyperspectral image (HSI) classification, in which the task is to assign each pixel in a HSI to its respective class, has been an area of active research for over 20 years. HSI classification is challenging due to the high data dimensionality, the availability of very few labelled samples and the presence of noise. Noise damages both the spatial and spectral information available in the data, thereby drastically degrading the performance of classification algorithms. This motivates us to devise a novel technique which can achieve high classification accuracies even in the presence of a significant amount of noise. Algorithms used in the early days of research in HSI classification considered only the spectral signatures, which did not provide satisfactory classification results. However, recent works [4, 8, 9] acknowledge the contribution of the spatial information contained in a HSI in addition to the spectral information. The importance of spatial information stems from the simple hypothesis that nearby pixels in a HSI belong to the same class with high probability. In [12], the authors address the problem of robust face recognition when the data are corrupted by occlusion and disguise. They incorporate a structural incoherence constraint in order to make the bases learnt for different classes independent. This yields a higher discriminating ability and, in turn, an impressive classification performance. Inspired by their approach, in this work we aim to exploit both the spectral and spatial information and classify a severely degraded HSI by learning discriminative features. Instead of learning these features from the corrupted data, we learn them from the underlying clean data, which leads to state-of-the-art classification results.

2 Prior Work

Several methods which perform a pixelwise classification of HSIs have been proposed [10, 14, 23]. Sparse representation based classifiers have been used for HSI classification in [5, 6]. A combination of low rank and sparsity for HSI classification can be found in [8, 19, 21]. Collaborative representation based classifiers have been used in [9, 11]. In [1], extended morphological profiles were used for HSI classification. The authors in [22] used conditional random fields for HSI classification. The random forest framework was investigated for HSI classification in [7]. Owing to their excellent performance in the classification of images and videos, deep learning based methods have been extensively investigated for HSI classification. Stacked autoencoders (SAEs) [16], convolutional neural networks (CNNs) [13], deep belief networks (DBNs) [4] and deep recurrent neural networks (RNNs) [15] have been used for HSI classification. Recently, generative adversarial networks (GANs) have been introduced for the purpose of HSI classification [24]. However, these models are data hungry and are prone to overfitting due to the scarcity of available labelled samples. Hence, most of the recent research with deep models deals with finding new techniques to mitigate this problem.

3 Proposed Methodology

In this work, we present a novel framework wherein we combine non-negative matrix factorization (NMF), nuclear norm minimization (NNM) and structural incoherence to learn discriminative features from a degraded HSI. Given a HSI of size \(m \times n \times b\), the training samples are the b-dimensional spectral vectors. Let \(\mathbf {Y} \in \mathcal {R}^{b \times N}\) denote a matrix formed by stacking together all the N available training samples. Hence, for a HSI with C classes, \(\mathbf {Y}=[\mathbf {Y}_1, \mathbf {Y}_2, \dots ,\mathbf {Y}_C]\) where \(\mathbf {Y}_i =[\mathbf {y}^i_1, \mathbf {y}^i_2, \dots , \mathbf {y}^i_{n_i}] \in \mathcal {R}^{b \times n_i}\) (\(i= 1, 2, \dots , C\)) denotes the training samples belonging to class i and \(n_i\) is the number of available training samples from class i so that \(N=\sum _{i=1}^{C} n_i\). We model the data as:

$$\begin{aligned} \mathbf {Y}=\mathbf {L}+\mathbf {S}+\mathbf {N} \end{aligned} \tag{1}$$

where \(\mathbf {L}=[\mathbf {L}_1, \mathbf {L}_2, \dots , \mathbf {L}_C] \in \mathcal {R}^{b \times N}\) is a low rank matrix, \(\mathbf {S}=[\mathbf {S}_1, \mathbf {S}_2, \dots , \mathbf {S}_C] \in \mathcal {R}^{b \times N}\) is the matrix of sparse noise and \(\mathbf {N}=[\mathbf {N}_1, \mathbf {N}_2, \dots , \mathbf {N}_C] \in \mathcal {R}^{b \times N}\) denotes additive Gaussian noise. We aim to combine non-negative matrix factorization and nuclear norm minimization to simultaneously denoise the available data and learn a basis matrix for classification. We wish to solve the following optimization problem:

where \(\Vert \cdot \Vert _F\) and \(\Vert \cdot \Vert _*\), respectively, denote the Frobenius norm and the nuclear norm of a matrix, \(\alpha \), \(\beta \), \(\gamma \), \(\delta \) are positive parameters and \(\epsilon \) is a small constant whose value is fixed to 0.001. It is noteworthy that the basis matrix \(\mathbf {U}\) is learnt from the underlying clean data \(\mathbf {L}\) and not directly from the degraded training data \(\mathbf {Y}\). The third term in Eq. (2) corresponds to the structural incoherence [17], which measures the similarity between the derived low-rank matrices of different classes. Minimizing this term separately for every class therefore tends to make these derived matrices incoherent and enhances their discriminating ability. Accordingly, for the \(i^{th}\) class, we wish to solve:

We use the method of augmented Lagrangian multipliers (ALM) [18] to solve the above problem. To this end, we first introduce an auxiliary variable \(\mathbf {Z}_i\) as follows:

The augmented Lagrangian is as follows:

where \(\mathbf {M}_1\), \(\mathbf {M}_2\) are Lagrange multipliers, \(\mu \) is a positive parameter and \(\langle \cdot ,\cdot \rangle \) denotes the inner product. We now provide the update rules for each of the variables.

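- Computing \(\mathbf {L}_i\) with other variables fixed: With some algebraic manipulations, the \(\mathbf {L}_i\)-subproblem can be obtained from Eq. (5) as follows:

$$\begin{aligned} \min _{\mathbf {L}_i} \dfrac{\alpha }{2\mu } \Vert \mathbf {L}_i\Vert _* + \dfrac{1}{2} \Vert \mathbf {L}_i-\dfrac{1}{2}(\mathbf {Y}_i-\mathbf {S}_i+\mathbf {Z}_i+\dfrac{\mathbf {M}_1}{\mu }+\dfrac{\mathbf {M}_2}{\mu })\Vert _F^2 \end{aligned} \tag{6}$$

Here the two quadratic penalties \(\dfrac{\mu }{2}\Vert \mathbf {Y}_i-\mathbf {L}_i-\mathbf {S}_i+\dfrac{\mathbf {M}_1}{\mu }\Vert _F^2\) and \(\dfrac{\mu }{2}\Vert \mathbf {Z}_i-\mathbf {L}_i+\dfrac{\mathbf {M}_2}{\mu }\Vert _F^2\) have been merged into a single one centred at the average of their targets, which is the source of the factors \(\dfrac{1}{2\mu }\) and \(\dfrac{1}{2}\). Eq. (6) can be solved using singular value thresholding [3].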

- Computing \(\mathbf {S}_i\) fixing others: The \(\mathbf {S}_i\)-subproblem can be obtained from Eq. (5) as follows:

$$\begin{aligned} \min _{\mathbf {S}_i} \dfrac{\beta }{\mu } \Vert \mathbf {S}_i\Vert _1+\dfrac{1}{2}\Vert \mathbf {S}_i-(\mathbf {Y}_i-\mathbf {L}_i+\dfrac{\mathbf {M}_1}{\mu })\Vert _F^2 \end{aligned} \tag{7}$$

Eq. (7) can be solved using the soft shrinkage operation.

- Computation of \(\mathbf {Z}_i\): In order to achieve factorization of \(\mathbf {Z}_i\), the non-negativity constraint on \(\mathbf {Z}_i\) must be satisfied. To this end, we first introduce an indicator function as follows:

$$\begin{aligned} l _+(\mathbf {Z}_i)={\left\{ \begin{array}{ll} 0, &{}Z_{i_{m,n}}\ge 0 \;\;\;\; \forall m,n\\ \infty , &{} \text {otherwise} \end{array}\right. } \end{aligned} \tag{8}$$

where \(Z_{i_{m,n}}\) denotes the \((m,n)^{th}\) element of \(\mathbf {Z}_i\). The \(\mathbf {Z}_i\)-subproblem is then obtained from Eq. (5) as follows:

$$\begin{aligned} \min _{\mathbf {Z}_i} \gamma \sum _{j \ne i} \Vert {\mathbf {L}_j^T\mathbf {Z}_i}\Vert _F^2 + \delta \Vert \mathbf {Z}_i-(\mathbf {U}\mathbf {V})_i\Vert _F^2 +\dfrac{\mu }{2}\Vert \mathbf {Z}_i-\mathbf {L}_i+\dfrac{\mathbf {M}_2}{\mu }\Vert _F^2+ l _+(\mathbf {Z}_i) \end{aligned} \tag{9}$$

To solve this, we use the alternating direction method of multipliers (ADMM) [2]. To do so, we first introduce an auxiliary variable \(\mathbf {C}_i\) in order to make the objective function separable:

$$\begin{aligned} \min _{\mathbf {Z}_i}&\gamma \sum _{j \ne i} \Vert {\mathbf {L}_j^T\mathbf {Z}_i}\Vert _F^2 + \delta \Vert \mathbf {Z}_i-(\mathbf {U}\mathbf {V})_i\Vert _F^2 +\dfrac{\mu }{2}\Vert \mathbf {Z}_i-\mathbf {L}_i+\dfrac{\mathbf {M}_2}{\mu }\Vert _F^2+ l _+(\mathbf {C}_i) \\&\text{ s.t. } \mathbf {C}_i=\mathbf {Z}_i \end{aligned} \tag{10}$$

The above problem is solved iteratively by updating one of the variables while keeping the other fixed until convergence. The update equations are:

$$\begin{aligned} \mathbf {Z}_i=[(\delta +\dfrac{\mu }{2}+\dfrac{\rho }{2})\mathbf {I}+\gamma \sum _{j \ne i} {\mathbf {L}_j \mathbf {L}_j^T}]^{-1}[\delta (\mathbf {U}\mathbf {V})_i+\dfrac{1}{2}(\mu \mathbf {L}_i-\mathbf {M}_2-\mathbf {T}+\rho \mathbf {C}_i)] \end{aligned} \tag{11}$$

$$\begin{aligned} \mathbf {C}_i \leftarrow \max (\mathbf {Z}_i+ \dfrac{\mathbf {T}}{\rho },0) \end{aligned} \tag{12}$$

$$\begin{aligned} \mathbf {T}\leftarrow \mathbf {T}+\rho (\mathbf {Z}_i-\mathbf {C}_i) \end{aligned} \tag{13}$$

$$\begin{aligned} \rho \leftarrow \min (\kappa \rho ,\rho _{max}) \end{aligned} \tag{14}$$

where \(\mathbf {I}\) denotes the identity matrix of appropriate size, \(\mathbf {T}\) is the Lagrange multiplier and \(\rho > 0\), \(\kappa > 0\) and \(\rho _{max}\) are parameters. Eq. (11) follows from setting to zero the gradient, with respect to \(\mathbf {Z}_i\), of the objective in Eq. (10) augmented with \(\langle \mathbf {T},\mathbf {Z}_i-\mathbf {C}_i\rangle +\dfrac{\rho }{2}\Vert \mathbf {Z}_i-\mathbf {C}_i\Vert _F^2\), and halving both sides.

- Computation of \(\mathbf {U}\) and \(\mathbf {V}\):

$$\begin{aligned} \min _{\mathbf {U}\ge 0,\mathbf {V}\ge 0} \Vert \mathbf {Z}_i-(\mathbf {U}\mathbf {V})_i\Vert _F^2 \end{aligned} \tag{15}$$

The above optimization problem can be solved directly using any of the existing NMF solvers.

- The final step is to update the multipliers and \(\mu \):

$$\begin{aligned} \mathbf {M}_1 \leftarrow \mathbf {M}_1 +\mu (\mathbf {Y}_i-\mathbf {L}_i-\mathbf {S}_i) \end{aligned} \tag{16}$$

$$\begin{aligned} \mathbf {M}_2 \leftarrow \mathbf {M}_2 +\mu (\mathbf {Z}_i-\mathbf {L}_i) \end{aligned} \tag{17}$$

$$\begin{aligned} \mu \leftarrow \min (\psi \mu ,\mu _{max}) \end{aligned} \tag{18}$$
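The building blocks of the update rules above can be sketched in NumPy as follows. This is a minimal illustration and not the authors' implementation. The function names, the default values of `rho`, `kappa` and `rho_max`, the iteration counts and the choice of Lee-Seung multiplicative updates as the NMF solver are all assumptions. The `z_step` routine solves the linear system obtained by setting to zero the gradient of the smooth part of the \(\mathbf {Z}_i\)-subproblem, augmented with \(\langle \mathbf {T},\mathbf {Z}_i-\mathbf {C}_i\rangle +\dfrac{\rho }{2}\Vert \mathbf {Z}_i-\mathbf {C}_i\Vert _F^2\).

```python
import numpy as np


def svt(A, tau):
    """Singular value thresholding: argmin_X tau*||X||_* + 0.5*||X - A||_F^2."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt


def soft_shrink(A, tau):
    """Elementwise soft shrinkage: argmin_X tau*||X||_1 + 0.5*||X - A||_F^2."""
    return np.sign(A) * np.maximum(np.abs(A) - tau, 0.0)


def z_step(L_i, L_others, UV_i, M2, gamma, delta, mu,
           rho=1.0, kappa=1.5, rho_max=1e6, n_iter=50):
    """Inner ADMM loop for the Z_i-subproblem with the split C_i = Z_i.

    L_others is a list of the low-rank matrices L_j, j != i (hypothetical
    argument layout). Defaults for rho, kappa, rho_max are assumptions.
    """
    b = L_i.shape[0]
    G = sum(Lj @ Lj.T for Lj in L_others)  # sum_j L_j L_j^T, shape (b, b)
    C = np.maximum(L_i, 0.0)
    T = np.zeros_like(L_i)
    for _ in range(n_iter):
        # Zero-gradient condition of the smooth terms: a (b x b) linear system.
        lhs = 2.0 * gamma * G + (2.0 * delta + mu + rho) * np.eye(b)
        rhs = 2.0 * delta * UV_i + mu * L_i - M2 - T + rho * C
        Z = np.linalg.solve(lhs, rhs)
        C = np.maximum(Z + T / rho, 0.0)  # projection onto the non-negative orthant
        T = T + rho * (Z - C)             # multiplier update
        rho = min(kappa * rho, rho_max)   # penalty growth
    return C


def nmf_multiplicative(Z, r, n_iter=200, eps=1e-9, seed=0):
    """Rank-r NMF of a non-negative Z via Lee-Seung multiplicative updates."""
    rng = np.random.default_rng(seed)
    b, n = Z.shape
    U = rng.random((b, r)) + eps
    V = rng.random((r, n)) + eps
    for _ in range(n_iter):
        V *= (U.T @ Z) / (U.T @ U @ V + eps)
        U *= (Z @ V.T) / (U @ V @ V.T + eps)
    return U, V
```

With these helpers, the \(\mathbf {S}_i\) update is `soft_shrink(Y_i - L_i + M1 / mu, beta / mu)`, and the \(\mathbf {L}_i\) update is a single call to `svt` with threshold `alpha / (2 * mu)`.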

Once we obtain the basis matrix \(\mathbf {U}\) learnt from the underlying clean data \(\mathbf {L}\), we project all the degraded training and testing data onto \(\mathbf {U}\) to obtain discriminative features which are subsequently used for classification. For any spectral vector \(\mathbf {y}\), we obtain its feature vector \(\mathbf {f}\) as follows:

$$\begin{aligned} \mathbf {f}=\mathbf {U}^{\dagger }\mathbf {y} \end{aligned} \tag{19}$$

where \(\mathbf {U}^{\dagger }\) denotes the pseudoinverse of \(\mathbf {U}\). We propose to use a very simple classifier to classify these obtained features with high accuracy. For a test feature \(\mathbf {f}_{test}\) and the training features \(\mathbf {f}_i, i=1,2, \dots , N\), let the spatial positions of the test and training features (corresponding to the test and training spectral vectors, respectively) be denoted by \(\mathbf {p}_{test}=[x,y]^T\) and \(\mathbf {p}_i=[x_i,y_i]^T\), respectively. Let \(d_1=dist(\mathbf {p}_{test}, \mathbf {p}_i)\) and \(d_2 = dist(\mathbf {f}_{test}, \mathbf {f}_i)\), where \(dist(\mathbf {a,b})\) denotes the squared Euclidean distance between the vectors \(\mathbf {a}\) and \(\mathbf {b}\). Then we propose to define

$$\begin{aligned} \alpha _1 d_1+\alpha _2 d_2 \end{aligned} \tag{20}$$

as the dissimilarity between the test feature \(\mathbf {f}_{test}\) and the training feature \(\mathbf {f}_i\). The test sample is then assigned to the class of the training sample for which this dissimilarity is minimum. \(d_1\) takes into account the spatial correlation, acknowledging the fact that pixels close to each other belong to the same class with a high probability, while \(d_2\) is the squared Euclidean distance between the test and training features. Note that setting \(\alpha _1\) to zero leads to the nearest neighbour classifier.
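The feature extraction and classification rule can be sketched as below. This is an illustrative sketch and not the authors' code. The function names and array layouts are hypothetical, and the dissimilarity is assumed to be the weighted sum \(\alpha _1 d_1+\alpha _2 d_2\) of the two squared Euclidean distances, which is consistent with \(\alpha _1=0\) reducing to a nearest neighbour classifier.

```python
import numpy as np


def extract_features(U, Y):
    """Project spectral vectors (columns of Y, shape (b, n)) onto the basis U (b, r)."""
    return np.linalg.pinv(U) @ Y


def classify(F_test, P_test, F_train, P_train, labels_train, alpha1, alpha2):
    """Label each test sample with the class of its least dissimilar training sample.

    F_* hold features as columns, shape (r, n); P_* hold pixel positions as
    rows, shape (n, 2). This layout is an assumption made for the sketch.
    """
    # Squared Euclidean distances, each of shape (n_test, n_train).
    d1 = ((P_test[:, None, :] - P_train[None, :, :]) ** 2).sum(axis=2)
    d2 = ((F_test.T[:, None, :] - F_train.T[None, :, :]) ** 2).sum(axis=2)
    d = alpha1 * d1 + alpha2 * d2  # assumed weighted-sum dissimilarity
    return labels_train[np.argmin(d, axis=1)]
```

The pairwise distance matrices grow as the product of the numbers of test and training samples, so a full-size scene would likely need to be processed in batches of test pixels.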

4 Experimental Results

In order to assess the performance of our algorithm, we use three HSI datasets. We synthetically corrupt these datasets by adding Gaussian noise with a standard deviation of 0.05. Note that the spectral vectors are normalized to the range [0, 1]. We also add impulse noise and stripes to bands 61 to 70 in all three datasets. We randomly choose 10% of the labelled samples from each class for training and use the rest for testing. We compare our algorithm with SVM [14], SRC [20] and CRNN [11]. The classification performance is measured by the overall accuracy (OA), defined as the ratio of the number of correctly predicted pixels to the total number of test pixels. The robustness of our algorithm to noise is analysed by gradually increasing the amount of added noise and monitoring its effect on the class accuracies in comparison with the other algorithms. The parameters \(\alpha ,\beta ,\gamma ,\delta ,\alpha _1\) and \(\alpha _2\) are tuned to obtain the best results.
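A possible way to reproduce this corruption protocol and the OA metric is sketched below. Only the Gaussian standard deviation (0.05) and the affected bands (61 to 70) come from the text. The impulse-noise fraction, the number of stripes, the stripe model (zeroed columns) and the reading of band numbers as 1-based are assumptions, and the function names are hypothetical.

```python
import numpy as np


def corrupt(cube, sigma=0.05, bands=range(60, 70), impulse_frac=0.05,
            n_stripes=10, seed=0):
    """Add Gaussian noise to all bands, plus impulse noise and stripes to `bands`.

    `cube` has shape (m, n, b) with values in [0, 1]. `bands` uses 0-based
    indices, so range(60, 70) covers bands 61 to 70 counted from 1.
    """
    rng = np.random.default_rng(seed)
    noisy = cube + rng.normal(0.0, sigma, size=cube.shape)
    m, n, _ = cube.shape
    for k in bands:
        # Salt-and-pepper impulse noise on a random fraction of the pixels.
        mask = rng.random((m, n)) < impulse_frac
        noisy[mask, k] = rng.integers(0, 2, size=mask.sum()).astype(float)
        # Vertical stripes: zero out randomly chosen columns (one stripe model).
        cols = rng.choice(n, size=min(n_stripes, n), replace=False)
        noisy[:, cols, k] = 0.0
    return noisy


def overall_accuracy(y_true, y_pred):
    """Ratio of correctly predicted test pixels to the total number of test pixels."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))
```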

4.1 Datasets

The datasets used are Indian Pines, Botswana and Salinas. The Indian Pines dataset has a size of 145 \(\times \) 145 \(\times \) 200 and has 16 classes. The Botswana dataset has a size of 1476 \(\times \) 256 \(\times \) 145 and has 14 classes. The third dataset is the Salinas dataset, which has a size of 512 \(\times \) 217 \(\times \) 204 and has 16 classes. With only 326 labelled training samples, the Botswana dataset is the most challenging of the three owing to the scarcity of training samples.
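For cubes of these sizes, the spectral vectors and the per-class 10% training split might be prepared as in the following sketch. The function names are hypothetical, and the convention that label 0 marks unlabelled pixels is an assumption about how the ground truth is stored.

```python
import numpy as np


def cube_to_matrix(cube):
    """Reshape an (m, n, b) cube into a (b, m*n) matrix of spectral vectors."""
    m, n, b = cube.shape
    return cube.reshape(m * n, b).T


def stratified_split(labels, train_frac=0.10, seed=0):
    """Pick train_frac of the labelled pixels of each class for training.

    `labels` is a flat array of length m*n with 0 for unlabelled pixels
    (an assumed convention). Returns index arrays (train_idx, test_idx).
    """
    rng = np.random.default_rng(seed)
    train, test = [], []
    for c in np.unique(labels[labels > 0]):
        idx = rng.permutation(np.flatnonzero(labels == c))
        k = max(1, int(round(train_frac * idx.size)))  # at least one sample per class
        train.append(idx[:k])
        test.append(idx[k:])
    return np.concatenate(train), np.concatenate(test)
```

Column `r * n + c` of the returned matrix is the spectrum of the pixel at row `r` and column `c`, so the indices produced by `stratified_split` on the flattened ground truth select matching columns.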

4.2 Classification Performance

The performance of the classifiers on the three synthetically corrupted datasets is reported in Tables 1, 2 and 3. We find that our algorithm achieves accuracies of 97.39%, 99.85% and 98.28% on the Botswana, Salinas and Indian Pines datasets, respectively, outperforming the other methods. On the Salinas dataset, CRNN achieves the next best accuracy of 90.42%, while SVM achieves the next best accuracies of 30.15% and 52.03% on the Botswana and Indian Pines datasets, respectively. Note that our algorithm outperforms the other state-of-the-art methods on these two datasets by a very large margin. Figure 1 depicts the sensitivity of the classifiers to noise. We progressively increase the standard deviation of the Gaussian noise in increments of 0.005 up to 0.1 and examine the effect on the class-specific accuracies obtained by the algorithms. From Fig. 1, we infer that our algorithm is robust to noise in the data, since its class-specific accuracies remain fairly constant while the performance of all the other methods deteriorates sharply as the noise level increases.
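The noise sweep and the class-specific accuracies can be computed along the following lines. This is a sketch under assumptions: the text gives the increment (0.005) and the upper limit (0.1) but not the starting level, which is taken here to be the 0.05 used in the main experiments, and both function names are hypothetical.

```python
import numpy as np


def noise_levels(start=0.05, stop=0.10, step=0.005):
    """Standard deviations from start to stop (inclusive) in fixed increments."""
    n = int(round((stop - start) / step)) + 1
    return start + step * np.arange(n)


def class_accuracies(y_true, y_pred):
    """Fraction of correctly labelled test pixels, reported per class."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    return {int(c): float(np.mean(y_pred[y_true == c] == c))
            for c in np.unique(y_true)}
```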

5 Conclusion

A novel algorithm for the classification of degraded hyperspectral data is proposed. A combination of nuclear norm minimization and non-negative matrix factorization is used to exploit the low rank nature of the data. A basis matrix is learnt from the underlying clean data and is used to extract features from the input degraded data. The discriminative ability of the underlying clean data is exploited using structural incoherence which, to the best of our knowledge, is being introduced for the first time in the hyperspectral image processing literature. Both the spatial and spectral information are exploited for classification, which leads to state-of-the-art results.

References

1. Benediktsson, J.A., Palmason, J.A., Sveinsson, J.R.: Classification of hyperspectral data from urban areas based on extended morphological profiles. IEEE Trans. Geosci. Remote Sens. 43(3), 480–491 (2005)

2. Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J., et al.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 3(1), 1–122 (2011)

3. Cai, J.F., Candès, E.J., Shen, Z.: A singular value thresholding algorithm for matrix completion. SIAM J. Opt. 20(4), 1956–1982 (2010)

4. Chen, Y., Zhao, X., Jia, X.: Spectral-spatial classification of hyperspectral data based on deep belief network. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 8(6), 2381–2392 (2015)

5. Chen, Y., Nasrabadi, N.M., Tran, T.D.: Hyperspectral image classification using dictionary-based sparse representation. IEEE Trans. Geosci. Remote Sens. 49(10), 3973–3985 (2011)

6. Chen, Y., Nasrabadi, N.M., Tran, T.D.: Hyperspectral image classification via kernel sparse representation. IEEE Trans. Geosci. Remote Sens. 51(1), 217–231 (2012)

7. Ham, J., Chen, Y., Crawford, M.M., Ghosh, J.: Investigation of the random forest framework for classification of hyperspectral data. IEEE Trans. Geosci. Remote Sens. 43(3), 492–501 (2005)

8. Jia, S., Zhang, X., Li, Q.: Spectral-spatial hyperspectral image classification using \(\ell _{1/2}\) regularized low-rank representation and sparse representation-based graph cuts. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 8(6), 2473–2484 (2015)

9. Jiang, J., Chen, C., Yu, Y., Jiang, X., Ma, J.: Spatial-aware collaborative representation for hyperspectral remote sensing image classification. IEEE Geosci. Remote Sens. Lett. 14(3), 404–408 (2017)

10. Li, J., Bioucas-Dias, J.M., Plaza, A.: Semisupervised hyperspectral image segmentation using multinomial logistic regression with active learning. IEEE Trans. Geosci. Remote Sens. 48(11), 4085–4098 (2010)

11. Li, W., Du, Q., Zhang, F., Hu, W.: Collaborative-representation-based nearest neighbor classifier for hyperspectral imagery. IEEE Geosci. Remote Sens. Lett. 12(2), 389–393 (2015)

12. Lu, Y., Yuan, C., Zhu, W., Li, X.: Structurally incoherent low-rank nonnegative matrix factorization for image classification. IEEE Trans. Image Process. 27(11), 5248–5260 (2018)

13. Makantasis, K., Karantzalos, K., Doulamis, A., Doulamis, N.: Deep supervised learning for hyperspectral data classification through convolutional neural networks. In: Proceedings IGARSS, pp. 4959–4962. IEEE (2015)

14. Melgani, F., Bruzzone, L.: Classification of hyperspectral remote sensing images with support vector machines. IEEE Trans. Geosci. Remote Sens. 42(8), 1778–1790 (2004)

15. Mou, L., Ghamisi, P., Zhu, X.X.: Deep recurrent neural networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 55(7), 3639–3655 (2017)

16. Ozdemir, A., Gedik, B., Cetin, C.: Hyperspectral classification using stacked autoencoders with deep learning. In: Proceedings WHISPERS, pp. 1–4. IEEE (2014)

17. Ramirez, I., Sprechmann, P., Sapiro, G.: Classification and clustering via dictionary learning with structured incoherence and shared features. In: Proceedings CVPR, pp. 3501–3508. IEEE (2010)

18. Rockafellar, R.T.: Augmented lagrange multiplier functions and duality in nonconvex programming. SIAM J. Contr. 12(2), 268–285 (1974)

19. Sun, W., Yang, G., Du, B., Zhang, L., Zhang, L.: A sparse and low-rank near-isometric linear embedding method for feature extraction in hyperspectral imagery classification. IEEE Trans. Geosci. Remote Sens. 55(7), 4032–4046 (2017)

20. Wright, J., Yang, A.Y., Ganesh, A., Sastry, S.S., Ma, Y.: Robust face recognition via sparse representation. IEEE Trans. Pattern Anal. Mach. Intell. 31(2), 210–227 (2009)

21. Zhao, Y., Yang, J.: Hyperspectral image denoising via sparse representation and low-rank constraint. IEEE Trans. Geosci. Remote Sens. 53(1), 296–308 (2015)

22. Zhong, P., Wang, R.: Learning conditional random fields for classification of hyperspectral images. IEEE Trans. Image Process. 19(7), 1890–1907 (2010)

23. Zhong, Y., Zhang, L.: An adaptive artificial immune network for supervised classification of multi/hyperspectral remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 50(3), 894–909 (2012)

24. Zhu, L., Chen, Y., Ghamisi, P., Benediktsson, J.A.: Generative adversarial networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 56(9), 5046–5063 (2018)


© 2020 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Sarkar, S., Sahay, R.R. (2020). LR-HyClassify: A Low Rank Based Framework for Classification of Degraded Hyperspectral Images. In: Babu, R.V., Prasanna, M., Namboodiri, V.P. (eds) Computer Vision, Pattern Recognition, Image Processing, and Graphics. NCVPRIPG 2019. Communications in Computer and Information Science, vol 1249. Springer, Singapore. https://doi.org/10.1007/978-981-15-8697-2_35


DOI: https://doi.org/10.1007/978-981-15-8697-2_35


Publisher Name: Springer, Singapore

Print ISBN: 978-981-15-8696-5

Online ISBN: 978-981-15-8697-2

eBook Packages: Computer Science, Computer Science (R0)