Abstract

To design an effective multi-objective evolutionary algorithm (MOEA), the following issues need to be addressed: 1) decomposition-based MOEAs are sensitive to the shape of the true Pareto front (PF); 2) domination-based MOEAs lose diversity because they focus heavily on convergence; 3) grid-based MOEAs suffer from the curse of dimensionality on many-objective optimization problems. This paper proposes an MOEA based on space partitioning (MOEA-SP) to address these issues. In MOEA-SP, the subspaces produced by a k-dimensional tree (kd-tree) are sorted according to a bi-indicator criterion defined in this paper. Subspace-oriented selection and Max-Min selection are introduced to increase selection pressure and maintain diversity, respectively. Experimental studies show that MOEA-SP outperforms several compared algorithms on some benchmark problems.




1 Introduction

Multi-objective optimization problems (MOPs) are common in engineering practice. Because the objectives conflict, an MOP has no single optimal solution but rather a set of trade-off optimal solutions. Multi-objective evolutionary algorithms (MOEAs), which can obtain a set of approximately optimal solutions in a single run, have become a useful tool for solving MOPs.

Over the past decades, with the development of evolutionary multi-objective optimization (EMO) research, domination-based and decomposition-based algorithms have attracted considerable attention from researchers.

One of the most classical domination-based MOEAs is the nondominated sorting genetic algorithm II (NSGA-II) [1], which selects offspring according to elitist nondominated sorting and a density-estimation metric. However, it devotes too much effort to convergence during evolution, which results in a loss of diversity.

One of the most classical decomposition-based MOEAs is the MOEA based on decomposition (MOEA/D) [2], which decomposes an MOP into multiple single-objective subproblems. Although this simplifies the problem, several issues remain. In particular, decomposition approaches are very sensitive to the shape of the PF. For example, the weighted sum (WS) approach cannot find Pareto-optimal solutions in the nonconvex parts of a PF, and on an extremely convex PF the solutions obtained by the Tchebycheff (TCH) approach are not uniformly distributed.
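The following Python sketch illustrates the WS limitation on a sampled quarter-circle front \(f_{1}^{2}+f_{2}^{2}=1\), a standard nonconvex example chosen here for illustration rather than one of the paper's benchmarks. It uses the usual WS and TCH definitions with the ideal point at the origin; the sample size and the nine weight vectors are hypothetical choices. WS only ever returns the two end points of the front, whereas TCH returns a different interior point for each weight vector.

```python
import math

def concave_front(n=101):
    """Sample the nonconvex front f1^2 + f2^2 = 1 with f1, f2 >= 0 (minimization)."""
    step = (math.pi / 2) / (n - 1)
    return [(math.cos(k * step), math.sin(k * step)) for k in range(n)]

def weighted_sum(f, w):
    return w[0] * f[0] + w[1] * f[1]

def tchebycheff(f, w, z=(0.0, 0.0)):
    # z is the ideal (reference) point; (0, 0) is the ideal point of this front
    return max(w[0] * abs(f[0] - z[0]), w[1] * abs(f[1] - z[1]))

def best(points, g, w):
    """Return the sampled point that minimizes scalarizing function g under weights w."""
    return min(points, key=lambda f: g(f, w))

points = concave_front()
weights = [(i / 10, 1 - i / 10) for i in range(1, 10)]
ws_solutions = {best(points, weighted_sum, w) for w in weights}
tch_solutions = {best(points, tchebycheff, w) for w in weights}
# WS collapses onto the two end points; TCH spreads along the front
print(len(ws_solutions), len(tch_solutions))
```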

To address this sensitivity, some researchers have proposed grid-based MOEAs, such as the grid-based evolutionary algorithm (GrEA) [3] and the constrained decomposition approach with grids for MOEA (CDG-MOEA) [4]. Both algorithms evenly partition each dimension (i.e., objective), which helps maintain diversity. However, as the number of objectives increases, the number of grids increases exponentially, resulting in the curse of dimensionality.

In this paper, an MOEA based on space partitioning (MOEA-SP) is proposed to address the above issues. In MOEA-SP, the subspaces produced by a k-dimensional tree (kd-tree) are sorted according to a bi-indicator criterion defined in this paper. The two indicators, the dominance degree and the niche count, measure convergence and diversity, respectively. A historical archive is also introduced to push the population toward the true PF while keeping it evenly distributed.

The rest of this paper is organized as follows. Section 2 reviews related work on decomposition-based and grid-based MOEAs. Section 3 describes MOEA-SP in detail. Section 4 presents the experimental studies. Finally, Sect. 5 concludes the paper.

2 Related Work

This section discusses related work regarding the improvement of decomposition-based and grid-based MOEAs.

The weight vector generation method in MOEA/D makes the population size inflexible, and the generated weight vectors are not uniformly distributed. To address these issues, researchers have proposed several improved methods. For example, Fang et al. [5] combined a transformation with the uniform design (UD) method. Deb et al. [6] proposed a two-layer weight vector generation method with a boundary layer and an inside layer: the weight vectors of the inside layer are shrunk by a coordinate transformation, and the weight vectors of both layers are then merged into a single set.
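MOEA/D is commonly paired with a simplex-lattice design, in which every component of a weight vector is a multiple of \(1/H\) and the components sum to 1, giving \(\binom{H+M-1}{M-1}\) vectors. The sketch below is a generic illustration of that design (not code from the paper) and shows why the population size is inflexible: for \(M=3\), the achievable sizes jump from 190 to 210, so exactly 200 is impossible.

```python
from itertools import combinations
from math import comb

def simplex_lattice(M, H):
    """All weight vectors whose M components are multiples of 1/H and sum to 1.

    Stars-and-bars enumeration: choosing M-1 "bar" positions among H+M-1
    slots splits H "stars" into M non-negative integer counts.
    """
    vectors = []
    slots = H + M - 1
    for bars in combinations(range(slots), M - 1):
        counts, prev = [], -1
        for b in bars:
            counts.append(b - prev - 1)   # stars between consecutive bars
            prev = b
        counts.append(slots - prev - 1)   # stars after the last bar
        vectors.append(tuple(c / H for c in counts))
    return vectors

# For M = 3 the number of vectors equals comb(H + 2, 2)
for H in (18, 19):
    print(H, len(simplex_lattice(3, H)), comb(H + 2, 2))
```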

The three decomposition methods of MOEA/D (WS, TCH, and PBI) are sensitive to the shape of the PF and struggle on nonconvex or extremely convex PFs. For penalty-based boundary intersection (PBI), the penalty parameter is also difficult to set. Improved methods include inverted PBI (IPBI) [7], new penalty schemes [8], and the angle-penalized distance (APD) decomposition method [9]. Although these methods improve performance on some MOPs, they remain sensitive to the shape of the PF, and their penalty parameters remain difficult to set. To overcome the shortcomings of traditional decomposition methods, Liu et al. [10] proposed an alternative decomposition method that uses a set of reference vectors to divide the objective space into multiple subspaces and assigns a subpopulation to evolve in each subspace, so that no traditional decomposition method is needed.
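To make the penalty-parameter difficulty concrete, the sketch below implements the standard PBI function \(g=d_{1}+\theta d_{2}\), where \(d_{1}\) is the distance from the ideal point along the weight direction and \(d_{2}\) is the perpendicular distance to that direction. The weight vector, ideal point, candidate points, and the two \(\theta\) values are illustrative choices (\(\theta=5\) is a common default). The preferred candidate flips as \(\theta\) changes.

```python
import math

def pbi(f, w, z, theta=5.0):
    """Penalty-based boundary intersection value g = d1 + theta * d2 (minimized)."""
    norm_w = math.sqrt(sum(wi * wi for wi in w))
    unit = [wi / norm_w for wi in w]
    diff = [fi - zi for fi, zi in zip(f, z)]
    # d1: length of the projection of F(x) - z onto the weight direction
    d1 = abs(sum(di * ui for di, ui in zip(diff, unit)))
    # d2: distance from F(x) to the point z + d1 * unit on the weight direction
    d2 = math.sqrt(sum((di - d1 * ui) ** 2 for di, ui in zip(diff, unit)))
    return d1 + theta * d2

w, z = (1.0, 1.0), (0.0, 0.0)
a, b = (0.5, 0.5), (0.2, 0.6)   # a lies on the weight direction, b does not
for theta in (0.1, 5.0):
    preferred = "b" if pbi(b, w, z, theta) < pbi(a, w, z, theta) else "a"
    print(theta, "prefers", preferred)
```

With \(\theta=0.1\) the off-direction point b wins because its projection is shorter; with \(\theta=5\) the perpendicular penalty dominates and a wins.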

The neighborhood can have a significant impact on offspring generation and environmental selection, so an improperly defined neighborhood relationship may mislead the algorithm. To define a proper neighborhood, Zhao et al. [11] proposed dynamically adjusting the neighborhood structure according to the distribution of the current population. To address the loss of diversity caused by a large replacement area during selection, Li et al. [12] introduced a maximum number of old solutions that a new solution may replace. Zhang et al. [13] proposed a greedy replacement strategy that calculates the improvement of the new solution on each subproblem and then uses the new solution to replace the solutions of the two subproblems with the largest improvement.

Although there are many improved methods, they cannot fundamentally overcome the above limitations. To overcome the sensitivity to the shape of the PF and the loss of diversity in decomposition-based methods, Cai et al. [4] proposed CDG-MOEA, which uniformly divides the objective space into \(M\times K^{M-1}\) subproblems (where K is the grid decomposition parameter and M is the number of objectives) and selects offspring using a decomposition-based ranking and lexicographic sorting method. CDG-MOEA is very effective at maintaining diversity. However, as the number of objectives increases, the number of subproblems increases exponentially, resulting in the curse of dimensionality.
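The growth of the CDG-MOEA grid can be tabulated directly from \(M\times K^{M-1}\). The sketch below uses an illustrative \(K=10\), a hypothetical setting not taken from the paper.

```python
def cdg_subproblem_count(M, K):
    """Number of subproblems M * K**(M - 1) in CDG-MOEA's grid decomposition."""
    return M * K ** (M - 1)

for M in (2, 3, 5, 10):
    print(M, cdg_subproblem_count(M, K=10))
```

Going from 3 to 10 objectives raises the count from 300 to \(10^{10}\), which is the exponential blow-up described above.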

3 MOEA Based on Space Partitioning

This section describes the design of the proposed algorithm. The main idea of MOEA-SP is to partition the objective space into a set of subspaces, sort the subspaces, and then select offspring according to the ranks of the subspaces.

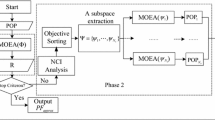

3.1 The Framework of MOEA-SP

Algorithm 1 gives the framework of MOEA-SP. MOEA-SP starts with initialization and then repeatedly generates offspring, partitions the objective space, and selects the next population (i.e., environmental selection) until the termination condition is satisfied. Environmental selection mainly consists of nondominated sorting of the subspaces, subspace-oriented selection, and Max-Min selection.

3.2 Subspace-Oriented Domination and Sorting

In MOEA-SP, the objective space is partitioned into multiple subspaces by a kd-tree. The set of subspaces is denoted by \(R=\left\{ s_{1},~s_{2},~...~,~s_{n}\right\} \), where each subspace may contain several individuals. In Fig. 1a, the objective space is partitioned into twenty subspaces, eleven of which contain individuals and are labeled \(s_{1},...,s_{11}\).
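The paper does not specify how the kd-tree chooses its splits, so the following is only a minimal sketch under stated assumptions: each node cuts its box at the median of the points it holds, cycling through the objectives, and stops when a leaf holds at most `leaf_size` points (a hypothetical parameter). The leaves tile the initial box, and every point lands in exactly one axis-aligned subspace.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]
Leaf = Tuple[List[float], List[float], List[Point]]   # (lower corner, upper corner, members)

def kd_partition(points: Sequence[Point], lo: Sequence[float], hi: Sequence[float],
                 leaf_size: int = 2, depth: int = 0) -> List[Leaf]:
    """Split the box [lo, hi] recursively at the median point along objective
    depth % M until each leaf holds at most leaf_size points (leaf_size >= 1)."""
    if len(points) <= leaf_size:
        return [(list(lo), list(hi), list(points))]
    axis = depth % len(lo)
    ordered = sorted(points, key=lambda p: p[axis])
    mid = len(ordered) // 2
    cut = ordered[mid][axis]
    left_hi = list(hi)
    left_hi[axis] = cut       # left child covers [lo, cut] on this objective
    right_lo = list(lo)
    right_lo[axis] = cut      # right child covers [cut, hi] on this objective
    return (kd_partition(ordered[:mid], lo, left_hi, leaf_size, depth + 1)
            + kd_partition(ordered[mid:], right_lo, hi, leaf_size, depth + 1))

pts = [(0.1, 0.9), (0.2, 0.7), (0.3, 0.6), (0.4, 0.5),
       (0.5, 0.4), (0.6, 0.3), (0.7, 0.2), (0.9, 0.1)]
leaves = kd_partition(pts, lo=(0.0, 0.0), hi=(1.0, 1.0))
for lo, hi, members in leaves:
    print(lo, hi, members)
```

Because this variant derives every cut from the points themselves, it never produces the empty subspaces seen in Fig. 1a; building the tree from a separate set of sample points would.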

Fig. 1. (a) Selection in MOEA-SP with \(|A^{r}|<N\). (b) Selection in NSGA-II. Solid circles represent individuals, and darker circles represent the selected individuals. Individuals connected by dotted lines belong to the same level after nondominated sorting. Note that in (a), individuals are located in the gray subspaces (i.e., \(s_{1},...,s_{11}\)), and the historical archive contains twenty-one individuals in total.

This paper defines the following subspace-oriented concepts: the neighborhood N(x), the niche count (nc), the dominance ratio (dr), the dominance matrix (D), and the dominance degree (dd).

The neighborhood N(x) of an individual x is defined as the set of individuals located in the neighboring subspaces of the subspace to which x belongs; note that a subspace belongs to its own neighborhood. In Fig. 1a, \(x_{1}\) belongs to \(s_{2}\), whose neighborhood consists of subspaces \(s_{1},~s_{2},~s_{5}\). These contain five individuals, \(x_{1},~x_{2},~x_{4},~x_{8},~x_{13}\), so \(N(x_{1})=\left\{ x_{1},~x_{2},~x_{4},~x_{8},~x_{13}\right\} \).

The niche count (nc) of a subspace is defined as the total number of individuals of the historical archive A located in its neighboring subspaces (including itself). The niche counts of all subspaces are recorded as \(NC=\left\{ nc(s_{1}),...,nc(s_{n})\right\} \), as computed in lines 9 to 12 of Algorithm 2. For example, the neighborhood of subspace \(s_{2}\) consists of subspaces \(s_{1}\), \(s_{2}\), and \(s_{5}\), which together contain five individuals (\(x_{1},~x_{2},~x_{4},~x_{8},~\text {and}~x_{13}\)), so \(nc(s_{2})=5\). The niche counts of all subspaces in this example are \(NC=\left\{ 3,~5,~2,~4,~5,~9,~11,~7,~6,~9,~9\right\} \).
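A sketch of the niche count, assuming that two subspaces are neighbors when their closed boxes touch on every objective (sharing a face or a corner); the paper does not state its adjacency rule, and the box layout and counts below are hypothetical. As in the text, each subspace counts itself as a neighbor.

```python
from typing import List, Sequence, Tuple

Box = Tuple[Sequence[float], Sequence[float]]   # (lower corner, upper corner)

def touches(a: Box, b: Box) -> bool:
    """Closed boxes touch if their intervals overlap or meet on every objective."""
    return all(alo <= bhi and blo <= ahi
               for alo, ahi, blo, bhi in zip(a[0], a[1], b[0], b[1]))

def niche_counts(boxes: List[Box], counts: List[int]) -> List[int]:
    """nc(s): archive individuals in all subspaces touching s, including s itself."""
    return [sum(c for b, c in zip(boxes, counts) if touches(a, b)) for a in boxes]

# Four side-by-side subspaces along objective 1 (hypothetical layout and counts)
boxes = [((0.0, 0.0), (0.25, 1.0)), ((0.25, 0.0), (0.5, 1.0)),
         ((0.5, 0.0), (0.75, 1.0)), ((0.75, 0.0), (1.0, 1.0))]
print(niche_counts(boxes, [2, 0, 1, 3]))
```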

The dominance ratio (dr) is defined as

\[ dr(s_{1},s_{2})=\frac{NO(s_{1}\prec s_{2})-NO(s_{2}\prec s_{1})}{M}, \]

where \(NO(s_{1}\prec s_{2})\) denotes the number of objectives on which subspace \(s_{1}\) dominates \(s_{2}\), and M is the number of objectives. Thus \(dr(s_{1},s_{2})\) is the difference between the number of objectives on which \(s_{1}\) dominates \(s_{2}\) and the number on which \(s_{2}\) dominates \(s_{1}\), divided by M; clearly, \(dr(s_{1},s_{2})=-dr(s_{2},s_{1})\). For example, for subspaces \(s_{1}\) and \(s_{3}\) of the bi-objective MOP in Fig. 1a, \(s_{1}\) dominates \(s_{3}\) on the second objective and the two are incomparable on the first, so the difference is 1 and \(dr(s_{1},s_{3})=1/M=1/2\). In general, if neither of two subspaces \(s_{i}\) and \(s_{j}\) dominates the other on any objective, or each dominates the other on the same number of objectives, then \(dr(s_{i},s_{j})=0\), as for the nondominated subspaces \(s_{1}\) and \(s_{4}\); if \(s_{i}\) dominates \(s_{j}\) on all objectives, then \(dr(s_{i},s_{j})=1\), as for \(s_{1}\), which completely dominates \(s_{7}\).
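Domination between subspaces on a single objective is not spelled out in the text. The sketch below assumes that subspace a dominates b on objective i (minimization) when the upper bound of a on that objective does not exceed the lower bound of b, so overlapping intervals are incomparable. The helper names and the four boxes are illustrative.

```python
from typing import Sequence, Tuple

Box = Tuple[Sequence[float], Sequence[float]]   # (lower corner, upper corner)

def better_on(a: Box, b: Box, i: int) -> bool:
    """Assumed rule: a dominates b on objective i when a lies entirely at or below b."""
    return a[1][i] <= b[0][i]

def dominance_ratio(a: Box, b: Box) -> float:
    """dr(a, b) = (NO(a < b) - NO(b < a)) / M."""
    M = len(a[0])
    no_ab = sum(better_on(a, b, i) for i in range(M))
    no_ba = sum(better_on(b, a, i) for i in range(M))
    return (no_ab - no_ba) / M

low = ((0.0, 0.0), (0.5, 0.5))
upper_left = ((0.0, 0.5), (0.5, 1.0))
upper_right = ((0.5, 0.5), (1.0, 1.0))
lower_right = ((0.5, 0.0), (1.0, 0.5))
print(dominance_ratio(low, upper_left))           # better on objective 2 only
print(dominance_ratio(low, upper_right))          # better on both objectives
print(dominance_ratio(upper_left, lower_right))   # each better on one objective
```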

The dominance matrix of the subspaces is defined as \(D_{n\times n}\), where \(D[i][j]=dr(s_{i},s_{j})\). In this example, as shown in Algorithm 2, the dominance matrix D is calculated as

The dominance degree (dd) of a subspace measures its degree of convergence and is calculated from the dominance matrix D. The dominance degrees of all subspaces are recorded as \(DD=\left\{ dd(s_{1}), dd(s_{2}),...,dd(s_{n})\right\} \). As shown in lines 3 to 8 of Algorithm 2, the dominating value \(val_{s}\) and the dominated value \(val\_ed_{s}\) of each subspace are obtained from the dominance matrix as \(val_{s}=\sum _{l\in GP(s)}D[s][l]\) with \(GP(s)=\left\{ l~|~D[s][l]\ge 0 \right\} \), and \(val\_ed_{s}=\sum _{l\in GN(s)}|D[s][l]|\) with \(GN(s)=\left\{ l~|~D[s][l]\le 0 \right\} \); the dominance degree is then \(dd(s)=val_{s}/val\_ed_{s}\). When \(val\_ed_{s}=0\) (no entry in row s of D is negative), dd is set to \(+1e5\); when \(val_{s}=0\), dd is set to \(-1e5\). In this example, \(val_{2}=D[2][5]+D[2][6]+D[2][7]+D[2][8]+D[2][9]+D[2][10]=7/2\) and \(val\_ed_{2}=|D[2][1]|=1/2\), so \(dd(s_{2})=val_{2}/val\_ed_{2}=7\). Finally, \(DD=\left\{ +1e5,~7,~10,~+1e5,~4/3,~3/5,~3/7,~2/3,~1/8,~1/9,~-1e5 \right\} \).
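The dominance degree follows directly from the rows of D. The sketch below assumes D is antisymmetric as described and uses the \(\pm 1e5\) sentinels from the text; treating a row with neither positive nor negative entries as \(+1e5\) is an added assumption the text does not cover. The \(3\times 3\) matrix is a toy example, not the matrix of Fig. 1a.

```python
from typing import List

BIG = 1e5   # the +/-1e5 sentinels used in the text

def dominance_degree(D: List[List[float]]) -> List[float]:
    dd = []
    for row in D:
        val = sum(v for v in row if v > 0)        # dominating value val_s
        val_ed = sum(-v for v in row if v < 0)    # dominated value val_ed_s
        if val_ed == 0:
            dd.append(BIG)        # no negative entry in this row
        elif val == 0:
            dd.append(-BIG)       # no positive entry in this row
        else:
            dd.append(val / val_ed)
    return dd

D = [[0.0, 0.5, 1.0],
     [-0.5, 0.0, 0.5],
     [-1.0, -0.5, 0.0]]
print(dominance_degree(D))   # [100000.0, 1.0, -100000.0]
```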

In summary, the dominance degree dd and the niche count nc measure convergence and diversity, respectively, and push the population in these two directions. This paper treats the two indicators as two objectives, i.e., maximizing dd and minimizing nc, and performs nondominated sorting on them. As shown in lines 13 to 14 of Algorithm 2, a sorted subspace set R is obtained according to the nondominated-sorting ranks. In this example, based on the DD and NC values calculated above, nondominated sorting divides the eleven subspaces into eight ranks, \(\left\{ s_{1},s_{3} \right\} , \left\{ s_{4}\right\} , \left\{ s_{2}\right\} , \left\{ s_{5}\right\} , \left\{ s_{8},s_{9}\right\} , \left\{ s_{6}\right\} , \left\{ s_{7},s_{10}\right\} , \left\{ s_{11}\right\} \), i.e., \(R=\left\{ s_{1},s_{3},s_{4},s_{2},s_{5},s_{8},s_{9},s_{6},s_{7},s_{10},s_{11} \right\} \).
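The sorting step can be checked against the worked example. The sketch below performs a plain (unoptimized) nondominated sort on the pairs (dd, nc), maximizing dd and minimizing nc, using the DD and NC values listed in the text as exact fractions with \(10^{5}\) as the sentinel. It reproduces the eight ranks and the order of R.

```python
from fractions import Fraction as Fr

BIG = 10 ** 5

def better(a, b):
    """(dd, nc) pair a dominates b: dd not smaller, nc not larger, one strictly."""
    return a[0] >= b[0] and a[1] <= b[1] and (a[0] > b[0] or a[1] < b[1])

def sort_subspaces(dd, nc):
    """Peel off successive nondominated fronts of the (max dd, min nc) pairs."""
    remaining = list(range(len(dd)))
    fronts = []
    while remaining:
        front = [i for i in remaining
                 if not any(better((dd[j], nc[j]), (dd[i], nc[i])) for j in remaining)]
        fronts.append(front)
        remaining = [i for i in remaining if i not in front]
    return fronts

DD = [BIG, 7, 10, BIG, Fr(4, 3), Fr(3, 5), Fr(3, 7), Fr(2, 3), Fr(1, 8), Fr(1, 9), -BIG]
NC = [3, 5, 2, 4, 5, 9, 11, 7, 6, 9, 9]
fronts = [[i + 1 for i in f] for f in sort_subspaces(DD, NC)]   # labels s1..s11
print(fronts)
```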

3.3 Environmental Selection

MOEA-SP uses the historical archive to identify the nondominated individuals for offspring selection; the set of nondominated individuals is denoted by \(A^{r}\), and the set of the remaining individuals by \(A^{d}\).

Environmental selection is divided into two cases according to \(|A^{r}|\), as shown in step 3.3 of Algorithm 1. If \(|A^{r}|<N\), all of \(A^{r}\) is kept and \(N-|A^{r}|\) additional individuals are selected from \(A^{d}\) for the next population; otherwise, all N individuals of the next population are selected from \(A^{r}\).
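Splitting the archive into \(A^{r}\) and \(A^{d}\) and choosing between the two selection cases can be sketched as follows, using ordinary Pareto dominance for minimization. The archive contents and \(N=4\) are hypothetical.

```python
def dominates(a, b):
    """Pareto dominance for minimization."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def split_archive(archive):
    """Return (A_r, A_d): nondominated and dominated members of the archive."""
    A_r = [a for a in archive if not any(dominates(b, a) for b in archive)]
    A_d = [a for a in archive if any(dominates(b, a) for b in archive)]
    return A_r, A_d

archive = [(0.1, 0.9), (0.5, 0.5), (0.9, 0.1), (0.6, 0.6), (0.95, 0.3)]
A_r, A_d = split_archive(archive)
N = 4
if len(A_r) < N:
    print("case 1: keep", A_r, "and pick", N - len(A_r), "from", A_d)
else:
    print("case 2: pick", N, "from", A_r)
```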

In both cases, the next population is selected according to the sorted subspaces. Algorithm 3 details subspace-oriented selection. Selection starts from the first subspace in R and proceeds through the subspaces in order until N (the population size) individuals have been selected. To maintain diversity, at most \(num\_max\) individuals are selected from each subspace: if a subspace contains no more than \(num\_max\) individuals, all of them are selected; otherwise, \(num\_max\) of them are selected as in NSGA-II [1].
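A minimal sketch of the subspace-oriented selection loop, assuming each subspace is given as a list of its individuals in the rank order of R. The NSGA-II truncation used when a subspace exceeds `num_max` is replaced by a caller-supplied `truncate` function (a hypothetical hook whose default, `keep_first`, simply keeps the first members). When the last subspace would overflow N, only its first members are taken, which is also an assumption.

```python
def keep_first(members, k):
    """Placeholder for the NSGA-II truncation used in the paper."""
    return members[:k]

def subspace_oriented_selection(sorted_subspaces, N, num_max, truncate=keep_first):
    """Visit subspaces in rank order, taking at most num_max individuals from
    each, until N individuals have been selected."""
    selected = []
    for members in sorted_subspaces:
        if len(selected) >= N:
            break
        chosen = members if len(members) <= num_max else truncate(members, num_max)
        selected.extend(chosen[: N - len(selected)])
    return selected

print(subspace_oriented_selection([["a", "b", "c"], ["d"], ["e", "f"]], N=4, num_max=2))
```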

Selection from Dominated Subspaces. In the first case, the number of nondominated individuals is smaller than N (\(|A^{r}|<N\)), so \(N-|A^{r}|\) individuals must be selected from \(A^{d}\) for the next population, as mentioned earlier. The sorted subspace set R is obtained by Algorithm 2, and the individuals of \(A^{d}\) located in dominated subspaces are then selected as detailed in Algorithm 4. If the individuals are not evenly distributed among the subspaces, fewer than N individuals may be selected in this way; the remaining individuals are then selected from the unselected members of \(A^{d}\) as in NSGA-II [1], as shown in lines 6 to 7 of Algorithm 4.

In Fig. 1a, environmental selection in MOEA-SP yields \(P=\left\{ x_{1},~x_{2},~x_{3},~x_{4},~x_{9}\right\} \), whereas NSGA-II yields \(P=\left\{ x_{1},~x_{2},~x_{3},~x_{4},~x_{7}\right\} \) in Fig. 1b. Comparing the two results shows that MOEA-SP maintains better diversity than NSGA-II in this example.

Selection from Nondominated Subspaces. In the second case, the number of nondominated individuals is at least N (\(|A^{r}|\ge N\)), so all N individuals of the next population are selected from \(A^{r}\). In Fig. 2, for example, \(|A^{r}|=7>N=5\). This case typically arises in the late stage of evolution, when diversity deserves more attention than convergence.

Unlike in Algorithm 4, \(num\_max\) in Algorithm 5 is the average number of individuals per subspace, \(num\_max=\left\lfloor \frac{N}{|R|} \right\rfloor \). In this way, \(num\_max\) adapts to the distribution of the current population, and individuals are selected from every nondominated subspace (provided \(|R|\le N\)), which helps maintain the diversity of the population.

Max-Min selection is described in lines 4 to 13 of Algorithm 5. Its main idea is to repeatedly select the individual whose minimum distance to the set of already selected individuals is the largest, i.e., the individual that lies as far as possible from those already selected. First, the minimum Minkowski distance between each individual in \(A^{r}\) and the individuals in P is calculated and stored in Dist. Then, the individual in \(A^{r}\) with the largest value in Dist is added to P, and the process repeats until P contains N individuals. This method strongly favors diversity. The Minkowski distance is calculated as

\[ d(x,y)=\left( \sum _{i=1}^{n}|x_{i}-y_{i}|^{p}\right) ^{1/p}, \]

where n is the dimension of x and y, and p is a parameter. The Minkowski distance with \(0<p<1\) is used because it enlarges the differences between distances and makes them easier to compare.
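Max-Min selection with the Minkowski distance can be sketched as below. The value \(p=0.5\) is a hypothetical choice within the stated range \(0<p<1\); for such p the formula is not a true metric (the triangle inequality fails), but here it is only used to rank candidates. Seeding with the first candidate when P is empty is an added assumption, since in the paper P is already partly filled by subspace-oriented selection.

```python
def minkowski(x, y, p=0.5):
    """(sum_i |x_i - y_i|^p)^(1/p)."""
    return sum(abs(a - b) ** p for a, b in zip(x, y)) ** (1.0 / p)

def max_min_select(candidates, selected, N, p=0.5):
    """Repeatedly add the candidate whose nearest selected individual is farthest."""
    selected = list(selected)
    pool = [c for c in candidates if c not in selected]
    if not selected and pool and N > 0:
        selected.append(pool.pop(0))   # seeding rule is an assumption
    while len(selected) < N and pool:
        # distance from each candidate to its nearest already-selected individual
        dist = [min(minkowski(c, s, p) for s in selected) for c in pool]
        best = max(range(len(pool)), key=dist.__getitem__)
        selected.append(pool.pop(best))
    return selected

P = max_min_select([(0.1, 0.9), (0.5, 0.5), (0.9, 0.1)],
                   selected=[(0.0, 1.0), (1.0, 0.0)], N=3)
print(P)   # the middle point is farthest from both extremes
```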

For example, in Fig. 2, \(num\_max=\left\lfloor \frac{5}{4} \right\rfloor =1\), and individuals \(x_{1},~x_{2},~x_{9},~x_{3}\) are selected from \(s_{1},~s_{2},~s_{3},~s_{4}\), respectively, i.e., \(P=\left\{ x_{1},~x_{2},~x_{9},~x_{3} \right\} \). Because \(5-|P|=1\), one more individual must be selected from \(\left\{ x_{4},~x_{6},~x_{7} \right\} \) by the Max-Min method. Individual \(x_{7}\) is the farthest from all selected individuals, so it is selected, giving \(P=\left\{ x_{1},~x_{2},~x_{9},~x_{3},~x_{7} \right\} \).

3.4 Historical Archive Update

The historical archive is introduced to guide the direction of population evolution and to promote both the convergence and the diversity of the population. It participates in the calculation of the two indicators above and in environmental selection. In the early stage of evolution, the selection pressure and information provided by historical individuals promote convergence and accelerate evolution. In the late stage, selecting offspring from all nondominated historical individuals with the Max-Min method increases diversity. However, if the archive grows too large, it consumes excessive computing resources, so some individuals must be deleted from it.

The historical archive is updated in one of two ways, depending on the number of nondominated individuals. In the first case, the number of nondominated individuals is smaller than the population size, and historical individuals are needed to guide the search, so the archive retains some representative individuals in each sampled subspace. In the second case, when the number of nondominated individuals is larger than the population size, the archive keeps only the nondominated individuals.
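The text leaves open how the representative individuals are chosen in the first case. The sketch below is one hypothetical realization: it keeps all nondominated individuals and then adds dominated ones in archive order until each subspace holds `keep_per_subspace` members (a hypothetical parameter); `subspace_of` stands in for the kd-tree lookup.

```python
def dominates(a, b):
    """Pareto dominance for minimization."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def update_archive(archive, N, subspace_of, keep_per_subspace=2):
    nondom = [a for a in archive if not any(dominates(b, a) for b in archive)]
    if len(nondom) >= N:
        return nondom                      # second case: keep only nondominated
    kept = list(nondom)                    # first case: nondominated + representatives
    per_subspace = {}
    for a in nondom:
        s = subspace_of(a)
        per_subspace[s] = per_subspace.get(s, 0) + 1
    for a in archive:
        if a in nondom:
            continue
        s = subspace_of(a)
        if per_subspace.get(s, 0) < keep_per_subspace:
            kept.append(a)
            per_subspace[s] = per_subspace.get(s, 0) + 1
    return kept

def subspace_of(p):
    return int(p[0] * 2)    # hypothetical: two vertical strips of the objective space

archive = [(0.1, 0.9), (0.9, 0.1), (0.2, 0.95), (0.3, 0.97), (0.8, 0.5)]
print(update_archive(archive, N=5, subspace_of=subspace_of))
```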

4 Experimental Studies

To evaluate the effectiveness of the proposed MOEA-SP, this paper conducts comparative experiments on a set of benchmark problems.

4.1 Benchmark Functions and Performance Metric

The GLT1-GLT6 [14] benchmark problems are used to test the performance of the algorithms. Their PFs take a variety of shapes, including convex, nonconvex, extremely convex, disconnected, and nonuniformly distributed. The number of decision variables of GLT1-GLT6 is set to 10.

In this paper, the inverted generational distance (IGD) is used as the performance metric to measure the quality of a solution set P. It represents the average distance from a set of reference points \(P^{*}\) on the true PF to the obtained population P and is defined as

\[ IGD(P^{*},P)=\frac{\sum _{v\in P^{*}}dist(v,P)}{|P^{*}|}, \]

where dist(v, P) is the minimum Euclidean distance from the reference point \(v\in P^{*}\) to the solutions in P. IGD measures both the convergence and the diversity of P; the smaller the IGD value, the better the algorithm performs.
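IGD translates directly into code. The sketch below uses `math.dist` (Python 3.8+) for the Euclidean distance; the reference set is a toy example rather than a sampled GLT front.

```python
import math

def igd(reference, approx):
    """Mean, over reference points v in P*, of the distance from v to the nearest point of P."""
    return sum(min(math.dist(v, x) for x in approx) for v in reference) / len(reference)

ref = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]
print(igd(ref, ref))              # 0.0: P covers every reference point
print(igd(ref, [(0.0, 1.0)]))     # larger: one point cannot cover the whole front
```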

4.2 Peer Algorithms and Parameter Settings

The algorithms compared with MOEA-SP are CDG-MOEA [4], NSGA-II [1], and MOEA/D [2], which are grid-based, domination-based, and decomposition-based methods, respectively. The population size is set to 200. For MOEA/D on three-objective problems, however, the population size must equal the number of weight vectors, so it is set to 210, an achievable number of weight vectors close to 200.

The maximum number of function evaluations for each test problem is 300,000, and \(\delta =0.9\). In the DE operator, \(CR=1\) and \(F=0.5\); in polynomial mutation, \(\eta =20\) and \(p_{m}=1/D\), where D is the number of decision variables. Each of the four MOEAs is run 30 times independently on each test problem.

4.3 Experimental Results

Table 1 gives the mean and standard deviation of the IGD values obtained by the four algorithms (MOEA-SP, CDG-MOEA, NSGA-II, and MOEA/D) on the GLT1-GLT6 test problems over 30 independent runs; the best mean IGD value for each test problem is highlighted in boldface. MOEA-SP performs best on GLT5 and GLT6. On GLT1-GLT4, each of the other three MOEAs has its own strengths: NSGA-II performs best on GLT2 and GLT3, while CDG-MOEA and MOEA/D perform best on GLT1 and GLT4, respectively.

CDG-MOEA performs best on GLT1 because, compared with the kd-tree partitioning in MOEA-SP, its grid partitioning is well suited to the linear PF of GLT1. Although NSGA-II performs best on GLT3, whose PF is extremely concave, MOEA-SP remains competitive. Figure 3 shows the nondominated solution sets obtained by the four algorithms on GLT3; in terms of uniformity, the set obtained by MOEA-SP is better distributed than that of MOEA/D. MOEA-SP outperforms the other three MOEAs on GLT5 and GLT6 because its subspace sorting and subspace-oriented selection increase selection pressure, which helps on these three-objective problems. Figure 4 shows the nondominated solution sets obtained by the four algorithms on GLT6; the set obtained by MOEA-SP is superior to those of the other three algorithms in terms of both convergence and diversity.

5 Conclusion

This paper proposes a novel MOEA based on space partitioning (MOEA-SP). MOEA-SP sorts the subspaces by treating their two indicators as a bi-objective optimization problem and then selects offspring by subspace-oriented selection, which reduces the complexity of the problem. The kd-tree space partitioning method overcomes the sensitivity to the PF shape and the curse of dimensionality. Subspace sorting and Max-Min selection are used to push the population toward convergence and to maintain diversity, respectively, which gives the approach great potential for solving many-objective optimization problems (MaOPs). MOEA-SP is compared with three MOEAs on the GLT test suite, and the experimental results show that it outperforms the compared algorithms on some benchmarks.

Although MOEA-SP overcomes some of the above issues, it still faces considerable challenges. If the number of subspaces and the value of \(num\_max\) are not set properly, the performance of the algorithm may suffer. In addition, the definition of the bi-indicator criterion needs further refinement to describe convergence and diversity more accurately. Future work will focus on adaptively adjusting \(num\_max\) and further refining the definition of the bi-indicator criterion.

References

1. Deb, K., Pratap, A., Agarwal, S., Meyarivan, T.: A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 6(2), 182–197 (2002)

2. Zhang, Q., Li, H.: MOEA/D: a multiobjective evolutionary algorithm based on decomposition. IEEE Trans. Evol. Comput. 11(6), 712–731 (2007)

3. Yang, S., Li, M., Liu, X., Zheng, J.: A grid based evolutionary algorithm for many objective optimization. IEEE Trans. Evol. Comput. 17(5), 721–736 (2013)

4. Cai, X., Mei, Z., Fan, Z., Zhang, Q.: A constrained decomposition approach with grids for evolutionary multiobjective optimization. IEEE Trans. Evol. Comput. 22(4), 564–577 (2017)

5. Fang, K., Yang, Z.: On uniform design of experiments with restricted mixtures and generation of uniform distribution on some domains. Stat. Probab. Lett. 46(2), 113–120 (2000)

6. Deb, K., Jain, H.: An evolutionary many-objective optimization algorithm using reference-point-based nondominated sorting approach, part I: solving problems with box constraints. IEEE Trans. Evol. Comput. 18(4), 577–601 (2014)

7. Sato, H.: Analysis of inverted PBI and comparison with other scalarizing functions in decomposition based MOEAs. J. Heuristics 21(6), 819–849 (2015). https://doi.org/10.1007/s10732-015-9301-6

8. Yang, S., Jiang, S., Jiang, Y.: Improving the multiobjective evolutionary algorithm based on decomposition with new penalty schemes. Soft Comput. 21(16), 4677–4691 (2016). https://doi.org/10.1007/s00500-016-2076-3

9. Cheng, R., Jin, Y., Olhofer, M., Sendhoff, B.: A reference vector guided evolutionary algorithm for many-objective optimization. IEEE Trans. Evol. Comput. 20(5), 773–791 (2016)

10. Liu, H., Gu, F., Zhang, Q.: Decomposition of a multiobjective optimization problem into a number of simple multiobjective subproblems. IEEE Trans. Evol. Comput. 18(3), 450–455 (2014)

11. Zhao, S., Suganthan, P., Zhang, Q.: Decomposition-based multiobjective evolutionary algorithm with an ensemble of neighborhood sizes. IEEE Trans. Evol. Comput. 16(3), 442–446 (2012)

12. Li, H., Zhang, Q.: Multiobjective optimization problems with complicated Pareto sets, MOEA/D and NSGA-II. IEEE Trans. Evol. Comput. 13(2), 284–302 (2009)

13. Zhang, H., Zhang, X., Gao, X., Song, S.: Self-organizing multiobjective optimization based on decomposition with neighborhood ensemble. Neurocomputing 173, 1868–1884 (2016)

14. Gu, F., Liu, H., Tan, K.: A multiobjective evolutionary algorithm using dynamic weight design method. Int. J. Innov. Comput. Inf. Control IJICIC 8(5), 3677–3688 (2012)

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China under Grant No. 61673355, in part by the Fundamental Research Funds for the Central Universities, China University of Geosciences (Wuhan) under Grant (CUG170603, CUGGC02).


Copyright information

© 2020 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Wu, X., Li, C., Zeng, S., Yang, S. (2020). A Novel Multi-objective Evolutionary Algorithm Based on Space Partitioning. In: Li, K., Li, W., Wang, H., Liu, Y. (eds) Artificial Intelligence Algorithms and Applications. ISICA 2019. Communications in Computer and Information Science, vol 1205. Springer, Singapore. https://doi.org/10.1007/978-981-15-5577-0_10


DOI: https://doi.org/10.1007/978-981-15-5577-0_10


Publisher Name: Springer, Singapore

Print ISBN: 978-981-15-5576-3

Online ISBN: 978-981-15-5577-0

eBook Packages: Computer Science, Computer Science (R0)