Abstract

Averaging is a fundamental necessity for deep photoacoustic (PA) imaging when using low-energy pulsed laser sources or LEDs. Intrinsic (e.g. breathing, heartbeat) or extrinsic (freehand probe guidance) tissue motion, however, leads to phase cancellation of the averaged PA signal when the axial displacement of tissue becomes larger than half the acoustic wavelength at the probe’s centre frequency. Motion-compensated averaging, also known as displacement-compensated averaging (DCA), solves this problem and allows the detection of deep structures that are otherwise not visible. In a combined PA and echo-ultrasound (US) system, tissue motion can be quantified in US images that are interleaved with PA images. In this chapter, we illustrate the power of this technique for imaging the optical absorption inside the carotid artery, using a fully integrated PA/US system based on a handheld clinical probe containing a miniaturised laser source. The key components of DCA are discussed and exemplified on volunteer data, and the influence of various parameters on image contrast is investigated. We demonstrate that DCA enables freehand PA detection of blood vessels at a depth of 1.5 cm using only 2 mJ pulse energy, and give some guidelines for image interpretation.

1 Introduction

One of the promising application areas of photoacoustic (PA) imaging is its integration with clinical handheld ultrasonography [1, 2], to complement classical B-mode and colour flow imaging, and more recently elastography [3] and speed-of-sound imaging [4,5,6,7,8], with new valuable diagnostic information in a single multi-modal handheld system. For such a system to be flexible and widely affordable, the pulsed light source is preferably integrated in the handheld probe itself. For this purpose, various groups and companies have developed miniaturised light sources based on light emitting diodes (LEDs) and laser diodes (LDs) [9,10,11,12,13,14,15,16,17]. So far, these systems have in common that their pulse energy is very low compared to the more commonly used, but bulky and expensive, external solid-state lasers. For deep PA imaging, where SNR becomes an important issue due to optical attenuation, the low pulse energy can partially be compensated for by increasing the pulse repetition frequency (prf) together with more extensive averaging. Laser safety regulations, however, limit the average irradiated power per unit area, so that the maximum permissible pulse energy decreases linearly with increasing prf. For a given total averaging time, the SNR (which is proportional to the pulse energy and to the square root of the number of averaged pulses) therefore decreases with increasing prf. Put differently, the lower the pulse energy, the longer the averaging time required to achieve a target SNR. This makes averaging substantially more important for low-energy PA systems than for systems using high-energy solid-state lasers.
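The scaling argument can be made concrete with a few lines of Python. This is a minimal sketch under two assumptions not spelled out in the text: the safety limit caps the time-averaged power, so the largest permissible pulse energy is the power limit divided by the prf, and the SNR grows with pulse energy times the square root of the number of averaged pulses. Units are arbitrary.

```python
import math


def relative_snr(prf_hz: float, avg_time_s: float, max_avg_power_w: float = 1.0) -> float:
    """Relative SNR (arbitrary units) when each pulse uses the maximum energy allowed
    by an average-power limit (illustrative model, not a safety calculation)."""
    pulse_energy = max_avg_power_w / prf_hz    # maximum permissible energy per pulse
    n_pulses = prf_hz * avg_time_s             # number of pulses averaged in the given time
    return pulse_energy * math.sqrt(n_pulses)  # SNR ~ E * sqrt(N)


for prf in (10, 80, 1000):
    print(prf, "Hz:", round(relative_snr(prf, avg_time_s=2.5), 4))
```

Under this model the SNR scales as the square root of the averaging time divided by the prf, so quadrupling the prf at a fixed averaging time halves the SNR.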

Especially for deep imaging, where longer averaging times are required than for superficial imaging, averaging becomes more challenging owing to tissue motion. On the one hand, motion of tissue relative to the probe aperture occurs due to involuntary probe motion. On the other hand, the tissue exhibits intrinsic motion even when the probe is static, due to pulsating arteries, the beating heart or breathing, among others. With the 7.5–15 MHz centre frequencies typically used for high-resolution US imaging over a depth range of a few cm, the displacement magnitude of intrinsic tissue motion can easily exceed half a US wavelength (about 0.1 mm at 7.5 MHz). As a result, conventional averaging leads to phase cancellation of the PA signals, which limits the maximum averaging time up to which the SNR still improves.
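A narrowband toy model shows how quickly conventional averaging loses signal once the displacement grows. The sketch assumes a single-frequency PA signal at an assumed 7.5 MHz centre frequency with c = 1540 m/s, and a displacement that sweeps uniformly over the given peak-to-peak range during the averaging period; both are illustrative simplifications.

```python
import numpy as np

C = 1540.0   # assumed speed of sound (m/s)
F0 = 7.5e6   # assumed probe centre frequency (Hz)


def averaged_amplitude(peak_to_peak_m: float, n_frames: int = 201) -> float:
    """Relative amplitude after conventionally averaging a narrowband PA signal whose axial
    position sweeps uniformly over peak_to_peak_m. Each frame is represented by its phasor
    exp(i*k0*dz) at the centre frequency (one-way propagation, as in PA)."""
    k0 = 2 * np.pi * F0 / C
    shifts = np.linspace(0.0, peak_to_peak_m, n_frames)
    return float(np.abs(np.mean(np.exp(1j * k0 * shifts))))


wavelength = C / F0   # about 0.205 mm at 7.5 MHz
for frac in (0.0, 0.25, 0.5, 1.0):
    amp = averaged_amplitude(frac * wavelength)
    print(f"displacement {frac:.2f} wavelength -> amplitude {amp:.2f}")
```

In this model the averaged amplitude drops to roughly 0.63 for a displacement of half a wavelength and nearly vanishes for a full wavelength.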

A solution to this problem is motion-compensated averaging, previously named displacement-compensated averaging (DCA) [18,19,20,21]. This technique takes advantage of the interleaved acquisition of pulse-echo data with PA data, which makes it possible to estimate the tissue motion by tracking anatomical details in the US images and subsequently to motion-compensate the PA images before averaging. DCA was originally proposed for reducing clutter noise in PA imaging, along with other clutter-reduction techniques [22,23,24,25,26,27]. Clutter consists of PA echoes and out-of-plane PA signals, and it is a prominent noise source especially in reflection-mode PA imaging, where it cannot be temporally separated from “real”, direct in-plane PA signals [28, 29]. Since clutter is a systematic noise, it cannot be removed by conventional averaging, and thus poses an ultimate limit to imaging depth. DCA takes advantage of the fact that clutter behaves differently from the “real” signals upon tissue deformation: the apparent reconstructed location of clutter does not coincide with the actual source location (otherwise it would be “real” signal). Due to this different behaviour, clutter tends to decorrelate along a motion-compensated PA image sequence, so that the clutter intensity level can be reduced by averaging. In solid-state laser PA imaging, where clutter is more prominent than thermal noise, we have demonstrated that DCA substantially improves contrast and imaging depth.

In low-pulse-energy deep PA imaging, where thermal noise is more prominent than clutter, the main benefit of DCA is that motion compensation reduces the effect of phase cancellation, allowing more extensive averaging and thus improved SNR. In an ideal case where the tracking is perfectly accurate and no out-of-plane motion occurs, at least the same SNR can be achieved as if tissue motion were absent. In a more realistic scenario, however, decorrelation of US echoes and out-of-plane motion result in tracking and compensation errors. In addition, out-of-plane motion leads to decorrelation of PA image features, so that phase cancellation can occur even with accurate motion compensation. For this reason, the optimum SNR is obtained in a trade-off between averaging time, tracking errors and out-of-plane motion. Depending on the intrinsic tissue displacement magnitude and on the complexity of tissue structures (slipping boundaries and architectural anisotropy leading to a 3D motion field), the sweet spot in this trade-off limits the achievable SNR. A further limitation to averaging time stems from the need for real-time feedback: the effective frame rate is set by the averaging time constant. Beyond a couple of seconds, the lag between freehand probe guidance and its effect on the DCA result makes it difficult for an operator to choose a probe placement that optimises the DCA result.
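The benefit of compensating before averaging can be illustrated with a 1D toy model. The sketch assumes that the displacement of every frame is known exactly (standing in for US-based tracking), restricts it to whole samples so that `np.roll` compensates it exactly, and ignores out-of-plane motion; absorber depth, pulse shape, motion amplitude, noise level and the 400-frame count are hypothetical.

```python
import numpy as np

C, F0 = 1540.0, 7.5e6            # assumed speed of sound (m/s) and centre frequency (Hz)
DZ = 10e-6                       # axial sample spacing (10 um)
z = np.arange(2000) * DZ         # 20 mm depth axis
k0 = 2 * np.pi * F0 / C          # one-way (PA) wavenumber


def pa_line(shift_m, rng, noise_std=1.0):
    """Noisy narrowband PA A-line from a hypothetical absorber at 12 mm, displaced by shift_m."""
    zc = 12e-3 + shift_m
    pulse = np.exp(-((z - zc) / 0.3e-3) ** 2) * np.cos(k0 * (z - zc))
    return pulse + noise_std * rng.standard_normal(z.size)


rng = np.random.default_rng(0)
n_frames = 400
# Pulsatile displacement of +/-0.3 mm (about 3 wavelengths peak to peak), rounded to whole
# samples so that compensation with np.roll is exact.
shifts = np.round(0.3e-3 * np.sin(2 * np.pi * np.arange(n_frames) / 25) / DZ).astype(int)
frames = [pa_line(s * DZ, rng) for s in shifts]

conventional = np.mean(frames, axis=0)
compensated = np.mean([np.roll(f, -s) for f, s in zip(frames, shifts)], axis=0)

roi = np.abs(z - 12e-3) < 1e-3   # +/-1 mm around the absorber
print("conventional peak:", np.abs(conventional[roi]).max())
print("compensated peak: ", np.abs(compensated[roi]).max())
```

Because the motion spans about three wavelengths, the conventional average largely cancels, while the compensated average retains the full amplitude and reduces the noise by roughly the square root of the number of frames.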

Along these lines, this chapter elaborates the various components and features of DCA and investigates their influence, exemplified on a specific implementation for an LD-based fully integrated handheld PA/US probe. In Sect. 2, we focus on the design of the main component of DCA, namely the motion tracking of US images. The tracking algorithm needs to be fast as well as robust, and its specific implementation is dictated by the limitations of the acquisition system. In Sect. 3, we detail the experimental setup and processing steps, including a novel way to overlay the PA signal on the US image. In Sect. 4, we illustrate the various steps of DCA processing on volunteer results, with a focus on the parameters that influence its performance. To make the benefit of DCA most evident, we focus on the detection of the carotid artery, whose large intrinsic pulsatile motion makes it especially difficult to image. In addition, we share more general experience on how to interpret DCA images, especially on how to identify real PA signal (as opposed to clutter) based on the US image. Our results demonstrate that detection of the carotid artery and other blood vessels at a depth of 1.5 cm is feasible using only 2 mJ pulse energy (80 Hz prf, 2.5 s averaging time), and they confirm that DCA is essential for achieving this imaging depth, not only by allowing effective averaging but also via its clutter-reducing effect. These results are especially important for LED-based PA imaging, where the pulse energy is substantially lower.

2 DCA Prerequisites

2.1 US Image Quality

The motion tracking accuracy is crucial to the achievable DCA outcome. Tracking errors can stem from noise in the US images as well as from imperfections of the tracking algorithm. First, we focus on the US image noise. Apart from thermal noise, US images contain clutter noise, just as PA images do. Clutter noise consists of system-related artifacts (such as side lobes, or grating lobes caused by an element pitch that is too coarse to satisfy the spatial Nyquist criterion) but also of tissue-related noise caused by higher-order echoes (multiply scattered US), which cannot be distinguished from the first-order echoes that make up a “clean” US image. Since the echogenicity (echo strength) of tissue varies over a large dynamic range (tens of dB), low-echogenicity areas are easily dominated by clutter spreading from high-echogenicity areas. As in PA, clutter noise in US tends to decorrelate with tissue deformation, leading to wrong detection of the echo shift in regions where clutter dominates. For this reason, a key point of attention in DCA is the optimisation of US image quality in terms of signal-to-clutter ratio (SCR).

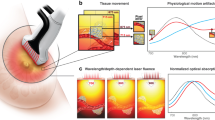

In a classical line-by-line scan (LLS), US power is transmitted into one narrow, collimated or slightly focused beam at a time, and the probe receives a dynamically focused signal (conventionally using delay-and-sum) from inside that beam. The time trace of the signal forms one image “line”; multiple lines are obtained by scanning the tissue with the beam and together form an image. With the hardware advances of the past decade, plane-wave (PW) (or ultrafast) US imaging has become popular [30]: a single plane US pulse is transmitted into the tissue and signals are digitised simultaneously on all elements on receive, allowing the reconstruction of a large field-of-view (FoV) image in a single shot. While PW imaging has a great speed advantage over LLS, its big disadvantage is the much higher clutter noise level, which makes this type of image unsuitable for motion tracking. Figure 1a, b show an LLS and a PW image of the same region around the carotid artery, demonstrating the substantial difference in contrast. Note especially the increased apparent echogenicity inside the carotid in Fig. 1b.

Fig. 1 a Line-by-line scan of tissue around the carotid artery (c: carotid lumen; th: thyroid gland; m: muscle). b Plane-wave image of the same area. c Image obtained when irradiating a single line but reconstructing the full image area. The beam profile and the event horizon are indicated by white and red dashed lines, respectively. d Result of coherent plane-wave compounding. All images are displayed on the same dB scale covering 60 dB. Note that c was not taken at exactly the same position as a, b, d. These images were produced using a Vantage 64 LE research US system (Verasonics Inc., WA).

The increased clutter noise in PW imaging as compared to LLS consists of diffuse 2nd order echoes. In the LLS, the US power is transmitted into a narrow beam. The 1st order echoes are generated only inside that beam, but 2nd order scattering leads to echoes that propagate back to the probe also from outside the beam. This is illustrated in Fig. 1c, which shows an image obtained when irradiating only one beam but reconstructing a large tissue area around it. The intensity follows the actual beam profile only near the transducer aperture (upper part of the image), and echoes are reconstructed also outside the actual beam, where echo intensity is dominated by 2nd and higher order echoes. These echoes are confined by an “event horizon” that marks the earliest possible arrival of an echo (of any order) at the different probe elements. In a line-by-line scan, the receive part is focused into the same area as the transmitted beam, i.e. only pixels inside that area are reconstructed. Therefore, the out-of-beam 2nd order echoes are detected less sensitively than the in-focus 1st order echoes, and the ratio of these sensitivities determines the SCR in the image line. In a PW image, where a broad unfocused US pulse is transmitted into a large tissue region at once, the tissue outside each receive area is irradiated as well, so that its 2nd order echoes are detected inside the receive area. Therefore, the relative contribution of 2nd order echoes is much higher than in an LLS.

An alternative to PW imaging for improving US image quality is coherent plane-wave compounding (PWC) [31]. In this technique, PW images are acquired with a variety of different PW transmit (Tx) angles. For each angle an image is reconstructed, and all images are coherently averaged (i.e. before envelope detection). The result is shown in Fig. 1d, an image that looks practically identical to the line-by-line scan in terms of contrast and spatial resolution. The observed SCR improvement can be understood from two perspectives. First, diffuse 2nd and higher order echoes decorrelate with varying Tx angle, so that averaging reduces the intensity of such echoes due to phase cancellation. Second, coherent averaging of images obtained with different Tx angles corresponds to synthetic Tx focusing, similar to the (coherent) delay-and-sum (DAS) beamforming that synthetically focuses the transducer on receive (Rx). In that view, the Tx angle range in PWC is equivalent to the angular aperture in an LLS. For this equivalence to hold, the angle spacing should be chosen sufficiently small so that a hypothetical superposition of the plane pulses (with appropriate relative delays) could indeed result in a single focused or collimated beam within the size of the probe aperture. The SCR is then similar to or even better than that of an LLS. With a larger angle step, the hypothetical superposition would result in multiple parallel beams, and the larger the step, the more beams. This results in a reduced SCR, i.e. an increased clutter level caused by 2nd order echoes that couple from adjacent Tx beams into an Rx beam.
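The first of the two perspectives, phase cancellation of decorrelated clutter, can be illustrated with a toy model in which the first-order echo is identical for every Tx angle and the clutter is fully decorrelated between angles. Both are idealising assumptions (real higher-order echoes are only partially decorrelated, and the synthetic-focusing view is not modelled); under them, coherent compounding of N angles improves the SCR by about 10 log10(N) dB.

```python
import numpy as np

rng = np.random.default_rng(1)
n_pixels = 4000

# Toy model (assumption): the first-order echo in each pixel is identical for all Tx angles,
# whereas diffuse higher-order clutter is fully decorrelated between angles
# (independent circular complex Gaussian noise).
signal = np.exp(2j * np.pi * rng.random(n_pixels))   # unit-power complex echoes


def compounded_scr_db(n_angles: int, clutter_rms: float = 1.0) -> float:
    """SCR (dB) after coherently averaging n_angles complex images of the toy model."""
    shape = (n_angles, n_pixels)
    clutter = clutter_rms * (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)
    images = signal + clutter            # one complex (pre-envelope) image per Tx angle
    compound = images.mean(axis=0)       # coherent averaging over angles
    residual = compound - signal         # clutter surviving the compounding
    return float(10 * np.log10(np.mean(np.abs(signal) ** 2) / np.mean(np.abs(residual) ** 2)))


for n in (1, 7, 9):
    print(n, "angles:", round(compounded_scr_db(n), 1), "dB")
```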

PWC is often referred to as “ultrafast imaging” [30], because a full image can be reconstructed from a single or a few PW acquisitions. One has to keep in mind, however, that to achieve an identical spatial resolution (identical angular aperture of Tx focusing) as well as an identical SCR (as was done for Fig. 1), LLS and PWC require the same number of acquisitions (taking into account that dynamic Tx focusing can be achieved in a line-by-line scan by retrospective Tx beamforming [32]). A disadvantage of PWC compared to LLS can be the larger amount of data that needs to be transferred and processed, as for each angle, data are required for all probe elements and each pixel must be reconstructed. In LLS, only the elements corresponding to a certain line need to be active on receive, and only the pixels inside the corresponding Tx beam area need to be reconstructed. Another practical difference between the two techniques is the way in which motion affects the final image. In LLS, abrupt motion shows up as a relative shift of different parts of the image and, in retrospective Tx beamforming, degrades the SCR around the line that was acquired during the motion due to phase cancellation. In PWC, the phase cancellation due to motion degrades the SCR equally in the whole image, but the degradation is weaker than in LLS. Depending on the specific application, one or the other technique can be more advantageous. Ultimately, the system at hand determines what type of data can be acquired (e.g. the specific system presented in this chapter only allows interleaved acquisition of PWC data with PA).

2.2 Motion Tracking Algorithm

Previously, we proposed Loupas’ phase correlation (LPC) [33] for estimating the tissue motion field by quantifying the resulting phase shift of US echoes. Assuming that the array probe’s bandwidth (\(BW\)) is smaller than the centre frequency \(f_{0}\) (which is typically the case for standard clinical US probes), it is practical to model a beamformed (e.g. using DAS) radio-frequency (RF) signal \(s\left( z \right)\) (where \(z\) is the axial dimension) as the product of a “slowly” (given by \(BW\)) varying complex envelope \(S\left( z \right)\) and a “quickly” (given by \(f_{0}\)) oscillating complex exponential carrier:

where \(c\) is the speed of sound. Note that Eq. 1 contains a factor 2 in the complex exponent that accounts for the two-way propagation in echo US. Assuming that neither lateral nor out-of-plane motion occurs, and that the gradient of the motion along the axial direction \(z\) is small, image lines acquired before (\(s_{n}\)) and after (\(s_{n + 1}\)) a motion step are identical apart from a \(z\)-dependent shift \(\Delta z_{n,n + 1} \left( z \right)\) and can therefore be modelled as:

If, in addition, \(\Delta z\) is smaller than half the oscillation period \({\Lambda }\), \(\Delta z\) can be estimated from the point-wise Hermitian product \(C_{n,n + 1}\) between \(s_{n}\) and \(s_{n + 1}\):

For the last step of Eq. 3, one uses the assumption that \(S\left( z \right)\) varies “slowly” compared to \({\Lambda }\) and \(\Delta z\) and thus can be assumed constant over the distance \(\Delta z\). Since the pre-factor to the complex exponential in Eq. 3 is thus real, \(\Delta z\) can be estimated based on the phase angle of \(C\). Even though we assumed purely axial motion, LPC is not limited to axial motion: by acquiring US images with two (or more) different view directions through Tx and/or Rx beamsteering, a 2D-vector (or 3D for 2D arrays) field can be obtained.
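Written out, the signal model and the phase-based estimator described above take the following form. This is a reconstruction from the definitions in the text, assuming \(\Lambda = c/(2f_0)\) for the oscillation period and \(C_{n,n+1} = s_n s_{n+1}^{*}\) for the Hermitian product; the notation and numbering of the original equations may differ.

```latex
\begin{aligned}
s(z) &= S(z)\,\exp\!\left(2i\,\frac{2\pi f_0}{c}\,z\right) = S(z)\,\exp\!\left(\frac{2\pi i\,z}{\Lambda}\right),
  \qquad \Lambda = \frac{c}{2 f_0},\\
s_{n+1}(z) &= s_n\!\left(z - \Delta z_{n,n+1}(z)\right),\\
C_{n,n+1}(z) &= s_n(z)\,s_{n+1}^{*}(z)
  = S(z)\,S^{*}\!\left(z - \Delta z_{n,n+1}\right)\exp\!\left(\frac{2\pi i\,\Delta z_{n,n+1}}{\Lambda}\right)
  \approx \left|S(z)\right|^{2}\exp\!\left(\frac{2\pi i\,\Delta z_{n,n+1}}{\Lambda}\right),\\
\widehat{\Delta z}_{n,n+1}(z) &= \frac{\Lambda}{2\pi}\,\arg C_{n,n+1}(z),
  \qquad \left|\Delta z_{n,n+1}\right| < \frac{\Lambda}{2}.
\end{aligned}
```

The last line shows why the aliasing condition reads \(|\Delta z| < \Lambda/2\): the phase angle of \(C\) is only defined within \((-\pi, \pi]\).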

Now, let’s have a closer look at the various assumptions that were involved in the derivation of Eq. 4:

No lateral motion: This assumption can be relaxed at the cost of some error, requiring only that lateral motion stays below the lateral resolution of the image. If this relaxed condition is not fulfilled, lateral motion causes decorrelation of \(S\), resulting in tracking errors. Such errors can be reduced by degrading the lateral resolution of the images (e.g. by lateral spatial low-pass filtering [34]), but at the cost of lateral resolution of the motion field.

No out-of-plane motion: Out-of-plane motion can lead to decorrelation of \(S\) for which there is no remedy. This condition is therefore crucial for any motion tracking algorithm to work. Moreover, out-of-plane motion can decorrelate the PA signal itself, making DCA useless. Real-time display of US images during DCA is thus very important feedback for probe guidance, helping to minimise out-of-plane motion.

Small axial gradient of motion field: The envelope \(S\) is the result of the interference (destructive and constructive) of echoes generated by reflectors that cannot be resolved by the axial impulse response (given by the \(BW\)) of the system. If the displacement magnitude changes by about \(0.5{\Lambda }\) within the length of the axial impulse response, then the changing relative position of reflectors results in a changing interference of the echoes (destructive turns into constructive and vice versa) and thus in full decorrelation of \(S\). Even for smaller gradients, partial decorrelation occurs [34].

Slowly varying envelope: The shorter the axial impulse response (the larger the \(BW\)), the more the complex envelope can vary with the displacement, and the pre-factor in the last line of Eq. 3 deviates from a real number so that the relation between the phase angle and the displacement magnitude becomes inaccurate. Even for a broadband signal, it is possible to enforce a narrower \(BW\) and thus a more slowly varying envelope by bandpass filtering. The increased length of the axial impulse response, however, leads in turn to more decorrelation of \(S\) according to the previous paragraph. For this reason, the correlation length together with the axial gradient of the motion field determine a minimum decorrelation rate.

The net effect when the above conditions are only partially fulfilled is thus decorrelation of \(S\), which in turn results in tracking noise. To reduce tracking noise, one employs a convolution of \(C\) with a typically 2D window function (or 3D for matrix arrays) before calculating the phase angle. Similar to the axial impulse response length, an increasing axial length of this tracking window induces errors when the gradient of the motion field is not zero. The choice of the tracking window length thus deserves attention, and depends on the application.
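A one-dimensional sketch of the LPC estimator, including the local averaging of \(C\) before the phase angle is taken, could look as follows. It uses an axial Hamming window only (the text describes a typically 2D window), and the synthetic speckle line, pulse shape and parameter values are assumptions chosen for illustration.

```python
import numpy as np


def lpc_displacement(s_a, s_b, period, window_len):
    """LPC displacement estimate between two complex (analytic) RF lines.
    period: oscillation period Lambda of the RF signal (c / (2 f0) in pulse-echo).
    window_len: length of the axial Hamming tracking window in samples."""
    c = s_a * np.conj(s_b)                             # point-wise Hermitian product
    w = np.hamming(window_len)
    c_avg = np.convolve(c, w / w.sum(), mode="same")   # local averaging of C before the angle
    return period / (2 * np.pi) * np.angle(c_avg)


rng = np.random.default_rng(2)
LAM = 0.1e-3                       # assumed oscillation period (about 7.7 MHz pulse-echo)
dz = LAM / 16                      # axial sample spacing
z = np.arange(1600) * dz           # 10 mm depth
scat_z = rng.uniform(0.0, z[-1], 300)
scat_a = rng.standard_normal(300)


def rf_line(shift):
    """Analytic RF line of the hypothetical scatterer set displaced axially by `shift`."""
    d = z[:, None] - (scat_z[None, :] + shift)
    pulse = np.exp(-(d / (1.5 * LAM)) ** 2) * np.exp(2j * np.pi * d / LAM)
    return pulse @ scat_a


true_shift = 0.3 * LAM             # below the aliasing limit of LAM / 2
est = lpc_displacement(rf_line(0.0), rf_line(true_shift), LAM, window_len=64)
print("median estimate / LAM:", np.median(est[100:-100]) / LAM)
```

Increasing `window_len` lowers the tracking noise for a uniform shift like this one but, as discussed above, biases the estimate wherever the displacement varies along the window.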

The main limitation of LPC is that the displacement magnitude has to be smaller than \(0.5{\Lambda }\) (about 0.075 mm at 5 MHz) to avoid phase aliasing. One could argue that this condition can be enforced by properly choosing \(f_{0}\) via bandpass filtering. In practice, however, this approach is limited by the bandwidth of the system. A way to track large tissue displacements is to make sure the condition is fulfilled between successive US acquisitions, and to accumulate the motion field over time [34]. With externally induced tissue motion, the motion between successive US acquisitions can be controlled to be sufficiently small. In carotid imaging, however, the intrinsic pulsatile motion of the artery wall can easily lead to a total displacement on the order of several \({\Lambda }\) within a fraction of a second. In such a case, one could in principle choose the frame rate high enough to capture sufficiently small motion intervals. Depending on the US system at hand, however, such a high frame rate may not be possible, due to limited data transfer speed and/or limited processing speed. With the system used for this exemplary study, the frame rate was limited by the transfer and processing speed to about 10 fps. This made necessary a tracking algorithm capable of accommodating displacements of several \({\Lambda }\).
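The aliasing limit and its consequence can be checked numerically. The sketch assumes c = 1540 m/s, which gives 0.077 mm at 5 MHz; the 0.075 mm quoted in the text corresponds to c ≈ 1500 m/s.

```python
import numpy as np

C = 1540.0   # assumed speed of sound (m/s)


def lpc_limit_mm(f0_hz):
    """Largest unambiguous displacement for pulse-echo LPC: 0.5 * Lambda, Lambda = c / (2 f0)."""
    return 0.5 * C / (2 * f0_hz) * 1e3


def wrapped_estimate(true_dz, period):
    """Displacement recovered from the phase angle, which wraps into (-pi, pi]."""
    return period / (2 * np.pi) * np.angle(np.exp(2j * np.pi * true_dz / period))


print(round(lpc_limit_mm(5e6), 3))     # 0.077 mm (0.075 mm with c = 1500 m/s)
print(round(lpc_limit_mm(7.5e6), 3))   # 0.051 mm at 7.5 MHz
period = C / (2 * 5e6)                 # Lambda = 0.154 mm at 5 MHz
print(wrapped_estimate(0.06e-3, period) * 1e3)   # about 0.06 mm: recovered correctly
print(wrapped_estimate(0.10e-3, period) * 1e3)   # about -0.054 mm: aliased to a negative shift
```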

In the US elastography literature, block-matching (BM) is often used for tracking large displacement magnitudes [35,36,37]: the similarity of image patches of successive images is quantified using a similarity measure (e.g. cross-correlation) for a variety of test displacements (“search approach”), resulting in a map of the value of the similarity measure. The displacement is then estimated as the one that optimises the similarity measure. In comparison to the BM techniques, LPC has several advantages: (i) it is fast because it is based on a point-wise calculation whereas BM requires a time-consuming search approach; (ii) it is more robust because it can accurately determine phase shift even in low SNR situations where BM fails when the noise modifies the amplitude distribution (“peak-hopping”) [38]; and (iii) it directly gives an accurate continuous-valued result of displacement magnitude, whereas an error-prone interpolation is required in BM to determine fractional displacements from the discrete search area. To avoid the interpolation, some authors have proposed to combine BM with LPC (BM-LPC) [39, 40], where BM is used for a rough estimate of the displacement and LPC is used for fine-tuning.
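For comparison, a minimal 1D block-matching sketch with an exhaustive integer-lag search and parabolic sub-sample interpolation is shown below. It only illustrates the search-plus-interpolation structure discussed above; it is not one of the cited BM implementations, and the normalised cross-correlation measure, block size and synthetic envelope are assumptions.

```python
import numpy as np


def block_match(ref, cur, center, half_block, max_lag):
    """Exhaustive integer-lag search maximising the normalised cross-correlation between a
    block of `ref` centred at `center` and shifted blocks of `cur`, followed by parabolic
    interpolation of the peak for a fractional displacement (in samples)."""
    blk = ref[center - half_block:center + half_block + 1]
    lags = np.arange(-max_lag, max_lag + 1)
    ncc = np.empty(lags.size)
    for i, lag in enumerate(lags):                       # one similarity value per test lag
        cand = cur[center + lag - half_block:center + lag + half_block + 1]
        ncc[i] = np.mean((blk - blk.mean()) * (cand - cand.mean())) / (blk.std() * cand.std())
    i = int(np.argmax(ncc))
    frac = 0.0
    if 0 < i < lags.size - 1:                            # parabolic sub-sample refinement
        y0, y1, y2 = ncc[i - 1], ncc[i], ncc[i + 1]
        frac = 0.5 * (y0 - y2) / (y0 - 2 * y1 + y2)
    return lags[i] + frac, ncc


rng = np.random.default_rng(3)
x = np.arange(600, dtype=float)
pos, amp = rng.uniform(0, 600, 80), rng.random(80)


def envelope(shift):
    """Hypothetical smooth envelope made of Gaussian blobs, displaced by `shift` samples."""
    return np.exp(-((x[:, None] - pos - shift) / 4.0) ** 2) @ amp


est, ncc = block_match(envelope(0.0), envelope(7.3), center=300, half_block=40, max_lag=15)
print("estimated shift (samples):", round(float(est), 2))
```

The loop evaluates the similarity measure once per test lag, which is the cost LPC avoids, and the fractional part of the result depends entirely on the parabolic fit.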

For the system proposed in this chapter, we designed a different approach that is more robust and faster than BM-LPC: similar to some BM techniques, this approach makes use of the envelope of the complex RF-mode image, but instead of BM it consistently employs the LPC concept for improved speed and accuracy. As mentioned above, the use of LPC is limited by the bandwidth of the RF signal, so that the low frequencies that would be required for detecting large displacements are not available. The envelope, on the other hand, can contain far lower spatial frequencies, but also has a much larger fractional bandwidth, so that the prerequisite for LPC is not fulfilled. To solve this problem, we bandpass-filter the envelope to obtain synthetic RF data to which LPC can be readily applied. For each motion step \(n\), the bandpass-filtered envelope at the bandpass frequency \(k\), \(u_{n,k}\), is defined as:

Note that the envelope is defined here as the squared absolute value of the RF signal. The reasoning behind this definition, as opposed to the absolute value itself, is as follows: the absolute value can have sharp edges at the locations where adjacent echoes interfere to zero amplitude. These edges contain artificially high spatial frequencies beyond the actual spatial resolution given by the probe bandwidth. The squared absolute value, on the other hand, contains only truly resolvable spatial variations, since its spectrum is the autocorrelation of the RF spectrum and therefore does not extend beyond the probe bandwidth. It is moreover physically meaningful, as it corresponds to an actual physical quantity, i.e. energy density, whereas the absolute value itself does not.

The filtering and tracking are done in a multi-stage approach. In a first stage, the bandpass centre frequency is chosen sufficiently small so that the largest experienced displacement magnitude is smaller than half the wavelength \({\Lambda }_{k}\) of the \(k\)th bandpass filter. LPC of successive frames \(u_{n,k}\) and \(u_{n + 1,k}\) results in a first displacement estimate \(\widehat{\Delta z}_{n,n + 1,k}\), albeit at a low spatial resolution. To increase the spatial resolution, this displacement estimate is then used to motion-compensate the frames \(u_{n,k + 1}\) and \(u_{n + 1,k + 1}\) at the next higher bandpass frequency. After motion compensation, the residual displacement is ideally smaller than \(0.5{\Lambda }_{k + 1}\), so that LPC can be applied at this stage without phase aliasing, resulting in an estimate of the residual displacement. This estimate is added to \(\widehat{\Delta z}_{n,n + 1,k}\), resulting in a refined displacement estimate \(\widehat{\Delta z}_{n,n + 1,k + 1}\). This procedure is repeated for all chosen filter stages. Finally, a last residual displacement estimate is obtained from LPC of the motion-compensated RF signals \(s_{n}\) and \(s_{n + 1}\).
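The multi-stage procedure can be sketched in one dimension as follows. This is a simplified illustration under stated assumptions, not the implementation used in the chapter: a Gaussian-windowed complex carrier stands in for the truncated-sinc bandpass kernel, the envelope (rather than the filtered signal) of the second frame is motion-compensated before filtering, tracking windows are axial Hamming windows only, and the test data are synthetic speckle with a uniform shift of about 12 RF periods. The stage periods of 3, 1.5 and 0.75 mm follow the window lengths given in Sect. 3.3.

```python
import numpy as np


def lpc(a, b, period, win_len):
    """LPC step: Lambda/(2 pi) times the phase of the Hamming-averaged product a * conj(b)."""
    w = np.hamming(win_len)
    c = np.convolve(a * np.conj(b), w / w.sum(), mode="same")
    return period / (2 * np.pi) * np.angle(c)


def bandpass(env, period, dz):
    """Complex bandpass of a real envelope with a Gaussian-windowed carrier of spatial period
    `period` (stand-in for the truncated-sinc kernel); the output is synthetic RF."""
    n_half = int(round(3 * period / dz))
    x = np.arange(-n_half, n_half + 1) * dz
    h = np.exp(-(x / period) ** 2) * np.exp(2j * np.pi * x / period)
    return np.convolve(env, h, mode="same")


def shift_back(sig, z, disp):
    """Motion-compensate `sig` by resampling it at z + disp (linear interpolation)."""
    zq = z + disp
    if np.iscomplexobj(sig):
        return np.interp(zq, z, sig.real) + 1j * np.interp(zq, z, sig.imag)
    return np.interp(zq, z, sig)


def multistage_track(s1, s2, z, rf_period, env_periods, rf_win):
    """Coarse-to-fine displacement estimate of s2 relative to s1 (1D sketch)."""
    dz = z[1] - z[0]
    e1, e2 = np.abs(s1) ** 2, np.abs(s2) ** 2               # envelope = squared magnitude
    total = np.zeros_like(z)
    for p in env_periods:                                    # envelope stages, coarse to fine
        u1 = bandpass(e1, p, dz)
        u2 = bandpass(shift_back(e2, z, total), p, dz)       # compensate with current estimate
        total = total + lpc(u1, u2, p, int(round(p / dz)))   # add residual displacement
    s2c = shift_back(s2, z, total)                           # final stage on the RF signal
    return total + lpc(s1, s2c, rf_period, rf_win)


rng = np.random.default_rng(4)
LAM = 0.1e-3                                        # assumed RF oscillation period (m)
n, dz = 4096, LAM / 8
z = np.arange(n) * dz                               # about 51 mm depth axis
nu = np.fft.fftfreq(n, d=dz)                        # spatial frequencies (cycles/m)
band = np.exp(-((nu - 1 / LAM) / (0.5 / LAM)) ** 2)  # one-sided band -> analytic RF
spec = np.fft.fft(rng.standard_normal(n)) * band
true_shift = 1.23e-3                                # about 12 periods, far beyond LAM / 2
s1 = np.fft.ifft(spec)
s2 = np.fft.ifft(spec * np.exp(-2j * np.pi * nu * true_shift))   # s2(z) = s1(z - true_shift)

est = multistage_track(s1, s2, z, LAM, env_periods=(3e-3, 1.5e-3, 0.75e-3), rf_win=120)
print("median estimate (mm):", 1e3 * np.median(est[1000:-1000]))
```

Applied directly to the RF lines, LPC cannot recover this shift because its output is confined to ±Λ/2; the envelope stages bring the residual below that limit before the final RF stage refines it.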

3 Combined Handheld PA and US System

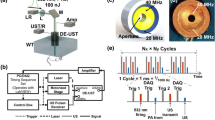

3.1 Acquisition System

To illustrate the benefit of DCA for low-energy handheld PA imaging, we show results of its implementation on a system that was developed within the H2020 project Cvent. The goal of Cvent is an improved diagnosis of plaque vulnerability using PA detection of blood clots inside carotid plaque. The system contains a fully integrated handheld probe, based on a pre-existing commercial linear-array probe (7.5 MHz centre frequency, 5 MHz bandwidth, 0.245 mm element pitch) that was re-engineered to contain a built-in multi-wavelength diode laser source for PA imaging. Figure 2 shows a picture of the probe. Probes with various combinations of optical wavelengths in the near infrared were produced. The results presented in the next section were obtained using a single wavelength of 808 nm, irradiating the skin alongside the linear array through an elongated area of 1.5 cm² with pulses of 60 ns duration and 2 mJ pulse energy. An average pulse repetition rate of 80 Hz was used in this study, resulting in a time-averaged irradiance of about 100 mW/cm², well below the safety limit of 330 mW/cm² according to IEC 60825-1. The probe is connected to a commercial portable ultrasound system (MyLab™ One, Esaote Europe B.V., NL) for data acquisition. Due to its limited on-board memory, the system can acquire at most 9 PW data frames (for US imaging), each covering a depth range of 10 mm, and 10 PA data frames covering a depth range of 20 mm. After the on-board memory is filled, the data (a “burst”) are transferred via USB to a PC, where processing is performed on a graphics processing unit (GPU). For the presented results, an Acer Aspire E 15 laptop (Intel Core i7-6500U, 2.5 GHz) with a built-in NVIDIA GeForce 940MX graphics card was used. With this PC, the overall speed (transfer and processing) allowed for processing 8 bursts per second, enabling real-time imaging.
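The quoted exposure and timing figures can be cross-checked with simple arithmetic. All inputs are taken from the text; the only assumption is one laser pulse per PA frame.

```python
pulse_energy_mj = 2.0        # pulse energy (from the text)
prf_hz = 80.0                # average pulse repetition rate (from the text)
area_cm2 = 1.5               # irradiated skin area (from the text)
limit_mw_per_cm2 = 330.0     # safety limit quoted in the text (IEC 60825-1)

# mJ per pulse times pulses per second gives mW; dividing by the area gives mW/cm^2
irradiance = pulse_energy_mj * prf_hz / area_cm2
print(f"time-averaged irradiance: {irradiance:.0f} mW/cm^2 "
      f"({irradiance / limit_mw_per_cm2:.0%} of the limit)")

pa_frames_per_burst, bursts_per_s = 10, 8
print("PA acquisitions per second:", pa_frames_per_burst * bursts_per_s)
```

The exact product is about 107 mW/cm², roughly a third of the quoted limit and slightly above the rounded figure in the text, and 10 PA frames per burst at 8 bursts per second gives 80 acquisitions per second, in line with the quoted 80 Hz average repetition rate.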

3.2 Image Reconstruction

Both the US and the PA images were reconstructed using conventional DAS algorithms. The carotid artery of the volunteer was located at a depth of 10–15 mm and had a diameter of about 5 mm, and could thus be easily covered by the 20 mm depth range of the PA acquisitions. The US acquisitions, however, only cover a range of 10 mm. For visual inspection of potential echo clutter in the PA images, the US images must show the tissue located between the probe and the carotid. This allows PA signal to be identified as real signal or clutter based on the absence or presence of strong echoes at roughly half its depth: a PA transient generated close to the skin and reflected by a structure at depth \(d\) arrives at the probe at the same time as a direct PA signal from depth \(2d\), and is reconstructed there. At the same time, the US images must also contain the carotid to allow motion tracking of the tissue at the location where DCA is most important. A US depth range covering superficial and deep tissue is also desired by clinicians, as it helps the interpretation of the anatomical context during freehand probe guidance. A 20 mm US depth range was therefore achieved via the spatial distribution and superposition of the 9 US patches (Fig. 3a). The distribution was chosen such that PWC with 7 different angles (−3° to 3° in 1° steps) was achieved in an area covering the upper part of the carotid, where US image quality was most important for motion tracking. The areas above and below the artery as well as at the lateral edges of the image contained fewer angles, resulting in reduced image quality. This was considered acceptable, as these regions were only needed for identification of the anatomy. For the demonstration of imaging depth using DCA, however, we aimed to achieve the maximum possible motion tracking accuracy, and thus the maximum possible contrast-to-noise ratio (CNR), given the system limitations. For this purpose, we designed an additional mode where all 9 US acquisitions were placed on top of the location of the carotid artery (Fig. 3b), so that PWC could be performed using 9 (−4° to 4° in 1° steps) instead of only 7 angles, as we noticed that the extra angles resulted in a visible reduction of the tracking noise.

Fig. 3 Arrangement of the 9 US patches (indicated by solid rectangles and lines) acquired with different angles for plane-wave compounding, covering an extended depth range (a) and a reduced depth range (b). The full image area is denoted by a dashed rectangle, and the upper edge of the carotid lumen is indicated by solid arcs.

Both the US and the PA images were reconstructed in complex RF mode with a pixel size of 0.15 mm (laterally) by 0.083 mm (axially). The 10 PA acquisitions of each burst were averaged before image reconstruction to reduce numerical cost; since DAS reconstruction is linear, this is equivalent to averaging the reconstructed images. This could be done without motion compensation, as tissue motion during a burst was negligible.

3.3 DCA Details

Motion tracking was performed as described in Sect. 2. The bandpass convolution kernels were implemented as a truncated sinc function multiplied with an exponential carrier

for \(k\) corresponding to virtual centre frequencies of [0.25, 0.5, 1.0] MHz. The local averaging of the complex correlation prior to determining the phase angle was implemented as successive convolutions in the lateral and axial directions with Hamming windows of length \(1/k\), corresponding to [3.0, 1.5, 0.75] mm. For the last stage, the tracking of the actual RF signal, the local averaging was implemented in the same way but with a window length of 1.5 mm.
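The relation between the virtual centre frequencies and the quoted window lengths, and one possible form of a truncated-sinc bandpass kernel, can be sketched as follows. The pulse-echo conversion k = 2f/c with an assumed c = 1500 m/s reproduces the quoted 3.0, 1.5 and 0.75 mm exactly; the kernel's relative bandwidth and truncation length are hypothetical, since the text does not state them.

```python
import numpy as np

C = 1500.0                                     # assumed speed of sound (m/s)
F_VIRTUAL = np.array([0.25e6, 0.5e6, 1.0e6])   # virtual centre frequencies from the text (Hz)

k = 2 * F_VIRTUAL / C          # pulse-echo spatial frequency of each stage (cycles/m)
print("1/k in mm:", 1e3 / k)   # 3.0, 1.5 and 0.75 mm for c = 1500 m/s


def sinc_bandpass_kernel(k_c, dz, rel_bw=1.0, n_zeros=3):
    """Hypothetical truncated-sinc bandpass kernel centred at spatial frequency k_c (cycles/m).
    rel_bw (passband width relative to k_c) and n_zeros (truncation after that many sinc
    zero crossings on each side) are illustrative choices, not values from the text."""
    bw = rel_bw * k_c
    n_half = int(np.ceil(n_zeros / bw / dz))
    x = np.arange(-n_half, n_half + 1) * dz                  # symmetric grid, centre at x = 0
    return np.sinc(bw * x) * np.exp(2j * np.pi * k_c * x)    # np.sinc(t) = sin(pi t) / (pi t)


h = sinc_bandpass_kernel(k[0], dz=0.083e-3)   # 0.083 mm axial pixel size (Sect. 3.2)
```

With `rel_bw=1.0` the ideal (untruncated) passband spans 0.5 to 1.5 times the centre frequency, so the large DC component of the envelope is suppressed apart from truncation leakage.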

Motion compensation was performed via interpolation of the complex envelope of the complex RF-mode images (the IQ-data), followed by a phase correction to account for deviations of the continuous-valued displacement map from the discrete pixel grid. The reason is that the axial grid required for interpolation of the IQ-data can be sampled with the resolution given by the probe bandwidth, whereas interpolation of RF-data would require a higher resolution given by the (larger) centre frequency and, thus, increased computing time for image reconstruction.
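One possible realisation of interpolation plus phase correction is sketched below; it is not necessarily the authors' exact formulation. If the RF signal is \(s(z) = S(z)\exp(2\pi i z/\Lambda)\) and the IQ data are \(S(z)\), then the IQ data of the compensated line \(s(z + \Delta z)\) are \(S(z + \Delta z)\exp(2\pi i\,\Delta z/\Lambda)\): the slowly varying envelope can be interpolated on the coarse IQ grid, and the carrier shift is restored by a phase factor. The function name, the linear interpolation and the test envelope are assumptions for illustration.

```python
import numpy as np


def compensate_iq(iq, z, disp, carrier_period):
    """Motion-compensate baseband IQ data by the displacement map `disp`: interpolate the
    slowly varying complex envelope at z + disp, then restore the carrier phase
    exp(2j*pi*disp/carrier_period) that demodulation removed. carrier_period is c/(2 f0)
    for pulse-echo US (assumption: c/f0 would apply to one-way PA data)."""
    zq = z + disp
    env = np.interp(zq, z, iq.real) + 1j * np.interp(zq, z, iq.imag)
    return env * np.exp(2j * np.pi * disp / carrier_period)


def envelope(zz):
    """Hypothetical slowly varying complex envelope S(z)."""
    return np.exp(-((zz - 10e-3) / 1e-3) ** 2) * np.exp(1j * zz / 2e-3)


LAM = 0.1e-3                          # assumed carrier period (pulse-echo)
z = np.arange(240) * 0.083e-3         # IQ grid with 0.083 mm pixels, coarser than LAM
disp = np.full_like(z, 0.0371e-3)     # hypothetical displacement map (uniform here)
comp = compensate_iq(envelope(z), z, disp, LAM)
exact = envelope(z + disp) * np.exp(2j * np.pi * disp / LAM)   # IQ of the shifted RF line
print("max error:", np.max(np.abs(comp - exact)))
```

Here the IQ grid is coarser than the carrier period, so interpolating the RF data directly on this grid would not work, whereas the envelope is smooth enough for linear interpolation.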

Apart from the tracking and motion compensation algorithm, an important detail of a DCA implementation is what motion information is extracted from the US images and how this information is used for motion compensation: motion tracking can either be performed between successive US images or relative to a fixed reference. When determining displacement relative to a fixed reference, the disadvantage is that the increasing decorrelation of echoes with time (e.g. due to out-of-plane motion) leads to increasing displacement quantification errors. The advantage is that, for any displacement, such errors are only made once. When determining displacement between successive US frames, the quantification errors are on average smaller, but when displacement maps are accumulated over longer time intervals, the errors accumulate too. Depending on the statistics of echo correlation and decorrelation, the accumulated errors can become larger than the error of a single large tracking step. Motion compensation can be performed either in a forward or in a backward way. In forward compensation, one option is to compensate the DCA result of time step \(n - 1\) for the displacement from US frame \(n - 1\) to frame \(n\), and update it with the PA frame from time step \(n\). This implementation is computationally efficient because only one motion compensation is required per time step, but it is only applicable if a moving average with exponential weights is desired or acceptable. Alternatively, a number of past PA frames from time steps \(n - m\) to \(n - 1\) can each be compensated for the displacement between the corresponding US frame (\(n - m\) to \(n - 1\)) and the US frame at step \(n\), and averaged with the PA frame at step \(n\) using arbitrary averaging weights. The disadvantage of this approach is that it is computationally more expensive, as \(m\) motion compensation operations are required at each time step.
In forward compensation, tissue motion is visually preserved in the DCA result, which can be considered an advantage. In backward compensation, on the other hand, PA frames from time step \(n\) are compensated for the displacement between US frame \(n\) and the US frame at a constant time step \(n_{0} < n\). Averaging can then be performed with arbitrary averaging weights, even though only one motion compensation operation is required per time step. This combination of flexibility and computational efficiency is a major advantage of backward over forward compensation. In addition, backward compensation of US images in parallel to PA can serve as useful feedback for assessing tracking quality: a static backward-compensated US image indicates perfect tracking.
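To illustrate why accumulated frame-to-frame tracking can end up worse than a single fixed-reference step, the Python sketch below assumes, hypothetically, that each frame-to-frame displacement estimate has an independent, zero-mean Gaussian error of standard deviation sigma. Under that assumption the accumulated error performs a random walk whose standard deviation grows as sigma·√n. Real tracking errors are correlated and depend on echo decorrelation, so the sketch only shows the trend.

```python
import numpy as np


def accumulated_error_std(sigma_step, n_steps, n_trials=4000, seed=0):
    """Std of the summed displacement error after each of n_steps frame-to-frame steps.

    Assumes independent, zero-mean Gaussian errors per step (a hypothetical model).
    """
    rng = np.random.default_rng(seed)
    step_errors = rng.normal(0.0, sigma_step, size=(n_trials, n_steps))
    return np.cumsum(step_errors, axis=1).std(axis=0)


err = accumulated_error_std(sigma_step=1.0, n_steps=100)
print(err[3], err[99])  # roughly 2 and 10, i.e. sigma * sqrt(n)
```

In this model, a fixed-reference estimate at step n is preferable as long as its single-step error, which grows with echo decorrelation, stays below roughly sigma·√n.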

Given the rather low frame rate of the Cvent system, a combination of fixed-reference motion tracking and backward compensation turned out to work best for imaging down to the depth of the carotid artery. For visual feedback on tracking accuracy, DCA was applied not only to PA but also to US images. The US images were thus not only backward compensated but also averaged over time. Besides allowing a coarse assessment of tracking accuracy based on the absence of motion in the backward-compensated image, averaging of motion-compensated RF-mode US images provides additional feedback, as small tracking errors show up as phase cancellation artifacts.

A moving average with exponential weights \(w\left( n \right)\) was used to define the DCA result \(P_{dca} \left( n \right)\) because this could be efficiently implemented in a recursive way:

Here, \(P\left( n \right)\) is the \(n\)th motion-compensated frame (either PA or US). For PA, a time constant \(T\) of 20 bursts (i.e. \(w\) decreases to 1/e over 20 bursts) was used in practice, corresponding to a 2.5 s average delay between acquisition and display; the influence of different time constants is shown in the next section. For US feedback on tracking quality, a shorter time constant of 5 bursts was used, as this was sufficient to reveal phase cancellation due to tracking errors while providing more immediate feedback (0.6 s), which was helpful for adjusting probe guidance.
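The recursive update itself is not reproduced in this text. The following is a minimal Python sketch, assuming that a frame m bursts old carries a weight proportional to exp(−m/T), which matches the stated 1/e decay over T bursts, and that the average is initialised with the first frame. The function names are hypothetical. The second function gives the weighted mean age of the averaged frames, a/(1 − a) with a = exp(−1/T), which for T = 20 is about 19.5 bursts, or about 2.4 s at the 8 Hz burst rate quoted later, consistent with the 2.5 s delay stated above.

```python
import numpy as np


def dca_recursive(frames, T):
    """Recursive exponential moving average over motion-compensated frames.

    frames : sequence of equally shaped (complex) arrays, one per burst
    T      : time constant in bursts; a frame's weight decays to 1/e after T bursts
    Returns the running DCA result after each burst.
    """
    a = np.exp(-1.0 / T)
    acc = np.asarray(frames[0], dtype=complex)
    history = [acc]
    for p in frames[1:]:
        acc = a * acc + (1.0 - a) * p  # one update per burst, no frame buffer needed
        history.append(acc)
    return history


def mean_lag_bursts(T):
    """Weighted mean age of the averaged frames in steady state: a / (1 - a)."""
    a = np.exp(-1.0 / T)
    return a / (1.0 - a)
```

After n updates, the result equals the explicit weighted sum a^n P(0) + Σ (1 − a) a^(n−j) P(j) over j = 1..n, whose weights sum to one; only the current average has to be stored.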

3.4 Image Display

The software of the Cvent system was programmed to display two images side by side. On the left, image 1 displays a “high quality” (HQ) US image to help the radiologist navigate within the anatomical context when looking for plaque. This US image is neither motion-compensated nor averaged. Standard US image post-processing was implemented (envelope detection, logarithmic compression, speckle filtering) to match standard B-mode US image quality as far as feasible, given the limited amount of data. On the right, image 2 provides an overlay of the DCA PA image with the DCA US image. Again, envelope detection and logarithmic compression were used for the PA image, but no speckle filtering. Time-gain compensation (TGC), i.e. a depth-dependent gain, was applied to both US and PA to reduce intensity variations caused by optical and ultrasound attenuation.
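The display mapping for either modality can be sketched in Python as follows. The sketch assumes that the log-compressed intensity is referenced to the image maximum (the reference level actually used on the Cvent system is not stated) and that TGC is applied as a linear dB/cm ramp, as suggested by the figure captions (e.g. a 50 dB range with 5 dB/cm TGC for US). Speckle filtering is not included, and the function name is hypothetical.

```python
import numpy as np


def to_display(iq, z_cm, dyn_range_db, tgc_db_per_cm):
    """Map a complex image (n_z, n_x) to grey levels in [0, 1].

    Intensity (squared envelope) is log-compressed, a linear dB/cm gain is added
    along depth, and the top dyn_range_db below the image maximum is displayed.
    """
    db = 10.0 * np.log10(np.abs(iq) ** 2 + 1e-30)  # envelope detection + log compression
    db = db + tgc_db_per_cm * z_cm[:, None]        # depth-dependent gain (TGC)
    floor = db.max() - dyn_range_db                # assumed: range referenced to image maximum
    return np.clip((db - floor) / dyn_range_db, 0.0, 1.0)
```

For PA, the settings from the captions would correspond to `to_display(iq_pa, z_cm, 25.0, 12.5)`, where `iq_pa` stands for a reconstructed complex PA image.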

Combining PA and US data in a single image has the advantage that the PA signal can be identified within the anatomical context given by the echo texture in US. Several ways of combining PA and US data are possible. One would be to simply blend the colour maps of the two modalities. With clinical probes, however, this is not a good approach: the limited bandwidth of clinical probes cuts off low spatial frequencies of PA signals, so that high spatial frequency detail in PA images is mainly found at sparsely distributed small blood vessels and at the surfaces of larger blood vessels or tissue interfaces (e.g. between fat and muscle). Large areas of the PA image thus do not contain useful information, and simply blending colour maps would unnecessarily tint the US image in these areas. A simple and popular way of combining PA and US data is therefore to display PA data in colour only where the PA intensity exceeds a threshold chosen above the expected noise level. The disadvantage of this technique is that noise consists not only of thermal noise but also of clutter. The latter can vary with the imaging location and with the light coupling efficiency, so that the threshold needs to be adapted in an unpredictable way.

To avoid this problem, we have chosen a different approach based on the coherence factor (CF) concept. Conventionally, the CF quantifies the coherence of a US or PA signal across the probe aperture as the squared coherent sum (phase preserved) normalised to the incoherent sum (of the intensity, without phase information) of the signal, after applying the same time delays as in conventional delay-and-sum (DAS) beamforming. A perfectly coherent signal yields the largest CF value, whereas a perfectly incoherent signal yields a comparably small value due to phase cancellation in the coherent sum. We adapted this concept to measure the coherence along the sequence of bursts, per pixel of the reconstructed (after DAS) and motion-compensated PA images. Clutter and noise decorrelate along the burst sequence and are thus “incoherent”, whereas true PA signal is correlated and thus shows a higher “coherence”. We therefore defined the CF as:

The sum in the numerator equals the conventional DCA result, whereas the sum in the denominator is an incoherent version of DCA. For a perfectly correlated PA signal, this CF becomes one, independent of the magnitude of the PA signal, whereas it takes on small values in noise- or clutter-dominated areas. The CF therefore provides a more practical way of identifying “real” PA signal than thresholding the PA intensity, because it depends only on the coherence and not on the PA signal magnitude. We use this CF for the combined PA/US display in the following way: first, the intensity (squared envelope) of the PA image is logarithmically compressed and the result is coded into an RGB colour map. Then, the colour channels are multiplied by the CF in each pixel. This approach leaves the freedom to code the intensity of PA signals as colour hue alone, whereas the colour brightness is determined by the “coherence”. Different signal intensity levels can thus be distinguished by hue, while the brightness emphasises “real” signal independent of signal intensity. For the results shown in the next section, a “blackbody” colour map was chosen. In a last step, the PA colour image is blended with the grayscale US DCA image, using (1-CF) as spatially dependent transparency value, after multiplying the US grayscale by 0.5 to provide better colour contrast between PA and US. To make the effect of the CF on the final image more pronounced and independent of depth-dependent optical attenuation, the CF is logarithmically compressed to a range of 7.5 dB, starting from −5 dB and including a TGC of 3.5 dB/cm.
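The CF equation itself is not reproduced in this text. A minimal Python sketch follows, assuming that the exponential DCA weights are used in both sums: the numerator is the squared magnitude of the coherent (complex) DCA result, and the denominator is the same moving average applied to the squared magnitude of each frame. With weights summing to one, the Cauchy-Schwarz inequality keeps this ratio between 0 and 1, reaching 1 only when all frames are identical. The function name and the exact normalisation are assumptions; the logarithmic compression of the CF described above is not included.

```python
import numpy as np


def dca_coherence_factor(frames, T):
    """Coherent DCA result and a burst-wise coherence factor per pixel.

    Assumed definition: CF = |sum_n w_n P_n|^2 / sum_n w_n |P_n|^2 with
    exponential weights w_n that sum to one (same recursion as the DCA average).
    """
    a = np.exp(-1.0 / T)
    coherent = np.asarray(frames[0], dtype=complex)
    incoherent = np.abs(coherent) ** 2
    for p in frames[1:]:
        coherent = a * coherent + (1.0 - a) * p                  # conventional DCA
        incoherent = a * incoherent + (1.0 - a) * np.abs(p) ** 2  # "incoherent DCA"
    cf = np.abs(coherent) ** 2 / np.maximum(incoherent, 1e-30)
    return coherent, cf
```

Scaling all frames by a constant leaves the CF unchanged, which is the amplitude independence exploited for the display. For pure noise with T = 20, the CF settles around (1 − a)/(1 + a), roughly 0.025.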

4 Results

This section presents results of detecting blood vessels at the depth of the carotid artery in a healthy volunteer. In the first part, the influence of the individual signal processing steps is illustrated on a single example data set. In the second part, further imaging examples are shown to underline the reproducibility of the achieved imaging depth and to provide some recommendations on image interpretation.

4.1 Illustration of Processing Steps

Figure 4a shows the final side-by-side display of the “conventional” HQ B-mode US image and the PA/US overlay. For this and the following results, the limited US depth range configuration was used to achieve maximum tracking accuracy. The HQ B-mode US image on the left reveals anatomical detail of the tissue surrounding the carotid artery, including the carotid lumen, thyroid gland, small vessels and muscle epimysia. The PA/US overlay clearly shows PA signal emanating from the upper surface of the carotid lumen. The appearance of this signal is typical for PA imaging with conventional clinical US probes: only the lumen surface is seen because the limited bandwidth suppresses low spatial frequencies of optical absorption (the light penetration depth itself is a few mm), and the signal is laterally limited by the probe aperture: of the cylindrical transient generated by the vessel lumen, only the part that propagates within the sector indicated by dashed lines is detected by the probe. Apart from the carotid lumen, PA signal is observed at the small vessels indicated in the US image. Note that the signal intensity is similar in spite of the substantially different vessel sizes. This is expected, as the signal amplitude is proportional to the absorption contrast at the vessel boundaries, which is similar because all vessels are situated at roughly the same depth and thus receive comparable fluence. The averaging time constant was 20 bursts, corresponding to 2.5 s. Figure 4b shows the PA/US overlay when averaging the PA signal with the same time constant of 20 bursts but without prior motion compensation. As a consequence of phase cancellation, the carotid signal is not discernible from the background noise. The results in Fig. 4 thus clearly demonstrate that DCA is a requirement for deep imaging, especially in low-energy PA imaging where long averaging times are required.
Apart from enabling the detection of the carotid signal, DCA also reduced the background noise level inside and around the carotid lumen, as a comparison of Fig. 4a with Fig. 4b reveals. This indicates that part of the noise was systematic noise that persisted in Fig. 4b but was reduced in Fig. 4a by the motion correction. Such noise can be explained by clutter: PA transients generated in or just below the skin, where the laser fluence is largest, reverberate within the superficial tissue layers.

a Side-by-side display of B-mode US (left) and PA/US overlay (right). The US image shows a transversal section through the left carotid artery (C: carotid lumen; J: internal jugular vein; v: small vessel; th: thyroid gland and e: muscle epimysia). The overlay of PA (blackbody colour scale) with US (grayscale) shows strong PA signal at the upper surface of the carotid lumen (arrowhead), around the jugular vein and around the small vessel. The lateral extension of the carotid lumen signal is limited by the receive angular aperture, indicated by dashed lines. b For comparison, when averaging without motion compensation, the carotid signal is missing. Intensity display ranges were: 50 dB for US (5 dB/cm TGC), 25 dB for PA (12.5 dB/cm TGC)

Figure 5 illustrates the multi-stage motion tracking process, exemplified on the axial displacement map detected at the peak of the carotid wall motion. Three envelope bandpass stages were chosen, at bandpass centre frequencies of 0.25, 0.5 and 1.0 MHz. In the first step, the 0.25 MHz-filtered envelope is used for tracking. Figure 5a shows the resulting displacement map. Note that displacement values are available only within the region of interest (RoI) covered by the US image. The 0.25 MHz centre frequency was chosen because it provided the most robust motion compensation of the US images (by visual inspection). This displacement map, however, has very low spatial resolution, so that short-scale variations of displacement magnitude are missed. As a result, only the upward motion of the upper carotid wall is captured, because the upper carotid wall gives the strongest echo within the coarse resolution cell. This leads to an overestimation of the displacement magnitude in a large area outside the vessel lumen. To avoid such errors, the displacement map is refined in a second step by phase-tracking the 0.5 MHz-filtered US envelope, resulting in Fig. 5b. Owing to this refinement, the displacement map has improved spatial resolution and also captures the downward motion of the lower carotid wall. At the same time, the displacement magnitude is decreased above the upper carotid wall because the weaker echoes from the tissue overlying the carotid can now be spatially separated from the strong carotid wall echo, resulting in a more accurate estimate of the displacement magnitude in this area. The displacement map is further refined in the third tracking stage, based on the 1.0 MHz-filtered envelope (Fig. 5c). While the spatial resolution is again markedly improved, the map shows an increased level of bumpy short-scale spatial variations. In a last step (Fig. 5d), the displacement map is refined by phase tracking the RF-mode US images instead of the bandpass-filtered envelope. After this phase correction, the spatial distribution is smooth over a large area, as one would expect from a real displacement field. The difference between Fig. 5d and c underlines the statement made earlier that RF phase tracking is more robust than envelope tracking: the increased noise in Fig. 5c can be attributed to decorrelation of the envelope due to changing interference of echoes. Only a few small areas in Fig. 5d show unrealistically sharp discontinuities that result from phase aliasing. Comparison with the HQ US image in Fig. 4 reveals that these areas lie in regions of low echogenicity, mainly inside the hypoechoic carotid lumen. This is expected, as such low-echogenicity areas can be dominated by higher-order echoes that move differently from first-order echoes.
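One reason for refining coarse-to-fine is the limited unambiguous range of phase tracking, ±1/(2k). The Python sketch below illustrates only this aspect, for a single scalar displacement; the spatial-resolution aspect discussed above is not modelled. It assumes c = 1.5 mm/µs, a 7.5 MHz RF stage, and a fixed, hypothetical phase error of 0.3 rad at every stage. Each stage measures the residual left by the previous stages modulo 2π.

```python
import numpy as np

C = 1.5  # assumed speed of sound, mm/us


def wrapped_estimate(residual, k, phase_err):
    """Displacement seen by one stage: the phase is only known modulo 2*pi."""
    phi = np.angle(np.exp(1j * (-2 * np.pi * k * residual + phase_err)))
    return -phi / (2 * np.pi * k)


def coarse_to_fine(u_true, f_stages_mhz, phase_err=0.3):
    """Accumulate residual estimates from coarse to fine stages (scalar toy model)."""
    total = 0.0
    for f in f_stages_mhz:
        k = 2 * f / C  # two-way spatial frequency, cycles/mm
        total += wrapped_estimate(u_true - total, k, phase_err)
    return total


multi = coarse_to_fine(1.0, [0.25, 0.5, 1.0, 7.5])  # close to 1.0 mm
rf_only = coarse_to_fine(1.0, [7.5])                # aliased: close to 0 mm
```

With a true displacement of 1.0 mm, the staged estimate ends within about 0.005 mm of the truth, limited by the phase error of the finest stage, whereas RF-only tracking aliases because 1.0 mm far exceeds its ±0.05 mm unambiguous range.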

Axial displacement field detected at maximum carotid motion, based on multi-stage bandpass-filtered envelope tracking: a stage 1 at 0.25 MHz bandpass centre frequency; b stage 2 at 0.5 MHz; c stage 3 at 1.0 MHz. d Phase correction at 7.5 MHz smooths the result of stage 3. The colour scale spans ± 0.25 mm, where white and black indicate motion towards and away from the transducer, respectively

With the chosen backward compensation, the DCA PA image is static and thus the overlay with US also requires a static US image. Therefore, motion compensation is applied to US the same way as to PA. As already mentioned, the motion compensated US image provides a useful real-time feedback on tracking quality, as tracking errors can be identified based on residual motion. In addition, when averaging the motion-compensated US images, even small errors can become visible when they lead to phase cancellation. This is illustrated in Fig. 6: phase cancellation shows up as fluctuating small areas where echo intensity is transiently reduced. Based on the visual assessment of residual echo motion and phase cancellation areas, freehand probe motion can be adapted in real-time to minimise the frequency of tracking errors, to provide an optimum data set to which DCA can be most successfully applied.

Illustration of using the DCA US image for real-time tracking quality feedback. This figure shows the US image underlying the PA/US overlay, but without the PA data. a Perfect tracking results in a US image that is static and shows a stable intensity distribution. b With less-than-perfect tracking, the image may still look static, but small tracking errors result in small fluctuating areas of decreased intensity due to phase cancellation (arrows). Intensity range: 50 dB (5 dB/cm TGC)

Apart from choosing between forward or backward motion compensation, one main decision to be made when implementing DCA is between accumulative and fixed-reference motion tracking. As already mentioned, both approaches can have advantages and disadvantages. For our system we use fixed-reference tracking because accumulative tracking turned out to be less robust. This is illustrated in Fig. 7: accumulative and fixed-reference tracking led to similar contrast-to-noise ratio (CNR), but accumulative tracking resulted in an increasingly distorted DCA image already after 34 bursts (4 s averaging time) due to accumulation of tracking errors. In comparison, fixed-reference tracking is limited in tracking accuracy, but no error accumulation occurs so that the DCA result remains robust over time.

DCA result for accumulative (a) and fixed-reference tracking (b), at burst numbers 14, 24, 34 and 50 (left to right). Averaging time constant of 20 bursts, 25 dB intensity range (12.5 dB/cm TGC). Whereas contrast is similar for the two techniques, accumulative tracking leads to geometrical distortions, best visible at the lower edge of the image or at the curvature of the carotid signal (arrowhead)

A further decision is between coherent averaging (of motion-compensated RF-mode PA images) and incoherent averaging (of the motion-compensated PA envelope). Incoherent averaging has the advantage that tracking inaccuracies cannot lead to phase cancellation as in coherent averaging. On the downside, incoherent averaging is less efficient in terms of noise reduction: the average of the squared magnitude of zero-mean Gaussian noise converges to the noise variance rather than to zero, and only its fluctuations decrease with the number of averages, whereas the coherent average of the noise itself converges to zero. This positive convergence limit adds a disturbing bias to the signal intensity distribution. This is illustrated in Fig. 8, where the DCA results of the two techniques can be compared. Note that both images are displayed on identical dB scales. Within the first cm of depth, where SNR is large, strong PA signals are coded in the same colour hue in both images. In areas where the signal is dominated by noise, the colour reveals an increased intensity level in Fig. 8b compared to Fig. 8a due to the biased convergence limit. Because of the TGC, the colour indicates larger intensity values with increasing depth. Since the SNR of e.g. the carotid signal is quite small, the positive average noise adds an offset, so that the colour hue of the carotid signal indicates a higher intensity in Fig. 8b than in Fig. 8a. Note that the CNR is visibly decreased, e.g. around the jugular vein, as the noisy background takes on the same colour hue as the signal from the jugular vein. We chose coherent DCA because it has an overall improved CNR compared to incoherent DCA (better convergence properties, and no marked sensitivity to phase cancellation errors).
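The convergence argument can be checked numerically. The Python sketch below uses uniform averaging of simulated noise-only pixels (zero-mean circular complex Gaussian noise with unit power) instead of the exponential DCA weights, for simplicity; the numbers of averages and pixels are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(1)
n_avg, n_pix = 100, 5000
# Zero-mean circular complex Gaussian noise with E|n|^2 = 1 (noise-only pixels)
noise = (rng.normal(size=(n_avg, n_pix)) + 1j * rng.normal(size=(n_avg, n_pix))) / np.sqrt(2)

coherent = np.abs(noise.mean(axis=0)) ** 2      # average complex data, then take intensity
incoherent = (np.abs(noise) ** 2).mean(axis=0)  # take intensity, then average

print(coherent.mean())    # close to 1 / n_avg = 0.01: noise power keeps falling
print(incoherent.mean())  # close to 1: converges to the noise power, a positive bias
print(incoherent.std())   # close to 1 / sqrt(n_avg) = 0.1: only the fluctuations shrink
```

After 100 averages, coherent averaging has reduced the residual noise power by about 20 dB, while incoherent averaging leaves a noise floor at the full noise power and only narrows its spread.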

Coherent (a) and incoherent (b) DCA. 25 dB intensity range (12.5 dB/cm TGC). Note the similar intensity level of superficial and deep PA signals (empty arrowheads); due to the positive mean squared noise (full arrowheads), however, incoherent DCA has reduced CNR compared to coherent DCA

Finally, an important parameter for DCA is the averaging time constant. All PA DCA results shown so far were based on a time constant of 20 bursts. The time constant has to be chosen as a trade-off between SCR and real-time feedback. For comparison of SCR, Fig. 9 shows DCA results for time constants of 10, 20, 40 and 80 bursts, corresponding, at an 8 Hz burst rate, to 1.25, 2.5, 5 and 10 s averaging time, respectively. SCR markedly increases from 10 to 20 bursts but converges above 40 bursts. The reason for this convergence, as opposed to a continuous increase, is that part of the noise consists of persistent clutter. Even though part of the clutter can be reduced owing to the carotid motion, this reduction is limited: since the carotid displacement magnitude is limited and the motion is periodic, only a limited number of statistically independent clutter realisations can be averaged, regardless of how long the averaging time is chosen. Even though SCR slightly improved from 20 to 40 bursts, we considered 5 s too long an averaging time for real-time feedback. We therefore chose a time constant of 20 bursts, corresponding to 2.5 s, which provides close-to-maximum SCR with a still acceptable delay between acquisition and display.
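The saturation of SCR can be rationalised with a deliberately crude toy model, sketched in Python below with entirely hypothetical parameters. Thermal noise is assumed to be independent from burst to burst, so its residual power falls as 1/N_eff, where N_eff = (1 + a)/(1 − a) is the effective number of bursts of an exponential average with a = exp(−1/T). Clutter, in contrast, is assumed to offer only n_clutter independent realisations during the periodic carotid motion, which puts a floor under the residual noise.

```python
import numpy as np


def residual_noise_power(T, sigma_thermal, sigma_clutter, n_clutter):
    """Toy model of residual noise power after exponential averaging (all inputs hypothetical)."""
    a = np.exp(-1.0 / T)
    n_eff = (1.0 + a) / (1.0 - a)  # effective number of independent bursts averaged
    thermal = sigma_thermal ** 2 / n_eff
    # Crude assumption: persistent clutter offers at most n_clutter independent realisations
    clutter = sigma_clutter ** 2 / min(n_clutter, n_eff)
    return thermal + clutter


for T in (10, 20, 40, 80):
    p = residual_noise_power(T, sigma_thermal=1.0, sigma_clutter=0.3, n_clutter=5)
    print(T, round(-10 * np.log10(p), 1))  # residual noise reduction in dB
```

In this model each doubling of T buys less than the previous one, because the thermal term keeps halving while the clutter term stays constant.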

The last step in the DCA processing chain is the combination of the PA with the US image. The way this is done has an important influence on the visibility and interpretation of the PA signal. As mentioned earlier, we chose an overlay of the two images based on the DCA coherence factor (CF), as the CF provides an amplitude-independent measure of the significance of a PA signal. The way the CF is used for that purpose is illustrated in Fig. 10. The first step is the choice of the base colour map that defines the colour hue for displaying the PA signal intensity. We chose a “blackbody” colour map because it resulted in the visually most pleasant blend with the grayscale of the US image. The blackbody colour map runs from black for zero signal intensity, through red and yellow, to white for the highest intensity. Therefore, not only the colour hue but also the colour brightness depends on signal intensity. Alternatively, one could choose a colour map that codes signal intensity entirely in colour hue. As a step in this direction, we also show results for a modified “jet” colour map. The original jet colour map runs from dark blue through bright blue, cyan, green and yellow to bright red and dark red. To reduce brightness variation, this map was truncated to range from bright blue to bright red. Figure 10 shows results for both the blackbody and the modified jet map. Figure 10a shows the result of coding the PA intensity into the respective colour map. Assuming that only coherent signal (coherent in the sense defined earlier) is “real” and worth displaying, the CF is then used to highlight “coherent” and suppress “incoherent” signal by coding the CF into the colour brightness. The result is shown in Fig. 10b. In both colour maps, some features retain their original colour hue and brightness. In the lower half of the image, these are notably the features that can be assigned (based on the US image) to the jugular vein, the carotid and a small vessel.
Relative to these, the brightness of the background is reduced. With the blackbody colour map, it is difficult to determine from the CF-faded image alone whether a dark pixel is due to a dark value in the original colour map or to CF fading. With the modified jet colour map, on the other hand, it is clear that mostly areas that were initially blue (low intensity) were set to black by CF fading. Some areas that were initially green (intermediate intensity), however, were set to black, too, and some features that were initially blue (low intensity) remain so in the CF-faded image, demonstrating that the CF provides information complementary to signal intensity. In a last step, the CF is used to set the local transparency in the overlay of the PA onto the grayscale US image (Fig. 10c): in pixels where the CF is high, transparency is set low, so that the pixel colour is determined by the PA signal, whereas transparency is set high in pixels where the CF is low, to show the anatomical context in areas where the PA signal can be assumed not to contain valuable information.
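The fading and blending steps can be sketched in Python as follows. The sketch assumes that the PA level, the (already compressed) CF and the US grey level are all normalised to [0, 1], and it replaces the blackbody map with a crude black-red-yellow-white ramp. The function names are hypothetical; the factor 0.5 on the US grey level and the use of (1-CF) as transparency follow the description in the text.

```python
import numpy as np


def blackbody_like(x):
    """Crude black -> red -> yellow -> white ramp for x in [0, 1] (approximation)."""
    x = np.clip(x, 0.0, 1.0)
    r = np.clip(3 * x, 0.0, 1.0)
    g = np.clip(3 * x - 1, 0.0, 1.0)
    b = np.clip(3 * x - 2, 0.0, 1.0)
    return np.stack([r, g, b], axis=-1)


def overlay(pa_level, cf, us_gray, us_scale=0.5):
    """pa_level, cf, us_gray in [0, 1], same 2D shape; returns RGB in [0, 1]."""
    pa_rgb = blackbody_like(pa_level) * cf[..., None]  # CF fading of the colour brightness
    us_rgb = np.repeat((us_scale * us_gray)[..., None], 3, axis=-1)
    alpha = cf[..., None]  # opacity of the PA layer, i.e. transparency = 1 - CF
    return alpha * pa_rgb + (1 - alpha) * us_rgb


# Two pixels with strong PA signal: one fully coherent, one fully incoherent
out = overlay(np.array([[1.0, 1.0]]), np.array([[1.0, 0.0]]), np.array([[0.2, 0.8]]))
```

The coherent pixel is shown in the PA colour (white here), while the incoherent pixel shows only the dimmed US grey level (0.4), regardless of its PA intensity.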

Illustration of the use of the DCA coherence factor (CF) for colour-coding PA data and for the PA/US overlay, starting from two different base colour maps, blackbody (top) and modified jet (bottom): a PA data without CF fading. b Fading of PA colour map using CF. c Overlay of faded PA colour map with US grayscale map using (1-CF) as transparency value. To improve visibility of the PA signal, the US colour values were multiplied by 0.5 before overlay. The signals from carotid lumen (C), jugular vein (J) and the small vessel (v) maintain their colour value with CF fading. Some medium intensity features (e.g. solid circles) keep their colour hue but are attenuated by CF fading. The low intensity background (blue in modified jet map) becomes black by CF fading, apart from some pixels (e.g. dotted circle)

4.2 Further Results

The remainder of this section is dedicated to showing and discussing further results and to providing guidance on image interpretation.

As mentioned before, the limited bandwidth of the clinical probe acts like a spatial bandpass filter, so that only rapid spatial variations of optical absorption are detected, e.g. only the surface of large blood vessels. In addition, the limited aperture size allows only part of the surface to be seen, i.e. the part from which the PA transient propagates into the probe aperture. This leads to the typical arclet-shaped appearance of the PA signal emerging from the carotid lumen. For a 1D array like the one used in this study, the limited aperture of the probe has a further implication not discussed so far: to provide a well-defined imaging plane, the array aperture is by design focused in elevation (the dimension perpendicular to the imaging plane). For that reason, a main requirement for detecting the carotid signal is that the imaging plane be perpendicular to the lumen surface. The transients emanating from the lumen surface and hitting the transducer then propagate parallel to the imaging plane and are detected with maximum elevation sensitivity. With increasing deviation from a perpendicular orientation, the same transients arrive at an increasing elevation angle and sensitivity rapidly decreases. For reasons that are not yet clear (but will be discussed in the next section), it can be difficult to detect the carotid signal in a transverse section even with perfectly perpendicular orientation. The chance to at least partially detect the carotid is higher in a longitudinal section: there, the carotid signal appears as a line (Fig. 11a) that covers a larger number of pixels and thus provides richer statistics for identifying this signal.
A longitudinal section also makes it more practical to adjust the probe orientation: by only slightly tilting or moving the probe, the angle between imaging plane and lumen surface changes rapidly due to the surface curvature, so that the sensitivity can be optimised without substantially changing the position of the imaging plane. For a transverse section, optimising the angle between imaging plane and lumen surface requires a search over a large probe tilt angle range, and the location of the section within the lumen changes together with the tilt angle, so that two probe orientation parameters need to be optimised simultaneously to detect a desired location.

Further results demonstrating imaging depth. a longitudinal section of left carotid artery; b–d transversal sections of right internal jugular vein. In c and d the jugular vein appears flattened due to compression of the tissue (C: carotid lumen; J: jugular vein lumen). In all images, the lateral extent of the PA signal is indicated by brackets. Intensity range: 50 dB for US (5 dB/cm TGC), 25 dB for PA (12.5 dB/cm TGC)

In our experience, it is much easier to catch the signal emanating from the internal jugular vein (and from smaller vessels) than from the carotid itself. This is illustrated in Fig. 11b–d where the signal from the internal jugular vein can be clearly identified even when it is located near the lower edge of the image. Note that, in Fig. 11c, the carotid lumen is visible on US at the same depth as the jugular vein (but no PA signal is detected due to the difficulties mentioned before). These results therefore further underline the ability of the presented system to detect optical absorption in the blood at the depth of the carotid artery.

So far, all results were based on a limited-RoI US image. As mentioned in the previous section, the US RoI depth range was limited to 1 cm in order to achieve maximum angle coverage for PWC and thus maximum tracking quality within the hardware limitations of the available system. Apart from showing the anatomical context of the PA signal and serving for motion tracking, however, the US image is needed for identifying PA reflection artifacts based on the presence of echogenic structures seen in US. For this purpose, the US image needs to show structures located at half the depth of the PA signal of interest: a PA transient generated at or just below the skin and reflected back by a structure at half that depth travels the same total distance as a transient from the signal depth, and is therefore reconstructed at the same location. We therefore implemented a software version in which a larger depth range is shown in the US image, at the cost of giving up angle coverage and thus tracking quality and DCA performance. Figure 12 shows some results using this software, to illustrate how the US image can be used for evaluating the authenticity of PA signals: in Fig. 12a (as already in Fig. 4), PA signal is visible that can anatomically be related to the surface of the left carotid lumen. At half its depth, no reflecting structure is seen on US (dashed line). These two observations together give confidence that the PA signal actually originates from the lumen and is not a reflection artifact. In Fig. 12b, the left internal jugular vein is seen on US. It appears as a line because it is fully collapsed by the static pressure exerted by the probe. Next to the jugular vein, a small vessel is visible. PA signal is visible inside the jugular vein as well as in the small vessel. The authenticity of the PA signal is again suggested by the correspondence of the PA signal to the anatomy seen on US, together with the absence of reflectors on US at half the depth. In Fig. 12c, PA signal is visible at the location of a vessel seen on US. At half the depth, a structure is visible on US which might cause a reflection artifact at the depth of the PA signal.
However, one would then expect further artifacts of similar or higher intensity in the near vicinity, corresponding to other and more intense structures visible in the US image at similar depth. Moreover, since the same PA signal is often found at the location of this vessel (as in Fig. 4), one can safely assume that this is real signal. Apart from blood vessels, epimysia often exhibit a distinct PA signal, as in Fig. 12d. Again, the authenticity is confirmed by the anatomical correspondence and by the absence of a reflector at half the depth. The horizontal stripes of high PA signal intensity present in all images shown so far can partially be interpreted as echo artifacts: they do not show anatomical correspondence with the US image, but can be interpreted as reverberations between the skin surface (outside the RoI shown in the US images) and the muscle surface and horizontal muscle layers (visible on US).
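The half-depth check can be written down as a small helper. The Python sketch below is purely illustrative: the function name and the 1 mm tolerance are hypothetical, and in practice the check is made visually on the US image. It encodes the geometry described above: a transient from the skin that is reflected at depth z_r and received at the surface travels 2·z_r, which one-way PA reconstruction places at depth 2·z_r.

```python
def reflection_candidates(pa_depth_mm, reflector_depths_mm, tol_mm=1.0):
    """Reflector depths near half the PA depth, i.e. possible sources of a reflection artifact.

    A transient generated at the skin, reflected at depth z_r and received at the
    surface travels 2 * z_r, which one-way PA reconstruction places at depth 2 * z_r.
    """
    half_depth = pa_depth_mm / 2.0
    return [z_r for z_r in reflector_depths_mm if abs(z_r - half_depth) <= tol_mm]


# PA signal at 15 mm; echogenic structures seen on US at 4.0, 7.2 and 12.0 mm
print(reflection_candidates(15.0, [4.0, 7.2, 12.0]))  # [7.2]
```

An empty result corresponds to the situation in Fig. 12a, b and d (no reflector at half the depth); a non-empty result, as in Fig. 12c, calls for the additional plausibility arguments given above.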

a–d Evaluation of the authenticity of the PA signal is based on US in two ways: by the correspondence of the location of the PA signal to anatomical features (indicated by solid white lines or brackets; C: carotid lumen, J: internal jugular vein, v: vessel, e: epimysium), and by the absence of strongly reflecting structures in the US image at half the depth of the PA signal (indicated by straight dashed lines and dashed circumscribed areas). Intensity range: 40 dB for US (5 dB/cm TGC), 25 dB for PA (12.5 dB/cm TGC)

5 Discussion and Conclusion

The results presented in this chapter demonstrate that DCA is a key requirement for deep PA imaging using low energy (LED or LD based) PA systems, and we have shown that this technique allows detecting the PA signal of the carotid artery using a compact fully-integrated PA/US probe in a freehand approach.

The results show the known limitations of using a clinical linear array probe for PA imaging: the limited probe bandwidth and aperture only allow sharp boundaries to be detected, e.g. at the upper (and sometimes lower) edge of a vessel lumen. Moreover, sensitivity depends on the orientation angle of the PA signal sources relative to the imaging plane. This limits how much can be interpreted from PA images and, in the worst case, renders quantitative interpretation of PA signal amplitude impossible. One approach to solving this problem has been to use concave arrays that are better matched to, e.g., the neck or breast geometry to increase angle coverage [41, 42], together with a larger bandwidth better suited to capturing a tomographic section. Such a system, however, has the disadvantage of a less well-defined imaging plane: the size of the elevation focus, which defines the thickness of the imaged tissue slice, depends on the acoustic wavelength relative to the transducer element size. Below a certain frequency, the focusing capability is lost, and if these frequencies are not suppressed by the transducer's frequency response (or by subsequent signal filtering), signals from anywhere in the 3D tissue volume are projected onto the same 2D image. This becomes a problem in PA imaging when signals from just below the skin surface but outside the imaging plane are orders of magnitude stronger than signals from deep inside the tissue, owing to the large difference in laser fluence. Therefore, the lowest detected frequency also defines the minimum imaging plane thickness. If the goal is to detect the inside of the lumen of the carotid artery, for example, the imaging plane thickness would necessarily have to be larger than the diameter of the artery, i.e. around 10 mm. This would preclude combining such a system with conventional US imaging, where a much better elevational resolution (typically below 1 mm) is desired. 
A way to avoid the ambiguity of the PA imaging plane is to use a 2D array [43] for Rx beamsteering in azimuth and elevation. To achieve a sufficient tomographic coverage, however, the aperture size of such an array must be large in both dimensions, so that it becomes again impractical for an integration with standard handheld US.
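The link between frequency and slice thickness can be made concrete with two textbook first-order approximations. This is a sketch under stated assumptions, not a beam simulation: the elevational focal width is taken as λF/D (order-one prefactor omitted), and an aperture of height D is assumed unable to focus usefully beyond its near-field length D²/(4λ). The 5 mm element height and 15 mm depth are hypothetical values, not the specification of the probe used here.

```python
C = 1540.0  # assumed speed of sound in soft tissue, m/s


def wavelength(freq_hz, c=C):
    return c / freq_hz


def elevation_focal_width(freq_hz, focal_depth_m, element_height_m, c=C):
    """First-order diffraction estimate lambda * F / D (prefactor omitted)."""
    return wavelength(freq_hz, c) * focal_depth_m / element_height_m


def near_field_length(freq_hz, element_height_m, c=C):
    """Natural focal distance D**2 / (4 * lambda) of an aperture of height D."""
    return element_height_m ** 2 / (4.0 * wavelength(freq_hz, c))


def min_focusing_frequency(depth_m, element_height_m, c=C):
    """Lowest frequency whose near field still reaches depth_m: 4 c z / D**2."""
    return 4.0 * c * depth_m / element_height_m ** 2


D, depth = 5e-3, 15e-3                                 # hypothetical element height, target depth
w_centre = elevation_focal_width(7.5e6, depth, D)      # slice width at the centre frequency
f_min = min_focusing_frequency(depth, D)               # below this, no focusing at 15 mm
```

With these numbers the 7.5 MHz component focuses to roughly 0.6 mm at 15 mm depth, whereas components below about 3.7 MHz can no longer be focused there, so the slice they contribute to broadens well beyond the focal value.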

We therefore foresee that, while a dedicated broadband curved array can have specific clinical applications, a conventional clinical probe can have advantages in applications where the limited bandwidth is not a substantial problem and where PA can add important diagnostic information to conventional US. If, for example, the goal is to quantify blood oxygen saturation (SO2) in small vessels, reconstructing the inside of the vessel lumen is not required (SO2 can be regarded as uniform across the lumen), and neither are quantitative absolute values of PA signal amplitude (SO2 can be determined from relative variations of PA signal amplitude as a function of optical wavelength [44]). In the Cvent project, the goal is to detect blood clots inside plaque. In the previous section, we mentioned that detecting the signal from the carotid lumen requires considerable experience in probe guidance, because the detection sensitivity depends on a perpendicular orientation of the imaging plane relative to the lumen surface. This is, however, less of a problem when detecting blood clots: the optical absorption by clots is distributed non-uniformly inside plaque, so we expect it to act like a collection of small, independent absorbing centres [45]. Figure 13 illustrates, in a phantom, the expected difference between the PA signal inside plaque and the signal from a healthy artery. For this purpose, a uniformly absorbing but acoustically transparent cylinder was embedded in an acoustically scattering background medium. At one position, the cylinder contains a small echogenic volume mixed with graphite powder, mimicking plaque that contains blood clots. When the phantom is imaged in a “healthy” area (Fig. 13a), it looks similar to the images of a healthy volunteer: the cylinder appears as a hypoechoic area on US, the transverse section shows an arc-shaped PA signal at the upper surface of this area, and the longitudinal section shows a line-shaped signal. 
When the phantom is imaged at the position of the “plaque” (Fig. 13b), the plaque appears as diffuse PA speckle. This diffuse type of signal can be detected independently of the orientation of the imaging plane, because it behaves like a collection of independent, isotropically radiating sources.
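Why relative amplitudes suffice for SO2 can be illustrated with two-wavelength linear unmixing. This is a minimal sketch, not the method of [44]: the absorption coefficients in `EPS` are placeholder numbers, not tabulated haemoglobin spectra, and the model assumes the same unknown scale factor (fluence, Grüneisen parameter, system gain) at both wavelengths. In tissue, fluence is wavelength dependent, so in practice this assumption requires fluence correction.

```python
import numpy as np

# Placeholder absorption values, columns [HbO2, Hb], rows = two wavelengths.
# Hypothetical numbers for illustration only; use tabulated spectra in practice.
EPS = np.array([[0.30, 0.70],
                [0.80, 0.60]])


def so2_from_pa(amplitudes, eps=EPS):
    """Estimate SO2 from PA amplitudes at two wavelengths.

    Model: amplitudes = k * eps @ [c_HbO2, c_Hb] with one unknown k shared by
    both wavelengths; k cancels in the ratio c_HbO2 / (c_HbO2 + c_Hb).
    """
    conc = np.linalg.solve(eps, np.asarray(amplitudes, dtype=float))
    return conc[0] / (conc[0] + conc[1])


true_conc = np.array([0.9, 0.1])   # hypothetical HbO2, Hb (arbitrary units)
pa = 3.7 * EPS @ true_conc         # 3.7 stands in for the unknown common scale
so2 = so2_from_pa(pa)
```

Multiplying `pa` by any common factor leaves `so2` unchanged, which is the property that makes absolute amplitude calibration unnecessary under this model.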

Phantom mimicking a healthy carotid artery (a) and an artery containing plaque with haemorrhage (b). C: “healthy carotid” signal; p: “plaque” signal. Note that the apparent PA signal at the lower edge of the “carotid” lumen is an echo artifact, caused by an impedance mismatch between the background and the cylinder medium

In our study, we made the interesting observation that detecting the PA signal from the carotid artery is substantially more difficult than detecting that from adjacent small vessels or from the internal jugular vein at the same depth. The 808 nm optical wavelength used in this study is very close to the isosbestic point of the absorption spectra of oxy- and deoxyhaemoglobin, so the absorption contrast is expected to be nearly identical for the carotid and the other vessels. A possible explanation for the observed difference in signal intensity may, however, lie in the different morphology of the carotid artery wall compared with surrounding vessels: it contains a substantially thicker muscle cell layer (tunica media) that is perfused by capillaries (vasa vasorum). The depth profile of optical absorption by haemoglobin may thus resemble a staircase more than a single step, so that the optical contrast is shifted towards low spatial frequencies that the band-limited probe may detect less well. At the same time, the intensity of the light reaching the lumen interior is reduced by the thicker tunica media.
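The staircase argument can be checked with a one-dimensional toy model. This sketch assumes that the PA wave from a planar absorber reaching the probe has the shape of the initial pressure profile, that the probe acts as an ideal 4–11 MHz bandpass (a hypothetical band for a 7.5 MHz array) mapped to spatial frequency via f/c, and that a 0.5 mm layer at half the lumen absorption stands in for the perfused tunica media; fluence attenuation is ignored.

```python
import numpy as np

C = 1540.0              # assumed speed of sound, m/s
F_LO, F_HI = 4e6, 11e6  # hypothetical detection band of a 7.5 MHz linear array


def probe_filter(profile, dz, f_lo=F_LO, f_hi=F_HI, c=C):
    """Ideal bandpass of a 1D initial-pressure profile.

    Temporal frequency f maps to spatial frequency f / c (cycles per metre).
    """
    spectrum = np.fft.rfft(profile)
    nu = np.fft.rfftfreq(profile.size, d=dz)
    band = (nu >= f_lo / c) & (nu <= f_hi / c)
    return np.fft.irfft(spectrum * band, n=profile.size)


def edge_peak(profile, z, dz, window=(8e-3, 13e-3)):
    """Peak absolute filtered amplitude around the upper vessel wall."""
    filtered = probe_filter(profile, dz)
    sel = (z >= window[0]) & (z <= window[1])
    return float(np.max(np.abs(filtered[sel])))


dz = 5e-6
z = np.arange(0.0, 30e-3, dz)
step = ((z >= 10e-3) & (z < 20e-3)).astype(float)  # single-step wall, lumen at 10-20 mm
stair = step.copy()
stair[(z >= 10e-3) & (z < 10.5e-3)] = 0.5           # hypothetical half-absorbing 0.5 mm layer

ratio = edge_peak(stair, z, dz) / edge_peak(step, z, dz)
```

In this model the two half-height edges are separated by several periods of the detected band, so each is seen separately and the peak wall signal drops to roughly half that of a single sharp step.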

The most important component of DCA is the tracking algorithm. The goal of the presented study was to demonstrate that sufficient imaging depth could be achieved to detect the PA signal from blood vessels at the depth of the carotid artery. For this purpose, we relied on an easily implementable, robust and real-time capable ad hoc algorithmic solution. The advantage of the chosen algorithm compared with the commonly used block-matching (BM) technique is its lower numerical cost: Fig. 4 indicates that the peak displacement magnitude of the carotid wall motion was roughly 0.25 mm, corresponding to 2.5 periods of the axial RF oscillation (0.1 mm, i.e. half the acoustic wavelength at the 7.5 MHz centre frequency). A BM technique would thus require at least 5 test displacements (2.5 periods in each of the positive and negative axial directions), but preferably more, to retrieve the optimum value of the block-matching criterion (e.g. correlation coefficient) with sufficient resolution. In comparison, only three filter stages were needed in our approach. At each stage, the displacement is directly estimated from the correlation phase (thus not requiring a search), and the multiple filter stages only serve to refine the spatial resolution of the displacement map. A displacement map of slightly reduced quality could even be obtained with only two stages.
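The idea of estimating displacement directly from the correlation phase, without a search, can be sketched with a generic zero-lag complex cross-correlation estimator. This is not the multi-stage algorithm used in this chapter; the Gaussian-windowed synthetic RF line and the function names are hypothetical. The phase of the correlation between the analytic signals of two RF lines equals −2πd/P for a shift d, where P = c/(2f0) is the axial RF period.

```python
import numpy as np


def analytic_signal(x):
    """Analytic signal of a real 1D array via the FFT (as scipy.signal.hilbert does)."""
    n = x.size
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * h)


def phase_displacement(rf_ref, rf_cur, period):
    """Axial shift from the phase of the zero-lag complex correlation.

    Unambiguous only for |shift| < period / 2.
    """
    corr = np.sum(analytic_signal(rf_cur) * np.conj(analytic_signal(rf_ref)))
    return -np.angle(corr) * period / (2.0 * np.pi)


c, f0 = 1540.0, 7.5e6
period = c / (2.0 * f0)              # ~0.103 mm axial period of the pulse-echo RF signal
z = np.linspace(0.0, 10e-3, 4096)


def rf_line(shift):
    """Hypothetical RF line: Gaussian-windowed carrier, shifted axially by `shift`."""
    env = np.exp(-0.5 * ((z - 5e-3 - shift) / 1e-3) ** 2)
    return env * np.cos(2.0 * np.pi * (z - shift) / period)


d_small = phase_displacement(rf_line(0.0), rf_line(20e-6), period)  # recovers ~20 um
d_large = phase_displacement(rf_line(0.0), rf_line(60e-6), period)  # wraps to 60 um - period
```

The wrapped `d_large` shows why a single phase estimate is ambiguous beyond about ±0.05 mm, far less than the 0.25 mm peak wall displacement quoted above; any practical scheme has to resolve this ambiguity, for example by working on lower-frequency signals or by accumulating small frame-to-frame displacements.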

Even though the chosen tracking algorithm was sufficient to demonstrate the imaging depth in the presented volunteer results, it has potential for improvement. So far, we used only axial motion tracking and compensation, as it is the axial motion that leads to phase cancellation of the averaged PA signal if not accounted for. Lateral motion, on the other hand, can laterally blur the averaged PA image, so the SNR of the DCA result can be further improved by lateral motion tracking and compensation. As previously mentioned, a 2D displacement vector field can be obtained for this purpose by acquiring two US images with different view directions (via Tx and/or Rx beamsteering). One-dimensional tracking of these images along the respective view directions yields projections of the displacement vector onto these directions, from which the displacement vector field can be reconstructed. An advantage of this approach is that it is substantially faster than a BM approach that requires a 2D search area. A disadvantage is the reduced lateral resolution if Rx beamsteering is used (as the full Rx angular aperture must be split into different view directions), or the increased data size if Tx beamsteering is used (due to the larger number of acquisitions). The envelope-based LPC technique proposed in this chapter is a practical alternative that combines the advantage of BM (full resolution without increased data size) with that of one-dimensional tracking (low computational cost): phase tracking of the bandpass-filtered squared envelope can be applied to the lateral dimension just as well as to the axial dimension. This directly yields the lateral component of the displacement field with only a twofold increase in computational cost. This approach is very similar to spatial quadrature [46], where tracking is based on the complex RF signal and a lateral oscillation is introduced via Rx apodisation. 
Apart from increasing the dimensionality of the displacement field, multi-dimensional motion tracking has been shown to improve the accuracy of each dimension over a single-dimensional tracking [35]. Further ideas for improvement are found in literature on US strain imaging [36, 47,48,49].
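The reconstruction of a 2D displacement vector from two one-dimensional projections amounts to solving a 2×2 linear system. This is a minimal sketch, assuming each view direction is known as an angle θ measured from the axial axis (for combined Tx/Rx steering, the effective pulse-echo direction is taken here as the bisector of the Tx and Rx directions); the function name and the ±10° angles are hypothetical.

```python
import numpy as np


def vector_from_projections(p1, p2, theta1_deg, theta2_deg):
    """Recover (lateral, axial) displacement from projections onto two directions.

    Each projection is p_i = sin(theta_i) * d_lateral + cos(theta_i) * d_axial,
    with theta measured from the axial axis towards the positive lateral axis.
    """
    t1, t2 = np.radians([theta1_deg, theta2_deg])
    a = np.array([[np.sin(t1), np.cos(t1)],
                  [np.sin(t2), np.cos(t2)]])
    return np.linalg.solve(a, np.array([p1, p2], dtype=float))


d_true = np.array([0.05, 0.20])   # hypothetical lateral and axial displacement, mm
angles = (-10.0, 10.0)            # hypothetical view directions, degrees
proj = [np.sin(np.radians(a)) * d_true[0] + np.cos(np.radians(a)) * d_true[1]
        for a in angles]
d_est = vector_from_projections(proj[0], proj[1], *angles)
```

The system's determinant is sin(θ1 − θ2), so for small steering angles the lateral component is poorly conditioned: tracking errors in the projections are amplified by a factor of order 1/sin θ, which is one reason why the angular spread of the view directions matters.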

As previously mentioned, the accuracy of the motion tracking relies on the US image quality. In the presented results, the US image quality was good in the sense that the intensity of higher-order echo clutter was lower than that of first-order echoes in most of the image area. Preliminary experience from an ongoing clinical study, however, reveals that motion tracking is more difficult in a large proportion of cases. Anatomy and acoustic properties of the neck vary substantially between subjects. Fat in and between the muscles above the artery can lead to ultrasound reverberations that obscure the artery lumen, so that the detected displacement is determined by the motion of the superficial tissue where the reverberations originate rather than by the actual artery wall motion. Similarly, calcifications inside plaque lead to reverberations that obscure the lower artery wall, so that the tracking result at the lower wall is determined by the motion of the upper wall. To enable reliable results independent of anatomy, the tracking algorithm must therefore be able to discriminate better between superposed first- and higher-order echoes. This might be achieved by identifying different statistical features of the RF signal, by exploiting differences in motion speed with a blind signal separation technique, by deep learning, or by a combination of these.

The presented results were obtained with an LD-based system providing 2 mJ pulse energy, using an average prf of 80 Hz and an averaging time constant of 2.5 s. As previously mentioned, the resulting average irradiance at the skin surface was a factor of 3 below the safety limit (for 808 nm). With faster data transfer and processing, the prf could thus have been increased by a factor of 3, up to 240 Hz. Maintaining the 2.5 s averaging time constant, this would have led to a factor of 1.7 (√3) increase in SNR (amplitude). By irradiating the skin on both sides of the linear probe instead of only one, the total increase in SNR would rise to a factor of 3.5. This indicates that results identical to the presented ones could have been achieved with a pulse energy reduced by a factor of 3.5, i.e. only about 0.6 mJ. This is a promising result, as it suggests that imaging the carotid artery is within reach of the performance of LED-based systems.
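The SNR arithmetic in this paragraph can be reproduced in a few lines. This sketch assumes that PA amplitude scales linearly with pulse energy, that uncorrelated noise averages down with the square root of the number of pulses within the fixed 2.5 s averaging time, and that two-sided irradiation doubles the energy delivered per pulse at unchanged per-area irradiance; the function name `snr_gain` is hypothetical.

```python
import math


def snr_gain(prf_factor, energy_factor=1.0):
    """Relative SNR (amplitude) at a fixed averaging time.

    Assumes amplitude proportional to pulse energy and noise reduced by
    sqrt(number of averaged pulses).
    """
    return energy_factor * math.sqrt(prf_factor)


gain_prf = snr_gain(prf_factor=240 / 80)                       # faster prf only
gain_total = snr_gain(prf_factor=240 / 80, energy_factor=2.0)  # plus two-sided irradiation
equivalent_energy_mj = 2.0 / gain_total                        # pulse energy matching the original SNR
```

Under these assumptions the gains come out as √3 ≈ 1.7 and 2√3 ≈ 3.5, and the pulse energy that reproduces the original SNR is 2 mJ / 3.46 ≈ 0.58 mJ.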

References

J.J. Niederhauser, M. Jaeger, R. Lemor, P. Weber, M. Frenz, Combined ultrasound and optoacoustic system for real-time high-contrast vascular imaging in vivo. IEEE Trans. Med. Imaging 24(4), 436–440 (2005). https://doi.org/10.1109/TMI.2004.843199

M.K.A. Singh, W. Steenbergen, S. Manohar, Handheld probe-based dual mode ultrasound/photoacoustics for biomedical imaging, in Frontiers in Biophotonics for Translational Medicine (Springer, Singapore, 2016), pp. 209–247

J.-L. Gennisson, T. Deffieux, M. Fink, M. Tanter, Ultrasound elastography: principles and techniques. Diagn. Interv. Imaging 94, 487–495 (2013). https://doi.org/10.1016/j.diii.2013.01.022

M. Jaeger, G. Held, S. Peeters, S. Preisser, M. Grünig, M. Frenz, Computed ultrasound tomography in echo mode for imaging speed of sound using pulse-echo sonography: proof of principle. Ultrasound Med. Biol. 41(1), 235–250 (2015). https://doi.org/10.1016/j.ultrasmedbio.2014.05.019

M. Jaeger, M. Frenz, Towards clinical computed ultrasound tomography in echo-mode: dynamic range artefact reduction. Ultrasonics 62, 299–304 (2015). https://doi.org/10.1016/j.ultras.2015.06.003

M. Jaeger, E. Robinson, H.G. Akarcay, M. Frenz, Full correction for spatially distributed speed-of-sound in echo ultrasound based on measuring aberration delays via transmit beam steering. Phys. Med. Biol. 60, 4497–4515 (2015). https://doi.org/10.1088/0031-9155/60/11/4497

P. Stähli, M. Kuriakose, M. Frenz, M. Jaeger, Forward model for quantitative pulse-echo speed-of-sound imaging. arXiv:1902.10639v2 [physics.med-ph]

M. Imbault, M.D. Burgio, A. Faccinetto, M. Ronot, H. Bendjador, T. Deffieux et al., Ultrasonic fat fraction quantification using in vivo adaptive sound speed estimation. Phys. Med. Biol. 63, 215013 (2018). https://doi.org/10.1088/1361-6560/aae661

A. Hariri, J. Lemaster, J. Wang, A.S. Jeevarathinam, D.L. Chao, J.V. Jokerst, The characterization of an economic and portable LED-based photoacoustic imaging system to facilitate molecular imaging. Photoacoustics 9, 10–20 (2018). https://doi.org/10.1016/j.pacs.2017.11.001

A. Hariri, E. Zhao, A.S. Jeevarathinam, J. Lemaster, J. Zhang, J.V. Jokerst, Molecular imaging of oxidative stress using an LED-based photoacoustic imaging system. Sci. Rep. 9, 11378–11410 (2019). https://doi.org/10.1117/12.2509204

J. Jo, G. Xu, Y. Zhu, M. Burton, J. Sarazin, E. Schiopu et al., Detecting joint inflammation by an LED-based photoacoustic imaging system: a feasibility study. J. Biomed. Opt. 23(11), 110501 (2018). https://doi.org/10.1117/1.JBO.23.11.110501