Abstract

Quantum systems have a number of degrees of freedom that grows exponentially with the number of particles, and hence exhibit rich dynamics whose simulation is believed to be intractable on conventional computers. Quantum reservoir computing is an approach that exploits such complex and rich dynamics of quantum systems, as they are, for temporal machine learning. In this chapter, we explain quantum reservoir computing and related approaches, quantum extreme learning machine and quantum circuit learning, starting from a pedagogical introduction to quantum mechanics and machine learning. All these quantum machine learning approaches are experimentally feasible and effective on state-of-the-art quantum devices.

Keywords

- Quantum reservoir computing

- Quantum circuit learning

- Quantum extreme learning machine

- Quantum machine learning

- Noisy intermediate-scale quantum technology

1 Introduction

Over the past several decades, we have enjoyed exponential growth of computational power, namely, Moore’s law. Nowadays, even a smartphone or tablet PC is much more powerful than the supercomputers of the 1980s. People are still seeking more computational power, especially for artificial intelligence (machine learning), chemical and material simulations, and forecasting complex phenomena such as economics, weather and climate. In addition to improving the computational power of conventional computers, i.e., more Moore’s law, new computing paradigms have begun to be investigated to go beyond Moore’s law. Among them, natural computing seeks to exploit natural physical or biological systems as computational resources. Quantum reservoir computing lies at the intersection of two different paradigms of natural computing, namely, quantum computing and reservoir computing.

Regarding quantum computing, recent rapid experimental progress in controlling complex quantum systems motivates us to use the laws of quantum mechanics as a new principle of information processing, namely, quantum information processing (Nielsen and Chuang 2010; Fujii 2015). For example, certain mathematical problems, such as integer factorisation, which are believed to be intractable on a classical computer, are known to be efficiently solvable by a carefully designed quantum algorithm (Shor 1994). Therefore, considerable experimental effort has been devoted to realizing full-fledged universal quantum computers (Barends et al. 2014; Kelly et al. 2015). In the near future, quantum computers with \(>50\) qubits and a fidelity \(>99\%\) for each elementary gate are expected to achieve quantum computational supremacy, i.e., to perform tasks beyond the reach of simulation on state-of-the-art classical supercomputers (Preskill 2018; Boixo et al. 2018). While this does not directly mean that a quantum computer outperforms classical computers on a useful task like machine learning, applications of such near-term quantum devices to useful tasks, including machine learning, are now being widely explored. On the other hand, quantum simulators (Feynman 1982) are thought to be much easier to implement than a full-fledged universal quantum computer. In this regard, existing quantum simulators have already shed new light on the physics of complex many-body quantum systems (Cirac and Zoller 2012; Bloch et al. 2012; Georgescu et al. 2014), and a restricted class of quantum dynamics, known as adiabatic dynamics, has also been applied to combinatorial optimisation problems (Kadowaki and Nishimori 1998; Farhi et al. 2001; Rønnow et al. 2014; Boixo et al. 2014). However, complex real-time quantum dynamics, which is one of the most difficult tasks for classical computers to simulate (Morimae et al. 2014; Fujii et al. 2016; Fujii and Tamate 2016) and has great potential to perform nontrivial information processing, has yet to be harnessed as a resource for more general-purpose information processing.

Physical reservoir computing, which is the main subject throughout this book, is another paradigm for exploiting complex physical systems for information processing. In this framework, the low-dimensional input is projected onto a high-dimensional dynamical system, which is typically referred to as a reservoir, generating transient dynamics that facilitates the separation of input states (Rabinovich et al. 2008). If the dynamics of the reservoir involve both adequate memory and nonlinearity (Dambre et al. 2012), emulating nonlinear dynamical systems only requires adding a linear and static readout from the high-dimensional state space of the reservoir. A number of different implementations of reservoirs have been proposed, such as abstract dynamical systems for echo state networks (ESNs) (Jaeger and Haas 2004) or models of neurons for liquid state machines (Maass et al. 2002). The implementations are not limited to programs running on a PC but also include physical systems, such as the surface of water in a laminar state (Fernando and Sojakka 2003), analogue circuits and optoelectronic systems (Appeltant et al. 2011; Woods and Naughton 2012; Larger et al. 2012; Paquot et al. 2012; Brunner et al. 2013; Vandoorne et al. 2014), and neuromorphic chips (Stieg et al. 2012). Recently, it has been reported that the mechanical bodies of soft and compliant robots can also be successfully used as a reservoir (Hauser et al. 2011; Nakajima et al. 2013a, b, 2014, 2015; Caluwaerts et al. 2014). In contrast to the refinements required by learning algorithms, such as in deep learning (LeCun et al. 2015), the approach followed by reservoir computing, especially when applied to real systems, is to find an appropriate form of physics that exhibits rich dynamics, thereby allowing us to outsource a part of the computation.

Quantum reservoir computing (QRC) was born from the marriage of quantum computing and the physical reservoir computing described above, with the aim of harnessing complex quantum dynamics as a reservoir for real-time machine learning tasks (Fujii and Nakajima 2017). Since the idea of QRC was proposed in Fujii and Nakajima (2017), a proof-of-principle experimental demonstration for non-temporal tasks (Negoro et al. 2018) as well as performance analyses and improvements (Nakajima et al. 2019; Kutvonen et al. 2020; Tran and Nakajima 2020) have been reported. The QRC approach to quantum tasks such as quantum tomography and quantum state preparation has recently been garnering attention (Ghosh et al. 2019a, b, 2020). In this chapter, we provide a broad picture of QRC and related approaches, starting from a pedagogical introduction to quantum mechanics and machine learning.

The rest of this chapter is organized as follows. In Sect. 2, we provide a pedagogical introduction to quantum mechanics for those who are not familiar with it, and fix our notation. In Sect. 3, we briefly review several machine learning techniques, such as linear and nonlinear regression, temporal machine learning tasks and reservoir computing. In Sect. 4, we explain QRC and related approaches, quantum extreme learning machine (Negoro et al. 2018) and quantum circuit learning (Mitarai et al. 2018). The former is a framework that uses a quantum reservoir for non-temporal tasks: the input is fed into a quantum system, and generalization or classification tasks are performed by linear regression on a quantum-enhanced feature space. In the latter, the parameters of the quantum system are further fine-tuned via gradient descent by measuring an analytically obtained gradient, just like backpropagation for feedforward neural networks. Regarding QRC, we also present chaotic time series prediction as a demonstration. Section 5 is devoted to conclusion and discussion.

2 Pedagogical Introduction to Quantum Mechanics

In this section, we provide a pedagogical introduction to how quantum mechanical systems work for those who are not familiar with quantum mechanics. If you are already familiar with quantum mechanics and its notation, please skip to Sect. 3.

2.1 Quantum State

A state of a quantum system is described by a state vector,

\[ |\psi\rangle = \begin{pmatrix} c_1 \\ \vdots \\ c_d \end{pmatrix}, \]

on a complex d-dimensional system \(\mathbb {C}^d\), where the symbol \(| \cdot \rangle \) is called a ket and indicates a complex column vector. Similarly, \(\langle \cdot |\) is called a bra and indicates a complex row vector; the two are related by Hermitian conjugation (transposition and complex conjugation),

\[ \langle\psi| = \left( |\psi\rangle \right)^{\dagger} = (c_1^{*}, \ldots, c_d^{*}). \]

With this notation, we can write the inner product of two quantum states \(|\psi \rangle \) and \(|\phi \rangle \) as \(\langle \psi | \phi \rangle \). Let us define an orthonormal basis

\[ |1\rangle = (1, 0, \ldots, 0)^T, \quad |2\rangle = (0, 1, \ldots, 0)^T, \quad \ldots, \quad |d\rangle = (0, \ldots, 0, 1)^T; \]

then a quantum state in the d-dimensional system can be described simply by

\[ |\psi\rangle = \sum_{i=1}^{d} c_i |i\rangle. \]

The state is said to be a superposition of the states \(|i\rangle \). The coefficients \(\{c_i\}\) are complex and are called complex probability amplitudes. If we measure the system in the basis \(\{ |i \rangle \}\), we obtain the measurement outcome i with probability

\[ p_i = |\langle i|\psi\rangle|^2 = |c_i|^2, \]

and hence the complex probability amplitudes have to be normalized as follows:

\[ \sum_{i=1}^{d} |c_i|^2 = \langle\psi|\psi\rangle = 1. \]

In other words, a quantum state is represented as a normalized vector on a complex vector space.

Suppose the measurement outcome i corresponds to a certain physical value \(a_i\), such as energy or magnetization; then the expectation value of the physical quantity is given by

\[ \langle A \rangle = \sum_i a_i p_i = \langle\psi| A |\psi\rangle, \]

where we define a Hermitian operator

\[ A = \sum_i a_i |i\rangle\langle i|, \]

which is called an observable and contains the information on both the measurement basis and the physical quantity.
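
As a minimal numerical illustration of these definitions, the following NumPy sketch normalizes a hypothetical 3-dimensional state, computes the outcome probabilities \(p_i = |c_i|^2\), and evaluates the expectation value of a diagonal observable both as \(\sum_i a_i p_i\) and as \(\langle \psi | A | \psi \rangle\). The amplitudes \(c_i\) and physical values \(a_i\) are arbitrary assumptions chosen for illustration.

```python
import numpy as np

# Hypothetical unnormalized amplitudes c_i of a 3-dimensional state.
c = np.array([1.0, 1.0j, -1.0], dtype=complex)
psi = c / np.linalg.norm(c)            # normalize so that sum_i |c_i|^2 = 1

# Probability of outcome i when measuring in the basis {|i>}: p_i = |c_i|^2.
p = np.abs(psi) ** 2

# Observable A = sum_i a_i |i><i| with hypothetical physical values a_i.
a = np.array([1.0, 0.0, -1.0])
A = np.diag(a).astype(complex)

# Expectation value computed two ways: sum_i a_i p_i and <psi|A|psi>.
exp_classical = float(np.sum(a * p))
exp_quantum = np.vdot(psi, A @ psi).real   # np.vdot conjugates its first argument (the bra)
print(p, exp_classical, exp_quantum)
```

Both expressions agree because \(A\) is diagonal in the measurement basis.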

The state vector in quantum mechanics is similar to a probability distribution, but essentially different from it, since it is much more primitive: it can take complex values and is more like a square root of a probability. The unique features of quantum systems come from this property.

2.2 Time Evolution

The time evolution of a quantum system is determined by a Hamiltonian H, which is a Hermitian operator acting on the system. Let us denote a quantum state at time \(t=0\) by \(|\psi (0)\rangle \). The equation of motion of quantum mechanics, the so-called Schrödinger equation, is given by

\[ i \frac{\partial}{\partial t} |\psi(t)\rangle = H |\psi(t)\rangle, \]

where we set \(\hbar = 1\). For a time-independent H, this equation can be formally solved by

\[ |\psi(t)\rangle = e^{-iHt} |\psi(0)\rangle. \]

Therefore, the time evolution is given by the operator \(e^{-i H t}\), which is unitary and hence preserves the norm of the state vector, i.e., the total probability is conserved. In general, the Hamiltonian can be time dependent. If we are not interested in the continuous time evolution itself, but only in its input-output relation, then the time evolution is nothing but a unitary operator U:

\[ |\psi_{\mathrm{out}}\rangle = U |\psi_{\mathrm{in}}\rangle. \]

In quantum computing, the time evolution U is sometimes called a quantum gate.
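
The formal solution can be checked numerically. The sketch below (NumPy, with \(\hbar = 1\)) builds \(e^{-iHt}\) from the eigendecomposition of a Hermitian H; the choice H = X and \(t = \pi/4\) is an arbitrary assumption, and `evolve` is a hypothetical helper name. It confirms that the resulting operator is unitary and that the norm of the evolved state stays 1.

```python
import numpy as np

def evolve(H: np.ndarray, t: float) -> np.ndarray:
    """Return exp(-i H t) for a Hermitian H, via its eigendecomposition."""
    evals, evecs = np.linalg.eigh(H)
    return evecs @ np.diag(np.exp(-1j * evals * t)) @ evecs.conj().T

# Hypothetical single-qubit Hamiltonian H = X (Pauli X).
X = np.array([[0, 1], [1, 0]], dtype=complex)
U = evolve(X, t=np.pi / 4)

psi0 = np.array([1, 0], dtype=complex)          # |psi(0)> = |0>
psi_t = U @ psi0                                 # |psi(t)> = e^{-iHt} |psi(0)>

is_unitary = np.allclose(U.conj().T @ U, np.eye(2))
norm_t = np.linalg.norm(psi_t)                   # stays 1: probability is conserved
print(is_unitary, norm_t, psi_t)
```

Since \(X^2 = I\), the result also equals \(\cos t\, I - i \sin t\, X\), so the evolved state is \((|0\rangle - i|1\rangle)/\sqrt{2}\).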

2.3 Qubits

The smallest nontrivial quantum system is a two-dimensional quantum system \(\mathbb {C}^2\), which is called a quantum bit or qubit:

\[ |\psi\rangle = c_0 |0\rangle + c_1 |1\rangle, \quad |c_0|^2 + |c_1|^2 = 1. \]

Suppose we have n qubits. The n-qubit system is defined by the tensor product space \((\mathbb {C}^2)^{\otimes n}\) of the individual two-dimensional systems as follows. A basis of the system is defined by the tensor product of binary states \(|x_k\rangle \) with \(x_k \in \{ 0,1\}\),

\[ |x_1\rangle \otimes |x_2\rangle \otimes \cdots \otimes |x_n\rangle, \]

which is simply denoted by

\[ |x_1 x_2 \cdots x_n\rangle. \]

Then, a state of the n-qubit system can be described as

\[ |\psi\rangle = \sum_{x_1, \ldots, x_n \in \{0,1\}} c_{x_1 x_2 \cdots x_n} |x_1 x_2 \cdots x_n\rangle. \]

The dimension of the n-qubit system is \(2^n\), and hence the tensor product space is nothing but a \(2^n\)-dimensional complex vector space \(\mathbb {C}^{2^n}\). The dimension of the n-qubit system increases exponentially with the number n of qubits.
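
A short sketch of how the n-qubit basis is built from tensor products: `np.kron` implements the Kronecker (tensor) product, so the basis state \(|x_1 x_2 \cdots x_n\rangle\) is a \(2^n\)-dimensional vector with a single 1 at the position obtained by reading \(x_1 x_2 \cdots x_n\) as a binary number (with \(x_1\) as the most significant bit under NumPy's Kronecker ordering). The helper `basis_state` is a hypothetical name introduced for illustration.

```python
from functools import reduce
import numpy as np

ket0 = np.array([1, 0], dtype=complex)
ket1 = np.array([0, 1], dtype=complex)

def basis_state(bits: str) -> np.ndarray:
    """|x_1 x_2 ... x_n> = |x_1> (x) |x_2> (x) ... (x) |x_n>, built with Kronecker products."""
    return reduce(np.kron, [ket1 if b == "1" else ket0 for b in bits])

v = basis_state("101")
dim = v.size                          # 2**3 = 8
index = int(np.argmax(np.abs(v)))     # position of the single nonzero entry
print(dim, index)
```

Adding one qubit doubles `dim`, which is the exponential growth stated above.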

2.4 Density Operator

Next, we introduce the operator formalism of the above quantum mechanics. It describes exactly the same physics, but is sometimes more convenient. Let us consider an operator \(\rho \) constructed from the state vector \(|\psi \rangle \):

\[ \rho = |\psi\rangle\langle\psi|. \]

If we choose the basis \(\{ |i \rangle \}\) of the system for the matrix representation, then the diagonal elements of \(\rho \) correspond to the probability distribution \(p_i = |c_i |^2\) obtained when the system is measured in the basis \(\{ |i \rangle \}\). Therefore, the operator \(\rho \) is called a density operator. The probability distribution can also be given in terms of \(\rho \) by

\[ p_i = \mathrm{Tr}\left[ |i\rangle\langle i| \rho \right] = \langle i|\rho|i\rangle, \]

where \(\mathrm{Tr}\) is the matrix trace. The expectation value of an observable A is given by

\[ \langle A \rangle = \langle\psi|A|\psi\rangle = \mathrm{Tr}[A\rho]. \]

The density operator can handle a more general situation where a quantum state is sampled from a set of quantum states \(\{ |\psi _k \rangle \}\) with a probability distribution \(\{ q_k \}\). In this case, if we measure the system in the basis \(\{ |i\rangle \}\), the probability of obtaining the measurement outcome i is given by

\[ p_i = \sum_k q_k \mathrm{Tr}\left[ |i\rangle\langle i| \rho_k \right], \]

where \(\rho _k = | \psi _k \rangle \langle \psi _k |\). By using the linearity of the trace, this reads

\[ p_i = \mathrm{Tr}\left[ |i\rangle\langle i| \sum_k q_k \rho_k \right]. \]

Now, we interpret the density operator as

\[ \rho = \sum_k q_k \rho_k. \]

In this way, a density operator can represent a classical mixture of quantum states by a convex mixture of density operators, which is convenient in many cases. In general, any positive semidefinite Hermitian operator \(\rho \) satisfying \(\mathrm{Tr} [\rho ] = 1\) can be a density operator, since it can be interpreted as a convex mixture of quantum states via the spectral decomposition:

\[ \rho = \sum_i \lambda_i |\lambda_i\rangle\langle\lambda_i|, \]

where \(\{ |\lambda _i \rangle \}\) and \(\{ \lambda _i \}\) are the eigenvectors and eigenvalues, respectively. Positivity gives \(\lambda_i \ge 0\), and because of \(\mathrm{Tr}[\rho ] =1\), we have \(\sum _i \lambda _i =1\).
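
The density-operator formulas above can be sketched in a few lines of NumPy. The ensemble below (\(|0\rangle\) with probability 0.75 and \(|+\rangle = (|0\rangle + |1\rangle)/\sqrt{2}\) with probability 0.25) is an arbitrary assumption. The code reads the probabilities off the diagonal, evaluates \(\mathrm{Tr}[Z\rho]\), and checks that the spectral decomposition has nonnegative eigenvalues that sum to one and rebuild \(\rho\).

```python
import numpy as np

def density(psi: np.ndarray) -> np.ndarray:
    """Pure-state density operator rho = |psi><psi|."""
    return np.outer(psi, psi.conj())

# Hypothetical ensemble {q_k, |psi_k>}: |0> with 0.75 and |+> with 0.25.
ket0 = np.array([1, 0], dtype=complex)
plus = np.array([1, 1], dtype=complex) / np.sqrt(2)
rho = 0.75 * density(ket0) + 0.25 * density(plus)     # rho = sum_k q_k rho_k

# p_i = Tr[|i><i| rho] is the diagonal of rho in the computational basis.
p = np.real(np.diag(rho))

# Expectation value of Z: <Z> = Tr[Z rho].
Z = np.diag([1.0, -1.0]).astype(complex)
exp_Z = np.trace(Z @ rho).real

# Spectral decomposition rho = sum_i lambda_i |lambda_i><lambda_i|.
lam, vecs = np.linalg.eigh(rho)
rebuilt = sum(l * density(vecs[:, i]) for i, l in enumerate(lam))
print(p, exp_Z, lam)
```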

From its definition, the time evolution of \(\rho \) is given by

\[ \rho(t) = e^{-iHt} \rho(0) e^{iHt}, \]

or

\[ \rho' = U \rho U^{\dagger}. \]

Moreover, we can define more general operations on density operators. For example, if we apply unitary operators U and V with probabilities p and \((1-p)\), respectively, then we have

\[ \rho' = p\, U \rho U^{\dagger} + (1-p)\, V \rho V^{\dagger}. \]

As another example, if we measure \(\rho \) in the basis \(\{ |i \rangle \}\) and forget the measurement outcome, then the state is given by the density operator

\[ \rho' = \sum_i |i\rangle\langle i| \rho |i\rangle\langle i| = \sum_i p_i |i\rangle\langle i|. \]

Therefore, if we define a map from a density operator to another, which we call a superoperator,

\[ \mathcal{M}(\rho) \equiv \sum_i |i\rangle\langle i| \rho |i\rangle\langle i|, \]

the above non-selective measurement (forgetting the measurement outcomes) is simply written as

\[ \rho' = \mathcal{M}(\rho). \]

In general, any physically allowed quantum operation \(\mathcal {K}\) that maps a density operator to another can be represented in terms of a set of operators \(\{K_i \}\) satisfying \(\sum_i K_i ^{\dag } K_i = I\), where I is the identity operator:

\[ \mathcal{K}(\rho) = \sum_i K_i \rho K_i^{\dagger}. \]

The operators \(\{K_i\}\) are called Kraus operators.
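
As a sketch of the Kraus representation, the code below implements \(\mathcal{K}(\rho) = \sum_i K_i \rho K_i^{\dagger}\) and uses it for two channels from the text: the non-selective measurement \(\mathcal{M}\) (Kraus operators \(|i\rangle\langle i|\)) and the mixed-unitary channel with U = I and V = Z (Kraus operators \(\sqrt{p}\,U\) and \(\sqrt{1-p}\,V\)). The input state \(|+\rangle\langle +|\), the value p = 0.5, and the helper name `apply_kraus` are illustrative assumptions; for this particular input both channels erase the off-diagonal coherences.

```python
import numpy as np

def apply_kraus(rho: np.ndarray, kraus: list) -> np.ndarray:
    """K(rho) = sum_i K_i rho K_i^dagger."""
    return sum(K @ rho @ K.conj().T for K in kraus)

d = 2
proj = [np.outer(np.eye(d)[i], np.eye(d)[i]).astype(complex) for i in range(d)]  # |i><i|

# Completeness condition sum_i K_i^dagger K_i = I.
complete = np.allclose(sum(K.conj().T @ K for K in proj), np.eye(d))

plus = np.array([1, 1], dtype=complex) / np.sqrt(2)
rho = np.outer(plus, plus.conj())           # |+><+| has off-diagonal elements 1/2
rho_meas = apply_kraus(rho, proj)           # non-selective measurement M(rho)

# Mixed-unitary channel: apply U = I with probability p and V = Z with probability 1 - p.
p = 0.5
Z = np.diag([1.0, -1.0]).astype(complex)
mixed_unitary = [np.sqrt(p) * np.eye(d, dtype=complex), np.sqrt(1 - p) * Z]
rho_mix = apply_kraus(rho, mixed_unitary)
print(rho_meas.real, rho_mix.real)
```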

2.5 Vector Representation of Density Operators

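Finally, we introduce a vector representation of the above operator formalism. The operators themselves form a linear space. Moreover, we can also define an inner product of two operators, the so-called Hilbert–Schmidt inner product, by

\[ (A, B) \equiv \mathrm{Tr}[A^{\dagger} B]. \]

The operators on the n-qubit system are spanned by the tensor products of Pauli operators \(\{ I, X, Y,Z\}^{\otimes n}\),

\[ P(\boldsymbol{i}) = \sigma_{i_1} \otimes \sigma_{i_2} \otimes \cdots \otimes \sigma_{i_n}, \quad \boldsymbol{i} = (i_1, \ldots, i_n), \quad i_j \in \{0,1,2,3\}, \]

where \(\sigma _{i_j}\) are the Pauli operators:

\[ \sigma_0 = I = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}, \quad \sigma_1 = X = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}, \quad \sigma_2 = Y = \begin{pmatrix} 0 & -i \\ i & 0 \end{pmatrix}, \quad \sigma_3 = Z = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}. \]

Since the Pauli operators constitute a complete basis of the operator space, any operator A can be decomposed into a linear combination of \(P({\boldsymbol{i}})\),

\[ A = \sum_{\boldsymbol{i}} a_{\boldsymbol{i}} P(\boldsymbol{i}). \]

The coefficient \(a_{{\boldsymbol{i}}}\) can be calculated by using the Hilbert–Schmidt inner product as follows:

\[ a_{\boldsymbol{i}} = \frac{1}{2^n} \left( P(\boldsymbol{i}), A \right) = \frac{1}{2^n} \mathrm{Tr}\left[ P(\boldsymbol{i}) A \right], \]

by virtue of the orthogonality

\[ \mathrm{Tr}\left[ P(\boldsymbol{i}) P(\boldsymbol{j}) \right] = 2^n \delta_{\boldsymbol{i}, \boldsymbol{j}}. \]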
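
The decomposition can be computed directly from the Hilbert–Schmidt inner product. In this NumPy sketch, `pauli_string` and `pauli_coefficients` are hypothetical helper names, and the test operator \(X \otimes X + 0.5\, Z \otimes I\) is an arbitrary example. The code checks that the coefficients are recovered and that the expansion rebuilds A.

```python
import itertools
from functools import reduce
import numpy as np

# Single-qubit Pauli operators sigma_0 = I, sigma_1 = X, sigma_2 = Y, sigma_3 = Z.
PAULIS = [np.eye(2, dtype=complex),
          np.array([[0, 1], [1, 0]], dtype=complex),
          np.array([[0, -1j], [1j, 0]], dtype=complex),
          np.array([[1, 0], [0, -1]], dtype=complex)]

def pauli_string(idx) -> np.ndarray:
    """P(i) = sigma_{i_1} (x) ... (x) sigma_{i_n} for idx = (i_1, ..., i_n)."""
    return reduce(np.kron, [PAULIS[i] for i in idx])

def pauli_coefficients(A: np.ndarray, n: int) -> dict:
    """a_i = (P(i), A) / 2^n = Tr[P(i) A] / 2^n, using that P(i) is Hermitian."""
    return {idx: np.trace(pauli_string(idx) @ A) / 2 ** n
            for idx in itertools.product(range(4), repeat=n)}

n = 2
A = pauli_string((1, 1)) + 0.5 * pauli_string((3, 0))    # example: X(x)X + 0.5 Z(x)I
coeffs = pauli_coefficients(A, n)
rebuilt = sum(a * pauli_string(idx) for idx, a in coeffs.items())
print({k: v.real for k, v in coeffs.items() if abs(v) > 1e-12})
```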

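The number of n-qubit Pauli operators \(\{ P ({\boldsymbol{i}})\}\) is \(4^n\), and hence a density operator \(\rho \) of the n-qubit system can be represented as a \(4^n\)-dimensional vector

\[ \boldsymbol{r} = (r_{00\ldots0}, \ldots, r_{33\ldots3})^T, \quad r_{\boldsymbol{i}} = \frac{1}{2^n} \mathrm{Tr}\left[ P(\boldsymbol{i}) \rho \right], \quad \rho = \sum_{\boldsymbol{i}} r_{\boldsymbol{i}} P(\boldsymbol{i}), \]

where \(r_{00\ldots 0}=1/2^n\) because of \(\mathrm{Tr}[\rho ]=1\). Moreover, because \(P({\boldsymbol{i}})\) is Hermitian, \({\boldsymbol{r}}\) is a real vector. The superoperator \(\mathcal {K}\) is a linear map on operators, and hence can be represented as a matrix K acting on the vector \({\boldsymbol{r}}\):

\[ \boldsymbol{r}' = K \boldsymbol{r}, \]

where the matrix element is given by

\[ K_{\boldsymbol{i}\boldsymbol{j}} = \frac{1}{2^n} \mathrm{Tr}\left[ P(\boldsymbol{i})\, \mathcal{K}\left( P(\boldsymbol{j}) \right) \right]. \]

In this way, a density operator \(\rho \) and a quantum operation \(\mathcal {K}\) on it can be represented by a vector \({\boldsymbol{r}}\) and a matrix K, respectively.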
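
A sketch of this vector representation for a single qubit: the code computes \(r_{\boldsymbol i}\) and \(K_{\boldsymbol i \boldsymbol j}\) exactly as defined above and applies them to the non-selective measurement \(\mathcal{M}\) of Sect. 2.4, whose matrix should be diag(1, 0, 0, 1). Taking the real part is justified here because \(\mathcal{M}\) maps Hermitian operators to Hermitian operators. The helper names `pauli_basis`, `to_vector`, `transfer_matrix` and `dephase` are hypothetical.

```python
import itertools
from functools import reduce
import numpy as np

# sigma_0 = I, sigma_1 = X, sigma_2 = Y, sigma_3 = Z
PAULIS = [np.eye(2, dtype=complex),
          np.array([[0, 1], [1, 0]], dtype=complex),
          np.array([[0, -1j], [1j, 0]], dtype=complex),
          np.array([[1, 0], [0, -1]], dtype=complex)]

def pauli_basis(n: int) -> list:
    """All 4^n Pauli strings P(i), ordered as itertools.product over (i_1, ..., i_n)."""
    return [reduce(np.kron, [PAULIS[i] for i in idx])
            for idx in itertools.product(range(4), repeat=n)]

def to_vector(rho: np.ndarray, n: int) -> np.ndarray:
    """r_i = Tr[P(i) rho] / 2^n, so that rho = sum_i r_i P(i)."""
    return np.array([np.trace(P @ rho).real / 2 ** n for P in pauli_basis(n)])

def transfer_matrix(channel, n: int) -> np.ndarray:
    """K_ij = Tr[P(i) K(P(j))] / 2^n for a Hermiticity-preserving superoperator."""
    basis = pauli_basis(n)
    return np.array([[np.trace(Pi @ channel(Pj)).real / 2 ** n for Pj in basis]
                     for Pi in basis])

def dephase(rho: np.ndarray) -> np.ndarray:
    """Non-selective measurement M(rho) = sum_i |i><i| rho |i><i| (keeps the diagonal)."""
    return np.diag(np.diag(rho))

K = transfer_matrix(dephase, n=1)
plus = np.array([1, 1], dtype=complex) / np.sqrt(2)
rho = np.outer(plus, plus.conj())
r = to_vector(rho, n=1)            # vector representation of |+><+|
r_out = K @ r                      # should equal to_vector(dephase(rho), 1)
print(K, r, r_out)
```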

3 Machine Learning and Reservoir Approach

In this section, we briefly introduce machine learning and reservoir approaches.

3.1 Linear and Nonlinear Regression

Supervised machine learning is the task of constructing a model f(x) from a given set of teacher data \(\{ x^{(j)},y^{(j)} \}\) and predicting the output for an unseen input x. Suppose, for simplicity, that x is d-dimensional and f(x) is one-dimensional. The simplest model is linear regression, which models f(x) as a linear function of the input:

\[ f(x) = \sum_{i=1}^{d} w_i x_i + w_0. \]

The weights \(\{w_i\}\) and bias \(w_0\) are chosen such that the error between f(x) and the output of the teacher data, i.e., the loss, is minimized. If we employ a quadratic loss function for given teacher data \( \{ \{x^{(j)}_i\} , y^{(j)}\}\), the problem we have to solve is

\[ \min_{\{w_i\}} \sum_j \left( \sum_{i=0}^{d} w_i x^{(j)}_i - y^{(j)} \right)^2, \]

where we introduced a constant node \(x_0 =1\). This corresponds to solving the (generally overdetermined) system of linear equations

\[ \mathbf{y} = \mathbf{X} \mathbf{w}, \]

where \(\mathbf {y} _j = y^{(j)}\), \(\mathbf {X}_{ji} = x^{(j)}_i\), and \(\mathbf {w}_i = w_i\). This can be solved in the least-squares sense by using the Moore–Penrose pseudo-inverse \(\mathbf {X}^{+}\), which is defined from the singular value decomposition \(\mathbf {X} = U D V^{T}\) as

\[ \mathbf{X}^{+} = V D^{+} U^{T}, \quad \mathbf{w} = \mathbf{X}^{+} \mathbf{y}, \]

where \(D^{+}\) is obtained by transposing D and taking the reciprocal of each nonzero singular value.
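
A minimal NumPy sketch of this procedure, assuming noise-free synthetic data generated from \(y = 1 + 2x_1 - 3x_2\) (an arbitrary choice): the pseudo-inverse is built explicitly from the SVD and compared with `np.linalg.pinv`, and the recovered weights should match the generating ones.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical teacher data from y = 1 + 2 x_1 - 3 x_2 (no noise), d = 2.
x = rng.uniform(0, 1, size=(50, 2))
y = 1 + 2 * x[:, 0] - 3 * x[:, 1]

# Design matrix X with the constant node x_0 = 1 prepended to every sample.
X = np.hstack([np.ones((len(x), 1)), x])

# Moore-Penrose pseudo-inverse from the SVD X = U D V^T: X^+ = V D^+ U^T.
U, s, Vt = np.linalg.svd(X, full_matrices=False)
X_pinv = Vt.T @ np.diag(1 / s) @ U.T     # all singular values are nonzero for this data
w = X_pinv @ y                           # w = (w_0, w_1, w_2)
print(w)
```

For rank-deficient data, `np.linalg.pinv` additionally discards singular values below a cutoff instead of inverting them.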

Unfortunately, linear regression performs poorly on complicated machine learning tasks, and some form of nonlinearity is essentially required in the model. A neural network, which is inspired by the human brain, is one way to introduce nonlinearity into the model. In a neural network, the d-dimensional input data x is fed into N-dimensional hidden nodes with an \(N\times d\) input matrix \(W^\mathrm{in}\):

\[ \boldsymbol{h} = W^{\mathrm{in}} x. \]

Then, each element of the hidden nodes is processed by a nonlinear activation function \(\sigma \), such as \(\tanh \), which is denoted by

\[ \sigma(\boldsymbol{h}) = \left( \sigma(h_1), \ldots, \sigma(h_N) \right)^T. \]

Finally, the output is extracted by an output weight \(W^\mathrm{out}\) (a \(1 \times N\) matrix):

\[ y = W^{\mathrm{out}} \sigma\left( W^{\mathrm{in}} x \right). \]

The parameters in \(W^\mathrm{in}\) and \(W^\mathrm{out}\) are trained such that the error between the output and the teacher data is minimized. While this optimization problem is highly nonlinear, a gradient-based optimization, so-called backpropagation, can be employed. To improve the representational power of the model, we can compose linear transformations and activation functions as follows:

\[ y = W^{\mathrm{out}} \sigma\left( W^{(l)} \cdots \sigma\left( W^{(1)} \sigma\left( W^{\mathrm{in}} x \right) \right) \right), \]

which is called a multilayer perceptron or deep neural network.
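
The forward pass of such a network can be sketched as follows. The layer sizes, the number of extra hidden layers, and the random weights are illustrative assumptions, and training by backpropagation is omitted; the sketch only shows how \(W^\mathrm{in}\), the elementwise \(\tanh\), the extra layers \(W^{(1)}, \ldots, W^{(l)}\), and \(W^\mathrm{out}\) compose.

```python
import numpy as np

rng = np.random.default_rng(1)
d, N = 3, 16                                   # input and hidden dimensions (hypothetical)

W_in = rng.normal(size=(N, d))                 # N x d input matrix
W_hidden = [rng.normal(size=(N, N)) / np.sqrt(N) for _ in range(2)]   # W^(1), W^(2)
W_out = rng.normal(size=(1, N))                # 1 x N output weights

def mlp(x: np.ndarray) -> float:
    """y = W_out sigma(W^(l) ... sigma(W^(1) sigma(W_in x))), sigma = tanh elementwise."""
    h = np.tanh(W_in @ x)
    for W in W_hidden:
        h = np.tanh(W @ h)
    return (W_out @ h).item()

y = mlp(np.array([0.1, -0.2, 0.3]))
print(y)
```

Because \(|\tanh| \le 1\), the output is bounded by the sum of the absolute readout weights, whatever the input.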

3.2 Temporal Task

The above task is not a temporal task, meaning that the input data are not sequential but given simultaneously, as in recognition tasks for images of handwritten characters, pictures and so on. However, for the recognition of spoken language or the prediction of time series such as stock market prices, which are called temporal tasks, the network has to handle input data that are given sequentially. To do so, a recurrent neural network feeds the previous states of the nodes back into the states of the nodes at the next step, which allows the network to memorize past inputs. In contrast, a neural network without any recurrence is called a feedforward neural network.

Let us formalize a temporal machine learning task with the recurrent neural network. For a given input time series \(\{ x_k \}_{k=1}^{L}\) and target time series \(\{ \bar{y}_k \}_{k=1}^{L}\), temporal machine learning is the task of generalizing a nonlinear function,

\[ \bar{y}_k = f\left( \{ x_j \}_{j=1}^{k} \right). \]

For simplicity, we consider one-dimensional input and output time series, but their generalization to the multi-dimensional case is straightforward. To learn the nonlinear function \(f(\{ x_j\}_{j=1}^{k})\), a recurrent neural network can be employed as a model. Suppose the recurrent neural network consists of m nodes, whose states are denoted by an m-dimensional vector

\[ \boldsymbol{r}_k = (r_k^{1}, \ldots, r_k^{m})^T. \]

To process the input time series, the nodes evolve by

\[ \boldsymbol{r}_k = \sigma\left( W \boldsymbol{r}_{k-1} + W^{\mathrm{in}} x_k \right), \]

where W is an \(m \times m\) transition matrix and \(W^\mathrm{in}\) is an \(m \times 1\) input weight matrix. Nonlinearity comes from the nonlinear function \(\sigma \) applied to each element of the nodes. The output time series of the network is defined in terms of a \(1 \times m\) readout weight matrix \(W^\mathrm{out}\) by

\[ y_k = W^{\mathrm{out}} \boldsymbol{r}_k. \]

Then, the learning task is to determine the parameters in \(W^\mathrm{in}\), W, and \(W^\mathrm{out}\) by using the teacher data \(\{ x_k , \bar{y}_k \}_{k=1}^{L}\) so as to minimize an error between the teacher \(\{ \bar{y}_k \}\) and the output \(\{ y_k \}\) of the network.

3.3 Reservoir Approach

While the representational power of a recurrent neural network can be improved by increasing the number of nodes, doing so makes the optimization of the weights hard and unstable. Specifically, backpropagation-based methods are prone to the vanishing gradient problem. The idea of reservoir computing is to resolve this problem by mapping an input into a complex, higher-dimensional feature space, i.e., the reservoir, and performing simple linear regression on it.

Let us first see a reservoir approach on a feedforward neural network, which is called an extreme learning machine (Huang et al. 2006). The input data x is fed into a network like a multilayer perceptron, where all weights are chosen randomly. The states of the hidden nodes at some layer are now regarded as basis functions of the input x in the feature space:

\[ \{ h_i(x) \}_{i=1}^{N}. \]

Now, the output is defined as a linear combination of these,

\[ y(x) = \sum_{i=1}^{N} w_i h_i(x) + w_0, \]

and hence the coefficients are determined simply by linear regression as mentioned before. If the dimension and nonlinearity of the basis functions are high enough, we can model a complex task simply by linear regression.
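
A minimal extreme learning machine sketch in NumPy, assuming a one-dimensional input, 50 random \(\tanh\) hidden nodes with uniformly drawn weights and biases, and the target \(\sin(2\pi x)\) (all arbitrary choices). Only the readout is fitted by the pseudo-inverse, and its training error is compared with plain linear regression on (1, x).

```python
import numpy as np

rng = np.random.default_rng(0)
N = 50                                          # number of random hidden nodes (hypothetical)

x = np.linspace(0, 1, 200)
y_teacher = np.sin(2 * np.pi * x)               # hypothetical target function

# Random, untrained input weights and biases define the basis functions h_i(x).
w_in = rng.uniform(-5, 5, size=N)
b_in = rng.uniform(-5, 5, size=N)
H = np.tanh(np.outer(x, w_in) + b_in)           # H[j, i] = h_i(x^(j))
H = np.hstack([np.ones((len(x), 1)), H])        # constant node for the bias w_0

# Only the linear readout is learned, via the pseudo-inverse.
w = np.linalg.pinv(H) @ y_teacher
mse_elm = np.mean((H @ w - y_teacher) ** 2)

# Baseline: plain linear regression on (1, x).
X_lin = np.column_stack([np.ones_like(x), x])
mse_lin = np.mean((X_lin @ (np.linalg.pinv(X_lin) @ y_teacher) - y_teacher) ** 2)
print(mse_elm, mse_lin)
```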

The echo state network is similar but employs the reservoir idea for the recurrent neural network (Jaeger and Haas 2004; Maass et al. 2002; Verstraeten et al. 2007); it was proposed before the extreme learning machine appeared. To be specific, the input weights \(W^\mathrm{in}\) and the weight matrix W are both chosen randomly up to an appropriate normalization (typically, W is rescaled so that its spectral radius is below one). Then, learning is done by finding the readout weights \(W^\mathrm{out}\) that minimize the mean square error

\[ \frac{1}{L} \sum_{k=1}^{L} \left( W^{\mathrm{out}} \boldsymbol{r}_k - \bar{y}_k \right)^2. \]

This problem can be solved stably by using the pseudo inverse as we mentioned before.
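
An echo state network can be sketched in the same way. The reservoir size, the spectral radius of 0.9, the input scaling, and the delay-1 recall task \(\bar{y}_k = x_{k-1}\) are illustrative assumptions, not settings from the text. Only \(W^\mathrm{out}\) is trained, and the first 100 steps are discarded as a transient (washout).

```python
import numpy as np

rng = np.random.default_rng(0)
m, L, washout = 50, 1100, 100                   # nodes, series length, discarded transient

# Random reservoir, rescaled so that the spectral radius of W is 0.9.
W = rng.normal(size=(m, m))
W *= 0.9 / np.max(np.abs(np.linalg.eigvals(W)))
W_in = rng.uniform(-0.5, 0.5, size=m)

x = rng.uniform(0, 1, size=L)                   # input time series x_k
y_teacher = np.roll(x, 1)                       # hypothetical task: recall x_{k-1}

# Drive the reservoir: r_k = tanh(W r_{k-1} + W_in x_k).
r = np.zeros(m)
states = []
for k in range(L):
    r = np.tanh(W @ r + W_in * x[k])
    states.append(r.copy())
R = np.hstack([np.ones((L, 1)), np.array(states)])[washout:]   # constant node + states
target = y_teacher[washout:]

# Only W_out is trained, by the pseudo-inverse (least-squares readout).
W_out = np.linalg.pinv(R) @ target
nmse = np.mean((R @ W_out - target) ** 2) / np.var(target)
print(nmse)
```

The normalized mean square error `nmse` measures how well the linear readout recovers the previous input from the current reservoir state; the wrap-around of `np.roll` at k = 0 is harmless because it falls inside the washout.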

For both feedforward and recurrent types, the reservoir approach does not need to tune the internal parameters of the network depending on the task, as long as the network possesses sufficient complexity. Therefore, the system to which the machine learning tasks are outsourced no longer needs to be a neural network; any nonlinear physical system with a large number of degrees of freedom can be employed as a reservoir for information processing, namely, physical reservoir computing (Fernando and Sojakka 2003; Appeltant et al. 2011; Woods and Naughton 2012; Larger et al. 2012; Paquot et al. 2012; Brunner et al. 2013; Vandoorne et al. 2014; Stieg et al. 2012; Hauser et al. 2011; Nakajima et al. 2013a, b, 2014, 2015; Caluwaerts et al. 2014).

4 Quantum Machine Learning on Near-Term Quantum Devices

In this section, we will see QRC and related frameworks for quantum machine learning. Before going deep into the temporal tasks done on QRC, we first explain how complex natural quantum dynamics can be exploited for generalization and classification tasks. This can be viewed as a quantum version of extreme learning machine (Negoro et al. 2018). Although it goes in the opposite direction to reservoir computing, we will also see quantum circuit learning (QCL) (Mitarai et al. 2018), where the parameters of the complex dynamics are further tuned in addition to the linear readout weights. QCL is a quantum version of a feedforward neural network. Finally, we will explain quantum reservoir computing by extending quantum extreme learning machine to temporal learning tasks.

4.1 Quantum Extreme Learning Machine

The idea of quantum extreme learning machine lies in using a Hilbert space, where quantum states live, as an enhanced feature space of the input data. Let us denote the set of input and teacher data by \(\{ x^{(j)}, \bar{y}^{(j)} \}\). Suppose we have an n-qubit system, which is initialized to

\[ |0\rangle^{\otimes n}. \]

In order to feed the input data into the quantum system, a unitary operation parameterized by x, say V(x), is applied to the initial state:

\[ V(x) |0\rangle^{\otimes n}. \]

For example, if x is one-dimensional data normalized to \(0 \le x \le 1\), then we may employ the Y-basis rotation \(e^{-i \theta Y}\) with an angle \(\theta = \arccos (\sqrt{x})\):

\[ e^{-i\theta Y} |0\rangle = \cos\theta\, |0\rangle + \sin\theta\, |1\rangle = \sqrt{x}\, |0\rangle + \sqrt{1-x}\, |1\rangle. \]

The expectation value of Z with respect to \(e^{-i \theta Y} |0\rangle \) becomes

\[ \langle Z \rangle = \cos^2\theta - \sin^2\theta = 2x - 1, \]

and hence is linearly related to the input x. To enhance the power of the quantum-enhanced feature space, the input could be transformed by a nonlinear function \(\phi \):

\[ \theta = \arccos\left( \sqrt{\phi(x)} \right). \]

The nonlinear function \(\phi \), suitably rescaled so that \(0 \le \phi(x) \le 1\), could be, for example, a hyperbolic tangent, a Legendre polynomial, and so on. For simplicity, below we will use the simple linear input \(\theta = \arccos (\sqrt{x})\).

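If we apply the same operation to each of the n qubits, we have

\[ V(x)|0\rangle^{\otimes n} = \left( \sqrt{x}\,|0\rangle + \sqrt{1-x}\,|1\rangle \right)^{\otimes n} = \sum_{i_1, \ldots, i_n \in \{0,1\}} x^{(n-|\boldsymbol{i}|)/2} (1-x)^{|\boldsymbol{i}|/2} |i_1 \cdots i_n\rangle, \]

where \(|\boldsymbol{i}|\) denotes the number of 1s in \(i_1 \cdots i_n\). Therefore, we have coefficients that are nonlinear with respect to the input x because of the tensor product structure. Still, the expectation value of the single-qubit operator \(Z_k\) on the kth qubit is \(2x-1\). However, if we measure a correlated operator like \(Z_1 Z_2\), we can obtain a second-order nonlinear output

\[ \langle Z_1 Z_2 \rangle = (2x - 1)^2 \]

with respect to the input x. To measure a correlated operator, it is enough to apply an entangling unitary operation like the CNOT gate \(\Lambda (X)=|0\rangle \langle 0| \otimes I + |1\rangle \langle 1| \otimes X\), since

\[ \Lambda(X)^{\dagger} (I \otimes Z) \Lambda(X) = Z \otimes Z, \]

so that measuring Z on the second (target) qubit after the CNOT gate yields \(\langle Z_1 Z_2 \rangle \).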

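In general, an n-qubit unitary operation U transforms the observable \(Z_k\) under conjugation into a linear combination of Pauli operators:

\[ U^{\dagger} Z_k U = \sum_{\boldsymbol{i}} \alpha^{(k)}_{\boldsymbol{i}} P(\boldsymbol{i}). \]

Thus, if you measure the output of the quantum circuit after applying a unitary operation U,

\[ \langle Z_k \rangle = \langle 0|^{\otimes n} V(x)^{\dagger} U^{\dagger} Z_k U V(x) |0\rangle^{\otimes n} = \sum_{\boldsymbol{i}} \alpha^{(k)}_{\boldsymbol{i}} \langle 0|^{\otimes n} V(x)^{\dagger} P(\boldsymbol{i}) V(x) |0\rangle^{\otimes n}, \]

you can get a complex nonlinear output, which can be represented as a linear combination of exponentially many nonlinear functions. U should be chosen to be appropriately complex while remaining experimentally feasible, but it need not be fine-tuned.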
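
The single- and two-qubit statements above can be verified with a small NumPy sketch. The input value x = 0.3 is an arbitrary assumption; the code uses the closed form \(e^{-i\theta Y} = \cos\theta\, I - i \sin\theta\, Y\) (valid because \(Y^2 = I\)) and the qubit ordering of `np.kron`, in which the first factor is qubit 1. The helper names `encode` and `expval` are hypothetical.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

def encode(x: float) -> np.ndarray:
    """e^{-i theta Y}|0> with theta = arccos(sqrt(x))."""
    theta = np.arccos(np.sqrt(x))
    rotation = np.cos(theta) * I2 - 1j * np.sin(theta) * Y
    return rotation @ np.array([1, 0], dtype=complex)

def expval(op: np.ndarray, psi: np.ndarray) -> float:
    return np.vdot(psi, op @ psi).real

x = 0.3
one = encode(x)
two = np.kron(one, one)                       # same encoding on two qubits
z1 = expval(np.kron(Z, I2), two)              # expected 2x - 1
z1z2 = expval(np.kron(Z, Z), two)             # expected (2x - 1)^2

# CNOT = |0><0| (x) I + |1><1| (x) X maps I(x)Z to Z(x)Z under conjugation,
# so measuring Z on the target qubit after the CNOT yields <Z_1 Z_2>.
CNOT = np.kron(np.diag([1, 0]), I2) + np.kron(np.diag([0, 1]), X)
z2_after_cnot = expval(np.kron(I2, Z), CNOT @ two)
print(z1, z1z2, z2_after_cnot)
```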

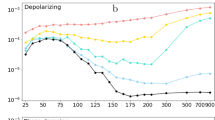

To see how the output behaves nonlinearly with respect to the input, in Fig. 1 we plot the output \(\langle Z \rangle \) for the input \((x_0,x_1)\) and \(n=8\), where the inputs are fed into the quantum state by Y-rotations with angles

\[ \theta_{2k} = \arccos\left( \sqrt{x_0} \right), \quad \theta_{2k+1} = \arccos\left( \sqrt{x_1} \right) \quad (k = 0, 1, 2, 3) \]

on the 2kth and \((2k+1)\)th qubits, respectively. Regarding the unitary operation U, random two-qubit gates are sequentially applied to pairs of qubits in the 8-qubit system.

Suppose the Pauli Z operator is measured on each qubit as an observable. Then, we have

\[ z_i(x) = \langle Z_i \rangle = \langle 0|^{\otimes n} V(x)^{\dagger} U^{\dagger} Z_i U V(x) |0\rangle^{\otimes n} \]

for each qubit. In quantum extreme learning machine, the output is defined by taking a linear combination of these n outputs:

\[ y(x) = \sum_{i=1}^{n} w_i z_i(x). \]

Now, the linear readout weights \(\{ w_i \}\) are tuned so that the quadratic loss function

\[ L(\{w_i\}) = \sum_j \left( y(x^{(j)}) - \bar{y}^{(j)} \right)^2 \]

is minimized. As we mentioned previously, this can be done by using the pseudo-inverse. In short, quantum extreme learning machine is linear regression on randomly chosen nonlinear basis functions, which come from the quantum state in an exponentially large space, namely, the quantum-enhanced feature space. Furthermore, for some typical choices of the nonlinear input function and of the unitary operations that transform the observables, the output y(x) in Eq. (67) can approximate any continuous function of the input. This property is known as the universal approximation property (UAP), which implies that quantum extreme learning machine can handle a wide class of machine learning tasks with at least the same power as the classical extreme learning machine (Goto et al. 2020).

Here we should note that a similar approach, quantum kernel estimation, has been taken in Havlicek et al. (2019) and Kusumoto et al. (2021). In quantum extreme learning machine, a classical feature vector \( \phi _i(x) \equiv \langle \Phi (x) |Z_i | \Phi (x) \rangle \) is extracted from observables on the quantum feature space \(|\Phi (x) \rangle \equiv V(x)|0\rangle ^{\otimes n}\). Then, linear regression is performed on the classical feature vector. On the other hand, quantum kernel estimation fully employs the quantum feature space by using a support vector machine with the kernel function \(K(x,x') \equiv |\langle \Phi (x) | \Phi (x') \rangle|^2 \), which can be estimated on a quantum computer. While quantum kernel estimation may offer better classification power, it requires more quantum computational resources than quantum extreme learning machine, both for learning and for prediction.

Fig. 2 (a) The quantum circuit for quantum extreme learning machine. The box with \(\theta _k\) indicates Y-rotations by angles \(\theta _k\). The red and blue boxes correspond to X and Z rotations by random angles. Each dotted-line box represents a two-qubit gate consisting of two controlled-Z gates, 8 X-rotations and 4 Z-rotations. As denoted by the dashed-line box, the sequence of the 7 dotted boxes is repeated twice. The readout is defined by a linear combination of \(\langle Z_i \rangle \) with a constant bias term 1.0 and the input \((x_0,x_1)\). (b) (Left) The training data for a two-class classification problem. (Middle) The readout after learning. (Right) Prediction from the readout with a threshold at 0.5

In Fig. 2, we demonstrate quantum extreme learning machine on a two-class classification task for a two-dimensional input \(0\le x_0, x_1 \le 1\). Classes 0 and 1 are defined as the inputs satisfying \((x_0 -0.5)^2 + (x_1-0.5)^2 \le 0.15\) and \(>0.15\), respectively. The linear readout weights \(\{ w_i\}\) are learned with 1000 randomly chosen training data, and prediction is performed on 1000 randomly chosen inputs. The class (0 or 1) is determined by whether or not the output y is larger than 0.5. Quantum extreme learning machine with the 8-qubit quantum circuit shown in Fig. 2a succeeds in predicting the class with \(95\%\) accuracy. On the other hand, simple linear regression on \((x_0,x_1)\) achieves only \(39\%\). Moreover, quantum extreme learning machine with \(U=I\), meaning no entangling gates, also performs poorly, at \(42\%\). In this way, the feature space enhanced by quantum entangling operations is important for obtaining good performance in quantum extreme learning machine.
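
The following is a rough numerical sketch of quantum extreme learning machine on this circle-classification task, written as a plain NumPy state-vector simulation. It makes several simplifying assumptions that differ from the experiment in Fig. 2: it uses 6 qubits instead of 8, replaces the random two-qubit-gate circuit with a single Haar-random unitary on all qubits, uses 300 training points, and fits the readout with `np.linalg.lstsq` (a least-squares solve equivalent to applying the pseudo-inverse, with a hypothetical cutoff `rcond=1e-10` for numerically zero singular values). Its accuracies are not expected to reproduce the figures quoted above, and all helper names are hypothetical.

```python
import numpy as np
from functools import reduce

rng = np.random.default_rng(0)
n = 6  # number of qubits (assumption: the text uses 8; 6 keeps the sketch fast)

def ry_state(x: float) -> np.ndarray:
    """e^{-i theta Y}|0> = sqrt(x)|0> + sqrt(1-x)|1> for theta = arccos(sqrt(x))."""
    return np.array([np.sqrt(x), np.sqrt(1 - x)], dtype=complex)

def feature_state(x0: float, x1: float) -> np.ndarray:
    """V(x)|0...0>: x0 is encoded on even-indexed qubits, x1 on odd-indexed qubits."""
    return reduce(np.kron, [ry_state(x0 if k % 2 == 0 else x1) for k in range(n)])

def haar_unitary(dim: int) -> np.ndarray:
    """Random unitary from the QR decomposition of a complex Gaussian matrix."""
    G = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    Q, R = np.linalg.qr(G)
    return Q * (np.diag(R) / np.abs(np.diag(R)))    # fix the column phases

def z_features(psi: np.ndarray) -> np.ndarray:
    """<Z_i> for each qubit i, from the computational-basis probabilities."""
    probs = (np.abs(psi) ** 2).reshape((2,) * n)     # axis i corresponds to qubit i
    feats = []
    for i in range(n):
        marg = probs.sum(axis=tuple(j for j in range(n) if j != i))
        feats.append(marg[0] - marg[1])
    return np.array(feats)

def design(xs: np.ndarray, U: np.ndarray) -> np.ndarray:
    """Readout features per sample: bias 1, raw inputs (x0, x1), and <Z_i> after U."""
    return np.array([np.concatenate([[1.0, x0, x1], z_features(U @ feature_state(x0, x1))])
                     for x0, x1 in xs])

def fit(F: np.ndarray, labels: np.ndarray):
    """Least-squares linear readout (pseudo-inverse solution); returns (MSE, accuracy)."""
    w, *_ = np.linalg.lstsq(F, labels, rcond=1e-10)
    pred = F @ w
    return np.mean((pred - labels) ** 2), np.mean((pred > 0.5) == (labels > 0.5))

xs = rng.uniform(0, 1, size=(300, 2))
labels = (((xs[:, 0] - 0.5) ** 2 + (xs[:, 1] - 0.5) ** 2) > 0.15).astype(float)

F_ent = design(xs, haar_unitary(2 ** n))   # random (entangling) unitary
F_id = design(xs, np.eye(2 ** n))          # U = I: no entangling gates
mse_ent, acc_ent = fit(F_ent, labels)
mse_id, acc_id = fit(F_id, labels)
print(f"random U: MSE={mse_ent:.3f}, acc={acc_ent:.2f}; U=I: MSE={mse_id:.3f}, acc={acc_id:.2f}")
```

Because the readout always includes the bias and the raw inputs, as in the Fig. 2 caption, the U = I features span only \(\{1, x_0, x_1\}\) (each \(\langle Z_i\rangle\) is just \(2x-1\)); the random-unitary case can therefore never fit the training data worse. This is a cheap sanity check of the construction rather than evidence for the reported accuracy gap.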

4.2 Quantum Circuit Learning

In the spirit of reservoir computing, the dynamics of a physical system is not fine-tuned; rather, the natural dynamics of the system is harnessed for machine learning tasks. However, in state-of-the-art quantum computing devices, the parameters of quantum operations can be finely tuned, as is done for universal quantum computing. Therefore, it is natural to extend quantum extreme learning machine by tuning the parameters of the quantum circuit, just like feedforward neural networks trained with backpropagation.

Using parameterized quantum circuits for supervised machine learning tasks, such as generalization of nonlinear functions and pattern recognition, has been proposed in Mitarai et al. (2018) and Farhi and Neven (2018); we call this approach quantum circuit learning. Let us consider the same situation as in quantum extreme learning machine. The state before the measurement is given by

\[ U V(x) |0\rangle^{\otimes n}. \]

In the case of quantum extreme learning machine, the unitary operation U, which produces a nonlinear transformation with respect to the input x, is chosen randomly. However, the unitary operation U may also be parameterized:

\[ U(\{\phi_k\}) = \prod_k u(\phi_k). \]

Thereby, the output of the quantum circuit with respect to an observable A,

\[ \langle A(\{\phi_k\}, x) \rangle = \langle 0|^{\otimes n} V(x)^{\dagger} U(\{\phi_k\})^{\dagger} A\, U(\{\phi_k\}) V(x) |0\rangle^{\otimes n}, \]

becomes a function of the circuit parameters \(\{ \phi _k\}\) in addition to the input x. Then, the parameters \(\{ \phi _k\}\) are tuned so as to minimize the error between the teacher data and the output, for example, by using the gradient, just as for the output of a feedforward neural network.

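Let us define a teacher dataset \(\{ x^{(j)}, y^{(j)}\}\) and a quadratic loss function

\[ L(\{\phi_k\}) = \frac{1}{2} \sum_j \left( \langle A(\{\phi_k\}, x^{(j)}) \rangle - y^{(j)} \right)^2. \]

The gradient of the loss function can be obtained as follows:

\[ \frac{\partial L(\{\phi_k\})}{\partial \phi_l} = \sum_j \left( \langle A(\{\phi_k\}, x^{(j)}) \rangle - y^{(j)} \right) \frac{\partial \langle A(\{\phi_k\}, x^{(j)}) \rangle}{\partial \phi_l}. \]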

Therefore, if we can measure the gradient of the observable \(\langle A (\{ \phi _k\},x^{(j)}) \rangle \), the loss function can be minimized according to the gradient descent.

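Suppose the unitary operation \(u(\phi _k)\) is given by

\[ u(\phi_k) = \exp\left( -i \frac{\phi_k}{2} P_k \right) W_k, \]

where \(W_k\) is an arbitrary unitary and \(P_k\) is a Pauli operator. Then, the partial derivative with respect to the lth parameter can be analytically calculated from the outputs \(\langle A (\{ \phi _k\},x^{(j)}) \rangle \) with the lth parameter shifted by \(\pm \epsilon \) (Mitarai et al. 2018; Mitarai and Fujii 2019):

\[ \frac{\partial \langle A(\{\phi_k\}, x^{(j)}) \rangle}{\partial \phi_l} = \frac{\langle A(\{\phi_k\}_{k \ne l}, \phi_l + \epsilon, x^{(j)}) \rangle - \langle A(\{\phi_k\}_{k \ne l}, \phi_l - \epsilon, x^{(j)}) \rangle}{2 \sin\epsilon}. \]

Taking into account the statistical error in measuring the observable \(\langle A \rangle \), \(\epsilon \) should be chosen as \(\epsilon = \pi /2\) so as to maximize the denominator. After measuring the partial derivatives for all parameters \(\phi _k\) and calculating the gradient of the loss function \(L(\{ \phi _k\})\), the parameters are updated by gradient descent:

\[ \phi_k \leftarrow \phi_k - \eta \frac{\partial L(\{\phi_k\})}{\partial \phi_k}, \]

where \(\eta > 0\) is a learning rate.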

The idea of using parameterized quantum circuits for machine learning is now widespread. Following the proposal of quantum circuit learning based on the analytical gradient estimation above (Mitarai et al. 2018) and a similar idea (Farhi and Neven 2018), a number of studies have explored various types of parameterized quantum circuits (Schuld et al. 2020; Huggins et al. 2019; Chen et al. 2018; Glasser et al. 2018; Du et al. 2018) and various models and types of machine learning, including generative models (Benedetti et al. 2019a; Liu and Wang 2018) and generative adversarial models (Benedetti et al. 2019b; Situ et al. 2020; Zeng et al. 2019; Romero and Aspuru-Guzik 2019). Moreover, the expressive power of parameterized quantum circuits and their advantage over classical probabilistic models have been investigated (Du et al. 2020). Experimentally feasible ways to measure analytical gradients of parameterized quantum circuits have also been studied (Mitarai and Fujii 2019; Schuld et al. 2019; Vidal and Theis 2018). An advantage of using such gradients for parameter optimization has been argued in a simple setting (Harrow and Napp 2021), whereas parameter tuning can become difficult because gradients tend to vanish in an exponentially large Hilbert space (McClean et al. 2018). Software libraries for optimizing parameterized quantum circuits are now being developed (Bergholm et al. 2018; Chen et al. 2019). Quantum machine learning on near-term devices, especially on quantum optical systems, has been proposed in Steinbrecher et al. (2019) and Killoran et al. (2019). Quantum circuit learning with parameterized quantum circuits has already been demonstrated experimentally on superconducting qubit systems (Havlicek et al. 2019; Wilson et al. 2018) and on a trapped-ion system (Zhu et al. 2019).
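The parameter-shift rule and the gradient-descent update described earlier in this subsection can be illustrated on a toy model. The sketch assumes \(u(\phi_k) = W_k e^{-i\phi_k P_k/2}\), a convention under which the rule reads \(\partial \langle A\rangle/\partial\phi_l = [\langle A\rangle(\phi_l+\epsilon) - \langle A\rangle(\phi_l-\epsilon)]/(2\sin\epsilon)\), so that \(\epsilon=\pi/2\) maximizes the denominator. The single-qubit circuit, the input encoding \(e^{-ixY/2}\), the gate list `GATES`, the learning rate, and the 1/2 factor in the loss are hypothetical choices for illustration, not the setup of Mitarai et al. (2018).

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
HAD = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)

# Hypothetical circuit: u_k(phi_k) = W_k exp(-i phi_k P_k / 2), applied in list order
GATES = [(HAD, Z), (I2, Y)]  # (W_k, P_k) pairs


def rotation(phi, P):
    # exp(-i phi P / 2) = cos(phi/2) I - i sin(phi/2) P, valid because P @ P = I
    return np.cos(phi / 2) * I2 - 1j * np.sin(phi / 2) * P


def expectation(phis, x, A=Z):
    """<A({phi_k}, x)> for one qubit prepared in |0> and encoded by exp(-i x Y / 2)."""
    psi = rotation(x, Y) @ np.array([1, 0], dtype=complex)
    for phi, (W, P) in zip(phis, GATES):
        psi = W @ (rotation(phi, P) @ psi)
    return float(np.real(np.conj(psi) @ (A @ psi)))


def shift_gradient(phis, x, eps=np.pi / 2):
    """Parameter-shift rule; exact for any eps with sin(eps) != 0."""
    phis = np.asarray(phis, dtype=float)
    grads = np.zeros_like(phis)
    for l in range(len(phis)):
        shift = np.zeros_like(phis)
        shift[l] = eps
        grads[l] = (expectation(phis + shift, x) - expectation(phis - shift, x)) / (2 * np.sin(eps))
    return grads


def train(xs, ys, phis, lr=0.02, steps=300):
    """Plain gradient descent on L = 1/2 sum_j (<A>_j - y_j)^2."""
    phis = np.array(phis, dtype=float)
    history = []
    for _ in range(steps):
        residual = np.array([expectation(phis, x) for x in xs]) - ys
        history.append(0.5 * float(residual @ residual))
        grad_out = np.array([shift_gradient(phis, x) for x in xs])  # (n_samples, n_params)
        phis -= lr * (residual @ grad_out)  # phi_k <- phi_k - lr * dL/dphi_k
    return phis, history


xs = np.linspace(-1.0, 1.0, 10)
ys = np.array([expectation([1.0, 0.7], x) for x in xs])  # toy teacher from known parameters
phis, history = train(xs, ys, [0.1, -0.2])
print(f"loss: {history[0]:.4f} -> {history[-1]:.2e}")
```

Because the toy teacher is generated by the same circuit, the target is exactly representable, and the small learning rate makes the loss decrease step by step. On hardware, each call to `expectation` would be replaced by an estimate from a finite number of shots.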

4.3 Quantum Reservoir Computing

Now we return to the reservoir approach and extend the quantum extreme learning machine from non-temporal tasks to temporal ones, namely quantum reservoir computing (Fujii and Nakajima 2017). We consider a temporal task as explained in Sect. 3.2. The input is given by a time series \(\{ x_k\}_{k=1}^{L}\), and the purpose is to learn a nonlinear temporal function:

To this end, the target time series \(\{ \bar{y}_k \}_{k=1}^{L}\) is also provided as teacher data.

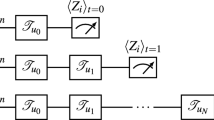

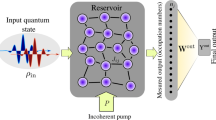

In contrast to the previous setting with non-temporal tasks, the input has to be fed into the quantum system sequentially. This requires part of the system to be re-initialized during the computation, and hence the quantum state of the system becomes a mixed state. Therefore, in the formulation of QRC, we use the vector representation of density operators explained in Sect. 2.5.

In the vector representation of density operators, the quantum state of an N-qubit system is given by a vector in a \(4^N\)-dimensional real vector space, \({\boldsymbol{r}} \in \mathbb {R}^{4^N}\). In QRC, as in recurrent neural networks, each element of the \(4^N\)-dimensional vector is regarded as a hidden node of the network. As we saw in Sect. 2.5, any physical operation can be written as a linear transformation of the real vector by a \(4^N \times 4^N\) matrix W:
\[ {\boldsymbol{r}}' = W {\boldsymbol{r}}. \qquad (79) \]
Eq. (79) describes a time evolution similar to that of a recurrent neural network, \({\boldsymbol{r}}' = \tanh ( W {\boldsymbol{r}} )\). However, a quantum operation W contains no nonlinearity such as \(\tanh \). Instead, the time evolution can be changed according to the external input \(x_k\), namely \(W_{x_k}\), in contrast to a conventional recurrent neural network, where the input is fed additively, \(W {\boldsymbol{r}} + W^\mathrm{in} x_k\). By feeding the input repeatedly in this way, the quantum reservoir can process the input information \(\{x_k\}\) nonlinearly.
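To make the vector representation concrete, the sketch below builds \({\boldsymbol{r}}\) from a density operator via \(r_i = \mathrm{Tr}(P_i \rho)\) over all \(4^N\) Pauli strings, and the matrix of a unitary channel via \(W_{ij} = \mathrm{Tr}(P_i U P_j U^\dagger)/2^N\), then checks that \({\boldsymbol{r}}' = W{\boldsymbol{r}}\). The normalization \(\rho = 2^{-N}\sum_i r_i P_i\) is our assumption and may differ by constant factors from the convention of Sect. 2.5.

```python
import itertools

import numpy as np

PAULIS = [np.eye(2, dtype=complex),
          np.array([[0, 1], [1, 0]], dtype=complex),
          np.array([[0, -1j], [1j, 0]], dtype=complex),
          np.array([[1, 0], [0, -1]], dtype=complex)]


def pauli_basis(n):
    """All 4^n tensor products of I, X, Y, Z (first qubit = leftmost factor)."""
    basis = []
    for labels in itertools.product(range(4), repeat=n):
        op = np.ones((1, 1), dtype=complex)
        for l in labels:
            op = np.kron(op, PAULIS[l])
        basis.append(op)
    return basis


def to_vector(rho, basis):
    # r_i = Tr(P_i rho) is real because P_i and rho are both Hermitian
    return np.array([np.trace(P @ rho).real for P in basis])


def transfer_matrix(channel, basis):
    # W_ij = Tr(P_i channel(P_j)) / 2^n, so that to_vector(channel(rho)) = W @ to_vector(rho)
    dim = basis[0].shape[0]
    return np.array([[np.trace(Pi @ channel(Pj)).real / dim for Pj in basis] for Pi in basis])


rng = np.random.default_rng(0)
n = 2
basis = pauli_basis(n)
G = rng.normal(size=(2 ** n, 2 ** n)) + 1j * rng.normal(size=(2 ** n, 2 ** n))
U, _ = np.linalg.qr(G)                      # a random unitary
A = rng.normal(size=(2 ** n, 2 ** n)) + 1j * rng.normal(size=(2 ** n, 2 ** n))
rho = A @ A.conj().T
rho /= np.trace(rho).real                   # a random density operator
W = transfer_matrix(lambda P: U @ P @ U.conj().T, basis)
r = to_vector(rho, basis)
print(np.allclose(W @ r, to_vector(U @ rho @ U.conj().T, basis)))
```

For a unitary channel, W comes out real and orthogonal, and the map is strictly linear: any nonlinearity in QRC must come from how W depends on the input.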

Suppose the input \(\{ x_k\}\) is normalized such that \(0 \le x_k \le 1\). To feed in the input, we replace some of the qubits with a quantum state encoding \(x_k\). The density operator is given by

For simplicity, below we consider the case where only one qubit is replaced by the input. The corresponding matrix \(S_{x_k}\) is given by

where \(\mathrm{Tr}_\mathrm{replace}\) indicates a partial trace with respect to the replaced qubit. With this definition, we have

The unitary time evolution, which is necessary to obtain a nonlinear behavior with respect to the input variable \(x_k\), is given by the Hamiltonian dynamics \(e^{-i H \tau }\) for a given time interval \(\tau \). Let us denote its representation on the vector space by \(U_\tau \):

Then, a unit time step is written as an input-dependent linear transformation:

where \({\boldsymbol{r}}(k\tau )\) indicates the hidden nodes at time \(k\tau \).
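The input injection and one time step can be sketched directly on density matrices. We assume, hypothetically, that the replaced qubit is the first one and that \(x_k\) is encoded as \(\sqrt{x_k}|0\rangle + \sqrt{1-x_k}|1\rangle\); this is chosen so that \(\langle Z\rangle = 2x_k - 1\), matching the linear output quoted for the true nodes, but the chapter's own encoding may differ. `U_tau` stands for \(e^{-iH\tau}\) of whatever Hamiltonian is used.

```python
import numpy as np


def input_state(x):
    # Hypothetical encoding |psi_x> = sqrt(x)|0> + sqrt(1-x)|1>, so <Z> = 2x - 1
    psi = np.array([np.sqrt(x), np.sqrt(1.0 - x)], dtype=complex)
    return np.outer(psi, psi.conj())


def trace_out_first_qubit(rho):
    d = rho.shape[0] // 2
    # view rho as rho[a, j, b, k] (a, b: first qubit) and sum over a = b
    return np.einsum('ajak->jk', rho.reshape(2, d, 2, d))


def inject(rho, x):
    """S_x: replace the first qubit by rho_x, i.e. rho -> rho_x (x) Tr_replace(rho)."""
    return np.kron(input_state(x), trace_out_first_qubit(rho))


def unit_step(rho, x, U_tau):
    """One time step: inject x_k, then evolve with U_tau = exp(-i H tau)."""
    sigma = inject(rho, x)
    return U_tau @ sigma @ U_tau.conj().T


rng = np.random.default_rng(0)
A = rng.normal(size=(8, 8)) + 1j * rng.normal(size=(8, 8))
rho = A @ A.conj().T
rho /= np.trace(rho).real                       # random 3-qubit density operator
Z1 = np.kron(np.diag([1.0, -1.0]), np.eye(4))   # Z on the replaced qubit
x = 0.3
print(np.trace(Z1 @ inject(rho, x)).real)       # approximately 2x - 1 = -0.4
```

The map `inject` is linear in \(\rho\) (partial trace and tensor product are both linear), while its dependence on \(x_k\) is nonlinear through the square roots; this is the sense in which the step is an input-dependent linear transformation.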

Since the number of hidden nodes is exponentially large, it is not feasible to observe all of them in experiments. Instead, a set of observed nodes \(\{\bar{r}_l\}_{l=1}^{M}\), which we call true nodes, is defined by an \(M \times 4^N \) matrix R,

The number of true nodes M has to be polynomial in the number of qubits N. That is, from exponentially many hidden nodes, a polynomial number of true nodes are obtained to define the output of the quantum reservoir (QR) (see Fig. 3a):

where \(W_\mathrm{out}\) denotes the readout weights, which are obtained from the training data. For simplicity, we take the single-qubit Pauli Z operator on each qubit as the true nodes, i.e.,

so that if there is no dynamics these nodes simply provide a linear output \((2x_k -1)\) with respect to the input \(x_k\).
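In the vector representation, the same injection becomes a \(4^N \times 4^N\) matrix acting on \({\boldsymbol{r}}\), and R simply selects the \(\langle Z_i\rangle\) components. The sketch assumes the Pauli-string ordering I, X, Y, Z with the first qubit as the most significant digit, the same hypothetical encoding \(\sqrt{x}|0\rangle + \sqrt{1-x}|1\rangle\), and writes one step as \({\boldsymbol{r}}(k\tau) = U_\tau S_{x_k} {\boldsymbol{r}}((k-1)\tau)\); these indexing and encoding choices are ours. With no dynamics (\(U_\tau\) equal to the identity), the first true node reproduces \(2x_k - 1\).

```python
import numpy as np


def input_vector(x):
    # Pauli vector (Tr, <X>, <Y>, <Z>) of the hypothetical input state sqrt(x)|0> + sqrt(1-x)|1>
    return np.array([1.0, 2.0 * np.sqrt(x * (1.0 - x)), 0.0, 2.0 * x - 1.0])


def injection_matrix(x, n):
    """S_x: new component r[(a, q)] = r_x[a] * r[(I, q)], i.e. qubit 1 is replaced."""
    e_identity = np.array([1.0, 0.0, 0.0, 0.0])
    return np.kron(np.outer(input_vector(x), e_identity), np.eye(4 ** (n - 1)))


def readout_matrix(n):
    """R (n x 4^n): row i picks the component belonging to the Pauli string Z_i."""
    R = np.zeros((n, 4 ** n))
    for i in range(n):
        R[i, 3 * 4 ** (n - 1 - i)] = 1.0
    return R


def unit_step(r, x, U_tau):
    # r(k tau) = U_tau S_{x_k} r((k-1) tau); U_tau is the 4^n x 4^n matrix of exp(-iH tau)
    n = (len(r).bit_length() - 1) // 2      # len(r) = 4^n
    return U_tau @ (injection_matrix(x, n) @ r)


def single_qubit_vector(z):
    return np.array([1.0, 0.0, 0.0, z])     # diagonal single-qubit state with <Z> = z


n = 3
r = np.kron(single_qubit_vector(0.2), np.kron(single_qubit_vector(-0.6), single_qubit_vector(0.9)))
r_next = unit_step(r, 0.8, np.eye(4 ** n))  # "no dynamics": U_tau = identity
print(readout_matrix(n) @ r_next)          # approximately [0.6, -0.6, 0.9]
```

Only the first true node changes; the others keep their values because, without dynamics, nothing couples the injected qubit to the rest.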

Moreover, to improve the performance, we also employ temporal multiplexing. Temporal multiplexing has been found to be useful for extracting the complex dynamics of the exponentially many hidden nodes through the restricted number of true nodes (Fujii and Nakajima 2017). In temporal multiplexing, the true nodes are measured not only just after the time evolution \(U_\tau \) but also at each of V subdivided time intervals during the unitary evolution \(U_{\tau }\), which yields V virtual nodes per true node, as shown in Fig. 3b. After each input by \(S_{x_k}\), the signals from the hidden nodes (via the true nodes) are measured at each subdivided interval, after the time evolution by \(U_{ v \tau /V}\) (\(v=1,2,\ldots ,V\)), i.e.,

In total, now we have \(N \times V\) nodes, and the output is defined as their linear combination:

By using the teacher data \(\{ \bar{y}_k \}_{k=1}^{L}\), the linear readout weights \(W^\mathrm{out}_{j,v}\) can be determined with the pseudo-inverse. In Fujii and Nakajima (2017), the performance of QRC was investigated extensively for both binary and continuous inputs. The results show that even a small system of 5–7 qubits performs as well as an echo state network with 100–500 nodes in both short-term memory and parity-check capacities. Note that, although we do not go into detail in this chapter, a technique called spatial multiplexing (Nakajima et al. 2019), which exploits multiple quantum reservoirs into which a common input sequence is injected, has also been introduced to harness quantum dynamics as a computational resource. Recently, QRC has been further investigated in Kutvonen et al. (2020), Ghosh et al. (2019a), and Chen and Nurdin (2019). Specifically, in Ghosh et al. (2019a), the authors use quantum reservoir computing to detect many-body entanglement by estimating nonlinear functions of density operators, such as entropy.
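Temporal multiplexing and the pseudo-inverse readout can be combined into a small end-to-end sketch on density matrices. It assumes, hypothetically, a random Hermitian Hamiltonian in place of a physical model, a maximally mixed initial state, the same \(\sqrt{x}|0\rangle + \sqrt{1-x}|1\rangle\) encoding on qubit 1, and an extra constant bias column in the readout; the toy target \(y_k = x_{k-1}\) (one-step memory) is for illustration only. Each row of the feature matrix holds the \(N \times V\) node values \(\langle Z_j\rangle\) after \(U_{v\tau/V}\).

```python
import numpy as np

Z = np.diag([1.0, -1.0]).astype(complex)


def site_op(op, site, n):
    out = np.ones((1, 1), dtype=complex)
    for q in range(n):
        out = np.kron(out, op if q == site else np.eye(2))
    return out


def evolution(H, t):
    # exp(-iHt) from the eigendecomposition of the Hermitian matrix H
    energies, vecs = np.linalg.eigh(H)
    return (vecs * np.exp(-1j * energies * t)) @ vecs.conj().T


def inject(rho, x):
    # hypothetical encoding sqrt(x)|0> + sqrt(1-x)|1> written onto qubit 1
    psi = np.array([np.sqrt(x), np.sqrt(1.0 - x)], dtype=complex)
    d = rho.shape[0] // 2
    rest = np.einsum('ajak->jk', rho.reshape(2, d, 2, d))
    return np.kron(np.outer(psi, psi.conj()), rest)


def multiplexed_features(xs, H, n, tau, n_virtual):
    """Row k: <Z_j> after U_{v tau / V} for v = 1..V and j = 1..N (N*V nodes)."""
    U_sub = evolution(H, tau / n_virtual)
    z_ops = [site_op(Z, j, n) for j in range(n)]
    rho = np.eye(2 ** n, dtype=complex) / 2 ** n   # assumed initial state
    feats = np.zeros((len(xs), n * n_virtual))
    for k, x in enumerate(xs):
        rho = inject(rho, x)
        for v in range(n_virtual):
            rho = U_sub @ rho @ U_sub.conj().T     # total evolution since injection: U_{(v+1) tau / V}
            feats[k, v * n:(v + 1) * n] = [np.trace(Zj @ rho).real for Zj in z_ops]
    return feats


def fit_readout(feats, targets):
    F = np.hstack([feats, np.ones((len(feats), 1))])   # bias column (our assumption)
    return np.linalg.pinv(F) @ targets, F


rng = np.random.default_rng(1)
n, tau, n_virtual = 3, 1.0, 5
G = rng.normal(size=(2 ** n, 2 ** n)) + 1j * rng.normal(size=(2 ** n, 2 ** n))
H = (G + G.conj().T) / 2                       # hypothetical random Hamiltonian
xs = rng.uniform(0.0, 1.0, size=200)
feats = multiplexed_features(xs, H, n, tau, n_virtual)
w_out, F = fit_readout(feats[1:], xs[:-1])     # toy target: y_k = x_{k-1}
print("training MSE:", np.mean((F @ w_out - xs[:-1]) ** 2))
```

`np.linalg.pinv` gives the least-squares (minimum-norm) solution; in practice a ridge penalty is a common alternative when the feature matrix is ill-conditioned, though that is not what the text describes.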

4.4 Emulating Chaotic Attractors Using Quantum Dynamics

To illustrate the performance of QRC, here we demonstrate the emulation of chaotic attractors. Suppose \(\{ x_k\}_{k=1}^{L}\) is a discretized time sequence generated by a complex nonlinear equation, which may exhibit chaotic behavior. In this task, the target that the network is to output is defined to be
\[ \bar{y}_k = x_{k+1}. \]
That is, the system learns to predict the input of the next step. Once the system has successfully learned \(\bar{y}_{k}\), its output is fed back as the input of the next step, and the system evolves autonomously.
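The switch from teacher forcing to autonomous evolution is simple bookkeeping. In the sketch below, `step` and `readout` are hypothetical callables: for a quantum reservoir, `step` would inject x, evolve, and return the \(N \times V\) node values, and `readout` would apply the trained weights. Clipping the fed-back value to [0, 1] is our own safeguard so that it remains a valid input; the toy reservoir only exercises the feedback loop.

```python
import numpy as np


def run_closed_loop(step, readout, state, x_first, n_steps):
    """Autonomous mode: each output y_k is fed back as the next input x_{k+1}.

    step(state, x) -> (new_state, node_values) and readout(node_values) -> y are
    hypothetical callables standing in for the reservoir update and trained readout.
    """
    outputs = []
    x = x_first
    for _ in range(n_steps):
        state, nodes = step(state, x)
        y = float(readout(nodes))
        outputs.append(y)
        x = float(np.clip(y, 0.0, 1.0))   # keep the fed-back input inside [0, 1]
    return np.array(outputs)


def toy_step(state, x):
    # trivial stand-in "reservoir": no internal state, the node value is the input itself
    return state, np.array([x])


def toy_readout(nodes):
    return 0.5 * nodes[0] + 0.25


outs = run_closed_loop(toy_step, toy_readout, None, 1.0, 4)
print(outs)   # values 0.75, 0.625, 0.5625, 0.53125
```

In the experiment, `state` would be the reservoir state reached at the end of the teacher-forced phase, so the autonomous run continues from where training left off.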

Fig. 4 Demonstrations of chaotic attractor emulations: (a) Lorenz attractor, (b) Mackey–Glass system, (c) Rössler attractor, (d) Hénon map. The dotted line marks the time step at which the system is switched from the teacher-forced state to the autonomous state. On the right, delayed phase diagrams of the learned dynamics are shown.

Here, we employ the following target time series from chaotic attractors: (i) Lorenz attractor,

with \((a,b,c)=(10,28,8/3)\), (ii) the chaotic attractor of the Mackey–Glass equation,

with \((\beta , \gamma , n) = (0.2, 0.1 , 10)\) and \(\tau = 17\), (iii) Rössler attractor,

with (0.2, 0.2, 5.7), and (iv) Hénon map,

Regarding (i)–(iii), the time series are obtained by using the fourth-order Runge–Kutta method with step size 0.02, and only x(t) is used as the target. For the time evolution of the quantum reservoir, we employ a fully connected transverse-field Ising model

where the coupling strengths \(J_{ij}\) are drawn at random from \([-0.5,0.5]\) and \(h=1.0\). The time interval and the number of virtual nodes are chosen to be \(\tau = 4.0\) and \(V=10\) so as to obtain the best performance. The first \(10^4\) steps are used for training. After the linear readout weights are determined, several thousand steps are predicted by letting the quantum reservoir evolve autonomously. The results are shown in Fig. 4 for (a) the Lorenz attractor, (b) the chaotic attractor of the Mackey–Glass system, (c) the Rössler attractor, and (d) the Hénon map. All these results show that training works well and that the prediction is successful for several hundred steps. Moreover, as can be seen from the delayed phase diagrams, the output of the quantum reservoir also successfully reconstructs the structures of these chaotic attractors.
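The setup of this experiment can be sketched in two pieces: the Lorenz target generated by fourth-order Runge–Kutta with step size 0.02 and \((a,b,c)=(10,28,8/3)\), and the reservoir propagator \(U_{\tau/V}\) with \(\tau = 4.0\) and \(V = 10\). We assume the standard Lorenz form, the Ising form \(H = \sum_{i<j} J_{ij} X_i X_j + h \sum_i Z_i\) with uniformly distributed couplings in \([-0.5, 0.5]\) and \(h = 1.0\), an illustrative five-qubit reservoir, and min–max rescaling of x(t) into [0, 1]; none of these is a transcription of the chapter's equations.

```python
import numpy as np


def lorenz(v, a=10.0, b=28.0, c=8.0 / 3.0):
    # assumed standard form: dx/dt = a(y - x), dy/dt = x(b - z) - y, dz/dt = xy - cz
    x, y, z = v
    return np.array([a * (y - x), x * (b - z) - y, x * y - c * z])


def rk4_step(f, v, h):
    k1 = f(v)
    k2 = f(v + 0.5 * h * k1)
    k3 = f(v + 0.5 * h * k2)
    k4 = f(v + h * k3)
    return v + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)


def lorenz_x_series(n_steps, h=0.02, v0=(1.0, 1.0, 1.0)):
    v = np.array(v0, dtype=float)
    out = np.empty(n_steps)
    for k in range(n_steps):
        v = rk4_step(lorenz, v, h)
        out[k] = v[0]               # only x(t) is used as the target
    return out


X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)


def site_op(op, site, n):
    out = np.ones((1, 1), dtype=complex)
    for q in range(n):
        out = np.kron(out, op if q == site else np.eye(2))
    return out


def ising_hamiltonian(n, rng, h=1.0, j_max=0.5):
    """Assumed form H = sum_{i<j} J_ij X_i X_j + h sum_i Z_i, J_ij ~ Uniform[-j_max, j_max]."""
    x_ops = [site_op(X, i, n) for i in range(n)]
    H = h * sum(site_op(Z, i, n) for i in range(n))
    for i in range(n):
        for j in range(i + 1, n):
            H = H + rng.uniform(-j_max, j_max) * (x_ops[i] @ x_ops[j])
    return H


def evolution(H, t):
    energies, vecs = np.linalg.eigh(H)
    return (vecs * np.exp(-1j * energies * t)) @ vecs.conj().T


raw = lorenz_x_series(5000)
series = (raw - raw.min()) / (raw.max() - raw.min())   # rescale into [0, 1] (our assumption)
rng = np.random.default_rng(3)
n, tau, n_virtual = 5, 4.0, 10
H = ising_hamiltonian(n, rng)
U_sub = evolution(H, tau / n_virtual)   # U_{tau/V}; V of these make up U_tau
```

Applying `U_sub` ten times reproduces \(U_\tau\), which is what temporal multiplexing exploits: the node values are read out after each of these sub-steps.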

5 Conclusion and Discussion

Here, we reviewed quantum reservoir computing and related approaches, namely the quantum extreme learning machine and quantum circuit learning. The idea of quantum reservoir computing comes from the spirit of reservoir computing, i.e., outsourcing information processing to natural physical systems. This idea is best suited to quantum machine learning on near-term quantum devices in the noisy intermediate-scale quantum (NISQ) era. Since reservoir computing uses a complex physical system as a feature space and constructs a model by simple linear regression, this approach would be a good way to understand the power of a quantum-enhanced feature space.

References

L. Appeltant, M.C. Soriano, G. Van der Sande, J. Danckaert, S. Massar, J. Dambre, B. Schrauwen, C.R. Mirasso, I. Fischer, Information processing using a single dynamical node as complex system. Nat. Commun. 2, 468 (2011)

R. Barends et al., Superconducting quantum circuits at the surface code threshold for fault tolerance. Nature 508, 500 (2014)

M. Benedetti et al., A generative modeling approach for benchmarking and training shallow quantum circuits. NPJ Quantum Inf. 5, 45 (2019a)

M. Benedetti et al., Adversarial quantum circuit learning for pure state approximation. New J. Phys. 21 (2019b)

V. Bergholm et al., PennyLane: automatic differentiation of hybrid quantum-classical computations (2018), arXiv:1811.04968

I. Bloch, J. Dalibard, S. Nascimbène, Quantum simulations with ultracold quantum gases. Nat. Phys. 8, 267 (2012)

S. Boixo, T.F. Rønnow, S.V. Isakov, Z. Wang, D. Wecker, D.A. Lidar, J.M. Martinis, M. Troyer, Evidence for quantum annealing with more than one hundred qubits. Nat. Phys. 10, 218 (2014)

S. Boixo et al., Characterizing quantum supremacy in near-term devices. Nat. Phys. 14, 595 (2018)

D. Brunner, M.C. Soriano, C.R. Mirasso, I. Fischer, Parallel photonic information processing at gigabyte per second data rates using transient states. Nat. Commun. 4, 1364 (2013)

K. Caluwaerts, J. Despraz, A. Işçen, A.P. Sabelhaus, J. Bruce, B. Schrauwen, V. SunSpiral, Design and control of compliant tensegrity robots through simulations and hardware validation. J. R. Soc. Interface 11, 20140520 (2014)

J. Chen, H.I. Nurdin, Learning nonlinear input-output maps with dissipative quantum systems. Quantum Inf. Process. 18, 198 (2019)

H. Chen et al., Universal discriminative quantum neural networks. Quantum Mach. Intell. 3, 1 (2021)

Z.-Y. Chen et al., VQNet: library for a quantum-classical hybrid neural network (2019), arXiv:1901.09133

J.I. Cirac, P. Zoller, Goals and opportunities in quantum simulation. Nat. Phys. 8, 264 (2012)

J. Dambre, D. Verstraeten, B. Schrauwen, S. Massar, Information processing capacity of dynamical systems. Sci. Rep. 2, 514 (2012)

Y. Du et al., Implementable quantum classifier for nonlinear data (2018), arXiv:1809.06056

Y. Du et al., The expressive power of parameterized quantum circuits. Phys. Rev. Res. 2 (2020)

E. Farhi, H. Neven, Classification with quantum neural networks on near term processors (2018), arXiv:1802.06002

E. Farhi, J. Goldstone, S. Gutmann, J. Lapan, A. Lundgren, D. Preda, A quantum adiabatic evolution algorithm applied to random instances of an NP-complete problem. Science 292, 472 (2001)

C. Fernando, S. Sojakka, Pattern Recognition in a Bucket. Lecture Notes in Computer Science, vol. 2801 (Springer, 2003), p. 588

R.P. Feynman, Simulating physics with computers. Int. J. Theor. Phys. 21, 467 (1982)

K. Fujii, Quantum Computation with Topological Codes: From Qubit to Topological Fault-Tolerance. SpringerBriefs in Mathematical Physics (Springer, Berlin, 2015)

K. Fujii, S. Tamate, Computational quantum-classical boundary of complex and noisy quantum systems. Sci. Rep. 6, 25598 (2016)

K. Fujii, K. Nakajima, Harnessing disordered-ensemble quantum dynamics for machine learning. Phys. Rev. Appl. 8 (2017)

K. Fujii, H. Kobayashi, T. Morimae, H. Nishimura, S. Tamate, S. Tani, Power of Quantum Computation with Few Clean Qubits, in Proceedings of 43rd International Colloquium on Automata, Languages, and Programming (ICALP 2016) (2016), pp. 13:1–13:14

I.M. Georgescu, S. Ashhab, F. Nori, Quantum simulation. Rev. Mod. Phys. 86, 153 (2014)

S. Ghosh et al., Quantum reservoir processing. NPJ Quantum Inf. 5, 35 (2019a)

S. Ghosh, T. Paterek, T.C.H. Liew, Quantum neuromorphic platform for quantum state preparation. Phys. Rev. Lett. 123 (2019b)

S. Ghosh et al., Reconstructing quantum states with quantum reservoir networks. IEEE Trans. Neural Netw. Learn. Syst. 1–8 (2020)

I. Glasser, N. Pancotti, J.I. Cirac, From probabilistic graphical models to generalized tensor networks for supervised learning (2018), arXiv:1806.05964

T. Goto, Q.H. Tran, K. Nakajima, Universal approximation property of quantum feature maps (2020), arXiv:2009.00298

A.W. Harrow, J.C. Napp, Low-depth gradient measurements can improve convergence in variational hybrid quantum-classical algorithms. Phys. Rev. Lett. 126, 140502 (2021)

H. Hauser, A.J. Ijspeert, R.M. Füchslin, R. Pfeifer, W. Maass, Towards a theoretical foundation for morphological computation with compliant bodies. Biol. Cybern. 105, 355 (2011)

V. Havlicek et al., Supervised learning with quantum enhanced feature spaces. Nature 567, 209 (2019)

G.-B. Huang, Q.-Y. Zhu, C.-K. Siew, Extreme learning machine: theory and applications. Neurocomputing 70, 489 (2006)

W. Huggins et al., Towards quantum machine learning with tensor networks. Quantum Sci. Technol. 4 (2019)

H. Jaeger, H. Haas, Harnessing nonlinearity: predicting chaotic systems and saving energy in wireless communication. Science 304, 78 (2004)

T. Kadowaki, H. Nishimori, Quantum annealing in the transverse Ising model. Phys. Rev. E 58, 5355 (1998)

J. Kelly et al., State preservation by repetitive error detection in a superconducting quantum circuit. Nature 519, 66 (2015)

N. Killoran et al., Continuous-variable quantum neural networks. Phys. Rev. Res. 1 (2019)

Kusumoto et al., Experimental quantum kernel trick with nuclear spins in a solid. NPJ Quantum Inf. 7, 94 (2021)

A. Kutvonen, K. Fujii, T. Sagawa, Optimizing a quantum reservoir computer for time series prediction. Sci. Rep. 10, 14687 (2020)

L. Larger, M.C. Soriano, D. Brunner, L. Appeltant, J.M. Gutierrez, L. Pesquera, C.R. Mirasso, I. Fischer, Photonic information processing beyond Turing: an optoelectronic implementation of reservoir computing. Opt. Express 20, 3241 (2012)

Y. LeCun, Y. Bengio, G. Hinton, Deep learning. Nature 521, 436 (2015)

J.-G. Liu, L. Wang, Phys. Rev. A 98 (2018)

W. Maass, T. Natschläger, H. Markram, Real-time computing without stable states: a new framework for neural computation based on perturbations. Neural Comput. 14, 2531 (2002)

J.R. McClean et al., Barren plateaus in quantum neural network training landscapes. Nat. Commun. 9, 4812 (2018)

K. Mitarai, K. Fujii, Methodology for replacing indirect measurements with direct measurements. Phys. Rev. Res. 1 (2019)

K. Mitarai et al., Quantum circuit learning. Phys. Rev. A 98 (2018)

T. Morimae, K. Fujii, J.F. Fitzsimons, Hardness of classically simulating the one-clean-qubit model. Phys. Rev. Lett. 112 (2014)

K. Nakajima, H. Hauser, R. Kang, E. Guglielmino, D.G. Caldwell, R. Pfeifer, Computing with a muscular-hydrostat system, in Proceedings of 2013 IEEE International Conference on Robotics and Automation (ICRA), vol. 1496 (2013a)

K. Nakajima, H. Hauser, R. Kang, E. Guglielmino, D.G. Caldwell, R. Pfeifer, A soft body as a reservoir: case studies in a dynamic model of octopus-inspired soft robotic arm. Front. Comput. Neurosci. 7, 1 (2013b)

K. Nakajima, T. Li, H. Hauser, R. Pfeifer, Exploiting short-term memory in soft body dynamics as a computational resource. J. R. Soc. Interface 11, 20140437 (2014)

K. Nakajima, H. Hauser, T. Li, R. Pfeifer, Information processing via physical soft body. Sci. Rep. 5, 10487 (2015)

K. Nakajima et al., Boosting computational power through spatial multiplexing in quantum reservoir computing. Phys. Rev. Appl. 11 (2019)

M. Negoro et al., Machine learning with controllable quantum dynamics of a nuclear spin ensemble in a solid (2018), arXiv:1806.10910

M.A. Nielsen, I.L. Chuang, Quantum Computation and Quantum Information (Cambridge University Press, Cambridge, 2010)

Y. Paquot, F. Duport, A. Smerieri, J. Dambre, B. Schrauwen, M. Haelterman, S. Massar, Optoelectronic reservoir computing. Sci. Rep. 2, 287 (2012)

J. Preskill, Quantum computing in the NISQ era and beyond. Quantum 2, 79 (2018)

M. Rabinovich, R. Huerta, G. Laurent, Transient dynamics for neural processing. Science 321, 48 (2008)

J. Romero, A. Aspuru-Guzik, Variational quantum generators: Generative adversarial quantum machine learning for continuous distributions (2019), arXiv:1901.00848

T.F. Rønnow, Z. Wang, J. Job, S. Boixo, S.V. Isakov, D. Wecker, J.M. Martinis, D.A. Lidar, M. Troyer, Defining and detecting quantum speedup. Science 345, 420 (2014)

M. Schuld et al., Evaluating analytic gradients on quantum hardware. Phys. Rev. A 99 (2019)

M. Schuld et al., Circuit-centric quantum classifiers. Phys. Rev. A 101 (2020)

P.W. Shor, Algorithms for quantum computation: Discrete logarithms and factoring, in Proceedings of the 35th Annual Symposium on Foundations of Computer Science (1994), p. 124

H. Situ et al., Quantum generative adversarial network for generating discrete data. Inf. Sci. 538, 193 (2020)

G.R. Steinbrecher et al., Quantum optical neural networks. NPJ Quantum Inf. 5, 60 (2019)

A.Z. Stieg, A.V. Avizienis, H.O. Sillin, C. Martin-Olmos, M. Aono, J.K. Gimzewski, Emergent criticality in complex Turing B-type atomic switch networks. Adv. Mater. 24, 286 (2012)

Q.H. Tran, K. Nakajima, Higher-order quantum reservoir computing (2020), arXiv:2006.08999

K. Vandoorne, P. Mechet, T.V. Vaerenbergh, M. Fiers, G. Morthier, D. Verstraeten, B. Schrauwen, J. Dambre, P. Bienstman, Experimental demonstration of reservoir computing on a silicon photonics chip. Nat. Commun. 5, 3541 (2014)

D. Verstraeten, B. Schrauwen, M. D’Haene, D. Stroobandt, An experimental unification of reservoir computing methods. Neural Netw. 20, 391 (2007)

J.G. Vidal, D.O. Theis, Calculus on parameterized quantum circuits (2018), arXiv:1812.06323

C.M. Wilson et al., Quantum kitchen sinks: an algorithm for machine learning on near-term quantum computers (2018), arXiv:1806.08321

D. Woods, T.J. Naughton, Photonic neural networks. Nat. Phys. 8, 257 (2012)

J. Zeng et al., Learning and inference on generative adversarial quantum circuits. Phys. Rev. A 99 (2019)

D. Zhu et al., Training of quantum circuits on a hybrid quantum computer. Sci. Adv. 5, 9918 (2019)

Acknowledgements

KF is supported by KAKENHI No.16H02211, JST PRESTO JPMJPR1668, JST ERATO JPMJER1601, and JST CREST JPMJCR1673. KN is supported by JST PRESTO Grant Number JPMJPR15E7, Japan, by JSPS KAKENHI Grant Numbers JP18H05472, JP16KT0019, and JP15K16076. KN would like to acknowledge Dr. Quoc Hoan Tran for his helpful comments. This work is supported by MEXT Quantum Leap Flagship Program (MEXT Q-LEAP) Grant No. JPMXS0118067394.

Copyright information

© 2021 Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter

Fujii, K., Nakajima, K. (2021). Quantum Reservoir Computing: A Reservoir Approach Toward Quantum Machine Learning on Near-Term Quantum Devices. In: Nakajima, K., Fischer, I. (eds) Reservoir Computing. Natural Computing Series. Springer, Singapore. https://doi.org/10.1007/978-981-13-1687-6_18

DOI: https://doi.org/10.1007/978-981-13-1687-6_18

Publisher Name: Springer, Singapore

Print ISBN: 978-981-13-1686-9

Online ISBN: 978-981-13-1687-6

eBook Packages: Computer Science, Computer Science (R0)