Abstract

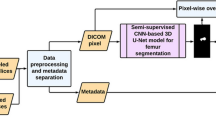

This chapter addresses the problem of segmenting the proximal femur in 3D MR images. We propose a deeply supervised 3D U-net-like fully convolutional network for this task. After training, our network can directly map a whole volumetric image to its volume-wise labels. Inspired by previous work, multi-level deep supervision is designed to alleviate the potential gradient vanishing problem during training. It is also used together with partial transfer learning to boost the training efficiency when only a small set of labeled training data is available. The present method was validated on 20 3D MR images of femoroacetabular impingement patients. The experimental results demonstrate the efficacy of the present method.

Keywords

- MRI

- Segmentation

- Femoroacetabular impingement (FAI)

- Proximal femur

- Deep learning

- Fully Convolutional Network (FCN)

- Deep supervision

6.1 Introduction

Femoroacetabular impingement (FAI) is a cause of hip pain in adults and has recently been recognized as one of the key risk factors that may lead to the development of early cartilage and labral damage [1] and as a possible precursor of hip osteoarthritis [2]. Several studies [2, 3] have shown that the prevalence of FAI in young populations with hip complaints is high. Although a number of imaging modalities can be used to diagnose and assess FAI, MR imaging involves no ionizing radiation and is regarded as the standard tool for FAI diagnosis [4]. While manual analysis of a series of 2D MR images is feasible, automated segmentation of the proximal femur in MR images will greatly facilitate the use of MR images for FAI surgical planning and simulation.

The topic of automated MR image segmentation of the hip joint has been addressed by a few studies which relied on atlas-based segmentation [5], graph cut [6], active model [7, 8], or statistical shape models [9]. While these methods reported encouraging results for bone segmentation, further improvements are needed. For example, Arezoomand et al. [8] recently developed a 3D active model framework for segmentation of proximal femur in MR images, and they reported an average recall of 0.88.

Recently, machine learning-based methods, especially those based on convolutional neural networks (CNNs), have been applied successfully in natural image processing [10, 11] as well as in medical image analysis [12–15]. For example, Prasoon et al. [12] developed a method that uses a triplanar CNN to autonomously learn features from images for knee cartilage segmentation. More recently, 3D volume-to-volume segmentation networks were introduced, including 3D U-Net [13], 3D V-Net [14], and a 3D deeply supervised network [15].

In this chapter, we propose a deeply supervised 3D U-net-like fully convolutional network (FCN) for segmentation of the proximal femur in 3D MR images. After training, our network can directly map a whole volumetric image to its volume-wise labels. Inspired by previous work [13, 15], multi-level deep supervision is designed to alleviate the potential gradient vanishing problem during training. It is also used together with partial transfer learning to boost the training efficiency when only a small set of labeled training data is available.

6.2 Method

Figure 6.1 illustrates the architecture of our proposed deeply supervised 3D U-net-like network, which is inspired by the 3D U-net [13]. Like the 3D U-net, our network consists of two parts, i.e., the encoder part (contracting path) and the decoder part (expansive path). The encoder part focuses on analysis and feature representation learning from the input data, while the decoder part generates segmentation results, relying on the features learned by the encoder part. Shortcut connections are established between layers of equal resolution in the encoder and decoder paths. The difference between our network and the 3D U-net is the introduction of multi-level deep supervision, which provides additional feedback to the hidden layers during back-propagation.

Previous studies have shown that small convolutional kernels benefit both training and performance. In our deeply supervised network, all convolutional layers use a kernel size of 3 × 3 × 3 and a stride of 1, and all max pooling layers use a kernel size of 2 × 2 × 2 and a stride of 2. In the convolutional and deconvolutional blocks of our network, batch normalization (BN) [16] and rectified linear units (ReLU) are adopted to speed up training and to enhance gradient back-propagation.
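To make the effect of these kernel and stride choices concrete, the following minimal sketch tracks the spatial size of a feature map along one axis as it passes through the encoder. It assumes zero padding of 1 voxel for each convolution and three pooling levels; neither is stated in the text, so both are illustrative assumptions, as are the function names.

```python
def conv_output_size(size, kernel=3, stride=1, padding=1):
    """Size along one axis after a convolution (padding=1 is an assumption)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_output_size(size, kernel=2, stride=2):
    """Size along one axis after max pooling with a 2-voxel kernel and stride 2."""
    return (size - kernel) // stride + 1


def encoder_sizes(input_size=64, num_levels=3):
    """Feature-map sizes at each encoder level (num_levels is hypothetical)."""
    sizes = [input_size]
    size = input_size
    for _ in range(num_levels):
        size = conv_output_size(size)   # 3x3x3 convolution keeps the size
        size = pool_output_size(size)   # 2x2x2 pooling halves the size
        sizes.append(size)
    return sizes


print(encoder_sizes())  # prints [64, 32, 16, 8]
```

Under these assumptions, a 64 × 64 × 64 input patch is halved at every pooling step while the convolutions leave the resolution unchanged, which is what allows shortcut connections between encoder and decoder layers of equal resolution.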

6.2.1 Multi-level Deep Supervision

Training a deep neural network is challenging. Because of vanishing gradients, the final loss cannot be efficiently back-propagated to the shallow layers, and the problem is more severe in 3D when only a small set of annotated data is available. To address this issue, we inject two branch classifiers into the network in addition to the classifier of the main network. Specifically, we divide the decoder path of our network into three different levels: lower layers, middle layers, and upper layers. Deconvolutional blocks are injected into the lower and middle layers such that the low-level and middle-level features are upscaled to generate segmentation predictions with the same resolution as the input data. As a result, besides the classifier from the upper final layer (“UpperCls” in Fig. 6.1), we also have two branch classifiers in the lower and middle layers (“LowerCls” and “MidCls” in Fig. 6.1, respectively). With the losses calculated from the predictions of classifiers at different layers, more effective gradient back-propagation can be achieved by direct supervision of the hidden layers.

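Let $W$ be the weights of the main network and $w_l$, $w_m$, $w_u$ be the weights of the three classifiers “LowerCls,” “MidCls,” and “UpperCls,” respectively. Then the cross-entropy loss function of a classifier is:

$$\mathcal{L}_c(\chi; W, w_c) = \sum_{x_i \in \chi} -\log p\left(y_i = t(x_i) \mid x_i; W, w_c\right)$$

where $c \in \{l, m, u\}$ represents the index of the classifiers; $\chi$ represents the training samples; and $p(y_i = t(x_i) \mid x_i; W, w_c)$ is the probability of the target class label $t(x_i)$ corresponding to sample $x_i \in \chi$.

The total loss function of our deeply supervised 3D network is:

$$\mathcal{L}(\chi; W, w_l, w_m, w_u) = \sum_{c \in \{l, m, u\}} \alpha_c \, \mathcal{L}_c(\chi; W, w_c) + \lambda \left( \psi(W) + \sum_{c \in \{l, m, u\}} \psi(w_c) \right)$$

where $\mathcal{L}_c$ is the cross-entropy loss of classifier $c$; $\psi(\cdot)$ is the regularization term ($L_2$ norm in our experiment) with hyper-parameter $\lambda$; and $\alpha_l$, $\alpha_m$, $\alpha_u$ are the weights of the associated classifiers.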
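As an illustration of how the weighted classifier losses and the regularization term combine, the sketch below evaluates the total loss for a handful of samples in plain Python. It uses the hyper-parameter values reported in the training section; the function names, the flat list of weights, and the use of the squared L2 norm (the usual weight-decay form) are assumptions made for this example, not details taken from the authors' implementation.

```python
import math

# Classifier weights and regularization strength as reported in the chapter.
ALPHAS = {"l": 0.33, "m": 0.67, "u": 1.0}
LAMBDA = 0.005


def cross_entropy(target_probs):
    """Sum of -log p over samples, where p is the probability of the true label."""
    return sum(-math.log(p) for p in target_probs)


def l2_penalty(weights):
    """Squared L2 norm of a flat list of weights (assumed form of psi)."""
    return sum(w * w for w in weights)


def total_loss(probs_by_classifier, all_weights, alphas=ALPHAS, lam=LAMBDA):
    """Weighted sum of the three classifier losses plus the regularization term."""
    supervised = sum(
        alphas[c] * cross_entropy(probs_by_classifier[c]) for c in alphas
    )
    return supervised + lam * l2_penalty(all_weights)


# Hypothetical predictions: each classifier assigns probability 0.5 to the
# true label of a single sample, and the network has two weights.
example_probs = {"l": [0.5], "m": [0.5], "u": [0.5]}
print(total_loss(example_probs, [1.0, 2.0]))
```

Because the upper classifier has the largest weight, its errors dominate the gradient, while the lower and middle classifiers contribute smaller but direct supervision signals to the hidden layers.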

By doing this, classifiers in different layers can also take advantage of multi-scale context, which has been demonstrated in previous work on segmentation of 3D liver CT and 3D heart MR images [15]. This is based on the observation that lower layers have smaller receptive fields, while upper layers have larger receptive fields. As a result, multi-scale context information can be learned by our network, which then facilitates the target segmentation in the test stage.

6.2.2 Partial Transfer Learning

Training a deep neural network from scratch is difficult when annotated data are limited. Deep neural networks require a large amount of annotated data, which is not always available, although data augmentation can partially address the problem. Furthermore, randomly initialized parameters make it more difficult to search for an optimal solution in a high-dimensional space. Transfer learning from an existing network that has been trained on a large dataset is a common way to alleviate this difficulty. Usually, the new dataset should be similar or related to the dataset and tasks used in the pre-training stage. For medical image applications, however, it is difficult to find an off-the-shelf 3D model trained on a large set of related data for related tasks.

Previous studies [17] demonstrated that the weights of lower layers in a deep neural network are generic, while those of higher layers are more task-specific. Thus, the encoder path of our neural network can be transferred from models pre-trained on a totally different dataset. In the field of computer vision, many models, e.g., VGG16 [19] and GoogLeNet [20], have been trained on very large datasets such as ImageNet [18]. Unfortunately, most of these models were trained on 2D images. Freely accessible 3D pre-trained models are rare in both computer vision and medical image analysis.

C3D [21] is one of the few 3D models that has been trained on a very large dataset in the field of computer vision. More specifically, C3D is trained on the Sports-1M dataset to learn spatiotemporal features for action recognition. The Sports-1M dataset consists of 1.1 million sports videos, and each video belongs to one of 487 sports categories.

In our experiment, the pre-trained C3D model was used to initialize the encoder part of our neural network, while the decoder part was randomly initialized.
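The idea of partial transfer learning can be sketched as a weight-initialization routine that copies pre-trained values where a matching layer exists and falls back to random initialization elsewhere. The layer names, the flat weight lists, and the helper `init_weights` below are hypothetical and serve only to illustrate the principle; the Gaussian standard deviation of 0.01 is the value reported in the training section.

```python
import random


def init_weights(layer_sizes, pretrained, sigma=0.01, seed=0):
    """Initialize each layer from `pretrained` if available, else from N(0, sigma).

    layer_sizes: dict mapping layer name -> number of weights (flattened).
    pretrained:  dict mapping layer name -> list of pre-trained weights.
    """
    rng = random.Random(seed)
    weights = {}
    for name, count in layer_sizes.items():
        source = pretrained.get(name)
        if source is not None and len(source) == count:
            # Encoder layer with a matching pre-trained counterpart: copy it.
            weights[name] = list(source)
        else:
            # Decoder or classifier layer: random Gaussian initialization.
            weights[name] = [rng.gauss(0.0, sigma) for _ in range(count)]
    return weights


# Hypothetical two-layer network: one encoder layer, one decoder layer.
layer_sizes = {"encoder/conv1": 3, "decoder/deconv1": 2}
pretrained = {"encoder/conv1": [0.1, 0.2, 0.3]}
weights = init_weights(layer_sizes, pretrained)
print(weights["encoder/conv1"])  # prints [0.1, 0.2, 0.3]
```

Checking the weight count before copying guards against silently loading a pre-trained layer whose shape does not match the new network.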

6.2.3 Implementation Details

The proposed network was implemented in Python using the TensorFlow framework and trained on a desktop with a 3.6 GHz Intel(R) i7 CPU and a GTX 1080 Ti graphics card with 11 GB of GPU memory.

6.3 Experiments and Results

6.3.1 Dataset and Preprocessing

We evaluated our method on a set of unilateral hip joint data containing 20 T1-weighted MR images of FAI patients. We randomly split the dataset into two parts: ten images for training and the other ten for testing. Data augmentation was used to enlarge the training set by rotating each image by 90, 180, and 270 degrees around the z-axis and flipping it horizontally (along the y-axis), i.e., four orientations per image, each with and without flipping. This yielded 80 training images in total.
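The augmentation count can be reproduced with a short NumPy sketch. It assumes that volumes are stored as arrays with axes ordered (x, y, z), so that a rotation around the z-axis is a rotation in the plane of the first two axes, and that the flip is applied along the second axis; the function name `augment` is illustrative.

```python
import numpy as np


def augment(volume):
    """Return 8 variants of a volume: 4 rotations about z, each with and without a flip."""
    variants = []
    for k in range(4):  # 0, 90, 180, 270 degrees
        rotated = np.rot90(volume, k=k, axes=(0, 1))  # rotate in the x-y plane
        variants.append(rotated)
        variants.append(np.flip(rotated, axis=1))     # flip along the y-axis
    return variants


# A small synthetic volume stands in for an MR image.
volume = np.arange(2 * 3 * 4).reshape(2, 3, 4)
variants = augment(volume)
print(len(variants))       # prints 8
print(10 * len(variants))  # prints 80, the number of training images after augmentation
```

The corresponding label volumes would need to be transformed in exactly the same way so that image and annotation stay aligned.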

6.3.2 Training Patches Preparation

All sub-volume patches fed to our neural network have a size of 64 × 64 × 64. We randomly cropped sub-volume patches from training samples whose sizes are about 300 × 200 × 100. During training, the 80 training volumetric images were randomly shuffled in every epoch. We then randomly sampled patches with a batch size of 2 from each volumetric image n times (n = 5). Each sampled patch was normalized to zero mean and unit variance before being fed into the network.
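A minimal sketch of the patch sampling and normalization step is given below, using NumPy. The function name `sample_patch`, the small constant added to the standard deviation, and the choice to return the crop position (so that the label volume can be cropped identically) are assumptions for illustration.

```python
import numpy as np


def sample_patch(volume, rng, size=64):
    """Randomly crop a cubic patch and normalize it to zero mean and unit variance."""
    # Pick a random corner such that the patch fits inside the volume.
    starts = tuple(int(rng.integers(0, dim - size + 1)) for dim in volume.shape)
    window = tuple(slice(s, s + size) for s in starts)
    patch = volume[window].astype(np.float32)
    # The small epsilon avoids division by zero for constant patches.
    patch = (patch - patch.mean()) / (patch.std() + 1e-8)
    return patch, starts


rng = np.random.default_rng(0)
volume = rng.random((80, 70, 66))  # synthetic stand-in for an MR volume
patch, starts = sample_patch(volume, rng)
print(patch.shape)  # prints (64, 64, 64)
```

Normalizing each patch individually, rather than the whole volume, makes the network input insensitive to local intensity offsets between scans.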

6.3.3 Training

We trained two different models, one with partial transfer learning and the other without. More specifically, to train the model with partial transfer learning, we initialized the weights of the encoder part of the network from the pre-trained C3D [21] model and the weights of the other parts from a Gaussian distribution (μ = 0, σ = 0.01). In contrast, for the model without partial transfer learning, all weights were initialized from a Gaussian distribution (μ = 0, σ = 0.01).

Each model was trained for 14,000 iterations, and the weights were updated by the stochastic gradient descent (SGD) algorithm (momentum = 0.9, weight decay = 0.005). The initial learning rate was $1 \times 10^{-3}$ and was halved every 3000 training iterations. The hyper-parameters were chosen as follows: $\lambda = 0.005$, $\alpha_l = 0.33$, $\alpha_m = 0.67$, and $\alpha_u = 1.0$.
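Reading the schedule as a step decay that halves the learning rate every 3000 iterations, it can be written as a one-line function. This interpretation and the function name `learning_rate` are assumptions; the initial rate, the interval, and the total of 14,000 iterations come from the text.

```python
def learning_rate(iteration, base_lr=1e-3, halve_every=3000):
    """Step-decay schedule: the rate is halved after every `halve_every` iterations."""
    return base_lr * 0.5 ** (iteration // halve_every)


# Learning rate at the start of each decay stage within 14,000 iterations.
for it in range(0, 14000, 3000):
    print(it, learning_rate(it))
```

Under this reading, the rate is halved four times during training, so the final iterations run at one sixteenth of the initial learning rate.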

6.3.4 Test and Evaluation

Our trained models can estimate the labels of an arbitrarily sized volumetric image. Given a test volumetric image, we extracted overlapping sub-volume patches of size 64 × 64 × 64 and fed them to the trained network to obtain prediction probability maps. For voxels covered by several patches, the final probability was the average of the probabilities predicted by the overlapping patches, and the averaged maps were then used to derive the final segmentation results. After that, we applied morphological operations to remove isolated small volumes and internal holes, as there is only one femur in each test image. When implemented in Python using the TensorFlow framework, our network took about 2 min to process one volume of size 300 × 200 × 100.
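The overlapping-patch inference described above can be sketched with NumPy as follows. The stride of 32 voxels is an assumption, since the amount of overlap is not stated, and `predict_patch` is a hypothetical callable standing in for the trained network. The sketch assumes that every volume dimension is at least the patch size and that the stride does not exceed the patch size.

```python
import itertools

import numpy as np


def window_starts(dim, size, stride):
    """Start indices of windows covering an axis of length dim (assumes dim >= size)."""
    starts = list(range(0, dim - size + 1, stride))
    if starts[-1] + size < dim:
        starts.append(dim - size)  # extra window flush with the far border
    return starts


def predict_volume(volume, predict_patch, size=64, stride=32):
    """Average patch-wise probability maps over all overlapping windows."""
    prob_sum = np.zeros(volume.shape, dtype=np.float64)
    counts = np.zeros(volume.shape, dtype=np.float64)
    axis_starts = [window_starts(dim, size, stride) for dim in volume.shape]
    for corner in itertools.product(*axis_starts):
        window = tuple(slice(c, c + size) for c in corner)
        prob_sum[window] += predict_patch(volume[window])
        counts[window] += 1.0
    # Each voxel is divided by the number of patches that covered it.
    return prob_sum / counts


# Toy check with a stand-in "network" that returns a constant probability.
volume = np.zeros((100, 80, 70))
probabilities = predict_volume(volume, lambda patch: np.full(patch.shape, 0.7))
print(probabilities.shape)  # prints (100, 80, 70)
```

Thresholding the averaged map (for a binary problem, typically at 0.5) would then yield the label volume that is passed to the morphological post-processing.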

The segmented results were compared with the associated ground truth segmentation, which was obtained via semiautomatic segmentation using the commercial software package Amira. Amira was also used to extract surface models from the automatic segmentation results and the ground truth segmentation. For each test image, we then evaluated the distance between the surface models extracted from the different segmentations as well as volume overlap measurements, including the Dice overlap coefficient [22], the Jaccard coefficient [22], precision, and recall.
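For reference, the four volume overlap measurements can be computed from binary masks as in the sketch below. It uses the standard definitions in terms of true positives (TP), false positives (FP), and false negatives (FN); the function name `overlap_metrics` is illustrative, and the code assumes that neither mask is empty.

```python
import numpy as np


def overlap_metrics(pred, truth):
    """Dice, Jaccard, precision, and recall for two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.logical_and(pred, truth).sum())    # voxels labeled femur in both
    fp = int(np.logical_and(pred, ~truth).sum())   # predicted femur, truly background
    fn = int(np.logical_and(~pred, truth).sum())   # missed femur voxels
    return {
        "dice": 2 * tp / (2 * tp + fp + fn),
        "jaccard": tp / (tp + fp + fn),
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
    }


# Tiny 1D example: TP = 2, FP = 1, FN = 1.
metrics = overlap_metrics([1, 1, 1, 0], [0, 1, 1, 1])
print(metrics["jaccard"])  # prints 0.5
```

Dice and Jaccard are monotonically related (J = D / (2 − D)), so they rank segmentations identically, whereas precision and recall separate over-segmentation from under-segmentation.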

6.3.5 Results

Table 6.1 shows the segmentation results using the model trained with partial transfer learning. In comparison with manually annotated ground truth data, our model achieved an average surface distance of 0.22 mm, an average Dice coefficient of 0.987, an average Jaccard index of 0.974, an average precision of 0.991, and an average recall of 0.982. Figure 6.2 shows a segmentation example and the color-coded error distribution of the segmented surface model.

We also compared the results achieved by using the model with partial transfer learning with the one without partial transfer learning. The results are presented in Table 6.2, which clearly demonstrate the effectiveness of the partial transfer learning.

6.4 Conclusion

We have introduced a 3D U-net-like fully convolutional network with multi-level deep supervision and successfully applied it to the challenging task of automatic segmentation of the proximal femur in MR images. Multi-level deep supervision and partial transfer learning were used in our network to boost the training efficiency when only a small set of labeled 3D training data was available. The experimental results demonstrated the efficacy of the proposed network.

Ethics

The medical research ethics committee of Inselspital, University of Bern, Switzerland, approved the human protocol for this investigation. Informed consent for participation in the study was obtained.

References

1. Laborie L, Lehmann T, Engester I et al (2011) Prevalence of radiographic findings thought to be associated with femoroacetabular impingement in a population-based cohort of 2081 healthy young adults. Radiology 260:494–502

2. Leunig M, Beaulé P, Ganz R (2009) The concept of femoroacetabular impingement: current status and future perspectives. Clin Orthop Relat Res 467:616–622

3. Clohisy J, Knaus E, Hunt DM et al (2009) Clinical presentation of patients with symptomatic anterior hip impingement. Clin Orthop Relat Res 467:638–644

4. Perdikakis E, Karachalios T, Katonis P, Karantanas A (2011) Comparison of MR-arthrography and MDCT-arthrography for detection of labral and articular cartilage hip pathology. Skeletal Radiol 40:1441–1447

5. Xia Y, Fripp J, Chandra S, Schwarz R, Engstrom C, Crozier S (2013) Automated bone segmentation from large field of view 3D MR images of the hip joint. Phys Med Biol 58:7375–7390

6. Xia Y, Chandra S, Engstrom C, Strudwick M, Crozier S, Fripp J (2014) Automatic hip cartilage segmentation from 3D MR images using arc-weighted graph searching. Phys Med Biol 59:7245–7266

7. Gilles B, Magnenat-Thalmann N (2010) Musculoskeletal MRI segmentation using multi-resolution simplex meshes with medial representations. Med Image Anal 14:291–302

8. Arezoomand S, Lee WS, Rakhra K, Beaule P (2015) A 3D active model framework for segmentation of proximal femur in MR images. Int J CARS 10:55–66

9. Chandra S, Xia Y, Engstrom C et al (2014) Focused shape models for hip joint segmentation in 3D magnetic resonance images. Med Image Anal 18:567–578

10. Krizhevsky A, Sutskever I, Hinton G (2012) ImageNet classification with deep convolutional neural networks. In: Pereira F, Burges CJC, Bottou L, Weinberger KQ (eds) Advances in neural information processing systems, vol 25. Curran Associates, Inc., Red Hook, pp 1097–1105

11. Long J, Shelhamer E, Darrell T (2015) Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR 2015), pp 3431–3440, Boston

12. Prasoon A, Igel C, Petersen K et al (2013) Deep feature learning for knee cartilage segmentation using a triplanar convolutional neural network. In: Proceedings of the 16th international conference on medical image computing and computer assisted intervention (MICCAI 2013), vol 16(Pt 2), pp 246–253, Nagoya

13. Cicek O, Abdulkadir A, Lienkamp S, Brox T, Ronneberger O (2016) 3D U-net: learning dense volumetric segmentation from sparse annotation. In: Proceedings of the 19th international conference on medical image computing and computer assisted intervention (MICCAI 2016). LNCS, vol 9901, pp 424–432, Athens

14. Milletari F, Navab N, Ahmadi SA (2016) V-net: fully convolutional neural networks for volumetric medical image segmentation. In: Proceedings of the 2016 international conference on 3D vision (3DV). IEEE, pp 565–571, Stanford

15. Dou Q, Yu L, Chen H, Jin Y, Yang X, Qin J, Heng PA (2017) 3D deeply supervised network for automated segmentation of volumetric medical images. Med Image Anal 41:40–54

16. Ioffe S, Szegedy C (2015) Batch normalization: accelerating deep network training by reducing internal covariate shift. In: Proceedings of international conference on machine learning (ICML 2015), Lille

17. Yosinski J, Clune J, Bengio Y, Lipson H (2014) How transferable are features in deep neural networks? In: Advances in neural information processing systems, pp 3320–3328, Curran Associates, Inc.

18. Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L (2009) ImageNet: a large-scale hierarchical image database. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR 2009), Miami Beach

19. Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556

20. Szegedy C, Liu W, Jia Y et al (2015) Going deeper with convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR 2015), Boston. IEEE, pp 1–9

21. Tran D, Bourdev L, Fergus R, Torresani L, Paluri M (2015) Learning spatiotemporal features with 3D convolutional networks. In: Proceedings of the IEEE international conference on computer vision (ICCV 2015), pp 4489–4497, Santiago

22. Karasawa K, Oda M, Kitasaka T et al (2017) Multi-atlas pancreas segmentation: atlas selection based on vessel structure. Med Image Anal 39:18–28

Acknowledgements

This chapter was modified from the paper published by our group in the MICCAI 2017 Workshop on Machine Learning in Medical Imaging (Zeng and Zheng, MLMI@MICCAI 2017: 274–282). The related contents were reused with permission. This study was partially supported by the Swiss National Science Foundation via project 205321_163224/1.

© 2018 Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter

Zeng, G., Zheng, G. (2018). Deep Learning-Based Automatic Segmentation of the Proximal Femur from MR Images. In: Zheng, G., Tian, W., Zhuang, X. (eds) Intelligent Orthopaedics. Advances in Experimental Medicine and Biology, vol 1093. Springer, Singapore. https://doi.org/10.1007/978-981-13-1396-7_6

DOI: https://doi.org/10.1007/978-981-13-1396-7_6

Publisher Name: Springer, Singapore

Print ISBN: 978-981-13-1395-0

Online ISBN: 978-981-13-1396-7

eBook Packages: Biomedical and Life Sciences (R0)