Abstract

Advances in machine vision have opened up broad possibilities for biometric identification in everyday life. As inspection, surveillance and security checkpoints multiply, biometric systems are more important than ever. Iris recognition is one of the main biometric approaches to human identification and has become an active subject in both research and practical applications. Because the features of the iris are unique to each person, this kind of recognition identifies individuals very effectively. Much research in the literature addresses iris localization, image description and iris recognition. This paper presents a method for extracting iris features so that the resulting dataset can be analyzed. A neural network then uses this dataset to classify iris patterns, and it is trained with an adaptive learning strategy. Simulation results show that the proposed identification system achieves 95% accuracy under normal conditions and 88% under noisy conditions.

Keywords

- Iris recognition

- Feature extraction

- Multilayer perceptrons

- Gaussian noise

- Walsh Hadamard transform

- Neural network

1 Introduction

Biometric recognition is one of the main tools for identification in the world today. Millions of people pass through border crossings and checkpoints every day, and because manual inspection is exhausting and error-prone, biometric systems can be used instead. Biometrics measures biological characteristics, such as fingerprints, iris pattern, facial structure or hand geometry, or behavioral characteristics, such as gait or voice, and uses them to identify people automatically. Ideally, these characteristics should be unique to a person, constant over time and easy to measure.

Human iris recognition is one such biometric process. Its advantages include fast matching, strong resistance to forgery and the stability of the iris as a human organ. Iris texture is useful as a biometric feature even without examining its internal variability. The eye is an internal organ that is visible externally, so it is easy to photograph. Fingerprints, by comparison, lie on the outer skin and are more susceptible to damage and change. Moreover, changing the physical texture of the iris carries a risk of harm to a person's vision. So, as long as the person does not wear lenses with specific characteristics, the iris texture presented to the system can be considered valid. The effect of aging on the iris as a biometric is an active research area [1, 2] but is outside the scope of this research.

Most iris recognition methods begin by localizing the iris and marking any occluding artifacts. In an image of the eye, the iris is the circular region between the sclera and the pupil. Eyelids, eyelashes and bright reflections may block parts of this region, so the iris should be normalized into a form that is better suited to describing its characteristics. Features are usually produced by image filtering, and the normalized form is a rectangular image that contains the iris without any obstruction. Using a Neural Network (NN), this study offers a prototype of a robust iris pattern recognition system that classifies a number of different people. Finally, the proposed system is evaluated with and without noise.

2 Related Work

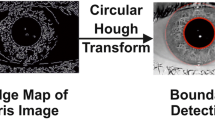

Surveillance cameras transmit images to a system, and after pre-processing these images are ready for feature extraction. There are many ways to extract features from images. The most common are speeded-up robust features (SURF), the Haar wavelet, the color histogram, the local binary pattern, the Histogram of Oriented Gradients (HOG) and principal component analysis. In this section, we review existing systems in the field of biometric iris recognition. For iris localization, methods such as the circular Hough transform (HT) [3] and the integro-differential operator [4] have been presented. For iris feature extraction, methods include the two-dimensional Haar wavelet transform, Gabor filters and the Daubechies wavelet transform. For recognition, classification algorithms such as NN, Bayesian classifiers, deep learning and the Support Vector Machine (SVM) have been used.

Umer et al. [5] introduced an Iris Recognition System (IRS) with improved performance that uses a new multiscale feature extraction method. The system identifies people in four stages. First, a fast method determines the position of the iris. Second, only part of the iris image is used for authentication, which avoids the occlusion problem. Third, multiscale morphologic features are extracted from the segmented iris image. Finally, an SVM is used as the classifier. According to the results of Falohun et al. [6], quadtree segmentation in an NN-based IRS is faster than the Hough transform in the same NN environment in the segmentation, training and detection stages. Nabti et al. [7] proposed a multi-resolution method for extracting iris features in which a special bank of Gabor filters is applied to the normalized iris image. Khedkar et al. [8], after localization and Hadamard feature extraction, applied different NN configurations, including the Multi-Layer Perceptron (MLP), the radial basis function network and SVM, while varying the parameters of each network. Gaussian and uniform noise were injected into all input features, and the MLP with one hidden layer performed better than all the other networks.

Mira et al. [9] proposed an IRS based on Mathematical Morphology (MM) operators. MM relates directly to the shape information of digital images in the spatial domain. Its main advantage in an IRS is the ability to extract essential texture, structural information and characteristics of the image. Liu et al. [10] acquired different domains in different directions and then combined them into a coherent three-dimensional image of the sample, in a process referred to as 4D-OCT. However, Optical Coherence Tomography (OCT) requires large, expensive equipment and trained operators. In addition, the sensors operate very close to the sample, and the slightest movement of the sample adds significant noise. Albadarneh et al. [11] evaluated a hybrid Gabor approach together with techniques such as the Discrete Cosine Transform (DCT) and HOG. The accuracy of HOG in identifying iris images reaches 20%, and the accuracy of the combined Gabor method reaches 76%. Tallapragada et al. [12] proposed an identification framework that combines SVM with a Hidden Markov Model (HMM). It performs well, reaching an accuracy of 93.2%, but was evaluated on only 100 samples.

3 Research Methodology

This section describes the structure of the proposed IRS in several steps. The proposed scheme offers a simplified procedure for iris image processing, feature extraction and MLP training for iris recognition. Partitioning of the iris image data and a data transform are presented and studied.

Figure 1 shows the overall architecture of the proposed IRS. The system first pre-processes the iris image and then recognizes the iris by classifying the extracted image data. Iris image acquisition involves lighting, positioning and physical imaging systems; the results are described in the discussion section.

Iris recognition comprises pre-processing, feature extraction and classification by the NN. When an iris is acquired, the iris image in the input sequence must be clear. The resolution of fine details and the sharpness of the iris-pupil and iris-sclera boundaries determine the quality of the iris image, so high-quality images should be chosen for recognition. In pre-processing, the iris image is read, resized and converted to grayscale. Image enhancement can be achieved by applying combined filters. The image is represented by a matrix whose grayscale values describe the iris. This matrix is converted to a dataset suitable for NN training. The IRS has two modes: training and test. First, the recognition system is trained on the grayscale values of the iris images; the NN is trained with all iris images. After training, in test mode, the NN classifies iris patterns and recognizes the person to whom each belongs.
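The pre-processing step described above (read, resize, convert to grayscale) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the luminance weights (0.299, 0.587, 0.114), nearest-neighbour resizing and the 64 x 64 target size are assumptions, and the enhancement filters are left out because the text does not specify them.

```python
def to_gray(rgb_image):
    """Convert rows of (R, G, B) tuples to rows of luminance values in 0..255."""
    # Assumed weights: the common ITU-R BT.601 luminance coefficients.
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in rgb_image]


def resize_nearest(gray, out_h, out_w):
    """Resize a 2-D list to out_h x out_w by nearest-neighbour sampling."""
    in_h, in_w = len(gray), len(gray[0])
    return [[gray[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
            for y in range(out_h)]


def preprocess(rgb_image, size=64):
    """Grayscale, resize to size x size (assumed value), scale to [0, 1]."""
    gray = resize_nearest(to_gray(rgb_image), size, size)
    return [[v / 255.0 for v in row] for row in gray]


# Tiny 2 x 2 checkerboard standing in for an eye image.
demo = [[(255, 255, 255), (0, 0, 0)],
        [(0, 0, 0), (255, 255, 255)]]
matrix = preprocess(demo, size=4)
```

The resulting `matrix` is the grayscale array from which features would then be extracted.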

As shown in Fig. 2, the proposed system uses the NN to recognize iris patterns. The normalized and enhanced iris image is represented by a two-dimensional array that contains the grayscale values of the iris texture. The features extracted from these values are the input signals of the NN.

Two hidden layers are used in the NN. In this structure, \( x_{1} ,x_{2} , \ldots ,x_{m} \) are the input feature values that describe the iris texture, and \( P_{1} ,P_{2} , \ldots ,P_{n} \) are the output patterns that identify the iris. The kth output of the NN is determined by Eq. 1 [13].
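A nested form of Eq. 1 that is consistent with the symbol definitions in the next paragraph can be written as below. It is a reconstruction from those definitions, under the assumption that every layer applies the same activation F to a weighted sum without bias terms:

```latex
\[
P_{k} = F\!\left( \sum_{j=1}^{h2} v_{jk}\,
        F\!\left( \sum_{i=1}^{h1} u_{ij}\,
        F\!\left( \sum_{l=1}^{m} w_{li}\, x_{l} \right) \right) \right)
\tag{1}
\]
```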

where \( v_{jk} \) are the weights between the second hidden layer and the output layer, \( u_{ij} \) are the weights between the two hidden layers, \( w_{li} \) are the weights between the input layer and the first hidden layer, F is the activation function of the neurons and \( x_{l} \) is the input signal. Here \( {\text{k}} = 1 ,\ldots , {\text{n}} \), \( {\text{j}} = 1 ,\ldots , {\text{h2}} \), \( {\text{i}} = 1 ,\ldots , {\text{h1}} \) and \( l = 1 ,\ldots , {\text{m}} \), where m is the number of neurons in the input layer, n is the number of neurons in the output layer, and h1 and h2 are the numbers of neurons in the first and second hidden layers, respectively. In terms of the hidden-layer outputs, the NN output signals \( P_{k} \) of Eq. 1 are determined as follows [13]:
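Written layer by layer, a form consistent with these definitions is the following, where \( y_{i} \) and \( y_{j} \) denote the hidden-layer outputs. It is a reconstruction rather than a quotation of [13], and the specific choice of F (for example a sigmoid) is left open because the text does not name it:

```latex
\[
P_{k} = F\!\Big( \sum_{j=1}^{h2} v_{jk}\, y_{j} \Big), \qquad
y_{j} = F\!\Big( \sum_{i=1}^{h1} u_{ij}\, y_{i} \Big), \qquad
y_{i} = F\!\Big( \sum_{l=1}^{m} w_{li}\, x_{l} \Big)
\]
```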

Here \( y_{i} \) and \( y_{j} \) are the output signals of the first and second hidden layers, respectively. Once the NN is set up, training of its parameters begins. The trained network is then used for iris recognition in test mode. A gradient-based learning algorithm with an adaptive learning rate is used here, which ensures convergence and accelerates learning. In addition, a momentum term is used to speed up the learning process.
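The forward computation through the two hidden layers can be sketched in a few lines. This is an illustrative sketch only: the sigmoid activation, the absence of bias terms and the uniform random initialization range are assumptions, and the helper names are hypothetical. The layer sizes (23 inputs, 20 and 10 hidden neurons, 250 outputs) are the ones reported in the results section.

```python
import math
import random


def sigmoid(z):
    """Assumed activation F; the text does not specify which function is used."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, f=sigmoid):
    """Apply f to the weighted sums; weights[a][b] links input a to neuron b."""
    n_out = len(weights[0])
    return [f(sum(inputs[a] * weights[a][b] for a in range(len(inputs))))
            for b in range(n_out)]


def forward(x, w, u, v):
    """Return (y_i, y_j, P): first hidden, second hidden and output signals."""
    y_i = layer(x, w)    # input -> first hidden layer, weights w_li
    y_j = layer(y_i, u)  # first -> second hidden layer, weights u_ij
    p = layer(y_j, v)    # second hidden -> output layer, weights v_jk
    return y_i, y_j, p


def random_weights(n_in, n_out, rng):
    """Random initial weights in an assumed range of [-0.5, 0.5]."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_out)] for _ in range(n_in)]


rng = random.Random(0)
m, h1, h2, n = 23, 20, 10, 250
w = random_weights(m, h1, rng)
u = random_weights(h1, h2, rng)
v = random_weights(h2, n, rng)
y_i, y_j, p = forward([0.5] * m, w, u, v)
```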

Initially, the NN parameters are generated randomly. The parameters \( w_{li} \), \( u_{ij} \) and \( v_{jk} \) are the weight coefficients of the first, second and third layers, respectively. Here, \( {\text{k}} = 1 ,\ldots , {\text{n}} \), \( {\text{j}} = 1 ,\ldots , {\text{h2}} \), \( {\text{i}} = 1 ,\ldots , {\text{h1}} \), \( l = 1 ,\ldots , {\text{m}} \). To produce the NN recognition model, the weight coefficients \( w_{li} \), \( u_{ij} \) and \( v_{jk} \) are trained. During training, the value of the cost function is calculated as follows [13]:
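A sum-of-squares cost that is consistent with the symbols defined in the next paragraph is shown below. It is a reconstruction, and the factor 1/2 is a common convention assumed here rather than taken from the text:

```latex
\[
E = \frac{1}{2} \sum_{k=1}^{n} \left( P_{k}^{d} - P_{k} \right)^{2}
\]
```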

Here n is the number of output signals, and \( {\text{P}}_{k}^{d} \) and \( {\text{P}}_{k} \) are the desired and current network output values, respectively. The NN parameters \( w_{li} \), \( u_{ij} \) and \( v_{jk} \) are adjusted by the following formula [13].
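A gradient-descent update with momentum that matches the symbols γ and λ described next can be written as follows. It is shown for \( w_{li} \); the same form would apply to \( u_{ij} \) and \( v_{jk} \). The iteration index t is notation introduced here for illustration:

```latex
\[
w_{li}(t+1) = w_{li}(t) - \gamma \frac{\partial E}{\partial w_{li}}
            + \lambda \left( w_{li}(t) - w_{li}(t-1) \right)
\]
```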

Here γ is the learning rate and λ is the momentum. An adaptive learning rate is used to increase learning speed and ensure convergence. The following strategy is applied every given number of epochs. Training starts with a small learning rate γ. During training, if the change in error \( \Delta E = E(T) - E(T + 1) \) is positive, the learning rate is increased, and if it is negative, the learning rate is decreased. After the derivatives are determined, the NN parameters are updated.
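The adaptive strategy can be illustrated on a one-parameter toy problem. In this sketch the increase and decrease factors (1.05 and 0.7), the starting rate, the momentum value and the quadratic error E(w) = (w - 3)^2 are all assumptions chosen for illustration, and the rate is adapted after every step rather than after a fixed number of epochs.

```python
def adapt_rate(gamma, e_prev, e_new, up=1.05, down=0.7):
    """Raise gamma when Delta E = e_prev - e_new > 0, lower it when < 0."""
    delta_e = e_prev - e_new
    if delta_e > 0:
        return gamma * up    # error fell: take larger steps
    if delta_e < 0:
        return gamma * down  # error rose: take smaller steps
    return gamma


def momentum_step(w, grad, prev_delta, gamma, lam):
    """One update w <- w - gamma * grad + lam * prev_delta."""
    delta = -gamma * grad + lam * prev_delta
    return w + delta, delta


def train_toy(steps=200, gamma=0.01, lam=0.5):
    """Minimise the toy error E(w) = (w - 3)^2 with the adaptive schedule."""
    w, prev_delta = 0.0, 0.0
    e_prev = (w - 3.0) ** 2
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)  # dE/dw for the toy error
        w, prev_delta = momentum_step(w, grad, prev_delta, gamma, lam)
        e_new = (w - 3.0) ** 2
        gamma = adapt_rate(gamma, e_prev, e_new)
        e_prev = e_new
    return w, gamma


w_final, gamma_final = train_toy()
```

In a full network the same two functions would be applied to every weight, with the gradient supplied by backpropagation.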

4 Discussion and Results

To evaluate the proposal, a dataset of human iris images is needed. We therefore extracted 250 images from the UBIRIS.v1 dataset and placed them alongside the executable file.

The UBIRIS dataset comprises 1877 images of 241 people in various states. The collection is standardized and was captured with minimal noise: noise factors related to brightness, contrast and reflections are minimized. All images were captured with a Nikon E5700 camera in RGB mode at 300 dpi, with a focal length of 71 mm and a color depth of 24 bits [14].

The dataset contains several images of human irises, each belonging to one person. All images must be in standard form in the four directions (up, down, left and right); the image should start from the cornea, and there should be no empty space. First, all iris images were converted to the same size, and 23 features were extracted from each image. The feature vector is as follows; all of its entries are obtained from the iris image, so each image ends up with 23 features.

Person1 = [WH1, WH2, …, WH16, Contrast, Correlation, Energy, Homogeneity, Average, Standard Deviation, Entropy]

WH1…WH16: sixteen Walsh features obtained from the Walsh-Hadamard transform. To obtain them, each iris image is divided into 16 blocks, and the Walsh-Hadamard transform of each block yields one of the 16 features [15].
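The block-wise extraction can be sketched as below. The text does not state how the transform of a block is reduced to a single number, so the mean absolute coefficient used here is a hypothetical choice, as are the 4 x 4 block grid and the function names. The Hadamard matrix is built with the Sylvester construction, which gives the Hadamard (natural) ordering.

```python
def hadamard(n):
    """Sylvester construction of the n x n Hadamard matrix (n a power of two)."""
    h = [[1]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-x for x in row] for row in h]
    return h


def matmul(a, b):
    """Plain matrix product of two lists of lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def wht2d(block):
    """2-D Walsh-Hadamard transform H * B * H / n of an n x n block."""
    n = len(block)
    h = hadamard(n)
    return [[value / n for value in row]
            for row in matmul(matmul(h, block), h)]


def block_features(image, grid=4):
    """Split a square image into grid x grid blocks, one feature per block."""
    size = len(image) // grid  # block side; must be a power of two
    feats = []
    for by in range(grid):
        for bx in range(grid):
            block = [row[bx * size:(bx + 1) * size]
                     for row in image[by * size:(by + 1) * size]]
            coeffs = wht2d(block)
            # Hypothetical reduction: mean absolute transform coefficient.
            total = sum(abs(c) for row in coeffs for c in row)
            feats.append(total / (size * size))
    return feats


flat_image = [[1.0] * 8 for _ in range(8)]
wh_features = block_features(flat_image)  # 16 values, WH1..WH16
```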

Each Walsh function has a unique sequency value. Walsh functions can be generated in different ways; in MATLAB, the hadamard function can be used. Here the length of the Walsh functions is 64. The rows and columns of the resulting matrix are symmetric and consist of Walsh functions. They are not arranged in increasing order of sequency (the number of zero crossings) but in Hadamard order.

A dataset of 250 images, each with 23 features, is then prepared. This number was used for the initial assessment; the original dataset contains more images. The data is now ready to be fed into the neural network, which is used to build the IRS. Eighty percent of the data is used to train the NN to identify future irises, and 20% is used to evaluate the trained system. The output matrix specifies which person each input iris image belongs to. In the NN, each output neuron identifies one person. Since 250 irises were selected in this project, the network has 250 neurons in the output layer, and the output matrix has 250 rows, one per individual. The NN, which takes the iris feature matrix as input and the person matrix as output, is designed with two hidden layers, with 20 neurons in the first and 10 neurons in the second. The network output indicates the person whose iris is most similar to the input.
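The target encoding and the 80/20 split described above can be sketched as follows. The shuffling seed, the zero-valued placeholder feature vectors and the function names are assumptions made for illustration; only the counts (250 samples, 23 features, 250 output neurons) come from the text.

```python
import random


def one_hot(index, n_classes):
    """Target row with 1.0 at the person's index and 0.0 elsewhere."""
    return [1.0 if k == index else 0.0 for k in range(n_classes)]


def split_80_20(samples, seed=0):
    """Shuffle a copy of samples and split it into (train, test) at 80/20."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) * 8 // 10
    return shuffled[:cut], shuffled[cut:]


def predict(outputs):
    """Index of the output neuron with the largest activation."""
    return max(range(len(outputs)), key=lambda k: outputs[k])


# Placeholder data: 250 feature vectors of length 23, one person each.
samples = [([0.0] * 23, one_hot(person, 250)) for person in range(250)]
train, test = split_80_20(samples)
```

With this encoding, `predict` applied to the network output returns the index of the person whose output neuron responds most strongly.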

Testing was conducted under two conditions, with and without noise. Without noise, the validated accuracy was between 95 and 100%; with noise, the accuracy was 88%. The noisy condition was produced by applying a Gaussian blur filter with σ = 4 to 20% of the images. Figure 3 shows the mean square error, which reaches an acceptable level. The chart reports the mean square error over 10 epochs for the test, training and validation sets. As is evident, the lowest validation error is reached in the fourth epoch. Figure 4 shows the peak signal-to-noise ratio for Gaussian filtering with σ = 4 on 20 sample images from the dataset. In classification, the accuracy for a class is the number of items correctly labeled as the normal class relative to the total number of items of the normal class. Here, with 180 cases correctly diagnosed as normal and 20 false negatives, we have:
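Taking the 180 correctly diagnosed cases as true positives (TP) and the 20 as false negatives (FN), the standard true positive rate evaluates as shown below. Reading the preceding sentence as the true positive rate is an interpretation, since the equation itself is not given in the text:

```latex
\[
\mathrm{TPR} = \frac{TP}{TP + FN} = \frac{180}{180 + 20} = 0.90
\]
```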

Similarly, for the false positive rate, given that 10 cases were misidentified as positive (false positives), we have:

From the above results, the positive likelihood ratio (LR+) can be calculated directly.

The miss rate (false negative rate) is calculated as the share of positive items that were misdiagnosed as negative.

The specificity (SPC) gives the rate of negative cases that were diagnosed correctly.

With SPC calculated, the negative likelihood ratio (LR−) can be obtained.

The diagnostic odds ratio (DOR) is obtained from the positive and negative likelihood ratios with the following equation.

The resulting number shows that the odds ratio for the noise-free level is high. The overall accuracy is calculated with the following equation.
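The quantities named above follow standard confusion-matrix definitions, which can be collected in one helper as sketched below. The function name and dictionary keys are hypothetical. The counts TP = 180, FN = 20 and FP = 10 are taken from the text, but the number of true negatives is not stated there, so the value 190 used in the call is only a placeholder and the outputs should not be read as the paper's results.

```python
def diagnostic_metrics(tp, fn, fp, tn):
    """Standard rates and ratios from the four confusion-matrix counts.

    Raises ZeroDivisionError if a denominator (or FPR, or LR-) is zero.
    """
    tpr = tp / (tp + fn)   # true positive rate (sensitivity)
    fpr = fp / (fp + tn)   # false positive rate
    fnr = fn / (tp + fn)   # miss rate (false negative rate)
    spc = tn / (fp + tn)   # specificity
    lr_pos = tpr / fpr     # positive likelihood ratio
    lr_neg = fnr / spc     # negative likelihood ratio
    return {
        "TPR": tpr, "FPR": fpr, "FNR": fnr, "SPC": spc,
        "LR+": lr_pos, "LR-": lr_neg,
        "DOR": lr_pos / lr_neg,  # diagnostic odds ratio
        "ACC": (tp + tn) / (tp + fn + fp + tn),  # overall accuracy
    }


TN_PLACEHOLDER = 190  # hypothetical: the text does not report this count
metrics = diagnostic_metrics(tp=180, fn=20, fp=10, tn=TN_PLACEHOLDER)
```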

Not all feature extraction methods are applicable to biometric iris recognition. Methods such as HOG, which are used in biometric face recognition, have low accuracy in iris recognition. Table 1 shows the results of the above equations.

Finally, Fig. 5 compares the accuracy of the proposed scheme with that of the methods proposed in earlier research.

This paper has shown that the Walsh-Hadamard transform is an effective way to extract features from images, and that the NN classifier is an effective recognition tool in noisy environments. Filters that increase the resolution of very small details and sharpen the iris-pupil and iris-sclera boundaries, which affect iris image quality, will be studied in future work.

5 Conclusion

In this article, we described a multi-step scheme. We showed that the proposed solution, which combines Walsh-Hadamard transform features with other statistical features and neural network classification, can identify iris images with high accuracy. Simulations show that up to 95% recognition accuracy can be achieved when testing the same irises. A Gaussian blur filter was applied to 20% of the records, and the results show that the proposed scheme, with an accuracy of 88%, remains a good recognition tool. In terms of the future researches.

References

[1] Fenker SP, Bowyer KW (2011) Experimental evidence of a template aging effect in iris biometrics. In: IEEE computer society workshop on applications of computer vision, pp 232–239

[2] Sazonova N, Hua F, Liu X, Remus J, Ross A, et al. (2012) A study on quality-adjusted impact of time lapse on iris recognition. In: Sensing technologies for global health, military medicine, disaster response, and environmental monitoring II; and biometric technology for human identification IX, vol 8371, pp 83711–83719

[3] Djekoune O, Messaoudi K, Amara K (2017) Incremental circle Hough transform: an improved method for circle detection. Optik Int J Light Electr Opt 133:17–31

[4] Kumar AA, Gupta A (2015) Iris localization based on integro-differential operator for unconstrained infrared iris images. In: 2015 international conference on signal processing, computing and control (ISPCC), Waknaghat, pp 277–281

[5] Umer S, Dhara BC, Chanda B (2015) Iris recognition using multiscale morphologic features. Pattern Recogn Lett 65:67–74

[6] Falohun AS, Ismaila WO, Adeosun O (2015) Performance evaluation of quadtree & Hough transform segmentation techniques for iris recognition using artificial neural network (ANN). Int J Comput Trends Technol (IJCTT) 25(1):18–22

[7] Nabti M, Bouridane A (2008) An effective and fast iris recognition system based on a combined multiscale feature extraction technique. Pattern Recogn 41(3):868–879

[8] Khedkar MM, Ladhake SA (2013) Robust human iris pattern recognition system using neural network approach. In: 2013 international conference on information communication and embedded systems (ICICES), Chennai, pp 78–83

[9] Mira Jd Jr, Neto HV, Neves EB et al (2015) Biometric oriented iris identification based on mathematical morphology. J Sig Process Syst 80(2):181–195

[10] Liu JJ, Grulkowski I, Potsaid B et al (2013) 4D dynamic imaging of the eye using ultrahigh speed SS-OCT. In: Proceedings of SPIE, vol 8567

[11] Albadarneh IA, Alqatawna J (2015) Iris recognition system for secure authentication based on texture and shape features. In: 2015 IEEE Jordan conference on applied electrical engineering and computing technologies (AEECT), Amman, pp 1–6

[12] Tallapragada VVS, Rajan EG (2012) Improved kernel-based IRIS recognition system in the framework of support vector machine and hidden Markov model. IET Image Process 6(6):661–667

[13] Abiyev RH, Altunkaya K (2008) Personal iris recognition using neural network. Int J Secur Appl 2(2):41–50

[14] Proenca H, Alexandre LA (2005) UBIRIS: a noisy iris image database. In: Springer lecture notes in computer science, ICIAP 2005: 13th international conference on image analysis and processing, Cagliari, Italy, vol 1, pp 970–977

[15] Bell DA (1996) Walsh functions and Hadamard matrixes. Electr Lett 2(9):340–341

Copyright information

© 2019 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Mamdouhi, M., Kazemi, M., Amoabedini, A. (2019). A New Model for Iris Recognition by Using Artificial Neural Networks. In: Montaser Kouhsari, S. (eds) Fundamental Research in Electrical Engineering. Lecture Notes in Electrical Engineering, vol 480. Springer, Singapore. https://doi.org/10.1007/978-981-10-8672-4_14

DOI: https://doi.org/10.1007/978-981-10-8672-4_14

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-8671-7

Online ISBN: 978-981-10-8672-4

eBook Packages: Engineering, Engineering (R0)