Abstract

This paper considers the problem of uncertain camera pose and camera parameters in nonlinear visual servoing tracking control of a 3-degree-of-freedom (DOF) robot manipulator. To solve this problem, a standard Kalman filter (KF) is used to estimate the image Jacobian matrix online, which attenuates the effect of system noise and improves the robustness of the control system. A visual optimal feedback controller is developed to track the desired position of the robot manipulator precisely. In addition, stereo cameras are incorporated into the robot manipulator system so that the tracking errors in both the camera image frame and the robot base frame converge to zero simultaneously. Experimental results are included to illustrate the effectiveness of the proposed approach.

1 Introduction

Visual servoing is widely used in nonlinear tracking control of robot manipulators. Traditional robot hand-eye coordination control relies on camera calibration, so in practice the calibration accuracy limits the accuracy of the whole system. Moreover, the camera pose may change during operation, and in some working conditions calibration is difficult to carry out. Compared with a calibrated system, an uncalibrated visual servo system offers greater flexibility and adaptability.

Several visual servoing control methods have been developed to improve the control performance of robot manipulators. Chaumette and Hutchinson presented a summary of visual servo control approaches [1]. Janabi-Sharifi et al. compared position-based and image-based robot visual servoing (RVS) methods to improve the dynamic performance and robustness of the RVS system [2]. In [3], it was noted that image-based visual servoing (IBVS) is the more suitable approach when the camera parameters are unknown. Cai et al. presented a 2-1/2-D visual servoing method that chooses orthogonal image features to improve the behavior of the tracking system [4].

The image Jacobian matrix, first presented by Weiss, is widely used to describe the relationship between the camera image frame and the robot base frame. Zergeroglu et al. provided the analytic solution of the image Jacobian matrix under an ideal camera pose for position tracking control of a planar robot manipulator with uncertain robot-camera parameters [5]. In practice, however, it is impossible to guarantee that the camera imaging plane is parallel to the task plane; that is, the relationship between the two coordinate systems cannot be described by a single rotation angle. Hence, control methods based on direct numerical estimation of the image Jacobian matrix are widely used in robot hand-eye coordination research. In [6], a discrete-time estimator was developed using the least-squares algorithm. Yoshimi and Allen exploited geometric effects and rotational invariance to accomplish alignment [7]. Piepmeier et al. performed online estimation using a dynamic recursive least-squares algorithm [8], while the work in [9] addressed robust Jacobian estimation.

In this paper, a nonlinear visual servo controller is developed for a 3-DOF robot manipulator with a fixed, uncalibrated stereo camera configuration. A single step of robot movement suffices to update the complete estimate of the current image Jacobian matrix using the KF algorithm. Furthermore, the filtering process is recursive and does not require storing historical data. A simple visual optimal feedback controller is then designed to achieve nonlinear trajectory tracking.

2 Problem Formulation

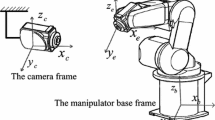

The visual sensor measures information in the robot task space and directly reflects the relationship between the robot end-effector and the target to be tracked. According to [10], the mapping between the camera image frame and the robot base frame is defined as follows:

where \( p\left( t \right) = \left( {x_{b} \left( t \right)\;\;y_{b} \left( t \right)} \right)^{\rm T} \) denotes the end-effector position in the robot base frame, and \( s\left( t \right) = \left( {u_{l} \left( t \right)\;\;v_{l} \left( t \right)\;\;u_{r} \left( t \right)\;\;v_{r} \left( t \right)} \right)^{\rm T} \) represents the projected position of the end-effector in the stereo camera image frame. The image Jacobian matrix, expressed by \( J_{I} \left( p \right) \in {\mathbb{R}}^{4 \times 2} \), is defined as

where \( J_{I} \left( p \right) \) is the interaction matrix, which depends on the end-effector position and on the intrinsic and extrinsic parameters of the cameras. Because the dimension of the image feature space is greater than the dimension of the robot motion space, the spatial position can be uniquely determined from the image feature. Consequently, with the stereo camera configuration, tracking in image space is equivalent to tracking in the robot motion space.
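Read with the definitions above, the mapping and the image Jacobian take the usual differential form. The display below is a sketch under that assumption, written with the symbols \( s \), \( p \) and \( J_{I} \) already introduced; the \( 4 \times 2 \) layout follows from \( s \in {\mathbb{R}}^{4} \) and \( p \in {\mathbb{R}}^{2} \):

\[
\dot{s}\left( t \right) = J_{I} \left( p \right)\dot{p}\left( t \right),\qquad
J_{I} \left( p \right) = \frac{\partial s}{\partial p} =
\begin{pmatrix}
\partial u_{l} /\partial x_{b} & \partial u_{l} /\partial y_{b} \\
\partial v_{l} /\partial x_{b} & \partial v_{l} /\partial y_{b} \\
\partial u_{r} /\partial x_{b} & \partial u_{r} /\partial y_{b} \\
\partial v_{r} /\partial x_{b} & \partial v_{r} /\partial y_{b}
\end{pmatrix}
\]

When \( J_{I} \left( p \right) \) has full column rank 2, \( \dot{s} = 0 \) implies \( \dot{p} = 0 \), which is the sense in which image-space tracking determines the motion in the robot base frame.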

3 Online Estimation and Visual Optimal Feedback Controller

3.1 Online Estimation

To estimate the image Jacobian matrix online via the KF, the matrix is rearranged into a state vector of the following form:

where \( \frac{{\partial s_{i} }}{\partial p} = \left( {\begin{array}{*{20}c} {\frac{{\partial s_{i} }}{{\partial x_{b} }}} & {\frac{{\partial s_{i} }}{{\partial y_{b} }}} \\ \end{array} } \right)^{\rm T} \), \( i = 1,2,3,4 \), denotes the transpose of the \( i \)th row vector of the image Jacobian matrix \( J_{I} \left( p \right) \).

Based on the definition of \( J_{I} \left( p \right) \) in (1), \( s \) can be written as

We define \( x \) in (3) as the system state and the image feature variation \( \Delta s\left( k \right) = s\left( {k + 1} \right) - s\left( k \right) \) as the system output \( z\left( k \right) \). Hence, the state equations can be derived as follows:

where \( \eta \left( k \right) \in {\mathbb{R}}^{8} \) denotes the state noise and \( w\left( k \right) \in {\mathbb{R}}^{4} \) is the observation noise, both assumed to be white Gaussian noise with the statistics given in (7).

The system input of the state Eqs. (5) and (6) is contained in \( C\left( k \right) \):
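One layout of the state vector and of \( C\left( k \right) \) that is consistent with the dimensions above (\( x \in {\mathbb{R}}^{8} \), \( z \in {\mathbb{R}}^{4} \)) stacks the transposed rows of \( J_{I} \) and places the robot displacement \( \Delta p\left( k \right)^{\rm T} \) in four diagonal blocks. The Python sketch below assumes that block-diagonal structure; the function names and the numeric values are illustrative and not taken from the paper.

```python
def jacobian_to_state(J):
    """Stack the rows of a 4x2 image Jacobian into an 8-element state vector."""
    return [entry for row in J for entry in row]


def observation_matrix(dp):
    """Build the assumed 4x8 block-diagonal C(k) from dp = (dx_b, dy_b)."""
    C = [[0.0] * 8 for _ in range(4)]
    for i in range(4):
        C[i][2 * i] = dp[0]
        C[i][2 * i + 1] = dp[1]
    return C


def predicted_feature_change(C, x):
    """Evaluate C(k) x(k), which equals J_I * dp for the layout above."""
    return [sum(c * v for c, v in zip(row, x)) for row in C]


# Hypothetical Jacobian and displacement, chosen only to exercise the functions.
J = [[2.0, 0.5], [-0.3, 1.8], [1.9, 0.4], [-0.2, 1.7]]
dp = (0.1, -0.2)
x = jacobian_to_state(J)
z = predicted_feature_change(observation_matrix(dp), x)
```

With this layout the output equation is linear in the unknown Jacobian entries, which is what allows a linear KF to estimate them even though the underlying camera model is nonlinear.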

The recursive estimation based on the KF algorithm proceeds in five steps:

Step 1. Predict the next state based on current state

Step 2. Update the uncertainty with respect to the state estimate

Step 3. The Kalman gain can be computed as

Step 4. The optimal state estimate value is given by

Step 5. Update the state covariance matrix
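The five steps can be sketched in Python as follows. The sketch assumes a random-walk state model \( x\left( {k + 1} \right) = x\left( k \right) + \eta \left( k \right) \) and a linear output \( z\left( k \right) = C\left( k \right)x\left( k \right) + w\left( k \right) \), as suggested by the noise and output definitions above. The covariance names `Q` and `R`, the helper functions, and the plain-list matrix representation are choices made for this illustration, not the authors' implementation.

```python
def eye(n, scale=1.0):
    """Return scale times the n x n identity matrix."""
    return [[scale if i == j else 0.0 for j in range(n)] for i in range(n)]


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]


def add(A, B, sign=1.0):
    """Return A + sign * B, element by element."""
    return [[a + sign * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def inverse(A):
    """Gauss-Jordan inverse with partial pivoting (A must be nonsingular)."""
    n = len(A)
    M = [list(map(float, row)) + unit for row, unit in zip(A, eye(n))]
    for c in range(n):
        pivot = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[pivot] = M[pivot], M[c]
        d = M[c][c]
        M[c] = [v / d for v in M[c]]
        for r in range(n):
            if r != c:
                f = M[r][c]
                M[r] = [v - f * w for v, w in zip(M[r], M[c])]
    return [row[n:] for row in M]


def kf_step(x, P, C, z, Q, R):
    """One recursive update of the state estimate x and covariance P."""
    # Step 1: predict the state; under the random-walk model it is unchanged.
    x_pred = list(x)
    # Step 2: propagate the uncertainty of the prediction.
    P_pred = add(P, Q)
    # Step 3: Kalman gain K = P_pred C^T (C P_pred C^T + R)^-1.
    Ct = transpose(C)
    S = add(matmul(matmul(C, P_pred), Ct), R)
    K = matmul(matmul(P_pred, Ct), inverse(S))
    # Step 4: correct the prediction with the innovation z - C x_pred.
    innovation = [zi - sum(c * v for c, v in zip(row, x_pred))
                  for zi, row in zip(z, C)]
    x_new = [xi + sum(k * e for k, e in zip(krow, innovation))
             for xi, krow in zip(x_pred, K)]
    # Step 5: update the state covariance, P = (I - K C) P_pred.
    P_new = matmul(add(eye(len(x)), matmul(K, C), sign=-1.0), P_pred)
    return x_new, P_new
```

Each call uses only the previous estimate, the previous covariance and the newest measurement, so no history of movements or image features has to be stored.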

Given any two linearly independent test movements \( \Delta p_{1} ,\Delta p_{2} \), the corresponding image feature variations \( \Delta s_{1} ,\Delta s_{2} \) are measured. Thus, the initial value \( \hat{x}\left( 0 \right) \) (i.e. \( \hat{J}_{I} \left( 0 \right) \)) can be obtained:

where \( \hat{x}\left( 0 \right) \) is assembled from \( \hat{J}_{I} \left( 0 \right) \) using the form in (3).
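Because \( \Delta s_{j} \approx J_{I} \Delta p_{j} \) for each test movement, stacking the two movements column-wise gives \( \left( {\Delta s_{1} \;\;\Delta s_{2} } \right) = J_{I} \left( {\Delta p_{1} \;\;\Delta p_{2} } \right) \), and the \( 2 \times 2 \) displacement matrix is invertible exactly when the movements are linearly independent. A minimal Python sketch of that initialization, assuming this stacked form (the function name is hypothetical):

```python
def initial_jacobian(dp1, dp2, ds1, ds2):
    """Estimate a 4x2 Jacobian from two test movements and their image changes."""
    # Determinant of the 2x2 matrix [dp1 dp2]; zero means dependent movements.
    det = dp1[0] * dp2[1] - dp2[0] * dp1[1]
    if abs(det) < 1e-12:
        raise ValueError("test movements must be linearly independent")
    # Closed-form inverse of the 2x2 matrix whose columns are dp1 and dp2.
    inv = [[dp2[1] / det, -dp2[0] / det],
           [-dp1[1] / det, dp1[0] / det]]
    # Row i of the Jacobian is (ds1[i], ds2[i]) multiplied by that inverse.
    return [[ds1[i] * inv[0][0] + ds2[i] * inv[1][0],
             ds1[i] * inv[0][1] + ds2[i] * inv[1][1]] for i in range(4)]
```

Flattening the returned matrix row by row then gives an initial state vector in the layout of (3).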

3.2 Visual Optimal Feedback Controller Design

Once the image Jacobian matrix has been estimated online, a direct visual feedback controller can be designed. Define the system error in the camera image frame as:

where \( s^{ * } \left( t \right) = s_{o} \left( t \right) \) denotes the desired image feature describing the image feature of the moving target.

The control objective of this paper is to design a control signal \( u = \dot{p} \) that minimizes the following objective function:

Discretizing the control signal \( u \), the optimal control signal at time \( k \) is given by

where \( J_{I} \left( k \right)^{ + } \) is the Moore–Penrose pseudoinverse of \( J_{I} \left( k \right) \). When \( J_{I} \left( k \right) \) is of full rank 2, its pseudoinverse can be expressed as
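For a \( 4 \times 2 \) matrix of full column rank, the Moore–Penrose pseudoinverse has the closed form \( J^{ + } = \left( {J^{\rm T} J} \right)^{ - 1} J^{\rm T} \), and \( J^{ + } e \) is the least-squares solution of \( J\Delta p = e \). The Python sketch below computes it and applies it to an image-space error. Treating the control increment as \( J^{ + } \) times the difference between a predicted target feature and the current feature is an assumption about the structure of the control law, and all names are illustrative.

```python
def pseudoinverse_4x2(J):
    """Return the 2x4 pseudoinverse (J^T J)^-1 J^T of a full-column-rank 4x2 matrix."""
    # Entries of the symmetric 2x2 matrix J^T J = [[a, b], [b, d]].
    a = sum(r[0] * r[0] for r in J)
    b = sum(r[0] * r[1] for r in J)
    d = sum(r[1] * r[1] for r in J)
    det = a * d - b * b
    if abs(det) < 1e-12:
        raise ValueError("Jacobian is rank deficient")
    # (J^T J)^-1 = [[d, -b], [-b, a]] / det, multiplied by J^T column by column.
    return [[(d * r[0] - b * r[1]) / det for r in J],
            [(-b * r[0] + a * r[1]) / det for r in J]]


def control_increment(J_est, s_target_next, s_now):
    """Least-squares displacement that moves s_now toward s_target_next."""
    error = [t - s for t, s in zip(s_target_next, s_now)]
    J_pinv = pseudoinverse_4x2(J_est)
    return [sum(w * e for w, e in zip(row, error)) for row in J_pinv]
```

When the image-space error lies in the column space of the estimated Jacobian, the returned displacement reproduces it exactly; otherwise it is the displacement that minimizes the squared residual over both cameras.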

The estimate of the target image feature at time \( k + 1 \), denoted by \( \hat{s}_{o} \left( {k + 1} \right) \), can be obtained by

Theorem 1

With the configuration of stereo cameras, the control law (17) guarantees global asymptotic position tracking at any time \( k \).

Proof

Substituting (4), (17) and (19) into (16), the objective function is rewritten as the following form:

From (21), the difference between the target image feature and its estimate tends to zero via the KF algorithm. Under the stereo camera configuration, convergence in image space is equivalent to convergence in the robot motion space. Hence, the conclusion of (20) is straightforward. In the eye-to-hand system, the control signal \( u\left( k \right) \) therefore guarantees asymptotic convergence of the tracking error.

4 Experimental Verification

In this section, a 3-DOF robot manipulator system is used as the test rig for the proposed control scheme. The configuration of the whole experimental setup is depicted in Fig. 1. The setup consists of a YASKAWA 6-DOF robot manipulator (with the rotation part locked), two fixed pinhole cameras and a host computer. The control method is implemented in Visual C++. The camera frame rate is set to 30 fps.

The robot end-effector performs two-dimensional translational movement, tracking a target that moves along a curved path on the task plane, and the uncalibrated stereo cameras are fixed directly above the task plane.

The linearly independent test movements yield the following initial state vector:

The variance of each noise term in the state equations and the covariance matrix are set to

Experimental results are shown in Figs. 2 and 3. Figure 2a shows the tracking result of the left camera, and Fig. 2b shows the tracking result of the right camera. Figure 3 shows the tracking errors of the left and right cameras, respectively. Figures 2 and 3 show that the output precisely tracks the desired trajectory and that the tracking errors converge to zero. These results illustrate the effectiveness of the proposed visual optimal feedback control method.

5 Conclusions

In this paper, online estimation of the image Jacobian matrix has been investigated using uncalibrated stereo cameras. The proposed method, which combines the KF algorithm with visual optimal feedback control, is presented for a 3-DOF robot manipulator subject to system noise. The KF is used to estimate the image Jacobian matrix, and the visual optimal feedback controller is designed from the estimated values. The stereo camera system is applied to guarantee that the tracking error converges to zero. Experimental results verify the effectiveness and robustness of the proposed algorithm.

References

1. Chaumette F, Hutchinson S. Visual servo control. II. Advanced approaches [Tutorial]. IEEE Robot Autom Mag. 2007;14(1):109–18.

2. Janabi-Sharifi F, Deng L, Wilson WJ. Comparison of basic visual servoing methods. IEEE/ASME Trans Mechatron. 2011;16(5):967–83.

3. Tao B, Gong Z, Han D. Survey on uncalibrated robot visual servoing control. Chin J Theor Appl Mech. 2016;48(4):767–83.

4. Cai CX, Somani N, Knoll A. Orthogonal image features for visual servoing of a 6-DOF manipulator with uncalibrated stereo cameras. IEEE Trans Robot. 2016;32(2):452–61.

5. Zergeroglu E, Dawson D, Queiroz M, et al. Vision-based nonlinear tracking controllers with uncertain robot-camera parameters. IEEE/ASME Trans Mechatron. 2001;6(3):322–37.

6. Hosoda K, Asada M. Versatile visual servoing without knowledge of true Jacobian. In: IEEE/RSJ/GI international conference on intelligent robots and systems '94. Advanced robotic systems and the real world; 1994. p. 186–93.

7. Yoshimi B, Allen P. Alignment using an uncalibrated camera system. IEEE Trans Robot Autom. 1995;11(4):516–21.

8. Piepmeier J, McMurray G, Lipkin H. Uncalibrated dynamic visual servoing. IEEE Trans Robot Autom. 2004;20(1):143–7.

9. Azad S, Farahmand AM, Jagersand M. Robust Jacobian estimation for uncalibrated visual servoing. In: IEEE international conference on robotics and automation; 2010. p. 5564–69.

10. Su J, Zhang Y, Luo Z. Online estimation of image Jacobian matrix for uncalibrated dynamic hand-eye coordination. Int J Syst Control Commun. 2008;1(1):31–52.

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grants 61433003, 61472037.

Copyright information

© 2018 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Li, S., Ren, X., Li, Y., Qiu, H. (2018). Nonlinear Tracking Control for Image-Based Visual Servoing with Uncalibrated Stereo Cameras. In: Jia, Y., Du, J., Zhang, W. (eds) Proceedings of 2017 Chinese Intelligent Systems Conference. CISC 2017. Lecture Notes in Electrical Engineering, vol 460. Springer, Singapore. https://doi.org/10.1007/978-981-10-6499-9_32

DOI: https://doi.org/10.1007/978-981-10-6499-9_32

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-6498-2

Online ISBN: 978-981-10-6499-9

eBook Packages: Engineering, Engineering (R0)