Abstract

We first propose in this paper a recursive algorithm for triangular matrix inversion (TMI) based on the ‘Divide and Conquer’ (D&C) paradigm. Different versions of an original sequential algorithm are presented. A theoretical performance study allows an accurate comparison between the designed algorithms. Our implementation is designed to be used in place of dtrtri, the LAPACK TMI routine built on level 3 BLAS. Afterwards, we generalize our approach to dense matrix inversion (DMI) based on LU factorization (LUF), the approach used in mathematical software libraries such as LAPACK xGETRI and MATLAB inv. Given the input dense matrix \(A = LU\), xGETRI, once the factors L and U are known, inverts U and then solves the triangular matrix system \(XL = U^{-1}\) (i.e. \(L^{T}X^{T} = (U^{-1})^{T}\)), so that \(X = A^{-1}\). Two other alternatives may be derived here (L and U being known): (i) first invert L, then solve the matrix system \(UX = L^{-1}\) for X; (ii) invert both L and U, then compute the product \(X = U^{-1}L^{-1}\). Each of these three procedures involves at least one TMI. Our DMI implementation aims to be used in place of the corresponding library DMI routines. Efficient results were obtained in an experimental study on a set of large randomly generated matrices.


Keywords

- Dense matrix inversion

- Divide and conquer

- Level 3 BLAS

- LU factorization

- Recursive algorithm

- Triangular matrix inversion

1 Introduction

Triangular matrix inversion (TMI) is a basic kernel used in many scientific applications. Given its cubic complexity in the matrix size n, several works have addressed the design of efficient practical algorithms for this problem. Apart from the standard TMI algorithm, which consists in solving n linear triangular systems of sizes \(n, n-1, \ldots, 1\) [1], a recursive algorithm of the same complexity was proposed by Heller in 1973 [2–4]. It uses the ‘Divide and Conquer’ (D&C) paradigm and consists of successive decompositions of the original matrix. Our objective here is twofold: (i) design an efficient TMI algorithm that outperforms the BLAS routines, and (ii) use our TMI kernel for dense matrix inversion (DMI) through LU factorization, thus deriving an efficient DMI kernel.

The remainder of the paper is organized as follows. Section 2 presents the D&C paradigm. Section 3 details a theoretical study of several versions of Heller’s TMI algorithm. Section 4 generalizes the designed algorithms to DMI. An experimental study validating our theoretical contribution is presented in Sect. 5, and Sect. 6 concludes the paper.

2 Divide and Conquer Paradigm

There are many paradigms in algorithm design, such as backtracking, dynamic programming and the greedy method. One compelling type of algorithm is called Divide and Conquer (D&C). Algorithms of this type split the original problem into (equal-sized) sub-problems. Once the sub-solutions are determined, they are combined to form the solution of the original problem. When the sub-problems are of the same type as the original problem, the same recursive process can be carried out until the sub-problem size is sufficiently small. This special type of D&C is referred to as D&C recursion. The recursive nature of many D&C algorithms makes it easy to express their time complexity as recurrences. Consider a D&C algorithm working on an input of size n that divides its input into a sub-problems (a is called the arity) of size n/b. Dividing the input and combining the sub-solutions are assumed to take f(n) time, and the base case \(n = 1\) is solved in constant time. The time complexity of this class of algorithms can then be expressed as follows:

\(T(n) = a\,T(n/b) + f(n), \qquad T(1) = O(1)\)

Let \(f(n) = O(n^{\delta})\) with \(\delta \ge 0\). The master theorem for recurrences can then be used to give an asymptotic bound on the complexity [1]:

- \(a < b^{\delta} \Rightarrow T(n) = O(n^{\delta})\)

- \(a = b^{\delta} \Rightarrow T(n) = O(n^{\delta}\log_b n)\)

- \(a > b^{\delta} \Rightarrow T(n) = O(n^{\log_b a})\)
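To illustrate the three cases, here is a small Python sketch (the function name `master_bound` is our own) that classifies a recurrence \(T(n) = aT(n/b) + O(n^{\delta})\) by comparing \(\log_b a\) with \(\delta\), which is equivalent to comparing a with \(b^{\delta}\). The sample recurrences are assumptions chosen to match Sect. 3: Heller's scheme with classical \(O(n^3)\) products, Strassen's MM, and a TRMM built on Strassen's MM.

```python
import math


def master_bound(a, b, delta):
    """Classify T(n) = a*T(n/b) + O(n**delta) with the master theorem.

    Returns (p, log_factor): T(n) = O(n**p * log n) if log_factor is True,
    and T(n) = O(n**p) otherwise.
    """
    critical = math.log(a) / math.log(b)  # log_b(a); a < b**delta iff critical < delta
    if math.isclose(critical, delta):
        return delta, True                # a == b**delta
    if critical < delta:
        return delta, False               # a < b**delta: divide/combine cost dominates
    return critical, False                # a > b**delta: the recursive calls dominate


if __name__ == "__main__":
    examples = {
        "Heller TMI, classical products": (2, 2, 3),
        "Strassen MM": (7, 2, 2),
        "TRMM on top of Strassen MM": (4, 2, math.log2(7)),
    }
    for name, (a, b, d) in examples.items():
        p, has_log = master_bound(a, b, d)
        print(f"{name}: O(n^{p:.3f}{' log n' if has_log else ''})")
```

Note that the last two cases both land on the exponent \(\log_2 7 \approx 2.807\), which is the mechanism behind the complexity results of Sect. 3.4.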

3 Recursive TMI Algorithms

We first recall that the well-known standard algorithm (SA) for inverting a triangular matrix A of size n (either upper or lower) consists in solving n triangular systems. The complexity of SA is as follows [1]:

\(\mathrm{SA}(n) = n^{3}/3 + O(n^{2})\)
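A minimal Python sketch of SA for a lower triangular matrix, assuming a nonsingular input stored as a list of rows (the name `invert_lower_standard` is ours). Column j of \(B = A^{-1}\) solves \(Ab = e_j\); since the entries of that column above the diagonal vanish, only a triangular system of size n − j is actually solved, which gives the sizes \(n, n-1, \ldots, 1\).

```python
def invert_lower_standard(A):
    """Invert a nonsingular lower triangular matrix by solving A b_j = e_j for each j."""
    n = len(A)
    B = [[0.0] * n for _ in range(n)]
    for j in range(n):
        # Entries above the diagonal of column j are zero, so forward
        # substitution starts at row j (a triangular system of size n - j).
        B[j][j] = 1.0 / A[j][j]
        for i in range(j + 1, n):
            s = sum(A[i][k] * B[k][j] for k in range(j, i))
            B[i][j] = -s / A[i][i]
    return B


if __name__ == "__main__":
    A = [[2.0, 0.0, 0.0],
         [1.0, 4.0, 0.0],
         [3.0, -1.0, 5.0]]
    print(invert_lower_standard(A))
```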

3.1 Heller’s Recursive Algorithm (HRA)

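Using the D&C paradigm, Heller proposed a recursive TMI algorithm in 1973 [2, 3]. The main idea is to decompose matrix A, as well as its inverse B (both of size n), into 3 submatrices of size n/2 (see Fig. 1, A being assumed lower triangular). The procedure is repeated recursively until submatrices of size 1 are reached. We hence deduce:

\(A = \begin{pmatrix} A_1 & 0 \\ A_2 & A_3 \end{pmatrix}, \quad B = A^{-1} = \begin{pmatrix} B_1 & 0 \\ B_2 & B_3 \end{pmatrix}, \quad B_1 = A_1^{-1}, \quad B_3 = A_3^{-1}, \quad B_2 = -B_3 A_2 B_1\)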

Therefore, inverting a matrix A of size n consists in inverting 2 submatrices of size n/2 followed by two matrix products (triangular by dense) of size n/2. In [3], Nasri and Mahjoub proposed a slightly modified version of the above algorithm. Indeed, since \(B_2 = -B_3A_2B_1 = -A_3^{-1}A_2A_1^{-1}\), let \(Q = A_3^{-1}A_2\). We then deduce:

\(A_3 Q = A_2, \qquad B_2 A_1 = -Q\)

Hence, instead of the two matrix products needed to compute \(B_2\), we have to solve 2 matrix systems of size n/2, i.e. \(A_3Q = A_2\) and \(A_1^{T}B_2^{T} = -Q^{T}\). Note that both versions have complexity \(n^{3}/3 + O(n^{2})\) [3].
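The two-product version of Heller's recursion can be sketched in Python as follows. This is an illustrative sketch, not the authors' code: matrices are lists of rows, plain classical products stand in for the optimized kernels, and `threshold` is a hypothetical parameter playing the role of the cut-off size used by the RA-k variants (below it, the standard column-by-column algorithm is applied).

```python
def matmul(X, Y):
    """Classical dense product of two matrices stored as lists of rows."""
    cols = list(zip(*Y))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in X]


def invert_lower_base(A):
    """Standard column-by-column inversion, used below the cut-off size."""
    n = len(A)
    B = [[0.0] * n for _ in range(n)]
    for j in range(n):
        B[j][j] = 1.0 / A[j][j]
        for i in range(j + 1, n):
            B[i][j] = -sum(A[i][k] * B[k][j] for k in range(j, i)) / A[i][i]
    return B


def invert_lower_heller(A, threshold=1):
    """Heller-style D&C inversion of a nonsingular lower triangular matrix.

    A = [[A1, 0], [A2, A3]] gives A^{-1} = [[B1, 0], [B2, B3]] with
    B1 = A1^{-1}, B3 = A3^{-1} (recursive calls) and B2 = -B3 A2 B1
    (two products). Recursion stops at size <= threshold.
    """
    n = len(A)
    if n <= max(threshold, 1):
        return invert_lower_base(A)
    h = n // 2
    A1 = [row[:h] for row in A[:h]]
    A2 = [row[:h] for row in A[h:]]
    A3 = [row[h:] for row in A[h:]]
    B1 = invert_lower_heller(A1, threshold)
    B3 = invert_lower_heller(A3, threshold)
    B2 = [[-v for v in row] for row in matmul(matmul(B3, A2), B1)]
    top = [B1[i] + [0.0] * (n - h) for i in range(h)]
    bottom = [B2[i] + B3[i] for i in range(n - h)]
    return top + bottom
```

Splitting at `n // 2` also handles sizes that are not powers of two, although the analysis in the text assumes \(n = 2^{q}\).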

Now, for the sake of simplicity, we assume that \(n = 2^{q}\) \((q \ge 1)\). Let RA-k be the recursive algorithm obtained by applying the decomposition recursively k times, i.e. until a threshold size \(n/2^{k}\) \((1 \le k \le q)\) is reached. The complexity of RA-k is as follows [3]:

3.2 Recursive Algorithm Using Matrix Multiplication (RAMM)

As previously seen, inverting a triangular matrix via block decomposition requires two recursive calls and two triangular matrix multiplications (TRMM) [5]. Thus, the complexity recurrence formula is:

\(\mathrm{RAMM}(n) = 2\,\mathrm{RAMM}(n/2) + 2\,\mathrm{TRMM}(n/2)\)

The idea is to use the fast TRMM algorithm presented below.

- TRMM algorithm

To multiply a triangular (resp. dense) matrix by a dense (resp. triangular) one via block decomposition into halves, we require four recursive calls and two dense matrix-matrix multiplications (MM) (see Fig. 2).

The complexity recurrence formula is thus:

\(\mathrm{TRMM}(n) = 4\,\mathrm{TRMM}(n/2) + 2\,\mathrm{MM}(n/2) + O(n^{2})\)

To speed up this algorithm, we use a fast algorithm for dense MM, namely Strassen’s algorithm [7].
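The triangular-by-dense case of this block recursion can be sketched in Python as follows, assuming a lower triangular L and a dense M stored as lists of rows (the names `trmm_lower`, `matmul`, `add` and the `threshold` parameter are ours). For readability the two dense products use a classical `matmul`; in the approach described above they would be delegated to a fast MM such as Strassen's.

```python
def matmul(X, Y):
    """Classical dense product (a stand-in for a fast MM kernel)."""
    cols = list(zip(*Y))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in X]


def add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def trmm_lower(L, M, threshold=2):
    """Compute L @ M, with L (k x k) lower triangular and M (k x p) dense.

    With L = [[L1, 0], [L2, L3]] and M split into quadrants M1..M4:
      L @ M = [[L1 M1,          L1 M2        ],
               [L2 M1 + L3 M3,  L2 M2 + L3 M4]]
    i.e. four recursive TRMM calls and two dense products (L2 M1, L2 M2).
    """
    k, p = len(L), len(M[0])
    threshold = max(threshold, 1)
    if k <= threshold or p <= threshold:
        return matmul(L, M)  # base case: classical product
    h, c = k // 2, p // 2
    L1 = [r[:h] for r in L[:h]]
    L2 = [r[:h] for r in L[h:]]
    L3 = [r[h:] for r in L[h:]]
    M1, M2 = [r[:c] for r in M[:h]], [r[c:] for r in M[:h]]
    M3, M4 = [r[:c] for r in M[h:]], [r[c:] for r in M[h:]]
    C11 = trmm_lower(L1, M1, threshold)
    C12 = trmm_lower(L1, M2, threshold)
    C21 = add(matmul(L2, M1), trmm_lower(L3, M3, threshold))
    C22 = add(matmul(L2, M2), trmm_lower(L3, M4, threshold))
    return [a + b for a, b in zip(C11, C12)] + [a + b for a, b in zip(C21, C22)]
```

The dense-by-triangular case is symmetric: the recursive calls fall on the diagonal blocks of the triangular factor and the two MMs on its off-diagonal block.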

- MM algorithm

In [6], the authors reported on the development of an efficient and portable implementation of Strassen’s MM algorithm [7]. Note that the optimal number of recursive levels depends on both the matrix size and the target architecture, and must be determined experimentally.
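For reference, a compact and deliberately unoptimized Python sketch of Strassen’s algorithm [7] is given below; it is not the implementation of [6]. It assumes square matrices of size \(n = 2^{q}\) stored as lists of rows, and `cutoff` is a hypothetical parameter below which the classical product is used, so it indirectly fixes the number of recursive levels that, as noted above, has to be tuned experimentally.

```python
def add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def sub(X, Y):
    return [[x - y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def classical(X, Y):
    cols = list(zip(*Y))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in X]


def strassen(X, Y, cutoff=64):
    """Strassen product of two n x n matrices with n = 2^q.

    Below `cutoff` the classical product is used.
    """
    n = len(X)
    if n <= max(cutoff, 1):
        return classical(X, Y)
    if n % 2:
        raise ValueError("this sketch expects n = 2^q")
    h = n // 2

    def quad(Z):  # split Z into its four h x h quadrants
        return ([r[:h] for r in Z[:h]], [r[h:] for r in Z[:h]],
                [r[:h] for r in Z[h:]], [r[h:] for r in Z[h:]])

    A11, A12, A21, A22 = quad(X)
    B11, B12, B21, B22 = quad(Y)
    # Seven recursive products instead of the eight of the classical block method.
    M1 = strassen(add(A11, A22), add(B11, B22), cutoff)
    M2 = strassen(add(A21, A22), B11, cutoff)
    M3 = strassen(A11, sub(B12, B22), cutoff)
    M4 = strassen(A22, sub(B21, B11), cutoff)
    M5 = strassen(add(A11, A12), B22, cutoff)
    M6 = strassen(sub(A21, A11), add(B11, B12), cutoff)
    M7 = strassen(sub(A12, A22), add(B21, B22), cutoff)
    C11 = add(sub(add(M1, M4), M5), M7)
    C12 = add(M3, M5)
    C21 = add(M2, M4)
    C22 = add(add(sub(M1, M2), M3), M6)
    return [a + b for a, b in zip(C11, C12)] + [a + b for a, b in zip(C21, C22)]
```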

3.3 Recursive Algorithm Using Triangular Systems Solving (RATSS)

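In this version, we replace the two matrix products by the solution of two triangular matrix systems of size n/2 (see Sect. 3.1): \(B_1 = A_1^{-1}\) and \(B_3 = A_3^{-1}\) are computed recursively, Q is obtained by solving \(A_3Q = A_2\), and \(B_2\) by solving \(A_1^{T}B_2^{T} = -Q^{T}\).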
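The steps of RATSS can be sketched in Python as follows. This is a hedged illustration, not the authors' implementation: the two triangular solves use plain substitution rather than the recursive trsm discussed next, matrices are lists of rows, and all function names and the `threshold` parameter are our own. The right-hand solve \(B_2A_1 = -Q\) is done directly row by row, which is equivalent to solving \(A_1^{T}B_2^{T} = -Q^{T}\).

```python
def solve_lower_left(L, B):
    """Solve L X = B (L lower triangular) by forward substitution, column by column."""
    n, p = len(L), len(B[0])
    X = [[0.0] * p for _ in range(n)]
    for c in range(p):
        for i in range(n):
            s = sum(L[i][k] * X[k][c] for k in range(i))
            X[i][c] = (B[i][c] - s) / L[i][i]
    return X


def solve_lower_right(L, C):
    """Solve X L = C (L lower triangular), i.e. L^T X^T = C^T, row by row."""
    n = len(L)
    X = []
    for c in C:
        x = [0.0] * n
        for j in reversed(range(n)):  # back substitution on L^T (upper triangular)
            s = sum(x[k] * L[k][j] for k in range(j + 1, n))
            x[j] = (c[j] - s) / L[j][j]
        X.append(x)
    return X


def invert_lower_ratss(A, threshold=1):
    """RATSS-style inversion of a nonsingular lower triangular matrix."""
    n = len(A)
    if n <= max(threshold, 1):
        identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
        return solve_lower_left(A, identity)
    h = n // 2
    A1 = [r[:h] for r in A[:h]]
    A2 = [r[:h] for r in A[h:]]
    A3 = [r[h:] for r in A[h:]]
    B1 = invert_lower_ratss(A1, threshold)   # B1 = A1^{-1}
    B3 = invert_lower_ratss(A3, threshold)   # B3 = A3^{-1}
    Q = solve_lower_left(A3, A2)             # A3 Q = A2
    B2 = solve_lower_right(A1, [[-v for v in r] for r in Q])  # B2 A1 = -Q
    return ([B1[i] + [0.0] * (n - h) for i in range(h)]
            + [B2[i] + B3[i] for i in range(n - h)])
```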

- TSS algorithm

We now discuss the implementation of solvers for triangular systems with a matrix right-hand side (or, equivalently, left-hand side). This kernel is commonly named trsm in the BLAS convention. In the following, we consider, without loss of generality, the solution of a lower triangular system with a matrix right-hand side (\(AX = B\)). Our implementation is based on a block recursive algorithm that reduces the computations to matrix multiplications [8, 9].
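One common way to organize such a block recursion is sketched below in Python (an illustration under our own naming, not the code of [8, 9]). Splitting L into \(\begin{pmatrix} L_1 & 0 \\ L_2 & L_3 \end{pmatrix}\) and B into row blocks \(B_1, B_2\) gives \(L_1X_1 = B_1\) and \(L_3X_2 = B_2 - L_2X_1\), so all off-diagonal work is the product \(L_2X_1\), where a fast MM could be plugged in. The hypothetical `threshold` parameter switches to plain forward substitution.

```python
def matmul(X, Y):
    cols = list(zip(*Y))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in X]


def forward_substitution(L, B):
    """Solve L X = B column by column (base case)."""
    n, p = len(L), len(B[0])
    X = [[0.0] * p for _ in range(n)]
    for c in range(p):
        for i in range(n):
            s = sum(L[i][k] * X[k][c] for k in range(i))
            X[i][c] = (B[i][c] - s) / L[i][i]
    return X


def trsm_lower(L, B, threshold=2):
    """Solve L X = B recursively, L (n x n) lower triangular, B (n x p) dense."""
    n = len(L)
    if n <= max(threshold, 1):
        return forward_substitution(L, B)
    h = n // 2
    L1 = [r[:h] for r in L[:h]]
    L2 = [r[:h] for r in L[h:]]
    L3 = [r[h:] for r in L[h:]]
    X1 = trsm_lower(L1, B[:h], threshold)           # L1 X1 = B1
    P = matmul(L2, X1)                              # the MM step
    R = [[b - v for b, v in zip(rb, rp)] for rb, rp in zip(B[h:], P)]
    X2 = trsm_lower(L3, R, threshold)               # L3 X2 = B2 - L2 X1
    return X1 + X2
```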

3.4 Algorithms Complexity

As is well known, the complexity of Strassen’s algorithm is \(\mathrm{MM}(n) = O(n^{\log_2 7})\) [7].

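Besides, the cost RAMM(n) satisfies the following recurrence formula:

\(\mathrm{RAMM}(n) = 2\,\mathrm{RAMM}(n/2) + 2\,\mathrm{TRMM}(n/2)\)

Since \(\mathrm{TRMM}(n/2) = O(n^{\log_2 7})\) (shown below) and \(2 < 2^{\log_2 7} = 7\), the master theorem (case \(a < b^{\delta}\) with \(a = b = 2\) and \(\delta = \log_2 7\)) gives:

\(\mathrm{RAMM}(n) = O(n^{\log_2 7})\)

Following a similar way, with \(a = 4\), \(b = 2\) and \(4 < 7\), we prove that \(\mathrm{TRMM}(n) = O(n^{\log_2 7})\).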
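To see these recurrences at work numerically, the Python sketch below counts scalar multiplications under a simplified cost model of our own: Strassen recursed down to 1 × 1 blocks, additions ignored, and one division for a 1 × 1 inverse. It encodes the recurrences for MM, TRMM (four recursive calls, two MMs) and RAMM (two recursive calls, two TRMMs) stated in Sect. 3.2.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def mm(n):
    """Multiplications of Strassen's algorithm down to 1 x 1: MM(n) = 7 MM(n/2)."""
    return 1 if n == 1 else 7 * mm(n // 2)


@lru_cache(maxsize=None)
def trmm(n):
    """TRMM(n) = 4 TRMM(n/2) + 2 MM(n/2); block additions are not counted."""
    return 1 if n == 1 else 4 * trmm(n // 2) + 2 * mm(n // 2)


@lru_cache(maxsize=None)
def ramm(n):
    """RAMM(n) = 2 RAMM(n/2) + 2 TRMM(n/2); a 1 x 1 inverse costs one division."""
    return 1 if n == 1 else 2 * ramm(n // 2) + 2 * trmm(n // 2)


if __name__ == "__main__":
    for q in range(1, 11):
        n = 2 ** q
        # Doubling n multiplies an O(n^log2(7)) cost by a factor tending to 7.
        print(n, trmm(n), ramm(n), round(ramm(n) / ramm(n // 2), 3))
```

Solving the TRMM recurrence exactly under this model gives \(\mathrm{TRMM}(2^{q}) = (2\cdot 7^{q} + 4^{q})/3\), i.e. \(\tfrac{2}{3}n^{\log_2 7} + \tfrac{1}{3}n^{2}\), consistent with the \(O(n^{\log_2 7})\) bound.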

4 Dense Matrix Inversion

4.1 LU Factorization

As previously mentioned, three alternative methods may be used to perform DMI through LU factorization (LUF). The first one requires two triangular matrix inversions (TMI) and one triangular matrix multiplication (TMM), namely of an upper triangular matrix by a lower one. The two others each require one TMI and the solution of a triangular matrix system (TSS) with a matrix right-hand side or, equivalently, left-hand side (Algorithm 4). Our aim is to optimize the LUF, TMI and TMM kernels [10].
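Two of these LU-based procedures are sketched below in Python: two TMIs followed by the product \(X = U^{-1}L^{-1}\), and one TMI of L followed by the TSS \(UX = L^{-1}\). The sketch makes assumptions not in the text: an unpivoted Doolittle LU (LAPACK's dgetrf uses partial pivoting), so the input must have nonzero leading principal minors (e.g. be strictly diagonally dominant); simple substitution kernels; and a general product standing in for the TMM kernel. All function names are hypothetical and are not the authors' MILU_1/MILU_2 code.

```python
def lu_doolittle(A):
    """Unpivoted LU factorization A = L U with unit lower triangular L."""
    n = len(A)
    L = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    U = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):
            U[i][j] = A[i][j] - sum(L[i][k] * U[k][j] for k in range(i))
        for j in range(i + 1, n):
            L[j][i] = (A[j][i] - sum(L[j][k] * U[k][i] for k in range(i))) / U[i][i]
    return L, U


def inv_lower(L):
    """TMI for a lower triangular matrix (standard column-by-column algorithm)."""
    n = len(L)
    B = [[0.0] * n for _ in range(n)]
    for j in range(n):
        B[j][j] = 1.0 / L[j][j]
        for i in range(j + 1, n):
            B[i][j] = -sum(L[i][k] * B[k][j] for k in range(j, i)) / L[i][i]
    return B


def transpose(M):
    return [list(col) for col in zip(*M)]


def inv_upper(U):
    """TMI for an upper triangular matrix via (U^{-1})^T = (U^T)^{-1}."""
    return transpose(inv_lower(transpose(U)))


def matmul(X, Y):
    cols = list(zip(*Y))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in X]


def solve_upper_left(U, B):
    """TSS: solve U X = B by back substitution, column by column."""
    n, p = len(U), len(B[0])
    X = [[0.0] * p for _ in range(n)]
    for c in range(p):
        for i in reversed(range(n)):
            s = sum(U[i][k] * X[k][c] for k in range(i + 1, n))
            X[i][c] = (B[i][c] - s) / U[i][i]
    return X


def inverse_two_tmi(A):
    """Two TMIs and one product: X = U^{-1} L^{-1}."""
    L, U = lu_doolittle(A)
    return matmul(inv_upper(U), inv_lower(L))


def inverse_one_tmi(A):
    """One TMI and one TSS: invert L, then solve U X = L^{-1}."""
    L, U = lu_doolittle(A)
    return solve_upper_left(U, inv_lower(L))
```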

4.2 Recursive LU Factorisation

To reduce the complexity of LU factorization, blocked algorithms were proposed in 1974 [11]. For a given matrix A of size n, the factors L and U satisfying \(A = LU\) may be computed as follows:
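A recursive blocked LU factorization of the kind referred to above can be sketched in Python as follows. This sketch omits pivoting for brevity, so it assumes nonzero leading principal minors (e.g. a strictly diagonally dominant matrix); the block formulas are standard, but the function names are ours and it is not presented as the algorithm of [11]. Writing \(\begin{pmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{pmatrix} = \begin{pmatrix} L_{11} & 0 \\ L_{21} & L_{22} \end{pmatrix}\begin{pmatrix} U_{11} & U_{12} \\ 0 & U_{22} \end{pmatrix}\), the work reduces to two half-size factorizations, two TSSs and one MM.

```python
def matmul(X, Y):
    cols = list(zip(*Y))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in X]


def solve_lower_left(L, B):
    """Solve L X = B (L lower triangular) by forward substitution."""
    n, p = len(L), len(B[0])
    X = [[0.0] * p for _ in range(n)]
    for c in range(p):
        for i in range(n):
            s = sum(L[i][k] * X[k][c] for k in range(i))
            X[i][c] = (B[i][c] - s) / L[i][i]
    return X


def solve_upper_right(U, C):
    """Solve X U = C (U upper triangular) row by row."""
    n = len(U)
    X = []
    for c in C:
        x = [0.0] * n
        for j in range(n):
            s = sum(x[k] * U[k][j] for k in range(j))
            x[j] = (c[j] - s) / U[j][j]
        X.append(x)
    return X


def lu_recursive(A):
    """Recursive blocked LU without pivoting: A = L U, L unit lower triangular."""
    n = len(A)
    if n == 1:
        return [[1.0]], [[float(A[0][0])]]
    h, m = n // 2, n - n // 2
    A11 = [r[:h] for r in A[:h]]
    A12 = [r[h:] for r in A[:h]]
    A21 = [r[:h] for r in A[h:]]
    A22 = [r[h:] for r in A[h:]]
    L11, U11 = lu_recursive(A11)
    U12 = solve_lower_left(L11, A12)     # L11 U12 = A12  (TSS)
    L21 = solve_upper_right(U11, A21)    # L21 U11 = A21  (TSS)
    P = matmul(L21, U12)                 # MM
    S = [[a - v for a, v in zip(ra, rp)] for ra, rp in zip(A22, P)]  # Schur complement
    L22, U22 = lu_recursive(S)
    L = [L11[i] + [0.0] * m for i in range(h)] + [L21[i] + L22[i] for i in range(m)]
    U = [U11[i] + U12[i] for i in range(h)] + [[0.0] * h + U22[i] for i in range(m)]
    return L, U
```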

4.3 Triangular Matrix Multiplication (TMM)

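Blockwise multiplication of an upper triangular matrix A by a lower triangular matrix B can be depicted as follows:

\(\begin{pmatrix} A_1 & A_2 \\ 0 & A_3 \end{pmatrix}\begin{pmatrix} B_1 & 0 \\ B_2 & B_3 \end{pmatrix} = \begin{pmatrix} A_1B_1 + A_2B_2 & A_2B_3 \\ A_3B_2 & A_3B_3 \end{pmatrix}\)

Thus, to compute the dense matrix \(C = AB\) of size n, we need:

- Two triangular matrix multiplications (an upper one by a lower one) of size n/2, namely \(A_1B_1\) and \(A_3B_3\)

- Two multiplications of a triangular matrix by a dense one (TRMM) of size n/2, namely \(A_2B_3\) and \(A_3B_2\)

- One dense matrix multiplication (MM) of size n/2, namely \(A_2B_2\)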
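The upper-by-lower block product can be sketched recursively in Python as follows (a hedged illustration with our own names; matrices are lists of rows). For brevity the two TRMM steps and the MM step use a classical `matmul`; in the approach described in this paper they would call the TRMM kernel of Sect. 3.2 and a fast MM, respectively. The hypothetical `threshold` parameter sets the size below which a plain product is used.

```python
def matmul(X, Y):
    cols = list(zip(*Y))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in X]


def add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def tmm_upper_lower(A, B, threshold=1):
    """C = A B with A upper and B lower triangular (n x n), by recursive halving.

    [[A1, A2], [0, A3]] @ [[B1, 0], [B2, B3]]
        = [[A1 B1 + A2 B2, A2 B3], [A3 B2, A3 B3]]
    """
    n = len(A)
    if n <= max(threshold, 1):
        return matmul(A, B)
    h = n // 2
    A1 = [r[:h] for r in A[:h]]
    A2 = [r[h:] for r in A[:h]]
    A3 = [r[h:] for r in A[h:]]
    B1 = [r[:h] for r in B[:h]]
    B2 = [r[:h] for r in B[h:]]
    B3 = [r[h:] for r in B[h:]]
    C11 = add(tmm_upper_lower(A1, B1, threshold), matmul(A2, B2))  # TMM + MM
    C12 = matmul(A2, B3)   # dense x lower triangular (a TRMM)
    C21 = matmul(A3, B2)   # upper triangular x dense (a TRMM)
    C22 = tmm_upper_lower(A3, B3, threshold)                       # TMM
    return [a + b for a, b in zip(C11, C12)] + [a + b for a, b in zip(C21, C22)]
```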

Clearly, if any matrix-matrix multiplication algorithm of complexity \(O(n^{\log_2 7})\) is used, then the algorithms previously presented have the same \(O(n^{\log_2 7})\) complexity, instead of the \(O(n^{3})\) complexity of the standard algorithms.

5 Experimental Study

5.1 TMI Algorithm

This section presents experiments with our implementations of the different versions of triangular matrix inversion described above. We determine the optimal number of recursive levels for each one (as already noted, it depends on the matrix size and the target architecture, and must be determined experimentally). These experiments, as well as the following ones on DMI, use the BLAS library at the last recursion level and were run on a 3 GHz PC with 4 GB of RAM. We used the g++ compiler under Ubuntu 11.01.

We recall that dtrtri refers to the LAPACK triangular matrix inversion routine in double-precision floating point. Our routines are named RAMM and RATSS (see Fig. 3).

We notice that, as the matrix size increases, RATSS becomes even more efficient than dtrtri (improvement between 15 and 24 %). On the other hand, dtrtri outperforms RAMM (see Table 1).

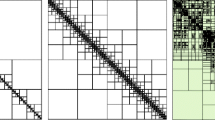

5.2 DMI Algorithm

Table 2 compares the LU factorization-based algorithms, i.e. MILU_1 (one TMI and the solution of one triangular matrix system) and MILU_2 (two TMIs and one TMM), with the LAPACK routine dgetri, used in combination with the factorization routine dgetrf to obtain the matrix inverse (see Fig. 4).

We remark that the time ratio increases with the matrix size, i.e. MILU_1 and MILU_2 become more and more efficient than the LAPACK routines (the speed-up, i.e. the time ratio, reaches 4.4 and beyond).

6 Conclusion and Future Work

In this paper, we aimed at, and achieved, outperforming the well-known BLAS library for triangular and dense matrix inversion. Our recursive algorithms essentially benefit from the recursive Strassen matrix multiplication algorithm, recursive solvers for triangular systems, and the use of BLAS routines at the last recursion level. This performance was achieved thanks to (i) an efficient reduction to matrix multiplication in which we optimized the number of recursive decomposition levels, and (ii) reusing numerical computing libraries as much as possible.

These results open some attractive perspectives that we intend to study in the future, in particular:

- Carry out an experimental study on larger matrices.

- Study the numerical stability of these algorithms.

- Generalize our approach to other linear algebra kernels.

References

[1] Quarteroni A, Sacco R, Saleri F (2007) Méthodes numériques. Algorithmes, analyse et applications. Springer, Milan

[2] Heller D (1978) A survey of parallel algorithms in numerical linear algebra. SIAM Rev 20:740–777

[3] Nasri W, Mahjoub Z (2002) Design and implementation of a general parallel divide and conquer algorithm for triangular matrix inversion. Int J Parallel Distrib Syst Netw 5(1):35–42

[4] Aho AV, Hopcroft JE, Ullman JD (1975) The design and analysis of computer algorithms. Addison-Wesley, Reading

[5] Mahfoudhi R (2012) A fast triangular matrix inversion. In: Lecture notes in engineering and computer science: Proceedings of the world congress on engineering 2012, WCE 2012, London, UK, 4–6 July 2012, pp 100–102

[6] Huss-Lederman S, Jacobson EM, Johnson JR, Tsao A, Turnbull T (1996) Implementation of Strassen’s algorithm for matrix multiplication. In: Supercomputing ’96: Proceedings of the ACM/IEEE conference on supercomputing (CDROM)

[7] Strassen V (1969) Gaussian elimination is not optimal. Numer Math 13:354–356

[8] Andersen BS, Gustavson F, Karaivanov A, Wasniewski J, Yalamov PY (2000) LAWRA: Linear algebra with recursive algorithms. Lecture notes in computer science, vol 1823, pp 629–632

[9] Dumas JG, Pernet C, Roch JL (2006) Adaptive triangular system solving. In: Proceedings of the challenges in symbolic computation software

[10] Mahfoudhi R, Mahjoub Z (2012) A fast recursive blocked algorithm for dense matrix inversion. In: Proceedings of the 12th international conference on computational and mathematical methods in science and engineering, CMMSE 2012, La Manga, Spain

[11] Aho AV, Hopcroft JE, Ullman JD (1974) The design and analysis of computer algorithms. Addison-Wesley, Reading


© 2013 Springer Science+Business Media Dordrecht

About this chapter

Cite this chapter

Mahfoudhi, R., Mahjoub, Z. (2013). On Fast Algorithms for Triangular and Dense Matrix Inversion. In: Yang, GC., Ao, Sl., Gelman, L. (eds) IAENG Transactions on Engineering Technologies. Lecture Notes in Electrical Engineering, vol 229. Springer, Dordrecht. https://doi.org/10.1007/978-94-007-6190-2_4


DOI: https://doi.org/10.1007/978-94-007-6190-2_4


Publisher Name: Springer, Dordrecht

Print ISBN: 978-94-007-6189-6

Online ISBN: 978-94-007-6190-2

eBook Packages: Engineering, Engineering (R0)