Abstract

In this paper the author discusses, from a historical perspective, engineers' understanding of the strength and displacement demands imposed on structures by earthquake motion, and of the capacity of structures to withstand these demands. Without any claim of completeness or of an accurate critical assessment of the significance of individual seismic events or of the scientific development of knowledge, the lessons learnt from some seismic events are critically examined in parallel with the development of structural dynamics. The story moves from the claimed use of a base isolation measure in the temple of Diana at Ephesus in the sixth century B.C., continues with the Renaissance treatises, only one of which places some emphasis on how to build a safe structure, and, passing through the first understanding of dynamic equilibrium, arrives at the Age of Enlightenment and the Lisbon earthquake of 1755. The breakthrough towards modern seismic analysis is clearly identified with the two earthquakes of San Francisco (1906) and Messina (1908). In particular, it is discussed how most of the fundamental principles used for a century had already been stated after the second one. Spectra, ductility and performance-based design are then identified as further milestones derived from earthquake evidence. The paper concludes with a critical appraisal of some major misunderstandings of structural response and of the merits of displacement-based approaches, and eventually closes the circle opened with the temple of Diana by turning to modern base isolation techniques.

Graece magnificentiae vera admiratio exstat templum Ephesiae Dianae CXX annis factum a tota Asia. In solo id palustri fecere, ne terrae motus sentiret aut hiatus timeret rursus ne in lubrico atque instabili fundamenta tantae molis locarentur, calcatis ea substravere carbonibus, dein velleribus lanae 1.

Pliny, Naturalis Historia, Liber XXXVI, xxi, 95 [A true marvel of Greek magnificence is the temple of Diana at Ephesus, built over 120 years with the contribution of all Asia. It was built on marshy soil so that it would not feel earthquakes or fear fissures in the ground; and, so that the foundations of so great a mass would not rest on slippery and unstable ground, layers of trodden charcoal and then of wool fleeces were laid beneath them].

This paper was the subject of the inaugural lecture for the beginning of the academic year at the Università degli Studi di Pavia on 18 January 2010 (1185th year from the Capitolare of Lotario, 649th from the Studium Generale institution), “without any claim of completeness or accurate critical assessment of the significance of various seismic events or of the scientific and technical development of knowledge, but instead illustrating a history of events and ideas seen from the point of view of the author, influenced by his training, the places in which he has lived, the master teachers he had”.


Pliny the Elder does not explain why building the temple of Diana at Ephesus (Fig. 10.1) on marshy ground should have spared it from earthquakes, or what function the layers of charcoal and wool fleeces laid underneath the foundations had. So it comes as no surprise that for centuries builders and scientists alike forgot about this passage and resigned themselves to seeing earthquakes essentially as divine punishment, humbly accepting deaths and collapses, without even wondering whether it was possible to build structures in such a way as to limit the damage, and without realising that, ultimately, it is houses and bridges that collapse and cause deaths, not woods and meadows.

Renaissance treatises offer a number of examples of this scientifically curious but essentially useless approach. One example, representative of them all, is the treatise that Stefano Breventano, the caretaker of the Accademia degli Affidati, wrote in Pavia in 1576 following the Ferrara earthquake of 1570. The text discusses seismogenesis (What an earthquake is and what causes it), drastically concluding that the principal cause of an earthquake is God; wave motion (How many kinds of earthquake there are); warning signs (Signs, which precede the earthquake); duration (The duration of earthquakes); dangerousness and amplification of the movement (Places which are more or less susceptible to earthquakes); and effects (Under which weather conditions are earthquakes most likely to occur, and The effects that earthquakes cause). It dedicates only half a page, however, to the topic of reducing vulnerability and risk (Remedies or protection against earthquakes), drawing on some of the instructions given by Pliny (him again) [Footnote 1].

It is curious to observe that there was a preference for debating theories on the causes of earthquakes, which had already been developed by Greek philosophers, rather than learning how to build.

The only exception can be found in a work by Pirro Ligorio [Footnote 2] (also written after the Ferrara earthquake mentioned earlier), in which the author, an architect, designed a seismic-resistant building (Fig. 10.2). This was merely a matter of implementing geometric rules of proportion and structural details for the connection between the walls and the floors, which would nonetheless have considerably reduced the number of victims and the amount of damage if they had been systematically implemented in the construction of historic buildings.

The analytical concepts that would form the basis of seismic engineering until the second half of the twentieth century were set out in domains that had nothing to do with earthquakes, within the course of the 10 years from 1678 to 1687.

Between 1675 and 1679 Robert Hooke announced to the Royal Society that he had discovered the fundamental law of elasticity, published it in the form of an anagram (CEIIINOSSSTTVV) [Footnote 3] and revealed its meaning with the formula “vt tensio sic vis” (Fig. 10.3). The theory of proportionality between force and elongation constituted a fundamental concept for progress in many areas of technology, including the science of construction, but, as we shall see, it subsequently became a heavy burden that hindered the logical development of seismic engineering.

The fundamental law of elasticity as presented by Hooke, at point 3 (on the left, the front page of the document). Note that point 2 describes the principle for designing a perfectly compressed arch, whose geometry should correspond to the inverse of that of a flexible string carrying the loads. The meaning of the anagram “abcccddeeeeeeiiiiiiiiillmmmmnnnnprrsssttttttuuuuuuuux” was revealed after his death, in 1705: “ut pendet continuum flexile, sic stabit contiguum rigidum inversum”.

In 1687 Newton, partially drawing on Galileo [Footnote 4], set out the three fundamental principles of dynamics [Footnote 5], and I hope physics teachers will forgive me if in this context I summarise them by saying that he essentially expressed the concepts of equilibrium and of proportionality between force and acceleration, in accordance with a constant property of the object to which the force is applied, i.e. the mass.

Therefore, from the end of the 1600s, engineers, who had already existed for centuries [Footnote 6] though they did not yet have the formal training of university courses, had at their disposal all the tools required to design an earthquake-resistant structure, in accordance with the criteria that would then be used for the majority of the twentieth century. They just didn’t know it yet.

It is in this context that the “Age of Enlightenment” began, with the battle between a dynamic optimism that believed in the progression of knowledge, and the consequent possibility of improving every aspect of human life, and the theological optimism of Leibniz and Pope, who maintained that the created world was perfect and that it was impossible to improve any aspect of divine creation. The Lisbon earthquake of 1755 broadened and aggravated the debate. Voltaire, in what we would now call an “instant book” [Footnote 7], wondered whether Pope would have dared to declare that all that is, is for the best if he had lived in Lisbon. Rousseau noted that if people insisted on wanting to live in cities and build houses of six or seven storeys they should blame themselves, not God, for the consequences of earthquakes [Footnote 8].

It is again surprising to note that the only comment of any practical value came from a utopian philosopher. Earthquakes continued to be the subject of debate among thinkers, not builders.

Not surprisingly, there were now university courses for engineers, but these were generally taught in philosophy faculties (in Pavia from 17867).

Two earthquakes once again came as a wake-up call, in San Francisco [Footnote 9] in 1906 and in Messina [Footnote 10] in 1908. In the first case it is interesting to analyse the logic and techniques applied in the reconstruction: the army built 5,610 small houses in a very short space of time, which were rented for 2 dollars a month, up to a maximum of 50 dollars to acquire ownership (Fig. 10.4).

The second was much more important from the point of view of the progress of science.

In the case of the Messina earthquake there was considerable debate regarding how to go about the reconstruction. Three weeks after the earthquake, the Monitore Tecnico [Footnote 11] stated: “An error, for example, that we believe to see appearing on the horizon as a great danger, is that which corresponds to the ideas set out by the hon. Mr Bertolini, Minister of Public Works, with regard to the reconstruction of the towns that have been destroyed. He has suggested ruling out temporary constructions and instead adopting permanent buildings. Just how wrong this idea is can be demonstrated by a complex series of reasons. Above all, it would be unwise to construct permanent buildings straightaway, before seriously and in an in-depth manner studying the construction methods that should be adopted in order to guarantee that new buildings will certainly be able to resist any future seismic movements. These construction methods need to be discussed at length by the experts, and the need cannot be met by the suggestions, no doubt mainly theoretical, that may come from the Commission appointed by the Minister […]”.

Clearly, everything had changed.

And while we will discuss a bit later how history has repeated itself in recent years, for now I wish to point out that in less than 4 months a Royal Decree was published containing the new Technical Standards [Footnote 12], in which (article 24) it is explicitly stated that when calculating the stability and resistance of buildings, the following must be taken into consideration: 1° the static actions due to the building’s own weight and overloading, increased by a percentage that represents the effect of the vertical vibrations; 2° the dynamic actions due to the horizontal seismic movement, representing them with accelerations applied to the masses of the building in two directions […].

Subsequently, the various commissions created went well beyond the expected predominantly theoretical suggestions, publishing, among others, in the Giornale del Genio Civile [Footnote 13] a brief summary of modern seismic engineering, in which the following concepts are cited as fundamental:

1° The theory that the effects of the dynamic actions on building elements can be compared to those produced by forces proportional to the masses, in both the horizontal and the vertical direction (in other words, engineers had finally discovered Newton and the forces of inertia);

2° The opportunity, for the calculation of these forces, to refer to the proportions of the buildings that have proven to satisfactorily bear seismic shocks with considerable destructive power (in other words, since numerical data to be used to estimate the accelerations experienced by the structural masses are not available, let’s rely on a kind of back analysis in order to decide on the design value);

3° The suitability, imposed by considerations of an economic nature, of allowing, for horizontal seismic forces, a greater tolerance with respect to the limits normally adopted as safety levels, in light of the exceptional nature of the actions and of the advantages of avoiding excessive stiffness (in other words, let’s accept a certain level of damage for the design earthquake, since we cannot afford to plan for an event that may happen in several centuries’ time);

4° The preferred way to put this tolerance into action by undervaluing the horizontal seismic forces, reducing them to around 1/3 of their value (in other words, a reasonable numerical estimate is given to what we now call the behaviour or force reduction factor);

5° The confidence that the margin provided by these safely estimated loads offers […] a sufficient guarantee of safety to people if not of absolute integrity to buildings (in other words, the first performance-based design logic was defined).

The text continues with points 6, 7, 8 and 9, but I am going to stop there.

One of the commissions drew up, among others, a report (July 1909) [Footnote 14] that can be considered to be the forerunner of the earthquake hazard maps and the geological and geotechnical criteria to be applied in order to reduce seismic risk. Professor Torquato Taramelli made a significant contribution to this work and, together with the younger Oddone and Baratta, formed the then great Pavia school of seismology.

It is also worth mentioning that the suggestions of a commission focused on seismological elements continually referred to the Technical Standards, offering the necessary support to the calculations, in accordance with a pragmatically effective logic which did not always form the basis of later studies, particularly those carried out in the second half of the last century.

But let’s get back to the engineers.

It is now clear:

- that the action of the earthquake can be represented with a series of horizontal forces obtained by multiplying the masses ($M$) by the relative accelerations ($a_M$):

$$V_{{\textrm{base}}} = \Sigma M_i a_{Mi}$$

- that these forces must be balanced by the resistance of the structural elements, calculated using Hooke’s law, or basically writing the equation of motion as an equilibrium equation in which the force of inertia (mass by acceleration) is countered by an elastic force (stiffness, $K$, by displacement, $d$):

$$M \times a = K \times d$$

The problem is defining the design ground acceleration (and seismologists will try to solve this problem) and the amplification factor required to go from the acceleration of the ground to that of the structural masses.

The mass acceleration values indicated in various technical circulars published together with the standards were first set between 1/12 and 1/8 of gravity [Footnote 14], then increased to values between 1/8 and 1/6 [Footnote 15] (for vertical actions an increase in weight of 50% is suggested). These are low values, essentially based on impressions (“at the current state of knowledge […] it appears that the stability of a building can be believed to be sufficiently guaranteed […]” [Footnote 14]).
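
As a rough illustration of what these coefficients imply, the short sketch below evaluates the base shear $V_{\textrm{base}} = \Sigma M_i a_{Mi}$ for the mass accelerations quoted above. The three-storey building and its floor masses are hypothetical, and the same acceleration is assumed on every mass, which is a simplification made here and not a rule taken from the 1909 standards.

```python
G = 9.81  # gravitational acceleration in m/s^2


def base_shear(masses_kg, seismic_coefficient):
    """Return V_base = sum(M_i * a_Mi), assuming a_Mi = coefficient * g on every mass."""
    acceleration = seismic_coefficient * G
    return sum(mass * acceleration for mass in masses_kg)


# Hypothetical three-storey building with 100 t per floor (illustrative only).
floor_masses = [100_000.0, 100_000.0, 100_000.0]

for label, coefficient in [("1/12", 1 / 12), ("1/8", 1 / 8), ("1/6", 1 / 6)]:
    shear_kn = base_shear(floor_masses, coefficient) / 1000.0
    print(f"mass acceleration {label} g -> base shear of about {shear_kn:.0f} kN")
```

With equal accelerations on all masses the base shear is simply the chosen fraction of the total weight, which is why the choice of that fraction dominated the early debate.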

Another earthquake occurred to teach us more.

This time it was the El Centro earthquake [Footnote 16], one of the first cases in which recorded experimental data were available, which were obviously analogue. It was a violent earthquake, with a magnitude around 7. Acceleration peaks of 0.319 g and maximum ground displacements of 212 mm were recorded. These values would remain significant as an indication of the demand of a strong earthquake for decades, in particular with regard to acceleration, which, as we have seen, was considered to be the fundamental design parameter.

Based on these instrumental data, Maurice Biot perfected the solution to the second problem, the passage from acceleration of the ground to acceleration of the structure, using the concept of a response spectrum [Footnote 17], which enables us to calculate an amplification coefficient using a single structural parameter, the fundamental vibration period of the structure.

Many people would continue working on response spectra, from George Housner [Footnote 18], a doctoral student at Caltech in 1940, to Nathan Newmark and Bill Hall at Urbana [Footnote 19], moving from response spectra to design spectra, from linear responses to non-linear responses, from deterministic approaches to probabilistic approaches, from accelerations to velocities and displacements (Fig. 10.5).

Typical values of the maximum amplification were identified at around 2.5 to 3.0, which, when applied to ground accelerations of 0.3 to 0.4 g, can mean horizontal forces greater than those due to gravity on a system with linear behaviour.
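
The arithmetic behind this statement can be checked directly. The sketch below multiplies the ground accelerations and amplification values quoted above and, for comparison, applies the reduction to about one third suggested after the Messina earthquake; the function name is chosen here purely for illustration.

```python
def mass_acceleration(ground_acceleration_g, amplification):
    """Acceleration of the structural mass, in units of g, for a linear system."""
    return ground_acceleration_g * amplification


for pga in (0.3, 0.4):
    for amplification in (2.5, 3.0):
        elastic = mass_acceleration(pga, amplification)
        reduced = elastic / 3.0  # reduction to about one third of the elastic value
        print(f"ground {pga:.1f} g x {amplification:.1f} -> elastic {elastic:.2f} g, "
              f"reduced design value {reduced:.2f} g")
```

Only the upper combinations reach or exceed 1 g, which is consistent with the cautious wording ("can mean") of the text.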

The wisdom of the 1908 Earthquake Commission in suggesting a reduction to a third of the forces estimated from mass and acceleration, which were then unknown, now seems evident. Nevertheless, conventional approaches based essentially on the impressions of experts continued to be applied the world over, and in Italy in particular, rather than proceeding in this direction, i.e. acknowledging the values that must be dealt with and accepting justified reductions, which obviously involve damage.

Once again, the earthquake that started the new revolution in seismic engineering took place in California, not far from El Centro, in San Fernando [Footnote 20].

The most striking cases are a dam, two motorway junctions and two hospitals.

At the dam (Pacoima Dam) ground accelerations greater than 1 g were recorded.

The case of the Olive View UCLA Medical Center (Fig. 10.6), opened a month earlier, became the subject of studies in all seismic engineering centres, including those in Italy, which, however, didn’t really wake up to this subject until 5 years later, with the Friuli earthquake [Footnote 21].

The essential element that characterises the research activity and its translation into design standards consists of recognising the inadequacy of representing the behaviour of structures through linear laws. In effect, the acceptance of a non-linear behaviour, and therefore of the manifestation of structural damage, was implicitly involved in both reducing the design resistance to a third of the forces of inertia and designing for conventional values, possibly ten times lower than the expected demand. The point was therefore rather to recognise that structures designed according to similar criteria can give rise to completely different results: they can collapse or can resist the violence of an earthquake with no problems. Credit and blame were quickly attributed to the greater or smaller capacity of a structure to deform plastically after having reached its yield level.

The concept of displacement ductility ($\mu$) was defined as the ratio between the displacement at collapse ($\Delta_u$) and the yield displacement ($\Delta_y$), i.e. $\mu = \Delta_u / \Delta_y$.

Ductility became the fundamental myth of every research study, every standard, every application.

Rather than simply acknowledging the possibility of comparing demand and capacity in terms of displacements, people turned to ductility as a corrective parameter of strength. Structures continued to be designed and checked with a comparison between forces, modified depending on the deformation capacity. The design logic typical of actions due to gravity, which is always present, continued to be applied to actions assumed to take place once in 500 years. After the myth of the linear response of structures, new myths were born, which were even harder to demolish [Footnote 22]:

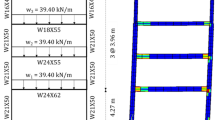

The myth of ductility and the behaviour factor. In every part of the world studies on ductility were carried out and definitions and conventions were created. In reality, the conventional definitions of displacement at the elastic limit and at collapse meant that the same term covered values that could differ from one another by a factor of three or four. On the basis of conventional ductility a force reduction factor was defined, which, on an apparently more rational basis, drew on the reduction coefficient introduced after the Messina earthquake. The reduction factor was applied to the entire structure, without taking into account that the ductilities of different structural elements can vary considerably. A typical and striking case is that of a bridge with piers of different heights, in which it is easy to demonstrate that it is impossible to attribute the same ductility to different piers (Fig. 10.7).
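
The bridge example can be made concrete with a small sketch. It assumes cantilever piers of equal diameter forced to the same deck displacement, a yield curvature of $2.25\,\varepsilon_y / D$ for a circular section, and a linear curvature profile up the pier. These modelling choices and all numerical values are assumptions introduced here for illustration; they are not taken from the text.

```python
def pier_yield_displacement(height_m, diameter_m, yield_strain=0.0025):
    """Yield displacement of a cantilever pier.

    Assumptions (not from the text): yield curvature 2.25 * eps_y / D for a
    circular section and a linear curvature profile, giving phi_y * H^2 / 3.
    """
    yield_curvature = 2.25 * yield_strain / diameter_m
    return yield_curvature * height_m ** 2 / 3.0


def ductility_demand(deck_displacement_m, height_m, diameter_m):
    """Displacement ductility demand mu = displacement / yield displacement."""
    return deck_displacement_m / pier_yield_displacement(height_m, diameter_m)


deck_displacement = 0.20  # hypothetical displacement imposed by the deck, in m
for height in (5.0, 10.0, 20.0):  # hypothetical pier heights, same 2 m diameter
    mu = ductility_demand(deck_displacement, height, 2.0)
    state = "yields" if mu > 1.0 else "stays elastic"
    print(f"H = {height:4.1f} m: ductility demand {mu:.2f} ({state})")
```

Under these assumptions the yield displacement grows with the square of the height, so halving the pier height quadruples the ductility demand for the same deck displacement, and a single ductility value for the whole bridge cannot describe all piers.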

The myth of elastic stiffness. It was assumed that the fundamental vibration period ($T$) of the structural system, and therefore its stiffness ($K$), could be determined at the beginning of the design:

$$T = 2\pi \sqrt{\frac{M}{K}}$$

On the basis of the period the design spectrum was entered, the acceleration ($S_a$) at the structural mass ($M$) was assessed and the resistance ($V_r$) to be attributed to the structure was calculated, by multiplying mass by acceleration and dividing by the behaviour factor ($q$):

$$V_r = \frac{M \times S_a}{q}$$

Unfortunately, it is easy to prove that stiffness is not an independent variable, but depends on the strength, unknown at the beginning of the process. In addition, we must discuss which value of stiffness to use: the tangent initial one, that of a cracked structure, the secant to yield one, the secant to the design displacement?
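
A minimal sketch of why stiffness cannot be fixed before strength: if the yield displacement is taken as governed by geometry, the secant-to-yield stiffness is the ratio of strength to yield displacement, so the period changes every time the designer changes the strength. The mass, yield displacement and strength values below are hypothetical, and the choice of the secant-to-yield stiffness is only one of the options listed above.

```python
import math


def secant_stiffness(yield_strength_n, yield_displacement_m):
    """Secant-to-yield stiffness K = V_y / delta_y."""
    return yield_strength_n / yield_displacement_m


def vibration_period(mass_kg, stiffness_n_per_m):
    """Period of a single-degree-of-freedom system, T = 2*pi*sqrt(M/K)."""
    return 2.0 * math.pi * math.sqrt(mass_kg / stiffness_n_per_m)


mass = 500_000.0           # hypothetical mass, kg
yield_displacement = 0.05  # hypothetical yield displacement fixed by geometry, m

for strength_kn in (500.0, 1000.0, 2000.0):
    k = secant_stiffness(strength_kn * 1000.0, yield_displacement)
    t = vibration_period(mass, k)
    print(f"strength {strength_kn:6.0f} kN -> K = {k / 1e6:5.1f} MN/m, T = {t:.2f} s")
```

Doubling the strength doubles the stiffness and shortens the period by a factor of $\sqrt{2}$, so a period chosen at the start of a force-based design is only valid for the strength that comes out at the end.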

The myth of refined analysis. The availability of complex calculation methods and more and more powerful computers allowed geometrically refined representations of structures and the use of models with a large number of degrees of freedom. It became possible to carry out analyses that separately assess the various modes of vibration of the structure and then to combine them according to the mass participating in each mode. These approaches are extremely useful in many mechanical and structural applications, but can result in gross mistakes when we attempt to predict the non-linear response, which is characterised by a specific mechanism of damage and collapse, from the combination of a number of elastic modes of vibration, which have little or nothing to do with the post-elastic behaviour.

The myth of the conservation of displacements. Another fundamental theory on which essentially all codes are based consisted of the assumption that for structures with the same vibration period (in this context, with the same initial stiffness) a definite seismic action produces the same maximum displacement demand, regardless of the energy dissipation capacity of the system, and therefore of the form of its typical hysteresis loops. The antithesis of this myth, which is also false, can be found in the conviction of some researchers that the seismic problem can be tackled solely through energy balances. I could therefore call it the myth of energy and its antithesis.

Finally, the myth of the engineer (“let’s add one more rebar”). I’ve left this one until last, and I’ve given it a provocative and incomprehensible name, I know. But this is the most difficult taboo to overcome, the one that assumes that greater strength, however it is distributed among the elements of a structure, always produces greater safety against collapse. This is a false and dangerous conviction, discussed again later when pointing out the one true legacy from the seventies which nowadays can and should be used in design, i.e. the principle of hierarchy of strength, or capacity design.

Once more, our teachers (earthquakes) made these and other problems clear.

Three events in quick succession (Loma Prieta [Footnote 23], 1989, Northridge [Footnote 24], 1994 and Kobe [Footnote 25], 1995) again shook the engineers’ certainties. In the first case a two-level viaduct collapsed in Oakland (Fig. 10.8), in the second considerable damage was caused to hundreds of kilometres of motorway viaducts, and the third shook the certainties of the country that considered itself to be the most advanced in the world in terms of seismic safety: Japan (Fig. 10.9).

After the Loma Prieta earthquake a report [Footnote 26] was published for the Governor of California, George Deukmejian, coordinated by the legendary George Housner (50 years after El Centro he was no longer a doctoral student). The report was entitled Competing Against Time. Housner wrote:

Future earthquakes in California are inevitable. Earthquakes larger than Loma Prieta with more intense ground shaking will occur in urban areas and have severe consequences – too large to continue “business as usual”. […] The Board of Inquiry has identified three essential challenges that must be addressed by the citizens of California, if they expect a future adequately safe from earthquakes:

• Ensure that earthquake risks posed by new constructions are acceptable.

• Identify and correct unacceptable seismic safety conditions in existing structures.

• Develop and implement actions that foster the rapid, effective, and economic response to and recovery from damaging earthquakes.

[…] The State of California must not wait for the next great earthquake, and likely tens of billions of dollars damage and thousands of casualties, to accelerate hazard mitigation measures. […] Earthquakes will occur – whether they are catastrophes or not depends on our actions.

Eventually, risk became the object of discussion, it being understood that probabilistic approaches have to be adopted, and that design and strengthening rules have to be consistent with the available resources, not with an ideal level of safety.

It seems like a return to the time of philosophers and thinkers, with streams of words to illustrate various theories of performance-based design (Fig. 10.10), attempts to systematise refined approaches, in which various design earthquakes were defined, depending on the probability of occurrence ($p$) in a given interval of time ($T_L$), or the average return period ($T_R$), and various performances to be required of structures, depending on their importance in the event of a catastrophe, and on the consequences in the event of damage and collapse:

$$T_R = -\frac{T_L}{\ln (1 - p)}$$

For example, for an earthquake with a probability of 50% in 50 years, i.e. with an average return period estimated at 72 years, it is required that the damage be basically insignificant, whereas for an event with a probability of 10% in the same interval of time ($T_R$ = 475 years) it is required that an important bridge remain in fully usable condition or that a hospital maintain full functionality, but even fairly considerable damage to a residential building may be accepted, and so on.
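
The two return periods quoted above can be reproduced with the usual relation $T_R = -T_L / \ln(1 - p)$, which assumes that earthquake occurrences follow a Poisson process. That assumption, and the function name used below, are stated here for the purpose of the sketch.

```python
import math


def return_period(probability, interval_years):
    """Mean return period T_R = -T_L / ln(1 - p), assuming Poisson occurrences."""
    if not 0.0 < probability < 1.0:
        raise ValueError("probability must lie strictly between 0 and 1")
    return -interval_years / math.log(1.0 - probability)


for p in (0.50, 0.10):
    t_r = return_period(p, 50.0)
    print(f"p = {p:.0%} in 50 years -> T_R of about {t_r:.0f} years")
```

The same function can be used for any other pair of probability and reference period that a performance matrix might specify.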

These are clear and logical concepts, but it is not straightforward to translate them into answers to the only two questions of interest for a builder: what strength should be assigned to a structure, and how should this strength be distributed between the various structural elements.

There are no doubts about one fundamental aspect, however: the force to which a structure is subjected (and therefore its acceleration) is not a suitable variable to appropriately describe the expected damage, or the performance of the system. In fact, it is clear that very different levels of damage, corresponding for example to the usability of a building, the possibility of repairing it quickly, avoiding collapse, correspond to values of force, and of acceleration, which are not very different from one another (Fig. 10.11).

On the contrary, the various performances of interest are characterised by displacement values that differ greatly from one another, so that it is not hard to imagine forms of correspondence between expected performance and acceptable displacements, or, in a way that is much easier to apply, to define a dimensionless displacement variable to be used as a fundamental design parameter. A suitable parameter of this type is immediately identifiable in the ratio of horizontal displacement to height, which is obviously an angle and is normally called “drift”.

For example, in the case of a building it can refer to the relative displacement between two storeys divided by the storey height, and we can talk about interstorey drift, whereas in the case of a bridge it can refer to the displacement of the deck divided by the height of the pier.
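
Both definitions of drift are simple ratios, as the following sketch shows. The floor displacements, storey heights and pier dimensions are hypothetical values chosen only to illustrate the calculation.

```python
def interstorey_drifts(floor_displacements_m, storey_heights_m):
    """Relative displacement between consecutive floors divided by storey height."""
    drifts = []
    previous = 0.0  # the ground is taken as the fixed reference
    for displacement, height in zip(floor_displacements_m, storey_heights_m):
        drifts.append((displacement - previous) / height)
        previous = displacement
    return drifts


def pier_drift(deck_displacement_m, pier_height_m):
    """Deck displacement divided by pier height."""
    return deck_displacement_m / pier_height_m


# Hypothetical three-storey building: absolute floor displacements in metres.
building = interstorey_drifts([0.030, 0.054, 0.066], [3.0, 3.0, 3.0])
print("interstorey drifts:", [f"{d:.2%}" for d in building])
print(f"pier drift: {pier_drift(0.15, 10.0):.2%}")
```

Expressed this way, a displacement limit becomes independent of the size of the structure, which is what makes drift convenient as a design parameter.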

It is on the basis of this fundamental observation, and in the acknowledgement of the insurmountable limits of any design method that uses forces and accelerations as fundamental variables, that Nigel Priestley [Footnote 27] developed what is to date the only method of displacement-based seismic design that can truly be implemented in practice. He published a book [Footnote 28] whose review in Earthquake Spectra by Graham Powell, Professor Emeritus at UC Berkeley, opens with the words: “It is rare for a book on structural engineering design to be revolutionary. I believe that this is such a book. If you are involved in any way with seismic resistant structural design, this should be on your bookshelf, and you should read at least the first three chapters.”

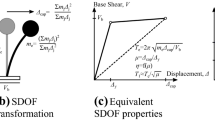

The book is the culmination of 15 years of research, in which dozens of doctoral students were involved, though its fundamental logic is simple and direct (Fig. 10.12):

- design displacement (drift) values are defined for the various performances to be examined;

- displacement design spectra are defined for events corresponding to the various performances;

- the expected, or rather desired, structural behaviour is defined and a simplified model is created;

- for each performance a reliable value of equivalent damping is estimated, through which the spectrum that represents the displacement demand is reduced;

- the spectrum is entered with the design displacement and the corresponding stiffness is read;

- multiplying stiffness by displacement the value of strength to be attributed to the structure is found;

- the strength is distributed among the various resistant elements according to the assumed response.
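
The steps listed above can be sketched for an equivalent single-degree-of-freedom system. This is a minimal illustration under stated assumptions, not the procedure of the book: the displacement spectrum is taken as bilinear with a constant branch beyond a corner period, the damping reduction factor `eta` is supplied as an input, the stiffness is obtained from the period through $T = 2\pi\sqrt{M/K}$, and all numerical values are hypothetical.

```python
import math


def effective_period(design_displacement, corner_period, plateau_displacement, eta):
    """Enter the damped bilinear displacement spectrum with the design displacement."""
    damped_plateau = eta * plateau_displacement
    if design_displacement > damped_plateau:
        raise ValueError("design displacement lies above the damped spectral plateau")
    return corner_period * design_displacement / damped_plateau


def required_strength(mass, design_displacement, corner_period, plateau_displacement, eta):
    """Return (effective period, secant stiffness, base shear) for an equivalent SDOF."""
    t_e = effective_period(design_displacement, corner_period, plateau_displacement, eta)
    k_e = 4.0 * math.pi ** 2 * mass / t_e ** 2   # from T = 2*pi*sqrt(M/K)
    return t_e, k_e, k_e * design_displacement   # strength = stiffness x displacement


# Hypothetical input values, for illustration only.
design_drift, height = 0.02, 10.0                # 2% drift at a 10 m effective height
t_e, k_e, v_base = required_strength(
    mass=500_000.0,                              # kg
    design_displacement=design_drift * height,   # m
    corner_period=4.0,                           # s
    plateau_displacement=0.6,                    # m, plateau before damping reduction
    eta=0.6,                                     # damping reduction factor (given)
)
print(f"T_e = {t_e:.2f} s, K_e = {k_e / 1e3:.0f} kN/m, V_base = {v_base / 1e3:.0f} kN")
```

The point of the sketch is the direction of the calculation: displacement is chosen first, stiffness and strength follow from it, and no initial guess of the period is needed. The final step, distributing the strength among the resisting elements, is left out because it depends on the assumed mechanism.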

Each step is further discussed below.

Design displacements. It is easy to estimate approximately the elastic limit of a structure using its geometry alone. For example, the secant yield rotation ($\theta_y$) of a circular bridge pier (Fig. 10.13) can be estimated using the yield strain of the steel ($\varepsilon_y$), the pier diameter ($D$) and its height ($H$), as:

Similarly, for a reinforced concrete frame with beams that are weaker than columns, the same variable can be estimated using only the span ($l_b$) and depth ($h_b$) of the beams:
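
To give an order of magnitude for such geometry-based estimates, the sketch below uses approximations commonly cited in the displacement-based design literature: $\theta_y \approx 0.75\,\varepsilon_y H / D$ for a circular cantilever pier and $\theta_y \approx 0.5\,\varepsilon_y l_b / h_b$ for a reinforced concrete frame. These coefficients are assumptions adopted here for illustration and are not taken from the text above; the dimensions and yield strain are hypothetical.

```python
def pier_yield_drift(yield_strain, height_m, diameter_m):
    """ASSUMED approximation: theta_y = 0.75 * eps_y * H / D (circular cantilever pier)."""
    return 0.75 * yield_strain * height_m / diameter_m


def frame_yield_drift(yield_strain, beam_span_m, beam_depth_m):
    """ASSUMED approximation: theta_y = 0.5 * eps_y * l_b / h_b (weak-beam RC frame)."""
    return 0.5 * yield_strain * beam_span_m / beam_depth_m


eps_y = 0.0025  # hypothetical steel yield strain
print(f"pier, H = 10 m, D = 2 m:      {pier_yield_drift(eps_y, 10.0, 2.0):.2%}")
print(f"frame, l_b = 6 m, h_b = 0.6 m: {frame_yield_drift(eps_y, 6.0, 0.6):.2%}")
```

Neither estimate involves the strength of the member, which is the reason the yield displacement can be treated as known at the start of the design.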

The design displacements may be similar to those calculated in this way, in order to avoid significant structural damage, or considerably larger where the required performance allows for the structure to be repaired after an event.

In any case, interstorey displacement values able to limit non-structural damage must be taken into consideration, too. For example, stone walls could show significant damage at drift values around 0.5%, whereas other non-structural elements, which are less sensitive to imposed displacements, could cope with values around 1% without particularly significant damage.

Displacement design spectra. The approximations that arose in the 1970s from the solution of Duhamel’s integral made it possible to express the ordinates of a displacement spectrum ($S_d$) in terms of those of the corresponding acceleration spectrum ($S_a$), using only the vibration period of the structure ($T$):

$$S_d = \frac{T^2}{4\pi^2} S_a$$
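
For reference, the conversion between the two spectra is usually written as the pseudo-spectral relation $S_d = S_a T^2 / (4\pi^2)$. The sketch below applies it to a hypothetical acceleration ordinate; the numerical values are illustrative only.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def spectral_displacement(spectral_acceleration_ms2, period_s):
    """Pseudo-spectral relation S_d = S_a * T^2 / (4 * pi^2)."""
    return spectral_acceleration_ms2 * period_s ** 2 / (4.0 * math.pi ** 2)


for period in (0.5, 1.0, 2.0):
    s_d = spectral_displacement(0.5 * G, period)  # hypothetical S_a of 0.5 g
    print(f"T = {period:.1f} s, S_a = 0.5 g -> S_d = {s_d * 1000:.0f} mm")
```

Because of the $T^2$ term, small errors in the long-period acceleration ordinates translate into large errors in displacement, which is one reason for defining displacement spectra directly.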

In reality, more recent studies clearly indicate that the correlation between the two spectra is much weaker, and today it seems more reliable and efficient for practical purposes to express the displacement spectrum as a bilinear curve with a constant second branch, using a “corner” period value ($T_c$) and the corresponding displacement ($d_{max}$), defined on the basis of the moment magnitude ($M_w$) and the distance from the epicentre ($r$, in kilometres):

Equivalent viscous yield. In the equations of motion of a dynamic system, the term containing the damping coefficient, which, multiplied by the velocity, provided the third term of a balance equation, has always been a sort of free parameter, used to force numerical results to get closer to experimental evidence. In reality, the fact that a wider hysteresis loop tends to reduce the displacement demand is incontrovertible from experimental data. It is possible to express an equivalent viscous damping (ξ e ) using the hysteresis area of a loop (A h ) compared with the area of the triangle defined by maximum force (F m ) and displacement (d m ):

On the basis of this equivalent damping value, a displacement demand reduction factor ($\eta_\xi$) can then be defined:

It is easy to check that reasonable equivalent damping values seldom exceed 0.2 to 0.3 and that consequently the correction factor never exceeds 0.5. Furthermore, an error of 20% in the damping estimate would in any case produce an error in the displacement demand estimate of less than 10%, and in this engineering business an error of 10% in the displacement estimate is a good approximation of an infinitesimal.
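The weak sensitivity of the displacement demand to the damping estimate can be checked numerically. The sketch below uses the area-based definition of equivalent damping described above. The form of the reduction factor, sqrt(0.07 / (0.02 + ξ)), is an assumption: it is one commonly used expression, not necessarily the one intended in this chapter. The loop values are hypothetical.

```python
import math


def equivalent_viscous_damping(loop_area: float, f_max: float, d_max: float) -> float:
    """xi_e = A_h / (2 pi F_m d_m), i.e. loop area over 4 pi times the triangle area."""
    return loop_area / (2.0 * math.pi * f_max * d_max)


def reduction_factor(xi_e: float) -> float:
    """ASSUMED form eta = sqrt(0.07 / (0.02 + xi_e)); other expressions exist."""
    return math.sqrt(0.07 / (0.02 + xi_e))


if __name__ == "__main__":
    # Hypothetical loop: A_h = 150 kN*m, F_m = 1000 kN, d_m = 0.15 m.
    xi = equivalent_viscous_damping(150.0, 1000.0, 0.15)
    eta = reduction_factor(xi)
    # Same factor when the damping is overestimated by 20%.
    eta_high = reduction_factor(1.2 * xi)
    print(f"xi_e = {xi:.3f}, eta = {eta:.3f}")
    print(f"relative change in demand for +20% damping: {eta_high / eta - 1.0:+.1%}")
```

Because the factor depends on the square root of the damping term, a 20% overestimate of the damping changes the assumed reduction factor by less than 10% over the whole practical range.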

By contrast, numerical analyses that overestimate the viscous damping contribution, by keeping it proportional to the initial stiffness even when the stiffness has been considerably reduced, can lead to estimates that make the difference between a structure that survives and one that collapses.

Response and model. This approach has retained the old trick, or rather the brilliant idea, of Tom Paulay (Footnote 29), which I referred to as capacity design, or hierarchy of resistances.

Tom explained how, in order to make a chain made of brittle links ductile, it is sufficient to replace one of them with a ductile one, provided that its strength is slightly lower than that of the others: it therefore yields first, preventing the force from growing any further, and thus protects all the other links.

Translated for seismic engineers: how to prevent brittle failure modes due to shear stresses by ensuring that the flexural failure modes are weaker, how to form plastic hinges in the beams by making them weaker than the connected columns, how to prevent collapses in the foundations by making them stronger than the vertical elements supported by them, and so on.
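The chain analogy can be turned into a tiny check. This is a deliberately simplified sketch: the `Link` type, the function names and the strengths are hypothetical, and overstrength of the ductile link is ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    name: str
    strength: float  # force capacity, kN
    ductile: bool


def governing_link(chain):
    """The weakest link caps the force that the whole chain can transmit."""
    return min(chain, key=lambda link: link.strength)


def chain_is_ductile(chain):
    """The chain behaves in a ductile way only if its weakest link is ductile."""
    return governing_link(chain).ductile


if __name__ == "__main__":
    # Hypothetical member: the flexural mechanism is made weaker than the brittle ones.
    chain = [
        Link("shear failure", 500.0, ductile=False),
        Link("flexural hinge", 400.0, ductile=True),
        Link("foundation", 600.0, ductile=False),
    ]
    fuse = governing_link(chain)
    print(f"governing link: {fuse.name}, force capped at {fuse.strength:.0f} kN")
    print(f"ductile response: {chain_is_ductile(chain)}")
```

In practice the protected links are designed for the capacity of the ductile one amplified by an overstrength margin, which this sketch does not model.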

Distribution of strength. Acknowledging that stiffness and resistance are not independent variables implies the possibility of modifying the distribution of the horizontal forces amongst various elements simply by increasing the reinforcement of those which are geometrically less stiff. This results in the possibility of making more intelligent structures, reducing torsion problems, and bringing the centre of mass and the centre of resistance closer.

To those who are wisely wondering whether structures designed with an approach based on forces or on displacements are really different, I will only say here that the relationship between any parameter of intensity of motion and the strength to be assigned to a structure varies linearly when we refer to forces, whereas it varies quadratically when we refer to displacements (Fig. 10.14). I will also say that the reinforcement ratio in piers or walls with different geometry is constant when using displacements but variable with the square of the height or with the depth of the base when using forces. Only space prevents me from discussing how the displacement method is the only rational one when we wish to assess the safety of existing structures, in which there is no doubt that the perceived acceleration ($S_a$) is merely the ratio between resistance ($V_R$) and mass ($M$), regardless of the ground acceleration, thereby overturning the logic of elastic response:

$$S_a = \frac{V_R}{M}$$
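The linear versus quadratic dependence on intensity can be illustrated with a short sketch under stated assumptions: a displacement spectrum that is linear in period up to the corner period and scales proportionally with an intensity multiplier, a fixed design displacement, and a single-degree-of-freedom idealisation. All function names and numerical values are hypothetical.

```python
import math


def force_based_shear(intensity, mass, sa_ref, behaviour_factor=1.0):
    """Base shear proportional to the (scaled) spectral acceleration: linear in intensity."""
    return mass * intensity * sa_ref / behaviour_factor


def displacement_based_shear(intensity, mass, design_disp, corner_period, d_max_ref):
    """Base shear from the effective stiffness needed to reach a fixed design displacement.

    ASSUMED spectrum: S_d(T) = intensity * d_max_ref * T / corner_period for T <= corner_period.
    """
    t_eff = design_disp * corner_period / (intensity * d_max_ref)
    if t_eff > corner_period:
        raise ValueError("design displacement exceeds the spectrum plateau")
    k_eff = 4.0 * math.pi ** 2 * mass / t_eff ** 2  # effective stiffness, N/m
    return k_eff * design_disp


if __name__ == "__main__":
    # Hypothetical values: 500 t mass, 0.2 m design displacement, 4 s corner, 0.5 m plateau.
    v1 = displacement_based_shear(1.0, 500e3, 0.2, 4.0, 0.5)
    v2 = displacement_based_shear(2.0, 500e3, 0.2, 4.0, 0.5)
    f1 = force_based_shear(1.0, 500e3, 2.5)
    f2 = force_based_shear(2.0, 500e3, 2.5)
    print(f"force-based ratio for doubled intensity: {f2 / f1:.1f}")
    print(f"displacement-based ratio for doubled intensity: {v2 / v1:.1f}")
```

Doubling the intensity halves the effective period needed to hold the same design displacement, so the required stiffness, and with it the strength, grows with the square of the intensity.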

We could stop here, but I can’t conclude without mentioning the two most recent earthquakes, the one that took place in China in May 2008 (Footnote 30) and the L’Aquila earthquake (Footnote 31) (Fig. 10.15).

I mention the first one only to establish a relationship of scale between the events. The L’Aquila earthquake seemed dramatic to us Italians, and it was dramatic. But how would we have coped with seventy thousand deaths instead of three hundred? How would we have dealt with eleven million homeless people instead of seventy thousand? Italy isn’t China? Of course, if we overlook Messina, 1908.

Less than a year passed and a great deal of research took place between the two events. And it is significant that while the Sichuan earthquake was taking place, in Pavia an earthquake simulator was being used to test buildings showing damage and collapse mechanisms which would then be reproduced in the real-life laboratory of L’Aquila.

Which brings me on to L’Aquila.

The first earthquake for more than a century in Italy whose focus was exactly below an important city.

From a seismological point of view, the new data were striking and opened up new debates about the reliability of the recently adopted earthquake hazard map, which no doubt represents a state of the art at an international level (Footnote 32). Some people question whether it is acceptable for ground accelerations in excess of 0.6 g to be recorded in an area where the expected acceleration with a 10% probability of exceedance in 50 years, or with a return period of 475 years, is equal to around 0.25 g, thus demonstrating that they have no understanding of the concept of a uniform probability spectrum.

If once again we limit ourselves to lessons for engineers, the most interesting topic relates to reconstruction, and in particular to the technical choices that have enabled us to construct 4,500 permanent houses of high quality in around 8 months, with an average production of around 3 million euros per day (Fig. 10.16). This is neither the time nor the place to discuss the extraordinary organisational machine (Footnote 33), the non-profit consortium led by the Eucentre foundation, which made it possible to operate without a general contractor, or the choices related to town planning, architecture, energy, sustainability and installations.

Now a few words about the fundamental structural choices. It was crucial to use various technologies and different materials, such as wood, steel and concrete, adopting in all cases a high level of prefabrication. It was fundamental to start design, preparatory works and calls for bids before knowing the construction sites, the characteristics of the ground and the morphology of the areas.

The solution was identified in the construction of two plates, one working as a foundation, the other supporting the buildings, separated by a series of columns and by a seismic isolation system made up of devices sliding on spherical surfaces. Friction pendulum devices are derived from a brilliant idea, developed in its current technology in Berkeley in the early 1980s (Footnote 34), which is based on the behaviour of a pendulum (like that of cuckoo clocks, Fig. 10.17).

It is interesting to point out that in 1909 (after the Messina earthquake) seismic isolation technologies were proposed (one of which was conceptually similar to the isolators we are talking about; Footnote 35), discussed and assessed, but ruled out for reliability reasons. Arturo Danusso (Footnote 36) wrote: “we immediately understand that if we could practically put a house on springs, like an elegant horse-drawn carriage, an earthquake would come and go like a peaceful undulation for the happy inhabitants of that house”, but concluded: “I think that a certain practical sense of construction alone is sufficient by itself to dissuade from choosing mechanical devices to support stable houses”.

In this specific case the radius of oscillation is determined by the curvature of the sliding surface, which acts like the length of the suspension arm, and in a completely analogous way the vibration period (T) is a function of the radius of curvature alone (r) and of the acceleration of gravity (g), and therefore the stiffness (K) of the system is exclusively a function of the weight of the building (W = Mg) and of the radius.
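The pendulum analogy described here corresponds to the standard relations T = 2π·sqrt(r/g) and K = W/r. A minimal sketch follows, with friction neglected; the function names, the radius of 4 m and the building mass are illustrative assumptions.

```python
import math

G = 9.81  # acceleration of gravity, m/s^2


def pendulum_period(radius: float) -> float:
    """Vibration period (s) of a sliding pendulum isolator: depends only on radius and g."""
    return 2.0 * math.pi * math.sqrt(radius / G)


def pendulum_stiffness(weight: float, radius: float) -> float:
    """Lateral stiffness (N/m) of the isolation system: supported weight over radius."""
    return weight / radius


if __name__ == "__main__":
    radius = 4.0  # hypothetical radius of curvature, m
    mass = 1.0e6  # hypothetical building mass, kg
    stiffness = pendulum_stiffness(mass * G, radius)
    print(f"T = {pendulum_period(radius):.2f} s")
    print(f"K = {stiffness / 1e3:.0f} kN/m")
    # The period recomputed from mass and stiffness is the same: the mass cancels out.
    print(f"T from M and K = {2.0 * math.pi * math.sqrt(mass / stiffness):.2f} s")
```

Because the stiffness is proportional to the supported weight, the period is the same for a light and a heavy building on the same devices.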

If a seismic event occurs, the upper plate may slide over the lower one, and the building constructed on it experiences accelerations, and therefore forces, limited to values around a tenth of the acceleration of gravity, regardless of the violence of the earthquake. In the hypothetical event of a repeat of the L’Aquila earthquake, the acceleration experienced by the buildings would be reduced by a factor of around ten.

So, a technology that is 30 years old, or 100 years old, implemented with success in several projects, was for the first time employed systematically in one hundred and eighty-five residential buildings, constructed in a few months on more than seven thousand isolators, and tested on site on eleven buildings by reproducing a credible seismic motion.

A much older idea, perhaps not decades, but millennia old, if we wish to interpret the calcatis ea substravere carbonibus, dein velleribus lanae, which Pliny attributed to the builders of the temple of Diana at Ephesus, as a seismic isolation measure.

And the circle is complete.

Now we wonder about the next earthquake, when and where it will happen, the energy it will release, the damage it will cause, the collapses, the victims. We don’t know and we will never know, but we can try to use in the best way the scarce resources that humanity can afford to dedicate to prevention.

I will finish by recalling once again the words that concluded the preface of the report Competing against time (Footnote 26): Earthquakes will occur – whether they are catastrophes or not depends on our actions.

(On 12 January 2010 at 4:53 p.m. a 7.0 magnitude earthquake struck Haiti. On 27 February 2010 at 3:34 a.m. an 8.8 magnitude earthquake struck Chile. The lesson continues.)

Notes

- 1.

Breventano S (2007) Trattato del terremoto [Treatise on earthquakes]. In: Albini P (ed) IUSS Press, Pavia, p 24: “In buildings he says the archivolts, the corners of the walls, the doors and the cellars are extremely secure, because they are resistant to reciprocal impact. Brick walls suffer less damage than those made of stone or marble. […] Emperor Trajan […] ordered that houses be built no higher than the measurement of seventy feet, so that if there was another earthquake, they would not be damaged as easily. Propping up buildings on one side and the other with beams is not completely pointless”.

- 2.

Ligorio P (2005) Libro di diversi terremoti [Book on various earthquakes]. In: Guidoboni E (ed) De Luca Editori d’Arte, Rome; the building is described on pages 93–97 of the edition cited, f. 58–61 of the work.

- 3.

Hooke R (1679) Lectiones Cutlerianæ, or A collection of lectures: physical, mechanical, geographical, & astronomical, Printed for John Martyn, London.

- 4.

Galilei G (1632) Dialogo sopra i due massimi sistemi del mondo [Dialogue concerning the two chief world systems], Florence.

- 5.

Newton I (1687) Philosophiae Naturalis Principia Mathematica. London.

- 6.

Ingegneri a Pavia tra formazione e professione [Engineering in Pavia between training and profession] In: Cantoni V, Ferraresi A (eds) Cisalpino. Istituto Editoriale Universitario – Monduzzi Editore, Milan, 2007.

- 7.

Voltaire (1756) Poème sur le désastre de Lisbonne, ou examen de cet axiome: tout est bien.

- 8.

Rousseau JJ (1756) Letter to Voltaire about the Lisbon earthquake (also known as Letter on Providence).

- 9.

San Francisco, 5:12 a.m., 18 Apr 1906, $M_w$ = 7.8 (estimated).

- 10.

Messina, 5:21 a.m., 28 Dec 1908, $M_w$ = 7.5 (estimated).

- 11.

Il Monitore Tecnico (journal on engineering, architecture, mechanics, electronics, railways, agronomy, cadastre and industrial – official body of the association of former students of the Politecnico di Milano), 20 Jan 1909.

- 12.

Royal Decree of 18 Apr 1909, no. 193, published in Official Gazette no. 95, on 22 Apr 1909.

- 13.

Instructions and examples of calculations for constructions that are stable against seismic actions, Giornale del Genio Civile, year LI, 1913 (the Commission that wrote this document comprised professors Ceradini, Canevazzi, Panetti, Reycend and Salemi Pace, and engineer Camerana).

- 14.

Report of the Royal Commission set up to identify the most suitable areas for the reconstruction of the urban areas affected by the earthquake that took place on 28 December 1908 or by other previous earthquakes. Printed by the R. Accademia dei Lincei, Rome, 1909.

- 15.

Lieutenant’s Decree of 19 August 1917, Italian Official Gazette, 10 Sept 1917.

- 16.

El Centro, California, 8:37 p.m., 18 May 1940, $M_w$ = 6.9. In reality the first accelerogram recording is from the Long Beach earthquake, in 1933, which however had a less significant impact on the development of knowledge.

- 17.

Biot MA (1934) Theory of vibration of buildings during earthquakes. Z Angew Mathematik Mech 14(4):213–223. Critically discussed in: Trifunac MD (2006) Biot response spectrum. Soil Dyn Earthquake Eng 26:491–500.

- 18.

Housner G (9 Dec 1910, Saginaw, MI; 10 Nov 2008, Pasadena, CA) the teacher of all seismic engineers.

- 19.

Newmark NM, Hall WJ (1982) Earthquake Spectra and Design. Engineering Monographs, EERI, Oakland, CA.

- 20.

Sylmar, California, 6:01 a.m., 9 Feb 1971, $M_w$ = 6.6.

- 21.

Gemona, 21:06, 6 May 1976, $M_w$ = 6.4.

- 22.

Priestley MJN (2003) Myths and fallacies in earthquake engineering, revisited. The 9th Mallet Milne Lecture, IUSS Press, Pavia.

- 23.

Loma Prieta, 5:04 p.m., 17 Oct 1989, $M_w$ = 6.9.

- 24.

Northridge, 4:31 a.m., 17 Jan 1994, $M_w$ = 6.7.

- 25.

Kobe, 5:46 a.m., 17 Jan 1995, $M_w$ = 6.8.

- 26.

Competing against time, Report to Governor George Deukmejian from the Governor’s Board of Inquiry on the 1989 Loma Prieta Earthquake, George W. Housner, Chairman, Department of General Service, North Highlands, CA, 1990.

- 27.

Michael John Nigel Priestley, 21 Jul 1943, Wellington, New Zealand, the true inventor of displacement-based design, friend and teacher.

- 28.

Priestley MJN, Calvi GM, Kowalsky MJ (2007) Displacement based seismic design of structures. IUSS Press, Pavia.

- 29.

Paulay T (26 May 1923, Sopron, Hungary; 28 Jun 2009, Christchurch, New Zealand), count, cavalry officer, refugee in Germany and New Zealand, famous professor, author of successful books, fine gentleman, kind and affectionate teacher.

- 30.

Wenchuan, 14:28, 12 May 2008, $M_w$ = 7.9.

- 31.

L’Aquila, 3:32, 6 Apr 2009, $M_w$ = 6.2.

- 32.

Crowley H, Stucchi M, Meletti C, Calvi GM, Pacor F (2009) Revisiting Italian design code spectra following the L’Aquila earthquake. Progettazione Sismica, 03/English, IUSS Press, Pavia, pp 73–82.

- 33.

Calvi GM, Spaziante V (2009) Reconstruction between temporary and definitive: The CASE project, ibidem, pp 221–250.

- 34.

Zayas V, Low S (1990) A simple pendulum technique for achieving seismic isolation. Earthquake Spectra 6(2).

- 35.

A double-slide isolator on curved surfaces, patented by M. Viscardini in 1909, described in: Barucci C, La casa antisismica [The antiseismic house], Gangemi, 1990.

- 36.

Il Monitore Tecnico, 10 Aug 1909.

© 2010 Springer Science+Business Media B.V.

Calvi, G.M. (2010). Engineers Understanding of Earthquakes Demand and Structures Response. In: Garevski, M., Ansal, A. (eds) Earthquake Engineering in Europe. Geotechnical, Geological, and Earthquake Engineering, vol 17. Springer, Dordrecht. https://doi.org/10.1007/978-90-481-9544-2_10

Publisher Name: Springer, Dordrecht

Print ISBN: 978-90-481-9543-5

Online ISBN: 978-90-481-9544-2

eBook Packages: Earth and Environmental Science (R0)