Abstract

Iris recognition systems are reported to achieve very high accuracy under constrained acquisition scenarios. However, partial occlusion of the iris by the eyelids can hinder the functioning of such systems. State-of-the-art algorithms assume that the inner and outer iris boundaries are circular and therefore do not account for the occlusion caused by the eyelids. In this paper, a novel low-cost approach for detecting and removing eyelids from the annular iris is proposed. The proposed scheme employs an edge detector to identify strong edges and subsequently retains only the horizontal ones. A 2-means clustering technique then separates the upper and lower eyelid edges by maximizing class separation. Once the two classes of edges are formed, one containing the edges of the upper eyelid and the other those of the lower eyelid, a quadratic curve is fitted to each set of edge points. The area above the curve fitted to the upper eyelid and the area below the curve fitted to the lower eyelid can then be suppressed, so that only non-occluded iris data are passed on to the rest of the biometric system. The proposed localization method is tested on the publicly available BATH and CASIAv3 iris databases and is found to yield a very low mislocalization rate.

1 Introduction

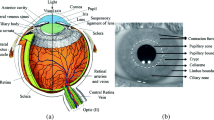

The iris is the annular portion of the eye located between the white sclera and the black pupil [5]. The motivation behind iris recognition, a biometric technology for personal identification and verification, is to recognize an individual from his/her iris patterns. The anatomy of the iris reveals that iris patterns are highly random and highly stable. As a biometric trait, the iris is highly distinctive: iris patterns differ for every individual, and the distinction exists even between identical twins. Even the iris patterns of the left and right eyes of the same individual differ from each other.

A typical iris recognition framework comprises four steps: acquisition, preprocessing, feature extraction and matching. Preprocessing consists of three stages: segmentation, normalization and enhancement. During acquisition, iris images are captured successively from the subject with a specifically designed sensor. Preprocessing involves segmenting the region of interest (RoI), i.e. detecting the pupillary and limbic boundaries, and then converting the RoI from Cartesian to polar form. Feature extraction is used to reduce the size of iris templates and improve classifier accuracy. Feature matching then compares the features extracted from the probe iris with a set of (gallery) features of candidate irises to determine a match or non-match. Segmentation is a crucial stage: its aim is to isolate the actual annular iris region from the raw image, and it strongly affects every subsequent stage, i.e. normalization, enhancement, feature extraction and matching. Since segmentation largely determines the overall performance of the recognition system, it is given utmost importance. Most segmentation routines in the literature assume that the pupillary and limbic boundaries of the iris are circular, and therefore focus on determining the circle parameters that best fit this hypothesis. This leads to errors, since the iris boundaries are not exactly circular.

2 Related Work

Daugman [4] proposed a widely used iris segmentation scheme. It assumes that both the inner (pupillary) boundary and the outer (limbic) boundary of the iris can be represented by circles in the Cartesian plane. A circle in the 2D plane is described by its center \( (x_{0} ,y_{0} ) \) and its radius \( r \). An integro-differential operator (IDO) can therefore be used to determine these parameters, and is described by the equation below:

\[ \max_{(r,x_{0},y_{0})} \left| G_{\sigma}(r) * \frac{\partial}{\partial r} \oint_{r,x_{0},y_{0}} \frac{I(x,y)}{2\pi r}\,ds \right| \]

where \( I(x,y) \) is the eye image, \( G_{\sigma } (r) \) is a smoothing function given by a Gaussian of scale \( \sigma \), and \( * \) denotes convolution. Essentially, the IDO searches over the image domain \( (x,y) \) for the parameters \( (x_{0} ,y_{0} ,r) \) that maximize the Gaussian-blurred partial derivative, with respect to increasing radius \( r \), of the normalized contour integral of \( I(x,y) \) along a circle. Hence, it works like a circular edge detector that searches the parameter space \( (x_{0} ,y_{0} ,r) \) for the most salient circular edge (the limbic boundary).
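A discrete version of this search can be sketched as follows. The sketch is illustrative only and is not Daugman's implementation: the function names, the 64-sample circle, the five-tap Gaussian kernel, the finite-difference approximation of the radial derivative and the explicit list of candidate centers are all assumptions made here. The image is taken to be a list of rows indexed as `img[y][x]`.

```python
import math

def circle_mean(img, x0, y0, r, samples=64):
    """Mean intensity along the circle of radius r centred at (x0, y0)."""
    h, w = len(img), len(img[0])
    total, count = 0.0, 0
    for k in range(samples):
        t = 2.0 * math.pi * k / samples
        x = int(round(x0 + r * math.cos(t)))
        y = int(round(y0 + r * math.sin(t)))
        if 0 <= x < w and 0 <= y < h:  # ignore samples outside the image
            total += img[y][x]
            count += 1
    return total / count if count else 0.0

def gaussian_kernel(sigma, half_width=2):
    """Normalised 1-D Gaussian weights (assumed five taps by default)."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-half_width, half_width + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def integro_differential(img, centers, radii, sigma=1.0):
    """Return (score, x0, y0, r) maximising the smoothed radial derivative."""
    kernel = gaussian_kernel(sigma)
    half = len(kernel) // 2
    best = (-1.0, None, None, None)
    for (x0, y0) in centers:
        means = [circle_mean(img, x0, y0, r) for r in radii]
        # Finite difference stands in for the partial derivative in r.
        diffs = [means[i + 1] - means[i] for i in range(len(means) - 1)]
        for i in range(len(diffs)):
            acc = 0.0
            for j, w in enumerate(kernel):  # convolution with G_sigma
                idx = i + j - half
                if 0 <= idx < len(diffs):
                    acc += w * diffs[idx]
            if abs(acc) > best[0]:
                best = (abs(acc), x0, y0, radii[i])
    return best
```

For example, on a synthetic image containing a dark disc on a bright background, calling `integro_differential(img, centers, list(range(5, 16)))` with candidate centers around the disc returns the disc center and a radius close to the disc boundary. An exhaustive search over all pixels is expensive, so restricting `centers` to a coarse estimate of the pupil position is a natural design choice.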

Wildes [12] proposed a slightly different algorithm that is largely based on Daugman’s method. Wildes performs the contour fitting in two stages. First, a gradient-based edge detector is used to produce an edge map from the raw eye image. Second, the edge points in the map vote to instantiate particular circular contour parameters. This voting scheme is implemented with the Hough transform.
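The voting stage can be illustrated with a minimal circular Hough transform. This is a sketch under stated assumptions rather than Wildes's implementation: the function names, the 2-degree angular step and the rule that each edge point votes at most once per accumulator cell are choices made here. Edge points are `(x, y)` tuples and the accumulator is indexed by `(a, b, r)`, the candidate center and radius.

```python
import math
from collections import Counter

def hough_circles(edge_points, radii, angle_step_deg=2):
    """Accumulate votes for circle parameters (a, b, r) from edge points."""
    votes = Counter()
    angles = [math.radians(d) for d in range(0, 360, angle_step_deg)]
    for (x, y) in edge_points:
        cells = set()  # each edge point votes at most once per cell
        for r in radii:
            for t in angles:
                # A circle of radius r through (x, y) has its center here.
                a = int(round(x - r * math.cos(t)))
                b = int(round(y - r * math.sin(t)))
                cells.add((a, b, r))
        votes.update(cells)
    return votes

def best_circle(edge_points, radii):
    """Return (a, b, r, votes) for the most-voted accumulator cell."""
    votes = hough_circles(edge_points, radii)
    (a, b, r), count = votes.most_common(1)[0]
    return a, b, r, count
```

Given twelve lattice points lying exactly on a circle of radius 5 centered at (10, 10), `best_circle(points, [4, 5, 6])` recovers that circle with all twelve votes.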

Ma et al. [7] first roughly localize the iris region in the original image, and then use edge detection and the Hough transform to exactly detect the inner and outer boundaries within the determined region. The image is projected in the vertical and horizontal directions to approximately compute the center coordinates \( (X_{c} ,Y_{c} ) \) of the pupil. Since the pupil is generally darker than its surroundings, the center coordinates of the pupil are taken as the coordinates corresponding to the minima of the two projection profiles:

\[ X_{c} = \arg\min_{x} \left( \sum_{y} I(x,y) \right), \qquad Y_{c} = \arg\min_{y} \left( \sum_{x} I(x,y) \right) \]

where \( X_{c} \) and \( Y_{c} \) represent the coordinates of the pupil’s center in the original image \( I(x,y) \). A more accurate estimate of the pupil center is then computed. Their technique considers a region of size \( 120 \times 120 \) centered at this point, selects a reasonable threshold adaptively from the gray-level histogram of the region, and binarizes the region. The centroid of the resulting binary region is taken as the new estimate of the pupil center. Then, the exact parameters of the two circles are calculated using edge detection; the authors use the Canny operator together with the Hough transform in their experiments.
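The two-step center estimate described above can be sketched as follows. The function names are hypothetical, and the fixed `threshold` is a simplifying assumption standing in for the adaptive, histogram-based threshold that Ma et al. describe; the default `half_size=60` corresponds to the \( 120 \times 120 \) window. The image is a list of rows indexed as `img[y][x]`.

```python
def coarse_pupil_center(img):
    """Indices of the minima of the vertical and horizontal projections."""
    height, width = len(img), len(img[0])
    col_profile = [sum(img[y][x] for y in range(height)) for x in range(width)]
    row_profile = [sum(img[y]) for y in range(height)]
    xc = min(range(width), key=col_profile.__getitem__)
    yc = min(range(height), key=row_profile.__getitem__)
    return xc, yc

def refine_pupil_center(img, xc, yc, half_size=60, threshold=60):
    """Centroid of the below-threshold pixels in a window around (xc, yc).

    The fixed threshold is an assumption; the original method chooses it
    adaptively from the gray-level histogram of the window.
    """
    height, width = len(img), len(img[0])
    xs, ys = [], []
    for y in range(max(0, yc - half_size), min(height, yc + half_size)):
        for x in range(max(0, xc - half_size), min(width, xc + half_size)):
            if img[y][x] < threshold:  # binarisation: dark pixels are pupil
                xs.append(x)
                ys.append(y)
    if not xs:  # nothing below threshold: keep the coarse estimate
        return float(xc), float(yc)
    return sum(xs) / len(xs), sum(ys) / len(ys)
```

The coarse estimate is cheap but sensitive to other dark structures such as eyelashes, which is why the centroid of the thresholded window is used to refine it.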

In related work, Masek [10] proposed an eyelid segmentation method in which the iris and the eyelids are separated using the Hough transform. In contrast to [8], Mahlouji and Noruzi [9] used the Hough transform to localize all the boundaries between the upper and lower eyelid regions. Cui et al. [3] proposed two algorithms for detecting the upper and lower eyelids and tested them on the CASIA dataset; their technique successfully detects the edges of the upper and lower eyelids once the eyelashes and eyelids have been segmented. Ling et al. [6] proposed an algorithm that uses eyelid detection to segment the iris from images of very low quality. Being unsupervised, the algorithm described in [6] does not require a training phase. Radman et al. [11] used the live-wire technique to localize the eyelid boundaries based on the intersection points between the eyelids and the limbic boundary.

3 Proposed Work

Most segmentation methods assume that the iris is circular [4, 7, 12], but in practice the visible iris is not completely circular because it is often occluded by the eyelids. For accurate iris recognition, these occluded regions must be removed. Iris segmentation starts by detecting the pupillary and limbic boundaries of the iris. Next, the upper and lower eyelids are detected using the Canny edge detector followed by 2-means clustering. The proposed scheme is shown in Fig. 1 and the overall algorithm is given in Algorithm 1.

After the pupil and iris boundaries are detected, we obtain an annular iris image as shown in Fig. 1b. A Wiener filter is applied to smooth the annular iris image, which reduces noise so that only prominent edges such as the eyelid boundaries are detected. The Canny edge detector is then applied to the smoothed image. Next, we apply a horizontal filter to the connected components formed by the edges. This filter computes the horizontality of each connected component and keeps only those edges that have a high horizontality factor, discarding the others. Edges formed by eyelid boundaries have a high horizontality factor.
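The horizontal filtering step can be sketched as below, assuming a binary edge map (for instance, the output of the Canny detector) is already available as a list of rows of 0/1 values. The text does not define the horizontality factor, so this sketch assumes one plausible measure, the ratio of bounding-box width to bounding-box height of each 8-connected component; the function names and the threshold `min_factor` are likewise hypothetical.

```python
from collections import deque

def connected_components(edge_map):
    """8-connected components of a binary edge map, as lists of (row, col)."""
    rows, cols = len(edge_map), len(edge_map[0])
    seen = [[False] * cols for _ in range(rows)]
    components = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not edge_map[r0][c0] or seen[r0][c0]:
                continue
            seen[r0][c0] = True
            queue, pixels = deque([(r0, c0)]), []
            while queue:  # breadth-first flood fill
                r, c = queue.popleft()
                pixels.append((r, c))
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        rr, cc = r + dr, c + dc
                        if (0 <= rr < rows and 0 <= cc < cols
                                and edge_map[rr][cc] and not seen[rr][cc]):
                            seen[rr][cc] = True
                            queue.append((rr, cc))
            components.append(pixels)
    return components

def horizontality(pixels):
    """Assumed measure: bounding-box width divided by bounding-box height."""
    rs = [r for r, _ in pixels]
    cs = [c for _, c in pixels]
    return (max(cs) - min(cs) + 1) / (max(rs) - min(rs) + 1)

def keep_horizontal_edges(edge_map, min_factor=2.0):
    """Keep only components whose horizontality is at least min_factor."""
    out = [[0] * len(edge_map[0]) for _ in edge_map]
    for pixels in connected_components(edge_map):
        if horizontality(pixels) >= min_factor:
            for r, c in pixels:
                out[r][c] = 1
    return out
```

With this measure, a long horizontal edge segment is retained while a vertical segment of similar length, such as an eyelash, is discarded.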

After the horizontal filter has been applied, the eyelid boundaries are roughly detected. To categorize them as upper and lower eyelid boundaries, we apply the 2-means clustering technique, a special case of \( k \)-means clustering [8] with \( k = 2 \). This is a fast and simple clustering algorithm that has been adopted in many applications, including image segmentation. The algorithm is defined for the observations \( \{ x_{i} :i = 1, \ldots ,L\} \), where the goal is to partition the observations into \( K \) groups with means \( \bar{x}_{1} ,\bar{x}_{2} , \ldots ,\bar{x}_{K} \) such that

\[ \sum_{k = 1}^{K} \sum_{i \in C_{k}} \left\| x_{i} - \bar{x}_{k} \right\|^{2} \]

is minimized, where \( C_{k} \) denotes the set of indices of the observations assigned to group \( k \). In the general algorithm, \( K \) is then increased gradually and the algorithm terminates when a criterion is met. In our problem, the number of groups to be formed is two, and thus we take \( K = 2 \).
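A minimal sketch of the 2-means step on edge points is given below. It uses Lloyd's iteration with Euclidean distance on `(x, y)` coordinates, where `y` is the row index and therefore grows downward. The choice of features, the seeding from the topmost and bottommost points and the function names are assumptions made for illustration, since the text does not specify them.

```python
def sq_dist(p, q):
    """Squared Euclidean distance between two 2-D points."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2

def two_means(points, max_iter=100):
    """Lloyd's algorithm with K = 2 on (x, y) edge points.

    Returns (labels, means); label 0 is the cluster seeded from the point
    with the smallest y (topmost), label 1 from the largest y (bottommost).
    """
    # Assumed initialisation: seed with the topmost and bottommost points.
    means = [min(points, key=lambda p: p[1]), max(points, key=lambda p: p[1])]
    labels = None
    for _ in range(max_iter):
        # Assignment step: nearest mean.
        new_labels = [
            0 if sq_dist(p, means[0]) <= sq_dist(p, means[1]) else 1
            for p in points
        ]
        if new_labels == labels:  # converged: assignments unchanged
            break
        labels = new_labels
        # Update step: recompute each mean from its members.
        for k in (0, 1):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                means[k] = (
                    sum(x for x, _ in members) / len(members),
                    sum(y for _, y in members) / len(members),
                )
    return labels, means
```

Seeding from the extreme rows biases the two clusters toward an upper/lower split, which matches the intended separation of the two eyelids.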

To delineate the eyelid boundaries, a quadratic curve is fitted to each of the two sets of edge points obtained after clustering. Once the boundaries are found, the area above the upper curve is taken as the upper eyelid and the area below the lower curve as the lower eyelid. These regions are unwanted and thus need to be removed before the recognition process.
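The curve fitting and suppression can be sketched as follows, assuming each curve has the form \( y = ax^{2} + bx + c \) in image coordinates (row index \( y \) increasing downward) and is fitted by ordinary least squares. The text does not state the fitting procedure, so the normal-equation solver and the function names here are illustrative assumptions. At least three points with distinct \( x \) values are required.

```python
def fit_quadratic(points):
    """Least-squares fit of y = a*x^2 + b*x + c to (x, y) points."""
    # Normal equations (A^T A) [a, b, c]^T = A^T y, rows A_i = [x^2, x, 1].
    s = [sum(x ** k for x, _ in points) for k in range(5)]      # sums of x^0..x^4
    t = [sum(y * x ** k for x, y in points) for k in range(3)]  # sums of y*x^0..y*x^2
    m = [
        [s[4], s[3], s[2], t[2]],
        [s[3], s[2], s[1], t[1]],
        [s[2], s[1], s[0], t[0]],
    ]
    # Gaussian elimination with partial pivoting on the augmented matrix.
    for i in range(3):
        pivot = max(range(i, 3), key=lambda r: abs(m[r][i]))
        m[i], m[pivot] = m[pivot], m[i]
        for r in range(i + 1, 3):
            f = m[r][i] / m[i][i]
            for c in range(i, 4):
                m[r][c] -= f * m[i][c]
    # Back substitution.
    coeffs = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        acc = m[i][3] - sum(m[i][c] * coeffs[c] for c in range(i + 1, 3))
        coeffs[i] = acc / m[i][i]
    return tuple(coeffs)  # (a, b, c)

def eyelid_mask(width, height, upper, lower):
    """True where a pixel lies between the two fitted curves (kept as iris)."""
    def curve(coeffs, x):
        a, b, c = coeffs
        return a * x * x + b * x + c
    return [
        [curve(upper, x) <= y <= curve(lower, x) for x in range(width)]
        for y in range(height)
    ]
```

Pixels where the mask is `False` lie above the upper curve or below the lower curve and would be excluded from normalization and feature extraction.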

4 Experimental Results

The proposed algorithm is implemented and tested on the BATH [1] and CASIAv3 [2] databases, and we observe that the eyelids are successfully detected in most images. Simulation results show an upper eyelid localization rate of 92.74 % for BATH and 94.62 % for CASIAv3, while the lower eyelid localization rate is 83.15 % and 87.64 % for the same databases, respectively. The experimental data are tabulated in Table 1, and Fig. 2 shows some eyelid detection results for the stated databases.

5 Conclusion

Occlusion due to eyelids degrades the performance of an iris biometric system. To improve the accuracy of the system, the proposed method detects the eyelid boundaries so that the occluded regions can be removed before the recognition process. In this paper, the 2-means clustering algorithm is adopted in iris localization, and an algorithm is presented that efficiently detects the eyelid boundaries. Upon implementing the proposed eyelid detection algorithm on the publicly available iris databases CASIAv3 and BATH, it is observed that both the upper and lower eyelids are properly detected for most of the images.

References

1. Bath University Database: http://www.bath.ac.uk/elec-eng/research/sipg/irisweb

2. Chinese Academy of Sciences’ Institute of Automation (CASIA) Iris Image Database v3.0: http://www.cbsr.ia.ac.cn/english/IrisDatabase.asp

3. Cui, J., Wang, Y., Tan, T., Ma, L., Sun, Z.: A fast and robust iris localization method based on texture segmentation. In: Defense and Security, pp. 401–408. International Society for Optics and Photonics (2004)

4. Daugman, J.G.: High confidence visual recognition of persons by a test of statistical independence. IEEE Trans. Pattern Anal. Mach. Intell. 15(11), 1148–1161 (1993)

5. Jain, A.K., Flynn, P., Ross, A.A. (eds.): Handbook of Biometrics. Springer (2007)

6. Ling, L.L., de Brito, D.F.: Fast and efficient iris image segmentation. J. Med. Biol. Eng. 30(6), 381–391 (2010)

7. Ma, L., Tan, T., Wang, Y., Zhang, D.: Personal identification based on iris texture analysis. IEEE Trans. Pattern Anal. Mach. Intell. 25(12), 1519–1533 (2003)

8. MacQueen, J.: Some methods for classification and analysis of multivariate observations. In: Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, vol. 1, pp. 281–297. University of California Press (1967)

9. Mahlouji, M., Noruzi, A.: Human iris segmentation for iris recognition in unconstrained environments. IJCSI Int. J. Comput. Sci. Issues 9(3), 149–155 (2012)

10. Masek, L.: Recognition of human iris patterns for biometric identification. Thesis, University of Western Australia (2003)

11. Radman, A., Zainal, N., Ismail, M.: Efficient iris segmentation based on eyelid detection. J. Eng. Sci. Technol. 8(4), 399–405 (2013)

12. Wildes, R.P.: Iris recognition: an emerging biometric technology. Proc. IEEE 85(9), 1348–1363 (1997)

© 2016 Springer India

Sahu, B., Barpanda, S.S., Bakshi, S. (2016). Low Cost Eyelid Occlusion Removal to Enhance Iris Recognition. In: Nagar, A., Mohapatra, D., Chaki, N. (eds) Proceedings of 3rd International Conference on Advanced Computing, Networking and Informatics. Smart Innovation, Systems and Technologies, vol 43. Springer, New Delhi. https://doi.org/10.1007/978-81-322-2538-6_34

DOI: https://doi.org/10.1007/978-81-322-2538-6_34

Publisher Name: Springer, New Delhi

Print ISBN: 978-81-322-2537-9

Online ISBN: 978-81-322-2538-6
