Abstract

Whether in the shape of real-life photographs, symbol-like drawings, or even avatar creations, the way a human face expresses and thereby transports a multitude of personal emotional states has interested researchers for decades. Starting with the early works of Darwin and moving on to large stimulus batteries such as Ekman and Friesen’s facial expression battery in the early 1970s, faces of all kinds and shapes have been well studied regarding the various responses they elicit in someone perceiving a certain facial expression. Without a doubt, static displays of emotion capture an essential share of what is going on when our brains process facial expressions. This has given rise to many powerful applications in modern life, such as facial symbols in text and email messages, or eyes preventing customers from shoplifting. However, calls for ecological validity, together with the advancements in methods available for testing and recording human physiological responses, have supported a more dynamic display of emotions. Such displays exist as short morphing facial expressions of a few seconds or short video clips depicting actors with emotional and/or neutral facial expressions. These methods enable a deeper understanding of the temporal dynamics in facial processing and, against the background of impaired facial emotion perception, open the way for the investigation of possible differential impairments in patients. Recently, we have moved a step further toward including facial expressions in naturalistic displays of emotionality in which speech content and prosody add to a multimodal perception and processing of emotions. This chapter summarizes the history of using facial expressions in empathy research and outlines how dynamic stimuli contribute to an advanced understanding of the dynamics of facial expressions.

6.1 Introduction

Every day, we experience a multitude of situations in which we infer more or less automatically, how people around us may be feeling. Some of them make it easy for us, verbally informing us by telling straight away whether they are in a good or bad spirit. With others, it is more subtle, they just ‘look sad.’ And even on the phone, a cheerful tone of voice may guide us to think that the person on the other end could be in a good mood. Yet, simply recognizing or ‘knowing’ about someone else’s state of mind does not do it all. We have to experience to some extent the other person’s feeling in order to be emotionally engaged ourselves, in order to feel some relevance to act upon. What happens when we do this? When we share the emotion of the other person and show a proactive and caring response to their happiness, sadness, anger, or surprise, this is called empathy. Empathy is a central construct in social cognition and is defined as the ability to recognize and adequately react emotionally to an affective message transferred by a human counterpart by sharing—to a certain degree—their emotion (de Vignemont and Singer 2006).

To study empathy and those subprocesses (e.g., emotion recognition) that eventually lead to a state of mutual understanding and social coherence, experiments can target the cues that transport emotions, as mentioned above. The face is such an empathy cue, and because the face is a central feature of a human being, facial expressions are relatively easily accessible as a source of experimental stimuli. Whether in the shape of real-life photographs (Ekman and Friesen 1976), face symbols (Fox et al. 2000), or avatar creations (Moser et al. 2007), studies have used a multitude of facial aspects as stimulus material. The basic idea of these experiments is to study participants’ responses to such stimuli, e.g., valence ratings, physiological responses, or brain activation, and to characterize them as correlates of empathy. However, not all studies in which participants were exposed to facial stimuli targeted empathy explicitly; many focused on specific aspects of it, such as emotion recognition. In order to keep the scope of this chapter on experiments that meet the definition of empathy, we will only briefly mention studies that target single aspects or components of the social construct.

We will first present different theoretical assumptions regarding empathy and then try to sketch out an overview consisting of experiments that explicitly included facial expressions as stimuli. We then introduce some of our own studies that targeted empathy with a more holistic approach, taking into account different components of empathy in a multileveled approach. We conclude by presenting work in which we created and applied ecologically valid naturalistic stimulus material of emotional and of neutral facial expressions and assessed empathy within a multimodal setup along with speech prosody and content.

6.2 Theoretical Considerations of Empathy

What is empathy in the first place and what role do facial expressions play more specifically?

Empathy, as a mental process, which is aimed at establishing social coherence, is heterogeneous (Batson 2009). As Batson states, at least eight different phenomena relate to a typical situation in which we may feel empathic, and therefore, all correspond to the various definitions of empathy. Without the claim for completeness, we will roughly cluster them into three groups: Definitions that lead to theories which (a) focus on emotional mirroring and shared representations and that include simulation aspects, that (b) highlight cognitive-oriented aspects based on perspective-taking as well as theory-theories of mind, either focusing on the imagination of being another person or imagining to be in the other person’s situation, and lastly, theories that (c) focus on responses that are not necessarily isomorphic (such as pity in response to sadness rather than sadness itself), bordering on a conceptual overlap with sympathy.

Definitions within group (a), e.g., ‘knowing another person’s internal state’ and ‘posture or expression matching,’ are much influenced by research investigating the (human) mirror neuron system (hMNS). Its basic idea aims at disentangling the processes that become relevant when observing another person performing an action, and it is based on revolutionary macaque monkey work by the Italian neuroscientists di Pellegrino and colleagues (1992) and Rizzolatti and colleagues (1996), who found premotor cortex neuron excitation to be shared by both observing and executing actions. Developed further and in its current shape, the hMNS is proposed to underlie humans’ ability to understand actions, but also beliefs and feelings, by simulating aspects of the respective observed construct (for review, please refer to Blakemore and Decety 2001 as well as Grezes and Decety 2001). This simulation aspect is also central in the multitude of ‘perception-action models’ of empathy that date back to the early works of Lipps (1903), who introduced empathy or ‘Einfühlung’ at the dawn of the twentieth century as a concept of intense feeling that is related not to the self but to another object.

An early theory within this framework that explicitly introduced the use of facial expressions in the experimental setting to assess empathy was the Active Intermodal Mapping Hypothesis (AIM) proposed by Meltzoff and colleagues (Meltzoff and Moore 1977), in which facial mapping was proposed to be based on intermodal mapping, i.e., matching-to-target behavior, originally carried out by infants interacting with their first bonding objects. This was already described by the social psychologist McDougall (1908). Preston and de Waal (2002) further developed the assumptions of the hMNS regarding empathy into a complex perception-action model. They proposed a shared neural network responsible for navigating in a physical environment that also helps us to navigate socially.

Group (b) is composed of empathy definitions focusing on the perspective-taking aspects that are needed to put oneself into the shoes of the other person. They can be described as more general and cognitively driven, as in the concepts of mindreading, ‘Theory-of-mind’ (Frith and Frith 1999; Perner 1991), or mentalizing (Hooker et al. 2008), which cover cognitive aspects of perspective taking and processing of mental states. Hence, empathy was divided into a more emotional and a more cognitive part. Some authors even equate ‘Theory-of-mind’ with cognitive empathy. Others propose affective aspects of ‘Theory-of-mind’ to be supportive of establishing empathy (Hooker et al. 2008), such that people who use emotional information when inferring the mental states of others show higher empathy than those who do not use this information. A differentiation into more cognitive versus more emotional aspects of empathy can also be found on a neural level: recent neuroimaging studies (Fan et al. 2011) present activation networks that are either consistently activated by affective-perceptive forms of empathy (e.g., right anterior insula, right dorsomedial thalamus, supplementary motor area, right anterior cingulate cortex, midbrain) or consistently activated by cognitive-evaluative forms of empathy (e.g., midcingulate cortex, orbitofrontal cortex, left dorsomedial thalamus), while the left anterior insula represents a shared neural region.

Lastly, group (c) definitions point to aspects of empathy that do not necessarily involve isomorphic feelings. This is sometimes termed ‘empathic concern’ (Batson 1991) and is, according to some classifications (Preston et al. 2007), positioned toward a more basic response level of empathy, not involving matching of the emotional state. These authors propose a classification system along the axes of ‘self-other distinction,’ ‘state matching,’ and ‘helping,’ which enables yet another categorization in which the existing definitions and theories of empathy can be placed (Table 6.1).

6.3 Neuroscientific Theories of Empathy

The rise of the neurosciences at the turn of the century has certainly influenced conceptual approaches to empathy. The ‘social brain’ (Adolphs 2009; Dunbar and Shultz 2007; Gobbini et al. 2007; Kennedy and Adolphs 2012) is a term that is both innovative and promising and, at the same time, vague and a target of criticism, e.g., regarding its anthropocentric constructions (Barrett et al. 2007). Within the framework of a new and rapidly developing ‘social neuroscience’ branch, empathy theories that explain the social construct on a neural level have become popular and influential, as already evident in the previous section’s overview. Regarding simulation aspects, it is a common notion in the neuroscience of empathy to assume certain neural networks that correspond both to an emotional state originating in the self and to an emotional state originating in observing or imitating another person. Perspective-taking aspects are included when proposing distinct neural networks that explicitly serve self versus other processing (Ochsner et al. 2004). The list of studies that integrate brain imaging techniques into the experimental investigation of social constructs could be continued. Apart from testing the existing constructs and definitions, the social neurosciences have provided physiological evidence of phenomena that up to then had remained a subject of verbal self-report or measurements in the periphery (e.g., galvanic skin response or heartbeat). They have also extended our knowledge about mental processes while providing heuristic constructs that sketch out new frameworks in which empathy and related constructs can be placed. For example, in the ‘social-emotional-processing-stream’ by Ochsner (2008), the focus is on intertwining social and emotional phenomena through which social and emotional input is encoded, understood, and acted upon.
Another prerequisite for inclusion in the stream is that phenomena have a measurable and reliable neural correlate as well as a significant behavioral end. On a functional level, Ochsner differentiates between bottom-up and top-down processing within these areas and connects a neural network to these functional abilities. While structures such as the superior temporal sulcus integrate incoming information and evaluate it, other areas central in emotion processing such as the extended amygdala complex and the anterior insula are proposed to serve emotion recognition aspects as well as remapping by relaying interoceptive processing. The latter is proposed by Adolphs (2009) in his review on the ‘social brain.’ At this point, it may be appropriate to discuss the amygdala, this almond-shaped group of nuclei, with specific regard to facial expression. Lesion studies showed early on that bilateral damage to the amygdalae can result in impaired recognition of emotional facial expressions (Adolphs and Tranel 2004), not least due to their strong anatomical connections to the visual system, as found in macaque monkeys (Freese and Amaral 2005; Stefanacci and Amaral 2002). This finding paved the way to the structures’ evaluative function, specifically in mostly appetitive and aversive emotional processing (Aggleton 2000; Balleine and Killcross 2006; Paton et al. 2006), but see other studies (Moessnang et al. 2013) that show its role in aversive conditioning of other modalities (here: olfaction). Moreover, a more general role in basic arousal and vigilance functions (Whalen 1999) has been proposed, even on an unconscious level (Whalen et al. 1998). Work by Kennedy and Adolphs (2012) includes the amygdala’s functions and relevance in an ‘amygdala network’ that coexists with a ‘mentalizing,’ an ‘empathy,’ as well as a ‘mirror/simulation/action-perception’ network.
Each of these networks, including the amygdala’s, consists of structures that have been recognized either because of their significance in lesion studies or repeated findings in functional imaging studies focusing on social cognition. Again, due to its connections to occipito-temporal cortices, an exposed role in visually focused emotional processing, especially regarding a broad role in salience detection and evaluation, is stated, while the authors stress the importance of the amygdala’s role in networks that it subserves, rather than tagging it with a stand-alone functionality.

While neuroscientific models are impressive and can integrate a lot of psycho-biological theories, the underlying method must not be over-estimated. It has to be kept in mind that structures do not exclusively correspond to a single function. Neural structures and networks that are activated when subjects experience a certain emotional state, respond to the expressed emotion of another person, or try to cognitively infer the mental state of someone else are convergent zones that cross a statistical threshold after averaging a number of trials and subjects to increase the signal-to-noise ratio. They cannot provide insight into individual phenomenological experiences, they do not necessarily correspond to behavior (Ochsner 2008), and they are not exclusive in their nature but take part in many other related and sometimes (against the current state of knowledge) unrelated concepts.
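The averaging step mentioned above can be illustrated with a standard textbook idealization. This is only a sketch under stated assumptions, not a description of any specific analysis pipeline: assume that each of $N$ trials measures the same true response $s$ plus independent, zero-mean noise $\varepsilon_i$ with standard deviation $\sigma$ (the symbols $s$, $\varepsilon_i$, $\sigma$, and $N$ are introduced here for illustration only).

$$
\bar{x} = \frac{1}{N}\sum_{i=1}^{N} (s + \varepsilon_i), \qquad
\mathrm{E}[\bar{x}] = s, \qquad
\mathrm{SD}[\bar{x}] = \frac{\sigma}{\sqrt{N}}, \qquad
\mathrm{SNR}_N = \frac{|s|}{\sigma/\sqrt{N}} = \sqrt{N}\,\mathrm{SNR}_1
$$

Under these assumptions, quadrupling the number of trials doubles the signal-to-noise ratio. The gain concerns the averaged response only, which is consistent with the point above that such averaged maps do not reveal individual phenomenological experiences.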

Concluding, twenty years into empathy research, the seemingly simple and straightforward definition by de Vignemont and Singer (2006) that we presented in the introduction is by far not the only one that exists nor does one unifying theory explain it all. However, as these authors state, their definition is one that narrows down empathy from a broader concept that includes all kinds of affective reactions to someone else’s state of mind including cognitive perspective taking to one that explicitly requires an affective state that is isomorphic to another person’s state and is causally related to the latter while being able to differentiate into self and other within this process. The definition is presented with a theory of early and late contextual appraisal occurring either simultaneously with the emotional cue presentation (early appraisal model), or later on, modulating an earlier automatically elicited response to the emotional cue (late appraisal model). Summarizing, emotional cues profit from a contextual embedding so they can be interpreted correctly and justify empathy by the receiver.

6.4 Studying Empathy: From Theory into Experiments

How can empathy be operationalized to be studied and what is the difference between the multitudes of study protocols against the specific background of eliciting empathy by showing facial expressions?

Experimental investigations in the field of social cognition have become increasingly popular. A recent PubMed search for articles containing ‘emotional facial expressions empathy’ yielded almost 100 hits, and this number increased by almost 50 % when leaving out the keyword ‘emotional.’ As this chapter can only give an overview of the different approaches to studying empathy, we group them into studies that investigate various empathic responses by presenting facial displays of emotions such as pain, disgust, or fear, and studies that research motor aspects of empathy. These studies explicitly include a definition of empathy that goes beyond the mere perception, recognition, or evaluation of a facial stimulus, all of which are relevant to empathy without explicitly aiming to assess it. We will present exemplary studies that are representative of their group and conclude by introducing recent meta-analyses that shed light on the neural correlates of (not only) facially transported empathy.

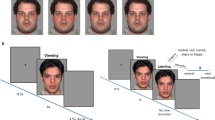

A direct stimulation approach assumes that emotional states can be transferred via various channels such as prosody, body language, facial expressions, empathy-eliciting stories, or, with respect to pain empathy, the presentation of harmed body parts. The underlying assumption, especially in studies targeting the neural correlates, was that feelings or emotions should be neurally represented by a network sensitive to the subjective feeling of someone else’s emotion as well as to the compassionate feeling for them. Applied to pain, this means that watching a person being hurt should trigger, at least to some degree, the other person’s feeling. This is even suggested to take place on an automatic and unconscious level (Adolphs 2009). Although the majority of studies investigating the responses to pain used visual displays of body extremities (e.g., feet or hands) in painful positions or undergoing painful treatment (Decety et al. 2008; Lamm et al. 2011; Morrison et al. 2013; Singer et al. 2004), some studies provided facially expressed pain (Botvinick et al. 2005; Lamm et al. 2007) and some used both (e.g., Vachon-Presseau et al. 2012; Fig. 6.1).

Modified from Vachon-Presseau et al. (2012), with friendly permission from Elsevier (license number 3243541056141)

Other emotions in direct stimulation approaches included disgust (Jabbi et al. 2007; Wicker et al. 2003), happiness (Hennenlotter et al. 2005; Jabbi et al. 2007), sadness (Harrison et al. 2006), or anger (de Greck et al. 2012), just to name a few. Apart from presenting these faces directly, gaze directionality (directed vs. averted) was also sometimes measured (Schulte-Rüther et al. 2007). Gaze did not yield activation differences on a neural level but showed effects on electrophysiological correlates of face perception as well as behavioral effects such as higher recognition accuracy (Soria Bauser et al. 2012) and higher emotion intensity ratings (Schulte-Rüther et al. 2007) for directed faces.

Besides the stimulus material, further methodological factors are relevant: What is the instruction for the participant, which responses are measured and recorded and what exactly defines a response as empathic?

Some studies merely instructed participants to passively view the presented emotions; for example, Wicker et al. (2003) presented subjects with visual displays of disgust and pleasantness as well as actual affective odors of disgusting or pleasant stimuli, to compare empathy to own emotional experiences. Although the task instruction was not to explicitly empathize, neural activation patterns suggested a shared network of own and vicarious affective experience when comparing observed with experienced disgust.

Others (Hennenlotter et al. 2005; Kircher et al. 2013) focused on executing and observing certain (emotional) facial expressions. Again, a common neural circuit of motor-, somatosensory, and limbic processing emerged, which is important for empathic understanding. In a recent study by Moore et al. (2012), it was shown that EEG mu component desynchronization took place toward happy and disgusted facial expressions, representing action simulation. This was irrespective of empathic task instruction (either try to experience emotions felt and expressed by the facial stimuli or to rate the faces’ attractiveness). In a task that consisted of observing or imitating emotional facial expressions, superior temporal cortex, amygdala, and insula may reflect the process of relaying information from action representations into emotionally salient information and empathy (Carr et al. 2003). Using an imitation/execution task, Braadbaart et al. (2014) associated imitation accuracy with trait empathy and replicated central structures of the human mirror neuron system during imitation. In addition, they could associate external trait empathy with brain activation in somatosensory regions, intraparietal sulcus, and premotor cortex during imitation, while imitation accuracy values correlated with activation in insula and motor areas. Shared activity was found in premotor cortex. Again, these findings strengthen the role of simulation or ‘action plans’ for empathy via a joint engagement of premotor and somatosensory cortices as well as the insula, holding an important role in socially regulating facial expressions.

Apart from studies based on human mirror neuron system assumptions, in which participants’ main task was motor-related (observing, imitating, or expressing certain emotional states), other studies required explicit ratings of the emotions presented. For example, Harrison et al. (2006) piloted their fMRI study by presenting emotional expressions in combination with different pupil sizes and measuring behavioral responses regarding valence, intensity, and attractiveness, but concentrated on age judgments during the functional measurement, combined with pupil diameter measurements. Pupil size influenced intensity ratings of sad emotional facial expressions and was mirrored by the participants, which was interpreted as a sign of emotional contagion. Another study (Hofelich and Preston 2012) challenged this view by stating that facial mimicry and conceptual encoding occurred automatically as a natural consequence of attended perception but should not be equated with trait empathy. Lamm et al. (2008) provided physiological and explicit rating data after focusing on electromyography to assess automatic facial mimicry in response to painful facial expressions in participants who were explicitly told either to imagine being the observed person or to put themselves into the situation of the observed person. They focused on the self-other differentiation within the empathy concept by manipulating the point of reference (see also Schulte-Rüther et al. 2008). Here, participants indicated the pain’s intensity and (un)pleasantness, which were not associated with the respective point of reference but showed sensitivity to whether the painful stimulation was associated with an effective treatment or not. Brain imaging results (Lamm et al. 2007) revealed parts of the so-called ‘pain matrix’ (Derbyshire 2000) in the insula, anterior cingulate cortex, and the secondary somatosensory cortex to be activated as a function of perspective taking (here: the contrast ‘self’ vs. ‘other’).

Other groups used an explicit empathic task instruction to feel with another person’s facial expression and share their emotional state (de Greck et al. 2012). Participants then consciously rated how well they had managed to do so. The results showed the inferior frontal cortex as well as the middle temporal cortex to be involved in intentional empathy that complemented the literature regarding more controlled aspects of empathy.

Although task instructions in empathy studies varied considerably, a recent neuroimaging meta-analysis by Fan et al. (2011) explicitly required one of the following criteria to be met by the study design: observing an emotional or sensory state of another person in a defined empathic context; sharing the emotional state of this other person or imagining the other’s feeling, and actively judging the shared or imagined state, respectively; or brain activation that could be associated with a dispositional measure of empathy (e.g., a questionnaire). As already stated, they summarized 40 fMRI studies and presented several empathy networks, cognitively driven or affectively driven, respectively, with a neural overlap in the left anterior insula.

One essential problem was, however, still obvious in this meta-analysis: the definition and operationalization of empathy remained heterogeneous, and only a few studies included more than one or two aspects of the complex construct. As a consequence, empathy risked being lost in experiments targeting only emotion recognition or mere emotion perception without controlling for the subjective experience of the participants. In several studies, we therefore targeted three components of empathy experimentally, namely emotion recognition, affective responsiveness, and emotional perspective taking. The major advantage of this novel task combination was the simultaneous assessment of the different empathy components within one experiment including control tasks. This was, last but not least, the basis for studying specific impairments in disorders associated with altered social cognitive functions such as paranoid schizophrenia (Derntl et al. 2009, 2012), and it enables a more detailed characterization of empathy deficits in this disorder while controlling for the well-known emotion recognition deficits in patients. For the emotion recognition task, we presented 60 color images of Caucasian facial expressions of five basic emotions (happiness, sadness, anger, fear, disgust) and neutral expressions (Gur et al. 2002). Half of the stimuli were used for emotion recognition, the other half for the age discrimination control task. Subjects either identified the depicted emotion by selecting from two emotion categories or judged which of two age decades was closer to the poser’s age, respectively. The affective responsiveness task included 150 short written sentences describing real-life emotional situations that were expected to induce basic emotions (the same emotions as described above) and situations that were emotionally neutral (25 stimuli per condition). Participants were asked to imagine how they would feel if they were in those situations.
Again, to facilitate task comparisons, responses required subjects to choose the correct emotional facial expressions from two presented alternatives. For the emotional perspective taking task, participants viewed 60 items depicting scenes with two Caucasians involved in social interaction reflecting five basic emotions and neutral scenes (10 stimuli per condition). The face of one person was masked, and participants were asked to infer the respective emotion of the covered face. Responses were made similar by presenting two different emotional facial expressions or a neutral expression as alternative response categories.
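The stimulus counts reported for the three tasks can be cross-checked with a few lines of Python. The following is only an illustrative sketch: the names `CONDITIONS`, `TASKS`, and `check_counts` are hypothetical and not part of the original task software, and the per-condition split of the 60 face stimuli is left open because it is not stated in the text.

```python
# Six conditions as described in the text: five basic emotions plus neutral.
CONDITIONS = ("happiness", "sadness", "anger", "fear", "disgust", "neutral")

# Totals and per-condition counts as reported; None marks a value the text
# does not state (hypothetical layout, for illustration only).
TASKS = {
    "emotion_recognition": {"total": 60, "per_condition": None},
    "affective_responsiveness": {"total": 150, "per_condition": 25},
    "perspective_taking": {"total": 60, "per_condition": 10},
}


def check_counts(tasks, conditions):
    """Return, per task, whether per_condition * n_conditions equals total.

    Tasks without a stated per-condition count map to None.
    """
    result = {}
    for name, spec in tasks.items():
        per_condition = spec["per_condition"]
        if per_condition is None:
            result[name] = None
        else:
            result[name] = per_condition * len(conditions) == spec["total"]
    return result


for task, consistent in check_counts(TASKS, CONDITIONS).items():
    print(task, consistent)
```

With six conditions, 25 stimuli per condition give the reported 150 sentences and 10 stimuli per condition give the reported 60 scenes, so both stated designs are internally consistent.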

The task revealed the differential underlying cerebral correlates of empathy components (Derntl et al. 2010), with the amygdala playing a major role. Generalizing over tasks and gender, activation in the inferior frontal and middle temporal gyri, the left superior frontal gyrus and the left posterior as well as middle cingulate gyrus, and the cerebellum characterized the common nodes of the empathy network.

The development of neuroimaging techniques has certainly made it possible to map and localize the structures and networks underlying empathy on a neural level (see a recent meta-analysis by Moya-Albiol et al. 2010). Still, an obvious lack of homogeneous operationalizations across studies and of (conscious) accessibility in an experimental setting poses difficulties for neuroscientific approaches to empathy. Also, the existing subconceptualizations within the field of social cognition, and specifically empathy, suggest still new categorization options for the processes leading to empathy. Hypothesis-free approaches to data analysis could be one option to further advance the field and might present one solution to this dilemma. One example is the work by Nomi et al. (2008), who analyzed their fMRI data from a facial expression viewing paradigm with principal component analysis and presented principal components reflecting distinct neural networks for ‘mediating facial expressions,’ ‘identification of expressed emotions,’ ‘attention to these expressed emotions,’ and ‘sense of an emotional state.’

6.5 Facial Expressions in Social Communication: Multimodal Empathy

As stated before, the contents leading to empathic responses are transmitted via different communication channels. As described in the previous section, numerous studies used static facial displays to study empathy and its subcomponents (such as emotion recognition; Adolphs 2002). In the last decade, increasing requests for ecological validity, together with advancements in the methods available for testing and recording human physiological responses, have demanded dynamic displays of emotion rather than static ones. The call came especially from those research groups that began to study static and dynamic modalities within one experiment and encouraged the study of ‘emotions in motion’ (Trautmann et al. 2009). This was motivated by the interest in the dynamics of sensory processing and biological motion, but it also implies a high relevance for empathy. The specific tasks ranged from passive viewing (Sato et al. 2004) and emotion recognition (Trautmann et al. 2009; Weyers et al. 2006) to emotion intensity ratings (Kilts et al. 2003) and affective responsiveness (Simons et al. 1999). Other studies restricted themselves to the use of dynamic displays only, such as Leslie et al. (2004), who used short video clips of emotional facial expressions in order to find a mirroring system for emotive actions.

These approaches paid tribute to the dynamic nature of facial expressions. Along with an increase in ecological validity, these studies also showed beneficial effects of dynamic stimuli on behavioral responses (Ambadar et al. 2005) as well as autonomous parameters (Weyers et al. 2006).

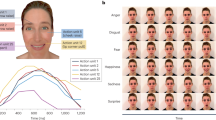

This expands the field of empathy research to the multimodal integration of different sources of sensory information. Multiple senses interact when we make sense of the (social) world. In order to include communication channels other than visually presented faces, our group has developed an approach to study multimodal contributions to empathy stemming from different emotion cues: facial expressions, prosody, and speech content (Regenbogen et al. 2012a, b). We developed and evaluated naturalistic stimulus material (video clips of 11-s duration), which consisted of different social communication situations of sad, happy, disgusted, or fearful content. The combinations of emotionality in facial expressions, prosody, and speech content differed between several experimental conditions. Emotionality was presented via three channels or via two channels with the third held neutral or unintelligible. This made it possible to study the joint presence of two emotional channels and the effect of keeping one channel neutral, respectively. As previous results showed that the given attitude toward the stimulus material can significantly modulate the results (Kim et al. 2009) and also that presenting complete strangers can be aversive and lead to the opposite effect (Fischer et al. 2012), we instructed participants to simply regard the presented actor as a familiar communication partner and to rate their own and the other’s emotional state after the video. Empathy was operationalized by the congruence of participants’ ratings on the other’s and their own emotional experience (Fig. 6.2).

Modified from Regenbogen et al. (2012a, b), with kind permission from Elsevier (license number 3243690538277) and Taylor & Francis (license number 3243691330032). ¹ Abbreviations: E emotional, N neutral. ² In the behavioral study, this was an explicit emotion and emotion intensity rating; in the fMRI study, this was shortened to a valence and intensity rating of self and other.
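The congruence-based operationalization described above can be illustrated with a minimal sketch. It assumes one possible reading of the measure, namely that a trial counts as congruent when the emotion a participant attributes to the actor matches the emotion the participant reports for themselves. The class `Trial`, the condition labels, and the example data are hypothetical and serve illustration only; they are not taken from the original studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    """One rated video clip (all field names are illustrative assumptions)."""
    condition: str     # e.g. "all_emotional" or "face_neutral" (hypothetical labels)
    other_rating: str  # emotion the participant attributes to the actor
    self_rating: str   # emotion the participant reports for themselves


def is_congruent(trial: Trial) -> bool:
    """Assumed reading of congruence: self and other ratings name the same emotion."""
    return trial.other_rating == trial.self_rating


def congruence_rate(trials: list[Trial]) -> dict[str, float]:
    """Proportion of congruent trials per experimental condition."""
    by_condition: dict[str, list[bool]] = {}
    for trial in trials:
        by_condition.setdefault(trial.condition, []).append(is_congruent(trial))
    return {cond: sum(flags) / len(flags) for cond, flags in by_condition.items()}


# Invented example data, not results from the studies discussed here.
example_trials = [
    Trial("all_emotional", "sad", "sad"),
    Trial("all_emotional", "happy", "happy"),
    Trial("face_neutral", "sad", "sad"),
    Trial("face_neutral", "fearful", "neutral"),
]

if __name__ == "__main__":
    for condition, rate in congruence_rate(example_trials).items():
        print(f"{condition}: {rate:.2f}")
```

Computing the rate per condition mirrors the design logic of comparing fully emotional clips with clips in which one channel is held neutral; the published analyses may have defined and aggregated congruence differently.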

Behaviorally, facial expressions were central for recognizing the other person’s emotion. Once facial expressions were experimentally held neutral, the recognition rates of 38 healthy participants decreased to approximately 70 % (compared to >95 % accuracy when facial expressions were emotional). This was paralleled by participants’ autonomic arousal in response to the video clips, as measured by galvanic skin responses on the palm of the left hand. The number of electrodermal responses decreased significantly once facial expressions no longer conveyed emotionality. From these results, we concluded that emotional facial expressions play a central role in establishing an empathic response in a social communication situation: first, by improving the ability to correctly recognize another person’s affective state, and second, by facilitating the multimodal integration of signals from other modalities (here, speech content and prosody).

In a subsequent study using the same stimuli, we targeted the effects of emotional facial expressions and other cues on a neural level. In an fMRI design, we presented the same clips to participants while again refraining from an explicit empathy instruction. Explicit self and other valence and intensity ratings were acquired while whole-brain activation was measured in a design with events ranging between 9 and 11 s. Focusing only on face-related results, we showed that emotionality in the face specifically resulted in widespread activation of temporo-occipital areas and medial prefrontal cortex, as well as subcortical activation in basal ganglia, hippocampus, and superior colliculi. This was in line with the literature on dynamic face processing (Sato et al. 2004; Trautmann et al. 2009; Weyers et al. 2006) and confirmed that emotion in the human face enhanced arousal and salience. At the same time, experimental empathy and its components were significantly lower toward stimuli with a neutral facial expression than toward fully emotional stimuli. Facial expressions thus seem to be a major source of information for inferring the emotional state of a counterpart, especially when verbal information is neutral or incomprehensible, as the latter conditions yielded the strongest activation in the fusiform gyri (Regenbogen et al. 2012b).

6.6 Outlook

The human face enables us to project inner subjective states to the outside world. Via fast detection mechanisms, our counterparts are able to perceive and recognize an emotional expression and its intensity, and to react to it. Via shared network representations, emotional states are to some degree mirrored by the other person, which, along with perspective-taking mechanisms and evaluation procedures, helps to create empathy. However, faces do not exist in empty space. Studies on multimodality or multisensory processing demonstrate this convincingly while at the same time pointing to the high relevance of a facial expression in enhancing the sensory acquisition of other cues (e.g., olfactory ones; Susskind et al. 2008). Along with a challenge to the visual dominance effect (Collignon et al. 2008), it becomes clear that other emotional cues such as prosody and speech content are equally, if not significantly more, related to the subjective affective experience within empathy (Regenbogen et al. 2012a).

Considering several components of empathy certainly helps to target the construct in a more holistic way. Further, external validation measures such as trait empathy questionnaires (e.g., Williams et al. 2013) help to characterize the concept in more detail and further support the experimental results. The biological bases can be analyzed with the variety of brain imaging methods available.

References

Adolphs, R. (2002). Recognizing emotion from facial expressions: Psychological and neurological mechanisms. Behavioral and Cognitive Neuroscience Reviews, 1, 21–61.

Adolphs, R. (2009). The social brain: Neural basis of social knowledge. Annual Review of Psychology, 60, 693–716.

Adolphs, R., & Tranel, D. (2004). Impaired judgments of sadness but not happiness following bilateral amygdala damage. Journal of Cognitive Neuroscience, 16, 453–462.

Aggleton, J. P. (Ed.). (2000). The amygdala: A functional analysis. New York: Oxford University Press.

Ambadar, Z., Schooler, J. W., & Cohn, J. F. (2005). Deciphering the enigmatic face: The importance of facial dynamics in interpreting subtle facial expressions. Psychological Science, 16, 403–410.

Balleine, B. W., & Killcross, S. (2006). Parallel incentive processing: An integrated view of amygdala function. Trends in Neurosciences, 29, 272–279.

Barrett, L., Henzi, P., & Rendall, D. (2007). Social brains, simple minds: Does social complexity really require cognitive complexity? Philosophical Transactions of the Royal Society of London B, 362, 561–575.

Batson, C. D. (1991). The altruism question: Toward a social-psychological answer. Hillsdale, NJ: Erlbaum.

Batson, C. D. (2009). These things called empathy. In J. Decety & W. Ickes (Eds.), The social neuroscience of empathy (pp. 3–16). Cambridge, MA: MIT Press.

Blakemore, S. J., & Decety, J. (2001). From the perception of action to the understanding of intention. Nature Reviews Neuroscience, 2, 561–567.

Botvinick, M., Jha, A. P., Bylsma, L. M., Fabian, S. A., Solomon, P. E., & Prkachin, K. M. (2005). Viewing facial expressions of pain engages cortical areas involved in the direct experience of pain. Neuroimage, 25, 312–319.

Braadbaart, L., de Grauw, H., Perrett, D. I., Waiter, G. D., & Williams, J. H. (2014). The shared neural basis of empathy and facial imitation accuracy. Neuroimage, 84, 365–367.

Carr, L., Iacoboni, M., Dubeau, M. C., Mazziotta, J. C., & Lenzi, G. L. (2003). Neural mechanisms of empathy in humans: A relay from neural systems for imitation to limbic areas. Proceedings of the National Academy of Sciences USA, 100, 5497–5502.

Chisholm, K., & Strayer, J. (1995). Verbal and facial measures of children's emotion and empathy. Journal of Experimental Child Psychology, 59, 299–316.

Collignon, O., Girard, S., Gosselin, F., Roy, S., Saint-Amour, D., Lassonde, M., et al. (2008). Audio-visual integration of emotion expression. Brain Research, 1242, 126–135.

de Greck, M., Wang, G., Yang, X., Wang, X., Northoff, G., & Han, S. (2012). Neural substrates underlying intentional empathy. Social Cognitive and Affective Neuroscience, 7, 135–144.

de Vignemont, F., & Singer, T. (2006). The empathic brain: How, when and why? Trends in Cognitive Sciences, 10, 435–441.

Decety, J., Michalska, K. J., & Akitsuki, Y. (2008). Who caused the pain? An fMRI investigation of empathy and intentionality in children. Neuropsychologia, 46, 2607–2614.

Derbyshire, S. W. G. (2000). Exploring the pain “neuromatrix”. Current Review of Pain, 4, 467–477.

Derntl, B., Finkelmeyer, A., Eickhoff, S., Kellermann, T., Falkenberg, D. I., Schneider, F., et al. (2010). Multidimensional assessment of empathic abilities: Neural correlates and gender differences. Psychoneuroendocrinology, 35, 67–82.

Derntl, B., Finkelmeyer, A., Toygar, T. K., Hulsmann, A., Schneider, F., Falkenberg, D. I., et al. (2009). Generalized deficit in all core components of empathy in schizophrenia. Schizophrenia Research, 108, 197–206.

Derntl, B., Finkelmeyer, A., Voss, B., Eickhoff, S. B., Kellermann, T., Schneider, F., et al. (2012). Neural correlates of the core facets of empathy in schizophrenia. Schizophrenia Research, 136(1–3), 70–81.

di Pellegrino, G., Fadiga, L., Fogassi, L., Gallese, V., & Rizzolatti, G. (1992). Understanding motor events: A neurophysiological study. Experimental Brain Research, 91, 176–180.

Dunbar, R. I., & Shultz, S. (2007). Evolution in the social brain. Science, 317, 1344–1347.

Ekman, P., & Friesen, W. V. (1976). Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press.

Fan, Y., Duncan, N. W., de Greck, M., & Northoff, G. (2011). Is there a core neural network in empathy? An fMRI based quantitative meta-analysis. Neuroscience and Biobehavioral Reviews, 35, 903–911.

Fischer, A. H., Becker, D., & Veenstra, L. (2012). Emotional mimicry in social context: The case of disgust and pride. Frontiers in Psychology, 3, 475.

Fox, E., Lester, V., Russo, R., Bowles, R. J., Pichler, A., & Dutton, K. (2000). Facial expressions of emotion: Are angry faces detected more efficiently? Cognition and Emotion, 14, 61–92.

Freese, J. L., & Amaral, D. G. (2005). The organization of projections from the amygdala to visual cortical areas TE and V1 in the macaque monkey. Journal of Comparative Neurology, 486, 295–317.

Frith, C. D., & Frith, U. (1999). Interacting minds—A biological basis. Science, 286, 1692–1695.

Gobbini, M. I., Koralek, A. C., Bryan, R. E., Montgomery, K. J., & Haxby, J. V. (2007). Two takes on the social brain: A comparison of theory of mind tasks. Journal of Cognitive Neuroscience, 19(11), 1803–1814.

Grezes, J., & Decety, J. (2001). Functional anatomy of execution, mental simulation, observation, and verb generation of actions: A meta-analysis. Human Brain Mapping, 12(1), 1–19.

Gur, R. C., Sara, R., Hagendoorn, M., Marom, O., Hughett, P., Macy, L., et al. (2002). A method for obtaining 3-dimensional facial expressions and its standardization for use in neurocognitive studies. Journal of Neuroscience Methods, 115, 137–143.

Harrison, N. A., Singer, T., Rotshtein, P., Dolan, R. J., & Critchley, H. D. (2006). Pupillary contagion: Central mechanisms engaged in sadness processing. Social Cognitive and Affective Neuroscience, 1, 5–17.

Hennenlotter, A., Schroeder, U., Erhard, P., Castrop, F., Haslinger, B., Stoecker, D., et al. (2005). A common neural basis for receptive and expressive communication of pleasant facial affect. Neuroimage, 26, 581–591.

Hofelich, A. J., & Preston, S. D. (2012). The meaning in empathy: Distinguishing conceptual encoding from facial mimicry, trait empathy, and attention to emotion. Cognition and Emotion, 26, 119–128.

Hooker, C. I., Verosky, S. C., Germine, L. T., Knight, R. T., & D’Esposito, M. (2008). Mentalizing about emotion and its relationship to empathy. Social Cognitive and Affective Neuroscience, 3, 204–217.

Jabbi, M., Swart, M., & Keysers, C. (2007). Empathy for positive and negative emotions in the gustatory cortex. Neuroimage, 34, 1744–1753.

Kennedy, D. P., & Adolphs, R. (2012). The social brain in psychiatric and neurological disorders. Trends in Cognitive Sciences, 16(11), 559–572.

Kilts, C. D., Egan, G., Gideon, D. A., Ely, T. D., & Hoffman, J. M. (2003). Dissociable neural pathways are involved in the recognition of emotion in static and dynamic facial expressions. Neuroimage, 18, 156–168.

Kim, J. W., Kim, S. E., Kim, J. J., Jeong, B., Park, C. H., Son, A. R., et al. (2009). Compassionate attitude towards others’ suffering activates the mesolimbic neural system. Neuropsychologia, 47, 2073–2081.

Kircher, T., Pohl, A., Krach, S., Thimm, M., Schulte-Rüther, M., Anders, S., et al. (2013). Affect-specific activation of shared networks for perception and execution of facial expressions. Social Cognitive and Affective Neuroscience, 8, 370–377.

Krach, S., Cohrs, J. C., de Echeverría Loebell, N. C., Kircher, T., Sommer, J., Jansen, A., et al. (2011). Your flaws are my pain: Linking empathy to vicarious embarrassment. PLoS ONE, 6(4), e18675. doi:10.1371/journal.pone.0018675.

Lamm, C., Batson, C. D., & Decety, J. (2007). The neural substrate of human empathy: Effects of perspective-taking and cognitive appraisal. Journal of Cognitive Neuroscience, 19, 42–58.

Lamm, C., Decety, J., & Singer, T. (2011). Meta-analytic evidence for common and distinct neural networks associated with directly experienced pain and empathy for pain. Neuroimage, 54, 2492–2502.

Lamm, C., Porges, E. C., Cacioppo, J. T., & Decety, J. (2008). Perspective taking is associated with specific facial responses during empathy for pain. Brain Research, 1227, 153–161.

Leslie, K. R., Johnson-Frey, S. H., & Grafton, S. T. (2004). Functional imaging of face and hand imitation: Towards a motor theory of empathy. Neuroimage, 21, 601–607.

Lipps, T. (1903). Einfühlung, innere Nachahmung und Organempfindung. Archiv für die gesamte Psychologie, 1, 465–519.

McDougall, W. (1908). An introduction to social psychology (23rd ed.). University Paperbacks, imprint of Methuen & Co (London) and Barnes & Noble (New York).

Meltzoff, A. N., & Moore, M. K. (1977). Imitation of facial and manual gestures by human neonates. Science, 198, 75–78.

Moessnang, C., Pauly, K., Kellermann, T., Kramer, J., Finkelmeyer, A., Hummel, T., et al. (2013). The scent of salience: Is there olfactory-trigeminal conditioning in humans? Neuroimage, 77, 93–104.

Moore, A., Gorodnitsky, I., & Pineda, J. (2012). EEG mu component responses to viewing emotional faces. Behavioural Brain Research, 226, 309–316.

Morrison, I., Tipper, S. P., Fenton-Adams, W. L., & Bach, P. (2013). “Feeling” others’ painful actions: The sensorimotor integration of pain and action information. Human Brain Mapping, 34, 1982–1998.

Moser, E., Derntl, B., Robinson, S., Fink, B., Gur, R. C., & Grammer, K. (2007). Amygdala activation at 3T in response to human and avatar facial expressions of emotions. Journal of Neuroscience Methods, 161, 126–133.

Moya-Albiol, L., Herrero, N., & Bernal, M. C. (2010). The neural bases of empathy. Revista de Neurologia, 50, 89–100.

Nomi, J. S., Scherfeld, D., Friederichs, S., Schafer, R., Franz, M., Wittsack, H. J., et al. (2008). On the neural networks of empathy: A principal component analysis of an fMRI study. Behavioral and Brain Functions, 4, 41. doi:10.1186/1744-9081-4-41.

Ochsner, K. N. (2008). The social-emotional processing stream: Five core constructs and their translational potential for schizophrenia and beyond. Biological Psychiatry, 64, 48–61.

Ochsner, K. N., Knierim, K., Ludlow, D. H., Hanelin, J., Ramachandran, T., Glover, G., et al. (2004). Reflecting upon feelings: An fMRI study of neural systems supporting the attribution of emotion to self and other. Journal of Cognitive Neuroscience, 16, 1746–1772.

Paton, J. J., Belova, M. A., Morrison, S. E., & Salzman, C. D. (2006). The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature, 439, 865–870.

Perner, J. (1991). Understanding the representational mind. Cambridge, MA: MIT Press.

Preston, S. D., Bechara, A., Damasio, H., Grabowski, T. J., Stansfield, R. B., Mehta, S., et al. (2007). The neural substrates of cognitive empathy. Social Neuroscience, 2, 254–275.

Preston, S. D., & de Waal, F. B. (2002). Empathy: Its ultimate and proximate bases. Behavioral and Brain Sciences, 25, 1–20; discussion 20–71.

Regenbogen, C., Schneider, D. A., Finkelmeyer, A., Kohn, N., Derntl, B., Kellermann, T., et al. (2012a). The differential contribution of facial expressions, prosody, and speech content to empathy. Cognition and Emotion, 26, 995–1014.

Regenbogen, C., Schneider, D. A., Gur, R. E., Schneider, F., Habel, U., & Kellermann, T. (2012b). Multimodal human communication: Targeting facial expressions, speech content and prosody. Neuroimage, 60, 2346–2356.

Rizzolatti, G., Fadiga, L., Gallese, V., & Fogassi, L. (1996). Premotor cortex and the recognition of motor actions. Brain Research. Cognitive Brain Research, 3, 131–141.

Sato, W. S., Kochiyama, T., Yoshikawa, S., Naito, E., & Matsumura, M. (2004). Enhanced neural activity in response to dynamic facial expressions of emotion: An fMRI study. Cognitive Brain Research, 20, 81–91.

Schulte-Rüther, M., Markowitsch, H. J., Fink, G. R., & Piefke, M. (2007). Mirror neuron and theory of mind mechanisms involved in face-to-face interactions: A functional magnetic resonance imaging approach to empathy. Journal of Cognitive Neuroscience, 19, 1354–1372.

Schulte-Rüther, M., Markowitsch, H. J., Shah, N. J., Fink, G. R., & Piefke, M. (2008). Gender differences in brain networks supporting empathy. Neuroimage, 42, 393–403.

Simons, R. F., Detenber, B. H., Roedema, T. M., & Reiss, J. E. (1999). Emotion processing in three systems: The medium and the message. Psychophysiology, 36, 619–627.

Singer, T., Seymour, B., O’Doherty, J., Kaube, H., Dolan, R. J., & Frith, C. D. (2004). Empathy for pain involves the affective but not sensory components of pain. Science, 303, 1157–1162.

Soria Bauser, D., Thoma, P., & Suchan, B. (2012). Turn to me: Electrophysiological correlates of frontal versus averted view face and body processing are associated with trait empathy. Frontiers in Integrative Neuroscience, 6, 106. doi: 10.3389/fnint.2012.00106.

Stefanacci, L., & Amaral, D. G. (2002). Some observations on cortical inputs to the macaque monkey amygdala: An anterograde tracing study. Journal of Comparative Neurology, 451, 301–323.

Susskind, J. M., Lee, D. H., Cusi, A., Feiman, R., Grabski, W., & Anderson, A. K. (2008). Expressing fear enhances sensory acquisition. Nature Neuroscience, 11, 843–850.

Trautmann, S. A., Fehr, T., & Herrmann, M. (2009). Emotions in motion: Dynamic compared to static facial expressions of disgust and happiness reveal more widespread emotion-specific activations. Brain Research, 1284, 100–115.

Vachon-Presseau, E., Roy, M., Martel, M. O., Albouy, G., Chen, J., Budell, L., et al. (2012). Neural processing of sensory and emotional-communicative information associated with the perception of vicarious pain. Neuroimage, 63, 54–62.

Weyers, P., Mühlberger, A., Hefele, C., & Pauli, P. (2006). Electromyographic responses to static and dynamic avatar emotional facial expressions. Psychophysiology, 43, 450–453.

Whalen, P. (1999). Fear, vigilance, and ambiguity: Initial neuroimaging studies of the human amygdala. Current Directions in Psychological Science, 7, 177–187.

Whalen, P. J., Rauch, S. L., Etcoff, N. L., McInerney, S. C., Lee, M. B., & Jenike, M. A. (1998). Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. Journal of Neuroscience, 18, 411–418.

Wicker, B., Keysers, C., Plailly, J., Royet, J.-P., Gallese, V., & Rizzolatti, G. (2003). Both of us disgusted in my insula: The common neural basis of seeing and feeling disgust. Neuron, 40, 655–664.

Williams, J. H., Nicolson, A. T., Clephan, K. J., de Grauw, H., & Perrett, D. I. (2013). A novel method testing the ability to imitate composite emotional expressions reveals an association with empathy. PLoS ONE, 8, e61941.

Copyright information

© 2015 Springer India

About this chapter

Cite this chapter

Regenbogen, C., Habel, U. (2015). Facial Expressions in Empathy Research. In: Mandal, M., Awasthi, A. (eds) Understanding Facial Expressions in Communication. Springer, New Delhi. https://doi.org/10.1007/978-81-322-1934-7_6

DOI: https://doi.org/10.1007/978-81-322-1934-7_6

Publisher Name: Springer, New Delhi

Print ISBN: 978-81-322-1933-0

Online ISBN: 978-81-322-1934-7

eBook Packages: Behavioral Science; Behavioral Science and Psychology (R0)