Abstract

In the past few years, starting from the notion of physical stochastic systems and the principle of preservation of probability, a family of probability density evolution methods (PDEM) has been developed. It provides a new perspective on the accurate design and optimization of structural performance under random engineering excitations such as earthquake ground motions and strong winds. On this basis, a physical approach to structural stochastic optimal control is proposed in the present chapter. A family of probabilistic criteria is elaborated, including a criterion based on the mean and standard deviation of responses, a criterion based on exceedance probability, and a criterion based on the global reliability of systems. The stochastic optimal control of a randomly base-excited single-degree-of-freedom system with an active tendon is investigated for illustrative purposes. The results indicate that the control effect depends on the control criterion, and that the criterion based on global reliability operates efficiently and achieves the desired structural performance. The results obtained by the proposed method are also compared against those of LQG control, revealing that the PDEM-based stochastic optimal control exhibits significant benefits over classical LQG control. In addition, stochastic optimal control of an eight-story shear frame structure using the global reliability criterion is carried out. The numerical example demonstrates the validity and applicability of the developed physical stochastic optimal control methodology.


Keywords

- Probability density evolution method

- Stochastic optimal control

- Control criteria

- Global reliability

- LQG control

1 Introduction

Stochastic dynamics has attracted increasing interest and has been extensively studied. Although the original ideas date back to Einstein [4] and Langevin [11] and were later given rigorous formulations by mathematicians [8, 10, 37], random vibration theory, a component of stochastic dynamics, did not become a branch of engineering science until the early 1960s (Crandall [2]; Lin [28]). By the early 1990s, the theory and practical approaches for the random vibration of linear structures were well developed. Meanwhile, researchers were challenged by nonlinear random vibration: despite great efforts devoted to a variety of methods, including stochastic linearization, equivalent nonlinearization, stochastic averaging, the path-integration method, the FPK equation, and Monte Carlo simulation (see, e.g., [29, 30, 41]), the challenge remained. On the other hand, investigations of stochastic structural analysis (referred to by some researchers as the stochastic finite element method), a critical component of stochastic dynamics that deals with the randomness of structural parameters, started somewhat later, in the late 1960s. By the mid-1990s, a series of approaches had been presented, among which three were dominant: Monte Carlo simulation [32, 33], the random perturbation technique [6, 9], and orthogonal polynomial expansion [5, 12]. As with random vibration, the analysis of nonlinear stochastic structures encountered great challenges as well [31].

In the past 10 years, starting from the notion of physical stochastic systems [13] and the principle of preservation of probability [19], a family of probability density evolution methods (PDEM) has been developed, in which a generalized density evolution equation was established. The generalized density evolution equation reveals the essential relationship between stochastic and deterministic systems. It has been successfully employed in the stochastic dynamic response analysis of multi-degree-of-freedom systems [20] and therefore provides a new perspective on challenging problems such as the dynamic reliability of structures, the stochastic stability of dynamical systems, and the stochastic optimal control of engineering structures.

In this chapter, the application of PDEM to the stochastic optimal control of structures is summarized. To this end, the fundamental theory of the generalized density evolution equation is first revisited. A physical approach to the stochastic optimal control of structures is then presented. The optimal control criteria, including those based on the mean and standard deviation of responses and those based on the exceedance probability and global reliability of systems, are elaborated. The stochastic optimal control of a randomly base-excited single-degree-of-freedom system with an active tendon is investigated for illustrative purposes. Comparative studies of these probabilistic criteria, and of the developed control methodology against classical LQG control, are carried out. The optimal control strategy is then applied to the stochastic optimal control of an eight-story shear frame. Some concluding remarks are included.

2 Principle Equation

2.1 Principle of Preservation of Probability Revisited

It is noted that the probability evolution in a stochastic dynamical system obeys the principle of preservation of probability, which can be stated as follows: if the random factors involved in a stochastic system are retained, the probability is preserved in the evolution process of the system. Although this principle may have been vaguely recognized long ago (see, e.g., [36]), its physical meaning has been clarified only in the past few years, from the state description and the random event description, respectively [16–19]. The fundamental logical position of the principle of preservation of probability was then solidly established with the development of a new family of generalized density evolution equations that unifies the previously proposed probability density evolution equations, including the classic Liouville equation, the Dostupov-Pugachev equation, and the FPK equation [20].

To revisit the principle of preservation of probability, consider an n-dimensional stochastic dynamical system governed by the following state equation:

where \( {\mathbf{Y}} = {{({{Y}_1},{{Y}_2}, \cdots, {{Y}_n})}^{\rm{T}}} \) denotes the n-dimensional state vector, \( {{{\mathbf{Y}}}_0} = {{({{Y}_{{0,1}}},{{Y}_{{0,2}}}, \cdots, {{Y}_{{0,n}}})}^{\rm{T}}} \) denotes the corresponding initial vector, and \( {\mathbf{A}}( \cdot ) \) is a deterministic operator vector. Evidently, in the case that \( {{{\mathbf{Y}}}_0} \) is a random vector, \( {\mathbf{Y}}(t) \) will be a stochastic process vector.

The state equation (1) essentially establishes a mapping from \( {{{\mathbf{Y}}}_0} \) to \( {\mathbf{Y}}(t) \), which can be expressed as

where \( g( \cdot ),{{\rm G}_t}( \cdot ) \) are both mapping operators from \( {{{\mathbf{Y}}}_0} \) to \( {\mathbf{Y}}(t) \).

Since \( {{{\mathbf{Y}}}_0} \) is a random vector, \( \{ {{{\mathbf{Y}}}_0} \in {{\Omega }_{{{{t}_0}}}}\} \) is a random event, where \( {{\Omega }_{{{{t}_0}}}} \) is an arbitrary domain in the distribution range of \( {{{\mathbf{Y}}}_0} \). According to the stochastic state equation (1), \( {{{\mathbf{Y}}}_0} \) evolves into \( {\mathbf{Y}}(t) \) at time \( t \). Accordingly, the domain \( {{\Omega }_{{{{t}_0}}}} \) to which \( {{{\mathbf{Y}}}_0} \) belongs at time \( {{t}_0} \) is mapped to the domain \( {{\Omega }_t} \) to which \( {\mathbf{Y}}(t) \) belongs at time \( t \); see Fig. 1.

Since probability is preserved in the mapping of any elementary event, we have

It is understood that Eq. (4) also holds at \( t + \Delta t \), which will then result in

where \( {{{{\text{D(}} \cdot {)}}} \left/ {{{\text{D}}t}} \right.} \) denotes the total derivative of its argument.

Equation (5) is the mathematical formulation of the principle of preservation of probability in a stochastic dynamical system. Since it expresses the invariance of the probability of a random event, we refer to Eq. (5) as the random event description of the principle of preservation of probability. The meaning of the principle can also be clarified from the state-space description. These two descriptions are somewhat analogous to the Lagrangian and Eulerian descriptions in continuum mechanics, although there are also some distinctive differences, particularly in whether overlapping is allowed. For details, refer to Li and Chen [17, 19].

2.2 Generalized Density Evolution Equation (GDEE)

Without loss of generality, consider the equation of motion of a multi-degree-of-freedom (MDOF) system as follows:

where \( \eta = ({{\eta }_1},{{\eta }_2}, \cdots, {{\eta }_{{{{s}_1}}}}) \) are the random parameters involved in the physical properties of the system. If the excitation is a stochastic ground accelerogram \( \xi (t) = {{\ddot{X}}_{\rm{g}}}(t) \), for example, then \( \Gamma = - {\mathbf{M1}} \), with \( {\mathbf{1}} = {{(1,1, \cdots, 1)}^{\rm{T}}} \). Here \( \ddot{\mathbf{X}},\dot{\mathbf{X}},\mathbf{X} \) are the accelerations, velocities, and displacements of the structure relative to the ground, and \( {\mathbf{M}}( \cdot ),{\mathbf{C}}( \cdot ),{\mathbf{f}}( \cdot ) \) denote the mass matrix, the damping matrix, and the restoring force vector (the stiffness term) of the structural system, respectively.

In the modeling of stochastic dynamic excitations such as earthquake ground motions, strong winds, and sea waves, the notion of physical stochastic processes can be employed [14, 15, 27]. For general stochastic processes or random fields, the double-stage orthogonal decomposition can be adopted so that the excitation is represented by a random function [22]

where \( \zeta = ({{\zeta }_1},{{\zeta }_2}, \cdots, {{\zeta }_{{{{s}_2}}}}) \).

For notational consistency, denote

in which \( s = {{s}_1} + {{s}_2} \) is the total number of basic random variables involved in the system. Equation (6) can thus be rewritten as

where \( {\mathbf{F}}(\Theta, t) = \Gamma {{\ddot{\rm X}}_{\rm{g}}}(\zeta, t) \).

This is the equation to be solved, in which all the randomness from the initial conditions, excitations, and system parameters is involved and exposed in a unified manner. Such a stochastic equation of motion can be further rewritten as a stochastic state equation, which was first formulated by Dostupov and Pugachev [3].

If, besides the displacements and velocities, we are also interested in other physical quantities \( {\mathbf{Z}} = {{({{Z}_1},{{Z}_2}, \cdots, {{Z}_m})}^{\rm{T}}} \) in the system (e.g., stresses or internal forces), then the augmented system \( ({\mathbf{Z}},\Theta ) \) is probability preserved because all the random factors are involved; thus, according to Eq. (5), we have [19]

where \( {{\Omega }_t} \times {{\Omega }_{\theta }} \) is any arbitrary domain in the augmented state space \( \Omega \times {{\Omega }_{\Theta }} \), \( {{\Omega }_{\Theta }} \) is the distribution range of the random vector \( \Theta \), and \( {{p}_{{{\mathbf{Z}}\Theta }}}({\mathbf{z}},\theta, t) \) is the joint probability density function (PDF) of \( ({\mathbf{Z}}(t),\Theta ) \).

After a series of mathematical manipulations, including the use of Reynolds’ transport theorem, we have

which holds for any \( {{\Omega }_{{{{t}_0}}}} \times {{\Omega }_{\theta }} \subseteq \Omega \times {{\Omega }_{\Theta }} \). Thus, for any \( {{\Omega }_{\theta }} \subseteq {{\Omega }_{\Theta }} \), we have

and also the following partial differential equation:

In particular, when \( m = 1 \), Eqs. (12) and (13) become, respectively,

and

which is a one-dimensional partial differential equation.

Equations (13) and (15) are referred to as generalized density evolution equations (GDEEs). They reveal the intrinsic connection between a stochastic dynamical system and its deterministic counterpart. Remarkably, the dimension of a GDEE is independent of the dimension (or number of degrees of freedom) of the original system; see Eq. (9). This distinguishes GDEEs from the traditional probability density evolution equations (e.g., the Liouville, Dostupov-Pugachev, and FPK equations), whose dimension must equal the dimension of the original state equation (twice the number of degrees of freedom).

Clearly, Eq. (14) is mathematically equivalent to Eq. (15), but it will be seen later that Eq. (14) itself may provide additional insight into the problem. In particular, if the physical quantity Z of interest is the displacement X of the system, Eq. (15) becomes

Here the rule revealed by the GDEE is clear: in the evolution of a general dynamical system, the time rate of change of the joint PDF of the displacement and the source random parameters is proportional to its spatial rate of change, with the instantaneous velocity (taken with a negative sign) as the coefficient. In other words, the flow of probability is determined by the change of physical states. This strongly demonstrates that the evolution of probability density is not disordered but obeys a restrictive physical law. This holds for general physical systems with underlying randomness, and the rule cannot be exposed in such an explicit way by the traditional probability density evolution equations.

Although in principle the GDEE holds for any dimension, in most cases one- or two-dimensional GDEEs are adequate. For simplicity and clarity, the following sections focus on the one-dimensional GDEE. Generally, the boundary condition for Eq. (15) is

the latter of which is usually adopted in first-passage reliability evaluation where \( {{\Omega }_{\rm{f}}} \) is the failure domain, while the initial condition is usually

where \( {{z}_0} \) is the deterministic initial value.

Once Eq. (15) is solved, the instantaneous PDF of \( Z(t) \) can be obtained by

The GDEE was first obtained as the uncoupled version of the parametric Liouville equation for linear systems [16]. For nonlinear systems, the GDEE was then reached by employing the formal solution [18]. The above derivation thoroughly clarifies the meaning of the GDEE and lays a solid physical foundation for it [19].

2.3 Point Evolution and Ensemble Evolution

Since Eq. (14) holds for any \( {{\Omega }_{\theta }} \subseteq {{\Omega }_{\Theta }} \), consider an arbitrary partition of the probability-assigned space [1] into sub-domains \( {{\Omega }_q} \), \( q = 1,2, \cdots, {{n}_{\rm{pt}}} \), satisfying \( {{\Omega }_i} \cap {{\Omega }_j} = \emptyset, \forall i \ne j \) and \( \bigcup\nolimits_{{q = 1}}^{{{{n}_{\rm{pt}}}}} {{{\Omega }_q}} = {{\Omega }_{\Theta }} \). Equation (14) written for each sub-domain then becomes

It is noted that

is the assigned probability over \( {{\Omega }_q} \) [1], and

then Eq. (20) becomes

According to Eq. (19), it follows that

There are two important properties that can be observed here:

1. Partition of probability-assigned space and the property of independent evolution

The functions \( {{p}_q}(z,t) \) defined in Eq. (22) are not themselves probability density functions, because \( \int_{{ - \infty }}^{\infty } {{{p}_q}(z,t){\rm d}z} = {{P}_q} \ne 1 \); that is, the consistency condition is not satisfied. Apart from this violation, however, they resemble probability density functions in many respects. In fact, the normalized function \( {{\tilde{p}}_q}(z,t) = {{{{{p}_q}(z,t)}} \left/ {{{{P}_q}}} \right.} \) meets all the conditions of a probability density function and might be called the partial-probability density function over \( {{\Omega }_q} \). Equation (24) can then be rewritten as

$$ {{p}_Z}(z,t) = \sum\limits_{{q = 1}}^{{{{n}_{\rm{pt}}}}} {{{P}_q} \cdot {{{\tilde{p}}}_q}(z,t)} $$ (25)

It is noted that the \( {{P}_q} \)’s are specified by the partition and are time invariant. Thus, the probability density function of \( Z(t) \) can be regarded as the weighted sum of a set of partial-probability density functions. What is interesting about the partial-probability density functions is that they are, in a sense, mutually independent: once a partition of the probability-assigned space is determined (and consequently the \( {{\Omega }_q} \)’s are specified), a partial-probability density function \( {{\tilde{p}}_q}(z,t) \) is completely governed by Eq. (23) (this of course remains true when \( {{p}_q}(z,t) \) is replaced by \( {{\tilde{p}}_q}(z,t) \)), and the evolution of the other partial-probability density functions, \( {{\tilde{p}}_r}(z,t),r \ne q \), has no effect on the evolution of \( {{\tilde{p}}_q}(z,t) \). This property of independent evolution means that the original problem can be partitioned into a series of independent subproblems, which are usually easier than the original problem. The possibility of new approaches is thus implied but remains to be explored. It is also stressed that this property of independent evolution is not conditioned on any assumption of mutual independence of the basic random variables.

2. Relationship between point evolution and ensemble evolution

The second term in Eq. (23) usually cannot be integrated explicitly. This term shows that, to capture the partial-probability density function \( {{\tilde{p}}_q}(z,t) \) over \( {{\Omega }_q} \), the exact dependence of the velocity on \( \theta \in {{\Omega }_q} \) is required. This means that the evolution of \( {{\tilde{p}}_q}(z,t) \) depends on all the exact information in \( {{\Omega }_q} \); in other words, the evolution of \( {{\tilde{p}}_q}(z,t) \) is determined by the evolution of the information of the ensemble over \( {{\Omega }_q} \). This manner could be called ensemble evolution.

To uncouple the second term in Eq. (23), we can assume

$$ \dot{Z}(\theta, t) \doteq \dot{Z}({{\theta }_q},t),{\text{ for }}\theta \in {{\Omega }_q} $$ (26)

where \( {{\theta }_q} \in {{\Omega }_q} \) is a representative point of \( {{\Omega }_q} \). For instance, \( {{\theta }_q} \) could be determined by the Voronoi cell [1], by the average \( {{\theta }_q} = \frac{1}{{{{P}_q}}}\int_{{{{\Omega }_q}}} {\theta {{p}_{\Theta }}(\theta )d\theta } \), or in some other appropriate manner. By doing this, Eq. (23) becomes

$$ \frac{{\partial {{p}_q}(z,t)}}{{\partial t}} + \dot{Z}({{\theta }_q},t)\frac{{\partial {{p}_q}(z,t)}}{{\partial z}} = 0,\quad q = 1,2, \cdots, {{n}_{\rm{pt}}} $$ (27)

The meaning of Eq. (26) is clear: the ensemble evolution in Eq. (23) is represented by the information of a representative point in the sub-domain; that is, the ensemble evolution in a sub-domain is represented by a point evolution.

Another possible way of uncoupling the second term in Eq. (23) is to assume that \( {{p}_{{Z\Theta }}}(z,\theta, t) \) varies little over the sub-domain \( {{\Omega }_q} \). In this case, it follows that

$$ \frac{{\partial {{p}_q}(z,t)}}{{\partial t}} + {{E}_q}[\dot{Z}(\theta, t)]\frac{{\partial {{p}_q}(z,t)}}{{\partial z}} = 0,{\ }q = 1,2, \cdots, {{n}_{\rm{pt}}} $$ (28)

where \( {{E}_q}[\dot{Z}(\theta, t)] = \frac{1}{{{{P}_q}}}\int_{{{{\Omega }_q}}} {\dot{Z}(\theta, t){{p}_{\Theta }}(\theta )d\theta } \) is the average of \( \dot{Z}(\theta, t) \) over \( {{\Omega }_q} \). In some cases, \( {{E}_q}[\dot{Z}(\theta, t)] \) might be close to \( \dot{Z}({{\theta }_q},t) \), and thus Eqs. (27) and (28) coincide.
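The assigned probability \( {{P}_q} \) of Eq. (21) and the averaged representative point mentioned after Eq. (26) are integrals over the cells \( {{\Omega }_q} \), which rarely have closed forms. The following is a minimal sketch, assuming Voronoi cells defined by Euclidean distance in the \( \Theta \) space and Monte Carlo estimation of the cell integrals; the function `voronoi_assigned_probabilities` and the 3 × 3 grid of points in the example are hypothetical illustrations, not the point sets used in the chapter.

```python
import numpy as np


def voronoi_assigned_probabilities(points, sampler, n_samples=100_000, seed=0):
    """Monte Carlo estimates of P_q (Eq. 21) and of the probability-weighted centroid of each cell.

    points  : (n_pt, s) array of representative points theta_q
    sampler : callable(rng, n) returning an (n, s) array of samples drawn from p_Theta
    """
    rng = np.random.default_rng(seed)
    samples = sampler(rng, n_samples)
    # Squared Euclidean distance from every sample to every representative point
    d2 = ((samples[:, None, :] - points[None, :, :]) ** 2).sum(axis=2)
    owner = np.argmin(d2, axis=1)                      # Voronoi cell index q of each sample
    counts = np.bincount(owner, minlength=len(points))
    prob = counts / n_samples                          # estimate of P_q
    centroids = np.zeros(points.shape)
    for q in range(len(points)):
        if counts[q] > 0:
            # (1/P_q) * integral of theta p_Theta(theta) over Omega_q, estimated by a sample mean
            centroids[q] = samples[owner == q].mean(axis=0)
    return prob, centroids


# Hypothetical example: 3 x 3 grid of points for a standard normal Theta in two dimensions
g = np.array([-1.5, 0.0, 1.5])
points = np.array([[a, b] for a in g for b in g])
prob, centroids = voronoi_assigned_probabilities(points, lambda rng, n: rng.standard_normal((n, 2)))
print(prob.round(3))
print(centroids[4], centroids[8])
```

For this grid the central cell is the square \( [-0.75, 0.75]^2 \), so its exact probability is \( (2\Phi(0.75) - 1)^2 \approx 0.299 \), and the corner cell's centroid lies near \( (1.329, 1.329) \); the estimates should reproduce these values to within Monte Carlo error.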

2.4 Numerical Procedure for the GDEE

In the probability density evolution method, Eq. (9) is the physical equation, while Eq. (15) is the GDEE, with the boundary and initial conditions specified by Eqs. (17) and (18), respectively. Hence, solving the problem requires coupling the physical equation with the GDEE. For some very simple cases, a closed-form solution might be obtained, e.g., by the method of characteristics [18]. For most practical engineering problems, however, numerical methods are needed. To this end, we start with Eq. (14) instead of Eq. (15) because, from the standpoint of numerical solution, an equation in integral form usually has some advantages over one in differential form.
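For the representative-point form Eq. (27), the closed-form route can be made explicit. The following worked derivation is a sketch that assumes the point-mass form of the initial condition, \( {{P}_q}\,\delta (z - {{z}_0}) \), at the deterministic initial value \( {{z}_0} \). Since the coefficient of Eq. (27) does not depend on \( z \), its characteristics satisfy

$$ \frac{{\rm d}z}{{\rm d}t} = \dot{Z}({{\theta }_q},t),\quad z({{t}_0}) = {{z}_0} \quad \Rightarrow \quad z(t) = {{z}_0} + \int_{{{t}_0}}^{t} {\dot{Z}({{\theta }_q},\tau ){\rm d}\tau } = Z({{\theta }_q},t) $$

and \( {{p}_q} \) is constant along each characteristic, so that

$$ {{p}_q}(z,t) = {{P}_q}\,\delta \big(z - Z({{\theta }_q},t)\big),\qquad {{p}_Z}(z,t) \doteq \sum\limits_{{q = 1}}^{{{{n}_{\rm{pt}}}}} {{{P}_q}\,\delta \big(z - Z({{\theta }_q},t)\big)} $$

The exact solution thus only transports point masses; a continuous estimate of \( {{p}_Z}(z,t) \) requires some spreading over a grid, which a numerical solution of Eq. (27) provides.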

According to the discussion in the preceding section, Eqs. (23), (27), or (28) could be adopted as the governing equation for numerical solution. Equation (23) is an exact equation equivalent to the original Eqs. (14) and (15). At present, numerical algorithms for Eq. (27) have been studied extensively; they are outlined here.

Equation (27) is a linear partial differential equation. To obtain its solution, the coefficients must be determined first; these coefficients are the time rates of the physical quantity of interest conditional on \( \{ \Theta = \theta \} \) and can thus be obtained by solving Eq. (9). Therefore, the GDEE can be solved in the following steps:

- Step 1: Select representative points (RPs for short) in the probability-assigned space and determine their assigned probabilities. Select a set of representative points in the distribution domain \( {{\Omega }_{\Theta }} \), denoted by \( {{\theta }_q} = ({{\theta }_{{q,1}}},{{\theta }_{{q,2}}}, \cdots, {{\theta }_{{q,s}}});{ }q = 1,2, \cdots, {{n}_{\rm{pt}}} \), where \( {{n}_{\rm{pt}}} \) is the number of selected points. Simultaneously, determine the assigned probability of each point according to Eq. (21) using the Voronoi cells [1].

- Step 2: Solve the deterministic dynamical systems. For each specified \( \Theta = {{\theta }_q},{ }q = 1,2, \cdots, {{n}_{\rm{pt}}} \), solve the physical equation (Eq. 9) to obtain the time rate (velocity) of the physical quantities, \( \dot{Z}({{\theta }_q},t) \). Through Steps 1 and 2, the ensemble evolution is replaced by the point evolution of the representatives.

- Step 3: Solve the GDEE (Eq. 27) under the initial condition, a discretized version of Eq. (18),

$$ {{\left. {{{p}_q}(z,t)} \right|}_{{t = {{t}_0}}}} = \delta (z - {{z}_0}){{P}_q} $$ (29)

by the finite difference method with a total variation diminishing (TVD) scheme to obtain the numerical solution of \( {{p}_q}(z,t) \).

- Step 4: Sum up all the results to obtain the probability density function of \( Z(t) \) via Eq. (24).

The solution process of the GDEE thus combines a series of deterministic analyses (point evolution) with the numerical solution of partial differential equations. This embodies the basic idea that the physical mechanism of probability density evolution is the evolution of the physical system.
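The four steps can be sketched in Python for a one-dimensional quantity. This is a minimal sketch under stated assumptions: the test system (an undamped oscillator with a uniformly distributed random frequency, whose velocity is known in closed form and stands in for the deterministic analyses of Step 2), the minmod limiter, the grid, and all function names are hypothetical choices, and the TVD scheme shown is a generic flux-limited Lax-Wendroff scheme, not necessarily the exact variant used by the authors.

```python
import numpy as np


def minmod(r):
    """Minmod flux limiter phi(r) = max(0, min(1, r))."""
    return np.maximum(0.0, np.minimum(1.0, r))


def tvd_step_positive(p, lam):
    """One flux-limited Lax-Wendroff step of dp/dt + v dp/dz = 0 for v >= 0, lam = v*dt/dz in [0, 1].

    Two zero ghost cells per side model the boundary condition p -> 0 far from the support.
    """
    n = p.size
    q = np.concatenate(([0.0, 0.0], p, [0.0, 0.0]))
    dq = np.diff(q)                                    # dq[k] = q[k+1] - q[k]
    num, den = dq[:-1], dq[1:]                         # upwind jump / local jump at each interface
    r = np.divide(num, den, out=np.zeros_like(num), where=den != 0.0)
    lim = minmod(r) * den                              # limited jumps at the interfaces
    upwind = lam * dq[1:n + 1]                         # first-order upwind part: lam * (p_j - p_{j-1})
    correction = 0.5 * lam * (1.0 - lam) * (lim[1:n + 1] - lim[0:n])
    return p - upwind - correction


def tvd_step(p, v, dt, dz):
    """Advance Eq. (27) by one step for a space-independent velocity v of either sign."""
    lam = abs(v) * dt / dz
    if lam > 1.0:
        raise ValueError("CFL condition violated: |v| * dt / dz must not exceed 1")
    if v >= 0.0:
        return tvd_step_positive(p, lam)
    return tvd_step_positive(p[::-1], lam)[::-1]       # mirror the grid for negative velocity


def pdem_pdf(theta, prob, z0, velocity, z_grid, t_end, dt):
    """Steps 2-4: evolve each p_q from P_q * delta(z - z0) (Eq. 29) and sum them (Eq. 24)."""
    dz = z_grid[1] - z_grid[0]
    n_steps = int(round(t_end / dt))
    i0 = int(np.argmin(np.abs(z_grid - z0)))
    p_total = np.zeros_like(z_grid)
    for theta_q, mass_q in zip(theta, prob):
        p = np.zeros_like(z_grid)
        p[i0] = mass_q / dz                            # discrete delta carrying probability P_q
        for k in range(n_steps):
            v = velocity(theta_q, (k + 0.5) * dt)      # Step 2 stand-in: midpoint velocity of point q
            p = tvd_step(p, v, dt, dz)
        p_total += p
    return p_total


# Hypothetical test system: x'' + w^2 x = 0, x(0) = 0, x'(0) = 1, with w ~ U(0.8, 1.2),
# so X(w, t) = sin(w t) / w and its velocity is cos(w t).
n_pt = 20
edges = np.linspace(0.8, 1.2, n_pt + 1)
theta = 0.5 * (edges[:-1] + edges[1:])                 # Step 1: 1-D Voronoi cells are the sub-intervals
prob = np.full(n_pt, 1.0 / n_pt)                       # assigned probabilities P_q
z = np.linspace(-3.0, 3.0, 601)
T = 3.0
pdf = pdem_pdf(theta, prob, 0.0, lambda w, t: np.cos(w * t), z, T, dt=0.005)
dz = z[1] - z[0]
print("total probability:", pdf.sum() * dz)
print("mean:", (z * pdf).sum() * dz, "vs", np.sum(prob * np.sin(theta * T) / theta))
```

Because the velocity in Eq. (27) does not depend on \( z \), each \( {{p}_q} \) is simply transported. With \( |v|\Delta t/\Delta z \le 1 \) the minmod-limited update is a convex combination of neighboring values, so the density stays nonnegative while the numerical spreading turns the sum of point masses into a smooth estimate of \( {{p}_Z}(z,t) \).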

3 Performance Evolution of Controlled Systems

Extensive studies have been carried out on structural optimal control, which serves as one of the most effective measures to mitigate damage and loss of structures induced by disastrous actions such as earthquake ground motions and strong winds [7]. However, the randomness inherent in the dynamics of the system or its operational environment, coupled with the nonlinearity of structural behavior, should be taken into account to achieve precise control of structures. Otherwise, the reliability associated with structural performance cannot be guaranteed, even if the responses are greatly reduced compared with the uncontrolled counterparts. Methods of stochastic optimal control have therefore usually been relied upon to provide a rational mathematical context for analyzing and describing the problem.

Pioneering investigations of stochastic optimal control by mathematicians date back half a century and have resulted in fruitful theorems and approaches [39]. These advances mainly hinge on models of Itô stochastic differential equations (e.g., LQG control), which limits their application to white noise or filtered white noise, excitations quite different from practical engineering ones. Seismic ground motion, for example, exhibits strongly nonstationary and non-Gaussian properties. In addition, stochastic optimal control of multidimensional nonlinear systems remains an open and challenging problem. Both challenges stem from the classical framework of stochastic dynamics. Therefore, a new scheme, a physical control methodology based on PDEM, has been developed in the last few years [23–26].

Consider the multi-degree-of-freedom (MDOF) system represented by Eq. (9) subjected to a control action; its equation of motion is given by

where \( {\mathbf{U}}(\Theta, t) \) is the control gain vector provided by the control action, \( {{{\mathbf{B}}}_s} \) is a matrix denoting the location of controllers, and \( {{{\mathbf{D}}}_s} \) is a matrix denoting the location of excitations.

In the state space, Eq. (30) becomes

where \( {\mathbf{A}} \) is the system matrix, \( {\mathbf{B}} \) is the controller location matrix, and \( {\mathbf{D}} \) is the excitation location vector.

In most cases, Eq. (30) is well posed, and the relationship between the state vector \( {\mathbf{Z}}(t) \) and the control gain \( {\mathbf{U}}(t) \) can be determined uniquely. Clearly, both are functions of \( \Theta \) and might be assumed to take the form

Since all the randomness involved in this system comes from \( \Theta \), the augmented systems \( (Z(t),\Theta ) \) and \( (U(t),\Theta ) \), formed by components of the state and control force vectors, are both probability preserved and satisfy the following GDEEs, respectively [25]:

The corresponding instantaneous PDFs of \( Z(t) \) and \( U(t) \) can be obtained by solving the above partial differential equations under given initial conditions and then integrating over \( \Theta \):

where \( {{\Omega }_{\Theta }} \) is the distribution domain of \( \Theta \) and the joint PDFs \( {{p}_{{Z\Theta }}}(z,\theta, t) \) and \( {{p}_{{U\Theta }}}(u,\theta, t) \) are the solutions of Eqs. (34) and (35), respectively.

As mentioned in the previous sections, the GDEEs reveal the intrinsic relationship between stochastic and deterministic systems via the random event description of the principle of preservation of probability. According to the relationship between point evolution and ensemble evolution, this indicates that structural stochastic optimal control can be implemented through a collection of representative deterministic optimal controls and their synthesis in terms of the evolution of probability densities. To distinguish it from the classical stochastic optimal control scheme, the control methodology based on PDEM is termed the physical scheme of structural stochastic optimal control.

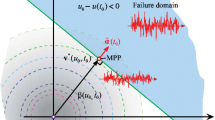

Figure 2 shows the differences among deterministic control (DC), LQG control, and physical stochastic optimal control (PSC) in tracing the performance evolution of optimal control systems. The performance trajectory of deterministic control is point to point, and it obviously lacks the ability to govern system performance in the presence of random external excitations. The performance trajectory of LQG control, meanwhile, is circle to circle. Classical stochastic optimal control essentially governs the system statistics of general stochastic dynamical systems, because efficient methods for solving the response processes of stochastic systems with strong nonlinearities are still lacking in the context of classical random mechanics. LQG control therefore only holds the system performance in the mean-square sense and cannot reach its higher-order statistics. The performance trajectory of PSC, in contrast, is domain to domain, which allows accurate control of system performance because all the system quantities of interest satisfy the GDEEs, Eqs. (34) and (35).

4 Probabilistic Criteria of Structural Stochastic Optimal Control

Structural stochastic optimal control involves minimizing or maximizing a specified cost function, whose generalized form is typically a quadratic combination of displacement, velocity, acceleration, and control force. A standard quadratic cost function is given by the following expression [34]:

where \( {\mathbf{Q}} \) is a positive semi-definite matrix, \( {\mathbf{R}} \) is a positive definite matrix, and \( {{t}_f} \) is the terminal time, usually longer than the duration of the excitation. It should be noted that the cost function of classical LQG control is defined as the ensemble expectation of Eq. (38), which is a deterministic function of time. Its minimization yields the minimum second-order statistics of the state for given parameters of the control policy, and the corresponding control gain is constructed under Gaussian process assumptions. In many cases of practical interest, however, the probability distribution of the state related to structural performance is unknown, and such a control gain essentially relies on second-order statistics only. In contrast, the cost function represented by Eq. (38) is here a stochastic process, whose minimization globally optimizes each representative solution of the system state for given parameters of the control policy. This treatment can yield minimum second-order statistics or an optimal shape of the PDF of the system quantities of interest. Since the procedure developed in this chapter is applicable to the optimal control of general stochastic systems, it is practicable to construct a control gain tied to a predetermined performance of engineering structures. In brief, the procedure involves a two-step optimization; see Fig. 3. In the first step, for each realization \( {{\theta }_q} \) of the stochastic parameter \( \Theta \), the cost function Eq. (38) is minimized to build a functional mapping from the set of parameters of the control policy to the set of control gains. In the second step, the parameters of the control policy to be used are obtained by optimizing the control gain according to the objective structural performance.

Therefore, viewed from the representative realizations, minimizing \( {{J}_1} \) yields the conditional extreme value of the cost function. For a closed-loop control system, the functional mapping from the set of control parameters to the set of control gains is given by [25]

where \( \rm P \) is the Riccati matrix function.
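For a linear, time-invariant state equation with a long control horizon, the Riccati matrix function is commonly replaced by the constant solution of the algebraic Riccati equation, which gives the standard regulator law \( {\mathbf{U}} = - {{{\mathbf{R}}}^{{ - 1}}}{{{\mathbf{B}}}^{\rm{T}}}{\rm P}{\mathbf{Z}} \). The sketch below assumes exactly that steady-state form (not necessarily the time-varying form of Eq. (39)) and uses SciPy's `solve_continuous_are`; the unit-mass oscillator and the weights are hypothetical. As a check, the closed-loop value of \( \int_0^\infty ({{\mathbf{Z}}^{\rm{T}}}{\mathbf{QZ}} + {{\mathbf{U}}^{\rm{T}}}{\mathbf{RU}})dt \) from an initial state \( {{{\mathbf{z}}}_0} \) should equal \( {\mathbf{z}}_0^{\rm{T}}{\rm P}{{{\mathbf{z}}}_0} \), a standard property of the optimal regulator.

```python
import numpy as np
from scipy.linalg import expm, solve_continuous_are


def lqr_gain(A, B, Q, R):
    """Steady-state Riccati matrix P and gain K = R^{-1} B^T P, giving the control law U = -K Z."""
    P = solve_continuous_are(A, B, Q, R)               # A^T P + P A - P B R^{-1} B^T P + Q = 0
    K = np.linalg.solve(R, B.T @ P)
    return P, K


def closed_loop_cost(A, B, K, Q, R, z0, t_end=20.0, h=1e-3):
    """Integral of Z^T Q Z + U^T R U along the free closed-loop response from z0 (trapezoidal rule)."""
    phi = expm((A - B @ K) * h)                        # exact one-step transition matrix of the closed loop
    n = int(round(t_end / h))
    z = np.empty((n + 1, len(z0)))
    z[0] = z0
    for i in range(n):
        z[i + 1] = phi @ z[i]
    u = -z @ K.T
    f = np.einsum("ij,jk,ik->i", z, Q, z) + np.einsum("ij,jk,ik->i", u, R, u)
    return h * (f.sum() - 0.5 * (f[0] + f[-1]))


# Hypothetical unit-mass oscillator (2*pi rad/s, 5% damping) with a force actuator
w, zeta = 2.0 * np.pi, 0.05
A = np.array([[0.0, 1.0], [-w**2, -2.0 * zeta * w]])
B = np.array([[0.0], [1.0]])
Q = np.diag([100.0, 1.0])                              # weighting matrices = parameters of the control policy
R = np.array([[0.01]])
P, K = lqr_gain(A, B, Q, R)
z0 = np.array([0.01, 0.0])
J = closed_loop_cost(A, B, K, Q, R, z0)
print(J, z0 @ P @ z0)                                  # the two values should agree closely
```

With deterministic structural parameters, as in this sketch, the gain is the same for every representative realization \( {{\theta }_q} \); it is the probabilistic criteria that follow which select \( {\mathbf{Q}} \) and \( {\mathbf{R}} \).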

As indicated previously, the effectiveness of stochastic optimal control relies on the specified control policy related to the objective performance of the structure. The critical step in designing the control system is the determination of the parameters of the control policy, that is, the weighting matrices \( {\mathbf{Q}} \) and \( {\mathbf{R}} \) in Eq. (38). Several strategies for choosing the weighting matrices have been proposed in the context of classical LQG control, such as system statistics assessment based on the mathematical expectation of the quantity of interest [40], system robustness analysis in the probabilistic optimal sense [35], and comparison of weighting matrices within the Hamiltonian theoretical framework [42]. Here, instead, we develop a family of probabilistic criteria for weighting matrix optimization in the context of the physical stochastic optimal control of structures.

4.1 System Second-Order Statistics Assessment (SSSA)

A probabilistic criterion of weight matrices optimization based on the system second-order statistics assessment, including constraint quantities and assessment quantities, is proposed as follows:

where \( {{J}_2} \) denotes a performance function, \( \tilde{Y} = \mathop{{\max }}\limits_t [\mathop{{\max }}\limits_i \left| {{{Y}_i}(\Theta, t)} \right|] \) is the equivalent extreme-value vector of the quantities to be assessed, \( \tilde{X} = \mathop{{\max }}\limits_t [\mathop{{\max }}\limits_i \left| {{{X}_i}(\Theta, t)} \right|] \) is the equivalent extreme-value vector of the quantities used as the constraint, \( {{\tilde{X}}_{\rm{con}}} \) is the threshold of the constraint, the tilde “~” on symbols indicates the equivalent extreme-value vector or equivalent extreme-value process [21], and \( F[ \cdot ] \) is the characteristic value function indicating the confidence level. The control criterion of Eq. (40) seeks the optimal weighting matrices such that the mean or standard deviation of the assessment quantity \( \tilde{Y} \) is minimized while the characteristic value of the constraint quantity \( \tilde{X} \) remains below its threshold \( {{\tilde{X}}_{\rm{con}}} \).
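Because Eq. (40) and the form of \( F[ \cdot ] \) are not reproduced above, the following is only a sketch under two assumptions: the characteristic value is taken as mean plus \( \beta \) standard deviations, and the moments are approximated by weighting the representative-point extreme values with their assigned probabilities \( {{P}_q} \). The candidate labels, the value of `beta`, and all numbers are hypothetical toy inputs.

```python
import numpy as np


def weighted_mean_std(values, prob):
    """Mean and standard deviation using the assigned probabilities P_q as weights."""
    values = np.asarray(values, dtype=float)
    mean = float(np.sum(prob * values))
    std = float(np.sqrt(np.sum(prob * (values - mean) ** 2)))
    return mean, std


def sssa_select(candidates, y_ext, x_ext, prob, x_con, beta=2.0, use_std=False):
    """Choose the candidate weighting that minimizes E[Y~] (or std[Y~]) subject to F[X~] <= x_con.

    y_ext[c][q], x_ext[c][q]: equivalent extreme values of the assessed and constrained
    quantities for candidate c at representative point q.
    F[X~] is assumed here to be mean + beta * std (a hypothetical characteristic value).
    """
    best, best_obj = None, np.inf
    for c, cand in enumerate(candidates):
        mx, sx = weighted_mean_std(x_ext[c], prob)
        if mx + beta * sx > x_con:
            continue                                   # constraint violated: discard candidate
        my, sy = weighted_mean_std(y_ext[c], prob)
        obj = sy if use_std else my
        if obj < best_obj:
            best, best_obj = cand, obj
    return best, best_obj


# Toy data: three hypothetical weighting candidates, three representative points
prob = np.array([0.2, 0.5, 0.3])
candidates = ["Q1", "Q2", "Q3"]
y_ext = np.array([[3.0, 4.0, 5.0], [2.0, 3.0, 4.0], [1.0, 2.0, 3.0]])   # e.g. peak drifts
x_ext = np.array([[1.0, 1.0, 1.0], [2.0, 2.0, 2.0], [4.0, 5.0, 6.0]])   # e.g. peak control forces
best, obj = sssa_select(candidates, y_ext, x_ext, prob, x_con=3.0)
print(best, obj)
```

Here the strongest candidate reduces the assessed quantity most but violates the constraint, so the criterion settles on the intermediate weighting; in practice the candidate set would be a search over the entries of \( {\mathbf{Q}} \) and \( {\mathbf{R}} \).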

4.2 Minimum of Exceedance Probability of Single System Quantity (MESS)

An exceedance probability criterion in the sense of the first-passage failure of a single system quantity can be specified as follows:

where \( \Pr \left( \cdot \right) \) denotes the exceedance probability of its argument, the equivalent extreme-value vector \( \tilde{Y} \) is the objective system quantity, and \( H( \cdot ) \) is the Heaviside step function. The physical meaning of this criterion is that the exceedance probability of the system quantity is minimized [26].
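The Heaviside form of the MESS criterion translates directly into a weighted sum: with representative points \( \tilde{Y}_q \) carrying assigned probabilities \( P_q \), the exceedance probability of a threshold \( y_{\rm thd} \) can be approximated as \( \sum_q P_q H(\tilde{Y}_q - y_{\rm thd}) \). The sketch below assumes this discrete approximation (the symbol \( y_{\rm thd} \), the helper names, and the sample values are hypothetical) and picks, among candidate weightings, the one with the smallest exceedance probability. The constraint quantities used with MESS in Section 5.1 are omitted for brevity.

```python
def heaviside(x):
    """Heaviside step H(x): 1 for x > 0, 0 otherwise (H(0) = 0 by convention here)."""
    return 1.0 if x > 0.0 else 0.0

def exceedance_probability(y_ext, probs, threshold):
    """Pr(Y~ > threshold) approximated as sum_q P_q * H(Y~_q - threshold)."""
    return sum(p * heaviside(y - threshold) for y, p in zip(y_ext, probs))

def best_candidate(candidates, threshold):
    """Label of the candidate weighting whose samples give the smallest
    exceedance probability; `candidates` maps label -> (y_ext, probs)."""
    return min(candidates,
               key=lambda label: exceedance_probability(*candidates[label], threshold))

# Hypothetical inter-story drift extremes (mm) for two candidate weight ratios.
probs = [0.25, 0.25, 0.25, 0.25]
candidates = {
    "ratio=1e2": ([8.0, 12.0, 9.0, 11.0], probs),
    "ratio=1e4": ([6.0, 7.5, 10.5, 5.0], probs),
}
print(best_candidate(candidates, threshold=10.0))
```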

4.3 Minimum of Exceedance Probability of Multiple System Quantities (MEMS)

An exceedance probability criterion in the sense of the global failure of multiple system quantities is defined as follows:

where the equivalent extreme-value vectors of the state and the control force, \( \tilde{Z} \) and \( \tilde{U} \), are the objective system quantities. This control criterion characterizes system safety (indicated by the controlled inter-story drift), system serviceability (indicated by the controlled inter-story velocity), system comfort (indicated by the constrained story acceleration), controller workability (indicated by the limited control force), and the trade-off among them.
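One way to evaluate the global exceedance probability of several quantities, assumed here in the spirit of the equivalent extreme-value event [21], is to normalize each equivalent extreme value by its threshold and take the maximum, \( W = \max_j |\tilde{Z}_j| / z_{j,\rm thd} \), so that the system fails when \( W > 1 \). The sketch below uses the drift, velocity, and control-force thresholds adopted in the comparative study of Section 5 (10 mm, 100 mm/s, 200 kN). The normalization, the helper names, and the sample values are hypothetical, and the story-acceleration constraint is not included.

```python
# Thresholds from the comparative study in Section 5 (objective quantities of MEMS).
THRESHOLDS = {"drift_mm": 10.0, "velocity_mm_s": 100.0, "force_kN": 200.0}

def normalized_extreme(sample, thresholds):
    """W = max_j |Z~_j| / threshold_j for one representative point."""
    return max(abs(sample[name]) / limit for name, limit in thresholds.items())

def global_exceedance_probability(samples, probs, thresholds):
    """Pr(W > 1): probability that at least one quantity exceeds its threshold."""
    return sum(p for s, p in zip(samples, probs)
               if normalized_extreme(s, thresholds) > 1.0)

# Hypothetical extreme values at four equally weighted representative points.
samples = [
    {"drift_mm": 8.0, "velocity_mm_s": 90.0, "force_kN": 150.0},   # all within limits
    {"drift_mm": 9.0, "velocity_mm_s": 110.0, "force_kN": 120.0},  # velocity exceeds
    {"drift_mm": 7.0, "velocity_mm_s": 80.0, "force_kN": 210.0},   # force exceeds
    {"drift_mm": 9.5, "velocity_mm_s": 95.0, "force_kN": 190.0},   # all within limits
]
probs = [0.25] * 4
print(global_exceedance_probability(samples, probs, THRESHOLDS))
```

Because a single scalar \( W \) summarizes all quantities at each point, the global failure event is handled without enumerating the correlations between the individual exceedance events.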

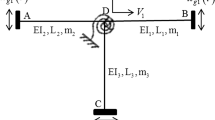

5 Comparative Studies

A base-excited single-story structure with an active tendon control system (see Fig. 4) is considered for comparative studies, first among the control policies derived from the above probabilistic criteria, and then between the developed control methodology and classical LQG control. The properties of the system are as follows: the story mass is \( m = 1 \times 10^{5} \) kg; the natural circular frequency of the uncontrolled structure is \( \omega_0 = 11.22 \) rad/s; the actuator control force is denoted by \( f(t) \), \( \alpha \) is the inclination angle of the tendon with respect to the base, and \( u(t) \) is the resulting control force acting on the structure; and the damping ratio is assumed to be 0.05. A stochastic earthquake ground motion model [15] is used, and the mean time history of the ground acceleration, with a peak of 0.11 g, is shown in Fig. 5.
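The stated properties imply the remaining SDOF coefficients through \( k = m\omega_0^2 \) and \( c = 2\zeta m \omega_0 \). The sketch below computes them and writes the equation of motion in first-order form. It assumes that the resulting tendon force \( u(t) \) enters additively as \( m\ddot{x} + c\dot{x} + kx = -m\ddot{x}_g + u(t) \) and that \( g = 9.81 \) m/s². The relation between the actuator force \( f(t) \), the angle \( \alpha \), and \( u(t) \) is not reproduced here, and the function name is hypothetical.

```python
import math

G_ACC = 9.81       # m/s^2, gravitational acceleration (assumed value)

m = 1.0e5          # kg, story mass (Section 5)
omega0 = 11.22     # rad/s, natural circular frequency (Section 5)
zeta = 0.05        # damping ratio (Section 5)

k = m * omega0 ** 2            # N/m, lateral stiffness implied by omega0
c = 2.0 * zeta * m * omega0    # N*s/m, viscous damping implied by zeta
T0 = 2.0 * math.pi / omega0    # s, natural period
a_g_peak = 0.11 * G_ACC        # m/s^2, peak of the mean ground acceleration

def state_derivative(x, v, a_g, u):
    """Hypothetical helper: right-hand side of m*x'' + c*x' + k*x = -m*a_g + u
    for state (x, v). x: relative displacement (m), v: relative velocity (m/s),
    a_g: ground acceleration (m/s^2), u: control force on the structure (N)."""
    acc = (-c * v - k * x - m * a_g + u) / m
    return v, acc

print(f"k = {k:.4e} N/m, c = {c:.4e} N*s/m, T0 = {T0:.3f} s")
```

With these values, \( k \approx 1.259 \times 10^{7} \) N/m, \( c = 1.122 \times 10^{5} \) N·s/m, and the natural period is about 0.56 s.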

The objective of stochastic optimal control is to limit the inter-story drift so that the system remains in the reliable (safe) state, to limit the inter-story velocity so that the system provides the desired serviceability, to limit the story acceleration so that the system provides the desired comfort, and to limit the control force so that the controller retains its workability. The thresholds/constraint values of the inter-story drift, the inter-story velocity, the story acceleration, and the control force are 10 mm, 100 mm/s, 3,000 mm/s², and 200 kN, respectively.

5.1 Advantages in Global Reliability-Based Probabilistic Criterion

For the control criterion of system second-order statistics assessment (SSSA), the inter-story drift is set as the constraint quantity, and the assessment quantities include the inter-story drift, the story acceleration, and the control force. The characteristic value function is defined as the mean plus three times the standard deviation of the equivalent extreme-value variables. For the control criterion of minimum exceedance probability of a single system quantity (MESS), the inter-story drift is set as the objective system quantity, and the constraint quantities include the story acceleration and the control force. For the control criterion of minimum exceedance probability of multiple system quantities (MEMS), the inter-story drift, the inter-story velocity, and the control force are set as the objective system quantities, while the constraint quantity is the story acceleration.

Table 1 lists the numerical results for the three control policies. It is seen that the effectiveness of response control hinges on the physical meaning of each optimal control criterion. In this case, the SSSA criterion requires a larger control force because the inter-story drift is the only constraint quantity, and it consequently yields a lower inter-story drift. The MESS criterion, in contrast, requires a smaller control force because the story acceleration and the control force are both treated as constraint quantities, which results in a smaller reduction of the inter-story drift. As seen from Table 1, the MEMS criterion achieves the best trade-off between control effectiveness and economy, since its objective system quantities include the inter-story drift together with the inter-story velocity and the control force. There is thus reason to believe that the multi-objective criterion in the sense of global reliability should be the primary criterion for structural performance control.

5.2 Control Gains Against the Classical LQG

Classical stochastic optimal control strategies can also be applied to a class of stochastic dynamical systems to synthesize the moments or the PDFs of the controlled quantities. Such systems are typically driven by independent additive Gaussian white noise and are usually modeled by Itô stochastic differential equations. Their response processes then possess the Markov property, and the transition probabilities are governed by the Fokker-Planck-Kolmogorov (FPK) equation. Applying these strategies to civil engineering systems driven by non-Gaussian excitations remains an open challenge. The proposed physical stochastic optimal control methodology, however, remains valid and applicable to such civil engineering systems. As a comparative study, Fig. 6 shows the root-mean-square quantities versus the weight ratio obtained by the advocated method and by LQG control, both using the SSSA criterion, together with the discrepancy between them.

It can be seen that LQG control underestimates the required control force when the ratio of the weighting-matrix coefficients is low, and overestimates it when the ratio is high. It is thus remarked that LQG control, which uses a nominal Gaussian white noise as the input, cannot yield a rational design of control systems for civil engineering structures.
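The sketch below illustrates how an LQG-type design produces RMS responses as a function of the weight ratio under the nominal white-noise assumption discussed above; it does not reproduce Fig. 6. It assumes full-state feedback (with perfect state measurement the LQG regulator gain coincides with the deterministic LQ gain), the per-unit-mass SDOF model of Section 5, a ground acceleration modeled as white noise with a hypothetical intensity \( W \), and a hypothetical weighting \( \mathbf{Q} = \lambda\,\mathrm{diag}(\omega_0^2, 1) \), \( \mathbf{R} = 1 \) with weight ratio \( \lambda \). For this 2 × 2 case the Riccati equation gives closed-form gains, and the stationary variances of a linear oscillator under white noise also follow in closed form. The helper names are hypothetical.

```python
import math

def lqr_gains_sdof(omega0, zeta, q1, q2, r):
    """Closed-form LQ gains (g1, g2) for x'' + 2*zeta*omega0*x' + omega0^2*x = u - w,
    with cost integrand q1*x^2 + q2*x'^2 + r*u^2 and control u = -(g1*x + g2*x').
    Obtained from the (1,1) and (2,2) entries of the 2x2 Riccati equation."""
    a, b = omega0**2, 2.0 * zeta * omega0
    g1 = -a + math.sqrt(a**2 + q1 / r)
    g2 = -b + math.sqrt(b**2 + 2.0 * g1 + q2 / r)
    return g1, g2

def stationary_rms(omega0, zeta, g1, g2, W):
    """Stationary RMS of drift, velocity and control input (per unit mass) when
    w(t) is white noise with E[w(t) w(s)] = W * delta(t - s)."""
    k_eff = omega0**2 + g1                  # closed-loop stiffness per unit mass
    c_eff = 2.0 * zeta * omega0 + g2        # closed-loop damping per unit mass
    var_x = W / (2.0 * c_eff * k_eff)
    var_v = W / (2.0 * c_eff)
    var_u = g1**2 * var_x + g2**2 * var_v   # x and x' are uncorrelated in steady state
    return math.sqrt(var_x), math.sqrt(var_v), math.sqrt(var_u)

omega0, zeta, m = 11.22, 0.05, 1.0e5       # Section 5 system
W = 0.01                                    # hypothetical white-noise intensity, m^2/s^3
rms0 = stationary_rms(omega0, zeta, 0.0, 0.0, W)
print(f"uncontrolled: drift RMS = {1e3 * rms0[0]:.2f} mm")
for ratio in (1.0e2, 1.0e4, 1.0e6):         # hypothetical weight ratios
    g1, g2 = lqr_gains_sdof(omega0, zeta, q1=ratio * omega0**2, q2=ratio, r=1.0)
    x_rms, _, u_rms = stationary_rms(omega0, zeta, g1, g2, W)
    print(f"ratio={ratio:g}: drift RMS = {1e3 * x_rms:.2f} mm, "
          f"force RMS = {m * u_rms / 1e3:.1f} kN")
```

Under this model both gains grow with the weight ratio, so the RMS drift decreases monotonically, but every number scales with the assumed intensity \( W \). That dependence on a nominal input is the limitation the physically based ground motion model is meant to remove.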

6 Numerical Example

An eight-story single-span shear frame fully controlled by active tendons is taken as a numerical example; the properties of the uncontrolled structure are taken from Yang et al. [38]. The floor mass of each story unit is \( m = 3.456 \times 10^{5} \) kg, the elastic stiffness of each story is \( k = 3.404 \times 10^{2} \) kN/mm, and the internal damping coefficient of each story unit is \( c = 2.937 \) kN·s/mm, which corresponds to a 2% damping ratio for the first vibrational mode of the entire building. The external damping is assumed to be zero. The computed natural frequencies are 5.79, 17.18, 27.98, 37.82, 46.38, 53.36, 58.53, and 61.69 rad/s. The earthquake ground motion model is the same as that used for the preceding SDOF system, with a peak acceleration of 0.30 g. The control criterion MEMS is employed, and the thresholds/constraint values of the inter-story drifts, inter-story velocities, story accelerations, and control forces are 15 mm, 150 mm/s, 8,000 mm/s², and 2,000 kN, respectively. For simplicity, the weighting matrices in this case take the form

The optimization results of the numerical example are listed in Table 2. When the performance function reaches its minimum, the results are expressed in terms of the exceedance probabilities of the system quantities rather than the reduction ratios of the responses, which indicates that the structural performance is controlled accurately. The optimization results also show that the stochastic optimal control achieves the best trade-off between effectiveness and economy.

Figure 7 shows typical PDFs of the 0-1 inter-story drift (between the ground and the first floor) of the controlled and uncontrolled structures at typical instants of time. The variation of the inter-story drift is clearly reduced. Likewise, the PDFs of the eighth-story acceleration at typical instants show a reduction of the system response, since the distribution of the story acceleration becomes narrower (see Fig. 8). This indicates that the seismic performance of the structure is significantly improved when the stochastic optimal control employing the exceedance probability criterion is applied.
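As a consistency check on the structural properties listed at the start of this section, the sketch below assembles the stiffness matrix of a uniform eight-story shear building and computes its undamped natural frequencies with NumPy. It assumes lumped floor masses and the usual tridiagonal shear-building stiffness; damping and the tendon layout are omitted. The results can be compared with the listed values and with the closed-form expression for a uniform shear building, \( \omega_j = 2\sqrt{k/m}\,\sin[(2j-1)\pi/(2(2n+1))] \).

```python
import numpy as np

n = 8                 # number of stories
m = 3.456e5           # kg, floor mass of each story unit
k = 3.404e8           # N/m, story stiffness (3.404 x 10^2 kN/mm)

# Lumped-mass shear building: story i connects floor i-1 to floor i.
K = np.zeros((n, n))
for i in range(n):
    K[i, i] = 2.0 * k if i < n - 1 else k   # top floor has only the spring below it
    if i + 1 < n:
        K[i, i + 1] = K[i + 1, i] = -k

# With M = m*I, the eigenproblem K phi = omega^2 M phi reduces to K/m.
omega = np.sqrt(np.linalg.eigvalsh(K / m))   # rad/s, ascending order
print(np.round(omega, 2))

# Closed-form frequencies of a uniform fixed-base shear building with n stories.
j = np.arange(1, n + 1)
omega_exact = 2.0 * np.sqrt(k / m) * np.sin((2 * j - 1) * np.pi / (2 * (2 * n + 1)))
```

The computed frequencies agree with the listed values to within rounding.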

7 Concluding Remarks

In this chapter, the fundamental theory of the generalized density evolution equation is first revisited. Then, a physical scheme of structural stochastic optimal control based on the probability density evolution method is presented for engineering structures excited by general nonstationary and non-Gaussian processes. It extends classical stochastic optimal control approaches, such as LQG control, in which the random dynamic excitations are assumed to be independent white noises or filtered white noises. A family of optimal control criteria for designing the controller parameters, including the criterion based on the mean and standard deviation of responses, the criterion based on exceedance probability, and the criterion based on the global reliability of systems, is elaborated by investigating the stochastic optimal control of a base-excited single-story structure with an active tendon control system. It is indicated that the control effect depends on the probabilistic criterion, and that the global reliability criterion operates efficiently and achieves the desirable structural performance. Meanwhile, the proposed stochastic optimal control scheme exhibits significant benefits over classical LQG control. An eight-story shear frame controlled by active tendons is further investigated using the control criterion based on the global reliability of the system quantities. The numerical example reveals that the seismic performance of the structure is significantly improved, indicating the validity and applicability of the developed PDEM-based stochastic optimal control methodology for the accurate control of structural performance.

References

Chen JB, Ghanem R, Li J (2009) Partition of the probability-assigned space in probability density evolution analysis of nonlinear stochastic structures. Probab Eng Mech 24(1):27–42

Crandall SH (1958) Random Vibration. Technology Press of MIT; John Wiley and Sons, New York

Dostupov BG, Pugachev VS (1957) The equation for the integral of a system of ordinary differential equations containing random parameters. Avtomatika i Telemekhanika 18:620–630

Einstein A (1905) Über die von der molekularkinetischen Theorie der Wärme geforderte Bewegung von in ruhenden Flüssigkeiten suspendierten Teilchen. Ann Phys (Leipzig) 17:549–560

Ghanem RG, Spanos PD (1991) Stochastic finite elements: a spectral approach. Springer, Berlin

Haldar A, Mahadevan S (2000) Reliability assessment using stochastic finite element analysis. Wiley, New York

Housner GW, Bergman LA, Caughey TK et al (1997) Structural control: past, present, and future. J Eng Mech 123(9):897–971

Itô K (1942) Differential equations determining a Markoff process. Zenkoku Sizyo Sugaku Danwakasi, 1077

Kleiber M, Hien TD (1992) The stochastic finite element method. Wiley, Chichester

Kolmogorov A (1931) Über die analytischen Methoden in der Wahrscheinlichkeitsrechnung. Math Ann 104(1):415–458

Langevin P (1908) Sur la théorie du mouvement brownien. C R Acad Sci, Paris, pp 530–532

Li J (1996) Stochastic structural systems: analysis and modeling. Science Press, Beijing (in Chinese)

Li J (2006) A physical approach to stochastic dynamical systems. Sci Paper Online 1(2):93–104 (in Chinese)

Li J (2008) Physical stochastic models for the dynamic excitations of engineering structures. In: Advances in theory and applications of random vibration, 119–132, Tongji University Press, Shanghai (in Chinese)

Li J, Ai XQ (2006) Study on random model of earthquake ground motion based on physical process. Earthq Eng Eng Vib 26(5):21–26 (in Chinese)

Li J, Chen JB (2003) Probability density evolution method for dynamic response analysis of stochastic structures. In: Proceedings of the fifth international conference on stochastic structural dynamics, Hangzhou, China, pp 309–316

Li J, Chen JB (2006) Generalized density evolution equations for stochastic dynamical systems. Prog Nat Sci 16(6):712–719

Li J, Chen JB (2006) The probability density evolution method for dynamic response analysis of non-linear stochastic structures. Int J Numer Methods Eng 65:882–903

Li J, Chen JB (2008) The principle of preservation of probability and the generalized density evolution equation. Struct Saf 30:65–77

Li J, Chen JB (2009) Stochastic dynamics of structures. Wiley, Singapore

Li J, Chen JB, Fan WL (2007) The equivalent extreme-value event and evaluation of the structural system reliability. Struct Saf 29(2):112–131

Li J, Liu ZJ (2006) Expansion method of stochastic processes based on normalized orthogonal bases. J Tongji Univ (Nat Sci) 34(10):1279–1283

Li J, Peng YB (2007) Stochastic optimal control of earthquake-excited linear systems. In: Proceedings of 8th Pacific conference on earthquake engineering, Singapore, 5–7 Dec 2007

Li J, Peng YB, Chen JB (2008) GDEE-based stochastic control strategy of MR damping systems. In: Proceedings of 10th international symposium on structural engineering for young experts, Changsha, China, pp 1207–1212

Li J, Peng YB, Chen JB (2010) A physical approach to structural stochastic optimal controls. Probab Eng Mech 25(1):127–141

Li J, Peng YB, Chen JB (2011) Probabilistic criteria of structural stochastic optimal controls. Probab Eng Mech 26(2):240–253

Li J, Yan Q, Chen JB (2011) Stochastic modeling of engineering dynamic excitations for stochastic dynamics of structures. Probab Eng Mech 27:19–28

Lin YK (1967) Probabilistic Theory of Structural Dynamics. McGraw-Hill, New York

Lin YK, Cai GQ (1995) Probabilistic structural dynamics: advanced theory and applications. McGraw-Hill, New York

Lutes LD, Sarkani S (2004) Random vibrations: analysis of structural and mechanical systems. Butterworth-Heinemann, Amsterdam

Schenk CA, Schuëller GI (2005) Uncertainty assessment of large finite element systems. Springer, Berlin

Shinozuka M, Deodatis G (1991) Simulation of stochastic processes by spectral representation. Appl Mech Rev 44(4):191–204

Shinozuka M, Jan CM (1972) Digital simulation of random processes and its applications. J Sound Vib 25:111–128

Soong TT (1990) Active structural control: theory and practice. Longman Scientific & Technical, New York

Stengel RF, Ray LR, Marrison CI (1992) Probabilistic evaluation of control system robustness. IMA workshop on control systems design for advanced engineering systems: complexity, uncertainty, information and organization, Minneapolis, MN, 12–16 Oct 1992

Syski R (1967) Stochastic differential equations. Chapter 8. In: Saaty TL (ed) Modern nonlinear equations. McGraw-Hill, New York

Wiener N (1923) Differential space. J Math Phys 2(13):131–174

Yang JN, Akbarpour A, Ghaemmaghami P (1987) New optimal control algorithms for structural control. J Eng Mech 113(9):1369–1386

Yong JM, Zhou XY (1999) Stochastic controls: Hamiltonian systems and HJB equations. Springer, New York

Zhang WS, Xu YL (2001) Closed form solution for along-wind response of actively controlled tall buildings with LQG controllers. J Wind Eng Ind Aerodyn 89:785–807

Zhu WQ (1992) Random vibration. Science Press, Beijing (in Chinese)

Zhu WQ, Ying ZG, Soong TT (2001) An optimal nonlinear feedback control strategy for randomly excited structural systems. Nonlinear Dyn 24:31–51

Acknowledgements

The support of the National Natural Science Foundation of China (Grant Nos. 50621062, 51108344) and the Exploratory Program of the State Key Laboratory of Disaster Reduction in Civil Engineering at Tongji University (Grant No. SLDRCE11-B-04) is gratefully acknowledged.

Copyright information

© 2013 Springer India

About this paper

Cite this paper

Li, J., Peng, YB. (2013). Physical Perspective Toward Stochastic Optimal Controls of Engineering Structures. In: Chakraborty, S., Bhattacharya, G. (eds) Proceedings of the International Symposium on Engineering under Uncertainty: Safety Assessment and Management (ISEUSAM - 2012). Springer, India. https://doi.org/10.1007/978-81-322-0757-3_4

DOI: https://doi.org/10.1007/978-81-322-0757-3_4

Publisher Name: Springer, India

Print ISBN: 978-81-322-0756-6

Online ISBN: 978-81-322-0757-3
