Abstract

Recent studies in cognitive science have convincingly demonstrated that human behavior, decision making, and emotion depend heavily on the “implicit mind,” that is, automatic, involuntary mental processes of which even the person herself or himself is not aware. Such implicit processes may interact between partners, producing a kind of “resonance,” in which two or more bodies and brains, coupled via sensorimotor systems, act nearly as a single system. The basic concept of this project is that such “implicit interpersonal information (IIPI)” provides the basis for smooth and effective communication. We have been developing new methods to decode IIPI from brain activity, physiological responses, and body movements, and to control IIPI by sensorimotor stimulation and non-invasive brain stimulation. Here, we describe two topics from the project in detail, namely, interpersonal synchronization of involuntary body movements as IIPI, and autism as an impairment of IIPI. The findings of the project are expected to provide guidelines for developing human-harmonized information systems.

Keywords

- Implicit interpersonal information (IIPI)

- Interpersonal synchronization

- Body movement

- Hyperscanning electroencephalogram (EEG)

- Eye movement

- Pupil diameter

- Autonomic nervous system

- Oxytocin

- Autism spectrum disorder

- Sensorimotor specificity

10.1 Introduction

Various kinds of telecommunication systems, such as smartphones, chat, and video conference systems, are widely used in the modern world. These systems enable us to communicate with others conveniently, beyond the restrictions of physical space. Compared with face-to-face communication, however, it is often difficult to convey subtle nuances and “atmosphere” through these systems. This often leads to inefficient discussions, misunderstandings, and conflicts that would largely be avoided in face-to-face communication.

The conventional approach to such problems is to improve the physical performance of the system (e.g., by increasing the size and resolution of the visual display, the number of audio channels, and the frequency bandwidth). Such technical improvements help to some extent, but they are not sufficient. As long as the user is human, no technological improvement can be effective unless it is suited to human characteristics and mechanisms. Moreover, adding unnecessary information may even disturb communication. For example, a conversation over a conventional telephone can be less stressful than one over a video conference system with a high-definition screen.

We approach the problem from the other side, namely, from a human-centered viewpoint. First of all, what are the critical factors that determine the quality of human communication? Once such factors are identified, it becomes possible to establish design principles for securing face-to-face communication quality even via remote communication systems. In addition, by controlling this critical information appropriately, it may be possible to implement communication systems with enhanced nuances and reduced disturbance compared with face-to-face interaction. Moreover, systematic knowledge of the factors that determine the quality of communication will be applicable not only to human-to-human interaction but also to human-to-computer interaction, which will become quite common in the near future. With these considerations in mind, we started this project in 2010 to identify the factors that determine the quality of communication and their neural basis.

The fundamental assumption of our project is that consciousness (or the explicit mind) is only a fraction of the whole “mind” [1]. Recent findings in cognitive science have convincingly demonstrated that human behavior, decision making, and emotion depend not only on conscious deliberation but heavily on the “implicit mind,” that is, automatic, fast, involuntary mental processes of which even the person herself or himself is not aware [2, 3]. It is then natural to expect such implicit processes to play critical roles in human-to-human communication as well. In daily communication, explicit information such as language and symbolic gestures is, needless to say, indispensable. At the same time, the internal state of a person can be expressed by implicit information, such as subtle, unintended body movements and various physiological changes reflecting the activity of the autonomic and endocrine systems. Such implicit information can be thought of as rather “honest” information, because it cannot be controlled voluntarily in the way that language can be used to tell a lie. Even if recipients are not aware of such implicit information, their feelings and decisions may nonetheless be affected by it, faithfully reflecting the stimulus and the context. Such implicit processes may interact between partners, producing a kind of “resonance,” in which two or more bodies and brains, coupled via sensorimotor systems, act nearly as a single system. The general aim of this project is to test the hypothesis that such “implicit interpersonal information (IIPI)” provides the basis for smooth communication (Fig. 10.1).

Concept of IIPI [1]. Human behavior, decision making, and emotion depend not only on conscious deliberation but heavily on the “implicit mind,” that is, automatic, fast, involuntary mental processes of which even the person herself or himself is not aware. In interpersonal communication, the unconscious body movements of partners interact with one another, creating a kind of resonance. This resonance, or “implicit interpersonal information (IIPI),” may provide a basis for understanding and sharing emotions, in addition to explicit language and gestures.

To this end, we have been conducting several lines of research in parallel. The first is to identify and decode IIPI. As the saying goes, “the eyes are more eloquent than the mouth.” In the context of decoding mental states from the eyes, gaze direction has been used extensively as an index of visual attention or interest. However, what is reflected in the eyes is not limited to mental states directed to or evoked by visual objects. We have been studying how to decode mental states such as saliency, familiarity, and preference, not only for visual objects but also for sounds, from information obtained from the eyes. Such information includes a type of eye movement called microsaccades (small, rapid, involuntary eye movements that typically occur once every second or two during visual fixation) [4] and changes in pupil diameter, which are controlled by the balance of the sympathetic and parasympathetic nervous systems and reflect, to some extent, the levels of neurotransmitters that control cognitive processing in the brain [5, 6].
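The chapter does not say how microsaccades were detected. A widely used approach is the velocity-threshold algorithm of Engbert and Kliegl (2003); the sketch below is a simplified version of it, assuming gaze positions in degrees sampled at a constant rate `fs`, a threshold multiplier `lam = 6`, and a minimum duration of 3 samples. All of these are illustrative choices, and the function name is hypothetical.

```python
import numpy as np

def detect_microsaccades(x, y, fs, lam=6.0, min_samples=3):
    """Return (onset, offset) sample indices of candidate microsaccades.

    Simplified Engbert-Kliegl style detector (assumed parameters):
    velocities from a 5-sample moving window, a median-based noise
    estimate per axis, and an elliptic threshold lam * noise.
    """
    dt = 1.0 / fs

    def velocity(p):
        v = np.zeros_like(p)
        # v[n] = (p[n+2] + p[n+1] - p[n-1] - p[n-2]) / (6 dt); edges stay 0
        v[2:-2] = (p[4:] + p[3:-1] - p[1:-3] - p[:-4]) / (6.0 * dt)
        return v

    def robust_sd(v):
        # median-based spread, insensitive to the rare fast samples
        return np.sqrt(np.median(v ** 2) - np.median(v) ** 2)

    vx = velocity(np.asarray(x, dtype=float))
    vy = velocity(np.asarray(y, dtype=float))
    eta_x, eta_y = lam * robust_sd(vx), lam * robust_sd(vy)
    above = (vx / eta_x) ** 2 + (vy / eta_y) ** 2 > 1.0

    # Group consecutive supra-threshold samples into events
    events, start = [], None
    for i, flag in enumerate(above):
        if flag and start is None:
            start = i
        elif not flag and start is not None:
            if i - start >= min_samples:
                events.append((start, i - 1))
            start = None
    if start is not None and len(above) - start >= min_samples:
        events.append((start, len(above) - 1))
    return events
```

Because the noise estimate is median based, a handful of fast samples barely shifts the threshold, which is why the detector adapts to each recording's fixation noise.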

We have also been studying the responses of the brain and the autonomic nervous system, hormone secretion, and body movements. In one such study, we developed a method to measure the concentration of oxytocin (a hormone considered to promote trust and attachment to others) in human saliva with the highest accuracy currently available. This enabled us to identify a physiological mechanism underlying the relaxation induced by listening to music: listening to music with a slow tempo promotes the secretion of oxytocin, which activates the parasympathetic nervous system, resulting in relaxation [7].

While the above-mentioned methods decode information about the implicit mental states of an individual, we have further studied the interpersonal interaction of such information. As an example, in Sect. 10.2 we introduce in some detail our study on the interpersonal synchronization of implicit body actions.

The second line of research focuses on patients with communication disorders. We have been trying to identify the cause of impaired communication in high-functioning (i.e., without intellectual disability) autism spectrum disorder (ASD). We found that the basic sensory functions of individuals with ASD often show specific patterns distinct from those of neurotypical (NT) individuals. These findings provide a fresh view: impaired communication in high-functioning ASD may be due, at least partly, to an inability to detect IIPI, rather than to higher-order problems such as inferring others’ intentions (“theory of mind” [8, 9]). We cover this topic in some detail in Sect. 10.3.

The third line of research is the development of methods to improve the quality of communication by controlling IIPI and/or the neural processes involved in processing IIPI. We identified factors that can occur in communication systems and hamper IIPI, such as transmission delay, asynchrony between sensory modalities, and loss of information about subtle facial expressions and body movements during transmission. We then examined their impact on the quality of communication and on users’ tolerance and adaptability. These studies provide guidelines for achieving communication quality equivalent to that of face-to-face communication. Moreover, we have developed techniques to overcome physical limitations of communication systems, such as delay or asynchrony, by controlling sensory information [10]. We have also been studying methods of non-invasive stimulation of brain sites involved in processing IIPI, such as the reward system deep inside the brain [11–13].

The fourth line of research is the elucidation of the neural mechanisms involved in processing IIPI. We simultaneously measured the brain activity of two people performing a coordination task to identify relevant brain sites and to analyze the interaction of those sites across brains [14] (Sect. 10.2). Further, we established animal models (rats) of communication to study the neural mechanisms underlying social facilitation and mirroring using invasive brain measurements and stimulation [15].

In the following sections, we present a few of these research results in more detail.

10.2 Interpersonal Synchronization as IIPI

Social communication has been considered one of the most complex cognitive functions, partly because of its close relationship to language and to other explicit mental processes such as top-down executive control, inference, and decision making. On the other hand, the recent discovery of mirror neurons and the mirror system [16] points in a somewhat different direction, i.e., toward a more automatic and spontaneous nature of social communication. So, after all, does social communication belong to a higher cognitive or a lower biological level of mental processing? Both, obviously, but it is the latter that has been relatively neglected. Bodily synchrony in various species may be interpreted as a primitive biological basis of social behavior. The synchronized croaking of frogs, flashing of fireflies, and alarm calls of birds are just a few examples.

This line of consideration immediately raises the questions of whether synchrony provides a somatic basis of sociality in humans, and what neural mechanisms make it possible. In the following, we present some of the earliest evidence for such a basis and for a tight link between behavioral and social synchrony.

Synchronization is, in a broad physical sense, the coordination of rhythmic oscillators due to their interaction. Interpersonal synchronization of body movements has been widely observed. When two people walk together, each person’s footsteps unconsciously synchronize with the partner’s, even though their foot lengths, and thus their intrinsic cycles, differ [17–19]. The phenomenon has been regarded as a social self-organizing process [20]. Previous studies found that the degree of interpersonal synchrony in body movements, such as finger tapping and drumming, predicted subsequent social ratings [21, 22]. These findings indicate a close relationship between social interface and body movement synchronization [23]. However, the mechanism of body movement synchrony and its relationship to implicit interpersonal interaction remain unclear.
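As a minimal illustration of this physical notion, the sketch below integrates the textbook two-oscillator Kuramoto model, in which each phase is pulled toward the other with coupling strength K. The model, the coupling values, and the 1.0 Hz versus 1.1 Hz “step” rates are assumptions for illustration, not parameters from the cited walking studies. The phase difference \(\varphi = \theta_1 - \theta_2\) obeys \(d\varphi/dt = \Delta\omega - 2K \sin\varphi\), so the pair locks when \(|\Delta\omega| \le 2K\) and otherwise keeps slipping.

```python
import math

def simulate_pair(omega1, omega2, coupling, dt=0.001, duration=60.0, tail=10.0):
    """Euler-integrate two sine-coupled phase oscillators (Kuramoto model).

    d(theta1)/dt = omega1 + K * sin(theta2 - theta1)
    d(theta2)/dt = omega2 + K * sin(theta1 - theta2)

    Returns (final phase difference wrapped to [-pi, pi],
             mean drift rate of the phase difference over the last `tail` s).
    """
    theta1, theta2 = 0.0, 1.0          # arbitrary initial phases (rad)
    steps = int(round(duration / dt))
    tail_start = steps - int(round(tail / dt))
    diff_at_tail_start = 0.0
    for step in range(steps):
        if step == tail_start:
            diff_at_tail_start = theta1 - theta2
        rate1 = omega1 + coupling * math.sin(theta2 - theta1)
        rate2 = omega2 + coupling * math.sin(theta1 - theta2)
        theta1 += rate1 * dt
        theta2 += rate2 * dt
    drift = ((theta1 - theta2) - diff_at_tail_start) / tail
    return math.remainder(theta1 - theta2, 2 * math.pi), drift

# Two hypothetical walkers with intrinsic step rates of 1.0 Hz and 1.1 Hz (in rad/s).
w1, w2 = 2 * math.pi * 1.0, 2 * math.pi * 1.1
print(simulate_pair(w1, w2, coupling=0.5))  # |dw| <= 2K: locked, drift near 0
print(simulate_pair(w1, w2, coupling=0.1))  # |dw| > 2K: phases keep slipping
```

With strong enough coupling the two rhythms settle at a fixed phase lag despite different intrinsic cycles, which is the sense in which different foot lengths need not prevent synchronized footsteps.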

We thus aimed to evaluate unconscious body movement synchrony and implicit interpersonal interactions between two participants [14]. We also aimed to assess the underlying neural correlates and functional connectivity within and among the brain regions of two participants.

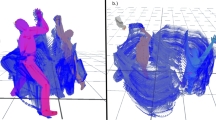

We measured the unconscious fingertip movements of the two participants while simultaneously recording their electroencephalograms (EEGs) in a face-to-face setting (Fig. 10.2a, b). Participants were asked to straighten their arms, point and hold their index fingers toward each other, and look at the other participant’s fingertip. The face-to-face setting simplifies, yet closely approximates, real-life situations and reinforces the social nature of interpersonal interactions [24].

We believe that our implicit fingertip synchrony task, like unconscious footstep synchrony and interpersonal finger-tapping and drumming synchrony, is a form of social synchronization, and that our task is its simplest form. We therefore hypothesized that interpersonal interaction between two participants would increase body movement synchronization and that this interaction would be correlated with social personality traits. These traits might in turn be reflected in within- and between-brain synchronization. More specifically, we expected experience-based changes in synchrony in sensorimotor as well as theory-of-mind-related networks, including the precuneus, inferior parietal cortex, and posterior temporal cortex [25, 26]. The parietal cortex was especially expected to be involved, given that implicit processing of emotional stimuli, as compared with explicit emotional processing, is associated with theta synchronization in the right parietal cortex [27]. Previous studies using simultaneous EEG recording of multiple people (i.e., hyperscanning EEG) also showed that the right parietal area plays a key role in non-verbal social coordination and movement synchrony [28, 29].

Experimental setup and behavioral results [14]. a Session 1: Participants were asked to straighten their arms, point and hold their index fingers toward each other, and look at the other participant’s fingertip. They were instructed to look at the other participant’s finger while holding their own finger as stationary as possible. One participant was instructed to use the left arm and the other the right arm. Session 2: Same as Session 1, except that the participants switched arms (left to right and right to left, respectively). Session 3: One participant (the leader, randomly selected from the naïve participant pair) was instructed to move his or her finger randomly (within an approximately \(20 \times 20\) cm square) and the other (the follower) was instructed to follow. Session 4: Same as Session 3, except that the participants switched arms. Sessions 5 and 6: Same as Sessions 3 and 4. Sessions 7 and 8: Same as Sessions 1 and 2. We call Sessions 1–2 the pre-training sessions, Sessions 3–6 the training sessions, and Sessions 7–8 the post-training sessions. b Hyperscanning-EEG setup. The EEG data were passed through a client to an EEG server and database, which were regulated by an experiment controller. Client computers received fingertip movement information from the two participants. The two EEG recording systems were synchronized using a pulse signal delivered from the control server computer to both systems. c Average cross-correlation coefficients of fingertip movements in each condition (pre-training, post-training, and crosscheck validation) with standard errors (gray). The training significantly increased the finger movement correlation between the two participants (\(p<0.03\)). No significant correlation was found in the crosscheck condition (i.e., cross-correlation after random shuffling of participants, \(p=0.62\)). Results are shown as means ± s.e.m. Statistical analyses were performed using a two-tailed Student’s t-test.

Simultaneous functional magnetic resonance imaging (fMRI) of two participants, called hyperscanning, has been used to assess brain activity while participants interact with each other [30, 31]. However, because of technical limitations of fMRI, such as its low temporal resolution and the difficulty of natural face-to-face interaction inside a scanner, we believe that hyperscanning EEG of two participants may open new vistas on the neural mechanisms underlying social relationships and decision making [28, 29, 32, 33] by providing a tool for quantifying neural synchronization in face-to-face interactions with high temporal resolution [28, 34, 35].

Local neural synchronization can be detected by measuring frequency-specific power changes at each EEG electrode. However, local power changes alone cannot provide evidence of large-scale network formation, because such networks depend on oscillatory interactions between spatially distant cortical regions [36, 37], which may be critical for understanding the neural mechanisms of interpersonal interaction. To address this issue, we used phase synchrony to quantify long-range functional connectivity; this allowed us to detect not only intra-brain but also inter-brain connectivity.
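Phase synchrony is often quantified with the phase-locking value (PLV), the magnitude of the time-averaged unit phasor of the phase difference between two band-limited signals. The sketch below assumes the signals are already band-pass filtered to one band (e.g., theta) and obtains instantaneous phases from an FFT-based analytic signal (the same construction as a Hilbert transform). It illustrates the measure; it is not necessarily the exact estimator used in [14], and the function names are our own.

```python
import numpy as np

def analytic_signal(x):
    """FFT-based analytic signal: zero negative frequencies, double positive ones."""
    x = np.asarray(x, dtype=float)
    n = x.size
    h = np.zeros(n)
    h[0] = 1.0                      # keep DC
    h[1:(n + 1) // 2] = 2.0         # double positive frequencies
    if n % 2 == 0:
        h[n // 2] = 1.0             # keep Nyquist for even lengths
    return np.fft.ifft(np.fft.fft(x) * h)

def phase_locking_value(x, y):
    """PLV = |mean(exp(i * (phi_x - phi_y)))|.

    1 means a constant phase lag; values near 0 mean no consistent relation.
    Assumes x and y are already band-pass filtered to the same band.
    """
    phase_x = np.angle(analytic_signal(x))
    phase_y = np.angle(analytic_signal(y))
    return float(np.abs(np.mean(np.exp(1j * (phase_x - phase_y)))))
```

Because PLV depends only on phase differences, it can be computed between two electrodes of one brain (intra-brain) or between electrodes of two brains (inter-brain) in exactly the same way.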

In short, we devised a novel combination of hyperscanning EEG and motion tracking with the implicit body movement synchronization paradigm [38]. This paradigm has two advantages. First, it allowed us to detect an implicit-level interaction that is interpersonal and real-time in nature, because the participants were instructed to keep their own fingers as stable as possible and to disregard the partner’s movements. Unlike previous studies, which mainly concentrated on explicit social interactions, we specifically aimed to identify an implicit process by minimizing explicit interaction; “social yet implicit” is the key phrase. Despite the instruction, the partner’s finger movement turned out not to be entirely neglected at the implicit level, and the participants tended to synchronize with each other unconsciously.

Second, because participants were instructed to stay stationary, movement artifacts in the EEG data were minimized. This robustness to noise during face-to-face interaction makes the paradigm highly sensitive to the underlying EEG dynamics and the functional connectivity of implicit interpersonal interaction.

We used this finger-to-finger task as an implicit synchrony measure in the pre- and post-tests. Between them was a training session in which the two participants (the “leader” and the “follower”) moved their fingers cooperatively. In a control training session, the follower was instead asked to move his/her finger non-cooperatively, i.e., as differently as possible from the leader’s movements.

We hypothesized that the cooperative training would increase both bodily and neural synchrony between the participants. We also hypothesized that such synchrony would be correlated with social personality traits, measured by Leary’s scales for social anxiety [39].

The results are summarized as follows:

First, the finger movement correlation between the two participants was significantly higher in the post-training than in the pre-training sessions (Fig. 10.2c). The maximum correlation coefficients occurred at zero time lag. In contrast, the non-social and non-responsive control trainings did not increase synchrony.
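The chapter reports cross-correlation coefficients with the maximum at zero lag but does not spell out the computation. A minimal sketch, assuming two equal-length 1-D fingertip position traces sampled at the same rate (the function name and lag convention are our own), computes Pearson’s r at each lag:

```python
import numpy as np

def normalized_xcorr(a, b, max_lag):
    """Pearson correlation between a[t] and b[t + k] for k in [-max_lag, max_lag].

    A positive lag k means b trails a by k samples. Assumes len(a) == len(b).
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    lags = np.arange(-max_lag, max_lag + 1)
    coeffs = []
    for k in lags:
        if k >= 0:
            x, y = a[:a.size - k], b[k:]      # pairs a[t] with b[t + k]
        else:
            x, y = a[-k:], b[:b.size + k]     # pairs a[t] with b[t + k], k < 0
        coeffs.append(np.corrcoef(x, y)[0, 1])
    return lags, np.array(coeffs)
```

In this convention a peak at lag 0, as reported above, means that neither partner systematically led the other. The crosscheck control could be mimicked by correlating traces from participants who were never paired.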

Second, the increase in fingertip synchrony was significantly negatively correlated with each pair’s averaged scores on ‘Fear of Negative Evaluation’ and ‘Blushing Propensity’, indicating that the more socially anxious the pair, the smaller the increase in fingertip synchrony.

Third, EEG-based source localization showed that theta (4–7.5 Hz) activity in the precuneus (PrC) and beta (12–30 Hz) activity in the right posterior middle temporal gyrus (MTG) increased significantly after training (Fig. 10.3a, b). The right inferior parietal and posterior temporal cortices have been suggested to play a critical role in various aspects of social cognition, such as theory of mind and empathy [25].
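The source-level sLORETA analysis is beyond a short example, but the quantity it localizes, band-limited power, can be illustrated at a single channel. This sketch assumes a Hann-windowed periodogram and uses the theta (4–7.5 Hz) and beta (12–30 Hz) limits from the text; the function name is hypothetical and this is not the chapter’s pipeline.

```python
import numpy as np

def band_power(signal, fs, low, high):
    """Mean power spectral density in [low, high) Hz from a Hann-windowed periodogram."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                         # remove DC offset
    window = np.hanning(x.size)
    spectrum = np.fft.rfft(x * window)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    # scale so that the result is a density (power per Hz), independent of window energy
    psd = np.abs(spectrum) ** 2 / (fs * np.sum(window ** 2))
    mask = (freqs >= low) & (freqs < high)
    return float(psd[mask].mean())

THETA = (4.0, 7.5)   # Hz, as in the text
BETA = (12.0, 30.0)  # Hz, as in the text
```

A training effect would then be expressed as a change in, for example, `band_power(post, fs, *THETA)` relative to `band_power(pre, fs, *THETA)` for the same channel or source.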

sLORETA source localization [14]. Source localization contrasting between the post- and pre-training in a theta (4–7.5 Hz) and b beta (12–30 Hz) frequency range. The training significantly increased the theta (4–7.5 Hz) activity in the precuneus (PrC) (BA7, \(X=-15\), \(Y=-75\), \(Z=50\); MNI coordinates; corrected for multiple comparisons using nonparametric permutation test, red \(p<0.01\), yellow \(p<0.001\)) and the beta (12–30 Hz) activity in the posterior middle temporal gyrus (MTG) (BA39, \(X=50\), \(Y=-74\), \(Z=24\); MNI coordinates; corrected for multiple comparisons using nonparametric permutation test, red \(p<0.05\), yellow \(p<0.01\)). c Regression analysis. Significant positive correlation between the fingertip synchrony change and ventromedial prefrontal cortex beta frequency power change between post- and pre-training sessions (BA11, \(X=15\), \(Y=65\), \(Z=-15\); MNI coordinates; regression with nonparametric permutation test, \(p<0.05\), \(n=20\))

We also found a significant positive correlation between the change in fingertip synchrony from the pre- to the post-training sessions and ventromedial prefrontal cortex (VMPFC) theta frequency activity (Fig. 10.3c). The VMPFC has been implicated as a shared circuit for reflective representations of both the self (i.e., introspection) and others (i.e., theory of mind) [9].
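The caption of Fig. 10.3 mentions regression with a nonparametric permutation test (\(n=20\)). A generic, single-test version of that idea, assuming paired values (one synchrony change and one power change per observation; the names are hypothetical), shuffles one variable to build a null distribution for Pearson’s r. The voxel-wise correction used in the study is not reproduced here.

```python
import numpy as np

def permutation_corr_test(x, y, n_perm=5000, seed=0):
    """Two-sided permutation p-value for Pearson's r between paired samples x and y."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    r_obs = np.corrcoef(x, y)[0, 1]
    rng = np.random.default_rng(seed)
    count = 0
    for _ in range(n_perm):
        # shuffling y breaks the pairing while keeping both marginal distributions
        r_perm = np.corrcoef(x, rng.permutation(y))[0, 1]
        if abs(r_perm) >= abs(r_obs):
            count += 1
    # +1 in numerator and denominator counts the observed pairing itself
    p_value = (count + 1) / (n_perm + 1)
    return float(r_obs), float(p_value)
```

The same function would apply to the pair-level correlations with the social anxiety scores described earlier.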

Finally, and most critically, the functional connectivity analysis showed that the number of inter-brain connections with significant phase synchrony increased after training, whereas the number of intra-brain connections did not (Fig. 10.4a). Thus, the cooperative training increased synchrony not only between the fingertip movements of the two participants but also between cortical regions across the two brains. Inter-brain connections were found mainly in the inferior frontal gyrus (IFG), anterior cingulate (AC), parahippocampal gyrus (PHG), and postcentral gyrus (PoCG) (phase randomization surrogate statistics, \(p < 0.000001\)) (Fig. 10.4b, c), which is broadly consistent with our hypotheses above.
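The connections were tested with phase randomization surrogate statistics. One common construction, sketched below under our own assumptions (a crude FFT brick-wall band-pass, an FFT-based analytic signal, 200 surrogates, hypothetical function names), keeps the amplitude spectrum of one signal but randomizes its Fourier phases, which destroys any consistent phase relation with the other signal; the observed PLV is then ranked against the surrogate PLVs. For a pure sinusoid this surrogate merely shifts the phase, so it is meaningful only for signals with some bandwidth.

```python
import numpy as np

def bandpass_fft(x, fs, low, high):
    """Crude zero-phase brick-wall band-pass by FFT masking (illustration only)."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    spectrum[(freqs < low) | (freqs > high)] = 0.0
    return np.fft.irfft(spectrum, n=x.size)

def plv(x, y):
    """Phase-locking value from FFT-based analytic signals."""
    def phase(sig):
        n = sig.size
        h = np.zeros(n)
        h[0] = 1.0
        h[1:(n + 1) // 2] = 2.0
        if n % 2 == 0:
            h[n // 2] = 1.0
        return np.angle(np.fft.ifft(np.fft.fft(sig) * h))
    return float(np.abs(np.mean(np.exp(1j * (phase(x) - phase(y))))))

def phase_randomized(y, rng):
    """Surrogate with the same amplitude spectrum as y but random Fourier phases."""
    spectrum = np.fft.rfft(y)
    phases = rng.uniform(0.0, 2.0 * np.pi, spectrum.size)
    surrogate = np.abs(spectrum) * np.exp(1j * phases)
    surrogate[0] = spectrum[0]            # keep the DC bin unchanged
    if y.size % 2 == 0:
        surrogate[-1] = spectrum[-1]      # Nyquist bin must stay real
    return np.fft.irfft(surrogate, n=y.size)

def surrogate_p_value(x, y, n_surrogates=200, seed=0):
    """Observed PLV and the fraction of surrogate PLVs at least as large."""
    rng = np.random.default_rng(seed)
    observed = plv(x, y)
    null = np.array([plv(x, phase_randomized(y, rng)) for _ in range(n_surrogates)])
    p = (np.sum(null >= observed) + 1) / (n_surrogates + 1)
    return observed, float(p)
```

Reaching a threshold as strict as \(p<0.000001\) would require far more surrogates (or a parametric fit to the surrogate distribution) than the 200 used in this sketch.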

Inter- and intra-brain phase synchrony [14]. a The total number of inter- and intra-brain functional connections that showed significant phase synchrony (phase randomization surrogate statistics, \(p<0.000001\)) in the theta (4–7.5 Hz) and beta (12–30 Hz) frequency ranges (chi-square test, \(^{*}p<0.005\), \(^{**}p<0.0001\)). The overall number of connections with significant phase synchrony increased after training for inter-brain connections, but not for intra-brain connections. b Topography of the phase synchrony connections between all 168 cortical ROIs of the two participants (left brain: leader; right brain: follower) when contrasting post- against pre-training (phase randomization surrogate statistics, \(p<0.000001\)) in theta (4–7.5 Hz) and c beta (12–30 Hz). Inter-brain connections were found mainly in the inferior frontal gyrus (IFG), anterior cingulate (AC), parahippocampal gyrus (PHG), and postcentral gyrus (PoCG).

The increase in fingertip synchrony after the training session indicates that large, voluntary, and intentional mimicry affects the subsequent small, involuntary, and unintentional synchronization of body movements. The two participants seem to build their own rhythmic structure during the intentional mimicry training, resulting in increased unintentional synchronization. This is consistent with, and extends, previous studies showing that motor mimicry increased implicit social interaction between two interacting participants [40], and that spontaneous bi-directional improvisation (i.e., implicit synchronization) increased motor synchrony compared with uni-directional imitation (i.e., explicit following) [41]. The correlations between the increase in fingertip synchrony and the social anxiety scales further support the idea that increased fingertip synchrony could be a marker of implicit social interaction.

The significant increase in inter-brain phase synchrony after the cooperative interaction (training) is theoretically informative, especially because the increase did not occur in several control conditions: (1) mere repetition of the test session (ruling out simple learning), (2) a single participant interacting with a dot moving on a screen according to a recorded finger movement, and (3) a single participant interacting with a recorded video of another participant. It should also be noted that phase synchrony increased only between brains, not within them (Fig. 10.4).

In the bigger picture, the current findings argue against the commonsense modern view of the brain and society, i.e., a very tightly unified single brain with only sparse and remote interpersonal communication between brains. Instead, brain regions are connected across brains via bodily interactions, possibly forming a loose dynamic coupling in daily natural interactions.

10.3 Autism as an Impairment of IIPI

Autism spectrum disorder (ASD) is a developmental disorder (i.e., the course of development is atypical owing to functional impairment of certain parts of the brain). The core symptoms of ASD are (1) impaired mutual interpersonal relationships, (2) communication disorder, and (3) restricted interests and repetitive behavior, which usually become apparent around the ages of 1–3. A considerable number of individuals with high-functioning ASD become aware of their disorder only as they grow up, when they face troubles in interpersonal relationships and difficulty adapting to school or work. In the scientific and clinical fields of ASD, the focus is usually on the disorders of interpersonal relationship and communication. For example, an influential theory claims that the core disorder of ASD is the lack of a “theory of mind” (the ability to infer others’ intentions, knowledge, and beliefs) [8, 9], and this theory has been examined from both behavioral and neural viewpoints. We take a different view, putting more emphasis on the reception and production of IIPI, which we assume support smooth communication. Based on this view, we have been studying basic visual and auditory functions, which play essential roles in the reception of IIPI.

10.3.1 Basic Auditory Processing in ASD

Individuals with ASD often have difficulty hearing a partner’s voice in environments where multiple sounds coexist, such as a cafeteria, even though they are not diagnosed as hearing impaired by a standard audiometric test. To determine the cause of this difficulty, we performed a series of experiments evaluating basic auditory functions [42]. None of the ASD (\(N=26\)) or NT (\(N=28\)) participants was diagnosed as hearing impaired by pure-tone audiometry. In addition, no significant difference was found between the two groups in an auditory mono-syllable identification test. However, when background noise was superimposed on the mono-syllables, ASD participants required a significantly lower noise level than NT participants to achieve the same performance. Their subjective complaints were thus supported by objective measurements.

To further explore the cause of the difficulty in selective listening in ASD, we examined various auditory functions, including frequency resolution, temporal resolution, sensitivity to interaural time and level differences (ITD and ILD, respectively), and pitch discrimination, and found a characteristic pattern of results in ASD. First, ASD participants showed significantly lower sensitivity to ITD and ILD, which are major cues for sound direction in the horizontal plane. Second, a subgroup of ASD participants showed significantly lower sensitivity to the temporal fine structure (TFS) of sound waveforms, which is one of the cues for pitch [43]. Sound direction and pitch play essential roles in separating sound sources and directing attention to one of several concurrent sources. It is therefore natural that impaired detection of these cues would cause difficulty in selective listening.
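To make the two binaural cues concrete: a classic textbook model estimates ITD as the lag that maximizes the cross-correlation between the two ear signals, and ILD as the level ratio in decibels. The sketch below is that generic model, not the psychophysical procedure of [42]; the ±1 ms search range, the sign conventions, and the function name are assumptions.

```python
import numpy as np

def estimate_itd_ild(left, right, fs, max_itd_s=0.001):
    """Return (ITD in seconds, ILD in dB) for a pair of ear signals.

    ITD: lag within +/- max_itd_s that maximizes the cross-correlation;
         positive means the right-ear signal is delayed (left ear leads).
    ILD: 20 * log10(rms_left / rms_right); positive means louder on the left.
    """
    left = np.asarray(left, dtype=float)
    right = np.asarray(right, dtype=float)
    max_lag = int(round(max_itd_s * fs))
    best_lag, best_val = 0, -np.inf
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            val = np.dot(left[:left.size - lag], right[lag:])    # left[t] vs right[t + lag]
        else:
            val = np.dot(left[-lag:], right[:right.size + lag])  # same pairing, lag < 0
        if val > best_val:
            best_lag, best_val = lag, val
    rms_left = np.sqrt(np.mean(left ** 2))
    rms_right = np.sqrt(np.mean(right ** 2))
    return best_lag / fs, float(20.0 * np.log10(rms_left / rms_right))
```

The resolution of such an estimate is limited to one sample (about 21 µs at 48 kHz), which is coarser than the ITDs human listeners can discriminate; the model is only meant to show what information the cue carries.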

The finding also provides insights into the biological basis of ASD. Sounds received by the ears first undergo frequency analysis in the cochlea and are then transmitted via the brainstem nuclei and the thalamus to the auditory cortex, where auditory perception takes place. ITD, ILD, and TFS are detected in the brainstem nuclei [43, 44], suggesting that a subset of individuals with ASD may have brainstem impairment. This is consistent with anatomical findings from postmortem brains [45]. Note that not all individuals with ASD have brainstem impairment, and those who have brainstem impairment do not have impairments in other brain sites. What is important here is that a significant proportion of individuals with ASD have subcortical impairment distinct from the cortical impairments usually associated with interpersonal relationships and communication.

This finding also opens the possibility of objective diagnosis of ASD based on auditory experiments. When three critical items (mono-syllable identification under noise, ITD detection, and TFS detection) are used as axes and the individual data of ASD and NT participants are plotted, a certain region of the three-dimensional space contains ASD participants exclusively (Fig. 10.5). However, there is also a population of ASD participants who cannot be distinguished from NT participants with these three items. ASD may therefore consist of separate subgroups, each with a different biological basis.

Objective screening of ASD based on auditory sensitivities [42]. Three critical items of basic auditory functions (mono-syllable identification under noise, detection of interaural time difference, and detection of TFS) are selected as axes and individual data of ASD and NT participants are plotted. The pink-colored area in the three-dimensional space contained ASDs exclusively

Segregation of concurrent sounds with similar spectral uncertainties [46]. A The auditory stimuli contained one target sequence (red lines) and eight masker sequences (blue lines). The target sequence always had jittered frequencies within a fixed protected region. a In the non-jittered conditions, the masker sequences had fixed frequencies outside the protected region. b In the jittered conditions, the masker sequences had jittered frequencies outside the protected region. B Target detection thresholds in the ASD and control groups for the four conditions, shown as mean ± standard error. The maskers were presented to the right ear in the monotic conditions and to both ears in the diotic conditions. Both the ASD and control groups were capable of segregating the target from maskers that carried different spatial information. In the monotic conditions, however, a significant difference between the jittered and non-jittered thresholds was observed in the control group but not in the ASD group.

We further compared the ability to group frequency components between ASD and NT participants [46]. This is a critical auditory function in multi-source environments: when acoustic signals from different sound sources are mixed on arrival at the ears, the auditory system has to organize (or group) their frequency components by their features [47]. We showed that, counterintuitively, individuals with ASD performed better at hearing a target sequence among distractors with similar spectral uncertainties (Fig. 10.6). Their superior performance in this task indicates enhanced discrimination between auditory streams with the same spectral uncertainties but different spectro-temporal details. This enhanced discrimination of acoustic components may be related to the absence of automatic grouping of acoustic components with the same features, which may in turn result in difficulties in speech perception in noisy environments.

An essential question is whether such specific auditory functions in ASD are among the causes of the core symptoms of ASD, namely, disorders in interpersonal relationships and communication, or simply coexist with them without a causal relationship. It is premature to draw a conclusion, but the former possibility seems promising. If an infant cannot turn his or her head toward a calling voice in daily situations where multiple sounds often coexist, and this specific auditory impairment goes unnoticed by the people around, interpersonal interactions would be severely limited, which may result in problems in the development of social abilities. Further studies are needed to clarify this point.

10.3.2 Gaze in ASD

As in the auditory domain (see the previous section), individuals with ASD may show abnormalities in the visual domain even at the level of sensory sampling. For example, a general tendency among them to avoid gazing at the eyes has been reported. However, psychophysical tests conducted in laboratory settings often fail to show the expected differences between ASD and neurotypical (NT) participants.

One reason is that general intelligence is not impaired in high-functioning ASD, so ASD participants often employ cognitive strategies to mimic typical behavior. To assess spontaneous tendencies in ASD free from such cognitive strategies, we applied the “Don’t look” paradigm to participants with varying Autism Quotient (AQ) scores [48, 49]. We presented face images to participants and measured their eye movements while they were instructed not to look at the mouth. We predicted that high-AQ participants would reveal their intrinsic eye-avoidance tendency in this condition, because their cognitive control would be “misdirected” and compensation strategies would not work. Consistent with this prediction, the AQ score was correlated with the eye-avoidance tendency (Fig. 10.7).
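As a rough illustration of the kind of analysis involved, the sketch below computes a per-participant eye-avoidance index as one minus the fraction of gaze samples that fall inside a rectangular eye region, and then a Pearson correlation between that index and AQ scores. The region coordinates, the index definition, and the function names (`eye_avoidance_index`, `pearson_r`) are hypothetical choices for illustration; the chapter does not specify how eye avoidance was quantified in [48, 49].

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]               # (x, y) gaze sample
Box = Tuple[float, float, float, float]   # (x_min, y_min, x_max, y_max)

# Hypothetical eye region in normalized image coordinates (0 to 1, y pointing down).
EYE_ROI: Box = (0.25, 0.30, 0.75, 0.45)


def in_box(p: Point, box: Box) -> bool:
    """True if the gaze sample lies inside the rectangle (edges included)."""
    x, y = p
    x0, y0, x1, y1 = box
    return x0 <= x <= x1 and y0 <= y <= y1


def eye_avoidance_index(gaze: Sequence[Point], roi: Box = EYE_ROI) -> float:
    """1 minus the fraction of gaze samples inside the eye region (1.0 = never on the eyes)."""
    if not gaze:
        raise ValueError("no gaze samples")
    inside = sum(1 for p in gaze if in_box(p, roi))
    return 1.0 - inside / len(gaze)


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length, n >= 2")
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("a sample with zero variance has no defined correlation")
    return sxy / math.sqrt(sxx * syy)
```

In use, one would compute the index from each participant's gaze samples in the “Don’t look at the mouth” condition and pass the resulting list, together with the matching list of AQ scores, to `pearson_r`. A significance test would still be needed before interpreting the coefficient.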

Fig. 10.7 Examples of gaze patterns in the “Don’t look at the mouth” paradigm [49]. Thirty undiagnosed college students (mean AQ 24.4; males: mean AQ 25.5, \(N=20\); females: mean AQ 22.4, \(N=10\)) viewed face images while their gaze was monitored. The participants were asked to avoid looking at the eyes (not shown here) or at the mouth. Gaze patterns were compared between a high-AQ group (\(N=11\); AQ scores 27–40, mean 32.3) and a low-AQ group (\(N=13\); AQ scores 10–21, mean 18.2). Green (red) blobs represent the gaze distribution of low-AQ (high-AQ) participants. Low-AQ participants tended to look at the eyes, whereas high-AQ participants tended to avoid them

Fig. 10.8 Gaze patterns in the “Don’t look” paradigm [50]. NT participants (\(N=12\): 7 male, 5 female; AQ 11–39) and participants diagnosed with ASD (\(N=9\): 5 male, 4 female; AQ 25–46) viewed paired face and flower images while their gaze was monitored. The two images of each pair were placed diagonally, at the upper right and lower left, to minimize generic spatial biases. In a two-alternative forced-choice task, the participants were asked to avoid looking at either the face or the flower. Red (blue) points represent the gaze distribution of ASD (NT) participants. In the “Don’t look at the flower” condition (right), ASD participants looked less at the face (\(p<0.04\); in particular, less at the eyes) and more at blank regions (\(p<0.03\))

We further extended this paradigm to a situation in which participants viewed paired face and flower images and had to avoid looking at one of them [50]. Participants with ASD had less difficulty suppressing orienting to faces, and participants with ASD (or high AQ) and NT participants (or low AQ) showed different gaze patterns across social (e.g., the eyes) and non-social regions of a face (Fig. 10.8). The “Don’t look” paradigm may therefore provide an objective and effective method for distinguishing individuals with ASD from NT individuals.

10.4 Future Directions of IIPI Research

Scientific knowledge and technologies for measuring, decoding, and controlling human behavior are vital to achieving human-harmonized information systems. Our findings on implicit interpersonal information are important for this goal: our project is based on, and points toward, the premise that smooth and effective interpersonal communication depends strongly on implicit, non-symbolic information that emerges from dynamic interactions among agents. The examples in this chapter illustrate this premise and provide a scientific basis for future research and technological development, including delay compensation using implicit visual-motor responses [10] and diagnostic procedures for autism spectrum disorder based on auditory [42, 46] and gaze processing [48–50]. We have also developed a marketing method based on leaky attractiveness [51] and objective measures of immersiveness using autonomic nervous system and hormonal responses [52].

Among possible fields of application, the most promising are learning and teaching [53] and collaboration in work, physical, artistic, and cultural activities. Most collaborative (or collective) behaviors occur in dynamic, reciprocal interactions. This is particularly true when such activities involve many implicit agreements or much tacit knowledge [54]. Recent studies in neuroscience and cognitive science have examined the multifaceted and dynamic processes in explicit and implicit interpersonal interactions [14, 18, 29, 31, 41, 55–58]. However, how such interactions are manifested and regulated in everyday life remains largely unknown. Furthermore, technologies based on implicit interpersonal communication have yet to be developed.

We have hypothesized that there might be two potential barriers to further advancing our knowledge and technologies of implicit interpersonal information. First, much research has focused on “reading the mind from the brain,” mainly because recent advances in brain imaging have captured researchers’ interest. However, over the decade of our research projects on implicit interpersonal information, it has gradually become clear that unnoticed (and therefore implicit) information exists among agents on the surfaces of their bodies, and even in the space between those surfaces. Moreover, reading the body surfaces would lead to further knowledge and technological advances in implicit interpersonal information.

For example, consider in-person interactions between an athlete and a coach. There are many potential channels through which information is conveyed: direct conversation, explicit and implicit feedback from the coach based on observing the athlete’s movements, explicit and implicit bodily feedback from the athlete’s own actions, explicit and implicit feedback from observing his/her own performance, social encouragement and discouragement, the athlete’s life patterns, and so on. Patterns appearing on the “surfaces” of the athlete’s body (skin, perspiration, breathing, movement, etc.) would reveal much about the athlete’s state. In addition, we know that there are atmospheres that lead to either good or bad performance; they often result from interactions among agents but are sometimes independent of them. Such an atmosphere may seem to arise from nowhere, yet it can be felt by those involved in the interaction. However, it is rather difficult to describe what such atmospheres actually are, and even more difficult to implement processes that mediate such contexts, mainly because the agents are unaware of them when they are deeply involved in their activities. Therefore, “truly wearable” devices that do not interfere with users’ activities will be highly desirable in the near future.

With these ideas in mind, and building on a recent advance in materials capable of measuring biometric information [59], we have started a new project, “Information Processing Systems based on Implicit Ambient Surface Information.” In this project, we apply the knowledge and technologies accumulated in our past research projects and extend them to understand information that exists on the surfaces of human bodies and machines but is largely ignored (“Implicit Ambient Surface Information”). We aim to use this information to establish intelligent information processing systems for creative human-machine collaboration. In particular, we aim to develop technologies that record and decode implicit body movements and physiological responses without disrupting natural behavior. We also aim to accumulate scientific knowledge for theoretical advances. To achieve these goals, we plan to select practical fields (e.g., sports) in which to test the developed technologies, propose theories, and raise the quality of human activities through collaboration with machines.

As stated in [53], explicit representations of “minds” are a consequence of implicit and reciprocal processes between two agents. Thus, networks of “minds” (or societies) are not necessarily based on explicit knowledge and concepts but are entangled with complex implicit interactions at myriad levels (a “rhizome” [60]). An important question, then, is how to measure, decode, and even control such implicit interactions.

One way to do this, among others, is to make users (e.g., athletes) aware of implicit processes through explicit or implicit feedback and let them think (or even not think) about how they might affect such implicit interactions. Conversely, to understand the users’ performing context, coaches would also need metacognition and emotion regulation. The knowledge and technologies to be developed in the new project “Information Processing Systems based on Implicit Ambient Surface Information” will help explicit and implicit collaboration between humans, between humans and machines, between humans through machines, and possibly between machines.

References

M. Kashino, M. Yoneya, H.-I. Liao, S. Furukawa, Reading the implicit mind from the body. NTT Tech. Rev. 12(11) (2014)

A.R. Damasio, The Feeling of What Happens: Body and Emotion in the Making of Consciousness (Mariner Books, 2000)

D. Kahneman, Thinking, Fast and Slow (Farrar, Straus and Giroux, 2011)

M. Yoneya, H.-I. Liao, S. Kidani, S. Furukawa, M. Kashino, Sounds in sequence modulate dynamic characteristics of microsaccades. Association for Research in Otolaryngology MidWinter Meeting (2014)

H.-I. Liao, S. Kidani, M. Yoneya, M. Kashino, S. Furukawa, Correspondences among pupillary dilation response, subjective salience of sounds, and loudness. Psychonomic Bulletin and Review (in press)

S. Yoshimoto, H. Imai, M. Kashino, T. Takeuchi, Pupil response and the subliminal mere exposure effect. PLoS One 9(2), e90670 (2014)

Y. Ooishi, H. Mukai, K. Watanabe, S. Kawato, M. Kashino, The effect of the tempo of music on the secretion of steroid and peptide hormones into human saliva. The 35th Annual Meeting of the Japan Neuroscience Society (2012)

S. Baron-Cohen, A.M. Leslie, U. Frith, Does the autistic child have a ‘theory of mind’? Cognition 21(1), 37–46 (1985)

C. Keysers, V. Gazzola, Integrating simulation and theory of mind: from self to social cognition. Trends Cogn. Sci. 11, 194–196 (2007)

S. Takamuku, H. Gomi, in 34th European Conference on Visual Perception. Background visual motion reduces pseudo-haptic sensation caused by delayed visual feedback during letter writing (2011)

V.S. Chib, K. Yun, H. Takahashi, S. Shimojo, Noninvasive remote activation of the ventral midbrain by transcranial direct current stimulation of prefrontal cortex. Transl. Psychiatry 3, e268 (2013)

Y. Takano, T. Yokawa, A. Masuda, J. Niimi, S. Tanaka, N. Hironaka, A rat model for measuring the effectiveness of transcranial direct current stimulation using fMRI. Neurosci. Lett. 491, 40–43 (2011)

T. Tanaka, Y. Takano, S. Tanaka, N. Hironaka, T. Hanakawa, K. Watanabe, M. Honda, Transcranial direct-current stimulation increases extracellular dopamine levels in the rat striatum. Frontiers Syst. Neurosci. 7(6) (2013)

K. Yun, K. Watanabe, S. Shimojo, Interpersonal body and neural synchronization as a marker of implicit social interaction. Sci. Rep. 2, 959 (2012)

Y. Takano, M. Ukezono, An experimental task to examine the mirror system in rats. Sci. Rep. 4, 6652 (2014)

G. Rizzolatti, L. Craighero, The mirror-neuron system. Annu. Rev. Neurosci. 27, 169–192 (2004)

F.J. Bernieri, R. Rosenthal, Fundamentals of Nonverbal Behavior (Cambridge University Press, Cambridge, 1991)

J.K. Burgoon, L.A. Stern, L. Dillman, Interpersonal adaptation: dyadic interaction patterns (Cambridge University Press, Cambridge, 1995)

R. Schmidt, M. Richardson, Coordination: Neural, behavioral and social dynamics (Springer, New York, 2008)

Z. Neda, E. Ravasz, Y. Brechet, T. Vicsek, A.L. Barabasi, Self-organizing processes: the sound of many hands clapping. Nature 403, 849–850 (2000)

M.J. Hove, J.L. Risen, It’s all in the timing: Interpersonal synchrony increases affiliation. Soc. Cogn. 27, 949–961 (2009)

S. Kirschner, M. Tomasello, Joint drumming: social context facilitates synchronization in preschool children. J. Exp. Child Psychol. 102, 299–314 (2009)

A.R. Damasio, Descartes’ Error: Emotion, reason, and the human brain (Grosset/Putnam, 1994)

U. Bronfenbrenner, The ecology of human development: Experiments by nature and design (Harvard University Press, Cambridge, 1979)

J. Decety, C. Lamm, The role of the right temporoparietal junction in social interaction: how low-level computational processes contribute to meta-cognition. Neuroscientist 13, 580–593 (2007)

M. Iacoboni, M.D. Lieberman, B.J. Knowlton, I. Molnar-Szakacs, M. Moritz, C.J. Throop, A.P. Fiske, Watching social interactions produces dorsomedial prefrontal and medial parietal BOLD fMRI signal increases compared to a resting baseline. NeuroImage 21, 1167–1173 (2004)

G.G. Knyazev, J.Y. Slobodskoj-Plusnin, A.V. Bocharov, Event-related delta and theta synchronization during explicit and implicit emotion processing. Neuroscience 164, 1588–1600 (2009)

G. Dumas, J. Nadel, B. Soussignan, J. Martinerie, L. Garnero, Inter-brain synchronization during social interaction. PLoS One 5, e12166 (2010)

E. Tognoli, J. Lagarde, G.C. DeGuzman, J.A. Kelso, The phi complex as a neuromarker of human social coordination. Proc. Natl Acad. Sci. 104, 8190 (2007)

B. King-Casas, D. Tomlin, C. Anen, C.F. Camerer, S.R. Quartz, P.R. Montague, Getting to know you: reputation and trust in a two-person economic exchange. Science 308, 78–83 (2005)

P.R. Montague, G.S. Berns, J.D. Cohen, S.M. McClure, G. Pagnoni, M. Dhamala, M.C. Wiest, I. Karpov, R.D. King, N. Apple, R.E. Fisher, Hyperscanning: simultaneous fMRI during linked social interactions. Neuroimage 16(4), 1159–1164 (2002)

F. de Vico Fallani, V. Nicosia, R. Sinatra, L. Astolfi, F. Cincotti, D. Mattia, C. Wilke, A. Doud, V. Latora, B. He, F. Babiloni, Defecting or Not Defecting: How to “Read” Human Behavior during Cooperative Games by EEG Measurements. PLoS One 5, e14187 (2010)

K. Yun, D. Chung, J. Jeong, in Proceedings of the 6th International Conference on Cognitive Science. Emotional Interactions in Human Decision Making using EEG Hyperscanning, pp. 327–330 (2008)

U. Lindenberger, S.C. Li, W. Gruber, V. Müller, Brains swinging in concert: cortical phase synchronization while playing guitar. BMC Neurosci. 10, 22 (2009)

G. Pfurtscheller, F.H. Lopes da Silva, Event-related EEG/MEG synchronization and desynchronization: basic principles. Clin. Neurophysiol. 110, 1842–1857 (1999)

S.L. Bressler, J.A.S. Kelso, Cortical coordination dynamics and cognition. Trends Cognitive Sci. 5, 26–36 (2001)

P. Fries, A mechanism for cognitive dynamics: neuronal communication through neuronal coherence. Trends Cogn. Sci. 9, 474–480 (2005)

K. Watanabe, M.O. Abe, K. Takahashi, S. Shimojo, Short-term active interactions enhance implicit behavioral mirroring. Soc. Neurosci. 832(20) (2011)

M.R. Leary, Social anxiousness: the construct and its measurement. J. Pers. Assess. 47, 66–75 (1983)

T.L. Chartrand, J.A. Bargh, The chameleon effect: the perception-behavior link and social interaction. J. Pers. Soc. Psychol. 76, 893–910 (1999)

L. Noy, E. Dekel, U. Alon, The mirror game as a paradigm for studying the dynamics of two people improvising motion together. Proc. Natl Acad. Sci. 108, 20947–20952 (2011)

M. Kashino, S. Furukawa, T. Nakano, S. Washizawa, S. Yamagishi, A. Ochi, A. Nagaike, S. Kitazawa, N. Kato, in Association for Research in Otolaryngology MidWinter Meeting. Specific deficits of basic auditory processing in high-functioning pervasive developmental disorders (2013)

H.E. Gockel, R.P. Carlyon, A. Mehta, C.J. Plack, The frequency following response (FFR) may reflect pitch-bearing information but is not a direct representation of pitch. J. Assoc. Res. Otolaryngol. 12(6), 767–782 (2011)

Y.E. Cohen, E.I. Knudsen, Maps versus clusters: different representations of auditory space in the midbrain and forebrain. Trends Neurosci. 22(3), 128–135 (1999)

R.J. Kulesza Jr, R. Lukose, L.V. Stevens, Malformation of the human superior olive in autistic spectrum disorders. Brain Res. 1367, 360–371 (2011)

I.-F. Lin, T. Yamada, Y. Komine, N. Kato, M. Kashino, The absence of automatic grouping processes in individuals with autism spectrum disorder. Sci. Rep. 5, 10524 (2015)

B.C. Moore, H.E. Gockel, Properties of auditory stream formation. Philos. Trans. R. Soc. Lond. B: Biol. Sci. 367(1591), 919–931 (2012)

E. Shimojo, D.-A. Wu, S. Shimojo, Don’t look at the mouth, but then where? – Orthogonal task reveals latent eye avoidance behavior in subjects with high Autism Quotient scores. Annual Meeting of the Vision Sciences Society (2012)

E. Shimojo, D.-A. Wu, S. Shimojo, Don’t look at the face—social inhibition task reveals latent avoidance of social stimuli in gaze orientation in subjects with high Autism Quotient scores. Annual Meeting of the Vision Sciences Society (2013)

C. Wang, E. Shimojo, D.-A. Wu, S. Shimojo, Don’t look at the mouth, but then where?—Orthogonal task reveals latent eye avoidance behavior in subjects with diagnosed ASDs: a movie version. J. Vision 14(10), 682 (2014)

C. Saegusa, J. Intoy, S. Shimojo, Visual attractiveness is leaky: the asymmetrical relationship between face and hair. Frontiers Psychol. 6, 377 (2015)

Y. Ooishi, M. Kobayashi, N. Kitagawa, K. Ueno, S. Ise, M. Kashino, Effects of speakers’ unconscious subtle movements on listener’s autonomic nerve activity. The 37th Annual Meeting of the Japan Neuroscience Society (2014)

K. Watanabe, Teaching as a dynamic phenomenon with interpersonal interactions. Mind, Brain and Education 7(2), 91–100 (2013)

M. Polanyi, The Tacit Dimension (University of Chicago Press, Chicago, 1966)

J. Decety, J.A. Sommerville, Shared representations between self and other: a social cognitive neuroscience view. Trends Cogn. Sci. 7(12), 527–533 (2003)

G. Knoblich, N. Sebanz, The social nature of perception and action. Curr. Dir. Psychol. Sci. 15(3), 99–104 (2006)

I. Konvalinka, A. Roepstorff, The two-brain approach: how can mutually interacting brains teach us something about social interaction? Frontiers Hum. Neurosci. 6(215), 1–9 (2012)

N. Sebanz, H. Bekkering, G. Knoblich, Joint action: bodies and minds moving together. Trends Cogn. Sci. 10(2), 70–76 (2006)

S. Tsukada, H. Nakashima, K. Torimitsu, Conductive polymer combined silk fiber bundle for bioelectrical signal recording. PLoS One 7(4), e33689 (2012)

G. Deleuze, F. Guattari, A Thousand Plateaus. Minuit, 1980. (English translation: University of Minnesota Press, 1987)

© 2016 Springer Japan

About this chapter

Cite this chapter

Kashino, M., Shimojo, S., Watanabe, K. (2016). Critical Roles of Implicit Interpersonal Information in Communication. In: Nishida, T. (eds) Human-Harmonized Information Technology, Volume 1. Springer, Tokyo. https://doi.org/10.1007/978-4-431-55867-5_10

DOI: https://doi.org/10.1007/978-4-431-55867-5_10

Publisher Name: Springer, Tokyo

Print ISBN: 978-4-431-55865-1

Online ISBN: 978-4-431-55867-5

eBook Packages: Computer Science (R0)