Abstract

Magnetoencephalography (MEG) has been used to explore basic auditory function in humans. In this chapter, major streams of clinical MEG studies of basic auditory function are briefly reviewed according to their stimulation-measurement paradigms, and our recent studies are introduced as one possible way to expand the range of auditory experimental paradigms. Repetition of an identical stimulus is the simplest form of stimulus sequence. The paired-click paradigm, in which the inter-pair interval is the only statistical variable, has been most commonly used in clinical applications as a probe for sensory gating impairment. When another stimulus with a different physical property is infrequently inserted into a series of identical stimulus repetitions, the sequence becomes the well-known oddball paradigm, which can probe impairment of the sensory memory trace. The common target of these paradigms has been the neural correlates of impairment in neuropsychiatric disorders, mostly language related. More than two distinct stimuli can be organized into a statistical structure similar to that of language and music, and tone sequences with such higher-order structure can potentially probe impairment of higher brain function. Finally, recent experiments in the authors’ laboratory are described as a seed for potential applications.


Keywords

- Paired-click paradigm

- Oddball paradigm

- Statistical learning

- Markov stochastic model

- Magnetoencephalography

1 Introduction

Magnetoencephalography (MEG) is a noninvasive technique for investigating neuronal activity in the living human brain and has been used to study human auditory function since the early 1980s. MEG is well suited to the study of auditory function because of two major advantages. First, MEG does not produce any sound during measurement. The neuromagnetometer system that measures MEG activity is a completely passive machine: it solely measures the magnetic flux produced by neuronal currents in the brain, without any radiation or emission of particles or electromagnetic waves. For auditory researchers it is a great advantage that MEG can record neural activity in complete silence, so that the acoustic stimuli delivered to the subjects are not contaminated by machine noise. Second, MEG can clearly resolve the signals produced by the auditory cortices located bilaterally in the temporal lobes. Electric potentials produced by the auditory cortex in each hemisphere are more difficult to separate, because activation of either auditory cortex in isolation produces a similar, nearly symmetrical scalp potential distribution. Additionally, because of the anatomy of the auditory pathway, no auditory stimulus can activate the auditory cortex on one side alone: auditory information from each ear projects to both auditory cortices with contralateral predominance, and the inputs from the two ears cannot be separated without specialized experimental techniques such as frequency tagging [17]. Furthermore, electric potentials reflect activity in subcortical regions as well as in the auditory cortex, whereas MEG predominantly reflects cortical responses. Thus, MEG is an indispensable tool for noninvasively measuring and analyzing neural signals from the auditory cortices with high temporal and spatial resolution.

A considerable number of studies on the human auditory system have been reported so far, addressing selective attention [39], auditory stream segregation [81], scene analysis [20], and informational masking. In this chapter, we briefly review clinical MEG studies of basic auditory function from the viewpoint of their experimental paradigms and describe our recent attempts to expand the range of experimental paradigms specifically suited to auditory research.

2 Repetition

Simple averaging of the evoked responses to repeated stimuli (e.g., a click, tone, or noise burst) has long been used in clinical studies. In adults, the most prominent deflection of the auditory evoked fields (AEFs) to tone bursts of sufficient duration, presented at interstimulus intervals long enough for the response to recover, is the N1m (the magnetic counterpart of the N1 potential), which peaks approximately 100 ms after stimulus onset [21, 33, 44, 51]. Although clicks or tone bursts with a rapidly rising envelope can elicit earlier magnetic responses, such as brainstem [15] and middle-latency responses [40], their clinical application has been limited [31].
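Averaging works because the evoked response is assumed to be time-locked and identical from trial to trial, whereas background activity is not. Averaging N epochs therefore reduces the residual noise roughly in proportion to 1/sqrt(N). The minimal sketch below illustrates this with a hypothetical N1m-like waveform and independent Gaussian noise. The waveform shape, the noise level, and the function names are illustrative assumptions, not properties of real MEG recordings.

```python
import math
import random

def average_epochs(epochs):
    """Sample-wise mean of equally long epochs (each a list of floats)."""
    n = len(epochs)
    return [sum(samples) / n for samples in zip(*epochs)]

def simulate_epochs(template, n_trials, noise_sd, seed=0):
    """Hypothetical epochs: a fixed, time-locked waveform plus independent Gaussian noise."""
    rng = random.Random(seed)
    return [[x + rng.gauss(0.0, noise_sd) for x in template] for _ in range(n_trials)]

def rms_error(estimate, template):
    """Root-mean-square deviation of the averaged waveform from the true template."""
    return math.sqrt(sum((e - t) ** 2 for e, t in zip(estimate, template)) / len(template))

# Toy N1m-like deflection: a negative Gaussian bump at sample 100
# (i.e., 100 ms if one assumes 1 kHz sampling and stimulus onset at sample 0).
template = [-math.exp(-((t - 100) / 15) ** 2) for t in range(300)]

for n in (4, 100, 400):
    averaged = average_epochs(simulate_epochs(template, n, noise_sd=2.0))
    # Residual noise should shrink roughly as noise_sd / sqrt(n).
    print(n, "trials -> RMS residual", round(rms_error(averaged, template), 3))
```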

The AEF components, like auditory evoked potentials (AEPs) [19], are known to change with maturation [25, 84]. In children up to about 7 years of age, the most prominent AEP component is P1, from which the later N1 and P2 components branch off (Fig. 5.1). The latency of the P1 (P1m) response decreases progressively and rapidly with white matter maturation (i.e., myelination), which can be indexed by increasing fractional anisotropy (FA) in diffusion tensor imaging. The white matter FA in the acoustic radiations of the auditory pathway, from the medial geniculate nucleus of the thalamus to the primary auditory cortex, is negatively correlated with P1m latency [60]. This latency shortening is delayed in children with autism [61, 62].

(a) Maturation of auditory evoked responses (Revised from [19]). Evoked potentials were recorded at Cz referenced to the right mastoid from normal-hearing participants (N = 8–12 for each age group), while a 23-ms speech syllable was presented with an interstimulus interval of 2 s. Averaged responses were filtered with a band-pass 4–30 Hz filter and grand-averaged within each age group. (b) Developmental latency shortening of P1m revealed by the two previous studies (Revised from [25] and [84])

The source of N1m responses to pure tones in the left hemisphere is known to lie about 14 mm posterior to that in the right hemisphere [14]. This difference results at least partially from the larger planum temporale in the left hemisphere. A significant reduction of this right-sided N1m anteriority, consistent with neuroanatomical deviations, was first reported in patients with schizophrenia [63]. Recently, a similar reduction of the anteriority was replicated in patients with autism, and an association between language functioning and the degree of asymmetry was suggested [68].

Gamma-band oscillatory activity in MEG has been considered a reliable marker of cognitive function. Oscillatory activity can be evoked or induced by a variety of cognitive tasks. In the auditory modality, repeated click trains have often been used as stimuli to record the auditory steady-state response (ASSR), which most commonly shows its resonant frequency at approximately 40 Hz [50, 54]. Previous MEG studies reported a reduced gamma-band ASSR in patients with chronic schizophrenia [76] and in patients with bipolar disorder [49].
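A common way to quantify an ASSR is to read out the spectral amplitude of the averaged response at the stimulation rate. The sketch below makes several assumptions: a 1 kHz sampling rate, a 1 s analysis window (1 Hz frequency resolution), and a simulated 40 Hz component buried in Gaussian noise. It evaluates single Fourier bins directly for transparency; in practice a full FFT would normally be used. The signal and the function name are hypothetical.

```python
import cmath
import math
import random

def spectral_amplitude(signal, freq_hz, fs_hz):
    """Amplitude of one Fourier component (single-bin DFT) of a real signal."""
    n = len(signal)
    acc = sum(x * cmath.exp(-2j * math.pi * freq_hz * k / fs_hz) for k, x in enumerate(signal))
    return 2 * abs(acc) / n

fs = 1000.0          # sampling rate in Hz (assumed)
duration_s = 1.0     # 1 s window -> bins fall on integer frequencies
rng = random.Random(1)
# Hypothetical averaged response: a 40 Hz steady-state component plus noise.
signal = [0.5 * math.sin(2 * math.pi * 40 * k / fs) + rng.gauss(0.0, 1.0)
          for k in range(int(fs * duration_s))]

for f in (37, 40, 43):
    # The 40 Hz bin should recover roughly the 0.5 amplitude; neighbours hold only noise.
    print(f, "Hz:", round(spectral_amplitude(signal, f, fs), 3))
```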

3 Paired Repetition

The paired-click paradigm has been used in clinical research since the 1980s. Sensory gating function, which is considered to represent a very early stage of attention, has been studied using this paradigm [2]. In this paradigm, identical clicks (or short sounds) are presented as an “S1-S2” pair, typically separated by 0.5 s, with intertrial intervals of several seconds to ca. 10 s [48]. In normal subjects, the responses to S2 are significantly reduced compared with those to S1. This reduction has been interpreted as a representation of a sensory gating function that suppresses irrelevant incoming sensory input. The most reliable neurophysiological index of this response reduction is considered to be the P50 (or its magnetic counterpart, the P50m), which is generated near the primary auditory cortex and peaks approximately 50 ms after stimulus onset. In patients with schizophrenia, this amplitude reduction is less pronounced than in normal subjects, which is believed to account for cognitive symptoms such as difficulty in focusing due to sensory overload.
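The S1/S2 response reduction is commonly summarized as a gating ratio: the S2 amplitude divided by the S1 amplitude, sometimes multiplied by 100 and reported as a percentage. A larger ratio means weaker suppression, that is, poorer gating. The minimal sketch below uses hypothetical amplitude values.

```python
def gating_ratio(s1_amplitude, s2_amplitude):
    """S2/S1 amplitude ratio of the P50 (P50m) response.

    Small values mean the second click is strongly suppressed (good gating);
    values near or above 1.0 mean little suppression (poorer gating).
    """
    if s1_amplitude <= 0:
        raise ValueError("S1 amplitude must be positive")
    return s2_amplitude / s1_amplitude

# Hypothetical peak amplitudes in arbitrary units, not data from any study.
print("strong suppression:", gating_ratio(40.0, 12.0))
print("weak suppression:  ", gating_ratio(40.0, 34.0))
```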

The sensory gating effect has also been studied with MEG [8, 9, 13, 24, 27, 78]. The MEG sensory gating deficit in schizophrenia has been replicated, with left-hemispheric dominance [74]. It has been attributed partly to a complex alteration of information processing [55] and to an impaired gating process associated with alpha oscillations [43]. In one study [75], patients with schizophrenia had smaller anterior hippocampal volumes than controls, and both their P50 and M50 (P50m) gating ratios were larger (i.e., worse). Patients with schizophrenia also showed significantly higher P50m gating ratios to human voices, specifically in the left hemisphere.

Moreover, patients with higher left P50m gating ratios showed more severe auditory hallucinations, whereas patients with higher right P50m gating ratios showed more severe negative symptoms [23]. Acoustic startle prepulse inhibition (PPI) is another known index of sensory (sensorimotor) gating. However, previous studies found little correlation between the two measures, suggesting that they reflect independent aspects of brain inhibitory function [7, 26, 28].

Several MEG studies have been conducted to investigate the pathophysiology of stuttering [4, 6, 66, 67, 77]. Using the paired-click paradigm, one study reported that stutterers exhibited impaired left auditory sensory gating and an expanded tonotopic organization in the right hemisphere [37]. This finding was consistent with a significant increase in the gray matter volume of the right superior temporal gyrus revealed by voxel-based morphometry in the same study. The degree of PPI, on the other hand, was not correlated with the effect of altered auditory feedback on stuttering [3].

In this paradigm, the inter-pair interval is the only variable parameter. The timing of S1 and S2 is therefore predictable to very different degrees: S2 appears 0.5 s after the most recent S1, whereas S1 appears several seconds to ca. 10 s after the most recent S2. Previous studies on temporal reproduction and sensorimotor synchronization have revealed a hierarchically organized chronometric function in humans [42, 56]. The neural substrates for auditory temporal prediction in this time range may involve a cortical and subcortical temporal processing network (Fig. 5.2) [69]. The function assessed by this paradigm may thus include temporal processing as well as attention-mediated gating.

A histogram of timing errors in a behavioral time-interval reproduction task in a representative subject. Intervals of 0.25 and 0.5 s were reproduced well, whereas timing errors increased drastically for intervals longer than 1–2 s. Behavioral performance in temporal reproduction correlated with neuromagnetic measures of P1m gating (unpublished data)
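The asymmetry in temporal predictability can be made explicit by generating a paired-click schedule and measuring the interval that precedes each S1 and each S2. In this sketch, the 0.5 s S1-S2 gap follows the text. Drawing the S1-to-next-S1 interval uniformly from 8 to 10 s is an assumption standing in for "several to ca. 10 s". The function names are hypothetical.

```python
import random

def paired_click_onsets(n_pairs, pair_gap_s=0.5, iti_range_s=(8.0, 10.0), seed=0):
    """Hypothetical paired-click schedule as a list of (onset_s, label) tuples.

    pair_gap_s: S1-to-S2 interval (0.5 s, as in the text).
    iti_range_s: S1-to-next-S1 interval, drawn uniformly (an assumption).
    """
    rng = random.Random(seed)
    events, t = [], 0.0
    for _ in range(n_pairs):
        events.append((t, "S1"))
        events.append((t + pair_gap_s, "S2"))
        t += rng.uniform(*iti_range_s)
    return events

def preceding_intervals(events):
    """Interval from the previous event to each event, grouped by label."""
    out = {}
    for (t_prev, _), (t, label) in zip(events, events[1:]):
        out.setdefault(label, []).append(t - t_prev)
    return out

intervals = preceding_intervals(paired_click_onsets(50))
for label in ("S1", "S2"):
    vals = intervals[label]
    print(label, "preceded by", round(min(vals), 2), "to", round(max(vals), 2), "s")
```

Every S2 is preceded by exactly 0.5 s, whereas each S1 is preceded by a longer and variable interval. This is the asymmetry that may engage temporal prediction.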

4 Oddball Paradigm

The oddball paradigm (first used by Squires et al. [72]) has been used extensively in a wide range of MEG and EEG studies to probe the human auditory system. A large proportion of studies on human auditory function using oddball paradigms have focused on the mismatch negativity (MMN) [46]. The MMN has been considered an index of pre-attentive change-detection processes that occur in the absence of directed attention. The conventional MMN is defined as a differential event-related component superimposed on the response to oddballs. The MMN can therefore be extracted by subtracting the responses to the standard stimuli from those to the rare stimuli in oddball paradigms. The MMN is one of the most promising candidate neurophysiological biomarkers of mental illness, such as schizophrenia (first reported by Shelley et al. [70]) and Alzheimer’s disease (first reported by Pekkonen et al. [53]).
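The subtraction described above can be sketched as follows. The averaged responses are simulated: a hypothetical N1-like deflection at 100 ms, plus an extra negativity at 170 ms for deviants. The 100–250 ms search window is an assumption. Because the polarity of MEG signals depends on sensor and source orientation, the peak polarity is left as a parameter.

```python
import math

def difference_wave(deviant_avg, standard_avg):
    """Deviant-minus-standard difference waveform (sample-wise)."""
    return [d - s for d, s in zip(deviant_avg, standard_avg)]

def peak_in_window(wave, times_ms, t_min_ms, t_max_ms, polarity=-1):
    """(latency_ms, amplitude) of the most extreme point of the given polarity in a window."""
    candidates = [(t, x) for t, x in zip(times_ms, wave) if t_min_ms <= t <= t_max_ms]
    return max(candidates, key=lambda tx: polarity * tx[1])

# Hypothetical averaged responses sampled every 1 ms from 0 to 400 ms.
times = list(range(0, 401))
standard = [-math.exp(-((t - 100) / 20) ** 2) for t in times]
deviant = [s - 0.8 * math.exp(-((t - 170) / 30) ** 2) for s, t in zip(standard, times)]

mmn = difference_wave(deviant, standard)
latency, amplitude = peak_in_window(mmn, times, 100, 250)
print("MMN-like peak at", latency, "ms, amplitude", round(amplitude, 3))
```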

Previous studies showed that the MMNm (mismatch field; the magnetic counterpart of the MMN) elicited by speech sounds was more markedly reduced in schizophrenia than that elicited by tonal stimuli [35]. In patients with schizophrenia, the power of the speech-sound MMNm in the left hemisphere was positively correlated with the gray matter volume of the left planum temporale. This implies that a significant reduction of the gray matter volume of the left planum temporale may underlie functional abnormalities of fundamental language-related processing in schizophrenia (Fig. 5.3) [82]. The latency of the speech-sound MMNm in adults with autism was prolonged compared with that in control subjects [34]. The prolonged peak latency of the speech-sound MMNm was replicated in children with autism [59], and the delay was most evident in those with concomitant language impairment. This supports the speech-sound MMNm as a candidate biomarker for pathology relevant to language ability.

Scatterplot depicting a correlation between the phonetic MMN power in the left hemisphere and the gray matter volume of the left planum temporale in patients with schizophrenia (n = 13). (Revised from [82])

MMN generation is regulated through N-methyl-D-aspartate (NMDA)-type glutamate receptors [30]. Consistent with a glutamatergic contribution, a recent study showed that the MMNm was modulated by genetic variation in the metabotropic glutamate receptor 3 gene (GRM3) in healthy subjects [36]. A variant of the GRM3 gene has been associated with an increased risk of developing bipolar disorder as well as schizophrenia [32]. The peak latency of the MMNm in the right hemisphere under the pure-tone condition was significantly delayed in patients with bipolar disorder [73]. The MMNm elicited by pitch deviance could be a potential trait marker reflecting the global severity of bipolar disorder [71]. Recent advances in this line of research are reviewed elsewhere [47].

In basic research, it has been found that ensembles of physically different stimuli that share the same abstract feature can elicit an MMN (abstract-feature MMN) [64]. Within a predictive-processing framework, the notion of error signals may also encompass MMN-like activities elicited in cross-modal oddball paradigms, such as those with visual-auditory [22, 79, 85, 87] and motor-auditory [86] links. The roving-standard paradigm, which lies between simple stimulus repetition and the oddball paradigm, has also been receiving attention [10]. Using this paradigm, a recent study proposed two separate mechanisms involved in auditory memory trace formation [58].
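In a roving-standard sequence, a tone is repeated a variable number of times before the frequency changes. The first tone of each new train therefore serves as the deviant and, as it repeats, becomes the new standard. The sketch below generates such a sequence. The frequencies, the train lengths of 2–6 tones, and the function name are hypothetical choices for illustration.

```python
import random

def roving_standard(n_trains, frequencies_hz, train_lengths=(2, 3, 4, 5, 6), seed=0):
    """Hypothetical roving-standard sequence.

    Tones come in trains of identical frequency, and each new train switches to a
    different frequency. The first tone of every train after the first is the
    deviant. Returns (sequence, deviant_indices).
    """
    rng = random.Random(seed)
    seq, deviants, current = [], [], None
    for _ in range(n_trains):
        freq = rng.choice([f for f in frequencies_hz if f != current])
        if current is not None:
            deviants.append(len(seq))  # index of the first tone of this train
        seq.extend([freq] * rng.choice(train_lengths))
        current = freq
    return seq, deviants

seq, deviants = roving_standard(5, [500, 750, 1000, 1250])
print(seq)
print("deviant positions:", deviants)
```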

5 Higher-Order Stochastic Sequence

Predictive coding frameworks of perception [16] suggest that most of the stimulus sequences used in AEF studies may have been too simple for the brain as a highly predictive organ. In other words, most event-related response studies may reflect a brain that has already adapted to (i.e., learned) the experimental paradigm (i.e., its contrivances). Indeed, rule learning is achieved very rapidly even when complex rules are embedded in the sequence [5, 45].

The Markov chain [41] is one method that can control the statistical rules of a stimulus sequence and systematically expand the scope of studies on stochastic information processing in the auditory system. The fixed-order Markov chain model is a special case of variable-order Markov models. These models have been applied in a wide variety of research fields, such as information theory, machine learning, and human learning of artificial grammars [57]. The Markov property states that the next state depends only on the current state and not on the sequence of events that preceded it. In an nth-order chain, the current state consists of the n most recent events.
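To make the Markov property concrete, the sketch below estimates nth-order transitional probabilities from an observed symbol sequence. It counts how often each symbol follows each context of the n preceding symbols, which gives maximum-likelihood estimates. The function name and the toy "s"/"r" sequence are illustrative assumptions.

```python
from collections import Counter, defaultdict

def estimate_transitions(seq, order=1):
    """Maximum-likelihood estimate of P(next | previous `order` symbols) from counts."""
    counts = defaultdict(Counter)
    for i in range(order, len(seq)):
        context = tuple(seq[i - order:i])
        counts[context][seq[i]] += 1
    return {ctx: {sym: c / sum(cnt.values()) for sym, c in cnt.items()}
            for ctx, cnt in counts.items()}

# Toy oddball-like sequence of standards (s) and rare stimuli (r).
print(estimate_transitions(list("ssrsssrssrss"), order=1))
# Second-order estimate: the context is the two most recent symbols.
print(estimate_transitions(list("abcabcabc"), order=2))
```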

A conventional oddball paradigm, in which standard (s) stimuli are presented repeatedly and infrequently replaced by rare (r) stimuli with probability p, is described by the following two unconditional probabilities: \( \left\{\mathrm{P}(s)=1-\mathrm{p},\ \mathrm{P}(r)=\mathrm{p}\right\} \). The oddball sequence is often constrained by pseudo-random placement of the rare stimuli so that they are never presented consecutively. Such a stimulus sequence can be regarded as a first-order discrete-time Markov chain (DTMC). It is described by the following three conditional probabilities: \( \left\{\mathrm{P}(s \mid s)=(1-2\mathrm{p})/(1-\mathrm{p}),\ \mathrm{P}(r \mid s)=\mathrm{p}/(1-\mathrm{p}),\ \mathrm{P}(s \mid r)=1\right\} \). The next stimulus (s or r) is statistically determined by the most recent stimulus (s or r) (Fig. 5.4a). The DTMC framework, which thus includes conventional pseudo-random oddball sequences, can systematically control the randomness and regularities embedded in a stimulus sequence.

(a) A state transition diagram of pseudo-random oddball sequences. From the law of total probability, \( \mathrm{P}(r)=\mathrm{P}(r \mid s)\mathrm{P}(s)+\mathrm{P}(r \mid r)\mathrm{P}(r) \) and \( \mathrm{P}(s)=\mathrm{P}(s \mid s)\mathrm{P}(s)+\mathrm{P}(s \mid r)\mathrm{P}(r) \), where \( \mathrm{P}(r \mid r)=0 \), \( \mathrm{P}(s \mid r)=1 \), \( \mathrm{P}(r)=\mathrm{p} \), and \( \mathrm{P}(s)=1-\mathrm{p} \). From these we obtain the transitional probabilities \( \mathrm{P}(s \mid s)=(1-2\mathrm{p})/(1-\mathrm{p}) \) and \( \mathrm{P}(r \mid s)=\mathrm{p}/(1-\mathrm{p}) \) \( (\mathrm{p}<0.5) \). (b) A state transition diagram of the second-order Markov chain used in our study. The circled digits indicate two adjacent tones: “3,5” indicates that tone 5 is followed by tone 3. Arrows on the solid lines indicate transitions with 80 % probability, and arrows on the dashed lines indicate transitions with 5 % probability each from the state “2,1.” All other transitions with 5 % probability are omitted for legibility
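The first-order description of the pseudo-random oddball sequence translates directly into a generator. After a rare stimulus, a standard always follows. After a standard, a rare stimulus occurs with probability p/(1 - p), so that the long-run proportion of rare stimuli is p. The minimal sketch below implements these transitions. The function name and the choice p = 0.1 are illustrative.

```python
import random

def markov_oddball(n, p, seed=0):
    """Pseudo-random oddball sequence generated as a first-order Markov chain.

    p is the intended overall proportion of rare ('r') stimuli (0 < p < 0.5).
    Transitions: P(r|s) = p/(1-p), P(s|s) = (1-2p)/(1-p), P(s|r) = 1.
    """
    if not 0 < p < 0.5:
        raise ValueError("p must satisfy 0 < p < 0.5")
    p_r_given_s = p / (1 - p)
    rng = random.Random(seed)
    seq, prev = [], "s"
    for _ in range(n):
        if prev == "r":
            nxt = "s"  # P(s|r) = 1: rare stimuli never occur back to back
        else:
            nxt = "r" if rng.random() < p_r_given_s else "s"
        seq.append(nxt)
        prev = nxt
    return seq

seq = markov_oddball(100_000, p=0.1)
print("proportion of rare stimuli:", seq.count("r") / len(seq))
print("consecutive rare pairs:", sum(a == b == "r" for a, b in zip(seq, seq[1:])))
```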

Although many behavioral studies have investigated auditory sequence learning or statistical learning [65], surprisingly few neurophysiological studies have been conducted to date [1, 12, 52]. To the best of our knowledge, Furl et al. were the first to conduct an MEG study using stimulus sequences based on a Markov stochastic model [18]. In their study, MEG responses were recorded while listeners attended to the auditory sequence. We carried out a study using a Markov paradigm to clarify whether learning could be detected by a neurophysiological measure in an ignoring condition, considering that an ignoring condition is more readily applicable in clinical settings. The difficulty of sequence learning parallels the time needed to learn the sequence. It depends on the variability of the stimuli, the transition patterns, and the order of the Markov chain.

On the basis of pilot studies, we set the parameters so that the learning effect could be captured within a measurement period of 20–30 min. Figure 5.4b shows an example of the stochastic sequences used in our recent study [11].
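In the second-order chain of Fig. 5.4b, each context of two preceding tones leads to one continuation with 80 % probability and to each remaining tone with 5 % probability. Assuming five tones, these probabilities sum to 1 (0.80 + 4 × 0.05). The sketch below generates such a sequence. Its transition table is hypothetical: the preferred continuation for each context is chosen at random, so it does not reproduce the table used in [11]. Allowing repeated tones within a context is also an assumption.

```python
import random

TONES = (1, 2, 3, 4, 5)  # five tones assumed, so 0.80 + 4 * 0.05 = 1.0

def make_second_order_chain(seed=0, p_high=0.80):
    """Hypothetical second-order transition table keyed by (tone t-2, tone t-1).

    For each context, one next tone (chosen at random) gets probability p_high;
    the other tones share the remainder equally.
    """
    rng = random.Random(seed)
    p_low = (1 - p_high) / (len(TONES) - 1)
    table = {}
    for a in TONES:
        for b in TONES:
            preferred = rng.choice(TONES)
            table[(a, b)] = {t: (p_high if t == preferred else p_low) for t in TONES}
    return table

def generate_tones(table, n, seed=1):
    """Sample n tones; also record whether each transition was the high-probability one."""
    rng = random.Random(seed)
    seq = [1, 2]  # arbitrary starting context (an assumption)
    is_high = []
    while len(seq) < n:
        probs = table[(seq[-2], seq[-1])]
        nxt = rng.choices(list(probs), weights=list(probs.values()))[0]
        is_high.append(probs[nxt] == max(probs.values()))
        seq.append(nxt)
    return seq, is_high

table = make_second_order_chain()
seq, is_high = generate_tones(table, 20_000)
print("fraction of high-probability transitions:", sum(is_high) / len(is_high))
```

Labeling each tone as a high- or low-probability continuation of its context is what allows responses to the two tone classes to be averaged separately.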

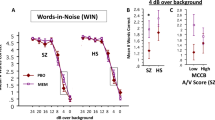

As the exposure sequence progressed, the responses to tones that appeared with higher transitional probability decayed, whereas those to tones that appeared with lower transitional probability retained their amplitude. The temporal profiles of the source waveforms appear strikingly similar to those depicted in previous MMN/MMNm studies (Fig. 5.5). The amplitude decay, which may reflect learning, was more rapid in the attending condition than in the ignoring condition (Fig. 5.6). The learning effect detected between the first and second thirds of the explicit sequence was preserved in the last third of the sequence. In that last third, spectral shifts occurred without changing the relative pitch intervals (i.e., transposition). This suggests that the participants could recognize the transposed sequence as the same melody already learned in the preceding exposure. In the ignoring condition, the MEG data indicated that the participants were eventually able to learn the melody.

Time courses of N1m amplitude changes along sequence progression in the ignoring (a) and attending conditions (b) (N = 14). The solid lines link N1m amplitudes for tones that appeared with higher transitional probability, and the dashed lines link N1m amplitudes for tones that appeared with lower transitional probability. The error bars indicate the standard error of the mean

The temporal profiles of the difference waveforms obtained from the responses to tones that appeared with higher and lower transitional probabilities were quite similar to those of the MMNm. The difference observed in this study cannot be explained by short-term effects under the assumption that distinct change-specific neurons in the auditory cortex elicit the MMN. On the other hand, the adaptation hypothesis, which assumes that preceding stimuli adapt feature-specific neurons, has been proposed to account for the MMN [29].

The present results suggest that adaptation to the statistical structure embedded in tone sequences may operate over a longer timescale than sensory memory. Previous studies using the conventional oddball paradigm have considered sensory memory to work as a comparator between the immediately preceding and the forthcoming stimulus. A comparator that works during statistical learning on this longer timescale may involve the hippocampus [38].

Recently, by controlling the periodicity of deviant occurrence in oddball sequences, Yaron et al. found that neurons in the rat auditory cortex were sensitive to the detailed structure of sound sequences over timescales of minutes [83]. On the other hand, Wilson et al. found a difference between humans and macaque monkeys despite a considerable degree of cross-species correspondence [80]. These findings suggest that mammals share an auditory statistical learning mechanism spanning timescales of minutes. However, humans and monkeys may differ when the statistical structure of an auditory sequence becomes as complex as that of human language.

One of the main functions of the brain is to convert ensembles of individual events in the environment into integrated knowledge. It does so by accumulating occurrences and extracting hidden rules, in order to better cope with future environmental changes. Introducing higher-order stochastic rules into neurophysiological measurement may open a new perspective for clinical pathological evaluation.

6 Conclusion

Clinical studies related to basic auditory function have been briefly reviewed, and our recent attempts have been described as a possible way to further investigate human auditory function. Any auditory sequence has statistical properties along the temporal and spectral axes. In discrete tone sequences, two cases mark the ends of a broad statistical spectrum of auditory events occurring in our living environment: simple repetition {P(s) = 1} and a uniformly random sequence of n tones {P(Si) = 1/n, i = 1, …, n}. Auditory sequences such as language and music have a hierarchical statistical structure, which can be interpreted as a context inspired by the human brain. Conversely, the human brain also processes sensory inputs in a context-seeking fashion. This leads to the integration of knowledge necessary to cope with forthcoming events at the least cost. In any experimental approach to the human brain, this learning property of the brain cannot be neglected. Depending on the experimental paradigm or task, various levels of learning (e.g., gating, adaptation, reasoning) may underlie the acquired neurophysiological data. These levels involve distinct couplings among multiple brain regions, and this should be borne in mind.
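Shannon entropy of the next-tone distribution is one way to place such sequences on this statistical spectrum. It is offered here as an illustrative assumption, not as a measure used in the chapter. Simple repetition has 0 bits, and a uniformly random sequence of n tones (each with probability 1/n) has log2 n bits. A second-order chain with 80 % and 5 % transitions lies in between when entropy is computed conditional on the two-tone context.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy in bits of a discrete distribution (zero-probability terms skipped)."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

n = 5
print("repetition    :", shannon_entropy([1.0]))                  # one tone, probability 1
print("uniform random:", round(shannon_entropy([1 / n] * n), 3))  # each tone 1/n
# Conditional entropy of an 80 % / 5 % second-order rule, given the two-tone context.
print("80/5 % Markov :", round(shannon_entropy([0.8] + [0.05] * 4), 3))
```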

References

Abla D, Katahira K, Okanoya K. On-line assessment of statistical learning by event-related potentials. J Cogn Neurosci. 2008;20(6):952–64. doi:10.1162/jocn.2008.20058.

Adler LE, Pachtman E, Franks RD, Pecevich M, Waldo MC, Freedman R. Neurophysiological evidence for a defect in neuronal mechanisms involved in sensory gating in schizophrenia. Biol Psychiatry. 1982;17(6):639–54.

Alm PA. Stuttering and sensory gating: a study of acoustic startle prepulse inhibition. Brain Lang. 2006;97(3):317–21. doi:10.1016/j.bandl.2005.12.001.

Beal DS, Quraan MA, Cheyne DO, Taylor MJ, Gracco VL, De Nil LF. Speech-induced suppression of evoked auditory fields in children who stutter. NeuroImage. 2011;54(4):2994–3003. doi:10.1016/j.neuroimage.2010.11.026.

Bendixen A, Schroger E. Memory trace formation for abstract auditory features and its consequences in different attentional contexts. Biol Psychol. 2008;78(3):231–41. doi:10.1016/j.biopsycho.2008.03.005.

Biermann-Ruben K, Salmelin R, Schnitzler A. Right rolandic activation during speech perception in stutterers: a MEG study. NeuroImage. 2005;25(3):793–801. doi:10.1016/j.neuroimage.2004.11.024.

Braff DL, Light GA, Swerdlow NR. Prepulse inhibition and P50 suppression are both deficient but not correlated in schizophrenia patients. Biol Psychiatry. 2007;61(10):1204–7. doi:10.1016/j.biopsych.2006.08.015.

Clementz BA, Blumenfeld LD, Cobb S. The gamma band response may account for poor P50 suppression in schizophrenia. Neuroreport. 1997;8(18):3889–93.

Clementz BA, Dzau JR, Blumenfeld LD, Matthews S, Kissler J. Ear of stimulation determines schizophrenia-normal brain activity differences in an auditory paired-stimuli paradigm. Eur J Neurosci. 2003;18(10):2853–8.

Cowan N, Winkler I, Teder W, Naatanen R. Memory prerequisites of mismatch negativity in the auditory event-related potential (ERP). J Exp Psychol Learn Mem Cogn. 1993;19(4):909–21.

Daikoku T, Yatomi Y, Yumoto M. Implicit and explicit statistical learning of tone sequences across spectral shifts. Neuropsychologia. 2014;63:194–204. doi:10.1016/j.neuropsychologia.2014.08.028.

Daikoku T, Yatomi Y, Yumoto M. Statistical learning of music- and language-like sequences and tolerance for spectral shifts. Neurobiol Learn Mem. 2015;118:8–19. doi:10.1016/j.nlm.2014.11.001.

Edgar JC, Huang MX, Weisend MP, Sherwood A, Miller GA, Adler LE, Canive JM. Interpreting abnormality: an EEG and MEG study of P50 and the auditory paired-stimulus paradigm. Biol Psychol. 2003;65(1):1–20.

Elberling C, Bak C, Kofoed B, Lebech J, Saermark K. Auditory magnetic fields from the human cerebral cortex: location and strength of an equivalent current dipole. Acta Neurol Scand. 1982;65(6):553–69.

Erne SN, Scheer HJ, Hoke M, Pantev C, Lutkenhoner B. Brainstem auditory evoked magnetic fields in response to stimulation with brief tone pulses. Int J Neurosci. 1987;37(3–4):115–25.

Friston K. A theory of cortical responses. Philos Trans R Soc Lond B Biol Sci. 2005;360(1456):815–36. doi:10.1098/rstb.2005.1622.

Fujiki N, Jousmaki V, Hari R. Neuromagnetic responses to frequency-tagged sounds: a new method to follow inputs from each ear to the human auditory cortex during binaural hearing. J Neurosci. 2002;22(3):RC205.

Furl N, Kumar S, Alter K, Durrant S, Shawe-Taylor J, Griffiths TD. Neural prediction of higher-order auditory sequence statistics. NeuroImage. 2011;54(3):2267–77. doi:10.1016/j.neuroimage.2010.10.038.

Gilley PM, Sharma A, Dorman M, Martin K. Developmental changes in refractoriness of the cortical auditory evoked potential. Clin Neurophysiol: Off J Int Fed Clin Neurophysiol. 2005;116(3):648–57. doi:10.1016/j.clinph.2004.09.009.

Gutschalk A, Dykstra AR. Functional imaging of auditory scene analysis. Hear Res. 2014;307:98–110. doi:10.1016/j.heares.2013.08.003.

Hari R, Pelizzone M, Makela JP, Hallstrom J, Leinonen L, Lounasmaa OV. Neuromagnetic responses of the human auditory cortex to on- and offsets of noise bursts. Audiology. 1987;26(1):31–43.

Herholz SC, Lappe C, Knief A, Pantev C. Neural basis of music imagery and the effect of musical expertise. Eur J Neurosci. 2008;28(11):2352–60. doi:10.1111/j.1460-9568.2008.06515.x.

Hirano Y, Hirano S, Maekawa T, Obayashi C, Oribe N, Monji A, Kasai K, Kanba S, Onitsuka T. Auditory gating deficit to human voices in schizophrenia: a MEG study. Schizophr Res. 2010;117(1):61–7. doi:10.1016/j.schres.2009.09.003.

Hirano Y, Onitsuka T, Kuroki T, Matsuki Y, Hirano S, Maekawa T, Kanba S. Auditory sensory gating to the human voice: a preliminary MEG study. Psychiatry Res. 2008;163(3):260–9. doi:10.1016/j.pscychresns.2007.07.002.

Holst M, Eswaran H, Lowery C, Murphy P, Norton J, Preissl H. Development of auditory evoked fields in human fetuses and newborns: a longitudinal MEG study. Clin Neurophysiol: Off J Int Fed Clin Neurophysiol. 2005;116(8):1949–55. doi:10.1016/j.clinph.2005.04.008.

Hong LE, Summerfelt A, Wonodi I, Adami H, Buchanan RW, Thaker GK. Independent domains of inhibitory gating in schizophrenia and the effect of stimulus interval. Am J Psychiatry. 2007;164(1):61–5. doi:10.1176/appi.ajp.164.1.61.

Huang MX, Edgar JC, Thoma RJ, Hanlon FM, Moses SN, Lee RR, Paulson KM, Weisend MP, Irwin JG, Bustillo JR, Adler LE, Miller GA, Canive JM. Predicting EEG responses using MEG sources in superior temporal gyrus reveals source asynchrony in patients with schizophrenia. Clin Neurophysiol: Off J Int Fed Clin Neurophysiol. 2003;114(5):835–50.

Inui K, Tsuruhara A, Nakagawa K, Nishihara M, Kodaira M, Motomura E, Kakigi R. Prepulse inhibition of change-related P50m no correlation with P50m gating. Springerplus. 2013;2:588. doi:10.1186/2193-1801-2-588.

Jaaskelainen IP, Ahveninen J, Bonmassar G, Dale AM, Ilmoniemi RJ, Levanen S, Lin FH, May P, Melcher J, Stufflebeam S, Tiitinen H, Belliveau JW. Human posterior auditory cortex gates novel sounds to consciousness. Proc Natl Acad Sci U S A. 2004;101(17):6809–14. doi:10.1073/pnas.0303760101.

Javitt DC, Steinschneider M, Schroeder CE, Arezzo JC. Role of cortical N-methyl-D-aspartate receptors in auditory sensory memory and mismatch negativity generation: implications for schizophrenia. Proc Natl Acad Sci U S A. 1996;93(21):11962–7.

Kaga K, Kurauchi T, Yumoto M, Uno A. Middle-latency auditory-evoked magnetic fields in patients with auditory cortex lesions. Acta Otolaryngol. 2004;124(4):376–80.

Kandaswamy R, McQuillin A, Sharp SI, Fiorentino A, Anjorin A, Blizard RA, Curtis D, Gurling HM. Genetic association, mutation screening, and functional analysis of a Kozak sequence variant in the metabotropic glutamate receptor 3 gene in bipolar disorder. JAMA Psychiatry. 2013;70(6):591–8. doi:10.1001/jamapsychiatry.2013.38.

Kasai K, Asada T, Yumoto M, Takeya J, Matsuda H. Evidence for functional abnormality in the right auditory cortex during musical hallucinations. Lancet. 1999;354(9191):1703–4. doi:10.1016/S0140-6736(99)05213-7.

Kasai K, Hashimoto O, Kawakubo Y, Yumoto M, Kamio S, Itoh K, Koshida I, Iwanami A, Nakagome K, Fukuda M, Yamasue H, Yamada H, Abe O, Aoki S, Kato N. Delayed automatic detection of change in speech sounds in adults with autism: a magnetoencephalographic study. Clin Neurophysiol: Off J Int Fed Clin Neurophysiol. 2005;116(7):1655–64. doi:10.1016/j.clinph.2005.03.007.

Kasai K, Yamada H, Kamio S, Nakagome K, Iwanami A, Fukuda M, Yumoto M, Itoh K, Koshida I, Abe O, Kato N. Neuromagnetic correlates of impaired automatic categorical perception of speech sounds in schizophrenia. Schizophr Res. 2003;59(2–3):159–72.

Kawakubo Y, Suga M, Tochigi M, Yumoto M, Itoh K, Sasaki T, Kano Y, Kasai K. Effects of metabotropic glutamate receptor 3 genotype on phonetic mismatch negativity. PLoS One. 2011;6(10):e24929. doi:10.1371/journal.pone.0024929.

Kikuchi Y, Ogata K, Umesaki T, Yoshiura T, Kenjo M, Hirano Y, Okamoto T, Komune S, Tobimatsu S. Spatiotemporal signatures of an abnormal auditory system in stuttering. NeuroImage. 2011;55(3):891–9. doi:10.1016/j.neuroimage.2010.12.083.

Kumaran D, Maguire EA. Match mismatch processes underlie human hippocampal responses to associative novelty. J Neurosci. 2007;27(32):8517–24. doi:10.1523/JNEUROSCI.1677-07.2007.

Lee AK, Larson E, Maddox RK, Shinn-Cunningham BG. Using neuroimaging to understand the cortical mechanisms of auditory selective attention. Hear Res. 2014;307:111–20. doi:10.1016/j.heares.2013.06.010.

Lutkenhoner B, Krumbholz K, Lammertmann C, Seither-Preisler A, Steinstrater O, Patterson RD. Localization of primary auditory cortex in humans by magnetoencephalography. NeuroImage. 2003;18(1):58–66.

Markov AA. Extension of the limit theorems of probability theory to a sum of variables connected in a chain, Markov Chains, vol. 1. Wiley; 1971.

Mates J, Muller U, Radil T, Poppel E. Temporal integration in sensorimotor synchronization. J Cogn Neurosci. 1994;6(4):332–40. doi:10.1162/jocn.1994.6.4.332.

Mathiak K, Ackermann H, Rapp A, Mathiak KA, Shergill S, Riecker A, Kircher TT. Neuromagnetic oscillations and hemodynamic correlates of P50 suppression in schizophrenia. Psychiatry Res. 2011;194(1):95–104. doi:10.1016/j.pscychresns.2011.01.001.

Muhlnickel W, Elbert T, Taub E, Flor H. Reorganization of auditory cortex in tinnitus. Proc Natl Acad Sci U S A. 1998;95(17):10340–3.

Naatanen R, Astikainen P, Ruusuvirta T, Huotilainen M. Automatic auditory intelligence: an expression of the sensory-cognitive core of cognitive processes. Brain Res Rev. 2010;64(1):123–36. doi:10.1016/j.brainresrev.2010.03.001.

Naatanen R, Gaillard AW, Mantysalo S. Early selective-attention effect on evoked potential reinterpreted. Acta Psychol (Amst). 1978;42(4):313–29.

Naatanen R, Shiga T, Asano S, Yabe H. Mismatch negativity (MMN) deficiency: a break-through biomarker in predicting psychosis onset. Int J Psychophysiol. 2015;95(3):338–44. doi:10.1016/j.ijpsycho.2014.12.012.

Nagamoto HT, Adler LE, Waldo MC, Freedman R. Sensory gating in schizophrenics and normal controls: effects of changing stimulation interval. Biol Psychiatry. 1989;25(5):549–61.

Oda Y, Onitsuka T, Tsuchimoto R, Hirano S, Oribe N, Ueno T, Hirano Y, Nakamura I, Miura T, Kanba S. Gamma band neural synchronization deficits for auditory steady state responses in bipolar disorder patients. PLoS One. 2012;7(7):e39955. doi:10.1371/journal.pone.0039955.

Otsuka A, Yumoto M, Kuriki S, Hotehama T, Nakagawa S. Frequency characteristics of neuromagnetic auditory steady-state responses to sinusoidally amplitude-modulated sweep tones. Clin Neurophysiol: Off J Int Fed Clin Neurophysiol. 2015. doi:10.1016/j.clinph.2015.05.002 (in press).

Pantev C, Hoke M, Lutkenhoner B, Lehnertz K, Kumpf W. Tinnitus remission objectified by neuromagnetic measurements. Hear Res. 1989;40(3):261–4.

Paraskevopoulos E, Kuchenbuch A, Herholz SC, Pantev C. Statistical learning effects in musicians and non-musicians: an MEG study. Neuropsychologia. 2012;50(2):341–9. doi:10.1016/j.neuropsychologia.2011.12.007.

Pekkonen E, Jousmaki V, Kononen M, Reinikainen K, Partanen J. Auditory sensory memory impairment in Alzheimer’s disease: an event-related potential study. Neuroreport. 1994;5(18):2537–40.

Picton TW, John MS, Dimitrijevic A, Purcell D. Human auditory steady-state responses. Int J Audiol. 2003;42(4):177–219.

Popov T, Jordanov T, Weisz N, Elbert T, Rockstroh B, Miller GA. Evoked and induced oscillatory activity contributes to abnormal auditory sensory gating in schizophrenia. NeuroImage. 2011;56(1):307–14. doi:10.1016/j.neuroimage.2011.02.016.

Poppel E. Pre-semantically defined temporal windows for cognitive processing. Philos Trans R Soc Lond B Biol Sci. 2009;364(1525):1887–96. doi:10.1098/rstb.2009.0015.

Reber AS. Implicit learning of artificial grammars. J Verbal Learn Verbal Behav. 1967;6:855–63.

Recasens M, Leung S, Grimm S, Nowak R, Escera C. Repetition suppression and repetition enhancement underlie auditory memory-trace formation in the human brain: an MEG study. NeuroImage. 2015;108:75–86. doi:10.1016/j.neuroimage.2014.12.031.

Roberts TP, Cannon KM, Tavabi K, Blaskey L, Khan SY, Monroe JF, Qasmieh S, Levy SE, Edgar JC. Auditory magnetic mismatch field latency: a biomarker for language impairment in autism. Biol Psychiatry. 2011;70(3):263–9. doi:10.1016/j.biopsych.2011.01.015.

Roberts TP, Khan SY, Blaskey L, Dell J, Levy SE, Zarnow DM, Edgar JC. Developmental correlation of diffusion anisotropy with auditory-evoked response. Neuroreport. 2009;20(18):1586–91. doi:10.1097/WNR.0b013e3283306854.

Roberts TP, Lanza MR, Dell J, Qasmieh S, Hines K, Blaskey L, Zarnow DM, Levy SE, Edgar JC, Berman JI. Maturational differences in thalamocortical white matter microstructure and auditory evoked response latencies in autism spectrum disorders. Brain Res. 2013;1537:79–85. doi:10.1016/j.brainres.2013.09.011.

Roberts TP, Schmidt GL, Egeth M, Blaskey L, Rey MM, Edgar JC, Levy SE. Electrophysiological signatures: magnetoencephalographic studies of the neural correlates of language impairment in autism spectrum disorders. Int J Psychophysiol. 2008;68(2):149–60. doi:10.1016/j.ijpsycho.2008.01.012.

Rojas DC, Teale P, Sheeder J, Simon J, Reite M. Sex-specific expression of Heschl’s gyrus functional and structural abnormalities in paranoid schizophrenia. Am J Psychiatry. 1997;154(12):1655–62.

Saarinen J, Paavilainen P, Schroger E, Tervaniemi M, Naatanen R. Representation of abstract attributes of auditory stimuli in the human brain. Neuroreport. 1992;3(12):1149–51.

Saffran JR, Aslin RN, Newport EL. Statistical learning by 8-month-old infants. Science. 1996;274(5294):1926–8.

Salmelin R, Schnitzler A, Schmitz F, Freund HJ. Single word reading in developmental stutterers and fluent speakers. Brain. 2000;123(Pt 6):1184–202.

Salmelin R, Schnitzler A, Schmitz F, Jancke L, Witte OW, Freund HJ. Functional organization of the auditory cortex is different in stutterers and fluent speakers. Neuroreport. 1998;9(10):2225–9.

Schmidt GL, Rey MM, Oram Cardy JE, Roberts TP. Absence of M100 source asymmetry in autism associated with language functioning. Neuroreport. 2009;20(11):1037–41. doi:10.1097/WNR.0b013e32832e0ca7.

Schwartze M, Tavano A, Schroger E, Kotz SA. Temporal aspects of prediction in audition: cortical and subcortical neural mechanisms. Int J Psychophysiol. 2012;83(2):200–7. doi:10.1016/j.ijpsycho.2011.11.003.

Shelley AM, Ward PB, Catts SV, Michie PT, Andrews S, McConaghy N. Mismatch negativity: an index of a preattentive processing deficit in schizophrenia. Biol Psychiatry. 1991;30(10):1059–62.

Shimano S, Onitsuka T, Oribe N, Maekawa T, Tsuchimoto R, Hirano S, Ueno T, Hirano Y, Miura T, Kanba S. Preattentive dysfunction in patients with bipolar disorder as revealed by the pitch-mismatch negativity: a magnetoencephalography (MEG) study. Bipolar Disord. 2014;16(6):592–9. doi:10.1111/bdi.12208.

Squires NK, Squires KC, Hillyard SA. Two varieties of long-latency positive waves evoked by unpredictable auditory stimuli in man. Electroencephalogr Clin Neurophysiol. 1975;38(4):387–401.

Takei Y, Kumano S, Maki Y, Hattori S, Kawakubo Y, Kasai K, Fukuda M, Mikuni M. Preattentive dysfunction in bipolar disorder: a MEG study using auditory mismatch negativity. Prog Neuropsychopharmacol Biol Psychiatry. 2010;34(6):903–12. doi:10.1016/j.pnpbp.2010.04.014.

Thoma RJ, Hanlon FM, Moses SN, Edgar JC, Huang M, Weisend MP, Irwin J, Sherwood A, Paulson K, Bustillo J, Adler LE, Miller GA, Canive JM. Lateralization of auditory sensory gating and neuropsychological dysfunction in schizophrenia. Am J Psychiatry. 2003;160(9):1595–605.

Thoma RJ, Hanlon FM, Petropoulos H, Miller GA, Moses SN, Smith A, Parks L, Lundy SL, Sanchez NM, Jones A, Huang M, Weisend MP, Canive JM. Schizophrenia diagnosis and anterior hippocampal volume make separate contributions to sensory gating. Psychophysiology. 2008;45(6):926–35. doi:10.1111/j.1469-8986.2008.00692.x.

Tsuchimoto R, Kanba S, Hirano S, Oribe N, Ueno T, Hirano Y, Nakamura I, Oda Y, Miura T, Onitsuka T. Reduced high and low frequency gamma synchronization in patients with chronic schizophrenia. Schizophr Res. 2011;133(1–3):99–105. doi:10.1016/j.schres.2011.07.020.

Walla P, Mayer D, Deecke L, Thurner S. The lack of focused anticipation of verbal information in stutterers: a magnetoencephalographic study. NeuroImage. 2004;22(3):1321–7. doi:10.1016/j.neuroimage.2004.03.029.

Weiland BJ, Boutros NN, Moran JM, Tepley N, Bowyer SM. Evidence for a frontal cortex role in both auditory and somatosensory habituation: a MEG study. NeuroImage. 2008;42(2):827–35. doi:10.1016/j.neuroimage.2008.05.042.

Widmann A, Kujala T, Tervaniemi M, Kujala A, Schroger E. From symbols to sounds: visual symbolic information activates sound representations. Psychophysiology. 2004;41(5):709–15. doi:10.1111/j.1469-8986.2004.00208.x.

Wilson B, Smith K, Petkov CI. Mixed-complexity artificial grammar learning in humans and macaque monkeys: evaluating learning strategies. Eur J Neurosci. 2015;41(5):568–78. doi:10.1111/ejn.12834.

Winkler I, Denham S, Mill R, Bohm TM, Bendixen A. Multistability in auditory stream segregation: a predictive coding view. Philos Trans R Soc Lond B Biol Sci. 2012;367(1591):1001–12. doi:10.1098/rstb.2011.0359.

Yamasue H, Yamada H, Yumoto M, Kamio S, Kudo N, Uetsuki M, Abe O, Fukuda R, Aoki S, Ohtomo K, Iwanami A, Kato N, Kasai K. Abnormal association between reduced magnetic mismatch field to speech sounds and smaller left planum temporale volume in schizophrenia. NeuroImage. 2004;22(2):720–7. doi:10.1016/j.neuroimage.2004.01.042.

Yaron A, Hershenhoren I, Nelken I. Sensitivity to complex statistical regularities in rat auditory cortex. Neuron. 2012;76(3):603–15. doi:10.1016/j.neuron.2012.08.025.

Yoshimura Y, Kikuchi M, Ueno S, Shitamichi K, Remijn GB, Hiraishi H, Hasegawa C, Furutani N, Oi M, Munesue T, Tsubokawa T, Higashida H, Minabe Y. A longitudinal study of auditory evoked field and language development in young children. NeuroImage. 2014;101:440–7. doi:10.1016/j.neuroimage.2014.07.034.

Yumoto M, Matsuda M, Itoh K, Uno A, Karino S, Saitoh O, Kaneko Y, Yatomi Y, Kaga K. Auditory imagery mismatch negativity elicited in musicians. Neuroreport. 2005;16(11):1175–8.

Yumoto M, Ogata E, Mizuochi T, Karino S, Kuan C, Itoh K, Yatomi Y. A motor-auditory cross-modal oddball paradigm revealed top-down modulation of auditory perception in a predictive context. Int Congr Ser. 2007;1300:93–6.

Yumoto M, Uno A, Itoh K, Karino S, Saitoh O, Kaneko Y, Yatomi Y, Kaga K. Audiovisual phonological mismatch produces early negativity in auditory cortex. Neuroreport. 2005;16(8):803–6.

Copyright information

© 2016 Springer Japan

About this chapter

Cite this chapter

Yumoto, M., Daikoku, T. (2016). Basic Function. In: Tobimatsu, S., Kakigi, R. (eds) Clinical Applications of Magnetoencephalography. Springer, Tokyo. https://doi.org/10.1007/978-4-431-55729-6_5

DOI: https://doi.org/10.1007/978-4-431-55729-6_5

Publisher Name: Springer, Tokyo

Print ISBN: 978-4-431-55728-9

Online ISBN: 978-4-431-55729-6

eBook Packages: Medicine, Medicine (R0)