Abstract

This paper proposes an adaptive tracking method that combines background weighting with a color-texture histogram within the mean shift framework to achieve accurate tracking in complex scenes and against backgrounds similar to the target. Experimental results show that the proposed method handles complex backgrounds and occlusion better than the traditional mean shift algorithm and the corrected background-weighted mean shift algorithm, while retaining good computational efficiency.

1 Introduction

Moving target tracking has long been an active topic in computer vision, and a variety of tracking algorithms have emerged [1, 2]. The mean shift (MS) algorithm [3] was first applied to pattern recognition and image segmentation by Cheng [4] in 1995 and has since been widely used in object tracking owing to its easy implementation, fast iteration, and small number of tuning parameters. However, the mean shift algorithm describes the target with a single color histogram, and the rectangular target template contains background information, so the target features cannot be accurately distinguished and described in complex scenes.

To improve tracking accuracy in complex scenes, Comaniciu et al. [5] proposed the background-weighted histogram MS (BWH-MS) algorithm, which integrates local background information into the target color histogram to reduce the influence of the background. Ning et al. [6] proved that BWH-MS does not actually introduce the background information into the target model and proposed the corrected background-weighted histogram (CBWH) MS algorithm, which improves robustness in complex scenes.

Texture is a stable feature that can improve tracking robustness because it is not affected by illumination and background color. The local binary pattern (LBP) descriptor [7] and its variants [8], such as the center-symmetric local binary pattern (CS-LBP) and the local ternary pattern (LTP), have been applied to target tracking owing to their strong descriptive ability and efficient texture feature extraction [9]. Ref. [10] combined the target texture with the color histogram and improved tracking capability. Although these adaptive tracking algorithms based on color, texture, and mean shift improve tracking performance in complex scenes to some extent, they do not consider background interference.

In this paper, an improved tracking method is proposed to address these problems. It describes the target with a color histogram combined with LBP texture and incorporates background weighting based on the CBWH-MS algorithm. Experiments show that the improved method not only tracks more accurately under heavy background noise but is also more robust in complex scenes, with good computational efficiency.

2 Mean Shift Tracking

In mean shift tracking, a rectangle containing the object of interest is selected with the mouse in the first frame; this rectangle is called the target area. The target model is established by computing the probability of each feature value in the feature space over all pixels in the target area. In subsequent frames, a candidate target model is established in each possible candidate region. The Bhattacharyya coefficient between the target model and each candidate model is then measured, and the position that maximizes the Bhattacharyya coefficient is taken as the current target position, which achieves tracking.

2.1 Target Model

The target model based on a weighted kernel function is expressed as:

\( \hat{q}_{u} = C\sum\nolimits_{i = 1}^{n} {k\left( {\left\| {\frac{{y_{0} - x_{i} }}{h}} \right\|^{2} } \right)\delta \left[ {b(x_{i} ) - u} \right]} ,\quad u = 1, \ldots ,m \)  (1)

where \( \hat{q}_{u} \) is the value of histogram component u; m is the number of components, generally 16 or 32; n is the number of pixels in the target area; \( y_{0} \) is the center of the target area; h is the bandwidth of the kernel profile \( k(x) \); \( \delta (x) \) is the Kronecker delta function; \( b(x_{i} ) \) is the histogram color index of pixel \( x_{i} \); and C is a normalization constant. The constraint \( \sum\nolimits_{u = 1}^{m} {\hat{q}_{u} } = 1 \) gives \( C = 1/\sum\nolimits_{i = 1}^{n} {k\left( {\left\| {\frac{{y_{0} - x_{i} }}{h}} \right\|^{2} } \right)} \).
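As an illustration of the kernel-weighted target model described above, the following minimal sketch builds a normalized histogram in which pixels near the region center count more than pixels near the border. The Epanechnikov profile, the function names, and the `bin_of` callback are assumptions made for this example; the paper does not specify the kernel.

```python
def epanechnikov_profile(r2):
    """Assumed kernel profile k(r2): decreases with the squared
    normalized distance r2 and is zero outside the unit disk."""
    return 1.0 - r2 if r2 <= 1.0 else 0.0


def kernel_histogram(pixels, center, h, m, bin_of):
    """Kernel-weighted histogram normalized to sum to 1.

    pixels : list of ((x, y), value) pairs inside the region
    center : (x, y) center of the region (y0 in the text)
    h      : kernel bandwidth
    m      : number of histogram bins
    bin_of : hypothetical helper mapping a pixel value to a bin in [0, m)
    """
    hist = [0.0] * m
    for (x, y), value in pixels:
        r2 = ((x - center[0]) ** 2 + (y - center[1]) ** 2) / (h * h)
        hist[bin_of(value)] += epanechnikov_profile(r2)
    total = sum(hist)  # equals 1/C in the normalization constraint
    return [v / total for v in hist] if total > 0 else hist


# Two pixels, two gray-level bins; the pixel at the center weighs more.
q = kernel_histogram([((0, 0), 10), ((1, 0), 200)],
                     center=(0, 0), h=2.0, m=2,
                     bin_of=lambda v: v // 128)
```

The same routine can serve for the candidate model by passing the candidate center instead of the target center.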

2.2 Candidate Target Model

The probability density function of the candidate target model for a candidate region centered at y is expressed as:

\( \hat{p}_{u} (y) = C_{h} \sum\nolimits_{i = 1}^{{n_{k} }} {k\left( {\left\| {\frac{{y - x_{i} }}{h}} \right\|^{2} } \right)\delta \left[ {b(x_{i} ) - u} \right]} ,\quad u = 1, \ldots ,m \)  (2)

where \( n_{k} \) is the number of pixels in the candidate region and \( C_{h} = 1/\sum\nolimits_{i = 1}^{{n_{k} }} {k\left( {\left\| {\frac{{y - x_{i} }}{h}} \right\|^{2} } \right)} \) is the normalization constant determined by the constraint \( \sum\nolimits_{u = 1}^{m} {\hat{p}_{u} } = 1 \).

2.3 Similarity Measure Between Target Model and Candidate Models

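To determine the target location in a new frame, the similarity between the target histogram and the candidate histogram is usually measured by the Bhattacharyya coefficient: \( \hat{\rho }(y) = \sum\nolimits_{u = 1}^{m} {\sqrt {\hat{p}_{u} (y)\hat{q}_{u} } } \). A Taylor expansion around \( \hat{p}_{u} (y_{0} ) \) gives:

\( \hat{\rho }(y) \approx \frac{1}{2}\sum\nolimits_{u = 1}^{m} {\sqrt {\hat{p}_{u} (y_{0} )\hat{q}_{u} } } + \frac{{C_{h} }}{2}\sum\nolimits_{i = 1}^{{n_{k} }} {w_{i} k\left( {\left\| {\frac{{y - x_{i} }}{h}} \right\|^{2} } \right)} \)  (3)

where the weighting factor is:

\( w_{i} = \sum\nolimits_{u = 1}^{m} {\sqrt {\frac{{\hat{q}_{u} }}{{\hat{p}_{u} (y_{0} )}}} \,\delta \left[ {b(x_{i} ) - u} \right]} \)  (4)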

The candidate model is computed for different candidate regions in the current frame, and the candidate region with the largest Bhattacharyya coefficient gives the position of the target. The MS algorithm thus searches the new frame for the candidate position that maximizes the Bhattacharyya coefficient.
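The similarity measure and the resulting position update can be sketched as follows. This is a minimal illustration, not the authors' implementation: the weighted-centroid update assumes a kernel whose profile has a constant derivative inside the window (for example the Epanechnikov kernel), and all function names are hypothetical.

```python
import math


def bhattacharyya(p, q):
    """Bhattacharyya coefficient between two normalized histograms."""
    return sum(math.sqrt(pu * qu) for pu, qu in zip(p, q))


def pixel_weights(bins, p, q):
    """Weight of each pixel: sqrt(q_u / p_u) for the bin u the pixel
    falls into; bins that are empty in the candidate get weight 0."""
    return [math.sqrt(q[u] / p[u]) if p[u] > 0 else 0.0 for u in bins]


def mean_shift_step(positions, weights, current):
    """Move to the weighted centroid of the pixel positions.
    Assumes a constant kernel derivative inside the window."""
    total = sum(weights)
    if total == 0:
        return current  # no usable evidence: stay in place
    x = sum(w * px for (px, _), w in zip(positions, weights)) / total
    y = sum(w * py for (_, py), w in zip(positions, weights)) / total
    return (x, y)


# Identical histograms give the maximum similarity of 1.
rho_same = bhattacharyya([0.5, 0.5], [0.5, 0.5])
# The pixel with the larger weight pulls the new center toward itself.
new_center = mean_shift_step([(0, 0), (2, 0)], [1.0, 3.0], (1, 0))
```

Repeating the step until the center stops moving climbs toward a local maximum of the Bhattacharyya coefficient rather than searching every candidate region exhaustively.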

3 Background-Weighted Color-Texture Histogram Tracking

3.1 LBP Texture

The LBP feature is an efficient local texture descriptor. Because it is invariant to monotonic gray-scale changes and can be extended to be rotation invariant, it has been widely used in texture classification, feature recognition, and other fields. LBP compares the gray value of each pixel in the image with those of its neighboring pixels and uses the binary pattern formed by the comparison results to describe the texture. It is calculated as:

\( {\text{LBP}}_{P,R} (x_{c} ,y_{c} ) = \sum\nolimits_{p = 0}^{P - 1} {s(g_{p} - g_{c} )2^{p} } \)  (5)

where \( g_{c} \) is the gray value of the center pixel \( \left( {x_{c} ,y_{c} } \right) \); R is the distance between the center pixel and its neighbors; P is the number of pixels in the neighborhood; and \( g_{p} \) are the gray values of the P pixels in the neighborhood of radius R centered at \( \left( {x_{c} ,y_{c} } \right) \). The function \( s(x) \) is defined as:

\( s(x) = \begin{cases} 1, & x \ge 0 \\ 0, & x < 0 \end{cases} \)  (6)

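However, the LBP obtained from formula (5) is not rotation invariant, so it is not suitable for modeling a target whose pose changes. To address this problem, Ref. [11] gives a rotation-invariant uniform-pattern extension of LBP:

\( {\text{LBP}}_{P,R}^{riu2} = \begin{cases} \sum\nolimits_{p = 0}^{P - 1} {s(g_{p} - g_{c} )} , & U({\text{LBP}}_{P,R} ) \le 2 \\ P + 1, & {\text{otherwise}} \end{cases} \)  (7)

where:

\( U({\text{LBP}}_{P,R} ) = \left| {s(g_{P - 1} - g_{c} ) - s(g_{0} - g_{c} )} \right| + \sum\nolimits_{p = 1}^{P - 1} {\left| {s(g_{p} - g_{c} ) - s(g_{p - 1} - g_{c} )} \right|} \)  (8)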

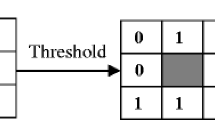
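The basic LBP code and its rotation-invariant uniform variant can be illustrated with a short sketch for P = 8, R = 1. As a simplifying assumption, the eight neighbors are read directly from the 3×3 neighborhood instead of being interpolated on a circle, and the function names are hypothetical.

```python
# Neighbor offsets (d_row, d_col) in circular order around the center.
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1),
           (0, -1), (1, -1), (1, 0), (1, 1)]


def lbp_bits(img, r, c):
    """Threshold the 8 neighbors of img[r][c] against the center:
    1 if the neighbor is >= the center gray value, else 0."""
    gc = img[r][c]
    return [1 if img[r + dr][c + dc] >= gc else 0 for dr, dc in OFFSETS]


def lbp_code(bits):
    """Basic LBP: weight bit p by 2**p (not rotation invariant)."""
    return sum(b << p for p, b in enumerate(bits))


def uniformity(bits):
    """Number of 0/1 transitions along the circular pattern.
    For p = 0 the index p - 1 wraps around to the last bit."""
    return sum(abs(bits[p] - bits[p - 1]) for p in range(len(bits)))


def lbp_riu2(bits):
    """Rotation-invariant uniform LBP: count of 1 bits for uniform
    patterns (at most 2 transitions), P + 1 for all others."""
    return sum(bits) if uniformity(bits) <= 2 else len(bits) + 1


# A horizontal edge: bright row above, dark pixels beside and below.
edge = [[9, 9, 9],
        [1, 5, 1],
        [1, 1, 1]]
bits = lbp_bits(edge, 1, 1)
```

Rotating the edge changes `lbp_code(bits)` but leaves `lbp_riu2(bits)` unchanged, which is the property exploited when the target pose varies.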

3.2 Combined Color-Texture Histogram

According to this uniform-pattern definition, a corresponding \( {\text{LBP}}_{P,R}^{riu2} \) value can be calculated for each pixel of the target object; when P = 8 and R = 1, it ranges from 0 to 9. The texture histogram of the target object can then be calculated, and the three color channels R, G, and B can be combined with the one-dimensional texture channel to define a joint color-texture histogram. However, experimental results show that this direct combination does not improve tracking performance compared with the color histogram alone; in particular, it discriminates poorly when the target is similar to the background. To build a joint color-texture histogram that better separates the background and target regions and reduces interference from flat image areas, Ref. [8] retains only five of the nine uniform patterns. In addition, to enhance the noise immunity of LBP in flat areas, an interference factor r is added: the greater the absolute value of r, the greater the tolerance to gray-value fluctuations. This paper therefore uses the following definition:

This texture description considers only pixels whose neighborhoods change sharply and eliminates most flat regions of the background and target area, which makes up for the deficiencies of the color histogram. Combining the three color channels R, G, and B with \( {\text{LBP}}_{8,1}^{riu2} \), the joint color-texture histogram is defined as:

where \( {\text{bin}}(x_{i} ) \) is the bin of the color-texture histogram to which pixel \( x_{i} \) belongs; \( \delta (x) \) is the Kronecker delta function; and C is a normalization constant. The constraint \( \sum\nolimits_{u = 1}^{m} {q_{u} } = 1 \) gives:

For the histogram dimensions, the algorithm uses \( m = 8 \times 8 \times 8 \times 5 \): the first three dimensions represent the three color channels R, G, and B, and the fourth dimension represents the five selected texture patterns of \( {\text{LBP}}_{8,1}^{riu2} \).
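One possible way to map a pixel to a bin of the 8 × 8 × 8 × 5 joint histogram is sketched below. The ordering of the four dimensions and the assumption that the retained texture pattern has already been mapped to an index t in 0..4 are choices made for this example, not details given in the paper.

```python
COLOR_BINS = 8     # bins per color channel
TEXTURE_BINS = 5   # retained texture patterns


def joint_bin(r, g, b, t):
    """Flatten (R, G, B, texture) into one index in [0, 8*8*8*5).

    r, g, b : 8-bit channel values (0..255)
    t       : texture pattern index, assumed already mapped to 0..4
    """
    step = 256 // COLOR_BINS  # 32 gray levels per color bin
    color = ((r // step) * COLOR_BINS + g // step) * COLOR_BINS + b // step
    return color * TEXTURE_BINS + t


m = COLOR_BINS ** 3 * TEXTURE_BINS  # total number of bins
```

With this layout the histogram has 2560 bins, so it remains small enough to recompute for every candidate region.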

3.3 The Probability Distribution of Color-Texture Feature Based on Background Weights

Because the target is usually represented by a rectangular region, it inevitably contains some background information. To reduce the interference of this background information, this paper introduces background weights into the color-texture description and redefines the target model and the candidate target model:

New target model:

New candidate target model:

where \( \left\{ {v_{u} = \min \left( {\hat{o}^{ * } /\hat{o}_{u} ,1} \right)} \right\}_{u = 1, \ldots ,m} \) are the background weights; \( \left\{ {\hat{o}_{u} } \right\}_{u = 1, \ldots ,m} \left( {\sum\nolimits_{u = 1}^{m} {\hat{o}_{u} } = 1} \right) \) is the background model, computed over a background region three times the size of the target region; and \( \hat{o}^{ * } \) is the minimum nonzero value of \( \left\{ {\hat{o}_{u} } \right\}_{u = 1, \ldots ,m} \). The weight of pixel \( x_{i} \) in the candidate target area is calculated from the background-weighted histogram as:
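The background weights can be computed directly from the background histogram, as the following sketch shows. Treating bins that are empty in the background as weight 1, and applying the weights by multiplying and renormalizing, are assumptions of this example; the function names are hypothetical.

```python
def background_weights(o):
    """v_u = min(o_star / o_u, 1), where o_star is the smallest
    nonzero background bin. Bins absent from the background are
    given weight 1 (assumption: nothing to suppress there)."""
    positive = [x for x in o if x > 0]
    if not positive:
        return [1.0] * len(o)
    o_star = min(positive)
    return [min(o_star / x, 1.0) if x > 0 else 1.0 for x in o]


def apply_weights(hist, v):
    """Down-weight background-like bins, then renormalize to sum 1."""
    weighted = [h * w for h, w in zip(hist, v)]
    total = sum(weighted)
    return [x / total for x in weighted] if total > 0 else weighted


# Bin 0 dominates the background, so it is suppressed in the target model.
v = background_weights([0.5, 0.25, 0.25, 0.0])
q_weighted = apply_weights([0.5, 0.5, 0.0, 0.0], v)
```

Features that are prominent in the background receive small weights, so they contribute less to localization.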

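In the first frame, the target is initialized to obtain its initial position and the background feature model \( \left\{ {\hat{o}_{u} } \right\}_{u = 1, \ldots ,m} \left( {\sum\nolimits_{u = 1}^{m} {\hat{o}_{u} } = 1} \right) \). In each subsequent frame, the background features \( \left\{ {\hat{o}_{u}^{{\prime }} } \right\}_{u = 1, \ldots ,m} \) and weights \( \left\{ {v_{u}^{{\prime }} } \right\}_{u = 1, \ldots ,m} \) of the current frame are computed, and the Bhattacharyya coefficient between \( \left\{ {\hat{o}_{u}^{{\prime }} } \right\}_{u = 1, \ldots ,m} \) and the previous background model \( \left\{ {\hat{o}_{u} } \right\}_{u = 1, \ldots ,m} \) is calculated as:

\( \rho = \sum\nolimits_{u = 1}^{m} {\sqrt {\hat{o}_{u} \hat{o}_{u}^{{\prime }} } } \)  (15)

If \( \rho < \varepsilon \), the background has changed considerably, so the background model is updated:

\( \left\{ {\hat{o}_{u} } \right\}_{u = 1, \ldots ,m} \leftarrow \left\{ {\hat{o}_{u}^{{\prime }} } \right\}_{u = 1, \ldots ,m} ,\quad \left\{ {v_{u} } \right\}_{u = 1, \ldots ,m} \leftarrow \left\{ {v_{u}^{{\prime }} } \right\}_{u = 1, \ldots ,m} \)  (16)

and the target model is recalculated using \( \left\{ {v_{u}^{{\prime }} } \right\}_{u = 1, \ldots ,m} \) and formula (12).

Otherwise, the background model is not updated.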
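The selective update rule can be sketched as a small decision function. The threshold value used in the example call is an arbitrary placeholder, since the paper does not state it, and the function name is hypothetical.

```python
import math


def maybe_update_background(o_prev, o_curr, eps):
    """Return (background model to keep, whether it was replaced).

    The model is replaced only when the Bhattacharyya coefficient
    between the previous and current background histograms drops
    below eps, i.e. when the background has changed considerably.
    """
    rho = sum(math.sqrt(a * b) for a, b in zip(o_prev, o_curr))
    if rho < eps:
        return o_curr, True
    return o_prev, False


# Completely different background: similarity 0, so the model is replaced.
changed = maybe_update_background([1.0, 0.0], [0.0, 1.0], eps=0.5)
# Unchanged background: similarity 1, so the old model is kept.
kept = maybe_update_background([0.5, 0.5], [0.5, 0.5], eps=0.5)
```

When the function reports a replacement, the background weights and the weighted target model would be recomputed from the new background histogram.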

3.4 Color-Texture and Background-Weighted Histogram MS Algorithm

The procedure of the adaptive algorithm is:

Step 1: Initialize and obtain the initial target position \( y_{0} \). Calculate the target model \( \hat{q} \) and the background model \( \left\{ {\hat{o}_{u} } \right\}_{u = 1, \ldots ,m} \) according to Eq. (10); calculate \( \left\{ {v_{u} } \right\}_{u = 1, \ldots ,m} \) and \( \hat{q}^{{\prime }} \) according to Eq. (12).

Step 2: Set \( k \leftarrow 0 \).

Step 3: Calculate the candidate target model \( \hat{p}\left( {y_{0} } \right) \) in the current frame according to Eq. (13).

Step 4: Calculate the weights \( \omega_{i}^{{\prime }} \) according to Eq. (14).

Step 5: Calculate the new location \( y_{1} \) according to Eq. (3).

Step 6: Set \( d \leftarrow \left\| {y_{1} - y_{0} } \right\| \), \( y_{0} \leftarrow y_{1} \), and \( k \leftarrow k + 1 \). The error threshold is \( \varepsilon_{1} \leftarrow 0.1 \), and the maximum number of iterations is N.

If \( d < \varepsilon_{1} \) or \( k > N \), compute the current background features \( \left\{ {\hat{o}_{u}^{{\prime }} } \right\}_{u = 1, \ldots ,m} \) and \( \left\{ {v_{u}^{{\prime }} } \right\}_{u = 1, \ldots ,m} \); if \( \rho < \varepsilon_{2} \), update the background model, \( \left\{ {\hat{o}_{u} } \right\}_{u = 1, \ldots ,m} \leftarrow \left\{ {\hat{o}_{u}^{{\prime }} } \right\}_{u = 1, \ldots ,m} \) and \( \left\{ {v_{u} } \right\}_{u = 1, \ldots ,m} \leftarrow \left\{ {v_{u}^{{\prime }} } \right\}_{u = 1, \ldots ,m} \), and update the target model \( \left\{ {\hat{q}_{u}^{{\prime }} } \right\}_{u = 1, \ldots ,m} \). Then go to Step 2 to track the next frame.

Otherwise, go to Step 3.
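The per-frame iteration in the procedure above (Steps 2 to 6) can be sketched as a convergence loop. Here `step` is a hypothetical callback standing in for Steps 3 to 5, which recompute the candidate model, the pixel weights, and the new location; the default iteration limit is an arbitrary placeholder for N.

```python
import math


def track_frame(y0, step, eps1=0.1, max_iter=20):
    """Iterate the position update until the shift is below eps1
    or the iteration count exceeds max_iter.

    y0   : (x, y) starting position from the previous frame
    step : hypothetical callback mapping a position to the next one
    Returns the final position and the number of iterations used.
    """
    k = 0
    while True:
        y1 = step(y0)
        d = math.hypot(y1[0] - y0[0], y1[1] - y0[1])
        y0 = y1
        k += 1
        if d < eps1 or k > max_iter:
            return y0, k


# Toy update that halves the distance to the point (4, 2) each time.
def toy_step(y):
    return ((y[0] + 4.0) / 2.0, (y[1] + 2.0) / 2.0)


position, iterations = track_frame((0.0, 0.0), toy_step)
```

After the loop returns, the background check and the optional model update would run once before the next frame is processed.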

4 Results and Discussions

To test the proposed algorithm, several standard test video sequences were selected for tracking experiments, and the results were compared with the traditional mean shift tracking algorithm (MS), which is based only on the color histogram, and with the tracking algorithm based on the background-weighted color histogram (CBWH-MS). In each figure, the rows from top to bottom show the results of MS, CBWH-MS, and the improved algorithm of this paper, respectively.

As shown in Fig. 1, a video of pedestrians passing a pole was selected to test the robustness of the improved algorithm under occlusion. When the target is blocked by the pole, the MS and CBWH-MS algorithms cannot distinguish the target from the occluding environment, which results in poor tracking performance. The improved algorithm tracks well, indicating that it is more robust to occlusion than the other two algorithms.

As shown in Fig. 2, a video of a rapidly shaking toy cat was selected to test the robustness of the improved algorithm in a complex background and its computational efficiency. While the toy cat moves rapidly, the MS algorithm is affected by the complicated background and has difficulty distinguishing the target from the environment. The CBWH-MS method incorporates background information but still tracks slightly worse than the improved algorithm, which additionally fuses texture features. This indicates that the improved algorithm is more robust in complex environments. Its ability to track a fast-moving target also shows that its real-time performance meets the requirements.

Finally, the computational performance of the three algorithms was compared. As shown in Table 1, the proposed method is less efficient than the classic MS algorithm but almost as efficient as the CBWH-MS algorithm, and it meets the real-time requirements.

5 Conclusions

This paper presents an improved MS algorithm based on a color-texture and background-weighted histogram. For feature selection, it blends the traditional RGB color histogram with LBP-based texture features. To reduce the influence of the background on target localization, the background-weighting information is updated selectively to accommodate large background changes and complex environments. Compared with the traditional mean shift tracking algorithm (MS), which is based only on the color histogram, and the tracking algorithm based on the background-weighted color histogram (CBWH-MS), the improved algorithm locates the target more accurately and tracks more effectively in complex scenes, with good computational efficiency.

References

1. Chen DY, Chen ZW (2013) Mean shift robust object tracking based on feature saliency. J Shanghai Jiao Tong Univ 47(11):1807–1812

2. Wang YX, Zhang YJ (2010) Meanshift object tracking through 4-D scale space. J Electron Inf Technol 32(7):1626–1632

3. Fukunaga K, Hostetler L (1975) The estimation of the gradient of a density function with applications in pattern recognition. IEEE Trans Inf Theory 21(1):32–40

4. Cheng Y (1995) Mean shift mode seeking and clustering. IEEE Trans Pattern Anal Mach Intell 17(8):790–799

5. Comaniciu D, Ramesh V, Meer P (2000) Real-time tracking of non-rigid objects using mean shift. In: IEEE Conference on computer vision and pattern recognition, pp 142–149

6. Ning J, Zhang L, Zhang D, Wu C (2012) Robust mean shift tracking with corrected background-weighted histogram. IET Comput Vis 6:62–69

7. Heikkilä M, Pietikäinen M, Schmid C (2009) Description of interest regions with local binary patterns. Pattern Recogn 42(3):425–436

8. Tan X, Triggs B (2010) Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE Trans Image Process 19(6):1635–1650

9. Zhang HY, Hu Z (2014) Mean shift tracking method combining local ternary number with hue information. J Electron Inf Technol 36(3):624–630

10. Dai YM, Wei W, Lin YN (2012) An improved mean-shift tracking algorithm based on color and texture feature. J Zhejiang Univ (Eng Sci) 46(2):212–217

11. Heikkilä M, Pietikäinen M (2006) A texture-based method for modeling the background and detecting moving objects. IEEE Trans Pattern Anal Mach Intell 28(4):657–662

© 2016 Springer-Verlag Berlin Heidelberg

Chen, L., Huang, Q., Pang, L., Su, F. (2016). A Robust Tracking Combined with Texture Feature and Background-Weighted Color Histogram. In: Liang, Q., Mu, J., Wang, W., Zhang, B. (eds) Proceedings of the 2015 International Conference on Communications, Signal Processing, and Systems. Lecture Notes in Electrical Engineering, vol 386. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-49831-6_78

DOI: https://doi.org/10.1007/978-3-662-49831-6_78

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-49829-3

Online ISBN: 978-3-662-49831-6
