Abstract

This Chapter introduces parameterized, or parametric, Model Order Reduction (pMOR). The Sections are offered in a prefered order for reading, but can be read independently. Section 5.1, written by Jorge Fernández Villena, L. Miguel Silveira, Wil H.A. Schilders, Gabriela Ciuprina, Daniel Ioan and Sebastian Kula, overviews the basic principles for pMOR. Due to higher integration and increasing frequency-based effects, large, full Electromagnetic Models (EM) are needed for accurate prediction of the real behavior of integrated passives and interconnects. Furthermore, these structures are subject to parametric effects due to small variations of the geometric and physical properties of the inherent materials and manufacturing process. Accuracy requirements lead to huge models, which are expensive to simulate and this cost is increased when parameters and their effects are taken into account. This Section introduces the framework of pMOR, which aims at generating reduced models for systems depending on a set of parameters.

Section 5.2, written by Gabriela Ciuprina, Alexandra Ştefănescu, Sebastian Kula and Daniel Ioan, provides robust procedures for pMOR. This Section proposes a robust specialized technique to extract reduced parametric compact models, described as parametric SPICE-like netlists, for long interconnects modeled as transmission lines with several field effects such as skin effect and substrate losses. The technique uses an EM formulation based on partial differential equations (PDE), which is discretized to obtain a finite state space model. Next, a variability analysis of the geometrical data is carried out. Finally, a method to extract an equivalent parametric circuit is proposed.

Section 5.3, written by Michael Striebel, Roland Pulch, E. Jan W. ter Maten, Zoran Ilievski, and Wil H.A. Schilders, covers ways to efficiently determine sensitivity of output with respect to parameters. First direct and adjoint techniques are considered with forward and backward time integration, respectively. Here also the use of MOR via POD (Proper Orthogonal Decomposition) is discussed. Next, techniques in Uncertainty Quantification are described. Here pMOR techniques can be used efficiently.

Section 5.4, written by Kasra Mohaghegh, Roland Pulch and E. Jan W. ter Maten, provides a novel way in extending MOR to Differential-Algebraic Systems. Here new MOR techniques for reducing semi-explicit system of DAEs are introduced. These techniques are extendable to all linear DAEs. Especially pMOR techniques are exploited for singularly perturbed systems.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

- Singular Value Decomposition

- Proper Orthogonal Decomposition

- Multiple Input Multiple Output

- Model Order Reduction

- Uncertainty Quantification

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Parametric Model Order Reduction

Model Order Reduction (MOR) techniques are a set of procedures which aim at replacing a large-scale model of a physical system by a lower dimensional model which exhibits similar behavior, typically measured in terms of its input-output response.Footnote 1 Reducing the order or dimension of these models, while guaranteeing that the input-output response is accurately captured, is crucial to enable the simulation and verification of large systems [1–3, 33]. Since the first attempts in this area [31], the methods for linear model reduction have greatly evolved and can be broadly characterized into two types: those that are based on subspace generation and projection methods [13, 27], and those based on balancing techniques [26, 30] (sometimes also referred to as Singular Value Decomposition (SVD)-based [2]). Hybrid techniques that try to combine some of the features of each family have also been presented [18, 19, 21, 29].

Although previously ignored when analyzing or simulating systems, parameter variability can no longer be disregarded as it directly impacts system behavior and performance. Accounting for the effects of manufacturing or operating variability, such as geometric parameters, temperature, etc., leads to parametric models whose complexity must be tackled both during the design and verification phases. For this purpose, Parametric MOR (pMOR, also known as Parameterized MOR) techniques that can handle parameterized descriptions are being considered as essential in the determination of correct system behavior. The systems generated by pMOR procedures must retain the ability to model the effects of both geometric and operating variability, in order to accurately predict behavior and optimize designs.

Several pMOR techniques have been developed for modeling large-scale parameterized systems. Although the first approaches were based on perturbation based techniques, such as [17, 25], the most common and effective ones appear to be extensions of the basic projection-based MOR algorithms [27, 29] to handle parameterized descriptions. An example of these are multiparameter moment-matching pMOR methods [8] which can generate accurate reduced models that capture both frequency and parameter dependence. The idea is to match, via different approaches, generalized moments of the parametric transfer function, and build an overall projector. Sample-based techniques have been proposed in order to contain the large growth in model order for multiparameter, high accuracy systems [28, 37]. They rely on sampling the joint multi-dimensional frequency and parameters space. This approach allows the inclusion of a priori knowledge of the parameter variation, and provides some error estimation. However, the issue of sample selection becomes particularly relevant when done in a potentially high-dimensional space.

1.1 Representation of Parametric Systems

In order to include parametric systems inside an efficient simulation flow, the parametric dependence should be explicit. This means that it must be possible to access the parameter values and modify them inside the same representation, while avoiding, if possible, re-computing the parametric systems, i.e. to perform another extraction.

Parameters usually affect the geometrical or electrical properties of the layout, and thus, most of these variations can be represented as modifications of the values of the system matrices inside a state-space descriptor. For this reason, in most cases, the input and output ports are not affected by these variations (this of course depends on how the system is built), and in the case when they are in fact affected, these variations can be shifted to the inner states. The variability leads to a dependence of the extracted circuit elements on several parameters, of electrical or geometrical origin. This dependence results in a parametric state-space system representation, which in descriptor form can be written as

where \(C,G \in \mathbb{R}^{n\times n}\) are again, respectively, the dynamic and static matrices, \(B \in \mathbb{R}^{n\times p}\) is the matrix that relates the input vector \(u \in \mathbb{R}^{p}\) to the inner states \(x \in \mathbb{R}^{n}\) and \(L \in \mathbb{R}^{q\times n}\) is the matrix that links those inner states to the outputs \(y \in \mathbb{R}^{q}\). The elements of the matrices C and G, as well as the states of the system x, depend on a set of P parameters λ = [λ 1, λ 2, …, λ P ] which model the effects of the mentioned uncertainty. This time-domain descriptor yields a parametric dependent frequency response modeled via the transfer function

for which we seek to generate a reduced order approximation, able to accurately capture the input-output behavior of the system for any point in the parameter space

In general, one attempts to generate a reduced order model whose structure is, as much as possible, similar to the original, i.e. exhibiting a similar parametric dependence. The “de facto” standard used in most of the literature for representing a parametric system is based on a Taylor series expansion with respect to the parameters (shown here for first order in the frequency domain):

where G 0 and C 0 are the nominal values of the matrices, whereas G i and C i are the sensitivities with respect to the parameters. Novel extraction methodologies can efficiently generate such sensitivity information [5, 12].

A nice feature of this representation is that this explicit parameter dependence allows to obtain a reduced, yet similar representation when a projection scheme is applied

where \(\hat{C}_{i} = V ^{T}C_{i}V\), \(\hat{G}_{i} = V ^{T}G_{i}V\), \(\hat{B} = V ^{T}B\) and \(\hat{L} = \mathit{LV }\).

Some questions may be raised about the order neccessary for an accurate representation of the parametric model. This depends on the range of variation and the effect of each parameter, and therefore is not trivial to ascertain.

However, some literature presents interesting results in this area [4, 6], with the conclusion that low order (first order in most cases) Taylor series are a good and useful approximation to the real parametric system. As it will be shown later, this statement has important consequences from the point of view of some parametric algorithms, especially those which rely on moment matching techniques.

1.2 Reduction of Parametric Systems

The most straight-forward approach for the reduction of such a parametric system is to apply nominal techniques. A first possibility is to apply nominal reduction methodologies on the perturbed system. This means that the model in (5.4) is evaluated for a set of parameter values. This model is no longer parametric, and thus standard reduction methodologies can be applied on it. However, once a “perturbed” system is evaluated and reduced, the parameter dependence is lost, and thus the result is a system which is no longer parametric, and therefore only accurate for a set of parameters.

A slightly different approach that overcomes this issue is to apply the projection on the Taylor series approximation. In this case, depending on the framework applied, we can distinguish two cases:

-

First, in a projection methodology, the projector is computed from the nominal system, and later applied on the nominal and on the sensitivity matrices, obtaining a model as in (5.5).

-

Second, in the case of Balanced Truncation realizations, the computation of the Gramians is done via the nominal system, but the balancing and the truncation is done both on the nominal matrices and on the sensitivities.

These methods, although not oriented to accurately capture the behavior of the system under variation of the parameters, can yield good approximations in cases of small variations or mild effect of the parameters. However, they are not reliable, and their performance heavily depends on the system.

1.2.1 Pertubation Based Parametric Reduction

The first attemps to handle and reduce systems under variations were focused on perturbation techniques.

One of the earliest attempts to address this variational issue was to combine perturbation theory with moment matching MOR algorithms [25] into a Perturbation-based Projector Fitting scheme. To model the variational effects of the interconnects, an affine model was built for the capacitance and conductance matrices,

where now C 0 and G 0 are the nominal matrix values, i.e., the value of the matrices under no parameter variation, and C i and G i , i = 1, …, P, are their sensitivities with respect to those parameters. For small parameter variations, the projection matrix obtained via a moment-matching type algorithm such as PRIMA also may show small perturbations. To capture such effect, several samples in the parameter space were drawn G(λ 1, …, λ P ) and C(λ 1, …, λ P ), and for each sample PRIMA was applied resulting a projector. A fitting methodology was later applied in order to determine the coefficients of a parameter dependent projection matrix

To obtain a reduced model, both the parametric system and the projector are evaluated with the parameter set. Projection is applied and the reduced model obtained. However, this reduced model is only valid for the used parameter set. If a reduced model for a different parameter set is needed, the evaluation and projection must be applied again, what makes hard to include this method in a simulation environment.

Another method combined perturbation theory with the Truncated Balanced Realization (TBR) [26, 30] framework. A perturbation matrix was theoretically obtained starting from the affine models shown in (5.6) [17]. This matrix was applied via a congruence transformation over the Gramians to address the variability, obtaining a set of perturbed Gramians. These in turn were used inside a Balancing Truncation procedure. As with most TBR-inspired methods, this one is also expensive to compute and hard to implement. The above methods have obvious drawbacks, perhaps the most glaring of which is the heavy computation cost required for obtaining the reduced models and the limitation that comes from perturbation based approximations, possibly leading to inaccuracy in certain cases.

1.2.2 Multi-dimensional Moment Matching

Most of the techniques in the literature extend the moment matching paradigm [13, 27, 34] to the multi-dimensional case. They usually rely on the implicit or explicit matching of the moments of the parametric transfer function (5.2). These moments depend not only on the frequency, but on the set of parameters affecting the system, and thus are denoted as multi-dimensional or multi-parameter moments.

This family of algorithms assumes that a model based on the Taylor Series expansion can be used for approximating the behavior of the conductance and capacitance, G(λ) and C(λ), expressed as a function of the parameters

where \(G_{i_{1},\ldots,i_{P}}\) and \(C_{i_{1},\ldots,i_{P}}\) are the multidimensional Taylor series coefficients. This Taylor series can be extended up to the desired (or required) order, including cross derivatives, for the sake of accuracy. If this formulation is used, the structure for parameter dependence may be maintained if the projection is not only applied to the nominal matrices, but to the sensitivities as well.

Multiple methodologies follow these basic premises, but they differ in how and which such moments are generated and used in the projection stage.

The Multi-Parameter Moment Matching method [8] relies on a single-point expansion of the transfer function (5.2) in the joint space of the frequency s and the parameters λ 1, …, λ P , in order to obtain a power series in several variables,

where \(M_{k,k_{s},k_{1},\ldots,k_{P}}\) is a k-th (k = k s + k 1 + … + k P ) order multi-parameter moment corresponding to the coefficient term \(s^{k_{s}}\,\lambda _{1}^{k_{1}}\ldots \lambda _{P}^{k_{P}}\).

A basis for the subspace spanned from these moments can be built and the resulting orthonormal basis V can be used as a projection matrix for reducing the original system

This parametrized reduced model matches up to the k-th order multi-parameter moment of the original system.

However, the main inefficiencies of this method are twofold:

-

On the one hand, this method generates pure multi-dimensional moments (see Eq. (5.9)), which means that the number of moments grows dramatically (all the possible combinations for a given order must be done) when the number of parameters is increased, even for a modest number of moments for each parameter. For this reason, the reduced model size grows exponentially with the number of parameters and the moments to match.

-

On the other hand, the process parameters fluctuate in a small range around their nominal value, whereas the frequency range is much larger, and a higher number of moments are necessary in order to capture the global response for the whole frequency range. This algorithm treates the frequency as one parameter more, which turns to be highly innefficient.

An improvement of the previous approach is to perform a Low-Rank Approximation of the multi-dimensional moments [22]. An SVD-based low-rank approximation of the generalized moments, G −1 G i and G −1 C i (which are related to the multidimensional moments), is applied. Then, separate subspaces are built from these low-rank approximations for every parameter. The global projector is obtained from the orthonormalization of the nominal moments (computed via Arnoldi for example), and the moments of the subspaces related to the parameters. The projector is applied on the Taylor Series approximation to obtain a reduced parametric model. This approach, although providing more flexibility and improving the matching, requires the low-rank SVD of the generalized moments, which comes at a cost of O(n 3), i.e., limiting its applicability to small-medium size problems.

A different multi-dimensional moment matching approach was also presented in [16], called Passive Parameterized Time-Domain Macro Models. It relies on the computation of several subspaces, built separately for each dimension, i.e. the frequency s (to which respect k s block moments are obtained in a basis denoted as Q s ) and the parameter set λ (generating the basis Q i which match \(k_{\lambda _{i}}\) block moments with respect to parameter λ i ). These independent subspaces can be efficiently computed using standard nominal approaches, e.g. PRIMA. Once all the subspaces have been computed, an orthonormal basis can be obtained so that its columns span the joint of all subspaces. For example, in the affine Taylor Series representation, using Krylov spaces Kr(A, B, k) (matrix A, multi-columns vector B, moments k):

where subscript i refers to the i-th parameter λ i , and the parameter related moments have been generalized to any shifted frequency s. QR stands for the QR-factorization based orthonormalization. Applying the resulting matrix V in a projection scheme ensures that the parametric Reduced Order Model matches k s moments of the original system with respect to the frequency, and k i moments with respect to the parameter λ i . If the cross-term moments are needed for accuracy reasons, the subspace that spans these moments can also be included by following the same scheme.

A different approach is explored in CORE [23]. Here an explicit moment matching with respect to the parameters is first done, via Taylor-series expansion, followed by an implicit moment matching in frequency (via projection). The first step in done by expanding the state space vector x and the matrices G and C in its Taylor Series only with respect to the parameters,

where G 0, …, 0, C 0, …, 0 and x 0, …, 0(s) are the nominal values for the matrices and the states vector, respectively. The remaining \(G_{i_{1},\ldots,i_{P}}\), \(C_{i_{1},\ldots,i_{P}}\) and \(x_{i_{1},\ldots,i_{P}}\) are the sensitivities with respect to the parameters. Explicitly matching the coefficients of the same powers leads to an augmented system, in which the parametric dependence is shifted to the output related matrix L A :

The second step applies a typical nominal moment matching procedure (e.g. PRIMA [27]) to reduce this augmented system. This is possible because the matrices G A , C A and B A used to build the projector do not depend on the parameters. The projector is latter applied on all the matrices of the augmented system in (5.14). Furthermore, the Block Lower Triangular structure of the system matrices G A and C A can be exploited in recursive algorithms to speed-up the reduction stage. This two-step approach allows to increase the number of the matched multi-parameter moments with respect to other techniques, for a similar reduced order. In principle, in spite of the larger size of the augmented model, the order of the reduced system can be much smaller than in the previous cases. On the other hand, the structure of the dependence with respect to the parameters is lost, since the parametric dependence is shifted to the later projected output related L matrix. The projection mixes all the parameters, losing the possibility of modifying them without need of recomputation. This method also has the disadvantage that the explicit computation of the moments with respect to the parameters can lead to numerical instabilities. The method, although stability-preserving, is unable to guarantee passivity preservation.

Some algorithms [24, 37] try to match the same moments as CORE, but in a more numerical stable and efficient fashion, using Recursive and Stochastic Approaches. They generalize the CORE paradigm up to an arbitrary expansion order with respect to the parameters, and apply an iterative procedure in order to compute the frequency moments related to the nominal matrices, and the ones obtained from the parametric part (this means, to obtain a basis for each block of states x i in (5.14), but without building such system).

where s k is the expansion point for the Krylov subspace generation, and V i j is the j-th moment with respect to the frequency for the i-th parameter. This general recursive scheme, here presented for first order with respect to the parameters, can be extended to any (independent) order with respect to each parameter.

The technique in [37] uses a tree graph scheme, in which each node is associated to a moment, and the branches represent recursive dependences among moments. Each tree level contains all the moments of the same multi-parameter order. On this tree, a random sampling approach is used to select and generate some representative moments, preventing the exponential growth.

On the other hand, the technique in [24] advocates for an exhaustive computation at each parameter order. This means that all the moments for zero-parameter order (i.e. nominal), are computed until no rank is added. The same procedure is repeated for first order with respect to all parameters. If the model is not accurate, more order with respect to the parameters can be added.

Notice that both schemes provide a large degree of flexibility, as different orders with respect to each parameter and with respect to the frequency can be applied. In both approaches, the set of all the moments generated is orthonormalized, so an overall projector is obtained. This is used inside a congruence transformation on the Taylor Series approximation (5.4), to generate a reduced model in the same representation. Another advantage of these methodologies is that the passivity is PRIMA-like preserved, and the basis is built in a numerical stable fashion.

1.2.3 Multi-dimensional Sampling

Another option present in the literature relies on sampling schemes for capturing the variational nature of the parametric model. They are applied for the building of a projector to later apply congruence tranformation on the original model.

A simple generalization of the multi-point moment matching framework [11] to a multi-dimensional space can be done via Variational Multi-Point Moment Matching. Small research has been devoted to this family of approaches, but one algorithm can be found in [22]. The flexibility it provides is also one of its main drawbacks, as the methodology can be applied in a variety of schemes, from a single-frequency multi-parameter sampling to a pure multi-dimensional sampling. From these expansion points, several moments are computed following a typical moment matching scheme. The orthonormalization of the set of moments provides the overall projector which is applied in a congruence reduction scheme. However, it is hard to determine the number and placement of samples, and the number of moments to match with respect to the frequency and to the parameters.

Another scheme, which overcomes some of the issues of the previous approach is the Variational Poor Man’s TBR [28]. This approach is based on the statistical interpretation of the algorithm (see [29] for details) and enhances its applicability to multiple dimensions. In this interpretation, the Gramian X λ is seen as a covariance matrix for a Gaussian variable x λ , obtained by exciting the (presumed stable) system with u involving white noise. Rewriting the Gramian as

where p(λ) is the probability density of λ in the parameter space, S λ . Just as in PMTBR, a quadrature rule can be applied in the overall parameter plus frequency space to approximate the Gramian via numerical computation. But in this case the weights are chosen taking into account the Probability Density Function (PDF) of λ i and the frequency constraints. This can be generalized to a set of parameters, where a joint PDF of all the parameters can be applied on the overall parameter space, or the individual PDF of each parameter can be used. This possibility represents an interesting advantage, since a-priori knowledge of the parameters and the frequency can be included in order to constrain the sampling and yield a more accurate reduced model. The result of this approach is an algorithm which generates Reduced Order Models whose size is less dependent on the number of parameters. In the deterministic case, an error analysis and control can be included, via the eigenvalues of the SVD. However, in the variational case only an expected error bound can be given:

where r is the reduced order and n the original number of states. On the other hand, in this method the issue of sample selection, already an important one in the deterministic version, becomes even more relevant, since the sampling must now be done in a potentially much higher-dimensional space.

1.3 Practical Consideration and Structure Preservation

Inside the pMOR realm, the moment matching algorithms based on single point expansion may not be able to capture the complete behavior along the large frequency range required for common RF systems, and may lead to excessively large models if many parameters are taken into account. Therefore the most suitable techniques for the reduction seem to be the multipoint ones. Among those techniques, Variational PMTBR [28] offers a reliable framework with some interesting features that can be exploited, such as the inclusion of probabilistic information and the trade off between size and error, which allows for some control of the error via analysis of the singular values related to the dropped vectors. On the other hand, it requires a higher computational effort than the multi-dimensional moment matching approaches, as it is based on multidimensional sampling schemes and Singular Value Decomposition (SVD), but the compression ratio and reliability that it offers compensates this drawback. The effort spent in the generation of such models can be amortized when the reduced order model generated is going to be used multiple times. This is usually the case for parametric models, as the designer may require several evaluations for different parameter sets (e.g. in the case of Monte Carlo simulations, or optimization steps). Furthermore, this technique offers some extra advantages when combined with block structured systems [14], such as the block-wise error control with respect to the global input-output behaviour, which can be applied to improve the efficiency of the reduction. This means that each block can be reduced to a different order depending on its relevance in the global response.

An important point to recall here is that the block division may not reflect different sub-domains. Different sub-divisions can be done to address different hierarchical levels. For instance, it may be interesting to divide the complete set in sub-domains connected by hooks, which generates a block structured matricial representation. But inside each block corresponding to a sub-domain, another block division may be done, corresponding either to smaller sub-domains or to a division related to the different kind of variables used to model each domain (for example, in a simple case, currents and voltages). This variable related block structure preservation has already been advocated in the literature [15] and may help the synthesis of and equivalent SPICE-like circuit [35] in the case that is required. Figure 5.1 presents a more intuitive depiction of the previous statements, in which a two domain example is shown with its hierarchy, and each domain has also some inner hierarchy related to the different kind of variables (in this case, voltages and currents, but it can also be related to the electric and magnetic variables, depending on the formulation and method used for the generation of the system matrices).

The proposed flow starts from a parametric state-space descriptor, such as (5.1), which exhibits a multi-level hierarchy, and a block parametric dependence (as different parameters may affect different sub-domains). The matrices of size n have K domains, each with size n i , n = ∑ i n i . For instance, for the static part,

where λ {ij} is the set of parameters affecting \(G_{\mathit{ij}} \in \mathbb{R}^{n_{i}\times n_{j}}\). Then we perform the multi-dimensional sampling, both in the frequency and the parameter space. For each point we generate a matrix or vector z j (a matrix in case B includes multiple inputs)

where C(λ) and G(λ) are the global matrices of the complete domain, with n degrees of freedom (dofs). To generate the matrix \(z_{j} \in \mathbb{R}^{n\times m}\), with m the number of global ports, we can apply a direct procedure, meaning a factorization (at cost O(n β), with 1. 1 ≤ β ≤ 1. 5 for sparse matrices) and a solve (at cost O(n α), with 1 ≤ α ≤ 1. 2 for sparse matrices). Novel sparse factorization schemes can be applied to improve the efficiency [9, 10]. In cases when a direct method may be too expensive iterative procedures may be used [32].

The choice of the sampling points may be an issue, as there is no clear scheme or procedure that is known to provide an optimal solution. However, as stated in [28], the accuracy of the method does not depend on the accuracy of the quadrature (and thus in the sampling scheme), but on the subspace generated. For this reason, a good sampling scheme is to perform samples in the frequency for the nominal system, and around these nominal samples, perform some parametric random sampling in order to capture the vectors that the perturbed system generates. The reasoning behind this scheme is that for small variations, such as the ones resulting from process parameters, the subspace generated along the frequency is generally more dominant than the one generated by the parameters. In addition, under small variations, the nominal sampling can be used as a good initial guess for an iterative solver to generate the parametric samples. For the direct solution scheme, to generate P samples (and thus Pm vectors) for the global system has a cost of O(Pn α +Pn β). Note that since m is the number of global (or external) ports, the number of vectors is smaller than if we take all the hooks into account.

The next step is the orthonormalization, via SVD, of the Pm vectors for generating a basis of the subspace in which to project the matrices. Here an independent basis V i , \(i \in \left \{1,\ldots,K\right \}\), can be generated for each i-th sub-domain. To this end the columns in z j are split according to the block structure present in the system matrices (i.e., the n i rows for each block), and an SVD is performed on each of these set of vectors, at a cost of O(n i (Pm)2), where n i is the size of the corresponding block, and n = ∑ i n i . For each block, the independent SVD allows to drop the vectors less relevant for the global response (estimated by the dropped singular value ratio, as presented in [28]). This step generates a set of projectors, \(V _{i} \in \mathbb{R}^{n_{i}\times q_{i}}\), with q i ≪ n i the reduced size for the i-th block of the global system matrix. These projectors can be placed in the diagonal blocks of an overall projector, that can be used for reducing the initial global matrices to an order q = ∑ i q i . This block diagonal projector allows a block structure (and thus sub-domain) preservation, increasing the sparsity of the ROM with respect to that of the standard projection. This sparsity increase is particularly noticeable in the case of the sensitivities (if a Taylor series is used as base representation), as the block parameter dependence is maintained (e.g. in the static matrix)

The total cost for the procedure can be approximated by

1.4 Examples

1.4.1 L-Shape

As a first example we present a simple L-shape interconnect structure depending on the width of the metal layer. Figure 5.2 shows the frequency response for a fixed parameter value, of the nominal system, the Taylor series approximation (both of order 313), and the reduction models obtained with several parametric approaches:

-

Nominal reduction of the Taylor Series, via PRIMA, of order 25,

-

Multi-dimensional moment matching, via CORE, of order 25,

-

Multi-dimensional moment matching, via Passive Parameterized Time-Domain Macro Models technique, of order 20,

-

And Multi-dimensional sampling, via Variational PMTBR, of order 16.

Figure 5.3 shows the same example, but in this case the response of the systems with respect of the parameter variation, for a given frequency point. It is clear that the parametric Model Order Reduction techniques are able to capture the parametric behavior, whereas the nominal approach (PRIMA) fails to do so, even for high order.

(Top): Frequency response of the L-shape example. The original, both the nominal and the Taylor series for a fixed parameter value, of order 313, and the reductions via PRIMA, CORE, Passive Parameterized Time-Domain Macro Models (PP TDM), and variational PMTBR (VPMTBR), of different orders. (Bottom): Relative error of the reduction models with respect to the original Taylor series approximation

1.4.2 U-Coupled

This is a simple test case, which has two U-shape conductors; each of the conductors ends represent one port, having one terminal voltage excited (intentional terminal, IT) and one terminal connected to ground. A clear illustration of the setting is given by Fig. 5.4. The distance (d) separating the conductors and the thickness (h) of the corresponding metal layer are parameterized. The complete domain is partitioned into three sub-domains, each of them connected to the others via a set of hooks (both electric, EH, and magnetic, MH). The domain hierarchy and parameter dependence are kept after the reduction, via Block Structure Preserving approaches. The Full Wave EM model was obtained via Finite Integration Technique (FIT) [7], and its matrices present a Block Structure that follows the domain partitioning. Table 5.1 shows the characteristics of the original system. Each sub-domain is affected by a parameter. The left and right sub-domains contain the conductors, and thus are affected by the metal thickness h. The middle domain width varies with the distance between the two conductors, and thus is affected by parameter d. For each parameter the first order sensitivity is taken into account, and a first order Taylor Series (TS) formulation is taken as the original system.

For the reduction we apply three techniques. First, a Nominal Block Structure Preserving (BSP) PRIMA [36], with a single expansion point and matching 50 moments, is applied. This leads to a 100-vector generated basis, that after BSP expansion produces a 300-dofs Reduced Order Model (ROM). Second, a BSP procedure coupled with a Multi-Dimensional Moment Matching (MDMM) approach [16], is tried. The basis will match 40 moments with respect to the frequency, and 30 moments with respect to each parameter. The orthonormalized basis has 196 vectors, that span a BSP ROM of size 588. Third, the proposed BSP VPMTBR, with 60 multidimensional samples, and a relative tolerance of 0. 001 for each block, is studied. This process generates different reduced sizes for each block: 85, 90 and 85, with a global size of 260.

Figure 5.5 shows the relative error in the frequency transfer function at a parameter set point for the three ROMs w.r.t. the Taylor series. PRIMA and MDMM approaches fail to capture the behavior with the order set, but the proposed approach performs much better even for a lower order. Figure 5.6 shows the response change with the variation of parameter d at a single frequency point (Parameter Impact). PRIMA and MDMM only present accuracy for the nominal point, whereas the proposed method maintains the accuracy for the parameter range.

1.4.3 Double Spiral

This is an industrial example, composed by two square integrated spiral inductors in the same configuration as the previous example (See Fig. 5.7). The complete domain has two ports, and 104, 102 Dofs. The example also depends on the same two parameters, the distance d between spirals, and the thickness h of the corresponding metal layer. In this case a single domain is used, but the BSP approach is applied on the inner structure provided by the different variables in the FIT method (electric and magnetic grid). For the reduction, the proposed BSP VPMTBR methodology is benchmarked against a nominal BSP PRIMA (400 dofs) methodology, and compared with the original Taylor Series formulation. The ROM size in this case is 142 and 165 respectively for the blocks. The results are presented in the Figs. 5.8 and 5.9. Figure 5.8 shows the frequency relative error of the ROMs with respect to the original Taylor Series. PRIMA, although accurate for the nominal response, fails to capture the parametric behavior, whereas the proposed method succeeds in modeling such behavior. This is also the conclusion that can be drawn from the parameter impact in Fig. 5.9.

1.5 Conclusions

We conclude that Parametric Model Order Reduction techniques are essential for addressing parameter variability in the simulation of large dynamical systems.

Representation of the state space based on Taylor series expansion with respect to the parameters provide the flexibility and accuracy required by efficient simulation. This reresentation approach can be combined with projection-based methods to generate structural equivalent reduced models.

Single-point based moment-matching approaches are suitable for small variations and local approximations, but usually suffer from several drawbacks when applied to EM based models operating in a wide frequency range. Multi-point based approaches, although computationally more expensive, are more reliable and generate more compressed models. Thus, the generation cost can be amortized in the simulation stages.

Combination of the projection methodologies with Block Structure Preserving approaches can be done efficiently in parametric environments. Further advantages can be obtained in this case, such as different compression order for each block based on its relevance in the global behavior, higher degree of sparsification of the nominal matrices, and in particular, of the sensitivities, and the maintenance of the block domain hierarchy and block parameter dependence after reduction.

2 Robust Procedures for Parametric Model Order Reduction of High Speed Interconnects

Due to higher integration and increasing of running frequency, full Electromagnetic Models (EM) are needed for an accurate prediction of the real behavior of integrated passives and interconnects in currently designed chips [45].Footnote 2 In general, if on-chip interconnects are sorted with respect to their electric length, they may be categorized in three classes: short, medium and long. While the short interconnects have simple circuit models with lumped parameters, the extracted model of the interconnects longer than the wave length has to consider the effect of the distributed parameters, as well. Fortunately, the long interconnects have usually the same cross-sectional geometry along their extension. If not, they may be decomposed in straight parts connected by junction components (Fig. 5.10). The former are represented as transmission lines (TLs) whereas the latter are modeled as common passive 3D components.

Due to the fact that the lithographic technology is pushed today to work at its limit, the variability of geometrical and physical properties cannot be neglected. That is why, to obtain robust devices, the variability analysis is necessary even during the design stage [44, 55].

This Section proposes a robust specialized technique to extract reduced parametric compact models, described as parametric SPICE like netlists, for long interconnects modeled as transmission lines with several field effects such as skin effect and substrate losses. The technique uses an EM formulation based on partial differential equations (PDE), which is discretized to obtain a finite state space model. Next, a variability analysis of the geometrical data is carried out. Finally, a method to extract an equivalent parametric circuit is proposed. The procedure is validated by applying it on a study case for which experimental data is available.

2.1 Field Problem Formulation: 3D – PDE Models

Long interconnects and passive components with significant high frequency field effects, have to be modeled by taken into consideration Full Wave (FW) electromagnetic field equations. Typical examples of such parasitic effects are: skin effect and proximity, substrate losses, propagation retardation and crosstalk. Only Maxwell equations in FW regime

complemented with the constitutive equations which describe the material behavior:

can model these effects. While material constants are known for each subdomain (Si, Al, SiO2), vectorial fields \(\boldsymbol{B},\boldsymbol{H},\boldsymbol{E},\boldsymbol{D}:\varOmega \times [0,T) \rightarrow \mathbb{R}^{3}\) and the scalar field \(\rho:\varOmega \times [0,T) \rightarrow \mathbb{R}\) are the unknowns of the problem. They can be univocal determined in the simple connected set Ω, which is the computational domain, for zero initial conditions (\(\boldsymbol{B} =\boldsymbol{ 0},\boldsymbol{D} =\boldsymbol{ 0}\) for t = 0), if appropriate boundary conditions are imposed.

According to authors’ knowledge, the best boundary conditions which allow the field-circuit coupling are those given by the electric circuit element (ECE) formulation [54]. Considering \(S_{1}^{{\prime}},S_{2}^{{\prime}},\ldots,S_{n}^{{\prime}}\subset \partial \varOmega\) a disjoint set of surfaces, called terminals (Fig. 5.11), the following boundary conditions are assumed:

Condition (5.24) interdicts the magnetic coupling between the domain and its environment, (5.25) interdicts the galvanic coupling and the capacitive coupling through the boundary excepting for the terminals and (5.26) interdicts the variation of the electric potential over the terminal, thus allowing the connection of the device to exterior electric circuit nodes. For each terminal, k = 1, …, n the voltage and the current can be univocal defined:

where C k ′ is an arbitrary path on the device boundary ∂ Ω, that starts on S k ′ and ends on S n ′, where, by convention, the n-th terminal is considered as reference, i.e. u n = 0. If we assume that the terminals are excited in voltage, then u k , k = 1, 2, …, n − 1 are input signals and i k , k = 1, 2, …, n − 1 are output signals. Equations (5.24) ÷ (5.26) define a multiple input multiple output (MIMO) linear system with n − 1 inputs and n − 1 outputs, but with a state space of infinite dimension. In the weak form of Maxwell’s equations, state variables, \(\boldsymbol{H},\boldsymbol{E}\) belong to the Sobolev space H(curl, Ω) [39]. Uniqueness theorem of the ECE field problem [54] generates the correct formulation of the transfer function \(\mathbf{Y}(s): \mathbb{C} \rightarrow \mathbb{C}^{(n-1)\times (n-1)}\), which represents the matrix of the terminals admittance for a complex frequency s. The relation

defines a linear transformation in the frequency domain of the terminal voltages vector \(\mathbf{u} \in \mathbb{C}^{n-1}\) to the currents vector \(\mathbf{i} \in \mathbb{C}^{n-1}\).

2.2 Numeric Discretization and State Space Models

PDE models are too complex for designers needs. The approach we propose for the extraction of the electric models is schematically represented in Fig. 5.12. The left block corresponds to the formulation described in the previous section.

The next important step in the EM modeling is the discretization of the PDEs. One of the simplest methods to carry out this, is based on the Finite Integration Technique (FIT), a numerical method able to solve field problems based on spatial discretization “without shape functions”. Two staggered orthogonal (Yee type) grids are used as discretization mesh [42]. The centers of the primary cells are the nodes of the secondary cells. The degrees of freedom (dofs) used by FIT are not local field components as in FEM or in FDTD, but global variables, i.e., electric and magnetic voltages u e , u m , electric currents i, and magnetic and electric fluxes ϕ, ψ assigned to the grid elements: edges and faces, respectively. They are associated to these grids elements in a coherent manner (Fig. 5.13).

By applying the global form of electromagnetic field equations on the mesh elements (elementary faces and their borders), a system of differential algebraic equations (DAE), called Maxwell Grid Equations (MGE) is obtained:

FIT combines MGE with Hodge’s linear transform operators, which approximate the material behavior (5.23):

The main characteristics of the FIT method are:

-

There is no discretization error in the MGE fundamental Eqs. (5.29) ÷ (5.33). All numerical errors are hold by the discrete Hodge operators (5.34).

-

An equivalent FIT circuit (Fig. 5.14), having MGE + Hodge as equations may be easily build. The graphs of the two constituent mutually coupled sub-circuits are exactly the two dual discretization grids; therefore the complexity of the equivalent circuit has a linear order with respect to the number of grid-cells [49].

-

MGE are:

-

Sparse: matrices \(\boldsymbol{G}_{m},\boldsymbol{C}_{e}\) and \(\boldsymbol{G}_{e}\) are diagonal and matrices \(\boldsymbol{C},\boldsymbol{D}\) have maximum six non-zero entries per row,

-

Metric-free: matrices C – the discrete-curl and D – the discrete-div operators have only 0, +1 and −1 as entries,

-

Mimetic: in Maxwell equations curl and div operators are replaced by their discrete counterparts C and D, and

-

Conservative: the discrete form of the discrete charge conservation equation is a direct consequence of both Maxwell and as well as of the MGE equations.

-

Due to these properties the numerical solutions have no spurious modes.

Considering FIT Eqs. (5.29), (5.31), and (5.34) with the discrete forms of boundary conditions (5.24) ÷ (5.27) a linear time-invariant system is defined having the same input-output quantities as (5.28), but the state equations:

where \(\boldsymbol{x} = [\boldsymbol{u}_{m}^{T},\boldsymbol{u}_{e}^{T},\boldsymbol{i}^{T}]^{T}\) is the state space vector, consisting of electric voltages \(\boldsymbol{u}_{e}\) defined on the electric grid used by FIT, magnetic voltages \(\boldsymbol{u}_{m}\) defined on the magnetic grid and output quantities \(\boldsymbol{i}\). Equations can be written such that only two semi-state space matrices (\(\boldsymbol{C}\) and \(\boldsymbol{G}\)) are affected by geometric parameters (denoted by α in what follows). Considering all terminals voltage-excited, the number of inputs is always equal to the number of outputs. Since output currents are components of the state vector, the matrix \(\boldsymbol{L} =\boldsymbol{ B^{T}}\) is merely a selection matrix.

For instance, the structure of the matrices in the case of voltage excitation is the following:

There are six sets of rows, corresponding to the six sets of equations. The first group of equations is obtained by writing Faraday’s law for inner elementary electric loops. \(\boldsymbol{G}_{m}\) is a diagonal matrix holding the magnetic conductances that pass through the electric loops. The block \(\left [\begin{array}{cc} \boldsymbol{B}_{1} & \boldsymbol{B}_{2} \end{array} \right ]\) has only 0, 1, − 1 entries, describing the incidence of inner branches and branches on the boundary to electric faces. The second group corresponds to Ampere’s law for elementary magnetic loops. \(\boldsymbol{C}_{i}\) and \(\boldsymbol{G}_{i}\) are diagonal matrices, holding the capacitances and electric conductances of the inner branches. The third group represents Faraday’s law for electric loops on the boundary. \(\boldsymbol{B}_{\mathit{Sl}}\) has only 0, 1, − 1 entries, describing the incidence of electric branches included in the boundary to the electric boundary faces. The forth row is obtained from the current conservation law for all nodes on the boundary excepting for the nodes on the electric terminals. \(\boldsymbol{G}_{\mathit{Sl}}\) and \(\boldsymbol{C}_{\mathit{Sl}}\) hold electric conductances and capacitances directly connected to boundary. The fifth set of equations represents current conservation for electric terminals. \(\boldsymbol{G}_{\mathit{TE}}\) and \(\boldsymbol{C}_{\mathit{TE}}\) hold electric conductances and capacitances that are directly connected to electric terminals. \(\boldsymbol{S}_{E}\) is the connexion matrix between electric branches and terminals path. The last row is the discrete form of (5.27), obtained by expressing the voltages of electric terminals as sums of voltages along open paths from terminals to ground, \(\boldsymbol{P}_{E}\) being a topological matrix that holds the paths that connect electric terminals to ground.

Thus, the top left square block of \(\boldsymbol{C}\) is diagonal and the top left square bloc of \(\boldsymbol{G}\) is symmetric. The size of this symmetric bloc corresponds to the useful magnetic branches and to the useful inner electric branches. Its size is dominant over the size of the matrix, therefore, solving or reduction strategies that take into consideration this particular structure are useful.

The discretized state-space system given by (5.35) describes the input output relationship in the frequency domain

similar to (5.28), but having as transfer (circuit) function:

which is a rational function with a finite number of poles.

In conclusion, the discretization of the continuous model leads to a model represented by a MIMO linear time invariant system described by the state equations of finite size. Even if this is an important step ahead in the extraction procedure, the state space dimension is still too large for designer’s needs, therefore a further modeling step aiming an order reduction is required.

2.3 Transmission Lines: 2D + 1D Models

In this section, aiming to reduce the model extraction effort, we will exploit the particular property of interconnects of having invariant transversal section along their extent. We assume that the field has a similar structure as a transversal electromagnetic wave that propagates along the line. The typical interconnect configuration (Fig. 5.15) considered consists of n parallel conductors having rectangular cross section, permeability μ = μ 0, permittivity ɛ = ɛ 0 and conductivity σ k , k = 1, 2, ⋯ , n, placed in a SiO2 layer (σ d , ɛ d , possibly dependent on y) placed above a silicon substrate (σ s , ɛ s ).

If the field is decomposed in its longitudinal (oriented along the line, which is assumed to lie along the Oz axis) and the transversal components (oriented in the xOy plane)

then Maxwell’s Equations can be separated into two groups:

called transversal equations and

called propagation equations.

The following hypotheses are adopted:

-

The volume charge density ρ and the displacement current density \(\frac{\partial \mathbf{E}} {\partial t}\) are neglected both in conductors and in the substrate.

-

The following “longitudinal” terms E z = 0, H z = 0 are canceled in the transversal equations, neglecting the field generated by eddy currents.

-

The longitudinal conduction current is neglected in dielectric J z = 0, but not in the conductors.

-

Since the conductances σ k of the conductors are much bigger than the dielectric conductance σ d , the transversal component of the electric field is neglected in the line conductors and in the substrate:

$$\displaystyle{ \mathbf{E}_{t} = \frac{1} {\sigma _{k}}\mathbf{J}_{t} =\boldsymbol{ 0}. }$$(5.42)

Under these hypotheses the transversal equations have the following form (where (k) = conductor, (s) = substrate, (d) = dielectric):

identical with the steady state electromagnetic field equations. For this reason, the electric field admits a scalar electric potential V (x, y, z, t), whereas the magnetic field admits a vector magnetic potential A(x, y, z, t) = k A(x, y, z, t) with longitudinal orientation, so that:

Thus, the propagation equations become:

By assuming an asymptotic behavior of potentials, the integration of the propagation equations yields to:

where C is a curve in the plane z = constant, which starts from the infinity and stops in the computation point of the field H z , n is the normal to the curve, oriented so that the line element is

From (5.42) it follows that the potential V is constant on every transversal cross-section of the conductors and zero in the substrate:

From relations (5.43) and (5.44) it follows that, in the transversal plane, the electric field has the same distribution as an electrostatic field. By using the uniqueness theorem of the electrostatic field it results that the function V (x, y, z, t) is uniquely determined by the potentials of the conductors V k . Consequently, due to the linearity, the per unit length (p.u.l.) charge of conductors and the current loss through the dielectric are:

where c km is the p.u.l. capacitance, and g km is the p.u.l. conductance between the conductor k and the conductor m.

By integrating E z equation from (5.47) over the surface S k and H z equation from (5.47) along the path ∂ S k which bounds this surface, the following propagation equations for potentials are obtained:

where \(r_{k}^{0} = 1/(\sigma _{k}A_{S_{k}})\) is the p.u.l. d.c. resistance of the conductor k, and

are the average values of the two potentials on the cross-section of the conductor k.

By computing the average values of the magnetic potential as in [58] and by substituting (5.50), (5.51) in (5.52) the following expressions are obtained in zero initial conditions:

where l km 0 are the p.u.l. external inductances (self inductances for k = m and mutual inductances for k ≠ m) of the conductors (k) and (m) where the return current is distributed on the surface of the substrate, and l km (t) are “transient p.u.l. inductances”, defined as the average values on S k of the vector potential A obtained in zero initial conditions by a unity step current injected in conductor (m).

For zero initial conditions for the currents i m (z, 0) = 0, for the potential v m (z, 0) = 0 and for the field \(\boldsymbol{B}_{k}^{0}(s) =\boldsymbol{ 0}\), the Laplace transform of (5.54) and (5.55) can be written as:

which is identical to the operational form of the classical Transmission Lines (TLs) Telegrapher’s equations, but where the p.u.l. inductances depend on s (implicitly on the frequency in a time-harmonic regime). In order to extract these dependencies, a magneto-quasi-static (MQS) field problem has to be solved.

2.4 Numeric Extraction of Line Parameters

Models with various degrees of fineness can be established for TLs. The coarsest ones are circuit models with lumped parameters, such as the pi equivalent circuit for a single TL shown in Fig. 5.16. As expected, the characteristic of such a circuit is appropriate only at low frequencies, over a limited range, and for short lines. Even chaining similar cells, the result is not appropriate.

At high frequencies, the distributed effects have to be considered as an important component of the model. Proper values for the line parameters can be obtained only by simulating the electromagnetic field. The extraction of line parameters is the main step in TLs modeling since the behavior of a line with a given length can be computed from them. For instance, for a multiconductor transmission line, from the per unit length parameters matrices R, L, C and G the transfer matrix for a line of length l can be computed as

From them, other parameters (impedance, admittance or scattering) can be computed. The simplest method to extract constant matrices of the line resistance R, capacitance C and inductance L, respectively, is to solve the field equations numerically in steady-state electric conduction (EC), electrostatics (ES) and magnetostatics (MS) regimes. Empirical formulas may also be found in the literature, such as the ones given in [62] for the line capacitance. None of them take the frequency dependence of p.u.l. parameters into account.

A first attempt to take into consideration the frequency effect, which becomes important at high frequencies, is to compute the skin depth in the conductor and to use a better approximation for the resistance. In [52] we proposed a much more accurate estimation of frequency dependent line parameters based on the numerical modeling of the EM field including the semiconductor substrate. The previous section is the theoretical argument of this approach in which two complementary problems are solved, the first one describing the transversal behavior of the line from which Y l (ω) = G(ω) + jω C(ω) is consequently extracted, and the second one describing the longitudinal behavior of the line from which Z l (ω) = R(ω) + jω L(ω) is extracted.

Since the first field problem is dedicated to the computation of the transversal capacitances between wires and their loss conductances, according to the previous section, the natural choice is to solve a 2D problem of the transversal electro-quasi-static (EQS) field in dielectrics, considering the line wires as perfect conductors with given voltage. The boundary conditions are of Dirichlet type V = 0 on the lower electrode, and open boundary conditions (e.g. Robin, SDI or appropriate ELOB [50]) on the other three sides. A dual approach, such as dFIT [51] allows a robust and accurate parameter extraction.

The second field problem focuses on the longitudinal electric and the generated transversal magnetic field. Consequently, a short line-segment (with only one cell layer) is considered. The magneto-quasi-static (MQS) regime of the EM field is appropriate for the extraction of Z l (ω). However, for our simulations we used a our FIT solver for Full Wave (FW) ECE problems. The magnetic grid is 2D, thus ensuring the TM mode of propagation.

In order to eliminate the transversal distribution of the electric field, the lower electrode is prolongated over the entire far-end cross-section of the rectangular computational domain, which thus has perfect electric conductor (PEC) boundary conditions E t = 0 on two of their faces. On the three lateral faces, open-absorbing boundary conditions are the natural choice, whereas on the near-end cross-section the natural boundary conditions are those of the Electric Circuit Element (ECE): B n = 0, n × curlH = 0 excepting for the wire traces, where E t = 0. These conditions ensure the correct definition of the terminals voltages, and consequently of the impedance/admittance matrix (Fig. 5.17).

These boundary conditions are the field representation of the line segment with short-circuit at the far-end, whereas the 2D EQS problem is the field representation of the segment-line with open far-end.

The transversal component is finally subtracted from the FW-TM simulation to obtain an accurate approximation of the line impedance, as given by

This subtraction is carried out according to a pi-like equivalent net for the simulated short segment (Fig. 5.18). Finally, the line parameters are:

where

where Δ l is the length of the considered line-segment and Z TM is the impedance matrix extracted from the TM field solution.

This numerical approach to extract the line parameters, named the two fields method, is more robust and may be applied without difficulties to multi-wire lines. The obtained values of the line parameters are frequency dependent, taking into consideration proximity and skin effects as well as losses induced in the conducting substrate.

2.5 Variability Analysis of Line Parameters

The simplest way to analyze the parameter variability is to compute first order sensitivities. These are derivatives of the device characteristics with respect to the design parameters. The sensitivities of the line parameters are essential to estimate the impact of small variations on the device behavior. Moreover, the sensitivity of the terminal behavior of interconnects can also be estimated.

For instance, in the case of a single TL, having the global admittance given by

the sensitivities of the terminal admittance with respect to a parameter can be computed as:

where the sensitivities of

can be computed if the sensitivities of the p.u.l. parameters ∂ R∕∂ α, etc. are known.

In the case of a multiconductor TL with n conductors the sensitivity of the admittance matrix Y of dimension (2n × 2n) is computed by means of the sensitivity of the transfer matrix

also of dimension (2n × 2n), knowing that

In the formulas above, all the sub-blocks are of dimensions (n × n). For instance

The transfer matrix T is computed with (5.57) and its sensitivity is

where

Thus, the basic quantities needed to estimate the sensitivity of the admittance are the sensitivities of the p.u.l. parameters. By using a direct differentiation technique, as explained in [41] the sensitivities of the EQS and TM problems with respect to the parameters that vary, i.e. \(\partial \mathbf{Y}_{\mathit{EQS}}/\partial \alpha \quad \mbox{ and}\quad \partial \mathbf{Z}_{\mathit{TM}}/\partial \alpha\) are computed. Then, the sensitivity of the MQS mode is computed by taking the derivative of (5.58):

Finally, the sensitivities of the p.u.l. parameters are:

The values of the sensitivities thus obtained depend on the frequency as well.

2.6 Parametric Models Based on Taylor Series

Continuous improvements in today’s fabrication processes determine smaller chip sizes and smaller device geometries. Process variations induce changes in the properties of metallic interconnect between devices.

Simple parametric models are often obtained by truncating the Taylor series expansion for the quantity of interest. This requires the computation of the derivatives of the device characteristics with respect to the design parameters [55]. Let us assume that \(y(\alpha _{1},\alpha _{2},\cdots \,,\alpha _{n}) = y(\boldsymbol{\alpha })\) is the device characteristic which depends on the design parameters \(\boldsymbol{\alpha }= [\alpha _{1},\alpha _{2},\cdots \,,\alpha _{n}]\). The quantity y may be, for instance, the real or the imaginary part of the device admittance at a given frequency or any of the p.u.l. parameters. The parameter variability is thus completely described by the real function, y, defined over the design space S, a subset of \(\mathbb{R}^{n}\). The nominal design parameters correspond to the particular choice \(\boldsymbol{\alpha }_{0} = [\alpha _{01}\alpha _{02}\cdots \alpha _{0n}]\).

2.6.1 Additive Model (A)

If y is smooth enough then its truncated Taylor Series expansion is the best polynomial approximation in the vicinity of the expansion point \(\boldsymbol{\alpha }_{0}\). For one parameter (n = 1), the additive model is the first order truncation of the Taylor series:

If we denote by y(α 0) = y 0 the nominal value of the output function, by \(\frac{\partial y} {\partial \alpha } (y_{0}) \frac{\alpha _{0}} {y_{0}} = S_{\alpha }^{y}\) the relative first order sensitivity and by (α −α 0)∕α 0 = δ α the relative variation of the parameter α, then the variability model based on (5.73) defines an affine [60] or additive model (A):

To ensure a relative validity range of the first order approximation of the output quantity less a given threshold t 1, the absolute variation of the parameter must be less than

where D 2 is an upper limit of the second order derivative of the output quantity y with respect to parameter α [41].

For the multiparametric case, one gets:

Similar with one parameter case, the relative sensitivities w.r.t. each parameter are denoted by \(\frac{\partial y} {\partial \alpha _{k}}(\boldsymbol{\alpha }_{0})\frac{\alpha _{0k}} {y_{0}} = S_{\alpha _{k}}^{y}\) and the relative variations of the parameters by δ α k = (α k −α 0k )∕α 0k , the additive model (A) for n parameters being given by:

Thus, each new independent parameter taken into account adds a new term to the sum [52]. The additive model is simply a normalized standard version of a linearly truncated Taylor expansion.

Instead of using this truncated expansion may be numerically favorable to expand some transformation F(y) of y instead. Two particular choices for F have practical importance: identity and inversion as it will be indicated below.

2.6.2 Rational Model (R)

The rational model is the additive model for the reverse quantity 1∕y. It is obtained from the first order truncation of the Taylor Series expansion for the function 1∕y. For n = 1, if we denote by \(r(\alpha ) = \frac{1} {y(\alpha )}\), it follows that:

We define the relative first order sensitivity of the reverse circuit function:

Consequently, we obtain the rational model for n = 1:

It can be easily shown that the reverse relative sensitivity is \(S_{\alpha }^{\frac{1} {y} } = -S_{\alpha }^{y}\). For the multiple parameter case, the rational model is:

If the circuit function y is for instance the admittance, its inverse 1∕y is the impedance. In the time domain, these two transfer functions correspond to a device excited in voltage or in current, respectively. Consequently, the choice between additive and rational models for the variability analysis of the circuit functions in frequency domain can be interpreted as a change in the terminal excitation mode in the time domain state representation. Choosing the appropriate terminal excitation, the validity range of the parametric model based on first order Taylor series approximation can be dramatically extended.

2.7 Parametric Circuit Synthesis

We have shown in [48] that one of the most efficient order reduction method for the class of problems we address is the Vector Fitting (VFIT) method proposed in [47], improved in [43, 46] and available at [61]. It finds the transfer function matching a given frequency characteristic. Thus, in the frequency domain, for the output quantity \(\boldsymbol{y}(s)\), this procedure finds the poles p m (real or complex conjugate pairs), the residuals \(\boldsymbol{k}_{m}\) and the constant terms \(\boldsymbol{k}_{0}\) and \(\boldsymbol{k}_{\infty }\) of a rational approximation of the output quantity (e.g an admittance):

The resulting approximation has guaranteed stable poles and the passivity can be enforced in a post-processing step [43]. The transfer function (5.82) can be synthesized by using the Differential Equation Macromodel (DEM) [57]. Our aim is to extend DEM to take into consideration the parameterization.

To simplify the explanations, we assume a single input single output system, excited in voltage. It follows that the output current is given by (5.83), where x m (s) is a new variable defined by (5.84).

By applying the inverse Laplace transformation to (5.83) and (5.84), relationships (5.85) and (5.86) are obtained:

If we use the following matrix notations

then equations of the system (5.85), (5.86) can be written in a compact form as

2.7.1 Case of Real Poles

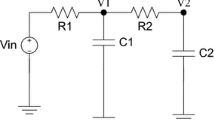

In the case in which all poles (and consequently, all the residuals) are real, Eq. (5.85) can be synthesized by the circuit shown in Fig. 5.19 which consists of a capacitor having the capacitance k 0, in parallel with a resistor having the conductance k ∞ , in parallel with q voltage controlled current sources, their parameters being the residuals k m .

Equation (5.86) can be synthesized by the circuit in Fig. 5.20, where x m is the voltage across a unity capacitor, connected in parallel with a resistor having the conductance − p m and a voltage controlled current source, controlled by the input voltage u.

We would like to include the parametric dependence into the VFIT model and in the synthesized circuit. To keep the explanations simple, we assume that there is only one parameter that varies, i.e. the quantity α is a scalar. Assuming that keeping the order q is satisfactory for the whole range of the variation of this parameter, this means that (5.82) can be parameterized as:

Without loss of generality, we can assume that the additive model is more accurate than the rational one. If not, the reverse quantity is used, which is equivalent, for our class of problems, to change the excitation of terminals from voltage excited to current excited, and use an additive model for the impedance \(\boldsymbol{z} =\boldsymbol{ y}^{-1}\). The additive model (5.74) can be written as

where here \(\boldsymbol{y}\) is a matrix function. By combining (5.91) and (5.92) we obtain an approximate additive model based on VFIT:

From (5.91) it follows that the sensitivity of the VFIT approximation needed in (5.93) is

The sensitivity \(\partial \boldsymbol{y}/\partial \alpha\) can be evaluated with (5.62) for as many frequencies as required and thus the sensitivities of poles and residues in (5.94) can be computed by solving the linear system (5.94) by least square approximation. Finally, by substituting (5.94) and (5.91) in (5.93), the final parameterized and frequency dependent model is obtained:

Expression (5.95) has the advantage that it has an explicit dependence with respect both to the frequency s = j ω and parameter α, is easy to implement and feasible to be synthesized as a net-list having components with dependent parameters, as explained below.

If we denote by

where k ∗ = k ∗(α 0) then Eq. (5.95) can be written as

where

The output current is thus

where the first term can be synthesized with a circuit similar to the one in Fig. 5.19 but where the k ∗ parameters depend on α, and the second term

adds q new parallel branches to the circuit (Fig. 5.21). It is useful to write (5.101) as

The part that depends on s in (5.102) can be synthesized by a second order circuit, such as the one in Fig. 5.22.

The current through the coil is

To obtain the expression in (5.102) it is necessary that LC = 1∕p m 2, LG = −2∕p m , for instance, we can chose G = −p m , L = 2∕p m 2, C = 1∕2. Thus, the parameterized circuit is given by the sub-circuits in Figs. 5.21, 5.20 and 5.23. The circuit that corresponds to the output equations has new branches with current controlled current sources. Only this sub-circuit contained parameterized components.

Another possibility to derive a parameterized circuit is to do as follows. In (5.101) we denote by

and by

Relationships (5.104) and (5.105) are equivalent to

which correspond in the time domain to

Equations (5.108) and (5.109) can be synthesized with the subcircuit shown in Fig. 5.24. In this case the circuit that corresponds to the output equation is the one in Fig. 5.25. In brief, the parameterized reduced order circuit can be either the one in Figs. 5.21, 5.20 and 5.23 or in Figs. 5.25, 5.20 and 5.24. In both approaches only the circuit that corresponds to the output equation is parameterized. The second approach has the advantage that can be generalized for a transfer function having complex poles as well.

2.7.2 Case of Complex Poles

2.7.2.1 Nominal Differential Equation Macromodel

If some of the q poles are complex, then they appear in conjugated pairs since they are the roots of the characteristic equation corresponding to a real matrix. We assume for the beginning that the transfer function has only one pair of complex conjugate poles: p = a +jb and p ∗ = a −jb. In this case the transfer function is

The numerator can be a real polynomial in s only if k 1 and k 2 are complex conjugated residues: k 1 = c +jd, k 2 = c −jd. In this case, the matrices in (5.87) are

In order to obtain a real coefficient equation, a matrix transformation is introduced. The system (5.89) becomes

where

Let

The transformation \(\boldsymbol{\hat{A}} =\boldsymbol{ V }\boldsymbol{A}\boldsymbol{V }^{-1}\) is a similarity transformation, preserving the eigenvalues of the matrix and thus the characteristic polynomial of the system.

The two equations corresponding to the complex conjugated pair of poles

become after applying the similarity transformation

If there are several pairs of complex conjugated poles, Eq. (5.118) will be true for any of these pairs and, by renaming p → p m , \(\hat{x}_{1} \rightarrow \hat{ x}_{m}^{{\prime}}\), \(\hat{x}_{2} \rightarrow \hat{ x}_{m}^{{\prime\prime}}\), a → a m , b → b m , the synthesized circuit is shown in Fig. 5.26.

In general, if the system has q poles out of which q r are real and q c = (q − q r )∕2 are pairs of complex conjugate poles, then the synthesis will be done as follows: for each real pole m = 1, …, q r , let k m be the residue corresponding to the pole; for each pair of complex conjugate poles m = 1, …, q c let the pole be p m ′ = a m + jb m , with the corresponding residue k m ′ = c m + jd m . An equivalent circuit for the output equation is shown in Fig. 5.27. It consists of the following elements connected in parallel:

-

A capacitance k 0;

-

A conductance k ∞ ,

-

q r voltage controlled current sources (having the parameter k m , controlled by the voltages x m ),

-

q c voltage controlled current sources (having the parameter \(-\sqrt{2}c_{m}\), controlled by the voltages \(\hat{x}_{m}^{{\prime}}\))

-

q c voltage controlled current sources (having the parameter \(\sqrt{2}d_{m}\), controlled by the voltages \(\hat{x}_{m}^{{\prime\prime}}\)).

The voltages x m are defined on the q r subcircuits that correspond to real poles (Fig. 5.20) and the voltages \(\hat{x}_{m}^{{\prime}}\), \(\hat{x}_{m}^{{\prime\prime}}\) are defined on the q c subcircuits that correspond to the pair of complex conjugate poles (Fig. 5.26).

2.7.2.2 Parametric DEM

To derive the parametric circuit in the case of complex poles, we could proceed as we did in the first approach for real poles. This would conduce to a transfer function of order 4, which is not obvious how it can be synthesized. The second approach can be extended to the case of complex poles, as follows.

Let’s consider Eqs. (5.108) and (5.109) written for a pair of complex conjugate poles p 1 = a +jb, p 2 = a −jb:

By using the matrix notations

it follows that (5.119) ÷ (5.122) can be written in a compact form as

and by applying the similarity transformation described in the previous section it follows that

where \(\boldsymbol{V }\boldsymbol{A}\boldsymbol{V }^{-1}\) is given by (5.115). It is straightforward to derive that

Thus, the Eqs. (5.124) and (5.125) corresponding to the two complex-conjugated poles become after applying the similarity transformation

If there are several pairs of complex conjugated poles, equations above will be true for any of these pairs and, by renaming p → p m , \(\hat{g}_{1} \rightarrow \hat{ g}_{m}^{{\prime}}\), \(\hat{g}_{2} \rightarrow \hat{ g}_{m}^{{\prime\prime}}\), \(\hat{f}_{1} \rightarrow \hat{ f}_{m}^{{\prime}}\), \(\hat{f}_{2} \rightarrow \hat{ f}_{m}^{{\prime\prime}}\), \(a \rightarrow a_{m}\), b → b m , the synthesized circuit is shown in Fig. 5.28.

The new terms added in the output equations are

In general, if the system has q poles out of which q r are real and q c = (q − q r )∕2 are pairs of complex conjugate poles, then the parametric synthesis will be done as follows: for each real pole m = 1, …, q r , let k m be the residue corresponding to the pole; for each pair of complex conjugate poles m = 1, …, q c let the pole be p m ′ = a m + jb m , with the corresponding residue k m ′ = c m + jd m . The equivalent circuit for the parametric output equation is shown in Fig. 5.29. It consists of the following elements connected in parallel:

-

A parameterized capacitance k 0(α) = k 0 + (α −α 0)∂ k 0∕∂ α,

-

A parameterized conductance k ∞ (α) = k ∞ + (α −α 0)∂ k ∞ ∕∂ α,

-

q r voltage controlled current sources (having as parameter the parameterized value k m (α) = k m + (α −α 0)∂ k m ∕∂ α, controlled by the voltages x m ),

-

q c voltage controlled current sources (having as parameter the parameterized value \(-\sqrt{2}c_{m}(\alpha )\), controlled by the voltages \(\hat{x}_{m}^{{\prime}}\)),

-

q c voltage controlled current sources (having the parameter \(\sqrt{2}d_{m}(\alpha )\), controlled by the voltages \(\hat{x}_{m}^{{\prime\prime}}\)),

-

q r voltage controlled voltage sources (having the parameter (α −α 0)k m , controlled by the voltages f m ,

-

q c voltage controlled current sources (having the parameter \(-\sqrt{2}c_{m}(\alpha -\alpha _{0})\), controlled by the voltages \(\hat{f}_{m}^{{\prime}}\)),

-

q c voltage controlled current sources (having the parameter \(\sqrt{2}d_{m}(\alpha -\alpha _{0})\), controlled by the voltages \(\hat{f}_{m}^{{\prime\prime}}\)).

The voltages x m are defined on the q r subcircuits that correspond to real poles (Fig. 5.20), the voltages \(\hat{x}_{m}^{{\prime}}\), \(\hat{x}_{m}^{{\prime\prime}}\) are defined on the q c subcircuits that correspond to the pair of complex conjugate poles (Fig. 5.26), the voltages f m are defined on the q r subcircuits that correspond to real poles (Fig. 5.24), the voltages \(\hat{f}_{m}^{{\prime}}\) and \(\hat{f}_{m}^{{\prime\prime}}\) are defined on the q c subcircuits that correspond to the complex poles (Fig. 5.28).

2.8 Case Study