Abstract

The use of precomputed data to speed up a cryptographic protocol is commonplace. For instance, the owner of a public point \(P\) on an elliptic curve can precompute various points of the form \([2^k]P\) and transmit them together with \(P\). One inconvenience of this approach though may be the amount of information that needs to be exchanged. In the situation where the bandwidth of the transmissions is limited, this idea can become impractical. Instead, we introduce a new scheme that needs only one extra bit of information in order to efficiently and fully determine a point of the form \([2^k]P\) on a binary elliptic curve. It relies on the x-doubling operation, which allows to compute the point \([2^k]P\) at a lower cost than with \(k\) regular doublings. As we trade off regular doublings for \(x\)-doublings, we use multi-scalar multiplication techniques, such as the Joint Sparse Form or interleaving with NAFs. This idea gives rise to several methods, which are faster than Montgomery’s method in characteristic \(2\). A software implementation shows that our method called \(\text {x-JSF}_2\) induces a speed-up between 4 and 18 % for finite fields \({\mathbb {F}}_{2^d}\) with \(d\) between \(233\) and \(571\). We also generalize to characteristic \(2\) the scheme of Dahmen et al. in order to precompute all odd points [3]\(P\), [5]\(P, \dots , [2t - 1]P\) in affine coordinates at the cost of a single inversion and some extra field multiplications. We use this scheme with \(x\)-doublings as well as with the window NAF method in López–Dahab coordinates.

This work was partially supported by ARC Discovery grant DP110100628.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

We refer readers to [28] for Sutantyo, Daniel a general introduction to elliptic curves. An ordinary elliptic curve \(E\) defined over the finite field \({\mathbb {F}}_{2^d}\) can always be written with an equation of the form

This is an example of a curve in Weierstraß form. A point \(P=(x_1,y_1)\) satisfying (1) is an affine point and the set of rational points of \(E\), denoted by \(E({\mathbb {F}}_{2^d})\), corresponds to

where \(P_\infty \) is a special point called the point at infinity. The set \(E({\mathbb {F}}_{2^d})\) can be endowed with an abelian group structure under a point addition operation, denoted by \(+\), with \(P_\infty \) as the identity element. Naturally, this addition leads to the scalar multiplication

for an integer \(n \geqslant 1\) and a point \(P\in E({\mathbb {F}}_{2^d})\). Given \(n\) and \(P\), there are very efficient techniques to compute \([n]P\), see Sect. 2.3. But the converse problem, known as the Elliptic Curve Discrete Logarithm Problem (ECDLP), appears to be intractable for a well chosen elliptic curve defined over \({\mathbb {F}}_{2^d}\) with \(d\) prime. Therefore the security of many protocols in elliptic curve cryptography relies on the hardness of the ECDLP. This makes scalar multiplication the most ubiquitous operation in any elliptic curve based protocols. See [3, 5, 6, 14] for further discussions specific to the use of elliptic curves in cryptography.

2 State of the Art

2.1 Affine and López–Dahab Coordinates

Consider the elliptic curve \(E\) as in (1) and the point \(P = (x_1,y_1) \in E({\mathbb {F}}_{2^d})\). The double \(P_2 = [2]P\) with coordinates \((x_2, y_2)\) can be obtained with one inversion, two multiplications and one squaring. We abbreviate this as \(\text {I}+ 2\text {M}+ \text {S}.\) There are similar formulas to compute the addition of two points at the cost of \(\text {I}+ 2\text {M}+ \text {S}\) as well. In \({\mathbb {F}}_{2^d}\), represented by a polynomial basis, a squaring involves only a reduction. Thus it costs much less than a generic multiplication. Computing the inverse of an element \(\alpha \in {\mathbb {F}}_{2^d}\) is more complex. We rely usually on the Extended Euclidean \(\gcd \) algorithm or on the relation \(\alpha ^{-1}= \alpha ^{2^d -2}\). In software, an inversion can be cheaper than \(10\text {M}\), whereas the same operation can be extremely time consuming on platforms such as embedded devices. This explains why an alternate representation of \(E\) in projective coordinates is often considered. The equation

is an homogenized version of the equation \(E\) where the point \(P\) is represented in projective-like López–Dahab (LD) coordinates [17] by the triple \((X_1: Y_1: Z_1)\). When \(Z_1 = 0\), the point \(P\) is the point at infinity whereas for \(Z_1 \ne 0\), \(P\) corresponds to the affine point \((X_1/Z_1, Y_1/Z_1^2)\). The complexity of a doubling in LD coordinates is \(3\text {M}+ 1\times a_6 + 5\text {S}\), including one multiplication by the fixed element \(a_6\).

The addition of two points requires \(13\text {M}+ 4\text {S}\) in general, but only \(8\text {M}+ 5\text {S}\) in case at least one of the points is in affine coordinates, or in other words its \(Z\)-coordinate is equal to \(1\). This operation, that is very useful in practice, is referred to as a mixed addition [8]. See [4] for all the corresponding formulas.

2.2 Decompression Techniques in LD Coordinates

In the following, we show how the \(x\)-coordinate of a point together with one extra bit is enough to fully recover the point in affine coordinates. Indeed, let us consider a point \(P\) that is not the point at infinity nor a point of order \(2\) and which is represented by \((X_1:Y_1:Z_1)\) in LD coordinates. Assuming that we ignore \(Y_1\) but know \(X_1\) and \(Z_1\) as well as the last bit of \(Y_1/(X_1Z_1)\), let us see how to determine the affine coordinates \(x_1\) and \(y_1\) of \(P\). Before we start, note that \(Y_1/(X_1Z_1)\) is equal to \(y_1/x_1\), so it is independent of the choice of the \(Z\)-coordinate of \(P\) in LD format.

Since \(P\) is on (2), it follows that \(Y_1\) is a root of the quadratic equation

The other root is \(Y_1 + X_1Z_1\). The two solutions correspond to the points \(P\) and \(-P\). Because of the assumption on \(P\), we have \(X_1Z_1\ne 0\) so the change of variable \(U = T\alpha \) where \(\alpha = 1/(X_1Z_1)\) is valid and leads to the new equation

with \(\beta = \alpha X_1^2 + a_2 + \alpha ^2a_6Z_1^4.\) To solve this equation let us introduce the half-trace function \({H}\) defined by

When \(d\) is odd, it is well known that the solutions of Eq. (3) are \(H(\beta )\) and \(H(\beta ) + 1\).

Clearly, we have \(x_1 = \alpha X_1^2\). Now, let us explain how to find the correct value of \(y_1\). It is easy to see that the solutions of (3) correspond to \(y_1/x_1\) and \(y_1/x_1 + 1\). So we can use the least significant bit of a root to identify the correct \(y_1\). Let \(b\) be the last bit of \(y_1/x_1\), then if the last bit of \(H(\beta )\) is equal to \(b\), set \(y_1 = H(\beta ) x_1\), otherwise \(y_1 = (H(\beta ) + 1) x_1\). The public point \(P\) can therefore be represented as \((x_1, b)\) and we can fully determine \(P\) in affine coordinates with \(\text {I}+ \text {H}+ 4\text {M}+ 1\times \sqrt{a_6}+ 2\text {S}\), where \(\text {H}\) is the complexity to evaluate the half-trace function.

As the half-trace function is linear, its computation can be sped up significantly provided that there is enough memory to store some precomputed values. With those enhancements, we have in general \(\text {H}\sim \text {M}\). See [2, 14, 15] for details.

Next, we review how to compute \([n]P\). Note that throughout the paper the coordinates of \([n]P\) are denoted by \((x_n, y_n)\) or \((X_n:Y_n:Z_n)\).

2.3 Classical Scalar Multiplication Techniques

The simplest, yet efficient, way to perform a scalar multiplication \([n]P\) is the double and add method, which is a straightforward adaptation of the square and multiply algorithm used to compute an exponentiation. Given the binary representation of \(n\), denoted by \((n_{\ell -1}\dots n_0)_2\), a doubling is performed at each step followed by an addition if \(n_i = 1\). The double and add method is therefore intrinsically linked to the binary representation of \(n\). This is no surprise as the method used to perform the scalar multiplication \([n]P\) is often related to the representation of the integer \(n\). Other choices are available to represent \(n\), for instance in base \(2^k\) for a fixed parameter \(k\), or signed digits. A signed-digit expansion for an integer \(n\) is of the form

where \(S\) is a finite set of acceptable coefficients. This is particularly interesting as a negative coefficient \(-c\) in the representation of \(n\) induces the use of the point \(-[c]P\) in the computation of \([n]P\). Note that \(-[c]P\) can be obtained virtually for free from the point \([c]P\) in affine coordinates. The Non-Adjacent Form (NAF) [20, 21] is especially relevant as the density of the expansion, i.e. the number of nonzero terms divided by the total length is equal to \(\frac{1}{3}\) on average. Also for a given \(n\), the NAF has the lowest density among all signed-digit expansions of \(n\) with coefficients in \(\{-1,0,1\}\). A generalization of the NAF, called window NAF of size w [18, 23, 27] and denoted by \(\text {NAF}_w\) achieves an average density equal to \(\frac{1}{w+1}\) for the set \(S\) of digits containing \(0\) and all the odd integers strictly less than \(2^{w-1}\) in absolute value. For maximal efficiency, the points \([c]P\) are precomputed in affine coordinates for all the positive \(c\) in \(S\). See [11] or [14] for more details on NAF and window NAF expansions.

It is often required, for instance during the verification phase of the ECDSA signature protocol, to perform a double-scalar multiplication of the form \([n_1]Q_1 + [n_0]Q_0\). Instead of computing each scalar multiplication separately, it is more efficient to combine the binary expansions of \(n_1\) and \(n_0\) in

Mimicking the double and add method, we process a sequence of joint-bits, instead of a sequence of bits. At each step, a doubling is then followed by an addition of \(Q_1\), \(Q_0\), or \(Q_1+Q_0\) depending on the value of the joint-bit that is considered, i.e. \(\left( {\begin{array}{c}1\\ 0\end{array}}\right) \), \(\left( {\begin{array}{c}0\\ 1\end{array}}\right) \), or \(\left( {\begin{array}{c}1\\ 1\end{array}}\right) \). This idea, often attributed to Shamir is in fact a special case of an idea of Straus [26]. Mixing this idea with signed-digit representations gives birth to the Joint Sparse Form (JSF) [24] that can be seen as a generalization of the NAF for double-scalar multiplication. Indeed, the joint-density of the JSF is equal to \(\frac{1}{2}\) on average and is optimal across all the joint signed-digit representations of \(n_1\) and \(n_0\). Note that the points \(Q_1\), \(Q_0\), \(Q_1+Q_0\), and \(Q_1-Q_0\) must be precomputed in affine coordinates for maximal efficiency. The JSF method is the standard way for computing a double-scalar multiplication when both points are not known in advance or when the amount of memory available on the device performing the computation is limited and does not allow the use of more precomputed values. Otherwise, interleaving with NAFs [14, Algorithm 3.51] gives excellent results. The principle of this approach is simply to form

where \((v_{k-1} \dots v_0)\) and \((u_{k-1} \dots u_{0})\) are the window NAF expansions of \(n_1\) and \(n_0\), possibly padded with a few zeroes at the beginning. Note that we precompute only the points \([3]Q_i\), \([5]Q_i, \dots , [2^w-1]Q_i\) for \(i = 0\) and \(1\) and not all the combinations \([2s+1]Q_1 \pm [2t+1]Q_0\) as this option is too costly in most situations. It remains to compute all the doublings together and then perform at most two mixed additions at each step. Obviously, it is easy to generalize this idea to efficiently compute the \(k+1\) scalar multiplications \([n_k]Q_k + \cdots + [n_0]Q_0\) simultaneously provided that the points \([3]Q_i\), \([5]Q_i\), ..., \([2^w-1]Q_i\), for \(i =0, \dots , k\) are all precomputed.

2.4 Fixed Point Scalar Multiplication Using Precomputations

There are faster scalar multiplications techniques when the point \(P\) is known in advance and when some precomputations are available. For instance, we could considerably reduce the number of doublings, if not totally avoid them, by considering precomputed points of the form \([2^{ki}]P\) for a fixed \(k\). Three methods, namely the Euclidean, Yao, and fixed-base comb, make use of this space-time trade-off to greatly reduce the complexity of a scalar multiplication. See [11, Sect. 9.3] for a presentation of those different methods.

If we consider a specific protocol such as ECDSA, Antipa et al. [1] show how to speed up the signature verification process by introducing the precomputed multiple \([2^k]P\) of the public point \(P\), with \(k = {\lceil \ell /2\rceil }\). Tests show that the speed-up is significant, more than 35 % of the time saved, but this approach shares a drawback with the techniques discussed so far: it requires to transmit an important amount of additional data on top of the public point \(P\).

When exchanging large volume of data is not practical, for instance because the bandwidth of the network is limited, the methods described in this part do not apply, even if the point \(P\) is known in advance. Instead, Montgomery’s method is perfectly suited, as it allows to perform arithmetic on an elliptic curve using only the \(x\)-coordinate of \(P\).

2.5 Montgomery’s Method

Montgomery developed an efficient arithmetic for special elliptic curves defined over a prime field [19]. Ultimately, it relies on the possibility to derive the \(x\)-coordinate of \(P+Q\) from the \(x\)-coordinates of \(P\), \(Q\), and \(P-Q\). This approach has been generalized to binary curves by López and Dahab [16]. Indeed, starting from \(P\) in LD coordinates and assuming that we know at each step the \(X\) and \(Z\) coordinates of \([u]P\) and of \([u+1]P\), we can determine the \(X\) and \(Z\) coordinates of \([2u]P\) and \([2u+1]P\). See [16, 25] for the actual formulas. The full scalar multiplication \([n]P\) can then be computed with Montgomery’s ladder, which requires an addition and a doubling at each step. If the square root of \(a_6\) is precomputed, the complexity of the scheme is \((5\text {M}+ 1\times \sqrt{a_6}+4\text {S})\) per bit of the scalar.

3 Applications of \(x\)-doublings to Scalar Multiplication

We have just seen that the \(x\)-coordinate of \([2]P\) only depends on the \(x\)-coordinate of the point \(P\) itself. Namely, if \(P = (X_1:Y_1:Z_1)\), we have \([2]P\) given by \(X_2 = X_1^4 + a_6Z_1^4 = (X_1^2 + \sqrt{a_6} Z_1^2)^2\) and \(Z_2 = X_1^2Z_1^2\). In the following, we refer to this operation as an x-doubling. We can compute an \(x\)-doubling with \(\text {M}+ 1\times \sqrt{a_6} + 3\text {S}\). This means that an \(x\)-doubling saves \(2\text {M}+ 2\text {S}\) compared to a regular doubling in LD coordinates. A doubling in LD coordinates costs \(3\text {M}+ 1\times a_6 + 5\text {S}\) in the same conditions. From now on, we assume that \(\sqrt{a_6}\) is precomputed and does not enjoy any special property so that \(1\times \sqrt{a_6} = 1\text {M}\).

We propose to speed up the scalar multiplication \([n]P\) by replacing some regular LD doublings with \(x\)-doublings. To take full advantage of this operation, our idea is to determine the \(x\)-coordinate of \([2^k]P\) using \(k\) successive \(x\)-doublings and then recover the \(y\)-coordinate using decompression techniques and one extra known bit of information.

3.1 Double Scalar Multiplication and \(x\)-Doublings

Let \(\ell \) be the bit size of the order of a point \(P\) and \(k = \lfloor \ell /2 \rfloor \). Let \((x_1, b)\) be the public information corresponding to the point \(P\), as explained in Sect. 2.2. We assume that the owner of the point \(P\) has also precomputed \(b_k\), i.e. the last bit of \(y_{2^k}/x_{2^k}\), and made it public.

Let \(n\) be an integer of size \(\ell \) bits. It is clear that \([n]P = [n_1]Q_1 + [n_0]Q_0\) where \(n = 2^k n_1 + n_0\), \(Q_1 = [2^k]P\), and \(Q_0 = P\). The \(X\) and \(Z\) coordinates of \(Q_1\) can be obtained with \(k\) straight \(x\)-doublings at a cost of \((2\text {M}+ 3\text {S})k\). Then we can recover the \(y\)-coordinates of \(Q_0\) and \(Q_1\) using \(b\) and \(b_k\) with decompression techniques. For that, we compute \(\beta = \alpha x_1^2 +a_2 + a_6\alpha ^2\) and \(\beta _k = \alpha _k X_{2^k}^2 + a_2 + a_6\alpha _k^2Z_k^4\) where \(\alpha = 1/x_1\) and \(\alpha _k = 1/(X_{2^k}Z_{2^k})\). Montgomery’s trick [19] allows to obtain \(\alpha \) and \(\alpha _k\) with only one inversion and three extra multiplications. Then we compute the half-trace of \(\beta \) and \(\beta _k\) and identify the correct root with the bits \(b\) and \(b_k\). Assuming that \(a_6\) is a random element of \({\mathbb {F}}_{2^d}\) and that \(\sqrt{a_6}\) has been precomputed, the complexity to determine \(Q_0\) and \(Q_1\) in affine coordinates is \(\text {I}+ 2\text {H}+ 12\text {M}+ 4\text {S}\). This approach can easily be generalized to retrieve an arbitrary number of points. It then takes \(\text {I}+ t\text {H}+ (7t - 2)\text {M}+ 2t\text {S}\) to fully determine \(Q_0\), \(Q_1\), ..., \(Q_{t-1}\) in affine coordinates once the \(X\) and \(Z\) coordinates of those points have been obtained using \(x\)-doublings.

Now that we have \(Q_0\) and \(Q_1\) in affine coordinates, we can compute \([n]P = [n_1]Q_1 + [n_0]Q_0\) with any standard double scalar multiplication technique, for instance the JSF, see Sect. 2.3. In this case, the complexity is approximately \(\ell /2\) \(x\)-doublings, \(\ell /2\) regular doublings, and \(\ell /4\) mixed additions using the four points \(Q_0\), \(Q_1\), \(Q_0+Q_1\), and \(Q_0-Q_1\). Note that \(Q_0+Q_1\) and \(Q_0-Q_1\) should be computed simultaneously with \(\text {I}+ 4\text {M}+ 2\text {S}\).

This new approach, called \(x\)-JSF, compares favorably against the window \(\text {NAF}_w\) method in LD coordinates and Montgomery’s approach. Both are very popular methods in practice for a wide range of extension degrees when only the \(x\)-coordinate of the point \(P\) is transmitted. The \(x\)-JSF requires essentially \(5\text {M}+ 5.25\text {S}\) per bit compared to \(6\text {M}+ 4\text {S}\) for Montgomery’s method. Adding the complexities of all the steps involved, including the cost of precomputations, and assuming that \(\text {H}= \text {M}\), we obtain the following result.

Proposition 1

Let \(n\) be an integer of binary length \(\ell \). The average complexity of the \(x\)-JSF method to compute the scalar multiplication \([n]P\) is

Proof

Starting from the \(x\)-coordinate of \(P\), we do \(\frac{\ell }{2}\) consecutive \(x\)-doublings with \(\bigl (\text {M}+\textstyle {\frac{3}{2}}\text {S}\bigr )\ell \). We need \(\text {I}+ 12\text {M}+ 4\text {S}\) to form the two quadratic equations and \(2\text {M}\) to solve them in order to obtain \(P\) and \([2^{\lfloor \ell /2\rfloor }]P\) in affine coordinates. We then need \(\text {I}+ 4\text {M}+ 2\text {S}\) to compute the sum and difference of those two points in affine coordinates again. Then, we perform \(\frac{\ell }{2}\) regular doublings, with \(\bigl (2\text {M}+\textstyle {\frac{5}{2}}\text {S}\bigr )\ell \), and \(\frac{\ell }{4}\) mixed additions on average, with \(\bigl (2\text {M}+\textstyle {\frac{5}{4}}\text {S}\bigr )\ell \). Finally, we express the point in affine coordinates with \(\text {I}+ 2\text {M}+ \text {S}\).

3.2 Trading Off More Doublings for \(x\)-Doublings

Previously, we explained how to replace approximately \(50\,\%\) of normal doublings by \(x\)-doublings. Since an \(x\)-doubling is significantly cheaper than a doubling, it is natural to try to increase this ratio, i.e. replace more doublings with \(x\)-doublings. A simple idea is for instance to work with three shares instead of two, i.e. fix \(k = \lfloor \ell /3 \rfloor \) and write \(n = 2^{2k} n_2 + 2^k n_1 + n_0\). If we fix \(Q_0 = P\), \(Q_1 = [2^{k}]P \) and \(Q_2 = [2^{2k}]P\), we see that \([n]P = [n_2]Q_2 + [n_1]Q_1 + [n_0]Q_0\). We denote by \(w\)-\(w\)-\(w\) the generalization of the interleaving with NAFs method, where the \(\text {NAF}_w\) expansions of \(n_2\), \(n_1\), and \(n_0\) are stacked together as follows

and processed from left to right using Straus’ idea, see Sect. 2.3. The only points that we precompute are \([3]Q_i\), \([5]Q_i\),..., \([2^{w-1} - 1]Q_i\), for \(i \in [0,2].\)

Proposition 2

Let \(n\) be an integer of binary length \(\ell \). The average complexity of the \(w\)-\(w\)-\(w\) interleaving with NAFs method to compute the scalar multiplication \([n]P\) with \(w > 3\) is

For \(w = 3\), the complexity is simply

Proof

The proof is similar to Proposition 1. It is clear that we need \(2\frac{\ell }{3}\) successive \(x\)-doublings and \(\frac{\ell }{3}\) normal doublings, plus a certain number of additions, which depends on the size of the window \(w\). The exact number can be derived via some probabilistic analysis. Given that the density of the \(\text {NAF}_w\) is \(\frac{1}{w+1},\) we may assume that the probability for a coefficient in a long expansion to be nonzero is \(\frac{1}{w+1}.\) It follows that the \(w\)-\(w\)-\(w\) interleaving method requires \(\frac{\ell }{w+1}\) mixed additions on average. To determine the precomputations, we could compute \([2]Q_0\), \([2]Q_1\), and \([2]Q_2\) in LD coordinates, make those three points affine simultaneously, before performing \(3(2^{w-2} - 1)\) LD mixed additions, and converting all the resulting points to affine simultaneously again. This approach only needs two inversions. Instead, in our implementation as well as in this analysis, we perform all the computations directly in affine coordinates for simplicity. The formula follows by adding all the different contributions.

The complexity is very low, but in practice the main drawback of this approach is the number of precomputations, which grows as \(3\times 2^{w-2}\). Another method along those lines, called \(x-\mathrm{JSF_{2}}\), requires less storage and involves splitting \(n\) in four shares as \(2^{3k}n_3 + 2^{2k}n_2 + 2^{k}n_1 + n_0\) with \(k = \lfloor \ell /4 \rfloor \). The points \(Q_3 = [2^{3k}]P\), \(Q_2 = [2^{2k}]P\), \(Q_1 = [2^{k}]P\), \(Q_0 = P\) are determined after \(3\frac{\ell }{4}\) straight \(x\)-doublings. Four extra bits of information are necessary to fully recover the points. Then we compute the JSF expansions of \(n_3\) and \(n_2\) and of \(n_1\) and \(n_0\), together with the precomputed affine points \(Q_3\pm Q_2\), and \(Q_1\pm Q_0\). We then need \(\frac{\ell }{4}\) regular LD doublings as well as \(\frac{\ell }{4}\) mixed additions. The following result follows immediately.

Proposition 3

Let \(n\) be an integer of binary length \(\ell \). The average complexity of the \(\text {x-JSF}_2\) method to compute the scalar multiplication \([n]P\) is

Proof

We perform \(3\frac{\ell }{4}\) \(x\)-doublings, then need \(\text {I}+ 30\text {M}+ 8\text {S}\) to recover the four points \(Q_0\), \(Q_1\), \(Q_2\), and \(Q_3\) in affine coordinates. We need \(2(\text {I}+ 4\text {M}+ 2\text {S})\) to compute \(Q_3\pm Q_2\) and \(Q_1\pm Q_0\). Then, we perform \(\frac{\ell }{4}\) regular doublings and \(\frac{\ell }{4}\) mixed additions on average. Finally, we express the point in affine coordinates with \(\text {I}+ 2\text {M}+ \text {S}\).

Tests show that the \(\text {x-JSF}_2\) achieves a speed-up close to 18 % over the fastest known method in \(\mathbb {F}_{2^{571}}\), i.e. Montgomery’s method. See Sect. 5 for details.

3.3 Trading Off Even More Doublings for \(x\)-Doublings

In some sense, the \(\text {x-JSF}_2\) relies on interleaving with JSFs. Generalizing this idea, the \(\text {x-JSF}_t\) uses \(2^t\) shares, each of size \(\ell /{2^t}\) bits, and needs \(\frac{2^t - 1}{2^t}\) \(x\)-doublings and \(\frac{1}{2^t}\) regular doublings. Arranging the scalars two by two, and computing their corresponding JSF expansions, we see that \(\frac{\ell }{4}\) mixed additions are necessary on average, provided that we precompute \(2^{t-1}\) pairs of points of the form \(Q_{2i+1}{\pm } Q_{2i}\).

To further reduce the number of regular doublings without using precomputations, we turn our attention to a method first described by de Rooij, but credited to Bos and Coster [10]. As previously, write \(n\) in base \(2^k\), for a well chosen \(k\). It follows that \([n]P = [n_{t-1}]Q_{t-1} + \cdots + [n_0]Q_0,\) where \(Q_i = [2^{ki}]P\).

The main idea of the Bos–Coster method is to sort the shares in decreasing order according to their coefficients and to recursively apply the relation

where \(n_2 > n_1\) and \(q = \lfloor n_2/n_1 \rfloor \). The process stops when there is only one nonzero scalar remaining. Because the coefficients are roughly of the same size throughout the process, we have \(q=1\) at each step almost all the time. This implies that \([n]P\) can be computed almost exclusively with additions once the shares \(Q_i\)’s are obtained via \(x\)-doublings.

Clearly, this approach requires \(k(t-1)\) successive \(x\)-doublings and \(t\) point reconstructions. The precise number of additions involved is much harder to analyze but can be approximated by \(\frac{\ell }{\log k}\) for reasonable values of \(k\). So, with the Bos–Coster method, we have replaced almost all the doublings by \(x\)-doublings, but one detail plays against us. Most of the additions that we need are full additions and not mixed additions as it was the case for the \(x\)-JSF and interleaving with NAFs. Indeed, even if the different points \(Q_{0}, \dots , Q_{t-1}\) are initially expressed in affine coordinates, then after a few steps of the algorithm, it is no longer the case and subsequent additions need to be performed in full. Those full additions are too expensive to make this scheme competitive with Montgomery’s method.

Next, we investigate Yao’s method [29]. As for the previous approach, we express \(n\) in base \(2^k\) as \((n_{t-1} \dots n_0)_{2^k}\) and we consider the points \(Q_i = [2^{ki}]P\), for \(i = 0\), ..., \(t-1\), obtained with \(x\)-doublings only. Note that we can rewrite the sum \([n]P = [n_{t-1}]Q_{t-1} + \cdots + [n_0]Q_0\) as

We deduce the following algorithm. Starting from \(T = P_\infty \), \(R = P_\infty \), and \(j = 2^k - 1\), we repeat \(R = R + Q_i\) for each \(i\) such that \(n_i = j\), followed by \(T = T + R\) and \(j = j -1\) until \(j = 0\).

To update \(R\), we use mixed additions, but the statement \(T = T + R\) requires a full LD addition. Therefore, the complexity of this approach is essentially \(k(t-1)\) successive \(x\)-doublings, \(t\) point reconstructions, \(\bigl (1- \frac{1}{2^k}\bigr )t\) mixed additions on average, and \(2^k\) full additions. In order to minimize the number of full additions, we need to keep \(k\) low, which means increasing \(t\). This increases the number of mixed additions. Also it is quite expensive to retrieve a point in affine coordinates from its \(X\), \(Z\)-coordinates and the last bit of \(y/x\). As explained in Sect. 3.1, we need \(\text {H}+ 7\text {M}+ 2\text {S}\) per additional point \(Q_i\) to fully recover it in affine coordinates. This proves to be too much and all the parameters \(k\) that we tried failed to introduce any improvement over Montgomery’s method.

3.4 Generic Protocol Setup Compatible with \(x\)-doublings

The purpose of this article is to evaluate the relevance of \(x\)-doublings to perform a scalar multiplication, not to precisely describe how to use this operation in a specific protocol. However, it seems that the most realistic setup to use one of the schemes presented in Sects. 3.1 and 3.2 is for the owner of a point \(P\) to precompute and store the last bit \(b_k\) of \(y_{2^k}/x_{2^k}\), for all \(k \in [0, d+1]\). This of course is done only once at the very beginning and does not affect the security of the scheme. Since \(P\) is public, anybody can perform the computations and retrieve those bits. The other party can then access \(x_1\), the \(x\)-coordinate of \(P\), as well as a few bits \(b_k\). At most four bits are sufficient to deliver a significant speed-up with the \(\text {x-JSF}_2\) approach, see Sect. 5. The choice of those bits does not reveal anything on the scalar \(n\) except maybe its size, which we do not see as a problem.

4 Affine Precomputations with Sole Inversion in Char \(2\)

The other contribution of this paper is a generalization of the work of Dahmen et al. [9] to precompute all the affine points required by the \(\text {NAF}_w\) method with just one inversion. Indeed, starting from the affine point \(P\), Dahmen et al. show how to obtain \([3]P\), \([5]P\),..., \([2t-1]P\) also in affine coordinates with \(\text {I}+ (10t-11)\text {M}+ 4t\text {S}\). But their work only addresses the case of large odd characteristic.

With a generalized scheme, we can precompute all the points necessary for the \(w\)-\(w\)-\(w\) interleaving with NAFs method with just three inversions instead of \(3\times 2^{w-2}\), using affine arithmetic. We mimic their approach and follow three easy steps. First, we compute all the denominators involved. Then we apply Montgomery’s inversion trick [19] that combines \(j\) inversions in \({\mathbb {F}}_{2^d}\) at the expense of one inversion and \(3j-3\) field multiplications. Finally, we reconstruct all the points. The total complexity to compute \([2]P\), \([3]P\), \([5]P\), ..., \([2t-1]P\) for \(t>2\) is \(\text {I}+ (11t - 13)\text {M}+2t\text {S}\). See the Appendix for the actual algorithm. When we only need \([3]P\), for instance for the \(\text {NAF}_3\), we compute \([3]P\) directly following the approach explained in [7]. Note however that \(\text {I}+ 6\text {M}+ 4\text {S}\) are enough to determine \([3]P\), saving one multiplication.

5 Tests and Results

To validate the use of the \(x\)-doubling operation and the methods described in Sect. 3, we have implemented all of them in C++ using NTL 6.0.0 [22] built on top of GMP 5.1.2 [13]. The program is compiled and executed on a quad core i7-2620 at 2.70Ghz.

In the following, we test some of the techniques described in Sects. 2 and 3 to perform a scalar multiplication on a random curve defined over \({\mathbb {F}}_{2^d}\) for \(d = 233\), \(409\), and \(571\). Namely, we compare the following methods: Montgomery (Mont.), window NAF in LD coordinates with \(w\in [2,6]\) (\(\mathrm{NAF}_w\)), \(x\)-JSF, \(\text {x-JSF}_2\), and interleaving with \(\mathrm{NAFs}\) (\(w\)-\(w\)-\(w\)). Note that \(\underline{\mathrm{NAF}}_w\) and \(\underline{w-w-w}\) are slightly different variants where the precomputations are obtained with the sole inversion technique, explained in Sect. 4. We generate a total of \(100\) curves of the form

where \(a_6\) is a random element of \({\mathbb {F}}_{2^d}^*\). For each curve, a random point \(P\) is created as well as \(100\) random scalars selected in the interval \([0, 2^d + 2^{d/2} - 1]\). We assume that the point \(P\) needs to be decompressed for all the methods. The different methods are then tested against the same curves, points, and scalars. The computations are timed over \(10\) repetitions.

Together with the average timings of the best methods in each category, we present the average number of basic operations required to compute \([n]P\). Those basic operations, i.e. inversion, half-trace computation, multiplication, and squaring in \({\mathbb {F}}_{2^d}\) are respectively represented by \(\text {I}_{d}\), \(\text {H}_{d}\), \(\text {M}_{d}\), and \(\text {S}_{d}\). In any case we have, \(\text {I}_{d}/\text {M}_{d}\) between \(8\) and \(10\), and \(\text {S}_{d}/\text {M}_{d}\) between \(0.14\) and \(0.23\).

See Table 1 for the actual figures, which features timings and operation counts of the \(x\)-JSF, the \(\text {x-JSF}_2\), as well as the fastest interleaving with NAFs methods among \(w\)-\(w\)-\(w\), for \(w \in [2,5]\) and among \(\underline{w-w-w}\) again for \(w \in [2,5]\). Table 1 also includes the number of stored points required by each method (#\(\mathcal {P}\)), and the improvement, if any, over Montgomery’s method and the fastest window NAF method.

With our implementation, the \(\text {x-JSF}_2\) breaks even with Montgomery’s method around \(d= 233\) and enjoys a much bigger speed-up for larger degrees, reflecting Proposition 3. The interleaving with NAFs method 5-5-5 is the fastest of all for \(d = 571\), with a speed-up that is close to \(20\,\%\).

Remark 1

A careful reader would have noticed that for \(d=233\), 3-3-3 is faster than 4-4-4. This is surprising for two reasons. First, 4-4-4 is faster than 3-3-3 Second, it is more efficient, given the value of the ratio \(\text {I}_{233}/\text {M}_{233}\), to determine the precomputations using the single inversion approach. So 4-4-4 should be faster. This is confirmed by an analysis of the average numbers of multiplications and squarings required. Indeed, we need \(1112\text {M}+ 1120\text {S}\) for 4-4-4, against \(1143\text {M}+ 1165\text {S}\) for 3-3-3. We observe the same phenomenon for degrees \(d = 163\) and \(d = 283\), but not for \(d = 409\) or \(d = 571\). We explain this by the large number of variables needed to determine the precomputations when \(w > 3\). See the Appendix for details. For \(w = 3\), the formulas are simpler, requiring much less intermediate storage. This overhead of declaring and manipulating extra variables tends to have less impact for larger degrees because multiplications and squarings take relatively longer.

6 Conclusion and Future Work

We have shown how to make use of \(x\)-doublings to compute a scalar multiplication on a binary elliptic curve. Our main approach is to trade off regular doublings for cheaper \(x\)-doublings using classical multi-scalar multiplication techniques.

Unfortunately, it seems impossible to generalize the use of \(x\)-doublings in large characteristic, since solving quadratic equations is much slower than in characteristic \(2\).

A possible generalization of this work would be to investigate which endomorphisms different from doublings enjoy similar properties, i.e. have an \(x\)-coordinate that can be computed efficiently and independently from the \(y\)-coordinate. Certain endomorphisms \([k]P\) that can be split as the product of two isogenies on special families of curves are known to have this property [12]. It would be interesting to see what kind of improvements those endomorphisms could bring when it comes to computing a scalar multiplication.

References

Antipa, A., Brown, D., Gallant, R.P., Lambert, R., Struik, R., Vanstone, S.A.: Accelerated verification of ECDSA signatures. In: Preneel, B., Tavares, S. (eds.) SAC 2005. LNCS, vol. 3897, pp. 307–318. Springer, Heidelberg (2006)

Avanzi, R.M.: Another look at square roots (and other less common operations) in fields of even characteristic. In: Adams, C., Miri, A., Wiener, M. (eds.) SAC 2007. LNCS, vol. 4876, pp. 138–154. Springer, Heidelberg (2007)

Avanzi, R.M., Cohen, H., Doche, C., Frey, G., Lange, T., Nguyen, K., Vercauteren, F.: Handbook of elliptic and hyperelliptic curve cryptography. In: Avanzi, R.M., Cohen, H., Doche, C., Frey, G., Lange, T., Nguyen, K., Vercauteren, F. (eds.) Discrete Mathematics and its Applications. Chapman & Hall, Boca Raton (2005)

Bernstein, D.J., Lange, T.: Explicit-formulas database. http://www.hyperelliptic.org/EFD/

Blake, I.F., Seroussi, G., Smart, N.P.: Elliptic Curves in Cryptography. London Mathematical Society Lecture Note Series, vol. 265. Cambridge University Press, Cambridge (1999)

Blake, I.F., Seroussi, G., Smart, N.P.: Advances in Elliptic Curve Cryptography. London Mathematical Society Lecture Note Series, vol. 317. Cambridge University Press, Cambridge (2005)

Ciet, M., Joye, M., Lauter, K., Montgomery, P.L.: Trading inversions for multiplications in elliptic curve cryptography. Des. Codes Crypt. 39(2), 189–206 (2006)

Cohen, H., Miyaji, A., Ono, T.: Efficient elliptic curve exponentiation using mixed coordinates. In: Ohta, K., Pei, D. (eds.) ASIACRYPT 1998. LNCS, vol. 1514, pp. 51–65. Springer, Heidelberg (1998)

Dahmen, E., Okeya, K., Schepers, D.: Affine precomputation with sole inversion in elliptic curve cryptography. In: Pieprzyk, J., Ghodosi, H., Dawson, E. (eds.) ACISP 2007. LNCS, vol. 4586, pp. 245–258. Springer, Heidelberg (2007)

de Rooij, P.: Efficient exponentiation using precomputation and vector addition chains. In: De Santis, A. (ed.) EUROCRYPT 1994. LNCS, vol. 950, pp. 389–399. Springer, Heidelberg (1995)

Doche, C.: Exponentiation. In: [3], pp. 145–168 (2005)

Doche, Ch., Icart, T., Kohel, D.R.: Efficient scalar multiplication by isogeny decompositions. In: Yung, M., Dodis, Y., Kiayias, A., Malkin, T. (eds.) PKC 2006. LNCS, vol. 3958, pp. 191–206. Springer, Heidelberg (2006)

Free Software Foundation. GNU Multiple Precision Library. http://gmplib.org/

Hankerson, D., Menezes, A.J., Vanstone, S.A.: Guide to Elliptic Curve Cryptography. Springer, Heidelberg (2003)

Knudsen, E.W.: Elliptic scalar multiplication using point halving. In: Lam, K.-Y., Okamoto, E., Xing, Ch. (eds.) ASIACRYPT 1999. LNCS, vol. 1716, pp. 135–149. Springer, Heidelberg (1999)

López, J., Dahab, R.: Fast Multiplication on Elliptic Curves over \(GF\)(2\(_{\rm m}\)) without Precomputation. In: Koç, C., Paar, C. (eds.) CHES 1999. LNCS, vol. 1717, pp. 316–327. Springer, Heidelberg (1999)

López, J., Dahab, R.: Improved algorithms for elliptic curve arithmetic in \(GF\)(2\(_{\rm n}\)). In: Tavares, S., Meijer, H. (eds.) SAC 1998. LNCS, vol. 1556, pp. 201–212. Springer, Heidelberg (1999)

Cohen, H., Miyaji, A., Ono, T.: Efficient elliptic curve exponentiation using mixed coordinates. In: Ohta, K., Pei, D. (eds.) ASIACRYPT 1998. LNCS, vol. 1514, pp. 51–65. Springer, Heidelberg (1998)

Montgomery, P.L.: Speeding the Pollard and elliptic curves methods of factorisation. Math. Comp. 48, 243–264 (1987)

Morain, F., Olivos, J.: Speeding up the computations on an elliptic curve using addition-subtraction chains. Inf. Theor. Appl. 24, 531–543 (1990)

Reitwiesner, G.: Binary arithmetic. Adv. Comput. 1, 231–308 (1962)

Shoup, V.: NTL: A Library for doing Number Theory. http://www.shoup.net/ntl

Solinas, J.A.: Improved algorithms for arithmetic on anomalous binary curves. Technical Report CORR 99–46, CACR. http://cacr.uwaterloo.ca/techreports/1999/corr99-46.pdf (1999)

Solinas, J.A.: Low-weight binary representations for pairs of integers. Combinatorics and Optimization Research Report CORR 2001–41, University of Waterloo (2001)

Stam, M.: On montgomery-like representations for elliptic curves over GF\((2^{k})\). In: Desmedt, Y.G. (ed.) Public Key Cryptography — PKC 2003. LNCS, vol. 2567. Springer, Heidelberg (2003)

Straus, E.G.: Addition chains of vectors (problem 5125). Amer. Math. Mon. 70, 806–808 (1964)

Takagi, T., Yen, S.-M., Wu, B.-C.: Radix-r non-adjacent form. In: Zhang, K., Zheng, Y. (eds.) ISC 2004. LNCS, vol. 3225, pp. 99–110. Springer, Heidelberg (2004)

Washington, L.C.: Elliptic Curves: Number Theory and Cryptography. Discrete Mathematics and its Applications. Chapman & Hall, Boca Raton (2003)

Yao, A.C.C.: On the evaluation of powers. SIAM J. Comput. 5(1), 100–103 (1976)

Acknowledgments

We would like to thank Tanja Lange and Daniel J. Bernstein as well as the reviewers of this article for their numerous comments and suggestions, which greatly contributed to improve its contents.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendix: Affine Precomputations with Sole Inversion in Characteristic 2

Appendix: Affine Precomputations with Sole Inversion in Characteristic 2

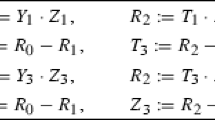

Let \(P = (x_1,y_1)\) be a point on the curve \(y^2 +xy = x^3 + a_2x^2 + a_6\). For \(t > 2\), the following procedure computes the points \([2]P\), \([3]P\), \([5]P, \dots , [2t-1]P\) with \(\text {I}\) + \((11t - 13)\text {M}\) + \(2t\text {S}\).

Rights and permissions

Copyright information

© 2014 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Doche, C., Sutantyo, D. (2014). Faster Repeated Doublings on Binary Elliptic Curves. In: Lange, T., Lauter, K., Lisoněk, P. (eds) Selected Areas in Cryptography -- SAC 2013. SAC 2013. Lecture Notes in Computer Science(), vol 8282. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-43414-7_23

Download citation

DOI: https://doi.org/10.1007/978-3-662-43414-7_23

Published:

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-43413-0

Online ISBN: 978-3-662-43414-7

eBook Packages: Computer ScienceComputer Science (R0)