Abstract

The purpose of this study is to analyze the potential effects of unobserved heterogeneity on the cost efficiency measurement of electricity distribution systems within the framework of incentive regulation schemes such as price- or revenue cap. In particular, we decompose the benchmarking process into two steps: In the first step, we attempt to identify classes of distribution system operators functioning in similar environments and with comparable network and structural characteristics. For this purpose, we apply a latent class model. In the second step, best practice is obtained within each class, based on deterministic and stochastic frontier models. The results show that the decomposition of the benchmarking process into two steps and the consideration of technology classes can reduce the unobserved heterogeneity within classes, hence, reducing the unexplained variation that could be mis-specified as inefficiency.

The views expressed in this paper are those of the authors and do not necessarily reflect the opinion of the institutions with which they are affiliated. The responsibility for all errors lies solely with the authors.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

1 Introduction

In the last two decades the electricity distribution sector in Europe has witnessed a wave of regulatory reforms aimed mainly at improving the economic efficiency. Thereby, information on several efficiency concepts in production theory, including scale and scope efficiency as well as cost efficiency has become very important. The concept of cost efficiency is a measure of the regulated electricity distribution company’s ability to minimize costs, given specific demand and market conditions. Cost inefficiency, also called ‘X-inefficiency’, occurs when the company fails to produce with full efficiency at the cost frontier, defined by best-practice companies.

Regulatory authorities increasingly use empirical cost norms, such as parametric or non-parametric benchmarking methods, in various incentive regulation schemes (Haney and Pollitt 2009). One of the most widely used regulatory regimes in electricity networks is price- or revenue-cap regulation (often denoted CPI-X regulation, cf. Littlechild (1983)). This method determines a maximum price or revenue index in real terms, less a productivity improvement parameter, referred to as the ‘X-factor’.Footnote 1 The X-factors include a general productivity improvement requirement (usually called the ‘general X-factor’) and potentially an individual efficiency improvement parameter (frequently denoted the ‘Xi-factor’ or the individual X-factor). Whereas the purpose of the general X-factor is to share the productivity gains in the sector between the consumers and the companies, the individual term is intended to eliminate incumbent efficiency differences between companies. The exact translation of an estimated static cost inefficiency to an annual real productivity target (Xi) depends on the allowed period to catch up inefficiency, the type of inefficiency detected (capital and/or operating costs) and the type of by-pass mechanism (Z) used for certain costs that may be proportional to the inefficiency (e.g. network losses). Notwithstanding, the mechanism allows the regulator to set differentiated price or revenue caps based on the individual company’s empirically estimated productive efficiency performance.Footnote 2 An alternative to the CPI-X regulation, addressing the arbitrariness of the adjustment parameters and the risk induced by the lag, is the yardstick regulation paradigm (cf. Shleifer 1985). In this model, the reimbursement of the regulated firm is linked to a dynamic norm, excluding the cost report of the specific company in its calculation. Although Shleifer presented the model for a stylized cost function, the use of frontier analysis tools enables the application of yardstick methods also to multi-output production and service provision. Several regulators in Europe, thereof Germany and Norway, use DEA for dynamic yardstick regimes in electricity distribution regulation.Footnote 3

However, the increasing use of efficiency analysis has raised serious concerns among regulators and companies regarding the reliability of efficiency estimates.Footnote 4 In fact, empirical evidence suggests that the estimates are sensitive to the adopted efficiency measurement approach.Footnote 5 This implies that the choice of the approach may have important effects on the financial situation of the companies as well as on the industrial structure of the regulated sector.

One important dimension affecting the reliability of efficiency estimates is the presence of unobserved factors. The regulated companies operate in different regions with various environmental and network characteristics that are only partially observed. This heterogeneity in the service area is an important factor to consider in a benchmarking analysis. Recall that the purpose of the benchmarking method is to create a cost norm for efficient, structurally comparable companies under similar operating conditions. Some methods of estimating efficiency take account of such unobserved factors, but in different ways. Generally, in deterministic models such as the non-parametric linear programming approach, the unobserved factors that influence the level of production costs are not considered in the analysis. The explicit assumption in these approaches is that all relevant cost differences are captured by observed variables. The few efficiency analysis models addressing part of the unobserved heterogeneity factors are parametric and based on panel data. The seminal paper for the development of models for unobserved heterogeneous factors is Greene (2005). The main idea is to introduce an individual effect in an econometric model capturing the unobserved heterogeneous factors that remain constant over time. The main problem hereby is that the individual effects can capture also part of the inefficiency that remains constant over time. In addition, the complexity of the models developed by Greene (2005) and the entailed assumptions remain important obstacles in applying panel data models in regulatory practice. Given that the unobserved factors are considered differently in various models, the resulting estimates can vary across methods. The magnitude of variation depends on the importance of the unobserved factors, which might change from one case to another.

To address this problem, we propose an alternative strategy for improve efficiency measurement methodology in the presence of unobserved heterogeneity. In our strategy, we decompose the benchmarking process into two steps: In the first step, we attempt to identify classes of companies that operate in similar environments and with comparable network and structural characteristics. For this purpose, we apply a latent class model. In the second step, the best practice is obtained within each class, based on deterministic and stochastic frontier models. Provided that the identified classes contain reasonably comparable cases and assuming a reasonable explanatory power for the variables included in the model specification, any deterministic or stochastic approach can be used to estimate efficiency.

The outline of this chapter is as follows: Sect. 1 reviews some of the most commonly used approaches to efficiency measurement. Section 2 addresses the cost model specifications and estimation methods. Section 3 introduces the data and Sect. 4 provides the estimation results for both steps and measures of cost efficiency for different frontier models in the second step. We draw our conclusions in Sect. 5.

2 Review on Approaches to Efficiency Measurement

This section briefly reviews some of the most commonly used frontier approaches to cost efficiency measurement, based on more extensive reviews in Kumbhakar and Lovell (2003), Murillo-Zamorano (2004), Coelli et al. (2005), Cornwell and Smith (2008), Greene and William (2008), Kumbhakar and Lovell (2003), and Farsi and Filippini (2009).Footnote 6 The focus here is mainly on cost efficiency and on cost functions, the argumentation is analogously valid for production functions and productive efficiency (under a set of regularity conditions, cf. Shepard (1953) and Nerlove (1963)). The frontier approach assumes that full cost efficiency is defined by those companies that are identified as the best-practice peers. All other companies are assumed to operate above the cost frontier, hence to have non-zero inefficiency.

Economic literature has developed two different frontier paradigms to empirically measure cost efficiency.Footnote 7 The first is based on a non-parametric deterministic and the second on an econometric approach, sometimes also referred to the parametric approach.

Non-parametric approaches, such as the Data Envelopment Analysis (DEA), proposed by Farrell (1957) and Charnes et al. (1978), use linear programming to construct a company’s efficiency frontier, which is considered as a deterministic function of the observed variables. These methods are non-parametric in the sense that they do not impose any specific functional form or distribution assumption, i.e. it is assumed that the data are free of noise. Thanks to their relative simplicity and availability, such methods, in particular DEA, are quite popular among both researchers and regulators in energy distribution networks. The DEA models can be input- or output-oriented and one of the a priori assumptions concerns the returns to scale. The models can be specified as constant returns to scale (CRS), variable returns to scale (VRS), non-increasing returns to scale (NIRS), non-decreasing returns to scale (NDRS), free disposal hull (FDH) and free replicability hull (FRH), where the latter two merely impose disposability and additivity, but not convexity of the production space. A basic DEA formulation calculating the minimal cost under VRS for company i in a sample of N companies with k inputs and m outputs would be

where w i and x i are k × 1 vectors representing input prices and quantities for company i; y i is an m × 1 vector representing the given output bundle; X and Y are input and output matrices namely, a k × N and an m × N matrix consisting of the input and output bundles for all companies in the sample; N is an N × 1 vector of ones; and λ is an N × 1 vector of non-negative constants to be estimated. The VRS property is satisfied through the convexity constraint (N’λ = 1) that ensures that only similar-sized companies are benchmarked against each other. The linear programming algorithm finds a piece-wise linear isoquant in the input-space, which corresponds to the minimum costs of producing the given output at any given point. Cost efficiency (CE) finally is measured by the minimum feasible input bundle for each company relative to its actual input bundle, i.e. \( CE_{i} = w_{i}^{\prime } x_{i}^{*} /w_{i}^{\prime } x_{i}^{0} \).

In contrast to non-parametric methods, most of the econometric approaches include estimating an empirical cost function, where the observed variables should include a vector of outputs (q) and a vector of input prices (p). The remaining unobserved part, the residual, is completely (in deterministic models) or partially (in stochastic models) assigned to inefficiency.

The first econometric frontier models that appeared in the literature were deterministic and estimated by OLS. Usually, their cost function is expressed in logarithms as

where C it is total cost incurred by the unit i at time t, f(.) is a parametric cost function, q it and p it are vectors of outputs and input prices, respectively, β is the vector of parameters and α the intercept to estimate, and ε it is the residual. As the error term in deterministic models only reflects the inefficiency, it is assumed to be non-negative. Therefore, Winsten (1957) suggested shifting the estimated intercept down by the minimal residual value. This model is called Corrected OLS (COLS). The cost efficiency of unit i in the COLS model is thus given by exp(−u it ) with u it = ε it – min(ε it ) ≥ 0. Afriat (1972) proposed a slightly different model, usually referred to as Modified OLS (MOLS), where the OLS intercept is shifted by the expected value of the inefficiency term that is, E(u it ). The cost efficiency of unit i at time t in the MOLS model is thus given by exp(−u it ) with u it = ε it + E(u it ). The efficiency term u it is not necessarily positive (some units are below the cost frontier). Truncation at zero assigns the respective units with full efficiency.

Deterministic models are similar to DEA and other linear programming models in that the best practice (the cost frontier) is a fixed function that does not vary across observations or units. As main drawback, these models attribute the residual entirely to inefficiency, i.e. they do not account for other sources of stochastic variation such as measurement errors.Footnote 8, Footnote 9 Nevertheless, deterministic models are still widely used in applied economic literature and in regulation (see e.g. Haney and Pollitt (2009)).

To overcome the drawbacks of deterministic models, Aigner et al. (1977) and Meeusen and van den Broeck (1977) proposed a stochastic frontier model (SFA), which divides the residual ε it into two parts: u it is reflecting inefficiency, and v it is capturing the random noise. The basic cost function of the stochastic frontier model can be written as

With certain distribution assumptions on u it and v it , this model can be estimated using the Maximum Likelihood (ML) estimation method. Typically, it is assumed that the inefficiency term u it has a one-sided non-negative distribution that is, a normal distribution truncated at zero: u it ~ |N(0,\( \sigma_{u}^{2} \))|,Footnote 10 and the random noise term v it is normally distributed: v it ~ N(0,\( \sigma_{v}^{2} \)). Additionally, u it and v it are considered as being independently distributed from each other. As in the models above, one would expect the most efficient unit to take u it = 0, and the efficiency value to be calculated as exp(−u it ). Unfortunately, E(u it ) cannot be calculated for an individual unit. Jondrow et al. (1982) proposed therefore a different estimator to measure efficiency. This estimator is based on the conditional expectation function of the residual, (E[u it |ε it ]), and is known as the JLMS estimator referring to the authors.Footnote 11 This is a highly non-linear function that only slightly increases the inefficiency for units close to the frontier leaving no unit with full efficiency. The other estimator proposed by these authors is based on the conditional mode (M[u it |ε it ]) that normally assigns full efficiency to several units. It has been used much less in the empirical literature than the JLMS estimator.

The models described so far can be applied either to cross-sectional or panel data. However, the panel structure in the data is ignored, as these models require pooling all observations and treating them as being independent from each other. Temporal variations can be captured using time trends or time-interactions. Moreover, these models are not suited to account for unmeasured, i.e. unobserved heterogeneity. This is due to the fact that with pooled data, each observation is considered as a single, discrete unit. With only one observation per unit, it is not possible to disentangle efficiency and time-invariant, unit-specific heterogeneity. Therefore, the presence of unobserved heterogeneity influences the estimation results of the regressors in case of correlation, or the residuals (referred to heterogeneity bias, Chamberlain (1982)). The structure of panel data offers the opportunity to apply models that account for the individual effect that should capture the unobserved heterogeneity and hence free from the heterogeneity bias. The time dimension in panel data sets allows us to observe the same unit repeatedly over a certain time span. This enables us to extract time-invariant factors such as unit-specific characteristics that do not necessarily accrue to the unit’s inefficiency, but do affect the costs across different networks. Especially structural inefficiencies (inefficiency that is constant over time) and inefficiencies following a certain time path can be better identified using panel data. Most of the developments of the panel data models go back to the stochastic frontier models of Aigner et al. (1977) and Meeusen and van den Broeck (1977) expressed in Eq. (3).

An early application to panel data of this stochastic frontier model was the Random Effects (RE) model by Pitt and Lee (1981) which was estimated by ML and assumed that the inefficiency uit is fixed through time, but still half-normally distributed: u i ~ |N(0,\( \sigma_{u}^{2} \))|. Important variations of this model were presented by Schmidt and Sickles (1984) who relaxed the distribution assumption, and by Battese and Coelli (1988) who assumed a truncated normal distribution. Schmidt and Sickles (1984) also proposed a Fixed Effects (FE) model to avoid the possible heterogeneity bias in case of correlation of uit with the explanatory variables. One of the drawbacks of models with time-invariant efficiency is that time-varying components of heterogeneity are entirely interpreted as random noise. Therefore, Cornwell et al. (1990), Kumbhakar (1990) and Battese and Coelli (1992) suggested the first stochastic models allowing the cost efficiency to vary over time. However, the first two models developed were vulnerable to multicollinearity and the third was characterized by a deterministic functional form of the inefficiency term over time.

The main restriction of all of the models presented above is that unobserved factors are assumed to be random over time. This implies that time-invariant factors such as physical network and environmental characteristics are not considered as heterogeneity. The family of ‘true’ panel data models (Kumbhakar 1991) and Polachek and Yoon (1996) as precursor models of Greene (2005) extend the original stochastic frontier model as it is formulated in Eq. (3) by adding a unit-specific time-invariant factor accounting for the individual effect.Footnote 12 Hence, apart from the random noise component, these models include two stochastic terms for unobserved heterogeneity, one for time-varying and one for time-invariant individual effects. This model can be written as

where α i is the time-invariant unit-specific factor and the model is estimated by Maximum Simulated Likelihood (MSL). In a RE framework, α i is an iid random component and must not be correlated with the observed variables. In a FE framework, α i is a constant parameter for every unit.Footnote 13 As in all ML models, the inefficiency component can be measured by the JLMS estimator of Jondrow et al. (1982). Assuming that physical network and environmental characteristics do not vary considerably over time and that the inefficiency is time-varying, these models help to separate unobserved time-invariant effects from efficiency estimates. However, if inefficiency is persistent over time, these models underestimate the inefficiency systematically, e.g. if managers take wrong decisions in every period or make the same mistakes again and again, the corresponding consequences in terms of inefficiency are detected as time-invariant unit-specific heterogeneity and not as inefficiency. As noted in Greene (2008), the ‘truth’ doubtless lies somewhere between the two strong assumptions.

The idea of observed parameter variability was early applied to a precise indication of heterogeneity of the production environment by Kalirajan and Obowona (1994) in the stochastic frontier context. A similar random parameter (RP) model was also formulated by Greene (2005), which is a generalization of the True Effects models in that not only the constant but also the parameters of the observed variables are unit-specific indicating the effect of different environments or technologies. This model is estimated by MSL. As noted by Greene (2008), the estimation of the MSL of this model can be numerically cumbersome.

Another approach to accommodate heterogeneity among units into the model is followed by latent class (LC) models. Originally introduced by Lazarsfeld and Henry (1968), LC identifies distinct class membership among subjects regarding their cost structure and estimates a separate cost function for each of these classes simultaneously.Footnote 14 LC models can be regarded as the discrete counterparts of RP models. With a sufficient large number of classes, LC approximates a fully parameterized RP model. The LC model can be written as:

The subscript i denotes the unit, and u it and v it are defined as above. α j is the constant and β j is a vector of discrete random parameters identified in j = 1, 2, …, J classes, assuming that each observation in the sample follows a specific technology. These technologies differ from each other in the values of model parameters {α i , β i , σ i }. This vector includes also a set of prior probabilities that determines the fraction of each latent class in the sample. It is defined as a discrete random vector with the following distribution:

The subscript j denotes the latent class with J being the number of classes. The choice of J is usually based on diagnostic criteria such as the Akaike Information Criterion (AIC) or the Bayesian Information Criterion (BIC).Footnote 15 These criteria indicate the optimal number of classes from an informational perspective, but cannot be used for statistical inference. After the estimation of the LC model, posterior probabilities \( \widehat{{P_{j} }} \) can be calculated for each observation from Bayes rule.

The choice of the econometric models presented so far is usually not straightforward. For instance, Farsi and Filippini (2009) have found several studies that report discrepancies in efficiency estimates between different models and approaches.Footnote 16 Such discrepancies are partly due to methodological sensitivity in the estimation of individual efficiency scores and partly due to different consideration of unobserved heterogeneity factors, which are particularly relevant in network industries such as electricity distribution. Panel data models can be used to control for the firm- or network specific unobserved heterogeneity. The use of panel data models is especially interesting as data for several years have become available to an increasing number of regulators in many countries. The complexity of such models remains however an important obstacle in applying panel data models in regulation. The effort in disentangling inefficiency variations from unobserved factors such as statistical noise due to error and omitted variables is a crucial element of all frontier models, in both cross sectional and panel data. The statistical modeling challenge has a parallel in practice: benchmarking can only be effective to the extent that for any specific company with given characteristics, there exists a set of comparable companies upon which a ‘best practice’ can be constructed.

Therefore, as previously discussed, we propose an alternative strategy in this paper to consider unobserved heterogeneity factors in that we decompose the benchmarking analysis into two steps: In the first step, we attempt to identify classes of companies that operate in similar environments and with comparable network and structural characteristics. For that step, we use a latent class model. In the second step, best practice is obtained within each class applying different benchmarking methods. Provided that the identified classes include reasonably comparable cases and assuming a reasonable explanatory power for the variables included in the model specification, any deterministic or stochastic approach can provide accurate values of efficiency. Therefore, we use the DEA, MOLS and SFA methods for the second step. In the next section, we will apply this approach using a sample of Norwegian electricity distribution companies.

3 Cost Model Specification and Estimation Methods

We specify a cost model that explains total costs of the Norwegian electricity distribution system operators (DSO) with two input and one output variable, one environmental factor and one network characteristics. We write this model as follows:

where the dependent variable TC represents the total costs of the DSO. P L and P C are the input prices of labor and capital, respectively. Q is the delivered electricity, D the network density and S, finally, the share of high voltage network. For a complete description of the data and variables, see Sect. 4.

For the identification of the comparable technology classes in the first step, we apply a Latent Class (LC) approach (cf. Lazarsfeld and Henry (1968), see Sect. 2)Footnote 17 to estimate the cost model in Eq. (7).Footnote 18 Using a Cobb-Douglas functional form and imposing the linear homogeneity restriction, the LC model in Eq. (5) can be adapted to:

where subscript i denotes the electricity distribution company i = 1, 2, …, I, subscript t the years 1998–2002, and ε it ~ N(0,σ i ) the error term. The subscript j denotes the latent class with J being the number of classes.

After the identification of comparable technology classes, we estimate the cost efficiency in the second step separately for each class. As the heterogeneity within classes is expected to be low due to comparable technologies, any deterministic or stochastic approach can be considered. For general overviews on approaches to efficiency measurement, see e.g. Murillo-Zamorano (2004) or Greene (2008), or, for an empirical application, Farsi and Filippini (2009). With respect to current regulatory practice (see Haney and Pollitt (2009) for an overview over 40 countries), we apply the three following, most prevalent methods: The Data Envelopment Analysis (DEA, proposed by Farrell (1957) and Charnes et al. (1978)), the Modified OLS (MOLS, proposed by Afriat (1972)) and the Stochastic Frontier Analysis (SFA, proposed by Aigner et al. (1977)).

DEA is a non-parametric method to calculate cost efficiency as a deterministic function of the observed variables, i.e. it is assumed that the data are free of stochastic variation due to measurement errors or noise. The cost model given in Eq. (7) can be readily used for the efficiency measurement with the DEA method. Assuming variable returns to scale (VRS), the Eq. (1) reduces to the following minimization problem:

where Y it represents the vector of the output bundle including output Q it and output characteristics D it and S it , as both characteristics take resources. However, in the DEA model, D is defined as the inverse of the network density, since a higher network density implies lower costs. N and λ are vectors of ones and non-negative constants, respectively. Cost efficiency (CE) is measured as the minimum feasible costs for each company relative to its actual costs, i.e. CE it = TC * /TC it .

MOLS and SFA are parametric methods that use regression techniques to construct the efficiency frontier. Both require the specification of a functional form of the cost function as well as assumptions about the error term(s). Similar to Eq. (8) in the first step, we estimate cost model in Eq. (7) using a Cobb-Douglas functional form and impose the linear homogeneity restriction. The MOLS and SFA models in Eqs. (2) and (3) can be adapted to:

The MOLS approach is based on the OLS estimation. The residuals ε it are corrected using a constant shift, which is the expected value of the inefficiency term, E(u it ). The cost efficiency in the MOLS is thus deterministic and given by CE it = exp(−u it ) with u it = ε it + E(u it ). u it is not necessarily positive, as some units may lie below the cost frontier. Truncation at zero assigns the respective units with full efficiency.

The SFA approach is based on the Maximum Likelihood estimation. The residuals ε it are composed of the inefficiency term u it and the random noise term v it . In this study, it is assumed that u it follows one-sided non-negative distribution, i.e. a normal distribution truncated at zero: u it ~ |N(0,\( \sigma_{u}^{2} \))|, and that v it is normally distributed: v it ~ N(0,\( \sigma_{v}^{2} \)). Additionally, u it and v it are considered as being independently distributed from each other. The cost efficiency in the SFA is thus stochastic and given by CE it = exp(−u it ).

In order to compare the results from this two-step approach with that of a conventional analysis, we estimate the three models (DEA, MOLS, SFA) also in one step, i.e. without consideration of classes, but for the whole sample. The resulting tables are given in the Appendix.

4 Data

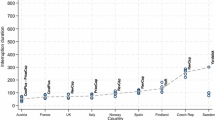

The data we use for this study consist of a balanced panel of 555 observations from 111 companies that have operated in the Norwegian power distribution sector from 1998 to 2002.Footnote 19 The available information includes total costs, labor costs, full time equivalents, total transformer capacity, distributed electricity, number of customers, line length for each low and high voltage, and year dummies. Table 1 provides a descriptive summary of the balanced panel data set for the variables included in the models.

From this data, we calculated the variables included in the models as follows: The dependent variable (TC) is the total network costs excluding the cost of purchased electricity. It is measured in millions Norwegian Kroner (NOK) and is in real terms; hence it is adjusted for inflation. TC includes all DSO’s network costs consisting of both operating and capital expenditures. The explanatory variables involve two input price variables, one output variable and one environmental and one network characteristic, hence the DSO’s are here considered to be single-product firms. The input price variables include a price for labor (P L ) and a price for capital (P C ). We derived P L by dividing labor costs by the number of full-time equivalents. P C is an approximation to the real capital price, calculated as a residual price by dividing non-labor costs by the installed transformer capacity. The output is given by the delivered electricity (Q), measured in gigawatt hours (GWh). The environmental variable is the network density (D), represented by total number of customers divided by total network length in kilometers. The network characteristic (S) is modeled by the share of high voltage network length and total network length.

5 Results

In the first step, we first determine the optimal number of classes J of the LC model. Using the model specification in Eq. (8), we applied LC models to the data in Table 1 with two to six classes.Footnote 20 The specification diagnostics obtained by this analysis show that J = 4 is the optimal number of classes for the BIC and J = 6 for the AIC. In cases with J > 4, we observed some implausible values for the regression coefficients, e.g. statistically insignificant values for the output. Considering the appealing statistical features of the BIC, we adopted this criterion and selected four classes.

The estimation results of this LC model estimated in the first step are summarized in Table 2. These results show four distinctive technology classes with significant coefficients in most of the cases. Differences in the coefficients indicate that there are variations in marginal costs and technological characteristics across these classes. We see throughout all classes that total cost increases with higher input prices and higher outputs and in three classes with an increasing share of high voltage networks. As expected, operation with density reduces costs. Differences in coefficients indicate that there are variations in marginal costs and technical characteristics across classes. Prior class probabilities indicate also different class sizes.

Table 3 provides a descriptive summary of the observed variables for each class as identified by the estimated posterior class probabilities. These probabilities show that the operators can be distinguished with high probabilities. The fact that even in the worst cases, minimum probabilities are greater than 0.5 suggests that operators can be classified without ambiguity. The resulting classes have at least 100 observations, which is large enough for reasonable degrees of freedom for the second step estimations. The values of the observed variables in each class indicate that we can distinguish in an approximate manner certain features that characterize each class. Class 1 faces low input prices and a high customer density, whereas Class 2 has high input prices and medium customer density. Classes 3 and 4 face intermediate values for most of the variables except for a relatively low customer density in Class 3.

The estimation results for the MOLS and the SFA estimated in the second step for each class separately are summarized in Table 4. Other than the first step, the estimations are based on cost model specification in Eq. (11) and on subsamples of the data in Table 1, given by the four classes of the first step. In general, the coefficients are of the same magnitude as in the LC model in the first step. The coefficients of the MOLS and the SFA differ slightly because of different assumptions on the error term. The signal-to-noise ratio λ is significant for three classes indicating skewness and existence of inefficiency. The insignificant value of λ in class two means that standard errors of the inefficiency terms are low compared to that of the noise terms, which will results in low inefficiency values for this class.

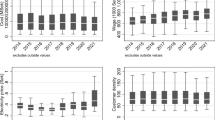

The results of the efficiency analysis for the four classes and three models each are summarized in Table 5. The average efficiency value ranges from 0.56 for DEA in Class 1 to 0.98 for SFA in Class 2. In general, the average efficiency values are lowest in Class 1 for all three models and highest in Class 2 for MOLS and SFA. The highest average efficiency value for DEA is in Class 3 with 0.81. The standard deviations are highest in Class 1 for all three models and lowest in Class 2 for SFA, indicated already by the insignificant lambda in Table 4. Throughout all three models, SFA produces higher efficiency values than DEA and MOLS. This is expected since the model considers statistical noise. Another typical feature is that whereas DEA and MOLS assign full efficiency to several observations, SFA does not classify any operator as fully efficient. The minimum values are low in Class 1 for all three models. In particular, DEA attributes considerably lower minimum efficiency estimates for all classes than the other models.

In general, the efficiency values are higher and more realistic than the corresponding scores of a conventional analysis performed in one step (given in Table 7 in the Appendix). The decomposition of the benchmarking process into two steps and the consideration of technology classes has reduced unobserved heterogeneity within classes and, hence, reduced the unexplained variance previously claimed as inefficiency. Therefore, conventional cross-sectional or pooled models might underestimate cost efficiency.

6 Summary and Conclusions

Regulatory authorities increasingly use benchmarking practices to identify a company’s individual efficiency in various incentive regulation schemes such as price- or revenue cap. The identification of cost efficiency in electricity distribution is a challenging task, as the companies operate in different regions with various environmental and network characteristics that are only partially observed. Therefore, the purpose of this study was to analyze cost efficiency in electricity distribution under consideration of these unobserved heterogeneity factors.

In order to disentangle cost efficiency variations from unobserved factors, we proposed an alternative strategy that decomposes the benchmarking process into two steps: The first step is to identify classes of comparable companies in order to reduce unobserved heterogeneity within classes and the second to obtain the best practice for each class.

The analysis in the first step has revealed four distinct latent classes. These classes can be characterized in an approximate manner by different observed variables, mainly by input prices and customer density. The analysis in the second step applying DEA, MOLS and SFA frontier methods has shown that average efficiency values vary considerably among methods and classes. In general, DEA has produced lowest and SFA highest values. Companies in Class 1 are on average considerably less efficient than companies in the other classes, and the variation in efficiency scores in Class 1 is highest. This class involves clearly the largest and most heterogeneous companies concerning output.

Most importantly, the efficiency values are generally higher and more plausible than the corresponding scores of a conventional single-step analysis. The decomposition of the benchmarking process into two steps and the consideration of technology classes has reduced unobserved heterogeneity within classes and, hence, reduced the unexplained variance previously claimed as inefficiency. Therefore, conventional cross-sectional or pooled models might underestimate the real cost efficiency values. This in turn could lead to too incommensurate regulatory measures in account of the affected companies, especially if price or revenue cap regulation as incentive regulation scheme is in force.

Notes

- 1.

In addition to inflation, the changes beyond companies’ control may include changes in input factor prices and exogenous changes in demand and network characteristics, generally referred to as ‘Z-factors’.

- 2.

The level of productive efficiency or cost efficiency of a firm is composed by the levels of technical and allocative efficiency. For a discussion of these concepts see Kumbhakar and Lovell (2003).

- 3.

The theory for dynamic applications of DEA in yardstick and a comparison with a conventional CPI-X approach are found in Agrell et al. (2005a).

- 4.

Shuttleworth (2005) provides a critical overview of the problems coming along with the use of benchmarking in the regulation of electricity networks.

- 5.

- 6.

The latter review includes also sections on the traditional production theory and on scale and scope economies.

- 7.

A third paradigm, the Bayesian approach is only little-known in applied science. Readers interested in Bayesian stochastic frontier models (sometimes also assigned to non-parametric models) are referred to van den Broeck et al. (1994).

- 8.

Semi-parametric frontier models such as quantile regression (Koenker and Bassett 1978) sometimes count as deterministic models. Unlike least squares methods, quantile regression techniques do not approximate the conditional mean of the response variable, but either its median or quantiles and offer therefore a systematic strategy for examining the entire distribution of the population. Readers interested in applied quantile regression models for efficiency measurement are referred to Behr (2010) and to Knox et al. (2007).

- 9.

In real regulatory application, regulators use specific outlier detection and elimination methods to reduce the impact of, and incentives for, errors in the reference set, see Agrell and Niknazar (2014).

- 10.

Other extensions of the SFA model have considered exponential, gamma, or truncated normal distributions for the inefficiency term.

- 11.

Jondrow et al. in (1982).

- 12.

The term ‘true’ refers to the FE and RE models fully described in Greene (2005).

- 13.

An alternative version of the True FE model uses dummy variables for every unit. However, this specification may be affected by the ‘incidental parameter problem’, especially in short panel data sets.

- 14.

- 15.

However, compared to the BIC, the AIC corrects the likelihood function only by the sample size and not by the number of parameters to estimate. This is a clear disadvantage with increasing number of classes.

- 16.

See e.g. Jensen (2000), Jamasb and Pollitt (2003), Street (2003), Estache et al. (2004), Farsi and Filippini (2004). The results show substantial variations in estimated efficiency scores and, for some of them, in efficiency rankings across different approaches (econometric and non-parametric) and among model specifications.

- 17.

- 18.

All estimations have been conducted by Nlogit software version 4.0.

- 19.

In order to get a balanced panel data set, we extracted this data from the data that has been used in several scientific studies (Agrell et al. (2005a, b)) as well as in a research project financed by the Norwegian Water Resources and Energy Directorate partly reported in Agrell and Bogetoft (2009) and a research project financed by Swiss Federal Institute of Energy reported in Filippini et al. (2011).

- 20.

Using several specifications, we also tried models with seven or more classes. Due to non-convergence we could not estimate any models with more than six classes.

References

Afriat SN (1972) Efficiency estimation of production functions. Int Econ Rev 13(3):568–598

Agrell PJ, Bogetoft P (2009) Harmonizing the nordic regulation of electricity distribution. In: Bjørndal E, Bjørndal M, Pardalos PM, Rönnqvist M (eds) Energy natural resources and environmental economics. Springer, 293–316

Agrell PJ, Bogetoft P, Tind J (2005a) DEA and dynamic yardstick competition in scandinavian electricity distribution. J Prod Anal 23(2):173–201

Agrell PJ, Bogetoft P, Tind J (2005b) Efficiency evaluation with convex pairs. Adv Model Optim 7:211–237

Agrell PJ, Niknazar P (2014) Structural and behaviorial robustness in applied best-practice regulation. Soc-Econ Plan Sci 48(1):89–103

Aigner D, Knox Lovell CA, Schmidt P (1977) Formulation and estimation of stochastic frontier production function models. J Econometr 6(1):21–37

Battese GE, Coelli TJ (1992) Frontier production functions, technical efficiency, and panel data: With application to paddy farmers in India. J Prod Anal 3:153–169

Battese GE, Coelli TJ (1988) Prediction of firm-level technical efficiencies with a generalized frontier production function and panel data. J Econometr 38:387–399

Behr A (2010) Quantile regression for robust bank efficiency score estimation. Eur J Oper Res 200:568–581

Chamberlain G (1982) Multivariate regression models for panel data. J Econom 18:5–46

Charnes A, Cooper WW, Rhodes EL (1978) Measuring the efficiency of decision making units. Eur J Oper Res 2:429–444

Coelli TJ, Prasada Rao DS, O’Donnell C, Battese GE (2005) An introduction to efficiency and productivity anlaysis. Springer, New York

Cornwell CM, Smith PC (2008) Stochastic frontier analysis and efficiency estimation. In: Sevestre P, Mátyás L (eds) The econometrics of panel data. Springer, New York, pp 697–726

Cornwell C, Schmidt P, Sickles RC (1990) Production frontiers with cross-sectional and time-series variation in efficiency levels. J Econom 46:185–200

Cullmann A (2010) Benchmarking and firm heterogeneity: a latent class analysis for German electricity distribution companies. Empirical Economics 1–23

Estache A, Rossi M, Ruzzier C (2004) The case for international coordination of electricity regulation: evidence from the measurement of efficiency in South America. J Regul Econ 25(3):271–295

Farrell MJ (1957) The measurement of productive efficiency. J Roy Stat Soc Ser A(Part 3):253–290

Farsi M, Filippini M (2004) Regulation and measuring cost-efficiency with panel data models: Application to electricity distribution utilities. Rev Ind Organ 25(1):1–19

Farsi M, Filippini M (2009) Efficiency measurement in the electricity and gas distribution sectors. In: Hunt LC, Evans J (eds) International handbook on the economics of energy. Edward Elgar, pp 598–623

Farsi M, Filippini M, Greene WH (2006) Application of panel data models in benchmarking analysis of the electricity distribution sector. Ann Pub Coop Econ 77(3):271–290

Filippini M, Agrell PJ, Farsi M, Koller M (2011) Messung der produktiven Effizienz in EVU—Eine Anwendung zur Anreizregulierung in der Schweiz. Studie im Auftrag des Bundesamtes für Energie (BFE). Centre for Energy Policy and Economics (CEPE), ETH Zurich

Greene WH (2005) Reconsidering heterogeneity in panel data estimators of the stochastic frontier model. J Econom 126(2):269–303

Greene WH (2008) The econometric approach to efficiency analysis. In: Fried HO, Knox, Lovell CA, Schmidt SS (eds) The measurement of productive efficiency and productivity growth. Oxford University Press, Oxford, pp 92–250

Haney AB, Pollitt MG (2009) Efficiency analysis of energy networks: an international survey of regulators. Energy Policy 37:5814–5830

Jamasb TJ, Pollitt MG (2003) International benchmarking and regulation: an application to European electricity distribution utilities. Energy Policy 31(15):1609–1622

Jensen U (2000) Is it efficient to analyse efficiency rankings? Empirical Economics 25(2):189–208

Jondrow J, Knox Lovell CA, Materov IS, Schmidt P (1982) On the estimation of technical inefficiency in the stochastic frontier production function model. J Econom 19(2–3):233–238

Kalirajan KP, Obowona MB (1994) Frontier production function: the stochastic coefficients approach. Oxford Bull Econ Stat 56(1):87–96

Knox KJ, Blankmeyer EC, Stutzman JR (2007) Technical efficiency in Texas nursing facilities: a stochastic production frontier approach. J Econ Finan 31(1):75–86

Koenker R, Bassett G Jr (1978) Regression quantiles. Econometrica 46(1):33–50

Kumbhakar SC (1990) Production frontiers, panel data, and time-varying technical efficiency. J Econom 46:201–211

Kumbhakar SC (1991) Estimation of technical inefficiency in panel data models with firm- and time-specific effects. Econ Lett 36:43–48

Kumbhakar SC, Knox Lovell CA (2003) Stochastic frontier analysis. Cambridge University Press, Cambridge

Lazarsfeld PF, Henry NW (1968) Latent structure analysis. Houghton Mifflin, Boston

Littlechild SC (1983) The regulation of British telecommunications’ profitability. Department of Trade and Industry, London

Meeusen W, van den Broeck J (1977) Efficiency estimation from Cobb-Douglas production functions with composed error. Int Econ Rev 18(2):435–444

Murillo-Zamorano Luis R (2004) Economic efficiency and frontier techniques. J Econ Surv 18(1):46

Nerlove M (1963) Returns to scale in electricity supply. In: Christ C (ed) Measurement in economics: studies in mathematical economics and econometrics in memory of Yehuda Grunfeld, vol 21. Stanford University Press, Stanford

Orea L, Khumbhakar SC (2004) Efficiency measurement using stochastic frontier latent class model. Empirical Economics 29(1):169–183

Pitt MM, Lee L-F (1981) The measurement and sources of technical inefficiency in the Indonesian weaving industry. J Dev Econ 9(1):43–64

Polachek SW, Yoon BJ (1996) Panel estimates of a two-tiered earnings frontier. J Appl Econ 11:169–178

Schmidt P, Sickles RC (1984) Production frontiers and panel data. J Bus Econ Stat 2(4):367–374

Shepard RW (1953) Cost and production functions. Princeton University Press, Princeton, New Jersey

Shleifer A (1985) A theory of yardstick competition. Rand J Econ 16(3):319–327

Shuttleworth G (2005) Benchmarking of electricity networks: practical problems with its use for regulation. Util Policy 13(4):310–317

Street A (2003) How much confidence should we place in efficiency estimates? Health Econ 12(11):895–907

van den Broeck J, Koop G, Osiewalski J, Steel MFJ (1994) Stochastic frontier models : a Bayesian perspective. J Econom 61(2):273–303

Winsten CB (1957) Discussion on Mr. Farrells paper. J Roy Stat Soc Ser A (General) 120(3):282–284

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2014 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Agrell, P.J., Farsi, M., Filippini, M., Koller, M. (2014). Unobserved Heterogeneous Effects in the Cost Efficiency Analysis of Electricity Distribution Systems. In: Ramos, S., Veiga, H. (eds) The Interrelationship Between Financial and Energy Markets. Lecture Notes in Energy, vol 54. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-55382-0_12

Download citation

DOI: https://doi.org/10.1007/978-3-642-55382-0_12

Published:

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-55381-3

Online ISBN: 978-3-642-55382-0

eBook Packages: EnergyEnergy (R0)