Abstract

We consider neural field models in both one and two spatial dimensions and show how for some coupling functions they can be transformed into equivalent partial differential equations (PDEs). In one dimension we find snaking families of spatially-localised solutions, very similar to those found in reversible fourth-order ordinary differential equations. In two dimensions we analyse spatially-localised bump and ring solutions and show how they can be unstable with respect to perturbations which break rotational symmetry, thus leading to the formation of complex patterns. Finally, we consider spiral waves in a system with purely positive coupling and a second slow variable. These waves are solutions of a PDE in two spatial dimensions, and by numerically following these solutions as parameters are varied, we can determine regions of parameter space in which stable spiral waves exist.

1 Introduction

Neural field models are generally considered to date back to the 1970s [1, 41], although several earlier papers consider similar equations [4, 25]. These types of equations were originally formulated as models for the dynamics of macroscopic activity patterns in the cortex, on a much larger spatial scale than that of a single neuron. They have been used to model phenomena such as short-term memory [36], the head direction system [43], visual hallucinations [19, 20], and EEG rhythms [7].

Perhaps the simplest formulation of such a model in one spatial dimension is

\[\frac{\partial u(x,t)}{\partial t} = -u(x,t) +\int _{-\infty }^{\infty }w(x - y)f(u(y,t))\,\mathit{dy} \tag{5.1}\]

where

- w is symmetric, i.e. w(−x) = w(x),
- \(\lim _{x\rightarrow \infty }w(x) = 0\),
- \(\int _{-\infty }^{\infty }w(x)\mathit{dx} < \infty \),
- w(x) is continuous,

and f is a non-decreasing function with \(\lim _{u\rightarrow -\infty }f(u) = 0\) and \(\lim _{u\rightarrow \infty }f(u) = 1\) [12, 36]. The physical interpretation of this type of model is that u(x, t) is the average voltage of a large group of neurons at position \(x \in \mathbb{R}\) and time t, and f(u(x, t)) is their firing rate, normalised to have a maximum of 1. The function w(x) describes how neurons a distance x apart affect one another. Typical forms of this function are purely positive [6], “Mexican hat” [19, 26] (positive for small x and negative for large x) and decaying oscillatory [18, 36]. To find the influence of neurons at position y on those at position x we evaluate f(u(y, t)) and weight it by w(x − y). The influence of all neurons is thus the integral over y of w(x − y)f(u(y, t)). In the absence of inputs from other parts of the network, u decays exponentially to a steady state, which we define to be zero. Equation (5.1) is a nonlocal differential equation, with the nonlocal term arising from the biological reality that we are modelling. Typically, researchers are interested in either “bump” solutions of (5.1), for which f(u(x)) > 0 only on a finite number of finite, disjoint intervals, or front solutions which connect a region of high activity to one of zero activity [12] (see Chap. 7). Note that this type of model is invariant with respect to spatial translations, which is reflected in the fact that w appears as a function of relative position only (i.e. x − y), not the actual values of x and y.

The function f is normally thought of as a sigmoid (although other functions are sometimes considered [26]), and in the limit of infinite steepness it becomes the Heaviside step function [12, 36]. In this case stationary solutions are easily constructed since to evaluate the integral in (5.1) we just integrate w(x − y) over the interval(s) of y where f(u(y, t)) = 1. The stability of these solutions can be determined by linearising (5.1) about them and using the fact that the derivative of the Heaviside function is the Dirac delta function [6, 40].
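The Heaviside construction can be sketched numerically. The example below is a minimal illustration under stated assumptions, not the chapter's own code: it assumes f(u) = H(u − h) with a threshold h, the decaying oscillatory coupling \(w(x) = e^{-b\vert x\vert }(b\sin \vert x\vert +\cos x)\), and a single bump active on (−a∕2, a∕2). Then u(x) = W(x + a∕2) − W(x − a∕2), where W is the antiderivative of w with W(0) = 0, and the threshold condition u(±a∕2) = h reduces to W(a) = h. The function names and the values b = 0.5, h = 1 are hypothetical.

```python
import numpy as np

def w(x, b=0.5):
    """Assumed decaying oscillatory coupling function."""
    ax = np.abs(x)
    return np.exp(-b * ax) * (b * np.sin(ax) + np.cos(ax))

def W(x, b=0.5):
    """Integral of w from 0 to x, valid for x >= 0 (closed form)."""
    return (2 * b + np.exp(-b * x) * ((1 - b**2) * np.sin(x) - 2 * b * np.cos(x))) / (b**2 + 1)

def bump_widths(h, b=0.5, a_max=6.0, n=6001):
    """Candidate widths a in (0, a_max) solving W(a) = h (grid scan + bisection)."""
    a = np.linspace(1e-6, a_max, n)
    g = W(a, b) - h
    roots = []
    for i in np.nonzero(g[:-1] * g[1:] < 0)[0]:
        lo, hi = a[i], a[i + 1]
        for _ in range(60):                       # plain bisection
            mid = 0.5 * (lo + hi)
            if (W(lo, b) - h) * (W(mid, b) - h) <= 0:
                hi = mid
            else:
                lo = mid
        roots.append(0.5 * (lo + hi))
    return roots

def bump_profile(x, a, b=0.5):
    """u(x) = integral of w(x - y) over |y| < a/2, using the odd extension of W."""
    W_odd = lambda s: np.sign(s) * W(np.abs(s), b)
    return W_odd(x + a / 2) - W_odd(x - a / 2)

widths = bump_widths(h=1.0)
for a in widths:
    print(f"width a = {a:.4f}, u(a/2) = {bump_profile(np.array([a / 2]), a)[0]:.6f}")
```

Each root of W(a) = h is only a candidate: one still has to check that u(x) > h holds exactly on the assumed interval, and stability must be examined separately as described above.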

When f is not a Heaviside, constructing stationary solutions becomes more difficult and we generally have to do so numerically. A stationary solution of (5.1) satisfies

\[u(x) =\int _{-\infty }^{\infty }w(x - y)f(u(y))\,\mathit{dy}. \tag{5.2}\]

In all but Sect. 5.4 of this chapter we consider only spatially-localised solutions, i.e. ones for which u and all of its relevant spatial derivatives decay to zero as |x| → ∞. Generally speaking, integral equations such as (5.2) are not studied in as much detail as differential equations. As a result, more methods for analysis, and more software packages, exist for the numerical solution of differential equations than for integral equations. For these reasons we consider rewriting (5.2) as a differential equation for the function u(x). The key to doing so is to recognise that the integral in (5.2) is a convolution. This observation provides several equivalent ways of converting (5.2) into a differential equation.

The first method involves recalling that the Fourier transform of the convolution of two functions is the product of their Fourier transforms. Thus, denoting by F[u](k) the Fourier transform of u(x), where k is the transform variable, Fourier transforming (5.2) gives

\[F[u](k) = F[w](k) \times F[f(u)](k) \tag{5.3}\]

where “×” indicates normal multiplication. Suppose that the Fourier transform of w is a rational function of \(k^{2}\), i.e.

\[F[w](k) = \frac{P(k^{2})}{Q(k^{2})} \tag{5.4}\]

where P and Q are polynomials. Multiplying both sides of (5.3) by \(Q(k^{2})\) we obtain

\[Q(k^{2})F[u](k) = P(k^{2})F[f(u)](k). \tag{5.5}\]

Recalling that if the Fourier transform of u(x) is F[u](k), then the Fourier transform of \(u^{\prime\prime}(x)\) is \(-k^{2}F[u](k)\), the Fourier transform of \(u^{\prime\prime\prime\prime}(x)\) is \(k^{4}F[u](k)\) and so on, where the primes indicate spatial derivatives, taking the inverse Fourier transform of (5.5) gives

\[D_{1}u(x) = D_{2}f(u(x)) \tag{5.6}\]

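where \(D_{1}\) and \(D_{2}\) are linear differential operators involving only even derivatives, associated with Q and P respectively [32]. As an example, consider the decaying oscillatory coupling function

\[w(x) = e^{-b\vert x\vert }(b\sin \vert x\vert +\cos x) \tag{5.7}\]

where b is a parameter (plotted in Fig. 5.1 (left) for b = 0.5), which has the Fourier transform

\[F[w](k) = \frac{4b(b^{2} + 1)}{k^{4} + 2(b^{2} - 1)k^{2} + (b^{2} + 1)^{2}}. \tag{5.8}\]

For this example \(D_{2}\) is just the constant \(4b(b^{2} + 1)\) and

\[D_{1} = \frac{d^{4}}{\mathit{dx}^{4}} - 2(b^{2} - 1)\frac{d^{2}}{\mathit{dx}^{2}} + (b^{2} + 1)^{2} \tag{5.9}\]

and thus (for this choice of w) Eq. (5.2) can be written

\[\frac{d^{4}u}{\mathit{dx}^{4}} - 2(b^{2} - 1)\frac{d^{2}u}{\mathit{dx}^{2}} + (b^{2} + 1)^{2}u = 4b(b^{2} + 1)f(u(x)). \tag{5.10}\]

Our decision to consider only spatially-localised solutions validates the use of Fourier transforms and gives the boundary conditions for (5.10), namely

\[\lim _{x\rightarrow \pm \infty }(u,u^{\prime},u^{\prime\prime},u^{\prime\prime\prime}) = (0,0,0,0). \tag{5.11}\]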
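The transform pair used in this example can be checked numerically. The sketch below assumes the coupling function \(w(x) = e^{-b\vert x\vert }(b\sin \vert x\vert +\cos x)\), the convention \(F[w](k) =\int w(x)e^{-\mathit{ikx}}\mathit{dx}\), and the rational form \(4b(b^{2} + 1)/(k^{4} + 2(b^{2} - 1)k^{2} + (b^{2} + 1)^{2})\), which is consistent with \(D_{2}\) being the constant \(4b(b^{2} + 1)\). The function names, truncation length and grid size are illustrative choices.

```python
import numpy as np

def w(x, b=0.5):
    """Assumed decaying oscillatory coupling function."""
    ax = np.abs(x)
    return np.exp(-b * ax) * (b * np.sin(ax) + np.cos(ax))

def w_hat_exact(k, b=0.5):
    """Rational Fourier transform: constant numerator, quartic denominator in k."""
    return 4 * b * (b**2 + 1) / (k**4 + 2 * (b**2 - 1) * k**2 + (b**2 + 1) ** 2)

def w_hat_numeric(k, b=0.5, L=80.0, n=160001):
    """Trapezoidal approximation of the transform on [-L, L].

    w is even, so only the cosine part of exp(-ikx) contributes.
    """
    x = np.linspace(-L, L, n)
    dx = x[1] - x[0]
    integrand = w(x, b) * np.cos(k * x)
    return dx * (np.sum(integrand) - 0.5 * (integrand[0] + integrand[-1]))

for k in (0.0, 0.5, 1.0, 2.0):
    print(f"k = {k:3.1f}: numeric = {w_hat_numeric(k):.6f}, exact = {w_hat_exact(k):.6f}")
```

Agreement of the two columns supports reading off \(D_{1}\) from the denominator and \(D_{2}\) from the numerator.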

The other method for converting (5.2) into a differential equation is to recall that the solution of an inhomogeneous linear differential equation can be formally written as the convolution of the Green’s function of the linear differential operator, together with the appropriate boundary conditions, and the function on the right hand side (RHS) of the differential equation. Thus if w was such a Green’s function, we could recognise (5.2) as being the solution of a linear differential equation with f(u) as its RHS.

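Using the example above one can show that the Green’s function G of the operator (5.9) with boundary conditions (5.11), i.e. the solution of

\[\frac{d^{4}G}{\mathit{dx}^{4}} - 2(b^{2} - 1)\frac{d^{2}G}{\mathit{dx}^{2}} + (b^{2} + 1)^{2}G =\delta (x) \tag{5.12}\]

satisfying (5.11), where δ is the Dirac delta function, is

\[G(x) = \frac{e^{-b\vert x\vert }(b\sin \vert x\vert +\cos x)}{4b(b^{2} + 1)} = \frac{w(x)}{4b(b^{2} + 1)} \tag{5.13}\]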

and thus the solution of (5.10)–(5.11) is (5.2). This second method, of recognising that the coupling function w is the Green’s function of a linear differential operator, is perhaps less easy to generalise, so we concentrate mostly on the first method in this chapter. An important point to note is that the Fourier transform method applies equally well to (5.1), i.e. the full time-dependent problem. Using the function (5.7) and keeping the time derivative one can convert (5.1) to

\[\left [ \frac{\partial ^{4}}{\partial x^{4}} - 2(b^{2} - 1) \frac{\partial ^{2}}{\partial x^{2}} + (b^{2} + 1)^{2}\right ]\left (\frac{\partial u}{\partial t} + u\right ) = 4b(b^{2} + 1)f(u(x,t)). \tag{5.14}\]

Clearly stationary solutions of (5.14) satisfy (5.10), but keeping the time dependence in (5.14) enables us to determine the stability of these stationary solutions via linearisation about them.

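Note that the Fourier transform of \((1/2)e^{-\vert x\vert }\) is \(1/(1 + k^{2})\), and thus for this coupling function (5.2) is equivalent to

\[u -\frac{d^{2}u}{\mathit{dx}^{2}} = f(u). \tag{5.15}\]

Also, the Fourier transform of the “wizard hat” \(w(x) = (1/4)(1 -\vert x\vert )e^{-\vert x\vert }\) is \(k^{2}/(1 + k^{2})^{2}\), giving the differential equation [12]

\[\frac{d^{4}u}{\mathit{dx}^{4}} - 2\frac{d^{2}u}{\mathit{dx}^{2}} + u = -\frac{d^{2}}{\mathit{dx}^{2}}f(u) \tag{5.16}\]

and thus a variety of commonly used connectivity functions are amenable to this type of transformation. (See also [26] for another example.)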
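The practical content of this equivalence can be illustrated for the exponential coupling function. The sketch below is an illustration under stated assumptions rather than a method taken from the chapter: it replaces f(u(x)) by a fixed localised source \(g(x) = e^{-x^{2}}\) (hypothetical), computes u once as the convolution of \((1/2)e^{-\vert x\vert }\) with g, and once by solving u − u′′ = g with second-order finite differences and u = 0 at the ends of a truncated domain.

```python
import numpy as np

L, n = 20.0, 1601
x = np.linspace(-L, L, n)
dx = x[1] - x[0]
g = np.exp(-x**2)                      # stand-in for f(u(x)); any localised source works

# Integral form: u = w * g with w(x) = (1/2) exp(-|x|), trapezoidal quadrature.
kernel = 0.5 * np.exp(-np.abs(x[:, None] - x[None, :]))
weights = np.full(n, dx)
weights[[0, -1]] = dx / 2
u_integral = kernel @ (weights * g)

# Differential form: u - u'' = g, central differences, u = 0 at x = -L and x = L.
A = np.zeros((n, n))
idx = np.arange(1, n - 1)
A[idx, idx] = 1.0 + 2.0 / dx**2
A[idx, idx - 1] = -1.0 / dx**2
A[idx, idx + 1] = -1.0 / dx**2
A[0, 0] = A[-1, -1] = 1.0              # Dirichlet rows approximating decay at infinity
rhs = g.copy()
rhs[[0, -1]] = 0.0
u_ode = np.linalg.solve(A, rhs)

max_diff = np.max(np.abs(u_integral - u_ode))
print(f"max |u_integral - u_ode| = {max_diff:.2e}")
```

The differential form leads to a banded matrix, whereas the integral form needs a dense kernel matrix; a dense array is used for both here only to keep the sketch short.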

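The model (5.1) assumes that information about activity at position y propagates instantaneously to position x, but a more realistic model could include a distance-dependent delay:

\[\frac{\partial u(x,t)}{\partial t} = -u(x,t) +\int _{-\infty }^{\infty }w(x - y)f\left (u\left (y,t -\vert x - y\vert /v\right )\right )\mathit{dy} \tag{5.17}\]

where v > 0 is the velocity of propagation of information. Equation (5.17) can be written

\[\frac{\partial u(x,t)}{\partial t} = -u(x,t) +\psi (x,t) \tag{5.18}\]

where

\[\psi (x,t) =\int _{-\infty }^{\infty }\int _{-\infty }^{\infty }K(x - y,t - s)f(u(y,s))\,\mathit{ds}\,\mathit{dy} \tag{5.19}\]

and K(x, t) = w(x)δ(t − |x|∕v) [12, 33]. Recognising that both integrals in (5.19) are convolutions, and making the choice \(w(x) = (1/2)e^{-\vert x\vert }\), one can take Fourier transforms in both space and time and convert (5.19) to

\[\left [ \frac{1}{v^{2}} \frac{\partial ^{2}}{\partial t^{2}} + \frac{2}{v} \frac{\partial }{\partial t} + 1 - \frac{\partial ^{2}}{\partial x^{2}}\right ]\psi (x,t) = \left [1 + \frac{1}{v} \frac{\partial }{\partial t}\right ]f(u(x,t)). \tag{5.20}\]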

This equation was first derived by [30], and these authors may well have been the first to use Fourier transforms to convert neural field models to PDEs. We will not consider delays here, but see [15] for a recent approach in two spatial dimensions.
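The reduction of the delayed model to a PDE can be sketched as follows. The derivation assumes the transform convention \(\hat{g}(k,\omega ) =\iint g(x,t)e^{-i(\mathit{kx}+\omega t)}\,\mathit{dx}\,\mathit{dt}\) and writes ψ(x, t) for the double convolution of K with f(u); the hat notation and the symbol ψ are introduced here for illustration.

\[\begin{aligned}
\hat{K}(k,\omega ) &=\int _{-\infty }^{\infty }\tfrac{1}{2}e^{-\vert x\vert }e^{-i\omega \vert x\vert /v}e^{-\mathit{ikx}}\,\mathit{dx} = \frac{1 + i\omega /v}{(1 + i\omega /v)^{2} + k^{2}}, \\
\left [(1 + i\omega /v)^{2} + k^{2}\right ]\hat{\psi }(k,\omega ) &= (1 + i\omega /v)\,\widehat{f(u)}(k,\omega ), \\
\left [\left (1 + \frac{1}{v} \frac{\partial }{\partial t}\right )^{2} - \frac{\partial ^{2}}{\partial x^{2}}\right ]\psi (x,t) &= \left (1 + \frac{1}{v} \frac{\partial }{\partial t}\right )f(u(x,t)).
\end{aligned}\]

The first line uses the delta function in K to carry out the time integral, the second multiplies the transformed convolution by the denominator of \(\hat{K}\), and the third inverts the transform using iω ↦ ∂∕∂t and \(k^{2}\mapsto -\partial ^{2}/\partial x^{2}\).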

2 Results in One Spatial Dimension

We now present some results of the analysis of (5.14), similar to those in [36]. From now on we make the specific choice of the firing rate function

where κ > 0 and \(h \in \mathbb{R}\) are parameters, and H is the Heaviside step function. The function (5.21) for typical parameter values is shown in Fig. 5.1 (right). Note that if h > 0 then f(0) = 0.

We start with a few comments regarding Eqs. (5.10) and (5.11). Firstly, Eq. (5.10) is reversible under the involution \((u,u^{{\prime}},u^{{\prime\prime}},u^{{\prime\prime\prime}})\mapsto (u,-u^{{\prime}},u^{{\prime\prime}},-u^{{\prime\prime\prime}})\) [18]. Secondly, spatially-localised solutions of (5.10) can be regarded as homoclinic orbits to the origin, i.e. orbits for which u and all of its derivatives tend to zero as x → ±∞. Linearising (5.10) about the origin one finds that it has eigenvalues b ± i and −b ± i, i.e. the fixed point at the origin is a bifocus [34], and thus the homoclinic orbits spiral into and out of the origin. Thirdly, Eq. (5.10) is Hamiltonian, and homoclinic orbits to the origin satisfy the first integral

\[u^{{\prime}}u^{{\prime\prime\prime}} -\frac{(u^{{\prime\prime}})^{2}}{2} - (b^{2} - 1)(u^{{\prime}})^{2} + Q(u) = 0 \tag{5.22}\]

where

\[Q(u) =\int _{0}^{u}\left [(b^{2} + 1)^{2}s - 4b(b^{2} + 1)f(s)\right ]\mathit{ds}. \tag{5.23}\]

This Hamiltonian nature can be exploited to understand the solutions of (5.10)–(5.11) and the bifurcations they undergo as parameters are varied [18]. See for example [11] for more details on homoclinic orbits in reversible systems.
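The eigenvalues quoted for the origin can be checked directly. The sketch below assumes that f vanishes in a neighbourhood of u = 0 (as it does when h > 0), so that the linearisation of (5.10) about the origin has characteristic polynomial \(\lambda ^{4} - 2(b^{2} - 1)\lambda ^{2} + (b^{2} + 1)^{2}\), the form consistent with the stated eigenvalues; the function name is illustrative.

```python
import numpy as np

def origin_eigenvalues(b):
    """Roots of lambda^4 - 2(b^2 - 1) lambda^2 + (b^2 + 1)^2 = 0."""
    coeffs = [1.0, 0.0, -2.0 * (b**2 - 1.0), 0.0, (b**2 + 1.0) ** 2]
    return np.roots(coeffs)

b = 0.5
computed = np.sort_complex(origin_eigenvalues(b))
expected = np.sort_complex(np.array([b + 1j, b - 1j, -b + 1j, -b - 1j]))
print(computed)
```

All four eigenvalues are complex with nonzero real part for b > 0, which is what makes the origin a bifocus.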

We are interested in stationary spatially-localised solutions of (5.14), and how they vary as parameters are varied. Figure 5.2 shows the result of following such solutions as the parameter h (firing threshold) is varied. As was found in [18, 35] the family of solutions forms a “snake” with successively more large amplitude oscillations added to the solution as one moves from one branch of the snake to the next in the direction of increasing max(u). (Note that b, not h, was varied in [18, 35].) Similar snakes of homoclinic orbits have been found in other reversible systems of fourth-order differential equations [10, 42], and Faye et al. [21] very recently analysed snaking behaviour in a model of the form (5.1).

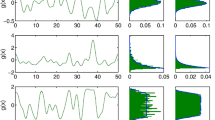

Figure 5.3 shows three solutions from the family shown in Fig. 5.2, all at h = 0.5. Solutions at A and C are stable, and are referred to as “1-bump” and “3-bump” solutions, respectively, since they have 1 and 3, respectively, regions for which u > h. The solution at B is an unstable 3-bump solution. Stability of solutions was determined by linearising (5.14) about them. The curve in Fig. 5.2 shows N-bump solutions which are symmetric about the origin, where N is odd. A similar curve exists for N even (not shown) and asymmetric solutions also exist [17]. In summary, spatially-localised solutions of (5.10) are generic and form families which are connected in a snake-like fashion which can be uncovered as parameters are varied. For more details on (5.10)–(5.11) the reader is referred to [36]. We next consider the generalisation of neural field models to two spatial dimensions and again investigate spatially-localised solutions.

3 Two Dimensional Bumps and Rings

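Neural field equations are easily generalised to two spatial dimensions, and the simplest are of the form

\[\frac{\partial u(\mathbf{x},t)}{\partial t} = -u(\mathbf{x},t) +\int _{\mathbb{R}^{2}}w(\vert \mathbf{x} -\mathbf{y}\vert )f(u(\mathbf{y},t))\,d\mathbf{y} \tag{5.24}\]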

where \(\mathbf{x} \in \mathbb{R}^{2}\) and w and f have their previous meanings. Note that w is a function of the scalar distance between points x and y. Spatially-localised solutions of equations of the form (5.24) have only recently been analysed in any depth [9, 16, 22–24, 29, 35, 38]. The study of such solutions is harder than in one spatial dimension for the following reasons:

- Their analytical construction involves integrals over subsets of the plane rather than over intervals.
- The determination of the stability of, say, a circular stationary solution is more difficult because perturbations which break the rotational symmetry must be considered.
- Numerical studies require vastly more mesh points in a discretisation of the domain.

However, the use of the techniques presented in Sect. 5.1 has been fruitful for the construction and analysis of such solutions. One important point to note is that the techniques cannot be applied directly when the function w is one of the commonly used ones mentioned above. For example, if \(w(x) = e^{-x} -\mathit{Me}^{-\mathit{mx}}\) (of Mexican-hat type when 0 < M < 1 and 0 < m < 1) then its Fourier transform is

where \(\mathbf{k} \in \mathbb{R}^{2}\) is the transform variable. Rearranging and then taking the inverse Fourier transform one faces the question as to what a differential equation containing an operator like \((1 -\nabla ^{2})^{3/2}\) actually means [15]. One way around this is to expand a term like \((1 + \vert \mathbf{k}\vert ^{2})^{3/2}\) around \(\vert \mathbf{k}\vert = 0\) as \(1 + (3/2)\vert \mathbf{k}\vert ^{2} + O(\vert \mathbf{k}\vert ^{4})\) and keep only the first few terms, thus (after inverse transforming) giving one a PDE. This is known as the long wavelength approximation [37]; see [15] for a discussion.
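How quickly the long wavelength approximation degrades can be seen by comparing the exact symbol \((1 + \vert \mathbf{k}\vert ^{2})^{3/2}\) with its two-term expansion. The sketch below is only a numerical illustration of that expansion; the function names and the sample wavenumbers are arbitrary choices.

```python
import numpy as np

def symbol_exact(k):
    """Fourier symbol (1 + |k|^2)^(3/2) of the operator (1 - Laplacian)^(3/2)."""
    return (1.0 + k**2) ** 1.5

def symbol_long_wavelength(k):
    """Two-term expansion about |k| = 0, i.e. the symbol of 1 - (3/2) Laplacian."""
    return 1.0 + 1.5 * k**2

def relative_error(k):
    return np.abs(symbol_long_wavelength(k) - symbol_exact(k)) / symbol_exact(k)

for k in (0.1, 0.5, 1.0, 2.0):
    print(f"|k| = {k:3.1f}: relative error = {relative_error(k):.2e}")
```

The error is negligible for small |k| but becomes a sizeable fraction of the symbol once |k| is of order one, so the resulting PDE is trustworthy only for slowly varying patterns.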

A more fruitful approach is to realise that neural field models are qualitative only, and we can gain insight from models in which the functions w and f are qualitatively correct. Thus we have some freedom in our choice of these functions. The approach of Laing and co-workers [32, 35, 36] was to use this freedom to choose not w, but its Fourier transform. If the Fourier transform of w is chosen so that the Fourier transform of (5.24) can be rearranged and then inverse transformed to give a simple differential equation, and the resulting function w is qualitatively correct (i.e. has the same general properties as connectivity functions of interest) then one can make much progress.

As an example, consider the case when

where A, B and M are parameters [35]. Taking the Fourier transform of (5.24), using (5.26), and rearranging, one obtains

and upon taking the inverse Fourier transform one obtains the differential equation

The function w is then defined as the inverse Fourier transform of its Fourier transform, i.e.

where \(J_{0}\) is the Bessel function of the first kind of order 0 [35]. (w(x) is the Hankel transform of order 0 of F[w].) Figure 5.4 shows a plot of w(x) for parameter values M = 1, A = 0.4, B = 0.1. We see that it is of a physiologically-plausible form, qualitatively similar to that shown in Fig. 5.1 (left). We have thus formally transformed (5.24) into the PDE (5.28).

Fig. 5.4. The function w(x) defined by (5.29) for parameter values M = 1, A = 0.4, B = 0.1

As a start we consider spatially-localised and rotationally-invariant solutions of (5.28), which satisfy

with

where u is now a function of radius r and time t only. We can numerically find and then follow stationary solutions of (5.30)–(5.31) as parameters are varied. For example, Fig. 5.5 shows the effects of varying h for solutions with u(0) > 0 and \(u^{{\prime\prime}}(0) < 0\). We see a snaking curve similar to that in Fig. 5.2, and as we move up the snake, on each successive branch the solution gains one more large amplitude oscillation.

For any particular solution \(\overline{u}(r)\) on the curve in Fig. 5.5, one can find its stability by linearising (5.28) about it. To do this we write

\[u(r,\theta,t) = \overline{u}(r) +\epsilon \nu (r,t)\cos (m\theta ) \tag{5.32}\]

where 0 < ε ≪ 1 and m ≥ 0 is an integer, the azimuthal index. We choose this form of perturbation in order to find solutions which break the circular symmetry of the system. Substituting (5.32) into (5.28) and keeping only first order terms in ε we obtain

Since this equation is linear in ν we expect solutions of the form \(\nu (r,t) \sim \mu (r)e^{\lambda t}\) as t → ∞, where λ is the most positive eigenvalue associated with the stability of \(\overline{u}\) (which we assume to be real) and μ(r) is the corresponding eigenfunction.

Thus to determine the stability of a circularly-symmetric solution with radial profile \(\overline{u}(r)\), we solve (5.33) for integer m ≥ 0 and determine λ(m). If N is the integer for which λ(N) is largest, and λ(N) > 0, then this circularly-symmetric solution will be unstable with respect to perturbations with \(D_{N}\) symmetry, and the radial location of the growing perturbation will be given by μ(r).

For example, consider the solution shown solid in the left panel of Fig. 5.6. This solution exists at h = 0.42, so in terms of active regions (where u > h) this solution corresponds to a central circular bump with a ring surrounding it. Calculating λ(m) for this solution we obtain the curve in Fig. 5.6 (right). (We do not need to be restricted to integer m for the calculation.) We see that for this solution N = 6, and thus we expect a circularly-symmetric solution of (5.28) with radial profile given by \(\overline{u}(r)\) to be unstable at these parameter values, and most unstable with respect to perturbations with \(D_{6}\) symmetry. The eigenfunction μ(r) corresponding to λ(6) is shown dashed in Fig. 5.6 (left). It is spatially-localised around the ring at r ≈ 7, so we expect the instability to appear here.

Fig. 5.6. Left: the solid curve shows \(\overline{u}(r)\) at the point indicated by the circle in Fig. 5.5. The dashed curve shows the eigenfunction μ(r) corresponding to λ(6). Right: λ(m) for the solution shown solid in the left panel. The integer with largest λ is N = 6

Figure 5.7 shows the result of simulating (5.28) with an initial condition formed by rotating the radial profile in Fig. 5.6 (left) through a full circle in the angular direction, and then adding a small random perturbation to u at each grid point. The initial condition is shown in the left panel and the final state (which is stable) is shown in the right panel. We see the formation of six bumps at the location of the first ring, as expected. This analysis has thus successfully predicted the appearance of a stable “7-bump” solution from the initial condition shown in Fig. 5.7 (left). (We used a regular grid in polar coordinates, with domain radius 30, using 200 points in the radial direction and 140 in the angular. The spatial derivatives in (5.28) were approximated using second-order accurate finite differences.)
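The kind of discretisation described in parentheses can be sketched for the basic building block, the Laplacian in polar coordinates. The example below is an illustration under stated assumptions and not the code used for Fig. 5.7: it uses the same grid dimensions (radius 30, 200 radial and 140 angular points), places radial nodes at cell centres to avoid r = 0 (an assumption), evaluates only interior radial points, and checks the result against \(u = r^{3}\cos 2\theta \), for which \(\nabla ^{2}u = 5r\cos 2\theta \). The fourth-order operator in (5.28) would need further work, for example applying such a stencil twice with suitable boundary treatment.

```python
import numpy as np

def polar_grid(radius=30.0, nr=200, ntheta=140):
    """Regular polar grid; radial nodes sit at cell centres so that r = 0 is avoided."""
    dr = radius / nr
    r = (np.arange(nr) + 0.5) * dr
    theta = np.arange(ntheta) * (2 * np.pi / ntheta)
    return r, theta

def polar_laplacian(u, r, theta):
    """Second-order central differences for u_rr + u_r / r + u_tt / r^2.

    u has shape (nr, ntheta); theta is periodic. The first and last radial rows
    are left as NaN because they need boundary conditions not specified here.
    """
    dr = r[1] - r[0]
    dtheta = theta[1] - theta[0]
    lap = np.full(u.shape, np.nan)
    rr = r[1:-1, None]
    u_rr = (u[2:] - 2 * u[1:-1] + u[:-2]) / dr**2
    u_r = (u[2:] - u[:-2]) / (2 * dr)
    u_tt = (np.roll(u, -1, axis=1) - 2 * u + np.roll(u, 1, axis=1))[1:-1] / dtheta**2
    lap[1:-1] = u_rr + u_r / rr + u_tt / rr**2
    return lap

r, theta = polar_grid()
R, T = np.meshgrid(r, theta, indexing="ij")
u = R**3 * np.cos(2 * T)
exact = 5 * R * np.cos(2 * T)
err = np.nanmax(np.abs(polar_laplacian(u, r, theta) - exact))
print(f"max abs error on interior points: {err:.3e}")
```

The largest error occurs at the innermost radial nodes, where the 1∕r and 1∕r² factors amplify the truncation error; this is one reason the centre of a polar grid usually needs special care.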

Fig. 5.9. Left: the solid curve shows \(\overline{u}(r)\) at the point indicated by the point A in Fig. 5.8. The dashed curve shows the eigenfunction μ(r) corresponding to λ(3). Right: λ(m) for the solution shown solid in the left panel. The integer with largest λ is N = 3

We can also consider stationary solutions of (5.30)–(5.31) for which u(0) < 0 and \(u^{{\prime\prime}}(0) > 0\), i.e. which have a “hole” in the centre. Following these solutions as h is varied we obtain Fig. 5.8. As in Fig. 5.5 we see a snake of solutions, with successive branches having one more large amplitude oscillation. We will consider the stability of two solutions on the curve in Fig. 5.8; first, the solution at point A, shown in the left panel of Fig. 5.9. This solution corresponds to one with just a single ring of active neurons. Calculating λ(m) for this solution we obtain the curve in Fig. 5.9 (right), and we see that a circularly-symmetric solution of (5.28) with radial profile given by this \(\overline{u}(r)\) will be most unstable with respect to perturbations with \(D_{3}\) symmetry. The eigenfunction μ(r) corresponding to N = 3 is shown dashed in Fig. 5.9 (left), and it is localised at the first maximum of \(\overline{u}(r)\).

Figure 5.10 shows the result of simulating (5.28) with an initial condition formed by rotating the radial profile in Fig. 5.9 (left) through a full circle in the angular direction, and then adding a small random perturbation to u at each grid point. The initial condition is shown in the left panel and the final state (which is stable) is shown in the right panel. We see the formation of three bumps at the first ring, as expected.

Now consider the solution at point B in Fig. 5.8. This solution, shown in Fig. 5.11 (left), corresponds to one with two active rings. An analysis of its stability is shown in Fig. 5.11 (right) and we see that it is most unstable with respect to perturbations with \(D_{9}\) symmetry, and that these should appear at the outer ring. Figure 5.12 shows the result of simulating (5.28) with an initial condition formed by rotating the radial profile in Fig. 5.11 (left) through a full circle in the angular direction, and then adding a small random perturbation to u at each grid point. The initial condition is shown in the left panel and the final state (which is stable) is shown in the right panel. We see the formation of nine bumps at the second ring, as expected.

Fig. 5.11. Left: the solid curve shows \(\overline{u}(r)\) at the point indicated by the point B in Fig. 5.8. The dashed curve shows the eigenfunction μ(r) corresponding to λ(9). Right: λ(m) for the solution shown solid in the left panel

In summary we have shown how to analyse the stability of rotationally-symmetric solutions of the neural field equation (5.24), where w is given by (5.29), via transformation to a PDE. Notice that for all functions \(\overline{u}\) shown in the left panels of Figs. 5.6, 5.9 and 5.11, λ(0) < 0, i.e. these are stable solutions of (5.30). However, they are unstable with respect to some perturbations which break their rotational invariance. The stable states for all three examples considered consist of a small number of spatially-localised active regions.

Similar results to those presented in this section were obtained subsequently by [38] using a Heaviside firing rate function, which allowed for the construction of an Evans function to determine stability of localised patterns. These authors also showed that the presence of a second, slow variable could cause a rotational instability of a pattern like that in Fig. 5.10 (right), resulting in it rotating at a constant speed. Very recently, instabilities of rotationally-symmetric solutions were addressed by considering the dynamics of the interface dividing regions of high activity from those with low activity, again using the Heaviside firing rate function [14] (see also the Coombes chapter). Several other authors have also recently investigated symmetry breaking bifurcations of spatially-localised bumps [9, 16]. We now consider solutions of two-dimensional neural field equations which are not spatially-localised, specifically, spiral waves.

4 Spiral Waves

The function w used in the previous section was of the decaying oscillatory type (Fig. 5.4). Another form of coupling of interest is purely positive, i.e. excitatory. However, without some form of negative feedback, activity in a neural system with purely excitatory coupling will typically spread over the whole domain. With the inclusion of some form of slow negative feedback such as spike frequency adaptation [13] or synaptic depression [31], travelling pulses of activity are possible [1, 12, 19]. In two spatial dimensions the analogue of a travelling pulse is a spiral wave [2, 3], which we now study. Let us consider the system

where \(\varOmega \subset \mathbb{R}^{2}\) which, in practice, we choose to be a disk, and the firing rate function is

where h and β are parameters. This system is very similar to that in [24] and is the two-dimensional version of that considered in [23, 39]. If we choose the coupling function to be

then, using the same ideas as above (and ignoring the fact that we are not dealing with spatially-localised solutions) (5.34) is equivalent to

We choose boundary conditions

for all θ and t, where R is the radius of the circular domain and we have written u in polar coordinates. The two differences between the system considered here and that in [32] are that here we use the firing rate function F (Eq. (5.36)), which is non-zero everywhere (the function f (Eq. (5.21)) was used in [32]), and the boundary conditions given in (5.39) are different from those in [32].

Fig. 5.13. The function w(r) defined by (5.37)

The function w(r) defined by (5.37) is shown in Fig. 5.13 and we see that it is positive and decays monotonically as r → ∞. For a variety of parameters, the system (5.34)–(5.35) supports a rigidly-rotating spiral wave on a circular domain. To find and study such a wave we recognise that rigidly-rotating patterns on a circular domain can be “frozen” by moving to a coordinate frame rotating at the same speed as the pattern [2, 3, 5]. These rigidly rotating patterns satisfy the time-independent equations

where ω is the rotation speed of the pattern and θ is the angular variable in polar coordinates. Rigidly rotating spiral waves are then solutions of (5.40)–(5.41), together with a scalar “pinning” equation [2, 32] which allows us to determine ω as well as u and a. In practice, one solves (5.41) to obtain a as a function of u and substitutes into (5.40), giving the single equation for u

Having found a solution \(\overline{u}\) of (5.42) its stability can be determined by linearising (5.34)–(5.35) about \((\overline{u},\overline{a})\), where

As we have done in previous sections, we can numerically follow solutions of (5.42) as parameters are varied, determining their stability.

In Fig. 5.14 we show ω as a function of A and also indicate the stability of solutions. Interestingly, there is a region of bistability for moderate values of A. Typical solutions (of both u and a) at three different points on the curve are shown in Fig. 5.15. In agreement with the results in [32] we see that as A (the strength of the negative feedback) is decreased, more of the domain becomes active, and as A is increased, less of the domain is active. The results of varying h (the threshold of the firing rate function) are shown in Fig. 5.16. We obtain results quite similar to those in Fig. 5.14: as h is decreased, more of the domain becomes active, and vice versa, and we also have a region of bistability. Figure 5.17 shows the result of varying τ: for large τ the spiral is unstable. The bifurcations seen in Figs. 5.14, 5.16 and 5.17 are all generic saddle-node bifurcations. In principle they could be followed as two parameters are varied, thus mapping out regions of parameter space in which stable spiral waves exist.
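Following a branch of solutions around a saddle-node bifurcation requires a continuation method that does not parametrise the branch by the bifurcation parameter itself. The sketch below shows pseudo-arclength continuation on a hypothetical scalar toy problem, \(G(x,p) = p - x^{2} = 0\), which has a fold at (x, p) = (0, 0). It is not the chapter's code: in the setting above x would be the vector of unknowns from the discretised PDE (together with ω) and p a parameter such as A, h or τ.

```python
import numpy as np

def G(x, p):
    """Toy problem with a saddle-node (fold) at (x, p) = (0, 0)."""
    return p - x**2

def dG(x, p):
    """Row vector (dG/dx, dG/dp)."""
    return np.array([-2.0 * x, 1.0])

def continue_branch(x0, p0, ds=0.05, steps=100):
    """Pseudo-arclength continuation: secant predictor, Newton corrector."""
    z = np.array([x0, p0], dtype=float)
    g = dG(*z)
    t = np.array([-g[1], g[0]])              # null vector of dG: initial tangent
    t /= np.linalg.norm(t)
    if t[1] > 0:
        t = -t                               # start by decreasing p, toward the fold
    branch = [z.copy()]
    for _ in range(steps):
        z_pred = z + ds * t                  # predictor step along the tangent
        z_new = z_pred.copy()
        for _ in range(20):                  # Newton on the augmented system
            F = np.array([G(*z_new), t @ (z_new - z_pred)])
            J = np.vstack([dG(*z_new), t])
            dz = np.linalg.solve(J, -F)
            z_new += dz
            if np.linalg.norm(dz) < 1e-12:
                break
        t = (z_new - z) / np.linalg.norm(z_new - z)   # secant tangent for next step
        z = z_new
        branch.append(z.copy())
    return np.array(branch)

branch = continue_branch(1.0, 1.0)
i_fold = np.argmin(branch[:, 1])
print(f"fold located near x = {branch[i_fold, 0]:.3f}, p = {branch[i_fold, 1]:.4f}")
```

The augmented Jacobian stays invertible at the fold even though dG∕dx vanishes there, which is why the method passes smoothly from one branch to the other.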

We conclude this section by noting that spiral waves have been observed in simulations which include synaptic depression rather than spike frequency adaptation [8, 31], and also seen experimentally in brain slice preparations [27, 28].

5 Conclusion

This chapter has summarised some of the results from [32, 35, 36], in which neural field equations in one and two spatial dimensions were studied by being converted into PDEs via a Fourier transform in space. In two spatial dimensions we showed how to investigate the instabilities of spatially-localised “bumps” and rings of activity, and also how to study spiral waves. An important technique used was the numerical continuation of solutions of large systems of coupled, nonlinear, algebraic equations defined by the discretisation of PDEs. Since the work summarised here was first published a number of other authors have used some of the techniques presented here to further investigate neural field models [9, 15, 21, 26, 31, 33].

References

1. Amari, S.: Dynamics of pattern formation in lateral-inhibition type neural fields. Biol. Cybern. 27(2), 77–87 (1977)

2. Bär, M., Bangia, A., Kevrekidis, I.: Bifurcation and stability analysis of rotating chemical spirals in circular domains: boundary-induced meandering and stabilization. Phys. Rev. E 67(5), 056126 (2003)

3. Barkley, D.: Linear stability analysis of rotating spiral waves in excitable media. Phys. Rev. Lett. 68(13), 2090–2093 (1992)

4. Beurle, R.L.: Properties of a mass of cells capable of regenerating pulses. Philos. Trans. R. Soc. B: Biol. Sci. 240(669), 55–94 (1956)

5. Beyn, W., Thümmler, V.: Freezing solutions of equivariant evolution equations. SIAM J. Appl. Dyn. Syst. 3(2), 85–116 (2004)

6. Blomquist, P., Wyller, J., Einevoll, G.: Localized activity patterns in two-population neuronal networks. Phys. D: Nonlinear Phenom. 206(3–4), 180–212 (2005)

7. Bojak, I., Liley, D.T.J.: Modeling the effects of anesthesia on the electroencephalogram. Phys. Rev. E 71, 041902 (2005). doi:10.1103/PhysRevE.71.041902. http://link.aps.org/doi/10.1103/PhysRevE.71.041902

8. Bressloff, P.C.: Spatiotemporal dynamics of continuum neural fields. J. Phys. A: Math. Theor. 45(3), 033001 (2012). http://stacks.iop.org/1751-8121/45/i=3/a=033001

9. Bressloff, P.C., Kilpatrick, Z.P.: Two-dimensional bumps in piecewise smooth neural fields with synaptic depression. SIAM J. Appl. Math. 71(2), 379–408 (2011). doi:10.1137/100799423. http://link.aip.org/link/?SMM/71/379/1

10. Burke, J., Knobloch, E.: Homoclinic snaking: structure and stability. Chaos 17(3), 037102 (2007). doi:10.1063/1.2746816. http://link.aip.org/link/?CHA/17/037102/1

11. Champneys, A.: Homoclinic orbits in reversible systems and their applications in mechanics, fluids and optics. Phys. D: Nonlinear Phenom. 112(1–2), 158–186 (1998)

12. Coombes, S.: Waves, bumps, and patterns in neural field theories. Biol. Cybern. 93(2), 91–108 (2005)

13. Coombes, S., Owen, M.: Bumps, breathers, and waves in a neural network with spike frequency adaptation. Phys. Rev. Lett. 94(14), 148102 (2005)

14. Coombes, S., Schmidt, H., Bojak, I.: Interface dynamics in planar neural field models. J. Math. Neurosci. 2(1), 1–27 (2012)

15. Coombes, S., Venkov, N., Shiau, L., Bojak, I., Liley, D., Laing, C.: Modeling electrocortical activity through improved local approximations of integral neural field equations. Phys. Rev. E 76(5), 051901 (2007)

16. Doubrovinski, K., Herrmann, J.: Stability of localized patterns in neural fields. Neural Comput. 21(4), 1125–1144 (2009)

17. Elvin, A.: Pattern formation in a neural field model. Ph.D. thesis, Massey University, New Zealand (2008)

18. Elvin, A., Laing, C., McLachlan, R., Roberts, M.: Exploiting the Hamiltonian structure of a neural field model. Phys. D: Nonlinear Phenom. 239(9), 537–546 (2010)

19. Ermentrout, B.: Neural networks as spatio-temporal pattern-forming systems. Rep. Prog. Phys. 61, 353 (1998)

20. Ermentrout, G.B., Cowan, J.D.: A mathematical theory of visual hallucination patterns. Biol. Cybern. 34, 137–150 (1979)

21. Faye, G., Rankin, J., Chossat, P.: Localized states in an unbounded neural field equation with smooth firing rate function: a multi-parameter analysis. J. Math. Biol. 66(6), 1303–1338 (2013)

22. Folias, S.E.: Nonlinear analysis of breathing pulses in a synaptically coupled neural network. SIAM J. Appl. Dyn. Syst. 10, 744–787 (2011)

23. Folias, S., Bressloff, P.: Breathing pulses in an excitatory neural network. SIAM J. Appl. Dyn. Syst. 3(3), 378–407 (2004)

24. Folias, S.E., Bressloff, P.C.: Breathers in two-dimensional neural media. Phys. Rev. Lett. 95, 208107 (2005). doi:10.1103/PhysRevLett.95.208107. http://link.aps.org/doi/10.1103/PhysRevLett.95.208107

25. Griffith, J.: A field theory of neural nets: I: derivation of field equations. Bull. Math. Biol. 25, 111–120 (1963)

26. Guo, Y., Chow, C.C.: Existence and stability of standing pulses in neural networks: I. Existence. SIAM J. Appl. Dyn. Syst. 4, 217–248 (2005). doi:10.1137/040609471. http://link.aip.org/link/?SJA/4/217/1

27. Huang, X., Troy, W., Yang, Q., Ma, H., Laing, C., Schiff, S., Wu, J.: Spiral waves in disinhibited mammalian neocortex. J. Neurosci. 24(44), 9897 (2004)

28. Huang, X., Xu, W., Liang, J., Takagaki, K., Gao, X., Wu, J.Y.: Spiral wave dynamics in neocortex. Neuron 68(5), 978–990 (2010)

29. Hutt, A., Rougier, N.: Activity spread and breathers induced by finite transmission speeds in two-dimensional neural fields. Phys. Rev. E 82, 055701 (2010). doi:10.1103/PhysRevE.82.055701. http://link.aps.org/doi/10.1103/PhysRevE.82.055701

30. Jirsa, V.K., Haken, H.: Field theory of electromagnetic brain activity. Phys. Rev. Lett. 77, 960–963 (1996). doi:10.1103/PhysRevLett.77.960. http://link.aps.org/doi/10.1103/PhysRevLett.77.960

31. Kilpatrick, Z., Bressloff, P.: Spatially structured oscillations in a two-dimensional excitatory neuronal network with synaptic depression. J. Comput. Neurosci. 28, 193–209 (2010)

32. Laing, C.: Spiral waves in nonlocal equations. SIAM J. Appl. Dyn. Syst. 4(3), 588–606 (2005)

33. Laing, C., Coombes, S.: The importance of different timings of excitatory and inhibitory pathways in neural field models. Netw. Comput. Neural Syst. 17(2), 151–172 (2006)

34. Laing, C., Glendinning, P.: Bifocal homoclinic bifurcations. Phys. D: Nonlinear Phenom. 102(1–2), 1–14 (1997)

35. Laing, C., Troy, W.: PDE methods for nonlocal models. SIAM J. Appl. Dyn. Syst. 2(3), 487–516 (2003)

36. Laing, C., Troy, W., Gutkin, B., Ermentrout, G.: Multiple bumps in a neuronal model of working memory. SIAM J. Appl. Math. 63, 62 (2002)

37. Liley, D., Cadusch, P., Dafilis, M.: A spatially continuous mean field theory of electrocortical activity. Netw. Comput. Neural Syst. 13(1), 67–113 (2002)

38. Owen, M., Laing, C., Coombes, S.: Bumps and rings in a two-dimensional neural field: splitting and rotational instabilities. New J. Phys. 9, 378 (2007)

39. Pinto, D., Ermentrout, G.: Spatially structured activity in synaptically coupled neuronal networks: I. Traveling fronts and pulses. SIAM J. Appl. Math. 62(1), 206–225 (2001)

40. Pinto, D., Ermentrout, G.: Spatially structured activity in synaptically coupled neuronal networks: II. Lateral inhibition and standing pulses. SIAM J. Appl. Math. 62(1), 226–243 (2001)

41. Wilson, H., Cowan, J.: A mathematical theory of the functional dynamics of cortical and thalamic nervous tissue. Biol. Cybern. 13(2), 55–80 (1973)

42. Woods, P., Champneys, A.: Heteroclinic tangles and homoclinic snaking in the unfolding of a degenerate reversible Hamiltonian-Hopf bifurcation. Phys. D: Nonlinear Phenom. 129(3–4), 147–170 (1999). doi:10.1016/S0167-2789(98)00309-1. http://www.sciencedirect.com/science/article/pii/S0167278998003091

43. Zhang, K.: Representation of spatial orientation by the intrinsic dynamics of the head-direction cell ensemble: a theory. J. Neurosci. 16(6), 2112–2126 (1996)

Copyright information

© 2014 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Laing, C.R. (2014). PDE Methods for Two-Dimensional Neural Fields. In: Coombes, S., beim Graben, P., Potthast, R., Wright, J. (eds) Neural Fields. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-54593-1_5

DOI: https://doi.org/10.1007/978-3-642-54593-1_5

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-54592-4

Online ISBN: 978-3-642-54593-1

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)