Abstract

To reduce the misjudgment of non-creased feather quills as creased, a novel decision fusion algorithm is proposed. An improved Radon transform is used to extract moment invariants of the target region in two modes, gray level and gradient (grads), and singular value decomposition is applied to each to obtain feature vectors; crease recognition is then performed separately on the feature vectors of the two modes. Finally, the recognition result of the system is obtained by fusing the two single-mode results at the decision level. Experimental results show that the new method overcomes the limitations of single-mode recognition and effectively reduces the misjudgment of non-crease regions.

14.1 Introduction

The badminton shuttlecock is a labor-intensive product, with about ten detection steps from feather selection to the finished shuttlecock. Among these steps, parameter extraction of the feather quill (referred to as “FQ”) is a key link in feather grading. Traditional detection methods have the following disadvantages: manual operation, high labor intensity, and unstable sorting quality. We have previously studied this problem [1–3]. The FQ is a slender structure with variable width, camber, and curvature, and the boundary between an FQ crease and the background is fuzzy; together these lead to a large number of good FQs being misdetected. Since most FQs in actual production have no creases, a detection method requires not only effective feature extraction but also high accuracy on non-crease samples. Machine vision is widely used for defect detection in electronics manufacturing, machinery manufacturing, textiles, metallurgy, paper, packaging, and agriculture, but its detection algorithms are highly targeted and do not generalize [4–8]. Because the crease boundary is fuzzy, extracting and recognizing creases based on regional shape is a better choice. Moments are often used to represent image features, for example the Hu moment [9] and the Zernike moment [10–12]. However, these invariant moments are computationally expensive and susceptible to noise.

Statistical analysis of FQ creases shows that most creases are straight-line segments of regular width that are approximately perpendicular to the radial physiological textures. In view of this feature, this paper first uses a local-angle Radon transform to extract moment invariants of the target region in two modes, gray level and horizontal gradient, and then uses Singular Value Decomposition (SVD) to obtain a feature vector for each mode, so as to eliminate the influence of the physiological textures. Second, a crease recognition result is obtained from each feature vector. Finally, the recognition result for the image is obtained by fusing the two single-mode results at the decision level. Recognition experiments show that the method has better noise immunity and effectively reduces the misjudgment rate for non-crease samples, so it has practical application value.

14.2 Radon Transform Descriptor

By definition, the Radon transform of an image is determined by a set of projections of the image along lines taken at different angles. Let \( f\left( {x,y} \right) \) be an image. Its Radon transform is defined by [13]:

\[ R\left( {\theta ,t} \right) = \int_{ - \infty }^{\infty } {\int_{ - \infty }^{\infty } {f(x,y)\,\delta \left( {t - x\cos \theta - y\sin \theta } \right)dxdy} } \qquad (14.1) \]

where \( \delta \left( t \right) \) is the Dirac delta-function (\( \delta \left( t \right) = 1 \) if \( t = 0 \) and 0 elsewhere), \( \theta \in \left[ {0,\pi } \right] \) and \( t \in \left( { - \infty ,\infty } \right) \). In other words, \( R\left( {\theta ,t} \right) \) is the integral of f over the line \( L_{{\left( {\theta ,\,t} \right)}} \) defined by \( x\cos \theta + y\sin \theta = t \). An image recognition framework should allow explicit invariance under translation, rotation, and scaling. However, it is difficult to recover all the parameters of these geometric transformations from the Radon transform [see Eq. (14.1)]. To overcome this problem, we use an improved Radon transform [14].
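The line-integral definition can be illustrated numerically. The following is a minimal sketch, assuming a nearest-bin discretization in which each pixel is added to the bin closest to \( t = x\cos \theta + y\sin \theta \); the function names `radon_projection` and `radon_transform` and the centred coordinate system are illustrative choices, not part of the paper.

```python
import numpy as np

def radon_projection(image, theta_deg):
    """Approximate R(theta, t) for one angle by summing pixels into bins of t."""
    img = np.asarray(image, dtype=float)
    h, w = img.shape
    # Pixel coordinates centred on the image so that t is symmetric about 0.
    ys, xs = np.mgrid[0:h, 0:w]
    x = xs - (w - 1) / 2.0
    y = ys - (h - 1) / 2.0
    theta = np.deg2rad(theta_deg)
    # Each pixel lies on the line x*cos(theta) + y*sin(theta) = t.
    t = x * np.cos(theta) + y * np.sin(theta)
    # |t| never exceeds half the image diagonal, so 2*half + 1 bins suffice.
    half = int(np.ceil(np.hypot(h, w) / 2.0))
    bins = np.rint(t).astype(int) + half
    return np.bincount(bins.ravel(), weights=img.ravel(), minlength=2 * half + 1)

def radon_transform(image, angles_deg):
    """Stack the projections: one row per angle, one column per bin of t."""
    return np.stack([radon_projection(image, a) for a in angles_deg])

# Toy 5 x 5 image (not real feather data).
img = np.arange(25, dtype=float).reshape(5, 5)
sino = radon_transform(img, [0, 45, 90])
```

Every row of `sino` sums to the total image intensity, which is the discrete counterpart of the statement that the area \( A_{f} \) can be calculated using any \( \theta \).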

Let the following transform be:

where \( A_{f} = \int_{ - \infty }^{\infty } {R(\theta ,t)dt} = \int_{ - \infty }^{\infty } {\int_{ - \infty }^{\infty } {f(x,y)dxdy} } \). We can show the following properties.

Translation by a vector \( \vec{u} = \left( {x_{0} ,y_{0} } \right) \): for \( g(x,y) = f\left( {x + x_{0} ,y + y_{0} } \right) \),

\( R\left\{ {g(x,y)} \right\} = R_{f} (\theta ,t + x_{0} \cos \theta + y_{0} \sin \theta ) \). Substituting into Eq. (14.2) shows that the transform is unchanged by the translation.

Scaling by \( \lambda \): for \( g\left( {x,y} \right) = f\left( {\frac{x}{\lambda },\frac{y}{\lambda }} \right) \),

\( A_{g} = \lambda^{2} A_{f} \) and \( R\left\{ {g(x,y)} \right\} = \lambda R_{f} \left( {\theta ,\frac{t}{\lambda }} \right) \). Substituting into Eq. (14.2) shows that the transform is unchanged by the scaling.

Rotation by \( \theta_{0} \): \( R_{g} \left( {\theta ,t,\alpha } \right) = R_{f} \left( {\theta + \theta_{0} ,t,\alpha } \right) \).

The area of the image \( A_{f} \) can be calculated using any \( \theta \). To summarize, \( R_{\alpha } \) is an invariant moment under translation and scaling when the transform is normalized with a scaling factor \( \alpha \left( {\alpha \in Z^{ + } ,\alpha > 1} \right) \). A rotation of the image by an angle \( \theta_{0} \) implies a shift of the Radon transform in the variable \( \theta \). In the next section we propose an extension to address this drawback.
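As a worked check of the two invariance properties, the sketch below assumes a particular normalized form for \( R_{\alpha } \). This form is chosen only because it is consistent with the translation and scaling relations stated above; it should not be read as the authors' exact Eq. (14.2).

```latex
% Assumed form (an illustration, not confirmed by the source):
\[
R_{\alpha}(\theta) = \frac{1}{A_f^{(\alpha+1)/2}}
  \int_{-\infty}^{\infty} R_f^{\alpha}(\theta,t)\,dt
\]

% Translation: A_g = A_f, and the shift in t only relabels the integration variable.
\[
\int_{-\infty}^{\infty} R_f^{\alpha}(\theta,\, t + x_0\cos\theta + y_0\sin\theta)\,dt
  = \int_{-\infty}^{\infty} R_f^{\alpha}(\theta,t)\,dt
\]

% Scaling: substitute s = t/\lambda, so dt = \lambda\,ds.
\[
\int_{-\infty}^{\infty} \left[\lambda R_f\!\left(\theta,\tfrac{t}{\lambda}\right)\right]^{\alpha} dt
  = \lambda^{\alpha+1} \int_{-\infty}^{\infty} R_f^{\alpha}(\theta,s)\,ds,
\qquad
A_g^{(\alpha+1)/2} = \lambda^{\alpha+1} A_f^{(\alpha+1)/2}
\]
% The factor \lambda^{\alpha+1} cancels between numerator and denominator.
```

Under this assumed form, \( \alpha = 1 \) gives the constant value 1 for every image, which is consistent with the restriction \( \alpha > 1 \).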

14.3 Proposed Scheme

Taking different values of the scaling factor \( \alpha \) [see Eq. (14.2)], a matrix of invariants \( R = \left[ {R_{2} ,R_{3} ,\; \ldots ,\;R_{\alpha } } \right] \) can be constructed, where \( R_{i} \left( {2 \le i \le \alpha } \right) \) is the i-th order invariant moment. From this matrix we use Singular Value Decomposition (SVD) [15] to extract algebraic features that represent intrinsic attributes of an image. The SVD is defined as \( R = U\Sigma V^{T} \), where \( R \) is an m × n real matrix, U is an m × m real unitary matrix, and V is an n × n real unitary matrix. \( \Sigma = diag\left( {\lambda_{1} ,\lambda_{2} ,\; \ldots ,\;\lambda_{n} ,0,\; \ldots ,\;0} \right) \) is an m × n diagonal matrix containing the singular values \( \lambda_{1} \ge \lambda_{2} \ge \; \ldots \; \ge \lambda_{n} > 0 \). Let \( \eta = \left( {\lambda_{1} ,\lambda_{2} ,\; \ldots ,\;\lambda_{n} } \right) \) be the invariant feature vector of an image.
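The feature extraction step can be sketched with NumPy's SVD routine. The example assumes that the rows of \( R \) index the projection angle and the columns index the order \( i \); the text does not state the layout, so this is an assumption. The matrix values are a toy example, not measured data, and `svd_feature_vector` is an illustrative name.

```python
import numpy as np

def svd_feature_vector(R):
    """Return the singular values of R in descending order (the vector eta)."""
    R = np.asarray(R, dtype=float)
    # compute_uv=False skips U and V, which are not needed for the features.
    return np.linalg.svd(R, compute_uv=False)

# Toy 3 x 2 matrix of invariants (hypothetical values).
R = np.array([[3.0, 0.0],
              [0.0, 4.0],
              [0.0, 0.0]])
eta = svd_feature_vector(R)

# A circular shift of the rows, as a rotation of the image would cause
# under the assumed layout, leaves the singular values unchanged.
eta_shifted = svd_feature_vector(np.roll(R, 1, axis=0))
```

Because a row permutation is an orthogonal transformation, `eta` and `eta_shifted` agree, which is why the singular values absorb the shift in \( \theta \) caused by rotation.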

The raw material for badminton shuttlecocks is usually duck or goose feather, whose radial physiological textures are approximately perpendicular to FQ creases. However, SVD removes the differences between rotated images, which leads to confusion between physiological textures and creases. From Eq. (14.2), the Radon transform is determined by a set of projections at angles \( \theta \in \left[ {0,\pi } \right] \). The proposed scheme, called the local-angle Radon transform (referred to as “LR transform”), is instead determined by a set of projections at orientation angles \( \theta \in \left[ {0^{ \circ } ,\,15^{ \circ } } \right] \cup \left[ {165^{ \circ } ,\,180^{ \circ } } \right] \). Restricting the angular range in this way eliminates the impact of the physiological textures.
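The restricted angle set of the LR transform can be written out explicitly. This is a minimal sketch; the helper name `local_angles` and its parameters are hypothetical, and the 1 degree default step follows the projection interval reported in the experiments.

```python
import numpy as np

def local_angles(step_deg=1.0, width_deg=15.0):
    """Angles in [0, width] and [180 - width, 180] degrees, sampled every step_deg."""
    # Adding half a step to the stop value keeps the end point in the range.
    low = np.arange(0.0, width_deg + step_deg / 2.0, step_deg)
    high = np.arange(180.0 - width_deg, 180.0 + step_deg / 2.0, step_deg)
    return np.concatenate([low, high])

angles = local_angles()
```

The resulting array can be passed as the list of projection angles to whichever Radon routine is in use, so that only near-horizontal projection directions contribute to the invariants.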

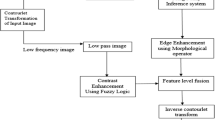

14.4 Crease Detection Algorithm

To improve detection accuracy, the algorithm operates on images captured with side lighting. A brief summary follows. Assume that pretreatment yields a target region, the suspected crease sub-image \( f_{i} \). The LR transform of \( f_{i} \) is then calculated in the gray domain and in the horizontal gradient domain. Combined with SVD, this gives the recognition results \( r_{gray} \) and \( r_{grads} \) of the gray and grads modes, respectively, with \( r_{gray} \in \left\{ {0,1} \right\} \) and \( r_{grads} \in \left\{ {0,1} \right\} \), where 0 represents crease and 1 represents non-crease. Decision-level fusion is a high-level integration with better anti-interference ability and fault tolerance, and it can effectively reflect the different types of information about each aspect of the target.

After the two recognition results \( r_{gray} \) and \( r_{grads} \) are obtained, decision-level fusion gives the final recognition result \( f_{r} \):

\[ f_{r} = \left\{ \begin{array}{ll} 1, & r_{gray} (i) + r_{grads} (i) = 2 \\ 0, & \text{otherwise} \end{array} \right. \]

where 0 represents crease and 1 represents non-crease. The entire algorithm is defined as follows:

(1) Compute the LR transform of \( f_{i} \) in the gray and horizontal gradient domains.

(2) Construct the matrix of invariants R.

(3) Use SVD to extract the invariant features \( \eta_{i} \) and compute \( d_{i} = \left\| {\eta_{i} } \right\| \).

(4) Label \( r_{gray} = 0 \) (or \( r_{grads} = 0 \)) if \( d_{i} > k \), and 1 otherwise; k is an empirically chosen threshold.

(5) Compute \( f_{r} \).
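Steps (3) to (5) can be sketched as follows, assuming the matrices of invariants for the two modes have already been built. The function names, the use of the Euclidean norm for \( d_{i} \), and the separate thresholds `k_gray` and `k_grads` are assumptions made for illustration; the text specifies only an empirical value k.

```python
import numpy as np

CREASE, NON_CREASE = 0, 1  # label convention used in the text

def classify_mode(R, k):
    """Steps (3)-(4): singular values of R, their norm d, threshold against k."""
    eta = np.linalg.svd(np.asarray(R, dtype=float), compute_uv=False)
    d = np.linalg.norm(eta)  # Euclidean norm assumed
    return CREASE if d > k else NON_CREASE

def fuse(r_gray, r_grads):
    """Step (5): non-crease only when both modes report non-crease."""
    return NON_CREASE if r_gray + r_grads == 2 else CREASE

def detect(R_gray, R_grads, k_gray, k_grads):
    """Run both single-mode classifiers and fuse their decisions."""
    return fuse(classify_mode(R_gray, k_gray), classify_mode(R_grads, k_grads))

# Toy matrices (hypothetical values): d = sqrt(2) for I, d = sqrt(18) for 3*I.
small = np.eye(2)
large = 3.0 * np.eye(2)
both_non_crease = detect(small, small, k_gray=4.0, k_grads=4.0)
one_mode_crease = detect(large, small, k_gray=4.0, k_grads=4.0)
```

With the fusion rule as stated, a sub-image is labelled non-crease only when the gray and gradient modes agree on that label.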

14.5 Analysis of Experimental Results

The experimental samples were collected from a feather detection system, and the raw material is duck feather. The system acquires side-lit feather images with a CCD camera. The homemade feather acquisition system and its schematic diagram are shown in Figs. 14.1 and 14.2. From the side-lit image (Fig. 14.3), the FQ image (Fig. 14.4) is obtained by segmentation. The testing database contains 356 non-crease sub-images and 79 crease sub-images. These suspected crease sub-images were obtained during FQ pretreatment and were confirmed by comparison with the physical feathers. Because the FQ is slender and cambered, the edges of the sub-images were discarded to reduce the effects of edges and noise.

14.5.1 Comparison of Feature Vector

The experimental samples are shown in Fig. 14.5. Figure 14.5a is a non-crease sub-image; Fig. 14.5b is a crease image from the FQ root affected by noise, in which the crease spans only half of the width; the crease in Fig. 14.5c is narrower; and the crease in Fig. 14.5d is inclined and not exactly perpendicular to the horizontal direction. After pretreatment and normalization of the images in Fig. 14.5, feature vectors are obtained using the Hu moment, the Zernike moment, and the LR transform. The LR transform is performed in the gray domain and the gradient domain. The projection angle interval of the LR transform is 1 degree, and the singular values used as recognition features are obtained from the 2nd-, 3rd-, and 4th-order invariant moments, as shown in Table 14.1. Because of limited space, Table 14.1 gives only the first two singular values. As the table shows, the singular values obtained by the proposed method clearly separate the samples in both the gray and gradient domains, whereas those obtained from the Hu and Zernike moments show no obvious difference. Table 14.2 shows the singular values after the ordinary Radon transform. Compared with Table 14.1, the singular values in the gray domain become larger because of the influence of the radial physiological texture, and in the horizontal gradient domain the distinction between crease and non-crease becomes smaller. The results show that the LR transform is more suitable for crease recognition than the Radon transform.

14.5.2 Recognition Comparison

We use the recognition rate to measure crease and non-crease recognition performance before and after fusion. Table 14.3 presents the crease and non-crease recognition rates obtained with the LR transform. The table shows that the crease recognition rate after fusion is lower than the rate in the gray domain (hereinafter referred to as gray-rate) and the rate in the gradient domain (hereinafter referred to as gradient-rate), while the non-crease recognition rate after fusion improves considerably over both. Since non-crease feathers far outnumber crease feathers in actual production, the proposed fusion strategy, which improves the non-crease recognition rate, meets the demands of industrial production.
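The recognition rate is not defined formally in the text. A minimal sketch, assuming it means the fraction of sub-images of each class that receive the correct label, is given below; the function name and the toy label lists are hypothetical and are not the paper's data.

```python
import numpy as np

def recognition_rates(true_labels, predicted_labels):
    """Per-class fraction of correctly labelled samples (0 = crease, 1 = non-crease)."""
    t = np.asarray(true_labels)
    p = np.asarray(predicted_labels)
    rates = {}
    for name, label in (("crease", 0), ("non_crease", 1)):
        mask = t == label
        # A class with no samples has no defined rate.
        rates[name] = float(np.mean(p[mask] == label)) if mask.any() else float("nan")
    return rates

# Toy labels for six sub-images (illustrative only).
truth = [0, 0, 1, 1, 1, 1]
predicted = [0, 1, 1, 1, 1, 0]
rates = recognition_rates(truth, predicted)
```

Computing the two rates separately matters here because the classes are unbalanced (356 non-crease against 79 crease sub-images in the testing database), so a single overall accuracy would be dominated by the non-crease class.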

14.6 Conclusion

This paper puts forward a new method for FQ crease recognition. It uses the local-angle Radon transform to extract invariant moments of the FQ and combines them with SVD to obtain features (singular values) that are invariant to translation and scale, while eliminating the disruption caused by radial physiological texture. Compared with the Hu moment and the Zernike moment, the experimental results show that the method is robust and discriminates better. Finally, decision fusion achieves a high recognition rate for non-crease samples. With an average running time of 60 ms in a Visual C++ 6.0 environment, the method has practical value for on-site testing.

References

1. Liu H, Wang R, Li X (2011) Improved algorithm of feather image segmentation based on active contour model. J Comput Appl 32(8):2246–2245 (in Chinese)

2. Liu H, Wang R, He Z (2011) Slender object extraction for image segmentation based on centerline snake model. Opto Electron Eng 38(9):124–129 (in Chinese)

3. Liu H, Wang R, Li X (2011) The finite ridgelet transform for defect detection of quill. ADME2011 9:931–936 (in Chinese)

4. Ruan J (2009) Research on non-planar measurement of surface roughness based on texture index from grey level co-occurrence matrix. Yantai University, Yantai (in Chinese)

5. Zheng XL, Li M, Luo HY et al (2013) Application of a new method based on mathematical morphology in brain tissue segmentation. Chinese J Sci Instrum 31(2):464–469 (in Chinese)

6. Subirats P, Dumoulin J, Legeay V et al (2006) Automation of pavement surface crack detection using the continuous wavelet transform. In: 2006 international conference on image processing, Florence, Italy, pp 3951–3954

7. Zhang H, Wu Y, Kuang Z (2009) An efficient scratches detection and inpainting algorithm for old film restoration. In: Proceedings of the 2009 international conference on information technology and computer science. IEEE Press, Washington, DC, pp 75–78

8. Sorncharean S, Phiphobmongkol S (2008) Crack detection on asphalt surface image using enhanced grid cell analysis. In: 4th IEEE international symposium on electronic design, test and applications, Hong Kong, pp 49–54

9. Hu MK (1962) Visual pattern recognition by moment invariants. IEEE Trans Inf Theor 8(1):179–187

10. Teh CH, Chin RT (1988) On image analysis by the methods of moments. IEEE Trans Pattern Anal Mach Intell 10(4):496–512

11. Kan C, Srinath MD (2002) Invariant character recognition with Zernike and orthogonal Fourier-Mellin moments. Pattern Recogn 35(1):143–154

12. Liao SX, Pawlak M (1998) On the accuracy of Zernike moments for image analysis. IEEE Trans Pattern Anal Mach Intell 20(12):1358–1364

13. Deans SR (1983) The Radon transform and some of its applications. Wiley, New York

14. Lv Y (2008) Shape affine invariant feature extraction and recognition. National Defense Science and Technology University, Changsha (in Chinese)

15. AlShaykh OK, Doherty JF (1996) Invariant image analysis based on Radon transform and SVD. IEEE Trans Circ Syst II Analog Digital Signal Process 43(2):123–133

Copyright information

© 2013 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Yue, H., Wang, R., Zhang, J., He, Z. (2013). Fusion of Gray and Grads Invariant Moments for Feather Quill Crease Recognition. In: Sun, Z., Deng, Z. (eds) Proceedings of 2013 Chinese Intelligent Automation Conference. Lecture Notes in Electrical Engineering, vol 256. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-38466-0_14

DOI: https://doi.org/10.1007/978-3-642-38466-0_14

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-38465-3

Online ISBN: 978-3-642-38466-0

eBook Packages: Engineering, Engineering (R0)