Abstract

In this paper, a semi-supervised dimensionality reduction algorithm for feature extraction, named LRDPSSDR, is proposed by combining local reconstruction with dissimilarity preserving. It captures both local and global structure from labeled and unlabeled samples in the learning process. It sets the edge weights of the adjacency graph by minimizing the local reconstruction error, which preserves the local geometric structure of the samples. In addition, the dissimilarity between samples is represented by maximizing the global scatter matrix, so that the global manifold structure is well preserved. Comparative experiments on two face databases demonstrate the effectiveness of LRDPSSDR.


Keywords

- Local reconstruction

- Dissimilarity preserving

- Semi-supervised dimensionality reduction

- Face recognition

13.1 Introduction

Face recognition has become one of the most challenging problems in the application of pattern recognition. A face image is a high-dimensional vector, so numerous dimension reduction techniques have been proposed over the past few decades [1], among which principal component analysis (PCA) [2] and linear discriminant analysis (LDA) [3] are widely used. Both PCA and LDA assume that the feature space lies on a linearly embedded manifold and aim at preserving global structure. However, many studies have shown that face images possibly reside on a nonlinear submanifold [4]. When PCA and LDA are used for dimensionality reduction in this setting, they fail to discover the intrinsic dimension of the image space.

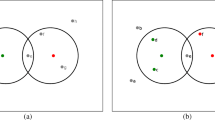

By contrast, manifold learning considers the local information of samples, aiming to directly discover the globally nonlinear structure. The most important manifold learning algorithms include isometric feature mapping (Isomap) [5], locally linear embedding (LLE) [6], and Laplacian eigenmap (LE) [7]. Though these methods are appropriate for representing nonlinear structure, they are defined only on the training samples and cannot provide an explicit map for new testing samples in recognition. Therefore, locality preserving projection (LPP) [8] was proposed, but it focuses only on the local information of training samples. To remedy this deficiency, unsupervised discriminant projection (UDP) [9] was introduced, which considers the local structure as well as the global structure of samples. However, it uses only unlabeled data. This motivates semi-supervised methods, which learn from a small amount of labeled data together with unlabeled data. Such a method can be applied directly in the whole input space, so the out-of-sample problem is effectively solved.

This paper simultaneously investigates two issues. First, how can effective discriminant features be extracted by using labeled samples? Second, how can the local scatter matrix be minimized and the global scatter matrix be maximized simultaneously, so as to preserve both local and global structure information?

The rest of this paper is organized as follows. In Sect. 13.2, we give the details of LRDPSSDR. In Sect. 13.3, experimental results on two commonly used face databases demonstrate the effectiveness and robustness of the proposed method. Finally, the conclusions are summarized in Sect. 13.4.

13.2 Local Reconstruction and Dissimilarity Preserving Semi-supervised Dimensionality Reduction

13.2.1 Local Reconstruction Error

Let \( {\mathbf{X}} = \left[ {x_{1} ,x_{2} , \ldots ,x_{l} ,x_{l\, + \,1} , \ldots ,x_{l\, + \,u} } \right] \) be a set of training samples that includes l labeled samples and u unlabeled samples belonging to c classes. For each sample \( x_{i} \), we find its k nearest neighbors in \( {\mathbf{X}} \), where \( N_{k} (x_{i} ) \) denotes the index set of these neighbors. To preserve the local structure, we design the following objective function:

where \( \left\| \bullet \right\| \) denotes the Euclidean norm, with two constraints:

- (1) \( \sum\limits_{j} {C_{ij} = 1} \), \( i = 1,\;2, \ldots ,l\, + \,u \)

- (2) \( C_{ij} = 0 \), if \( x_{j} \) does not belong to the set of k nearest neighbors of \( x_{i} \).

The samples in the low-dimensional linear embedding space are given as follows:

where \( tr( \bullet ) \) denotes the trace. The local scatter matrix is defined as follows:

where \( {\mathbf{M}} = ({\mathbf{I}} - {\mathbf{C}})^{T} ({\mathbf{I}} - {\mathbf{C}}) \), \( {\mathbf{I}} \) is an \( (l + u) \times (l + u) \) identity matrix, and the matrix \( {\mathbf{C}} \) is calculated by the LLE algorithm [6].
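As a worked form of the quantities defined in this subsection, the standard LLE formulation [6] suggests the expressions below. They are a reconstruction from the surrounding definitions, not a verbatim copy of formulas (13.1) to (13.3), and the linear embedding \( y_{i} = {\mathbf{W}}^{T} x_{i} \) with \( {\mathbf{Y}} = {\mathbf{W}}^{T} {\mathbf{X}} \) is an assumption:

```latex
% Reconstruction error minimized over the weights C (assumed form of the objective)
\min_{\mathbf{C}} \; \sum_{i=1}^{l+u} \Bigl\| x_{i} - \sum_{j \in N_{k}(x_{i})} C_{ij}\, x_{j} \Bigr\|^{2}

% Same error measured in the embedding space, with y_i = W^T x_i (assumed)
\sum_{i=1}^{l+u} \Bigl\| y_{i} - \sum_{j} C_{ij}\, y_{j} \Bigr\|^{2}
  = tr\bigl( \mathbf{Y} (\mathbf{I} - \mathbf{C})^{T} (\mathbf{I} - \mathbf{C}) \mathbf{Y}^{T} \bigr)
  = tr\bigl( \mathbf{W}^{T} \mathbf{X} \mathbf{M} \mathbf{X}^{T} \mathbf{W} \bigr)

% Local scatter matrix
\mathbf{S}_{L} = \mathbf{X} \mathbf{M} \mathbf{X}^{T}
```

The middle identity holds because the i-th column of \( {\mathbf{Y}}({\mathbf{I}} - {\mathbf{C}})^{T} \) equals \( y_{i} - \sum\nolimits_{j} C_{ij} y_{j} \), so the sum of squared column norms is the trace shown.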

With the above two constraints, the local reconstruction weights are invariant to rotation, rescaling, and translation, so they preserve the local structure of the samples.
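The weight computation described above can be sketched in Python with NumPy. This is a minimal illustration of the usual LLE weight solve under the two stated constraints, not the authors' implementation; the function names and the regularization term `reg` are hypothetical additions.

```python
import numpy as np

def lle_weights(X, k, reg=1e-3):
    """Reconstruction weights C (n x n) for samples stored as columns of X.

    Row i holds the weights of the k nearest neighbors of x_i: it sums
    to 1 and is zero outside the neighbor set. `reg` is a hypothetical
    regularizer that keeps the local Gram matrix invertible.
    """
    n = X.shape[1]
    C = np.zeros((n, n))
    # Pairwise squared Euclidean distances between columns.
    sq = np.sum(X**2, axis=0)
    dist = sq[:, None] + sq[None, :] - 2.0 * (X.T @ X)
    np.fill_diagonal(dist, np.inf)  # a sample is not its own neighbor
    for i in range(n):
        nbrs = np.argsort(dist[i])[:k]
        Z = X[:, nbrs] - X[:, [i]]           # neighbors centered on x_i
        G = Z.T @ Z                          # local Gram matrix (k x k)
        G = G + reg * (np.trace(G) + 1e-12) * np.eye(k)
        w = np.linalg.solve(G, np.ones(k))
        C[i, nbrs] = w / w.sum()             # enforce the sum-to-one constraint
    return C

def local_scatter(X, C):
    """S_L = X M X^T with M = (I - C)^T (I - C)."""
    I_minus_C = np.eye(X.shape[1]) - C
    return X @ (I_minus_C.T @ I_minus_C) @ X.T
```

By construction, the trace of `local_scatter(X, C)` equals the total squared reconstruction error of the samples, which gives a quick sanity check on any computed `C`.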

13.2.2 Dissimilarity Preserving

Let \( {\mathbf{X}} = \left[ {x_{1} ,x_{2} , \ldots ,x_{l} ,x_{l\, + \,1} , \ldots ,x_{l\, + \,u} } \right] \) be a matrix in which each column is a training sample, belonging to one of c classes. We represent any \( x_{i} \) in terms of its projection sequence \( G(x_{i} ) \), coefficient vector \( W(x_{i} ,G(x_{i} )) \), and residue \( R(x_{i} ,G(x_{i} )) \). Suppose the elements of \( G(x_{i} ) = \left[ {g_{i1} ,g_{i2} , \ldots ,g_{id} } \right] \) are the eigenvectors corresponding to the d largest eigenvalues in PCA. Thus, each image can be represented as a linear combination:

where \( \hat{x}_{i} \) denotes the approximation of \( x_{i} \), and the residue \( R(x_{i} ,G(x_{i} )) \) is given by

When \( x_{1} \) is projected onto the projection sequence \( G(x_{2} ) \) of \( x_{2} \), we denote the corresponding coefficient vector by \( W(x_{1} ,G(x_{2} )) \) and the residue by \( R(x_{1} ,G(x_{2} )) \). Based on these quantities, we design the dissimilarity measure as follows:

where \( \xi \in \left[ {0,1} \right] \) indicates the relative importance of the residue and the corresponding coefficients. When both \( x_{1} \) and \( x_{2} \) are projected onto the projection sequence \( G(x_{2} ) \) of \( x_{2} \), we have

where \( D_{R} (x_{1} ,x_{2} ) \) is the difference between the residues of \( x_{1} \) and \( x_{2} \), and \( D_{W} (x_{1} ,x_{2} ) \) compares their corresponding coefficients, which is represented as:

Define the objective function of dissimilarity preserving as follows:

where \( {\mathbf{H}} \) is the dissimilarity weight matrix with entries \( {\mathbf{H}}_{ij} = \varphi (x_{i} ,x_{j} ) \).

Further, the global scatter matrix is defined as follows:

where \( {\mathbf{D}} \) is a diagonal matrix with entries \( {\mathbf{D}}_{ii} = \sum\limits_{j} {{\mathbf{H}}_{ij} } \), and \( {\mathbf{L}} = {\mathbf{D}} - {\mathbf{H}} \) is a Laplacian matrix.

As a result, maximizing the global scatter matrix makes nearby samples of the same class as compact as possible and, at the same time, pushes nearby samples of different classes as far apart as possible.
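The Laplacian construction in this subsection can be sketched as follows. The sketch takes the dissimilarity weight matrix H as a given symmetric input, because the measure \( \varphi \) itself is not reproduced here. It also assumes that the global scatter matrix has the usual graph form \( {\mathbf{S}}_{N} = {\mathbf{XLX}}^{T} \); the function name is hypothetical.

```python
import numpy as np

def global_scatter(X, H):
    """Return (S_N, L) with L = D - H and S_N = X L X^T (assumed form).

    X holds one sample per column; H is a symmetric (n x n) matrix of
    dissimilarity weights, taken here as an input.
    """
    D = np.diag(H.sum(axis=1))   # D_ii = sum_j H_ij
    L = D - H                    # graph Laplacian
    return X @ L @ X.T, L
```

For a symmetric H and a linear projection \( y_{i} = {\mathbf{W}}^{T} x_{i} \), the weighted sum \( \tfrac{1}{2}\sum\nolimits_{i,j} {\mathbf{H}}_{ij} \| y_{i} - y_{j} \|^{2} \) equals \( tr({\mathbf{W}}^{T} {\mathbf{S}}_{N} {\mathbf{W}}) \), which is why maximizing the trace spreads apart the projections of samples with large dissimilarity weights.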

13.2.3 The Algorithm of LRDPSSDR

According to the above analysis, we have two scatter matrices based on labeled data and unlabeled data. Combining them with the Fisher criterion yields a semi-supervised learning method. The objective function is designed as follows:

where \( \alpha ,\beta > 0 \) are the regularization parameters. The solution of the optimization problem (13.12) can be obtained from the following eigen-equation:

Based on the above discussion, the proposed LRDPSSDR algorithm is briefly stated below:

Step 1: For the given training data set, use PCA for dimensionality reduction.

Step 2: Calculate the between-class scatter matrix \( {\mathbf{S}}_{b} \) and the total scatter matrix \( {\mathbf{S}}_{t} \) for the labeled data.

Step 3: Construct the local scatter matrix \( {\mathbf{S}}_{L} \) using formula (13.3) and the global scatter matrix \( {\mathbf{S}}_{N} \) using formula (13.11).

Step 4: Form the optimal transformation matrix \( {\mathbf{W}} \) from the d eigenvectors corresponding to the d largest non-zero eigenvalues of formula (13.13).

Step 5: Project the training data set onto the optimal projection vectors obtained in Step 4, and then use the nearest neighbor classifier for classification.
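Steps 2 and 4 can be sketched as below. The scatter computations are the standard Fisher definitions. The combined criterion is an assumption: the sketch solves \( ({\mathbf{S}}_{b} + \beta {\mathbf{S}}_{N} )w = \lambda ({\mathbf{S}}_{t} + \alpha {\mathbf{S}}_{L} )w \), which is one plausible way to combine the four matrices and may differ from formula (13.13). All function names and the `ridge` term are hypothetical.

```python
import numpy as np

def between_and_total_scatter(Xl, labels):
    """Between-class scatter S_b and total scatter S_t of labeled columns Xl."""
    labels = np.asarray(labels)
    mean = Xl.mean(axis=1, keepdims=True)
    Xc = Xl - mean
    S_t = Xc @ Xc.T
    S_b = np.zeros_like(S_t)
    for c in np.unique(labels):
        Xk = Xl[:, labels == c]
        diff = Xk.mean(axis=1, keepdims=True) - mean
        S_b += Xk.shape[1] * (diff @ diff.T)   # weighted by class size
    return S_b, S_t

def top_generalized_eigvecs(A, B, d, ridge=1e-8):
    """Leading d eigenpairs of A w = lambda B w (A symmetric, B symmetric PSD)."""
    n = B.shape[0]
    # Hypothetical small ridge so that the Cholesky factorization succeeds.
    B = B + ridge * (np.trace(B) / n) * np.eye(n)
    Linv = np.linalg.inv(np.linalg.cholesky(B))
    # Reduce to an ordinary symmetric eigenproblem in whitened coordinates.
    vals, vecs = np.linalg.eigh(Linv @ A @ Linv.T)
    order = np.argsort(vals)[::-1][:d]
    return vals[order], Linv.T @ vecs[:, order]

def lrdpssdr_projection(S_b, S_t, S_L, S_N, alpha=0.1, beta=0.1, d=2):
    """ASSUMED criterion: maximize S_b + beta*S_N against S_t + alpha*S_L."""
    _, W = top_generalized_eigvecs(S_b + beta * S_N, S_t + alpha * S_L, d)
    return W
```

The Cholesky reduction keeps the problem symmetric, so the eigenvalues are real and can be sorted directly; the PCA step in Step 1 helps keep the denominator matrix well conditioned.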

13.3 Experiments

In this section, we investigate the performance of our algorithm for face recognition and compare it with PCA, LDA, LPP, and UDP. The KNN classifier is used. The regularization parameters are set to \( \alpha = \beta = 0.1 \), and the weighting parameter of the dissimilarity measure is set to \( \xi = 0.5 \).
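The classification stage can be sketched as a nearest neighbor rule in the projected space. The sketch assumes k = 1 and Euclidean distance, since the value of k is not stated; the function name is hypothetical.

```python
import numpy as np

def nn_classify(W, X_train, y_train, X_test):
    """Label each test column by its nearest projected training column (k = 1)."""
    Y_train = W.T @ X_train
    Y_test = W.T @ X_test
    # Squared Euclidean distances, shape (n_test, n_train).
    d2 = (np.sum(Y_test**2, axis=0)[:, None]
          + np.sum(Y_train**2, axis=0)[None, :]
          - 2.0 * (Y_test.T @ Y_train))
    return np.asarray(y_train)[np.argmin(d2, axis=1)]
```

The recognition rate for a given projection dimension is then the fraction of test columns whose predicted label matches the true one.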

13.3.1 Experiment on the Yale Dataset

The database consists of 165 face images of 15 individuals. These images were taken under different lighting conditions and with different facial expressions. All images are grayscale and normalized to a resolution of 100 × 80 pixels. In the experiment, we select the first five images of each individual as training samples and the remaining six images as testing samples. Some typical images are shown in Fig. 13.1.

Figure 13.2 shows that the recognition rate of LRDPSSDR improves significantly over the other four algorithms as the projection dimension increases. For projection dimensions from 20 to 75, the recognition rate tends to be stable. It reaches its maximum when the projection dimension is 55.

Table 13.1 gives the maximum recognition rates of the five algorithms. According to Table 13.1 and Fig. 13.2, our method achieves the best result.

13.3.2 Experiment on the AR Dataset

The database consists of 3120 face images of 120 individuals. These face images were captured under varying facial expressions, lighting conditions, and occlusions. All images are grayscale and normalized to a resolution of 50 × 40 pixels. In the experiment, we select the first seven images of each individual as training samples and the next seven images (most of which are occluded) as testing samples. Some typical images are shown in Fig. 13.3.

From Fig. 13.4, we can see first that the proposed LRDPSSDR method outperforms PCA, LDA, LPP, and UDP, and second that our method is more robust under different lighting conditions and various facial expressions. The recognition rate rises as the projection dimension increases from 30 to 140, and it reaches its maximum when the projection dimension is 140.

Table 13.2 shows the maximum recognition rates of the five algorithms. Our method again performs better than the other methods, which further verifies the effectiveness and robustness of LRDPSSDR.

13.4 Conclusion

In this paper, we present a semi-supervised learning algorithm LRDPSSDR for dimension reduction, which can make use of both labeled and unlabeled data. The algorithm is realized based on both local reconstruction error and dissimilarity preserving, which not only preserves the intraclass compactness and the interclass separability, but also describes local and global structure of samples.

References

Cevikalp H, Neamtu M, Wilkes M, Barkana A (2005) Discriminative common vectors for face recognition. IEEE Trans Pattern Anal Mach Intell 27(1):4–13

Turk M, Pentland A (1991) Eigenfaces for recognition. J Cogn Neurosci 3(3):72–86

Zheng WS, Lai JH, Yuen PC (2009) Perturbation LDA: learning the difference between the class empirical mean and its expectation. Pattern Recogn 42(5):764–779

Chen H-T, Chang H-W, Liu T-L (2005) Local discriminant embedding and its variants. In: Proceedings of IEEE computer society conference on computer vision and pattern recognition, vol 2, pp 846–853

Tenenbaum JB, de Silva V, Langford JC (2000) A global geometric framework for nonlinear dimensionality reduction. Science 290:2319–2323

Roweis ST, Saul LK (2000) Nonlinear dimensionality reduction by locally linear embedding. Science 290:2323–2326

Belkin M, Niyogi P (2003) Laplacian eigenmaps for dimensionality reduction and data representation. Neural Comput 15(6):1373–1396

He XF, Niyogi P (2003) Locality preserving projections. In: Proceedings of the 16th annual neural information processing systems conference, Vancouver, pp 153–160

Yang J, Zhang D (2007) Globally maximizing, locally minimizing: unsupervised discriminant projection with applications to face and palm biometrics. IEEE Trans Pattern Anal Mach Intell 29(4):650–664

Acknowledgments

We wish to thank the National Science Foundation of China under Grant No. 61175111, and the Natural Science Foundation of the Jiangsu Higher Education Institutions of China under Grant No. 10KJB510027 for supporting this work.


© 2013 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Li, F., Wang, Z., Zhou, Z., Xue, W. (2013). Local Reconstruction and Dissimilarity Preserving Semi-supervised Dimensionality Reduction. In: Sun, Z., Deng, Z. (eds) Proceedings of 2013 Chinese Intelligent Automation Conference. Lecture Notes in Electrical Engineering, vol 256. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-38466-0_13


DOI: https://doi.org/10.1007/978-3-642-38466-0_13


Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-38465-3

Online ISBN: 978-3-642-38466-0

eBook Packages: Engineering, Engineering (R0)