Abstract

Background noise, room reflections, or interfering sound sources represent a challenge for daily one-to-one communication, particularly for hearing-impaired listeners, even when wearing hearing aid devices. Through a modeling approach, this project investigated how peripheral hearing loss impairs the processing of spatial cues in adverse listening conditions. A binaural model in which the peripheral processor can be tuned to account for individual hearing loss was developed to predict localization in anechoic and reverberant rooms. Hearing impairment was accounted for by a loss of sensitivity, a loss of cochlear compression and reduced frequency selectivity. A spatial cue-selection mechanism processed the output of the binaural equalization-and-cancellation processor to evaluate the reliability of the localization information based on interaural coherence. The simulations in an anechoic environment suggested that the sound-source-location estimates become less reliable and blurred in the case of reduced audibility. Simulations in rooms suggested that the broadening of the auditory filters reduces the fidelity of spectral cues and affects the internal representation of interaural level differences. The model-based analysis of hearing-aid processing showed that amplification and compression used to recover audibility also partially recovered the internal representation of the spatial cues in the impaired auditory system. Future work is needed to extend and experimentally validate the model. Overall, the current model represents a first step towards the development of a dedicated research tool for investigating and understanding the processing of spatial cues in adverse listening conditions, with a long-term goal of contributing to solving the cocktail-party problem for normal-hearing and hearing-impaired listeners.

1 Introduction

Ambient noise, room reflections or the presence of multiple interfering talkers are acoustic factors that can make daily communication challenging. Normal-hearing, NH, people can nevertheless typically communicate almost effortlessly in such adverse conditions [1]. In contrast, hearing-impaired, HI, people often experience major speech-communication difficulties, even when they use hearing aids, HAs. The auditory system uses various acoustic cues, such as common temporal onsets and offsets, spectral content, harmonicity as well as spatial information, to decompose an acoustic scene into its components belonging to the different sound sources [1].

The underlying processes involved in auditory scene analysis are still not very well understood; in particular, the effects of hearing impairment on speech communication in adverse listening conditions have not yet been clarified. The present study focuses on the processing of spatial cues in the auditory system. Whereas the processing of relatively simple spatial sounds is reasonably well understood in NH listeners, the consequences of hearing impairment for the processing and perception of spatial sounds are not. An important question is, for example, how sensorineural hearing loss, which occurs at a peripheral processing level and represents the most common type of impairment, affects the processing of spatial cues and the perception of localization. A better understanding of the representation of spatial information in the impaired auditory system could also help to explain the difficulties that HI listeners experience when communicating in adverse conditions.

For NH listeners, localization of single anechoic sounds has been widely studied and can be well predicted by existing binaural, cross-correlation-based models (compare [2–4]). In contrast, only a few studies have considered auditory localization in noisy conditions, for instance [5–11], or in reverberant environments, such as [12–15]. Most of these studies found that localization is mainly affected at low signal-to-noise ratios, SNR, and largely limited by audibility, but a more detailed understanding of the limiting factors is still missing. It is generally assumed that the auditory system has developed various strategies for robust localization in these challenging conditions, which include the (weighted) integration of localization information across time, frequency, and auditory cues, as well as the suppression of wall reflections. The localization of broadband noise with interaural time differences, ITD, that vary across frequency has been described by [16–18], among others. Several studies have shown that localization accuracy is modified by signals preceding and succeeding in time (see [19]). Based on a binaural localization model, [20] proposes that, when background noise is present, integration of localization information across time and/or frequency is required. The integration of localization information across ITDs and interaural level differences, ILD, is, for example, reflected in trading experiments (compare [3]). Different auditory mechanisms that help a listener to localize sounds in reverberant environments are summarized by the precedence effect, PE (see, for instance, [21]). In order to model some aspects of these more advanced auditory processes, mechanisms such as contra-lateral inhibition [22] as well as cue selection [23] have been proposed.

Localization in HI listeners can be reduced as a result of different factors [24–27]. ITD discrimination thresholds may be elevated in HI listeners [28, 29], although thresholds vary widely across subjects and may even be as low as for NH listeners [24, 30, 31]. Localization performance in quiet is only reduced in listeners with unilateral hearing loss or substantial low-frequency hearing loss, for instance [32–34], or with conductive hearing loss [27]. In [35] it is shown that horizontal localization of click trains in HI listeners is basically as good as in NH listeners when the stimuli are presented at most comfortable levels. However, the performance drops at lower levels due to reduced sensitivity, as measured by an audiogram. Considering horizontal localization, [36] showed that HI listeners perform more poorly than NH listeners when localizing click trains in directional white noise. This decrease in performance is most likely linked to a decrease in audibility, which is caused by a decrease in sensitivity as well as an increase in the amount of temporal and spectral masking (see [25, 27]). Several studies have investigated the PE in HI listeners by measuring the localization dominance of the direct sound over a reflection. In general, the results vary widely across both HI and NH listeners, such that most studies could not reveal any significant reduction in localization dominance in HI listeners. However, a recent study by [37] showed a significant reduction in localization dominance in a large number of HI subjects, which was highly correlated with hearing loss. In [38], it is pointed out that, for ongoing sounds, the fine structure of the signal is important for the PE to operate. Given that fine-structure processing is often impaired in HI listeners, it is expected that the PE is less effective.
Since cognitive processes may affect localization in natural environments [39], it may be speculated that reduced cognitive capabilities as well as reduced working-memory capacity, as often observed in elderly HI listeners, will also compromise localization in complex acoustic environments (see [27]). In addition to the above laboratory-based psychoacoustic evidence, questionnaire-based field studies revealed that HI listeners experience localization difficulties in their daily life, which become more severe with increasing hearing loss [40, 41]. Hence, although auditory localization in HI listeners has been widely studied, the detailed underlying processes, particularly in challenging acoustic environments, are still poorly understood.

Sound localization with bilateral HAs has been widely studied with different device types, listening configurations, source signals, algorithms, and microphone positions. For moderate to severe hearing losses, HA amplification effectively restores audibility up to about 4 kHz and, therefore, enables localization (see, for instance, [26]). Subjective studies based on questionnaires generally reported a clear benefit in localization when a second HA is applied [41, 42]. When audibility is not an issue, most psychoacoustic studies agree that, when compared to the unaided case, bilateral amplification slightly deteriorates sound localization performance in the frontal horizontal plane [26, 43–45]. Undisturbed horizontal localization performance has only been reported for HI listeners with rather normal low-frequency hearing and open HA fittings [45, 46], namely, listeners that have access to natural localization cues at low frequencies. According to [47], front-back localization is disturbed by any type of HA due to the obstruction of the pinna by the earmold or the actual HA module. In [47] and [26] it is reported that horizontal localization, in particular front-back confusions, improved for listeners with remaining low- and high-frequency hearing when no earmold occluded the ear canal and, therefore, natural localization cues were available. In [46] and [48], neither a benefit nor a detrimental effect on front-back confusions has been found for various types of HAs. This contradiction with the findings of [26] may be due to the fact that the latter studies applied HAs with an increased bandwidth of 7–8 kHz instead of 5–6 kHz. Both studies also reported that behind-the-ear devices, BTE, produce an increased number of front-back confusions relative to in-the-canal, ITC, in-the-ear, ITE, or in-the-pinna, ITP, devices.
In [44] it is reported that the front-back confusions observed with BTE HAs can be reduced by applying directional microphones, such as cardioids, instead of omni-directional microphones, in particular at high frequencies. Moreover, [44] showed that independent bilateral wide-dynamic-range compression, WDRC, can slightly reduce horizontal localization performance due to compressed ILDs. This effect should be removed when a binaural signal link is applied to coordinate the WDRCs at the two HAs. Super-directional beamformers, which combine the signals arriving at all microphones of the left and right HAs, are able to preserve localization in simple acoustical environments (compare [48–50]). However, it is still unclear how well they preserve localization in complex and, in particular, reverberant environments. Hence, HAs have a significant effect on auditory localization, but how a given device, type of fitting, compensation strategy, and/or advanced signal processing affects an individual HI listener is not well understood.

In order to better understand the consequences of an individual’s hearing loss, including the effect of an HA, an auditory localization model framework would be useful. If successful, such a framework could then also be used to test and optimize the signal processing and compensation strategies applied in an HA for an individual HI listener. As a first step in this direction, a binaural signal processing model framework is proposed in this chapter. The approach is novel in that it analyses NH versus individualized HI processing in conditions of signal detection and localization in noisy and/or reverberant situations. The peripheral processing in the model includes the dual-resonance nonlinear, DRNL, filterbank proposed in earlier monaural studies [51]. The characteristics of the peripheral processor can be adjusted in both channels, representing the left and the right ear, such that it accounts for sensorineural hearing impairment due to a loss of cochlear compression. Such compression loss is commonly associated with broader auditory filters, i.e., decreased frequency selectivity, and reduced sensitivity in terms of audibility. The binaural processor of the model is an equalization-and-cancellation, EC, processor similar to the one proposed by [52]. For the back end, depending on the particular task and outcome measure, the model assumes an optimal detector in conditions of signal detection or discrimination, or a localization unit for the prediction of sound source localization. The localization back end also includes a cue-selection mechanism inspired by the work proposed in [23], selecting reliable spatial information from a complex spatial-excitation pattern.

The overall structure of the model and the main properties of the individual processing steps will be presented first, including the simulation of hearing loss in the peripheral processing stage of the model. The model will be evaluated in classical conditions of binaural signal detection, and then be applied to localization conditions with two concurrent talkers in anechoic and reverberant rooms, assuming either NH or specific types of hearing impairment. Finally, effects of HA signal processing on sound localization will be considered and the benefits and limitations of current compensation strategies for restoring spatial cues will be discussed in the framework of the presented model.

Fig. 1 Block diagram of the proposed model. At the input to the model, the signals can be processed by an HA algorithm or convolved with BRIRs. The left- and right-ear input signals are processed in the peripheral processor, that is, middle-ear transformation, basilar-membrane filtering, filterbank, inner hair-cell transformation, and logarithmic compression, and then compared in an EC-type binaural processor. Two different pathways to the central processor are considered. The optimal detector deals with signal-detection conditions. The cue selector extracts the spatial positions of external sound sources.

2 Binaural Signal Processing Model

2.1 Overall Structure

A block diagram of the processing model is shown in Fig. 1. When appropriate, incoming signals entering the ears can be convolved with binaural room impulse responses, BRIR, and/or passed through individual left- and right-channel HA processing. The structure of the model is similar to previous signal-driven binaural models and can be divided into three parts, namely, peripheral, binaural and central processing. The peripheral processing includes middle-ear transformation, spectral filtering, hair-cell transduction and logarithmic compression. The outputs of the left and right channels feed an EC-type binaural processor that outputs binaural-activity maps along an internal ITD and an internal ILD dimension, denoted by \(\tau \) and \(\alpha \), respectively. The information on the binaural-activity map is then evaluated either by an optimal detector, to predict binaural detection, such as ITD and ILD thresholds or tone-in-noise detection thresholds, or by a cue-selection mechanism that outputs localization information. The details of the processing are presented in the following sections.

2.2 Peripheral Processing

The peripheral processing of the model consists of four main stages. The middle-ear filter simulates the middle-ear transduction and corresponds to the processing proposed by [51]. It is realized by a 512-point finite-impulse-response filter whose coefficients have been fitted to empirical stapes-displacement measurements reported by [53]. Essentially, it is a symmetric bandpass filter that peaks at 800 Hz and has 20-dB/decade slopes.

The processing on the basilar membrane, BM, is simulated by a DRNL filterbank, as proposed in [51]. The nonlinearities of the filterbank are reflected as a change in filter bandwidth with level and by the nonlinear input/output, I/O, function in response to tones. The bandwidth of the filter is equal to that of a \(4\mathrm{th}\)-order gammatone filter at low stimulation levels and increases with level. Typical I/O functions for on-frequency stimulation, i.e., at the characteristic frequency, consist of three segments: a linear segment for input levels below 30–40 dB SPL, a compressive segment for levels between 30–40 and 60–70 dB SPL, and a linear or near-linear segment for higher input levels. The transition levels between the linear and compressive parts depend on the frequency and on the individual listener to which the DRNL parameters are fitted, as outlined further below. An illustration of the auditory filter shapes and I/O functions can be found in Fig. 2 of [54].
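
As a rough illustration of the three-segment I/O function described above, the following sketch builds a piecewise-linear curve in dB. It is not the DRNL filterbank of [51]. The function name, the knee points (35 and 65 dB SPL), the compressive slope (0.25 dB/dB) and the choice to let the non-compressive curve coincide with the compressive one at high levels are all illustrative assumptions rather than fitted parameters.

```python
def io_function_db(level_db, compressive=True,
                   knee_low=35.0, knee_high=65.0, slope=0.25):
    """Piecewise-linear on-frequency I/O curve in dB (illustrative values only)."""
    # Gain that the compressive segment removes between the two knee points
    lost_gain = (1.0 - slope) * (knee_high - knee_low)
    if not compressive:
        # Assumed impaired case: linear at all levels, without the low-level gain
        return level_db - lost_gain
    if level_db <= knee_low:
        return level_db                                   # linear segment
    if level_db <= knee_high:
        return knee_low + slope * (level_db - knee_low)   # compressive segment
    return level_db - lost_gain                           # linear again at high levels


for level in (20.0, 50.0, 80.0):
    print(level, io_function_db(level), io_function_db(level, compressive=False))
```

With these assumed values, the two curves differ most at low input levels and meet above the upper knee point, which is the qualitative pattern the text associates with a loss of compression.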

The hair-cell transduction process is roughly estimated by a half-wave rectification and a second-order lowpass filter with a cutoff frequency of 1 kHz, as used in several previously described auditory models—for instance, [52, 54, 55]. The main effect of this stage is to reduce information on the temporal fine structure, TFS, and extract the envelope of the stimulus representation at high frequencies.
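
The effect of this stage can be sketched in a few lines. The version below is a minimal approximation, assuming that the second-order lowpass is realized as two cascaded first-order sections with a one-pole coefficient derived from the 1-kHz cutoff; the actual filter design used in the model is not specified in the text, and the function name is hypothetical.

```python
import math


def hair_cell(signal, fs, cutoff_hz=1000.0):
    """Half-wave rectify, then apply two cascaded first-order lowpass sections."""
    # One-pole smoothing coefficient for the given cutoff (assumed design)
    a = math.exp(-2.0 * math.pi * cutoff_hz / fs)
    out = [max(x, 0.0) for x in signal]      # half-wave rectification
    for _ in range(2):                       # two first-order sections in series
        state = 0.0
        filtered = []
        for x in out:
            state = (1.0 - a) * x + a * state
            filtered.append(state)
        out = filtered
    return out


def ripple(freq_hz, fs=44100, n=4410):
    """Peak-to-peak fluctuation of the output for a pure tone, after the onset."""
    tone = [math.sin(2.0 * math.pi * freq_hz * k / fs) for k in range(n)]
    y = hair_cell(tone, fs)[n // 2:]
    return max(y) - min(y)


print(ripple(500.0), ripple(4000.0))
```

The residual fluctuation at the tone frequency is much smaller at 4 kHz than at 500 Hz, which illustrates the loss of temporal fine structure at high frequencies described above.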

The last stage of the peripheral processor is a static logarithmic compression. This reflects a simplification of the processing assumed in the models of [55, 56] and [52], where a combination of adaptation loops has been considered to result in either a close-to-logarithmic compression for the processing of stationary portions in the stimuli or in linear processing for the fluctuating portions of the stimuli. The adaptation loops enabled the original models to account for intensity discrimination as well as forward masking data. Such adaptive properties were not considered in the model proposed here. The logarithmic compression used in the present model generates a linear internal mapping of the sound pressure levels of the stimuli. Such a mapping, in combination with the level equalization stage in the binaural processor—described below—realizes an internal representation of the ILDs that corresponds to the ILDs of the acoustic input signals.

A key feature of the proposed model is that the peripheral part can be adapted to approximate the processing of individual NH and HI listeners. The increase in absolute hearing threshold, a reduction or loss of peripheral, BM, compression and a broadening of the auditory filters are accounted for in the peripheral processor. The absolute hearing threshold is partly determined in the model by the gain in the DRNL. A frequency-dependent noise, referred to here as audibility noise, was added to the input signals to simulate increased absolute thresholds. The audibility noise was assumed to be independent across ears and across frequency. The level of the noise was adjusted using the optimal-detector back end of the model framework, such that the detection thresholds for 300-ms pure tones corresponded to the considered individual listener’s audiogram. The loss of compression and the associated increase in auditory filter bandwidths were accounted for through a fit of several parameters of the DRNL model to results from temporal masking curves in different conditions, as described in detail in [57].

An average NH listener with an audiogram as reflected in the ISO standard [58] was chosen to represent NH. The DRNL parameters for normal and impaired hearing were taken from [57]. In particular, subject HI8 was chosen for the present study. This listener was considered to have lost compression and had elevated hearing thresholds of 40 and 60 dB HL at 500 Hz and 4 kHz, respectively.

Fig. 2 Left: I/O functions of the DRNL module. Right: I/O functions of the entire peripheral processor. Functions are shown for NH (solid curves) and HI8 (dashed curves) at 500 Hz (circles) and 4 kHz (diamonds). The dotted curves in the right panel represent results obtained without the presence of audibility noise.

Figure 2 depicts the I/O functions of the DRNL stage for NH and for HI8 at 500 Hz and 4 kHz. The absence of compression for subject HI8 is reflected by the linear functions at both frequencies. The associated reduced frequency selectivity for this listener can be seen in Fig. 9 of [57]. Further, Fig. 2 shows the corresponding I/O functions for the entire peripheral processing block, where the dotted curves represent the I/O functions without the presence of audibility noise, reflecting logarithmic functions of the form \(\mathrm{output} = a\,\log (b\cdot \mathrm{input} + 1)\), with \(a\) and \(b\) depending on frequency and the DRNL parameters. The presence of the audibility noise produces a constant output value for input levels below the absolute hearing threshold of the individual listener at the respective frequency.
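
The logarithmic form quoted above also makes it possible to check, under simple assumptions, why the compression stage yields an internal level difference that is proportional to the acoustic ILD. The derivation below is a sketch: it assumes a natural logarithm, identical \(a\) and \(b\) at the two ears, amplitude-like inputs \(x_L\) and \(x_R\) well above threshold (\(b\,x \gg 1\)), and no audibility noise. The symbols \(x_L\), \(x_R\), \(y_L\) and \(y_R\) are introduced here for illustration only.

\[
y_L - y_R = a\ln (b\,x_L + 1) - a\ln (b\,x_R + 1) \approx a\ln \frac{x_L}{x_R} = \frac{a\ln 10}{20}\,\mathrm{ILD}_{\mathrm{dB}}, \qquad \mathrm{ILD}_{\mathrm{dB}} = 20\log _{10}\frac{x_L}{x_R}.
\]

Under these assumptions the internal difference scales linearly with the ILD in dB, with a factor that depends only on \(a\). Near threshold, where \(b\,x\) is no longer large, the approximation breaks down.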

2.3 The Binaural Processor

The binaural stage represents an EC processor (see, for instance, [52, 59] or [60]). It is implemented in a similar way as in the binaural processing model of [52]. It differs, however, from the model in [52] in the way that ILDs are coded. The preprocessed left- and right-ear signals are compared in the binaural stage at their respective frequency channels. The binaural processor consists of an array of excitation-and-inhibition, EI, units, each assigned a characteristic ITD, \(\tau \) [ms], and a characteristic ILD, \(\alpha \) [dB]. As a result, the binaural processor realizes a discrete equalization in time and level, followed by the cancellation operation that calculates the difference between the equalized left- and right-ear signals. The difference is squared to obtain the power of the binaural signal. The output of a single EI unit is thus the squared difference between the time- and level-equalized signals \(L_{i}(t)\) and \(R_{i}(t)\), where \(L_{i}(t)\) denotes the left-ear preprocessed signal from frequency channel \(i\) and \(R_{i}(t)\) denotes the corresponding right-ear preprocessed signal.

In contrast to the binaural processor in [52], internal ILDs, \(\alpha \), are linearly processed. This processing, in combination with the logarithmic mapping of level realized by the logarithmic compression in the peripheral processor, provides a mapping of the physical ILDs into \(\alpha \)-values. Furthermore, a 30-ms long double-sided exponential window limits the temporal resolution of the binaural processor, a phenomenon often referred to as binaural sluggishness [61].
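
The equalization, cancellation and squaring steps described above can be written compactly. The expression below is only a sketch consistent with that verbal description and should not be read as the chapter's own equation: the symmetric split of \(\tau \) and \(\alpha \) between the two ears and the sign convention are assumptions, \(\alpha \) is taken in the units of the log-compressed signals, and the 30-ms temporal window and the internal noise are omitted.

\[
E_{i}(t,\tau ,\alpha ) = \left[ \left( L_{i}\!\left( t+\tfrac{\tau }{2}\right) - \tfrac{\alpha }{2}\right) - \left( R_{i}\!\left( t-\tfrac{\tau }{2}\right) + \tfrac{\alpha }{2}\right) \right] ^{2}
\]

With this convention, \(E_{i}\) vanishes for the unit whose \(\tau \) and \(\alpha \) match the interaural delay and the internal level offset of a single source, and grows as the two equalized signals become less similar.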

The binaural processor outputs frequency- and time-dependent binaural-activity maps along the \(\tau \)- and \(\alpha \)-axes, whereby the activity, \(E\), provides an estimate of the interaural coherence. Zero activity corresponds to interaural signals that are perfectly correlated, and an increasing activity reflects a reduced interaural coherence. The location of the minimum activity thus provides an estimate of the considered source direction. The resolution limit of the model is provided by a constant-variance internal noise added to the binaural-activity map as an independent signal at the output of each EI element.
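
How the minimum of such a map points to the interaural parameters of a source can be sketched with a small grid search. The code below is a simplified stand-in rather than the model's implementation: delays are expressed as integer sample lags applied to the right-ear signal only, the activity is averaged over the whole excerpt instead of a 30-ms window, no internal noise is added, and the function names and sign convention are assumptions.

```python
import math


def ei_activity(left, right, lag, alpha):
    """Mean squared difference after a lag (samples) and a level offset (alpha)."""
    total, count = 0.0, 0
    for n in range(len(left)):
        m = n - lag                      # right-ear sample paired with left[n]
        if 0 <= m < len(right):
            diff = (left[n] - alpha / 2.0) - (right[m] + alpha / 2.0)
            total += diff * diff
            count += 1
    return total / count


def point_of_minimum_activity(left, right, lags, alphas):
    """Return (activity, lag, alpha) of the EI unit with the lowest activity."""
    return min((ei_activity(left, right, lag, a), lag, a)
               for lag in lags for a in alphas)


# Toy input: the left signal is the right one delayed by 3 samples, offset by 2 units
right = [math.sin(0.3 * n) + 0.5 * math.sin(0.71 * n) for n in range(200)]
left = [(right[n - 3] if n >= 3 else 0.0) + 2.0 for n in range(200)]
activity, lag, alpha = point_of_minimum_activity(
    left, right, range(-5, 6), [-4.0, -2.0, 0.0, 2.0, 4.0])
print(activity, lag, alpha)
```

For this toy input the minimum lies at the unit whose lag and level offset match the imposed values, and the activity there is essentially zero, in line with the statement that zero activity corresponds to perfectly correlated interaural signals.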

2.4 Central Processing

Two central processors are integrated in the model and applied to the output of the monaural and binaural preprocessing: an optimal detector, effective in conditions of signal detection, and a localization device including a spatial-cue selection process for the prediction of sound source localization in adverse conditions.

The optimal detector corresponds to the one described in [52]. The binaural detector retrieves the information provided in the binaural-activity map. Following the monaural signal paths, a 10-ms-long double-sided exponential window is applied before the detection stage to limit monaural temporal resolution in both channels. Furthermore, a similar integrator with a time constant of 30 ms is part of the binaural-activity map generation. Both monaural and binaural information are optimally combined across time and frequency channels. Details of the implementation can be found in [52].

The activity represented in the binaural-activity map is minimal at those values of \(\tau \) and \(\alpha \) that correspond to the ITD and ILD created by the sound sources. The localization of a sound source is therefore achieved by finding the \(\tau \) and \(\alpha \) values corresponding to points of minimum activity, PMAs, on the binaural-activity map. For convenience, the extracted \(\tau \)- and \(\alpha \)-values of the PMAs will be referred to as ITD and ILD throughout this chapter. The amount of activity at the PMA provides a measure of the interaural cross-correlation or coherence, whereby a low activity refers to a high coherence. Based on this coherence measure, a cue-selection mechanism that is similar to the one proposed by [23] is implemented here. The cue selector is used to disentangle spatial information in adverse acoustic conditions including room reverberation, multiple talkers or background noise. Consequently, only instantaneous localization predictions that provide a coherence above a pre-defined threshold are considered, that is, PMAs on the binaural-activity map whose activity is below a pre-defined threshold. The threshold depends on frequency and the considered acoustic environment (see [23]). The cue-selection operation is thus assumed to increase the reliability of the localization estimation.
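
The selection rule described above amounts to a simple thresholding of the activity at the PMA. The sketch below assumes that each time frame has already been reduced to a tuple of minimum activity, ITD and ILD; the tuple layout, the function names and the numerical values are hypothetical.

```python
def select_cues(frames, threshold):
    """Keep the (itd, ild) pairs whose minimum activity is below the threshold."""
    return [(itd, ild) for activity, itd, ild in frames if activity < threshold]


def fraction_selected(frames, threshold):
    """Proportion of frames judged reliable by the cue selector."""
    return len(select_cues(frames, threshold)) / len(frames)


# Hypothetical frames: (minimum activity, ITD in ms, ILD in dB)
frames = [(0.1, 0.375, 5.0), (0.9, 0.0, 0.0), (0.2, -0.375, -5.0)]
selected = select_cues(frames, threshold=0.5)
print(selected, fraction_selected(frames, threshold=0.5))
```

In this toy case the middle frame, with high activity and therefore low coherence, is discarded, while the two frames dominated by a single source are kept.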

3 Model Evaluation

3.1 ITD and ILD Detection

Here the model’s ability to predict just-noticeable differences for ITDs and ILDs is studied. Model predictions for ITD and ILD detection thresholds are shown in Fig. 3. The left panel of Fig. 3 shows ITD detection thresholds as obtained with 1-ERB-wide noise-band stimuli as a function of the center frequency of the noise between 150 Hz and 1.5 kHz. For the measured and simulated data, a 2-down 1-up adaptive procedure was used in combination with a 3-alternative forced-choice paradigm. Each presentation interval was 300 ms long. The predicted frequency dependence of the ITD threshold corresponds well to that observed in the measured data. The threshold function shows the well-known pattern with a minimum at about 1 kHz. The threshold decrease with increasing frequency up to 1 kHz results from the constant absolute-phase sensitivity of the model. The threshold increases for frequencies above 1 kHz, which is due to the increasing loss of temporal fine structure that leads to a loss of information regarding the carrier signal. This effect is modeled by the low-pass filtering in the hair-cell transduction stage.
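
The tracking rule of a 2-down 1-up procedure can be sketched as follows. This is a generic illustration, not the authors' experimental code: the `respond` callback, which would report whether the target interval was picked in a 3-alternative trial, the fixed step size, the stopping rule and the averaging of reversal values are all assumptions.

```python
def two_down_one_up(respond, start, step, n_reversals=6, max_trials=1000):
    """Run a 2-down 1-up track; return the mean of the reversal values."""
    value, correct_run, direction = start, 0, 0
    reversals = []
    for _ in range(max_trials):
        if len(reversals) >= n_reversals:
            break
        if respond(value):
            correct_run += 1
            if correct_run < 2:
                continue              # two correct responses in a row are needed
            new_direction = -1        # make the task harder
        else:
            new_direction = +1        # make the task easier after one error
        correct_run = 0
        if direction != 0 and new_direction != direction:
            reversals.append(value)   # the track changes direction at this value
        direction = new_direction
        value += new_direction * step
    return sum(reversals) / len(reversals) if reversals else None


# Deterministic toy observer: correct whenever the cue exceeds 4.5 units
estimate = two_down_one_up(lambda v: v >= 4.5, start=10, step=1)
print(estimate)
```

With a real, probabilistic observer such a rule converges on the 70.7 %-correct point of the psychometric function; the deterministic observer used here simply makes the track oscillate around its fixed limit.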

Fig. 3 Left: ITD-detection thresholds as a function of the center frequency of one-ERB-wide noise bands. Own data are represented by diamonds and data from [62] by squares. Right: ILD-detection thresholds. Experimental data are represented by open symbols and model data by filled symbols.

The right panel of Fig. 3 shows measured and simulated ILD thresholds. The model accounts for the data very well for frequencies up to about 1 kHz, but discrepancies can be observed at higher frequencies. Above 2 kHz, the model predicts an ILD threshold that increases with frequency up to about 4 dB, whereas the measured data stay roughly constant within 1–2 dB. The properties of the assumed middle-ear transfer function, the DRNL filter and the hair-cell transduction stage cause the continuous increase of the simulated ILD threshold. While the discrepancies should be minimized in future model developments, they are considered moderate for the purpose of the present study. However, one of the consequences of the mismatch is that the cue-selection mechanism will slightly underestimate the reliability of ILD information above 1 kHz.

3.2 Localization with Normal Hearing

The baseline localization ability of the model is presented for an NH listener in an anechoic environment and in a room. Similar to [23], the acoustic scenario consists of two concurrent talkers located in the horizontal plane. Each talker utters a different phonetically balanced sentence taken from [63], recorded by the same male speaker. The waveforms of the anechoic-speech excerpts of the two talkers are shown in Fig. 4. The speech signals are 2 s long and were sampled at 16 kHz. Due to the intrinsic amplitude modulations in speech signals, the energy fluctuations over time can greatly differ between the two talkers. These specific waveforms were chosen to have instants where only one speaker dominates as well as instants when the speech signals overlap.

Fig. 4 Temporal waveforms of the left and right interfering speakers, taken from [63]

Two acoustic environments were considered: an anechoic environment and a reverberant room. In the anechoic environment, the location of the speaker was simulated by imposing ITDs corresponding to the difference in arrival time at the two ears [64] and imposing ILDs taken from measurements of [65]. The two talkers were symmetrically located at \(\pm 45^{\circ }\) azimuth angle in the horizontal plane, which corresponds to ITDs of \(\pm \)0.375 ms and ILDs of \(\pm \)5 dB, assuming a head diameter of 18 cm. All localization simulations were conducted without internal binaural noise.
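
The quoted ITD can be reproduced with a short calculation. The sketch below assumes a simple far-field path-difference rule, ITD = d sin(azimuth) / c, and a speed of sound of 340 m/s; the text does not reproduce the formula taken from [64], so both the rule and the constant are assumptions that happen to match the stated value.

```python
import math


def itd_ms(azimuth_deg, head_diameter_m=0.18, speed_of_sound=340.0):
    """Path-difference approximation of the ITD in milliseconds (assumed rule)."""
    path_difference = head_diameter_m * math.sin(math.radians(azimuth_deg))
    return 1000.0 * path_difference / speed_of_sound


print(round(itd_ms(45.0), 3), round(itd_ms(-45.0), 3))
```

For an 18-cm head diameter and a source at 45 degrees, this yields roughly 0.37 ms, close to the 0.375 ms used in the simulations.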

Fig. 5 Top: Activity on binaural-activity maps at the PMAs. Middle: The same but for ILD positions of the PMAs. Bottom: The same for ITD positions. Data are shown for an NH listener at 500 Hz (left) and 4 kHz (right). Bold sections of the curves represent the information selected by the cue-selection mechanism. The horizontal dashed line in the top panels represents the cue-selection threshold. Horizontal dashed lines in the middle and bottom panels represent the actual locations of the two talkers.

The information as received, processed, and selected by the cue-selection mechanism as a function of time is shown in Fig. 5 at 500 Hz and 4 kHz. The minimum activity, MA, on the binaural-activity map is shown in the top panels. The middle and bottom panels show the positions of the PMAs along the \(\alpha \)-axis, corresponding to the estimated ILD of the sound sources, and along the \(\tau \)-axis, corresponding to the estimated ITD of the sound sources, respectively. As in [23], a cue-selection threshold was arbitrarily chosen for each frequency, represented by the horizontal dashed line in the top panels. Bold lines indicate the EI activity below the selection threshold, and the corresponding time instants were considered as moments at which the localization information was reliable. At these time instants, the estimated ITD and ILD were selected by the cue-selection mechanism (bold sections of the curves in the middle and bottom panels). For this anechoic acoustical scenario, 65–75 % of the localization information was selected by the cue-selection mechanism. Localization information was rejected when the MA was higher than the selection threshold, which occurred when both speakers were speaking simultaneously, for example at 0.5, 1.4 or 1.6 s.

Fig. 6 Histograms of the selected ITDs and ILDs at 500 Hz and 4 kHz. The stimuli consisted of two talkers placed symmetrically at \(\pm 45^{\circ }\) in the horizontal plane in an anechoic environment, with the actual sound locations indicated by the dashed lines. The source location estimates are shown for an NH listener in the top-right window of each panel by the distinct darker spots.

The ITDs and ILDs that were estimated as reliable by the cue-selection mechanism were accumulated over time into histograms (Fig. 6). ITD and ILD histograms are shown for 500 Hz and 4 kHz. Both histograms show two peaks matching the actual locations of the two speakers, represented by the dashed lines. The two-dimensional histograms, shown in the top-right part of each panel, combine the individual ITD and ILD histograms. Grey shades code the frequency of the selected ITDs and ILDs, with darker areas representing a larger number of occurrences. At both frequencies, two distinct darker spots are visible and represent the localization estimates of the two speakers. The size of the darker spots, reflecting the localization blur, strongly depends on the duration of the temporal integrator of the binaural processor for this simulation, which was conducted in an anechoic environment and for an NH listener. A shorter integration time would increase the localization blur. Moreover, the statistics of the ITDs and ILDs could also be used as an input parameter to other processing stages, for instance a speech processor, as discussed in [66], this volume.
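
The accumulation of selected cues into a two-dimensional histogram can be sketched as follows. The bin widths (0.05 ms and 1 dB), the function name and the example values are assumptions chosen for illustration; the text does not state the resolution used for Fig. 6.

```python
from collections import Counter


def cue_histogram(selected, itd_step=0.05, ild_step=1.0):
    """Count selected (ITD in ms, ILD in dB) pairs on a regular grid of bins."""
    counts = Counter()
    for itd, ild in selected:
        # Integer bin indices along the ITD and ILD axes
        key = (round(itd / itd_step), round(ild / ild_step))
        counts[key] += 1
    return counts


# Hypothetical selected cues clustering around two source positions
selected_cues = [(0.37, 5.2), (0.36, 4.9), (-0.36, -5.1)]
histogram = cue_histogram(selected_cues)
print(histogram.most_common(1))
```

Each key is a pair of bin indices, so multiplying by the bin widths recovers the ITD and ILD at the center of the most populated bins, which play the role of the darker spots described above.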

The localization of the two speakers was also simulated for a reverberant room. The same two speech signals as considered for the simulations in the anechoic environment were convolved with binaural room impulse responses, BRIRs. The speakers were placed at a distance of 1 m and, as in the anechoic environment, at \(\pm 45^{\circ }\) on the horizontal plane in front of the listener. The BRIRs were derived from a simulated cafeteria with a reverberation time of about 0.45 s. The cafeteria was simulated with the room-acoustic simulation software ODEON and translated into binaural signals using an HRTF data set measured with a Brüel & Kjær 4128C head-and-torso simulator, HATS, with purpose-built behind-the-ear, BTE, HA dummies from Phonak mounted above the HATS' pinnae [67]. Here, the BRIRs recorded with the HATS in-ear microphones were used. Note that in Sect. 4, which considers the effect of HAs, the corresponding BRIRs recorded with the frontal BTE microphones are used.
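Rendering the two talkers through BRIRs amounts to one convolution per ear and per talker, followed by a sum. The sketch below assumes each BRIR is stored as an array of shape `(n_taps, 2)` with left and right channels; the function names and the two-tap toy responses are hypothetical and stand in for the measured cafeteria BRIRs.

```python
import numpy as np

def spatialize(signal, brir):
    """Convolve a mono signal with a two-channel BRIR of shape (n_taps, 2)."""
    left = np.convolve(signal, brir[:, 0])
    right = np.convolve(signal, brir[:, 1])
    return np.stack([left, right], axis=1)

def mix_talkers(signals, brirs):
    """Sum the binaural renderings of several talkers, zero-padding to the longest."""
    rendered = [spatialize(s, h) for s, h in zip(signals, brirs)]
    n = max(r.shape[0] for r in rendered)
    out = np.zeros((n, 2))
    for r in rendered:
        out[: r.shape[0]] += r
    return out

# Toy example: the second talker uses the mirrored BRIR of the first one.
speech = np.array([1.0, 2.0, 3.0])
brir_a = np.array([[1.0, 0.0], [0.0, 0.5]])  # louder and earlier in the left ear
brir_b = brir_a[:, ::-1]                     # mirrored: louder and earlier in the right ear
binaural_mix = mix_talkers([speech, speech], [brir_a, brir_b])
```

For long speech signals and room responses, an FFT-based convolution would be the usual choice for speed; `np.convolve` is used here only to keep the sketch dependency-free.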

Histograms of the selected ITDs and ILDs at 500 Hz and 4 kHz. The stimuli consisted of two speakers symmetrically separated by 45\(^\circ \) in the horizontal plane in a reverberant environment, a cafeteria. The source location estimates are shown for a NH listener in the top-right window of each panel by the distinct darker spots.

Figure 7 shows the ITD and ILD histograms as well as the localization estimates for the simulation in the reverberant environment. The layout of the figure corresponds to that of Fig. 6. As in the anechoic environment, the two-dimensional histograms show two distinct darker spots representing the location estimates of the two speakers, although the localization blur is larger here. This increase is due to the reflections from hard surfaces that occur in the reverberant room and reduce the correlation between the left- and right-ear signals [68]. Due to the room reflections, the activity on the binaural-activity map is elevated, and the cue-selection thresholds were increased by about 30 % relative to the simulations in the anechoic condition. For this room condition, the cue-selection mechanism judged only 40–60 % of the information to be reliable, that is, about 10–20 % less than under the anechoic condition.

3.3 Localization with Hearing Impairment

The effect of peripheral hearing impairment on localization is discussed in the following. First, the effects of a loss of sensitivity in an anechoic environment are analyzed in the framework of the model. Then, the effect of reduced frequency selectivity on spectral cues and on the internal representation of the ILDs is studied. The acoustical scenarios are the same as the ones considered above for NH listeners.

3.3.1 Effects of Reduced Sensitivity

The simulations consider an HI listener with hearing thresholds of 40 and 60 dB HL at 500 Hz and 4 kHz, respectively, corresponding to HI8 in [57].

Top Activity on binaural-activity maps at the PMAs. Middle ILD positions of the PMAs. Bottom ITD positions of the PMAs. Data are shown for an HI listener with hearing thresholds of 40 and 60 dB HL at 500 Hz (left) and 4 kHz (right). Bold lines represent the information selected by the cue-selection mechanism. The horizontal dashed line in the top panels represents the cue-selection threshold. Horizontal dashed lines in the middle and bottom panels represent the actual locations of the two talkers

Figure 8 shows the information received, processed, and selected by the cue-selection mechanism in the simulations for the listener with reduced sensitivity. The corresponding results for the NH listener were shown in Fig. 5. As visible in the top panels, the loss of sensitivity leads to an overall increase and a compression of the dynamic range of activity in the PMAs. Consequently, the activity fluctuates only slightly around its average value, which lies above the cue-selection threshold, effectively de-activating the localization ability of the model. Assuming the same cue-selection threshold for NH and HI listeners, the simulations suggest that it should be difficult to reliably evaluate the localization of the two speakers for the considered HI listener. Furthermore, for the simulation of the HI listener, even if the cue-selection threshold were increased, the cue-selection mechanism would still be greatly impaired due to the compression in the activity.

Histograms of the ITDs and ILDs present on the binaural-activity maps for a simulated HI listener with hearing thresholds of 40 and 60 dB HL at 500 Hz and 4 kHz, respectively. The stimuli were the same as in Fig. 6. The source location estimates are shown in the top-right window of each panel by the darker spots

Figure 9 shows the time-accumulated histograms of the ITDs and ILDs. Because the reduced sensitivity de-activated the cue-selection mechanism, all ITDs and ILDs of the PMAs were considered here. At 500 Hz, the ITD and ILD histograms resemble a single Gaussian distribution rather than a bimodal distribution. The two-dimensional histogram in the top-right corner shows a single cloud of spatial information, indicating a low interaural correlation of the input signals of the binaural processor. Thus, this estimate of a single diffuse sound source is a result of the reduced sensitivity modeled by the audibility noise, which was assumed to be independent between the left and right ears. In a condition of severe sensitivity loss, the audibility noise dominates the internal representations and causes the decorrelated pattern on the binaural-activity maps.
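The decorrelating effect of independent noise in the two ears can be illustrated numerically. The sketch below computes the interaural coherence as the maximum of the normalized interaural cross-correlation for lags within \(\pm \)1 ms, following the definition given in the Notes. The white-noise stand-in for speech, the 0.5-ms delay, and the noise level are assumptions, and the code operates on broadband waveforms rather than on the model's internal representations.

```python
import numpy as np

def interaural_coherence(left, right, fs, max_lag_ms=1.0):
    """Maximum of the normalized interaural cross-correlation within +/- max_lag_ms."""
    left = np.asarray(left, dtype=float)
    right = np.asarray(right, dtype=float)
    n = len(left)
    max_lag = int(round(max_lag_ms * 1e-3 * fs))
    norm = np.sqrt(np.sum(left ** 2) * np.sum(right ** 2))
    best = -1.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            c = np.sum(left[lag:] * right[: n - lag])
        else:
            c = np.sum(left[:lag] * right[-lag:])
        best = max(best, c / norm)
    return best

fs = 16000
rng = np.random.default_rng(1)
source = rng.standard_normal(fs)                     # 1 s of noise standing in for speech
left = source
right = np.concatenate([np.zeros(8), source[:-8]])   # right ear delayed by 8 samples (0.5 ms)
ic_clean = interaural_coherence(left, right, fs)

# Independent noise in each ear, here with the same power as the signal (assumption).
left_noisy = left + rng.standard_normal(fs)
right_noisy = right + rng.standard_normal(fs)
ic_noisy = interaural_coherence(left_noisy, right_noisy, fs)
```

With equal signal and noise power, the coherence falls from close to 1 to roughly 0.5. Raising the noise level further drives it towards 0, which corresponds to the single diffuse cloud described for the 500-Hz channel.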

The audibility noise is less dominant at 4 kHz, where the ITD histogram shows two peaks at the actual positions of the two talkers. The ILD histogram, however, is very narrow and centered on the median position. It should be mentioned that impaired temporal coding or impaired binaural processing, as for example represented by an increased ITD jitter, has not been considered in the present study. These factors could further deteriorate the representation of ITD cues, as further discussed in Sect. 5. Additional simulations considering various degrees of sensitivity loss (not reported here) showed that the blur on sound-source localization estimates gradually increases with increasing sensitivity loss until, as shown here, the audibility noise dominates and individual sources become indistinguishable. Since most listeners with a symmetric sensorineural hearing loss are able to successfully localize anechoic sound sources when presented at moderate sound levels, only very little localization information seems to be sufficient for accurate localization. As the amount of localization information is significantly reduced in NH listeners when moving from the anechoic to the reverberant condition (see Sect. 3.2), the already reduced localization information available to HI listeners in anechoic conditions suggests that their localization would degrade further, and might break down, in reverberant conditions. However, in order to further investigate the effect of HL on localization in reverberant conditions, experimental data are required that allow a quantitative comparison with corresponding model predictions.

3.3.2 Effects of Reduced Frequency Selectivity

Beyond the loss of sensitivity, the model can be used to analyze the consequences of broadening of the auditory filters on localization in rooms where the filtering realized by HRTFs or BRIRs provides spectral cues that contribute to the estimation of the sound-source direction—for instance [3].

HRTFs have a very fine spectral resolution. Auditory filters have, however, bandwidths ranging from about 25 Hz at low frequencies to 2200 Hz at high frequencies [69]. Spectral cues are therefore not available to the binaural system with as fine a spectral resolution as represented in HRTFs. This reduction of spectral cue resolution could be even stronger for HI listeners, whose auditory filters are typically broader [25].
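As a worked illustration of these bandwidths, the equivalent rectangular bandwidth, ERB, of the auditory filter at center frequency \(f\) (in Hz) is commonly approximated following [69] as

\[
\mathrm{ERB}(f) = 24.7\,\left(4.37\,\frac{f}{1000} + 1\right)\ \mathrm{Hz}.
\]

This gives \(\mathrm{ERB}(0) = 24.7\) Hz and \(\mathrm{ERB}(20\,\mathrm{kHz}) \approx 2183\) Hz, consistent with the range quoted above. At the two frequencies analyzed in this chapter, \(\mathrm{ERB}(500\,\mathrm{Hz}) \approx 79\) Hz and \(\mathrm{ERB}(4\,\mathrm{kHz}) \approx 456\) Hz. A broadening by 20 %, taken here as a simple multiplicative factor of 1.2, would raise these values to about 94 Hz and 548 Hz. Note that this approximation serves only as an orientation; the bandwidths of the level-dependent DRNL filters used in the model may differ.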

In order to investigate the effect of reduced frequency selectivity on spectral cues, the spectral decomposition of the left-ear response of one of the BRIRs used for the localization predictions in Sect. 3.2 was calculated with the DRNL filterbank, configured either for a NH listener or for a HI listener with 20 % broader auditory filters (see listener HI8 in [57]).

As auditory-filter bandwidth increases with level in the DRNL, the response was amplified to reach a level corresponding to 65 dB SPL. The RMS power at the output of each filter of the filterbank was calculated as a function of the center frequency of the filter to form an auditory-based excitation pattern. To isolate the effect of reduced frequency selectivity, no loss of sensitivity was included. Thus, the audibility noise was the same in the NH and HI listener models.
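The principle of an excitation pattern, and the smoothing caused by broader filters, can be sketched with a much simpler stand-in for the DRNL filterbank. In the sketch below, each auditory filter is replaced by a Gaussian weighting of the power spectrum whose width follows the ERB approximation of [69]. This is an assumption made for illustration: the weighting is linear and level-independent, unlike the DRNL, and the toy impulse response (a direct sound plus one reflection) is hypothetical.

```python
import numpy as np

def erb_hz(fc_hz):
    """ERB approximation after Glasberg and Moore [69]."""
    return 24.7 * (4.37 * fc_hz / 1000.0 + 1.0)

def excitation_pattern_db(ir, fs, centre_freqs_hz, broadening=1.0):
    """Power of an impulse response seen through Gaussian-shaped bands, in dB."""
    spectrum = np.abs(np.fft.rfft(ir)) ** 2
    freqs = np.fft.rfftfreq(len(ir), d=1.0 / fs)
    pattern = []
    for fc in centre_freqs_hz:
        width = broadening * erb_hz(fc)
        weights = np.exp(-0.5 * ((freqs - fc) / width) ** 2)
        weights /= weights.sum()  # unit-sum weights: broadening smooths, but adds no gain
        pattern.append(10.0 * np.log10(np.sum(weights * spectrum) + 1e-12))
    return np.array(pattern)

# Toy response: direct sound plus one reflection 1 ms later (comb-filtered spectrum).
fs = 16000
ir = np.zeros(4096)
ir[0] = 1.0
ir[16] = 0.8

centre_freqs = np.linspace(500.0, 4000.0, 50)
pattern_nh = excitation_pattern_db(ir, fs, centre_freqs, broadening=1.0)
pattern_hi = excitation_pattern_db(ir, fs, centre_freqs, broadening=1.2)
```

The spectral ripple of the toy response is shallower in `pattern_hi` than in `pattern_nh`. Normalizing the weights to unit sum removes the overall level difference between the two filter sets, which is a design choice that plays a role similar to shifting one curve for comparison.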

The excitation patterns of the BRIR for both the NH and HI listener are shown in Fig. 10. Due to the difference in gain in the DRNL between the two listeners—compare left panel of Fig. 2—the curve for the HI listener has been shifted up by 8 dB to simplify the comparison. In line with previous studies simulating the effect of spectral smearing in HI listeners—for instance [70]—the present simulations show that the excitation pattern obtained for the HI listener is much smoother than the one calculated for the NH listener. The difference between the two patterns can amount to 1–2 dB at individual frequencies. Due to the nonlinear nature of the DRNL, this difference will be even larger for lower levels. This spectral smoothing suggests that the broadening of the auditory filters will reduce spatial spectral cues.

The consequence of the auditory-filter broadening is also represented in the binaural-activity maps. Figure 11 shows the ILD histogram at 4 kHz for a NH listener and a HI listener with a normal audiogram and 20 % broader filters. These histograms contain all ILDs of the PMAs present on the binaural-activity map without cue-selection. See the right panel of Fig. 7 for a representation of the selected ILDs and the estimated sound-source locations for the NH listener when cue-selection is employed. For the NH listener model, the ILD histogram has a Gaussian-like shape with a range that spans 14 dB from \(-\)7 to \(+7\) dB. However, for the HI listener model, the ILD range spans only 8 dB, that is, \(-\)4.5 to \(+3.5\) dB. The analysis provided in the present study also suggests that ITDs remain largely unaltered by cochlear broadening, which is consistent with the idea that they are determined more by properties of the temporal waveform of the stimuli. Although these results indicate that filter broadening impairs the internal representation of ILDs, further investigations are required to study the effect of frequency selectivity on sound localization in more detail.

4 Evaluation of Hearing-Aid Signal Processing Regarding Localization

The proposed model framework may be used to evaluate the effect of HA processing on the internal representation of spatial cues and on the localization performance in adverse conditions. The evaluation reported in this section uses the same adverse condition as before, namely, two talkers placed at \(\pm \)45\(^\circ \) azimuth in the horizontal plane in an anechoic environment.

The basic function of a HA is to provide amplification and to restore audibility. Due to the limited range of input levels for HI listeners, this process is combined with a WDRC in modern HAs. Some potential benefits and drawbacks of the amplification and WDRC processing are evaluated here in the context of localization and in the framework of the presented model.

The same HI listener model with hearing thresholds of 40 and 60 dB HL at 500 Hz and 4 kHz as in Sect. 3.3 was considered. The only change was the addition of HA processing of the input signals of the model. The HA processing was simulated by a model of two binaurally unlinked 30-channel compressive HAs, which were implemented according to [71] and fitted to the audiogram of the modeled HI listener—see HI8 in [57]—using the NAL-NL2 prescription [27]. The simulated HAs were BTE–HAs with omnidirectional directivity and fitted with tight ear molds. The attack time constant was set to 5 ms, the release time constant to 50 ms, and moderate channel coupling was applied, that is, the parameters were set to \(c_\mathrm{D}=0.2\) and \(c_\mathrm{U}=0.7\). With regard to the model parameters, the cue-selection threshold was the same as in the simulations for the NH and HI listeners, and the audibility noise level was the same as for the simulations with the non-aided HI listener with reduced audibility—Sect. 3.2.
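To make the role of the attack and release time constants concrete, the following sketch implements a generic single-channel compressor. It is not the 30-channel implementation of [71] and includes neither channel coupling nor the NAL-NL2 fitting. The one-pole level estimator, the compression threshold, the ratio, and the linear gain are all assumptions; only the 5-ms and 50-ms time constants are taken from the text, and they are interpreted here as smoothing time constants.

```python
import numpy as np

def smooth_level_db(x, fs, attack_ms=5.0, release_ms=50.0):
    """One-pole power estimate with separate attack and release smoothing, in dB re 1."""
    a_att = np.exp(-1.0 / (attack_ms * 1e-3 * fs))
    a_rel = np.exp(-1.0 / (release_ms * 1e-3 * fs))
    power = np.asarray(x, dtype=float) ** 2
    env = np.empty_like(power)
    state = 0.0
    for n, p in enumerate(power):
        coeff = a_att if p > state else a_rel  # fast when level rises, slow when it falls
        state = coeff * state + (1.0 - coeff) * p
        env[n] = state
    return 10.0 * np.log10(env + 1e-12)

def wdrc_gain_db(level_db, threshold_db=-40.0, ratio=2.0, linear_gain_db=20.0):
    """Static rule: linear gain below threshold, reduced gain above it (hypothetical values)."""
    over = np.maximum(level_db - threshold_db, 0.0)
    return linear_gain_db - over * (1.0 - 1.0 / ratio)

def compress(x, fs, **gain_kwargs):
    """Apply the time-varying gain derived from the smoothed level."""
    level_db = smooth_level_db(x, fs)
    gain_db = wdrc_gain_db(level_db, **gain_kwargs)
    return np.asarray(x, dtype=float) * 10.0 ** (gain_db / 20.0)

# Example: a soft segment followed by a loud segment receives less gain in the loud part.
fs = 16000
x = np.concatenate([0.01 * np.ones(fs // 2), 0.5 * np.ones(fs // 2)])
y = compress(x, fs)
```

When two such compressors run independently at the two ears, the ear receiving the higher level is given less gain, so the ILD at the output is smaller than at the input. This is one way in which unlinked compression could contribute to underestimated ILDs.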

Top Activity on binaural-activity maps at the PMAs. Middle ILD positions of the PMAs. Bottom ITD positions of the PMAs. Data are shown for an HI listener with hearing thresholds of 40 and 60 dB HL at 500 Hz and 4 kHz, respectively, aided by compressive binaurally-unlinked HAs. Data are shown for 500 Hz (left) and 4 kHz (right). Bold lines represent the information selected by the cue-selection mechanism. The horizontal dashed line in the top panels represents the cue-selection threshold. Horizontal dashed lines in the middle and bottom panels represent the actual locations of the two talkers

The corresponding internal representations of the stimuli are shown in Fig. 12. A comparison with Figs. 5 and 8 illustrates the effect of HA processing. Restoring the audibility partially recovers the dynamic range in the EI activity at the PMAs and causes an overall decrease of activity, with information passing below the cue-selection thresholds (top panels). This outcome is the result of the amplification of the signals by the HAs, which increases the level of the internal representation of the speech signal as compared to the audibility noise. The ILDs and ITDs of the PMAs, shown in the middle and bottom panels, are also more similar to those observed in Fig. 5 for the NH subject, even though some differences remain.

Histograms of the ITDs and ILDs present on the binaural-activity maps for a simulated aided HI listener with hearing thresholds of 40 and 60 dB HL at 500 Hz and 4 kHz, respectively. The stimuli were the same as in Figs. 6 and 9. The source-location estimates are shown in the top-right window of each panel by the distinct darker spots

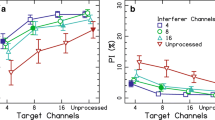

As a result of the HA processing, the EI activity has decreased and the cue-selection mechanism can successfully operate for the HI listener. The corresponding localization results are shown in Fig. 13. The ITDs of the two speakers can be successfully predicted at both frequencies. However, the ILDs are only partially recovered and generally underestimated. Assuming the same cue-selection threshold as used for simulations with the NH listener, only 20–30 % of the information was selected for this aided HI listener, suggesting a less reliable localization estimation than for the NH listener.

Although not reported here, in the case that two linear HAs or two binaurally linked HAs are fitted to the HI listener, the estimated ILDs are slightly increased but no systematic improvement in the cue-selection mechanism can be observed. Further research is required to better understand the effect of compression in general as well as binaurally coordinated compression.

5 Summary and Conclusion

In the present study, a new binaural model framework was proposed that aims at predicting sound localization in NH, HI and aided HI listeners. The preprocessing of the model consists of a non-linear peripheral processor and an EC binaural processor. EC-based binaural processors have traditionally been used to predict binaural tone-in-noise detection (for example, [60, 72]) as well as ITD and ILD detection and discrimination [72]. Since the proposed model applies a monaural and a binaural processor that are very similar to the ones described in [57] and [52], the model framework is able to predict various aspects of monaural and binaural detection. In the present study, the EC binaural processor was applied to predict localization. In the binaural-activity maps generated by the EC processor, a low activity reflects a high interaural correlation, and estimates of the positions of sound sources were derived from the positions of the PMAs. In the model, localization in adverse conditions was achieved with the EC processor followed by a cue-selection mechanism inspired by the cross-correlation-based framework proposed by [23]. The cue-selection mechanism was used to evaluate the reliability of the localization information estimated by the positions of the PMAs.

The proposed framework was used to estimate the effects of peripheral hearing impairment on localization. In the current version of the model, hearing impairment was simulated in terms of either reduced audibility or loss of cochlear compression associated with reduced frequency selectivity. Limited audibility was simulated by adding an internal audibility noise to the model input signals. The corresponding predictions showed that the localization estimates became increasingly blurry with decreasing input-signal to audibility-noise ratio. The simulations also showed that a reduction in audibility impaired the assumed cue-selection mechanism due to an increase and a compression of activity in the PMAs. Future research needs to address a broader range of hearing losses, including asymmetric HLs.

The effects of a simulated loss of compression and the resulting broadening of the auditory filters on localization were tested under a reverberant-room condition. The reduced frequency selectivity led to a spectral smearing of the spectral cues provided by the BRIRs and an alteration of the locations of the PMAs on the binaural-activity map such that the internal representation of the ILDs was mostly located around the median plane. The internal representation of the ITD was, however, hardly affected by the loss of compression.

The study also considered the potential benefit of basic HAs for localization. While the primary function of a HA is to restore audibility, it was shown that applying HA processing to the inputs of the model of hearing impairment improves the cues available for localization. In the model, restoring audibility leads to an increase of the signal-to-noise ratio at the input and, thus, to an increased reliability of the spatial cues. The analysis also indicated that restoring audibility was beneficial for the assumed cue-selection process, as it helped to restore the dynamic range in the PMA activity towards that seen in the NH model. Further research is required to better understand the effect of more advanced HAs on localization. In particular, the effect of coordinated compression of bilaterally-fitted HAs should be considered, as well as the effect of signal-enhancement strategies such as super-directional beamformers or dereverberation algorithms (compare [27, 73]).

The gradual removal of TFS in the hair-cell stage was found to be well suited to predict the increase of ITD detection thresholds above 1 kHz. However, because the ITD of the PMAs is present in both the signal envelope and the TFS, and because no binaural noise was considered in the localization simulations, the ITD of the PMAs at high frequencies was not limited. This model prediction should be experimentally investigated for NH and HI listeners in future work. Furthermore, the model currently evaluates localization information in individual auditory channels. However, most signals, such as speech, are broadband, and a spectral integration of the spatial information is performed by the hearing system [18]. The nature and modeling of this spectral integration require further study.

The proposed model framework is a first step towards the development of a dedicated research tool for investigating and understanding the processing of spatial cues in adverse listening conditions, with the long-term goal of contributing to solving the cocktail-party problem for NH and HI listeners. Future work will be pursued to extend the model and to validate it experimentally.

Notes

1. The term interaural coherence denotes the amplitude of the normalized interaural cross-correlation function for maximum ITDs of \(\pm \)1 ms.

References

A. W. Bronkhorst, The cocktail party phenomenon: A review of research on speech intelligibility in multiple-talker conditions, Acta Acustica united with Acustica, 86:117–128, 2000.

H. S. Colburn, Computational models of binaural processing In: Auditory Computation, H. Hawkins and T. McMullen, Eds., Springer, Berlin, 1996, 332–400.

J. Blauert, Spatial Hearing: the psychophysics of human sound localization, The MIT Press, 1997.

M. Dietz, S. D. Ewert and V. Hohmann, Auditory model based direction estimation of concurrent speakers from binaural signals, Speech Comm., 53:592–605, 2011.

M. D. Good and R. H. Gilkey, Sound localization in noise: The effect of signal-to-noise ratio, J. Acoust. Soc. Am., 99:1108–1117, 1996.

K. S. Abouchacra, D. C. Emanuel, I. M. Blood and T. R. Letowski, Spatial perception of speech in various signal to noise ratios, Ear Hear., 19:298–309, 1998.

C. Lorenzi, S. Gatehouse and C. Lever, Sound localization in noise in normal-hearing listeners, J. Acoust. Soc. Am., 105:1810–1820, 1999.

M. L. Hawley, R. Y. Litovsky and H. S. Colburn, Speech intelligibility and localization in a multi-source environment, J. Acoust. Soc. Am., 105:3436–3448, 1999.

R. Drullman and A. W. Bronkhorst, Multichannel speech intelligibility and speaker recognition using monaural, binaural and 3D auditory presentation, J. Acoust. Soc. Am., 107:2224–2235, 2000.

E. H. A. Langendijk, D. J. Kistler and F. L. Wightman, Sound localization in the presence of one or two distractors, J. Acoust. Soc. Am., 109:2123–2134, 2001.

N. Kopco, V. Best and S. Carlile, Speech localization in a multitalker mixture, J. Acoust. Soc. Am., 127:1450–1457, 2010.

N. Kopco and B. Shinn-Cunningham, Auditory Localization in Rooms: Acoustic analysis and behavior, in 32nd Intern. Acoust. Conf. - EAA symp., Slovakia., 2002.

M. Rychtáriková, T. van den Bogaert, G. Vermeir and J. Wouters, Binaural sound source localization in real and virtual rooms, J. Aud. Eng. Soc. 57:205–220, 2009.

M. Rychtáriková, T. van den Bogaert, G. Vermeir and J. Wouters, Perceptual validation of virtual room acoustics: Sound localisation and speech understanding, Appl. Acoust., 72:196–204, 2011.

J. M. Buchholz, V. Best and G. Keidser, Auditory localization in reverberant multi-source environments by normal-hearing and hearing-impaired listeners, in IHCON Conf., Lake Tahoe, USA, 2012.

J. Raatgever, On the binaural processing of stimuli with different interaural phase relations, PhD Thesis, Techn. Univ. Delft, The Netherlands, 1980.

R. M. Stern, A. S. Zeiberg and C. Trahiotis, Lateralization of complex binaural stimuli: A weighted-image model, J. Acoust. Soc. Am., 84:156–165, 1988.

N. Le Goff, J. M. Buchholz and T. Dau, Spectral integration of interaural time differences in auditory localization, in Proc. 21st Intern. Congr. Acoust., ICA 2013, 2013.

N. Le Goff, Processing interaural differences in lateralization and binaural signal detection, PhD thesis, Techn. Univ. Eindhoven, The Netherlands, 2010.

J. Nix and V. Hohmann, Sound source localization in real sound fields based on empirical statistics of interaural parameters, J. Acoust. Soc. Am., 119:463–479, 2006.

R. Y. Litovsky, H. S. Colburn, W. A. Yost and S. J. Guzman, The precedence effect, J. Acoust. Soc. Am., 106:1633–1654, 1999.

W. Lindemann, Extension of a binaural cross-correlation model by means of contralateral inhibition. I. Simulation of lateralization of stationary signals., J. Acoust. Soc. Am., 80:1608–1622, 1986.

C. Faller and J. Merimaa, Source localization in complex listening situations: Selection of binaural cues based on interaural coherence, J. Acoust. Soc. Am., 116: 3075–3089, 2004.

N. I. Durlach, C. L. Thompson and H. S. Colburn, Binaural interaction of impaired listeners. A review of past research, Audiology, 20:181–211, 1981.

B. C. J. Moore, Cochlear Hearing Loss, Wiley, 2007.

D. Byrne and W. Noble, Optimizing sound localization with hearing aids, Trends Amplif., 3:51–73, 1998.

H. Dillon, Hearing Aids, Boomerang Press, 2012.

D. B. Hawkins and F. L. Wightman, Interaural time discrimination ability of listeners with sensorineural hearing loss, Audiology, 19:495–507, 1980.

L. Smith-Olinde, J. Koehnke and J. Besing, Effects of sensorineural hearing loss on interaural discrimination and virtual localization, J. Acoust. Soc. Am., 103:2084–2099, 1998.

H. S. Colburn, Binaural interaction and localization with various hearing impairments, Scand. Audiol. Suppl., 15:27–45, 1982.

K. J. Gabriel, J. Koehnke and H. S. Colburn, Frequency dependence of binaural performance in listeners with impaired binaural hearing, J. Acoust. Soc. Am., 91: 336–347, 1992.

R. Haeusler, H. S. Colburn and E. Marr, Sound localization in subjects with impaired hearing. Spatial-discrimination and interaural-discrimination tests, Acta Otolaryngol. Suppl., 400:1–62, 1983.

U. Rosenhall, The influence of hearing loss on directional hearing, Scand. Audiol., 14:187–189, 1985.

W. Noble, D. Byrne and B. Lepage, Effects on sound localization of configuration and type of hearing impairment, J. Acoust. Soc. Am., 95:992–1005, 1994.

W. Noble, D. Byrne and K. Ter-Horst, Auditory localization, detection of spatial separateness, and speech hearing in noise by hearing impaired listeners, J. Acoust. Soc. Am., 102:2343–2352, 1997.

C. Lorenzi, S. Gatehouse and C. Lever, Sound localization in noise in hearing-impaired listeners, J. Acoust. Soc. Am., 105:3454–3463, 1999.

M. A. Akeroyd and F. H. Guy, The effect of hearing impairment on localization dominance for single-word stimuli, J. Acoust. Soc. Am., 130:312–323, 2011.

B. U. Seeber and E. R. Hafter, Failure of the precedence effect with a noise-band vocoder, J. Acoust. Soc. Am., 129:1509–1521, 2011.

R. R. Leech, B. Gygi, J. Aydelott and F. Dick, Informational factors in identifying environmental sounds in natural auditory scenes, J. Acoust. Soc. Am., 126:3147–3155, 2009.

W. Noble, K. Ter-Horst and D. Byrne, Disabilities and handicaps associated with impaired auditory localization, J. Am. Acad. Audiol., 6:129–140, 1995.

W. Noble and S. Gatehouse, Effects of bilateral versus unilateral hearing aid fitting on abilities measured by the speech, spatial, and qualities of hearing scale (SSQ), Int. J. Audiol., 45:172–181, 2006.

M. Boymans, S. T. Govers, S. E. Kramer, J. M. Festen and W. A. Dreschler, Candidacy for bilateral hearing aids: a retrospective multicenter study, J. Speech Language Hear. Res., 52:130–140, 2009.

T. Van den Bogaert, T. J. Klasen, M. Moonen, L. V. Deun and J. Wouters, Horizontal localization with bilateral hearing aids: Without is better than with, J. Acoust. Soc. Am., 119:515–526, 2006.

G. Keidser, K. Rohrseits, H. Dillon, V. Hamacher, L. Carter, U. Rass and E. Convery, The effect of multi-channel wide dynamic range compression, noise reduction, and the directional microphone on horizontal localization performance in hearing aid wearers, Inter. J. Audiol., 45:563–579, 2006.

T. Van den Bogaert, E. Carette and J. Wouters, Sound source localization using hearing aids with microphones placed behind-the-ear, in-the-canal, and in-the-pinna, Inter. J. Audiol., 50:164–176, 2011.

V. Best, S. Kalluri, S. McLachlan, S. Valentine, B. Edwards and S. Carlile, A comparison of CIC and BTE hearing aids for three-dimensional localization of speech, Int. J. Audiol., 49:723–732, 2010.

W. Noble, S. Sinclair and D. Byrne, Improvement in aided sound localization with open earmolds: observations in people with high-frequency hearing loss, J. Am. Acad. Audiol., 9:25–34, 1998.

T. Van den Bogaert, S. Doclo, J. Wouters and M. Moonen, The effect of multi-microphone noise reduction systems on sound source localization by users of binaural hearing aids, J. Acoust. Soc. Am., 124:484–497, 2008.

T. J. Klasen, T. Van den Bogaert, M. Moonen and J. Wouters, Binaural noise reduction algorithms for hearing aids that preserve interaural time delay cues, IEEE Trans. Signal Process., 55:1579–1585, 2007.

J. Mejia, G. Keidser, H. Dillon, CV. Nguyen, and E. Johnson, The effect of a linked bilateral noise reduction processing on speech in noise performance. In Speech Perception and Auditory Disorders, ed. by T. Dau, J.C. Dalsgaard, M.L. Jepsen, and T. Poulsen, 2011

E. A. Lopez-Poveda and R. Meddis, A human nonlinear cochlear filterbank, J. Acoust. Soc. Am., 110:3107–3118, 2001.

J. Breebaart, S. van de Par and A. Kohlrausch, Binaural processing model based on contralateral inhibition. I. Model structure, J. Acoust. Soc. Am., 110:1074–1088, 2001.

R. L. Goode, M. L. Killion, K. Nakamura and S. Nishihara, New knowledge about the function of the human middle ear: Development of an improved analogue model, Am. J. Otol., 15:145–154, 1994.

M. L. Jepsen, S. D. Ewert and T. Dau, A computational model of human auditory signal processing and perception, J. Acoust. Soc. Am., 124:422–438, 2008.

T. Dau, D. Püschel and A. Kohlrausch, A quantitative model of the “effective” signal processing in the auditory system. II. Simulations and measurements, J. Acoust. Soc. Am., 99:3623–3631, 1996.

T. Dau, B. Kollmeier and A. Kohlrausch, Modeling auditory processing of amplitude modulation. I. Detection and masking with narrow-band carriers, J. Acoust. Soc. Am., 102:2892–2905, 1997.

M. L. Jepsen and T. Dau, Characterizing auditory processing and perception in individual listeners with sensorineural hearing loss, J. Acoust. Soc. Am., 129:262–281, 2011.

ISO 226:2003 Normal equal-loudness-level contours. International Organization for Standardization, ISO, Geneva.

A. Kohlrausch, J. Braasch, D. Kolossa and J. Blauert. An introduction to binaural processing. In J. Blauert, editor, The technology of binaural listening, chapter 1. Springer, Berlin-Heidelberg-New York NY, 2013.

N. Durlach, Equalization and cancellation theory of binaural masking-level differences, J. Acoust. Soc. Am., 35:1205–1218, 1963.

D. W. Grantham and F. L. Wightman, Detectability of varying interaural temporal differences, J. Acoust. Soc. Am., 63: 511–523, 1978.

R. Klumpp and H. Eady, Some Measurements of Interaural Time Difference Thresholds, J. Acoust. Soc. Am., 28:859–860, 1956.

IEEE recommended practice for speech quality measurements, 1969.

B.C. Moore, An introduction to the psychology of hearing, \(4^{\rm th}\) Ed. Academic Press, London, 1997.

W. E. Feddersen, T. T. Sandel, D. C. Teas and L. A. Jeffress, Localization of High-Frequency Tones, J. Acoust. Soc. Am., 29:988–991, 1957.

A. Schlesinger and C. Luther, Optimization of binaural algorithms for maximum predicted speech intelligibility. In J. Blauert, editor, The technology of binaural listening, chapter 11. Springer, Berlin-Heidelberg-New York NY, 2013.

C. Orinos and J. Buchholz, Measurement of a complete set of HRTFs for in-ear and hearing aid microphones on a Head and Torso Simulator, J. Acoust. Soc. Am., submitted, 2013.

H. Kuttruff, Room acoustics, Elsevier, 1973.

B. R. Glasberg and B. C. J. Moore, Derivation of auditory filter shapes from notched noise data, Hear. Res., 47:103–138, 1990.

T. Baer and B. C. J. Moore, Effects of spectral smearing on the intelligibility of sentences in noise, J. Acoust. Soc. Am., 94:1229–1241, 1993.

J. M. Buchholz, A real-time hearing-aid research platform (HARP): realization, calibration, and evaluation, Acta Acustica united with Acustica, under revision, 2013.

J. Breebaart, S. van de Par and A. Kohlrausch, Binaural processing model based on contralateral inhibition. II. Dependence on spectral parameters, J. Acoust. Soc. Am., 110:1089–1104, 2001.

J. M. Kates, Digital Hearing Aids, Plural Publishing, 2008.

Acknowledgments

The authors are indebted to two anonymous external reviewers for constructive suggestions with regard to an earlier version of the manuscript.

© 2013 Springer-Verlag Berlin Heidelberg

Le Goff, N., Buchholz, J.M., Dau, T. (2013). Modeling Horizontal Localization of Complex Sounds in the Impaired and Aided Impaired Auditory System. In: Blauert, J. (eds) The Technology of Binaural Listening. Modern Acoustics and Signal Processing. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-37762-4_5

DOI: https://doi.org/10.1007/978-3-642-37762-4_5

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-37761-7

Online ISBN: 978-3-642-37762-4
