Abstract

This chapter describes a model framework for evaluating the precision of as to which interaural time differences, ITD, are represented in the left- and right-ear auditory-nerve responses. This approach is very versatile, as it allows not only for the evaluation of spiking neuronal responses from models of intact inner ears but also of responses of the deaf ears of cochlear implantees. The model framework delivers quantitative data and, therefore, enables comparisons between different cochlear-implant coding strategies. As the model of electric excitation of the auditory nerve also includes effects such as channel crosstalk, neuronal adaptation and mismatch of electrode positions between left and right ears, its predictive power is much higher than an analysis of the electrical impulses delivered to the electrodes. Evaluation of a novel fine-structure-coding strategy as used by a major implant manufacturer, revealed that, in a best case scenario, sophisticated strategies should be able to provide ITD cues with sufficient precision for sound localization. However, whether these cues can actually be exploited by cochlear implant users has yet to be determined by listening tests. Nevertheless, the model framework introduced here is a valuable tool for the development and pre-evaluation of bilateral cochlear implant coding strategies.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Cochlear implants, CIs, are the most successful neuroprostheses available today, with approximately 219,000 people implanted worldwide—as of December 2010 [60]. Modern CIs often provide good speech intelligibility, but there is room for improvement. Due to the limited number of independent channels [71], the spectral representation of music is less detailed for cochlear-implant users than for normal-hearing subjects, and they perform more poorly in adverse acoustic environments, such as in a cocktail-party scenario with multiple simultaneous sound sources [12, 50]. To sustain communication in such conditions, humans have developed the remarkable ability to focus on a single speaker even within a highly modulated background noise consisting of concurrent speakers and/or additional noise sources. In such scenarios binaural hearing plays a major role. Time and level differences between the right and left ear are exploited by the auditory system to localize the sound sources and segregate the acoustic information focused upon [3].

As CIs were initially developed for unilateral implantation only, they lack some important prerequisites required for precise sound localization. Automatic gain control, AGC, in CIs is required to compress the large dynamic range of acoustic signals to the limited dynamic range available for electric stimulation of the auditory nerve. Automatic gain-control systems in CIs reflect a compromise between conflicting requirements. Actually, they must handle intense transients but at the same time minimize disturbing side effects such as “breathing” or “pumping” sounds and distortions of the temporal envelope of speech. This conflict can be solved with a dual time constant compression system [78]. However, in contemporary CIs, AGCs in bilateral cochlear implants work independently from each other, which can degrade the coding of interaural level differences ILDs. In addition, the accuracy of temporal coding in CIs clearly does not yet come close to the precision reached in the intact hearing organ. Given the many limitations involved in the artificial electrical stimulation of the auditory nerve, especially the effects of severe channel crosstalk, it is unclear if and which changes of the coding strategies will improve spatial hearing.

To answer these questions, quantitative models have been developed that help better understand the complex mechanisms involved in the electrical excitation of neurons. Pioneering model-based investigations [17, 35, 54, 63, 64] were initiated with the goal to improve CIs. They tried to optimize stimulus parameters like the stimulation frequency, pulse width and shape. The investigation of Motz and Rattay [55] harnessed the models to improve coding strategies implemented in a speech processor. The modeling approaches can be separated in three different categories, namely, point neuron models, multi-compartment models and population models.

-

Point neuron models try to capture the detailed dynamic properties of the neurons. Motz and Rattay used them to explain neuronal responses to sinusoidal stimuli and current pulses [54, 64]. Dynes [20] extended these models to capture the refractory period of the neurons more precisely. With the introduction of CI-coding strategies with high stimulation rates, it became important to investigate the stochastic behavior of the auditory nerve to electric stimulation [8, 9] and rate-dependent de-synchronization effects [69]. Mino et al. [53] captured channel noise by modeling the stochastic open- and closed states of the sodium-ion-channel population with Markov chains. Most recent models aimed to describe the adaptation of the auditory nerve to electric stimulation at high stimulation rates [37, 85].

-

Multi-compartment models are an extension of point neuron models and were introduced by McNeal [51]. They are important for investigating effects of electrode position and configuration, for instance, monopolar versus bipolar. Multi-compartment models can predict how and where action potentials are elicited in the axon of a neuron [52, 85]. They are also essential for investigating how cell morphology affects their dynamical properties [67, 74], and how the field spread in the cochlea affects the stimulation of neurons along the cochlea [7, 25, 27].

-

Population models are required to replicate neuronal excitation patterns along the whole cochlea. Therefore, they usually require modeling of thousands of neurons. For electrical stimulation, large populations of neurons are required to investigate rate-intensity functions [9] and neuronal excitation patterns for speech sounds [32]. Due to the large number of modeled neurons, these population models were implemented with computationally less expensive stochastic spike response models. Nevertheless, the increasing computational power of modern computer clusters has enabled us to model also large neuron populations based on biophysically plausible Hodgkin-Huxley-like ion-channel models [58]. In these models refractoriness and spike rate adaptation result from ion-channel dynamics.

Modeling higher levels of neuronal processing is hindered by the large complexity involved and therefore is limited to a few special cases. Basic perceptual properties like intensity perception [8] or forward masking [32] can be captured by deriving a neuronal representation that corresponds to the respective psychophysical data. More cognitive processes can usually not be predicted with models based on single neurons. However, in recent years, machine-learning techniques based on neuronal features [23, 59] were adopted to tackle highly complex tasks such as the prediction of speech understanding in noise.

The investigation reported in this chapter focuses on a similarly complex task, namely, sound localization. The ability to localize sound sources in complex listening environments has fascinated researchers over decades. Models developed in the fifties have been extended and improved by many researchers, but their basic concepts remain valid until today. The models can be divided into two basic groups, namely, coincidence models and equalization-&-cancellation models—see [43], this volume.

-

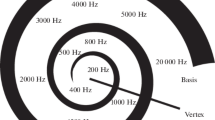

Coincidence models Jeffress postulated neuronal coincidence detectors fed by two delay lines with signals traveling in opposite directions within each tonotopic center-frequency channel [38]. This model was extended to predict basic ILD sensitivity [11]. The coincidence model was combined with a simplified inner-ear model to model the basilar membrane and inner hair cells, consisting of a filter bank, an automatic gain control, AGC, a low-pass filter, and a rectifier. References [13–15, 76, 77] provide quantitative predictions on binaural interaction. Blauert and his colleagues Lindemann and Gaik [46] used a complementary approach adding ILD sensitivity to the Jeffress model. Later in this chapter this model will be applied to predict sound-source localization.

-

Equalization-&-cancellation models These models have primarily been developed to predict binaural masking differences [19, 42]. Recently, cancellation features were added to the Jeffress-Colburn model [4–6].

2 Modeling Hearing in Cochlear Implant Users

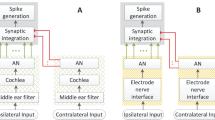

This chapter presents a modular model framework to simulate auditory nerve responses elicited by a cochlear implant. The framework is schematically depicted in Fig. 1. It consists of a speech processor, a model of the electrical field spread caused by the implanted electrode array and a model of auditory nerve fibers along the inner ear.

The model was duplicated for the case of two ears to evaluate binaural interaction—see Sects. 2.5 and 3.1. The nerve responses are then evaluated by “cognitive” stages, which, for example, can be an automatic speech-recognition system or a system that estimates the position of a sound source. Every part of the model can be exchanged by a more or less computationally expensive realization, where the complexity required depends on the scientific question.

2.1 Speech Processor

In the speech processor input signals, in this model audio-files in .sph and .wav format, are processed by a coding strategy that converts the physical sound signals into electric-current pulse trains—compare Fig. 2. The pulse trains are fed into the inner ear via the electrode array. The coding strategy replaces the processing steps that usually take place in the inner ear and translate the physical sound signal into a representation that can be processed by the neuronal system. Because of the limited information-transmission capacity between implant and the neural system, coding strategies are predominantly optimized for speech coding. They process a limited frequency range, usually between 100 Hz and 8 kHz in 12–22 frequency bands. The large dynamic range of natural sounds requires effective AGC systems. Whereas many coding strategies were developed by the different manufacturers [87], in this chapter a generic implementation of one of the most successful coding principles, the continuous interleaved sampling, CIS, strategy [84] is introduced. In the CIS strategy, the signal is first filtered into frequency bands, then the spectral envelope is extracted. The temporal fine structure is discarded. The spectral envelope is then sampled using biphasic rectangular pulses, which are delivered to one electrode at a time, that is, interleaved.

This implementation applied a dual-time-constant front-end AGC [78], followed by a filter bank. The envelope was extracted in each channel with a Hilbert transformation. The amplitudes of the envelopes are mapped individually for each CI user and electrode between threshold level, THR, and maximum comfort level, MCL. Note that the dynamic range for electrical stimulation is extremely narrow. The difference between THR and MCL is only in the order of 10–20 dB [28, 86]. Mapping is implemented using power-law or logarithmic-compression functions [48]. The small dynamic range that is available for electric stimulation causes another severe limitation, namely, if two electrodes were stimulated simultaneously, their overlapping fields would sum up and cause overstimulation of neurons. The electrodes in CIs are therefore stimulated one after the other, that is, interleaved. Extensions of the CIS concept try to reconstruct the phase locking, as is observed in neuronal responses at low frequencies [41]. In CIs, this can be achieved by implementing a filter bank followed by slope-sensitive zero-crossing detectors—compare Fig. 2—that trigger the stimulation pulses [88].Footnote 1 This technique is used in the fine structure coding strategies (FSP, FS4, FS4-p) in the MED-EL MAESTRO cochlear implant system.

In summary, the FS strategies transmit the envelope information with their basal electrodes at high temporal resolution and code additional FS phase information with their most apical electrodes—which is conceptually similar to what happens in the intact inner ear. With this model framework it is now possible to estimate how much of this additional phase information is actually transmitted by the auditory nerve fibers and available for sound localization, and how much of it is corrupted by the limitations of electrical stimulation.

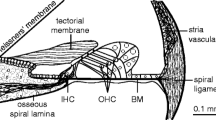

2.2 Electrode Model

One of the most severe limitations in modern cochlear implants is imposed by the electrical crosstalk between stimulation electrodes. The CI electrode array is usually inserted in scala tympani and immersed in perilymph—which has a conductivity of approx. 0.07 k\(\Omega \)mm [30, 66]. The neurons of the auditory nerve are inside the modiolar bone, which has a much higher conductivity of approx. 64 k\(\Omega \)mm [26, 30, 66]. Due to these anatomical constraints, the current spreads predominantly along the cochlear duct [30, 83]. This problem limits the number of independent electrodes to a value of about 7–8 [18, 24, 36] and a single electrode can excite auditory nerve fibers almost along the whole cochlea [30]. The amount of channel crosstalk is dependent on many factors and varies from CI user to CI user [21]. Different methods to measure the spread of excitation provide values between 1 and 4 dB/mm [33, 44, 57, 62]. The electrode array was modeled with 12–22 contacts as electrical point sources. The electrical excitation of a neuron in an electrical field is governed by the activating function [64], which is the second derivative of the electrical potential in the direction of the axon.

For a point current source, \(I\), in a homogeneous isotropic medium, the activating function can be calculated as

where \(V_\mathrm{ex }\) is the extracellular potential field, \(\rho \) the mean conductivity of the surrounding tissue—3 k\(\Omega \)mm [67]. \(x\), \(y\) and \(z\) are the coordinates according to Fig. 3. The value of this function was calculated at a distance \(x\) of 500 \(\upmu \)m from the electrode in the modiolus, where the electrical stimulation most likely elicits the action potentials in the auditory nerve fibers. Because of the coiling of the cochlea, the current spread can only be solved with three-dimensional models [7, 66, 83]. For simplicity, coiling of the cochlear ducts was neglected and therefore no across-turn stimulation occurred in this model. As the activating function for a homogeneous medium underestimates current spread, which would lead to unrealistic focal stimulation of a neuron population, the activating function was calculated only at the position of the electrode \(z=0\). For the current spread in the \(z\) direction an exponential decay with 1 dB/mm was assumed, which was found experimentally [33, 44, 57, 62]—worst-case scenario.

2.3 Model of a Single Nerve Fiber

One of the most important steps in this model is the excitation of the auditory nerve. The theory behind this model is reviewed in [29, 49]. A biophysically plausible model was implemented that is based on Hodgkin-Huxley-like ion channels—including hyperpolarization-activated cation channels, HPAC, high-threshold potassium channels, \(K_{HT}\), and low-threshold potassium channels, \(K_{LT}\). Such ion channels are also found in cochlear nucleus neurons. Due to their large time constants, the auditory nerve exhibits adaptation to electrical stimulation [56]. Conductances and time constants were corrected for a body temperature of 37 ºC. The electrical equivalent circuit of the model is shown in Fig. 4.

The equations and parameters for the models are taken from [68] (see also Table 1)—with the units ms and mV. \(V_M\) denotes the trans-membrane voltage. The gating variables of the different channels, \(x \in \left\{ w; z; n; p; r\right\} \), are voltage-dependent and they converge with a time constant of \(\tau _x\) to their equilibrium value \(x_\infty \) as described by the following differential equation.

The behavior of the ion channels is described in the next equations.

(i) Low threshold, \(K^{+}\)-channel, \(K_{LT}\),

(ii) High-threshold, \(K^{+}\)-channel \(K_{HT}\),

(iii) Hyperpolarization-activated cation current, \(i_{h}\),

(iv) Fast Na\(+\) current, \(i_{Na}\),

The electrical stimulation of neurons in an electric field was analyzed according to Rattay [65] as

In this equation, \(\tau = \rho _{m} c_m\) denotes the time constant of the passive membrane and \(\lambda = \sqrt{\rho _{m} / \rho _{a}}\) the length constant of an axon. If a long axon is assumed in the field, the term \(d^2V_{m}/dx^2\) can be neglected if the neuron is at rest. Then the external electrical stimulation acts like a virtual internal current source, which is proportional to \( d/4\,\rho _{a}\), \(d^{2}V_{Ex}/dx^{2}\), with axon diameter d. Therefore the equation for a section of the axon can be described by the equation

If the analysis is restricted to the compartment, where the action potential is elicited, it is not necessary to solve the equations for all compartments—this would require a computationally intensive multi-compartment model. Instead, it is sufficient to check if this compartment—that is, the compartment where the activating function has its maximum—elicits an action potential. This would then, in the case of a multi-compartment model, propagate along the axon. Following this analysis, it is possible to reduce the model complexity to a single-compartmental model , which allows to calculate the response of a large number of neurons. The nonlinear ion-channel equations are solved in the time domain with the exponential Euler rule [10]. The model presented so far is deterministic and has therefore a fixed, although dynamic, threshold. Recordings from the auditory nerve in laboratory animals show that neurons exhibit a stochastic behavior also for electrical stimulation. This behavior was modeled by including a stochastic current source—compare Fig. 4. Physiological recordings show that a single neuron exhibits a dynamic range in the order of 1–5 dB [72, 73]. In this model a dynamic range of 2.5 dB was modeled by adjusting the current amplitude of the white noise source accordingly. However, the dynamic range of a single neuron is still too small to explain the dynamic range observed in CI users.

2.4 Population Model of the Auditory Nerve: Individual Model for CI Users

The analysis of the coding of complex sounds like speech requires a large population of neurons along the cochlea. When the stimulation current increases more neurons are excited, which extends the dynamic range for electrical stimulation. Surviving neurons in the spiral ganglion have variations in their axonal diameters, namely, 1.2–2.5 \(\upmu \)m [45], and are located at different distances from the stimulating electrode—compare Fig. 5.

Factors which extend the dynamic range of the spiral ganglion neuron, SGN population comprises of the channel noise from an individual neuron and different thresholds of the single fibers due to different axon diameters and the varying distances between electrodes and cells. The dynamic range due to channel noise is about 2.5 dB, variations of the diameter contribute up to 6 dB, and the distance between electrode and SGNs up to 12.5 dB. With appropriate SGN populations, CI users with dynamic ranges between 3 and 21 dB can be modeled. A larger dynamic range requires a larger SGN population and in turn longer computing times. The SGN are distributed along the length of the basilar membrane according to cell counts by [22, 75] in hearing impaired subjects. Given that SGNs degenerate further in deaf subjects [2, 40] and limitations in computational power, the model results presented here include up to 6.000 SGNs. SGNs were randomly distributed along the length of the cochlea and also the distance of the cells to the electrode, therefore the population was not uniformly distributed—Fig. 5.

2.5 Sound-Localization Model

For sound localization experiments, left- and right-ear signals were processed with normal-hearing, NH or CI-listening models, which provide auditory-nerve-fiber, ANF, responses for further evaluations. Here the inner ear model from Wang [82] was selected as the NH reference. In the case of CI hearing, the acoustic input was processed by two independent models of speech processors, followed by two models of electrically evoked ANF/SGN responses—see Fig. 6.

Schematic of the used framework. Each channel of a binaural acoustic signal is scaled and analyzed by both speech processors independently and transformed into firing patterns for each of the implant electrodes, according to the used coding strategy. The spike trains of the ANFs are then calculated from the electric field gradients. The Lindemann model, as binaural back end, performs the localization task in one frequency band

Commonly a speech processor uses an AGC to scale the input signal to the limited dynamic range of electrical hearing—see Sect. 2.1. Then a designated coding strategy translates the acoustic input into stimulation patterns for each electrode. Note that there is no common synchronization between the two CIs. As a result, the AGC and stimulation delivered to the electrodes at the two ears run independent on both implants. Applying independent gains to the left and right CI alters ILD cues in the acoustic signals and unsynchronized firing patterns obscure ITD cues. In the following a best case scenario, where both implants are synchronized will be further investigated.

Lindemann developed a binaural model [46, 47] originally intended for NH listeners. This model is based on the Jeffress model [38] and assumes that coincidence neurons receive input from a tapped delay line from each side of the tonotopic representation of the cochlea. The coincidence detecting neurons are located along the delay lines such that they fire at specific ITDs.

This process is mathematically described as a cross-correlation function, as defined in Eq. 21. \(l(n)\) and \(r(n)\) denote the discrete left and right input signals. The cross-correlation output is a value of signal energy as a function of time delay.

The Lindemann model extends the correlation delay line of the Jeffress model by introducing inhibitory elements, which adds ILD sensitivity to the model. This is modeled with attenuation elements along the delay-line. By this arrangement, ILDs are mapped to a corresponding cross-correlation time. The model does not consider any correlation between different frequency bands in the hearing system. The sharpness of the correlation peaks depends on the inhibition parameter. Larger values will sharpen the peaks. In addition, the model features a temporal integration element to stabilize the output for non stationary input signals. For further details see [47].

3 Testing the Model

3.1 Test Set-Up

For testing purposes a binaural signal generator was implemented (Fig. 7), which provides an acoustic two channel signal carrying ITD and ILD information.

The simulated listening setup consists of a sound source that is circling around the listener’s head at 1 Hz. The distance between the two ears was set to 150 mm. An emitted wavefront will reach both ears at different times and thus invoke location-dependent ITDs. ILDs were evoked with a frequency-independent attenuation component, when required. By intention, no head shadow effect was included to control ILD and ITD independently from each other.

3.2 Results

3.2.1 ANF-Response Patterns

Figure 8 shows smoothed response patterns for 6,000 auditory nerve fibers in response to the spoken utterance /ay/ from the ISOLET database [16]—female speaker fcmc0-A1-t, upper trace, 72.8 dB(A). Smoothing was achieved with a 10 ms Hamming window as low-pass filter.

Response pattern of auditory nerve fibers in response to the spoken utterance /ay/. Top panel acoustic signal. Second intact-ear model [82], 60 high-spontaneous-rate ANFs per frequency channel, averaged with a 10 ms Hamming window—right column Averaged firing rate over whole utterance. Third response to electric stimulation with CIS strategy. Bottom same with FS4 strategy. The electrode positions are shown schematically on the left hand side. The electrical field spread, here 1 dB/mm, see Sect. 2.2, limits the spatial resolution of electrical stimulation

Response pattern from the area marked in Fig. 8 at high temporal resolution—0.4 ms time bins. Left intact ear. Middle implanted ear with CIS, Right with FS4 coding strategy. Upper traces show the response probabilities of a population of neurons at the position of the most apical electrode in a time bin

Figure 9 shows spike patterns with high temporal resolution for the acoustic signals, CIS and FS4. The high-resolution figures for the normal-hearing model show very strong phase locking to the fundamental frequency of approx. 220 Hz of the speech signal—which is not coded at all by the CIS strategy. In the case of FS4 strategy, there are phase-locked responses, which are, however, obscured by additional spikes that are elicited by other nearby electrodes due to electrical crosstalk.

3.2.2 Lindemann Example with Rectified Bandpass-Filter Input

Adapting the original example from the Lindemann model, a loudspeaker playing a 500 Hz pure tone was circling the listener at a rate of 1 Hz, in a virtual anechoic listening scenario. The signal pre-processing for the original Lindemann NH model consists of a filter bank with half-wave rectification and low-pass filtering to mimic the output of an inner hair cell.

Output of the Lindemann cross-correlation for a low-frequency pure tone of 500 Hz, a original input, b spike-trains derived from an auditory model. A positive/negative Lindemann cross-correlation time-delay indicates a sound-source positioned right/left of the median-sagittal plane of the head. A sound source, circling the head once per second leads to a deviation of max. 0.441 ms in the cross-correlation time-delay

The output of the model is shown in Fig. 10a, which shows that the model localizes the sound source with ease. The signal started with an interaural delay of 0 ms, corresponding to a source location of \(0^\circ \), and performed two clockwise rotations. The maximum time delay of \(+0.4\) ms was reached at 0.25 and 1.25 s for the right side and \(-0.4\) ms at 0.75 and 1.75 s for the left side, which corresponded to a location at \(\pm 90^\circ \). The 500 Hz-analysis shows that the model analyzes the simulated time delay correctly. Note that for a 500 Hz sinusoidal input, the cross-correlation time delay has a repetition period of 2 ms. Nevertheless, only delays smaller than 1 ms are considered, as the distance between the ears is only 150 mm.

3.2.3 Lindemann Model with Spike Count Input

The Lindemann model can be used with spike-count data of the ANF as well. By use of the NH-listener-ANF model as described in Sect. 2.4, the spike response of two ANF populations from the left and right cochleae was calculated and processed by the Lindemann model. Figure 10b shows the Lindemann cross-correlation for the circling source emitting a 500 Hz pure tone, when using spike counts derived from the Wang model—see [82]. The circling can be clearly seen, although the image looks noisier than the original model, what results from the probabilistic nature of the spikes.

NH-listener localization of a moving speaker saying the German sentence “Britta gewann drei schwere Steine”. b Short-time spectrogram of the acoustic input signal in a 200-Hz band, a corresponding Lindemann cross-correlation using ANF spike counts. Note the fricatives /s/ and /sch/ occurring at 1 and 1.4 s in the 200-Hz-band spectrogram, do not provide enough energy for the Lindemann model to localize

As the localization of a pure sine wave is a somewhat artificial example, the model was also tested with speech sounds. Results are illustrated in Fig. 11a. When this sentence is radiated from the moving speaker, it can be well localized using the Lindemann cross-correlation even with ANF spike count inputs simulated with the NH-listener model in the 200-Hz low-frequency region.

3.2.4 Localization with Cochlear Implants

As sound localization in complex acoustic environments is still poor for most of the CI users, the question arises if—and if yes, how well—today’s coding strategies can preserve binaural cues. In the following, two commercial coding strategies are compared, namely, MED-EL’s former CIS strategy and their current FS4 strategy.

Speech samples derived from the binaural signal generator were processed with the speech processor and the two coding strategies—see Fig. 7. The ideal assumption was made that both ears, implants and fittings were identical. Before the electrically-evoked spike responses of the ANF is calculated, the electrode-stimulation patterns can be used as inputs to the Lindemann cross-correlation. This is advantageous when comparing coding strategies as no neuronal model is required yet, allowing us to track the point at which the binaural localization cues are compromised.

Figure 12 shows that the Lindemann model fails to localize the speech sample in the case of the CIS strategy, but succeeds in the case of FS4. Therefore, it can be concluded that FS4, with its fine structure channels, preserves the temporal-fine-structure-ITD cues needed for localization, but CIS does not. In the case of the CIS strategy, the Lindemann cross-correlation only outputs values at multiples of 0.6 ms, which is due to the CIS stimulation rate of \(\approx \)1,600 pulses per second in each channel. For FS4, the Lindemann correlation shows a time-delay resolution of \({\approx }0.2\) ms. This is possible because the FS4 strategy breaks-up the CIS rule and dedicates a higher sampling rate to the FS channels.

Speech-localization comparison of different coding strategies with the German speech sample from Fig. 11b. The output of apical electrode #2 of CIS and FS4 coding strategies is used as input to the Lindemann cross-correlation module. Whereas the model is unable to localize the moving sound source using the CIS strategy, the FS4 clearly manages to provide cues required for sound localization to the CI user. a CIS electrode 2. b FS4 electrode 2

Lindemann cross-correlation with ANF-spike-count input of two locations. Location (a) is directly at electrode #2 at 26.9 mm from base, and (b) between electrodes #3 and 4 at 23.8 mm from the base. The speech-sample input was processed by the FS4-coding strategy. From that data the circling source movement can be identified, but the Lindemann cross-correlation is much more distorted than the NH-listener example from Fig. 11a. The channel crosstalk occurring in electrical stimulation—see Sect. 2.2—is one of the reasons for deterioration of the Lindemann cross-correlation

The FS4 coding strategy itself preserves localization cues. However, it is still possible that the CI-electrode crosstalk—see Sect. 2.2—deteriorates sound localization cues. For that reason the ANF module was added to the model, and the response for the electrically-evoked hearing was processed further with the Lindemann module. Figure 13 shows results from two different locations, the first next to electrode #2 at 26.9 mm from the base and the second between electrodes #3 and 4 at 23.8 mm from the base. Compared to the NH case and to electrode-stimulation patterns, results are worse, but the main shape is still observable—which is indeed a major breakthrough for a fully-featured coding strategy for speech input. In less ideal cases, the location cues deteriorate. This is visible at the more basal location, which lies between two electrodes and also gets more input from the CIS electrodes due to electrical crosstalk. If left and right electrodes were inserted at different depths, position mismatch could further reduce correlations. However, as yet it not clear how much mismatch can be counterbalanced by the brain’s ability to adapt to unusual cues as long as they are consistent.

4 Discussion and Conclusion

This chapter describes a framework to evaluate the extent as to which features required for sound localization are preserved by cochlear-implant coding strategies. Where a correlation model similar to the one proposed by Jeffress [38] is most likely implemented in the barn owl, investigations of the mammalian neuronal sound-localization pathway indicate that humans probably have two systems that extract ILDs and ITDs separately, and probably not with coincidence neurons to estimate the interaural cross correlation—for a review see [31]. Nevertheless, even if the neuronal processing schemes to extract cues for sound localization are still not yet completely understood, it is quite clear that ILDs and ITDs are extracted somehow. This investigation focused on the evaluation of ITD cues and used the model proposed by Lindemann. Thus, there is little doubt that the fundamental findings derived from this procedure hold true even if actual neuronal systems process localization cues somewhat differently.

The Lindemann model was adopted in such a way so that it can process electrical pulse-trains and neuronal spike trains. The analysis was limited to the low-frequency range where neuronal responses exhibit strong phase-locking. At low frequencies, level differences are usually small and ITD processing is assumed to be often dominant in human sound localization [3]. The results presented here show that ITD coding works well also for neuronal spike trains despite their probabilistic behavior. For auditory-nerve spike trains, the Lindemann cross-correlation is more variable as compared to its original input—see Fig. 10a—nevertheless, ITDs are clearly coded. This holds true not only for pure tones but also for complex speech sounds—compare Fig. 11a.

When electrical pulse trains delivered from a CIS coding strategy for one cochlear implant channel was analyzed, it was observed that ITD coding breaks down completely—compare Fig. 12a. This is not surprising, because the pulse train delivered to a single channel, here 1,600 Hz, codes the temporal envelope of the filtered sound signal and was never intended to provide ITD cues with sufficient precision. Given that the left and right processors are not synchronized, the time difference between left and right pulse train is arbitrary and is likely to change over time due to small deviations of the internal clock frequencies.

However, it is known that CI users are indeed able to localize sound sources, albeit less precisely than normal-hearing subjects [39, 61]. CI users almost exclusively use ILDs [70, 80], which was excluded in this investigation. The model results coincide partly with these findings. The Lindemann model was not able to predict sound localization based on ILD cues for CIS strategies, because the cross-correlation mechanism locked on the temporal structure of the signal—see Fig. 12. The temporal precision of the pulse trains from a CIS strategy is not sufficient for, and may even be detrimental to, sound localization. Therefore, ITDs must be ignored by CI users with CIS strategies, if the neuronal system is able to extract them at all at the high stimulation rates used in contemporary CIS strategies.

Nevertheless, FS coding strategies might indeed be able to transmit ITDs with sufficient temporal precision. For instance, the FS4 strategy tested here was found to provide useful ITDs coding, at least at the level of the pulse train of a single electrode—compare Fig. 12. The temporal precision is \(\approx \)0.2 ms, which is considerable higher than for the CIS strategy due to the higher sampling rate dedicated to the FS channels. When the responses at the level of neuronal spike trains were analyzed—compare Fig. 13—a large degradation of the ITD coding caused by channel crosstalk was found. Therefore, channel crosstalk does not only lead to a spectral smearing of the information but also affects the precision of temporal coding. Where this model predicts that, at least in the best case scenario, there is at least some ITD information left in the neuronal excitation pattern of the auditory nerve, it is unclear if and to what extent this information can actually be extracted by the auditory system.

In summing up, the model proposed in this chapter generates spiking auditory nerve responses and provides a quantitative evaluation of temporal cues for sound localization. The ability of the sound-localization model to process neuronal spike trains makes the model very versatile. It is possible to evaluate not only responses of the intact ear but also of the deaf inner ear provided with a cochlear implant. The model delivers quantitative data and therefore enables comparisons between different cochlear implant coding strategies. As the model of electric excitation of the auditory nerve also includes effects such as channel crosstalk, neuronal adaptation and mismatch of electrode positions between left and right ear,Footnote 2 its predictive power goes far beyond pure analysis of the output patterns of implants, which is how contemporary coding strategies were developed. Nevertheless, up to now, this model only extends up to the level of the auditory nerve and can, thus, not answer the question of whether ITDs can still be processed by higher levels of the auditory pathway. Where this final evaluation always has to be done with CI users, this framework provides important answers to the question of how well binaural cues are coded at the first neuronal level, and it allows the design and even the emulation of the required listening experiments. Given the long development cycles including design, fabrication, approval, implantation, and finally extensive measurements in a large group of CI users to yield statistically significant results, the benefit of this approach cannot be overestimated.

Notes

- 1.

This concept is realized in the MAESTRO cochlear implant system by MED-EL in the lowest-frequency channels, which stimulate the most apical electrodes.

- 2.

Data not shown.

References

P. J. Basser. Cable equation for a myelinated axon derived from its microstructure. Med. Biol. Eng. Comput., 31 Suppl:S87–S92, 1993.

P. Blamey. Are spiral ganglion cell numbers important for speech perception with a cochlear implant? Am. J. Otol., 18:S11–S12, 1997.

J. Blauert. Spatial hearing: The psychophysics of human sound localization. 2nd, revised ed. MIT Press, Berlin-Heidelberg-New York NY, 1997.

J. Breebaart, S. van de Par, and A. Kohlrausch. Binaural processing model based on contralateral inhibition. I. model structure. J. Acoust. Soc. Am., 110:1074–1088, 2001.

J. Breebaart, S. van de Par, and A. Kohlrausch. Binaural processing model based on contralateral inhibition. II. dependence on spectral parameters. J. Acoust. Soc. Am., 110:1089–1104, 2001.

J. Breebaart, S. van de Par, and A. Kohlrausch. Binaural processing model based on contralateral inhibition. III. dependence on temporal parameters. J. Acoust. Soc. Am., 110:1105–1117, 2001.

J. J. Briaire and J. H. Frijns. Field patterns in a 3d tapered spiral model of the electrically stimulated cochlea. Hear. Res., 148:18–30, 2000.

I. C. Bruce, M. W. White, L. S. Irlicht, S. J. O’Leary, and G. M. Clark. The effects of stochastic neural activity in a model predicting intensity perception with cochlear implants: low-rate stimulation. IEEE Trans. Biomed. Engr. 46(12):1393–1404, 1999.

I. C. Bruce, M. W. White, L. S. Irlicht, S. J. O’Leary, S. Dynes, E. Javel, G. M. Clark. A stochastic model of the electrically stimulated auditory nerve: single-pulse, response. IEEE Trans. Biomed. Engr. 46:617–629, 1999.

J. Certaine. The solution of ordinary differential equations with large time constants. Mathematical methods for digital computers, pages 128–132, 1960.

C. Cherry and B. M. Sayers. Experiments upon the total inhibition of stammering by external control, and some clinical results. J. Psychosom. Res., 1:233–246, 1956.

G. Clark. Cochlear implants: Fundamentals and applications. New York: Springer, 2003.

H. S. Colburn. Theory of binaural interaction based on auditory-nerve data. I. general strategy and preliminary results on interaural discrimination. J. Acoust. Soc. Am., 54:1458–1470, 1973.

H. S. Colburn. Theory of binaural interaction based on auditory-nerve data. II. detection of tones in noise. J. Acoust. Soc. Am., 61:525–533, 1977.

H. S. Colburn and J. S. Latimer. Theory of binaural interaction based on auditory-nerve data. III. joint dependence on interaural time and amplitude differences in discrimination and detection. J. Acoust. Soc. Am., 64:95–106, 1978.

R. Cole, Y. Muthusamy, and M. Fanty. The isolet spoken letter database, 1990.

J. Colombo and C. W. Parkins. A model of electrical excitation of the mammalian auditory-nerve neuron. Hear. Res., 31:287–311, 1987.

M. Dorman, K. Dankowski, G. McCandless, and L. Smith. Consonant recognition as a function of the number of channels of stimulation by patients who use the symbion cochlear implant. Ear Hear., 10:288–291, 1989.

N. I. Durlach. Equalization and cancellation theory of binaural masking-level differences. J. Acoust. Soc. Am., 35:1206–1218, 1963.

S. B. C. Dynes. Discharge characteristics of auditory nerve fibers for pulsatile electrical stimuli. PhD thesis, Massachusetts Institute of Technology, 1996.

E. Erixon, H. Högstorp, K. Wadin, and H. Rask-Andersen. Variational anatomy of the human cochlea: implications for cochlear implantation. Otol. Neurotol., 30:14–22, 2009.

E. Felder and A. Schrott-Fischer. Quantitative evaluation of myelinated nerve fibres and hair cells in cochleae of humans with age-related high-tone hearing loss. Hear. Res., 91:19–32, 1995.

S. Fredelake and V. Hohmann. Factors affecting predicted speech intelligibility with cochlear implants in an auditory model for electrical stimulation. Hear. Res., 2012.

L. M. Friesen, R. V. Shannon, D. Baskent, and X. Wang. Speech recognition in noise as a function of the number of spectral channels: comparison of acoustic hearing and cochlear implants. J. Acoust. Soc. Am., 110:1150–1163, 2001.

J. H. Frijns, J. J. Briaire, and J. J. Grote. The importance of human cochlear anatomy for the results of modiolus-hugging multichannel cochlear implants. Otol. Neurotol., 22:340–349, 2001.

J. H. Frijns, S. L. de Snoo, and R. Schoonhoven. Potential distributions and neural excitation patterns in a rotationally symmetric model of the electrically stimulated cochlea. Hear. Res., 87:170–186, 1995.

J. H. Frijns, S. L. de Snoo, and J. H. ten Kate. Spatial selectivity in a rotationally symmetric model of the electrically stimulated cochlea. Hear. Res., 95:33–48, 1996.

Q.-J. Fu. Loudness growth in cochlear implants: effect of stimulation rate and electrode configuration. Hear. Res., 202:55–62, 2005.

W. Gerstner and W. M. Kistler. Spiking neuron models. Cambridge University Press, 2002

J. H. Goldwyn, S. M. Bierer, and J. A. Bierer. Modeling the electrode-neuron interface of cochlear implants: effects of neural survival, electrode placement, and the partial tripolar configuration. Hear. Res., 268:93–104, 2010.

B. Grothe, M. Pecka, and D. McAlpine. Mechanisms of sound localization in mammals. Physiol. Rev., 90:983–1012, 2010.

V. Hamacher. Signalverarbeitungsmodelle des elektrisch stimulierten Gehörs - Signal-processing models of the electrically-stimulated auditory system. PhD thesis, IND, RWTH Aachen, 2004.

R. Hartmann and R. Klinke. Impulse patterns of auditory nerve fibres to extra- and intracochlear electrical stimulation. Acta Otolaryngol Suppl, 469:128–134, 1990.

I. Hochmair, P. Nopp, C. Jolly, M. Schmidt, H. Schösser, C. Garnham, and I. Anderson. MED-EL cochlear implants: state of the art and a glimpse into the future. Trends Amplif, 10:201–219, 2006.

I. J. Hochmair-Desoyer, E. S. Hochmair, H. Motz, and F. Rattay. A model for the electrostimulation of the nervus acusticus. Neuroscience, 13:553–562, 1984.

A. E. Holmes, F. J. Kemker, and G. E. Merwin. The effects of varying the number of cochlear implant electrodes on speech perception. American Journal of Otology, 8:240–246, 1987.

N. S. Imennov and J. T. Rubinstein. Stochastic population model for electrical stimulation of the auditory nerve. IEEE Trans Biomed Eng. 56:2493–2501, 2009.

L. A. Jeffress. A place theory of sound localization. J. Comp. Physiol. Psychol., 41:35–39, 1948.

S. Kerber and B. U. Seeber. Sound localization in noise by normal-hearing listeners and cochlear implant users. Ear Hear., 33:445–457, 2012.

A. M. Khan, O. Handzel, B. J. Burgess, D. Damian, D. K. Eddington, and J. B. Nadol, Jr. Is word recognition correlated with the number of surviving spiral ganglion cells and electrode insertion depth in human subjects with cochlear implants? Laryngoscope, 115:672–677, 2005.

N. Y.-S. Kiang. Discharge patterns of single fibers in the cat’s auditory nerve. Special technical report, 166, Massachusetts Institute of Technology, 1965.

W. E. Kock. Binaural localization and masking. J. Acoust. Soc. Am., 22:801, 1950.

A. Kohlrausch, J. Braasch, D. Kolossa, and J. Blauert. An introduction to binaural processing. In J. Blauert, editor, The technology of binaural listening, chapter 1. Springer, Berlin-Heidelberg-New York NY, 2013.

A. Kral, R. Hartmann, D. Mortazavi, and R. Klinke. Spatial resolution of cochlear implants: the electrical field and excitation of auditory afferents. Hear. Res., 121:11–28, 1998.

M. C. Liberman and M. E. Oliver. Morphometry of intracellularly labeled neurons of the auditory nerve: correlations with functional properties. J. Comp. Neurol., 223:163–176, 1984.

W. Lindemann. Extension of a binaural cross-correlation model by contralateral inhibition. I. simulation of lateralization for stationary signals. J. Acoust. Soc. Am., 80:1608–1622, 1986.

W. Lindemann. Extension of a binaural cross-correlation model by contralateral inhibition. II. the law of the first wave front. J. Acoust. Soc. Am., 80:1623–1630, 1986.

P. C. Loizou. Signal-processing techniques for cochlear implants. 18(3):34–46, 1999.

J. Malmivuo and R. Plonsey. Bioelectromagnetism: principles and applications of bioelectric and biomagnetic fields. Oxford University Press, USA, 1995.

H. J. McDermott. Music perception with cochlear implants: a review. Trends Amplif, 8:49–82, 2004.

D. R. McNeal. Analysis of a model for excitation of myelinated nerve. BME-23:329–337, 1976.

H. Mino, J. T. Rubinstein, C. A. Miller, and P. J. Abbas. Effects of electrode-to-fiber distance on temporal neural response with electrical stimulation. 51:13–20, 2004.

H. Mino, J. T. Rubinstein, and J. A. White. Comparison of algorithms for the simulation of action potentials with stochastic sodium channels. Ann. Biomed. Eng., 30:578–587, 2002.

H. Motz and F. Rattay. A study of the application of the hodgkin-huxley and the frankenhaeuser-huxley model for electrostimulation of the acoustic nerve. Neuroscience, 18:699–712, 1986.

H. Motz and F. Rattay. Signal processing strategies for electrostimulated ear prostheses based on simulated nerve response. Perception, 16:777–784, 1987.

M. H. Negm and I. C. Bruce. Effects of i(h) and i(klt) on the response of the auditory nerve to electrical stimulation in a stochastic hodgkin-huxley model. Conf Proc IEEE Eng Med Biol Soc, 2008:5539–5542, 2008.

D. A. Nelson, G. S. Donaldson, and H. Kreft. Forward-masked spatial tuning curves in cochlear implant users. J. Acoust. Soc. Am., 123:1522–1543, 2008.

M. Nicoletti, P. Bade, M. Rudnicki, and W. Hemmert. Coding of sound into neuronal spike trains in cochlear implant users. In 13th Ann. Meetg. German Soc. Audiol., (DGA), 2010

M. Nicoletti, M. Isik, and W. Hemmert. Model-based validation framework for coding strategies in cochlear implants. In Conference on Implantable Auditory Prostheses (CIAP), 2011.

NIH Publication No. 11–4798. Cochlear implants, March 2011.

P. Nopp, P. Schleich, and P. D’Haese. Sound localization in bilateral users of MED-EL combi 40/40+ cochlear implants. Ear Hear., 25:205–214, 2004.

S. J. O’Leary, R. C. Black, and G. M. Clark. Current distributions in the cat cochlea: a modelling and electrophysiological study. Hear. Res., 18:273–281, 1985.

C. W. Parkins and J. Colombo. Auditory-nerve single-neuron thresholds to electrical stimulation from scala tympani electrodes. Hear. Res., 31:267–285, 1987.

F. Rattay. Analysis of models for external stimulation of axons. 33:974–977, 1986.

F. Rattay. The basic mechanism for the electrical stimulation of the nervous system. Neuroscience, 89:335–346, 1999.

F. Rattay, R. N. Leao, and H. Felix. A model of the electrically excited human cochlear neuron. II. influence of the three-dimensional cochlear structure on neural excitability. Hear. Res., 153:64–79, 2001.

F. Rattay, P. Lutter, and H. Felix. A model of the electrically excited human cochlear neuron. I. contribution of neural substructures to the generation and propagation of spikes. Hear. Res., 153:43–63, 2001.

J. S. Rothman and P. B. Manis. The roles potassium currents play in regulating the electrical activity of ventral cochlear nucleus neurons. J. Neurophysiol., 89:3097–3113, 2003.

J. T. Rubinstein, B. S. Wilson, C. C. Finley, and P. J. Abbas. Pseudospontaneous activity: stochastic independence of auditory nerve fibers with electrical stimulation. Hear. Res., 127:108–118, 1999.

B. U. Seeber and H. Fastl. Localization cues with bilateral cochlear implants. J. Acoust. Soc. Am., 123:1030–1042, 2008.

R. V. Shannon. Multichannel electrical stimulation of the auditory nerve in man. II. Channel interaction. Hear. Res., 12:1–16, 1983.

R. K. Shepherd and E. Javel. Electrical stimulation of the auditory nerve. I. Correlation of physiological responses with cochlear status. Hear. Res., 108:112–144, 1997.

R. K. Shepherd and E. Javel. Electrical stimulation of the auditory nerve: II. Effect of stimulus waveshape on single fibre response properties. Hear. Res., 130:171–188, 1999.

J. E. Smit, T. Hanekom, A. van Wieringen, J. Wouters, and J. J. Hanekom. Threshold predictions of different pulse shapes using a human auditory nerve fibre model containing persistent sodium and slow potassium currents. Hear. Res., 269:12–22, 2010.

H. Spoendlin and A. Schrott. The spiral ganglion and the innervation of the human organ of corti. Acta Otolaryngol. (Stockh.), 105:403–410, 1988.

R. Stern, Jr and H. S. Colburn. Theory of binaural interaction based in auditory-nerve data. IV. A model for subjective lateral position. J. Acoust. Soc. Am., 64:127–140, 1978.

R. M. Stern and C. Trahiotis. Models of binaural interaction. In B. C. J. Moore, editor, Hearing, Handbook of perception and cognition, chapter 10, pages 347–387. Academic Press, second edition, 1995.

B. Stöbich, C. M. Zierhofer, and E. S. Hochmair. Influence of automatic gain control parameter settings on speech understanding of cochlear implant users employing the continuous interleaved sampling strategy. Ear Hear., 20:104–116, 1999.

I. Tasaki. New measurements of the capacity and the resistance of the myelin sheath and the nodal membrane of the isolated frog nerve fiber. Am. J. Physiol., 181:639–650, 1955.

R. van Hoesel, M. Böhm, J. Pesch, A. Vandali, R. D. Battmer, and T. Lenarz. Binaural speech unmasking and localization in noise with bilateral cochlear implants using envelope and fine-timing based strategies. J. Acoust. Soc. Am., 123:2249–2263, 2008.

B. N. W. Schwartz and R. Stämpli. Longitudinal resistance of axoplasm in myelinated nerve fibers of the frog. Pflügers Archiv European Journal of Physiology, 379:R41, 1979.

H. Wang. Speech coding and information processing in the peripheral human auditory system. PhD thesis, Technische Universität München, 2009.

D. Whiten. Electro-anatomical models of the cochlear implant. PhD thesis, Massachusetts Institute of Technology, 2007.

B. S. Wilson, C. C. Finley, D. T. Lawson, R. D. Wolford, D. K. Eddington, and W. M. Rabinowitz. Better speech recognition with cochlear implants. Nature, 352:236–238, 1991.

J. Woo, C. A. Miller, and P. J. Abbas. The dependence of auditory nerve rate adaptation on electric stimulus parameters, electrode position, and fiber diameter: a computer model study. J. Assoc. Res. Otolaryngol., 11:283–296, 2010.

F.-G. Zeng, G. Grant, J. Niparko, J. Galvin, R. Shannon, J. Opie, and P. Segel. Speech dynamic range and its effect on cochlear implant performance. J. Acoust. Soc. Am., 111:377–386, 2002.

F.-G. Zeng, S. Rebscher, W. Harrison, X. Sun, and H. Feng. Cochlear implants: System design, integration, and, evaluation. 1:115–142, 2008.

C. Zierhofer. Electrical nerve stimulation based on channel-specific sequences. European Patent, Office, WO/2001/013991, 2001.

Acknowledgments

This work was supported by the German Federal Ministry of Education and Research within the Munich Bernstein Center of Computational Neuroscience, ref.# 01GQ1004B and 01GQ1004D, and MED-EL Innsbruck. The authors thank V. Hohmann, F.-M. Hoffmann, P. Nopp, J. Blauert and two anonymous reviewers for helpful comments.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2013 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Nicoletti, M., Wirtz, C., Hemmert, W. (2013). Modeling Sound Localization with Cochlear Implants. In: Blauert, J. (eds) The Technology of Binaural Listening. Modern Acoustics and Signal Processing. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-37762-4_12

Download citation

DOI: https://doi.org/10.1007/978-3-642-37762-4_12

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-37761-7

Online ISBN: 978-3-642-37762-4

eBook Packages: EngineeringEngineering (R0)