Abstract

The conventional photodiode, available in every CMOS process as a PN junction, can be combined with in-pixel electronics to achieve good performance in 3D Time-Of-Flight imagers. The high level of integration of deep submicron technologies allows the realization of 3D pixels with advanced in-pixel processing, such as accumulation and background-light cancellation, while keeping reasonable fill-factors.

The pixel architectures described in this chapter are “circuit-centric”, in contrast with the “device-centric” pixels introduced in Chap. 4 on photo-mixing devices. The operations needed to extract the 3D measurement are performed using amplifiers, switches, and capacitors. The main challenge of this approach is implementing complex per-pixel electronic circuits in a power- and area-efficient way.

Several approaches have been pursued, based on modulated or pulsed light, each with pros and cons: modulated light exploits the high linearity of the technique, while pulsed light enables fast 3D imaging and an increased signal-to-noise ratio. Different techniques aimed at improving the rejection of background light, which is a strong point of electronics-based 3D pixels, will also be introduced.

1 Pulsed I-TOF 3D Imaging Method

The following detailed theoretical analysis of 3D imaging based on pulsed indirect Time-Of-Flight (I-TOF) introduces the operating principle and the main relationships between the physical quantities. The first pioneering work on electronics-based 3D sensors, described in [1], employed the pulsed indirect TOF technique explained in the following paragraphs.

1.1 Principle of Operation

Pulsed I-TOF 3D imagers rely on the indirect measurement of the delay of a reflected laser pulse to extract the distance information. Several integration windows can be defined, leading to a number of integrated portions of the reflected laser pulse: each window, properly delayed, can map a different distance range [2].

In particular, as depicted in Fig. 1, the principle of operation exploits the integration of a “slice” of the reflected pulse during a temporal window (W1), whose beginning determines the spatial offset of the measurement: the more the laser pulse is delayed (the farther the object), the larger the integrated value. Since the received pulse amplitude also depends on the object reflectivity and distance, the same measurement must also be performed with a window (W0) that integrates the complete laser pulse for both near and far objects. The latter value is then used to normalize the integral of the sliced pulse, yielding a value that is linearly related to the distance. Note that the complementary part of the pulse can also be used in W1, with similar results [3].

Background light can be removed by changing the polarity of the integration during the windows W0 and W1, so that the background contribution cancels at the end of the integration.

A 3D frame is obtained by accumulating several laser pulses for each window in order to increase the signal-to-noise ratio of the integrated voltage. For a given frame rate, the number of accumulations is typically limited by the specifications of the illuminator, usually a laser with a maximum duty-cycle of about 0.1 %.
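The duty-cycle constraint can be turned into a rough upper bound on the number of accumulations per frame. The sketch below is illustrative only: it assumes one laser pulse per window (two per accumulation) and ignores readout time, and `max_accumulations` is a hypothetical helper, not part of any system described here.

```python
def max_accumulations(duty_cycle: float, pulse_width_s: float, frame_rate_hz: float,
                      pulses_per_accumulation: int = 2) -> int:
    """Upper bound on accumulations per frame allowed by the laser duty-cycle.

    Assumes `pulses_per_accumulation` pulses of width `pulse_width_s` per
    accumulation (e.g. one for W0 and one for W1) and no readout dead time.
    """
    laser_on_time_s = duty_cycle / frame_rate_hz        # light budget per frame
    pulses_per_frame = laser_on_time_s / pulse_width_s
    # A tiny epsilon keeps float results such as 499.99999999 from rounding down
    return int(pulses_per_frame / pulses_per_accumulation + 1e-9)


print(max_accumulations(1e-3, 50e-9, 20.0))   # 0.1 % duty, 50 ns pulses, 20 fps
```

With the 0.1 % duty-cycle and the 50 ns pulses of Case Study 2, this bound is 500 accumulations at 20 fps and 153 at 65 fps.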

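Ideally, if the laser pulse of length Tp is fired with no delay with respect to the beginning of window W0, the distance is extracted from the voltages VW0 and VW1 integrated during the two windows as

\[ z = \frac{c \, T_p}{2} \cdot \frac{V_{W1}}{V_{W0}} \qquad (1) \]

where c is the speed of light.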

The maximum measurable distance with two integration windows is set by the pulse length, zmax = c·Tp/2, and requires a minimum delay of Tp between W0 and W1, while each integration window must be at least 2Tp long if background subtraction is implemented.
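The normalization and the range limit can be checked numerically. This is a sketch assuming that W1 starts exactly Tp after W0, so that zmax = c·Tp/2 (which reproduces the 7.5 m range quoted later for a 50 ns pulse); the helper names are illustrative, and clamping the ratio to [0, 1] is a design choice added here to absorb noise, not part of the described method.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def max_distance(pulse_width_s: float) -> float:
    """zmax = c * Tp / 2: the echo delay can be at most one pulse width."""
    return SPEED_OF_LIGHT * pulse_width_s / 2.0


def pulsed_itof_distance(v_w0: float, v_w1: float, pulse_width_s: float) -> float:
    """Distance from the normalized ratio V_W1 / V_W0 (background already cancelled)."""
    if v_w0 <= 0.0:
        raise ValueError("W0 must hold the full, positive pulse integral")
    # Noise can push the ratio slightly outside [0, 1]; clamp it (illustrative choice)
    ratio = min(max(v_w1 / v_w0, 0.0), 1.0)
    return max_distance(pulse_width_s) * ratio


# A 50 ns pulse gives zmax of about 7.49 m; a ratio of 0.25 maps to a quarter of it
print(max_distance(50e-9))
print(pulsed_itof_distance(0.8, 0.2, 50e-9))
```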

1.2 Signal and Noise Analysis

The performance of an electronics-based pulsed I-TOF system can be predicted theoretically, starting from the power budget and the optics: given a power density Pd on a surface, the power hitting the pixel is obtained as follows:

where FF is the pixel fill-factor, Apix is the area, τopt is the optics transmission and F# is the optics f-number.

While the background power density depends on the illumination level, the power density of the reflected laser pulse depends on the reflectivity ρ, the distance z and the beam divergence θ. Assuming that the laser beam projects its total power Plaser onto a square, the power density on the target can be expressed as:
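The power budget can be sketched in code. The forms below are assumptions for illustration, not the chapter's exact equations: the pixel power uses the common camera relation Ppix = Pd·FF·Apix·τopt/(4F#²), and the laser is assumed to spread Plaser uniformly over a square of side 2z·tan(θ/2), of which the target reflects a fraction ρ. Function names and all numeric inputs in the example (divergence, fill-factor, pitch, optics) are hypothetical.

```python
import math


def pixel_power(power_density_w_m2: float, fill_factor: float, pixel_area_m2: float,
                optics_transmission: float, f_number: float) -> float:
    """Power on one pixel, assuming Ppix = Pd * FF * Apix * tau_opt / (4 * F#^2)."""
    return (power_density_w_m2 * fill_factor * pixel_area_m2 * optics_transmission
            / (4.0 * f_number ** 2))


def laser_density_on_target(laser_power_w: float, distance_m: float,
                            divergence_rad: float, reflectivity: float) -> float:
    """Reflected laser power density, assuming a square spot of side 2*z*tan(theta/2)."""
    side_m = 2.0 * distance_m * math.tan(divergence_rad / 2.0)
    return reflectivity * laser_power_w / side_m ** 2


# Chain the two: doubling the distance cuts the pixel power by a factor of 4
near = pixel_power(laser_density_on_target(225.0, 1.0, 0.5, 0.4), 0.3, (30e-6) ** 2, 0.9, 1.4)
far = pixel_power(laser_density_on_target(225.0, 2.0, 0.5, 0.4), 0.3, (30e-6) ** 2, 0.9, 1.4)
print(near, far, near / far)
```

The 1/z² dependence of the returned laser power is what weakens the signal, and hence the precision, at long range.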

Background and laser pulses both contribute to the generated electrons:

where the wavelength λ, quantum efficiency QE and integration time Tint may be different for the two contributions. As in Eq. (1), since both output voltages are obtained by integrating on the same capacitance, the distance can equally be calculated from the charges QW0 and QW1 integrated during W0 and W1:

\[ z = \frac{c \, T_p}{2} \cdot \frac{Q_{W1}}{Q_{W0}} \qquad (5) \]
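The conversion from optical power to electrons and the charge-ratio distance can be sketched as follows. The photon-to-electron relation N = P·Tint·QE·λ/(h·c) is the standard one and is assumed here to correspond to Eq. (4); the function names and sample numbers are illustrative.

```python
PLANCK = 6.626_070_15e-34        # J*s
SPEED_OF_LIGHT = 299_792_458.0   # m/s


def photoelectrons(power_w: float, wavelength_m: float, quantum_efficiency: float,
                   integration_time_s: float) -> float:
    """Mean number of electrons generated by optical power P over Tint."""
    photon_energy_j = PLANCK * SPEED_OF_LIGHT / wavelength_m
    return power_w * integration_time_s * quantum_efficiency / photon_energy_j


def distance_from_charges(n_w0: float, n_w1: float, pulse_width_s: float) -> float:
    """Charge-ratio distance in electron units: z = (c * Tp / 2) * N_W1 / N_W0."""
    return SPEED_OF_LIGHT * pulse_width_s / 2.0 * n_w1 / n_w0


# 1 nW at 905 nm over 1 s with unit QE is roughly 4.56e9 electrons
print(f"{photoelectrons(1e-9, 905e-9, 1.0, 1.0):.3e}")
```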

Ideally, the background contributes zero net charge to the total amplitude, so for m accumulations the number of electrons Nlaser from Eq. (4) gives:

These values are affected by photon shot noise [4]. In particular, although the background charge cancels, its noise contribution does not: it has an equivalent integration time of 4Tp, giving:

Applying the error propagation formula for a ratio, and taking into account only the shot noise of the generated electrons, the distance uncertainty is obtained:

This equation gives the shot-noise limit of a pulsed-light I-TOF imager and also allows evaluating the effect of background light on the sensor precision, assuming perfect cancellation. However, electronics noise typically dominates in this kind of 3D imager and cannot be neglected; since it strongly depends on the actual implementation of the sensor, it must be analysed for each specific circuit.
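The shot-noise limit can be evaluated numerically. This minimal sketch assumes that W0 and W1 are statistically independent, that electron counts follow Poisson statistics (variance equal to the mean), and that each window carries background shot noise equivalent to 4Tp of integration, as stated above. The optional `electronics_noise_e` argument (a hypothetical parameter: rms electrons per window at the end of the frame) shows how circuit noise could be added in quadrature.

```python
import math


def sigma_z(z_m: float, z_max_m: float, laser_e_per_pulse: float,
            background_e_per_4tp: float, accumulations: int,
            electronics_noise_e: float = 0.0) -> float:
    """Distance uncertainty for z = zmax * N1 / N0 via ratio error propagation.

    laser_e_per_pulse:    electrons from one full laser pulse (W0 integrates Tp)
    background_e_per_4tp: background electrons in an equivalent time of 4*Tp
    """
    r = z_m / z_max_m
    n0 = accumulations * laser_e_per_pulse        # W0: whole pulse
    n1 = r * n0                                   # W1: slice of length Tp*z/zmax
    n_bg = accumulations * background_e_per_4tp   # cancelled charge, noise remains
    var0 = n0 + n_bg + electronics_noise_e ** 2
    var1 = n1 + n_bg + electronics_noise_e ** 2
    # dz/dN1 = zmax/N0 and dz/dN0 = -zmax*N1/N0^2, combined in quadrature
    return (z_max_m / n0) * math.sqrt(var1 + r ** 2 * var0)


for bg in (0.0, 5000.0):
    print(bg, [sigma_z(z, 7.5, 100.0, bg, 100) for z in (1.0, 4.0, 7.0)])
```

Here `laser_e_per_pulse` is held constant; in a full model it would also fall with distance, following the power-budget relations.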

2 Case Study 1: 50 × 30-Pixel Array with Fully Differential 3D Pixel

The sensor described in [1], called “3DEye”, is a 3D image sensor of 50 × 30 pixels and implements a fully differential structure in the pixel to realize the operations previously described in Fig. 1.

2.1 Sensor Design

The 3DEye pixel is shown in Fig. 2. The input is provided by two photodiodes: one is active and receives the incoming light, while the other is a blind dummy that keeps the circuit symmetric.

Applying the principle of the previous section to the 3DEye pixel, the INT signal defines the observation window during which the photogenerated current is integrated onto the capacitors, while the chopper at the input cancels the background signal by integrating the input with alternating sign.

Because a single accumulation lasts only hundreds of nanoseconds while the time between accumulations is relatively long, the INT switches are opened and the RES switches are closed between accumulations, to avoid common-mode drift at the input as well as undesired charge integration. The RES switches are closed together with INT only once, at the beginning of the accumulation sequence.

The architecture of Fig. 2 has some clear advantages that can be summarized in the following list:

-

The circuit is simple and clean and is easy to analyze

-

The fully-differential topology guarantees reliability and low offsets

-

The background cancellation is very effective

On the other side, there are some drawbacks that must be considered before scaling the architecture:

-

The area used by the dummy photodiode is somewhat “wasted”

-

The noise is quite high because kTC noise adds up at each accumulation

-

The output swing is limited due to undesired charge sharing and injection

The data used in the calculations refer to the measurement conditions of the 3DEye demonstrator and are summarized in Table 1.

2.1.1 Theoretical Limit

The graphs of Fig. 3 show the absolute and relative error of the measured distance with 32 accumulations, in the specific case of 20 fps operation, using the data of Table 1 and Eq. (10). Each chart presents the precision without background light and with 50 klx of background light. The integration times of the background, W0 laser and W1 laser components are taken as 4Tp, Tp and Tp·z/zmax, respectively, as stated before.

As can be seen, the error increases with distance because of the weakening of the reflected laser pulse; moreover, the background acts as a noise source, worsening the performance.

2.2 Circuit Analysis

The main source of uncertainty in the circuit of Fig. 2 is the kTC noise of the switches [5]. When a switch opens on a capacitor, it samples its thermal noise onto that capacitor; this noise is independent of the switch resistance and has a standard deviation of \( \sqrt{kT/C} \). To obtain the noise expression, each switch must be considered individually.
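The √(kT/C) law and its growth with repeated sampling can be quantified with a short sketch. The temperature T = 300 K is an assumption (it is not stated in the text), and treating repeated samples as independent, so that they add in quadrature, is the usual simplification; it illustrates why kTC noise becomes significant when it is added at every accumulation.

```python
import math

BOLTZMANN = 1.380_649e-23            # J/K (exact SI value)
ELECTRON_CHARGE = 1.602_176_634e-19  # C (exact SI value)


def ktc_noise_volts(capacitance_f: float, temperature_k: float = 300.0) -> float:
    """RMS voltage frozen on C when a switch opens: sqrt(kT/C)."""
    return math.sqrt(BOLTZMANN * temperature_k / capacitance_f)


def ktc_noise_electrons(capacitance_f: float, temperature_k: float = 300.0) -> float:
    """Same noise expressed as RMS charge in electrons: sqrt(kTC)/q."""
    return math.sqrt(BOLTZMANN * temperature_k * capacitance_f) / ELECTRON_CHARGE


def accumulated_ktc_volts(capacitance_f: float, n_samples: int,
                          temperature_k: float = 300.0) -> float:
    """n independent kTC samples add in quadrature, growing as sqrt(n)."""
    return math.sqrt(n_samples) * ktc_noise_volts(capacitance_f, temperature_k)


print(f"{ktc_noise_volts(10e-15) * 1e6:.0f} uV, {ktc_noise_electrons(10e-15):.1f} e-")
print(f"after 32 samples: {accumulated_ktc_volts(10e-15, 32) * 1e3:.2f} mV")
```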

The chopper switches reverse the sign four times per measurement, but as long as this happens while RES is active, their contribution is not added.

The reset switch samples its noise onto the integration capacitances during the initial reset, and again each time it opens before an accumulation. The total output noise due to the reset switch is:

The integration switches open twice per accumulation (laser and background), sampling noise onto the integration capacitances on one side and onto the photodiodes on the other; the latter is then cancelled by the RES switch. After the last accumulation, the INT switch remains closed while all the chopper switches open, so that the pixel can be read out. The final noise due to the integration switch is:

Another contribution is the thermal noise of the operational amplifier which, in the same way as the kTC noise, is sampled and added to the total output noise each time the INT switch opens. The fully-differential OTA noise (see [6]) can thus be expressed as:

2.3 Measurements and Outlook

Using Eqs. (6) and (7) for the calculation of the integrated charge during the two windows, Eqs. (8) and (9) for the shot noise, and Eqs. (11–13) for the electronics noise, it is possible to obtain all the necessary data in the case of medium illumination (50 lx or 0.073 W/m2, typical living room condition).

The expected values can then be compared with the values measured at different distances.

As can be seen from Table 2, the shot noise due to the quantum nature of electrons is lower than the electronics noise. Taking into account all noise sources and using the error propagation formula, the predicted distance uncertainty is in very good agreement with the measurements and shows a large electronics noise contribution.

An example of the sensor operating in a 3D imaging system is shown in Fig. 4: the sensor output for a hand showing the number “3” in front of a reference plane. Both the grayscale back-reflected light and the color-coded 3D map are given.

2.3.1 Improving the Sensor

The analytic tools developed in the previous paragraphs allow predicting the improvements obtainable by scaling the 3DEye architecture to deep submicron technologies.

Under the optimistic hypothesis that the same electronics can be implemented in a 30 μm pitch with a 30 % fill-factor, the performance of this hypothetical scaled sensor can be calculated, with the range extended to 7.5 m (50 ns laser pulse).

In Fig. 5 the forecast performance limit is plotted against distance: the reduction of the pixel size strongly degrades the precision.

With an integration capacitance CINT of 10 fF (almost the minimum that can be reliably implemented in a 0.18 μm technology) and an estimated photodiode capacitance of 70 fF, the electronics noise can also be taken into account. The integrated voltage and the shot noise in the case of medium illumination (50 lx or 0.073 W/m2, typical living room condition) are shown in Table 3 for two different distances.
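To put the 10 fF figure in perspective, the sketch below computes the ideal charge-to-voltage conversion gain q/C of the integration capacitance. It ignores the amplifier's finite gain and any loading by the photodiode capacitance, which are simplifying assumptions; the function names are illustrative.

```python
ELECTRON_CHARGE = 1.602_176_634e-19  # C


def conversion_gain_uv_per_electron(capacitance_f: float) -> float:
    """Ideal output voltage step per integrated electron, q / C, in microvolts."""
    return ELECTRON_CHARGE / capacitance_f * 1e6


def electrons_to_volts(n_electrons: float, capacitance_f: float) -> float:
    """Integrated voltage for a given number of electrons on capacitance C."""
    return n_electrons * ELECTRON_CHARGE / capacitance_f


for c_int in (10e-15, 9.5e-15):
    print(f"{c_int * 1e15:.1f} fF -> {conversion_gain_uv_per_electron(c_int):.2f} uV/e-")
```

A smaller CINT raises the voltage per electron, but as the text concludes, this alone cannot compensate for the weaker signal of a smaller pixel.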

These numbers show that the improvements in fill-factor and integration capacitance are not enough to compensate for the weaker signal. Scaling the pixel is therefore only possible with a different architecture.

3 Case Study 2: 160 × 120-Pixel Array with Two-Stage Pixel

The analysis of the previous section makes clear that the key point is reducing the electronics noise contribution. This can be achieved by pursuing several objectives:

-

Increase the number of accumulations, and speed up the readout of the sensor

-

Maximize the fill-factor and the gain of the integrator

-

Perform pixel-level correlated double sampling (CDS) to remove reset noise
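The benefit of pixel-level CDS can be illustrated with a small Monte Carlo simulation: the reset (kTC) level is frozen on the capacitor and appears in both samples, so their difference cancels it, leaving only the uncorrelated noise of the two samples. The noise magnitudes below are arbitrary illustrative values, not data from the sensor.

```python
import random
import statistics


def simulate_cds(n_trials: int = 10_000, reset_noise_v: float = 1e-3,
                 signal_v: float = 5e-3, read_noise_v: float = 1e-5, seed: int = 0):
    """Return (std of single sample, std of CDS difference, mean of CDS difference)."""
    rng = random.Random(seed)
    single, cds = [], []
    for _ in range(n_trials):
        reset_level = rng.gauss(0.0, reset_noise_v)    # kTC level frozen at reset
        sample_reset = reset_level + rng.gauss(0.0, read_noise_v)
        sample_signal = reset_level + signal_v + rng.gauss(0.0, read_noise_v)
        single.append(sample_signal)                   # no CDS: reset noise stays
        cds.append(sample_signal - sample_reset)       # CDS: reset noise cancels
    return statistics.stdev(single), statistics.stdev(cds), statistics.mean(cds)


std_single, std_cds, mean_cds = simulate_cds()
print(f"single: {std_single * 1e6:.1f} uV rms, CDS: {std_cds * 1e6:.1f} uV rms")
```

The residual CDS noise is about √2 times the per-sample noise, since two uncorrelated samples are subtracted.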

3.1 Sensor Design

In order to accumulate the integrated value without repeatedly sampling and summing noise on a small integration capacitance, the value must be transferred from the integrator to an accumulator stage with a larger capacitance. Such a circuit can be implemented in a single-ended fashion, reducing complexity and optimizing the SNR, and can also act as a CDS to remove most of the integrator’s noise contribution.

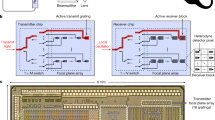

The resulting two-stage architecture is shown in Fig. 6: each stage has a reset signal, and the two stages are connected by a switch network. The network operates in three modes, identified by the control signals:

-

INT: the integrator is connected to C1 which is charged to VINT

-

FWD: C1 is connected to the 2nd stage and its value is added to the value in C2

-

BWD: C1 is connected to the 2nd stage and its value is subtracted from the value in C2

To obtain the I-TOF behavior, the phases must be arranged so that the difference between the end and the start of the observation window is calculated; this arrangement can also include the CDS of the first stage.

As Fig. 7 shows, a proper arrangement of FWD and BWD performs a true CDS and background light removal: the waveforms can be repeated without resetting the second stage, thereby accumulating the signal on VOUT. As a result, only the portion of the laser pulse that arrives between the first and second falling edges of the INT signal is integrated and added to the output.
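The net effect of the FWD/BWD sequence can be captured in an idealized model. This sketch does not reproduce the actual timing of Fig. 7: it assumes that each accumulation forwards one first-stage (CDS) voltage step containing the laser slice plus background and backwards one equally long background-only step, each transferred with gain C1/C2. The capacitances in the example are those of the implemented pixel (C1 = 65 fF, C2 = 130 fF); the function name and voltages are illustrative.

```python
def two_stage_accumulate(accumulations: int, v_laser: float, v_background: float,
                         c1_f: float, c2_f: float) -> float:
    """Idealized second-stage output after repeated FWD/BWD transfers.

    v_laser, v_background: first-stage (CDS) voltage steps for the laser slice
    and for the background collected in an equally long window.
    """
    gain = c1_f / c2_f            # charge transfer C1 -> C2
    v_out = 0.0                   # second stage reset only once, at the start
    for _ in range(accumulations):
        v_out += gain * (v_laser + v_background)   # FWD: window with laser + background
        v_out -= gain * v_background               # BWD: background-only window
    return v_out


# C1 = 65 fF and C2 = 130 fF give a gain of 0.5 per transfer
print(two_stage_accumulate(4, 0.01, 0.2, 65e-15, 130e-15))
```

Only the laser term survives and grows linearly with the number of accumulations, while the background term cancels at every cycle.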

Figure 8 shows the block schematic of the sensor architecture, which includes logic for addressing, column amplifiers for fast sampling and readout, and driving electronics for digital and analog signal distribution.

3.2 Circuit Analysis

The same noise analysis done on the fully-differential pixel can be performed for the two-stage pixel. The contributions to be considered are the first and second stage kTC noise, and the first and second stage OTA noise. Thanks to the CDS operation, the first stage kTC noise is cancelled, so only the second stage kTC noise is relevant. There are three terms in the second stage kTC noise:

-

one-shot sampling on C2 when resetting the second stage

-

kTC due to INT opening on C1 capacitor, 4 times for each accumulation

-

kTC due to FWD/BWD on the “-” node of the OTA, 4 times for each accumulation

The total kTC noise is then:

As for the OTA noise, in the first stage it is given by the OTA noise sampled onto C1 at the end of each INT signal, while the OTA noise sampled during the reset is removed by the CDS. The equation takes into account a single-input amplifier and the transfer function C1/C2 to the output. The second stage samples the OTA noise once during reset, and then at each FWD/BWD opening, 4 times for each accumulation.

3.2.1 Two-Stage Pixel Simulation

The pixel is implemented with CINT = 9.5 fF, C1 = 65 fF and C2 = 130 fF, while the estimated photodiode capacitance at 0.9 V bias is CPD = 80 fF. A complete distance measurement simulation can be carried out under the conditions of Table 4, which refer to the final testbench for the sensor.

Using the parameters of Table 4, together with the calculations at the beginning of the chapter and Eqs. (14) and (15), a performance forecast can be extracted. Figure 9 plots the calculated distance against the ideal one: at small distances the curves with higher numbers of accumulations saturate (W0 saturates first), while the slope is smaller than that of the ideal characteristic because of the non-ideal time response of the integrator. Figure 10 shows the resulting precision of the distance measurement.

3.3 Test Setup and Measurement Results

The sensor, implemented in a CMOS 0.18 μm technology, is visible in Fig. 11: a detail of the pixels is shown in the inset.

The chip measures 5 × 5 mm2 and contains 160 × 120 pixels with a pitch of 29.1 μm, implementing the circuit of Fig. 6.

3.3.1 Description of Setup

The camera board is mounted on an optical bench, with the 905 nm laser source installed immediately above the optics to ensure good matching between the illuminated and the captured scene, as shown in Fig. 12. The source emits 50 ns-wide pulses at a maximum duty-cycle of 0.1 %; each of its three modules has a peak power of 75 W, for a total peak power of 225 W.
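The relation between peak power, pulse width and average power is simple arithmetic, sketched below. If the 26 to 89 mW average illuminator power reported for the distance measurements is optical power (an assumption), the second function estimates the corresponding pulse repetition rate; both function names are illustrative.

```python
def average_optical_power(peak_power_w: float, pulse_width_s: float,
                          pulse_rate_hz: float) -> float:
    """Average power of a pulse train: peak power times duty-cycle."""
    return peak_power_w * pulse_width_s * pulse_rate_hz


def pulse_rate_for_average(avg_power_w: float, peak_power_w: float,
                           pulse_width_s: float) -> float:
    """Pulse repetition rate that yields a given average power."""
    return avg_power_w / (peak_power_w * pulse_width_s)


# 225 W peak, 50 ns pulses; a 0.1 % duty-cycle allows at most a 20 kHz repetition rate
max_rate_hz = 1e-3 / 50e-9
print(average_optical_power(225.0, 50e-9, max_rate_hz))   # upper bound, W
print(pulse_rate_for_average(89e-3, 225.0, 50e-9))        # pulses/s for 89 mW
```

At the 0.1 % limit the average power would be 225 mW, so 89 mW corresponds to roughly 7.9 k pulses per second, well inside the duty-cycle budget.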

A large white panel with an estimated reflectivity of 40 % is used as the target and placed in front of the camera-laser system.

3.3.2 Distance Measurements

The charts of Figs. 13 and 14 show the measured distance and the achievable precision, respectively, for different numbers of accumulations. Going from 16 to 128 accumulations, the frame rate decreases from 65.0 fps to 27.8 fps, while the average illuminator power increases from 26 to 89 mW.

The measurements agree well with the estimates of the previous paragraphs, once the reduced reflectivity of the target used in the experiments is taken into account.

A scene showing a teddy bear and an apple, acquired averaging several frames for a total acquisition time of 7 s, is shown in Fig. 15.

4 Correlation I-TOF 3D Imaging Method

In the time-of-flight correlation method, the scene is illuminated by an optical signal modulated with a phase-shifted rectangular sequence, and a small part of it is reflected back to the sensor. In each pixel, the backscattered light is then correlated with an internally generated signal, which is also rectangular but has a fixed phase. The correlation function of these two (ideally rectangular) signals has a triangular shape and is formed by N discrete phase steps applied to the illumination source. Since digital devices are used for signal generation, it is convenient for N to be a power of two; here N = 16 is chosen. The continuous-time correlation function is defined as

where sREC represents the received optical power and sCLK the internally generated signal. To sample the correlation triangle at N points, the phase of the modulation signal that controls the light source is shifted in equally spaced phase steps, so that \( s_{MOD}(t) = s_{CLK}\left(t - \frac{n}{N f_{MOD}}\right) \), with n as the phase step counter. The correlation (multiplication and integration) is performed separately in each pixel and is discussed in detail in the next section.

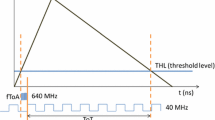

To calculate the distance, the phase displacement φTOF is essential. It is extracted by applying a discrete Fourier transform (DFT) to the correlation triangle, thereby recovering the phase of the fundamental wave. Figure 16 depicts the control signal timings as well as the differential pixel output ΔVOUT, which is the correlation triangle function.
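A minimal sketch of the DFT-based phase extraction follows, assuming ideal rectangular signals (hence an ideal triangle), N = 16 and fMOD = 10 MHz as in the test setup. Mapping the phase to distance with z = (φ/2π)·c/(2fMOD) is the standard continuous-wave TOF relation, consistent with the 15 m non-ambiguity range quoted for 10 MHz; the sign convention linking the echo delay to the peak position depends on the implementation and is assumed here, as are all function names.

```python
import cmath
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def phase_step_delays(n_steps: int, f_mod_hz: float) -> list[float]:
    """Delays n / (N * f_MOD) applied to the modulation signal, n = 0 .. N-1."""
    return [n / (n_steps * f_mod_hz) for n in range(n_steps)]


def fundamental_phase(samples: list[float]) -> float:
    """Phase of DFT bin 1 of the sampled correlation, mapped to [0, 2*pi)."""
    n_steps = len(samples)
    x1 = sum(v * cmath.exp(-2j * math.pi * n / n_steps) for n, v in enumerate(samples))
    return (-cmath.phase(x1)) % (2.0 * math.pi)


def phase_to_distance(phase_rad: float, f_mod_hz: float) -> float:
    """z = (phi / 2*pi) * c / (2 * f_MOD); c / (2 * f_MOD) is the non-ambiguity range."""
    return phase_rad / (2.0 * math.pi) * SPEED_OF_LIGHT / (2.0 * f_mod_hz)


def ideal_triangle(n_steps: int, peak_index: int) -> list[float]:
    """Ideal circular correlation triangle: 1 at peak_index, 0 half a period away."""
    return [1.0 - 2.0 * min((n - peak_index) % n_steps, (peak_index - n) % n_steps) / n_steps
            for n in range(n_steps)]


f_mod = 10e6                                       # 10 MHz, as in the test setup
print(phase_step_delays(16, f_mod)[1])             # 6.25 ns per phase step
phi = fundamental_phase(ideal_triangle(16, 4))     # peak at step 4 -> phase pi/2
print(phi, phase_to_distance(phi, f_mod))          # about 3.75 m of a 15 m range
```

Using only the fundamental makes the estimate insensitive to the exact triangle amplitude and offset; note that a phase very close to zero can wrap to just below 2π.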

The corresponding fundamental wave of the correlation triangle is also sketched. All control signals shown in Fig. 16 are generated and controlled by an FPGA and applied to the illumination source and to the sensor as shown in Fig. 17. Each phase step begins with a reset that provides the same initial conditions before every integration. After the reset, an integration interval defines the differential voltage. Finally, a read cycle is applied for readout; the output voltage of the corresponding phase step is applied to the sensor output only during this read cycle. An additional control signal (refresh), which eliminates the extraneous light contributions, interrupts the integration process every 1.6 μs, thereby restoring the initial common-mode voltages (see the pixel output signals in Fig. 16).

4.1 Sensor Architecture

The full optical distance measurement system consists of three main components located on separate PCBs: the sensor itself as an optoelectronic integrated circuit (OEIC); the illumination source, implemented as a red laser for single-pixel characterisation and as an LED source for full-sensor characterisation; and an FPGA PCB for control and signal processing. Figure 17 shows a block diagram of these units and their interconnection. As mentioned in the previous section, the transmitted optical signal must be shifted through 16 phase steps in order to obtain a correlation triangle, while the signal applied to the correlation circuit in each pixel remains the same for all 16 phase steps. Previous implementations of this approach used a bridge correlator circuit for background light extinction (BGLX), as reported in [7], or an operational amplifier, as in [8]. Both designs suffered from relatively high power consumption, and the latter also from a large pixel area of 109 × 241 μm². In the following, a radical improvement in terms of background light suppression capability as well as power consumption is presented.

The following sections describe two TOF pixel sensors, reported in [9] and [10], with different implementations of BGLX techniques. The first approach, consisting of 2 × 32 pixels configured in a dual-line architecture, is described in Sect. 5. Sect. 6 presents another BGLX technique, implemented in a 16 × 16 pixel array. Both sensors are fabricated in a 0.6 μm BiCMOS technology including photodiodes with anti-reflection coating; however, only CMOS devices are used in the circuit design of both chips.

4.2 Signal and Noise Analysis

In the TOF sensor, the photodiode converts the received optical signal into a photocurrent. The generated current IMOD is a function of the photodiode’s responsivity R and of the light power Ppix of the received optical signal (IMOD = R·Ppix). The relationship between the received light power Ppix at the pixel and the transmitted light power Plaser is

where atmospheric attenuation is neglected. In Eq. (17), ρ is the reflectivity of the target, w is the edge length of the square pixels, zTOF is the distance between pixel and object and FF is the optical pixel fill factor. With a responsivity R of 0.33 A/W at 850 nm and a received light power Ppix in the nanowatt range, a photocurrent IMOD in the nanoampere range can be expected. This current is accompanied by the photon noise current \( i_{MOD} = \sqrt{q I_{MOD} / T_{\Delta}} \), where q is the electron charge and TΔ is the effective integration time of a complete correlation triangle covering all 16 phase steps. This photon noise current iMOD is the limiting factor of optical systems [11]. Further noise components due to background light iBGL, bias current i0, A/D conversion iA/D, the switching process during correlation (kT/C) ikT/C and other electrical noise contributions iEL, such as output buffer noise, substrate noise and power supply noise, can be referred to the input of the circuit. At the cathode of the photodiode DPD, the signal-to-noise ratio SNRΔ of a single correlation triangle can be given by

The dominant terms of the above equation are the kT/C noise and the noise current due to the bias, which is expected to be of the same order of magnitude as the switching noise. The measurement accuracy is primarily determined by the bias current I0, since this current is about 5 to 10 times higher than the current IBGL due to background light. Note that Eq. (18) depends implicitly on the total integration time TΔ through the noise components; however, it is completely independent of the number of phase steps within TΔ. The standard deviation of the distance measurement is given by

This equation uses SNRΔ as the characteristic figure for the influence of stochastic error sources. For imperfect rectangular modulation, the prefactor in Eq. (19) is not valid and must be adapted. With this equation, the measurement accuracy at a given distance can be predicted in advance.
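The noise expressions can be evaluated numerically. The sketch below implements the photon noise current iMOD = √(q·IMOD/TΔ) given above and, as an assumption about the form of Eq. (18), combines it with the other input-referred noise currents in quadrature. The example values (3 nW received power, TΔ = 1 ms, extra noise currents) are illustrative only.

```python
import math

ELECTRON_CHARGE = 1.602_176_634e-19  # C


def shot_noise_current(current_a: float, integration_time_s: float) -> float:
    """Photon noise current i = sqrt(q * I / T)."""
    return math.sqrt(ELECTRON_CHARGE * current_a / integration_time_s)


def snr_triangle(i_mod_a: float, integration_time_s: float,
                 other_noise_currents_a: list[float]) -> float:
    """SNR of one correlation triangle, assuming independent noise terms add in quadrature."""
    noise_sq = shot_noise_current(i_mod_a, integration_time_s) ** 2
    noise_sq += sum(i ** 2 for i in other_noise_currents_a)
    return i_mod_a / math.sqrt(noise_sq)


i_mod = 0.33 * 3e-9        # R = 0.33 A/W times an illustrative 3 nW received power
t_delta = 1e-3             # illustrative total integration time for 16 phase steps
print(shot_noise_current(i_mod, t_delta))
print(snr_triangle(i_mod, t_delta, []), snr_triangle(i_mod, t_delta, [2e-12, 1e-12]))
```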

5 Case Study 3: 2 × 32 Dual-Line TOF Sensor

In this section, a dual-line TOF sensor with a 2 × 32 pixel array and a total chip area of 6.5 mm2 (see Fig. 18) is presented. The chip also includes output buffers for driving the measurement equipment, a logic unit for processing the smart bus signals, and a phase-locked-loop (PLL) based ring oscillator for optional on-chip generation of the shifted modulation signals, combined with a 50 Ω driver dedicated to the illumination source. The signals required by the chip can be generated internally by the PLL or by an FPGA. The main drawback of the internally generated signals is that substrate and power supply noise affect the performance of the sensor. Each pixel covers an area of 109 × 158 μm2, including an optically active area of 100 × 100 μm2, which results in a fill-factor of ~58 %. The pixel consumes 100 μA at a supply voltage of 5 V, and the internal OPA dominates the pixel power consumption. The power consumption of the whole chip is nearly 80 mW, of which 92 % is used by the output buffers and the on-chip phase generator.
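The quoted fill-factor follows directly from the two areas; a one-line check with a hypothetical helper:

```python
def fill_factor(active_w_um: float, active_h_um: float,
                pixel_w_um: float, pixel_h_um: float) -> float:
    """Ratio of optically active area to total pixel area."""
    return (active_w_um * active_h_um) / (pixel_w_um * pixel_h_um)


print(f"{fill_factor(100, 100, 109, 158):.1%}")   # 100 x 100 um active in 109 x 158 um
```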

5.1 Sensor Circuit

The circuit of a single pixel of the dual-line sensor is shown in Fig. 19. It features fully differential correlation based on the double-correlator concept presented in [12]. The received photocurrent IPH is directed through transistors T1 and T2 to the corresponding integration capacitors CI1 and CI2. Dummy transistors T1d and T2d were added in the path to compensate the charge injected during the integration interval. Two memory capacitors CM1 and CM2, together with transistors T3–T9, form the BGLX circuit. The BGLX process is controlled by the \( \overline{bglx} \) signal, which is derived from the refresh signal. During the read process, the voltages at the integration capacitors CI1 and CI2 are buffered to the output by activating the select signal. Throughout the readout process, the OPA output voltage must also be defined; this is done by transistor T10 and an XNOR logic gate. Transistor T10 and the XNOR are also used during the reset cycle, where they short the integration capacitors. The 2-stage OPA must provide an output swing of ±1.5 V with a fast slew rate to regulate the cathode voltage of the photodiode DPD according to VPD at its positive input. An OPA gain of 68 dB and a transit frequency of 100 MHz are adequate for the required regulation.

Before integration starts, a reset is applied to the circuit by forcing Φ1 and Φ2 high and \( \overline{bglx} \) low. Thereby CI1 and CM1, as well as CI2 and CM2, are cleared via T1–T6 in combination with activating T9 and T10. After the reset, the accumulation of the photocurrent IPH starts by setting Φ1 = \( \overline{\Phi_{2}} \) and \( \overline{bglx} \) high. Depending on the level of Φ1 and Φ2, and thus on the modulation clock, the photogenerated current is collected either in CI1 or in CI2. During integration, the two memory capacitors CM1 and CM2 are switched in parallel; hence, assuming all four capacitors are of the same size, half of the photogenerated charge is stored in them. The integrated photocurrent IPH consists of two parts, due to the modulated light IMOD and to the background light IBGL. The charge stored in each memory capacitor is proportional to (IBGL + IMOD)/2, while CI1 and CI2 each store an identical amount proportional to IBGL/2 plus the portion of IMOD resulting from the correlation.

The BGLX technique is necessary because the current due to background light IBGL is in the μA range for light conditions of around 100 klx, and thus more than 1000 times larger than the modulated-light component IMOD, which is in the nA or even pA range. The BGLX process takes place in periodic refresh intervals lasting one system clock period. During these intervals the \( \overline{bglx} \) signal is set low, while the modulation signals Φ1 and Φ2 continue to operate as during the integration period. The common node of DPD, CM1 and CM2 is connected to VPD because of the low state of the \( \overline{bglx} \) signal. As in the integration phase, transistors T1 and T2 continue to pass the photocurrent to the integration capacitors CI1 and CI2 during the respective half-cycles. Additionally, transistors T3 and T4 are driven by the same clock as T1 and T2. The charge on the capacitors CM1 and CM2 is sensed by the OPA through the low-ohmic switching transistors T5 and T6, and the OPA then compensates with ICOMP for the charge applied to its differential input. This process subtracts the charges due to ambient light from the integration capacitors CI1 and CI2. Since each memory capacitor is loaded with a charge proportional to IBGL/2 + IMOD/2, apart from the background charge only a part originating from IMOD/2 is subtracted from the integration capacitors. The result is a common-mode-corrected integration on both capacitors after each BGLX cycle, enabling background light suppression of up to 150 klx.
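The charge bookkeeping of one BGLX refresh can be illustrated with the amounts stated above, in arbitrary units. This is an idealized sketch: it treats the subtraction as instantaneous and ideal, ignores capacitor mismatch, and introduces a hypothetical `correlation_fraction` for the share of the modulated charge steered to CI1.

```python
def bglx_refresh(q_bg: float, q_mod: float, correlation_fraction: float):
    """Idealized charge bookkeeping for one BGLX refresh (arbitrary charge units).

    correlation_fraction: hypothetical share `a` of the modulated charge steered
    to CI1 by Phi1; the remaining 1 - a goes to CI2.
    Returns (differential before, differential after, common mode after).
    """
    a = correlation_fraction
    ci1 = q_bg / 2 + a * q_mod          # background half plus correlated part
    ci2 = q_bg / 2 + (1 - a) * q_mod
    q_mem = (q_bg + q_mod) / 2          # charge held by each memory capacitor
    diff_before = ci1 - ci2
    ci1 -= q_mem                        # OPA compensation subtracts the memory charge
    ci2 -= q_mem
    return diff_before, ci1 - ci2, (ci1 + ci2) / 2


# Background 1000x larger than the modulated charge, as at high illuminance
print(bglx_refresh(q_bg=1000.0, q_mod=1.0, correlation_fraction=0.7))
```

The differential (correlation) signal is unchanged while the large common-mode background is removed, which is what keeps the integration capacitors from saturating.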

5.2 Test Setup and Measurement Results

As depicted in Fig. 17 the setup for characterizing the sensor performance consists of three components. The illumination PCB is mounted on a small aluminium breadboard in front of the PCB carrying the TOF sensor. For the dual line pixel sensor a 1-inch lens with a focal length of 16 mm and an F number of 1.4 was used. Since a strong background light source for illuminating a complete sensor’s field of view up to 150 klx was not available, single pixel measurements were done for characterizing the pixel under high background light conditions. In this case a collimated light from a red laser at 650 nm with an average optical output power of 1 mW was employed as an illumination source. Furthermore the usage of a laser spot illumination would be of advantage due to a high modulation bandwidth of the laser diode. The applied modulation frequency was f MOD = 10 MHz, leading to a non-ambiguity range of 15 m. For measurements with the dual line TOF pixel sensor a field of illumination according to the sensor’s field of view (FOV) is necessary. Therefore, a laminar illuminated FOV is provided by a modulated LED source with a total optical power of nearly 900 mW at 850 nm. The LED source consists of four high-power LED devices of type SFH4230 in combination with 6° collimator lenses each, whereby each LED is supplied with 1 A at 10 MHz and emits an optical power of 220 mW. Each LED has its own driver circuit, consisting of a buffer which drives the capacitive load of the gate of an NMOS switching transistor with a low on-resistance and a serial resistance for operating point stabilisation. Due to the angular field of illumination, this illumination source has a lower light power compared to the laser spot illumination source used for the characterisation of a single pixel. By means of the 6° collimator lenses an optical power density of 8 W/m2 is achieved at a distance of 1 m. Therefore, eye safety conditions are achieved for distances d > 10 cm. 
A circular cut-out between the LED pairs was made for mounting a lens system along the optical axis. The modulation clock for the illumination source is provided by the FPGA; a 50 Ω terminated differential input converts it into a single-ended signal for the level converter, which in turn drives the LED driver circuits.
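Two figures quoted in this setup, the 15 m non-ambiguity range and the nearly 900 mW LED source power, follow from simple arithmetic. The Python sketch below reproduces it, assuming continuous-wave modulation (the light travels to the target and back, so one modulation period covers c/(2·fMOD) of target distance) and four identical emitters; the function names are hypothetical.

```python
C = 299_792_458.0  # speed of light in vacuum (m/s)


def non_ambiguity_range(f_mod_hz: float) -> float:
    """Largest distance measurable without phase wrapping: light travels
    to the target and back, so one period covers c / (2 * f_mod)."""
    return C / (2.0 * f_mod_hz)


def total_led_power(n_leds: int, power_per_led_w: float) -> float:
    """Total optical output of n identical LEDs."""
    return n_leds * power_per_led_w


print(f"non-ambiguity range: {non_ambiguity_range(10e6):.2f} m")  # 14.99 m
print(f"LED source power: {total_led_power(4, 0.220)*1e3:.0f} mW")  # 880 mW
```

Doubling fMOD would halve the non-ambiguity range, which is why 10 MHz is a reasonable choice for the 0.1 to 3.2 m range covered in the measurements.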

As an object, a 10 × 10 cm² non-cooperative white paper target with about 90 % reflectivity was used for the single pixel measurements. The target was moved by a motorized trolley over a linear range of 0.1 to 3.2 m; the upper limit is set by the size of the laboratory and hence the length of the linear axis. For characterizing the dual line TOF pixel sensor, a moving white paper target and a laboratory scene were used. The robustness to extraneous light was verified with a cold-light source with a colour temperature of 3200 K that illuminated the white paper target with up to 150 klx. After digitization, the samples of the correlation triangle are passed to the DFT block in the FPGA, which extracts the amplitude and phase of the fundamental wave. Once the phase has been acquired, the distance can be calculated, provided the measurement conditions are known, as explained in [12].

5.2.1 Single Pixel Characterization

For characterizing a single pixel, 100 distance measurements were recorded at each position, with a step size of 10 cm and a measurement time of 50 ms per point. The collected data were analysed to obtain the standard deviation σ_zTOF and the linearity error e_lin. Figure 20a depicts the results of these measurements. The accuracy is degraded in the near field due to defocusing of the sensor. Excluding this region, a standard deviation of a few centimetres and a linearity error within ±1 cm are characteristic of this measurement range. The impact of background light, which generates a photocurrent about 1000 times larger than the photocurrent due to the modulated light, is depicted in Fig. 20b for measurements at a distance of 1 m, where Δz is the relative displacement. The best result, Δz = 10 cm at 150 klx, is achieved by setting the BGLX period to 0.4 μs, as depicted in the figure. It should be mentioned again that no optical filters were used to suppress the undesired light.
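The two figures of merit can be computed from the repeated readings as sketched below. The standard deviation is taken per distance step; for the linearity error we assume it is the deviation of the per-step mean from a least-squares straight line through all steps, which is one common definition and not necessarily the exact one used for Fig. 20a. The data in the example are synthetic, not measured, and the function name is hypothetical.

```python
import numpy as np


def sigma_and_linearity(ref_m: np.ndarray, meas_m: np.ndarray):
    """ref_m: reference target distances, shape (n_steps,).
    meas_m: repeated distance readings, shape (n_steps, n_repeats).
    Returns the per-step standard deviation and linearity error (both in m)."""
    sigma = meas_m.std(axis=1, ddof=1)              # repeatability at each step
    mean = meas_m.mean(axis=1)
    slope, offset = np.polyfit(ref_m, mean, 1)      # least-squares straight line
    e_lin = mean - (slope * ref_m + offset)         # deviation from that line
    return sigma, e_lin


# Synthetic example only (not measured data): 10 cm steps, 100 readings each
rng = np.random.default_rng(0)
ref = np.linspace(0.1, 3.1, 31)
meas = 1.02 * ref[:, None] + 0.05 + rng.normal(0.0, 0.01, size=(31, 100))
sigma, e_lin = sigma_and_linearity(ref, meas)
print(f"max sigma = {sigma.max()*100:.2f} cm, max |e_lin| = {np.abs(e_lin).max()*100:.2f} cm")
```

Fitting a line rather than comparing against the reference directly separates gain and offset errors, which a calibration can remove, from the non-linearity that remains.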

5.2.2 Dual Line Sensor Array Characterization

For the measurements with the multi pixel sensor, the LED illumination source described above was used. The measured standard deviation is about 3.5 times higher than in the single pixel results with the red laser, as a direct consequence of the lower received power. The linearity error also increases, to within ±2 cm. Measurement results with the 2 × 32 pixel sensor are illustrated in Fig. 21, for which the 10 × 10 cm² white paper target was moved back out of the sensor axis. For each position 100 records were sampled, with a total measurement time of 50 ms per point. In this depiction, pixels with amplitudes below 1 mV were masked out. The amplitude value at a distance of 3.5 m corresponds to a current IMOD = 23 pA.
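Masking weak pixels, as done for Fig. 21, amounts to a threshold on the amplitude of the fundamental wave. A minimal sketch, assuming per-pixel distance and amplitude arrays shaped like the 2 × 32 sensor; the array layout, the example frame and the function name are hypothetical.

```python
import numpy as np


def mask_weak_pixels(distance_m, amplitude_v, threshold_v: float = 1e-3):
    """Return a copy of distance_m in which every pixel whose fundamental
    amplitude is below threshold_v (default 1 mV) is set to NaN."""
    distance_m = np.asarray(distance_m, dtype=float)
    amplitude_v = np.asarray(amplitude_v, dtype=float)
    return np.where(amplitude_v >= threshold_v, distance_m, np.nan)


# Hypothetical 2 x 32 frame: the target only covers a few pixels
distance = np.full((2, 32), 2.0)
amplitude = np.zeros((2, 32))
amplitude[:, 10:14] = 5e-3
masked = mask_weak_pixels(distance, amplitude)
print(np.count_nonzero(~np.isnan(masked)), "valid pixels")  # 8 valid pixels
```

Using NaN rather than zero keeps masked pixels out of any later averaging and lets plotting tools leave them blank.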

6 Case Study 4: 16 × 16 Pixel Array TOF Sensor Design

In this section a 16 × 16 TOF sensor is presented; a micrograph of the chip is shown in Fig. 22. The sensor is based on a different approach which, like the one presented before, can suppress background light of up to 150 klx. The whole chip covers an area of about 10 mm², and a single pixel, consisting of the photodiode and the readout circuitry, has a size of 125 × 125 μm² and an optical fill factor of ~66 %. In addition, some pixel logic, a reference pixel and a 12-bit analogue-to-digital converter are included on the chip; the A/D converter supports readout at 1 MS/s. The offset caused by signal propagation delays in the measurement setup can be corrected by means of the reference pixel, which is located outside the array. All 256 + 1 pixels are addressed by the select line. The readout is managed by a shift register clocked by the select signal, so that a “1” is passed from pixel column to pixel column [13]. For digitization, the output voltage can be converted either by the built-in ADC or by an external ADC. Since no OPA is used for BGLX, the current consumed per pixel is 50 times smaller than in the 2 × 32 pixel sensor presented above, amounting to only 2 μA at a supply voltage of 5 V. A disadvantage of this low current consumption is a reduced bandwidth of the pixel circuit; however, the bandwidth is still high enough to meet the TOF requirements at 10 MHz square-wave modulation.
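The readout sequencing, where a single “1” is shifted along the chain so that one pixel at a time is selected, and the per-pixel power budget can be sketched as follows. This is a behavioural model only: we assume each stage of the register enables one of the 256 + 1 pixels, and the array power estimate assumes all pixels draw 2 μA simultaneously while ignoring the ADC and logic. All names are hypothetical.

```python
from typing import Iterator, List


def one_hot_select(n_stages: int) -> Iterator[List[int]]:
    """Model of a one-hot shift register: a single '1' enters stage 0 and
    moves one stage further on every select clock, so exactly one stage
    (one pixel) is enabled at a time."""
    state = [1] + [0] * (n_stages - 1)
    for _ in range(n_stages):
        yield list(state)
        state = [0] + state[:-1]   # shift the token by one stage


def pixel_power_w(current_a: float, supply_v: float) -> float:
    """Static power of one pixel: P = I * V."""
    return current_a * supply_v


N_STAGES = 256 + 1  # 16 x 16 array plus the reference pixel
order = [s.index(1) for s in one_hot_select(N_STAGES)]
p_pixel = pixel_power_w(2e-6, 5.0)         # 2 uA at 5 V -> 10 uW
p_array = N_STAGES * p_pixel               # rough, all pixels biased at once
print(f"{p_pixel*1e6:.1f} uW per pixel, {p_array*1e3:.2f} mW for {N_STAGES} pixels")
```

A one-hot register needs no address decoder inside the array, which fits the small pixel area budget.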

6.1 Sensor Circuit

Figure 23 depicts the single pixel circuit. As in the first approach, the pixel performs the correlation operation with a reset at the beginning of each phase step, an integration interval of several fundamental clock cycles afterwards, and a readout at the end. The photocurrent generated by the photodiode DPD is directed through transistors T6 and T7 to the integration capacitors C1 and C2. Both transistors are switched by the modulating clock signals Φ1 and Φ2 while the refresh signal is at low level. During the integration, a refresh process occurs periodically to remove the background light contribution stored on capacitors C1 and C2. After 95 μs of repeated alternation between correlating integration and refresh, the differential voltage ΔVOUT is read out. For this purpose, the read signal forces Φ1 and Φ2 to ground, which diverts IPH through T5. With the pixel's select signal, the output buffers are enabled and the differential output voltage ΔVOUT is read. During the integration process, transistors T10 and T11 regulate the voltage at the cathode of the photodiode to a constant value, thereby suppressing the influence of the photodiode capacitance CPD. Transistor T10 needs to be biased with I0 = 1 μA to ensure a sufficiently large regulation bandwidth of 100 MHz for the 10 MHz square-wave modulation signals. This current is supplied by an in-pixel current source and mirrored by transistors T11–T13. Since the current is mirrored, another 1 μA is drawn by the amplifying transistor T11, so that the total pixel consumption is only 2 μA.
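The correlating integration can be illustrated with an idealized behavioural model: the photocurrent is steered into C1 while Φ1 is high and into C2 while Φ2 is high, so the differential voltage follows the correlation triangle as the echo delay changes, while background light adds the same charge to both capacitors. The square-wave echo, the 100 fF capacitance, the current values (chosen with the roughly 1000:1 background ratio mentioned for the single pixel measurements) and the function name are assumptions; saturation and refresh are not modelled.

```python
import numpy as np


def integrate_pixel(delay_frac, step_frac, i_mod_a, i_bg_a,
                    f_mod_hz=10e6, n_periods=100, c_int_f=100e-15,
                    samples_per_period=1000):
    """Idealized model of the correlating integration: the photocurrent is
    steered into C1 while Phi1 is high and into C2 while Phi2 is high.
    Returns the voltages (V1, V2) on C1 and C2; no saturation, no refresh."""
    n = np.arange(n_periods * samples_per_period)
    frac = (n % samples_per_period) / samples_per_period   # position in period
    dt = 1.0 / (samples_per_period * f_mod_hz)
    echo_on = ((frac - delay_frac) % 1.0) < 0.5            # delayed square echo
    i_ph = i_bg_a + i_mod_a * echo_on                      # background + signal
    phi1 = ((frac - step_frac) % 1.0) < 0.5                # phase-stepped clock
    phi2 = ~phi1                                           # complementary clock
    v1 = np.sum(i_ph[phi1]) * dt / c_int_f
    v2 = np.sum(i_ph[phi2]) * dt / c_int_f
    return v1, v2


for delay in (0.0, 0.25, 0.5):
    v1, v2 = integrate_pixel(delay, 0.0, i_mod_a=1e-9, i_bg_a=1e-6)
    print(f"delay {delay:.2f} T: dV = {(v1 - v2)*1e3:+.1f} mV, "
          f"common mode = {(v1 + v2)/2:.1f} V")
```

The differential voltage goes from +50 mV through zero to −50 mV as the delay grows, while the common mode reaches tens of volts. Such values are of course not physical; they show why the background charge has to be removed repeatedly during integration.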

In this circuit, BGLX is performed by means of the refresh signal. After some integration cycles, refresh is activated to carry out the background light extinction. At the same time, Φ1 and Φ2 are forced to ground. As a consequence, the transistor pairs T1 and T2 as well as T6 and T7 are switched into their high-impedance state and isolate the integration capacitors. The photocurrent IPH, which is still being generated, is thereby bypassed through transistor T5. Because the refresh signal is high, transistors T3 and T4 connect the integration capacitors anti-parallel, as described in [14]. Hence, the charge caused by background light is extinguished while the differential information in each capacitor is kept, as depicted in Fig. 16. In this approach, the refresh time interval is only half of a clock cycle of the dual line sensor approach described earlier. After the background light extinction process, the refresh signal is forced to low level and the integration can be continued.
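Why the anti-parallel connection removes the background while keeping the signal can be checked with an ideal charge-conservation argument: if two equal capacitors holding V1 and V2 are connected top plate to bottom plate, the charge on the two shared nodes leaves ±(V1 − V2)/2 across them, so the common (background) part vanishes and the difference V1 − V2 survives. The sketch below assumes ideal, equal capacitors with voltages referred to a common node, no parasitics and no charge injection, and uses hypothetical per-interval voltage increments; it illustrates the principle, not the transistor-level circuit of Fig. 23.

```python
def antiparallel_refresh(v1: float, v2: float) -> tuple[float, float]:
    """Ideal anti-parallel connection of two equal capacitors.
    Charge conservation on the two shared nodes gives +/-(v1 - v2)/2:
    the common-mode part is removed, the difference v1 - v2 is kept."""
    half_diff = 0.5 * (v1 - v2)
    return half_diff, -half_diff


def integrate_with_refresh(dv_signal: float, dv_background: float, n_cycles: int):
    """Alternate integration and refresh. In every integration interval both
    capacitors collect the same background increment; C1 additionally collects
    the (hypothetical) signal increment. Returns the final differential voltage
    and the largest common-mode voltage reached before any refresh."""
    v1 = v2 = 0.0
    peak_cm = 0.0
    for _ in range(n_cycles):
        v1 += dv_background + dv_signal
        v2 += dv_background
        peak_cm = max(peak_cm, 0.5 * (v1 + v2))
        v1, v2 = antiparallel_refresh(v1, v2)
    return v1 - v2, peak_cm


diff, peak_cm = integrate_with_refresh(dv_signal=0.01, dv_background=0.5, n_cycles=20)
print(f"differential = {diff:.3f} V, peak common mode = {peak_cm:.3f} V")
# differential = 0.200 V, peak common mode = 0.505 V
```

Without the refresh, the common mode in this example would grow by 0.5 V per interval, to about 10 V after 20 intervals; with it, the common mode never exceeds what a single interval contributes, while the signal keeps accumulating.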

6.2 Test Setup and Measurement Results

The test setup for the single pixel measurements was the same as that presented in Sect. 5.2. The test setup for the 16 × 16 TOF pixel array sensor was also nearly the same as for the dual line TOF pixel sensor, the only difference being the lens used for light collection: here, a commercial aspheric 0.5-inch lens with a focal ratio F ≈ 1 was used. Its focal length of 10 mm guarantees a blur-free picture over the total measurement range from 0 to 3.2 m. A laboratory scene was used to characterize the 16 × 16 TOF pixel array sensor.

6.2.1 Single Pixel Characterization

As for the dual line pixel sensor, a single pixel characterization was performed for the 16 × 16 array sensor, using the same 10 × 10 cm² white paper target under the same measurement conditions (100 measurements at each step and 10 cm steps). The results are depicted in Fig. 24a. The standard deviation σ_zTOF is below 1 cm up to 1 m and below 5 cm up to 3 m, while the linearity error e_lin remains within a −1/+2 cm band. The influence of background light at a distance of 1.5 m is depicted in Fig. 24b: the displacement is Δz = 5 cm for a background light of 100 klx and Δz = 15 cm for 150 klx. The increase of Δz can be explained by a shift of the pixel's operating point due to the BGL-induced photocurrent.

6.2.2 Pixel Sensor Array Characterization

A laboratory scene consisting of a black metal ring (at 0.4 m), a blue pen (at 0.6 m), a blue paper box (at 1.3 m) and the right arm of a person wearing a red pullover (at 2.2 m) was captured. Figure 25 shows the 3D plot of this scene together with the colour-coded distance information. The sensor chip provides range images in real time at 16 frames per second. Rough information about the background light conditions can be extracted from the common-mode component of the output signal, which could be used to correct its effect on distance measurements in future implementations.

7 Discussion and Comparison

In this chapter, two techniques for the implementation of indirect Time-Of-Flight imaging sensors have been described in detail. Both methods are well suited to implementations that make extensive use of in-pixel electronics to extract the 3D information.

While the pulsed method offers a simple way to extract the 3D image, requiring a minimum of two window measurements, the correlation method trades the need for more acquisitions and more complex processing for a higher linearity of the distance characteristic. At the same time, the pulsed technique can perform background removal a few hundred nanoseconds after light integration, while the correlation method performs it only after several integration cycles, which may introduce larger errors in the case of bright moving objects. Both techniques achieve a very good rejection of background light, up to 150 klx as measured for the correlation method, which is a fundamental advantage of electronics-based 3D sensors.

Implementations of imaging sensors for both methods demonstrate the potential of the techniques to reach moderate array resolutions, from 16 × 16 pixels up to 160 × 120 pixels at a minimum pixel pitch of 29.1 μm. In all implementations, optimization of the electronics leads to satisfactory fill-factors and to a current consumption as low as 2 μA per pixel in the case of the correlation-based pixel array. The integrated circuits were designed in different technologies without any special process options, which is an attractive point of these techniques: no special process optimizations are needed, allowing easy scaling and porting of the circuits to the technology of choice.

From the 3D camera system point of view, pulsed TOF requires a laser illuminator because of the high power needed in each single pulse, while the correlation method has relaxed requirements that allow the use of LEDs with a lower peak power. On the other hand, the pulsed laser provides a better signal-to-noise ratio thanks to the strong signal, whereas with modulated LEDs the signal amplitude becomes a very critical point, which sets the pixel pitch to 109 μm for the linear sensor and 125 μm for the array sensor. Nevertheless, the average illuminator power required by both systems is of a similar order of magnitude, and under such conditions both easily reach a distance precision in the centimetre range.

The trend for electronics-based 3D sensors is towards smaller pixel pitch and higher resolution: these sensors are likely to find applications where their strong points are needed, such as high operating frame rate and high background suppression capability. Several improvements are still possible, such as parallelization of the acquisition phases and optimization of the circuit area occupation, which could lead to better distance precision. Applications in the fields of robotics, production control, safety and surveillance can therefore be envisaged, and future improvements could further enlarge this list.

References

[1] R. Jeremias, W. Brockherde, G. Doemens, B. Hosticka, L. Listl, P. Mengel, A CMOS photosensor array for 3D imaging using pulsed laser, in IEEE International Solid-State Circuits Conference (2001), pp. 252–253

[2] D. Stoppa, L. Viarani, A. Simoni, L. Gonzo, M. Malfatti, G. Pedretti, A 50 × 30-pixel CMOS sensor for TOF-based real time 3D imaging, in 2005 Workshop on Charge-Coupled Devices and Advanced Image Sensors (Karuizawa, Nagano, Japan, 2005)

[3] M. Perenzoni, N. Massari, D. Stoppa, L. Pancheri, M. Malfatti, L. Gonzo, A 160 × 120-pixels range camera with in-pixel correlated double sampling and fixed-pattern noise correction. IEEE J. Solid-State Circuits 46(7), 1672–1681 (2011)

[4] A. El Gamal, H. Eltoukhy, CMOS image sensors. IEEE Circuits Devices Mag. 21(3), 6–20 (2005)

[5] R. Sarpeshkar, T. Delbruck, C.A. Mead, White noise in MOS transistors and resistors. IEEE Circuits Devices Mag. 9(6), 23–29 (1993)

[6] O. Sgrott, D. Mosconi, M. Perenzoni, G. Pedretti, L. Gonzo, D. Stoppa, A 134-pixel CMOS sensor for combined Time-Of-Flight and optical triangulation 3-D imaging. IEEE J. Solid-State Circuits 45(7), 1354–1364 (2010)

[7] K. Oberhauser, G. Zach, H. Zimmermann, Active bridge-correlator circuit with integrated PIN photodiode for optical distance measurement applications, in Proceedings of the 5th IASTED International Conference on Circuits, Signals and Systems (2007), pp. 209–214

[8] G. Zach, A. Nemecek, H. Zimmermann, Smart distance measurement line sensor with background light suppression and on-chip phase generation, in Proceedings of SPIE, Conference on Infrared Systems and Photoelectronic Technology III, vol. 7055 (2008), pp. 70550P1–70550P10

[9] G. Zach, H. Zimmermann, A 2 × 32 range-finding sensor array with pixel-inherent suppression of ambient light up to 120 klx, in IEEE International Solid-State Circuits Conference (2009), pp. 352–353

[10] G. Zach, M. Davidovic, H. Zimmermann, A 16 × 16 pixel distance sensor with in-pixel circuitry that tolerates 150 klx of ambient light. IEEE J. Solid-State Circuits 45(7), 1345–1353 (2010)

[11] P. Seitz, Quantum-noise limited distance resolution of optical range imaging techniques. IEEE Trans. Circuits Syst. 55(8), 2368–2377 (2008)

[12] A. Nemecek, K. Oberhauser, G. Zach, H. Zimmermann, Time-Of-Flight based pixel architecture with integrated double-cathode photodetector, in Proceedings of IEEE Sensors Conference (2006), pp. 275–278

[13] S. Decker, R.D. McGrath, K. Brehmer, C.G. Sodini, A 256 × 256 CMOS imaging array with wide dynamic range pixels and column-parallel digital output. IEEE J. Solid-State Circuits 33(12), 2081–2091 (1998)

[14] C. Bamji, H. Yalcin, X. Liu, E.T. Eroglu, Method and system to differentially enhance sensor dynamic range. U.S. Patent 6,919,549, 19 July 2005

Copyright information

© 2013 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Perenzoni, M., Kostov, P., Davidovic, M., Zach, G., Zimmermann, H. (2013). Electronics-Based 3D Sensors. In: Remondino, F., Stoppa, D. (eds) TOF Range-Imaging Cameras. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-27523-4_3

DOI: https://doi.org/10.1007/978-3-642-27523-4_3

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-27522-7

Online ISBN: 978-3-642-27523-4

eBook Packages: Earth and Environmental Science (R0)