Abstract

At present, optical measurement methods are the most powerful tools for basic and applied research and inspection of the characteristic properties of a variety of materials, especially following the development of lasers and computers. Optical measurement methods are widely used for optical spectroscopy including linear and nonlinear optics and magneto-optics, conventional and unconventional optical microscopy, fiber optics for passive and active devices, optical recording for CD/DVD and MO disks, and various kinds of optical sensing.

In this chapter, as an introduction to the following sections, the concept and fundamentals of optical spectroscopy are described in Sect. 11.1, including optical measurement tools such as light sources, detectors and spectrometers, and standard optical measurement methods such as reflection, absorption, luminescence, scattering, etc. A short summary of laser instruments is also included. In Sect. 11.2 the microspectroscopic methods that have recently become quite useful for nano-science and nano-technology are described, including single-dot/molecule spectroscopy, near-field optical spectroscopy and cathodo-luminescence spectroscopy using scanning electron microscopes. In Sect. 11.3 magneto-optics such as Faraday rotation is introduced and the superlattice of semi-magnetic semiconductors is applied for the imaging measurement of magnetic flux patterns of superconductors as an example of spintronics. Section 11.4 is devoted to fascinating subjects in laser spectroscopy, such as nonlinear spectroscopy, time-resolved spectroscopy and THz spectroscopy. In Sect. 11.5 fiber optics is summarized, including transmission properties, nonlinear optical properties, fiber gratings, photonic crystal fibers, etc. In Sect. 11.6 optical recording technology for high-density storage is described in detail, including the measurement methods for the characteristic properties of phase-change and magneto-optical materials. Finally, in Sect. 11.7 a variety of optical sensing methods are described, including the measurement of distance, displacement, three-dimensional shape, flow, temperature and, finally, the human body for bioscience and biotechnology.

This chapter begins with a section on basic technology for optical measurements. Sections 11.2–11.4 deal with advanced technology for optical measurements. Finally Sects. 11.5–11.7 discuss practical applications to photonic devices.

Fundamentals of Optical Spectroscopy

Light Source

There are many light sources for use in scientific and industrial measurements [11.1,2,3,4]. This subsection deals with the features of various light sources. For source selection, various characteristics should be considered, such as wavelength range, radiant flux, directionality, stability in time and space, lifetime, area of emission, and temporal behavior. Spectral output, whether it is a continuum, a line, or a continuum-plus-line source, should also be considered. No light source covers all wavelengths simultaneously from the ultraviolet (UV) to the infrared (IR) wavelength region. Although a blackbody at an extremely high temperature could in principle realize such an ideal light source, the melting point of the materials that form the electrodes would have to be extremely high, so it is impossible to construct. We should therefore select an adequate source that covers the required wavelength region. In general, we use a gas discharge lamp for the UV region and a radiation source from a solid for the visible and the IR region. At present, many kinds of light sources covering each wavelength region, as shown in Fig. 11.1, are available. They can be broadly classified into: 1) thermal radiation sources such as a tungsten filament lamp (W lamp) and incandescent sources for the IR region such as a Nernst glower and a globar, 2) arc lamps based on electrical discharge in gas, such as a high-pressure xenon arc lamp (Xe lamp), a high- or low-pressure mercury lamp, a hydrogen and a deuterium-hydrogen arc lamp (D2 lamp), 3) a light-emitting diode (LED) or a laser diode (LD) based on emission from the pn junction of a semiconductor, and 4) narrow-line sources using atomic or molecular transition lines such as a hollow cathode discharge tube and an electrodeless discharge lamp, including many kinds of lasers.
Recently, synchrotron radiation and terahertz emission in the far-UV and submillimeter wavelength regions, respectively, have become available, mainly for research purposes.

Among those light sources, one of the most stable, well-known, and intensively characterized in the visible and the near-IR region is the W lamp (or tungsten-filament white bulb). Although the spectral emissivity of tungsten is about 0.5 in the visible region and about 0.25 in the near-IR region, its spectral distribution of emission agrees relatively well with that of Planck's blackbody radiation. The W lamp can be used at an arbitrary color temperature up to 3100 K. However, more than 90% of the total emission energy is distributed in the IR wavelength region and less than 1% in the UV region below 400 nm. Therefore, it cannot be used in the UV region. The UV region, however, is especially important in the field of spectrochemical analysis. To compensate for the low emission energy in the UV region, the D2 lamp is used in combination with the W lamp, although the D2 lamp has some problems in terms of emission stability and ease of operation.
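The energy split quoted above can be checked roughly against an ideal blackbody. The following is a minimal sketch, assuming a perfect blackbody at 3100 K (the wavelength-dependent emissivity of tungsten is ignored) and taking 700 nm as the visible/IR boundary; both choices, and the function name, are assumptions made for illustration.

```python
import math

# Second radiation constant c2 = h*c/k_B in m*K (CODATA value).
C2 = 1.438776877e-2


def blackbody_fraction_below(wavelength_m, temperature_k, terms=50):
    """Fraction of total blackbody emission at wavelengths shorter than wavelength_m.

    Uses the standard series for the integrated Planck function,
    F = (15/pi^4) * sum_n exp(-n x)/n * (x^3 + 3x^2/n + 6x/n^2 + 6/n^3),
    with x = c2 / (wavelength * T).
    """
    x = C2 / (wavelength_m * temperature_k)
    total = 0.0
    for n in range(1, terms + 1):
        total += math.exp(-n * x) / n * (
            x**3 + 3 * x**2 / n + 6 * x / n**2 + 6 / n**3
        )
    return 15.0 / math.pi**4 * total


T = 3100.0  # highest color temperature quoted for the W lamp
uv_fraction = blackbody_fraction_below(400e-9, T)        # below 400 nm
ir_fraction = 1.0 - blackbody_fraction_below(700e-9, T)  # above 700 nm (assumed boundary)
print(f"below 400 nm: {uv_fraction:.2%}, above 700 nm: {ir_fraction:.2%}")
```

Under these assumptions the UV share comes out well below 1% and the IR share close to 90%, in line with the figures given for the W lamp. At lower color temperatures the IR share is larger still.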

For the tungsten white bulb, a vacuum bulb is used for color temperatures up to 2400 K. A gas bulb sealed in nitrogen, argon, or krypton gas at around 1 atm is used for color temperatures between 2000 and 2900 K. For color temperatures around 3100 K, a tungsten-halogen bulb is used. In order to prevent deposition of tungsten atoms onto the inner surface of the bulb, the pressure of argon or krypton gas is maintained at several atmospheres and the bulb is made mechanically rigid by using quartz. Furthermore, by mixing a small amount of halogen molecules such as I2, Br2, or Cl2 into the gas, the decrease in transmittance due to the deposition of tungsten atoms on the inner surface of the bulb is effectively prevented. This process is known as a halogen cycle. To make the process effective, the bulb temperature must be kept relatively high. The lifetime of the lamp is extended by about two times compared to a lamp that does not benefit from this process.

One of the most significant developments in light sources during the last 15 years is the blue or UV LED. Blue and UV LDs have even appeared. The blue LED is used mainly for traffic signals, and the blue and UV LDs as recording or read-out light sources for compact discs and digital videodiscs. However, they also have great potential as light sources for scientific measurement. At present, UV LEDs and UV LDs with an emission wavelength around 365–375 nm are commercially available. Such LEDs and LDs are based on emission from gallium nitride (GaN) materials. GaN is a direct-transition-type semiconductor and has an energy gap of about 3.44 eV at room temperature, which corresponds to the UV emission wavelength. By adding indium (In) and aluminum (Al) to the GaN, one can obtain blue emission. When using gallium phosphide (GaP) instead of GaN materials, and when adding In and Al, one can obtain green emission. When adding only In, one can obtain red emission. Another light source to be noted is the white LED, which has been commercialized rapidly as a back-illumination light source for liquid crystal displays. The white LED consists of a blue or UV LED and fluorescent materials deposited onto the LED in the same package. The blue or UV LED is used as an excitation light source for the fluorescent materials, which may be yttrium aluminum garnet (YAG) materials or some kinds of rare-earth compounds. The excitation light and fluorescence together make the white light. A high-power white LED exceeding 5 W has been developed. Figure 11.2 shows typical emission spectra of such LEDs and that of the UV LD.

For scientific measurements or for spectrochemical analyses, a pulsed light source in the UV wavelength region is important, for example, for distance measurements, fluorescence lifetime measurements, and so on. For such requirements, a picosecond light pulser with a pulse duration around 60 ps, a wavelength of 370 nm, and a repetition frequency of 100 MHz has appeared on the market. However, in general, such a laser has problems in wavelength selection and cost. To solve such problems, a technique to drive the Xe lamp in a nanosecond pulsed mode has been developed [11.5,6,7]. A technique to modulate the Xe lamp sinusoidally has also been developed so that it can be used in combination with a lock-in light-detection scheme. The UV or the blue LED can also be driven with a large current pulse, resulting in a pulse duration of less than 1.5 ns [11.8,9,10].

Photosensors

This subsection deals with sensing features of various photosensors [11.1,2,3,4]. Photosensor is the term used for a photodetector when it is used for a sensing purpose. Photodetectors can be broadly classified into quantum-effect (QE) detectors (or photon detectors) and thermal detectors. The QE detector can be subdivided into an external and an internal type, as shown in Table 11.1. Furthermore, the internal type is subdivided into a photoconductive (PC) detector, a photovoltaic (PV) detector, and a photoelectromagnetic (PEM) detector. Figure 11.3 shows the spectral response of each photodetector.

The operating principle of the external QE detector is based on the photoelectron emissive effect of a metal. Representative detectors are a phototube (PT) and a photomultiplier tube (PMT). A variety of photosensitive cathodes, having different spectral responses, are available from the UV to the near-IR wavelength region. A photocathode whose principal component is gallium arsenide (GaAs) gives high quantum efficiency and has sensitivity at longer wavelengths exceeding 1 μm. The PT consists of two electrodes sealed in a vacuum tube: a photocathode and an anode. The PT was formerly used for light sensing at relatively high powers. At present, it is mainly used for measuring ultra-short light pulses by taking advantage of its simple structure. Such a special PT is known as a biplanar type. The PMT consists of the photocathode, the anode, and 6–12 stages of dynodes aligned between the two electrodes. The role of each dynode is to emit a larger number of secondary electrons than are incident on it. The total amplification factor is typically 10⁶, depending on the number of dynodes and the applied voltage. Because of its stability, wide dynamic range, and large specific detectivity D*, the PMT is widely used for precise light detection. The PMT can be considered as a constant-current source with high impedance. Therefore, the intensity of the output signal is mainly determined by the value of the load resistor. The response time is determined by a time constant calculated from the load resistor and the output capacitance. By cooling the photocathode and adopting a photon counting technique, shot-noise-limited weak-light measurement is possible. Recently, a compact metal-packaged PMT has become available [11.11]. By taking advantage of its shorter electron-transit time and smaller time spread of secondary electrons, a new gating technique with a resolution time of less than 0.3 ns has been proposed [11.12].
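The dependence of the PMT output on the dynode chain and the load can be illustrated with a few lines of arithmetic. This is a rough sketch, assuming every dynode has the same secondary-emission ratio; the numerical values (ratio 4, 10 dynodes, 50 Ω load, 10 pF capacitance, 1 μA anode current) and the function names are hypothetical and chosen only for illustration.

```python
def pmt_gain(secondary_emission_ratio, n_dynodes):
    """Overall gain if each dynode multiplies the electron count by the same ratio."""
    return secondary_emission_ratio ** n_dynodes


def output_voltage(anode_current_a, load_resistance_ohm):
    """Signal voltage across the load for a constant-current source."""
    return anode_current_a * load_resistance_ohm


def rc_time_constant(load_resistance_ohm, output_capacitance_f):
    """Time constant (s) set by the load resistor and the output capacitance."""
    return load_resistance_ohm * output_capacitance_f


gain = pmt_gain(4, 10)                    # hypothetical: ratio 4, 10 dynodes
v_out = output_voltage(1e-6, 50.0)        # hypothetical: 1 uA into 50 ohm
tau = rc_time_constant(50.0, 10e-12)      # hypothetical: 50 ohm, 10 pF
print(f"gain = {gain:.2e}, V_out = {v_out:.1e} V, tau = {tau:.1e} s")
```

A larger load resistor raises the output voltage but lengthens the time constant in the same proportion, which is the trade-off implied by treating the PMT as a high-impedance current source.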

The operating principle of the PC detector is based on the photoconductive effect of a semiconductor. The electric conductivity of materials, especially semiconductors, varies depending on the intensity of the incident light. For UV and visible wavelength regions, intrinsic semiconductors are used. For the IR region, impurity semiconductors are used. The upper limit on wavelength sensitivity for intrinsic semiconductors is determined by the band gap energy (Eg) and that for impurity semiconductors by the ionization energy of the impurities. Generally, CdS (Eg = 2.4 eV) and CdSe (Eg = 1.8 eV) are used in the UV and the visible region. For the IR region, PbS, PbSe, PbTe, and Hg1−xCdxTe are used, where a cooling procedure is often required to suppress noise.
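The long-wavelength limit set by the band gap follows from λc = hc/Eg. The short sketch below applies this relation to the two band gaps quoted above; the function name is an illustrative choice, and real detectors show a gradual rather than a sharp cutoff.

```python
# h*c expressed in eV*nm (rounded CODATA value).
HC_EV_NM = 1239.84198


def cutoff_wavelength_nm(band_gap_ev):
    """Longest wavelength (nm) whose photon energy still reaches the band gap."""
    return HC_EV_NM / band_gap_ev


for name, eg in (("CdS", 2.4), ("CdSe", 1.8)):
    print(f"{name}: Eg = {eg} eV -> cutoff near {cutoff_wavelength_nm(eg):.0f} nm")
```

Both cutoffs fall inside the visible region, consistent with the use of these materials for UV and visible detection.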

The operating principle of the PV detector is based on the photovoltaic effect. When a light flux whose energy is larger than the energy gap of the pn junction of a semiconductor is incident, a photoinduced voltage proportional to the incident light intensity is generated. Silicon detectors are popular and can be used from the visible to the near-IR region. The dynamic range for the incident intensity is more than 10⁵. To achieve sensitivity in the UV region, detectors with a processed surface or made from GaAsP have been devised; commercially these are known as blue cells. Compared to PC detectors, the PV detector gives a faster response time. Another advantage is that it requires no power supply. The PV detector has two operation modes: the photovoltaic mode and the photoconductive mode (or photodiode mode). In the photovoltaic mode, the detector is used with zero bias voltage and is considered as a constant-voltage source with low internal resistance. In the photoconductive mode, the detector is used with a reverse bias voltage and can be considered as a constant-current source with high impedance. The photoconductive mode gives a wide dynamic range and a fast response. When one needs a high-speed subnanosecond response, use of a pin photodiode or an avalanche photodiode (APD) should be considered [11.13,14].

The PEM detector utilizes the contributions of an electron–hole pair to the photovoltage. The electron–hole pair is generated on the surface of an intrinsic semiconductor such as InSb. By applying an external magnetic field to the semiconductor during the diffusion process, the electron and the hole are deflected in opposite directions, each contributing to the voltage. This type of detector is, however, not commonly used now.

In a thermal detector, optical power absorbed on the surface of the detector is converted to thermal energy and a temperature detector measures the resulting change of temperature. A variety of techniques have been developed to attain high-speed response and high sensitivity, which trade off against each other. Although, in principle, an ideal thermal detector does not have a wavelength-dependent sensitivity, one cannot realize such an ideal detector. Typical thermal detectors are thermocouples, thermopiles, pneumatic detectors, Golay cells, and pyroelectric detectors.

A multichannel detector (MD) has been developed that integrates many internal QE detectors onto a silicon substrate. Electric charges produced by the incident light, usually in the UV and visible range, are accumulated on individual detectors. A metal–oxide–semiconductor-type (MOS) detector employs electric switches to read out the electric charge. A charge-coupled device (CCD) has a larger integration density than the MOS type because of the simplicity of the process of charge accumulation and transfer. The sensitivity is 10⁷–10⁸ photons/cm² and the dynamic range is 10³–10⁴. Recently, infrared image sensors using HgCdTe have appeared on the market. The MD can be used not only as a spectral photosensor but also as a position sensor.

To select the optical detector, the fundamental issues to be considered are: 1) spectral response, 2) sensitivity, 3) detection limit, and 4) time response. Concerning the spectral response, spectral matching with the light source should be considered. The spectral distribution of the background light also should be taken into account. The sensitivity of the detector is defined by the ratio of the intensity of the output signal to that of the incident light. Generally, overall (or all-spectral) sensitivity is employed. To determine the sensitivity, a standard light source whose spectral distribution is known is used as the incident light: a tungsten lamp of 2856 K for the UV and the visible region and a pseudo-blackbody furnace of 500 K for the IR region. The detection limit is usually represented by the noise-equivalent power (NEP) or the specific detectivity D*, where $\mathrm{NEP} = P V_\mathrm{n}/V_\mathrm{s}$ and $D^{*} = \sqrt{A\,\Delta f}/\mathrm{NEP}$, where P is the radiation flux, Vs is the output signal, Vn is the root mean squared value of the output noise, A is the area of the detector, and Δf is the noise-equivalent frequency bandwidth. The time response is represented by a step response or a steady-state frequency response. The step response is represented by a rise time or a fall time, which are used especially for detectors with a nonlinear response. For high-speed detectors, the time constant is important; this is calculated from the internal resistance of the detector and the parallel output capacitance. Impedance matching with the following electronics is also important.
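As an illustration of the detection-limit figures, the sketch below evaluates NEP = P·Vn/Vs and, assuming the usual definition D* = (A·Δf)^(1/2)/NEP, the specific detectivity. The measurement values (1 nW incident flux, 1 mV signal, 1 μV rms noise, 0.01 cm² area, 1 Hz bandwidth) are hypothetical.

```python
import math


def noise_equivalent_power(radiant_flux_w, noise_rms_v, signal_v):
    """NEP = P * Vn / Vs: the incident power that yields a signal equal to the noise."""
    return radiant_flux_w * noise_rms_v / signal_v


def specific_detectivity(area_cm2, bandwidth_hz, nep_w):
    """D* = sqrt(A * df) / NEP, in cm Hz^(1/2) / W for an area given in cm^2."""
    return math.sqrt(area_cm2 * bandwidth_hz) / nep_w


# Hypothetical measurement values, for illustration only.
nep = noise_equivalent_power(1e-9, 1e-6, 1e-3)
d_star = specific_detectivity(0.01, 1.0, nep)
print(f"NEP = {nep:.1e} W, D* = {d_star:.1e} cm Hz^0.5 / W")
```

Because D* normalizes out the detector area and the bandwidth, it is the more convenient figure for comparing detectors of different size.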

Wavelength Selection

In a practical measurement, it is often necessary to select a suitable wavelength from the light source. This section deals with some wavelength-selection techniques [11.1,2,3,4]. In order to select monochromatic or quasi-monochromatic light, we usually use a dispersion element, such as an optical filter, a prism, or a diffraction grating. Generally, the diffraction grating is installed in a monochromator (MON). To gather all spectral information simultaneously, one uses a polychromator (POL) in combination with a multichannel detector (MD). In the IR region, a Michelson-type interferometer is sometimes used for a Fourier-transform spectrometer (FTS) [11.15]. For extremely high spectral-resolution measurements, a Fabry–Pérot interferometer should be considered [11.16]. Figure 11.4 shows various elements, devices, and systems applicable in each wavelength region.

The prism has been used as the main dispersion element. Dispersion occurs in the prism primarily because of the wavelength dependence of the refractive index of the prism material. Many materials for the prism have been used, for example, glass (350 nm–1 μm), quartz (185 nm–2.7 μm), CaF2 (125 nm–9 μm), NaCl (200 nm–17 μm), and KCl (380 nm–21 μm). When the wavelength is λ (or λ + Δλ) and the angle between the incident beam and the deviated monochromatic ray is θ (or θ + Δθ), the angular dispersion, Δθ/Δλ, is given at minimum deviation by $\Delta\theta/\Delta\lambda = \dfrac{2\sin(\alpha/2)}{\sqrt{1-n^{2}\sin^{2}(\alpha/2)}}\,\dfrac{\mathrm{d}n}{\mathrm{d}\lambda}$, where n and α are the refractive index and the apex angle of the prism, respectively. In order to increase the spectral resolution, λ/Δλ, we should use a prism with a long base L because $\lambda/\Delta\lambda = L\,|\mathrm{d}n/\mathrm{d}\lambda|$.

A plane diffraction grating is made by ruling parallel closely spaced grooves on a thin metal layer deposited on glass. When collimated light flux strikes the grating in a plane perpendicular to the direction of the grooves, different wavelengths are diffracted and constructively interfere at different angles. Although there are two types of gratings, transmission and reflection, the reflection type is invariably used for wavelength selection. If the incident angle is α and the diffraction angle is β, measured from the normal to the grating plane, and the groove interval is d, the following grating formula holds, d(sin α + sin β) = mλ, where m is the order of diffraction. In the formula, the order m as well as the diffraction angle β is taken as positive for diffraction on the same side of the grating normal as the incident ray and negative on the opposite side. Then, the angular dispersion is given by $\Delta\beta/\Delta\lambda = m/(d\cos\beta)$ and the resolution power is given by λ/Δλ = mN, where N is the total number of grooves. From the grating formula, we can understand that many wavelengths are observed at a specified diffraction angle β for a given α and d. This phenomenon is known as overlapping orders. From the following two equations, $d(\sin\alpha+\sin\beta) = m(\lambda+\Delta\lambda)$ and $d(\sin\alpha+\sin\beta) = (m+1)\lambda$, we can obtain $\Delta\lambda = \lambda/m$; the value Δλ is called the free spectral range. The overlapping orders are usually separated by limiting the source bandwidth with a broadband filter, called the order sorter, or with a predisperser.
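The grating relations can be tried out numerically. The sketch below solves the grating formula d(sin α + sin β) = mλ for β and evaluates the standard expressions for angular dispersion, m/(d cos β), resolving power, mN, and free spectral range, λ/m. The grating parameters (1200 grooves/mm, 50 mm ruled width, normal incidence) and the function names are assumptions made for illustration.

```python
import math


def diffraction_angle_deg(wavelength_m, groove_spacing_m, incidence_deg, order=1):
    """Solve d (sin a + sin b) = m * lambda for the diffraction angle b (degrees)."""
    s = order * wavelength_m / groove_spacing_m - math.sin(math.radians(incidence_deg))
    if abs(s) > 1.0:
        raise ValueError("no propagating diffracted beam for these parameters")
    return math.degrees(math.asin(s))


def angular_dispersion_rad_per_m(groove_spacing_m, diffraction_deg, order=1):
    """Angular dispersion m / (d cos b), in radians per metre of wavelength."""
    return order / (groove_spacing_m * math.cos(math.radians(diffraction_deg)))


def resolving_power(order, n_grooves):
    """lambda / d(lambda) = m N."""
    return order * n_grooves


def free_spectral_range_m(wavelength_m, order):
    """Wavelength interval lambda / m free of overlap with the next order."""
    return wavelength_m / order


d = 1e-3 / 1200        # hypothetical grating: 1200 grooves per mm
n_grooves = 1200 * 50  # hypothetical 50 mm ruled width
beta = diffraction_angle_deg(500e-9, d, incidence_deg=0.0, order=1)
print(f"beta = {beta:.2f} deg")
print(f"dispersion = {angular_dispersion_rad_per_m(d, beta) * 1e-9:.2e} rad/nm")
print(f"resolving power = {resolving_power(1, n_grooves)}")
print(f"free spectral range (m = 2, 600 nm) = {free_spectral_range_m(600e-9, 2) * 1e9:.0f} nm")
```

The free spectral range shrinks as the order increases, which is why gratings used at high order need an order sorter or a predisperser.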

Figure 11.5 shows a typical shape of the cross section of the groove. When the angle between the grating plane and the long side of the groove is γ so that γ − α = β − γ, the incident and the diffracted light satisfy the relation for specular reflection on the long side of the triangle. Then, from the grating formula, we obtain the relation $2d\sin\gamma\cos(\alpha-\gamma) = m\lambda$. When we put α = β = γ and m = 1, then 2d sin γ = λ. Such a wavelength λ and angle γ are called the blaze wavelength and blaze angle, respectively. The blazed grating is often called an echelette. In this situation, the maximum diffraction efficiency is obtained. For high-resolution spectral measurements, an echelle grating is used, which is a relatively coarse grating with a large blaze angle. The steep side of the groove is employed at very high orders.

A concave grating is the same as a plane grating but the grooves are ruled on a concave mirror so that the grooves become a series of equally spaced straight lines when projected onto a plane perpendicular to the straight line connecting the center of the concave shape and a center of its curvature. A circle, whose diameter is equal to the radius of the curvature and which is on a plane perpendicular to the grooves, is called the Rowland circle. Rays starting from a point on the Rowland circle and diffracted by the grating are focused onto a point on the same circle. The two points form an optical conjugate pair with respect to each other. Usually, an entrance and an exit slit are placed on the two points. A problem concerning the concave grating is the presence of relatively large astigmatism. It is, however, used for the UV region because no additional reflection optical element that introduces reflection energy loss is necessary. Recently, various kinds of holographic gratings have been developed to solve the problem of aberration, including astigmatism.

When choosing a wavelength-selection method, significant parameters to be considered are the dispersion characteristics, resolution power, solid angle and F-number (or optical throughput factors), degree of stray light, and optical aberration. One of the most convenient and simplest ways is to use a spectroscopic filter such as a color glass filter or an interference filter. However, these lack versatility. The MON is a multipurpose apparatus that combines a grating, an entrance slit, an exit slit, additional optics, and a wavelength-selection mechanism in one box, from which monochromatic light can be extracted. Many types of mounting and optical arrangement of the optics, including the grating, have been proposed.

Although the dispersion-type MON is widely used for the purpose of wavelength selection, it has some drawbacks. In principle, its optical throughput is not large because of the presence of the entrance slit. Furthermore, because a wavelength-scanning mechanism is required for measuring a continuum spectrum, the total number of wavelength elements limits the signal-gathering time that can be allocated to a unit wavelength element, which lowers the signal-to-noise ratio (SNR). In contrast, the FTS has optical throughput and multiplex advantages over the dispersion-type MON. This is because the FTS requires no entrance slit and the entire spectrum is measured simultaneously in the form of an interferogram. However, the multiplex advantage is obtained only when the detector noise is dominant, as for an IR detector. Nevertheless, the FTS is sometimes used in the visible region. This is because of the optical throughput advantage, the high precision in wavenumber, and the possibility of realizing extremely high spectral resolution. However, even for the dispersion-type MON, when used in the form of a POL together with the MD, a multichannel advantage is obtained. Table 11.2 summarizes the SNR of the FTS, (SNR)FTS, and that of the POL with an MD, (SNR)POL, relative to that of the dispersion MON with a single detector, (SNR)MON, for the cases in which the detector noise, the shot noise, or the scintillation noise is dominant. In the table, n is the total number of spectral elements.

Reflection and Absorption

Reflection or absorption spectra provide rich information on the energy levels of the material, such as those of inner or valence electrons, vibrations or rotations of molecules or defects in condensed matter, a variety of energy gaps, and elementary excitations, e.g. phonons and excitons.

Reflection and Transmission

When a light beam incident on a material surface passes through the material, some of the light is reflected at the surface, while the rest propagates through the material. During the propagation the light is attenuated due to absorption or scattering. The reflectivity R and the transmittance T are defined as the ratio of the reflected to the incident power and of the transmitted to the incident power, respectively. If there is no absorption or scattering, R + T = 1. A schematic diagram for measuring R or T is shown in Fig. 11.6. A tunable light source, either a combination of a white light source (Sect. 11.1.1) and a monochromator (Sect. 11.1.3) or a tunable laser (Sect. 11.1.5), is used here. Details of detectors are reviewed in Sect. 11.1.2. By changing the wavelength λ of the tunable light source, one can obtain a reflectivity spectrum R(λ) or a transmittance spectrum T(λ). Alternatively, for convenience, the white light can irradiate the sample directly and the reflected or transmitted light can be detected with a combination of a spectrometer and an array detector, e.g. a CCD, provided that the luminescence from the sample caused by the white light is negligible.

If the beam propagates in the x direction, the intensity I(x) at position x satisfies the following relation

$$\frac{\mathrm{d}I}{\mathrm{d}x} = -\alpha I(x)\,.$$

Here α is called the absorption coefficient. The integrated form is

$$I(x) = I_0\exp(-\alpha x)\,,$$

where I0 is the intensity of the incident light. The position at x = 0 corresponds to the surface of the material. This relation is called Beer's law. If the scattering is negligible, the transmittance T is described by

$$T = (1-R_1)(1-R_2)\exp(-\alpha l)\,,$$

where R1 and R2 are the reflectivities of the front and back surfaces, respectively, and l is the sample thickness.
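In practice the transmittance relation is often inverted to extract the absorption coefficient from a measured T. The following minimal sketch assumes the single-pass form T = (1 − R1)(1 − R2) exp(−αl), i.e. multiple internal reflections and scattering are neglected; the sample values (α = 10 cm⁻¹, l = 1 mm, 4% reflection per surface) are hypothetical.

```python
import math


def transmittance(alpha_per_cm, thickness_cm, r_front=0.0, r_back=0.0):
    """T = (1 - R1)(1 - R2) exp(-alpha * l), neglecting multiple reflections."""
    return (1 - r_front) * (1 - r_back) * math.exp(-alpha_per_cm * thickness_cm)


def absorption_coefficient(t_measured, thickness_cm, r_front=0.0, r_back=0.0):
    """Invert the relation above to recover alpha (cm^-1) from a measured T."""
    surface_factor = (1 - r_front) * (1 - r_back)
    return -math.log(t_measured / surface_factor) / thickness_cm


# Hypothetical sample: alpha = 10 cm^-1, 1 mm thick, 4% reflection per surface.
t = transmittance(10.0, 0.1, r_front=0.04, r_back=0.04)
alpha = absorption_coefficient(t, 0.1, r_front=0.04, r_back=0.04)
print(f"T = {t:.4f}, recovered alpha = {alpha:.3f} cm^-1")
```

For weakly absorbing or highly reflecting samples the neglected multiple reflections can matter, so this inversion should be treated as a first estimate.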

Optical Constants

If the light propagates in the x direction, the electric field is described by

$$E(x,t) = E_0\exp[i(kx-\omega t)]\,,$$

where k is the wavevector of the light and ω is the angular frequency. In a transparent material with refractive index n, k and the wavelength in vacuo λ are related to each other through

$$k = \frac{2\pi}{\lambda/n} = \frac{n\omega}{c}\,.$$

This formula can be generalized to the case of an absorbing material by introducing the complex refractive index [11.17]

$$\tilde{n} = n + i\kappa\,,$$

where κ is called the extinction coefficient. The imaginary part of ñ leads to an exponential decay of the electric field; the absorption coefficient can be described by

$$\alpha = \frac{2\kappa\omega}{c} = \frac{4\pi\kappa}{\lambda}\,.$$

The amplitude reflectivity r, the ratio of the electric field of the reflected light to that of the incident light, in the case of normal incidence is described by [11.18]

$$r = \frac{\tilde{n}-1}{\tilde{n}+1}\,.$$

If we define the amplitude and phase of r by

$$r = \sqrt{R}\,\exp(i\theta)\,, \tag{11.9}$$

then the (intensity) reflectivity can be described by

$$R = |r|^2 = \frac{(n-1)^2+\kappa^2}{(n+1)^2+\kappa^2}\,, \tag{11.10}$$

and conversely, n and κ are written as

$$n = \frac{1-R}{1+R-2\sqrt{R}\cos\theta}\,, \tag{11.11}$$

$$\kappa = \frac{2\sqrt{R}\sin\theta}{1+R-2\sqrt{R}\cos\theta}\,. \tag{11.12}$$

Ellipsometry enables us to obtain the amplitude reflectivity [11.19]. Thus one can obtain the complex refractive index from a reflection measurement.
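The step from the amplitude reflectivity to the optical constants can be checked with a short round trip. The sketch below assumes the normal-incidence convention r = (ñ − 1)/(ñ + 1) with ñ = n + iκ and r = √R exp(iθ), from which n = (1 − R)/(1 + R − 2√R cos θ) and κ = 2√R sin θ/(1 + R − 2√R cos θ) follow; the sample values n = 3.5 and κ = 0.2 are hypothetical.

```python
import cmath
import math


def amplitude_reflectivity(n, kappa):
    """Normal-incidence amplitude reflectivity r = (n~ - 1)/(n~ + 1), n~ = n + i*kappa."""
    n_complex = complex(n, kappa)
    return (n_complex - 1) / (n_complex + 1)


def reflectivity(n, kappa):
    """Intensity reflectivity R = |r|^2."""
    return abs(amplitude_reflectivity(n, kappa)) ** 2


def optical_constants_from_reflection(big_r, theta):
    """Recover (n, kappa) from the reflectivity R and the phase theta of r."""
    denom = 1 + big_r - 2 * math.sqrt(big_r) * math.cos(theta)
    n = (1 - big_r) / denom
    kappa = 2 * math.sqrt(big_r) * math.sin(theta) / denom
    return n, kappa


# Hypothetical absorbing material, used only to check the round trip.
r = amplitude_reflectivity(3.5, 0.2)
big_r, theta = abs(r) ** 2, cmath.phase(r)
n_back, kappa_back = optical_constants_from_reflection(big_r, theta)
print(f"R = {big_r:.4f}, theta = {theta:.4f} rad -> n = {n_back:.3f}, kappa = {kappa_back:.3f}")
```

The round trip returns the starting values, which shows that R alone is not enough: the phase θ must also be known, either from ellipsometry or from a Kramers–Kronig analysis.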

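The optical response of the material, e.g. light propagation described by the complex refractive index, originates from the polarization induced by the incident light. If the electric field E of the light is weak and within the linear regime (cf. Sect. 11.4), the polarization P is given by

$$P = \varepsilon_0\chi E\,,$$

where ε0 and χ are the vacuum dielectric constant and the electric susceptibility, respectively. The electric displacement is

$$D = \varepsilon_0 E + P = \varepsilon_0(1+\chi)E \equiv \varepsilon_0\varepsilon E\,,$$

where ε is the complex dielectric constant;

$$\varepsilon = \varepsilon_1 + i\varepsilon_2\,,$$

where ε1 and ε2 are the real and imaginary parts of ε. From the Maxwell equation [11.1], $\varepsilon = \tilde{n}^2$, so that

$$\varepsilon_1 = n^2-\kappa^2\,, \qquad \varepsilon_2 = 2n\kappa\,,$$

and conversely,

$$n = \left[\frac{\varepsilon_1+\sqrt{\varepsilon_1^2+\varepsilon_2^2}}{2}\right]^{1/2}, \qquad \kappa = \left[\frac{-\varepsilon_1+\sqrt{\varepsilon_1^2+\varepsilon_2^2}}{2}\right]^{1/2}.$$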
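The conversion between the dielectric constant and the refractive index is easy to get wrong by a sign, so a small round-trip check is useful. The sketch below assumes ε = ñ² with ñ = n + iκ, giving ε1 = n² − κ² and ε2 = 2nκ, and takes the root with κ ≥ 0 (ε2 ≥ 0); the test values are hypothetical.

```python
import math


def dielectric_from_index(n, kappa):
    """(eps1, eps2) from eps = (n + i*kappa)^2."""
    return n * n - kappa * kappa, 2 * n * kappa


def index_from_dielectric(eps1, eps2):
    """(n, kappa) from (eps1, eps2), choosing the root with n >= 0 and kappa >= 0."""
    modulus = math.hypot(eps1, eps2)
    n = math.sqrt(max(0.0, (modulus + eps1) / 2))
    kappa = math.sqrt(max(0.0, (modulus - eps1) / 2))
    return n, kappa


eps1, eps2 = dielectric_from_index(3.5, 0.2)   # hypothetical values
n_back, kappa_back = index_from_dielectric(eps1, eps2)
print(f"eps = {eps1:.3f} + {eps2:.3f}i -> n = {n_back:.3f}, kappa = {kappa_back:.3f}")
```

A negative ε1 with small ε2, as in a metal below the plasma frequency, gives n close to zero and a large κ, i.e. a strongly reflecting medium.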

Kramers–Kronig Relations

The Kramers–Kronig relations allow us to find the real (imaginary) part of the response function of a linear passive system, if one knows the imaginary (real) part at all frequencies. The relations are derived from the principle of causality [11.17]. In the case of the complex refractive index, the relations are written as

$$n(\omega) = 1 + \frac{2}{\pi}\,\mathrm{P}\!\int_0^\infty \frac{\omega'\kappa(\omega')}{\omega'^2-\omega^2}\,\mathrm{d}\omega'\,,$$

$$\kappa(\omega) = -\frac{2\omega}{\pi}\,\mathrm{P}\!\int_0^\infty \frac{n(\omega')-1}{\omega'^2-\omega^2}\,\mathrm{d}\omega'\,,$$

where P indicates the Cauchy principal value of the integral. Using these relations one can calculate n from κ, and vice versa. The θ in (11.9) is calculated from the reflectivity R using the following formula

$$\theta(\omega) = -\frac{\omega}{\pi}\,\mathrm{P}\!\int_0^\infty \frac{\ln R(\omega')}{\omega'^2-\omega^2}\,\mathrm{d}\omega'\,;$$

thus n and κ are determined using (11.11, 11.12) from the reflectivity spectrum. This analysis is very useful for materials with strong absorption in which only the reflectivity is measurable. The Kramers–Kronig analysis of reflection spectra with synchrotron radiation, which covers extremely wide wavelength regions from the far-IR to x-rays, reveals the electronic structures of a huge number of materials [11.20].

The Lorentz Oscillator Model (Optical Response of Insulators)

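The response of bound (valence) electrons in insulators can be described by the equation of motion of a damped harmonic oscillator

$$m\ddot{x} + m\gamma\dot{x} + m\omega_0^2 x = -eE_0\exp(-i\omega t)\,, \tag{11.24}$$

where m and e are the mass and the magnitude of the charge of the electron, γ is the damping constant, ω0 is the resonant frequency, and E0 is the amplitude of the electric field of the light. If we assume x(t) = x0 exp(−iωt),

$$x_0 = \frac{-eE_0/m}{\omega_0^2-\omega^2-i\gamma\omega}\,.$$

Thus the polarization is given by

$$P_\mathrm{resonant} = -nex = \frac{ne^2}{m}\,\frac{E}{\omega_0^2-\omega^2-i\gamma\omega}\,,$$

where n is the number of electrons per unit volume. Now we can write the electric displacement

$$D = \varepsilon_0 E + P_\mathrm{background} + P_\mathrm{resonant} = \varepsilon_0(1+\chi_\mathrm{background})E + P_\mathrm{resonant}\,,$$

where the electric susceptibility χbackground accounts for all other contributions to the polarization. Using (11.15,11.16), we obtain the following equations

$$\varepsilon_1(\omega) = 1 + \chi_\mathrm{background} + \frac{ne^2}{\varepsilon_0 m}\,\frac{\omega_0^2-\omega^2}{(\omega_0^2-\omega^2)^2+(\gamma\omega)^2}\,,$$

$$\varepsilon_2(\omega) = \frac{ne^2}{\varepsilon_0 m}\,\frac{\gamma\omega}{(\omega_0^2-\omega^2)^2+(\gamma\omega)^2}\,.$$

The dielectric constants in the low and high frequency limit are defined as

$$\varepsilon_\mathrm{st} \equiv \varepsilon(0) = 1+\chi_\mathrm{background}+\frac{ne^2}{\varepsilon_0 m\omega_0^2}\,, \qquad \varepsilon_\infty \equiv \varepsilon(\infty) = 1+\chi_\mathrm{background}\,.$$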

Figure 11.7 shows the frequency dependence of the optical constants introduced in this section.
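The frequency dependence of the optical constants of a single Lorentz oscillator can be reproduced numerically. The sketch below assumes the standard form ε(ω) = 1 + χbackground + ωp²/(ω0² − ω² − iγω) for an exp(−iωt) time dependence, where the shorthand ωp² = ne²/(ε0 m) and all parameter values (frequencies in units of ω0) are assumptions made for illustration.

```python
import cmath


def lorentz_epsilon(omega, omega0, gamma, omega_p, chi_background=0.0):
    """Complex dielectric constant of one damped oscillator.

    omega_p**2 is shorthand for n e^2 / (eps0 m), an assumption of this sketch.
    """
    return 1 + chi_background + omega_p**2 / (omega0**2 - omega**2 - 1j * gamma * omega)


def reflectivity_from_epsilon(eps):
    """Normal-incidence reflectivity from eps via n~ = sqrt(eps)."""
    n_complex = cmath.sqrt(eps)  # principal root: Re >= 0, and Im >= 0 when Im(eps) >= 0
    return abs((n_complex - 1) / (n_complex + 1)) ** 2


# Hypothetical parameters in units of the resonant frequency omega0.
omega0, gamma, omega_p, chi_bg = 1.0, 0.05, 1.0, 1.0
for omega in (0.5, 0.9, 1.0, 1.1, 2.0):
    eps = lorentz_epsilon(omega, omega0, gamma, omega_p, chi_bg)
    n_complex = cmath.sqrt(eps)
    print(f"omega = {omega:.1f}: eps1 = {eps.real:7.3f}, eps2 = {eps.imag:7.3f}, "
          f"n = {n_complex.real:.3f}, kappa = {n_complex.imag:.3f}, "
          f"R = {reflectivity_from_epsilon(eps):.3f}")
```

The imaginary part peaks at the resonance with a width set by γ, while the real part approaches 1 + χbackground + ωp²/ω0² at low frequency and 1 + χbackground at high frequency.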

Typical Absorption Spectrum of Insulators

A schematic plot of a typical absorption spectrum of insulators is shown in Fig. 11.8. There are sharp absorption lines due to phonons in the far-infrared region and due to excitons in the visible or ultraviolet region; a phonon is a quantized lattice vibration and an exciton is a bound state of an electron and hole like a hydrogen atom. The shape of the absorption or reflection spectra of phonons or excitons can be analyzed by using the Lorentz oscillator model. If the coupling between the photon and the phonon (exciton) is strong, we have to introduce a coupled mode of a photon and a phonon (exciton), phonon–polariton (exciton–polariton) [11.21]. Phonon sidebands usually accompany the exciton absorption lines and provide information on the phonons and excitons [11.22].

Above the exciton lines, strong interband absorption is observed. The absorption edge is caused by the onset of the interband transition, in which free electrons and free holes are created simultaneously across the band gap of the insulator. We can obtain a variety of information on the band structure of the material from the interband absorption spectra. Between the phonon and exciton lines there are two weak bands: the multiphonon absorption band due to the combination of several phonons lies around the mid-infrared region, and the Urbach tail appears as the onset of optical absorption in the near-infrared or visible region at finite temperature. The shape of the Urbach tail is expressed as [11.21]

$$\alpha(E) = \alpha_0\exp\!\left[\frac{\sigma(E-E_0)}{k_\mathrm{B}T}\right],$$

where E is the photon energy, T is the temperature, kB is the Boltzmann constant, α0 and E0 are material-dependent constants, and σ is an empirical steepness parameter. The σ indicates the strength of the exciton–phonon coupling, because the Urbach tail is caused by phonon-assisted processes. Generally, there is a minimum in the absorption coefficient between the multiphonon region and the Urbach tail. In the case of SiO2 glasses this minimum lies in the near-infrared region around 1 eV. Thus optical fibers operate between 1.2 and 1.6 μm (Sect. 11.1.5).

Drude Model (Optical Response in Metals)

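The response of free electrons in metals can be described by the equation of motion (11.24) without a restoring force

$$m\ddot{x} + m\gamma\dot{x} = -eE_0\exp(-i\omega t)\,.$$

If we assume x(t) = x0 exp(−iωt),

$$x_0 = \frac{eE_0}{m(\omega^2+i\gamma\omega)}\,.$$

Thus the polarization is given by

$$P = -nex = -\frac{ne^2}{m}\,\frac{E}{\omega^2+i\gamma\omega}\,, \qquad \varepsilon(\omega) = 1-\frac{\omega_\mathrm{p}^2}{\omega^2+i\gamma\omega}\,, \qquad \gamma = \frac{1}{\tau}\,, \quad \omega_\mathrm{p} = \left(\frac{ne^2}{\varepsilon_0 m}\right)^{1/2},$$

where τ is the relaxation time and ωp is called the plasma frequency. Figure 11.9 shows the reflectivity R in the case of γ = 0 using (11.10). Perfect reflection occurs for ω ≤ ωp, and then R decreases for ω > ωp, approaching zero.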
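The behavior of the reflectivity around the plasma frequency can be reproduced with a few lines. The sketch below assumes the standard Drude form ε(ω) = 1 − ωp²/(ω² + iγω) and the normal-incidence reflectivity R = |(ñ − 1)/(ñ + 1)|² with ñ = √ε; frequencies are expressed in units of ωp, and the function names are illustrative.

```python
import cmath


def drude_epsilon(omega, omega_p, gamma=0.0):
    """Drude dielectric constant eps = 1 - wp^2 / (w^2 + i*gamma*w), for omega > 0."""
    return 1 - omega_p**2 / (omega**2 + 1j * gamma * omega)


def reflectivity(eps):
    """Normal-incidence reflectivity R = |(n~ - 1)/(n~ + 1)|^2 with n~ = sqrt(eps)."""
    n_complex = cmath.sqrt(complex(eps))
    return abs((n_complex - 1) / (n_complex + 1)) ** 2


# Undamped case (gamma = 0), frequencies in units of the plasma frequency.
for omega in (0.25, 0.5, 0.9, 1.1, 2.0, 5.0):
    print(f"omega/omega_p = {omega:4.2f}: R = {reflectivity(drude_epsilon(omega, 1.0)):.4f}")
```

Below ωp the dielectric constant is negative, ñ is purely imaginary and R equals 1; above ωp the metal becomes transparent and R falls toward zero. A finite γ rounds off the edge and lowers R slightly below unity.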

Electric conductivity can be generalized to the optical frequency region. The current density j is related to the velocity of free electrons and the electric field through

where σ is called the optical conductivity. From (11.23) and (11.27),

where

σ0 corresponds to the DC conductivity. Thus the DC conductivity can be estimated by purely optical measurements without electrical contacts. Optical conductivity is written in terms of the dielectric function as follows

The Kramers–Kronig relations of the optical conductivity are as follows

where σ1 (σ2) is the real (imaginary) part of σ.
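As a rough illustration of how the DC conductivity enters the optical response, the sketch below assumes the usual Drude forms σ0 = ne²τ/m and σ(ω) = σ0/(1 − iωτ). The sign of the imaginary part depends on the chosen time convention, so it is an assumption here, and the carrier density and relaxation time are illustrative values, not data from this chapter.

```python
E_CHARGE = 1.602176634e-19   # elementary charge, C
E_MASS = 9.1093837015e-31    # free-electron mass, kg

def dc_conductivity(n, tau):
    """sigma_0 = n e^2 tau / m for carrier density n (m^-3) and relaxation time tau (s)."""
    return n * E_CHARGE ** 2 * tau / E_MASS

def optical_conductivity(omega, n, tau):
    """Drude sigma(omega) = sigma_0 / (1 - i omega tau); sign convention assumed."""
    return dc_conductivity(n, tau) / (1.0 - 1j * omega * tau)

if __name__ == "__main__":
    n, tau = 8.5e28, 2.5e-14  # illustrative metal-like values
    print(f"sigma_0 = {dc_conductivity(n, tau):.3e} S/m")
    for x in (0.1, 1.0, 10.0):  # x = omega * tau
        s = optical_conductivity(x / tau, n, tau)
        print(f"omega*tau = {x:5.1f}  sigma1 = {s.real:.3e}  sigma2 = {s.imag:.3e}")
```

In this model the real part σ1 drops to half of σ0 at ωτ = 1, which is how a fit to the measured σ1(ω) yields both σ0 and τ.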

Optical Conductivity of Superconductors

Superfluid electrons in superconductors can move without dissipation; therefore one can take the limit 1/τ → 0 in (11.37),

where nS corresponds to the superfluid electron density. Accordingly, the real part σ1 for the superfluid electrons is zero unless ω = 0. Using (11.40), σ1 is expressed by a delta function,

Finally, the optical conductivity of superfluid electrons is given by

Superconductivity originates from the formation of Cooper pairs. In the higher-frequency region above the binding energy of the Cooper pair, σ1 should be finite. A schematic illustration of the optical conductivity spectrum of superconductors is depicted in Fig. 11.10. Here Δ is called the Bardeen–Cooper–Schrieffer (BCS) gap parameter and 2Δ corresponds to the energy gap in the superconductor [11.17]. Thus we can measure the superconducting gap by optical spectroscopy in the infrared region.

Luminescence and Lasers

Materials emit light by spontaneous emission when electrons in the excited states drop to a lower level. The emitted light is called luminescence. Such materials with excited electrons can amplify the incident light via stimulated emission, which is utilized in lasers, an acronym for light amplification by stimulated emission of radiation.

Emission and Absorption of Light

The processes of spontaneous emission, stimulated emission and absorption are illustrated in Fig. 11.11 in the case of a two-level system.

The rate equations are as follows

where N1 and N2 are the populations of the ground state |1〉 and the excited state |2〉, respectively, ρ(ν) is the energy density of the incident light, and A21, B21 and B12 are the Einstein coefficients. The right-hand side of (11.43) shows spontaneous emission (Fig. 11.11a) of a photon with the energy hν = E2 − E1. The radiative lifetime τR of the excited state is defined by

The right-hand side of (11.44) shows stimulated emission (Fig. 11.11b) from |2〉 to |1〉. The rate is proportional to the energy density at the resonant frequency ν. Equation (11.45) represents absorption (Fig. 11.11c) from |1〉 to |2〉. As seen in the derivation of Beerʼs law, (11.1), the rate of absorption is proportional to the energy density of the incident light. Combining (11.43–11.45), we obtain the rate equation

In the steady state, dN1/dt = dN2/dt = 0, then

In thermal equilibrium, the Planck distribution for cavity radiation is

and the Boltzmann distribution between two levels is

From (11.48–11.50) we obtain the Einstein relations
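The consistency of this step can be checked numerically. The sketch below assumes non-degenerate levels, so that the Einstein relations take the common form B12 = B21 and A21 = (8πhν³/c³) B21, and it assumes that the steady-state condition reads N2/N1 = B12 ρ/(A21 + B21 ρ). With the Planck energy density inserted, this ratio should reproduce the Boltzmann factor exp(−hν/kBT). All function names are illustrative.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
K_B = 1.380649e-23   # Boltzmann constant, J/K
C = 2.99792458e8     # speed of light, m/s

def planck_energy_density(nu, temperature):
    """Spectral energy density rho(nu) of cavity radiation."""
    x = H * nu / (K_B * temperature)
    return 8.0 * math.pi * H * nu ** 3 / C ** 3 / math.expm1(x)

def steady_state_ratio(nu, temperature, b21=1.0):
    """N2/N1 from the steady-state rate equation with the Einstein relations inserted."""
    b12 = b21                                          # assumes non-degenerate levels
    a21 = 8.0 * math.pi * H * nu ** 3 / C ** 3 * b21   # A21 expressed through B21
    rho = planck_energy_density(nu, temperature)
    return b12 * rho / (a21 + b21 * rho)

def boltzmann_ratio(nu, temperature):
    """N2/N1 in thermal equilibrium for non-degenerate levels."""
    return math.exp(-H * nu / (K_B * temperature))

if __name__ == "__main__":
    for nu, temp in ((5.0e14, 3000.0), (1.0e13, 300.0)):
        print(nu, temp, steady_state_ratio(nu, temp), boltzmann_ratio(nu, temp))
```

The value chosen for B21 cancels in the ratio, which is why only the relations between the coefficients matter for this check.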

Luminescence

Luminescence is categorized as follows

1. Photoluminescence (PL): The reemission of light after the absorption of excitation light. Details are described in this section.

2. Electroluminescence (EL): The emission of light caused by an electric current flowing through the material. This is utilized in optoelectronic devices: the light emitting diode (LED) and the laser diode (LD).

3. Cathodoluminescence (CL): The emission of light due to irradiation by an electron beam. Details are explained in Sect. 11.2.

4. Chemiluminescence: The emission of light caused by a chemical reaction. Bioluminescence, which originates in an organism, is a form of chemiluminescence.

The process involved in luminescence does not simply correspond to the reverse process of absorption in condensed matter. Nonradiative processes, e.g. a phonon-emission process, compete with the radiative process. Hence the decay rate of an excited state 1/τ is described by

where the two terms on the right-hand side represent the radiative and nonradiative decay rates, respectively. The luminescence efficiency or quantum efficiency η is defined by

If the radiative lifetime τR is shorter than the nonradiative lifetime τNR, luminescence is the main de-excitation process and luminescence spectroscopy is a powerful method for the investigation of the excited state. Time-resolved measurements introduced in Sect. 11.4.3 provide direct information on 1/τR. In many cases, nonradiative decay processes give rise to heating of the material. Therefore, photocalorimetric or photoacoustic spectroscopy is utilized to obtain information on the nonradiative decay processes [11.23].
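The competition between the two decay channels can be made concrete with a small sketch. It assumes the standard forms 1/τ = 1/τR + 1/τNR for the total decay rate and η = (1/τR)/(1/τR + 1/τNR) for the quantum efficiency; the lifetimes used are illustrative values only.

```python
def total_lifetime(tau_r, tau_nr):
    """Observed lifetime tau from 1/tau = 1/tau_R + 1/tau_NR."""
    return 1.0 / (1.0 / tau_r + 1.0 / tau_nr)

def quantum_efficiency(tau_r, tau_nr):
    """eta = radiative rate divided by total decay rate."""
    rate_r = 1.0 / tau_r
    rate_nr = 1.0 / tau_nr
    return rate_r / (rate_r + rate_nr)

if __name__ == "__main__":
    tau_r = 1.0e-9  # illustrative radiative lifetime, s
    for tau_nr in (9.0e-9, 1.0e-9, 1.0e-10):
        eta = quantum_efficiency(tau_r, tau_nr)
        tau = total_lifetime(tau_r, tau_nr)
        print(f"tau_NR = {tau_nr:.1e} s  eta = {eta:.3f}  tau = {tau:.2e} s")
```

Under these assumptions η equals τ/τR, so a measured decay time combined with a measured efficiency gives an estimate of the radiative lifetime.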

PL Spectroscopy

The experimental set-up for the PL measurement is shown in Fig. 11.12. The sample is excited with a laser or a lamp. The PL spectrum is obtained by using array detectors, e.g. a CCD, or by scanning the wavelength of the spectrometer with a PMT. The sample is usually mounted in a cryostat to cool it to liquid nitrogen or helium temperatures, because the nonradiative process is activated at higher temperature. Conversely, the temperature dependence of PL gives information on the nonradiative decay mechanism. The spectra obtained should be corrected to take into account the sensitivity of the detection system (the spectrometer and the CCD or PMT), while this correction is not required in the case of reflection and absorption measurements, in which the response function of the detection system is canceled in the calculation of I/I0 (see (11.1)). Reabsorption effects should also be taken into account [11.22] if the frequency region of the luminescence overlaps that of the absorption. Time-resolved PL spectroscopy provides the radiative decay time and direct information on the relaxation process in the excited states. The experimental set-up will be reviewed in Sect. 11.4.3.

PL excitation spectroscopy (PLE), in which the detection wavelength is fixed and the excitation wavelength is scanned, allows the absorption spectrum to be measured when direct transmission measurements are impossible because of very weak absorption or an opaque surface of the material. PLE spectroscopy is similar to ordinary absorption measurements but is subject to the condition that there exists a relaxation channel from the (higher) excited state to the emission state being monitored. Fluorescence line narrowing (FLN) or luminescence line narrowing is a high-resolution spectroscopic technique that uses laser excitation to select specific subpopulations optically from the inhomogeneously broadened absorption band of the sample, as shown in Fig. 11.23a,b [11.24]. One can obtain the homogeneous width using FLN spectroscopy (Sect. 11.2).

Focussed position dependence of exciton luminescence of ZnCdSe quantum dots observed at 2 K by micro-photoluminescence spectroscopy. The positions moved on a straight line are given in units of μm (after [11.29])

Optical Gain

Laser action arises from stimulated emission, while spontaneous emission prevents lasing. Using (11.43, 11.44, 11.51) the ratio between the rates of stimulated emission and spontaneous emission is calculated as

This ratio is less than unity if T is positive. Hence a negative temperature is required for the lasing. From (11.50) this negative temperature corresponds to N2 > N1, which is called population inversion.

If population inversion is realized, the incident light, called seed light, is amplified by stimulated emission. In the case that the seed light originates from the luminescence of the material itself, amplified spontaneous emission (ASE) appears, as shown in Fig. 11.13.

Optical gain is calculated using (11.44, 11.45) as an extension of Beerʼs law (11.1)

where g(ν) is a spectral function which describes the frequency spectrum of the spontaneous emission. Then, using the Einstein relation (11.51), we obtain

where I0 and I are the input and output light intensities, respectively. G(ν) is called the gain coefficient.

The population inversion can be obtained in the following ways

1. Optical pumping: This method is used in solid-state lasers (except LDs) and dye lasers.

2. Electric discharge: This method is used in gas lasers and in the flash lamps that optically pump solid-state lasers, e.g. the Nd:YAG laser.

3. Electron beam: Large excimer lasers are pumped with a large-volume electron beam.

4. Current injection: This method allows compact, robust and efficient laser devices (LDs).

Laser Configuration

A combination of the population-inverted medium and the optical cavity which gives optical feedback provides the laser oscillation, which is like an electronic oscillator. Thus the laser consists of a laser medium, a pumping source and an optical cavity. Figure 11.14 shows a schematic arrangement of a laser. ASE with the correct frequency and direction of propagation is reflected back and forth through the laser medium. One of the mirrors, called the output coupler, is partially transparent to extract the light within the cavity. The cavity acts as a Fabry–Pérot resonator, so that the cavity modes are formed inside the cavity. The mode separation is expressed by

where l is the cavity length. We see lasing in the frequency region in which the intensity is above the threshold, as shown in Fig. 11.15. As seen in the figure, several modes oscillate simultaneously, which is called multi-mode operation. The random phases between these laser modes may cause chaotic behavior of the output power. There are two ways to avoid this effect: single-mode operation, in which a single cavity mode is selected by introducing another interferometer within the cavity, and mode-locked operation, which is introduced in Sect. 11.4.2. Single-mode operation achieves very narrow line widths down to 1 Hz.
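To get a feeling for the numbers, the sketch below assumes the usual Fabry–Pérot mode separation Δν = c/(2nl), with n = 1 for an empty cavity, and estimates how many longitudinal modes fit inside a given gain bandwidth. The cavity length and bandwidth are illustrative values, and the mode count is only a rough estimate.

```python
C = 2.99792458e8  # speed of light, m/s

def mode_spacing(cavity_length, refractive_index=1.0):
    """Longitudinal mode separation c / (2 n l) in Hz."""
    return C / (2.0 * refractive_index * cavity_length)

def modes_within(bandwidth, cavity_length, refractive_index=1.0):
    """Rough count of whole mode spacings that fit inside the gain bandwidth."""
    return int(bandwidth // mode_spacing(cavity_length, refractive_index))

if __name__ == "__main__":
    length = 0.3         # illustrative cavity length, m
    bandwidth = 1.5e9    # illustrative above-threshold bandwidth, Hz
    print(f"mode spacing = {mode_spacing(length) / 1e6:.1f} MHz")
    print(f"modes within bandwidth ~ {modes_within(bandwidth, length)}")
```

A shorter cavity increases the spacing, which is one reason why compact cavities tend to support fewer simultaneous modes.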

Typical Lasers

Typical lasers are concisely summarized in the following. The lasers are classified depending on the laser media: gas, liquid and solid. Solid-state lasers are categorized into rare-earth metal lasers, transition-metal lasers and semiconductor lasers (LD). There are two laser operation modes: continuous wave (CW) and pulsed.

Gas lasers utilize atomic or molecular gases, as shown in Table 11.3. Though they are fixed-wavelength lasers in principle, multiple lines exist in molecular gas lasers and tunable operation is possible in CO2 lasers. Excimer lasers utilize an excited diatomic molecule (excimer), which is unstable in the ground state, and provide high-intensity pulses at UV wavelengths.

Dye lasers provide tunable operation, because dyes are organic molecules and have broad vibronic emission bands due to interaction with the solvent. Figure 11.16 shows the tuning range of typical dye lasers.

Solid-state lasers with transition-metal ions, as summarized in Table 11.4, also show broad emission bands (except the ruby laser) caused by the strong interaction between 3d electrons and phonons. For example, the Ti ion in sapphire provides the very wide tuning range shown in Fig. 11.16, and Ti:sapphire lasers are widely used, in particular, as ultrafast pulsed lasers (Sect. 11.4.2).

Solid-state lasers with rare-earth ions, as summarized in Table 11.5, work as fixed-wavelength lasers because of the narrow emission lines due to the weak interaction between 4f electrons and their environment. They are pumped with flash lamps or LDs and are themselves used for the optical pumping of tunable lasers.

Finally, semiconductor diode lasers, as summarized in Fig. 11.17, are nowadays most widely applied in tiny light sources for optical fiber communication, optical recording of CDs, DVDs, MOs, etc. Current injection is used for the laser pumping, which makes their combination with electronic circuitry feasible.

Scattering

Scattering is the phenomenon in which the incident light changes its wavevector or frequency. Scattering is called elastic if the frequency is unchanged, or inelastic if the frequency changes.

Elastic Scattering

This phenomenon occurs due to variation of the refractive index of the material. The scattering can be classified into two types depending on the size of the variation a as follows [11.18]

1. Rayleigh scattering (a ≪ λ): The probability (cross section) of Rayleigh scattering is proportional to 1/λ⁴.

2. Mie scattering (a ≥ λ): The wavelength dependence of the probability of Mie scattering is not simple, but it is approximately proportional to 1/λ² in the case of a ≈ λ. This phenomenon enables us to monitor the sizes of particles in air or in a transparent liquid.
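The two power laws quoted above can be compared with a minimal sketch. It treats the scattering probability as a pure power law in wavelength, which is only an approximation for Mie scattering, and the two wavelengths are illustrative choices for blue and red light.

```python
def relative_scattering(wavelength, reference_wavelength, exponent=4.0):
    """Scattering strength relative to the reference, assuming a 1/lambda^exponent law."""
    return (reference_wavelength / wavelength) ** exponent

if __name__ == "__main__":
    blue, red = 450.0, 650.0  # wavelengths in nm (illustrative)
    print(f"Rayleigh (1/lambda^4): blue/red = {relative_scattering(blue, red):.2f}")
    print(f"Mie, a ~ lambda (1/lambda^2): blue/red = {relative_scattering(blue, red, 2.0):.2f}")
```

The weaker wavelength dependence in the second case is what makes the spectral dependence of the scattered light a usable indicator of particle size.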

Inelastic Scattering

This phenomenon occurs due to fluctuation of the electric susceptibility of electrons or lattices in a material. The electric field E of the incident light and the polarization P of the material are described by (Sect. 11.1.4)

If the fluctuation of the electric susceptibility can be written by

the polarization of the material is described as

The first term corresponds to the Rayleigh scattering and the second term means that new frequency components with ω ± Ω, called Raman scattering, arise from the fluctuation. The down- and upshifted components are called the Stokes scattering and the anti-Stokes scattering, respectively.
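The appearance of the sidebands can be verified numerically. The sketch below assumes a cosine form for both the field, E = E0 cos ωt, and the susceptibility fluctuation, χ = χ0 + χ′ cos Ωt, so that P = χE; the symbol chi1 stands for χ′, and all amplitudes and frequencies are arbitrary illustrative values. The polarization is sampled over a window containing whole numbers of cycles and projected onto cosines at ω − Ω, ω and ω + Ω.

```python
import math

def polarization(t, omega, big_omega, chi0=1.0, chi1=0.2, e0=1.0):
    """P(t) = [chi0 + chi1 cos(Omega t)] E0 cos(omega t)."""
    return (chi0 + chi1 * math.cos(big_omega * t)) * e0 * math.cos(omega * t)

def cosine_amplitude(samples, cycles):
    """Amplitude of the cosine component with `cycles` whole periods in the window."""
    n = len(samples)
    return 2.0 / n * sum(p * math.cos(2.0 * math.pi * cycles * k / n)
                         for k, p in enumerate(samples))

def sideband_amplitudes(light_cycles=50, phonon_cycles=5, n=1024):
    """Amplitudes at omega - Omega, omega and omega + Omega (window length = 1)."""
    omega = 2.0 * math.pi * light_cycles
    big_omega = 2.0 * math.pi * phonon_cycles
    samples = [polarization(k / n, omega, big_omega) for k in range(n)]
    return {c: cosine_amplitude(samples, c)
            for c in (light_cycles - phonon_cycles, light_cycles,
                      light_cycles + phonon_cycles)}

if __name__ == "__main__":
    for cycles, amp in sideband_amplitudes().items():
        print(f"{cycles:3d} cycles: amplitude = {amp:.4f}")
```

The component at ω carries the amplitude χ0E0, while each sideband carries χ′E0/2, in line with the product-to-sum identity for cosines.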

In the case of the Raman scattering due to phonons, the Stokes (anti-Stokes) process corresponds to a phonon emission (absorption), as shown in Fig. 11.19. The scattering caused by acoustic phonons has a special name: Brillouin scattering. The frequency shift with respect to the incident light is called the Raman shift, and is determined by the phonon energy. In other words, the energy of the phonons or other elemental excitations can be obtained by Raman spectroscopy. Nowadays Raman spectroscopy is indispensable for material science and is applied to a huge number of materials [11.25].
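Raman shifts are commonly quoted in wavenumbers (cm⁻¹), so a conversion between laser wavelength, shift and scattered wavelength is often needed. The sketch below is a minimal helper for that conversion; the 514.5 nm excitation line and the 520 cm⁻¹ shift are illustrative inputs, and the function names are hypothetical.

```python
def wavenumber_cm(wavelength_nm):
    """Convert a vacuum wavelength in nm to a wavenumber in cm^-1."""
    return 1.0e7 / wavelength_nm

def scattered_wavelength_nm(laser_nm, shift_cm, stokes=True):
    """Wavelength of the Stokes (downshifted) or anti-Stokes (upshifted) line."""
    sign = -1.0 if stokes else 1.0
    return 1.0e7 / (wavenumber_cm(laser_nm) + sign * shift_cm)

def raman_shift_cm(laser_nm, scattered_nm):
    """Raman shift in cm^-1; positive for the Stokes line."""
    return wavenumber_cm(laser_nm) - wavenumber_cm(scattered_nm)

if __name__ == "__main__":
    laser, shift = 514.5, 520.0  # illustrative values
    print(f"Stokes line:      {scattered_wavelength_nm(laser, shift):.2f} nm")
    print(f"anti-Stokes line: {scattered_wavelength_nm(laser, shift, stokes=False):.2f} nm")
```

Because the shift is fixed in energy, the same phonon appears at different wavelength offsets for different excitation lines.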

Selection Rules for Raman Scattering

In the case of Raman or Brillouin scattering of phonons in crystals, energy and momentum conservation rules hold

where ωi and ki(ωs, ks) are the frequency and wavevector of the incident (scattered) photon and Ω and K are those of the phonon. The plus sign corresponds to the Stokes process, which is shown in Fig. 11.20 and the minus sign corresponds to the anti-Stokes process.

If the incident light is in the optical region (from IR to UV), its wavevector is negligibly small in comparison with the size of the Brillouin zone of the crystal. Hence, phonons with K ≈ 0 are usually observed in the Raman scattering.

In a crystal with inversion symmetry, the phonon modes which are observed in the Raman scattering, called Raman-active modes, are not infrared active (not observed in the infrared absorption), and vice versa. This is called the rule of mutual exclusion [11.26].

In general, the coefficient χ′ of the Raman scattering term in (11.61) is a tensor and is related to a Raman tensor R, which is determined by the symmetry of the crystal or molecule. The intensity of the Raman scattering I is proportional to

where ei and es are the polarization vectors of the incident and scattered light, respectively. The configuration for Raman spectroscopy is specified as ki(ei es)ks, and the allowed combinations of the polarizations can be found once the Raman tensor R is given. This is called the polarization selection rule [11.26].
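The polarization selection rule can be illustrated with a small sketch that evaluates |es · R · ei|² for real polarization vectors, assuming this is the quantity to which the Raman intensity is proportional. The tensor used below, with only xy and yx components, is a hypothetical example and does not refer to a specific crystal.

```python
def raman_intensity(e_i, raman_tensor, e_s):
    """Relative intensity |e_s . R . e_i|^2 for real polarization vectors."""
    r_dot_ei = [sum(row[j] * e_i[j] for j in range(3)) for row in raman_tensor]
    amplitude = sum(e_s[i] * r_dot_ei[i] for i in range(3))
    return amplitude ** 2

# Hypothetical Raman tensor with only xy/yx components (the value D is arbitrary).
D = 1.0
R_XY = [[0.0, D, 0.0],
        [D, 0.0, 0.0],
        [0.0, 0.0, 0.0]]

X = (1.0, 0.0, 0.0)
Y = (0.0, 1.0, 0.0)

if __name__ == "__main__":
    print("parallel polarizations (x, x):", raman_intensity(X, R_XY, X))
    print("crossed polarizations  (x, y):", raman_intensity(X, R_XY, Y))
```

For this tensor the mode is silent in the parallel configuration and appears only in the crossed one, which is how polarized measurements help to assign mode symmetries.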

Electronic Raman Scattering

Electronic transitions as well as phonons can be observed in Raman scattering. This is called electronic Raman scattering. It is a very useful probe for plasmons in semiconductors and magnons in magnetic materials, and for determining the superconducting gap and the symmetry of the order parameter of superconductors. In strongly correlated electron systems in particular, a new type of elementary excitation was found by this technique [11.27].

Resonant Raman Scattering

If the frequency of the incident light ωi approaches the resonance of the material ω0, the scattering probability is enhanced and the process is called resonant Raman scattering. In this case violation of the selection rules and multiple-phonon scattering occur. In the just-resonant case (ωi ≈ ω0), the discrimination between the scattering, which is a coherent process, and the luminescence, an incoherent process, is a delicate problem. Time-resolved measurement of resonant Raman scattering helps to clarify this problem and provides information on the relaxation process of the material [11.28].

Experimental Set-up

The configuration for Raman spectroscopy is similar to that used for luminescence spectroscopy, but a spectrometer with less stray light is required, because the strong incident laser light or Rayleigh scattering is located near the signal light. To reduce the stray light, a double or triple spectrometer is usually used in place of the single spectrometer in Fig. 11.12. An alternative method is to cut the laser light with a very narrow-line sharp-cut filter placed just in front of the entrance slit of a single spectrometer. This kind of filter is called a notch filter, which is a kind of dielectric multilayer interference filter.

Microspectroscopy

In nanoscience and nanotechnology the optical spectroscopic study of the individual properties of nanostructured semiconductor materials or biomolecules with ultrahigh spatial resolution is useful. This is achieved by avoiding the inhomogeneity caused by differences in the size, shape or surrounding environment. This kind of spectroscopy is called single-quantum-dot or single-molecule spectroscopy. In this section, we will introduce the principles and the application of three kinds of microspectroscopic methods based on conventional microscopy, near-field optical microscopy and cathodoluminescence spectroscopy with the use of scanning electron microscopy.

Optical Microscopy

Since light has a wave nature and suffers from diffraction, the spatial resolution of an optical microscope cannot go below approximately a half of the optical wavelength: the so-called diffraction limit. The typical set-up of microphotoluminescence spectroscopy is illustrated in Fig. 11.21. A laser beam for the photoexcitation source is focused on a sample surface with a spot diameter of about 1 μm through an objective lens with a high magnification factor. The luminescence from the sample is collected by the same objective lens and passed through an achromatic beam splitter to separate the luminescence from the scattered light of the excitation laser, and the luminescence image is focused onto a CCD camera or the luminescence spectrum is analyzed through the combination of spectrometer and intensified CCD camera.

The principle of single-quantum-dot or single-molecule spectroscopy is illustrated in Fig. 11.22. For example, the luminescence from the ensemble of quantum dots of semiconductors having a size distribution shows the inhomogeneous spectral broadening due to the size-dependent luminescence peak energy, as shown in Fig. 11.22a. If the spot size of the focused point is comparable to the mean separation distance between the quantum dots, the number of quantum dots detected by the objective lens is limited and the sharp luminescence lines with discrete photon energies are detected, as shown in Fig. 11.22c. If the distribution of the dots is dilute enough, one can detect a single dot, as shown in Fig. 11.22b where the line width is limited by intrinsic homogeneous broadening corresponding to the inverse of the phase relaxation time of the excited state.

As an example of laser microphotoluminescence spectroscopy, Fig. 11.23 shows the result of the ZnCdSe quantum dots grown on a ZnSe substrate [11.29]. Although the diameter of the quantum dots is 10 nm on average and has a wide size distribution, the microphotoluminescence spectra show the spiky structures that critically depend on the spot position of observation. From the top to the bottom spectra, the spot position is shifted successively by 10 μm distance. The bottom spectrum is taken at the original position to check the reproducibility, from which one notes that the change in the spectra comes from fluctuation not in time but in position.

As another example of single-molecule spectroscopy, Fig. 11.24 illustrates the microluminescence excitation spectroscopy of light-harvesting complexes LH2, which act as effective light antennas in photosynthetic purple bacteria, at 2 K [11.30]. The complexes contain two types of ring structure of bacterio-chlorophyll molecules (BChl a) with 9 and 18 molecules stacked against each other. Since the 9- and 18-molecule rings have their absorption bands at 800 and 860 nm, respectively, the ensemble of LH2 complexes, as illustrated in curve (a), shows two broad peaks with inhomogeneous broadening caused by differences in the surrounding environment. On the other hand, when the complexes are dilutely dispersed in a polyvinyl acetate (PVA) polymer film, individual complexes are found to show different spectra, as illustrated in curves (b)–(f). Here sharp structures are found around 800 nm, while the structures around 860 nm remain broad. The former result indicates localization of the photoexcitation energy at one molecule, while the latter indicates delocalization over the ring.

Comparison of fluorescence-excitation spectra for an ensemble of LH2 complexes (a), and for several individual LH2 complexes (b–f) of photosynthetic bacteria at 1.2 K (after [11.30])

Near-field Optical Microscopy

In order to realize a spatial resolution beyond the diffraction limit, one can illuminate the sample with an extremely close light source: an evanescent wave with large wavevectors, produced by an aperture smaller than the wavelength of light. Here, the lateral resolution is mainly limited by the aperture size of the light source, if the distance between the light source and the sample surface is much smaller than the wavelength of light. This kind of microscopy is called near-field optical microscopy. A schematic diagram of a near-field microscope is illustrated in Fig. 11.25. One end of the optical fiber is sharpened by melting or chemical etching and is used as a microprobe tip that serves not only as the optical tip but also as the atomic-force tip. To avoid leakage of light from the side of the tip end, the tip end is coated with Al or Au. The distance of the probe tip end from the surface of the sample is kept constant to within a few tens of nanometers using the principle of the atomic-force microscope (AFM). Laser light is sent through the optical fiber, and the light emitted from the ultra-small aperture illuminates the sample surface with a spot size similar to the aperture size (illumination mode). The transmitted or luminescent light from the sample is collected by an objective lens and detected by a photodetector such as a photomultiplier. For luminescence measurements a band-pass filter or a monochromator is placed before the photodetector. In some cases, to improve the spatial resolution, the reflected or luminescent light is again collected by the same probe tip (illumination/collection mode). The lateral position of the tip end or the sample is scanned in the X–Y plane, and the two-dimensional intensity image of the optical response of the sample can be recorded together with the topographical image of the sample surface. The practical spatial resolution achievable with the optical fiber tip end is considered to be a few tens of nanometers.

Figure 11.26 illustrates the example of images of the double monolayer of a self-organized array of polystyrene microparticles with a diameter of 1 μm on a glass substrate [11.31]. Figure 11.26a shows the AFM image of the sample surface where the close packed hexagonal array is observed. The near-field transmission image using light from a 514.5 nm Ar ion laser is shown in Fig. 11.26b. Inside one microparticle indicated by a white circle, one can see seven small bright spots with a characteristic pattern. The spot size is about 150 nm, which is restricted by the aperture size. Since the distance between these spots depends on the wavelength of light, the pattern represents nanoscale field distribution of a certain electromagnetic wave mode standing inside the particle double layer.

4.5 μm × 4.5 μm images of a double monolayer film of self-organized 1.0-μm polystyrene spherical particles on a glass substrate: (a) AFM topographic image, and (b) SNOM optical transmission image (after [11.31])

Another example of monitoring the spatial distribution of the wave function of electronic excited states is shown in Fig. 11.27 [11.32]. The near-field luminescence images of confined excitons and biexcitons in GaAs single quantum dots are observed using the illumination/collection mode with a probe tip with an aperture size of less than 50 nm. The size of the image of the exciton is found to be larger than that of the biexciton, reflecting the difference in effective sizes for the translational motion of the electronically excited quasi-particles.

High-resolution photoluminescence SNOM images of (a) X – exciton state, and (b) XX – biexciton state for a single GaAs quantum dot. The corresponding photoluminescence spectrum is also shown in (c) (after [11.32])

Cathodoluminescence (SEM-CL)

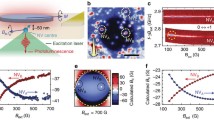

Cathodoluminescence (CL) spectroscopy is one of the techniques that can be used to obtain extremely high spatial resolution beyond the optical diffraction limit. Cathodoluminescence refers to luminescence from a substance excited by an electron beam, which is usually measured by means of the system based on a scanning electron microscope (SEM), as illustrated in Fig. 11.28. The electron beam is emitted from an electron gun of the SEM, collected by electron lenses and focused on a sample surface. The luminescence from the sample is collected by an ellipsoidal mirror, passed through an optical fiber and sent to a spectrometer equipped with a CCD camera. Lateral resolutions less than 10 nm are available in CL measurement, since the de Broglie wavelength of electrons is much shorter than light wavelengths. Moreover, energy- and wavelength-dispersive x-ray spectroscopy can be carried out simultaneously due to the high energy excitation of the order of keV. However, there are some difficulties in CL spectroscopy that are common to the observation of SEM images. A tendency toward charge accumulation at the irradiated spot requires that specimens have an electric conductivity, since it induces an electric field which disturbs the radiative recombination of carriers. In addition, incident electrons with high kinetic energy often give rise to degradation of the sample. In CL spectroscopy, it is important to recognize those properties and to treat samples with a metal coating if needed.
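The statement about the de Broglie wavelength can be quantified with a short sketch. It uses the relativistic momentum of an electron accelerated through a potential difference V, p = √(2meV(1 + eV/2mc²)), and λ = h/p; the accelerating voltages are illustrative values in the range typical of SEM operation.

```python
import math

H = 6.62607015e-34           # Planck constant, J s
E_CHARGE = 1.602176634e-19   # elementary charge, C
E_MASS = 9.1093837015e-31    # electron rest mass, kg
C = 2.99792458e8             # speed of light, m/s

def electron_wavelength(voltage):
    """Relativistic de Broglie wavelength (m) after acceleration through `voltage` volts."""
    energy = E_CHARGE * voltage  # kinetic energy in joules
    momentum = math.sqrt(2.0 * E_MASS * energy
                         * (1.0 + energy / (2.0 * E_MASS * C ** 2)))
    return H / momentum

if __name__ == "__main__":
    for kv in (2.0, 5.0, 10.0):
        lam = electron_wavelength(kv * 1.0e3)
        print(f"{kv:4.1f} kV: lambda = {lam * 1e12:.2f} pm")
```

The resulting wavelengths are of the order of ten picometers, several orders of magnitude below visible wavelengths.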

Figure 11.29 illustrates an example of CL measurement on a system based on SEM. The spatial distribution of the spectrally integrated CL intensity as well as the SEM image are obtained, as shown in Fig. 11.29a,b. The sample is ZnO:Zn, which corresponds to ZnO with many oxygen vacancies near the surface and is a typical green phosphor. The CL image consists of 100 × 100 pixels, and the brightness of each pixel shows the CL intensity under excitation within an area of 63 × 63 nm². The CL intensity differs among spatial positions at the nanoscale. The CL spectrum for each pixel can also be derived from this measurement, and its features vary with position.

The penetration depth of incident electrons under electron-beam excitation is controllable by changing the accelerating voltage [11.33]. Electrons with high kinetic energy are able to penetrate deeper than photons which penetrate at most up to the depth corresponding to the reciprocal of the absorption coefficient, and therefore internal optical properties of substances can be examined in CL measurement. An example of accelerating voltage dependence of CL spectra is illustrated in Fig. 11.30. The sample is once again ZnO:Zn, in which free-exciton luminescence by photoexcitation is not observed at room temperature, since excitons are separated into electrons and holes due to the electric field in the surface depletion layer [11.34]. For an accelerating voltage of 2 kV, at which the penetration depth of incident electrons is comparable to the reciprocal of the absorption coefficient of photons, the CL spectrum does not show any structure in the exciton resonance region. On the other hand, the free exciton luminescence appears for an accelerating voltage of 5 kV, at which the penetration depth of the incident electrons is estimated to be about five times larger than that of photons. The luminescence is highly enhanced for an accelerating voltage of 10 kV, at which incident electrons are considered from the estimation of the penetration depth to spread throughout the electron-injected ZnO:Zn particle. Although the total number of carriers in the particle increases with the accelerating voltage, the change in carrier density should be small because of the increase in excitation volume, i.e. nonlinear enhancement of the luminescence is not attributed to any high density effects. These facts indicate that injected electrons penetrate into the internal region where many excitons can recombine radiatively due to the lower concentration of oxygen vacancies, and the width of the depletion layer in the particle is of the order of the reciprocal of the absorption coefficient.

The electric field in the depletion layer can be screened by increasing the density of photoexcited carriers. However, photoexcitation with high carrier density also induces strong nonlinear optical response near the exciton resonance region, such as exciton–exciton scattering and electron–hole plasmas [11.35]. In CL measurements, nonlinear effects do not appear in ZnO:Zn, since the carrier density under electron-beam excitation in the system based on SEM is much lower than that under photoexcitation using pulsed lasers. The free exciton luminescence does not appear with low accelerating voltage and low beam current, as shown in Fig. 11.30, whereas it can be observed with larger beam current. In CL spectroscopy, the internal electric field in the depletion layer is weakened with high efficiency and the free exciton luminescence near the surface can be observed without high density effects, since electrons are directly supplied into the oxygen vacancies, which are a source of the internal field.

Magnetooptical Measurement

Faraday and Kerr Effects

A well-known magnetooptical effect is the rotation of the polarization plane of an electromagnetic wave propagating through matter under the influence of a magnetic field or the magnetization of the medium [11.36]. This effect is called the Faraday effect, named after its discoverer Michael Faraday [11.37]. It is phenomenologically explained as the difference in refractive index between right and left circular polarizations. The angle of optical rotation is called the Faraday rotation angle. In the case of a low applied magnetic field the Faraday rotation angle θF is proportional to the sample thickness l and the applied magnetic field H. Thus θF is written as

where V is called the Verdet constant. A similar optical rotation appears even without a magnetic field in an optically active medium, e.g. a saccharide solution. Furthermore, the magnetic Kerr effect is the counterpart of the Faraday effect for reflected light [11.38]. This effect is ascribed to the phase difference between right and left circular polarizations when the electromagnetic wave is reflected at the surface of a magnetic material.

For practical use the Faraday effect is utilized for imaging of magnetic patterns. These magnetic patterns have been experimentally studied by various techniques:

1. moving a tiny magnetoresistive or Hall-effect probe over the surface,

2. making powder patterns with either ferromagnetic or superconducting (diamagnetic) powders (Bitter decoration technique),

3. using the Faraday magnetooptic effect in transparent magnetic materials in contact with the surface of a superconducting film as a magnetooptic layer (MOL).

Of these methods, Faraday microscopy (method 3 above) is the most useful for obtaining a high-resolution image of the magnetic pattern [11.39,40]. A schematic drawing of the Faraday imaging technique is shown in Fig. 11.31.

Linearly polarized light enters the MOL, where the Faraday effect occurs, and is reflected at the mirror layer. In areas without flux, no Faraday rotation takes place. This light cannot pass through the analyzer, which is set in a crossed position with respect to the polarizer, so the superconducting regions stay dark in the image. In regions where flux penetrates, on the other hand, the polarization plane of the incident light is rotated by the Faraday effect so that some light passes through the crossed analyzer, and the normal areas are imaged brightly.

Figure 11.31 shows the case of a nonzero reflection angle, whereas in the experiment normally incident light is generally used (Faraday configuration).

The Faraday rotation angle is converted into light intensity levels. The sample surface is imaged onto a CCD detector array. With crossed polarizer and analyzer, the intensity of the signal from the CCD detector is

$$I(r, \lambda, B) = I_0(r, \lambda)\,\sin^2\!\left[\theta_{\mathrm{F}}(r, \lambda, B)\right] + I_1(r, \lambda) ,$$

where r is the spatial coordinate on the CCD surface, λ is the wavelength of the incident light, B is the applied magnetic field, θF is the Faraday angle, and I0 is the light intensity reflected by the sample. I1 is the background signal ascribed to the dark signal of the CCD and residual transmission through the crossed polarizer and analyzer. When the analyzer is uncrossed by an angle θ,

$$I(r, \lambda, B) = I_0(r, \lambda)\,\sin^2\!\left[\theta_{\mathrm{F}}(r, \lambda, B) + \theta\right] + I_1(r, \lambda) .$$

The angular position θ of the analyzer should be adjusted to obtain the best contrast between superconducting (θF = 0) and normal (θF ≠ 0) areas. By changing the sign of θ, normal areas can appear brighter or darker than superconducting areas. In our experiment the angle θ is set to yield black in normal areas and gray in superconducting areas.
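
The effect of the analyzer offset on the image contrast can be sketched numerically. The Python example below assumes a Malus-law signal of the form I = I0 sin²(θF + θ) + I1, which is consistent with the quantities defined in this section; the function names, the 1° Faraday rotation and the 1 % background are hypothetical values used only for illustration.

```python
import math


def detected_intensity(theta_f_deg, analyzer_deg=0.0, i0=1.0, i1=0.0):
    """Assumed Malus-law signal: I = I0 * sin^2(theta_F + theta) + I1."""
    angle = math.radians(theta_f_deg + analyzer_deg)
    return i0 * math.sin(angle) ** 2 + i1


def contrast(theta_f_deg, analyzer_deg, i0=1.0, i1=0.0):
    """Signed contrast between normal (theta_F != 0) and superconducting
    (theta_F = 0) areas; positive means normal areas look brighter."""
    i_normal = detected_intensity(theta_f_deg, analyzer_deg, i0, i1)
    i_super = detected_intensity(0.0, analyzer_deg, i0, i1)
    total = i_normal + i_super
    return 0.0 if total == 0.0 else (i_normal - i_super) / total


# Hypothetical values: 1 deg of Faraday rotation, 1 % background signal.
THETA_F_DEG = 1.0
BACKGROUND = 0.01

for analyzer_deg in (-2.0, 0.0, 2.0):
    c = contrast(THETA_F_DEG, analyzer_deg, i1=BACKGROUND)
    print(f"analyzer offset {analyzer_deg:+.1f} deg -> contrast {c:+.3f}")
```

Under these assumptions a positive offset makes the normal areas brighter than the superconducting ones and a negative offset makes them darker, which corresponds to the sign reversal described in the text.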

Application to Magnetic Flux Imaging

Experimental Set-up

Magnetooptical imaging is performed using a pumped liquid-helium immersion-type cryostat equipped with a microscope objective. This objective, with a numerical aperture of 0.4, is placed in the vacuum part of the cryostat and can be controlled from outside. The samples are studied in a magnetic field applied by external coils. The optical set-up is similar to a reflection polarizing microscope, as shown in Fig. 11.32. Before measurement the samples are zero-field cooled to 1.8 K. The indium-with-QWs sample is illuminated with linearly polarized light from a Ti:sapphire laser through a rotating diffuser, which removes laser speckle. For the lead-with-EuS sample a tungsten lamp with an interference filter is used as the light source. Reflected light from the sample passes through a crossed or slightly uncrossed analyzer and is focused onto the CCD camera. The spatial resolution of 1 μm is limited by the numerical aperture of the microscope objective.
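
The order of magnitude of this resolution limit can be checked with a short Python sketch. It assumes the Rayleigh criterion (0.61 λ/NA), which the text does not name explicitly, and an illumination wavelength of 700 nm, which is an assumed value for this estimate; only the numerical aperture of 0.4 is taken from the set-up description.

```python
def rayleigh_resolution_um(wavelength_um, numerical_aperture):
    """Diffraction-limited resolution from the Rayleigh criterion (assumed)."""
    return 0.61 * wavelength_um / numerical_aperture


NUMERICAL_APERTURE = 0.4   # from the set-up description
WAVELENGTH_UM = 0.7        # assumed illumination wavelength (700 nm)

resolution = rayleigh_resolution_um(WAVELENGTH_UM, NUMERICAL_APERTURE)
print(f"estimated resolution: {resolution:.2f} um")
```

With these inputs the estimate is close to 1 μm, in line with the resolution quoted for the microscope objective.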

Magnetooptic Layers

Conventional magnetooptic layers

Typical conventional MOLs are thin layers of Eu-based materials (Eu chalcogenides, e.g. EuS and EuF2 mixtures, or EuSe) and doped yttrium iron garnet (YIG) films [11.41]. The Eu-based layers are usable up to the critical temperature of the ferromagnetic–paramagnetic transition (≈15–20 K) because their Verdet constants decrease with increasing temperature.

Since EuS undergoes ferromagnetic ordering below Tc ≈ 16.3 K, a mixture of EuS with EuF2 is preferable. EuF2 stays paramagnetic down to very low temperatures, so the ordering temperature of the mixture can be tuned through the EuS:EuF2 ratio. However, there are several problems: preparation is difficult because of the difference in melting temperatures, and a coevaporation technique is needed.

Single-component EuSe layers have subsequently been used because, even in the bulk, EuSe is paramagnetic down to 4.6 K and has a larger Verdet constant. Below 4.6 K EuSe becomes metamagnetic; however, the reappearance of magnetic domains in the EuSe layers is not seen down to 1.5 K. A remaining problem is the toxicity of Se compounds.

On the other hand, owing to their high transition temperature (Curie temperature), bismuth- and gallium-doped yttrium iron garnets (YIG) have been developed and used for the study of high-Tc superconductors. Their disadvantage is that they show ferrimagnetic domains; however, these MOLs have been developed further by the introduction of ferrimagnetic garnet films with in-plane anisotropy. With such films the optical resolution is about 3 μm, but direct observation of the magnetic flux patterns is possible and the advantages of the garnet films, i.e. high magnetic field sensitivity and a large temperature range, are retained.

In general this kind of MOL has the drawback of poor spatial resolution because of its thickness of several micrometers. Furthermore, self-magnetic ordering, which may modify the flux distributions in superconducting samples, may limit its use. However, it was recently reported that an optimized ferrite garnet film allowed the observation of single flux quanta in superconducting NbSe2 [11.42].

Novel magnetooptic layers

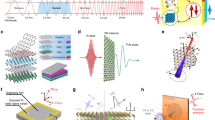

In this section we describe an alternative type of MOL [11.39,40] based on the semimagnetic semiconductor (SMSC) Cd1–xMnxTe, also called a diluted magnetic semiconductor (DMS). It consists of Cd1–xMnxTe quantum wells (QWs) embedded in a semiconductor–metal optical cavity. It is well known that SMSCs exhibit a large Faraday rotation, mainly due to the giant Zeeman splitting of the excitonic transition ascribed to sp–d exchange interactions between the spins of the magnetic ions and the band electron spins. The greatest advantage is the absence of self-magnetic ordering, owing to the paramagnetic behavior of the Mn ions. The MOL therefore cannot modify the magnetic flux patterns of the intermediate state of type-I superconductors. This MOL has several other advantages. The Faraday rotation is easily increased by forming an optical cavity (metal/semiconductor/vacuum) with a thickness of (2n + 1)λ/4, inside which multiple reflections of the light take place. Moreover, a wedged structure is constructed so that the cavity thickness can be matched to the desired wavelength. The highest spatial resolution is obtained when the superconducting film is evaporated directly onto the MOL: the smaller the distance between the MOL and the sample, the smaller the stray-field effect and the better the magnetic imaging.
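
The cavity thickness condition (2n + 1)λ/4 can be tabulated with a few lines of Python. This sketch assumes that λ denotes the wavelength inside the semiconductor, i.e. the vacuum wavelength divided by the refractive index; the vacuum wavelength and refractive index used below are hypothetical round numbers, not parameters of the actual structure.

```python
def cavity_thicknesses_nm(vacuum_wavelength_nm, refractive_index, orders):
    """Thicknesses (2n + 1) * lambda / 4, with lambda taken inside the medium.

    Interpreting lambda as the in-medium wavelength is an assumption.
    """
    wavelength_in_medium = vacuum_wavelength_nm / refractive_index
    return [(2 * n + 1) * wavelength_in_medium / 4.0 for n in orders]


# Hypothetical inputs for illustration only.
VACUUM_WAVELENGTH_NM = 800.0
REFRACTIVE_INDEX = 2.0

for n, d in enumerate(cavity_thicknesses_nm(VACUUM_WAVELENGTH_NM,
                                            REFRACTIVE_INDEX, range(4))):
    print(f"n = {n}: thickness = {d:.1f} nm")
```

Because successive orders are spaced by half an in-medium wavelength, a gentle wedge in the layer thickness is enough to bring some position on the sample into resonance at a chosen wavelength.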

In addition to these ideas, which were already proposed for the conventional MOL (EuSe) [11.41], the use of QWs has further strong points: low absorption, easy adjustment of the balance between absorption and Faraday rotation through the number of QWs, and the possibility of having a thin active layer (the QWs) in a thick MOL, which preserves good spatial resolution. When a Cd1–xMnxTe QW is inserted in an optical cavity, the Faraday rotation can be further increased by using a Bragg structure, that is, by placing the QWs at antinodes of the electric field in the optical cavity.

To make an optical cavity, Al (or the superconductor itself, if it is a good reflector) should be evaporated on top of the cap layer as a back mirror. To obtain the largest Faraday rotation, a minimum of the reflectivity spectrum has to be matched with the QW transition; this is the resonance condition. However, the reflectivity, which decreases at the QW transition when the resonance condition is fulfilled, has to be kept at a level that is compatible with a good signal-to-noise ratio. Therefore an optimum number of QWs has to be found in multi-quantum-well structures. The Mn composition of the QWs also has to be optimized, since it governs not only the Zeeman splitting of the excitonic transition but also the linewidth.

The decay of the magnetization of the Mn ions in SMSCs is known to be fast, in the subnanosecond range, since it is governed by spin–spin relaxation rather than by spin–lattice relaxation [11.43,44]. This opens the way for time-resolved imaging studies with good temporal resolution, e.g. the study of the dynamics of flux penetration. On the other hand, there are also problems in fabrication. It is difficult to remove the GaAs substrate by chemical etching while preserving the fragile layers. Furthermore, the chemical etching solution reacts strongly with some metals, e.g. lead.

Lead with europium sulfide magnetooptic layers

Since lead reacts strongly with the chemical etching solution used to remove the GaAs substrate from the SMSC sample, we tried to use EuS as a MOL. The sample is shown in Fig. 11.33.

The thickness of the EuS MOL, fabricated by Joule-effect evaporation on a 0.4 mm glass substrate, is 145 ± 15 nm, hence it is thin enough for good spatial resolution. A 150-nm-thick Al layer is evaporated on the EuS MOL as a mirror in order to get high reflectivity. The EuS MOL is pressed onto Pb with a weight and left overnight.

A typical reflectivity spectrum and Faraday angle curve of the EuS MOL are displayed in Fig. 11.34. The Ti:sapphire laser is tuned to 700 nm to obtain both sufficient reflected light and a large Faraday rotation angle from the sample. An EuS MOL might be expected to disturb the flux pattern, but no self-magnetic domains could be observed in this EuS sample, probably because the layer consists of a mixture of EuS and EuO. Figure 11.35 shows an image of the magnetic flux pattern at the surface of a 120-μm-thick superconducting lead film in an applied magnetic field of 20 mT. The temperature is 2 K, much lower than the critical temperature of lead (7.18 K). The critical field of lead at 2 K is Hc(2 K) = 74.1 mT.

Fig. 11.35 Image of the magnetic flux patterns at the surface of 120-μm-thick superconducting Pb revealed with an EuS magneto-optical layer in an applied magnetic field of 20 mT (h = 0.270). Normal and superconducting domains appear in black and gray, respectively. The image size is 233 μm × 233 μm. The temperature is T = 2 K (after T. Okada, unpublished)

The raw image has to be processed in order to correct for intensity fluctuations of the reflected light caused by thickness fluctuations in the MOL and for the sensitivity of the CCD detector. In order to obtain an intensity level proportional to the Faraday angle, the gray level of each pixel should be calculated as

where $I^{H}_{\alpha}$ is the raw gray level obtained for an applied field H and an analyzer angle α, and θ is the Faraday angle. The quality of the image is further improved by Fourier-transform filtering, but the magnetic contrast is not very good because the contact between lead and the MOL may not be as good as for an evaporated metallic sample.
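
To illustrate how a combination of raw gray levels can yield a quantity proportional to the Faraday angle, the following Python sketch uses one possible normalization: the differential ratio of two images recorded at analyzer angles +α and −α. This particular ratio is an assumption made for illustration and is not necessarily the expression used by the authors. It further assumes a Malus-law raw level I0 sin²(θ + α), a negligible background and small Faraday angles; all function names and numbers are hypothetical.

```python
import math


def raw_level(theta_f, alpha, i0, i1=0.0):
    """Assumed Malus-law raw gray level; angles in radians."""
    return i0 * math.sin(theta_f + alpha) ** 2 + i1


def normalized_level(level_plus, level_minus):
    """Differential ratio of raw levels taken at analyzer angles +alpha, -alpha."""
    return (level_plus - level_minus) / (level_plus + level_minus)


def estimate_faraday_angle(level_plus, level_minus, alpha):
    """Small-angle estimate theta_F ~ (tan(alpha) / 2) * ratio, valid for I1 = 0."""
    return 0.5 * math.tan(alpha) * normalized_level(level_plus, level_minus)


ALPHA = math.radians(20.0)       # hypothetical analyzer offset
THETA_TRUE = math.radians(0.5)   # hypothetical Faraday angle
REFLECTIVITIES = (0.5, 1.0, 2.0)  # hypothetical local values of I0

# The same Faraday angle seen through three different local reflectivities.
estimates = []
for i0 in REFLECTIVITIES:
    plus = raw_level(THETA_TRUE, ALPHA, i0)
    minus = raw_level(THETA_TRUE, -ALPHA, i0)
    estimates.append(estimate_faraday_angle(plus, minus, ALPHA))

for i0, est in zip(REFLECTIVITIES, estimates):
    print(f"I0 = {i0:.1f}: estimated theta_F = {math.degrees(est):.4f} deg")
```

In this model the local reflected intensity I0 cancels in the ratio, so thickness-induced brightness variations of the MOL no longer appear in the processed image.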

Indium with quantum-well magnetooptic layers

The sample consists of an indium layer as the superconducting material and a Cd1–xMnxTe/Cd1–yMgyTe heterostructure as the MOL. The structure is sketched in Fig. 11.36 [11.40].

The semiconductor heterostructure was grown by molecular beam epitaxy. The Cd0.85Mg0.15Te buffer was deposited on a (001) GaAs substrate without rotation of the sample holder, resulting in a slight gradient of both the thickness of the buffer and its refractive index. The buffer was followed by three 10-nm Cd0.94Mn0.06Te QWs separated by 55.8-nm-thick Cd0.85Mg0.15Te barriers. A 10-μm-thick indium layer was then evaporated directly on top of the 61.7-nm Cd0.85Mg0.15Te cap layer. The MOL is designed as an optical cavity, and indium serves both as the superconducting layer and as the cavity back mirror. The first and third QWs are located near antinodes of the electric field in the cavity in order to enhance the Faraday rotation. The indium-covered side was glued onto a glass plate and the GaAs substrate was removed by mechanical thinning and selective chemical etching. For Faraday microscopy the spatial resolution was verified to be 1 μm, with a magnetic resolution of 10 mT; the usable temperature range should extend up to 20 K.

A typical reflectivity spectrum and Faraday angle curve are displayed in Fig. 11.37. The reflectivity spectrum presents an interference pattern associated with the metal/semiconductor/vacuum optical cavity. This pattern shifts spectrally when the illuminating spot is scanned along the sample surface, following the thickness variation of the cavity. The maximum Faraday angle is observed when a minimum of reflectivity is matched with the QW transition energy (the cavity resonance condition). The peak Faraday angle was found to vary linearly with the applied magnetic field H. The measured slope equals 54.4° T−1 at the QW (e1-hh1) exciton transition. Figure 11.38 shows an intermediate-state structure at the surface of the indium superconducting layer obtained at T = 1.9 K in an applied magnetic field of 6.3 mT. The critical field of indium at 1.9 K is 19.4 mT.
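
The size of the rotation implied by the measured slope can be estimated with a short Python sketch. It simply applies the linear relation with the quoted slope of 54.4° per tesla to the two field values mentioned in this paragraph; treating the critical field as the local field inside the normal domains is the usual textbook picture of the intermediate state and is an assumption here, as are the function and constant names.

```python
SLOPE_DEG_PER_TESLA = 54.4  # measured slope quoted in the text


def peak_faraday_angle_deg(field_mt):
    """Peak Faraday angle for a field given in millitesla (linear regime)."""
    return SLOPE_DEG_PER_TESLA * field_mt * 1.0e-3


APPLIED_FIELD_MT = 6.3    # applied field for the indium image
CRITICAL_FIELD_MT = 19.4  # critical field of indium at 1.9 K

angle_applied = peak_faraday_angle_deg(APPLIED_FIELD_MT)
angle_critical = peak_faraday_angle_deg(CRITICAL_FIELD_MT)
print(f"at the applied field:  {angle_applied:.3f} deg")
print(f"at the critical field: {angle_critical:.3f} deg")
```

Both angles are of the order of one degree or less, which indicates why a slightly uncrossed analyzer and subsequent image processing are helpful for obtaining good contrast.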

Fig. 11.38 Image of the magnetic flux patterns at the surface of a 10-μm-thick superconducting In film revealed with the Cd1–xMnxTe QWs structure as the magneto-optical layer in an applied magnetic field of 6.3 mT (h = 0.325). Normal and superconducting domains appear in black and gray, respectively. The edge of the indium film can be seen on the right-hand side of the image, where the flux pattern disappears. The image size is 527 μm × 527 μm. The analyzer was uncrossed by α = 20° with respect to the polarizer. The temperature is T = 1.9 K (after T. Okada, unpublished)
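
The values of h given in the figure captions can be reproduced from the fields quoted in the text, assuming that h denotes the reduced field, i.e. the applied field divided by the critical field at the measurement temperature. The following Python sketch performs this check; the function name is hypothetical.

```python
def reduced_field(applied_mt, critical_mt):
    """Reduced field h = H / Hc(T), assuming this is the meaning of h."""
    return applied_mt / critical_mt


# Values quoted in the text (fields in mT).
h_lead = reduced_field(20.0, 74.1)    # Pb at 2 K
h_indium = reduced_field(6.3, 19.4)   # In at 1.9 K

print(f"lead:   h = {h_lead:.3f}")
print(f"indium: h = {h_indium:.3f}")
```

Both results agree with the captions to three decimal places, which supports reading h as H/Hc.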

Black and gray areas correspond to the normal and superconducting states, respectively. The intricate flux pattern results from the competition between long-range repulsive magnetic interactions between normal zones and short-range interactions due to the positive interfacial energy between normal and superconducting areas [11.45,46].

In the same way as for the lead sample, the raw images were processed in order to eliminate intensity fluctuations of the reflected light due to thickness inhomogeneities of the MOL and the sensitivity of the CCD detector. The image quality is clearly better than that of Fig. 11.35.

Nonlinear Optics and Ultrashort Pulsed Laser Application

Nonlinear optical effects with lasers are utilized in frequency conversion, optical communication (Sect. 11.5) and spectroscopy of materials. This section deals with nonlinear optics and its application to pulsed lasers.

Nonlinear Susceptibility

Definition of Nonlinear Susceptibility and Symmetry Properties

Nonlinear optical phenomena originate from nonlinearity of materials. Linear and nonlinear polarization induced in the material is expressed as follows