Abstract

Sound transfer from the human ear to the brain is based on three quite different neural coding principles, by which the continuous temporal auditory source signal is sent as binary code in excellent quality via 30,000 nerve fibers per ear. Cochlear implants are well-accepted neural prostheses for people with sensory hearing loss, but currently the devices are inspired only by the tonotopic principle. According to this principle, every sound frequency is mapped to a specific place along the cochlea. By electrical stimulation, the frequency content of the acoustic signal is distributed via a few contacts of the prosthesis to the corresponding places and generates spikes there. In contrast to the natural situation, the artificially evoked information content in the auditory nerve is quite poor, especially because the richness of the temporal fine structure of the neural pattern is replaced by a firing pattern that is strongly synchronized with an artificial cycle duration. Improvement in hearing performance is expected from involving more of the ingenious strategies developed during evolution.

1 The Hair Cell Transforms Mechanical into Neural Signals

The exceptional performance and the extremely high sensitivity of the auditory system are excellent examples of evolution. The system developed together with the lateral line organ of fishes, a sensory organ that consists of a canal running along both sides of the body, communicating via sensory pores through scales to the exterior. By analyzing the vibrations of the surrounding water as spatial and temporal functions, the lateral line system helps the fish to avoid collisions, to orient itself in relation to water currents, and to locate prey. In this way it is a sense of touch over distance. Among amphibians such as frogs, lateral line organs and their neural connections disappear during the metamorphosis of tadpoles because as adults they no longer need to feed under water. The higher land-inhabiting vertebrates (reptiles, birds, and mammals) no longer possess lateral line organs. However, their deeply situated labyrinthine sense organs use the same principle of detecting information from fluid motion via hair cells (Fig. 12.1). Cell membrane voltage fluctuations of a single hair cell are able to follow, in synchrony, high audible input frequencies of 20 kHz in man, and considerably higher frequencies in bats, whales, or dolphins.

Fig. 12.1. Scheme of a typical inner hair cell (IHC). The main task of hair cells in sensory systems is to detect forces which deflect their hairs (stereocilia). The apical part of the cell including the stereocilia enters the endolymphatic fluid, which is characterized by its high potassium concentration [K+]. The transmembrane voltage of −40 mV for IHC and −70 mV for outer hair cells (OHC) is caused by the K+ concentration gradient between cell body and cortilymph. Current influx that changes the receptor potential occurs mainly through the ion channels of the stereocilia: stereociliary displacement to the lateral side of the cochlea causes an increase in ion channel open probability and hence depolarization of the receptor potential, whereas stereociliary displacement to the medial side results in hyperpolarization. Small variations in the receptor potential (about 0.1 mV) cause a release of neurotransmitter which may cause spiking in the most sensitive fibers of the auditory nerve [1].

Detailed temporal information resulting from the mechanical input signal is recorded by the hair cell as intracellular potential fluctuations. But most of the temporal fine structure would be lost if a single neural connection, as schematically shown in Fig. 12.1, had to handle signal transfer to the brain, because neural signaling operates more slowly. This problem is solved by a change from serial to parallel coding: a single human inner hair cell has on average eight synaptic release zones that distribute the information to eight spiking nerve fibers. This method is called the volley principle (Fig. 12.2).

Fig. 12.2. The volley principle. A periodic acoustic stimulus will cause firing patterns in different axons innervating one inner hair cell, e.g., as marked in this regular example with (a)–(e). The combined signal contains all the minima of sound even when no single fiber is able to fire with the high frequency of the source signal. After [2].
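The regular example in the volley figure can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions that are not in the original text: the fibers fire in a strict rotation (fiber k on every fifth cycle), the stimulus frequency of 2,000 Hz is an arbitrary choice, and the function names are hypothetical.

```python
def volley_spike_times(stimulus_hz, n_fibers, n_cycles):
    """Return one list of spike times (in seconds) per fiber.

    Illustrative assumption: fiber k fires only on cycles
    k, k + n_fibers, k + 2 * n_fibers, ... so that each fiber
    fires at stimulus_hz / n_fibers.
    """
    period = 1.0 / stimulus_hz
    return [
        [cycle * period for cycle in range(k, n_cycles, n_fibers)]
        for k in range(n_fibers)
    ]


def combined_volley(fibers):
    """Merge the spike trains of all fibers into one sorted train."""
    return sorted(t for fiber in fibers for t in fiber)


# Five fibers, labeled (a)-(e) in the figure, share a 2,000 Hz stimulus.
fibers = volley_spike_times(stimulus_hz=2000.0, n_fibers=5, n_cycles=20)
merged = combined_volley(fibers)
```

Each simulated fiber fires on only every fifth cycle, yet the merged train marks every cycle of the stimulus. Real fibers fire stochastically rather than in rotation, so this shows the principle only in its idealized form.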

In the following, we will introduce the three differing neural auditory coding principles used by nature to handle the wide range of 120 dB for audible signal amplitudes with hair cells that have much smaller operating ranges. With cochlear implants, many deaf people obtain auditory perception by electrical stimulation of the auditory nerve. A disadvantage in hearing quality with the currently available devices is, however, that their signal processing strategies mimic only one of the three natural principles.
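To make the size of this range concrete, the decibel value can be converted into an amplitude ratio. The short sketch below uses the standard amplitude convention of 20·log10(ratio); the function names are hypothetical.

```python
import math


def db_to_amplitude_ratio(level_db):
    """Convert a level difference in dB to a ratio of signal amplitudes."""
    return 10.0 ** (level_db / 20.0)


def amplitude_ratio_to_db(ratio):
    """Convert a ratio of signal amplitudes to a level difference in dB."""
    return 20.0 * math.log10(ratio)


audible_range_ratio = db_to_amplitude_ratio(120.0)
```

With this convention, 120 dB corresponds to an amplitude ratio of one million, that is, six orders of magnitude.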

2 The Human Ear

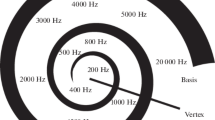

In the healthy ear, the acoustic signal, which is physically a vibration of air pressure, causes an analogous movement of the hairs of the hair cells because the motion is mechanically transferred into the liquid environment (Fig. 12.3). The hair cells finally transform the acoustical input into neural signals. Frequency and loudness are the two essential features of the acoustical input that are transmitted via nerve fibers from the inner ear to the brain. The cochlea is sometimes called a frequency analyzer because of its mechanical tuning properties. Frequency sensitivity is thus a function of the place of stimulation, i.e., the hair cells at the beginning of the cochlea respond sensitively to high frequencies, whereas only deep tones will reach the hair cells at the apical end of the hearing organ. Increasing the sound intensity causes higher firing rates in an increasing number of auditory nerve fibers.

Fig. 12.3. (a) The main parts of the human ear. Part of a cross section marked as A–A is shown in (b). (c) and (d) are front and top view schemata of the uncoiled cochlea. The shaded area in (d) represents the basilar membrane, which is the elastic part between the upper and the lower chambers. The 35-mm long basilar membrane is stiffer toward the basal end but broadens from 0.1 to 0.5 mm toward the apical end, so that its resonance properties change continuously. When a tone starts with a compression, the stapes will press the oval window membrane inward (broken lines in (c)). As a consequence, part of the perilymphatic fluid will escape through the helicotrema, which connects the scala vestibuli and the scala tympani. The higher pressure in the scala vestibuli also bends the basilar membrane downward. The broad widening (long broken line in (c)) sharpens with time when sound is presented periodically at constant frequency. (b) If the pressure in the scala vestibuli is higher than that in the scala tympani, the organ of Corti and other parts of the scala media move down due to the compliance of the basilar membrane. The resistance of Reissner’s membrane is very low and can be neglected. The movement of the basilar membrane is registered by the organ of Corti, which transforms the mechanical movement of the basilar membrane into neural signals with the help of the inner hair cells. Several synapses are situated at the base of an inner hair cell. Each of these synapses is connected with an axon of the auditory nerve. The axons of the inner hair cells send their information to the brain (afferent neurons). Three rows of outer hair cells receive their information mostly from the brain (efferent neurons).
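The mapping of frequency to place described above is often approximated in the literature by Greenwood's frequency-position function. That function is not part of this chapter; the sketch below uses the commonly quoted human parameters (165.4, 2.1, and 0.88) as an assumption, together with the 35 mm basilar membrane length from the caption. The function names are hypothetical.

```python
import math

COCHLEA_LENGTH_MM = 35.0  # basilar membrane length given in the caption


def greenwood_frequency_hz(distance_from_apex_mm):
    """Characteristic frequency at a place on the basilar membrane.

    Assumption: Greenwood's human parameters, with the place expressed
    as a fraction x of the total length measured from the apex.
    """
    x = distance_from_apex_mm / COCHLEA_LENGTH_MM
    return 165.4 * (10.0 ** (2.1 * x) - 0.88)


def place_from_base_mm(frequency_hz):
    """Inverse map: distance from the basal end for a given frequency."""
    x = math.log10(frequency_hz / 165.4 + 0.88) / 2.1
    return COCHLEA_LENGTH_MM * (1.0 - x)
```

In this approximation the basal end responds to frequencies of about 20 kHz and the apical end to about 20 Hz, in line with the statement that high frequencies are analyzed at the beginning of the cochlea and deep tones at its apical end.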

3 Place Theory Versus Temporal Theory

There is an old controversy whether the inner ear uses the place coding principle or the temporal structure of the spiking pattern to transmit the information of an acoustic signal. The “place theory” was introduced by Helmholtz. He already assumed that (1) the basilar membrane consists of segments with varying stiffness, (2) frequency selectivity is based on the resonance of a corresponding part, and (3) each part is connected with a nerve fiber [3]. The most extreme form of the “temporal theory” was put forward by Rutherford, who speculated that each hair cell in the cochlea responds to every tone entering the inner ear [4].

A hundred years later, both theories were applied independently as the basis for two essentially different signal processing strategies in cochlear implants, and every success concerning acoustic perception seemed to underline the validity of just one of the two theories. Early implants transmitted the electrical stimulus as a continuous voltage, analogous to an amplitude-compressed acoustical input, to a single active electrode placed, e.g., in the scala tympani close to the fibers of the auditory nerve [5]. As vowel discrimination is possible with such a single-channel method, this was a proof against a pure place theory. On the other hand, all modern cochlear implants distribute the signals based only on the frequency distribution in the acoustic source signal according to the tonotopic organization of the cochlea, that is, according to the place principle. Neglecting the temporal fine structure of the input is one reason for deficient speech understanding with cochlear implants, especially in noisy environments. In a computer simulation study of inner ear mechanics, we demonstrated that the sharp frequency location needs time to develop [6], and it may therefore be more important for music than for speech perception.

We should learn from hearing physiology that the brain uses several strategies in parallel to obtain the relevant information from the auditory input signal. One method which is not much considered is the ability to suppress neural signals to avoid “memory overflows” but without losing important inputs and to handle a mixture of neural spiking activity with and without information.

An interesting example is the visual system. When the lighting is gradually reduced, the visual receptor cell input changes from cones to rods, which demands quite different signal management, but usually we are not aware that our visual information changes from colorful to black and white. Another important method is the handling of noise to detect weak signals, known as stochastic resonance [7]. Stochastic resonance is essentially a statistical phenomenon resulting from an effect of noise on information transfer and processing that is observed in both man-made and naturally occurring nonlinear systems [8].

4 Noise-Enhanced Auditory Information

At the threshold of hearing, the auditory system is able to detect vibrations of the basilar membrane with maximum amplitudes smaller than 10⁻¹⁰ m. Stochastic stereociliary movement caused by Brownian motion is, at 3.5 nm [9], about 50 times stronger than the deterministic influence of the acoustic threshold signal. The Brownian motion is partly responsible for a phenomenon called “spontaneous activity”: without any acoustic stimulus, firing rates of up to 160 spikes/s have been measured in auditory nerve fibers. The spontaneous firing rate of a nerve fiber is strongly related to its sensitivity: the group of fibers without spontaneous activity is not stimulated by low-level signals at all [10]. However, fibers with high spontaneous activity transport the information of very weak stimuli by evolving regularities in their interspike times (time intervals between spikes). Nearly two-thirds of all auditory nerve fibers have high spontaneous activity with more than 40 spikes/s in silence [11] (Fig. 12.4). Therefore, in a healthy human ear, about 20,000 fibers are expected to react in this way to low-level signals. However, due to the tonotopic principle, weak sinusoidal signals will cause only some hundreds of fibers to respond to the input signal, and the number of neurons answering to an even weaker acoustic stimulus will decrease further. Our research group has simulated both the stereociliary movement as a reaction to the Brownian motion of the inner ear fluids [12] and the IHC voltage changes caused by stereociliary motion [1]. It was shown that the contribution of Brownian motion can be approximated by Gaussian noise, low-pass filtered mainly by the influences of the resistance and the capacitance of the hair cell membrane.

Fig. 12.4. Distribution of spontaneous firing rates in the auditory nerve of chinchillas. Three groups of fibers can be detected: fibers with (1) no or low spontaneous rate (19% of the fibers have less than 5 spikes/s), (2) medium, and (3) high (>40 spikes/s) spontaneous rate. The fibers of group 3 are able to detect low-level acoustic signals [11].

In contrast to the hypothesis that noise limits perception [13], it has been shown that for a signal which is too weak to reach threshold level, neural signaling is possible when noise is added. Such noisy signals result from thermal Brownian motion as well as from active forces of outer hair cells (Fig. 12.5). However, it is an additional task for the neural system to check whether an external signal is contained in the noisy neural input and, in positive cases, to become aware of the signal features. The signal extraction method is based on the higher spiking probability during those periods in which the positive part of the tone enlarges the noise + tone signal.

Fig. 12.5. Simulated voltage changes in an inner hair cell caused by a 500-Hz sinusoidal tone and by the additional influence of noise (the zigzag curve corresponds to the sinusoid + noise signal). An exponentially shaped “threshold curve” is shown which is used for calculating the firing times in a specific auditory nerve fiber: the threshold curve goes down to a minimum threshold value of about 0.1 mV. If the IHC voltage change crosses this value, neurotransmitter release can occur, but afterward, for a certain time (recovery period), spiking is hindered in the way described by the threshold curve. Note that the sinusoidal signal is too small to reach the threshold curve. Only with the help of noise can it be detected in the neural pattern. Amplitude of the sinusoidal signal: 0.02 mV; rms (root mean square) amplitude of noise: 0.2 mV, resulting in a signal-to-noise ratio of 0.1.
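The threshold mechanism of the caption can be illustrated with a small Python sketch. The tone frequency, tone amplitude, noise rms value, and minimum threshold are taken from the caption; everything else is an assumption made only for this illustration: the size and time constant of the threshold jump after a spike, the time step, and the use of white rather than low-pass filtered Gaussian noise. It is not the simulation used for the figure, and all names are hypothetical.

```python
import math
import random

F_TONE = 500.0     # Hz, from the caption
A_TONE = 0.02      # mV, tone amplitude from the caption
NOISE_RMS = 0.2    # mV, from the caption
THETA_MIN = 0.1    # mV, minimum threshold from the caption
THETA_JUMP = 2.0   # mV, assumed threshold elevation right after a spike
TAU = 1.0e-3       # s, assumed recovery time constant
DT = 5.0e-5        # s, assumed simulation time step


def crossing_probability(signal_mv, threshold_mv=THETA_MIN, noise_rms=NOISE_RMS):
    """P(signal + Gaussian noise > threshold) for one independent sample."""
    z = (threshold_mv - signal_mv) / noise_rms
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def simulate_spikes(duration_s, noise_rms, seed=0):
    """Spike times for tone + white noise against a recovering threshold."""
    rng = random.Random(seed)
    spikes = []
    last_spike = -1.0  # far in the past, so the threshold starts at its minimum
    for i in range(int(round(duration_s / DT))):
        t = i * DT
        threshold = THETA_MIN + THETA_JUMP * math.exp(-(t - last_spike) / TAU)
        v = A_TONE * math.sin(2.0 * math.pi * F_TONE * t)
        if noise_rms > 0.0:
            v += rng.gauss(0.0, noise_rms)
        if v > threshold:
            spikes.append(t)
            last_spike = t
    return spikes


def positive_half_cycle_fraction(spikes):
    """Fraction of spikes that fall into the positive half of the tone cycle."""
    if not spikes:
        return float("nan")
    in_positive = sum(1 for t in spikes if (t * F_TONE) % 1.0 < 0.5)
    return in_positive / len(spikes)
```

Without noise, `simulate_spikes(0.5, 0.0)` returns no spikes, because the 0.02 mV tone never reaches the 0.1 mV threshold. With noise, spikes occur, and `crossing_probability` shows that a crossing is more likely at the tone maximum than at the tone minimum. `positive_half_cycle_fraction` can be used to look for this bias in simulated spike trains; how clearly it appears depends on the assumed recovery parameters and on the length of the data set.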

The next examples show simplified computer simulations of the first 5 ms of basilar membrane motion when a 1,000 Hz tone is applied. Based on finite element simulations of inner ear forces, we assume that the tips of the longest inner hair cell stereocilia vibrate with an amplitude similar in size to that of the basilar membrane at the same position [14]. According to Fig. 12.6, spiking is therefore expected at the places marked by flash arrows in a noiseless environment.

Fig. 12.6. Basilar membrane motion as a function of time (0–5 ms) and space (0–35 mm) from a simple mechanical cochlear model. A weak pure 1,000 Hz tone causes vibrations along the 35-mm long centerline of the uncoiled basilar membrane, which finally has a region that periodically reaches a threshold limit of 2 nm. According to the tonotopic principle, the strongest vibration is at a specific position marked by an arrow on the left side. As indicated by the curves close to this arrow, amplitudes rise and fall exponentially as functions of the basilar membrane’s length coordinate. The decrease is quicker than the increase, and this is the reason why low frequencies cannot be detected (quick signal loss) at positions a few millimeters after the “point of resonance” (right side upward from the arrow = apical direction).

By adding noise as done in Fig. 12.5, the signal seems to disappear (Fig. 12.7). The situation becomes clearer as soon as more sensor elements are involved (Fig. 12.8). The source signal is now easy to recognize from both the tonotopic and the temporal pattern: the frequency is recognized from the position with maximum regularity (place theory) as well as from the periodicity in the spiking pattern (temporal theory), seen in Fig. 12.8 as dark stripes that recur with the 1 ms period of the tone. Under the no-noise condition, a basilar membrane region with about 120 IHCs reaches the 2 nm threshold. This region is marked by two horizontal lines in Fig. 12.8. Remarkably, the neural pattern also becomes organized outside of this supra-threshold band. In the next task, signal intensity is reduced by a factor of 5, leading to a signal-to-noise ratio of 0.33 (Fig. 12.9). The 5 ms window again contains too few data for a definite decision. However, as most acoustical signals have a longer duration, we now use the next ten periods of 5 ms, and in the superposition of these 50 ms of data (Fig. 12.9, right) we find regularities even when covering about the upper half of the picture with a piece of paper. Shifting the paper in the vertical direction gives an estimate of the detectable intensity corresponding to the threshold of hearing for a 50 ms, 1,000 Hz tone.

Fig. 12.7. Simulated neurotransmitter release from 70 inner hair cells that are equally distributed along the 35 mm length of the basilar membrane. Gray levels indicate the basilar membrane maximum amplitudes for the 70 lines as functions of time; darkness corresponds to amplitude size, and white means that 2 nm are not reached. Left: signal without noise, center: noise without signal, right: signal + noise. All 70 hair cells cause spikes in the auditory nerve as marked by the gray rectangles, but the common input signal is lost in noise.

Fig. 12.8. Neurograms of the auditory nerve (spiking pattern) as pictures of neurotransmitter release from all the 350 inner hair cells that are within an “octave region” containing maximum vibration. Same method as in Fig. 12.7. Left: signal without noise, center: noise without signal, right: signal + noise.

Fig. 12.9. Same situation as in Fig. 12.8 but for a five times smaller acoustical input signal. Left: the signal without noise gives an empty picture; the space is used instead for a line showing the basilar membrane maximum vibration amplitude for the 350 positions of IHCs, with a highest value of 0.7 nm. Center: signal + noise; an order in the pattern is difficult to recognize. Right: superposition of ten 5 ms windows makes the order easy to see.
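The superposition of successive 5 ms windows can be sketched as a folding of spike times into one window. The sketch assumes integer spike times in microseconds to avoid rounding problems, and a window length that is a whole multiple of the tone period (here 5 ms for a 1 ms period), so that tone-locked spikes from every window fall into the same bins. The function name and the bin width are hypothetical choices.

```python
def fold_spikes(spike_times_us, window_us, n_bins):
    """Superimpose successive windows and count the spikes per time bin.

    spike_times_us: integer spike times in microseconds.
    window_us: window length in microseconds, a multiple of n_bins.
    """
    if window_us % n_bins != 0:
        raise ValueError("window_us must be a multiple of n_bins")
    bin_us = window_us // n_bins
    counts = [0] * n_bins
    for t in spike_times_us:
        counts[(t % window_us) // bin_us] += 1
    return counts


# Idealized example: one spike per period of a 1,000 Hz tone for 50 ms.
tone_locked = list(range(0, 50_000, 1_000))
folded = fold_spikes(tone_locked, window_us=5_000, n_bins=10)
```

In this idealized case the ten windows add up in every second bin and leave the bins in between empty. Spikes caused by noise alone would spread over all bins, which is why the periodic structure stands out more clearly in the superposition than in a single window.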

5 Auditory Neural Network Sensitivity Can Be Tested with Artificial Neural Networks

In a rather silent environment, we receive a lot of spontaneous activity, and the auditory part of the brain permanently has to find out whether the auditory nerve input delivers just noise or noise with signal. Note that the signal can be a pure tone, a combination of tones, speech, or even noise. So far, we have treated this task visually, that is, by solving a related image recognition problem. We know that the visual system is rather good at such a job. Interestingly, artificial neural network techniques are also rather successful in image recognition tasks, and therefore we tested this tool to see what our brain can do in a similar situation using the biological neural network [15]. We found, e.g., that sinusoidal stimuli with a signal-to-noise ratio as low as 1/10 can be recognized from the simulated firing pattern of a single auditory nerve fiber. This seems to be a rather theoretical result, as a 20 s data set is needed, and we cannot assume that single-fiber data are stored biologically for such a long duration. However, the same amount of data is collected by 100 fibers with high spontaneous activity from the same region in 200 ms, which is more realistic. One should be aware of a quadratic relation for the sensitivity when all data are processed. This means that we need to analyze a 200-ms interval from 400 fibers to reduce the threshold of hearing by a factor of 2, resulting in a signal-to-noise ratio of 1/20. In developing this technique, nature had to find a balance between the number of fibers, their post-processing units, and the sensitivity of hearing. It seems to be a good choice that every inner hair cell has connections to several auditory nerve fibers, and that most of them have spontaneous activity to support the “stochastic resonance phenomenon.”
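The arithmetic behind these numbers can be written out as a short sketch. It assumes, as stated in the text, that 100 fibers observed for 200 ms supply the same amount of data as one fiber observed for 20 s, and that the detectable signal-to-noise ratio improves with the square root of the amount of data (the quadratic relation). The function names are hypothetical.

```python
import math


def pooled_observation_time_s(n_fibers, window_s):
    """Total single-fiber observation time supplied by a fiber population."""
    return n_fibers * window_s


def fibers_needed(base_fibers, sensitivity_gain):
    """Quadratic relation: a gain of k in sensitivity needs k**2 times the data."""
    return base_fibers * sensitivity_gain ** 2


def detectable_snr(base_snr, base_fibers, n_fibers):
    """Detectable signal-to-noise ratio when the fiber count is changed."""
    return base_snr * math.sqrt(base_fibers / n_fibers)


pooled = pooled_observation_time_s(100, 0.2)   # 100 fibers for 200 ms
needed = fibers_needed(100, 2)                 # halve the threshold
snr_400 = detectable_snr(0.1, 100, 400)        # start from SNR 1/10
```

With these assumptions, 100 fibers over 200 ms correspond to 20 s of single-fiber data, 400 fibers are needed to halve the threshold, and the detectable signal-to-noise ratio then drops from 1/10 to 1/20.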

6 Cochlear Implants Versus Natural Hearing

After the pacemaker for the heart, cochlear implants are the most successful devices for electrical nerve or muscle stimulation. It is surprising that auditory perception with these devices is rather acceptable in spite of the fact that they produce a quite unnatural spiking pattern in the auditory nerve fibers. The main difference from the natural pattern is a strong synchronization of spiking times in large populations of fibers. With electrical stimulation, it is possible to obtain somewhat higher spiking activity in single nerve fibers, but the advantage of the volley principle (Fig. 12.2) is lost. The individuality of signaling using nearly 30,000 data lines in parallel is missing, and this is a pity, as the richness of the neural auditory pattern is needed for high-fidelity acoustic perception. Excellent progress has been made in miniaturization to obtain small devices which can be hidden behind or even in the ear. Nevertheless, many cochlear implant users have poor speech understanding even under the best acoustical conditions.

An essential restriction in obtaining more natural firing patterns results from the stimulation strategies generally used in human cochlear implants: all modern implants are purely based on the place principle. A common method is to activate, in a cyclic manner, up to 22 stimulating electrodes which are distributed along the cochlea: their individual stimulus intensities locally activate the cochlear nerve according to the spectral characteristics of the auditory signal. But instead of adding support with temporal auditory information, the cycle period generates a constant, unnatural temporal rhythm which is conducted by all stimulated fibers.
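The origin of this fixed rhythm can be seen in a minimal sketch of a cyclic stimulation schedule. It is not the algorithm of any particular device: the time slot per electrode (50 µs) and the function name are assumptions chosen only for illustration, and stimulus intensities are left out because only the timing matters here.

```python
def cyclic_pulse_schedule(n_electrodes, slot_s, n_cycles):
    """Pulse onset times per electrode for a strict round-robin cycle.

    Each electrode gets one time slot per cycle, so the cycle period is
    n_electrodes * slot_s regardless of the acoustic input.
    """
    cycle_s = n_electrodes * slot_s
    return {
        electrode: [cycle * cycle_s + electrode * slot_s for cycle in range(n_cycles)]
        for electrode in range(n_electrodes)
    }


# 22 electrodes with an assumed slot of 50 microseconds per electrode.
schedule = cyclic_pulse_schedule(n_electrodes=22, slot_s=50e-6, n_cycles=5)
cycle_period_s = 22 * 50e-6
```

In this sketch every electrode is pulsed at the same constant interval of 1.1 ms, whatever the sound is. Fibers close to an electrode can therefore only follow this device-defined rhythm, not the temporal fine structure of the acoustic signal.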

In relation to the third biological coding principle, several authors have investigated adding noisy signals to the stimulus [16, 17]. A shortcoming of this approach is, however, that the added noise favors phase locking to the stronger signal parts of the noise and artificially sustains noise-related perceptions rather than really supporting the volley principle.

In general, electrical stimulation with cochlear implants obeys the “all or nothing” law: either a spike is generated in an auditory nerve fiber or not. This principle causes phase locking between stimulus and response, and therefore much sharper poststimulus histograms for electrical than for acoustical stimulation. The addition of noise is of some help but cannot achieve the natural distribution of fiber activation. However, a high-frequency background signal disturbs the synchronized firing within populations of neighboring fibers.

Therefore, a better method to enhance the temporal fine structure according to the volley principle seems to be constant stimulation with a high-frequency signal whose intensity is close to the threshold of the fibers in the vicinity of the electrode. This signal alone will generate some stochastic individual firing that interrupts common phase-locked response rhythms as a consequence of the refractory properties of the fibers that are already spiking pseudo-spontaneously. The phenomenon was discovered and analyzed to some extent by Rattay [18, 19, 20] and others [21, 22, 23].

7 Discussion

Our knowledge about the neural coding principles in mammalian auditory nerve fibers is primarily based on animal experiments. Single cell recordings have advanced our understanding of how an acoustical signal is represented in the spiking pattern of the auditory nerve [6, 10, 24, 25, 26, 27]. As the main elements of mammalian cochleae are quite similar, and because of the restrictions on gathering human data, it is generally assumed that the same firing behavior can be expected in man. However, in the somatic region, afferent human cochlear neurons are quite unique [28]. First, most neurons are not shielded by myelin in this region, and second, many of them are gathered into clusters of two to four [29] neurons with a common insulation by myelin.

This morphological difference is of major relevance for the propagation of an action potential (AP) in the healthy ear and is also responsible for quite different excitation patterns in the case of cochlear implants. Both human particularities are expected to affect the neural pattern essentially, resulting in a specifically human physiologic hearing performance. A first analysis of the electrical features of a non- or poorly myelinated somatic region demonstrated that the human afferent cochlear neuron is considerably less robust in spike conduction than that of cat and guinea pig, the preferred experimental animals [30]. Is the loss of myelin in the somatic region a human imperfection, caused perhaps by a genetic defect? A larger delay and a reduction in sensory information by loss of spikes seem to result in disadvantages only. Tylstedt and Rask-Andersen [31] speculate whether unique formations between human spiral ganglion cells may constitute interactive transmission pathways. These may be in the low-frequency region and may increase plasticity and signal acuity related to the coding of speech.

8 Conclusion

It is surprising that a combination of inner ear mechanics and a sensor cell type with a rather small operating range allows the high-quality detection of acoustic signals within a range of more than six orders of magnitude. It is furthermore impressive how those noisy elements which cannot be eliminated, like the Brownian motion of hair cell stereocilia, are used by nature for signal amplification in one of three quite different signaling strategies. When we recognize anatomical curiosities, e.g., the clustering of cell bodies in the auditory nerve, which is unique in man, we should try to understand the neurophysiologic consequences, especially when we develop neuroprostheses that have to replace the sensory input. On the one hand, it is remarkable that artificially created neural patterns with many unnatural characteristics result in at least low-quality hearing in deaf people. On the other hand, the challenge is to find solutions that add more of the natural features to the artificially evoked patterns.

References

1. F. Rattay, I.C. Gebeshuber, A.H. Gitter, The mammalian auditory hair cell: a simple electric circuit model. J. Acoust. Soc. Am. 103(3), 1558–1565 (1998)

2. E.G. Wever, Theory of Hearing (Wiley, New York, 1949)

3. H. Helmholtz, Die Lehre von den Tonempfindungen als Physiologische Grundlage für die Theorie der Musik (Vieweg, Braunschweig, 1863) [English translation: A.J. Ellis, On the Sensations of Tones as a Physiological Basis for the Theory of Music (Longmans Green, London, 1875)]

4. W. Rutherford, A new theory of hearing. J. Anat. Physiol. 21, 166–168 (1886)

5. E.S. Hochmair, I.J. Hochmair-Desoyer, Percepts elicited by different speech-coding strategies, in Cochlear Prostheses, ed. by C.W. Parkins, S.W. Anderson. Ann. NY Acad. Sci. 405, 268–279 (1983)

6. F. Rattay, P. Lutter, Speech sound representation in the auditory nerve: computer simulation studies on inner ear mechanisms. Z. Angew. Math. Mech. 77(12), 935–943 (1997)

7. F. Moss, L.M. Ward, W.G. Sannita, Stochastic resonance and sensory information processing: a tutorial and review of application. Clin. Neurophysiol. 115, 267–281 (2004)

8. K. Wiesenfeld, F. Moss, Stochastic resonance and the benefits of noise: from ice ages to crayfish and SQUIDs. Nature 373, 33–36 (1995)

9. W. Denk, W.W. Webb, A.J. Hudspeth, Mechanical properties of sensory hair bundles are reflected in their Brownian motion measured with a laser interferometer. Proc. Natl. Acad. Sci. USA 86, 5371–5375 (1989)

10. M.B. Sachs, P.J. Abbas, Rate versus level functions of auditory nerve fibers in cats: tone burst stimuli. J. Acoust. Soc. Am. 56, 1835–1847 (1974)

11. E.M. Relkin, J.R. Doucet, Recovery from prior stimulation. I: relationship to spontaneous firing rates of primary auditory neurons. Hear. Res. 55, 215–222 (1991)

12. W.A. Svrcek-Seiler, I.C. Gebeshuber, F. Rattay, T.S. Biro, H. Markum, Micromechanical models for the Brownian motion of hair cell stereocilia. J. Theor. Biol. 193(4), 623–630 (1998)

13. W. Bialek, Quantum noise and the threshold of hearing. Phys. Rev. Lett. 54, 725–728 (1985)

14. W. Müller, Untersuchung der Nachschwingzeit in der Cochlea unter Berücksichtigung der Reissnerschen Membran (in German), Master Thesis, TU Vienna, 1996

15. F. Rattay, A. Mladenka, J. Pontes Pinto, Classifying auditory nerve patterns with neural nets: a modeling study with low level signals. Simul. Pract. Theory 6, 493–503 (1998)

16. R.P. Morse, E.F. Evans, Enhancement of vowel encoding for cochlear implants by addition of noise. Nat. Med. 2, 928–932 (1996)

17. M. Chatterjee, M.E. Robert, Noise enhances modulation sensitivity in cochlear implant listeners: stochastic resonance in a prosthetic sensory system? J. Assoc. Res. Otolaryngol. 2(2), 159–171 (2001)

18. F. Rattay, High frequency electrostimulation of excitable cells. J. Theor. Biol. 123, 45–54 (1986)

19. F. Rattay, Electrical Nerve Stimulation: Theory, Experiments and Applications (Springer Wien, New York, 1990)

20. F. Rattay, Basics of hearing theory and noise in cochlear implants. Chaos Solitons Fractals 11, 1875–1884 (2000)

21. L. Litvak, B. Delgutte, D. Eddington, Auditory nerve fiber responses to electric stimulation: modulated and unmodulated pulse trains. J. Acoust. Soc. Am. 110(1), 368–379 (2001)

22. R.S. Hong, J.T. Rubinstein, Conditioning pulse trains in cochlear implants: effects on loudness growth. Otol. Neurotol. 27(1), 50–56 (2006)

23. B.S. Wilson, R. Schatzer, E.A. Lopez-Poveda, X. Sun, D.T. Lawson, R.D. Wolford, Two new directions in speech processor design for cochlear implants. Ear Hear. 26(4), 73S–81S Suppl. S (2005)

24. N.Y.S. Kiang, Discharge Pattern of Single Fibres in the Cat’s Auditory Nerve (MIT Press, Cambridge, 1965)

25. J.F. Brugge, D.J. Anderson, J.E. Hind, J.E. Rose, Time structure of discharges in single auditory nerve fibers of the squirrel monkey in response to complex periodic sounds. J. Neurophysiol. 32(3), 386–401 (1969)

26. S.A. Shamma, Speech processing in the auditory system. I: the representation of speech sounds in the responses of the auditory nerve. J. Acoust. Soc. Am. 78, 1612–1621 (1985)

27. E. Javel, Shapes of cat auditory nerve fiber tuning curves. Hear. Res. 81(1–2), 167–188 (1994)

28. J.B. Nadol, Jr, Comparative anatomy of the cochlea and auditory nerve in mammals. Hear. Res. 34, 253–266 (1988)

29. S. Tylstedt, A. Kinnefors, H. Rask-Andersen, Neural interaction in the human spiral ganglion: a TEM study. Acta Otolaryngol. 117(4), 505–512 (1997)

30. F. Rattay, P. Lutter, H. Felix, A model of the electrically excited human cochlear neuron. I. Contribution of neural substructures to the generation and propagation of spikes. Hear. Res. 153(1–2), 43–63 (2001)

31. S. Tylstedt, H. Rask-Andersen, A 3-D model of membrane specializations between human auditory spiral ganglion cells. J. Neurocytol. 30(6), 465–473 (2001)

© 2011 Springer-Verlag Berlin Heidelberg

Cite this chapter

Rattay, F. (2011). Improving Hearing Performance Using Natural Auditory Coding Strategies. In: Gruber, P., Bruckner, D., Hellmich, C., Schmiedmayer, HB., Stachelberger, H., Gebeshuber, I. (eds) Biomimetics -- Materials, Structures and Processes. Biological and Medical Physics, Biomedical Engineering. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-11934-7_12

DOI: https://doi.org/10.1007/978-3-642-11934-7_12

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-11933-0

Online ISBN: 978-3-642-11934-7

eBook Packages: Physics and Astronomy, Physics and Astronomy (R0)