Abstract

Speech coding is the art of reducing the bit rate required to describe a speech signal. In this chapter, we discuss the attributes of speech coders as well as the underlying principles that determine their behavior and their architecture. The ubiquitous class of linear-prediction-based coders is used as an illustration. Speech is generally modeled as a sequence of stationary signal segments, each having unique statistics. Segments are encoded using a two-step procedure: (1) find a model describing the speech segment, (2) encode the segment assuming it is generated by the model. We show that the bit allocation for the model (the predictor parameters) is independent of overall rate and of perception, which is consistent with existing experimental results. The modeling of perception is an important aspect of efficient coding and we discuss how various perceptual distortion measures can be integrated into speech coders.

The Objective of Speech Coding

In modern communication systems, speech is represented by a sequence of bits. The main advantage of this binary representation is that it can be recovered exactly (without distortion) from a noisy channel (assuming proper system design), and does not suffer from decreasing quality when transmitted over many transmission legs. In contrast, analog transmission generally results in an increase of distortion with the number of legs.

An acoustic speech signal is inherently analog. Generally, the resulting analog microphone output is converted to a binary representation in a manner consistent with Shannonʼs sampling theorem. That is, the analog signal is first band-limited using an anti-aliasing filter, and then simultaneously sampled and quantized. The output of the analog-to-digital (A/D) converter is a digital speech signal that consists of a sequence of numbers of finite precision, each representing a sample of the band-limited speech signal. Common sampling rates are 8 and 16 kHz, rendering narrowband speech and wideband speech, respectively, usually with a precision of 16 bits per sample. For the 8 kHz sampling rate a logarithmic 8-bit-per-sample representation is also common.
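The bit rates implied by these formats follow from multiplying the sampling rate by the precision. The short Python sketch below works this out for the three representations mentioned above; the function name `pcm_bit_rate` is chosen here for illustration only.

```python
def pcm_bit_rate(sampling_rate_hz: int, bits_per_sample: int) -> int:
    """Bit rate (bits per second) of an uncompressed single-channel signal."""
    return sampling_rate_hz * bits_per_sample


# The three representations mentioned in the text.
formats = {
    "narrowband, 16-bit linear": (8_000, 16),
    "wideband, 16-bit linear": (16_000, 16),
    "narrowband, 8-bit logarithmic": (8_000, 8),
}

for name, (rate_hz, bits) in formats.items():
    print(f"{name}: {pcm_bit_rate(rate_hz, bits) / 1000:.0f} kb/s")
```

These uncoded rates (128, 256, and 64 kb/s) are the reference points against which the rate of a speech codec is judged.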

Particularly at the time of the introduction of the binary speech representation, the bit rate produced by the A/D converter was too high for practical applications such as cost-effective mobile communications and secure telephony. A search ensued for more-efficient digital representations. Such representations are possible since the digital speech contains irrelevancy (the signal is described with a higher precision than is needed) and redundancy (the rate can be decreased without affecting precision). The aim was to trade off computational effort at the transmitter and receiver for the bit rate required for the speech representation. Efficient representations generally involve a model and a set of model parameters, and sometimes a set of coefficients that form the input to the model. The algorithms used to reduce the required rate are called speech-coding algorithms, or speech codecs.

The performance of speech codecs can be measured by a set of properties. The fundamental codec attributes are bit rate, speech quality, quality degradation due to channel errors and packet loss, delay, and computational effort. Good performance for one of the attributes generally leads to lower performance for the others. The interplay between the attributes is governed by the fundamental laws of information theory, the properties of the speech signal, limitations in our knowledge, and limitations of the equipment used.

To design a codec, we must know the desired values for its attributes. A common approach to developing a speech codec is to constrain all attributes but one quantitatively. The design objective is then to optimize the remaining attribute (usually quality or rate) subject to these constraints. A common objective is to maximize the average quality over a given set of channel conditions, given the rate, the delay, and the computational effort.

In this chapter, we attempt to discuss speech coding at a generic level and yet provide information useful for practical coder design and analysis. Section 14.2 describes the basic attributes of a speech codec. Section 14.3 discusses the underlying principles of coding and Sect. 14.4 applies these principles to a commonly used family of linear predictive (autoregressive model-based) coders. Section 14.5 discusses distortion criteria and how they affect the architecture of codecs. Section 14.6 provides a summary of the chapter.

Speech Coder Attributes

The usefulness of a speech coder is determined by its attributes. In this section we describe the most important attributes and the context in which they are relevant in some more detail. The attributes were earlier discussed in [14.1,2].

Rate

The rate of a speech codec is generally measured as the average number of bits per second. For fixed-rate coders the bit rate is the same for each coding block, while for variable-rate coders it varies over time.

In traditional circuit-switched communication systems, a fixed rate is available for each communication direction. It is then natural to exploit this rate at all times, which has resulted in a large number of standardized fixed-rate speech codecs. In such coders each particular parameter or variable is encoded with the same number of bits for each block. This a priori knowledge of the bit allocation has a significant effect on the structure of the codec. For example, the mapping of the quantization indices to the transmitted codewords is trivial. In more-flexible circuit-switched networks (e.g., modern mobile-phone networks), codecs may have a variable number of modes, each mode having a different fixed rate [14.3,4]. Such codecs with a set of fixed coding rates should not be confused with true variable-rate coders.

In variable-rate coders, the bit allocation within a particular block for the parameters or variables depends on the signal. The bit allocation for a parameter varies with the quantization index, and the mapping from the quantization index to the transmitted codeword is performed by means of a table lookup or computation, which can be very complex. The major benefit of variable-rate coding is that it leads to higher coding efficiency than fixed-rate coding because the rate constraint is less strict.

In general, network design evolves towards the facilitation of variable-rate coders. In packet-switched communication systems, both packet rate and size can vary, which naturally leads to variable-rate codecs. While variable-rate codecs are common for audio and video signals, they are not yet commonplace for speech. The requirements of low rates and delays lead to a small packet payload for speech signals. The relatively large packet header size limits the benefits of the low rate and, consequently, the benefit of variable-rate speech coding. However, with the removal of the fixed-rate constraint, it is likely that variable-rate speech codecs will become increasingly common.

Quality

To achieve a significant rate reduction, the parameters used to represent the speech signal are generally transmitted at a reduced precision and the reconstructed speech signal is not a perfect copy of the original digital signal. It is therefore important to ensure that its quality meets a certain standard.

In speech coding, we distinguish two applications for quality measures. First, we need to evaluate the overall quality of a particular codec. Second, we need a distortion measure to decide how to encode each signal block (typically of duration 5-25 ms). The distortion measure is also used during the design of the coder (in the training of its codebooks). Naturally, these quality measures are not unrelated, but in practice their formulation has taken separate paths. Whereas overall quality can be obtained directly from scoring of speech utterances by humans, distortion measures used in coding algorithms have been defined (usually in an ad hoc manner) based on knowledge about the human auditory system.

The only true measure of the overall quality of a speech signal is its rating by humans. Standardized conversational and listening tests have been developed to obtain reliable and repeatable (at least to a certain accuracy) results. For speech coding, listening tests, where a panel of listeners evaluates performance for a given set of utterances, are most common. Commonly used standardized listening tests use either an absolute category rating, where listeners are asked to score an utterance on an absolute scale, or a degradation category rating, where listeners are asked to provide a relative score. The most common overall measure associated with the absolute category rating of speech quality is the mean opinion score (MOS) [14.5]. The MOS is the mean value of a numerical score given to an utterance by a panel of listeners, using a standardized procedure. To reduce the associated cost, subjective measures can be approximated by objective, repeatable algorithms for many practical purposes. Such measures can be helpful in the development of new speech coders. We refer to [14.6,7,8] and to Chap. 5 for more detail on the subject of overall speech quality.
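As a small illustration of how a mean opinion score is formed from panel ratings, the sketch below averages listener scores and reports a standard error. It assumes the five-point scale (1 = bad to 5 = excellent) that is common for absolute category rating; the scores and function names are hypothetical and do not come from any real test.

```python
from math import sqrt
from statistics import mean, stdev


def mean_opinion_score(scores):
    """Average of listener scores on an assumed 1-to-5 absolute category scale."""
    if not scores:
        raise ValueError("at least one score is required")
    if any(s < 1 or s > 5 for s in scores):
        raise ValueError("scores must lie between 1 and 5")
    return mean(scores)


def standard_error(scores):
    """Standard error of the mean, a rough indicator of repeatability."""
    return stdev(scores) / sqrt(len(scores))


# Hypothetical ratings of one utterance by a panel of eight listeners.
panel_scores = [4, 3, 4, 5, 3, 4, 4, 2]
print(f"MOS = {mean_opinion_score(panel_scores):.3f} "
      f"+/- {standard_error(panel_scores):.3f}")
```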

As a distortion measure for speech segments, variants of the squared-error criterion are most commonly used. The squared-error criterion facilitates fast evaluation for coding purposes. Section 14.5 discusses distortion measures in more detail and shows that adaptively weighted squared-error criteria can be used for a large range of perceptual models.

Robustness to Channel Imperfections

Early terrestrial digital communication networks were generally designed to have very low error rates, obviating the need for measures to correct errors for the transmission of speech. In contrast, bit errors and packet loss are inherent in modern communication infrastructures.

Bit errors are common in wireless networks and are generally addressed by introducing channel codes. While the integration of source and channel codes can result in higher performance, this is not commonly used because it results in reduced modularity. Separate source and channel coding is particularly advantageous when a codec is faced with different network environments; different channel codes can then be used for different network conditions.

In packet networks, the open systems interconnection (OSI) reference model [14.9] provides a separation of various communication functionalities into seven layers. A speech coder resides in the application layer, which is the seventh and highest layer. Imperfections in the transmission are removed in both the physical layer (the first layer) and the transport layer (the fourth layer). The physical layer removes soft information, which consists of a probability for the allowed symbols, and renders a sequence of bits to the higher layers. Error control normally resides in the transport layer. However, the error control of the transport layer as specified by the transmission control protocol (TCP) [14.10], and particularly the automatic repeat requests that TCP uses, is generally not appropriate for real-time communication of audiovisual data because of the delay it introduces. TCP is also rarely used for broadcast and multicast applications, so as to reduce the load on the transmitter. Instead, the user datagram protocol (UDP) [14.11] is used, which means that the coded signal is handed up to the higher network layers without error correction. It is possible that in future systems cross-layer interactions will allow the application layer to receive information about the soft information available at the physical layer.

Handing the received coded signal with its defects directly to the application layer allows the usage of both the inherent redundancy in the signal and our knowledge of the perception of distortion by the user. This leads to coding systems that exhibit a graceful degradation with increasing error rate. We refer to the chapter on voice over internet protocol (IP) for more detail on techniques that lead to robustness against bit errors and packet loss.

Delay

From coding theory [14.12], we know that optimal coding performance generally requires a delay in the transfer of the message. Long delays are impractical because they are generally associated with methods with high computational and storage requirements, and because in real-time environments (common for speech) the user does not tolerate a long delay.

Significant delay directly affects the quality of a conversation. Impairment to conversations is measurable at one-way delays as low as 100 ms [14.13], although 200 ms is often considered a useful bound.

Echo is perceivable at delays down to 20 ms [14.14]. Imperfections in the network often lead to so-called network echo. Low-delay codecs have been designed to keep the effect of such echo to a minimum, e.g., [14.15]. However, echo cancelation has become commonplace in communication networks. Moreover, packet networks have an inherent delay that requires echo cancelation even for low-delay speech codecs. Thus, for most applications codecs can be designed without consideration of echo.

In certain applications the user may hear both an acoustic signal and a signal transmitted by a network. Examples are flight control rooms and wireless systems for hearing-impaired persons. In this class of applications, coding delays of less than 10 ms are needed to attain an acceptable overall delay.

Computational and Memory Requirements

Economic cost is generally a function of the computational and memory requirements of the coding system. A common measure of computational complexity used in applications is the number of instructions required on a particular silicon device. This is often translated into the number of channels that can be implemented on a single device.

A complicating factor is that speech codecs are commonly implemented on fixed-point signal processing devices. Implementation on a fixed-point device generally takes significant development effort beyond that of the development of the floating-point algorithm.

It is well known that vector quantization facilitates an optimal rate versus quality trade-off. Basic vector quantization techniques require very high computational effort and the introduction of vector quantization in speech coding resulted in promising but impractical codecs [14.16]. Accordingly, significant effort was spent to develop vector quantization structures that facilitate low computational complexity [14.17,18,19]. The continuous improvement in vector quantization methods and an improved understanding of the advantages of vector quantization over scalar quantization [14.20,21] have meant that the computational effort of speech codecs has not changed significantly over the past two decades, despite significant improvement in codec performance. More effective usage of scalar quantization and the development of effective lattice vector quantization techniques make it unlikely that the computational complexity of speech codecs will increase significantly in the future.

A Universal Coder for Speech

In this section, we consider the encoding of a speech signal from a fundamental viewpoint. In information-theoretic terminology, speech is our source signal. We start with a discussion of the direct encoding of speech segments, without imposing any structure on the coder. This discussion is not meant to lead directly to a practical coding method (the computational effort would not be reasonable), but to provide an insight into the structure of existing coders. We then show how a signal model can be introduced. The signal model facilitates coding at a reasonable computational cost and the resulting coding paradigm is used by most speech codecs.

Speech Segment as Random Vector

Speech coders generally operate on a sequence of consecutive signal segments, which we refer to as blocks (also commonly known as frames). Blocks generally, but not always, consist of a fixed number of samples. In the present description of a basic coding system, we divide the speech signal into consecutive blocks of equal length and denote the block length in samples by $k$. We neglect dependencies across block boundaries, which is not always justified in a practical implementation, but simplifies the discussion; it is generally straightforward to correct this omission on implementation. We assume that the blocks can be described by $k$-dimensional random vectors $X^k$ with a probability density function $p_{X^k}(x^k)$ for any $x^k \in \mathbb{R}^k$, the $k$-dimensional Euclidean space (following convention, we denote random variables by capital letters and realizations by lower case letters).

For the first part of our discussion (Sect. 14.3.2), it is sufficient to assume the existence of the probability density function. It is natural, however, to consider some structure of the probability density $p_{X^k}(\cdot)$ based on the properties of speech. We commonly describe speech in terms of a particular set of sounds (a distinct set of phones). A speech vector then corresponds to one sound from a countable set of speech sounds. We impose the notion that speech consists of a set of sounds on our probabilistic speech description. We can think of each sound as having a particular probability density. A particular speech vector then has one of a set of possible probability densities. Each member probability density of the set has an a priori probability, denoted as $p_I(i)$, where $i$ indexes the set. The prior probability $p_I(i)$ is the probability that a random vector $X^k$ is drawn from the particular member probability density $i$. The overall probability density function of the random speech vector, $p_{X^k}(\cdot)$, is then a mixture of probability density functions

$$p_{X^k}(x^k) = \sum_{i \in \mathcal{A}} p_I(i)\, p_{X^k|I}(x^k|i), \tag{14.1}$$

where $\mathcal{A}$ is the set of indexes for component densities and $p_{X^k|I}(\cdot|i)$ is the density of component $i$. These densities are commonly referred to as mixture components. If the set of mixture components is characterized by continuous parameters, then the summation must be replaced by an integral.

A common motivation for the mixture formulation of (14.1) is that a good approximation to the true probability density function can be achieved with a mixture of a finite set of probability densities from a particular family. This eliminates the need for the physical motivation. The family is usually derived from a single kernel function, such as a Gaussian. The kernel is selected for mathematical tractability.
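To make the mixture formulation concrete, the following Python sketch evaluates a mixture density built from Gaussian kernels. It is an illustration under stated assumptions rather than a model of real speech: the diagonal covariance structure, the two components, and all numerical values are hypothetical, and the function names are chosen here for readability.

```python
import math


def gaussian_density(x, mean, var):
    """Density of a k-dimensional Gaussian with diagonal covariance (assumed)."""
    log_p = 0.0
    for xj, mj, vj in zip(x, mean, var):
        log_p += -0.5 * math.log(2.0 * math.pi * vj) - 0.5 * (xj - mj) ** 2 / vj
    return math.exp(log_p)


def mixture_density(x, priors, means, variances):
    """Prior-weighted sum of component densities, i.e. a finite mixture."""
    return sum(
        p * gaussian_density(x, m, v)
        for p, m, v in zip(priors, means, variances)
    )


# Hypothetical two-component mixture for blocks of length k = 2.
priors = [0.7, 0.3]                    # prior probabilities, sum to one
means = [[0.0, 0.0], [2.0, -1.0]]      # one mean vector per component
variances = [[1.0, 1.0], [0.5, 2.0]]   # per-dimension variances

print(mixture_density([0.5, 0.0], priors, means, variances))
```

With a continuous parameterization of the components, the sum in `mixture_density` would become an integral over the parameters.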

If a mixture component does correspond to a physically reasonable speech sound, then it can be considered a statistical model of the signal. As described in Sect. 14.3.4, it is possible to interpret existing speech coding paradigms from this viewpoint. For example, linear prediction identifies a particular autoregressive model appropriate for a block. Each of the autoregressive models of speech has a certain prior probability and this in turn leads to an overall probability for the speech vector. According to this interpretation, mixture models have long been standard tools in speech coding, even if this was not explicitly stated.

The present formalism does not impose stationarity conditions on the signal within the block. In the mixture density, it is reasonable to include densities that correspond to signal transitions. In practice, this is not common, and the probability density functions are usually defined based on the assumption that the signal is stationary within a block. On the other hand, the assumption that all speech blocks are drawn from the same distribution is implicit in the commonly used coding methods. It is consistent with our neglect of interblock dependencies. Thus, if we consider the speech signal to be a vector signal, then we assume stationarity for this vector signal (which is a rather inaccurate approximation). Strictly speaking, we do not assume ergodicity, as averaging over a database is best interpreted as averaging over an ensemble of signals, rather than time averaging over a single signal.

Encoding Random Speech Vectors

To encode observed speech vectors $x^k$ that form realizations of the random vector $X^k$, we use a speech codebook $\mathcal{C}_{X^k}$ that consists of a countable set of $k$-dimensional vectors (the code vectors). We can write $\mathcal{C}_{X^k} = \{c_q^k\}_{q \in \mathcal{Q}}$, where $c_q^k \in \mathbb{R}^k$ and $\mathcal{Q}$ is a countable (but not necessarily finite) set of indices. A decoded vector is simply the entry of the codebook that is pointed to by a transmitted index.

The encoding with codebook vectors results in the removal of both redundancy and irrelevancy. It removes irrelevancy by introducing a reduced-precision version of the vector $x^k$, i.e., by quantizing $x^k$. The quantized vector requires fewer bits to encode than the unquantized vector. The mechanism of the redundancy removal depends on the coding method and will be discussed in Sect. 14.3.3.

We consider the speech vector, $X^k$, to have a continuous probability density function in $\mathbb{R}^k$. Thus, coding based on the finite-size speech codebook $\mathcal{C}_{X^k}$ introduces distortion. To minimize the distortion associated with the coding, the encoder selects the code vector (codebook entry) $c_q^k$ that is nearest to the observed vector $x^k$ according to a particular distortion measure $d(\cdot,\cdot)$,

$$q = \arg\min_{q' \in \mathcal{Q}} d(x^k, c_{q'}^k). \tag{14.2}$$

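Quantization is the operation of finding the nearest neighbor in the codebook. The set of speech vectors that is mapped to a particular code vector $c_q^k$ is called a quantization cell or Voronoi region. We denote the Voronoi region as $\mathcal{V}_q$,

$$\mathcal{V}_q = \{ x^k \in \mathbb{R}^k : d(x^k, c_q^k) < d(x^k, c_m^k),\ \forall m \in \mathcal{Q},\ m \neq q \}, \tag{14.3}$$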

where we have ignored that generally points exist for which the inequality is not strict. These are boundary points that can be assigned to any of the cells that share the boundary.
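The nearest-neighbor encoding and table-lookup decoding described above can be sketched in a few lines of Python. The squared-error distortion, the tiny codebook, and the function names are assumptions made for illustration; boundary points (ties) are assigned to the lowest index, which is one of the arbitrary choices the text allows.

```python
def squared_error(x, c):
    """Squared-error distortion between a speech vector and a code vector."""
    return sum((xj - cj) ** 2 for xj, cj in zip(x, c))


def encode(x, codebook):
    """Return the index of the code vector nearest to x.

    Ties are resolved in favor of the lowest index.
    """
    return min(range(len(codebook)), key=lambda q: squared_error(x, codebook[q]))


def decode(q, codebook):
    """The decoded vector is simply the codebook entry the index points to."""
    return codebook[q]


# Hypothetical codebook with four code vectors for blocks of length k = 2.
codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0], [0.0, -1.5]]

x = [0.8, 0.7]
q = encode(x, codebook)
print(q, decode(q, codebook))
```

The exhaustive search over all indices is what makes unstructured codebooks impractical for large block lengths.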

Naturally, the average distortion of the decoded speech vectors (averaged over $p_{X^k}$) differs for different codebooks. A method for designing a coder is to find the codebook, i.e., the set $\{c_q^k\}_{q \in \mathcal{Q}}$, that minimizes the average distortion over the speech probability density, given a constraint on the transmission rate. It is not known how to solve this problem in a general manner. Iterative methods (the Lloyd algorithm and its variants, e.g., [14.22,23,24]) have been developed for the case where $|\mathcal{Q}|$ (the cardinality or number of vectors in $\mathcal{C}_{X^k}$) is finite. The iterative approach is not appropriate for our present discussion for two reasons. First, we ultimately are interested in structured quantizers that allow us to approximate the optimal codebook and structure is difficult to determine from the iterative method. Second, as we will see below for the constrained-entropy case, practical codebooks do not necessarily have finite cardinality. Instead of the iterative approach, we use an approach where we make simplifying assumptions, which are asymptotically accurate for high coding rates.
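Although the iterative approach is not pursued further here, a minimal sketch may help fix ideas. The Python code below implements a basic Lloyd iteration for a fixed codebook size with the squared-error criterion: it alternates a nearest-neighbor partition of a training set with a centroid update. The synthetic Gaussian training data, the initialization from the first training vectors, and all names are assumptions for illustration, not a recommended design procedure.

```python
import random


def squared_error(x, c):
    return sum((xj - cj) ** 2 for xj, cj in zip(x, c))


def nearest(x, codebook):
    """Index of the code vector nearest to x."""
    return min(range(len(codebook)), key=lambda q: squared_error(x, codebook[q]))


def average_distortion(training, codebook):
    total = sum(squared_error(x, codebook[nearest(x, codebook)]) for x in training)
    return total / len(training)


def lloyd(training, codebook, iterations=20):
    """Alternate nearest-neighbor partitioning and centroid updates."""
    codebook = [list(c) for c in codebook]
    k = len(training[0])
    for _ in range(iterations):
        # Partition step: assign each training vector to its nearest code vector.
        cells = [[] for _ in codebook]
        for x in training:
            cells[nearest(x, codebook)].append(x)
        # Centroid step: move each code vector to the mean of its cell.
        for q, cell in enumerate(cells):
            if cell:  # an empty cell keeps its previous code vector
                codebook[q] = [sum(x[j] for x in cell) / len(cell) for j in range(k)]
    return codebook


rng = random.Random(0)  # fixed seed so the sketch is repeatable
training = [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)] for _ in range(500)]
initial = [list(x) for x in training[:4]]
trained = lloyd(training, initial)

print(average_distortion(training, initial), average_distortion(training, trained))
```

Each of the two steps cannot increase the average distortion on the training set, so the iteration converges to a local optimum, but it gives no direct insight into the structure of the resulting codebook.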

A Model of Quantization

To analyze the behavior of the speech codebook, we construct a model of the quantization (encoding-decoding) operation. (This quantization model is not to be confused with the probabilistic signal model described in the next subsection.) Thus, we make the quantization problem mathematically tractable. For simplicity, we use the squared error criterion (Sect. 14.5 shows that this criterion can be used over a wide range of coding scenarios). We also make the standard assumption that the quantization cells are convex (for any two points in a cell, all points on the line segment connecting the two points are in the cell). To construct our encoding-decoding model, we make three additional assumptions that cannot always be justified:

1. The density $p_{X^k}(x^k)$ is constant within each quantization cell. This implies that the probability that a speech vector is inside a cell with index $q$ is

   $$p_Q(q) = V_q\, p_{X^k}(x^k), \quad x^k \in \mathcal{V}_q, \tag{14.4}$$

   where $V_q$ is the volume of the $k$-dimensional cell.

2. The average distortion for speech data falling within cell $q$ is

   $$D_q = C\, V_q^{2/k}, \tag{14.5}$$

   where $C$ is a constant. The assumption made in (14.5) essentially means that the cell shape is fixed. Gersho [14.25] conjectured that this assumption is correct for optimal codebooks.

3. We assume that the countable set of code vectors $\mathcal{C}_{X^k}$ can be represented by a code-vector density, denoted as $g(x^k)$. This means that the cell volume now becomes a function of $x^k$ rather than the cell index $q$; we replace $V_q$ by $V(x^k)$. To be consistent we must equate the density with the inverse of the cell volume:

   $$g(x^k) = \frac{1}{V(x^k)}. \tag{14.6}$$

   The third assumption also implies that we can replace $D_q$ by $D(x^k)$.

The three assumptions listed above lead to solutions that can generally be shown to hold asymptotically in the limit of infinite rate. The theory has been observed to make reasonable predictions of performance for practical quantizers at rates down to two bits per dimension [14.26,27], but we do not claim accuracy here. The theory serves as a vehicle to understand quantizer behavior and not as an accurate predictor of performance.
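The second assumption, that the average distortion in a cell scales as the cell volume raised to the power $2/k$, can be checked numerically in the simplest case. The sketch below assumes a scalar quantizer ($k = 1$), a constant density within the cell (the first assumption), the squared-error criterion, and a reconstruction point at the cell center; under these assumptions the ratio of distortion to squared cell width should be the same for every width, namely the well-known value $1/12$. The function name is hypothetical.

```python
def cell_distortion(width, n=10_000):
    """Mean squared error inside a scalar cell of the given width.

    Assumes a uniform density within the cell and a reconstruction point at
    the cell center; the integral is approximated with the midpoint rule.
    """
    step = width / n
    total = 0.0
    for i in range(n):
        x = -0.5 * width + (i + 0.5) * step  # offset from the cell center
        total += x * x * step
    return total / width  # normalize by the cell volume


for width in (0.1, 0.5, 2.0):
    ratio = cell_distortion(width) / width ** 2  # V^(2/k) with k = 1
    print(f"width = {width}: distortion / width^2 = {ratio:.6f}")
```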

The code-vector density $g(x^k)$ of our quantization model replaces the set of code vectors as the description of the codebook. Our objective of finding the codebook that minimizes the average distortion subject to a rate constraint has become the objective of finding the optimal density $g(x^k)$ that minimizes the distortion

$$D = \int_{\mathbb{R}^k} p_{X^k}(x^k)\, D(x^k)\, \mathrm{d}x^k = C \int_{\mathbb{R}^k} p_{X^k}(x^k)\, g(x^k)^{-2/k}\, \mathrm{d}x^k \tag{14.7}$$

subject to a rate constraint.

Armed with our quantization model, we now attempt to find the optimal density $g(x^k)$ (the optimal codebook) for encoding speech. We consider separately two commonly used constraints on the rate: a given fixed rate and a given average rate. As mentioned in Sect. 14.2.1, the former rate constraint applies to circuit-switched networks and the latter rate constraint represents situations where the rate can be varied continuously, such as, for example, in storage applications and packet networks.

We start with the fixed-rate requirement, where each codebook vector $c_q^k$ is encoded with a codeword of a fixed number of bits. This is called constrained-resolution coding. If we use a rate of $R$ bits per speech vector then we have a codebook cardinality of $N = 2^R$ and the density $g(x^k)$ must be consistent with this cardinality:

$$N = \int_{\mathbb{R}^k} g(x^k)\, \mathrm{d}x^k. \tag{14.8}$$

We have to minimize the average distortion of (14.7) subject to the constraint (14.8) (i.e., subject to given $N$). This constrained optimization problem is readily solved with the calculus of variations. The solution is

$$g(x^k) = N\, \underline{p_{X^k}^{\frac{k}{k+2}}(x^k)} = 2^R\, \underline{p_{X^k}^{\frac{k}{k+2}}(x^k)}, \tag{14.9}$$

where the underlining denotes normalization to unit integral over $\mathbb{R}^k$ and where $R$ is the bit rate per speech vector. Thus, our encoding-decoding model suggests that, for constrained-resolution coding, the density of the code vectors varies with the data density. At dimensionalities $k \gg 1$ the density of the code vectors approximates a simple scaling of the probability density of the speech vectors since $k/(k+2) \to 1$ with increasing $k$.
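As a worked illustration of the constrained-resolution result, the sketch below computes the code-vector density for a scalar source ($k = 1$), where the exponent $k/(k+2)$ equals $1/3$. A unit-variance Gaussian is assumed purely as an example density, the normalization is done by numerical integration over a truncated interval, and the function names are hypothetical.

```python
import math


def gaussian_pdf(x, sigma=1.0):
    return math.exp(-0.5 * (x / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def constrained_resolution_density(pdf, rate_bits, lo=-12.0, hi=12.0, n=24_000):
    """Code-vector density for a scalar source under a fixed-rate constraint.

    The density is 2^R times pdf(x)^(1/3), normalized to unit integral.
    The interval [lo, hi] is assumed to contain essentially all of the mass.
    """
    exponent = 1.0 / 3.0  # k / (k + 2) with k = 1
    step = (hi - lo) / n
    norm = sum(pdf(lo + (i + 0.5) * step) ** exponent for i in range(n)) * step
    num_vectors = 2.0 ** rate_bits
    return lambda x: num_vectors * pdf(x) ** exponent / norm


g = constrained_resolution_density(gaussian_pdf, rate_bits=4)
for x in (0.0, 1.0, 2.0, 3.0):
    print(f"x = {x}: data density = {gaussian_pdf(x):.4f}, code-vector density = {g(x):.4f}")
```

The printed values show that the code-vector density falls off more slowly than the data density, so in low dimensions the tails receive relatively more code vectors than a simple scaling of the data density would give them.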

In the constrained-resolution case, redundancy is removed by placing the codebook vectors such that they reflect the density of the data vectors. For example, as shown by (14.9), regions of $\mathbb{R}^k$ without data have no vectors placed in them. This means no codewords are used for regions that have no data. If we had placed codebook vectors there, these would have been redundant. Note that scalar quantization of the $k$-dimensional random vector $X^k$ would do precisely that. Similarly, regions of low data density get relatively few code vectors, reducing the number of codewords spent in such regions.

Next, we apply our quantization model to the case where the average rate is constrained. That is, the codeword length used to encode the cell indices $q$ varies. Let us denote the random index associated with the random vector $X^k$ as $Q$. The source coding theorem [14.12] tells us that the lowest possible average rate for encoding the indices with separate, uniquely decodable codewords is within one bit of the index entropy (in bits)

$$H(Q) = -\sum_{q \in \mathcal{Q}} p_Q(q) \log_2[p_Q(q)]. \tag{14.10}$$

The entropy can be interpreted as the average of a bit allocation, $-\log_2[p_Q(q)]$, for each index $q$. Neglecting the aforementioned margin of one bit, the average rate constraint is $H(Q) = R$, where $R$ is the selected rate. For this reason, this coding method is known as constrained-entropy coding. The neglect is reasonable as the difference can be made arbitrarily small by encoding sequences of indices, as in arithmetic coding [14.28], rather than single indices. We minimize the distortion of (14.7) subject to the constraint (14.10), i.e., subject to given $H(Q) = R$. Again, the constrained optimization problem is readily solved with the calculus of variations. In this case the solution is

$$g(x^k) = 2^{H(Q) - h(X^k)} = 2^{R - h(X^k)}, \tag{14.11}$$

where $h(X^k) = -\int_{\mathbb{R}^k} p_{X^k}(x^k) \log_2[p_{X^k}(x^k)]\, \mathrm{d}x^k$ is the differential entropy of $X^k$ in bits, and where $H(Q)$ is specified in bits. It is important to realize that special care must be taken if $p_{X^k}$ is singular, i.e., if the data lie on a manifold.

Equation (14.11) implies that the code vector density is uniform across ℝ^k. The number of code vectors is countably infinite despite the fact that the rate itself is finite. The codeword length −log₂[p_Q(q)] increases very slowly with decreasing probability p_Q(q) and, roughly speaking, long codewords make no contribution to the mean rate.

In the constrained-entropy case, redundancy is removed through the lossless encoding of the indices. Given the probabilities of the code vectors, (ideal) lossless coding provides the most efficient bit assignment that allows unique decoding, and this rate is precisely the entropy of the indices. Code vectors in regions of high probability density receive short codewords and code vectors in regions of low probability density receive long codewords.
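The relation between index probabilities, codeword lengths, and the index entropy can be checked with a few lines of code. This is a minimal sketch; the probability tables are hypothetical, and the Shannon code lengths (the ceiling of −log₂ p) stand in for an ideal lossless coder.

```python
import math

def index_entropy(probs):
    """Entropy of the index distribution in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0.0)

def shannon_lengths(probs):
    """Integer codeword lengths ceil(-log2 p), which satisfy the Kraft inequality."""
    return [math.ceil(-math.log2(p)) for p in probs]

def mean_length(probs):
    return sum(p * n for p, n in zip(probs, shannon_lengths(probs)))

# Hypothetical dyadic probabilities: the mean length equals the entropy.
dyadic = [0.5, 0.25, 0.125, 0.0625, 0.0625]
dyadic_entropy = index_entropy(dyadic)
dyadic_mean = mean_length(dyadic)

# Hypothetical non-dyadic probabilities: the mean length is within one bit.
general = [0.4, 0.3, 0.2, 0.1]
general_entropy = index_entropy(general)
general_mean = mean_length(general)
kraft_sum = sum(2.0 ** -n for n in shannon_lengths(general))
```

High-probability indices receive short codewords and low-probability indices long ones; coding sequences of indices jointly, as in arithmetic coding, closes the remaining gap to the entropy.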

An important result that we have found for both the constrained-resolution and constrained-entropy cases is that the structure of the codebook is independent of the overall rate. The code-vector density simply increases as 2^R (cf. (14.9) and (14.11), respectively) anywhere in ℝ^k. Furthermore, for the constrained-entropy case, the code vector density depends on the probability density only through the global variable h(X^k).

Coding Speech with a Model Family

Although the quantization model of Sect. 14.3.3 provides interesting results, a general implementation of a codebook for the random speech vector X^k leads to practical problems, except for small k. For the constrained-resolution case, larger values of k lead to codebook sizes that do not allow for practical training procedures or storage on conventional media. For the constrained-entropy case, the codebook itself need not be stored, but we need access to the probability density of the codebook entries to determine the corresponding codewords (either offline or through computation during encoding). We can resolve these practical coding problems by using a model of the density. Importantly, to simplify the computational effort, we do not assume that the model is an accurate representation of the density of the speech signal vector; we simply make a best effort given the tools we have.

The model-based approach towards reducing computational complexity is suggested by the mixture model that we discussed in Sect. 14.3.1. If we classify each speech vector first as corresponding to a particular sound, then we can specify a probability density for that sound. A signal model specifies the probability density, typically by means of a formula for the probability density. The probability densities of the models are typically selected to be relatively simple. The signal models reduce computational complexity, either because they reduce codebook size or because the structural simplicity of the model simplifies the lossless coder. We consider models of a similar structure to be members of a model family. The selection of a particular model from the family is made by specifying model parameters.

Statistical signal models are commonly used in speech coding, with autoregressive modeling (generally referred to as linear prediction coding methods) perhaps being the most common. In this section, we discuss the selection of a particular model from the model family (i.e., the selection of the model parameters) and the balance in bit allocation between the model and the specification of the speech vector.

Our starting point is that a family of signal models is available for the coding operation. The model family can be any model family that provides a probability assignment for the speech vector x^k. We discuss relevant properties for coding with signal models. We do not make the assumption that the resulting coding method is close to a theoretical performance bound on the rate versus distortion trade-off. As said, we also do not make an assumption about the appropriateness of the signal model family for the speech signal. The model probabilities may not be accurate. However, it is likely that models that are based on knowledge of speech production result in better performance.

The reasoning below is based on the early descriptions of the minimum description length (MDL) principle for finding signal models [14.29,30,31]. These methods separate a code for the model and a code for the signal realization, making them relevant to practical speech coding methods whereas later MDL methods use a single code. Differences from the MDL work include a stronger focus on distortion, and the consideration of the constrained-resolution case, which is of no interest to modeling theory.

Constrained-Entropy Case

First we consider the constrained-entropy case, i.e., we consider the case of a uniform codebook. Each speech vector is encoded with a codebook where each cell is of identical volume, which we denote as V. Let the model distribution be specified by a set of model parameters, θ. We consider the models to have a probability density, which means that a particular parameter set θ corresponds to a particular realization of a random parameter vector Θ. We write the probability density of X^k assuming the particular parameter set θ as f(x^k | θ). The corresponding overall model density is

$$\tilde f(x^k) = \sum_{\theta} p_{\Theta}(\theta)\, f(x^k \mid \theta), \qquad (14.12)$$

where the summation is over all parameter sets. The advantage of selecting and then using models f(x^k | θ) from the family over using the composite model density f̃(x^k) is a decrease of the computational effort.

The quantization model of Sect. 14.3.3, and in particular (14.4) and (14.10), shows that the constrained-entropy encoding of a vector x^k assuming the model with parameters θ requires −log₂[V f(x^k | θ)] bits. In addition, the decoder must receive side information specifying the model.

Let θ̂(x^k) be the parameter vector that maximizes f(x^k | θ) and, thus, minimizes the bit allocation −log₂[V f(x^k | θ)]. That is, θ̂(x^k) is the maximum-likelihood model (from the family) for encoding the speech vector x^k. The random speech vectors X^k do not form a countable set and, as a result, the random parameter vector θ̂(X^k) generally does not form a countable set for conventional model families such as autoregressive models. To encode the model, we must discretize it.

To facilitate transmission of the random model index, J, the model parameters must be quantized and we write the random parameter set corresponding to random index J as θ(J). If p J (j) is a prior probability of the model index, the overall bit allocation for the vector x k when encoded with model j is

$$l = -\log_2 p_J(j) + \log_2 \frac{f(x^k \mid \hat\theta)}{f(x^k \mid \theta(j))} - \log_2\!\left[V f(x^k \mid \hat\theta)\right], \qquad (14.13)$$

where the term log₂[f(x^k | θ̂)/f(x^k | θ(j))] represents the additional (excess) bit allocation required to encode x^k with model j over the bit allocation required to encode x^k with the true maximum-likelihood model from the model family.

With some abuse of notation, we denote by j(x k) the function that provides the index for a given speech vector x k. In the following, we assume that the functions θ(j) and j(x k) minimize l. That is, we quantize θ so as to minimize the total number of bits required to encode x k.

We are interested in the bit allocation that results from averaging over the probability density of the speech vectors,

$$E\{L\} = E\{-\log_2 p_J(J)\} + E\!\left\{\log_2 \frac{f(X^k \mid \hat\theta)}{f(X^k \mid \theta(J))}\right\} + E\!\left\{-\log_2\!\left[V f(X^k \mid \hat\theta)\right]\right\}, \qquad (14.14)$$

where E{⋅} indicates averaging over the speech vector probability density and where L is the random bit allocation that has l as realization. In (14.14), the first term describes the mean bit allocation to specify the model, the second term specifies the mean excess in bits required to encode X^k assuming θ(j(x^k)) instead of assuming the optimal θ̂, and the third term specifies the mean number of bits required to encode X^k if the optimal model is available. Importantly, only the third term contains the cell volume that determines the mean distortion of the speech vectors through (14.7).

Assuming validity of the encoding model of Sect. 14.3.3, the optimal trade-off between the bit allocation for the model index and the bit allocation for the speech vectors X k depends only on the mean of

$$-\log_2 p_J(j) + \log_2 \frac{f(x^k \mid \hat\theta)}{f(x^k \mid \theta(j))}, \qquad (14.15)$$

which is referred to as the index of resolvability [14.32]. The goal is to find the functions θ(⋅) and j(⋅) that minimize the index of resolvability over the ensemble of speech vectors. An important consequence of our logic is that these functions, and therefore the rate allocation for the model index, are dependent only on the excess rate and the probability of the quantized model. As the third term of (14.14) is missing, no relation to the speech distortion exists. That is, the rate allocation for the model index J is independent of distortion and overall bit rate. While the theory is based on assumptions that are accurate only for high bit rates, this suggests that the bit allocation for the parameters becomes proportionally more important at low rates.
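Model selection by the index of resolvability can be sketched for a toy model family. The example below assumes a hypothetical family of zero-mean i.i.d. Gaussian models that differ only in their variance, with an assumed prior over the quantized variances; none of these choices come from the chapter. For each candidate model j it adds the bits needed to specify the model, −log₂ p_J(j), to the excess bits relative to the maximum-likelihood model.

```python
import math

def log2_gaussian_likelihood(x, variance):
    """log2 of the i.i.d. zero-mean Gaussian density of the vector x."""
    k = len(x)
    energy = sum(v * v for v in x)
    nats = -0.5 * k * math.log(2.0 * math.pi * variance) - 0.5 * energy / variance
    return nats / math.log(2.0)

def select_model(x, variances, priors):
    """Return the model index that minimizes the index of resolvability."""
    ml_variance = sum(v * v for v in x) / len(x)     # maximum-likelihood model
    best_log2 = log2_gaussian_likelihood(x, ml_variance)
    scores = []
    for var_j, p_j in zip(variances, priors):
        excess_bits = best_log2 - log2_gaussian_likelihood(x, var_j)
        scores.append(-math.log2(p_j) + excess_bits)
    best_j = min(range(len(scores)), key=scores.__getitem__)
    return best_j, scores

# Hypothetical speech vector, quantized model set, and model prior.
x = [0.9, -1.1, 1.0, -1.0]
variances = [0.25, 1.0, 4.0]
priors = [0.25, 0.5, 0.25]
best_j, scores = select_model(x, variances, priors)
```

The cell volume V does not appear anywhere in the selection, which mirrors the observation that the model choice is independent of the distortion selected for the speech vector.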

The fixed entropy for the model index indicates, for example, that for the commonly used linear-prediction-based speech coders, the rate allocation for the linear prediction parameters is independent of the overall rate of the coder. As constrained-entropy coding is not commonly used for predictive coding, this result is not immediately applicable to conventional speech coders. However, the new result we derive below is applicable to such coders.

Constrained-Resolution Case

Most current speech coders were designed with a constrained-resolution (fixed-rate) constraint, making it useful to study modeling in this context. We need some preliminary results. For a given model, with parameter set θ, and optimal code vector density, the average distortion over a quantization cell centered at location x k can be written

where we have used (14.7) and (14.9). We take the expectation of (14.16) with respect to the true probability density function of the speech vectors and obtain the mean distortion for the constrained-resolution case:

Equation (14.17) can be rewritten as

We assume that k is sufficiently large that, in the region where the probability density is significant, we can use the expansion u ≈ 1 + log(u) and write

Having completed the preliminaries, we now consider the encoding of a speech vector x k. Let L (m) be the fixed bit allocation for the model index. The total rate is then

The form of (14.20) shows that, given the assumptions made, we can define an equivalent codeword length for each speech codebook entry. The equivalent codeword length represents the spatial variation of the distortion. Note that this equivalent codeword length does not correspond to the true codeword length of the speech vector codebook, which is fixed for the constrained-resolution case. For a particular codebook vector x^k, the equivalent codeword length is

Similarly to the constrained-entropy case, we can decompose (14.21) into a rate component that relates to the encoding of the model parameters, a component that describes the excess equivalent rate resulting from limiting the precision of the model parameters, and a rate component that relates to optimal encoding with optimal (uncoded) model parameters:

We can identify the last two terms as the bit allocation for x k for the optimal constrained-resolution model for the speech vector x k. The second term is the excess equivalent bit allocation required to encode the speech vector with model j over the bit allocation required for the optimal model from the model family. The first two terms determine the trade-off between the bits spent on the model, and the bits spent on the speech vectors. These two terms form the index of resolvability for the constrained-resolution case:

As for the constrained-entropy case, the optimal set of functions θ(j) and j(x^k) (and, therefore, the bit allocation for the model) are dependent only on the speech vector density for the constrained-resolution case. The rate for the model is independent of the distortion selected for the speech vector and of the overall rate. With increasing k, the second terms in (14.23) and (14.15) become identical. That is, the expression for the excess rate for using the quantized model parameters corresponding to model j instead of the optimal parameters is identical.

The independence of the model-parameter bit allocation of the overall codec rate for the constrained-entropy case is of great significance for practical coding systems. We emphasize again that this result is valid only under the assumptions made in Sect. 14.3.3. We expect the independence to break down at lower rates, where the codebook describing the speech cannot be approximated by a density.

The results described in this section are indeed supported, at least qualitatively, by the configuration of practical coders. Table 14.1 shows the most important bit allocations used in the adaptive-multirate wideband (AMR-WB) speech coder [14.4]. The AMR-WB coder is a constrained-resolution coder. It is seen that the design of the codec satisfies the predicted behavior: the bit allocation for the model parameters is essentially independent of the rate of the codec, except at low rates.

Model-Based Coding

In signal-model-based coding we assume the family is known to the encoder and decoder. An index to the specific model is transmitted. Each model corresponds to a unique speech-domain codebook. The advantage of the model-based approach is that the structure of the density is simplified (which is advantageous for constrained-entropy coding) and that the required number of codebook entries for the constrained-resolution case is smaller. This facilitates searching through the codebook and/or the definition of the lossless coder.

The main result of this section is that we can determine the set of codebooks for the models independently of the overall rate (and speech-vector distortion). The result is consistent with existing results. The result of this section leads to fast codec design as there is no need to check the best trade-off in bit allocation between model and signal quantization.

When encoding with a model-based coder it is advantageous first to identify the best model, encode the model index j, and then encode the signal using the codebook that is associated with that particular model j. The model selection can be made based on the index of resolvability.

Coding with Autoregressive Models

We now apply the methods of Sect. 14.3 to a practical model family. Autoregressive model families are commonly used in speech coding. In speech coding this class of coders is generally referred to as being based on linear prediction. We discuss coding based on a family that consists of a set of autoregressive models of a particular order (denoted as p). To match current practice, we consider the constrained-resolution case.

We first formulate the index of resolvability in terms of a spectral formulation of the autoregressive model. We show that this corresponds to the definition of a distortion measure for the model parameters. The distortion measure is approximated by the commonly used Itakura-Saito and log spectral distortion measures. Thus, starting from a squared error criterion for the speech signal, we obtain the commonly used (e.g., [14.33,34,35,36,37]) distortion measures for the linear-prediction parameters. Finally, we show that our reasoning leads to an estimate for the bit allocation for the model. We discuss how this result relates to results on autoregressive model estimation.

Spectral-Domain Index of Resolvability

Our objective is to encode a particular speech vector x k using the autoregressive model. To facilitate insight, it is beneficial to make a spectral formulation of the problem. To this purpose, we assume that k is sufficiently large to neglect edge effects. Thus, we neglect the difference between circular and linear convolution.

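The autoregressive model assumption implies that x^k has a multivariate Gaussian probability density

$$f(x^k \mid \theta) = \frac{1}{\sqrt{(2\pi)^k \det(R_\theta)}} \exp\!\left(-\tfrac{1}{2}\,(x^k)^{H} R_\theta^{-1} x^k\right), \qquad (14.24)$$

where R_θ is the model autocorrelation matrix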

where A is a lower-triangular Toeplitz matrix with first column σ[1, a_1, a_2, ⋯, a_p, 0, ⋯, 0]^T, where the a_i are the autoregressive model parameters (linear-prediction parameters), p is the autoregressive model order, and the superscript H denotes the Hermitian transpose. Thus, the set of model parameters is θ = {σ, a_1, ⋯, a_p}. We note that typically p = 10 for an 8 kHz sampling rate and p = 16 for 12 kHz and 16 kHz sampling rates.

When k is sufficiently large, we can perform our analysis in terms of power spectral densities. The transfer function of the autoregressive model is

where σ is a gain. This corresponds to the model power spectral density

In the following, we make the standard assumption that A(z) is minimum-phase.
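The model power spectral density and the minimum-phase assumption can be made concrete with a short sketch. It assumes the common convention that the model transfer function is σ/A(z) with A(z) = 1 + a_1 z^{-1} + ⋯ + a_p z^{-p}, so that the power spectral density is σ²/|A(e^{iω})|²; the function names and the first-order example are hypothetical.

```python
import numpy as np

def ar_power_spectrum(a, sigma, n_freq=512):
    """Return frequencies on [0, pi) and sigma^2 / |A(e^{i w})|^2 (assumed form)."""
    omega = np.arange(n_freq) * np.pi / n_freq
    coeffs = np.concatenate(([1.0], np.asarray(a, dtype=float)))
    # Evaluate A(e^{i w}) = sum_n coeffs[n] * exp(-i w n) on the frequency grid.
    a_of_omega = np.exp(-1j * np.outer(omega, np.arange(len(coeffs)))) @ coeffs
    return omega, sigma**2 / np.abs(a_of_omega) ** 2

def is_minimum_phase(a):
    """True if all roots of z^p A(z) lie strictly inside the unit circle."""
    roots = np.roots(np.concatenate(([1.0], np.asarray(a, dtype=float))))
    return bool(np.all(np.abs(roots) < 1.0))

# Hypothetical first-order model A(z) = 1 - 0.9 z^{-1} with unit gain.
a_hyp = [-0.9]
omega, psd = ar_power_spectrum(a_hyp, sigma=1.0)
stable = is_minimum_phase(a_hyp)
unstable = is_minimum_phase([-1.5])
```

At ω = 0 this example gives |A|² = 0.01, so the spectrum peaks at 100, which is the low-pass behavior expected of a pole near z = 0.9.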

Next we approximate (14.24) in terms of power spectral densities and the transfer function of the autoregressive model. Using Szegöʼs theorem [14.38], it is easy to show that, asymptotically in k,

We also use the asymptotic equality

where x(z) = ∑_{i=0}^{k−1} x_i z^{−i} for x^k = (x_1, ⋯, x_k).
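The asymptotic replacement of matrix expressions by spectral integrals can be checked numerically. The sketch below assumes the standard Szegő-type statement that (1/k) log det R_θ approaches the frequency average of log R_θ(e^{iω}) as k grows, and uses a hypothetical first-order autoregressive process x[n] = −a_1 x[n−1] + σ e[n] for which the autocorrelation is known in closed form.

```python
import numpy as np

def ar1_autocorrelation_matrix(a1, sigma, k):
    """Toeplitz autocorrelation matrix of x[n] = -a1 x[n-1] + sigma e[n]."""
    lags = np.abs(np.subtract.outer(np.arange(k), np.arange(k)))
    return sigma**2 / (1.0 - a1**2) * (-a1) ** lags

def mean_log_spectrum(a1, sigma, n_freq=4096):
    """Frequency average of log(sigma^2 / |1 + a1 e^{-i w}|^2)."""
    omega = 2.0 * np.pi * np.arange(n_freq) / n_freq
    psd = sigma**2 / np.abs(1.0 + a1 * np.exp(-1j * omega)) ** 2
    return float(np.mean(np.log(psd)))

# Hypothetical parameters; k plays the role of the speech vector dimension.
a1, sigma, k = -0.9, 2.0, 400
sign, logdet = np.linalg.slogdet(ar1_autocorrelation_matrix(a1, sigma, k))
per_sample_logdet = logdet / k
spectral_average = mean_log_spectrum(a1, sigma)
```

For this process the two quantities differ by a term that decays as 1/k, which illustrates why edge effects can be neglected for sufficiently large k.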

Equations (14.28) and (14.29) can be used to rewrite the multivariate density of (14.24) in terms of power spectral densities. It is convenient to write the log density:

We use (14.30) to find the index of resolvability for the constrained-resolution case. We make the approximation that k is sufficiently large that it is reasonable to approximate the exponent k/(k + 2) by unity in (14.23). This implies that we do not have to consider the normalization in this equation. Inserting (14.30) into (14.23) results in

Finding the maximum-likelihood estimate θ̂ of the autoregressive model given a data vector x^k is a well-understood problem, e.g., [14.39,40]. The predictor parameter estimate of the standard Yule-Walker solution method has the same asymptotic density as the maximum-likelihood estimate [14.41].
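The Yule-Walker estimate mentioned above can be sketched as follows. The sketch assumes the sign convention x[n] + a_1 x[n−1] + ⋯ + a_p x[n−p] = e[n], uses the biased autocorrelation estimate, and solves the normal equations directly rather than with the Levinson-Durbin recursion; the test signal is a hypothetical second-order process.

```python
import numpy as np

def yule_walker(x, order):
    """Estimate AR coefficients a and the residual variance from the data x."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    # Biased autocorrelation estimates r[0..order].
    r = np.array([np.dot(x[: n - m], x[m:]) / n for m in range(order + 1)])
    toeplitz = r[np.abs(np.subtract.outer(np.arange(order), np.arange(order)))]
    a = np.linalg.solve(toeplitz, -r[1:])
    residual_variance = r[0] + np.dot(a, r[1:])
    return a, residual_variance

# Hypothetical AR(2) process with A(z) = 1 - 1.2 z^{-1} + 0.5 z^{-2}.
rng = np.random.default_rng(0)
true_a = np.array([-1.2, 0.5])
e = rng.standard_normal(50000)
x = np.zeros_like(e)
for n in range(len(e)):
    past1 = x[n - 1] if n >= 1 else 0.0
    past2 = x[n - 2] if n >= 2 else 0.0
    x[n] = e[n] - true_a[0] * past1 - true_a[1] * past2

a_hat, var_hat = yule_walker(x, order=2)
```

With a long data record the estimate lies close to the true parameters; for the short blocks used in speech coding the estimation error is larger.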

To find the optimal bit allocation for the model we have to minimize the expectation of (14.31) over the ensemble of all speech vectors. We study the behavior of this minimization. For notational convenience we define a cost function

Let θ be a particular model from a countable model set 풞 Θ (L (m)) with a bit allocation L (m) for the model. Finding the optimal model set 풞 Θ (L (m)) is then equivalent to

If we write

then (14.33) becomes

If we interpret D(L^(m)) as a minimum mean distortion, minimizing (14.35) is equivalent to finding a particular point on a rate-distortion curve. We can minimize the cost function of (14.34) for all L^(m) and then select the L^(m) that minimizes the overall expression of (14.35). Thus only one particular distortion level, corresponding to one particular rate, is relevant to our speech coding system. This distortion-rate pair for the model is dependent on the distribution of the speech models. Assuming that D(L^(m)) is once differentiable with respect to L^(m), (14.35) shows that its derivative should be −1 at the optimal rate for the model.
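The slope condition can be illustrated with a toy distortion-rate function. The sketch below assumes that the quantity to be minimized is the sum L^(m) + D(L^(m)) with both terms in the same units, and uses a hypothetical exponential curve D(L) = c·exp(−2L/κ); the constants c_hyp and kappa_hyp are illustrative only and are not the values reported in the chapter.

```python
import math

def model_distortion(rate_nats, c_hyp, kappa_hyp):
    """Hypothetical distortion-rate curve D(L) = c * exp(-2 L / kappa)."""
    return c_hyp * math.exp(-2.0 * rate_nats / kappa_hyp)

def best_model_rate(c_hyp, kappa_hyp, step=1e-3, max_rate=40.0):
    """Grid search for the rate L that minimizes L + D(L)."""
    n = int(max_rate / step)
    rates = [i * step for i in range(n + 1)]
    return min(rates, key=lambda r: r + model_distortion(r, c_hyp, kappa_hyp))

c_hyp, kappa_hyp = 50.0, 7.0
rate_numeric = best_model_rate(c_hyp, kappa_hyp)
# Setting the derivative of L + D(L) to zero gives D'(L) = -1.
rate_closed_form = 0.5 * kappa_hyp * math.log(2.0 * c_hyp / kappa_hyp)
slope = -2.0 * c_hyp / kappa_hyp * math.exp(-2.0 * rate_numeric / kappa_hyp)
```

The numerically found rate agrees with the closed-form solution, and the slope of the distortion curve at that rate is −1, independent of any rate spent on the speech vector itself.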

A Criterion for Model Selection

We started with the notion of using an autoregressive model family to quantize the speech signal. We found that we could do so by first finding the maximum-likelihood estimate θ̂ of the autoregressive model parameters, then selecting from a set of models 𝒞_Θ(L^(m)) the model nearest to the maximum-likelihood model based on the cost function of (14.32), and then quantizing the speech given the selected model. As quantization of the predictor parameters corresponds to our model selection, it is then relevant to compare the distortion measure of (14.32) with the distortion measures that are commonly used for the linear-prediction parameters in existing speech coders.

To provide insight, it is useful to write the power spectral density of the speech vector as the product of the maximum-likelihood model spectrum and R_w(e^{iω}), where R_w(e^{iω}) represents a remainder power-spectral density that captures the spectral error of the maximum-likelihood model. If the model family is of low order, then R_w(e^{iω}) includes the spectral fine structure. We can rewrite (14.32) as

Interestingly, (14.36) reduces to the well-known Itakura-Saito criterion [14.42] if R w (eiω) is set to unity.

It is common (e.g., [14.43]) to relate different criteria through the series expansion u = 1 + log(u) + ½[log(u)]² + ⋯. Assuming small differences between the optimal model θ̂ and the model θ from the set, (14.36) can be written

Equation (14.37) needs to be accurate only for nearest neighbors of θ̂.

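We can simplify (14.37) further. With our assumptions for the autoregressive models, R_θ(z) is related to monic minimum-phase polynomials through (14.27). Together with the further assumption that their gains σ are identical (i.e., the gain is not considered here), this implies that

$$\frac{1}{2\pi}\int_{-\pi}^{\pi} \log\frac{R_{\hat\theta}(e^{i\omega})}{R_{\theta}(e^{i\omega})}\,d\omega = 0. \qquad (14.38)$$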

This means that we can rewrite (14.37) as

Equation (14.39) forms the basic measure that must be optimized for the selection of the model from a set of models, i.e., for the optimal quantization of the model parameters.
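The simplification used above rests on a property of monic minimum-phase polynomials: the frequency average of log|A(e^{iω})|² is zero, so the mean log ratio of two model spectra with equal gains vanishes. The following sketch checks this numerically for two hypothetical second-order polynomials.

```python
import numpy as np

def mean_log_magnitude_squared(a, n_freq=8192):
    """Frequency average of log |A(e^{i w})|^2 for A(z) = 1 + sum_n a[n] z^{-n-1}."""
    omega = 2.0 * np.pi * np.arange(n_freq) / n_freq
    coeffs = np.concatenate(([1.0], np.asarray(a, dtype=float)))
    a_of_omega = np.exp(-1j * np.outer(omega, np.arange(len(coeffs)))) @ coeffs
    return float(np.mean(np.log(np.abs(a_of_omega) ** 2)))

# Two hypothetical monic minimum-phase polynomials (roots inside the unit circle).
a_first = [-1.2, 0.5]
a_second = [-0.5, 0.3]
mean_first = mean_log_magnitude_squared(a_first)
mean_second = mean_log_magnitude_squared(a_second)

# With equal gains, the mean log spectral ratio is the difference of the two means.
mean_log_ratio = mean_second - mean_first
```

Because both averages vanish, the first-order term of the series expansion drops out and only the second-order (squared log ratio) term remains.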

If we can neglect the impact of R w (z), then (using the result of (14.38)) minimizing (14.39) is equivalent to minimizing

$$\frac{k}{4}\,\frac{1}{2\pi}\int_{-\pi}^{\pi} \left[\log\frac{R_{\hat\theta}(e^{i\omega})}{R_{\theta}(e^{i\omega})}\right]^{2} d\omega, \qquad (14.40)$$

which is the well-known mean squared log spectral distortion, scaled by the factor k/4. Except for this scaling factor, (14.39) is precisely the criterion that is commonly used (e.g., [14.35,44,45]) to evaluate performance of quantizers for autoregressive (AR) model parameters. This is not unreasonable as the neglected modeling error R_w(e^{iω}) is likely uncorrelated with the model quantization error.
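The relation between the Itakura-Saito measure and the log spectral distortion can be illustrated for two nearby models. This sketch assumes unit-gain all-pole spectra 1/|A(e^{iω})|², the standard Itakura-Saito form (ratio minus log ratio minus one, averaged over frequency), and a hypothetical pair of second-order coefficient sets that differ slightly, as a maximum-likelihood model and its quantized neighbor would.

```python
import numpy as np

def ar_spectrum(a, n_freq=2048):
    """Unit-gain all-pole spectrum 1 / |A(e^{i w})|^2 on a uniform grid."""
    omega = 2.0 * np.pi * np.arange(n_freq) / n_freq
    coeffs = np.concatenate(([1.0], np.asarray(a, dtype=float)))
    a_of_omega = np.exp(-1j * np.outer(omega, np.arange(len(coeffs)))) @ coeffs
    return 1.0 / np.abs(a_of_omega) ** 2

def itakura_saito(r_ref, r_test):
    ratio = r_ref / r_test
    return float(np.mean(ratio - np.log(ratio) - 1.0))

def mean_squared_log_spectral(r_ref, r_test):
    """Mean squared log spectral difference (natural logarithm)."""
    return float(np.mean(np.log(r_ref / r_test) ** 2))

def spectral_distortion_db(r_ref, r_test):
    """Root mean squared log spectral difference in dB."""
    return float(np.sqrt(np.mean((10.0 * np.log10(r_ref / r_test)) ** 2)))

r_ml = ar_spectrum([-1.2, 0.5])            # hypothetical maximum-likelihood model
r_quantized = ar_spectrum([-1.19, 0.495])  # hypothetical quantized neighbor
d_is = itakura_saito(r_ml, r_quantized)
d_log = mean_squared_log_spectral(r_ml, r_quantized)
sd_db = spectral_distortion_db(r_ml, r_quantized)
```

For small model differences the Itakura-Saito value is close to half the mean squared log spectral difference, which is the second-order term of the series expansion; the dB figure is the form in which spectral distortion is usually reported for predictor quantizers.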

Bit Allocation for the Model

The AR model is usually described with a small number of parameters (as mentioned, p = 10 is common for 8 kHz sampling rate). Thus, the spectral data must lie on a manifold of dimension p or less in the log spectrum space. At high bit allocations, where measurement noise dominates (see also the end of Sect. 14.3.3), the manifold dimension is p and the spectral distortion is expected to scale as

where β is a constant and N AR is the number of spectral models in the family and L (m) = log (N AR). At higher spectral distortion levels, it has been observed that the physics of the vocal tract constrains the dimensionality of the manifold. This means that (14.41) is replaced by

with κ < p. This behavior was observed for trained codebooks over a large range in [14.44] (similar behavior was observed for cepstral parameters in [14.46]) and for specific vowels in [14.47]. The results of [14.44] correspond to κ = 7.1 and β = 0.80.

The mean of (14.31) becomes

Differentiating with respect to L^(m), we find the optimal bit allocation for the AR model selection to be

which is logarithmically dependent on k. Using the observed data of [14.44], we obtain an optimal rate of about 17 bits for 8 kHz sampled speech at a 20 ms block size. The corresponding mean spectral distortion is about 1.3 dB. The distortion is similar to the mean estimation errors found in experiments on linear predictive methods on speech sounds [14.48].

The 17 bit requirement for the prediction parameter quantizer is similar to that obtained by the best available prediction parameter quantizers that operate on single blocks and bounds obtained for these methods [14.35,49,50,51,52]. In these systems the lowest bit allocation for 20 ms blocks is about 20 bits. However, the performance of these coders is entirely based on the often quoted 1 dB threshold for transparency [14.35]. The definition of this empirical threshold is consistent with the conventional two-step approach: the model parameters are first quantized using a separately defined criterion, and the speech signal is quantized thereafter based on a weighted squared error criterion. In contrast, we have shown that a single distortion measure operating on the speech vector suffices for this purpose.

We conclude that the definition of a squared-error criterion for the speech signal leads to a bit allocation for the autoregressive model. No need exists to introduce perception based thresholds on log spectral distortion.

Remarks on Practical Coding

The two-stage approach is standard practice in linear-prediction-based (autoregressive-model-based) speech codecs. In the selection stage, weighted squared error criteria in the so-called line-spectral frequency (LSF) representation of the prediction parameters are commonly used, e.g., [14.34,35,36,37]. If the proper weighting is used, then the criterion can be made to match the log spectral distortion measure [14.53] that we derived above.

The second stage is the selection of a speech codebook entry from a codebook corresponding to the selected model. The separation into a set of models simplifies this selection. In general, this means that a speech-domain codebook must be available for each model. It was recently shown that the computational or storage requirements for optimal speech-domain codebooks can be made reasonable by using a single codebook for each set of speech sounds that are similar except for a unitary transform [14.54]. The method takes advantage of the fact that different speech sounds may have similar statistics after a suitable unitary transform and can, therefore, share a codebook. As the unitary transform does not affect the Euclidean distance, it also does not affect the optimality of the codebook.

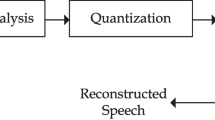

In the majority of codecs the speech codebooks are generated in real time, with the help of the model obtained in the first stage. This approach is the so-called analysis-by-synthesis approach. It can be interpreted as a method that requires the synthesis of candidate speech vectors (our speech codebook), hence the name. Particularly common is the usage of the analysis-by-synthesis approach for the autoregressive model [14.16,55]. While the analysis-by-synthesis approach has proven its merit and is used in hundreds of millions of communication devices, it is not optimal. It was pointed out in [14.54] that analysis-by-synthesis coding inherently results in a speech-domain codebook with quantization cells that have a suboptimal shape, limiting performance.
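The analysis-by-synthesis idea can be sketched in a few lines: each excitation candidate is passed through the synthesis filter of the selected model, and the candidate whose synthesized vector is closest to the target is chosen. The sketch below is a simplified illustration, not the search used in any particular codec; it assumes a unit-gain all-pole filter 1/A(z), a small random excitation codebook, an unweighted squared error, and zero filter memory, all of which are hypothetical choices.

```python
import numpy as np

def synthesize(excitation, a):
    """All-pole filtering with zero initial state: y[n] = e[n] - sum_i a[i] y[n-1-i]."""
    y = np.zeros(len(excitation))
    for n in range(len(excitation)):
        acc = excitation[n]
        for i, a_i in enumerate(a):
            if n - 1 - i >= 0:
                acc -= a_i * y[n - 1 - i]
        y[n] = acc
    return y

def analysis_by_synthesis(target, excitation_codebook, a):
    """Return the index of the excitation whose synthesis is closest to the target."""
    errors = [float(np.sum((target - synthesize(c, a)) ** 2))
              for c in excitation_codebook]
    return int(np.argmin(errors)), errors

# Hypothetical model, excitation codebook (16 entries of 40 samples), and target.
rng = np.random.default_rng(1)
a_hyp = [-1.2, 0.5]
codebook = rng.standard_normal((16, 40))
target = synthesize(codebook[5], a_hyp) + 0.01 * rng.standard_normal(40)
best_index, errors = analysis_by_synthesis(target, codebook, a_hyp)
```

The speech-domain codebook is never stored: it is generated on the fly by filtering the excitation entries, which is what makes the approach practical and also what fixes the shape of the resulting quantization cells.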

Distortion Measures and Coding Architecture

An objective of coding is the removal of irrelevancy. This means that precision is lost and that we introduce a difference between the original and the decoded signal, the error signal. So far we have considered basic quantization theory and how modeling can be introduced in this quantization structure. We based our discussion on a mean squared error distortion measure for the speech vector. As discussed in Sect. 14.2.2, the proper measure is the decrease in signal quality as perceived by human listeners. That is, the goal in speech coding is to minimize the perceived degradation resulting from an encoding at a particular rate. This section discusses methods for integrating perceptually motivated criteria into a coding structure.

To base coding on perceived quality degradation, we must define an appropriate quantitative measure of the perceived distortion. Reasonable objectives for a good distortion measure for a speech codec are a good prediction of experimental data on human perception, mathematical tractability, low delay, and low computational requirements.

A major aspect in the definition of the criterion is the representation of the speech signal the distortion measure operates on. Most straightforward is to quantize the speech signal itself and use the distortion measure as a selection criterion for code vectors and as a means to design the quantizers. This coding structure is commonly used in speech coders based on linear-predictive coding. An alternative coding structure is to apply a transform towards a domain that facilitates a simple distortion criterion. Thus, in this approach, we first perform a mapping to a perceptual domain (preprocessing) and then quantize the mapped signal in that domain. At the decoder we apply the inverse mapping (postprocessing). This second architecture is common in transform coders aimed at encoding audio signals at high fidelity.

We start this section with a subsection discussing the squared error criterion, which is commonly used because of its mathematical simplicity. In Subsects. 14.5.2 and 14.5.3 we then discuss models of perception and how the squared error criterion can be used to represent these models. We end the section with a subsection discussing in some more detail the various coding architectures.

Squared Error

The squared-error criterion is commonly used in coding, often without proper physical motivation. Such usage results directly from its mathematical tractability. Given a data sequence, optimization of the model parameters for a model family often leads to a set of linear equations that is easily solved.

For the k-dimensional speech vector x k, the basic squared-error criterion is

where the superscript ‘H’ denotes the Hermitian conjugate and x̂^k is the reconstruction vector upon encoding and decoding. Equation (14.45) quantifies the variance of the signal error. Unfortunately, variance cannot be equated to loudness, which is the psychological correlate of variance. At most we can expect that, for a given original signal, a scaling of the error signal leads to a positive correlation between perceived distortion and squared error.

While the squared error in its basic form is not representative of human perception, adaptive weighting of the squared-error criterion can lead to improved correspondence. By means of weighting we can generalize the squared-error criterion to a form that allows inclusion of knowledge of perception (the formulation of the weighted squared-error criterion for a specific perceptual model is described in Subsects. 14.5.2 and 14.5.3). To allow the introduction of perceptual effects, we linearly weight the error vector $x^k - \hat{x}^k$ and obtain

$$d(x^k, \hat{x}^k) = (x^k - \hat{x}^k)^{\mathrm{H}} H^{\mathrm{H}} H (x^k - \hat{x}^k), \tag{14.46}$$

where $H$ is an $m \times k$ matrix and $m$ depends on the weighting invoked. As we will see below, many different models of perception can be approximated with the simple weighted squared-error criterion of (14.46). In general, the weighting matrix $H$ adapts to $x^k$, that is, $H = H(x^k)$, and

$$y^m = H(x^k)\, x^k \tag{14.47}$$

can be interpreted as a perceptual-domain representation of the signal vector for a region of $x^k$ where $H(x^k)$ is approximately constant.
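As a minimal sketch of the weighted criterion, the following Python fragment evaluates the squared norm of the weighted error vector, which equals the quadratic form of (14.46). The function names, the 2-by-3 weighting matrix, and the signal values are hypothetical and chosen only for illustration; with an identity weighting the result reduces to the basic squared error.

```python
def mat_vec(H, v):
    """Multiply an m-by-k matrix (given as a list of rows) with a length-k vector."""
    return [sum(h * x for h, x in zip(row, v)) for row in H]


def weighted_squared_error(H, x, x_hat):
    """Return ||H (x - x_hat)||^2, i.e. (x - x_hat)^H H^H H (x - x_hat)."""
    err = [a - b for a, b in zip(x, x_hat)]
    y = mat_vec(H, err)
    return sum(abs(v) ** 2 for v in y)


# Hypothetical values, for illustration only.
H = [[1.0, 0.0, 0.0],
     [0.5, 1.0, 0.0]]
identity = [[1.0, 0.0, 0.0],
            [0.0, 1.0, 0.0],
            [0.0, 0.0, 1.0]]
x = [1.0, 2.0, 3.0]
x_hat = [0.0, 2.0, 3.0]

basic = weighted_squared_error(identity, x, x_hat)   # unweighted squared error
weighted = weighted_squared_error(H, x, x_hat)       # weighted squared error
```

Evaluating the criterion requires one matrix-vector product per candidate reconstruction, which illustrates why the weighting adds to the computational cost of a codebook search.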

The inclusion of the matrix H in the formulation of the squared-error criterion generally results in a significantly higher computational complexity for the evaluation of the criterion. Perhaps more importantly, when the weighted criterion of (14.46) is adaptive, then the optimal distribution of the code vectors (Sect. 14.3.3) for constrained-entropy coding is no longer uniform in the speech domain. This has significant implications for the computational effort of a coding system.

The formulation of (14.46) is commonly used in coders that are based on an autoregressive model family, i.e., linear-prediction-based analysis-by-synthesis coding [14.16]. (The matrix $H$ then usually includes the autoregressive model, as the speech codebook is defined as a filtering of an excitation codebook.) Also in the context of this class of coders, the vector $H x^k$ can be interpreted as a perceptual-domain vector. However, because $H$ is a function of $x^k$ it is not straightforward to define a codebook in this domain.

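The perceptual weighting matrix $H$ often represents a filter operation. For a filter with impulse response $[h_0, h_1, h_2, \cdots]$, the matrix $H$ has a Toeplitz structure:

$$H = \begin{pmatrix} h_0 & 0 & 0 & \cdots \\ h_1 & h_0 & 0 & \cdots \\ h_2 & h_1 & h_0 & \cdots \\ \vdots & \vdots & \vdots & \ddots \end{pmatrix}. \tag{14.48}$$

For computational reasons, it may be convenient to make the matrix $H^{\mathrm{H}} H$ Toeplitz. If the impulse response has time support $p$ then $H^{\mathrm{H}} H$ is Toeplitz if $H$ is selected to have dimension $(m + p) \times m$ [14.19].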
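The effect of the matrix dimension on the Toeplitz property can be checked with a small sketch. The helper names and the three-tap impulse response below are hypothetical. The code builds the lower-triangular filtering matrix once with the extra rows that hold the filter tail and once truncated to a square matrix, and then tests whether the product of the transposed matrix with the matrix itself has constant diagonals (the real-valued case is assumed, so the Hermitian conjugate is a plain transpose).

```python
def convolution_matrix(h, n_cols, full=True):
    """Lower-triangular Toeplitz filtering matrix for impulse response h.

    full=True keeps all len(h) + n_cols - 1 output rows (including the filter tail);
    full=False truncates to a square n_cols-by-n_cols matrix.
    """
    n_rows = len(h) + n_cols - 1 if full else n_cols
    return [[h[r - c] if 0 <= r - c < len(h) else 0.0 for c in range(n_cols)]
            for r in range(n_rows)]


def gram(H):
    """Return H^T H for a real matrix H given as a list of rows."""
    n = len(H[0])
    return [[sum(row[i] * row[j] for row in H) for j in range(n)]
            for i in range(n)]


def is_toeplitz(M, tol=1e-12):
    """True if every diagonal of the square matrix M is constant."""
    n = len(M)
    return all(abs(M[i][j] - M[i - 1][j - 1]) <= tol
               for i in range(1, n) for j in range(1, n))


h = [1.0, 0.5, 0.25]                               # hypothetical impulse response
H_full = convolution_matrix(h, 4, full=True)       # 6-by-4, tail rows included
H_square = convolution_matrix(h, 4, full=False)    # 4-by-4, tail truncated

full_is_toeplitz = is_toeplitz(gram(H_full))
square_is_toeplitz = is_toeplitz(gram(H_square))
```

With the tail rows included, every entry of the product depends only on the lag between its row and column (it is the autocorrelation of the impulse response), whereas truncation makes the later columns shorter and breaks this structure.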

Let us consider how the impulse response $[h_0, h_1, h_2, \cdots]$ of (14.48) is typically constructed for the case of linear-predictive coding. The impulse response is constructed from the signal model. Let the transfer function of the corresponding autoregressive model be, as in (14.26),

$$\frac{\sigma}{A(z)}, \tag{14.49}$$

where the coefficients of the polynomial $A(z)$ in $z^{-1}$ are given by the prediction parameters $a_i$ and $\sigma$ is the gain. A weighting that is relatively flexible and has low computational complexity is then [14.56]

$$H(z) = \frac{A(z/\gamma_1)}{A(z/\gamma_2)}, \tag{14.50}$$

where $\gamma_1$ and $\gamma_2$, with $1 \geq \gamma_1 > \gamma_2 > 0$, are parameters that are selected to accurately describe the impact of the distortion on perception. The sequence $[h_0, h_1, h_2, \cdots]$ of (14.48) is now simply the impulse response of $H(z)$. The filter $A(z/\gamma_1)$ deemphasizes the envelope of the power spectral density, which corresponds to decreasing the importance of spectral peaks. The filter $1/A(z/\gamma_2)$ undoes some of this deemphasis for a smoothed version of the spectral envelope. The effect is roughly that $1/A(z/\gamma_2)$ limits the spectral reach of the deemphasis $A(z/\gamma_1)$. In other words, the deemphasis of the spectrum is made into a local effect.
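The construction of the weighting impulse response can be sketched as follows. The sketch assumes that the predictor polynomial is stored as a coefficient list `[1, a_1, ..., a_p]` in powers of $z^{-1}$ (a storage and sign convention chosen here, not taken from the text), in which case replacing $z$ by $z/\gamma$ scales the $i$-th coefficient by $\gamma^i$. The function names, the first-order predictor, and the values of the two parameters are hypothetical.

```python
def bandwidth_expand(a, gamma):
    """Coefficients of A(z/gamma), given a = [1, a_1, ..., a_p] in powers of z^-1."""
    return [coef * gamma ** i for i, coef in enumerate(a)]


def impulse_response(num, den, length):
    """First `length` samples of the impulse response of num(z) / den(z).

    Both polynomials are in powers of z^-1, and den[0] is assumed to equal 1.
    """
    h = []
    for n in range(length):
        acc = num[n] if n < len(num) else 0.0
        # Recursive (all-pole) part of the difference equation.
        for i in range(1, min(n, len(den) - 1) + 1):
            acc -= den[i] * h[n - i]
        h.append(acc)
    return h


def weighting_impulse_response(a, gamma1, gamma2, length):
    """Impulse response of A(z/gamma1) / A(z/gamma2)."""
    return impulse_response(bandwidth_expand(a, gamma1),
                            bandwidth_expand(a, gamma2),
                            length)


a = [1.0, -0.9]   # hypothetical first-order polynomial A(z) = 1 - 0.9 z^-1
h = weighting_impulse_response(a, 1.0, 0.5, 4)
flat = weighting_impulse_response(a, 0.8, 0.8, 4)   # equal parameters: no weighting
```

The resulting samples can be placed into the Toeplitz matrix of (14.48). When the two parameters are equal, numerator and denominator cancel and the weighting reduces to a unit impulse, i.e., the unweighted criterion.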

To understand coders of the transform model family, it is useful to interpret (14.46) in the frequency domain. We write the discrete Fourier transform (DFT) as the unitary matrix $F$ and then define a frequency-domain weighting matrix $W$ such that

$$(x^k - \hat{x}^k)^{\mathrm{H}} H^{\mathrm{H}} H (x^k - \hat{x}^k) = (x^k - \hat{x}^k)^{\mathrm{H}} F^{\mathrm{H}} W^{\mathrm{H}} W F (x^k - \hat{x}^k). \tag{14.51}$$

The matrix $W$ provides a weighting of the frequency-domain vector $F x^k$. If, for the purpose of our discussion, we neglect the difference between circular and linear convolution and if $H$ represents a filtering operation (convolution) as in (14.48), then $W$ is diagonal. To account for perception, we must adapt $W$ to the input vector $x^k$ (or equivalently, to the frequency-domain vector $F x^k$) and it becomes a function $W(x^k)$. Equation (14.50) could be used as a particular mechanism for such weighting. However, in the transform coding context, so-called masking methods, which are described in Sect. 14.5.2, are typically used to find $W(x^k)$.
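The statement that the frequency-domain weighting is diagonal when circular convolution is assumed can be verified numerically. The sketch below, with hypothetical helper names and a hypothetical three-tap impulse response, builds a circular-convolution matrix, transforms it with the unitary DFT matrix, and measures the largest off-diagonal magnitude. It also compares the diagonal with the frequency response of the filter at the DFT bins.

```python
import cmath


def dft_matrix(n):
    """Unitary n-by-n DFT matrix F."""
    scale = 1.0 / n ** 0.5
    return [[scale * cmath.exp(-2j * cmath.pi * r * c / n) for c in range(n)]
            for r in range(n)]


def circulant(h, n):
    """n-by-n circular-convolution matrix whose first column is h, zero padded."""
    col = list(h) + [0.0] * (n - len(h))
    return [[col[(r - c) % n] for c in range(n)] for r in range(n)]


def matmul(A, B):
    """Plain matrix product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def conj_transpose(A):
    """Hermitian conjugate of a matrix given as a list of rows."""
    return [[A[r][c].conjugate() for r in range(len(A))]
            for c in range(len(A[0]))]


n = 8
h = [1.0, 0.5, 0.25]                            # hypothetical impulse response
F = dft_matrix(n)
H = circulant(h, n)
W = matmul(matmul(F, H), conj_transpose(F))     # W = F H F^H, so that H = F^H W F

off_diag = max(abs(W[r][c]) for r in range(n) for c in range(n) if r != c)
freq_resp = [sum(h[t] * cmath.exp(-2j * cmath.pi * r * t / n)
                 for t in range(len(h)))
             for r in range(n)]
diag_err = max(abs(W[r][r] - freq_resp[r]) for r in range(n))
```

Both `off_diag` and `diag_err` are zero up to rounding error, so under the circular-convolution approximation each frequency bin is weighted independently by the filter response at that bin.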

As mentioned before, the random vector $Y^m = H X^k$ (or, equivalently, the vector $W F X^k$) can be considered as a perceptual-domain description. Assuming smooth behavior of $H(x^k)$ as a function of $x^k$, this domain can then be used as the domain for coding. A codebook must be defined for the perceptual-domain vector $Y^m$ and we select entries from this codebook with the unweighted squared-error criterion. This approach is common in transform coding. When this coding in the perceptual domain is used, the distortion measure does not vary with the vector $y^m$, and a uniform quantizer is optimal for $Y^m$ for the constrained-entropy case. If the mapping to the perceptual domain is unique and invertible (which is not guaranteed by the formulation), then $y^m = H(x^k) x^k$ ensures that $x^k$ is specified when $y^m$ is known and only indices to the codebook for $Y^m$ need to be encoded. In practice, the inverse mapping may not be unique, resulting in problems at block boundaries, and the inverse may be difficult to compute. As a result it is common practice to quantize and transmit the weighting $W$, e.g., [14.57,58].

Masking Models and Squared Error

Extensive quantitative knowledge of auditory perception exists and much of the literature on quantitative descriptions of auditory perception relates to the concept of masking, e.g., [14.59,60,61,62,63]. The masking-based description of the operation of the auditory periphery can be used to include the effect of auditory perception in speech and audio coding. Let us define an arbitrary signal that we call the masker. The masker implies a set of second signals, called maskees, which are defined as signals that are not audible when presented in the presence of the masker. That is, the maskee is below the masking threshold. Masking explains, for example, why a radio must be made louder in a noisy environment such as a car. We can think of masking as being a manifestation of the internal precision of the auditory periphery.

In general, laws for the masking threshold are based on psychoacoustic measurements for the masker and maskee signals that are constructed independently and then added. However, it is clear that the coding error is correlated to the original signal. In the context of masking it is a commonly overlooked fact that, for ideal coding, the coding error signal is, under certain common conditions, independent of the reconstructed signal [14.12]. Thus, a reasonable objective of audio and speech coding is to ensure that the coding error signal is below the masking threshold of the reconstructed signal.

Masking is quantified in terms of a so-called masking curve. We provide a generalized definition of such a curve. Let us consider a signal vector $x^k$ with $k$ samples that is defined in $\mathbb{R}^k$. We define a perceived-error measurement domain by any invertible mapping $\mathbb{R}^k \to \mathbb{R}^m$. Let $\{e_i^m\}_{i \in \{0, \cdots, m-1\}}$ be the unit-length basis vectors that span $\mathbb{R}^m$. We then define the $m$-dimensional masking curve as [14.64] $\mathrm{JND}_i$, $i \in \{0, \cdots, m-1\}$, where the scalar $\mathrm{JND}_i$ is the just-noticeable difference (JND) for the basis vector $e_i^m$. That is, the vector $\mathrm{JND}_i\, e_i^m$ is precisely at the threshold of being audible for the given signal vector.

Examples of the masking curve can be observed in the time and the frequency domain. The frequency-domain representation of $x^k$ is $F x^k$. Simultaneous masking is defined as the masking curve for $F x^k$, i.e., the just-noticeable amplitudes for the frequency unit vectors $e_1$, $e_2$, etc. In the time domain we refer to nonsimultaneous masking, which is called backward or forward masking depending on whether the time index $i$ of the unit vectors $e_i$ is prior to or after the main event in the masker (e.g., an onset). Both the time-domain (temporal) and frequency-domain masking curves are asymmetric and dependent on the loudness of the masker. A loud sound leads to a rapid decrease in auditory acuity, followed by a slow recovery to the default level. The recovery may take several hundreds of ms and causes forward masking. The decrease in auditory acuity before a loud sound, backward masking, extends only over very short durations (at most a few ms). Similar asymmetry occurs in the frequency domain, i.e., in simultaneous masking. Let us consider a tone. The auditory acuity is decreased mostly at frequencies higher than the tone. The acuity increases more rapidly from the masker when moving towards lower frequencies than when moving towards higher frequencies, which is related to the decrease in frequency resolution with increasing frequency. A significant difference exists in the masking between tonal and noise-like signals. We refer to [14.63,65,66] for further information on masking.

Masking is particularly useful for coding in the perceptual domain with a constraint that the quality is to be transparent (at least according to the perceptual knowledge provided). For example, consider a transform coder (based on either the discrete cosine transform or the DFT). In this case, the quantization step size can be set to be the JND as provided by the simultaneous masking curve [14.57].
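A minimal sketch of this step-size rule is given below. It quantizes each transform coefficient with a uniform scalar quantizer whose step size equals the JND of that coefficient. The function name, the coefficient values, and the JND values are hypothetical, and the computation of the transform and of the masking curve itself is not shown.

```python
def quantize_with_jnd_steps(coeffs, jnd):
    """Uniform scalar quantization with a per-coefficient step size equal to the JND.

    Returns the integer indices (to be entropy coded) and the reconstruction.
    """
    indices = [round(c / step) for c, step in zip(coeffs, jnd)]
    recon = [i * step for i, step in zip(indices, jnd)]
    return indices, recon


coeffs = [0.37, -1.2, 0.04]     # hypothetical transform coefficients
jnd = [0.1, 0.5, 0.2]           # hypothetical masking-curve values (step sizes)

indices, recon = quantize_with_jnd_steps(coeffs, jnd)
errors = [abs(c - r) for c, r in zip(coeffs, recon)]
```

Each quantization error is at most half a step, so in this sketch the error in every coefficient stays below the corresponding JND. Coefficients with a large JND are represented coarsely, and coefficients smaller than half their JND are set to zero.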

Coders are commonly subject to a bit-rate constraint, which means knowledge of the masking curve is not sufficient. A distortion criterion must be defined based on the perceptual knowledge given. A common strategy in audio coding to account for simultaneous masking is to use a weighted squared-error criterion, with a diagonal weighting matrix $H$ that is the reciprocal of the masking threshold [14.27,58,67,68,69,70]. In fact, this is a general approach that is useful to convert a masking curve in any measurement domain:

$$H = \operatorname{diag}\!\left(\frac{1}{\mathrm{JND}_0}, \cdots, \frac{1}{\mathrm{JND}_{m-1}}\right), \tag{14.52}$$

where $\mathrm{JND}_i$ denotes the just-noticeable difference for the basis vector $e_i^m$ and it is understood that the weighting matrix $H$ is defined in the measurement domain. To see this, consider the effect of the error vector $\mathrm{JND}_i\, e_i^m$ on the squared error:

$$(\mathrm{JND}_i\, e_i^m)^{\mathrm{H}} H^{\mathrm{H}} H (\mathrm{JND}_i\, e_i^m) = 1. \tag{14.53}$$

Thus, the points on the masking curve are defined as the amplitudes of basis vectors that lead to a unit distortion. This is a reasonable motivation for the commonly used reciprocal-weighting approach for the squared-error criterion defined by the weighting described in (14.52). However, it should be noted that for this formulation the distortion measure does not vanish below the masking threshold. A more complex approach where the distortion measure does vanish below the JND is given in [14.71].
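The unit-distortion property of reciprocal weighting can be illustrated with a short sketch. The function names and the JND values are hypothetical. Because the weighting matrix is diagonal, only its diagonal is stored.

```python
def reciprocal_weights(jnd):
    """Diagonal of the weighting matrix: the reciprocal of each JND value."""
    return [1.0 / v for v in jnd]


def weighted_distortion(weights, err):
    """Weighted squared error for a diagonal weighting matrix."""
    return sum((w * e) ** 2 for w, e in zip(weights, err))


def scaled_basis_vector(i, amplitude, m):
    """Error vector with the given amplitude along basis vector i and zeros elsewhere."""
    return [amplitude if j == i else 0.0 for j in range(m)]


jnd = [0.5, 0.1, 2.0]            # hypothetical masking-curve values
weights = reciprocal_weights(jnd)
m = len(jnd)

# An error exactly on the masking curve gives unit distortion in every dimension.
on_curve = [weighted_distortion(weights, scaled_basis_vector(i, jnd[i], m))
            for i in range(m)]

# An error at half the JND is inaudible according to the masking curve,
# yet the weighted squared error is 0.25 rather than zero.
below_curve = [weighted_distortion(weights, scaled_basis_vector(i, 0.5 * jnd[i], m))
               for i in range(m)]
```

The second result illustrates the limitation noted above: the criterion keeps penalizing errors that lie below the masking threshold.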

Auditory Models and Squared Error

The weighting procedure of (14.52) (possibly in combination with the transform to the measurement domain) is an operation that transforms the signal to a perceptually relevant domain. Thus, the operation can be interpreted as a simple auditory model. Sophisticated models of the auditory periphery that directly predict the input to the auditory nerve have also been developed, e.g., [14.72,73,74,75,76,77]. Despite the existence of such quantitative models of perception, their application in speech coding has been limited. Only a few examples [14.78,79] of the explicit usage of existing quantitative knowledge of auditory perception in speech coding exist. In contrast, in the field of audio coding the usage of quantitative auditory knowledge is common. Transform coders can be interpreted as methods that perform coding in the perceptual domain, using a simple perceptual model, usually based on (simultaneous) masking results.

We can identify a number of likely causes for the lack of usage of auditory knowledge in speech coding. First, the structure of speech coders and the constraint on computational complexity naturally lead to speech-coding-specific models of auditory perception, such as (14.50). The parameters of these simple speech-coding-based models are optimized directly based on coding performance. Second, the perception of the periodic nature of voiced speech, often referred to as the perception of pitch, is not well understood in a quantitative manner. It is precisely the distortion associated with the near-periodic nature of voiced speech that is often critical for the perceived quality of the reconstructed signal. An argument against using a quantitative model based on just-noticeable differences (JNDs) is that JNDs are often exceeded significantly in speech coding. While the weighting of (14.52) is reasonable near the JND threshold value, it may not be accurate in the actual operating region of the speech coder. Major drawbacks of using sophisticated models based on knowledge of the auditory periphery are that they tend to be computationally expensive, have significant latency, and often lead to a representation that has many more dimensions than the input signal. Moreover, the complexity of the model structure makes inversion difficult, although not impossible [14.80].

The complex structure of auditory models that describe the functionality of the auditory periphery is time invariant. We can replace it by a much simpler structure at the cost of making it time variant. That is, the mapping from the speech domain to the perceptual domain can be simplified by approximating this mapping as locally linear [14.79]. Such an approximation leads to the sensitivity matrix