Abstract

This paper considers efficient methods and high-performance parallel technologies for the numerical solution of multi-dimensional initial boundary value problems with a complicated geometry of the computational domain and contrasting material properties on heterogeneous multi-processor systems with distributed and hierarchical shared memory. The approximations with respect to time and space are carried out by implicit schemes on quasi-structured grids. At each time step, iterative algorithms with a special choice of the initial guess are used to solve the systems of linear or nonlinear equations, which, in general, are nonsymmetric. Scalable parallelism is provided by two-level iterative domain decomposition methods in Krylov subspaces with a parameterized intersection of subdomains, which are accelerated by means of a coarse grid correction and polynomial or other types of preconditioning. A comparative analysis of the performance and speedup of the computational processes is presented, based on a simple model of parallel computing and data structures.

The work is supported by the Russian Science Foundation (grant 14-11-00485 P) and the Russian Foundation for Basic Research (grant 16-29-15122 ofi-m).


Keywords

- Nonstationary boundary value problems

- High-order approximations

- Stability

- Initial guess

- Iterative processes

- Domain decomposition

- Scalable parallelism

1 Introduction

In this paper, we consider various numerical approaches to solving multi-dimensional initial boundary value problems (IBVPs) for nonstationary partial differential equations (PDEs) in complicated computational domains with real data, and offer a comparative analysis of their performance on modern heterogeneous multi-processor systems (MPS) with distributed and hierarchical shared memory. In general, we will assess the efficiency of numerical solutions of a class of mathematical problems by the volume of computational resources required to provide the accuracy needed on a particular type of MPS. Of course, such a statement is not quite clear, and we should refine many details in this important concept. A simple way to do this consists in measuring run time and using these measurements as a performance criterion. Other tools could be the estimation of computing time and communication time based on a certain model for the implementation of the problem on MPS. In what follows, we will use the second approach and consider simple representations for both the arithmetical execution time \(T_a\) and the communication time \(T_c\). In total, the performance of the numerical solution of the problem will be defined by the run time \(T_t = T_a + T_c\). We suppose here that the arithmetical units do not work during data transfer, although the whole picture can be more complicated.
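The run-time model \(T_t = T_a + T_c\) can be illustrated by a minimal sketch. The split of \(T_c\) into a per-message latency and a per-value transfer time, the names `tau_a`, `tau_0`, `tau_c`, and all numerical values are illustrative assumptions, not parameters given in this paper.

```python
from dataclasses import dataclass


@dataclass
class MachineModel:
    """Hypothetical machine parameters (illustrative values only)."""
    tau_a: float  # average time of one arithmetic operation, s
    tau_0: float  # start-up (latency) time of one message, s
    tau_c: float  # transfer time of one number, s


def run_time(model, n_ops, n_messages, n_values):
    """Return (T_a, T_c, T_t), assuming arithmetic and transfers do not overlap."""
    t_a = model.tau_a * n_ops
    t_c = n_messages * model.tau_0 + n_values * model.tau_c
    return t_a, t_c, t_a + t_c


model = MachineModel(tau_a=1e-9, tau_0=1e-6, tau_c=1e-8)
t_a, t_c, t_t = run_time(model, n_ops=2_000_000, n_messages=10, n_values=1_000)
print(f"T_a = {t_a:.2e} s, T_c = {t_c:.2e} s, T_t = {t_t:.2e} s")
```

Under this assumption, a method that trades a few extra arithmetic operations for fewer or shorter messages can reduce \(T_t\) even though \(T_a\) grows.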

The performance is characterized by two main aspects: the mathematical efficiency of the numerical methods and the computational technologies for software implementation on a particular hardware architecture. The algorithmic issues depend on two main mathematical stages: the discrete approximation of the original continuous problem and the numerical solution of the resulting algebraic problem. It is important that we do not examine model problems but problems with real data: multi-dimensional boundary value problems in computational domains with a complicated geometry, multi-connected and, in general, multi-scale piecewise smooth boundaries, and contrasting material properties, which give rise to singularities of the solution sought. This means that, in order to ensure a high numerical resolution and accuracy of the computational model, we must use fine grids with a very small time step \(\tau \) and spatial step h. So we have, in principle, a “super task” with a very large number of degrees of freedom (d. o. f.), i.e. a high dimension of the corresponding discrete problem. In general, the original problem can be nonlinear and multi-disciplinary or multi-physical, i.e. it is described either by a system of PDEs or by the corresponding variational relations for unknown vector functions. Also, the mathematical statement may not be a direct one, with all the coefficients of the equations and the initial and boundary conditions given; it may instead be an inverse problem that includes variable parameters to be found by minimizing some given objective functional of the unknown solution. For simplicity, however, we will mainly consider direct IBVPs for a single linear scalar equation. A review of the corresponding models can be found in [1] (see also the literature cited therein). We also do not consider in detail other computational steps of the mathematical modeling (grid generation, post-processing, visualization of the results, etc.), since they are of a more general type and are almost entirely defined by the problem specifications.

The approximation approaches are divided into temporal and spatial discretizations. If we carry out the spatial approximation at first by the finite volume method, the finite element method or any other method [2], then we will obtain a system of ordinary differential equations (ODEs). There are various explicit and implicit, multi-stage and/or multi-step algorithms of different orders [3] that may be applied to solve such a system. It is important to remark that modern computational trends give preference to methods of high order of accuracy since they make it possible to decrease the amount of data communication, which is not only a slow operation but an energy consuming process.

If we use schemes that are implicit with respect to time, thereby providing a stable procedure for numerical integration, it is necessary to solve at each step a system of linear algebraic equations (SLAEs) of a special type, with a large sparse matrix. This is the most expensive computational stage, as it requires a large number of arithmetic operations and a large amount of memory, and both grow nonlinearly as the number of d. o. f. increases [4]. In this case, the main tool to ensure high performance is the scalable parallelization of domain decomposition methods (DDM), which belong to a special field of computational algebra (see, for example, [5,6,7]). A detailed review of parallelization approaches for nonstationary problems is presented in [8, 9]. In what follows, we will consider direct IBVPs only, whereas the ultimate goal in engineering problems consists in solving inverse problems, which involves computing optimized parameters of the mathematical model by constrained optimization of a given objective functional. However, this topic requires separate research.

The paper is structured as follows. In Sect. 2, the example of the heat transfer equation is considered regarding various aspects of temporal and spatial approximations. Section 3 deals with geometrical and algebraic issues of DDM as applied to nonstationary problems. In the last Section, we discuss an application of the given analysis for the parallel solution of practical problems.

2 Discretization Issues of Nonstationary Problems

Let us consider the initial boundary value problem (IBVP)

where t and \(\mathbf x \) are, respectively, temporal and spatial variables; \(u^{0}(\mathbf x )\) is a given initial function; L is some differential operator, possibly a nonlinear one and, in general, a matrix operator. In the latter case, the unknown \(u=(u_1,\ldots ,u_{N_u})^T\) is a vector function, and we call problem (1) a multi-disciplinary or multi-physics problem. Here \(\bar{\varOmega }\) denotes a bounded d-dimensional computational domain with boundary \(\varGamma = \bigcup ^{N_\varGamma }_{k=1}\varGamma _k\); l is a boundary-condition operator, which can be of various types \(l_i\) (Dirichlet, Neumann or Robin) at the corresponding boundary segments \( \varGamma _i\); f and g are functions that may depend on the unknown solution. We suppose that IBVP (1) describes a practical problem with real data. This means, for example, that the computational domain \(\bar{\varOmega }\) may have a complicated geometry, possibly with multi-connected piecewise smooth curvilinear boundary surfaces \(\varGamma _k\). As an illustration, the following linear scalar differential operator of the second order is considered in (1):

The corresponding boundary conditions can be written down as

where \(\mathbf n \) denotes the outward unit normal to \(\varGamma _k\).

Note that if the original system of PDEs is complex and has temporal derivatives of high order, it can always be transformed into a first order real system by including additional unknown functions. Also, formulas (1) can describe an inverse problem if they contain variable parameters \(p=(p_1,\ldots ,p_{N_p})^T\), which should be optimized by minimizing a prescribed objective functional. For simplicity, the original IBVP is written in the classical differential form, although it can be restated in a variational form. It is supposed that the input data ensure, in all cases, the smoothness of the solution that the numerical methods require. One more remark: in general, some boundary segments \(\varGamma _k\) can move, but we will primarily consider the boundary \(\varGamma \) to be fixed.

The approximation of the original problem (1) can be made in two steps. In the first step, we generate a spatial grid \(\varOmega ^h\), which, in the three-dimensional case (\(d=3\)), for example, is a set of nodes (vertices), edges, faces (possibly curvilinear), and finite elements or volumes. After applying the spatial approximation using the finite volume method, the finite element method, the discontinuous Galerkin method or other approaches, we obtain a system of N ordinary differential equations:

where \(\dot{u}\) denotes the time derivative of u, and the components of the vector \(f^h=\{f_l\}\) and of the matrices \(B=\{b_{l,l^{\prime }}\}\) and \(A=\{a_{l,l^{\prime }}\}\) may, in general, depend on the unknown solution.

In a simple case, the unknown vector \(u^h=\{u^h_l\}\) consists of approximate nodal values of the original solution \((u)^h=\{u(\mathbf x _l)\}\) but, basically, it can include, for instance, other functionals, and some derivatives of u at different points. The vector \((u) ^h \) of the discretized unknown solution satisfies the equation

where \(\psi ^h\) is the spatial approximation (truncation) error of Eq. (4), h is the maximal distance between neighboring grid nodes, and \(\gamma > 0\) is the order of the approximation. The matrix A in (4) can be defined as

where \(\varOmega ^h\) can be considered to be a set of indices that determine the number \(N=O(h^{-d})\) of all unknowns, and \(\omega _l\) denotes the stencil of the lth node, i.e. the set of neighboring nodes. In other words, \(\omega _l\) is the set of the column numbers of the nonzero elements in the lth row of the matrix A (the number of such elements will be denoted by \(N_l\)). The total set made up by all \(\omega _l\), \(l=1,\ldots ,N\), determines the portrait of the sparse matrix A (\(N_l\ll N\)). Note that \(N_l\) does not depend on the matrix dimension N, which can be estimated as \(N\approx 10^7 \div 10^{10}\) for a large-size real problem. Moreover, for \(d=3\), we have \(N_l\approx 10\div 30\) for the first or the second order schemes, whereas \(N_l>100\) for the fourth to sixth orders of accuracy.
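The stencil \(\omega _l\) and the portrait of A map naturally onto compressed sparse row (CSR) storage. The sketch below is only an illustration for a hypothetical one-dimensional three-point matrix tridiag(-1, 2, -1); the function names are ours and are not taken from any library.

```python
def laplacian_1d_csr(n):
    """CSR arrays (row_ptr, col_idx, values) of the matrix tridiag(-1, 2, -1)."""
    row_ptr, col_idx, values = [0], [], []
    for l in range(n):
        for m, a in ((l - 1, -1.0), (l, 2.0), (l + 1, -1.0)):
            if 0 <= m < n:
                col_idx.append(m)
                values.append(a)
        row_ptr.append(len(col_idx))
    return row_ptr, col_idx, values


def stencil(row_ptr, col_idx, l):
    """Column numbers of the nonzero entries in row l, i.e. the stencil omega_l."""
    return col_idx[row_ptr[l]:row_ptr[l + 1]]


def csr_matvec(row_ptr, col_idx, values, u):
    """Sparse matrix-vector product; the cost is the sum of all N_l."""
    return [sum(values[k] * u[col_idx[k]] for k in range(row_ptr[l], row_ptr[l + 1]))
            for l in range(len(row_ptr) - 1)]


row_ptr, col_idx, values = laplacian_1d_csr(6)
omega_2 = stencil(row_ptr, col_idx, 2)          # neighbors of node 2
y = csr_matvec(row_ptr, col_idx, values, [1.0] * 6)
print(omega_2, y)
```

Since \(N_l\) does not grow with N, both the memory for the portrait and the cost of one matrix-vector product are proportional to N.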

To solve ODEs (4), it is possible to apply various multi-stage and/or multi-step numerical integrators of different orders of accuracy with respect to the time step \(\tau _n\). For simplicity, we consider the two-level weighted scheme

where n is a time-step number; \(\theta =0\) corresponds to the explicit Euler method, otherwise, we have an implicit algorithm. If \(\theta = 1/2\), formula (7) corresponds to the Crank–Nicolson scheme, which has the second order approximation error \(\psi ^\tau =O(\tau ^2)\), \(\tau = \max _n\{\tau _n\}\); besides, \(\psi ^\tau =O(\tau )\) for \(\theta \ne 1/2\). Here and in what follows, we omit the index “h” for the sake of brevity. If we denote by \((u)^n\) a vector whose components are the values of the exact solution \(u(t_n,\mathbf x _l)\), and substitute it for \(u^n\) in (7), then we have

where \(\psi ^n=\psi ^\tau +\psi ^h\) is the total, i.e. temporal and spatial, approximation error of the numerical scheme.
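The orders of the temporal error stated above can be checked on a scalar model problem. The sketch assumes that the weighted scheme has the common form \(B(u^{n+1}-u^n)/\tau _n + A(\theta u^{n+1} + (1-\theta )u^n) = \ldots \) and applies it to the hypothetical test equation \(\dot{u} + au = 0\) (so \(B=1\), \(A=a\), zero right-hand side) with a constant step.

```python
import math


def theta_scheme(a, u0, tau, n_steps, theta):
    """Integrate u' + a*u = 0 by the weighted scheme with a constant step tau."""
    u = u0
    for _ in range(n_steps):
        # (u_new - u)/tau + a*(theta*u_new + (1 - theta)*u) = 0, solved for u_new
        u = (1.0 - tau * (1.0 - theta) * a) * u / (1.0 + tau * theta * a)
    return u


def error(theta, n_steps, a=1.0, t_end=1.0):
    """Absolute error at t_end against the exact solution exp(-a*t_end)."""
    tau = t_end / n_steps
    return abs(theta_scheme(a, 1.0, tau, n_steps, theta) - math.exp(-a * t_end))


# Halving tau should divide the error by about 4 for theta = 1/2 and by about 2 otherwise.
ratio_cn = error(0.5, 50) / error(0.5, 100)
ratio_ie = error(1.0, 50) / error(1.0, 100)
print(f"Crank-Nicolson ratio: {ratio_cn:.2f}, implicit Euler ratio: {ratio_ie:.2f}")
```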

If relations (7) are nonlinear, we should use quasi-linearization for each n, i.e. apply the iterative process and solve SLAEs at each “nonlinear” step.

In the implicit scheme with \(\theta \ne 0\), we have to solve a large algebraic system by some iterative approach, even for the original linear IBVP, since direct (noniterative) algorithms are too expensive in our case (matrices \(B+\tau _n\theta A\) are supposed to be nonsingular). Finally, from (7), we do not calculate \(u^{n+1}\) but some approximate value \(\tilde{u}^{n+1}\), which produces the residual vector

Now let us define the total error vector of the solution, \(z^{n+1}=(u)^{n+1} - \tilde{u}^{n+1}\). It follows from (8) and (9) that the vectors \(z^{n+1}\), \(r^n\) and \(\psi ^n\) are connected by a relation that, for the reduced original problem (the elements of the matrices A and B, as well as those of the vectors \(f^n\), are supposed to be independent of u and t), can be written down as

If

for some vector norm, then we obtain from (10) the following estimate:

It follows from the considerations above that if the iterative residual \(r^n\) at each time step has the same order of accuracy as the approximation error \(\psi ^n\), then the total solution error does not change the order of accuracy. One important issue in solving a nonstationary problem consists in choosing the initial guess for the iterative solution of SLAEs at each time step. It is natural that the \(u^n\) values would be a good approximation to \(u^{n+1}\) to reduce the number of iterations, provided that the time step \(\tau _n\) is sufficiently small. Another simple approach is based on the linear extrapolation with respect to time:

In this case, we need to save the numerical solution for one additional time step. One of the popular methods for solving ODEs is based on the application of predictor-corrector schemes. For example, if we use in (7) the Crank–Nicolson scheme, for which \(\theta =1/2\) and \(\psi ^\tau =O(\tau ^2)\), or any other implicit method, this involves including a preliminary predictor stage for computing an approximate value of \(u^{n+1}\) by the simple explicit formula

where B is a diagonal or another easily invertible matrix, and \(\hat{u}^{n+1}\) is considered to be a predicted value of \({u}^{n+1}\). It can be interpreted as a zero iteration, \(u^{n+1,0}=\hat{u}^{n+1}\), and corrected by m iterations of the form

This approach is called \(PC^m\) and in practice provides an acceptable small residual

in a few iterations.

An improved idea to choose the initial guess can be proposed based on the least-squares method (LSM; see [10]). Let us save several previous time-step solutions \(u^{n-1},\ldots , u^{n-q}\), and compute the value \(u^{n+1,0}\) by means of the linear combination

The system of Eq. (7) can be rewritten as

So it follows from relation (16) that the initial residual \(r^{n+1,0}=g^{n+1}-Cu^{n+1,0}\) of system (17) satisfies the equality

Formally, here we can set \(r^{n+1,0}=0\) and obtain an overdetermined SLAE for the vector c:

The generalized normal (with a minimal residual) solution of this system can be computed by the SVD (Singular Value Decomposition) algorithm or by the least-squares method (LSM), which gives the same result in exact arithmetic. The LSM gives the “small” symmetric system

which is nonsingular if W is a full-rank matrix. It is easy to verify that Eq. (20) implies the orthogonality property of the residual:

Note that, instead of the LSM approach (20), (21), it is possible to apply the so-called deflation principle [11], which uses the following orthogonality property instead of (21):

In this case, we have to solve SLAE

to determine the vector c. If this vector is computed from system (20) or (23), then the initial guess \(u^{n+1,0}\) for SLAEs (17) is determined from (16). For solving system (17) at each time step, it is natural to apply some preconditioned iterative method in Krylov subspaces. The stopping criterion of such iterations is

for some given tolerance \(\varepsilon \ll 1\). If condition (24) is satisfied, we set \(u ^{n+1} =u ^{n+1,m}\) and go to the next time step.
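The three choices of the initial guess discussed in this section (the previous solution, the linear extrapolation, and the least-squares combination) can be compared on a small model problem. This is a sketch under stated assumptions: the combination is taken in the form \(u^{n+1,0}=u^n+c_1(u^n-u^{n-1})+c_2(u^n-u^{n-2})\), the time step is constant, and the matrix \(C=I+\tau A\) corresponds to the implicit Euler scheme for a hypothetical one-dimensional problem; none of these specifics are fixed by the text above.

```python
def solve(a, b):
    """Solve a small dense system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(m[i][k]))
        m[k], m[p] = m[p], m[k]
        for i in range(k + 1, n):
            fac = m[i][k] / m[k][k]
            for j in range(k, n + 1):
                m[i][j] -= fac * m[k][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def matvec(a, x):
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def norm(x):
    return sum(xi * xi for xi in x) ** 0.5


# Model problem: implicit Euler for u' + A u = f, so C = I + tau*A and g = u^n + tau*f.
n, tau = 6, 0.05
A = [[2.0 if i == j else -1.0 if abs(i - j) == 1 else 0.0 for j in range(n)] for i in range(n)]
C = [[(1.0 if i == j else 0.0) + tau * A[i][j] for j in range(n)] for i in range(n)]
f = [1.0] * n
history = [[0.0] * n]
for _ in range(3):                      # three exactly solved time steps
    g = [u + tau * fi for u, fi in zip(history[-1], f)]
    history.append(solve(C, g))
u_n, u_nm1, u_nm2 = history[-1], history[-2], history[-3]
g = [u + tau * fi for u, fi in zip(u_n, f)]   # right-hand side of the next step


def residual(u0):
    return [gi - ci for gi, ci in zip(g, matvec(C, u0))]


r_prev = residual(u_n)                                   # guess (i): u^n
u_extr = [2.0 * a - b for a, b in zip(u_n, u_nm1)]       # guess (ii): extrapolation
V = [[a - b for a, b in zip(u_n, u_nm1)], [a - b for a, b in zip(u_n, u_nm2)]]
W = [matvec(C, v) for v in V]                            # rows are the vectors C v_k
gram = [[sum(x * y for x, y in zip(wi, wj)) for wj in W] for wi in W]
rhs = [sum(x * y for x, y in zip(wi, r_prev)) for wi in W]
c = solve(gram, rhs)                                     # small normal system
u_lsm = [u + c[0] * v1 + c[1] * v2 for u, v1, v2 in zip(u_n, V[0], V[1])]
norms = [norm(residual(u)) for u in (u_n, u_extr, u_lsm)]
print("initial residual norms (previous, extrapolated, least squares):", norms)
```

By construction, the least-squares guess cannot have a larger initial residual than the other two, since both of them belong to the same affine subspace; the price is the storage of q additional vectors and q matrix-vector products per time step.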

3 Geometrical and Algebraic Issues of Algorithms

The general scheme of solution of nonsteady IBVPs can be described as having two main parts. The first one consists in generating an algebraic system at each time step. Usually, this stage is parallelized easily enough, with a linear speedup when the number of computer units grows. The more complicated stage includes solving the algebraic system of equations, linear or nonlinear (SLAEs or SNLAEs); such tasks require a large amount of computational resources (memory and number of arithmetic operations) as the number of d. o. f. grows.

If we have SNLAEs at each time step, the solution methods involve a two-level iterative process. At first, some type of quasi-linearization is applied, and at each “nonlinear” iteration (Newton or Jacobi type, for example), we need to solve SLAEs, usually with a large sparse ill-conditioned matrix. This second stage will be the main issue in our considerations in what follows.

The main tool to achieve scalable parallelism on modern MPS is based on a domain decomposition method that can be interpreted in an algebraic or geometrical framework. Also, domain decomposition methods can be considered at both the continuous and the discrete levels. We use the second approach and suppose that the original computational domain \(\varOmega \) has already been discretized into a grid computational domain \(\varOmega ^{h}\). So, in what follows, the DDM is implemented only in grid computational domains, and the upper index “h” will be omitted for brevity.

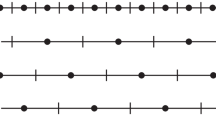

Let us decompose \(\varOmega \) into P subdomains (with or without overlap):

Here \(\varGamma _{q}\) is the boundary of \(\varOmega _{q}\), which is composed of the segments \(\varGamma _{q,q^{\prime }}\), \(q^{\prime }\in \omega _{q}\), and \(\omega _{q}=\{q_{1},\ldots ,q_{M_{q}}\}\) is a set of \(M_{q}\) contacting or conjugate subdomains. Formally, we can also denote by \(\varOmega _{0}=R^{d}\setminus \varOmega \) the external subdomain:

where \(\varGamma ^{i}_{q}=\bigcup _{q^{\prime }\ne 0}\varGamma _{q,q^{\prime }}\) and \(\varGamma _{q,0}=\varGamma ^{e}_{q}\) stand for the internal and external parts of the boundary of \(\varOmega _{q}\). We also define the overlap \(\varDelta _{q,q^{\prime }}=\varOmega _{q}\bigcap \varOmega _{q^{\prime }}\) of neighboring subdomains. If \(\varGamma _{q,q^{\prime }}=\varGamma _{q^{\prime },q}\), then the overlap of \(\varOmega _{q}\) and \(\varOmega _{q^{\prime }}\) is empty. In particular, we suppose in (25) that each of the P subdomains has no intersection with \(\varOmega _{0}\) (\(\varDelta _{q,0}=\emptyset \)).

The idea of the DDM involves the definition of the sets of IBVPs that should be equivalent to the original problem (1) in all subdomains:

Interface conditions in the form of Robin boundary conditions (instead of (3), for simplicity) are imposed in each segment of the internal boundaries of the subdomains, with the operators \(l_{q,q^{\prime }}\) from (27):

Here \(\alpha _{q^{\prime }}=\alpha _{q}\) and \(\beta _{q^{\prime }}=\beta _{q}\); \(\mathbf n _{q}\) is the outer normal to the boundary segment \(\varGamma _{q,q^{\prime }}\) of the subdomain \(\varOmega _{q}\). Strictly speaking, two pairs of different coefficients, \(\alpha ^{(1)}_{q}, \beta ^{(1)}_{q}\) and \(\alpha ^{(2)}_{q}, \beta ^{(2)}_{q}\), should be given for conditions of type (28) on each piece \(\varGamma _{q,q^{\prime }}, q^{\prime }\ne 0\), of the internal boundary. For example, \(\alpha ^{(1)}_{q}=1, \beta ^{(1)}_{q}=0\) and \(\alpha ^{(2)}_{q}=0, \beta ^{(2)}_{q}=1\) formally correspond, respectively, to the continuity of the solution sought and its normal derivative. The additive Schwarz algorithm in DDM is based on an iterative process in which the BVPs in each subdomain \(\varOmega _{q}\) are solved simultaneously, and the right-hand sides of the boundary conditions in (27) and (28) are taken from the previous iteration.

We implement the domain decomposition in two steps. At the first one, we define subdomains \(\varOmega _{q}\) without overlap, i.e. contacting grid subdomains have no common nodes, and each node belongs to only one subdomain. Then we define the grid boundary \(\varGamma _{q}=\varGamma ^{0}_{q}\) of \(\varOmega _{q}\), as well as the extensions of \(\bar{\varOmega }^{t}_{q}=\varOmega ^{t}_{q}\cup \varGamma ^{t}_{q}\), \(\varOmega ^{0}_{q}=\varOmega _{q}\), \(t=0,\ldots ,\varDelta \), layer by layer:

Here \(\varDelta \) stands for the parameter of extension or overlap.
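The layer-by-layer extension can be sketched on the adjacency graph of the grid: at every step, the current grid boundary (the neighbors of the subdomain nodes that lie outside it) is added to the subdomain. The function name and the one-dimensional chain used as test data are illustrative assumptions.

```python
def extend_subdomain(adjacency, core, overlap):
    """Return the node set of a subdomain extended by `overlap` layers of neighbors."""
    nodes = set(core)
    for _ in range(overlap):
        # grid boundary of the current set: outside nodes adjacent to inside nodes
        layer = {m for l in nodes for m in adjacency[l]} - nodes
        if not layer:
            break
        nodes |= layer
    return nodes


# A chain of 10 nodes split into two non-overlapping subdomains
n = 10
adjacency = {l: [m for m in (l - 1, l + 1) if 0 <= m < n] for l in range(n)}
omega_1, omega_2 = range(0, 5), range(5, 10)
ext_1 = extend_subdomain(adjacency, omega_1, overlap=2)
ext_2 = extend_subdomain(adjacency, omega_2, overlap=2)
print(sorted(ext_1), sorted(ext_2), sorted(ext_1 & ext_2))
```

With overlap 0 the subdomains stay disjoint; every additional layer widens the common strip by one node on each side of the interface.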

At each time step, the algebraic interpretation of the DDM, after the approximations of BVPs (27) and (28), is described by the block version of SLAEs (17),

where indices “\(n+1\)” have been omitted for brevity; \(C_{q,q}\) and \(u_{q}, f_{q}\in \mathcal {R}^{N^{\varDelta }_{q}}\) are a block diagonal matrix and subvectors with components belonging to the corresponding subdomain \(\varOmega ^{\varDelta }_{q}\); \(N^{\varDelta }_{q}\) is the number of nodes in \(\varOmega ^{\varDelta }_{q}\).

The implementation of the interface conditions between adjacent subdomains can be described as follows. Let the lth node be a near-boundary one in the subdomain \(\varOmega _{q}\). Then we write down the corresponding equation in the form

Here \(\theta _{l}\) is some parameter that corresponds to different types of boundary conditions at the boundary \(\varGamma _{q}\), namely \(\theta _{l}=0\) corresponds to the Dirichlet condition, \(\theta _{l}=1\) corresponds to the Neumann condition, and \(\theta _{l}\in (0, 1)\) corresponds to the Robin boundary condition.

If we denote \(D= \mathrm {block}\)-\(\mathop {\mathrm {diag}}\{D_{l,l}\}\), then a simple variant of DDM is described as the Schwarz (or block Schwarz–Jacobi) iterative method

Improved versions of this approach are given by preconditioned algorithms in Krylov subspaces. Firstly, let us consider the advanced choice of the preconditioning matrices.
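The simple block Schwarz–Jacobi iteration mentioned above can be sketched for a hypothetical one-dimensional matrix tridiag(-1, 2, -1) split into non-overlapping blocks. Each block system is solved exactly, and the coupling to the neighboring subdomains uses the values from the previous iteration, which corresponds to a Dirichlet-type exchange of interface data; the test problem and all names are assumptions made for illustration.

```python
def thomas(n, rhs):
    """Solve tridiag(-1, 2, -1) x = rhs of size n by the Thomas algorithm."""
    c, d = [0.0] * n, [0.0] * n
    c[0], d[0] = -0.5, rhs[0] / 2.0
    for i in range(1, n):
        denom = 2.0 + c[i - 1]
        c[i] = -1.0 / denom
        d[i] = (rhs[i] + d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def block_jacobi(n, blocks, f, n_iter):
    """Block Jacobi iterations; `blocks` is a list of half-open index ranges (lo, hi)."""
    u = [0.0] * n
    for _ in range(n_iter):
        new_u = u[:]
        for lo, hi in blocks:           # the subdomain solves are independent
            rhs = f[lo:hi]
            if lo > 0:
                rhs[0] += u[lo - 1]     # left neighbor value from the previous iterate
            if hi < n:
                rhs[-1] += u[hi]        # right neighbor value from the previous iterate
            new_u[lo:hi] = thomas(hi - lo, rhs)
        u = new_u
    return u


def residual_norm(n, u, f):
    """Maximum norm of f - A u for A = tridiag(-1, 2, -1)."""
    r = []
    for i in range(n):
        au = 2.0 * u[i] - (u[i - 1] if i > 0 else 0.0) - (u[i + 1] if i < n - 1 else 0.0)
        r.append(f[i] - au)
    return max(abs(x) for x in r)


n = 8
f = [1.0] * n
u = block_jacobi(n, [(0, 4), (4, 8)], f, n_iter=200)
res = residual_norm(n, u, f)
print(f"residual after 200 iterations: {res:.1e}")
```

The inner loop over the blocks is the part that runs concurrently on different processors; only the interface values have to be exchanged between iterations.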

In the case of an overlapping domain decomposition, the additive Schwarz iterative algorithm is defined by the corresponding preconditioning matrix \(B_{AS}\), which can be described as follows (see [7]). For the subdomain \(\varOmega ^{\varDelta }_{q}\) with overlap parameter \(\varDelta \), we define a prolongation matrix \(R^{T}_{q,\varDelta }\in \mathcal {R}^{N,N^{\varDelta }_{q}}\) that extends the vectors \(u_{q}=\{u_{l},\;l\in \varOmega ^{\varDelta }_{q}\}\in \mathcal {R}^{N^{\varDelta }_{q}}\) to \(\mathcal {R}^{N}\) according to the relations

The transpose of this matrix defines a restriction operator that restricts vectors in \(\mathcal {R}^{N}\) to the subdomain \(\varOmega ^{\varDelta }_{q}\). The diagonal block of the preconditioning matrix \(B_{AS}\), which represents the restriction of the discretized BVP to the qth subdomain, is expressed by \(\hat{C}_{q}=R_{q,\varDelta }CR^{T}_{q,\varDelta }\). In these terms, the additive Schwarz preconditioner is defined as

Also, it is possible to define the so-called restricted additive Schwarz (RAS) preconditioner by considering the prolongation \(R^{T}_{q,0}\) instead of \(R^{T}_{q,\varDelta }\), i.e.

Note that \(B_{RAS}\) is a nonsymmetric matrix, even if C is a symmetric one.
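For reference, the two preconditioners are commonly written in the following form (see, e.g., [7]). We state it here in the notation of this section, under the assumption that the preconditioners act as approximate inverses of C:

\[ B_{AS}=\sum _{q=1}^{P}R^{T}_{q,\varDelta }\,\hat{C}^{-1}_{q}\,R_{q,\varDelta },\qquad B_{RAS}=\sum _{q=1}^{P}R^{T}_{q,0}\,\hat{C}^{-1}_{q}\,R_{q,\varDelta }. \]

In the second formula, the prolongation \(R^{T}_{q,0}\) sets to zero the components that belong to the overlap, so that every node receives its value from exactly one subdomain. The left and right factors of each term are then different, which is the source of the loss of symmetry.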

The third way to define the preconditioner consists in the weighted determination of the iterative values in the intersections of the subdomains. For example, if the set of node indexes \(S^h_q=\bigcap _{q'}\varOmega ^h_{q'}\) belongs to \(n_q^{s+1}\) grid subdomains \(\varOmega ^h_{q'}\), and we have \(n_q^{s+1}\) different values of \(u^{s+1}_l\) for \(l\in S^h_q\), then it is natural to compute the real next iterative value of the subvector \(u_q^{n+1}\) by means of the least-squares condition for the corresponding residual subvector.

Another type of preconditioning matrix used for DDM iterations in Krylov subspaces is responsible for the coarse grid correction, or aggregation, approach, which is based on a low-rank approximation of the original matrix C. We define a coarse grid, or macrogrid, \(\varOmega _{c}\) and the corresponding coarse space with \(N_{c}\ll N\) degrees of freedom, as well as some basis vectors \(w_{k}\in \mathcal {R}^{N}\), \(k=1,\ldots ,N_{c}\). We suppose that the rectangular matrix \(W=(w_{1},\ldots ,w_{N_{c}})\in \mathcal {R}^{N,N_{c}}\) has full rank. Then we define the coarse grid preconditioner \(B_{c}\) as

where the small matrix \(\hat{C}\) is a low-rank approximation of C; the transposed matrix \(W^{T}\) acts as the restriction matrix, and W is the prolongation matrix.
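A minimal sketch of the coarse grid correction follows. It assumes the commonly used Galerkin form \(\hat{C}=W^{T}CW\), \(B_{c}=W\hat{C}^{-1}W^{T}\) and piecewise-constant basis vectors (each column of W is the indicator of one aggregate of nodes); the test matrix and the names are illustrative.

```python
def solve(a, b):
    """Solve a small dense system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(m[i][k]))
        m[k], m[p] = m[p], m[k]
        for i in range(k + 1, n):
            fac = m[i][k] / m[k][k]
            for j in range(k, n + 1):
                m[i][j] -= fac * m[k][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def coarse_matrix(C, W):
    """Galerkin coarse matrix W^T C W of size N_c x N_c."""
    n, n_c = len(W), len(W[0])
    CW = [[sum(C[l][m] * W[m][j] for m in range(n)) for j in range(n_c)] for l in range(n)]
    return [[sum(W[l][i] * CW[l][j] for l in range(n)) for j in range(n_c)]
            for i in range(n_c)]


def apply_coarse_correction(C_hat, W, r):
    """Compute B_c r: restrict with W^T, solve the small system, prolong with W."""
    n, n_c = len(W), len(W[0])
    r_c = [sum(W[l][k] * r[l] for l in range(n)) for k in range(n_c)]
    y_c = solve(C_hat, r_c)
    return [sum(W[l][k] * y_c[k] for k in range(n_c)) for l in range(n)]


n, n_c = 6, 2
size = n // n_c                          # nodes per aggregate
C = [[2.0 if i == j else -1.0 if abs(i - j) == 1 else 0.0 for j in range(n)] for i in range(n)]
W = [[1.0 if l // size == k else 0.0 for k in range(n_c)] for l in range(n)]
C_hat = coarse_matrix(C, W)
r = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
z = apply_coarse_correction(C_hat, W, r)
Cz = [sum(C[l][m] * z[m] for m in range(n)) for l in range(n)]
coarse_residual = [sum(W[l][k] * (r[l] - Cz[l]) for l in range(n)) for k in range(n_c)]
print(C_hat, coarse_residual)
```

After the correction, the residual has no component in the coarse space, i.e. \(W^{T}(r-CB_{c}r)=0\); the remaining components are left to the subdomain preconditioner.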

Let us consider now the construction of the preconditioned iterative processes in Krylov subspaces. We offer a general description of the multi-preconditioned semi-conjugate residual (MPSCR) iterative method [12]. Let \(r^{0}=f^0-Cu^{0}\) be the initial residual of algebraic system (17), and let \(B^{(1)}_{0},\ldots ,B^{(m_{0})}_{0}\) be a set of some nonsingular easily invertible preconditioning matrices. Using them, we define a rectangular matrix composed of the initial direction vectors \(p^{0}_{k}\), \(k=1,\ldots ,m_{0}\):

which are assumed to be linearly independent.

Successive approximations \(u^{n}\) and the corresponding residuals \(r^{n}\) will be determined with the help of the recursions

Here \(\bar{\alpha }_{n}=(\alpha ^{1}_{n},\ldots ,\alpha ^{m_{n}}_{n})^{T}\) are \(m_{n}\)-dimensional vectors. The direction vectors \(p^{n}_{l}\), \(l=1,\ldots ,m_{n}\), which form the columns of the rectangular matrices \(P_{n}=[p^{n}_{1}\cdots p^{n}_{m_{n}}]\in \mathcal {R}^{N,m_{n}}\), are defined as orthogonal vectors in the sense of satisfying the relations

where \(D_{n,n}=\mathop {\mathrm {diag}}\{\rho _{n,l}\}\) is a symmetric positive definite matrix since the matrices \(P_{k}\) have full rank, as is supposed.

Orthogonality properties (35) provide the minimization of the residual norm \(\Vert r^{n+1}\Vert _{2}\) in the Krylov block subspace of dimension \(M_{n}\):

provided that we define the coefficient vectors \(\bar{\alpha }_{n}\) and the matrices \(P_n\) by the formulas

where the auxiliary matrices

have been introduced; \(B^{(l)}_{n+1}\) are some nonsingular easily invertible preconditioning matrices, and \(\bar{\beta }_{k,n}\) are coefficient vectors that are determined, after substitution of (38) into orthogonality conditions (35), by the formula

Let us remark that a successful acceleration of various Krylov algorithms can be attained by least-squares approaches [13].
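To make the structure of such recursions concrete, the sketch below treats the special case of a single preconditioner per iteration (\(m_{n}=1\)), which is the well-known preconditioned generalized conjugate residual (GCR) method. It is not the MPSCR algorithm of [12]; it only illustrates the long recursion in which each new direction is made orthogonal to all previous ones in the sense \((Cp^{n},Cp^{k})=0\), so that the residual norm is minimized over the growing Krylov subspace. The relative stopping test, the Jacobi preconditioner and the nonsymmetric test matrix are assumptions.

```python
def matvec(a, x):
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def dot(x, y):
    return sum(xi * yi for xi, yi in zip(x, y))


def preconditioned_gcr(C, f, apply_B, eps=1e-10, max_iter=100):
    """Preconditioned GCR with full orthogonalization and a zero initial guess."""
    u = [0.0] * len(f)
    r = f[:]                              # residual of the zero initial guess
    directions = []                       # stored triples (p_k, C p_k, rho_k)
    stop = eps * dot(f, f) ** 0.5
    while dot(r, r) ** 0.5 > stop and len(directions) < max_iter:
        p = apply_B(r)
        cp = matvec(C, p)
        for pk, cpk, rho in directions:   # enforce (C p, C p_k) = 0 for all k
            beta = dot(cpk, cp) / rho
            p = [pi - beta * pki for pi, pki in zip(p, pk)]
            cp = [ci - beta * cki for ci, cki in zip(cp, cpk)]
        rho = dot(cp, cp)
        alpha = dot(cp, r) / rho          # minimizes ||r - alpha * C p||
        u = [ui + alpha * pi for ui, pi in zip(u, p)]
        r = [ri - alpha * ci for ri, ci in zip(r, cp)]
        directions.append((p, cp, rho))
    return u, len(directions)


# Nonsymmetric test matrix with a positive definite symmetric part
n = 8
C = [[2.0 if i == j else -1.5 if j == i - 1 else -0.5 if j == i + 1 else 0.0
      for j in range(n)] for i in range(n)]
f = [1.0] * n
u, n_iter = preconditioned_gcr(C, f, apply_B=lambda r: [ri / 2.0 for ri in r])
true_res = [fi - ci for fi, ci in zip(f, matvec(C, u))]
print(n_iter, dot(true_res, true_res) ** 0.5)
```

The storage of all previous directions is the price of nonsymmetry; for a symmetric matrix C the recursion becomes short, as noted in the next section for the MPCR method.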

4 Parallel Implementation of the Method

The parallel implementation of the numerical approaches we have considered consists, in general, of the following main stages:

- (a) constructing the grid and, if necessary, reconstructing it at each time step, i.e. if the solution changes drastically in time;

- (b) computing the coefficients of the discrete algebraic system, and recomputing these coefficients if the input data of the original problem depend on time;

- (c) at each time step, implementing nonlinear iterations if the coefficients of the original IBVP depend on the unknown solution;

- (d) solving SLAEs by means of domain decomposition methods in Krylov subspaces;

- (e) postprocessing and visualization of the numerical results obtained;

- (f) solving the inverse or optimization IBVP, which includes the constrained minimization of a parameterized objective functional by optimization methods and the solution of a set of direct problems represented by the above stages;

- (g) controlling the general computational process and making decisions based on the results of mathematical modeling.

Stage (d) is the most expensive in terms of the required computational resources, and it is also the most investigated in the sense of achieving scalable parallelism. The main numerical and technological tools here are based on both domain decomposition methods and hybrid programming: MPI (Message Passing Interface), OpenMP-type multi-thread computing, vectorization of operations and the use of special computational units, for instance, GPGPU (see [14] and references therein). The DDMs represent two-level iterative processes in Krylov subspaces. The upper level includes the distributed version of the MPSCR method (33)–(40), for example. In the case of a symmetric matrix C, this algorithm becomes simpler and transforms into a multi-preconditioned conjugate residual (MPCR) method with short recursions. Here matrix-vector operations are parallelized easily by means of efficient functions from the Sparse BLAS library. To minimize inter-processor communication time, a special array buffering is implemented. The main speedup is attained by synchronously solving the auxiliary algebraic subsystems for the subdomains on the corresponding processors. It is important that the subdomain SLAEs can have diverse matrix structures and be solved by various direct or iterative algorithms. In a sense, we have here a heterogeneous block iterative process, and minimizing the overall run time is not simple in such cases. In this situation, balancing the domain decomposition is a nonstandard task that should be solved so as to minimize the overall consumption of computational resources.
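The trade-off between arithmetic and communication in stage (d) can be illustrated with the simple run-time model of Sect. 1. The sketch below is a rough estimate under strong assumptions that are ours, not the paper's: a cubic grid with \(n^3\) nodes is split into \(p^3\) equal cubic subdomains, one iteration costs a fixed number of operations per node, and each subdomain exchanges one layer of values through each of its six faces. All numerical parameters are illustrative.

```python
def iteration_time(n, p, ops_per_node=30.0, tau_a=1e-9, tau_0=1e-6, tau_c=1e-8):
    """Estimated time of one iteration on p**3 subdomains of an n**3 grid."""
    local_nodes = (n / p) ** 3
    t_a = tau_a * ops_per_node * local_nodes           # arithmetic, no load imbalance
    # six face exchanges: latency plus the transfer of (n/p)**2 values per face
    t_c = 0.0 if p == 1 else 6 * (tau_0 + tau_c * (n / p) ** 2)
    return t_a + t_c


def speedup(n, p):
    """Speedup of p**3 processes relative to a single process."""
    return iteration_time(n, 1) / iteration_time(n, p)


n = 256
for p in (1, 2, 4, 8):
    s = speedup(n, p)
    print(f"P = {p ** 3:4d}: speedup = {s:7.1f}, efficiency = {s / p ** 3:.2f}")
```

In this model the efficiency decreases as the subdomains shrink, because the arithmetic work scales with the subdomain volume while the exchanged data scale with its surface and the latency term does not decrease at all.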

The scalable parallelization of the other computational stages (a–c) should also be based, naturally, on the domain decomposition principle. Within the conception of the basic system of modeling (BSM; see [15]), each stage would be implemented by the corresponding BSM kernel subsystem, and these subsystems interact by means of distributed data structures.

References

1. Il’in, V.P.: Mathematical Modeling, Part I: Continuous and Discrete Models. SBRAS Publ., Novosibirsk (2017). (in Russian)

2. Il’in, V.P.: Finite Element Methods and Technologies. ICM&MG SBRAS, Novosibirsk (2007). (in Russian)

3. Il’in, V.P.: Methods of Solving the Ordinary Differential Equations. NSU Publ., Novosibirsk (2017). (in Russian)

4. Il’in, V.P.: Problems of parallel solution of large systems of linear algebraic equations. J. Math. Sci. 216, 795–804 (2016). https://doi.org/10.1007/s10958-016-2945-4

5. Saad, Y.: Iterative Methods for Sparse Linear Systems. PWS Publ., New York (2002). https://doi.org/10.1137/1.9780898718003

6. Il’in, V.P.: Finite Difference and Finite Volume Methods for Elliptic Equations. ICM&MG SBRAS Publ., Novosibirsk (2001). (in Russian)

7. Dolean, V., Jolivet, P., Nataf, F.: An Introduction to Domain Decomposition Methods: Algorithms, Theory and Parallel Implementation. SIAM, Philadelphia (2015). https://doi.org/10.1137/1.9781611974065

8. Gander, M.J., Güttel, S.: ParaExp: a parallel integrator for linear initial value problems. SIAM J. Sci. Comput. 35, 123–142 (2013). https://doi.org/10.1137/110856137

9. Karra, S.: A hybrid Pade ADI scheme of high-order for convection-diffusion problem. Int. J. Numer. Methods Fluids 64, 532–548 (2010). https://doi.org/10.1002/fld2160

10. Lawson, C.L., Hanson, R.J.: Solving Least Squares Problems. Prentice-Hall, Inc., Upper Saddle River (1974). https://doi.org/10.1137/1.9781611971217

11. Saad, Y., Yeung, M., Erhel, J., Guyomarc’h, F.: A deflated version of the Conjugate Gradient Algorithm. SIAM J. Sci. Comput. 21, 1909–1926 (2000). https://doi.org/10.1137/s1064829598339761

12. Il’in, V.P.: Multi-preconditioned domain decomposition methods in the Krylov subspaces. In: Dimov, I., Faragó, I., Vulkov, L. (eds.) NAA 2016. LNCS, vol. 10187, pp. 95–106. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-57099-0_9

13. Il’in, V.P.: Least squares methods in Krylov subspaces. J. Math. Sci. 224, 900–910 (2017). https://doi.org/10.1007/s10958-017-3460-y

14. Il’in, V.: On the parallel strategies in mathematical modeling. In: Sokolinsky, L., Zymbler, M. (eds.) PCT 2017. CCIS, vol. 753, pp. 73–85. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-67035-5_6

15. Gladkikh, V.S., Il’in, V.P.: Basic System of Modeling (BSM): conception, architecture and methodology. In: Conference Proceedings of “Modern Problems of Mathematical Modeling, Image Processing and Parallel Computing”, pp. 151–158. RTU Publ., Rostov (2017). https://doi.org/10.23947/2587-8999-2017-2-194-200. (in Russian)


Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Il’in, V. (2018). High-Performance Computation of Initial Boundary Value Problems. In: Sokolinsky, L., Zymbler, M. (eds) Parallel Computational Technologies. PCT 2018. Communications in Computer and Information Science, vol 910. Springer, Cham. https://doi.org/10.1007/978-3-319-99673-8_14


DOI: https://doi.org/10.1007/978-3-319-99673-8_14


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-99672-1

Online ISBN: 978-3-319-99673-8

eBook Packages: Computer Science, Computer Science (R0)