Abstract

In recent years, decomposition-based multi-objective evolutionary algorithms (MOEAs) have gained increasing popularity. However, these MOEAs depend on the consistency between the Pareto front shape and the distribution of the reference weight vectors. In this paper, we propose a decomposition-based MOEA, which uses the modified Euclidean distance (\(d^+\)) as a scalar aggregation function. The proposed approach adopts a novel hypercube-based method for approximating the reference set, so that the reference set is adapted to lead the evolutionary process. Our preliminary results indicate that our proposed approach is able to obtain solutions of a similar quality to those obtained by state-of-the-art MOEAs such as MOMBI-II, NSGA-III, RVEA and MOEA/DD in several MOPs, and is able to outperform them in problems with complicated Pareto fronts.

The first author acknowledges support from CONACyT and CINVESTAV-IPN to pursue graduate studies in Computer Science. The second author gratefully acknowledges support from CONACyT project no. 221551.


Keywords

- Decomposition-based MOEAs

- Multi-objective Evolutionary Algorithms (MOEAs)

- Modified Euclidean Distance

- Pareto Front Shape

- Reference Weight Vector


1 Introduction

Many real-world problems have several (often conflicting) objectives which need to be optimized at the same time. They are known as Multi-objective Optimization Problems (MOPs) and their solution gives rise to a set of solutions that represent the best possible trade-offs among the objectives. These solutions constitute the so-called Pareto optimal set and their image is called the Pareto Optimal Front (POF). Over the years, Multi-Objective Evolutionary Algorithms (MOEAs) have become an increasingly common approach for solving MOPs, mainly because of their conceptual simplicity, ease of use and efficiency.

Decomposition-based MOEAs transform a MOP into a group of sub-problems, in such a way that each sub-problem is defined by a reference weight point. Then, all these sub-problems are simultaneously solved using a single-objective optimizer [16]. Because of their effectiveness (e.g., with respect to Pareto-based MOEAs; see Note 1) and efficiency (see Note 2), decomposition-based MOEAs have become quite popular in recent years both in traditional MOPs and in many-objective problems (i.e., MOPs having four or more objectives).

However, the main disadvantage of decomposition-based MOEAs is that the diversity of their selection mechanism is led explicitly by the reference weight vectors (normally the weight vectors are distributed in a unit simplex). This makes them unable to properly solve MOPs with complicated Pareto fronts (i.e., Pareto fronts with irregular shapes).

Decomposition-based MOEAs are appropriate for solving MOPs with regular Pareto fronts (i.e., those sharing the same shape as a unit simplex). There is experimental evidence that indicates that decomposition-based MOEAs are not able to generate good approximations to MOPs having disconnected, degenerate, badly-scaled or other irregular Pareto front shapes [2, 5].

Here, we propose a decomposition-based MOEA, which adopts the modified Euclidean distance (\(d^+\)) as a scalar aggregation function. This approach is able to switch between a PBI scalar aggregation function and the \(d^+\) distance in order to lead the optimization process. In order to adopt the \(d^+\) distance, we also incorporate an adaptive method for building the reference set. This method is based on the creation of hypercubes and uses an archive for preserving good candidate solutions. We show that the resulting decomposition-based MOEA has a competitive performance with respect to state-of-the-art MOEAs, and that it is able to properly deal with MOPs having complicated Pareto fronts.

The remainder of this paper is organized as follows. Section 2 provides some basic concepts related to multi-objective optimization. Our decomposition-based MOEA is described in Sect. 3. In Sect. 4, we present our methodology and a short discussion of our preliminary results. Finally, our conclusions and some possible paths for future research are provided in Sect. 5.

2 Basic Concepts

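Formally, a MOP in terms of minimization is defined as:

\[ \text{minimize} \quad {\varvec{f}}({\varvec{x}}) := [f_1({\varvec{x}}), f_2({\varvec{x}}), \dots , f_m({\varvec{x}})] \tag{1} \]

subject to:

\[ g_i({\varvec{x}}) \le 0, \qquad h_j({\varvec{x}}) = 0 \tag{2} \]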

where \({\varvec{x}} = [x_1,x_2,\dots ,x_n]\) is the vector of decision variables, \(f_i : \mathbb {R}^n \rightarrow \mathbb {R}\), \(i = 1 , \dots ,m\) are the objective functions and \(g_i, h_j : \mathbb {R}^n \rightarrow \mathbb {R}\), \(i = 0,\dots ,p\), \(j = 1,\dots ,q\) are the constraint functions of the problem.

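We also need to provide more details about the \(\text {IGD}^+\) indicator, which uses the modified Euclidean distance that we adopt in our proposal. According to [11], the \(\text {IGD}^+\) indicator can be described as follows:

\[ \text {IGD}^+(\mathcal {A}, \mathcal {Z}) = \frac{1}{|\mathcal {Z}|} \sum _{{\varvec{z}} \in \mathcal {Z}} \min _{{\varvec{a}} \in \mathcal {A}} d^+({\varvec{a}},{\varvec{z}}) \tag{3} \]

where \({\varvec{a}} \in \mathcal {A} \subset \mathbb {R}^m\), \({\varvec{z}} \in \mathcal {Z} \subset \mathbb {R}^m\), \(\mathcal {A}\) is the Pareto front set approximation and \(\mathcal {Z}\) is the reference set. \(d^+({\varvec{a}},{\varvec{z}})\) is defined as:

\[ d^+({\varvec{a}},{\varvec{z}}) = \sqrt{\sum _{k=1}^{m} \left( \max \{a_k - z_k, 0\} \right) ^2} \tag{4} \]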

Therefore, we can see that the set \(\mathcal {A}\) represents a better approximation to the real \(\mathcal {PF}\) when we obtain a lower \(\text {IGD}^+\) value, if we consider the reference set as \(\mathcal {PF}_{True}\). \(\text {IGD}^+\) was shown to be weakly Pareto compliant, and this indicator presents some advantages with respect to the original Inverted Generational Distance (for more details about IGD and \(\text {IGD}^+\), see [4] and [11] respectively).
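As an illustration of the two definitions above, the following minimal Python sketch computes \(d^+\) and \(\text {IGD}^+\) for a minimization problem. The function names and the sample points are hypothetical and only serve to show the arithmetic; this is not the authors' implementation.

```python
import math

def d_plus(a, z):
    """Modified Euclidean distance d+ (minimization): only the objectives
    in which a is worse (larger) than z contribute to the distance."""
    return math.sqrt(sum(max(ak - zk, 0.0) ** 2 for ak, zk in zip(a, z)))

def igd_plus(approx, reference):
    """Average, over the reference set, of the smallest d+ to the approximation."""
    return sum(min(d_plus(a, z) for a in approx) for z in reference) / len(reference)

# Hypothetical two-objective data, for illustration only.
reference = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]
approx = [(0.1, 1.0), (0.5, 0.6), (1.0, 0.1)]
print(igd_plus(approx, reference))
```

Note that a point that dominates a reference point has \(d^+ = 0\) with respect to it, which is the property that distinguishes \(d^+\) from the plain Euclidean distance used in IGD.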

3 Our Proposed Approach

3.1 General Framework

Our approach adopts the same structure as the original MOEA/D [16], but we include some improvements in order to solve MOPs with complicated Pareto fronts. Our approach has the following features: (1) an archiving process for preserving candidate solutions which will form the reference set; (2) a method for adapting the reference set in order to sample the Pareto front uniformly; and (3) a rule for updating the reference set. Algorithm 1 shows the details of our proposed approach. Our proposed MOEA decomposes the MOP into scalar optimization subproblems, which are solved simultaneously by an evolutionary algorithm (as in the original MOEA/D). The population, at each generation, is composed of the best solution found so far for each subproblem. Each subproblem is solved by using information only from its neighborhood, where each neighborhood is defined by the n candidate solutions which are nearest according to the scalar aggregation function. The reference update process is launched when a certain percentage of the evolutionary process (defined by “UpdatePercent”) is reached. From that point on, the non-dominated solutions are stored in an archive in order to sample the shape of the Pareto front. When the cardinality of the archive, \(|\mathcal {A}|\), is equal to “ArchiveSize”, the reference method is launched for selecting the best candidate solutions, which will form the new reference set. Once this is done, the scalar aggregation function is switched to the modified Euclidean distance (\(d^+\)) (see Eq. (4)), and the set \(\mathcal {A}\) is emptied. The number of allowable updates is controlled by the variable “maxUpdates”.
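The archive handling and the update trigger described above could be sketched as follows. This is a simplified reading of the prose, not a transcription of Algorithm 1: the function names are hypothetical, the parameters mirror “UpdatePercent”, “ArchiveSize” and “maxUpdates”, and the use of `>=` instead of strict equality for the archive size is a defensive assumption.

```python
def dominates(u, v):
    """True if u Pareto-dominates v (minimization)."""
    return all(a <= b for a, b in zip(u, v)) and any(a < b for a, b in zip(u, v))

def archive_insert(archive, candidate):
    """Return an archive that keeps only mutually non-dominated points."""
    if any(dominates(a, candidate) or a == candidate for a in archive):
        return archive  # candidate is dominated or duplicated: archive unchanged
    # drop members dominated by the candidate, then add it
    return [a for a in archive if not dominates(candidate, a)] + [candidate]

def should_update_references(generation, max_generations, archive,
                             update_percent, archive_size,
                             updates_done, max_updates):
    """Trigger the reference update once archiving has started, the archive
    is full, and the number of allowed updates has not been exhausted."""
    started = generation >= update_percent * max_generations
    return started and len(archive) >= archive_size and updates_done < max_updates
```

After a reference update, the caller would empty the archive and increment its own update counter, as the text describes.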

3.2 Archiving Process

As mentioned before, the archive stores non-dominated solutions, up to a maximum number of solutions defined by the “ArchiveSize” value. When the archive reaches its maximum capacity, the approximation reference algorithm is executed for selecting candidate solutions (these candidate solutions will form the so-called candidate reference set). After that, the archive is emptied and the archiving process continues until reaching a maximum number of updates. The archiving process is applied after 60% of the total number of generations. It is worth mentioning that the candidate reference set is not compatible with the weight relation rule (see Note 3), which implies that it is not possible to use the Tchebycheff scalar aggregation function for leading the search. However, the PBI function works because it only requires directions (for more details see [16]).
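To make the remark about PBI concrete, here is a minimal sketch of the penalty-based boundary intersection function in its usual form from [16]. The penalty value `theta=5.0` and the explicit ideal point argument are assumptions for illustration; the text does not state the values used in the experiments.

```python
import math

def pbi(f, weight, ideal, theta=5.0):
    """PBI value of objective vector f for a search direction `weight`.
    d1 is the distance along the direction, d2 the distance to the line."""
    diff = [fi - zi for fi, zi in zip(f, ideal)]
    norm_w = math.sqrt(sum(w * w for w in weight))
    d1 = abs(sum(d * w for d, w in zip(diff, weight))) / norm_w
    # projection of f onto the line through `ideal` with direction `weight`
    proj = [zi + d1 * w / norm_w for zi, w in zip(ideal, weight)]
    d2 = math.sqrt(sum((fi - pi) ** 2 for fi, pi in zip(f, proj)))
    return d1 + theta * d2

# Rescaling the direction leaves the value unchanged, so the vector
# does not need to sum to one.
print(pbi((1.0, 0.0), (1.0, 1.0), (0.0, 0.0)))
print(pbi((1.0, 0.0), (2.0, 2.0), (0.0, 0.0)))
```

Because the weight vector is normalized inside the function, any positive rescaling of it gives the same value, which is why a reference set that violates the weight relation rule can still be used with PBI.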

3.3 Reference Set

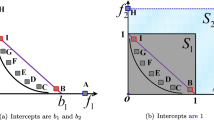

In our approach, we aim to select the best candidate points whose directions are promising (these candidate solutions will sample the Pareto front as uniformly as possible). The main idea is to apply a density estimator. For this reason, we propose to use an algorithm based on the hypercube contributions to select a certain number of reference points from the archive. Algorithm 2 provides the pseudo-code of an approach that is invoked with a set of non-dominated candidate points (called the \(\mathcal {A}\) set) and the maximum number of reference points that we aim to find. The algorithm is organized in two main parts. In the first loop, we create a set of initial candidate solutions to form the so-called \(\mathcal {Q}\) set. The solutions from \(\mathcal {A}\) that form part of \(\mathcal {Q}\) are removed from \(\mathcal {A}\). After that, the greedy algorithm starts to find the best candidate solutions which will form the reference set \(\mathcal {Z}\). In order to find the candidate reference points, the selection mechanism computes the hypercube contributions of the current reference set \(\mathcal {Q}\). Once this is done, we remove the \(i^{th}\) solution that minimizes the hypercube value and we add a new candidate solution from \(\mathcal {A}\) to \(\mathcal {Q}\). This process is executed until the cardinality of \(\mathcal {A}\) is equal to zero. In line 21 of Algorithm 2, we apply the expand and translate operations.

A hypercube is generated by the union of all the maximum volumes covered by a reference point. The \(i^{th}\) maximum volume is described as “the maximum volume generated by a set of candidate points” (these candidate points are obtained from the archive using a reference point \(y_{ref}\)). The hypercube is computed using Algorithm 3. The main idea of this algorithm is to add all the maximum volumes, which are defined by the maximum point and the reference point (\(y_{ref}\)). When a certain point is considered to be the maximum point, the objective space is split into m parts. The maximum point is removed from the set \(\mathcal {Q}\). This process is repeated until \(\mathcal {Q}\) is empty.
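The greedy replacement loop of Algorithm 2, as described in the prose above, might look like the sketch below. Two caveats apply. First, the contribution function is passed in as a parameter, and the default used here (nearest-neighbor distance) is only a stand-in density estimate, not the hypercube contribution of Algorithm 3. Second, the expand and translate operations are omitted. All names are hypothetical, and at least two reference points are assumed.

```python
import math

def nearest_neighbor_distance(index, points):
    """Stand-in density estimate (NOT the paper's hypercube contribution):
    distance from points[index] to its closest neighbor in points."""
    p = points[index]
    return min(math.dist(p, q) for j, q in enumerate(points) if j != index)

def select_reference_points(archive, n_ref, contribution=nearest_neighbor_distance):
    """Greedy selection of n_ref points from a non-dominated archive."""
    remaining = list(archive)
    q = remaining[:n_ref]          # first loop: initial candidate set Q
    remaining = remaining[n_ref:]  # members of Q are removed from A
    while remaining:               # until A is empty
        scores = [contribution(i, q) for i in range(len(q))]
        q.pop(scores.index(min(scores)))  # drop the smallest contributor
        q.append(remaining.pop(0))        # move the next candidate from A to Q
    return q

# Hypothetical archive, for illustration only.
archive = [(0.0, 1.0), (0.1, 0.9), (0.5, 0.5), (1.0, 0.0)]
print(select_reference_points(archive, 3))
```

Swapping in a function that computes the hypercube contribution would only require it to follow the same `(index, points)` signature.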

In the first part of Algorithm 3, we check whether \(\mathcal {Q}\) contains one element. If that is the case, we compute the volume generated by \(y_{ref}\) and \( {\varvec{q}} \in \mathcal {Q}\). Otherwise, we compute the union of all the maximum hypercubes. In order to apply this procedure, we find the vector \({\varvec{q}}_{{\varvec{max}}}\) that maximizes the hypercube. Once this is done, we create m reference points which will form the so-called \(\mathcal {Y}\) set. For each reference point from \(\mathcal {Y}\), we reduce the set \(\mathcal {Q}\) to a smaller subset in order to form the set \(\mathcal {Q}_{new}\). Once this is done, we proceed to compute recursively the hypercube value of the new set formed by the subset \(\mathcal {Q}_{new}\) and the new reference point \(y_{new}\). It is worth noting that this value allows us to measure the relationship among the elements of a non-dominated set.

4 Experimental Results

We compare the performance of our approach with respect to that of four state-of-the-art MOEAs: MOEA/DD [13], NSGA-III [5], RVEA [2], and MOMBI-II [9]. These MOEAs have been found to be competitive in MOPs with a variety of Pareto front shapes. MOEA/DD [13] is an extension of MOEA/D which includes the Pareto dominance relation to select candidate solutions and is able to outperform the original MOEA/D, particularly in many-objective problems having up to 15 objectives. NSGA-III [5] uses a distributed set of reference points to manage the diversity of the candidate solutions, with the aim of improving convergence. The Reference Vector Guided Evolutionary Algorithm (RVEA) [2] provides very competitive results in MOPs with complicated Pareto fronts. The Many-Objective Metaheuristic Based on the R2 Indicator (MOMBI) [8] adopts weight vectors and the R2 indicator, and both mechanisms lead the optimization process. MOMBI is very competitive but it tends to lose diversity in high dimensionality. This study includes an improved version of this approach, called MOMBI-II [9].

4.1 Methodology

For our comparative study, we decided to adopt the Hypervolume indicator, because this indicator is able to assess both convergence and maximum spread along the Pareto front. The reference points used in our preliminary study are shown in Table 1.
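For readers unfamiliar with the indicator, the following sketch computes the hypervolume for the two-objective minimization case only, using a simple sweep. It is a simplified illustration with hypothetical data; the experiments reported here involve general m-objective hypervolume computations with the reference points of Table 1.

```python
def hypervolume_2d(points, ref):
    """Area dominated by `points` and bounded by `ref`
    (minimization, two objectives)."""
    # keep only points that are strictly better than ref in both objectives
    pts = sorted(p for p in points if p[0] < ref[0] and p[1] < ref[1])
    area, best_f2 = 0.0, ref[1]
    for f1, f2 in pts:  # sweep in increasing order of the first objective
        if f2 < best_f2:  # dominated points add no area and are skipped
            area += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return area

# Hypothetical front and reference point, for illustration only.
print(hypervolume_2d([(1.0, 3.0), (2.0, 2.0), (3.0, 1.0)], (4.0, 4.0)))  # 6.0
```

A larger value indicates that the set covers more of the region between the front and the reference point, which is why the indicator rewards both convergence and spread.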

We aimed to study the performance of our proposed approach when solving MOPs with complicated Pareto front shapes. For this reason, we selected 18 test problems with a variety of representative Pareto front shapes from some well-known and recently proposed test suites: the DTLZ [7], the WFG [10], the MAF [3] and the VNT test suites [15].

4.2 Parameterization

In the MAF and DTLZ test suites, the total number of decision variables is given by \(n = m + k -1 \), where m is the number of objectives and k was set to 5 for DTLZ1 and MAF1, and to 10 for DTLZ2-6 and MAF2-5. The number of decision variables in the WFG test suite was set to 24, and the position-related parameter was set to \(m-1\). The distribution indexes for the Simulated Binary crossover and the polynomial-based mutation operators [6], adopted by all algorithms, were set to \(\eta _{c}=20\) and \(\eta _{m}=20\), respectively. The crossover probability was set to \(p_{c}=0.9\) and the mutation probability was set to \(p_{m}=1/L\), where L is the number of decision variables. The total number of function evaluations was set in such a way that it did not exceed 60,000. In MOEA/DD, MOMBI-II and NSGA-III, the number of weight vectors was set to the same value as the population size. The population size N is dependent on H. For this reason, for all test problems, the population size was set to 120 for each MOEA. In RVEA, the rate of change of the penalty function and the frequency to conduct the reference vector adaptation were set to 2 and 0.1, respectively. Our approach was tested using a PBI scalar aggregation function and the modified Euclidean distance (\(d^+\)). The maximum number of elements allowed in the archive was set to 500 and the maximum number of reference updates was set to 5.
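The arithmetic behind these settings can be checked with a few lines of Python. Two assumptions are made here and are not stated in the text: that H denotes the number of divisions of a simplex-lattice design, so that \(N = \binom{H+m-1}{m-1}\), and that m = 3 objectives are used for the example values.

```python
from math import comb

def num_decision_variables(m, k):
    """n = m + k - 1, as used for the DTLZ and MAF problems."""
    return m + k - 1

def simplex_lattice_size(m, h):
    """Number of weight vectors produced by a simplex-lattice design
    with h divisions per objective (assumed meaning of H)."""
    return comb(h + m - 1, m - 1)

m = 3  # assumed number of objectives, for illustration only
print(num_decision_variables(m, 5))   # DTLZ1 / MAF1 with k = 5
print(num_decision_variables(m, 10))  # DTLZ2-6 / MAF2-5 with k = 10
print(simplex_lattice_size(m, 14))    # lattice size for H = 14
print(1 / num_decision_variables(m, 10))  # mutation probability p_m = 1/L
```

Under these assumptions, H = 14 yields 120 weight vectors, which matches the population size reported above.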

4.3 Discussion of Results

Table 2 shows the average hypervolume values of 30 independent executions of each MOEA for each instance of the DTLZ, VNT, MAF and WFG test suites, where the best results are shown in boldface and grey-colored cells contain the second best results. The values in parentheses show the variance for each problem. We adopted the Wilcoxon rank sum test in order to compare the results obtained by our proposed MOEA and its competitors at a significance level of 0.05, where the symbol “\(+\)” indicates that the compared algorithm is significantly outperformed by our approach. On the other hand, the symbol “−” means that our approach (MOEA/DR) is significantly outperformed by its competitor. Finally, “\(\approx \)” indicates that there is no statistically significant difference between the results obtained by our approach and its competitor.

As can be seen in Table 2, our MOEA was able to outperform MOMBI-II, RVEA, MOEA/DD, and NSGA-III in seven instances and in several other cases, it obtained very similar results to those of the best performer. We can see that our approach outperformed its competitors in MOPs with degenerate Pareto fronts (DTLZ5-6 and VNT2-3). In this study, MOMBI-II is ranked as the second best overall performer, because it was able to outperform its competitors in four cases. It is worth mentioning that all the adopted MOEAs are very competitive because the final set of solutions obtained by them has similar quality in terms of the hypervolume indicator.

Figures 1, 2, 3 and 4 show a graphical representation of the final set of solutions obtained by each MOEA. On the MOPs with inverted simplex-like Pareto fronts, our algorithm had a good performance (see Fig. 1). Figures 1a to e show that the solutions produced by all the MOEAs adopted have a good coverage of the corresponding Pareto fronts. However, the solutions of MOMBI-II and NSGA-III are not distributed very uniformly, while the solutions of RVEA and MOEA/DD are distributed uniformly but their number is apparently less than their population size. On MOPs with badly-scaled Pareto fronts, our approach was able to obtain the best approximation (see Fig. 2). Figures 2a to e show that the solutions produced by all the MOEAs adopted are distributed very uniformly. On MOPs with degenerate Pareto fronts, it is clear that the winner in this category is our algorithm, since the solutions of NSGA-III, RVEA and MOEA/DD are not distributed very uniformly and they were not able to converge (see Fig. 3). On MOPs with disconnected Pareto fronts, our approach did not perform better than the other MOEAs. The reason is probably that the evolutionary operators were not able to generate solutions in the whole objective space, which causes the approximations produced by our approach to converge to a single region. Figure 4 shows that RVEA was able to obtain the best approximation in DTLZ7, since its approximation is distributed uniformly along the Pareto front.

5 Conclusions and Future Work

We have proposed a decomposition-based MOEA for solving MOPs with different Pareto front shapes (including those having complicated Pareto front shapes). The core idea of our proposed approach is to adopt the modified Euclidean distance (\(d^+\)) as a scalar aggregation function. Additionally, our proposal introduces a novel hypercube-based method for approximating the reference set, so that the reference set is adapted to lead the evolutionary process. Our results show that our method for adapting the reference point set improves the performance of the original MOEA/D. As can be observed, the reference set is of utmost importance since our approach leads its search process using a set of reference points. Our preliminary results indicate that our approach is very competitive with respect to MOMBI-II, RVEA, MOEA/DD and NSGA-III, being able to outperform them in seven benchmark problems. Based on such results, we claim that our proposed approach is a competitive alternative to deal with MOPs having complicated Pareto front shapes. As part of our future work, we are interested in studying the sensitivity of our proposed approach to its parameters. We also intend to improve its performance in those cases in which it was not the best performer.

Notes

- 1. It is well-known that Pareto-based MOEAs cannot properly solve many-objective problems [12].

- 2.

- 3. The weights of the reference point problem should be \(\sum _{i = 0}^{m}{\lambda _i} = 1\).

References

1. Beume, N., Naujoks, B., Emmerich, M.: SMS-EMOA: multiobjective selection based on dominated hypervolume. Eur. J. Oper. Res. 181(3), 1653–1669 (2007)

2. Cheng, R., Jin, Y., Olhofer, M., Sendhoff, B.: A reference vector guided evolutionary algorithm for many-objective optimization. IEEE Trans. Evol. Comput. 20(5), 773–791 (2016)

3. Cheng, R., et al.: A benchmark test suite for evolutionary many-objective optimization. Complex Intell. Syst. 3(1), 67–81 (2017)

4. Coello Coello, C.A., Reyes Sierra, M.: A study of the parallelization of a coevolutionary multi-objective evolutionary algorithm. In: Monroy, R., Arroyo-Figueroa, G., Sucar, L.E., Sossa, H. (eds.) MICAI 2004. LNCS (LNAI), vol. 2972, pp. 688–697. Springer, Heidelberg (2004). https://doi.org/10.1007/978-3-540-24694-7_71

5. Deb, K., Jain, H.: An evolutionary many-objective optimization algorithm using reference-point-based nondominated sorting approach, part I: solving problems with box constraints. IEEE Trans. Evol. Comput. 18(4), 577–601 (2014)

6. Deb, K., Pratap, A., Agarwal, S., Meyarivan, T.: A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 6(2), 182–197 (2002)

7. Deb, K., Thiele, L., Laumanns, M., Zitzler, E.: Scalable test problems for evolutionary multiobjective optimization. In: Abraham, A., Jain, L., Goldberg, R. (eds.) Evolutionary Multiobjective Optimization. Theoretical Advances and Applications, pp. 105–145. Springer, USA (2005). https://doi.org/10.1007/1-84628-137-7_6

8. Hernández Gómez, R., Coello Coello, C.A.: MOMBI: a new metaheuristic for many-objective optimization based on the R2 indicator. In: 2013 IEEE Congress on Evolutionary Computation (CEC 2013), Cancún, México, 20–23 June 2013, pp. 2488–2495. IEEE Press (2013). ISBN 978-1-4799-0454-9

9. Hernández Gómez, R., Coello Coello, C.A.: Improved metaheuristic based on the R2 indicator for many-objective optimization. In: 2015 Genetic and Evolutionary Computation Conference (GECCO 2015), Madrid, Spain, 11–15 July 2015, pp. 679–686. ACM Press (2015). ISBN 978-1-4503-3472-3

10. Huband, S., Hingston, P., Barone, L., While, L.: A review of multiobjective test problems and a scalable test problem toolkit. IEEE Trans. Evol. Comput. 10(5), 477–506 (2006)

11. Ishibuchi, H., Masuda, H., Tanigaki, Y., Nojima, Y.: Modified distance calculation in generational distance and inverted generational distance. In: Gaspar-Cunha, A., Henggeler Antunes, C., Coello, C.C. (eds.) EMO 2015. LNCS, vol. 9019, pp. 110–125. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-15892-1_8

12. Ishibuchi, H., Tsukamoto, N., Nojima, Y.: Evolutionary many-objective optimization: a short review. In: 2008 Congress on Evolutionary Computation (CEC 2008), Hong Kong, June 2008, pp. 2424–2431. IEEE Service Center (2008)

13. Li, K., Deb, K., Zhang, Q., Kwong, S.: An evolutionary many-objective optimization algorithm based on dominance and decomposition. IEEE Trans. Evol. Comput. 19(5), 694–716 (2015)

14. Manoatl Lopez, E., Coello Coello, C.A.: IGD\(^+\)-EMOA: a multi-objective evolutionary algorithm based on IGD\(^{+}\). In: 2016 IEEE Congress on Evolutionary Computation (CEC 2016), Vancouver, Canada, 24–29 July 2016, pp. 999–1006. IEEE Press (2016). ISBN 978-1-5090-0623-9

15. Veldhuizen, D.A.V.: Multiobjective evolutionary algorithms: classifications, analyses, and new innovations. Ph.D. thesis, Department of Electrical and Computer Engineering. Graduate School of Engineering. Air Force Institute of Technology, Wright-Patterson AFB, Ohio, USA, May 1999

16. Zhang, Q., Li, H.: MOEA/D: a multiobjective evolutionary algorithm based on decomposition. IEEE Trans. Evol. Comput. 11(6), 712–731 (2007)


© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Manoatl Lopez, E., Coello Coello, C.A. (2018). Use of Reference Point Sets in a Decomposition-Based Multi-Objective Evolutionary Algorithm. In: Auger, A., Fonseca, C., Lourenço, N., Machado, P., Paquete, L., Whitley, D. (eds) Parallel Problem Solving from Nature – PPSN XV. PPSN 2018. Lecture Notes in Computer Science(), vol 11101. Springer, Cham. https://doi.org/10.1007/978-3-319-99253-2_30


DOI: https://doi.org/10.1007/978-3-319-99253-2_30


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-99252-5

Online ISBN: 978-3-319-99253-2

eBook Packages: Computer Science (R0)