Abstract

This paper introduces two tools for manual energy evaluation and runtime tuning developed at IT4Innovations in the READEX project. The MERIC library can be used for manual instrumentation and analysis of any application from the energy and time consumption point of view. Besides tracing, MERIC can also change environment and hardware parameters during the application runtime, which leads to energy savings.

MERIC stores large amounts of data, which are difficult for humans to read. The RADAR generator analyses the MERIC output files to find the best settings of the evaluated parameters for each instrumented region. It generates a report and a MERIC configuration file for application production runs.

1 Introduction

The Horizon 2020 project READEX (Runtime Exploitation of Application Dynamism for Energy-efficient eXascale computing) [18] deals with manual and automatic tools that analyze High Performance Computing (HPC) applications and search for the combination of tuning parameter settings that best matches the application's needs. This paper presents the tools developed in the READEX project for manual evaluation of the dynamic behavior of HPC applications: MERIC and the RADAR generator.

The MERIC library evaluates application behavior in terms of resource consumption, and controls hardware and runtime parameters such as the Dynamic Voltage and Frequency Scaling (DVFS), Uncore Frequency Scaling (UFS), and number of OpenMP threads through external libraries. User applications can be instrumented using the MERIC manual instrumentation to analyze each part of the code separately. The energy measurements are provided by the High Definition Energy Efficiency Monitoring (HDEEM) system [8], or by Running Average Power Limit (RAPL) counters [10].

The MERIC measurement outputs are analyzed using the RADAR generator, which produces detailed reports, and also a MERIC configuration file, which can be used to set the best parameter values for all evaluated regions in the application.

Several research activities address energy savings in HPC applications by applying power capping [6, 11] to the whole application run, instead of splitting the application into regions and tuning them dynamically. Other research deals with a scheduling system that uses dynamic power capping with negligible time penalty, based on previous application runs [16]. Dynamic application tuning is the goal of the READEX project, which should deliver a tool suite for fully automatic application instrumentation, dynamism detection, and analysis. The analysis should find the configuration that provides the maximum energy savings and can be used for future production runs. The READEX tools are very complex and may not be easy to apply. Our tools follow the same approach with a focus on user-friendliness, albeit providing manual tuning only. Furthermore, the READEX tools focus on x86 platforms only, which is not the case for MERIC.

2 Applications Dynamism

The READEX project expects that HPC applications have different needs in separate parts of the code. To find these parts inside a user application, three dynamism metrics are presently measured and used in the READEX project. They include:

1. Execution time
2. Energy consumed
3. Computational intensity

Among these three metrics, the semantics of execution time and energy consumed are straightforward. Variation in the execution time and energy consumed by regions of an application during its execution indicates different resource requirements. Computational intensity is a metric used to model the behavior of an application based on the workload it imposes on the CPU and the memory. Presently, computational intensity is calculated using formula (1) and is analogous to the operational intensity used in the roofline model [22].

The regions of the user application selected for tuning are called significant regions. To detect them manually, profiling tools such as Allinea MAP [1] are used.

The dynamism observed in an application can be due to variation of the following factors:

- Floating-point computations (for example, this may occur due to variation in the density of matrices in dense linear algebra).
- Memory read/write access patterns (for example, this may occur due to variation in the sparsity of matrices in sparse linear algebra).
- Inter-process communication patterns (for example, this may occur due to irregularity in a data structure leading to irregular exchange of messages for operations such as global reductions).
- I/O operations performed during the application's execution.
- Different inputs to regions in the application.

To address these factors, the READEX project has identified a set of tuning parameters that can yield savings through static and dynamic tuning. The list of parameters contains the following:

- hardware parameters of the CPU
   - Core Frequency (CF)
   - Uncore Frequency (UCF) (Note 1)
- system software parameters
   - number of OpenMP threads, thread placement
- application-level parameters
   - depends on the specific application

All parameters can be set before an application is executed (this is called static tuning); in addition, some of them can be tuned dynamically during the application runtime. For instance, core and uncore frequencies can be switched without additional overhead, but switching the number of threads can affect performance due to NUMA effects and data placement, and must be handled carefully. Static and dynamic tuning lead to static and dynamic savings, respectively.

Presently, the MERIC tool (Sect. 3) is being developed and used in the READEX project to measure the above-mentioned dynamism metrics and evaluate applications. MERIC can dynamically switch the CPU core and uncore frequencies and the number of OpenMP threads used. The measurements collected for an application are logged into a READEX Application Dynamism Analysis Report (RADAR), as described in Sect. 4.

3 Manual Dynamism Evaluation with MERIC

MERIC (Note 2) is a C++ dynamic library (with an interface for Fortran applications) that measures the energy consumption and runtime of annotated regions inside a user application. By running the code with different settings of the tuning parameters, we analyze the possibilities for energy savings. The optimal configurations are subsequently applied by changing the tuning parameters (listed in Sect. 2) during the application runtime, which can also be done with MERIC. MERIC wraps a set of libraries that provide access to various hardware knobs and registers, and to operating-system and runtime-system variables (i.e., the tuning parameters), in order to read or modify their values. The main motivation for developing this tool was to simplify the evaluation of the dynamic behavior of various applications from the energy consumption point of view, which requires a large number of measurements.

The library is easy to use. After the MERIC initialization function has been inserted, the application can be instrumented with so-called probes, which wrap potentially significant regions of the analyzed code. Apart from the stored measurement results, the user should not notice any change in the behavior of the application.

3.1 MERIC Features

MERIC has minimal influence on the application's runtime despite providing several analysis and tuning features. Its overhead depends on the energy measurement mode described in this section, on the number of hardware performance counters read, and on the number of instrumented regions.

Environment Settings

During the MERIC initialization and at each region start and end, the CPU frequency, uncore frequency and number of OpenMP threads are set. To do so, MERIC uses the OpenMP runtime API and the cpufreq [3] and x86_adapt [17] libraries.

Energy Measurement

The key MERIC feature is energy measurement using the High Definition Energy Efficiency Monitoring (HDEEM) system, located directly on the compute nodes, which records 100 power samples per second for the CPUs and memories and 1000 samples per second for the whole node via the BMC (Baseboard Management Controller) and an FPGA (Field Programmable Gate Array). Figure 1 shows the system diagram and a picture of a node with HDEEM.

Fig. 1. A HDEEM system located on a node, and the system diagram [2].

HDEEM provides energy consumption measurements in two different ways; in MERIC, the user selects which one to use by setting the MERIC_CONTINUAL parameter.

In the first mode, the energy consumed since HDEEM was initialized is read from the HDEEM Stats structure (a data structure used by the HDEEM library to provide measurement information to the user application) at each region start and end. This solution is straightforward; however, every read from the HDEEM API incurs a delay of approximately 4 ms. To avoid this delay, the second mode takes advantage of the fact that HDEEM stores power samples in its internal memory during measurement, so MERIC only needs to record timestamps at the beginning and end of each region instead of calling the HDEEM API. Because all samples are transferred from the HDEEM memory at the end of the application run, MERIC instrumentation adds very little overhead during the runtime. The energy consumption of each region is subsequently calculated from the power samples based on the recorded timestamps.
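To illustrate the second mode, the following minimal C++ sketch computes a region's energy offline from buffered power samples and the two timestamps recorded for that region. The `PowerSample` layout, the function name and the use of the trapezoidal rule are our assumptions for illustration; they are not HDEEM's sample format or MERIC's actual code.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// One buffered power sample (hypothetical layout, not HDEEM's format).
struct PowerSample {
    double t;      // time in seconds since the measurement started
    double watts;  // instantaneous power
};

// Energy in joules consumed between the region's recorded timestamps
// t_start and t_end, integrated offline over time-sorted samples with the
// trapezoidal rule (power is interpolated linearly between samples).
double region_energy(const std::vector<PowerSample>& s, double t_start, double t_end) {
    assert(t_end >= t_start);
    double energy = 0.0;
    for (std::size_t i = 0; i + 1 < s.size(); ++i) {
        // Clip the sample interval [s[i].t, s[i+1].t] to the region.
        const double a = std::max(s[i].t, t_start);
        const double b = std::min(s[i + 1].t, t_end);
        if (b <= a) continue;  // no overlap (also skips zero-length intervals)
        const double slope = (s[i + 1].watts - s[i].watts) / (s[i + 1].t - s[i].t);
        const double pa = s[i].watts + slope * (a - s[i].t);  // power at a
        const double pb = s[i].watts + slope * (b - s[i].t);  // power at b
        energy += 0.5 * (pa + pb) * (b - a);
    }
    return energy;
}
```

Because only two timestamps are recorded per region at runtime, all of the integration work happens after the samples have been fetched at the end of the run, which is why this mode adds so little overhead.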

Contemporary Intel processors support energy consumption measurements via the Running Average Power Limit (RAPL) interface. MERIC uses the RAPL counters with 1 kHz sampling frequency to allow energy measurements on machines without the HDEEM infrastructure as well as to compare them with the HDEEM measurements.

The main disadvantage of RAPL is that it measures only the power consumption of the CPUs and memories, without providing information about the power consumption of the blade itself. For nodes with two Intel(R) Xeon(R) CPU E5-2680 v3 (\(2 \times 12\) cores) processors, the power baseline is approximately 70 W. To overcome this limitation, we statically add these 70 W to our measurements when using RAPL counters. MERIC uses the x86_adapt library to read the RAPL counters.
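A minimal sketch of the RAPL-based node estimate follows, assuming raw 32-bit counter readings and a known energy unit, both passed in as parameters because MERIC obtains them through x86_adapt, whose interface we do not reproduce here. The function names are hypothetical. The unsigned subtraction handles a single wrap-around of the counter between two readings, and the constant 70 W baseline is added for the region's duration.

```cpp
#include <cassert>
#include <cstdint>

// RAPL energy-status counters are 32-bit and wrap around, so the difference
// of two raw readings is taken in unsigned 32-bit arithmetic; this is correct
// as long as at most one wrap-around occurs between the readings.
// joules_per_count is the energy unit reported by the processor.
double rapl_delta_joules(std::uint32_t start, std::uint32_t end, double joules_per_count) {
    const std::uint32_t counts = end - start;  // modulo 2^32
    return counts * joules_per_count;
}

// Node-level estimate: measured CPU + DRAM energy plus a constant baseline
// power for the rest of the node (70 W for the nodes described in the text).
double node_energy_joules(double rapl_joules, double seconds, double baseline_watts = 70.0) {
    assert(seconds >= 0.0);
    return rapl_joules + baseline_watts * seconds;
}
```

For example, a region lasting 100 ms receives 70 W × 0.1 s = 7 J of baseline energy on top of the RAPL reading.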

The minimum runtime of each evaluated region has been set in the READEX project to 100 ms when using HDEEM or RAPL, so that each region has enough samples to determine its optimal configuration correctly.

Hardware Performance Counters

To provide more information about the instrumented regions of the application, we use the perf_event and PAPI libraries, which provide access to hardware performance counters. Values from the counters are converted into cache-miss rates, FLOPs/s (Note 3), and the computational intensity, which is a key metric for dynamism detection, as described in Sect. 2.
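The conversion from raw counts to derived metrics is simple arithmetic, sketched below under the assumption that per-region totals of cache accesses, cache misses, floating-point operations and runtime are already available. The structure and field names are hypothetical; which events exist, and under which names, depends on the processor and on PAPI/perf_event, and on Haswell the operation count would itself be an approximation (Note 3).

```cpp
#include <cassert>

// Raw counter totals for one region (hypothetical structure).
struct RegionCounters {
    double cache_accesses;  // e.g. last-level cache references
    double cache_misses;    // e.g. last-level cache misses
    double flops;           // floating-point operations (approximated on Haswell)
    double seconds;         // region runtime
};

struct RegionMetrics {
    double miss_rate;    // misses per access, between 0 and 1
    double flops_per_s;  // floating-point operations per second
};

// Derives rate metrics from the raw totals of one region.
RegionMetrics derive_metrics(const RegionCounters& c) {
    assert(c.cache_accesses > 0.0 && c.seconds > 0.0);
    return {c.cache_misses / c.cache_accesses, c.flops / c.seconds};
}
```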

Shared Interface for Score-P

The Score-P software system, as well as the MERIC library, allows users to manually (and also automatically) instrument an application for tracing analysis. Score-P instrumentation is also used in the READEX tool suite [13].

A user who has already instrumented an application with Score-P, or who wants to use Score-P in the future, may use the readex.h header file provided in the MERIC repository. This allows the user to insert the instrumentation only once, for both MERIC and Score-P simultaneously. When compiling the user application, one has to define the preprocessor macros USE_MERIC and USE_SCOREP (Score-P phase region only), or alternatively USE_SCOREP_MANUAL, to select which instrumentation is used.
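The mechanism behind a single shared header can be sketched with the preprocessor: the application is instrumented once, and the macros defined at compile time decide which backends receive the calls. The `demo_region_*` functions and the logging backends below are hypothetical stand-ins, not the actual contents of readex.h; real backends would call MERIC or Score-P instead of appending to a log.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Stand-in for the real backends: each enabled backend appends an entry here.
std::vector<std::string> g_trace;

// Hypothetical shared probes (not the actual readex.h API). Backends are
// enabled independently at compile time, e.g. with -DUSE_MERIC and/or
// -DUSE_SCOREP; with neither defined, the probes do nothing.
inline void demo_region_start(const char* name) {
#ifdef USE_MERIC
    g_trace.push_back(std::string("MERIC start ") + name);
#endif
#ifdef USE_SCOREP
    g_trace.push_back(std::string("Score-P start ") + name);
#endif
    (void)name;  // avoids an unused-parameter warning when no backend is enabled
}

inline void demo_region_stop(const char* name) {
#ifdef USE_MERIC
    g_trace.push_back(std::string("MERIC stop ") + name);
#endif
#ifdef USE_SCOREP
    g_trace.push_back(std::string("Score-P stop ") + name);
#endif
    (void)name;
}

// Application code is instrumented once, independent of the chosen backend.
void assemble_matrix() {
    demo_region_start("assemble");
    // ... application code ...
    demo_region_stop("assemble");
}
```

Compiling with `-DUSE_MERIC` routes the probes to the MERIC branch; with no macro defined, they have no effect on the application.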

Table 1 lists the functions defined in the header file, with their MERIC and Score-P equivalents. A brief description of the MERIC functions is provided in Sect. 3.2; the Score-P functions are described in the Score-P user manual [20].

MERIC Requirements

MERIC currently inserts MPI and OpenMP synchronization barriers into the application code to ensure that all processes/threads on one node are in the same region when measuring consumed resources or changing hardware or runtime parameters. We realize that this approach adds overhead to the application runtime and may penalize asynchronous applications. In the future, the library will allow the user to turn these barriers off.

Beyond the inserted synchronization, the MERIC library requires several libraries to provide all the previously mentioned features:

- a machine with HDEEM, or the x86_adapt library for accessing RAPL counters
- the cpufreq or x86_adapt library to change CPU frequencies
- PAPI and perf_event for accessing hardware counters

ARM Jetson TX1

The MERIC library was originally developed to support resource consumption measurement and DVFS on Intel Haswell processors [9]; however, it has been extended to also support the Jetson TX1 ARM system [12] located at the Barcelona Supercomputing Center [14] (ARM Cortex-A57, 4 cores, 1.3 GHz), which supports energy measurements.

ARM systems are an interesting platform because they allow much lower frequencies to be set [7], saving energy accordingly. Although the CPU uncore frequency cannot be set on this system, the frequency of the RAM can be changed instead. The minimum and maximum CPU core frequencies are 0.5 GHz and 1.3 GHz, and the minimum and maximum RAM frequencies are 40 MHz and 1.6 GHz, respectively. Changing frequencies on the Jetson requires no third-party libraries.

To gather power data, the board features a Texas Instruments INA3221 chip [4]. It measures the per-node energy consumption and stores the sample values in a file. It is possible to gather hundreds of samples per second; however, the measurement affects the CPU. Table 2 shows the impact of the sampling frequency on the CPU workload, evaluated using htop (Note 4).

3.2 Workflow

First, the user analyzes the application with a profiler (such as Allinea MAP) to find the significant regions, i.e., those covering the functions that consume the most time, MPI communication, and I/O, and then wraps the selected sections of the code with MERIC instrumentation. A region start function takes the name of the region as a parameter, but the stop function has no input parameters, because it ends the most recently started region (last in, first out).
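Why the stop function needs no argument can be shown with a small stack-based profiler: open regions are pushed on start and popped on stop, so nested regions close in last-in, first-out order. The `RegionProfiler` class is a hypothetical illustration that only measures wall-clock time; it is not MERIC's interface, which additionally measures energy and applies settings at region boundaries.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <map>
#include <stack>
#include <string>
#include <utility>

// Hypothetical probe API (not MERIC's interface): open regions are kept on a
// stack, so stop() always closes the most recently started region.
class RegionProfiler {
public:
    void start(const std::string& name) { open_.push({name, Clock::now()}); }

    void stop() {
        assert(!open_.empty() && "stop() called without a matching start()");
        const auto top = open_.top();  // most recently started region
        open_.pop();
        const std::chrono::duration<double> elapsed = Clock::now() - top.second;
        seconds_[top.first] += elapsed.count();
        ++calls_[top.first];
    }

    int calls(const std::string& name) const {
        const auto it = calls_.find(name);
        return it == calls_.end() ? 0 : it->second;
    }

    double seconds(const std::string& name) const {
        const auto it = seconds_.find(name);
        return it == seconds_.end() ? 0.0 : it->second;
    }

    std::size_t open_regions() const { return open_.size(); }

private:
    using Clock = std::chrono::steady_clock;
    std::stack<std::pair<std::string, Clock::time_point>> open_;
    std::map<std::string, double> seconds_;  // accumulated runtime per region
    std::map<std::string, int> calls_;       // number of completed calls per region
};
```

A call sequence such as `start("main")`, `start("assemble")`, `stop()`, `stop()` closes "assemble" first and "main" last, without the caller ever naming the region to stop.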

The instrumented application is run as usual. MERIC behavior is controlled either by exporting the appropriate environment variables or by a MERIC configuration file. Whereas environment variables apply to the whole application run, the configuration file also allows the user to control the behavior for separate regions, compute nodes, or their sockets. The user can define hardware and runtime settings (CPU frequencies and number of threads), select the energy measurement mode, choose which hardware counters to read, and more.
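How region-level settings can refine application-wide ones is sketched below: each field left unspecified for a region falls back to the application default. The `Settings` structure, the field-by-field precedence rule and the function name are our assumptions; the sketch does not reproduce MERIC's configuration-file syntax, and node- and socket-level entries are omitted for brevity.

```cpp
#include <cassert>
#include <map>
#include <optional>
#include <string>

// Tuning settings; an empty field means "not specified at this level".
struct Settings {
    std::optional<double> core_ghz;    // CPU core frequency
    std::optional<double> uncore_ghz;  // uncore frequency
    std::optional<int> omp_threads;    // number of OpenMP threads
};

// Hypothetical precedence rule: fields specified for a region override the
// application-wide defaults; unspecified fields keep the default value.
Settings resolve(const Settings& app_default,
                 const std::map<std::string, Settings>& per_region,
                 const std::string& region) {
    Settings out = app_default;
    const auto it = per_region.find(region);
    if (it == per_region.end()) return out;  // no region-specific entry
    const Settings& r = it->second;
    if (r.core_ghz) out.core_ghz = r.core_ghz;
    if (r.uncore_ghz) out.uncore_ghz = r.uncore_ghz;
    if (r.omp_threads) out.omp_threads = r.omp_threads;
    return out;
}
```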

4 RADAR: Measurement Data Analysis

RADAR presents a brief summary of the measurement results obtained with MERIC. It merges the automatically generated dynamism reports of the RADAR generator (by IT4Innovations), described in detail in Sect. 4.1, and of readex-dyn-detect (by the Technical University of Munich), described in [19]. The report depicts diagrams of energy consumption with respect to a set of tuning parameters. It also contains graphical comparisons of significant static and dynamic energy savings across phases for different hardware tuning parameter configurations. For each perspective, the measured dynamism metrics are presented for the default configuration of the tuning parameters.

4.1 The RADAR Generator

The RADAR generator (Note 5) automatically evaluates the data measured by the MERIC tool and produces an uncluttered summary of the results as a report file. Moreover, it can include the report generated by the readex-dyn-detect tool, as mentioned above.

The report itself contains information about both static and dynamic savings, represented not only by tables but also by plots and heat maps. Examples can be seen in Fig. 3 and Table 3.

The generator evaluates all chosen quantities at once, so users do not have to generate separate reports for energy consumption, computational intensity, and execution time; all of them can be contained in one report. This allows direct visual comparison of all optimal settings, so users can quickly gain a better understanding of the application behavior. The change in execution time for the energy-optimal settings is also included in the report, as can be seen in Table 4.
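The core of such an evaluation, finding the energy-optimal configuration of one region together with its runtime change relative to the default configuration, can be sketched as follows. The data structures and the function name are hypothetical, and the sketch is not the RADAR generator's implementation.

```cpp
#include <cassert>
#include <map>
#include <tuple>

// One tuning configuration (hypothetical): CPU core and uncore frequency in
// GHz and the number of OpenMP threads. operator< lets it serve as a map key.
struct Config {
    double core_ghz;
    double uncore_ghz;
    int threads;
    bool operator<(const Config& o) const {
        return std::tie(core_ghz, uncore_ghz, threads) <
               std::tie(o.core_ghz, o.uncore_ghz, o.threads);
    }
};

struct Measurement {
    double joules;
    double seconds;
};

struct Optimum {
    Config best;           // energy-optimal configuration
    double energy_saving;  // relative to the default, e.g. 0.1 = 10 % less energy
    double time_change;    // relative runtime change; positive = slower
};

// Selects the energy-optimal configuration of one region and reports the
// energy saving and runtime change relative to the default configuration.
Optimum energy_optimum(const std::map<Config, Measurement>& runs, const Config& def) {
    assert(runs.count(def) == 1);  // the default configuration must be measured
    auto best = runs.begin();
    for (auto it = runs.begin(); it != runs.end(); ++it) {
        if (it->second.joules < best->second.joules) best = it;
    }
    const Measurement& d = runs.at(def);
    return {best->first, 1.0 - best->second.joules / d.joules,
            best->second.seconds / d.seconds - 1.0};
}
```

A positive `time_change` means that the energy-optimal setting makes the region slower, which is the trade-off reported alongside the energy savings.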

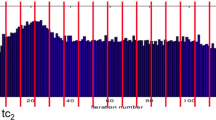

This evaluation is performed not only for the main region (usually the whole application), but also for its nested regions. Users can also specify an iterative region, which contains all the nested ones and is called directly in the main region. In this way, certain iterative schemes (e.g., iterative solvers of linear systems) can be analyzed in detail, because every iteration (or phase) is evaluated separately.

With this feature, users obtain the best static optimum for the main region (which serves as the best starting setting), the optimal settings of the nested regions in an average phase, and the above-mentioned optimal settings of the nested regions in every individual phase. To process multiple regions as one, they can be grouped under one role, as shown in Fig. 2, where Projector_l and Projector_l_2 are different regions comprising the region Projector. If multiple runs of the program are measured, both the average run and the separate runs are evaluated.
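Grouping regions under a role can be sketched as an aggregation step, assuming that the measured quantity of a role is the sum over its member regions; this is an assumption for the illustration, and the generator may combine values differently. The function name is hypothetical; the region names follow the Projector example above.

```cpp
#include <cassert>
#include <map>
#include <string>

// Aggregates per-region energies into roles, e.g. "Projector_l" and
// "Projector_l_2" into "Projector". Regions without a role keep their own name.
std::map<std::string, double> group_by_role(
        const std::map<std::string, double>& region_joules,
        const std::map<std::string, std::string>& role_of) {
    std::map<std::string, double> by_role;
    for (const auto& entry : region_joules) {
        assert(entry.second >= 0.0);  // measured energies are non-negative
        const auto it = role_of.find(entry.first);
        const std::string& role = (it != role_of.end()) ? it->second : entry.first;
        by_role[role] += entry.second;
    }
    return by_role;
}
```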

For some programs such a report could be impractically long and so the generator offers the possibility to create a shorter version containing only the overall summary and the average phase evaluation.

The generator also supports evaluation in multiples of the original unit used in the measurement. Both the static and dynamic baseline for the energy consumption, i.e. the constant baseline and the baseline dependent on settings, are supported too.

Finally, the optimal settings for all regions and every measured quantity can be exported into separate files, which can be used as input for the MERIC tool, as described in Sect. 3.2.

All the above-mentioned settings are specified in an external configuration file, which is passed to the generator via a flag, so users can easily change several settings for their reports.

5 Test Case

The ESPRESO library (Note 6) was selected to demonstrate the usage of MERIC and the RADAR generator. The library combines Finite Element (FEM) and Boundary Element (BEM) tools with TFETI/HTFETI [5, 15] domain decomposition solvers. The ESPRESO solver is a parallel linear solver that includes a highly efficient MPI communication layer designed for massively parallel machines with thousands of compute nodes. Parallelization inside a node is done using OpenMP. Inside the application, we identified several regions of the code that may have different optimal configurations (see Fig. 4).

The following test was performed on the IT4Innovations Salomon cluster, whose nodes are powered by two Intel Xeon E5-2680v3 (Haswell-EP) processors; energy consumption was measured with RAPL counters and a 70 W baseline. Each processor has 12 cores and allows CPU core and uncore frequency scaling within the ranges of 1.2–2.5 GHz and 1.2–3.0 GHz, respectively. We evaluated ESPRESO on a heat transfer problem with 2.7 million unknowns, using one MPI process per socket.

Table 5 shows the possible savings achieved by using different numbers of OpenMP threads during the runtime and by switching CPU core and uncore frequencies. It shows that 4% of the overall energy can be saved just by statically setting the CPU core and uncore frequencies, which can be applied even without instrumenting the application at all. Table 6 shows the impact of different CPU frequencies on the energy consumption in this test case.

Another 7.46% of energy can be saved through dynamic switching of the tuned parameters to apply the best configuration for each significant region. Overall energy savings in this test case were 11.16%. Table 7 in the appendix of this paper contains the regions’ best settings.
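The three reported figures are consistent if the savings compound rather than add (4% + 7.46% would give 11.46%). Assuming that the dynamic saving is expressed relative to the energy of the statically tuned run, which is our interpretation rather than a statement of the text, the overall saving is

\[
S_{\mathrm{overall}} = 1 - (1 - S_{\mathrm{static}})(1 - S_{\mathrm{dynamic}}) = 1 - (1 - 0.04)(1 - 0.0746) = 1 - 0.888384 \approx 11.16\,\%.
\]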

6 Conclusion

The paper presented two tools that allow easy analysis of the behavior of HPC applications, with the goal of tuning hardware and runtime parameters to minimize a given objective (e.g., energy consumption or runtime).

Resource consumption measurement and dynamic parameter changes are provided by the MERIC library. The currently supported parameters that can be switched dynamically include the CPU core and uncore frequencies, as well as the number of active OpenMP threads.

The RADAR generator analyses the MERIC measurement outputs and provides detailed reports describing the behavior of the instrumented regions. These reports also state the settings that should be applied to each region to reach maximum savings. The RADAR generator also produces MERIC configuration files, which should be used for production runs of the user application to apply the best settings dynamically during the runtime.

The savings achievable with MERIC and the RADAR generator are presented in [21], where we show energy savings of 10–30%.

Notes

- 1.

Uncore frequency refers to frequency of subsystems in the physical processor package that are shared by multiple processor cores, e.g., L3 cache and on-chip ring interconnect.

- 2.

MERIC repository: https://code.it4i.cz/vys0053/meric.

- 3.

The Intel Haswell processors do not support floating-point instruction counters. MERIC approximates FLOPs/s based on the counter of Advanced Vector Extensions (AVX) calculation operations. For more information, see https://github.com/RRZE-HPC/likwid/wiki/FlopsHaswell.

- 4.

htop repository: https://github.com/hishamhm/htop.

- 5.

RADAR generator repository: https://code.it4i.cz/bes0030/readex-radar.

- 6.

ESPRESO library website: http://espreso.it4i.cz/.

References

[1] Allinea MAP - C/C++ profiler and Fortran profiler for high performance Linux code. https://www.allinea.com/products/map

[2] High definition energy efficiency monitoring. http://www.ena-hpc.org/2014/pdf/bull.pdf

[3] Brodowski, D.: Linux CPUFreq. https://www.kernel.org/doc/Documentation/cpu-freq/index.txt

[4] BSC: Power monitoring on mini-clusters. https://wiki.hca.bsc.es/dokuwiki/wiki:prototype:power_monitor#jetson-tx1

[5] Dostal, Z., Horak, D., Kucera, R.: Total FETI-an easier implementable variant of the FETI method for numerical solution of elliptic PDE. Commun. Numer. Methods Eng. 22(12), 1155–1162 (2006). https://doi.org/10.1002/cnm.881

[6] Eastep, J., et al.: Global extensible open power manager: a vehicle for HPC community collaboration on co-designed energy management solutions. In: Kunkel, J.M., Yokota, R., Balaji, P., Keyes, D. (eds.) ISC 2017. LNCS, vol. 10266, pp. 394–412. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-58667-0_21

[7] eLinux.org: Jetson/TX1 controlling performance. http://elinux.org/Jetson/TX1_Controlling_Performance

[8] Hackenberg, D., Ilsche, T., Schuchart, J., Schöne, R., Nagel, W., Simon, M., Georgiou, Y.: HDEEM: high definition energy efficiency monitoring. In: Energy Efficient Supercomputing Workshop (E2SC), November 2014

[9] Hackenberg, D., Schöne, R., Ilsche, T., Molka, D., Schuchart, J., Geyer, R.: An energy efficiency feature survey of the Intel Haswell processor. In: 2015 IEEE International Parallel and Distributed Processing Symposium Workshop (IPDPSW), May 2015

[10] Hähnel, M., Döbel, B., Völp, M., Härtig, H.: Measuring energy consumption for short code paths using rapl. SIGMETRICS Perform. Eval. Rev. 40(3), 13–17 (2012). http://doi.acm.org/10.1145/2425248.2425252

[11] Haidar, A., Jagode, H., Vaccaro, P., YarKhan, A., Tomov, S., Dongarra, J.: Investigating power capping toward energy-efficient scientific applications. Concurr. Comput.: Pract. Exp. e4485. https://onlinelibrary.wiley.com/doi/abs/10.1002/cpe.4485

[12] NVIDIA: NVIDIA Jetson. http://www.nvidia.com/object/embedded-systems-dev-kits-modules.html

[13] Oleynik, Y., Gerndt, M., Schuchart, J., Kjeldsberg, P.G., Nagel, W.E.: Run-time exploitation of application dynamism for energy-efficient exascale computing (READEX). In: Plessl, C., El Baz, D., Cong, G., Cardoso, J.M.P., Veiga, L., Rauber, T. (eds.) 2015 IEEE 18th International Conference on Computational Science and Engineering (CSE), pp. 347–350. IEEE, Piscataway, October 2015

[14] Rajovic, N., Rico, A., Mantovani, F., Ruiz, D., Vilarrubi, J.O., Gomez, C., Backes, L., Nieto, D., Servat, H., Martorell, X., Labarta, J., Ayguade, E., Adeniyi-Jones, C., Derradji, S., Gloaguen, H., Lanucara, P., Sanna, N., Mehaut, J.F., Pouget, K., Videau, B., Boyer, E., Allalen, M., Auweter, A., Brayford, D., Tafani, D., Weinberg, V., Brömmel, D., Halver, R., Meinke, J.H., Beivide, R., Benito, M., Vallejo, E., Valero, M., Ramirez, A.: The mont-blanc prototype: an alternative approach for HPC systems. In: Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, SC 2016, pp. 38:1–38:12. IEEE Press, Piscataway (2016). http://dl.acm.org/citation.cfm?id=3014904.3014955

[15] Riha, L., Brzobohaty, T., Markopoulos, A., Jarosova, M., Kozubek, T., Horak, D., Hapla, V.: Implementation of the efficient communication layer for the highly parallel total feti and hybrid total feti solvers. Parallel Comput. 57, 154–166 (2016)

[16] Rountree, B., Lowenthal, D.K., de Supinski, B.R., Schulz, M., Freeh, V.W., Bletsch, T.K.: Adagio: making DVS practical for complex HPC applications. In: ICS (2009)

[17] Schoene, R.: x86_adapt. https://doc.zih.tu-dresden.de/hpc-wiki/bin/view/Compendium/X86Adapt

[18] Schuchart, J., Gerndt, M., Kjeldsberg, P.G., Lysaght, M., Horák, D., Říha, L., Gocht, A., Sourouri, M., Kumaraswamy, M., Chowdhury, A., Jahre, M., Diethelm, K., Bouizi, O., Mian, U.S., Kružík, J., Sojka, R., Beseda, M., Kannan, V., Bendifallah, Z., Hackenberg, D., Nagel, W.E.: The READEX formalism for automatic tuning for energy efficiency. Computing 1–19 (2017). https://doi.org/10.1007/s00607-016-0532-7

[19] Venkatesh, K., Lubomir, R., Michael, G., Anamika, C., Ondrej, V., Martin, B., David, H., Radim, S., Jakub, K., Michael, L.: Prace whitepaper: investigating and exploiting application dynamism for energy-efficient exascale computing (2017). www.prace-ri.eu

[20] VI-HPS: Score-p user manual 3.1 (2017)

[21] Vysocky, O., Beseda, M., Riha, L., Zapletal, J., Nikl, V., Lysaght, M., Kannan, V.: Evaluation of the HPC applications dynamic behavior in terms of energy consumption. In: Proceedings of the Fifth International Conference on Parallel, Distributed, Grid and Cloud Computing for Engineering. Civil-Comp Press, Stirlingshire, Paper 3 (2017)

[22] Williams, S., Waterman, A., Patterson, D.: Roofline: an insightful visual performance model for multicore architectures. Commun. ACM 52(4), 65–76 (2009). https://doi.org/10.1145/1498765.1498785

Acknowledgement

This work was supported by The Ministry of Education, Youth and Sports from the National Programme of Sustainability (NPU II) project “IT4Innovations excellence in science - LQ1602” and by the IT4Innovations infrastructure which is supported from the Large Infrastructures for Research, Experimental Development and Innovations project “IT4Innovations National Supercomputing Center – LM2015070”.

The research leading to these results has received funding from the European Union’s Horizon 2020 Programme under grant agreement number 671657.

The work was additionally supported by VŠB – Technical University of Ostrava under the grant SP2017/165 and by the Barcelona Supercomputing Center under the grants 288777, 610402 and 671697.

Appendix

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Vysocky, O., Beseda, M., Říha, L., Zapletal, J., Lysaght, M., Kannan, V. (2018). MERIC and RADAR Generator: Tools for Energy Evaluation and Runtime Tuning of HPC Applications. In: Kozubek, T., et al. High Performance Computing in Science and Engineering. HPCSE 2017. Lecture Notes in Computer Science(), vol 11087. Springer, Cham. https://doi.org/10.1007/978-3-319-97136-0_11

DOI: https://doi.org/10.1007/978-3-319-97136-0_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-97135-3

Online ISBN: 978-3-319-97136-0

eBook Packages: Computer Science (R0)