Abstract

Maintenance and restoration of human well-being is healthcare’s central purpose. However, medical personnel’s everyday work has become more and more characterized by administrative tasks, such as writing medical reports or documenting a patient’s treatment. Particularly in the healthcare sector, these tasks usually entail working with different software systems on mostly traditional desktop computers. Using such machines to collect data during doctor-patient encounters presents a great challenge. The doctor wants to gather patient data as quickly and as completely as possible. On the other hand, the patient wants the doctor to empathize with him or her. Capturing data with a keyboard, using a traditional desktop computer, is cumbersome. Furthermore, this setting can create a barrier between doctor and patient. Our aim is to ease data entry in doctor-patient encounters. In this chapter, we present a software tool, Tele-Board MED, that allows recording data with the help of handwritten and spoken notes that are transformed automatically to a textual format via handwriting and speech recognition. Our software is a lightweight web application that runs in a web browser. It can be used on a multitude of hardware, especially mobile devices such as tablet computers or smartphones. In an initial user test, the digital techniques were rated as more suitable than a traditional pen and paper approach that entails follow-up content digitization.


Keywords

- Doctor-patient Encounter

- Traditional Desktop Computers

- Initial User Testing

- Tablet Computers

- Medical Documentation System


1 Introduction

Doctor-patient encounters are like many other social situations in that they require communication. Yet, unlike many situations of informal exchange, healthcare encounters necessitate a special, highly constrained type of communication. Doctors must obtain selective information that is relevant to illness or health (as comprehensively, truthfully and quickly as possible), while patients need to be informed about their health situation. In addition to such fact-oriented objectives, and just as important, there is the emotive side of the exchange. Here, doctors may wish to convey trustworthiness or concern, while patients may need to feel soothed and understood or to experience empowerment. And as though all this were not enough, there is also the requirement of medical documentation. Whatever serviceable information doctor and patient acquire in the course of their exchange, there remains an extra step of “communicating” it to a medical documentation system.

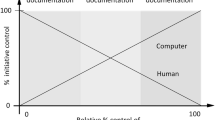

To an increasing extent, medical documentation systems are realized digitally. This is efficient given that medical discharge letters and other official documents must be provided in a machine written format. Furthermore, at least in Germany, patients have a right to access their medical records in an electronic format (Bundesgesetz 2013). Creating and using medical records therefore becomes a matter of human-computer collaboration.

A great deal is already known about patient-doctor communication, collaboration and alliance—especially since these factors are considered key determinants of treatment success (Castonguay et al. 2006). However, related questions that include digital media have received much less attention until now. How do doctor and patient communicate—how do they collaborate—with the medical documentation system? Taking the first steps towards an answer, we can begin with the observation that, at least at present, it is solely doctors who engage with the medical documentation system. Furthermore, the process is often cumbersome. Doctors still regularly take handwritten notes during the treatment session so as not to forget what they later want to convey in the digital documentation system. Since the system will not accept handwritten notes, everything needs to be retyped on a computer keyboard later on.

To develop medical documentation systems that suit user needs more than present-day solutions do, design thinking appears to be a highly serviceable approach.

For digital media, such as medical documentation systems, design thinking also introduces a useful frame of analysis developed by Byron Reeves and Clifford Nass (1996), called the media equation. Their basic idea is that people react to digital media (such as computers or internet platforms) as though they were real people or real places. This idea can first be applied in design analysis to pose the question: What if a human did what the digital media does?

In reality, however, if a human behaved like the typical medical documentation system in a practice, the conduct would seem presumptuous. Let’s imagine such a scenario with a doctor (D), patient (P) and the medical record stored on a digital documentation system (M). Let us imagine the doctor and patient as they are collecting important information orally.

- D: This information is important, M, we need to archive it.

- M: I don’t accept oral information. You must type it on my keyboard.

- D: But that would disturb the treatment flow!? Okay, I’ll take handwritten notes for now. After the session I shall retype everything on your keyboard.

- P: In the meantime, M, could you check when I had the last vaccination?

- M: I don’t communicate with you, only with the doctor.

- P: But you are my medical record, aren’t you?

- M: I don’t communicate with you.

Such frames of analysis help to elucidate user needs and fuel ideation when developing future tools. We have used them in the Tele-Board MED project to rethink medical documentation.

In the past, we focused on the role of a medical documentation system during the treatment session, where it could play a more supportive role throughout. It can display information to patient and doctor, helping them keep track of the information that is already recorded as well as gaps that still need to be closed (von Thienen et al. 2016; Perlich and Meinel 2016).

We have also focused on the information output after treatment sessions, where the medical documentation system could be highly supportive again. It can create printouts for patients to take home, thus helping them remember the content of a treatment session. Furthermore, a medical documentation system can save clinicians a lot of time by creating medical discharge letters or other official clinical documents automatically based on session notes (Perlich and Meinel, in press).

One aspect that we had not looked at closely up to now is the information input. Here, present documentation systems play a highly constricting role, requiring doctors to type on a keyboard and cutting the patient off from all interactions. Therefore, we now want to place the question on center stage:

1.1 How Might We Rethink Data Entry Solutions in Healthcare Encounters?

Subsequently, we will first review related works in the field of data entry approaches, especially those used in medical contexts (Sect. 2). We will then introduce the Tele-Board MED system (Sect. 3) and suggest MED-Pad as a novel data entry solution (Sect. 4). We will explain the implementation of MED-Pad (Sect. 5) and share results from an initial user feedback study (Sect. 6), before we conclude with visions for medical documentation in the—hopefully—near future (Sect. 7).

2 Related Work on Data Entry in Medical Encounters

Notes taken during doctor-patient encounters are an important source of data feeding into medical records. The charts created during a doctor’s visit include items that are relevant to patient care, e.g. present complaints, history of the present health problem, progress notes, physical examinations, diagnosis and assessment, treatment plan, and medication prescriptions. In recent decades, electronic medical records have been widely applied in patient care (Hayrinen et al. 2008). There are different approaches to handling digital documentation during doctor-patient conversations. Data entry can be carried out during the visit or afterwards, and it can be done by the care provider or by dictation to clinical assistants. Shachak and Reis (2009) conducted a literature review on the impact of electronic medical records on patient-doctor communication during consultation. They conclude that the use of electronic records can have both positive and negative impacts on the doctor-patient relationship, depending on the doctor’s behavior. Positive impacts are associated with the so-called interpersonal style, which is characterized by using the computer to share and review information together with the patient. Further potential for improving patient encounters lies in replacing keyboard typing with voice recognition, handwriting recognition and touch screens for entering data (Weber 2003). As early as 1993, Lussier et al. developed computerized patient record software for physicians with handwriting recognition on a portable pen-based computer. However, we assume that this tool was not designed to be used during the conversation with the patient. More recent research proposes a speech recognition system that automatically transcribes doctor-patient conversations (Chiu et al. 2017).

In this chapter, we investigate the use of computerized patient records in medical encounters by using a digital whiteboard and a tablet computer with handwriting and voice recognition. The described setup is a prototype. A real-life implementation would, however, involve additional considerations regarding legal data security regulations and the clinic’s existing information technology infrastructure.

3 Tele-Board MED System Overview

Tele-Board MED (TBM) is our medical documentation system. It currently supports capturing information during treatment sessions as well as producing information output afterwards. Because advanced data entry mechanisms are meant to strengthen this supportive character, we first give a short overview of the system and how it can help in doctor-patient communication.

Tele-Board MED serves as the foundation for this communication by providing a shared digital workspace for doctor and patient. Notes taken by the doctor during consultations can be seen by both doctor and patient at the same time. The setup of such a consultation is depicted in Fig. 1. TBM’s virtual whiteboard surface is shown on a large display or projector. The doctor and patient stand or sit in front of this device and are thus able to edit and re-arrange any of the content on the screen easily.

Tele-Board MED is a web browser-based software system to support doctor-patient collaboration in medical encounters. Its main component is a digital whiteboard web application where doctor and patient can take notes, make scribbles and draw images jointly. The application can be run across different platforms on a wide variety of hardware devices as shown in Fig. 2. The system can be used on traditional desktop computers, electronic whiteboards as well as on mobile devices, such as tablet computers or smartphones. An installation of our software is not required. It is used within a web browser, which is a common application on almost any platform.

Digital whiteboard artifacts, such as sticky notes, scribbles and images, created during a treatment session are stored automatically on a central server. An explicit user initiated saving is not required. The stored digital content data can be exported into different formats (e.g. images, text documents or hardcopy printouts). Tele-Board MED can thus help to facilitate medical documentation: the relevant data is already digitally recorded and can be transformed into a document format appropriate for the desired documentation purpose, for example providing the patient with a copy of treatment session notes or creating official clinical documents.

Capturing data digitally right from the beginning of a doctor-patient treatment session is an important first step for later documentation. Duplication of work can be avoided, as the data need only be acquired once. In contrast, taking analog notes first would require re-typing them into a computer in order to create the official document (e.g. a medical report). However, for digital text documents, both methods require the data to be in a “machine readable” text format (e.g. ASCII or UTF-8). The prevalent way of entering such text, even with Tele-Board MED, is via a computer keyboard. Especially during treatment sessions, this is cumbersome and disruptive for doctor-patient communication.

From our perspective, it is necessary to take a step back and focus not only on existing digital data but also on how this data is captured. In particular, we want to explore methods of capturing text that do not force users to adapt to computers, as they must when using a keyboard. This means developing content-capturing technologies adapted to users’ natural way of working.

4 Proposed Content-Capturing Approach in Tele-Board MED

Writing something down in order to preserve existing knowledge is a fundamental human cultural paradigm. The most common way for people to do this, and one of the first things learned in school, is to put information in a handwritten form using pen and paper. Usually, this is the easiest and fastest way to take notes. The traditional method of note-keeping is an important aspect when it comes to taking notes during doctor-patient treatment sessions.

Tele-Board MED’s whiteboard application already makes it possible to scribble on the board with a pen, finger or mouse. Digital notes can therefore be created by hand. However, these digital notes are represented as vector data and not as a character encoded text format. Additionally, the whiteboard as the only input field lacks a concept that correlates to writing on a single sheet of paper.

Our goal was to enable doctors and patients to take handwritten notes digitally in a way that conveys the impression of writing on a sheet of paper. At the same time, the handwriting should be available in a text format. Our proposed solution is MED-Pad, a web browser-based digital notepad application for handwritten notes (see Fig. 3), similar to the Sticky Note Pad concept described by Gumienny et al. (2011) and Gericke et al. (2012a).

MED-Pad is intended for (but not limited to) use on mobile devices such as tablet computers or smartphones. Users can draw on a full-screen surface with a stylus or finger. At the press of a button, the hand-drawn scribbles are converted into their corresponding textual representation with the help of a handwriting recognition system. The recognition process has to be triggered manually. Recognizing text while writing could distract users, or could even be annoying when they draw visuals that are not intended to be recognized. However, how and when recognition is carried out is something we are still elaborating.

While focusing mainly on capturing handwritten information, we also look at new ways of content capturing. Storing spoken words as text is a technique we want to apply and investigate. For this purpose, we have also integrated speech recognition functionality in our MED-Pad application. By pressing a button, users can employ the hardware device’s built in microphone to get their spoken words translated into text. This is depicted in Fig. 4. The recording has to be manually started and ended. It is intended for short notes and not to be used as a voice recorder over a longer time. Therefore, users have to think about the actual note they want to capture before starting the recognition.

MED-Pad’s primary content capturing focus is the collection of textual data. Especially with regard to medical documentation, textual data is the prevalent format. This textual focus is reflected by the content capturing mechanisms described above. However, there might be situations in doctor-patient encounters when textual content capturing is not sufficient, too cumbersome or even impossible (e.g. visualizing a physical object). In such a case, a picture is worth a thousand words. Thus, MED-Pad enables users to capture images or take photos right from within the application as shown in Fig. 5.

Up to this point, all captured information is stored locally within the MED-Pad application. In order to store the captured data in our remote Tele-Board MED system, the user has to send the data to our server, which in turn sends it to the corresponding whiteboard application. The user can see the textual information as a sticky note within Tele-Board MED’s whiteboard application (see Fig. 6). The user perceives this process as the sticky note “arriving” on the virtual whiteboard.

In order to integrate MED-Pad into our existing Tele-Board MED system landscape, we continue to follow our hard- and software agnostic approach. Apart from a modern web browser, MED-Pad requires neither a special operating system nor the installation of any (third party) software or proprietary web browser plugins. However, this kind of user-focused out-of-the-box usability involves some technical challenges when implementing MED-Pad.

With MED-Pad, our main goal is to allow a more natural content capturing mechanism while preserving the resulting data in a character encoded format. Handwriting recognition (HWR) is a complex task. Compared to the printed text handled by Optical Character Recognition (OCR), human handwriting shows a far greater number of styles and variations. In order to be practically useful, we aim to use an existing handwriting recognition system. We therefore had to examine existing HWR and speech recognition systems in order to integrate such a system into MED-Pad.

When looking at the development of mobile applications, there is usually the choice between native (platform specific) apps and web apps. Both come with their respective pros and cons. Native apps work with the device’s built-in features. These apps tend to be easier to work with and also perform faster on the device. In contrast, web apps are often easier to maintain, as they share a common code base across all platforms. Additionally, a web app does not need to be installed and is always up to date, since everyone accesses the same web app via the same URL and thus sees the most recent version of the system. However, the advantage of a web browser-based multi-platform application usable on any device comes at the price of being limited to the resources and Application Programming Interfaces (APIs) that the web browser provides.

When we implemented MED-Pad, we decided on a web app-based solution. We kept following Tele-Board MED’s cross-platform approach and its advantages. APIs offered by web browsers for accessing the features of a mobile device were sufficient for our first prototype implementation. This is described in more detail in the following section.

5 MED-Pad Implementation

The concepts regarding MED-Pad and its recognition features described so far have been implemented as a web application. For this, we had to develop different components and integrate them into the Tele-Board MED system architecture.

5.1 MED-Pad Components in the Tele-Board MED System Architecture

MED-Pad consists of client-side and server-side components, as shown in Fig. 6. The web application runs inside the user’s web browser and allows note taking by handwriting, speech, keyboard input and images. It can be requested just like any other URL from the Tele-Board MED website. The application assets, such as markup, styles and scripts, are delivered by the TBM web server.

The web server also provides REST endpoints for sending MED-Pad content data and for using the handwriting recognition service. This service is offered by a dedicated HWR server in the TBM network. When a user clicks the HWR button in the MED-Pad application, a corresponding request is sent to the TBM web server, which in turn forwards it to the HWR server. Once the recognition has finished, the result is sent back to the TBM web server and then back to the MED-Pad application (see Fig. 6).
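The request flow described above can be sketched from the client's point of view. This is a minimal sketch under stated assumptions: the endpoint path `/api/hwr`, the function name `requestRecognition` and the response shape `{ text }` are hypothetical and not taken from the TBM implementation. Only the general pattern (a JSON request to a REST endpoint of the TBM web server, which returns the recognized text) follows the description.

```javascript
// Hypothetical endpoint path; the real TBM REST routes are not given in the text.
const HWR_ENDPOINT = "/api/hwr";

// Send handwriting strokes and the recognition language to the web server
// and resolve with the recognized text.
// fetchFn defaults to the global fetch and can be replaced for testing.
async function requestRecognition(strokes, language, fetchFn = fetch) {
  const response = await fetchFn(HWR_ENDPOINT, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ language, strokes }),
  });
  if (!response.ok) {
    throw new Error(`recognition failed with HTTP status ${response.status}`);
  }
  const result = await response.json(); // assumed response shape: { text: "..." }
  return result.text;
}
```

Passing the fetch function as a parameter keeps the sketch independent of the browser, which makes it easy to try out with a stubbed server response.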

Speech recognition is also handled by a server-side component. Here we rely on an external service outside of TBM’s controlled scope. This follows from the native SpeechRecognition API provided by the Google Chrome browser, which we use in the MED-Pad web application.

Access to server side MED-Pad REST endpoints is controlled by the TBM web server. Users must provide respective credentials in the MED-Pad application in order to send or receive any further content data apart from the application assets.

5.2 MED-Pad Content Capturing Web Application: A Progressive Web App

The MED-Pad web application is implemented as a Single Page Application (SPA) using plain JavaScript, HTML and CSS. Once loaded, the web page runs entirely in the user’s client-side web browser. All necessary assets are retrieved with a single page load. Additionally, our application is designed as a Progressive Web App (PWA), a term introduced by Google to denote web applications that rely on a certain architecture and certain web technologies (e.g. Service Worker). A PWA’s main characteristics include a native app-like look and feel, offline capabilities, and a secure origin served over an encrypted communication channel (Russell 2015, 2016).

MED-Pad’s application code is cached in the user’s web browser, making it available even if there is no internet connection. Furthermore, it is installable. For instance, on mobile devices running Chrome for Android, an app icon is added to the user’s home screen. Afterwards, the app is started like a native app in full screen mode, as shown in Fig. 8. The user’s last actions (e.g. drawing scribbles in the app or typing a text) are preserved automatically when the app or the web browser is closed. This way, MED-Pad can be used almost like a native mobile app.
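Offline availability of this kind is typically achieved with a Service Worker that caches the application assets. The following is a minimal sketch of such a worker script, not the actual MED-Pad code: the cache name, the asset paths and the helper `cacheFirst` are assumptions chosen for illustration.

```javascript
// Hypothetical cache name and asset list; the real MED-Pad assets are not given.
const CACHE_NAME = "med-pad-v1";
const ASSETS = ["/", "/index.html", "/app.css", "/app.js"];

// Cache-first lookup: answer from the cache if possible, else go to the network.
async function cacheFirst(request, cacheStorage, fetchFn) {
  const cached = await cacheStorage.match(request);
  return cached || fetchFn(request);
}

// Register the handlers only when this script runs as a service worker.
if (typeof ServiceWorkerGlobalScope !== "undefined" &&
    self instanceof ServiceWorkerGlobalScope) {
  // On installation, download and cache all application assets.
  self.addEventListener("install", (event) => {
    event.waitUntil(
      caches.open(CACHE_NAME).then((cache) => cache.addAll(ASSETS))
    );
  });
  // Serve later requests from the cache so the app also starts offline.
  self.addEventListener("fetch", (event) => {
    event.respondWith(cacheFirst(event.request, caches, fetch));
  });
}
```

A cache-first strategy suits static application assets; a real deployment would also need a way to invalidate the cache when a new version of the app is released.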

The main interaction part of MED-Pad is its drawing surface. Technically, this is an HTML canvas element on which the user can draw using a pen, a finger or a mouse. It is crucial that writing on the surface looks and feels as natural as possible. Rendering speed and visual appearance were therefore important criteria during development; for example, additional features such as pressure and writing speed are used for rendering.
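One way to let pressure and writing speed influence the rendering is to vary the line width per stroke segment. The sketch below illustrates the idea only: the width limits and the formula in `strokeWidth` are assumptions, not the values used in MED-Pad. The pressure value is expected in the range 0 to 1, as reported by the standard `PointerEvent.pressure` property.

```javascript
// Illustrative constants; these are assumptions, not MED-Pad's actual values.
const MIN_WIDTH = 1; // line width in px for the lightest or fastest stroke
const MAX_WIDTH = 4; // line width in px for a slow stroke with full pressure

// pressure: 0..1, speed: pointer speed in px per millisecond
function strokeWidth(pressure, speed) {
  const p = Math.min(Math.max(pressure, 0), 1); // clamp to the valid range
  const speedFactor = 1 / (1 + speed);          // faster strokes get thinner
  return MIN_WIDTH + (MAX_WIDTH - MIN_WIDTH) * p * speedFactor;
}

// Draw one segment between two recorded points on a 2D canvas context.
// Each point is expected to look like { x, y, t, pressure }.
function drawSegment(ctx, from, to) {
  const dt = Math.max(to.t - from.t, 1); // avoid division by zero
  const speed = Math.hypot(to.x - from.x, to.y - from.y) / dt;
  ctx.lineWidth = strokeWidth(to.pressure, speed);
  ctx.lineCap = "round";
  ctx.beginPath();
  ctx.moveTo(from.x, from.y);
  ctx.lineTo(to.x, to.y);
  ctx.stroke();
}
```

In a browser, `ctx` would come from `canvas.getContext("2d")`, and the points from `pointerdown` and `pointermove` events on the canvas element.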

The resulting hand-drawn content is stored internally as ordered vector data, which forms the basis for handwriting recognition. When a user has finished taking handwritten notes, the vector data is sent to the TBM web server, which passes it on to the HWR server for processing. The recognition result is then displayed in MED-Pad, allowing the user to adjust it by means of the device’s (virtual) keyboard. The character encoded text can then be sent to the TBM whiteboard application, as depicted in Fig. 6.
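To make the notion of ordered vector data more concrete, the following sketch records handwriting as a list of strokes, each of which is a list of timestamped points in writing order. The structure and all names (`createStrokeRecorder`, `begin`, `move`, `end`) are hypothetical; the actual MED-Pad data format is not specified here.

```javascript
// Hypothetical stroke recorder; the data layout is an assumption for illustration.
function createStrokeRecorder() {
  const strokes = []; // finished strokes, in the order they were written
  let current = null; // the stroke currently being drawn, if any

  return {
    // Pen or finger touches the surface: start a new stroke.
    begin(x, y, t) {
      current = [{ x, y, t }];
    },
    // Pen moves while touching the surface: append a point.
    move(x, y, t) {
      if (current) current.push({ x, y, t });
    },
    // Pen is lifted: the stroke is complete.
    end() {
      if (current) strokes.push(current);
      current = null;
    },
    getStrokes() {
      return strokes;
    },
  };
}

// Two short strokes, e.g. the two bars of a handwritten "T".
const recorder = createStrokeRecorder();
recorder.begin(10, 10, 0);
recorder.move(30, 10, 16);
recorder.end();
recorder.begin(20, 10, 250);
recorder.move(20, 40, 266);
recorder.end();

// Serialized form that could be sent to the server for recognition.
const json = JSON.stringify(recorder.getStrokes());
```

Keeping both the stroke order and the timestamps preserves the temporal information that an online recognition engine can exploit.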

5.3 Handwriting Recognition as a Service: The HWR Server

Because MED-Pad is a web application running inside a web browser, there is no client-side HWR mechanism that our application could use. Hence, we came up with a server-side solution similar to an approach we described in Gericke et al. (2012b), using the handwriting recognition engine Microsoft Ink, which is integrated in the Microsoft Windows operating system (Pittman 2007).

Handwriting recognition systems can be distinguished by their input data format. Human handwriting can be digitized either by scanning a sheet of paper (offline) or by capturing strokes directly during the writing process using a special input device (online). In general, an online system handles a continuous stream of two-dimensional points sent from the hardware in temporal order, whereas the offline approach uses a complete representation of the written content, typically in an image format (Plamondon and Srihari 2000). Online systems usually provide higher recognition rates, since they are able to take temporal as well as spatial information into account. Furthermore, the extraction of spatial data during an image pre-processing step can be omitted, since the input is already in a vector data format.

The Microsoft system we use is an online handwriting recognition system. In our approach with MED-Pad, we use Microsoft Ink with offline data. In our definition, the property “offline” means that the vector data is first stored completely on the client side and that recognition is not performed during writing. The advantage of having a temporal component for recognizing text also has a downside: it makes recognition prone to stroke order variations (Prasad and Kulkarni 2010). This happens when there is a spatio-temporal mismatch within the provided vector data, which is more likely when recognition is applied to larger areas of handwritten content (Gericke et al. 2012b). Within our proposed solution for MED-Pad, we intend to apply HWR only to smaller chunks of handwritten data, where this aspect can largely be neglected.

The part of MED-Pad that facilitates handwriting recognition (see Fig. 7) is implemented as a web service and consists of three components: (1) a server machine with a Microsoft Windows 10 operating system, (2) a web server providing the service endpoint that handles recognition requests from the TBM server, and (3) a command line application that does the actual handwriting recognition by accessing the Microsoft Ink API provided by Windows 10.

[Figure: Basic Tele-Board MED system architecture. The TBM MED-Pad and whiteboard web applications run in the client-side web browser. Handwriting recognition is done by a dedicated Windows 10 server; the respective HWR requests are forwarded to this server inside TBM’s server infrastructure. For speech recognition, a public cloud service is used.]

For network communication between different machines and platforms, a common format is needed. Since it is not possible to access the Microsoft Ink API directly from outside the Windows machine, we implemented a web server that handles recognition requests from outside the system over the common HTTP(S) protocol. This way, the web server defines the recognition access point. We implemented the web server using JavaScript on the basis of NodeJS. Request data originating from the MED-Pad web application consists of handwriting vector data in JavaScript Object Notation (JSON) format. Furthermore, the request specifies the language that should be used for HWR (as a two-letter ISO 639-1 abbreviation, e.g. “en” for English or “de” for German). This is important for the recognition engine, since a lexicon is used to provide contextual knowledge in order to improve recognition rates. Currently, our system supports two languages: German and English. The language is taken from the internationalization settings in MED-Pad, i.e. the user interface language the user has selected in the MED-Pad web application. Once a recognition request arrives at the NodeJS web server, the server invokes the recognition command line application and passes it the JSON data and the requested language.
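The server-side handling described above can be sketched in NodeJS as follows. This is an illustration under assumptions, not the TBM implementation: the executable path, the `--lang` argument, the use of standard input for the stroke data and the payload field names `language` and `strokes` are all hypothetical. Only the overall flow (validate the JSON request, check the language, invoke a command line recognizer) follows the text.

```javascript
// Hypothetical path to the command line recognizer on the Windows server.
const RECOGNIZER_EXE = "C:\\hwr\\recognizer.exe";
// The two languages named in the text, as ISO 639-1 codes.
const SUPPORTED_LANGUAGES = new Set(["de", "en"]);

// Validate the raw JSON body of a recognition request.
function parseRecognitionRequest(rawBody) {
  let data;
  try {
    data = JSON.parse(rawBody);
  } catch {
    return { ok: false, error: "body is not valid JSON" };
  }
  if (data === null || typeof data !== "object") {
    return { ok: false, error: "body is not a JSON object" };
  }
  if (!SUPPORTED_LANGUAGES.has(data.language)) {
    return { ok: false, error: "unsupported language" };
  }
  if (!Array.isArray(data.strokes) || data.strokes.length === 0) {
    return { ok: false, error: "no strokes given" };
  }
  return { ok: true, language: data.language, strokes: data.strokes };
}

// Invoke the recognizer: the language is passed as an argument (assumed flag),
// the stroke data as JSON on standard input (assumed convention).
function recognize(request, callback) {
  // Loaded here so that the validation helper above has no Node dependency.
  const { execFile } = require("node:child_process");
  const child = execFile(
    RECOGNIZER_EXE,
    ["--lang", request.language],
    (err, stdout) => callback(err, err ? null : stdout.trim())
  );
  child.stdin.end(JSON.stringify(request.strokes));
}
```

Using `execFile` rather than a shell command avoids passing request data through a shell, and validating the language against a fixed set ensures that only known values reach the command line.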

The command line application is written in C# and encapsulates the actual recognition process. First, the incoming JSON formatted data is parsed and transferred into the data structures required by the Microsoft Ink API, which represent the user’s original handwriting strokes. Second, based on the given language, an appropriate recognition object is created that processes the previously transferred data structures. The recognized text is then collected by the calling NodeJS web server, which sends the text back to the TBM web server.

5.4 Gathering Content with Your Voice with Speech Recognition API

Our approach for transforming a user’s spoken communication into its character encoded symbolic representation differs from the HWR solution. We rely on a client-side implementation of the Web Speech API that is available in the web browser (Shires and Wennborg 2014). The API consists of two parts: speech synthesis and speech recognition. We only use the recognition part within MED-Pad. Speech recognition is a very new interface that is currently only provided by the Google Chrome web browser.

Use of the API is quite straightforward. On a user interaction such as a button click, the browser requests access to the system’s microphone. Once access is granted by the user, the system listens to the user’s voice. On another click, the listening stops and the recognition starts. The actual recognition is not processed on the user’s system but rather by a dedicated, unspecified cloud-based recognition service, which is presumably a Google server. As with our HWR service, speech recognition depends on server-side processing, but in contrast to HWR it does not run on a TBM controlled server. Figure 9 depicts the MED-Pad application after the user has clicked on the recording button, initiating the voice listening phase. Speech recognition also requires the language to be specified. We use the selected user interface language as the recognition language. As soon as the server-side recognition process has finished, the textual result is displayed in the application, where it can be adjusted by the user and afterwards sent to the TBM whiteboard application.
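The interaction described above can be sketched with the `SpeechRecognition` interface of the Web Speech API. The sketch is a simplified illustration rather than the MED-Pad code: the function name `createRecognizer`, the mapping from user interface language to recognition language tag, and the button wiring in the comments are assumptions. The constructor is passed in as a parameter, because Chrome exposes it under the prefixed name `webkitSpeechRecognition`.

```javascript
// Assumed mapping from the two-letter UI language to a BCP 47 language tag.
const RECOGNITION_LANGUAGES = { de: "de-DE", en: "en-US" };

// Create a configured recognizer. onText is called with the recognized text.
function createRecognizer(SpeechRecognitionCtor, uiLanguage, onText) {
  const recognition = new SpeechRecognitionCtor();
  recognition.lang = RECOGNITION_LANGUAGES[uiLanguage] || uiLanguage;
  recognition.continuous = true;      // keep listening until stop() is called
  recognition.interimResults = false; // deliver final results only
  recognition.onresult = (event) => {
    // Concatenate the best alternative of every new result.
    let text = "";
    for (let i = event.resultIndex; i < event.results.length; i++) {
      text += event.results[i][0].transcript;
    }
    onText(text);
  };
  return recognition;
}

// Browser usage (hypothetical element names):
// const Ctor = window.SpeechRecognition || window.webkitSpeechRecognition;
// const recognizer = createRecognizer(Ctor, "de", (text) => showNote(text));
// recordButton.onclick = () => recognizer.start(); // browser asks for microphone access
// stopButton.onclick = () => recognizer.stop();    // ends listening, result follows
```

Starting the recognizer from a click handler matches the flow in the text, where the microphone request is triggered by an explicit user interaction.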

5.5 Retaining Physical Artifacts with the Help of Image Capturing

There are situations when it is important to capture artifacts beyond textual information. For that reason, the MED-Pad web application allows for using pictures from the local device and annotating these pictures using scribbles or text.

We implemented this functionality by setting appropriate image MIME types (e.g. “image/jpeg”) on the “accept” attribute of an HTML file input element. Clicking the respective image button in MED-Pad’s user interface provides different options. On traditional desktop computers, a classical file upload dialog is shown; on mobile devices, users are offered the option of using a local camera or selecting a photograph from their photo collection (Faulkner et al. 2017).
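A file input of this kind can be created with a few lines of JavaScript. In this minimal sketch, the list of MIME types beyond “image/jpeg”, the function name `createImageInput` and the callback are assumptions made for illustration.

```javascript
// MIME types offered to the file picker; the exact list is an assumption.
const IMAGE_TYPES = ["image/jpeg", "image/png"];

// Create a file input restricted to images. doc is the DOM document,
// onImage is called with the File object the user has chosen.
function createImageInput(doc, onImage) {
  const input = doc.createElement("input");
  input.type = "file";
  input.accept = IMAGE_TYPES.join(",");
  input.addEventListener("change", () => {
    const file = input.files && input.files[0];
    if (file) onImage(file);
  });
  return input;
}

// Browser usage (hypothetical button and handler names):
// const input = createImageInput(document, (file) => showOnCanvas(file));
// imageButton.onclick = () => input.click();
```

Which options the device then presents (file dialog, camera or photo collection) is decided by the browser and operating system based on the accepted types.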

6 Initial User Testing

We gathered user feedback on the different methods of content capturing as applied in a dialogue setting. In this way, we gained an understanding of how users feel when capturing content in the proposed ways. Two groups of two persons conducted three short interviews and were asked to apply the three approaches: (1) traditional pen and paper, (2) MED-Pad handwriting recognition with a digital pen and a tablet computer, and (3) MED-Pad speech recognition with a tablet computer. We asked the dialogue partners to converse about an everyday topic, such as their daily way to work, the perfect breakfast, or preparations for the Christmas holidays. The interviewer was asked to take notes. The language of conversation and documentation was German.

We gathered qualitative feedback via free-form text fields in a questionnaire and a subsequent discussion. The handwriting recognition was perceived as fast and less distracting than traditional pen and paper. One interviewer perceived it as a relief to be able to store conversation topics away by sending the captured notes to the whiteboard. However, the usage of the writing space on the digital sticky pad was not clear. While we designed MED-Pad as a digital sticky pad intended to capture keywords or short phrases, one person captured all notes on one digital sticky note. Apparently, the writing area was perceived as analogous to a sheet of paper rather than a small sticky note. The fewer words are captured when the recognition is triggered, the quicker the machine-readable text appears. For up to three lines with up to four words each, the recognition happens instantly. Beyond a certain number of written words, however, the recognition slows down and becomes unusable for our purpose of instant capturing.

The speech recognition was perceived as time-efficient and easy to integrate into the conversation. However, the two modes of speaking, for conversation and for dictation, were not easy to distinguish. Either the conversation was paused to capture premeditated content, or the conversation kept its natural flow while the recording was started alongside. With the latter approach, there is a tendency to capture long sentences, because there is no incentive to condense the spoken words.
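The two dictation styles could map onto recognizer settings as in the following sketch. The `RecognizerLike` interface is a hand-written subset of the Web Speech API's `SpeechRecognition` interface, so that the example does not depend on browser typings. The mode names and the mapping of modes to the `continuous` flag are assumptions and not a description of how MED-Pad is configured.

```typescript
// Subset of the Web Speech API's SpeechRecognition interface used in this sketch.
interface RecognizerLike {
  lang: string;
  continuous: boolean;
  interimResults: boolean;
  start(): void;
  stop(): void;
}

// Hypothetical names for the two usage patterns observed in the user test.
type DictationMode = "paused-dictation" | "alongside-conversation";

/** Applies settings for the given mode and returns the same recognizer. */
function configureRecognizer(
  recognizer: RecognizerLike,
  mode: DictationMode
): RecognizerLike {
  recognizer.lang = "de-DE"; // the user test was conducted in German
  // Assumption: a short, deliberate dictation ends after one utterance,
  // whereas recording alongside the conversation keeps listening.
  recognizer.continuous = mode === "alongside-conversation";
  recognizer.interimResults = false; // only final results become note text
  return recognizer;
}
```

In a browser that supports the API, the recognizer instance would be passed in by the caller, and `start()` and `stop()` would be bound to the record button.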

Both handwriting and speech recognition results were acceptable but contained some errors. During the interview, it was not possible to quickly fix incorrectly recognized words, since text editing via the integrated digital keyboard was cumbersome and too time-consuming to do in parallel with the conversation. In sum, both digital techniques were rated as more suitable than the traditional pen and paper approach.

7 Conclusion

In conclusion, design thinking helps to rethink the medical record experiences of patients and doctors; it inspires designs that are more user-centered than conventional solutions. We use this methodology, endorsing the value of human-centered design, in the development of Tele-Board MED.

In the past, we had already rethought the role of medical documentation systems during treatment sessions, so as to render them more serviceable by supporting doctor-patient communication at eye level. We had also redesigned the system output to provide doctors and patients with the documents they need: creating discharge letters or other official clinical documents for the doctor automatically on demand and providing the patient with printouts of session notes when requested.

In this chapter we have, in addition, focused on the information input, where standard documentation systems require a data entry procedure that is convenient for neither the patient nor the doctor. Patients are fully excluded from the process and cannot even see what the doctor records, while doctors must type on a keyboard, even though this tends to disturb patient-doctor communication.

To help the doctor communicate conveniently with the system, we have implemented two additional data entry solutions. Doctors can write with a digital pen, and their handwriting is automatically converted to machine-readable text. Alternatively, they can dictate, and the content is, again, recognized automatically. With this intuitive technology, digital documentation does not disturb the treatment flow as typing might. Thus, doctors can document treatment content digitally during the session, rendering the documentation process as transparent towards the patient as they like. Even the patient can be given devices to enter information during the treatment session, if the doctor decides to do so.

With these developments we would like to return to the short scenario of a doctor (D), a patient (P) and the medical record, now worked on with Tele-Board MED (TBM). Let us explore what differences the redesign entails.

- D: This information is important, we need to archive it. [Presses record button] allergic to penicillin [stops recording].

- TBM displays “allergic to penicillin” on a sticky note on a large touch screen.

- D: We are almost done now, except for the “family anamnesis” [points to TBM, where nothing is entered below the respective heading]. How about your close family members? Has anyone suffered from severe illnesses?

- P: My grandmother died of breast cancer. Oh, and we have another case in the family. Shall I write that down?

- D: Sure, if you want.

- P [writes with a digital pen “grandmother died of breast cancer”, “cousin was diagnosed with lung cancer”]

TBM displays the information on two new sticky notes on the large touch screen, where the doctor moves them with a finger to the section “family anamnesis”.

Thus, we suggest MED-Pad as a novel data entry solution that adapts to the user’s natural way of working. The system is designed as a cross-platform web application that can be run on a wide variety of hardware devices, such as traditional desktop computers and common mobile devices (e.g. tablet computers and smartphones). Furthermore, capturing handwritten scribbles is possible even without an internet connection.

Since the MED-Pad is platform-independent, future usage scenarios could involve patients using their own mobile devices to create content during doctor-patient treatment sessions. With this “Bring Your Own Device” (BYOD) approach, patients would be at eye level with their doctor not only in a social sense, but also technically. Having the application always with them on their personal device allows content capturing anywhere and at any time, even outside of a typical treatment session. Along the path towards even better support of user needs, design thinking has proven to be a highly fruitful methodology, which can inspire further healthcare solutions in the future.

Notes

1. American Standard Code for Information Interchange (ASCII): character encoding standard for electronic communication.

2. Unicode Transformation Format, 8-bit (UTF-8): variable-width character encoding standard.

3. Representational State Transfer (REST): interoperability architecture for distributed hypermedia systems.

4. A JavaScript run-time environment typically used server-side: https://nodejs.org/

5. https://caniuse.com/#feat=speech-recognition (retrieved Dec. 2017).

6. Multipurpose Internet Mail Extensions (MIME): standard for defining email data format. Also used for content-type definition outside of email.

References

Bundesgesetz. (2013). Gesetz zur Verbesserung der Rechte von Patientinnen und Patienten, Bonn.

Castonguay, L. G., Constantino, M. J., & Holtforth, M. G. (2006). The working alliance: Where are we and where should we go? Psychotherapy: Theory, Research, Practice, Training, 43, 271–279. https://doi.org/10.1037/0033-3204.43.3.271

Chiu, C.-C., Tripathi, A., Chou, K., Co, C., Jaitly, N., Jaunzeikare, D., et al. (2017). Speech recognition for medical conversations. Retrieved from http://arxiv.org/abs/1711.07274

Faulkner, F., Eicholz, A., Leithead, T., & Danilo, A. (2017). HTML 5.1 2nd Edition, W3C Recommendation, W3C. Retrieved December 2017, from https://www.w3.org/TR/2017/REC-html51-20171003/

Gericke, L., Gumienny, R., & Meinel, C. (2012a). Tele-board: Follow the traces of your design process history. In H. Plattner, C. Meinel, & L. Leifer (Eds.), Design thinking research: Studying co-creation in practice (pp. 15–29). Berlin: Springer.

Gericke, L., Wenzel, M., Gumienny, R., Willems, C., & Meinel, C. (2012b). Handwriting recognition for a digital whiteboard collaboration platform. In Proceedings of the 13th international conference on Collaboration Technologies and Systems (CTS) (pp. 226–233), Denver, CO, USA, IEEE Press.

Gumienny, R., Gericke, L., Quasthoff, M., Willems, C., & Meinel, C. (2011). Tele-board: Enabling efficient collaboration in digital design spaces. In Proceedings of the 15th international conference on Computer Supported Cooperative Work in Design (CSCWD) (pp. 47–54).

Hayrinen, K., Saranto, K., & Nykanen, P. (2008). Definition, structure, content, use and impacts of electronic health records: A review of the research literature. International Journal of Medical Informatics, 77(5), 291–304. https://doi.org/10.1016/j.ijmedinf.2007.09.001

Lussier, Y. A., Maksud, M., Desruisseaux, B., Yale, P. P., & St-Arneault, R. (1993). PureMD: a Computerized Patient Record software for direct data entry by physicians using a keyboard-free pen-based portable computer. Proceedings of symposium on computer applications in medical care (pp. 261–264). Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/1482879

Perlich, A., & Meinel, C. (2016). Patient-provider teamwork via cooperative note taking on Tele-Board MED. In Exploring complexity in health: An interdisciplinary systems approach (pp. 117–121).

Perlich, A., & Meinel, C. (in press). Cooperative Note-Taking in Psychotherapy Sessions: An Evaluation of the Therapist’s User Experience with Tele-Board MED. In Proceedings of the IEEE Healthcom 2018.

Perlich, A., von Thienen, J., Wenzel, M., & Meinel, C. (2017). Redesigning medical encounters with Tele-Board MED. In H. Plattner, C. Meinel, & L. Leifer (Eds.), Design thinking research: Taking breakthrough innovation home (pp. 101–123). Berlin: Springer.

Pittman, J. A. (2007). Handwriting recognition: Tablet PC text input. Computer, 40(9), 49–54.

Plamondon, R., & Srihari, S. N. (2000). On-line and off-line handwriting recognition: A comprehensive survey. IEEE Transactions on Pattern Analysis and Machine Intelligence, 22(1), 63–84.

Prasad, J. R., & Kulkarni, U. (2010). Trends in handwriting recognition. In 3rd International conference on emerging trends in engineering and technology (pp. 491–495).

Reeves, B., & Nass, C. (1996). The media equation: How people treat computers, television, and new media like real people and places. Cambridge: Cambridge University Press.

Russell, A. (2015). Progressive web apps: Escaping tabs without losing our soul. Retrieved December 2017.

Russell, A. (2016). What, exactly, makes something a progressive web app? Retrieved December 2017.

Shachak, A., & Reis, S. (2009). The impact of electronic medical records on patient-doctor communication during consultation: A narrative literature review. Journal of Evaluation in Clinical Practice, 15(4), 641–649. https://doi.org/10.1111/j.1365-2753.2008.01065.x

Shires, G., & Wennborg, H. (2014). Web speech API specification, Editor’s Draft, W3C. Retrieved December 2017, from https://w3c.github.io/speech-api/webspeechapi.html

von Thienen, J. P. A., Perlich, A., Eschrig, J., & Meinel, C. (2016). Smart documentation with Tele-Board MED. In H. Plattner, C. Meinel, & L. Leifer (Eds.), Design thinking research: Making design thinking foundational (pp. 203–233). Berlin: Springer.

Weber, J. (2003). Tomorrow’s transcription tools: What new technology means for healthcare. Journal of AHIMA, 74(3), 39–43–6. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/12914348


© 2019 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Wenzel, M., Perlich, A., von Thienen, J.P.A., Meinel, C. (2019). New Ways of Data Entry in Doctor-Patient Encounters. In: Meinel, C., Leifer, L. (eds) Design Thinking Research. Understanding Innovation. Springer, Cham. https://doi.org/10.1007/978-3-319-97082-0_9


DOI: https://doi.org/10.1007/978-3-319-97082-0_9


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-97081-3

Online ISBN: 978-3-319-97082-0

eBook Packages: Business and Management (R0)