Abstract

The goal of these lecture notes is to survey some of the recent progress on the description of large-scale structure of random trees. We use the framework of Markov-Branching sequences of trees and discuss several applications.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

Mathematics Subject Classification

1 Introduction

The goal of these lecture notes is to survey some of the recent progress on the description of large-scale structure of random trees. Describing the structure of large (random) trees, and more generally large graphs, is an important goal of modern probabilities and combinatorics. Beyond the purely probabilistic or combinatorial aspects, motivations come from the study of models from biology, theoretical computer science or mathematical physics.

The question we will typically be interested in is the following. For (T n , n ≥ 1) a sequence of random unordered (i.e. non-planar) trees, where, for each n, T n is a tree of size n (the size of a tree may be its number of vertices or its number of leaves, for example): does there exist a deterministic sequence (a n , n ≥ 1) and a continuous random tree \(\mathcal {T}\) such that

To make sense of this question, we will view T n as a metric space by “replacing” its edges with segments of length 1, and then use the notion of Gromov-Hausdorff distance to compare compact metric spaces. When such a convergence holds, the continuous limit highlights some properties of the discrete objects that approximate it, and vice-versa.

As a first example, consider T n a tree picked uniformly at random in the set of trees with n vertices labelled by {1, …, n}. The tree T n has to be understood as a typical element of this set of trees. In this case the answer to the previous question dates back to a series of works by Aldous in the beginning of the 1990s [8,9,10]: Aldous showed that

where the limiting tree is called the Brownian Continuum Random Tree (CRT), and can be constructed from a standard Brownian excursion. This result has various interesting consequences, e.g. it gives the asymptotics in distribution of the diameter, the height (if we consider rooted versions of the trees) and several other statistics related to the tree T n . Consequently it also gives the asymptotic proportion of trees with n labelled vertices that have a diameter larger than \(x\sqrt {n}\) or/and a height larger than \(y\sqrt {n}\), etc. Some of these questions on statistics of uniform trees were already treated in previous works, the strength of Aldous’s result is that it describes the asymptotics of the whole tree T n .

Aldous has actually established a version of the convergence (1) in a much broader context, that of conditioned Galton–Watson trees with finite variance. In this situation, to fit to our context, T n is an unordered version of the genealogical tree of a Galton–Watson process (with a given, fixed offspring distribution with mean one and finite variance) conditioned on having a total number of vertices equal to n, n ≥ 1. Multiplied by \(1/\sqrt {n}\), this tree converges in distribution to the Brownian CRT multiplied by a constant that only depends on the variance of the offspring distribution. This should be compared with (and is related to) the convergence of rescaled sums of i.i.d. random variables towards the normal distribution and its functional analog, the convergence of rescaled random walks towards the Brownian motion. It turns out that the above sequence of uniform labelled trees can be seen as a sequence of conditioned Galton–Watson trees (when the offspring distribution is a Poisson distribution) and more generally that several sequences of combinatorial trees reduce to conditioned Galton–Watson trees. In the early 2000s, Duquesne [44] extended Aldous’s result to conditioned Galton–Watson trees with offspring distributions in the domain of attraction of a stable law. We also refer to [46, 70] for related results. In most of these cases the scaling sequences (a n ) are asymptotically much smaller, i.e. \(a_n\ll \sqrt {n}\), and other continuous trees arise in the limit, the so-called family of stable Lévy trees. All these results on conditioned Galton–Watson trees are now well established, and have a lot of applications in the study of large random graphs (see e.g. Miermont’s book [78] for the connections with random maps and Addario-Berry et al. [4] for connections with Erdős–Rényi random graphs in the critical window).

The classical proofs to establish the scaling limits of Galton–Watson trees consist in considering specific ordered versions of the trees and rely on a careful study of their so-called contour functions. It is indeed a common approach to encode trees into functions (similarly to the encoding of the Brownian tree by the Brownian excursion), which are more familiar objects. It turns out that for Galton–Watson trees, the contour functions are closely related to random walks, whose scaling limits are well known. Let us also mention that another common approach to study large random combinatorial structures is to use technics of analytic combinatorics, see [54] for a complete overview of the topic. None of these two methods will be used here.

In these lecture notes, we will focus on another point of view, that of sequences of random trees that satisfy a certain Markov-Branching property, which appears naturally in a large set of models and includes conditioned Galton–Watson trees. This property is a sort of discrete fragmentation property which roughly says that in each tree of the sequence, the subtrees above a given height are independent with a law that depends only on their total size. Under appropriate assumptions, we will see that Markov-Branching sequences of trees, suitably rescaled, converge to a family of continuous fractal trees, called the self-similar fragmentation trees. These continuous trees are related to the self-similar fragmentation processes studied by Bertoin in the 2000s [14], which are models used to describe the evolution of objects that randomly split as time passes. The main results on Markov-Branching trees presented here were developed in the paper [59], which has its roots in the earlier paper [63]. Several applications have been developed in these two papers, and in more recent works [15, 60, 89]: to Galton–Watson trees with arbitrary degree constraints, to several combinatorial trees families, including the Pólya trees (i.e. trees uniformly distributed in the set of rooted, unlabelled, unordered trees with n vertices, n ≥ 1), to several examples of dynamical models of tree growth and to sequence of cut-trees, which describe the genealogy of some deletion procedure of edges in trees. The objective of these notes is to survey and gather these results, as well as further related results.

In Sect. 2 below, we will start with a series of definitions related to discrete trees and then present several classical examples of sequences of random trees. We will also introduce there the Markov-Branching property. In Sect. 3 we set up the topological framework in which we will work, by introducing the notions of real trees and Gromov–Hausdorff topology. We also recall there the classical results of Aldous [9] and Duquesne [44] on large conditioned Galton–Watson trees. Section 4 is the core of these lecture notes. We present there the results on scaling limits of Markov-Branching trees, and give the main ideas of the proofs. The key ingredient is the study of an integer-valued Markov chain describing the sizes of the subtrees containing a typical leaf of the tree. Section 5 is devoted to the applications mentioned above. Last, Sect. 6 concerns further perspectives and related models (multi-type trees, local limits, applications to other random graphs).

All the sequences of trees we will encounter here have a power growth. There is however a large set of random trees that naturally arise in applications that do not have such a behavior. In particular, many models of trees arising in the analysis of algorithms have a logarithmic growth. See e.g. Drmota’s book [42] for an overview of the most classical models. These examples do not fit into our framework.

2 Discrete Trees, Examples and Motivations

2.1 Discrete Trees

Our objective is mainly to work with unordered trees. We give below a precise definition of these objects and mention nevertheless the notions of ordered or/and labelled trees to which we will sometimes refer.

A discrete tree (or graph-theoretic tree) is a finite or countable graph (V, E) that is connected and has no cycle. Here V denotes the set of vertices of the graph and E its set of edges. Note that two vertices are then connected by exactly one path and that #V = #E + 1 when the tree is finite.

In the following, we will often denote a (discrete) tree by the letter t, and for t = (V, E) we will use the slight abuse of notation v ∈t to mean v ∈ V .

A tree t can be seen as a metric space, when endowed with the graph distance d gr: given two vertices u, v ∈t, d gr(u, v) is defined as the number of edges of the unique path from u to v.

A rooted tree (t, ρ) is an ordered pair where t is a tree and ρ ∈t. The vertex ρ is then called the root of t. This gives a genealogical structure to the tree. The root corresponds to the generation 0, its neighbors can be interpreted as its children and form the generation 1, the children of its children form the generation 2, etc. We will usually call the height of a vertex its generation, and denote it by h t(v) (the height of a vertex is therefore its distance to the root). The height of the tree is then

and its diameter

The degree of a vertex v ∈t is the number of connected components obtained when removing v (in other words, it is the number of neighbors of v). A vertex v different from the root and of degree 1 is called a leaf. In a rooted tree, the out-degree of a vertex v is the number of children of v. Otherwise said, out-degree(v)=degree(v)- . A (full) binary tree is a rooted tree where all vertices but the leaves have out-degree 2. A branch-point is a vertex of degree at least 3.

. A (full) binary tree is a rooted tree where all vertices but the leaves have out-degree 2. A branch-point is a vertex of degree at least 3.

In these lecture notes, we will mainly work with rooted trees. Moreover we will consider, unless specifically mentioned, that two isomorphic trees are equal, or, when the trees are rooted, that two root-preserving isomorphic trees are equal. Such trees can be considered as unordered unlabelled trees, in opposition to the following definitions.

Ordered or/and Labelled Trees

In the context of rooted trees, it may happen that one needs to order the children of the root, and then, recursively, the children of each vertex in the tree. This gives an ordered (or planar) tree. Formally, we generally see such a tree as a subset of the infinite Ulam–Harris tree

where \(\mathbb {N}:=\{1,2,\ldots \}\) and \(\mathbb {N}^{0}=\{\varnothing \}\). The element \(\varnothing \) is the root of the Ulam–Harris tree, and any other \(u=u_1u_2\ldots u_n \in \mathcal {U}\backslash \{\varnothing \}\) is connected to the root via the unique shortest path

The height (or generation) of such a sequence u is therefore its length, n. We then say that \(\mathsf t \subset \mathcal {U}\) is a (finite or infinite) rooted ordered tree if:

-

\(\varnothing \in \mathsf t\)

-

if \(u=u_1\ldots u_n \in \mathsf t\backslash \{\varnothing \}\), then u = u 1…u n−1 ∈t (the parent of an individual in t that is not the root is also in t)

-

if u = u 1…u n ∈t, there exists an integer c u (t) ≥ 0 such that the element u 1…u n j ∈t if and only if 1 ≤ j ≤ c u (t).

The number c u (t) corresponds to the number of children of u in t, i.e., its out-degree.

We will also sometimes consider labelled trees. In these cases, the vertices are labelled in a bijective way, typically by {1, …, n} if there are n vertices (whereas in an unlabelled tree, the vertices but the root are indistinguishable). Partial labelling is also possible, e.g. by labelling only the leaves of the tree.

In the following we will always specify when a tree is ordered or/and labelled. When not specified, it is implicitly unlabelled, unordered.

Counting Trees

It is sometimes possible, but not always, to have explicit formulæ for the number of trees of a specific structure. For example, it is known that the number of trees with n labelled vertices is

and consequently, the number of rooted trees with n labelled vertices is

The number of rooted ordered binary trees with n + 1 leaves is

(this number is called the nth Catalan number) and the number of rooted ordered trees with n vertices is

On the other hand, there is no explicit formula for the number of rooted (unlabelled, unordered) trees. Otter [79] shows that this number is asymptotically proportional to

where c ∼ 0.4399 and κ ∼ 2.9557. This should be compared to the asymptotic expansion of the nth Catalan number, which is proportional (by Stirling’s formula) to π −1∕24nn −3∕2.

We refer to the book of Drmota [42] for more details and technics, essentially based on generating functions.

2.2 First Examples

We now present a first series of classical families of random trees. Our goal will be to describe their scaling limits when the sizes of the trees grow, as discussed in the Introduction. This will be done in Sect. 5. Most of these families (but not all) share a common property, the Markov-Branching property that will be introduced in the next section.

Combinatorial Trees

Let \(\mathbb {T}_n\) denote a finite set of trees with n vertices, all sharing some structural properties. E.g. \(\mathbb {T}_n\) may be the set of all rooted trees with n vertices, or the set of all rooted ordered trees with n vertices, or the set of all binary trees with n vertices, etc. We are interested in the asymptotic behavior of a “typical element” of \(\mathbb {T}_n\) as n →∞. That is, we pick a tree uniformly at random in \(\mathbb {T}_n\), denote it by T n and study its scaling limit. The global behavior of T n as n →∞ will represent some of the features shared by most of the trees. For example, if the probability that the height of T n is larger than \(n^{\frac {1}{2}+\varepsilon }\) tends to 0 as n →∞, this means that the proportion of trees in the set that have a height larger than \(n^{\frac {1}{2}+\varepsilon }\) is asymptotically negligible, etc. We will more specifically be interested in the following cases:

-

T n is a uniform rooted tree with n vertices

-

T n is a uniform rooted ordered tree with n vertices

-

T n is a uniform tree with n labelled vertices

-

T n is a uniform rooted ordered binary tree with n vertices (n odd)

-

T n is a uniform rooted binary tree with n vertices (n odd),

etc. Many variations are of course possible, in particular one may consider trees picked uniformly amongst sets of trees with a given structure and n leaves, or more general degree constraints. Some of these uniform trees will appear again in the next example.

Galton–Watson Trees

Galton–Watson trees are random trees describing the genealogical structure of Galton–Watson processes. These are simple mathematical models for the evolution of a population that continue to play an important role in probability theory and in applications. Let η be a probability on \(\mathbb {Z}_+\) (η is called the offspring distribution) and let m :=∑i≥1iη(i) ∈ [0, ∞] denote its mean. Informally, in a Galton–Watson tree with offspring distribution η, each vertex has a random number of children distributed according to η, independently. We will always assume that η(1) < 1 in order to avoid the trivial case where each individual has a unique child. Formally, an η-Galton–Watson tree T η is usually seen as an ordered rooted tree and defined as follows (recall the Ulam–Harris notation \(\mathcal {U}\)):

-

\(c_{\varnothing }(T^{\eta })\) is distributed according to η

-

conditionally on \(c_{\varnothing }(T^{\eta })=p\), the p ordered subtrees \(\tau _i=\{u \in \mathcal {U}:iu \in T^{\eta }\}\) descending from i = 1, …, p are independent and distributed as T η.

From this construction, one sees that the distribution of T η is given by:

for all rooted ordered tree t. This definition of Galton–Watson trees as ordered trees is the simplest, avoiding any symmetry problems. However in the following we will mainly see these trees up to isomorphism, which roughly means that we can “forget the order”.

Clearly, if we call Z k the number of individuals at height k, then (Z k , k ≥ 1) is a Galton–Watson process starting from Z 0 = 1. It is well known that the extinction time of this process,

if finite with probability 1 when m ≤ 1 and with a probability ∈ [0, 1) when m > 1. The offspring distribution η and the tree T η are said to be subcritical when m < 1, critical when m = 1 and supercritical when m > 1. From now on, we assume that

and for integers n such that \(\mathbb {P}(\# T^{\eta }=n)>0\), we let \(T_n^{\eta ,\mathrm {v}}\) denote a non-ordered version of the Galton–Watson tree T η conditioned to have n vertices. Sometimes, we will need to keep the order and we will let \(T_n^{\eta ,\mathrm {v},\mathrm {ord}}\) denote this ordered conditioned version. We point out that in most cases, but not all, a subcritical or a supercritical Galton–Watson tree conditioned to have n vertices is distributed as a critical Galton–Watson tree conditioned to have n vertices with a different offspring distribution. So the assumption m = 1 is not too restrictive. We refer to [66] for details on that point.

It turns out that conditioned Galton–Watson trees are closely related to combinatorial trees. Indeed, one can easily check with (2) that:

-

if η = Geo(1/2), \(T^{\eta ,\mathrm {v},\mathrm {ord}}_n\) is uniform amongst the set of rooted ordered trees with n vertices

-

if η = Poisson(1), \(T^{\eta , \mathrm {v}}_n\) is uniform amongst the set of rooted trees with n labelled vertices

-

if \(\eta = \frac {1}{2}\left (\delta _0 + \delta _2 \right )\), \(T^{\eta ,\mathrm {v},\mathrm {ord}}_n\) is uniform amongst the set of rooted ordered binary trees with n vertices.

We refer e.g. to Aldous [9] for additional examples.

Hence, studying the large-scale structure of conditioned Galton–Watson trees will also lead to results in the context of combinatorial trees. As mentioned in the Introduction, the scaling limits of large conditioned Galton–Watson trees are now well known. Their study has been initiated by Aldous [8,9,10] and then expanded by Duquesne [44]. This will be reviewed in Sect. 3. However, there are some sequences of combinatorial trees that cannot be reinterpreted as Galton–Watson trees, starting with the example of the uniform rooted tree with n vertices or the uniform rooted binary tree with n vertices. Studying the scaling limits of these trees remained open for a while, because of the absence of symmetry properties. These scaling limits are presented in Sect. 5.2.

In another direction, one may also wonder what happens when considering versions of Galton–Watson trees conditioned to have n leaves, instead of n vertices, or more general degree constraints. This is discussed in Sect. 5.1.2.

Dynamical Models of Tree Growth

We now turn to several sequences of finite rooted random trees that are built recursively by adding at each step new edges on the pre-existing tree. We start with a well known algorithm that Rémy [88] introduced to generate uniform binary trees with n leaves.

Rémy’s Algorithm

The sequence (T n (R), n ≥ 1) is constructed recursively as follows:

-

Step 1: T 1(R) is the tree with one edge and two vertices: one root, one leaf

-

Step n: given T n−1(R), choose uniformly at random one of its edges and graft on “its middle” one new edge-leaf. By this we mean that the selected edge is split into two so as to obtain two edges separated by a new vertex, and then a new edge-leaf is glued on the new vertex. This gives T n (R).

It turns out (see e.g. [88]) that the tree T n (R), to which has been subtracted the edge between the root and the first branch point, is distributed as a binary critical Galton–Watson tree conditioned to have 2n − 1 vertices, or equivalently n leaves (after forgetting the order in the GW-tree). As so, we deduce its asymptotic behavior from that of Galton–Watson trees. However this model can be extended in several directions, most of which are not related to Galton–Watson trees. We detail three of them.

Ford’s α-Model [ 55 ]

Let α ∈ [0, 1]. We construct a sequence (T n (α), n ≥ 1) by modifying Rémy’s algorithm as follows:

-

Step 1: T 1(α) is the tree with one edge and two vertices: one root, one leaf

-

Step n: given T n−1(α), give a weight 1 − α to each edge connected to a leaf, and α to all other edges (the internal edges). The total weight is n − 1 − α. Now, if n ≠ 2 or α ≠ 1, choose an edge at random with a probability proportional to its weight and graft on “its middle” one new edge-leaf. This gives T n (α). When n = 2 and α = 1 the total weight is 0 and we decide to graft anyway on the middle of the edge of T 1 one new edge-leaf.

Note that when α = 1∕2 the weights are the same on all edges and we recover Rémy’s algorithm. When α = 0, the new edge is always grafted uniformly on an edge-leaf, which gives a tree T n (0) known as the Yule tree with n leaves. When α = 1, we obtain a deterministic tree called the comb tree. This family of trees indexed by α ∈ [0, 1] was introduced by Ford [55] in order to interpolate between the Yule, the uniform and the comb models. His goal was to propose new models for phylogenetic trees.

k-Ary Growing Trees [ 60 ]

This is another extension of Rémy’s algorithm, where now several edges are added at each step. Consider an integer k ≥ 2. The sequence (T n (k), n ≥ 1) is constructed recursively as follows:

-

Step 1: T 1(k) is the tree with one edge and two vertices: one root, one leaf

-

Step n: given T n−1(k), choose uniformly at random one of its edges and graft on “its middle” k − 1 new edges-leaf. This gives T n (k).

When k = 2, we recover Rémy’s algorithm. For larger k, there is no connection with Galton–Watson trees.

Marginals of Stable Trees: Marchal’s Algorithm

In [73], Marchal considered the following algorithm, that attributes weights also to the vertices. Fix a parameter β ∈ (1, 2] and construct the sequence (T n (β), n ≥ 1) as follows:

-

Step 1: T 1(β) is the tree with one edge and two vertices: one root, one leaf

-

Step n: given T n−1(β), attribute the weight

-

β − 1 on each edge

-

d − 1 − β on each vertex of degree d ≥ 3.

The total weight is nβ − 1. Then select at random an edge or vertex with a probability proportional to its weight and graft on it a new edge-leaf. This gives T n (β).

-

The reason why Marchal introduced this algorithm is that T n (β) is actually distributed as the shape of a tree with edge-lengths that is obtained by sampling n leaves at random in the stable Lévy tree with index β. The class of stable Lévy trees plays in important role in the theory of random trees. It is introduced in Sect. 3.2 below.

Note that when β = 2, vertices of degree 3 are never selected (their weight is 0). So the trees T n (β), n ≥ 1 are all binary, and we recover Rémy’s algorithm.

Of course, several other extensions of trees built by adding edges recursively may be considered, some of which are mentioned in Sects. 5.3.3 and 6.1.

Remark

In these dynamical models of tree growth, we build on a same probability space the sequence of trees, contrary to the examples of Galton–Watson trees or combinatorial trees that give sequences of distributions of trees. In this situation, one may expect to have more than a convergence in distribution for the rescaled sequences of trees. We will see in Sect. 5.3 that it is indeed the case.

2.3 The Markov-Branching Property

Markov-Branching trees were introduced by Aldous [11] as a class of random binary trees for phylogenetic models and later extended to non-binary cases in Broutin et al. [30], and Haas et al. [63]. It turns out that many natural models of sequence of trees satisfy the Markov-Branching property (MB-property for short), starting with the example of conditioned Galton–Watson trees and most of the examples of the previous section.

Consider

a sequence of trees where T n is a rooted (unordered, unlabelled) tree with n leaves. The MB-property is a property of the sequence of distributions of T n , n ≥ 1. Informally, the MB-property says that for each tree T n , given that

the root of T n splits it in p subtrees with respectively λ 1 ≥… ≥ λ p leaves,

then T n is distributed as the tree obtained by gluing on a common root p independent trees with respective distributions those of \(T_{\lambda _1},\ldots ,T_{\lambda _p}\). The way the leaves are distributed in the sub-trees above the root, in each T n , for n ≥ 1, will then allow to fully describe the distributions of the T n , n ≥ 1.

We now explain rigorously how to build such sequences of trees. We start with a sequence of probabilities (q n , n ≥ 1), where for each n, q n is a probability on the set of partitions of the integer n. If n ≥ 2, this set is defined by

whereas if n = 1, \(\mathcal {P}_1:=\{(1),\emptyset \}\) (we need to have a cemetery point). For a partition \(\lambda \in \mathcal {P}_n\), we denote by p(λ) its length, i.e. the number of terms in the sequence λ. The probability q n will determine how the n leaves of T n are distributed into the subtrees above its root. We call such a probability a splitting distribution. In order that effective splittings occur, we will always assume that

We need to define a notion of gluing of trees. Consider t1, …, t p , p discrete rooted (unordered) trees. Informally, we want to glue them on a same common root in order to form a tree 〈t1, …, t p 〉 whose root splits into the p subtrees t1, …, t p . Formally, this can e.g. be done as follows. Consider first ordered versions of the trees \(\mathrm {t}^{\mathrm {ord}}_1,\ldots ,\mathrm {t}^{\mathrm {ord}}_p\) seen as subsets of the Ulam–Harris tree \(\mathcal {U}\) and then define a new ordered tree by

The tree 〈t1, …, t p 〉 is then defined as the unordered version of \( \langle \mathrm {t}^{\mathrm {ord}}_1,\ldots ,\mathrm {t}^{\mathrm {ord}}_p \rangle \).

Definition 2.1

For each n ≥ 1, let q n be a probability on \(\mathcal {P}_n\) such that q n ((n)) < 1. From the sequence q = (q n , n ≥ 1) we construct recursively a sequence of distributions \((\mathcal {L}^{\mathbf {q}}_n)\) such that for all n ≥ 1, \(\mathcal {L}^{\mathbf {q}}_n\) is carried by the set of rooted trees with n leaves, as follows:

-

\(\mathcal {L}^{\mathbf {q}}_1\) is the distribution of a line-tree with G + 1 vertices and G edges where G is a geometric distribution:

$$\displaystyle \begin{aligned}\mathbb{P}(G=k)=q_1(\emptyset)(1-q_1(\emptyset))^k, \quad k\geq 0, \end{aligned}$$ -

for n ≥ 2, \(\mathcal {L}^{\mathbf {q}}_n\) is the distribution of

$$\displaystyle \begin{aligned}\langle T_1,\ldots,T_{p(\Lambda)} \rangle \end{aligned}$$where Λ is a partition of n distributed according to q n , and given Λ, the trees T 1, …, T p( Λ) are independent with respective distributions \(\mathcal {L}^{\mathbf {q}}_{\Lambda _1},\ldots ,\) \(\mathcal {L}^{\mathbf {q}}_{\Lambda _{p(\Lambda )}}\).

A sequence (T n , n ≥ 1) of random rooted trees such that \(T_n \sim \mathcal {L}^{\mathbf {q}}_n\) for each \(n \in \mathbb {N}\) is called a MB-sequence of trees indexed by the leaves, with splitting distributions (q n , n ≥ 1).

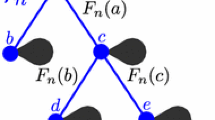

This construction may be re-interpreted as follows: we start from a collection of n indistinguishable balls, and with probability q n (λ 1, …, λ p ), split the collection into p sub-collections with λ 1, …, λ p balls. Note that there is a chance q n ((n)) < 1 that the collection remains unchanged during this step of the procedure. Then, re-iterate the splitting operation independently for each sub-collection using this time the probability distributions \(q_{\lambda _1},\ldots ,q_{\lambda _p}\). If a sub-collection consists of a single ball, it can remain single with probability q 1((1)) or get wiped out with probability q 1(∅). We continue the procedure until all the balls are wiped out. The tree T n is then the genealogical tree associated with this process: it is rooted at the initial collection of n balls and its n leaves correspond to the n isolated balls just before they are wiped out, See Fig. 1 for an illustration.

We can define similarly MB-sequences of (distributions of) trees indexed by their number of vertices. Consider here a sequence (p n , n ≥ 1) such that p n is a probability on \(\mathcal {P}_n\) with no restriction but

Mimicking the previous balls construction and starting from a collection of n indistinguishable balls, we first remove a ball, split the n − 1 remaining balls in sub-collections with λ 1, …, λ p balls with probability p n−1((λ 1, …, λ p )), and iterate independently on sub-collections until no ball remains. Formally, this gives:

Definition 2.2

For each n ≥ 1, let p n be a probability on \(\mathcal {P}_n\), such that p 1((1)) = 1. From the sequence (p n , n ≥ 1) we construct recursively a sequence of distributions \((\mathcal {V}^{\mathbf {p}}_n)\) such that for all n ≥ 1, \(\mathcal {V}^{\mathbf {p}}_n\) is carried by the set of trees with n vertices, as follows:

-

\(\mathcal {V}^{\mathbf {p}}_1\) is the deterministic distribution of the tree reduced to one vertex,

-

for n ≥ 2, \(\mathcal {V}^{\mathbf {p}}_n\) is the distribution of

$$\displaystyle \begin{aligned}\langle T_1,\ldots,T_{p(\Lambda)} \rangle \end{aligned} $$where Λ is a partition of n − 1 distributed according to p n−1, and given Λ, the trees T 1, …, T p( Λ) are independent with respective distributions \(\mathcal {V}^{\mathbf {p}}_{\Lambda _1},\ldots , \mathcal {V}^{\mathbf {p}}_{\Lambda _{p(\Lambda )}}\).

A sequence (T n , n ≥ 1) of random rooted trees such that \(T_n \sim \mathcal {V}^{\mathbf {p}}_n\) for each \(n \in \mathbb {N}\) is called a MB-sequence of trees indexed by the vertices, with splitting distributions (p n , n ≥ 1).

More generally, the MB-property can be extended to sequences of trees (T n , n ≥ 1) with arbitrary degree constraints, i.e. such that for all n, T n has n vertices in A, where A is a given subset of \(\mathbb {Z}_+\). We will not develop this here and refer the interested reader to [89] for more details.

Some Examples

-

1.

A deterministic example. Consider the splitting distributions on \(\mathcal {P}_n\)

$$\displaystyle \begin{aligned}q_n(\lceil n/2\rceil,\lfloor n/2\rfloor)=1, \quad n \geq 2,\end{aligned}$$as well as q 1(∅) = 1. Let (T n , n ≥ 1) the corresponding MB-sequence indexed by leaves. Then T n is a deterministic discrete binary tree, whose root splits in two subtrees with both n∕2 leaves when n is even, and respectively (n + 1)∕2, (n − 1)∕2 leaves when n is odd. Clearly, when n = 2k, the height of T n is exactly k, and more generally for large n, \(\mathrm {ht}(T_n)\sim \ln (n)/\ln (2)\).

-

2.

A basic example. For n ≥ 2, let q n be the probability on \(\mathcal {P}_n\) defined by

$$\displaystyle \begin{aligned}q_n((n))=1-\frac{1}{n^{\alpha}} \quad \text{and} \quad q_n(\lceil n/2\rceil,\lfloor n/2\rfloor)=\frac{1}{n^{\alpha}} \quad \text{for some }\alpha>0, \end{aligned}$$and let q 1(∅) = 1. Let (T n , n ≥ 1) be an MB-sequence indexed by leaves with splitting distributions (q n ). Then T n is a discrete tree with vertices with degrees ∈{1, 2, 3} where the distance between the root and the first branch point (i.e. the first vertex of degree 3) is a Geometric distribution on \(\mathbb {Z}_+\) with success parameter n −α. The two subtrees above this branch point are independent subtrees, independent of the Geometric r.v. just mentioned, and whose respective distances between the root and first branch point are Geometric distributions with respectively (⌈n∕2⌉)−α and (⌊n∕2⌋)−α parameters. Noticing the weak convergence

$$\displaystyle \begin{aligned}\frac{\mathrm{Geo}(n^{-\alpha})}{n^{\alpha}} \overset{\mathrm{(d)}}{\underset{n \rightarrow \infty}\longrightarrow} \mathrm{Exp}(1) \end{aligned}$$one may expect that n −αT n has a limit in distribution. We will later see that it is indeed the case.

-

3.

Conditioned Galton–Watson trees. Let \(T_n^{\eta , \mathrm {l}}\) be a Galton–Watson tree with offspring distribution η, conditioned on having n leaves, for integers n for which this is possible. The branching property is then preserved by conditioning and the sequence \((T^{\eta ,\mathrm {l}}_n,n :\vadjust { } \mathbb {P}(\#_{\mathrm {leaves}} T^{\eta })>0)\) is Markov-Branching, with splitting distributions

$$\displaystyle \begin{aligned}q^{\mathrm{GW},\eta}_n(\lambda)=\eta(p)\times \frac{p!}{\prod_{i=1}^p m_i(\lambda)!}\times \frac{\prod_{i=1}^{p}\mathbb{P}(\#_{\mathrm{leaves}} T^{\eta}=\lambda_i)}{\mathbb{P}(\#_{\mathrm{leaves}} T^{\eta}=n)} \end{aligned}$$for all \(\lambda \in \mathcal {P}_n,n\geq 2\), where # leavesT η is the number of leaves of the unconditioned Galton–Watson tree T η, and m i (λ) = #{1 ≤ j ≤ p : λ j = i}. The probability \(q^{\mathrm {GW},\eta }_1\) is given by \(q^{\mathrm {GW},\eta }_1((1))=\eta (1)\).

Similarly, if \(T_n^{\eta ,\mathrm {v}}\) denotes a Galton–Watson tree with offspring distribution η, conditioned on having n vertices, the sequence \((T^{\eta ,\mathrm {v}}_n, \mathbb {P}(\#_{\mathrm {vertices}} T^{\eta })>0)\) is MB, with splitting distributions

$$\displaystyle \begin{aligned} p^{\mathrm{GW},\eta}_{n-1}(\lambda)=\eta(p)\times \frac{p!}{\prod_{i=1}^p m_i(\lambda)!}\times \frac{\prod_{i=1}^{p}\mathbb{P}(\#_{\mathrm{vertices}} T^{\eta}=\lambda_i)}{\mathbb{P}(\#_{\mathrm{vertices}} T^{\eta}=n)} \end{aligned} $$(3)for all \(\lambda \in \mathcal {P}_{n-1},n \geq 3\) where # verticesT η is the number of leaves of the unconditioned GW-tree T η. Details can be found in [59, Section 5].

-

4.

Dynamical models of tree growth. Rémy’s, Ford’s, Marchal’s and the k-ary algorithms all lead to MB-sequences of trees indexed by leaves. To be precise, we have to remove in each of these trees the edge adjacent to the root to obtain MB-sequences of trees (the roots have all a unique child). The MB-property can be proved by induction on n. By construction, the distribution of the leaves in the subtrees above the root is closely connected to urns models. We have the following expressions for the splitting distributions:

Ford’s α-Model

For \(k \geq \frac {n}{2}, n\geq 2\),

and q 1(∅) = 1. See [55] for details. In particular, taking α = 1∕2 one sees that

k-Ary Growing Trees

Note that in these models, there are 1 + (k − 1)(n − 1) leaves in the tree T n (k), so that the indices do not exactly correspond to the definitions of the Markov-Branching properties seen in the previous section. However, by relabelling, defining for m = 1 + (k − 1)(n − 1) the tree \(\overline T_m(k)\) to be the tree T n (k) to which the edge adjacent to the root has been removed, we obtain an MB-sequence \((\overline T_m(k), m \in (k-1)\mathbb {N}+2-k)\). The splitting distributions are defined for m = 1 + (k − 1)(n − 1), n ≥ 2 and \(\lambda =(\lambda _1,\ldots ,\lambda _k) \in \mathcal {P}_m\) such that λ i = 1 + (k − 1)ℓ i , for some \(\ell _i \in \mathbb {Z}_+\) for all i (note that \(\sum _{i=1}^k \ell _i=n-2\)) by

$$\displaystyle \begin{aligned}q_m^{k}(\lambda)=\sum_{\mathbf{n}=(n_1,\ldots,n_k)\in \mathbb{N}^k : {\mathbf{n}}^{\downarrow}=\lambda}\overline q_m(\mathbf{n}) \end{aligned}$$where n ↓ is the decreasing rearrangement of the elements of n and

$$\displaystyle \begin{aligned} &\overline q_m(\mathbf{n})=\frac{1}{k(\Gamma(\frac{1}{k}))^{k-1}}\left(\prod_{i=1}^k \frac{\Gamma(\frac{1}{k}+n_i)}{n_i!}\right)\\ &\quad \times \frac{(n-2)!}{\Gamma(\frac{1}{k}+n-1)} \left(\sum_{j=1}^{n_1+1} \frac{n_1!}{(n_1-j+1)!}\frac{(n-j-1)!}{(n-2)!}\right). \end{aligned} $$See [60, Section 3].

Marchal’s Algorithm

For \(\lambda =(\lambda _1,\ldots ,\lambda _p) \in \mathcal {P}_n, n \geq 2\),

$$\displaystyle \begin{aligned} &q_n^{\mathrm{Marchal},\beta}(\lambda)\\ &\quad =\frac{n!}{ \lambda_1 ! \ldots \lambda_p ! m_1(\lambda) ! \ldots m_n(\lambda)! }\frac{\beta^{2-p}\Gamma(2-\beta^{-1})\Gamma(p-\beta)}{\Gamma(n-\beta^{-1})\Gamma(2-\beta)}\prod_{i=1}^p \frac{\Gamma(\lambda_j-\beta^{-1})}{\Gamma(1-\beta^{-1})} \end{aligned} $$where m i (λ) = #{1 ≤ j ≤ p : λ j = i}. This is a consequence of [46, Theorem 3.2.1] and [75, Lemma 5].

-

5.

Cut-trees. Cut-tree of a uniform Cayley tree. Consider C n a uniform Cayley tree of size n, i.e. a tree picked uniformly at random amongst the set of rooted tree with n labelled vertices. This tree has the following recursive property (see Pitman [85, Theorem 5]): removing an edge uniformly at random in C n gives two trees, which given their numbers of vertices, k, n − k say, are independent uniform Cayley trees of respective sizes k, n − k. Now, consider the following deletion procedure: remove in C n one edge uniformly at random, then remove another edge in the remaining set of n − 2 edges uniformly at random and so on until all edges have been removed. It was shown by Janson [65] and Panholzer [83] that the number of steps needed to isolate the root divided by \(\sqrt {n}\) converges in distribution to a Rayleigh distribution (i.e. with density \(x\exp (-x^2/2)\) on \(\mathbb {R}_+\)). Bertoin [15] was more generally interested in the number of steps needed to isolate ℓ distinguished vertices, and in that aim he introduced the cut-tree \(T^{\mathrm {cut}}_n\) of C n . The tree \(T^{\mathrm {cut}}_n\) is the genealogical tree of the above deletion procedure, i.e. it describes the genealogy of the connected components, see Fig. 2 for an illustration and [15] for a precise construction of \(T^{\mathrm {cut}}_n\). Let us just mention here that \(T^{\mathrm {cut}}_n\) is a rooted binary tree with n leaves, and that Pitman’s recursive property implies that \((T^{\mathrm {cut}}_n,n\geq 1)\) is MB. The corresponding splitting probabilities are:

$$\displaystyle \begin{aligned} q^{\mathrm{Cut}, \mathrm{Cayley}}_n(k,n-k)= \frac{(n-k)^{n-k-1}}{(n-k)!}\frac{k^{k-1}}{k!}\frac{(n-2)!}{n^{n-3}}, \quad n/2<k\leq n-1, \end{aligned}$$

Cut-tree of a uniform recursive tree. A recursive tree with n vertices is a tree with vertices labelled by 1, …, n, rooted at 1, such that the sequence of labels of vertices along any branch from the root to a leaf is increasing. It turns out that the cut-tree of a uniform recursive tree is also MB and with splitting probabilities

see [16].

Remark

The first example is a simple example of models where macroscopic branchings are frequent, unlike the second example where macroscopic branchings are rare (they occur with probability n −α → 0). By macroscopic branchings, we mean that the way that the n leaves (or vertices) are distributed above the root gives at least two subtrees with a size proportional to n. Although it is not completely obvious yet, nearly all other examples above have rare macroscopic branchings (in a sense that will be specified later) and this is typically the context in which we will study the scaling limits of MB-trees. Typically the tree T n will then grow as a power of n. When macroscopic branchings are frequent, there is no scaling limit in general for the Gromov–Hausdorff topology, a topology introduced in the next section. However it is known that the height of the tree T n is then often of order \(c\ln (n)\). This case has been studied in [30].

3 The Example of Galton–Watson Trees and Topological Framework

We start with an informal version of the prototype result of Aldous on the description of the scaling limits of conditioned Galton–Watson trees. Let η be a critical offspring distribution with finite variance σ 2 ∈ (0, ∞), and let \(T_n^{\eta ,\mathrm {v}}\) denote a Galton–Watson tree with offspring distribution η, conditioned to have n vertices (in the following it is implicit that we only consider integers n such that this conditioning is possible). Aldous [10] showed that

where the continuous tree \(\mathcal {T}_{\mathrm {Br}}\) arising in the limit is the Brownian Continuum Random Tree, sometimes simply called the Brownian tree. Note that the limit only depends on η via its variance σ 2.

This result by Aldous was a breakthrough in the study of large random trees, since it was the first to describe the behavior of the tree as a whole. We will discuss this in more details in Sect. 3.2. Let us first introduce the topological framework in order to make sense of this convergence.

3.1 Real Trees and the Gromov–Hausdorff Topology

Since the pioneering works of Evans et al. [52] in 2003 and Duquesne and Le Gall [47] in 2005, the theory of real trees (or \(\mathbb {R}\)-trees) has been intensively used in probability. These trees are metric spaces having a “tree property” (roughly, this means that for each pair of points x, y in the metric space, there is a unique path going from x to y—see below for a precise definition). This point of view allows behavior such as infinite total length of the tree, vertices with infinite degree, and density of the set of leaves.

In these lecture notes, all the real trees we will consider are compact metric spaces. For this reason, we restrict ourselves to the theory of compact real trees. We now briefly recall background on real trees and the Gromov–Hausdorff and Gromov–Hausdorff–Prokhorov distances, and refer to [51, 71] for more details on this topic.

Real Trees

A real tree is a metric space \((\mathcal {T},d)\) such that, for any points x and y in \(\mathcal {T}\),

-

there is an isometry \(\varphi _{x,y}:[0,d(x,y)]\to \mathcal {T}\) such that φ x,y(0) = x and φ x,y(d(x, y)) = y

-

for every continuous, injective function \(c:[0,1]\to \mathcal {T}\) with c(0) = x, c(1) = y, one has c([0, 1]) = φ x,y([0, d(x, y)]).

Note that a discrete tree may be seen as a real tree by “replacing” its edges by line segments. Unless specified, it will be implicit throughout these notes that these line segments are all of length 1.

We denote by [[x, y]] the line segment φ x,y([0, d(x, y)]) between x and y. A rooted real tree is an ordered pair \(((\mathcal {T},d),\rho )\) such that \((\mathcal {T},d)\) is a real tree and \(\rho \in \mathcal {T}\). This distinguished point ρ is called the root. The height of a point \(x\in \mathcal {T}\) is defined by

and the height of the tree itself is the supremum of the heights of its points, while the diameter is the supremum of the distance between two points:

The degree of a point x is the number of connected components of \(\mathcal {T} \backslash \{x\}\). We call leaves of \(\mathcal {T}\) all the points of \(\mathcal {T}\backslash \{\rho \}\) which have degree 1. Given two points x and y, we define x ∧ y as the unique point of \(\mathcal {T}\) such that [[ρ, x]] ∩ [[ρ, y]] = [[ρ, x ∧ y]]. It is called the branch point of x and y if its degree is larger than or equal to 3. For a > 0, we define the rescaled tree \(a\mathcal {T}\) as \((\mathcal {T},ad)\) (the metric d thus being implicit and dropped from the notation).

As mentioned above, we will only consider compact real trees. We now want to measure how close two such metric spaces are. We start by recalling the definition of Hausdorff distance between compact subsets of a metric space.

Hausdorff Distance

If A and B are two nonempty compact subsets of a metric space (E, d), the Hausdorff distance between A and B is defined by

where A ε and B ε are the closed ε-enlargements of A and B, i.e. A ε = {x ∈ E : d(x, A) ≤ ε} and similarly for B ε.

The Gromov–Hausdorff extends this concept to compact real trees (or more generally compact metric spaces) that are not necessarily compact subsets of a single metric space, by considering embeddings in an arbitrary common metric space.

Gromov–Hausdorff Distance

Given two compact rooted trees \((\mathcal {T},d,\rho )\) and \((\mathcal {T}',d',\rho ')\), let

where the infimum is taken over all pairs of isometric embeddings ϕ and ϕ′ of \(\mathcal {T}\) and \(\mathcal {T}'\) in the same metric space \((\mathcal {Z},d_{\mathcal {Z}}),\) for all choices of metric spaces \((\mathcal {Z},d_{\mathcal {Z}})\).

We will also be concerned with measured trees, that are real trees equipped with a probability measure on their Borel sigma-field. To this effect, recall first the definition of the Prokhorov distance between two probability measures μ and μ′ on a metric space (E, d):

This distance metrizes the weak convergence on the set of probability measures on (E, d).

Gromov–Hausdorff–Prokhorov Distance

Given two measured compact rooted trees \((\mathcal {T},d,\rho ,\mu )\) and \((\mathcal {T}',d',\rho ',\mu ')\), we let

where the infimum is taken on the same space as before and ϕ ∗μ, \(\phi ^{\prime }_*\mu ^{\prime }\) are the push-forwards of μ, μ′ by ϕ, ϕ′.

The Gromov–Hausdorff distance d GH indeed defines a distance on the set of compact rooted real trees taken up to root-preserving isomorphisms. Similarly, The Gromov–Hausdorff–Prokhorov distance d GHP is a distance on the set of compact measured rooted real trees taken up to root-preserving and measure-preserving isomorphisms. Moreover these two metric spaces are Polish, see [52] and [3]. We will always identify two (measured) rooted \(\mathbb {R}\)-trees when they are isometric and still use the notation \((\mathcal {T},d)\) (or \(\mathcal {T}\) when the choice of the metric is clear) to design their isometry class.

Statistics

It is easy to check that the function that associates with a compact rooted tree its diameter is continuous (with respect to the GH-topology on the set of compact rooted real trees and the usual topology on \(\mathbb {R}\)). Similarly, the function that associates with a compact rooted tree its height is continuous. The function that associates with a compact rooted measured tree the distribution of the height of a leaf chosen according to the probability on the tree is continuous as well (with respect to the GHP-topology on the set of compact rooted measured real trees and the weak topology on the set of probability measures on \(\mathbb {R}\)). Consequently, the existence of scaling limits with respect to the GHP-topology will directly imply scaling limits for the height, the diameter and the height of a typical vertex of the trees.

3.2 Scaling Limits of Conditioned Galton–Watson Trees

We can now turn to rigorous statements on the scaling limits of conditioned Galton–Watson trees. We reformulate the above result (4) by Aldous in the finite variance case and also present the result by Duquesne [44] when the offspring distribution η is heavy tailed, in the domain of attraction of a stable distribution. In the following, η always denotes a critical offspring distribution, \(T^{\eta ,\mathrm {v}}_n\) is an η-GW tree conditioned to have n vertices, and \(\mu ^{\eta , \mathrm {v}}_n\) is the uniform probability on its vertices. The following convergences hold for the Gromov–Hausdorff–Prokhorov topology.

Theorem 3.1

-

(i)

(Aldous [10]) Assume that η has a finite variance σ 2. Then, there exists a random compact real tree, called the Brownian tree and denoted \(\mathcal {T}_{\mathrm {Br}}\) , endowed with a probability measure μ Br supported by its set of leaves, such that as n →∞

$$\displaystyle \begin{aligned}\left(\frac{\sigma T^{\eta,\mathrm{v}}_n}{2\sqrt{n}},\mu_n^{\eta,\mathrm{v}}\right) \overset{\mathrm{(d)}}{\underset{\mathrm{GHP}} \longrightarrow} \left(\mathcal{T}_{\mathrm{Br}},\mu_{\mathrm{Br}}\right). \end{aligned}$$ -

(ii)

(Duquesne [44]) If η k ∼ κk −1−α as k →∞ for α ∈ (1, 2), then there exists a random compact real tree \(\mathcal {T}_{\alpha }\) , called the stable Lévy tree with index α, endowed with a probability measure μ α supported by its set of leaves, such that as n →∞

$$\displaystyle \begin{aligned} \left(\frac{T^{\eta,\mathrm{v}}_n}{n^{1 - 1/\alpha}}, \mu^{\eta,\mathrm{v}}_n\right) \overset{\mathrm{(d)}}{\underset{\mathrm{GHP}} \longrightarrow} \left(\left(\frac{\alpha(\alpha-1)}{\kappa \Gamma(2-\alpha)} \right)^{1/\alpha} \alpha^{1/\alpha -1} \cdot \mathcal{T}_\alpha,\mu_{\alpha}\right). \end{aligned}$$

The result by Duquesne actually extends to cases where the offspring distribution η is in the domain of attraction of a stable distribution with index α ∈ (1, 2]. See [44] for details.

The Brownian tree was first introduced by Aldous in the early 1990s in the series of papers [8,9,10]. This tree can be constructed in several ways, the most common being the following. Let (e(t), t ∈ [0, 1]) be a normalized Brownian excursion, which, formally, can be defined from a standard Brownian motion B by letting

where \(g:=\sup \{s \leq 1:B_s=0\}\) and \(d=\inf \{s \geq 1:B_s=0\}\) (note that d − g > 0 a.s. since B 1 ≠ 0 a.s.). Then consider for x, y ∈ [0, 1], x ≤ y, the non-negative quantity

and then the equivalent relation x ∼ e y ⇔ d e (x, y) = 0. It turns out that the quotient space [0, 1]∕ ∼ e endowed with the metric induced by d e (which indeed gives a true metric) is a compact real tree. The Brownian excursion e is called the contour function of this tree. Equipped with the measure μ e , which is the push-forward of the Lebesgue measure on [0, 1], this gives a version ([0, 1]∕ ∼ e , d e , μ e ) of the measured tree \((\mathcal {T}_{\mathrm Br},\mu _{\mathrm Br})\). To get a better intuition of what this means, as well as more details and other constructions of the Brownian tree, we refer to the three papers by Aldous [8,9,10] and to the survey by Le Gall [71].

In the early 2000s, the family of stable Lévy trees \((\mathcal {T}_{\alpha },\alpha \in (1,2)]\)—where by convention \(\mathcal {T}_2\) is \(\sqrt {2} \cdot \mathcal {T}_{\mathrm {Br}}\)—was introduced by Duquesne and Le Gall [46, 47] in the more general framework of Lévy trees, building on earlier work of Le Gall and Le Jan [72]. These trees can be constructed in a way similar as above from continuous functions built from the stable Lévy processes. This construction is complex and we will not detail it here. Others constructions are possible, see e.g. [50, 56]. The stable trees are important objects of the theory of random trees. They are intimately related to continuous state branching processes, fragmentation and coalescence processes. They appear as scaling limits of various models of trees and graphs, starting with the Galton–Watson examples above and some other examples discussed in Sect. 5. In particular, it is noted that it was only proved recently that Galton–Watson trees conditioned by their number of leaves or more general arbitrary degree restrictions also converge in the scaling limit to stable trees, see Sect. 5.1.2 and the references therein.

In the last few years, the geometric and fractal aspects of stable trees have been studied in great detail: Hausdorff and packing dimensions and measures [45, 47, 48, 57]; spectral dimension [34]; spinal decompositions and invariance under uniform re-rooting [49, 64]; fragmentation into subtrees [75, 76]; and embeddings of stable trees into each other [35]. We simply point out it here that the Brownian tree is binary, in the sense that all its points have their degree in {1, 2, 3} almost surely, whereas the stable trees \(\mathcal {T}_{\alpha },\alpha \in (1,2)\) have only points with degree in {1, 2, ∞} almost surely (every branch point has an infinite number of “children”).

Applications to Combinatorial Trees

Using the connections between some families of combinatorial trees and Galton–Watson trees mentioned in Sect. 2.1, we obtain the following scaling limits (in all cases, μ n denotes the uniform probability on the vertices of the tree T n ):

-

If T n is uniform amongst the set of rooted ordered trees with n vertices,

$$\displaystyle \begin{aligned}\left(\frac{T_n}{\sqrt{n}},\mu_n\right) \overset{\mathrm{(d)}}{\underset{\mathrm{GHP}}\longrightarrow} \left(\mathcal{T}_{B_r},\mu_{\mathrm{Br}}\right).\end{aligned}$$ -

If T n is uniform amongst the set of rooted trees with n labelled vertices,

$$\displaystyle \begin{aligned}\left(\frac{T_n}{\sqrt{n}},\mu_n\right) \overset{\mathrm{(d)}} {\underset{\mathrm{GHP}}\longrightarrow} \left(2\mathcal{T}_{B_r},\mu_{\mathrm{Br}}\right).\end{aligned}$$ -

If T n is uniform amongst the set of rooted binary ordered trees with n vertices,

$$\displaystyle \begin{aligned}\left(\frac{T_n}{\sqrt{n}},\mu_n\right) \overset{\mathrm{(d)}} {\underset{\mathrm{GHP}}\longrightarrow} \left(2\mathcal{T}_{B_r},\mu_{\mathrm{Br}}\right).\end{aligned}$$

As a consequence, this provides the behavior of several statistics of the trees, that first interested combinatorists.

We will not present the original proofs by Aldous [10] and Duquesne [44], but will rather focus on the fact that they may be recovered by using the MB-property. This is the goal of the next two sections, where we will present in a general setting some results on the scaling limits for MB-sequences of trees. As already mentioned, the main idea of the proofs of Aldous [10] and Duquesne [44] is rather based on the study of the so-called contour functions of the trees. We refer to Aldous and Duquesne papers, as well as Le Gall’s survey [71] for details. See also Duquesne and Le Gall [46] and Kortchemski [69, 70] for further related results.

4 Scaling Limits of Markov-Branching Trees

Our goal is to set up an asymptotic criterion on the splitting probabilities (q n ) of an MB-sequence of trees so that this sequence, suitably normalized, converges to a non-trivial continuous limit. We follow here the approach of the paper [59] that found its roots in the previous work [63] were similar results where proved under stronger assumptions. A remark on these previous results is made at the end of this section.

The splitting probability q n corresponds to a “discrete” fragmentation of the integer n into smaller integers. To set up the desired criterion, we first need to introduce a continuous counterpart for these partitions of integers, namely

which is endowed with the distance \(d_{\mathcal {S}^{\downarrow }}(\mathbf {s},\mathbf {s}')=\sup _{i\geq 1}|s_i-s_i^{\prime }|\). Our main hypothesis on (q n ) then reads:

Hypothesis (H)

There exist γ > 0 and ν a non-trivial σ-finite measure on \(\mathcal {S}^{\downarrow }\) satisfying \(\int _{\mathcal {S}^{\downarrow }}(1-s_1)\nu (\mathrm {d} \mathbf {s})<\infty \) and ν(1, 0, …) = 0, such that

for all continuous \(f: \mathcal {S}^{\downarrow } \rightarrow \mathbb {R}\).

We will see in Sect. 5 that most of the examples of splitting probabilities introduced in Sect. 2.3 satisfy this hypothesis. As a first, easy, example, consider the “basic example” introduced there (Example 2): q n ((n)) = 1 − n −α and q n (⌈n∕2⌉, ⌊n∕2⌋) = n −α, α > 0. Then, clearly, (H) is satisfied with

The interpretation of the hypothesis (H) is that macroscopic branchings are rare, in the sense that the macroscopic splitting events n↦n

s, \(\mathbf {s} \in \mathcal {S}^{\downarrow }\) with s

1 < 1 − ε occur with a probability asymptotically proportional to  , for a.e. fixed ε ∈ (0, 1).

, for a.e. fixed ε ∈ (0, 1).

The main result on the scaling limits of MB-trees indexed by the leaves is the following.

Theorem 4.1 ([59])

Let (T n , n ≥ 1) be a MB -sequence indexed by the leaves and assume that its splitting probabilities satisfy ( H ). Then there exists a compact, measured real tree \((\mathcal {T}_{\gamma ,\nu }, \mu _{\gamma ,\nu })\) such that

where μ n is the uniform probability on the leaves of T n .

The goal of this section is to detail the main steps of the proof of this result and to discuss some properties of the limiting measured tree, which belongs to the so-called family of self-similar fragmentation trees (the distribution of such a tree is entirely characterized by the parameters γ and ν). In that aim we will first study how the height of a leaf chosen uniformly at random in T n grows (Sects. 4.1 and 4.2). Then we will review some results on self-similar fragmentation trees (Sect. 4.3). Last we will explain how one can use the scaling limit of the height of a leaf chosen at random to obtain, by induction, the scaling limit of the subtree spanned by k leaves chosen independently, for all k, and then finish the proof of Theorem 4.1 with a tightness criterion (Sect. 4.4).

There is a similar result for MB-sequences indexed by the vertices.

Theorem 4.2 ([59])

Let (T n , n ≥ 1) be a MB -sequence indexed by the vertices and assume that its splitting probabilities satisfy ( H ) for some 0 < γ < 1. Then there exists a compact, measured real tree \((\mathcal {T}_{\gamma ,\nu }, \mu _{\gamma ,\nu })\) such that

where μ n is the uniform probability on the vertices of T n .

Theorem 4.2 is actually a direct corollary of Theorem 4.1, for the following reason. Consider an MB-sequence indexed by the vertices with splitting probabilities (p n ) and for all n, branch on each internal vertex of the tree T n an edge with a leaf. This gives a tree \(\overline T_n\) with n leaves. It is then obvious that \((\overline T_n,n\geq 1)\) is an MB-sequence indexed by the leaves, with splitting probabilities (q n ) defined by

(and q n (λ) = 0 for all other \(\lambda \in \mathcal {P}_n\)). It is moreover easy to see that (q n ) satisfies (H) with parameters (γ, ν), 0 < γ < 1, if and only if (p n ) does. Hence Theorem 4.1 implies Theorem 4.2, since \(\overline T_n\), endowed with the uniform probability, is at distance less than one from (T n , μ n ) for the GHP-distance.

We will present in Sect. 5 several applications of these two theorems. Let us just consider here the “basic example” of Sect. 2.3 (Example 2). We have already noticed that its splitting probabilities satisfy Hypothesis (H), with parameters α and δ (1∕2,1∕2,0,…). Hence in this case, the corresponding sequence of MB-trees T n divided by n α and endowed with the uniform probability measure on its leaves converges for the GHP-topology towards a (α, δ (1∕2,1∕2,0,…))-self-similar fragmentation tree.

Remark

These two statements are also valid when replacing in (H) and in the theorems the power sequence n γ by any regularly varying sequence with index γ > 0. We recall that a sequence (a n ) is said to vary regularly with index γ > 0 if for all c > 0,

We refer to [24] for backgrounds on that topic. For simplicity, in the following we will only works with power sequences, but the reader should have in mind that everything holds similarly for regularly varying sequences.

Convergence in Probability

In [63], scaling limits are established for some MB-sequences that moreover satisfy a property of sampling consistency, namely that for all n, T n is distributed as the tree with n leaves obtained by removing a leaf picked uniformly at random in T n+1, as well as the adjacent edge. This consistency property is demanding and the approach developed in [59] allows to do without it. However we note that if the MB-sequence is strongly sampling consistent, one can actually establish under suitable conditions a convergence in probability of the rescaled trees, which is of course an improvement. By strongly sampling consistent, we mean that versions of the trees can be built on a same probability space so that if \(T_n^{\circ }\) denotes the tree with n leaves obtained by removing an edge-leaf picked uniformly in T n+1, then (T n , T n+1) is distributed as \((T^{\circ }_n,T_{n+1})\). We refer to [63] for details.

4.1 A Markov Chain in the Markov-Branching Sequence of Trees

Consider (T n , n ≥ 1) an MB-sequence of trees indexed by the leaves, with splitting distribution (q n , n ≥ 1). Before studying the scaling limit of the trees in their whole, we start by studying the scaling limit of a typical leaf. For example, in each T n , we mark one of the n leaves uniformly at random and we want to determine how the height of the marked leaf behaves as n →∞. In that aim, let ⋆ n denote this marked leaf and let ⋆ n (k) denote its ancestor at generation k, 0 ≤ k ≤ht(⋆ n ) (so that ⋆ n (0) is the root of T n and ⋆ n (ht(⋆ n )) = ⋆ n ). Let also \(T^{\star }_n(k)\) be the subtree composed of the descendants of ⋆ n (k) in T n , formally,

and \(T^{\star }_n(k):=\emptyset \) if k > ht(⋆ n ). We then set

with the convention that X n (k) = 0 for k > ht(⋆ n ) (Fig. 3).

Proposition 4.3

The process (X n (k), k ≥ 0) is a \(\mathbb {Z}_+\) -valued non-increasing Markov chain starting from X n (0) = n, with transition probabilities

and p(1, 0) = q 1(∅) = 1 − p(1, 1).

Proof

The Markov property is a direct consequence of the Markov branching property. Indeed, given X n (1) = i 1, …, X n (k − 1) = i k−1, the tree \(T^{\star }_n(k-1)\) is distributed as \(T_{i_{k-1}}\) if i k−1 ≥ 1 and is the emptyset otherwise. In particular, when i k−1 = 0, the conditional distribution of X n (k) is the Dirac mass at 0. When i k−1 ≥ 1, we use the fact that ⋆ n is in \(T^{\star }_n(k-1)\), hence, still conditioning on the same event, we have that ⋆ n is uniformly distributed amongst the i k−1 leaves of \(T_{i_{k-1}}\). Otherwise said, given X n (1) = i 1, …, X n (k − 1) = i k−1 with i k−1 ≥ 1, \((T^{\star }_n(k-1), \star _n)\) is distributed as \((T_{i_{k-1}},\star _{i_{k-1}})\) and consequently X n (k) is distributed as \(X_{i_{k-1}}(1)\). Hence the Markov property of the chain (X n (k), k ≥ 0). It remains to compute the transition probabilities:

where Λ n denotes the partition of n corresponding to the distribution of the leaves in the subtrees of T n above the root. Since ⋆ n is chosen uniformly amongst the set of leaves, we clearly have that

□

Hence studying the scaling limit of the height of the marked leaf in the tree T n reduces to studying the scaling limit of the absorption time A n of the Markov chain (X n (k), k ≥ 0) at 0:

(to be precise, this absorption time is equal to the height of the marked leaf + 1). The study of the scaling limit of \(\left ((X_n(k),k \geq 1),A_n\right )\) as n →∞ is the goal of the next section. Before getting in there, let us notice that the Hypothesis (H) on the splitting probabilities (q n , n ≥ 1) of (T n , n ≥ 1), together with (6), implies the following behavior of the transition probabilities (p(n, k), k ≤ n):

for all continuous functions \(g:[0,1] \rightarrow \mathbb {R}\), where the measure μ in the limit is a finite, non-zero measure on [0, 1] defined by

To see this, apply (H) to the continuous function defined by

and f(1, 0, …) = g(1) + g(0).

4.2 Scaling Limits of Non-increasing Markov Chains

As discussed in the previous section, studying the height of a typical leaf in MB-trees amounts to studying the absorption time at 0 of a \(\mathbb {Z}_+\)-valued non-increasing Markov chain. In this section, we study in a general framework the scaling limits of \(\mathbb {Z}_+\)-valued non-increasing Markov chains, under appropriate assumptions on the transition probabilities. At the end of the section we will see how this applies to the height of a typical leaf in an MB-sequence. In the following,

denotes a non-increasing \(\mathbb {Z}_+\)-valued Markov chain starting from n (X n (0) = n), with transition probabilities (p(i, j), 0 ≤ j ≤ i) such that

Hypothesis (H ′)

∃ γ > 0 and μ a non-trivial finite measure on [0, 1] such that

for all continuous functions \(f:[0,1] \rightarrow \mathbb {R}\).

This hypothesis implies that starting from n, macroscopic jumps (i.e. with size proportional to n) are rare, since for a.e. 0 < ε ≤ 1, the probability to do a jump larger than εn is of order c ε n −γ where c ε =∫[0,1−ε](1 − x)−1μ(dx) (note that this may tend to ∞ when ε tends to 0).

Now, let

be the first time at which the chain enters an absorption state (note that A n < ∞ a.s. since the chain is non-increasing and \(\mathbb {Z}_+\)-valued). In the next theorem, \(\mathbb {D}([0,\infty ),[0,\infty ))\) denotes the set of non-negative càdlàg processes, endowed with the Skorokhod topology.

Theorem 4.4 ([58])

Assume (H ′).

-

(i)

Then, in \(\mathbb {D}([0,\infty ),[0,\infty ))\) ,

$$\displaystyle \begin{aligned}\left( \frac{X_n\left(\lfloor n^{\gamma}t \rfloor \right)}{n}, t \geq 0 \right) \overset{\mathrm{(d)}}{\underset{n \rightarrow \infty} \longrightarrow} \left(\exp(-\xi_{\tau(t)}),t \geq 0 \right), \end{aligned}$$where ξ is a subordinator, i.e. a non-decreasing Lévy process, and τ is the time-change (acceleration of time)

$$\displaystyle \begin{aligned}\tau(t):=\inf \left \{u \geq 0 : \int_0^u \exp(-\gamma \xi_r) \mathrm dr \geq t\right\}, t \geq 0.\end{aligned}$$The distribution of ξ is characterized by its Laplace transform \(\mathbb {E}[\exp (-\lambda \xi _t)]=\exp (-t \phi (\lambda ))\) , with

$$\displaystyle \begin{aligned}{\phi(\lambda)=\mu(\{0\})}+\mu(\{1\}) \lambda + \int_{(0,1)}(1-x^{\lambda})\frac{\mu(\mathrm dx)}{1-x}, \ \ \lambda \geq 0. \end{aligned}$$ -

(ii)

Moreover, jointly with the above convergence,

$$\displaystyle \begin{aligned}\frac{A_n}{n^{\gamma}} \overset{\mathrm{(d)}}{\underset{n \rightarrow \infty} \longrightarrow} \int_0^{\infty}\exp(-\gamma \xi_r) \mathrm dr=\inf \left\{t \geq 0: \exp(-\xi_{\tau(t)})=0 \right\}. \end{aligned}$$

Comments

For background on Lévy processes, we refer to [12]. Let us simply recall here that the law of a subordinator is characterized by three parameters: a measure on (0, ∞) that codes its jumps (which here is the push-forward of  by the application \(x \mapsto -\ln (x)\)), a linear drift (here μ({1})) and a killing rate at which the process jumps to + ∞ (here μ({0})).

by the application \(x \mapsto -\ln (x)\)), a linear drift (here μ({1})) and a killing rate at which the process jumps to + ∞ (here μ({0})).

Main Ideas of the Proof of Theorem 4.4

-

(i)

Let Y n (t) := n −1X n (⌊n γt⌋), for \(t\geq 0,n\in \mathbb {N}\). First, using Aldous’ tightness criterion [23, Theorem 16.10] and (H ′), one can check that the sequence (Y n , n ≥ 1) is tight. It is then sufficient to prove that every possible limit in distribution of subsequences of (Y n ) are distributed as \(\exp (-\xi _{\tau })\). Let Y ′ be such a limit and (n k , k ≥ 1) a sequence such that \(Y_{n_k}\) converges to Y ′ in distribution. In the limit, we actually prefer to deal with ξ than with ξ τ , and for this reason we start by changing time in Y n by setting

$$\displaystyle \begin{aligned}\tau_{Y_n}(t):=\inf \left\{u \geq 0:\int_0^u Y_n^{-\gamma}(r) \mathrm dr>t \right\} \quad \text{and} \,\, Z_n(t):=Y_n\left(\tau_{Y_n}(t)\right), t \geq 0. \end{aligned}$$One can then easily check that \((Z_{n_k})\) converges in distribution to Z′ where \(Z' =Y'\circ \tau _{Y^{\prime }}\), with \(\tau _{Y^{\prime }}(t):=\inf \big \{u \geq 0:\int _0^u (Y'(r))^{-\gamma } \mathrm dr>t \big \}\). It is also easy to reverse the time-change and get that

$$\displaystyle \begin{aligned}Y'(t)=Z'\big(\tau^{-1}_{Y^{\prime}}(t)\big) \ =Z'\left(\inf \left\{u \geq 0:\int_0^u Z^{\prime\gamma}(r) \mathrm dr>t \right\}\right), \quad t \geq 0.\end{aligned}$$With this last equality, we see that it just remains to prove that Z′ is distributed as \(\exp (-\xi )\). This can be done in three steps:

-

(a)

Observe the following (easy!) fact: if P is the transition function of a Markov chain M with countable state space \(\subset \mathbb {R}\), then for any positive function f such that f −1({0}) is absorbing,

$$\displaystyle \begin{aligned}f(M(k))\prod_{i=0}^{k-1}\frac{f(M(i))}{Pf(M(i))}, \quad k\geq 0 \end{aligned}$$is a martingale. As a consequence: for all λ ≥ 0 and n ≥ 1, if we let \(G_n(\lambda ):=\mathbb {E}\left [(X_n(1)/n)^{\lambda }\right ]\), then,

is a martingale.

-

(b)

Under (H ′), \(1-G_n(\lambda ) \underset {n \rightarrow \infty }{\sim } n^{-\gamma } \phi (\lambda )\). Together with the convergence in distribution of \((Z_{n_k})\) to Z′ and the definition of \(M^{(\lambda )}_n\), this leads to the convergence (this is the most technical part)

$$\displaystyle \begin{aligned}M^{(\lambda)}_{n_k} \overset{\mathrm{(d)}}{\underset{k \rightarrow \infty} \longrightarrow} (Z')^{\lambda} \exp(\phi(\lambda) \cdot),\end{aligned}$$and the martingale property passes to the limit.

-

(c)

Hence \((Z')^{\lambda }\exp (\phi (\lambda ) \cdot )\) is a martingale for all λ ≥ 0. Using Laplace transforms, it is then easy to see that this implies in turn that \(-\ln Z'\) is a non-decreasing process with independent and stationary increments (hence a subordinator), with Laplace exponent ϕ.

Hence \(Z'\overset {\mathrm {(d)}}=\exp (-\xi )\).

-

(a)

-

(ii)

We do not detail this part and refer to [58, Section 4.3]. Let us simply point out that it is not a direct consequence of the convergence of (Y n ) to \(\exp (-\xi _{\tau })\) since convergence of functions in \(\mathbb {D}([0,\infty ),[0,\infty ))\) does not lead, in general, to the convergence of their absorption times (when they exist). □

This result leads to the following corollary.

Corollary 4.5

Let (T n , n ≥ 1) be a MB -sequence indexed by the leaves, with splitting probabilities satisfying ( H ) with parameters (γ, ν). For each n, let ⋆ n be a leaf chosen uniformly amongst the n leaves of T n . Then,

where ξ is a subordinator with Laplace exponent \(\phi (\lambda )\,{=}\int _{\mathcal {S}^{\downarrow }} \sum _{i \geq 1}\left (1-s_i^{\lambda }\right )s_i \nu (\mathrm {d} \mathbf {s}), \lambda \geq 0\).

Proof

As seen at the end of the previous section, under (H) the transition probabilities of the Markov chain (5) satisfy assumption (H ′) with parameters γ and μ, with μ defined by (8). The conclusion follows with Theorem 4.4 (ii). □

Further Reading

Apart from applications to Markov-Branching trees, Theorem 4.4 can be used to describe the scaling limits of various stochastic processes, e.g. random walks with a barrier or the number of collisions in Λ-coalescent processes, see [58]. Recently, Bertoin and Kortchemski [18] set up results similar to Theorem 4.4 for non-monotone Markov chains and develop several applications, to random walks conditioned to stay positive, to the number of particles in some coagulation-fragmentations processes, to random planar maps (see [20] for this last point). Also in [61] similar convergences for bivariate Markov chains towards time-changed Markov additive processes are studied. This will have applications to dynamical models of tree growth in a broader context than the one presented in Sect. 5.3, and more generally to multi-type MB-trees.

4.3 Self-Similar Fragmentation Trees

Self-similar fragmentation trees are random compact measured real trees that describe the genealogical structure of self-similar fragmentation processes with a negative index. It turns out that this set of trees is closely related to the set of trees arising as scaling limits of MB-trees. We start by introducing the self-similar fragmentation processes, following Bertoin [14], and then turn to the description of their genealogical trees, which were first introduced in [57] and then in [91] in a broader context.

4.3.1 Self-Similar Fragmentation Processes

Fragmentation processes are continuous-time processes that describe the evolution of an object that splits repeatedly and randomly as time passes. In the models we are interested in, the fragments are characterized by their mass alone, other characteristics, such as their shape, do not come into account. Many researchers have been working on such models. From a historical perspective, it seems that Kolmogorov [68] was the first in 1941. Since the early 2000s, there has been a full treatment of fragmentation processes satisfying a self-similarity property. We refer to Bertoin’s book [14] for an overview of work in this area and a deepening of the results presented here.

We will work on the space of masses

which contains the set \(\mathcal {S}^{\downarrow }\), and which is equipped with the same metric \(d_{\mathcal {S}^{\downarrow }}\).

Definition 4.6

Let \(\alpha \in \mathbb {R}\). An α-self-similar fragmentation process is an \(\mathcal {S}^{\downarrow }_{-}\)-valued Markov process (F(t), t ≥ 0) which is continuous in probability and such that, for all t 0 ≥ 0, given that F(t 0) = (s 1, s 2, …), the process (F(t 0 + t), t ≥ 0) is distributed as the process G obtained by considering a sequence (F (i), i ≥ 1) of i.i.d. copies of F and then defining G(t) to be the decreasing rearrangement of the sequences \(s_iF^{(i)}(s_i^{\alpha }t),i\geq 1\), for all t ≥ 0.

In the following, we will always consider processes starting from a unique mass equal to 1, i.e. F(0) = (1, 0, …). At time t, the sequence F(t) should be understood as the decreasing sequence of the masses of fragments present at that time.

It turns out that such processes indeed exist and that their distributions are characterized by three parameters: the index of self-similarity \(\alpha \in \mathbb {R}\), an erosion coefficient c ≥ 0 that codes a continuous melt of the fragment (when c = 0 there is no erosion) and a dislocation measure ν, which is a measure ν on \(\mathcal {S}^{\downarrow }_{-}\) such that \(\int _{\mathcal {S}^{\downarrow }_{-}}(1-s_1)\nu (\mathrm {d} \mathbf {s})<\infty \). The role of the parameters α and ν can be specified as follows when c = 0 and ν is finite: then, each fragment with mass m waits a random time with exponential distribution with parameter \(\nu (\mathcal {S}^{\downarrow }_{-})\) and then splits in fragments with masses m S, where S is distributed according to \(\nu /\nu (\mathcal {S}^{\downarrow }_{-})\), independently of the splitting time. When ν is infinite, the fragments split immediately, see [14, Chapter 3] for further details.

The index α has an enormous influence on the behavior of the process: when α = 0, all fragments split at the same rate, whereas when α > 0 fragments with small masses split slower and when α < 0 fragments with small masses split faster. In this last case the fragments split so quickly that the whole initial object is reduced to “dust” in finite time, almost surely, i.e. \(\inf \{t\geq 0:F(t)=(0,\ldots )\}<\infty \) a.s.

The Tagged Fragment Process

We turn to a connection with the results seen in the previous section. Pick a point uniformly at random in the initial object (this object can be seen as an interval of length 1, for example), independently of the evolution of the process and let F ∗(t) be the mass of the fragment containing this marked point at time t. The process F ∗(t) is non-increasing and more precisely,

Theorem 4.7 (Bertoin [14], Theorem 3.2 and Corollary 3.1)

The process F ∗ can be written as

where ξ is a subordinator with Laplace exponent

and τ is a time-change depending on the parameter α, \(\tau (t)=\inf \left \{u\geq 0:\int _0^u \right .\) \(\left .\exp (\alpha \xi _t)\mathrm dr>t\right \}\).

4.3.2 Self-Similar Fragmentation Trees

It was shown in [57] that to every self-similar fragmentation process with a negative index α = −γ < 0, no erosion (c = 0) and a dislocation measure ν satisfying ν(∑i≥1s i < 1) = 0 (we say that ν is conservative), there is an associated compact rooted measured tree that describes its genealogy. We denote such a tree by \((\mathcal {T}_{\gamma ,\nu }, \mu _{\gamma ,\nu })\) and precise that the measure μ γ,ν is fully supported by the set of leaves of \(\mathcal {T}_{\gamma ,\nu }\) and non-atomic. In [91], Stephenson more generally constructed and studied compact rooted measured trees that describe the genealogy of any self-similar fragmentation process with a negative index. However in this survey, we restrict ourselves to the family of trees \((\mathcal {T}_{\gamma ,\nu }, \mu _{\gamma ,\nu })\), with γ > 0 and ν conservative.

The connection between a tree \((\mathcal {T}_{\gamma ,\nu }, \mu _{\gamma ,\nu })\) and the fragmentation process it is related to can be summarized as follows: for all t ≥ 0, consider the connected components of \(\{v \in \mathcal {T}_{\gamma ,\nu }: \mathrm {ht}(v)>t \}\), the set of points in \(\mathcal {T}_{\gamma ,\nu }\) that have a height strictly larger than t, and let F(t) denote the decreasing rearrangement of the μ γ,ν-masses of these components. Then F is a fragmentation process, with index of self-similarly − γ, dislocation measure ν and no erosion. Besides, we note that \(\mathcal {T}_{\gamma ,\nu }\) possesses a fractal property, in the sense that if we fix a t ≥ 0 (deterministic) and consider a point x at height t, then any subtree of \(\mathcal {T}_{\gamma ,\nu }\) descending from this point x (i.e. any connected component of \(\{v\in \mathcal {T}_{\gamma ,\nu }: x \in [[\rho ,v]] \}\)), having, say, a μ γ,ν-mass m, is distributed as \(m^{\gamma }\mathcal {T}_{\gamma ,\nu }\).

First Examples

The Brownian tree and the α-stable trees that arise as scaling limits of Galton–Watson trees all belong to the family of self-similar fragmentation trees. More precisely,

-

Bertoin [13] notices that the Brownian tree (\(\mathcal {T}_{\mathrm {Br}}\), μ Br) is a self-similar fragmentation tree and calculates its characteristics: γ = 1∕2 and ν Br(s 1 + s 2 < 1) = 0 and

$$\displaystyle \begin{aligned}\nu_{\mathrm{Br}}(s_1 \in \mathrm dx)=\frac{\sqrt{2}}{\sqrt{\pi} x^{3/2}(1-x)^{3/2}}, \quad 1/2<x<1.\end{aligned}$$The fact that ν Br(s 1 + s 2 < 1) = 0 corresponds to the fact the tree is binary: every branch point has two descendants trees, and in the corresponding fragmentation, every splitting events gives two fragments.

-

Miermont [75] proves that each stable tree \(\mathcal {T}_{\alpha }\) is self-similar and calculates its characteristics when α ∈ (1, 2): γ = 1 − 1∕α and

$$\displaystyle \begin{aligned}\int_{\mathcal{S}^{\downarrow}}f(\mathbf{s})\nu_{\alpha}(\mathrm{d} \mathbf{s}) = C_{\alpha} \mathbb{E}\left[T_1 f\left(\frac{\Xi_i}{T_1},i \geq 1\right)\right], \end{aligned}$$where