Abstract

In spite of the mathematical sophistication of classical gradient-based algorithms, certain inhibiting difficulties remain when these algorithms are applied to real-world problems. This is particularly true in the field of engineering, where unique difficulties occur that have prevented the general application of gradient-based mathematical optimization techniques to design problems. Difficulties include high dimensional problems, computational cost of function evaluations, noise, discontinuities, multiple local minima and undefined domains in the design space. All the above difficulties have been addressed in research done at the University of Pretoria over the past twenty years. This research has led to, amongst others, the development of the new optimization algorithms and methods discussed in this chapter.

Access provided by CONRICYT-eBooks. Download chapter PDF

Similar content being viewed by others

Keywords

- Dynamic Search Trajectories

- Snyman

- Penalty Function Formulation

- Sequential Unconstrained Minimization Techniques (SUMT)

- Conjugate Gradient Search Direction

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

1.1 Why new algorithms?

In spite of the mathematical sophistication of classical gradient-based algorithms, certain inhibiting difficulties remain when these algorithms are applied to real-world problems. This is particularly true in the field of engineering, where unique difficulties occur that have prevented the general application of gradient-based mathematical optimization techniques to design problems.

Optimization difficulties that arise are:

-

(i)

the functions are often very expensive to evaluate, requiring, for example, the time-consuming finite element analysis of a structure, the simulation of the dynamics of a multi-body system, or a computational fluid dynamics (CFD) simulation,

-

(ii)

the existence of noise, numerical or experimental, in the functions,

-

(iii)

the presence of discontinuities in the functions,

-

(iv)

multiple local minima, requiring global optimization techniques,

-

(v)

the existence of regions in the design space where the functions are not defined, and

-

(vi)

the occurrence of an extremely large number of design variables, disqualifying, for example, the SQP method.

1.2 Research at the University of Pretoria

All the above difficulties have been addressed in research done at the University of Pretoria over the past twenty years. This research has led to, amongst others, the development of the new optimization algorithms and methods listed in the subsections below.

1.2.1 Unconstrained optimization

-

(i)

The leap-frog dynamic trajectory method: LFOP (Snyman 1982, 1983),

-

(ii)

a conjugate-gradient method with Euler-trapezium steps in which a novel gradient-only line search method is used: ETOP (Snyman 1985), and

-

(iii)

a steepest-descent method applied to successive spherical quadratic approximations: SQSD (Snyman and Hay 2001).

1.2.2 Direct constrained optimization

-

(i)

The leap-frog method for constrained optimization, LFOPC (Snyman 2000), and

-

(ii)

the conjugate-gradient method with Euler-trapezium steps and gradient-only line searches, applied to penalty function formulations of constrained problems: ETOPC (Snyman 2005).

1.2.3 Approximation methods

-

(i)

A feasible descent cone method applied to successive spherical quadratic sub-problems: FDC-SAM (Stander and Snyman 1993; Snyman and Stander 1994, 1996; De Klerk and Snyman 1994), and

-

(ii)

the leap-frog method (LFOPC) applied to successive spherical quadratic sub-problems: Dynamic-Q (Snyman et al. 1994; Snyman and Hay 2002).

1.2.4 Methods for global unconstrained optimization

-

(i)

A multi-start global minimization algorithm with dynamic search trajectories: SF-GLOB (Snyman and Fatti 1987), and

-

(ii)

a modified bouncing ball trajectory method for global optimization: MBB (Groenwold and Snyman 2002).

All of the above methods developed at the University of Pretoria are gradient-based, and have the common and unique property, for gradient-based methods, that no explicit objective function line searches are required.

In this chapter the LFOP/C unconstrained and constrained algorithms are discussed in detail. This is followed by the presentation of the SQSD method, which serves as an introduction to the Dynamic-Q approximation method. Next the ETOP/C algorithms are introduced, with special reference to their ability to deal with the presence of severe noise in the objective function, through the use of a gradient-only line search technique. Finally the SF-GLOB and MBB stochastic global optimization algorithms, which use dynamic search trajectories, are presented and discussed.

2 The dynamic trajectory optimization method

The dynamic trajectory method for unconstrained minimization (Snyman 1982, 1983) is also known as the “leap-frog” method. It has been modified (Snyman 2000) to handle constraints via a penalty function formulation of the constrained problem. The outstanding characteristics of the basic method are:

-

(i)

it uses only function gradient information \({\varvec{\nabla }} f\),

-

(ii)

no explicit line searches are performed,

-

(iii)

it is extremely robust, handling steep valleys, and discontinuities and noise in the objective function and its gradient vector, with relative ease,

-

(iv)

the algorithm seeks relatively low local minima and can therefore be used as the basic component in a methodology for global optimization, and

-

(v)

when applied to smooth and near quadratic functions, it is not as efficient as classical methods.

2.1 Basic dynamic model

Assume a particle of unit mass in a n-dimensional conservative force field with potential energy at \(\mathbf{x}\) given by \(f(\mathbf{x})\), then at \(\mathbf{x}\) the force (acceleration \(\mathbf{a}\)) on the particle is given by:

from which it follows that for the motion of the particle over the time interval [0, t]:

or

where T(t) represents the kinetic energy of the particle at time t. Thus it follows that

i.e. conservation of energy along the trajectory. Note that along the particle trajectory the change in the function f, \(\Delta f=-\Delta T\), and therefore, as long as T increases, f decreases. This is the underlying principle on which the dynamic leap-frog optimization algorithm is based.

2.2 Basic algorithm for unconstrained problems (LFOP)

The basic elements of the LFOP method are as listed in Algorithm 6.1. A detailed flow chart of the basic LFOP algorithm for unconstrained problems is given in Figure 6.1.

A typical interfering strategy is to continue the trajectory when \(\Vert \mathbf{v}^{k+1}\Vert \ge \Vert \mathbf{v}^k\Vert \), otherwise set \(\mathbf{v}^k=\frac{1}{4}(\mathbf{v}^{k+1}+\mathbf{v}^k)\), \(\mathbf{x}^{k+1}=\frac{1}{2}(\mathbf{x}^{k+1}+\mathbf{x}^k)\) to compute the new \(\mathbf{v}^{k+1}\) and then continue.

In addition, Snyman (1982, 1983) introduced additional heuristics to determine a suitable initial time step \(\Delta t\), to allow for the magnification and reduction of \(\Delta t\), and to control the magnitude of the step \({\varvec{\Delta }}{} \mathbf{x} =\mathbf{x}^{k+1}-\mathbf{x}^k\) by setting a step size limit \(\delta \) along the computed trajectory. The recommended magnitude of \(\delta \) is \(\delta \approx \frac{1}{10}\sqrt{n}\) \(\times \) (maximum variable range).

2.3 Modification for constrained problems (LFOPC)

The code LFOPC (Snyman 2000) applies the unconstrained optimization algorithm LFOP to a penalty function formulation of the constrained problem (see Section 3.1) in 3 phases (see Algorithm 6.2).

For engineering problems (with convergence tolerance \(\varepsilon _x=10^{-4}\)) the choice \(\rho _0=10\) and \(\rho _1=100\) is recommended. For extreme accuracy (\(\varepsilon _x=10^{-8}\)), use \(\rho _0=100\) and \(\rho _1=10^4\).

2.3.1 Example

\({{\mathrm{Minimize\,}}}f(\mathbf{x})=x_1^2+2x_2^2\) such that \(g(\mathbf{x})=-x_1-x_2+1\le 0\) with starting point \(\mathbf{x}^0=[3,1]^T\) by means of the LFOPC algorithm. Use \(\rho _0=1.0\) and \(\rho _1=10.0\). The computed solution is depicted in Figure 6.2.

3 The spherical quadratic steepest descent method

3.1 Introduction

In this section an extremely simple gradient only algorithm (Snyman and Hay 2001) is proposed that, in terms of storage requirement (only 3 n-vectors need be stored) and computational efficiency, may be considered as an alternative to the conjugate gradient methods. The method effectively applies the steepest descent (SD) method to successive simple spherical quadratic approximations of the objective function in such a way that no explicit line searches are performed in solving the minimization problem. It is shown that the method is convergent when applied to general positive-definite quadratic functions. The method is tested by its application to some standard and other test problems. On the evidence presented the new method, called the SQSD algorithm, appears to be reliable and stable and performs very well when applied to extremely ill-conditioned problems.

3.2 Classical steepest descent method revisited

Consider the following unconstrained optimization problem:

where f is a scalar objective function defined on \(\mathbb {R}^n\), the n-dimensional real Euclidean space, and \(\mathbf {x}\) is a vector of n real components \(x_1,x_2,\ldots , x_n\). It is assumed that f is differentiable so that the gradient vector \(\varvec{\nabla } f(\mathbf {x})\) exists everywhere in \(\mathbb {R}^n\). The solution is denoted by \(\mathbf {x}^*\).

The steepest descent (SD) algorithm for solving problem (6.6) may then be stated as follows:

It can be shown that if the steepest descent method is applied to a general positive-definite quadratic function of the form \(f(\mathbf {x})=\frac{1}{2}\mathbf {x}^T\mathbf {A}\mathbf {x}+\mathbf {b}^T\mathbf {x}+c\), then the sequence \(\left\{ f(\mathbf {x}^k)\right\} \rightarrow f(\mathbf {x}^*)\). Depending, however, on the starting point \(\mathbf {x}^0\) and the condition number of \(\mathbf {A}\) associated with the quadratic form, the rate of convergence may become extremely slow.

It is proposed here that for general functions \(f(\mathbf {x})\), better overall performance of the steepest descent method may be obtained by applying it successively to a sequence of very simple quadratic approximations of \(f(\mathbf {x})\). The proposed modification, named here the spherical quadratic steepest descent (SQSD) method, remains a first order method since only gradient information is used with no attempt being made to construct the Hessian of the function. The storage requirements therefore remain minimal, making it ideally suitable for problems with a large number of variables. Another significant characteristic is that the method requires no explicit line searches.

3.3 The SQSD algorithm

In the SQSD approach, given an initial approximate solution \(\mathbf {x}^0\), a sequence of spherically quadratic optimization subproblems \(P[k], k=0,1,2,\ldots \) is solved, generating a sequence of approximate solutions \(\mathbf {x}^{k+1}\). More specifically, at each point \(\mathbf {x}^k\) the constructed approximate subproblem is P[k]:

where the approximate objective function \(\tilde{f}_k(\mathbf {x})\) is given by

and \(\mathbf {C}_k=\mathrm {diag}(c_k,c_k,\ldots , c_k)=c_k\mathbf {I}\). The solution to this problem will be denoted by \(\mathbf {x}^{*k}\), and for the construction of the next subproblem \(P[k+1],\ \mathbf {x}^{k+1}:=\mathbf {x}^{*k}\).

For the first subproblem the curvature \(c_0\) is set to \(c_0:=\left\| \varvec{\nabla } f(\mathbf {x}^0)\right\| /\rho \), where \(\rho >0\) is some arbitrarily specified step limit. Thereafter, for \(k\ge 1\), \(c_k\) is chosen such that \(\tilde{f}(\mathbf {x}^k)\) interpolates \(f(\mathbf {x})\) at both \(\mathbf {x}^k\) and \(\mathbf {x}^{k-1}\). The latter conditions imply that for \(k=1,2,\ldots \)

Clearly the identical curvature entries along the diagonal of the Hessian, mean that the level surfaces of the quadratic approximation \(\tilde{f}_k(\mathbf {x})\), are indeed concentric hyper-spheres. The approximate subproblems P[k] are therefore aptly referred to as spherical quadratic approximations.

It is now proposed that for a large class of problems the sequence \(\mathbf {x}^0,\mathbf {x}^1,\ldots \) will tend to the solution of the original problem (6.6), i.e.

For subproblems P[k] that are convex, i.e. \(c_k>0\), the solution occurs where \(\varvec{\nabla }\tilde{f}_k(\mathbf {x})=\mathbf {0}\), that is where

The solution to the subproblem, \(\mathbf {x}^{*k}\) is therefore given by

Clearly the solution to the spherical quadratic subproblem lies along a line through \(\mathbf {x}^k\) in the direction of steepest descent. The SQSD method may formally be stated in the form given in Algorithm 6.4.

Step size control is introduced in Algorithm 6.4 through the specification of a step limit \(\rho \) and the test for \(\left\| \mathbf {x}^k-\mathbf {x}^{k-1}\right\| >\rho \) in Step 2 of the main procedure. Note that the choice of \(c_0\) ensures that for P[0] the solution \(\mathbf {x}^1\) lies at a distance \(\rho \) from \(\mathbf {x}^0\) in the direction of steepest descent. Also the test in Step 3 that \(c_k<0\), and setting \(c_k:=10^{-60}\) where this condition is true ensures that the approximate objective function is always positive-definite.

3.4 Convergence of the SQSD method

An analysis of the convergence rate of the SQSD method, when applied to a general positive-definite quadratic function, affords insight into the convergence behavior of the method when applied to more general functions. This is so because for a large class of continuously differentiable functions, the behavior close to local minima is quadratic. For quadratic functions the following theorem may be proved.

3.4.1 Theorem

The SQSD algorithm (without step size control) is convergent when applied to the general quadratic function of the form \(f(\mathbf {x})=\frac{1}{2}\mathbf {x}^T\mathbf {A}\mathbf {x}+\mathbf {b}^T\mathbf {x}\), where \(\mathbf {A}\) is a \(n\times n\) positive-definite matrix and \(\mathbf {b}\in \mathbb {R}^n\).

Proof. Begin by considering the bivariate quadratic function, \(f(\mathbf {x})=x_1^2+\gamma x_2^2,\ \gamma \ge 1\) and with \(\mathbf {x}^0=[\alpha ,\beta ]^T\). Assume \(c_0>0\) given, and for convenience in what follows set \(c_0=1/\delta ,\delta >0\). Also employ the notation \(f_k=f(\mathbf {x}^k)\).

Application of the first step of the SQSD algorithm yields

and it follows that

and

For the next iteration the curvature is given by

Utilizing the information contained in (6.13)–(6.15), the various entries in expression (6.16) are known, and after substitution \(c_1\) simplifies to

In the next iteration, Step 1 gives

And after the necessary substitutions for \(\mathbf {x}^1\), \(\varvec{\nabla } f_1\) and \(c_1\), given by (6.13), (6.15) and (6.17) respectively, (6.18) reduces to

where

and

Clearly if \(\gamma =1\), then \(\mu _1=0\) and \(\omega _1=0\). Thus by (6.19) \(\mathbf {x}^2=\mathbf {0}\) and convergence to the solution is achieved within the second iteration.

Now for \(\gamma >1\), and for any choice of \(\alpha \) and \(\beta \), it follows from (6.20) that

which implies from (6.19) that for the first component of \(\mathbf {x}^2\):

or introducing \(\alpha \) notation (with \(\alpha _0=\alpha \)), that

{Note: because \(c_0=1/\delta >0\) is chosen arbitrarily, it cannot be said that \(|\alpha _1|<|\alpha _0|\). However \(\alpha _1\) is finite.}

The above argument, culminating in result (6.24), is for the two iterations \(\mathbf {x}^0\rightarrow \mathbf {x}^1\rightarrow \mathbf {x}^2\). Repeating the argument for the sequence of overlapping pairs of iterations \(\mathbf {x}^1\rightarrow \mathbf {x}^2\rightarrow \mathbf {x}^3;\ \mathbf {x}^2\rightarrow \mathbf {x}^3\rightarrow \mathbf {x}^4;\ldots \), it follows similarly that \(|\alpha _3|=|\mu _2\alpha _2|<|\alpha _2|;\ |\alpha _4|=|\mu _3\alpha _3|<|\alpha _3|;\ldots \), since \(0\le \mu _2< 1;\ 0\le \mu _3 < 1;\ldots \), where the value of \(\mu _k\) is determined by (corresponding to equation (6.20) for \(\mu _1\)):

Thus in general

and

For large positive integer m it follows that

and clearly for \(\gamma >0\), because of (6.26)

Now for the second component of \(\mathbf {x}^{2}\) in (6.19), the expression for \(\omega _1\), given by (6.21), may be simplified to

Also for the second component:

or introducing \(\beta \) notation

The above argument is for \(\mathbf {x}^0\rightarrow \mathbf {x}^1\rightarrow \mathbf {x}^2\) and again, repeating it for the sequence of overlapping pairs of iterations, it follows more generally for \(k=1,2,\ldots \), that

where \(\omega _k\) is given by

Since by (6.29), \(|\alpha _m|\rightarrow 0 \), it follows that if \(|\beta _m|\rightarrow 0\) as \(m\rightarrow \infty \), the theorem is proved for the bivariate case. Make the assumption that \(|\beta _m|\) does not tend to zero, then there exists a finite positive number \(\varepsilon \) such that

for all k. This allows the following argument:

Clearly since by (6.29) \(|\alpha _m|\rightarrow 0 \) as \(m\rightarrow \infty \), (6.36) implies that also \(|\omega _m|\rightarrow 0\). This result taken together with (6.33) means that \(|\beta _m|\rightarrow 0\) which contradicts the assumption above. With this result the theorem is proved for the bivariate case.

Although the algebra becomes more complicated, the above argument can clearly be extended to prove convergence for the multivariate case, where

Finally since the general quadratic function

may be transformed to the form (6.37), convergence of the SQSD method is also ensured in the general case.\(\Box \)

It is important to note that, the above analysis does not prove that \(\Vert \mathbf {x}^k - \mathbf {x}^*\Vert \) is monotonically decreasing with k, neither does it necessarily follow that monotonic descent in the corresponding objective function values \(f(\mathbf {x}^k)\), is guaranteed. Indeed, extensive numerical experimentation with quadratic functions show that, although the SQSD trajectory rapidly approaches the minimum, relatively large spike increases in \(f(\mathbf {x}^k)\) may occur after which the trajectory quickly recovers on its path to \(\mathbf {x}^*\). This happens especially if the function is highly elliptical (poorly scaled).

3.5 Numerical results and conclusion

The SQSD method is now demonstrated by its application to some test problems. For comparison purposes the results are also given for the standard SD method and both the Fletcher-Reeves (FR) and Polak-Ribiere (PR) conjugate gradient methods. The latter two methods are implemented using the CG+ Fortran conjugate gradient program of Gilbert and Nocedal (1992). The CG+ implementation uses the line search routine of Moré and Thuente (1994). The function and gradient values are evaluated together in a single subroutine. The SD method is applied using CG+ with the search direction modified to the steepest descent direction. The Fortran programs were run on a 266 MHz Pentium 2 computer using double precision computations.

The standard (refs. Rao 1996; Snyman 1985; Himmelblau 1972; Manevich 1999) and other test problems used are listed in Section 6.3.6 and the results are given in Tables 6.1 and 6.2. The convergence tolerances applied throughout are \(\varepsilon _g=10^{-5}\) and \(\varepsilon _x=10^{-8}\), except for the extended homogeneous quadratic function with \(n=50000\) (Problem 12) and the extremely ill-conditioned Manevich functions (Problems 14). For these problems the extreme tolerances \(\varepsilon _g\cong 0(=10^{-75})\) and \(\varepsilon _x=10^{-12}\), are prescribed in an effort to ensure very high accuracy in the approximation \(\mathbf {x}^c\) to \(\mathbf {x}^*\). For each method the number of function-cum-gradient-vector evaluations (\(N^{fg}\)) are given. For the SQSD method the number of iterations is the same as \(N^{fg}\). For the other methods the number of iterations (\(N^{it}\)) required for convergence, and which corresponds to the number of line searches executed, are also listed separately. In addition the relative error (\(E^r\)) in optimum function value, defined by

where \(\mathbf {x}^c\) is the approximation to \(\mathbf {x}^*\) at convergence, is also listed. For the Manevich problems, with \(n\ge 40\), for which the other (SD, FR and PR) algorithms fail to converge after the indicated number of steps, the infinite norm of the error in the solution vector (\(I^{\infty }\)), defined by \(\left\| \mathbf {x}^*-\mathbf {x}^c\right\| _{\infty }\) is also tabulated. These entries, given instead of the relative error in function value (\(E^r\)), are made in italics.

Inspection of the results shows that the SQSD algorithm is consistently competitive with the other three methods and performs notably well for large problems. Of all the methods the SQSD method appears to be the most reliable one in solving each of the posed problems. As expected, because line searches are eliminated and consecutive search directions are no longer forced to be orthogonal, the new method completely overshadows the standard SD method. What is much more gratifying, however, is the performance of the SQSD method relative to the well-established and well-researched conjugate gradient algorithms. Overall the new method appears to be very competitive with respect to computational efficiency and, on the evidence presented, remarkably stable.

In the implementation of the SQSD method to highly non-quadratic and non-convex functions, some care must however be taken in ensuring that the chosen step limit parameter \(\rho \), is not too large. A too large value may result in excessive oscillations occurring before convergence. Therefore a relatively small value, \(\rho =0.3\), was used for the Rosenbrock problem with \(n=2\) (Problem 4). For the extended Rosenbrock functions of larger dimensionality (Problems 13), correspondingly larger step limit values (\(\rho =\sqrt{n}/10\)) were used with success.

For quadratic functions, as is evident from the convergence analysis of Section 6.3.4, no step limit is required for convergence. This is borne out in practice by the results for the extended homogeneous quadratic functions (Problems 12), where the very large value \(\rho =10^4\) was used throughout, with the even more extreme value of \(\rho =10^{10}\) for \(n=50000\). The specification of a step limit in the quadratic case also appears to have little effect on the convergence rate, as can be seen from the results for the ill-conditioned Manevich functions (Problems 14), that are given for both \(\rho =1\) and \(\rho =10\). Here convergence is obtained to at least 11 significant figures accuracy (\(\left\| \mathbf {x}^*-\mathbf {x}^c\right\| _{\infty }<10^{-11}\)) for each of the variables, despite the occurrence of extreme condition numbers, such as \(10^{60}\) for the Manevich problem with \(n=200\).

The successful application of the new method to the ill-conditioned Manevich problems, and the analysis of the convergence behavior for quadratic functions, indicate that the SQSD algorithm represents a powerful approach to solving quadratic problems with large numbers of variables. In particular, the SQSD method can be seen as an unconditionally convergent, stable and economic alternative iterative method for solving large systems of linear equations, ill-conditioned or not, through the minimization of the sum of the squares of the residuals of the equations.

3.6 Test functions used for SQSD

Minimize \(f(\mathbf {x})\):

-

1.

\(f(\mathbf {x})=x_1^2+2x_2^2+3x_3^2-2x_1-4x_2-6x_3+6,\ \mathbf {x}^0=[3,3,3]^T,\ \mathbf {x}^*=[1,1,1]^T,\ f(\mathbf {x}^*)=0.0.\)

-

2.

\(f(\mathbf {x})=x_1^4-2x_1^2x_2+x_1^2+x_2^2-2x_1+1,\ \mathbf {x}^0=[3,3]^T,\ \mathbf {x}^*=[1,1]^T,\ f(\mathbf {x}^*)=0.0.\)

-

3.

\(f(\mathbf {x})=x_1^4-8x_1^3+25x_1^2+4x_2^2-4x_1x_2-32x_1+16,\ \mathbf {x}^0=[3,3]^T,\ \mathbf {x}^*=[2,1]^T,\ f(\mathbf {x}^*)=0.0.\)

-

4.

\(f(\mathbf {x})=100(x_2-x_1^2)^2+(1-x_1)^2,\ \mathbf {x}^0=[-1.2,1]^T,\ \mathbf {x}^*=[1,1]^T,\ f(\mathbf {x}^*)=0.0\) (Rosenbrock’s parabolic valley, Rao 1996).

-

5.

\(f(\mathbf {x})=x_1^4+x_1^3-x_1+x_2^4-x_2^2+x_2+x_3^2-x_3+x_1x_2x_3,\) (Zlobec’s function, Snyman 1985):

-

(a)

\(\mathbf {x}^0=[1,-1,1]^T\) and

-

(b)

\(\mathbf {x}^0=[0,0,0]^T,\ \mathbf {x}^*=[0.57085597,-0.93955591,0.76817555]^T,\) \(f(\mathbf {x}^*)=-1.91177218907\).

-

(a)

-

6.

\(f(\mathbf {x})=(x_1+10x_2)^2+5(x_3-x_4)^2+(x_2-2x_3)^4+10(x_1-x_4)^4,\ \mathbf {x}^0=[3,-1,0,1]^T,\ \mathbf {x}^*=[0,0,0,0]^T,\ f(\mathbf {x}^*)=0.0\) (Powell’s quartic function, Rao 1996).

-

7.

\(f(\mathbf {x})=-\left\{ \frac{1}{1+(x_1-x_2)^2}+\sin \left( \frac{1}{2}\pi x_2x_3\right) +\exp \left[ -\left( \frac{x_1+x_3}{x_2}-2 \right) ^2\right] \right\} , \mathbf {x}^0=[0,1,2]^T, \mathbf {x}^*=[1,1,1]^T,\ f(\mathbf {x}^*)=-3.0\) (Rao 1996).

-

8.

\(f(\mathbf {x})=\{-13+x_1+[(5-x_2)x_2-2]x_2\}^2+\{-29+x_1+[(x_2+1)x_2-14]x_2\}^2,\ \mathbf {x}^0=[1/2,-2]^T,\ \mathbf {x}^*=[5,4]^T, f(\mathbf {x}^*)=0.0\) (Freudenstein and Roth function, Rao 1996).

-

9.

\(f(\mathbf {x})=100(x_2-x_1^3)^2+(1-x_1)^2,\ \mathbf {x}^0=[-1.2,1]^T,\ \mathbf {x}^*=[1,1]^T, f(\mathbf {x}^*)=0.0\) (cubic valley, Himmelblau 1972).

-

10.

\(f(\mathbf {x})=[1.5-x_1(1-x_2)]^2+[2.25-x_1(1-x_2^2)]^2+[2.625-x_1(1-x_2^3)]^2,\ \mathbf {x}^0=[1,1]^T,\ \mathbf {x}^*=[3,1/2]^T,\ f(\mathbf {x}^*)=0.0\) (Beale’s function, Rao 1996).

-

11.

\(f(\mathbf {x})=[10(x_2-x_1^2)]^2+(1-x_1)^2+90(x_4-x_3^2)^2+(1-x_3)^2+10(x_2+x_4-2)^2+0.1(x_2-x_4)^2,\ \mathbf {x}^0=[-3,1,-3,-1]^T,\ \mathbf {x}^*=[1,1,1,1]^T,\ f(\mathbf {x}^*)=0.0\) (Wood’s function, Rao 1996).

-

12.

\(f(\mathbf {x})=\sum _{i=1}^n ix_i^2,\ \mathbf {x}^0=[3,3,\ldots , 3]^T,\ \mathbf {x}^*=[0,0,\ldots , 0]^T,\ f(\mathbf {x}^*)=0.0\) (extended homogeneous quadratic functions).

-

13.

\(f(\mathbf {x})=\sum _{i=1}^{n-1}[100(x_{i+1}-x_i^2)^2 + (1-x_i)^2] , \mathbf {x}^0=[-1.2,1,-1.2,1,\ldots ]^T,\) \(\mathbf {x}^*=[1,1,\ldots , 1]^T,\ f(\mathbf {x}^*)=0.0\) (extended Rosenbrock functions, Rao 1996).

-

14.

\(f(\mathbf {x})=\sum _{i=1}^n(1-x_i)^2/2^{i-1},\ \mathbf {x}^0=[0,0,\ldots , 0]^T,\ \mathbf {x}^*=[1,1,\ldots , 1]^T,\) \(f(\mathbf {x}^*)=0.0\) (extended Manevich functions, Manevich 1999).

4 The Dynamic-Q optimization algorithm

4.1 Introduction

An efficient constrained optimization method is presented in this section. The method, called the Dynamic-Q method (Snyman and Hay 2002), consists of applying the dynamic trajectory LFOPC optimization algorithm (see Section 6.2) to successive quadratic approximations of the actual optimization problem. This method may be considered as an extension of the unconstrained SQSD method, presented in Section 6.3, to one capable of handling general constrained optimization problems.

Due to its efficiency with respect to the number of function evaluations required for convergence, the Dynamic-Q method is primarily intended for optimization problems where function evaluations are expensive. Such problems occur frequently in engineering applications where time consuming numerical simulations may be used for function evaluations. Amongst others, these numerical analyses may take the form of a computational fluid dynamics (CFD) simulation, a structural analysis by means of the finite element method (FEM) or a dynamic simulation of a multibody system. Because these simulations are usually expensive to perform, and because the relevant functions may not be known analytically, standard classical optimization methods are normally not suited to these types of problems. Also, as will be shown, the storage requirements of the Dynamic-Q method are minimal. No Hessian information is required. The method is therefore particularly suitable for problems where the number of variables n is large.

4.2 The Dynamic-Q method

Consider the general nonlinear optimization problem:

where \(f(\mathbf {x})\), \(g_j(\mathbf {x})\) and \(h_k(\mathbf {x})\) are scalar functions of \(\mathbf {x}\).

In the Dynamic-Q approach, successive subproblems \(P[i],\ i=0,1,2,\ldots \) are generated, at successive approximations \(\mathbf {x}^i\) to the solution \(\mathbf {x}^*\), by constructing spherically quadratic approximations \(\tilde{f}(\mathbf {x})\), \(\tilde{g}_j(\mathbf {x})\) and \(\tilde{h}_k(\mathbf {x})\) to \(f(\mathbf {x})\), \(g_j(\mathbf {x})\) and \(h_k(\mathbf {x})\). These approximation functions, evaluated at a point \(\mathbf {x}^i\), are given by

with the Hessian matrices \(\mathbf {A}\), \(\mathbf {B}_j\) and \(\mathbf {C}_k\) taking on the simple forms

Clearly the identical entries along the diagonal of the Hessian matrices indicate that the approximate subproblems P[i] are indeed spherically quadratic.

For the first subproblem (\(i=0\)) a linear approximation is formed by setting the curvatures a, \(b_j\) and \(c_k\) to zero. Thereafter a, \(b_j\) and \(c_k\) are chosen so that the approximating functions (6.41) interpolate their corresponding actual functions at both \(\mathbf {x}^i\) and \(\mathbf {x}^{i-1}\). These conditions imply that for \(i=1,2,3,\ldots \)

If the gradient vectors \(\varvec{\nabla }^Tf\), \(\varvec{\nabla }^Tg_j\) and \(\varvec{\nabla }^Th_k\) are not known analytically, they may be approximated from functional data by means of first-order forward finite differences.

The particular choice of spherically quadratic approximations in the Dynamic-Q algorithm has implications on the computational and storage requirements of the method. Since the second derivatives of the objective function and constraints are approximated using function and gradient data, the \(O(n^2)\) calculations and storage locations, which would usually be required for these second derivatives, are not needed. The computational and storage resources for the Dynamic-Q method are thus reduced to O(n). At most, \(4+p+q+r+s\) n-vectors need be stored (where p, q, r and s are respectively the number of inequality and equality constraints and the number of lower and upper limits of the variables). These savings become significant when the number of variables becomes large. For this reason it is expected that the Dynamic-Q method is well suited, for example, to engineering problems such as structural optimization problems where a large number of variables are present.

In many optimization problems, additional bound constraints of the form \(\hat{k}_i\le x_i\le \check{k}_i\) occur. Constants \(\hat{k}_i\) and \(\check{k}_i\) respectively represent lower and upper bounds for variable \(x_i\). Since these constraints are of a simple form (having zero curvature), they need not be approximated in the Dynamic-Q method and are instead explicitly treated as special linear inequality constraints. Constraints corresponding to lower and upper limits are respectively of the form

where \(vl\in \hat{I}=(v1,v2,\ldots , vr)\) the set of r subscripts corresponding to the set of variables for which respective lower bounds \(\hat{k}_{vl}\) are prescribed, and \(wm\in \check{I}=(w1,w2,\ldots , ws)\) the set of s subscripts corresponding to the set of variables for which respective upper bounds \(\check{k}_{wm}\) are prescribed. The subscripts vl and wm are used since there will, in general, not be n lower and upper limits, i.e. usually \(r\ne n\) and \(s\ne n\).

In order to obtain convergence to the solution in a controlled and stable manner, move limits are placed on the variables. For each approximate subproblem P[i] this move limit takes the form of an additional single inequality constraint

where \(\rho \) is an appropriately chosen step limit and \(\mathbf {x}^{i}\) is the solution to the previous subproblem.

The approximate subproblem, constructed at \(\mathbf {x}^i\), to the optimization problem (6.40) (plus bound constraints (6.44) and move limit (6.45)), thus becomes P[i]:

with solution \(\mathbf {x}^{*i}\). The Dynamic-Q algorithm is given by Algorithm 6.5. In the Dynamic-Q method the subproblems generated are solved using the dynamic trajectory, or “leap-frog” (LFOPC) method of Snyman (1982, 1983) for unconstrained optimization applied to penalty function formulations (Snyman et al. 1994; Snyman 2000) of the constrained problem. A brief description of the LFOPC algorithm is given in Section 6.2.

The LFOPC algorithm possesses a number of outstanding characteristics, which makes it highly suitable for implementation in the Dynamic-Q methodology. The algorithm requires only gradient information and no explicit line searches or function evaluations are performed. These properties, together with the influence of the fundamental physical principles underlying the method, ensure that the algorithm is extremely robust. This has been proven over many years of testing (Snyman 2000). A further desirable characteristic related to its robustness, and the main reason for its application in solving the subproblems in the Dynamic-Q algorithm, is that if there is no feasible solution to the problem, the LFOPC algorithm will still find the best possible compromised solution. The Dynamic-Q algorithm thus usually converges to a solution from an infeasible remote point without the need to use line searches between subproblems, as is the case with SQP. The LFOPC algorithm used by Dynamic-Q is identical to that presented in Snyman (2000) except for a minor change to LFOP which is advisable should the subproblems become effectively unconstrained.

4.3 Numerical results and conclusion

The Dynamic-Q method requires very few parameter settings by the user. Other than convergence criteria and specification of a maximum number of iterations, the only parameter required is the step limit \(\rho \). The algorithm is not very sensitive to the choice of this parameter, however, \(\rho \) should be chosen of the same order of magnitude as the diameter of the region of interest. For the problems listed in Table 6.3 a step limit of \(\rho =1\) was used except for problems 72 and 106 where step limits \(\rho =\sqrt{10}\) and \(\rho =100\) were used respectively.

Given specified positive tolerances \(\varepsilon _x\), \(\varepsilon _f\) and \(\varepsilon _c\), then at step i termination of the algorithm occurs if the normalized step size

or if the normalized change in function value

where \(f_\mathrm {best}\) is the lowest previous feasible function value and the current \(\mathbf {x}^i\) is feasible. The point \(\mathbf {x}^i\) is considered feasible if the absolute value of the violation of each constraint is less than \(\varepsilon _c\). This particular function termination criterion is used since the Dynamic-Q algorithm may at times exhibit oscillatory behavior near the solution.

In Table 6.3, for the same starting points, the performance of the Dynamic-Q method on some standard test problems is compared to results obtained for Powell’s SQP method as reported by Hock and Schittkowski (1981). The problem numbers given correspond to the problem numbers in Hock and Schittkowski’s book. For each problem, the actual function value \(f_{\mathrm {act}}\) is given, as well as, for each method, the calculated function value \(f^*\) at convergence, the relative function error

and the number of function-gradient evaluations (\(N^{fg}\)) required for convergence. In some cases it was not possible to calculate the relative function error due to rounding off of the solutions reported by Hock and Schittkowski. In these cases the calculated solutions were correct to at least eight significant digits. For the Dynamic-Q algorithm, convergence tolerances of \(\varepsilon _f=10^{-8}\) on the function value, \(\varepsilon _x=10^{-5}\) on the step size and \(\varepsilon _c=10^{-6}\) for constraint feasibility, were used. These were chosen to allow for comparison with the reported SQP results.

The result for the 12-corner polytope problem of Svanberg (1999) is also given. For this problem the results given in the SQP columns are for Svanberg’s Method of Moving Asymptotes (MMA). The recorded number of function evaluations for this method is approximate since the results given correspond to 50 outer iterations of the MMA, each requiring about 3 function evaluations.

A robust and efficient method for nonlinear optimization, with minimal storage requirements compared to those of the SQP method, has been proposed and tested. The particular methodology proposed is made possible by the special properties of the LFOPC optimization algorithm (Snyman 2000), which is used to solve the quadratic subproblems. Comparison of the results for Dynamic-Q with the results for the SQP method show that equally accurate results are obtained with comparable number of function evaluations.

5 A gradient-only line search method for conjugate gradient methods

5.1 Introduction

Many engineering design optimization problems involve numerical computer analyses via, for example, FEM codes, CFD simulations or the computer modeling of the dynamics of multi-body mechanical systems. The computed objective function is therefore often the result of a complex sequence of calculations involving other computed or measured quantities. This may result in the presence of numerical noise in the objective function so that it exhibits non-smooth trends as design parameters are varied. It is well known that this presence of numerical noise in the design optimization problem inhibits the use of classical and traditional gradient-based optimization methods that employ line searches, such as for example, the conjugate gradient methods. The numerical noise may prevent or slow down convergence during optimization. It may also promote convergence to spurious local optima. The computational expense of the analyses, coupled to the convergence difficulties created by the numerical noise, is in many cases a significant obstacle to performing multidisciplinary design optimization.

In addition to the anticipated difficulties when applying the conjugate gradient methods to noisy optimization problems, it is also known that standard implementations of conjugate gradient methods, in which conventional line search techniques have been used, are less robust than one would expect from their theoretical quadratic termination property. Therefore the conjugate gradient method would, under normal circumstances, not be preferred to quasi-Newton methods (Fletcher 1987). In particular severe numerical difficulties arise when standard line searches are used in solving constrained problems through the minimization of associated penalty functions. However, there is one particular advantage of conjugate gradient methods, namely the particular simple form that requires no matrix operations in determining the successive search directions. Thus, conjugate gradient methods may be the only methods which are applicable to large problems with thousands of variables (Fletcher 1987), and are therefore well worth further investigation.

In this section a new implementation (ETOPC) of the conjugate gradient method (both for the Fletcher-Reeves and Polak-Ribiere versions (see Fletcher 1987) is presented for solving constrained problems. The essential novelty in this implementation is the use of a gradient-only line search technique originally proposed by the author (Snyman 1985), and used in the ETOP algorithm for unconstrained minimization. It will be shown that this implementation of the conjugate gradient method, not only easily overcomes the accuracy problem when applied to the minimization of penalty functions, but also economically handles the problem of severe numerical noise superimposed on an otherwise smooth underlying objective function.

5.2 Formulation of optimization problem

Consider again the general constrained optimization problem:

subject to the inequality and equality constraints:

where the objective function \(f(\mathbf {x})\), and the constraint functions \(g_j(\mathbf {x})\) and \(h_j(\mathbf {x})\), are scalar functions of the real column vector \(\mathbf {x}\). The optimum solution is denoted by \(\mathbf {x}^*\), with corresponding optimum function value \(f(\mathbf {x}^*)\).

The most straightforward way of handling the constraints is via the unconstrained minimization of the penalty function:

where \(\rho _j\gg 0,\ \beta _j=0\) if \(g_j(\mathbf {x})\le 0\), and \(\beta _j=\mu _j\gg 0\) if \(g_j(\mathbf {x})>0\).

Usually \(\rho _j=\mu _j=\mu \gg 0\) for all j, with the corresponding penalty function being denoted by \(P(\mathbf {x},\mu )\).

Central to the application of the conjugate gradient method to penalty function formulated problems presented here, is the use of an unconventional line search method for unconstrained minimization, proposed by the author, in which no function values are explicitly required (Snyman 1985). Originally this gradient-only line search method was applied to the conjugate gradient method in solving a few very simple unconstrained problems. For somewhat obscure reasons, given in the original paper (Snyman 1985) and briefly hinted to in this section, the combined method (novel line search plus conjugate gradient method) was called the ETOP (Euler-Trapezium Optimizer) algorithm. For this historical reason, and to avoid confusion, this acronym will be retained here to denote the combined method for unconstrained minimization. In subsequent unreported numerical experiments, the author was successful in solving a number of more challenging practical constrained optimization problems via penalty function formulations of the constrained problem, with ETOP being used in the unconstrained minimization of the sequence of penalty functions. ETOP, applied in this way to constrained problems, was referred to as the ETOPC algorithm. Accordingly this acronym will also be used here.

5.3 Gradient-only line search

The line search method used here, and originally proposed by the author (Snyman 1985) uses no explicit function values. Instead the line search is implicitly done by using only two gradient vector evaluations at two points along the search direction and assuming that the function is near-quadratic along this line. The essentials of the gradient-only line search, for the case where the function \(f(\mathbf {x})\) is unconstrained, are as follows. Given the current design point \(\mathbf {x}^k\) at iteration k and next search direction \(\mathbf {v}^{k+1}\), then compute

where \(\tau \) is some suitably chosen positive parameter. The step taken in (6.53) may be seen as an “Euler step”. With this step given by

the line search in the direction \(\mathbf {v}^{k+1}\) is equivalent to finding \(\mathbf {x}^{*k+1}\) defined by

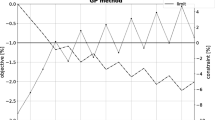

These steps are depicted in Figure 6.3.

It was indicated in Snyman (1985) that for the step \(\mathbf {x}^{k+1} = \mathbf {x}^k + \mathbf {v}^{k+1}\tau \) the change in function value \(\Delta f_k\), in the unconstrained case, can be approximated without explicitly evaluating the function \(f(\mathbf {x})\). Here a more formal argument is presented via the following lemma.

5.3.1 Lemma 1

For a general quadratic function, the change in function value, for the step \(\Delta \mathbf {x}^k = \mathbf {x}^{k+1}-\mathbf {x}^k = \mathbf {v}^{k+1}\tau \) is given by:

where \(\mathbf {a}^k = -\varvec{\nabla }f(\mathbf {x}^k)\) and \(\langle \ ,\ \rangle \) denotes the scalar product.

Proof:

In general, by Taylor’s theorem:

and

where \(\mathbf {x}^a = \mathbf {x}^k + \theta _0\Delta \mathbf {x}^k,\ \mathbf {x}^b = \mathbf {x}^k + \theta _1\Delta \mathbf {x}^k\) and both \(\theta _0\) and \(\theta _1\) in the interval [0, 1], and where \(\mathbf {H}(\mathbf {x})\) denotes the Hessian matrix of the general function \(f(\mathbf {x})\). Adding the above two expressions gives:

If \(f(\mathbf {x})\) is quadratic then \(\mathbf {H}(\mathbf {x})\) is constant and it follows that

where \(\mathbf {a}^k = \varvec{\nabla }f(\mathbf {x}^k)\), which completes the proof. \(\Box \)

By using expression (6.56) the position of the minimizer \(\mathbf {x}^{*k+1}\) (see Figure 6.4), in the direction \(\mathbf {v}^{k+1}\), can also be approximated without any explicit function evaluation. This conclusion follows formally from the second lemma given below. Note that in (6.56) the second quantity in the scalar product corresponds to an average vector given by the “trapezium rule”. This observation together with the remark following equation (6.53), gave rise to the name “Euler-trapezium optimizer (ETOP)” when applying this line search technique in the conjugate gradient method.

5.3.2 Lemma 2

For \(f(\mathbf {x})\) a positive-definite quadratic function the point \(\mathbf {x}^{*k+1}\) defined by \(f(\mathbf {x}^{*k+1})=\min _\lambda f(\mathbf {x}^k+\lambda \Delta \mathbf {x}^k)\) is given by

where

Proof:

First determine \(\theta \) such that

By Taylor’s expansion:

which gives for the step \(\theta \Delta \mathbf {x}\):

For both function values to be the same, \(\theta \) must therefore satisfy:

which has the non-trivial solution:

Using the expression for \(\Delta f_k\) given by Lemma 1, it follows that:

and by the symmetry of quadratic functions that

\(\Box \)

Expressions (6.57) and (6.58) may of course also be used in the general non-quadratic case, to determine an approximation to the minimizer \(\mathbf {x}^{*k+1}\) in the direction \(\mathbf {v}^{k+1}\), when performing successive line searches using the sequence of descent directions, \(\mathbf {v}^{k+1},\ k=1,2,\ldots \) Thus in practice, for the next \((k+1)\)-th iteration, set \(\mathbf {x}^{k+1}:=\mathbf {x}^{*k+1}\), and with the next selected search direction \(\mathbf {v}^{k+2}\) proceed as above, using expressions (6.57) and (6.58) to find \(\mathbf {x}^{*k+2}\) and then set \(\mathbf {x}^{k+2}:=\mathbf {x}^{*k+2}\). Continue iterations in this way, with only two gradient vector evaluations done per line search, until convergence is obtained.

In summary, explicit function evaluations are unnecessary in the above line search procedure, since the two computed gradients along the search direction allow for the computation of an approximation (6.56) to the change in objective function, which in turn allows for the estimation of the position of the minimum along the search line via expressions (6.57) and (6.58), based on the assumption that the function is near quadratic in the region of the search.

5.3.3 Heuristics

Of course in general the objective function may not be quadratic and positive-definite. Additional heuristics are therefore required to ensure descent, and to see to it that the step size (corresponding to the parameter \(\tau \) between successive gradient evaluations, is neither too small nor too large. The details of these heuristics are as set out below.

-

(i)

In the case of a successful step having been taken, with \(\Delta f_k\) computed via (6.56) negative, i.e. descent, and \(\theta \) computed via (6.58) positive, i.e. the function is locally strictly convex, as shown in Figure 6.4, \(\tau \) is increased by a factor of 1.5 for the next search direction.

-

(ii)

It may turn out that although \(\Delta f_k\) computed via (6.56) is negative, that \(\theta \) computed via (6.58) is also negative. The latter implies that the function along the search direction is locally concave. In this case set \(\theta :=-\theta \) in computing \(\mathbf {x}^{*k+1}\) by (6.57), so as to ensure a step in the descent direction, and also increase \(\tau \) by the factor 1.5 before computing the step for the next search direction using (6.53).

-

(iii)

It may happen that \(\Delta f_k\) computed by (6.56) is negative and exactly equal to \(\rho \), i.e. \(\Delta f_k-\rho =0\). This implies zero curvature with \(\theta =\infty \) and the function is therefore locally linear. In this case enforce the value \(\theta =1\). This results in the setting, by (6.57), of \(\mathbf {x}^{*k+1}\) equal to a point halfway between \(\mathbf {x}^k\) and \(\mathbf {x}^{k+1}\). In this case \(\tau \) is again increased by the factor of 1.5.

-

(iv)

If both \(\Delta f_k\) and \(\theta \) are positive, which is the situation depicted in Figure 6.4, then \(\tau \) is halved before the next step.

-

(v)

In the only outstanding and unlikely case, should it occur, where \(\Delta f^k\) is positive and \(\theta \) negative, \(\tau \) is unchanged.

-

(vi)

For usual unconstrained minimization the initial step size parameter selection is \(\tau =0.5\).

The new gradient-only line search method may of course be applied to any line search descent method for the unconstrained minimization of a general multi-variable function. Here its application is restricted to the conjugate gradient method.

5.4 Conjugate gradient search directions and SUMT

The search vectors used here correspond to the conjugate gradient directions (Bazaraa et al. 1993). In particular for \(k=0,1,\ldots \), the search vectors are

where \(\mathbf {s}^{k+1}\) denote the usual conjugate gradient directions, \(\beta _1=0\) and for \(k > 0\):

for the Fletcher-Reeves implementation, and for the Polak-Ribiere version:

As recommended by Fletcher (1987), the conjugate gradient algorithm is restarted in the direction of steepest descent when \(k > n\).

For the constrained problem the unconstrained minimization is of course applied to successive penalty function formulations \(P(\mathbf {x})\) of the form shown in (6.52), using the well known Sequential Unconstrained Minimization Technique (SUMT) (Fiacco and McCormick 1968). In SUMT, for \(j=1,2,\ldots \), until convergence, successive unconstrained minimizations are performed on successive penalty functions \(P(\mathbf {x})=P(\mathbf {x},\mu ^{(j)})\) in which the overall penalty parameter \(\mu ^{(j)}\) is successively increased: \(\mu ^{(j+1)}:= 10\mu ^{(j)}\). The corresponding initial step size parameter is set at \(\tau = 0.5/\mu ^{(j)}\) for each sub problem j. This application of ETOP to the constrained problem, via the unconstrained minimization of successive penalty functions, is referred to as the ETOPC algorithm. In practice, if analytical expressions for the components of the gradient of the objective function are not available, they may be calculated with sufficient accuracy by finite differences. However, when the presence of severe noise is suspected, the application of the gradient-only search method with conjugate gradient search directions, requires that central finite difference approximations of the gradients be used in order to effectively smooth out the noise. In this case relatively excessive perturbations \(\delta x_i\) in \(x_i\) must be used, which in practice may typically be of the order of 0.1 times the range of interest!

In the application of ETOPC a limit \(\Delta _m\), is in practice set to the maximum allowable magnitude \(\Delta ^*\) of the step \(\varvec{\Delta }^*= \mathbf {x}^{*k+1}-\mathbf {x}^k\). If \(\Delta ^*\) is greater than \(\Delta _m\), then set

and restart the conjugate gradient procedure, with \(\mathbf {x}^0:= \mathbf {x}^{k+1}\), in the direction of steepest descent. If the maximum allowable step is taken n times in succession, then \(\Delta _m\) is doubled.

5.5 Numerical results

The proposed new implementation of the conjugate gradient method (both the Fletcher- Reeves and Polak-Ribiere versions) is tested here using 40 different problems arbitrarily selected from the famous set of test problems of Hock and Schittkowski (1981). The problem numbers (Pr. #) in the tables, correspond to the numbering used in Hock and Schittkowski. The final test problem, (12-poly), is the 12 polytope problem of Svanberg (1995, 1999). The number of variables (n) of the test problems ranges from 2 to 21 and the number of constraints (m plus r) per problem, from 1 to 59. The termination criteria for the ETOPC algorithm are as follows:

-

(i)

Convergence tolerances for successive approximate sub-problems within SUMT: \(\varepsilon _g\) for convergence on the norm of the gradient vector, i.e. terminate if \(\Vert \varvec{\nabla }P(\mathbf {x}^{*k+1},\mu )\Vert <\varepsilon _g\) , and \(\varepsilon _x\) for convergence on average change of design vector: i.e. terminate if \(\frac{1}{2}\Vert \mathbf {x}^{*k+1}-\mathbf {x}^{*k-1}\Vert <\varepsilon _x\).

-

(ii)

Termination of the SUMT procedure occurs if the absolute value of the relative difference between the objective function values at the solution points of successive SUMT problems is less than \(\varepsilon _f\).

5.5.1 Results for smooth functions with no noise

For the initial tests no noise is introduced. For high accuracy requirements (relative error in optimum objective function value to be less than \(10^{-8}\)), it is found that the proposed new conjugate gradient implementation performs as robust as, and more economical than, the traditional penalty function implementation, FMIN, of Kraft and Lootsma reported in Hock and Schittkowski (1981). The detailed results are as tabulated in Table 6.4. Unless otherwise indicated the algorithm settings are: \(\varepsilon _x =10^{-8}\), \(\varepsilon _g =10^{-5}\), \(\Delta _m=1.0\), \(\varepsilon _f =10^{-8}\), \(\mu ^{(1)}=1.0\) and \(iout=15\), where iout denotes the maximum number of SUMT iterations allowed. The number of gradient vector evaluations required by ETOPC for the different problems are denoted by nge (note that the number of explicit function evaluations is zero), and the relative error in function value at convergence to the point \(\mathbf {x}^c\) is denoted by \(r_f\), which is computed from

For the FMIN algorithm only the number of explicit objective function evaluations nfe are listed, together with the relative error \(r_f\) at convergence. The latter method requires, in addition to the number of function evaluations listed, a comparable number of gradient vector evaluations, which is not given here (see Hock and Schittkowski 1981).

5.5.2 Results for severe noise introduced in the objective function

Following the successful implementation for the test problems with no noise, all the tests were rerun, but with severe relative random noise introduced in the objective function \(f(\mathbf {x})\) and all gradient components computed by central finite differences. The influence of noise is investigated for two cases, namely, for a variation of the superimposed uniformly distributed random noise as large as (i) 5% and (ii) 10% of \((1+|f(\mathbf {x}^*)|)\), where \(\mathbf {x}^*\) is the optimum of the underlying smooth problem. The detailed results are shown in Table 6.5. The results are listed only for the Fletcher-Reeves version. The results for the Polak- Ribiere implementation are almost identical. Unless otherwise indicated the algorithm settings are: \(\delta x_i=1.0\), \(\varepsilon _g =10^{-5}\), \(\Delta _m=1.0\), \(\varepsilon _f =10^{-8}\), \(\mu ^{(0)}=1.0\) and \(iout=6\), where iout denotes the maximum number of SUMT iterations allowed. For termination of sub-problem on step size, \(\varepsilon _x\) was set to \(\varepsilon _x:=0.005\sqrt{n}\) for the initial sub-problem. Thereafter it is successively halved for each subsequent sub-problem.

The results obtained are surprisingly good with, in most cases, fast convergence to the neighbourhood of the known optimum of the underlying smooth problem. In 90% of the cases regional convergence was obtained with relative errors \(r_x < 0.025\) for 5% noise and \(r_x < 0.05\) for 10% noise, where

and \(\mathbf {x}^c\) denotes the point of convergence. Also in 90% of the test problems the respective relative errors in final objective function values were \(r_f < 0.025\) for 5% noise and \(r_f < 0.05\) for 10% noise, where \(r_f\) is as defined in (6.63).

5.6 Conclusion

The ETOPC algorithm performs exceptionally well for a first order method in solving constrained problems where the functions are smooth. For these problems the gradient only penalty function implementation of the conjugate gradient method performs as well, if not better than the best conventional implementations reported in the literature, in producing highly accurate solutions.

In the cases where severe noise is introduced in the objective function, relatively fast convergence to the neighborhood of \(\mathbf {x}^*\), the solution of the underlying smooth problem, is obtained. Of interest is the fact that with the reduced accuracy requirement associated with the presence of noise, the number of function evaluations required to obtain sufficiently accurate solutions in the case of noise, is on the average much less than that necessary for the high accuracy solutions for smooth functions. As already stated, ETOPC yields in 90% of the cases regional convergence with relative errors \(r_x < 0.025\) for 5% noise, and \(r_x < 0.05\) for 10% noise. Also in 90% of the test problems the respective relative errors in the final objective function values are \(r_f < 0.025\) for 5% noise and \(r_f < 0.05\) for 10% noise. In the other 10% of the cases the relative errors are also acceptably small. These accuracies are more than sufficient for multidisciplinary design optimization problems where similar noise may be encountered.

6 Global optimization using dynamic search trajectories

6.1 Introduction

The problem of globally optimizing a real valued function is inherently intractable (unless hard restrictions are imposed on the objective function) in that no practically useful characterization of the global optimum is available. Indeed the problem of determining an accurate estimate of the global optimum is mathematically ill-posed in the sense that very similar objective functions may have global optima very distant from each other (Schoen 1991). Nevertheless, the need in practice to find a relative low local minimum has resulted in considerable research over the last decade to develop algorithms that attempt to find such a low minimum, e.g. see Törn and Zilinskas (1989).

The general global optimization problem may be formulated as follows. Given a real valued objective function \(f(\mathbf {x})\) defined on the set \(\mathbf {x}\in D\) in \(\mathbb {R}^{n}\), find the point \(\mathbf {x}^{*}\) and the corresponding function value \(f^{*}\) such that

if such a point \(\mathbf {x}^{*}\) exists. If the objective function and/or the feasible domain D are non-convex, then there may be many local minima which are not global.

If D corresponds to all \(\mathbb {R}^{n}\) the optimization problem is unconstrained. Alternatively, simple bounds may be imposed, with D now corresponding to the hyper box (or domain or region of interest) defined by

where \(\varvec{\ell }\) and \(\varvec{u}\) are n-vectors defining the respective lower and upper bounds on \(\mathbf {x}\).

From a mathematical point of view, Problem (6.65) is essentially unsolvable, due to a lack of mathematical conditions characterizing the global optimum, as opposed to the local optimum of a smooth continuous function, which is characterized by the behavior of the problem function (Hessians and gradients) at the minimum (Arora et al. 1995) (viz. the Karush-Kuhn-Tucker conditions). Therefore, the global optimum \(f^{*}\) can only be obtained by an exhaustive search, except if the objective function satisfies certain subsidiary conditions (Griewank 1981), which mostly are of limited practical use (Snyman and Fatti 1987). Typically, the conditions are that f should satisfy a Lipschitz condition with known constant L and that the search area is bounded, e.g. for all \({\mathbf {x}}, \bar{\mathbf {x}} \in {\mathbf {X}}\)

So called space-covering deterministic techniques have been developed (Dixon et al. 1975) under these special conditions. These techniques are expensive, and due to the need to know L, of limited practical use.

Global optimization algorithms are divided into two major classes (Dixon et al. 1975): deterministic and stochastic (from the Greek word stokhastikos, i.e. ‘governed by the laws of probability’). Deterministic methods can be used to determine the global optimum through exhaustive search. These methods are typically extremely expensive. With the introduction of a stochastic element into deterministic algorithms, the deterministic guarantee that the global optimum can be found is relaxed into a confidence measure. Stochastic methods can be used to assess the probability of having obtained the global minimum. Stochastic ideas are mostly used for the development of stopping criteria, or to approximate the regions of attraction as used by some methods (Arora et al. 1995).

The stochastic algorithms presented herein, namely the Snyman-Fatti algorithm and the modified bouncing ball algorithm (Groenwold and Snyman 2002), both depend on dynamic search trajectories to minimize the objective function. The respective trajectories, namely the motion of a particle of unit mass in a n-dimensional conservative force field, and the trajectory of a projectile in a conservative gravitational field, are modified to increase the likelihood of convergence to a low local minimum.

6.2 The Snyman-Fatti trajectory method

The essentials of the original SF algorithm (Snyman and Fatti 1987) using dynamic search trajectories for unconstrained global minimization will now be discussed. The algorithm is based on the local algorithms presented by Snyman (1982, 1983). For more details concerning the motivation of the method, its detailed construction, convergence theorems, computational aspects and some of the more obscure heuristics employed, the reader is referred to the original paper and also to the more recent review article by Snyman and Kok (2009).

6.2.1 Dynamic trajectories

In the SF algorithm successive sample points \(\mathbf {x}^{j}, j = 1,2,...,\) are selected at random from the box D defined by (6.66). For each sample point \(\mathbf {x}^{j}\), a sequence of trajectories \(T^{i}, i = 1,2,...,\) is computed by numerically solving the successive initial value problems:

This trajectory represents the motion of a particle of unit mass in a n-dimensional conservative force field, where the function to be minimized represents the potential energy.

Trajectory \(T^{i}\) is terminated when \(\mathbf {x}(t)\) reaches a point where \(f(\mathbf {x}(t))\) is arbitrarily close to the value \(f(\mathbf {x}_{0}^{i})\) while moving “uphill”, or more precisely, if \(\mathbf {x}(t)\) satisfies the conditions

where \(\epsilon _{u}\) is an arbitrary small prescribed positive value.

An argument is presented in Snyman and Fatti (1987) to show that when the level set \(\left\{ \mathbf {x}|f(\mathbf {x}) \le f(\mathbf {x}_{0}^{i}) \right\} \) is bounded and \(\varvec{\nabla }\! f (\mathbf {x}_{0}^{i}) \ne \mathbf{0}\), then conditions (6.69) above will be satisfied at some finite point in time.

Each computed step along trajectory \(T^{i}\) is monitored so that at termination the point \(\mathbf {x}_{m}^{i}\) at which the minimum value was achieved is recorded together with the associated velocity \(\dot{\mathbf {x}}_{m}^{i}\) and function value \(f_{m}^{i}\). The values of \(\mathbf {x}_{m}^{i}\) and \(\dot{\mathbf {x}}_{m}^{i}\) are used to determine the initial values for the next trajectory \(T^{i+1}\). From a comparison of the minimum values the best point \(\mathbf {x}_{b}^{i}\), for the current j over all trajectories to date is also recorded. In more detail the minimization procedure for a given sample point \(\mathbf {x}^{j}\), in computing the sequence \(\mathbf {x}_{b}^{i}, i = 1,2,...,\) is as follows.

In the original paper (Snyman and Fatti 1987) an argument is presented to indicate that under normal conditions on the continuity of f and its derivatives, \(\mathbf {x}_{b}^{i}\) will converge to a local minimum. Procedure MP1, for a given j, is accordingly terminated at step Algorithm 6.6 above if \(|| \varvec{\nabla }\! f (\mathbf {x}_{b}^{i})|| \le \epsilon \), for some small prescribed positive value \(\epsilon \), and \(\mathbf {x}_{b}^{i}\) is taken as the local minimizer \(\mathbf {x}_{f}^{j}\), i.e. set \(\mathbf {x}_{f}^{j} := \mathbf {x}_{b}^{i}\) with corresponding function value \(f_{f}^{j} := f(\mathbf {x}_{f}^{j})\).

Reflecting on the overall approach outlined above, involving the computation of energy conserving trajectories and the minimization procedure, it should be evident that, in the presence of many local minima, the probability of convergence to a relative low local minimum is increased. This one expects because, with a small value of \(\epsilon _{u}\) (see conditions (6.69)), it is likely that the particle will move through a trough associated with a relative high local minimum, and move over a ridge to record a lower function value at a point beyond. Since we assume that the level set associated with the starting point function is bounded, termination of the search trajectory will occur as the particle eventually moves to a region of higher function values.

6.3 The modified bouncing ball trajectory method

The essentials of the modified bouncing ball algorithm using dynamic search trajectories for unconstrained global minimization are now presented. The algorithm is in an experimental stage, and details concerning the motivation of the method, its detailed construction, and computational aspects will be presented in future.

6.3.1 Dynamic trajectories

In the MBB algorithm successive sample points \(\mathbf {x}^{j}, j = 1,2,...,\) are selected at random from the box D defined by (6.66). For each sample point \(\mathbf {x}^{j}\), a sequence of trajectory steps \(\Delta \mathbf {x}^{i}\) and associated projection points \(\mathbf {x}^{i+1}\), \(i=1,2,..., \) are computed from the successive analytical relationships (with \(\mathbf {x}^{1} := \mathbf {x}^{j}\) and prescribed \(V_{0_{1}} > 0\)):

where

with k a constant chosen such that \(h(\mathbf {x}) > 0\) \( \forall \) \( \mathbf {x}\in D\), g a positive constant, and

For the next step, select \(V_{0_{i+1}} < V_{0_{i}}\). Each step \(\Delta \mathbf {x}^{i}\) represents the ground or horizontal displacement obtained by projecting a particle in a vertical gravitational field (constant g) at an elevation \(h(\mathbf {x}^{i})\) and speed \(V_{0_{i}}\) at an inclination \(\theta _{i}\). The angle \(\theta _{i}\) represents the angle that the outward normal \(\mathbf {n}\) to the hypersurface represented by \(y=h(\mathbf {x})\) makes, at \(\mathbf {x}^{i}\) in \(n+1\) dimensional space, with the horizontal. The time of flight \(t_{i}\) is the time taken to reach the ground corresponding to \(y=0\).

More formally, the minimization trajectory for a given sample point \(\mathbf {x}^{j}\) and some initial prescribed speed \(V_{0}\) is obtained by computing the sequence \(\mathbf {x}^{i}, i = 1,2,...,\) as follows.

In the vicinity of a local minimum \(\hat{\mathbf {x}}\) the sequence of projection points \(\mathbf {x}^{i}, i = 1,2,...,\) constituting the search trajectory for starting point \(\mathbf {x}^{j}\) will converge since \(\Delta \mathbf {x}^{i} \rightarrow 0\) (see (6.70)). In the presence of many local minima, the probability of convergence to a relative low local minimum is increased, since the kinetic energy can only decrease for \(\alpha < 1\).

Procedure MP2, for a given j, is successfully terminated if \(|| \varvec{\nabla }\! f (\mathbf {x}^{i})|| \le \epsilon \) for some small prescribed positive value \(\epsilon \), or when \(\alpha V_{0}^{i} < \beta V_{0}^{1}\), and \(\mathbf {x}^{i}\) is taken as the local minimizer \(\mathbf {x}_{f}^{j}\) with corresponding function value \(f_{f}^{j} := h(\mathbf {x}_{f}^{j}) - k\).

Clearly, the condition \(\alpha V_{0}^{i} < \beta V_{0}^{1}\) will always occur for \(0< \beta < \alpha \) and \(0< \alpha < 1\).

MP2 can be viewed as a variant of the steepest descent algorithm. However, as opposed to steepest descent, MP2 has (as has MP1) the ability for ‘hill-climbing’, as is inherent in the physical model on which MP2 is based (viz., the trajectories of a bouncing ball in a conservative gravitational field.) Hence, the behavior of MP2 is quite different from that of steepest descent and furthermore, because of it’s physical basis, it tends to seek local minima with relative low function values and is therefore suitable for implementation in global searches, while steepest descent is not.

For the MBB algorithm, convergence to a local minimum is not proven. Instead, the underlying physics of a bouncing ball is exploited. Unsuccessful trajectories are terminated, and do not contribute to the probabilistic stopping criterion (although these points are included in the number of unsuccessful trajectories \(\tilde{n}\)). In the validation of the algorithm the philosophy adopted here is that the practical demonstration of convergence of a proposed algorithm on a variety of demanding test problems may be as important and convincing as a rigorous mathematical convergence argument.

Indeed, although for the steepest descent method convergence can be proven, in practice it often fails to converge because effectively an infinite number of steps is required for convergence.

6.4 Global stopping criterion

The above methods require a termination rule for deciding when to end the sampling and to take the current overall minimum function value \(\tilde{f}\), i.e.

as an approximation of the global minimum value \(f^{*}\).

Define the region of convergence of the dynamic methods for a local minimum \(\hat{\mathbf {x}}\) as the set of all points \(\mathbf {x}\) which, used as starting points for the above procedures, converge to \(\hat{\mathbf {x}}\). One may reasonably expect that in the case where the regions of attraction (for the usual gradient-descent methods, see Dixon et al. 1976) of the local minima are more or less equal, that the region of convergence of the global minimum will be relatively increased.

Let \(R_{k}\) denote the region of convergence for the above minimization procedures MP1 and MP2 of local minimum \(\hat{\mathbf {x}}^{k}\) and let \(\alpha _{k}\) be the associated probability that a sample point be selected in \(R_{k}\). The region of convergence and the associated probability for the global minimum \(\mathbf {x}^{*}\) are denoted by \(R^{*}\) and \(\alpha ^{*}\) respectively. The following basic assumption, which is probably true for many functions of practical interest, is now made. Basic assumption:

The following theorem may be proved.

6.4.1 Theorem (Snyman and Fatti 1987)

Let r be the number of sample points falling within the region of convergence of the current overall minimum \(\tilde{f}\) after \(\tilde{n}\) points have been sampled. Then under the above assumption and a statistically non-informative prior distribution the probability that \(\tilde{f}\) corresponds to \(f^{*}\) may be obtained from

On the basis of this theorem the stopping rule becomes: STOP when \(Pr \left[ \tilde{f} = f^{*} \right] \ge q^{*}\), where \(q^{*}\) is some prescribed desired confidence level, typically chosen as 0.99.

Proof:

We present here an outline of the proof of (6.77), and follow closely the presentation in Snyman and Fatti (1987). (We have since learned that the proof can be shown to be a generalization of the procedure proposed by Zielińsky 1981.) Given \(\tilde{n}^{*}\) and \(\alpha ^{*}\), the probability that at least one point, \(\tilde{n} \ge 1\), has converged to \(f^{*}\) is

In the Bayesian approach, we characterize our uncertainty about the value of \(\alpha ^{*}\) by specifying a prior probability distribution for it. This distribution is modified using the sample information (namely, \(\tilde{n}\) and r) to form a posterior probability distribution. Let \(p_{*}(\alpha ^{*}|\tilde{n}, r)\) be the posterior probability distribution of \(\alpha ^{*}\). Then,

Now, although the r sample points converge to the current overall minimum, we do not know whether this minimum corresponds to the global minimum of \(f^{*}\). Utilizing (6.76), and noting that \((1-\alpha )^{\tilde{n}}\) is a decreasing function of \(\alpha \), the replacement of \(\alpha ^{*}\) in the above integral by \(\alpha \) yields

Now, using Bayes theorem we obtain

Since the \(\tilde{n}\) points are sampled at random and each point has a probability \(\alpha \) of converging to the current overall minimum, r has a binomial distribution with parameters \(\alpha \) and \(\tilde{n}\). Therefore

Substituting (6.82) and (6.81) into (6.80) gives:

A suitable flexible prior distribution \(p(\alpha )\) for \(\alpha \) is the beta distribution with parameters a and b. Hence,

Using this prior distribution gives:

Assuming a prior expectation of 1, (viz. \(a=b=1\)), we obtain

which is the required result. \(\Box \)

6.5 Numerical results

The test functions used are tabulated in Table 6.6, and tabulated numerical results are presented in Tables 6.7 and 6.8. In the tables, the reported number of function values \(N_{f}\) are the average of 10 independent (random) starts of each algorithm.

Unless otherwise stated, the following settings were used in the SF algorithm (see Snyman and Fatti 1987): \(\gamma =2.0\), \(\alpha =0.95\), \(\epsilon =10^{-2}\), \(\omega =10^{-2}\), \(\delta =0.0\), \(q^{*}=0.99\), and \(\Delta t=1.0\). For the MBB algorithm, \(\alpha =0.99\), \(\epsilon =10^{-4}\), and \(q^{*}=0.99\) were used. For each problem, the initial velocity \(V_{0}\) was chosen such that \(\Delta \mathbf {x}^{1}\) was equal to half the ‘radius’ of the domain D. A local search strategy was implemented with varying \(\alpha \) in the vicinity of local minima.

In Table 6.7, \((r/\tilde{n})_{b}\) and \((r/\tilde{n})_{w}\) respectively indicate the best and worst \(r/\tilde{n}\) ratios (see equation (6.77)), observed during 10 independent optimization runs of both algorithms. The SF results compare well with the previously published results by Snyman and Fatti, who reported values for a single run only. For the Shubert, Branin and Rastrigin functions, the MBB algorithm is superior to the SF algorithm. For the Shekel functions (S5, S7 and S10), the SF algorithm is superior. As a result of the stopping criterion (6.77), the SF and MBB algorithms found the global optimum between 4 and 6 times for each problem.

The results for the trying Griewank functions (Table 6.7) are encouraging. G1 has some 500 local minima in the region of interest, and G2 several thousand. The values used for the parameters are as specified, with \(\Delta t=5.0\) for G1 and G2 in the SF-algorithm. It appears that both the SF and MBB algorithms are highly effective for problems with a large number of local minima in D, and problems with a large number of design variables.

In Table 6.8 the MBB algorithm is compared with the deterministic TRUST algorithm (Barhen et al. 1997). Since the TRUST algorithm was terminated when the global approximation was within a specified tolerance of the (known) global optimum, a similar criterion was used for the MBB algorithm. The table reveals that the two algorithms compare well. Note however that the highest dimension of the test problems used in Barhen et al. (1997) is 3. It is unclear if the deterministic TRUST algorithm will perform well for problems of large dimension, or problems with a large number of local minima in D.

In conclusion, the numerical results indicate that both the Snyman-Fatti trajectory method and the modified bouncing ball trajectory method are effective in finding the global optimum efficiently. In particular, the results for the trying Griewank functions are encouraging. Both algorithms appear effective for problems with a large number of local minima in the domain, and problems with a large number of design variables. A salient feature of the algorithms is the availability of an apparently effective global stopping criterion.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this chapter

Cite this chapter

Snyman, J.A., Wilke, D.N. (2018). NEW GRADIENT-BASED TRAJECTORY AND APPROXIMATION METHODS. In: Practical Mathematical Optimization. Springer Optimization and Its Applications, vol 133. Springer, Cham. https://doi.org/10.1007/978-3-319-77586-9_6

Download citation

DOI: https://doi.org/10.1007/978-3-319-77586-9_6

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-77585-2

Online ISBN: 978-3-319-77586-9

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)