Abstract

This chapter is a survey of the four standard associative digraph products, namely the Cartesian, strong, direct and lexicographic products. Topics include metric properties, connectedness, hamiltonian properties and invariants. Special attention is given to issues of cancellation and unique prime factorization.


For our purposes, a digraph product is a binary operation \(D*D'\) on digraphs, for which \(V(D*D')\) is the Cartesian product \(V(D)\times V(D')\) of the vertex sets of the factors. There are many ways to define such products. But if we insist on the algebraic property of associativity, and demand that the projections to factors respect adjacency, then we are left with just four products, known as the standard products. One of these is the Cartesian product, introduced in Chapter 1. We review it now, and introduce the three other products.

10.1 The Four Standard Associative Products

The four standard digraph products are the Cartesian product \(D\,\Box \,D'\), the direct product \(D\times D'\!\), the strong product \(D{\,\boxtimes \,}D'\!\), and the lexicographic product \(D\circ D'\!\). Each has vertex set \(V(D)\times V(D')\). Their arcs are

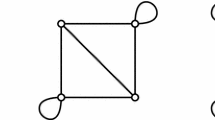

In each case D and \(D'\) are called factors of the product. In drawing products, we often align the factors roughly horizontally and vertically (like x- and y-coordinate axes) below and to the left of the vertex set \(V(D)\times V(D')\), so that \((x,x')\) projects vertically to \(x\in V(D)\) and horizontally to \(x'\in V(D')\). This is illustrated in Figure 10.1, showing examples of the Cartesian, direct and strong products. The lexicographic product is illustrated in Figure 10.2.

The definitions reveal immediately that the Cartesian, direct and strong products are commutative in the sense that the map \((x,x')\mapsto (x',x)\) yields isomorphisms \(D\,\Box \,D'\rightarrow D'\,\Box \,D\), \(D\times D'\rightarrow D'\times D\), and \(D{\,\boxtimes \,}D'\rightarrow D'{\,\boxtimes \,}D\). However, Figure 10.2 shows that the lexicographic product is not commutative.
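The definitions of the four products can be made concrete with a short Python sketch. It is an illustration only: representing a digraph as a pair `(vertices, arcs)`, the function names, and the helper `swap` are assumptions of this sketch, and the factors are assumed to be loopless.

```python
from itertools import product

def cartesian(D, H):
    """Cartesian product: follow an arc in exactly one coordinate."""
    (V, A), (W, B) = D, H
    arcs = {((x, u), (y, u)) for (x, y) in A for u in W}
    arcs |= {((x, u), (x, v)) for x in V for (u, v) in B}
    return set(product(V, W)), arcs

def direct(D, H):
    """Direct product: follow an arc in both coordinates."""
    (V, A), (W, B) = D, H
    arcs = {((x, u), (y, v)) for (x, y) in A for (u, v) in B}
    return set(product(V, W)), arcs

def strong(D, H):
    """Strong product: union of the Cartesian and direct arc sets."""
    verts, arcs = cartesian(D, H)
    return verts, arcs | direct(D, H)[1]

def lexicographic(D, H):
    """Lexicographic product: an arc in D, or equality in D and an arc in H."""
    (V, A), (W, B) = D, H
    arcs = {((x, u), (y, v)) for (x, y) in A for u in W for v in W}
    arcs |= {((x, u), (x, v)) for x in V for (u, v) in B}
    return set(product(V, W)), arcs

def swap(G):
    """Image of a two-factor product under the map (x, x') -> (x', x)."""
    V, A = G
    return {(b, a) for (a, b) in V}, {((b, a), (d, c)) for ((a, b), (c, d)) in A}

P2 = ({0, 1}, {(0, 1)})   # directed path 0 -> 1
E2 = ({0, 1}, set())      # arcless digraph on two vertices
```

With these two small factors, `swap(cartesian(P2, E2)) == cartesian(E2, P2)` holds, and likewise for `direct` and `strong`. By contrast, `lexicographic(P2, E2)` and `lexicographic(E2, P2)` have different numbers of arcs, so they are not even isomorphic.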

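It is also easy to check that all four standard products are associative in the sense that the identification \((x,(y,z)) = ((x,y),z)\) yields equalities

$$ \begin{aligned} D_1\,\Box \,(D_2\,\Box \,D_3) &= (D_1\,\Box \,D_2)\,\Box \,D_3, & D_1\times (D_2\times D_3) &= (D_1\times D_2)\times D_3,\\ D_1\boxtimes (D_2\boxtimes D_3) &= (D_1\boxtimes D_2)\boxtimes D_3, & D_1\circ (D_2\circ D_3) &= (D_1\circ D_2)\circ D_3. \end{aligned} $$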

Thus we may unambiguously define products of more than two factors without regard to grouping. The product definitions extend as follows.

The vertex set of the Cartesian product \(D_1\,\Box \,\cdots \,\Box \,D_n\) is the Cartesian product of sets \(V(D_1)\times \cdots \times V(D_n)\). The arcs of the product are all pairs \((x_1,\ldots ,x_n)(y_1,\ldots ,y_n)\), where \(x_iy_i\in A(D_i)\) for some index \(i\in [n]\), and \(x_j=y_j\) for all \(j\ne i\).

The direct product \(D_1\times \cdots \times D_n\) has vertices \(V(D_1)\times \cdots \times V(D_n)\) and arcs \((x_1,\ldots ,x_n)(y_1,\ldots ,y_n)\), where \(x_iy_i\in A(D_i)\) for all \(i\in [n]\).

The strong product \(D_1{\,\boxtimes \,}\cdots {\,\boxtimes \,}D_n\) has vertices \(V(D_1)\times \cdots \times V(D_n)\), and \((x_1,\ldots ,x_n)(y_1,\ldots ,y_n)\) is an arc provided \(x_i=y_i\) or \(x_iy_i\in A(D_i)\) for all \(i\in [n]\), and \(x_iy_i\in A(D_i)\) for at least one \(i\in [n]\). Note the containment

$$ A(D_1\,\Box \,\cdots \,\Box \,D_n)\,\cup \,A(D_1\times \cdots \times D_n)\;\subseteq \;A(D_1\boxtimes \cdots \boxtimes D_n), $$

which is only guaranteed to be an equality when \(n=2\). (As in the definition on page 467.)

Extending the lexicographic product to more than two factors, we see that \(D_1\circ \cdots \circ D_n\) has vertices \(V(D_1)\times \cdots \times V(D_n)\) and \((x_1,\ldots ,x_n)(y_1,\ldots ,y_n)\) is an arc of the product provided that there is an index \(i\in [n]\) for which \(x_iy_i\in A(D_i)\), while \(x_j=y_j\) for all \(1\le j<i\).
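The n-fold definitions can be checked by brute force on small examples. The sketch below is an illustration under stated assumptions: the function name `product_arcs`, the string labels for the four products, and the `(vertices, arcs)` representation are choices made here, and the factors are assumed loopless with sortable vertices.

```python
from itertools import product

def product_arcs(factors, kind):
    """Arc set of an n-fold standard product of loopless digraphs (V, A)."""
    n = len(factors)
    verts = list(product(*(sorted(V) for V, _ in factors)))
    arcs = set()
    for x in verts:
        for y in verts:
            arc = [(a, b) in A for a, b, (_, A) in zip(x, y, factors)]
            same = [a == b for a, b in zip(x, y)]
            if kind == "cartesian":        # one arc, equality elsewhere
                ok = sum(arc) == 1 and sum(same) == n - 1
            elif kind == "direct":         # an arc in every coordinate
                ok = all(arc)
            elif kind == "strong":         # arc or equality everywhere, at least one arc
                ok = any(arc) and all(s or t for s, t in zip(same, arc))
            elif kind == "lexicographic":  # arc at index i, equality before i
                ok = any(arc[i] and all(same[:i]) for i in range(n))
            else:
                raise ValueError(kind)
            if ok:
                arcs.add((x, y))
    return arcs

P2 = ({0, 1}, {(0, 1)})   # directed path 0 -> 1
three = [P2, P2, P2]
box, times, boxtimes = (product_arcs(three, k) for k in ("cartesian", "direct", "strong"))
extra = boxtimes - (box | times)   # strong-product arcs lying in neither of the others
```

For three copies of the directed path on two vertices, `extra` is non-empty; it contains for instance the arc from `(0, 0, 0)` to `(1, 1, 0)`, which changes two coordinates but not all three.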

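We define the nth powers with respect to the four products as

$$ D^{\,\Box n}=D\,\Box \,\cdots \,\Box \,D,\qquad D^{\times n}=D\times \cdots \times D,\qquad D^{\boxtimes n}=D\boxtimes \cdots \boxtimes D,\qquad D^{\,\circ n}=D\circ \cdots \circ D, $$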

where there are n factors in each case.

A digraph homomorphism \(\varphi :D\rightarrow D'\) is a map \(\varphi :V(D)\rightarrow V(D')\) for which \(xy\in A(D)\) implies \(\varphi (x)\varphi (y)\in A(D')\). We call \(\varphi \) a weak homomorphism if \(xy\in A(D)\) implies \(\varphi (x)\varphi (y)\in A(D')\) or \(\varphi (x)=\varphi (y)\). A homomorphism is a weak homomorphism, but not conversely. For each \(k\in [n]\), define the projection \(\pi _k:V(D_1)\times \cdots \times V(D_n)\rightarrow V(D_k)\) as \(\pi _k(x_1,\ldots ,x_n)=x_k\). It is straightforward to verify that each projection \(\pi _k:D_1\times \cdots \times D_n\rightarrow D_k\) is a homomorphism, and \(\pi _k:D_1\,\Box \,\cdots \,\Box \,D_n\rightarrow D_k\) and \(\pi _k:D_1{\,\boxtimes \,}\cdots {\,\boxtimes \,}D_n\rightarrow D_k\) are weak homomorphisms. In general, only the first projection \(\pi _1:D_1\circ \cdots \circ D_n\rightarrow D_1\) of a lexicographic product is a weak homomorphism. Although we will not undertake such a demonstration here, it can be shown that \(\,\Box \,\), \(\times \), \({\,\boxtimes \,}\) and \(\circ \) are the only associative products for which at least one projection is a weak homomorphism (or homomorphism) and each arc of each factor is a projection of an arc in the product. See [18] for details in the class of graphs. (The arguments apply equally well to digraphs.)
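The statements about projections are easy to test on a small example. The following sketch is illustrative only; the helper `is_homomorphism` and the choice of factors (a directed path on two vertices and a directed 3-cycle) are assumptions made here, not part of the text.

```python
def is_homomorphism(phi, A, B, weak=False):
    """True if phi sends every arc of A to an arc of B
    (or, when weak=True, to an arc of B or to a single vertex)."""
    return all((phi(x), phi(y)) in B or (weak and phi(x) == phi(y)) for x, y in A)

P2 = ({0, 1}, {(0, 1)})                      # directed path 0 -> 1
C3 = ({0, 1, 2}, {(0, 1), (1, 2), (2, 0)})   # directed 3-cycle
(V, A), (W, B) = P2, C3

# Arc sets of three products of P2 and C3
cartesian = ({((x, u), (y, u)) for (x, y) in A for u in W}
             | {((x, u), (x, v)) for x in V for (u, v) in B})
direct = {((x, u), (y, v)) for (x, y) in A for (u, v) in B}
lexicographic = ({((x, u), (y, v)) for (x, y) in A for u in W for v in W}
                 | {((x, u), (x, v)) for x in V for (u, v) in B})

def pi1(p):
    return p[0]   # projection to the first factor

def pi2(p):
    return p[1]   # projection to the second factor
```

Here both projections of the direct product are homomorphisms, the projections of the Cartesian product are weak homomorphisms but not homomorphisms, and the second projection of the lexicographic product fails even to be weak: the arc from `(0, 0)` to `(1, 2)` projects to the pair `(0, 2)`, which is neither an arc of the 3-cycle nor a single vertex.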

For products written as \(D\,\Box \,H\), we write the projections as \(\pi _D\) and \(\pi _H\).

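We continue with some algebraic properties of the four products. Denote the disjoint union of digraphs D and H as \(D+H\). The following distributive laws are immediate:

$$ \begin{aligned} (D+H)\,\Box \,K &= D\,\Box \,K+H\,\Box \,K, & (D+H)\times K &= D\times K+H\times K,\\ (D+H)\boxtimes K &= D\boxtimes K+H\boxtimes K, & (D+H)\circ K &= D\circ K+H\circ K. \end{aligned} $$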

The corresponding left-distributive laws also hold, except in the case of the lexicographic product, where generally \(D\circ (H+K) \ne D\circ H+D\circ K\). Regarding this, the next proposition tells the whole story.

Proposition 10.1.1

We have \(D\circ (H+K) \cong D\circ H+D\circ K\) if and only if D has no arcs.

Proof:

If D has no arcs, then the definition of the lexicographic product shows that both \(D\circ (H+K)\) and \(D\circ H+D\circ K\) are |V(D)| copies of \(H+K\).

Conversely, suppose \(D\circ (H+K)\cong D\circ H+D\circ K\), so both digraphs have the same number of arcs. Note that in general

$$ |A(D\circ H)| = |A(D)|\cdot |V(H)|^2+|V(D)|\cdot |A(H)|, $$

where the first term counts arcs \((x,x')(y,y')\) with \(xy\in A(D)\), and the second term counts such arcs with \(x=y\). Using this to count the arcs of \(D\circ (H+K)\), and again to count those of \(D\circ H+D\circ K\), we see that \(|A(D)|=0\). \(\square \)
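The counting step can be made explicit. Applying the arc count to both digraphs and subtracting, the terms involving \(|V(D)|\) cancel and one is left with \(|A(D\circ (H+K))|-|A(D\circ H+D\circ K)|=2\,|A(D)|\,|V(H)|\,|V(K)|\), which vanishes only if \(|A(D)|=0\). The Python sketch below checks this on one small example; the function names and the tagging of vertices in the disjoint union are assumptions of this illustration.

```python
def lex(D, H):
    """Lexicographic product of loopless digraphs given as (vertices, arcs)."""
    (V, A), (W, B) = D, H
    verts = {(x, u) for x in V for u in W}
    arcs = {((x, u), (y, v)) for (x, y) in A for u in W for v in W}
    arcs |= {((x, u), (x, v)) for x in V for (u, v) in B}
    return verts, arcs

def disjoint_union(H, K):
    """H + K, with vertices tagged 0 or 1 to keep the two parts disjoint."""
    (V, A), (W, B) = H, K
    verts = {(0, v) for v in V} | {(1, w) for w in W}
    arcs = {((0, x), (0, y)) for x, y in A} | {((1, x), (1, y)) for x, y in B}
    return verts, arcs

def arc_count_formula(D, H):
    """|A(D)| |V(H)|^2 + |V(D)| |A(H)|."""
    (V, A), (W, B) = D, H
    return len(A) * len(W) ** 2 + len(V) * len(B)

D = ({0, 1}, {(0, 1)})                      # one arc
H = ({0, 1, 2}, {(0, 1), (1, 2), (2, 0)})   # directed 3-cycle
K = ({0, 1}, {(0, 1)})                      # one arc

left = len(lex(D, disjoint_union(H, K))[1])     # arcs of D o (H + K)
right = len(lex(D, H)[1]) + len(lex(D, K)[1])   # arcs of D o H + D o K
```

In this example `left - right` equals `2 * 1 * 3 * 2 = 12`, in agreement with the displayed difference.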

The trivial digraph \(K_1\) is a unit for \(\,\Box \,\), \({\,\boxtimes \,}\) and \(\circ \), that is, \(K_1\,\Box \,D=D\), \(\,K_1{\,\boxtimes \,}D=D\), and \(\,K_1\circ D=D= D\circ K_1\) (by identifying \((1,x)=x=(x,1)\) for all \(x\in V(D)\)). However, this does not work for the direct product because \(K_1\times D\) has no arcs, even if D does. But if we admit loops and let \(K_1^*\) be a loop on one vertex, then \(K_1^*\) is the unique digraph for which \(K_1^*\times D=D\). For this reason we often regard the direct product as a product on the class of digraphs with loops allowed, especially when dealing with issues of unique prime factorization, where the existence of a unit is crucial.
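The role of the unit can be seen in a few lines of Python. This is a sketch under assumptions made here: digraphs are pairs `(vertices, arcs)`, the single vertex of \(K_1\) is named `1`, and `drop_first` is a hypothetical helper carrying out the identification \((1,x)=x\).

```python
def cartesian(D, H):
    (V, A), (W, B) = D, H
    verts = {(x, u) for x in V for u in W}
    arcs = {((x, u), (y, u)) for (x, y) in A for u in W}
    arcs |= {((x, u), (x, v)) for x in V for (u, v) in B}
    return verts, arcs

def direct(D, H):
    (V, A), (W, B) = D, H
    verts = {(x, u) for x in V for u in W}
    return verts, {((x, u), (y, v)) for (x, y) in A for (u, v) in B}

def drop_first(G):
    """Identify each vertex (1, x) of a product K_1 * D with x."""
    V, A = G
    return {x for (_, x) in V}, {(p[1], q[1]) for (p, q) in A}

K1 = ({1}, set())            # trivial digraph
K1_loop = ({1}, {(1, 1)})    # one vertex carrying a loop
C3 = ({0, 1, 2}, {(0, 1), (1, 2), (2, 0)})
```

With these definitions, `drop_first(cartesian(K1, C3))` returns `C3`, the product `direct(K1, C3)` has no arcs, and `drop_first(direct(K1_loop, C3))` returns `C3` again.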

As mentioned above, the lexicographic product is the only one of the four standard products that is not commutative. However, if \(D=H^{\,\circ n}\) and \(D'=H^{\,\circ m}\) are lexicographic powers of the same digraph H, then we do of course get \(D\circ D'=D'\circ D\). Another way that D and \(D'\) can commute is if they are both transitive tournaments, in which case we have

$$ TT_n\circ TT_m \;=\; TT_{mn} \;=\; TT_m\circ TT_n. $$

To verify this, order the vertices of \(TT_n\) as \(v_1,v_2,\ldots , v_n\) with \(v_iv_j\in A(TT_n)\) provided \(i<j\). Order those of \(TT_m\) as \(w_1,w_2,\ldots , w_m\) with \(w_kw_\ell \in A(TT_m)\) provided \(k<\ell \). Order the set \(V(TT_n)\times V(TT_m)\) lexicographically, that is, \((v_i,w_k)<(v_j,w_\ell )\) if \(i<j\), or \(i=j\) and \(k<\ell \). The definition of \(\circ \) reveals that \(TT_n\circ TT_m\) has an arc \((v_i,w_k)(v_j,w_\ell )\) if and only if \((i,k)<(j,\ell )\). Therefore \(TT_n\circ TT_m=TT_{mn}\).
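The lexicographic ordering argument translates directly into a computation. In the sketch below (an illustration, with vertices numbered from 0 rather than 1, and with `relabel` a helper introduced here), the pair \((i,k)\) is sent to the integer \(im+k\), which realizes the lexicographic order on pairs.

```python
def TT(n):
    """Transitive tournament on 0, ..., n-1 with an arc i -> j whenever i < j."""
    return set(range(n)), {(i, j) for i in range(n) for j in range(n) if i < j}

def lex(D, H):
    """Lexicographic product of loopless digraphs given as (vertices, arcs)."""
    (V, A), (W, B) = D, H
    verts = {(x, u) for x in V for u in W}
    arcs = {((x, u), (y, v)) for (x, y) in A for u in W for v in W}
    arcs |= {((x, u), (x, v)) for x in V for (u, v) in B}
    return verts, arcs

def relabel(G, f):
    """Rename the vertices of G by the injective map f."""
    V, A = G
    return {f(v) for v in V}, {(f(x), f(y)) for x, y in A}

n, m = 3, 4
left = relabel(lex(TT(n), TT(m)), lambda p: p[0] * m + p[1])
right = relabel(lex(TT(m), TT(n)), lambda p: p[0] * n + p[1])
```

Both `left` and `right` coincide with `TT(n * m)`, so the two products agree once their vertices are listed in lexicographic order.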

Certainly also \({\mathop {K}\limits ^{\leftrightarrow }}_n\circ {\mathop {K}\limits ^{\leftrightarrow }}_m \;=\; {\mathop {K}\limits ^{\leftrightarrow }}_{mn} \;=\; {\mathop {K}\limits ^{\leftrightarrow }}_m\circ {\mathop {K}\limits ^{\leftrightarrow }}_n\) where \({\mathop {K}\limits ^{\leftrightarrow }}_n\) is the complete biorientation of \(K_n\). And if \(D_n\) is its complement (i.e., the arcless digraph on n vertices) then \(D_n\circ D_m = D_{mn}=D_m\circ D_n\). In fact, these are the only situations in which the lexicographic product commutes, as discovered by Dörfler and Imrich [8].

Theorem 10.1.2

Two digraphs commute with respect to the lexicographic product if and only if they are both lexicographic powers of the same digraph, or both transitive tournaments, or both complete symmetric digraphs, or both arcless digraphs.

We close this section with another property of the lexicographic product. Denote by \(\overline{D}\) the complement of the digraph D, that is, the digraph on V(D) with \(xy\in A(\overline{D})\) if and only if \(xy\notin A(D)\) (for distinct vertices x and y). The equation

$$ \overline{D\circ H}=\overline{D}\circ \overline{H} $$

is easily confirmed. No other standard product has this property.
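A small computation illustrates both halves of this remark. The sketch is an illustration only; the function names are assumptions made here, complements are taken without loops, and the Cartesian product stands in for the other products as one counterexample.

```python
def complement(D):
    """Loopless complement: xy is an arc exactly when x != y and xy is not an arc of D."""
    V, A = D
    return set(V), {(x, y) for x in V for y in V if x != y and (x, y) not in A}

def lex(D, H):
    (V, A), (W, B) = D, H
    verts = {(x, u) for x in V for u in W}
    arcs = {((x, u), (y, v)) for (x, y) in A for u in W for v in W}
    arcs |= {((x, u), (x, v)) for x in V for (u, v) in B}
    return verts, arcs

def cartesian(D, H):
    (V, A), (W, B) = D, H
    verts = {(x, u) for x in V for u in W}
    arcs = {((x, u), (y, u)) for (x, y) in A for u in W}
    arcs |= {((x, u), (x, v)) for x in V for (u, v) in B}
    return verts, arcs

P2 = ({0, 1}, {(0, 1)})                      # directed path 0 -> 1
C3 = ({0, 1, 2}, {(0, 1), (1, 2), (2, 0)})   # directed 3-cycle
```

For these factors `complement(lex(P2, C3))` equals `lex(complement(P2), complement(C3))`, whereas the analogous comparison for `cartesian` fails already on arc counts (21 against 9).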

The remainder of the chapter is organized as follows. Sections 10.2 and 10.3 treat distance and connectedness for the four products. Sections 10.4, 10.5 and 10.6 deal with kings and kernels, Hamiltonian issues, and invariants. The final five sections consider algebraic questions of cancellation and unique prime factorization. Section 10.7 covers some preliminary material on homomorphisms and quotients that is used in the following section on cancellation. Section 10.9 covers prime factorization for \(\,\Box \,\) and \(\circ \). The cases \(\times \) and \(\boxtimes \) are treated in Sections 10.10 and 10.11.

10.2 Distance

Recall that the distance \(\mathrm{dist}_D(x,y)\) between two vertices \(x,y\in V(D)\) is the length of the shortest directed path from x to y, or \(\infty \) if no such path exists. This is not a metric in the usual sense, as generally \(\mathrm{dist}_D(x,y)\ne \mathrm{dist}_D(y,x)\). Let \(\mathrm{dist}'_D(x,y)\) be the length of the shortest (x, y)-path in D (not necessarily directed). This is a metric.

We begin by recording the distance formulas for each of the four products. These formulas are nearly identical to the corresponding formulas for graphs; here we adapt the proofs of Chapter 5 of Hammack, Imrich and Klavžar [18] to digraphs. Our proofs will use the fact that if \(p:D\rightarrow H\) is a weak homomorphism, then \(\mathrm{dist}_D(x,y)\ge \mathrm{dist}_H\big (p(x),p(y)\big )\), and similarly for \(\mathrm{dist}'\). This holds because if P is an (x, y)-dipath (or path) in D, then the projection of any arc of P is either an arc of H or a single vertex of H. The projections that are arcs constitute a (p(x), p(y))-diwalk (or walk) in H of length not greater than the length of P. (In fact, its length is the length of P minus the number of its arcs that are mapped to single vertices.)

Proposition 10.2.1

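In a Cartesian product \(D=D_1\,\Box \,\cdots \,\Box \,D_n\), the distance between vertices \((x_1,\ldots ,x_n)\) and \((y_1,\ldots ,y_n)\) is

$$ \mathrm{dist}_D\big ((x_1,\ldots ,x_n),(y_1,\ldots ,y_n)\big )=\sum _{1\le i\le n}\mathrm{dist}_{D_i}(x_i,y_i). $$

For the strong product \(D=D_1{\,\boxtimes \,}\cdots {\,\boxtimes \,}D_n\), the distance is

$$ \mathrm{dist}_D\big ((x_1,\ldots ,x_n),(y_1,\ldots ,y_n)\big )=\max _{1\le i\le n}\big \{\mathrm{dist}_{D_i}(x_i,y_i)\big \}. $$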

The same formulas hold when \(\mathrm{dist}\) is replaced with \(\mathrm{dist}'\).

Proof:

By associativity, it suffices to prove the statements for the case \(n=2\).

First consider the Cartesian product \(D=D_1\,\Box \,D_2\). To begin, suppose \(\mathrm{dist}_D((x_1,x_2),(y_1,y_2))\) is finite. Take a \(((x_1,x_2),(y_1,y_2))\)-dipath P of length \(\mathrm{dist}_D((x_1,x_2),(y_1,y_2))\). By definition of the Cartesian product, any arc of P is mapped to an arc in \(D_1\) or \(D_2\) by one of the two projections \(\pi _1\) and \(\pi _2\), and to a single vertex by the other. It follows that \(\pi _1\) maps P to an \((x_1,y_1)\)-diwalk in \(D_1\) of length (say) \(d_1\), and \(\pi _2\) maps P to an \((x_2,y_2)\)-diwalk in \(D_2\) of length \(d_2\), with \(\mathrm{dist}_D((x_1,x_2),(y_1,y_2))=d_1+d_2\ge \mathrm{dist}_{D_1}(x_1,y_1)+\mathrm{dist}_{D_2}(x_2,y_2)\).

In particular this means the proposition holds if \(\mathrm{dist}_{D_1}(x_1,y_1)=\infty \) or \(\mathrm{dist}_{D_2}(x_2,y_2)=\infty \). If they are both finite, take a shortest \((x_1,y_1)\)-dipath \(P_1\) in \(D_1\) and a shortest \((x_2,y_2)\)-dipath \(P_2\) in \(D_2\). Then \(D_1\,\Box \,D_2\) has a dipath

$$ \big (P_1\,\Box \,\{x_2\}\big )+\big (\{y_1\}\,\Box \,P_2\big ) $$

(first \(P_1\) with the second coordinate fixed at \(x_2\), then \(P_2\) with the first coordinate fixed at \(y_1\)) from \((x_1,x_2)\) to \((y_1,y_2)\), of length \(\mathrm{dist}_{D_1}(x_1,y_1)+\mathrm{dist}_{D_2}(x_2,y_2)\). Therefore \(\mathrm{dist}_D((x_1,x_2),(y_1,y_2))\le \mathrm{dist}_{D_1}(x_1,y_1)+\mathrm{dist}_{D_2}(x_2,y_2)\). Equality holds by the previous paragraph.

Now consider the strong product \(D_1{\,\boxtimes \,}D_2\). As each \(\pi _i:D_1{\,\boxtimes \,}D_2\rightarrow D_i\) is a weak homomorphism, it follows that \(\mathrm{dist}_D((x_1,x_2),(y_1,y_2))\ge \mathrm{dist}_{D_i}(x_i,y_i)\) for \(i=1,2\), so \(\mathrm{dist}_D((x_1,x_2),(y_1,y_2))\ge \max _{1\le i\le 2}\{\mathrm{dist}_{D_i}(x_i,y_i)\}\).

Thus, if at least one \(\mathrm{dist}_{D_i}(x_i,y_i)\) is infinite, then \(\mathrm{dist}_D((x_1,x_2),(y_1,y_2))=\infty \), and the proposition follows. Otherwise, take a shortest \((x_1,y_1)\)-dipath \(x_1a_1a_2a_3\ldots a_py_1\) in \(D_1\) and a shortest \((x_2,y_2)\)-dipath \(x_2b_1b_2b_3\ldots b_qy_2\) in \(D_2\). Say \(p\ge q\). We get the following \(((x_1,x_2),(y_1,y_2))\)-dipath in \(D_1{\,\boxtimes \,}D_2\):

$$ (x_1,x_2)(a_1,b_1)(a_2,b_2)\ldots (a_q,b_q)(a_{q+1},y_2)(a_{q+2},y_2)\ldots (a_p,y_2)(y_1,y_2). $$

Its length is \(\mathrm{dist}_{D_1}(x_1,y_1)=\max \{\mathrm{dist}_{D_i}(x_i,y_i)\}\ge \mathrm{dist}_D((x_1,x_2),(y_1,y_2))\). The reverse inequality was established in the previous paragraph.

The arguments for \(\mathrm{dist}'\) are identical, but replacing each occurrence of the word “diwalk” with “walk,” and “dipath” with “path.” \(\square \)
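The two formulas of Proposition 10.2.1 can be confirmed by breadth-first search on small products. The sketch below treats only \(\mathrm{dist}\) (not \(\mathrm{dist}'\)) and two factors; the function names and the test digraphs are assumptions of this illustration.

```python
from collections import deque
from math import inf

def distances(V, A, s):
    """Directed BFS distances from s; unreachable vertices get inf."""
    dist = {v: inf for v in V}
    dist[s] = 0
    queue = deque([s])
    while queue:
        x = queue.popleft()
        for (u, v) in A:
            if u == x and dist[v] == inf:
                dist[v] = dist[x] + 1
                queue.append(v)
    return dist

def cartesian(D, H):
    (V, A), (W, B) = D, H
    verts = {(x, u) for x in V for u in W}
    arcs = {((x, u), (y, u)) for (x, y) in A for u in W}
    arcs |= {((x, u), (x, v)) for x in V for (u, v) in B}
    return verts, arcs

def strong(D, H):
    (V, A), (W, B) = D, H
    verts, arcs = cartesian(D, H)
    return verts, arcs | {((x, u), (y, v)) for (x, y) in A for (u, v) in B}

def formula_holds(D, H, prod, combine):
    """Compare BFS distances in prod(D, H) with combine(dist_D, dist_H) for all pairs."""
    (V, A), (W, B) = D, H
    PV, PA = prod(D, H)
    for (x, u) in PV:
        dP = distances(PV, PA, (x, u))
        dD, dH = distances(V, A, x), distances(W, B, u)
        if any(dP[(y, v)] != combine(dD[y], dH[v]) for (y, v) in PV):
            return False
    return True

path3 = ({0, 1, 2}, {(0, 1), (1, 2)})        # directed path 0 -> 1 -> 2
C3 = ({0, 1, 2}, {(0, 1), (1, 2), (2, 0)})   # directed 3-cycle
```

Calling `formula_holds(path3, C3, cartesian, lambda a, b: a + b)` and `formula_holds(path3, C3, strong, max)` checks the sum and maximum formulas, including the pairs at infinite distance.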

The situation for the direct product is quite different. It requires the following useful result concerning directed walks in a direct product.

Proposition 10.2.2

A direct product \(D=D_1\times \cdots \times D_n\) has a diwalk of length k from \((x_1,\ldots ,x_n)\) to \((y_1,\ldots ,y_n)\) if and only if each \(D_i\) has a diwalk of length k from \(x_i\) to \(y_i\).

Proof:

Suppose D has a diwalk W from \((x_1,x_2,\ldots ,x_n)\) to \((y_1,y_2,\ldots ,y_n)\), of length k. As each projection \(\pi _i:D\rightarrow D_i\) is a homomorphism, W projects to an \((x_i,y_i)\)-diwalk of length k in each \(D_i\).

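Conversely, if each factor \(D_i\) has a diwalk \(x_i x_i^1 x_i^2 x_i^3 \ldots x_i^{k-1} y_i\) of length k, then by the definition of the direct product, D has a diwalk

$$ (x_1,\ldots ,x_n)(x_1^1,\ldots ,x_n^1)(x_1^2,\ldots ,x_n^2)\ldots (x_1^{k-1},\ldots ,x_n^{k-1})(y_1,\ldots ,y_n) $$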

of length k. \(\square \)

Proposition 10.2.3

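In a direct product \(D=D_1\times \cdots \times D_n\), the distance between two vertices \((x_1,\ldots ,x_n)\) and \((y_1,\ldots ,y_n)\) is

$$ \min \big \{k\in \mathbb {N} \;\big |\; \text {each } D_i \text { has an } (x_i,y_i)\text {-diwalk of length } k\big \}, $$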

or \(\infty \) if no such k exists.

Proof:

Let \(\mathrm{dist}_D\big ( (x_1,\ldots ,x_n), (y_1,\ldots ,y_n)\big )=d\). Let \(d'\) equal the smallest k for which each \(D_i\) has an \((x_i,y_i)\)-diwalk of length k, or \(\infty \) if no such k exists. We must show \(d=d'\).

If \(d=\infty \), then \(d\ge d'\). But also \(d\ge d'\) when \(d<\infty \), by Proposition 10.2.2.

On the other hand, if \(d'=\infty \), then \(d\le d'\). And again \(d\le d'\) when \(d'<\infty \), by Proposition 10.2.2. Thus \(d=d'\). \(\square \)
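Proposition 10.2.3 suggests a way to compute distances in a direct product without building the product. The sketch below handles two factors and is an illustration under assumptions made here: the function names are hypothetical, and the search is cut off at \(|V(D_1)|\cdot |V(D_2)|-1\), which suffices because a shortest dipath in the product cannot be longer than that.

```python
from math import inf

def cycle(n):
    """Directed cycle on 0, ..., n-1."""
    return set(range(n)), {(i, (i + 1) % n) for i in range(n)}

def walk_lengths(A, x, y, bound):
    """All k <= bound for which there is an (x, y)-diwalk of length exactly k."""
    lengths, frontier = set(), {x}
    for k in range(bound + 1):
        if y in frontier:
            lengths.add(k)
        # vertices reachable by a diwalk of length exactly k + 1
        frontier = {b for (a, b) in A if a in frontier}
    return lengths

def direct_distance(D1, D2, x, y):
    """Smallest common diwalk length in the two factors, or inf if there is none."""
    (V1, A1), (V2, A2) = D1, D2
    bound = len(V1) * len(V2) - 1
    common = walk_lengths(A1, x[0], y[0], bound) & walk_lengths(A2, x[1], y[1], bound)
    return min(common, default=inf)
```

For example, in the product of a directed 2-cycle and a directed 3-cycle the distance from `(0, 0)` to `(1, 0)` is 3, although the factor distances are 1 and 0: a common length must be odd and divisible by 3. In the product of a directed 4-cycle and a directed 6-cycle the same pair is at infinite distance.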

Distance in the lexicographic product requires a new definition. Given a vertex x of a digraph D, let \(\xi _D(x)\) be the length of a shortest non-trivial dicycle containing x, or \(\infty \) if no such dicycle exists. Let \(\xi _D'(x)\) be the length of the shortest non-trivial cycle containing x, or \(\infty \) if no such cycle exists. We first state the distance formula for lexicographic products \(D_1\circ D_2\) having just two factors (a consequence of Theorem 4 of Szumny, Włoch and Włoch [54]).

Proposition 10.2.4

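The distance formula for the lexicographic product is

$$ \mathrm{dist}_{D_1\circ D_2}\big ((x_1,x_2),(y_1,y_2)\big )= {\left\{ \begin{array}{ll} \mathrm{dist}_{D_1}(x_1,y_1) &{} \text {if } x_1\ne y_1,\\ \min \big \{\, \xi _{D_1}(x_1),\, \mathrm{dist}_{D_2}(x_2,y_2)\big \} &{} \text {if } x_1=y_1. \end{array}\right. } $$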

The formula also holds with \(\mathrm{dist}\) and \(\xi \) replaced with \(\mathrm{dist}'\) and \(\xi '\).

Proof:

Suppose \(x_1\ne y_1\). Then, as the projection \(\pi _1\) is a weak homomorphism, we have \(\mathrm{dist}_{D_1\circ D_2}\big ((x_1,x_2),(y_1,y_2)\big )\ge \mathrm{dist}_{D_1}(x_1,y_1)\). On the other hand, given a shortest \((x_1,y_1)\)-dipath \(x_1a_1a_2\ldots a_py_1\) in \(D_1\), we construct a dipath \((x_1,x_2)(a_1,y_2)(a_2,y_2)(a_3,y_2)\ldots (a_p,y_2)(y_1,y_2)\) in \(D_1\circ D_2\) of length \(\mathrm{dist}_{D_1}(x_1,y_1)\), so \(\mathrm{dist}_{D_1\circ D_2}\big ((x_1,x_2),(y_1,y_2)\big )= \mathrm{dist}_{D_1}(x_1,y_1)\).

Now suppose \(x_1= y_1\). Take a shortest \(((x_1,x_2),(y_1,y_2))\)-dipath P in \(D_1\circ D_2\). Because \(\pi _1\) is a weak homomorphism, \(\pi _1(P)\) is either a closed diwalk in \(D_1\) beginning and ending at \(x_1\) that is no longer than P, or it is the single vertex \(x_1\). In the first case, \(\mathrm{dist}((x_1,x_2),(y_1,y_2))\ge \xi _{D_1}(x_1)\). In the second, P lies in the fiber \(\{x_1\}\circ D_2\cong D_2\), and its length is no less than \(\mathrm{dist}_{D_2}(x_2,y_2)\). Thus \(\mathrm{dist}_{D_1\circ D_2}\big ((x_1,x_2),(y_1,y_2)\big )\ge \) \(\min \big \{\, \xi _{D_1}(x_1),\, \mathrm{dist}_{D_2}(x_2,y_2)\big \}\).

Conversely, if \(D_1\) has a dicycle \(x_1a_1a_2\ldots a_px_1\), then \(D_1\circ D_2\) has a dipath \((x_1,x_2)(a_1,y_2)(a_2,y_2)(a_3,y_2)\ldots (a_p,y_2)(x_1,y_2)\) of the same length, ending at \((x_1,y_2)=(y_1,y_2)\). And if \(D_2\) has an \((x_2,y_2)\)-dipath P, then \(\{x_1\}\circ P\) is a \(((x_1,x_2),(y_1,y_2))\)-dipath in \(D_1\circ D_2\). Thus \(\mathrm{dist}_{D_1\circ D_2}\big ((x_1,x_2),(y_1,y_2)\big )\le \) \(\min \big \{\, \xi _{D_1}(x_1),\, \mathrm{dist}_{D_2}(x_2,y_2)\big \}\).

The proof is the same for \(\mathrm{dist}'\). \(\square \)
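The two-factor formula can be compared with breadth-first search in the product. The sketch below covers only the directed version; the function names (`xi`, `lex_distance`) and the test digraphs are assumptions of this illustration, and \(\xi \) is computed as one plus the shortest distance back to x from an out-neighbor of x.

```python
from collections import deque
from math import inf

def distances(V, A, s):
    """Directed BFS distances from s; unreachable vertices get inf."""
    dist = {v: inf for v in V}
    dist[s] = 0
    queue = deque([s])
    while queue:
        x = queue.popleft()
        for (u, v) in A:
            if u == x and dist[v] == inf:
                dist[v] = dist[x] + 1
                queue.append(v)
    return dist

def xi(V, A, x):
    """Length of a shortest dicycle through x, or inf if there is none (no loops assumed)."""
    return min((1 + distances(V, A, z)[x] for (u, z) in A if u == x), default=inf)

def lex(D, H):
    (V, A), (W, B) = D, H
    verts = {(x, u) for x in V for u in W}
    arcs = {((x, u), (y, v)) for (x, y) in A for u in W for v in W}
    arcs |= {((x, u), (x, v)) for x in V for (u, v) in B}
    return verts, arcs

def lex_distance(D1, D2, x, y):
    """Distance in D1 o D2 according to the two-case formula."""
    (V1, A1), (V2, A2) = D1, D2
    if x[0] != y[0]:
        return distances(V1, A1, x[0])[y[0]]
    return min(xi(V1, A1, x[0]), distances(V2, A2, x[1])[y[1]])

C3 = ({0, 1, 2}, {(0, 1), (1, 2), (2, 0)})             # directed 3-cycle
P5 = (set(range(5)), {(i, i + 1) for i in range(4)})   # directed path on 5 vertices
```

In `lex(C3, P5)` the distance from `(0, 0)` to `(0, 4)` is 3 rather than 4, because going once around the 3-cycle in the first factor is shorter than walking along the path in the second.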

Corollary 10.2.5

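Suppose \((x_1,x_2,\ldots , x_n)\) and \((y_1,y_2,\ldots , y_n)\) are distinct vertices of \(D=D_1\circ D_2\circ \cdots \circ D_n\), and let \(k\in [n]\) be the smallest index for which \(x_k\ne y_k\). Then

$$ \mathrm{dist}_D\big ((x_1,x_2,\ldots , x_n),(y_1,y_2,\ldots , y_n)\big )=\min \big \{\,\xi _{D_1}(x_1),\, \xi _{D_2}(x_2),\, \ldots ,\, \xi _{D_{k-1}}(x_{k-1}),\, \mathrm{dist}_{D_k}(x_k,y_k)\big \}. $$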

(For \(k=1\) this is \(\mathrm{dist}_D\big ((x_1,x_2,\ldots , x_n),(y_1,y_2,\ldots , y_n)\big )=\) \(\mathrm{dist}_{D_1}(x_1,y_1)\). In any case, the distance does not depend on any factor \(D_i\) with \(k<i\le n\).) The formula also holds with \(\mathrm{dist}\) and \(\xi \) replaced with \(\mathrm{dist}'\) and \(\xi '\).

Proof:

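If \(n=2\), this is just a restatement of Proposition 10.2.4. If \(n>2\), then applying Proposition 10.2.4 to \(D_1\circ (D_2\circ \cdots \circ D_n)\) yields

$$ \mathrm{dist}_D\big ((x_1,\ldots , x_n),(y_1,\ldots , y_n)\big )= {\left\{ \begin{array}{ll} \mathrm{dist}_{D_1}(x_1,y_1) &{} \text {if } x_1\ne y_1,\\ \min \big \{\, \xi _{D_1}(x_1),\, \mathrm{dist}_{D_2\circ \cdots \circ D_n}\big ((x_2,\ldots ,x_n),(y_2,\ldots ,y_n)\big )\big \} &{} \text {if } x_1=y_1, \end{array}\right. } $$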

and we proceed inductively. \(\square \)

10.3 Connectivity

We now apply the results of the previous section to connectivity of the four products. Our first result characterizes connectivity and strong connectivity of three of our four products. The proofs are straightforward, with appeals to the distance formulas of Section 10.2 as needed. The parenthetical words (strongly) and (strong) in the proposition can be deleted to obtain parallel results on connectedness. (Recall that a digraph is connected if any two of its vertices can be joined by a [not necessarily directed] path.)

Theorem 10.3.1

Suppose \(D_1,\ldots , D_n\) are digraphs. Then:

1. The Cartesian product \(D_1\,\Box \,\cdots \,\Box \,D_n\) is (strongly) connected if and only if each factor \(D_i\) is (strongly) connected. More generally, the (strong) components of a product \(D_1\,\Box \,\cdots \,\Box \,D_n\) are the subgraphs \(X_1\,\Box \,\cdots \,\Box \,X_n\) for which each \(X_i\) is a (strong) component of \(D_i\).

2. The strong product \(D_1{\,\boxtimes \,}\cdots {\,\boxtimes \,}D_n\) is (strongly) connected if and only if each factor \(D_i\) is (strongly) connected. More generally, the (strong) components of a product \(D_1{\,\boxtimes \,}\cdots {\,\boxtimes \,}D_n\) are the subgraphs \(X_1{\,\boxtimes \,}\cdots {\,\boxtimes \,}X_n\) for which each \(X_i\) is a (strong) component of \(D_i\).

3. The lexicographic product \(D_1\circ \cdots \circ D_n\) of non-trivial digraphs is (strongly) connected if and only if the first factor \(D_1\) is (strongly) connected. More generally, the (strong) components of a product \(D_1\circ \cdots \circ D_n\) are the subgraphs \(X_1\circ D_2\circ \cdots \circ D_n\), where \(X_1\) is a non-trivial strong component of \(D_1\), as well as

   $$ X_1\circ X_2 \circ \cdots \circ X_k \circ D_{k+1}\circ \cdots \circ D_n, $$

   where \(X_i\) is a trivial (strong) component of \(D_i\) for \(1\le i <k\), and \(X_k\) is a non-trivial strong component of \(D_k\) (unless \(k=n\), in which case \(X_k\) is allowed to be trivial).

Theorem 10.3.1 is a key to understanding the interconnections between the strong components of products. Recall that the strong component digraph of a digraph D is the acyclic digraph \(\mathrm{SC}(D)\) whose vertices are the strong components of D, with an arc directed from X to Y precisely when D has an arc from X to Y. Thus \(\mathrm{SC}(D)\) carries information on the interconnections between the various strong components. The \(\mathrm{SC}\) operator respects the Cartesian and strong products in the sense that \({\mathrm{SC}}(D\,\Box \,H)={\mathrm{SC}}(D)\,\Box \,{\mathrm{SC}}(H)\) and \({\mathrm{SC}}(D\boxtimes H)={\mathrm{SC}}(D)\boxtimes {\mathrm{SC}}(H)\). Indeed, the pairwise projection map \(X\mapsto (\pi _D(X), \pi _H(X))\) sending strong components X in the product to pairs of strong components in the factors is an isomorphism in both cases \(\,\Box \,\) and \({\,\boxtimes \,}\) (as is easily checked).

Also, if every strong component of D is non-trivial, then \({\mathrm{SC}}(D\circ H)={\mathrm{SC}}(D)\). This is so because Theorem 10.3.1 says the strong components of \(D\circ H\) have form \(X\circ H\), where X is a strong component of D. From the definition of \(\circ \), the projection \(X\circ H\mapsto X\) is an isomorphism \({\mathrm{SC}}(D\circ H)\rightarrow \mathrm{SC}(D)\). (But this breaks down if D has a trivial strong component \(X=\{x_0\}\) and H has at least two strong components Y and Z, because then the distinct strong components \(X\circ Y\) and \(X\circ Z\) are both mapped to X.)

There is no result analogous to Theorem 10.3.1 for the direct product. Indeed, Figure 10.3 shows a direct product of two strong digraphs that is not even connected: Here \(\overrightarrow{C}_4\times \overrightarrow{C}_6=2\overrightarrow{C}_{12}\), where the coefficient 2 means the product is 2 disjoint copies of \(\overrightarrow{C}_{12}\). In fact, it is easy to verify the formula

$$ \overrightarrow{C}_m\times \overrightarrow{C}_n=\gcd (m,n)\,\overrightarrow{C}_{\mathrm{lcm}(m,n)} \tag{10.4} $$

(which is an instance of Theorem 10.3.2 below).

Despite the fact that a direct product of strongly connected digraphs need not be strongly connected, the converse is true: if \(D_1\times \cdots \times D_n\) is strongly connected, then each \(D_i\) must be strongly connected. This is a consequence of the fact that the projection maps are homomorphisms. Given two vertices \(x_i,y_i\) of \(D_i\), take \((x_1,\ldots ,x_n),(y_1,\ldots , y_n) \in V(D_1\times \cdots \times D_n)\). Any diwalk joining these two vertices projects to a diwalk joining \(x_i\) to \(y_i\).

Additional conditions on the factors that guarantee the product is strongly connected were first spelled out by McAndrew [37]. For a digraph D, let d(D) be the greatest common divisor of the lengths of all closed diwalks in D.

Theorem 10.3.2

If \(D_1,D_2,\ldots ,D_n\) are strongly connected digraphs, then the number of strong components of the direct product \(D_1\times D_2\times \cdots \times D_n\) is

$$ \frac{d(D_1)\cdot d(D_2)\cdot \cdots \cdot d(D_n)}{\text {lcm}\big (d(D_1),d(D_2),\ldots ,d(D_n)\big )}. $$

Consequently, \(D_1\times D_2\times \cdots \times D_n\) is strongly connected if and only if each \(D_i\) is strongly connected and the numbers \(d(D_1),\ldots ,d(D_n)\) are pairwise relatively prime.

Notice how this theorem agrees with Equation (10.4) and Figure 10.3. The proof is constructive and gives a neat description of the strong components.

Proof:

We need only prove the first statement. Assume each factor \(D_i\) is strongly connected, and let \(D=D_1\times \cdots \times D_n\).

For each index \(i\in [n]\), put \(d_i=d(D_i)\), and fix a vertex \(a_i\in V(D_i)\). Define functions \(f_i:V(D_i)\rightarrow \{0,1,2,\ldots , d(D_i)-1\}\) so that \(f_i(v)\) is the length (mod \(d_i\)) of an \((a_i,v)\)-diwalk W. To see that this does not depend on W, let \(W'\) be any other \((a_i,v)\)-diwalk. Let Z be a \((v,a_i)\)-diwalk. Then the concatenations \(W+Z\) and \(W'+Z\) are closed \((a_i,a_i)\)-diwalks, and \(d_i\) divides both of their lengths \(|W+Z|\) and \(|W'+Z|\). Thus \(d_i\) divides the difference \(|W|-|W'|\) of their lengths, so |W| and \(|W'|\) are congruent modulo \(d_i\). Hence \(f_i\) is well defined.

Regard \(f_i(v)\) as a coloring of vertex v, so \(D_i\) is \(d_i\)-colored. Now, to each vertex \(x=(x_1,\ldots ,x_n)\) of D, assign the n-tuple \(f(x)=(f_1(x_1),\ldots ,f_n(x_n))\). Regard the distinct n-tuples as colors, so D is colored with \(d_1d_2\cdots d_n\) colors.

Take a vertex \(b=(b_1,\ldots ,b_n)\) of D, and let \(X_b\) be the strong component of D that contains b. If \(x=(x_1,\ldots ,x_n)\) is in \(X_b\), then D has a (b, x)-diwalk of length (say) k. By Proposition 10.2.2, each \(D_i\) has a \((b_i,x_i)\)-diwalk of length k. As \(b_i\) is colored \(f_i(b_i)\), it follows from the definition of \(f_i\) that \(x_i\) is colored \(f_i(x_i)=f_i(b_i)+k\) (mod \(d_i\)). Thus every vertex x of \(X_b\) has a color of form \(f(x)=(f_1(b_1)+k, \ldots , f_n(b_n)+k)\) for some non-negative integer k. (Where the arithmetic in the ith coordinate is done modulo \(d_i\).)

Suppose for the moment that the converse is true: If \(x\in V(D)\) and \(f(x)=(f_1(b_1)+k, \ldots , f_n(b_n)+k)\) for some non-negative k, then x belongs to \(X_b\). (We will prove this shortly.) Combined with the previous paragraph, this means \(V(X_b)\) consists precisely of those vertices colored \((f_1(b_1)+k, \ldots , f_n(b_n)+k)\) for some non-negative integer k. There are precisely \(\text {lcm}\big (d_1, \ldots , d_n\big )\) such colors. In summary, D has \(d_1d_2\cdots d_n=d(D_1)\cdot d(D_2)\cdot \cdots \cdot d(D_n)\) color classes, and any strong component of D is the union of \(\text {lcm}\big (d(D_1), \ldots , d(D_n)\big )\) of them. Thus D has

$$ \frac{d(D_1)\cdot d(D_2)\cdot \cdots \cdot d(D_n)}{\text {lcm}\big (d(D_1),d(D_2),\ldots ,d(D_n)\big )} $$

strong components, and the theorem follows.

It remains to prove the assertion made above, namely that if the vertex \(b=(b_1,\ldots ,b_n)\) belongs to a strong component \(X_b\), then any vertex colored \((f_1(b_1)+k, \ldots , f_n(b_n)+k)\) belongs to \(X_b\). Thus let \(x=(x_1,\ldots ,x_n)\) be colored \(f(x)=(f_1(b_1)+k, \ldots , f_n(b_n)+k)\). That is, each \(x_i\) has color \(f_i(x_i)=f_i(b_i)+k\) (mod \(d_i\)). We need to prove that D has both a (b, x)-diwalk and an (x, b)-diwalk. By Proposition 10.2.2, it suffices to show that there is a positive integer K for which each \(D_i\) has a \((b_i,x_i)\)-diwalk of length K. (And also a \(K'\) for which each \(D_i\) has a \((x_i,b_i)\)-diwalk of length \(K'\).) The following claim assures that this is possible.

Claim. Suppose vertices \(b_i,x_i\in V(D_i)\) have colors \(f_i(b_i)\) and \(f_i(b_i)+k\), respectively. Then there is an integer \(M_i\) such that for all \(m_i\ge M_i\) there is a \((b_i,x_i)\)-diwalk of length \(m_id_i+k\). Also there is an integer \(M_i'\) such that for all \(m_i\ge M_i'\) there is an \((x_i,b_i)\)-diwalk of length \(m_id_i-k\).

Once the claim is established, we can put \(m_i=Ld_1d_2 \cdots d_n/d_i\), where L is large enough that each \(m_i\) exceeds the maximum of all the \(M_i\) and \(M_i'\). Then \(m_id_i=Ld_1d_2 \cdots d_n\) for each \(i\in [n]\), and the claim then gives the required diwalks of lengths \(K=Ld_1d_2 \cdots d_n+k\) and \(K'=Ld_1d_2 \cdots d_n-k\).

To prove the claim, let vertices \(b_i\) and \(x_i\) of \(D_i\) have colors \(f_i(b_i)\) and \(f_i(b_i)+k\), respectively. Because \(D_i\) is strongly connected, \(D_i\) has a \((b_i,x_i)\)-diwalk W. Moreover, we may assume \(|W|\ge k\), by concatenating with W (if necessary) arbitrarily many closed \((x_i,x_i)\)-diwalks. Because \(b_i\) has color \(f_i(b_i)\) and \(x_i\) has color \(f_i(b_i)+k\), it follows that W has length \(\ell d_i+k\) for some non-negative integer \(\ell \).

By definition of \(d_i\), there are dicycles \(C_1,C_2,\ldots , C_s\) in \(D_i\) for which \(d_i=\gcd (|C_1|, |C_2|, \ldots , |C_s|)\). Select a vertex \(c_j\) of each \(C_j\). Let \(P_0\) be a \((b_i,c_1)\)-diwalk, let \(P_s\) be a \((c_s,x_i)\)-diwalk, and for each \(j\in [s-1]\) let \(P_j\) be a \((c_j,c_{j+1})\)-diwalk. See Figure 10.4. By the same reasoning used for W, the diwalk \(W'=P_0+P_1+\cdots +P_s\) has length \(\ell 'd_i+k\) for some non-negative \(\ell '\).

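By choice of the \(C_j\), there are integers \(u_j\) for which \(\sum _{j=1}^s u_j|C_j|=d_i\). Let \(u=\max \{|u_1|, \ldots , |u_s|\}\). Put \(w=\sum _{j=1}^{s}\frac{|C_j|}{d_i}\), which is a positive integer because \(d_i\) divides each \(|C_j|\). We will show that \(M_i=\ell '+w+w^2u\) satisfies the requirements of the claim: Let \(m_i\ge M_i\). By the division algorithm, there are integers q and r with

$$ m_i-\ell '=qw+r,\qquad 0\le r<w. $$

For each \(j\in [s]\), put \(v_j=q+ru_j\). Note that each \(v_j\) is positive because

$$ v_j=q+ru_j\;\ge \;q-ru\;=\;\frac{m_i-\ell '-r}{w}-ru\;\ge \;\frac{w+w^2u-r}{w}-ru\;>\;wu-ru\;\ge \;0. $$

Thus we may construct a diwalk

$$ W''=P_0+v_1C_1+P_1+v_2C_2+P_2+\cdots +P_{s-1}+v_sC_s+P_s, $$

where \(v_jC_j\) is \(C_j\) concatenated with itself \(v_j\) times. The length of \(W''\) is

$$ |W''|=|W'|+\sum _{j=1}^s v_j|C_j| =\ell 'd_i+k+q\sum _{j=1}^s|C_j|+r\sum _{j=1}^s u_j|C_j| =\ell 'd_i+k+qwd_i+rd_i=(\ell '+qw+r)d_i+k=m_id_i+k. $$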

Thus for any \(m_i\ge M_i\) we have constructed a \((b_i,x_i)\)-diwalk \(W''\) in \(D_i\) of length \(m_id_i+k\), and this completes the first part of the claim. By a like construction (reversing the walks in Figure 10.4, which is possible because \(D_i\) is strong) there is also a \((x_i,b_i)\)-diwalk \(W'''\) in \(D_i\) of length \(m_id_i-k\). This completes the proof of the claim, and also the proof of the Theorem. \(\square \)
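The count in Theorem 10.3.2 can be tested numerically. The sketch below is an illustration, not the construction used in the proof: it computes \(d(D)\) for a strongly connected digraph by the standard breadth-first method (the greatest common divisor of \(\mathrm{dist}(a,u)+1-\mathrm{dist}(a,v)\) over all arcs uv), finds strong components by mutual reachability, and compares with the predicted number. All function names are assumptions made here.

```python
from collections import deque
from math import gcd

def bfs(A, s):
    """Directed BFS distances from s, for the vertices reachable from s."""
    dist, queue = {s: 0}, deque([s])
    while queue:
        x = queue.popleft()
        for (u, v) in A:
            if u == x and v not in dist:
                dist[v] = dist[x] + 1
                queue.append(v)
    return dist

def period(D):
    """d(D) for a strongly connected digraph D with at least one arc."""
    V, A = D
    dist = bfs(A, next(iter(V)))
    g = 0
    for (u, v) in A:
        g = gcd(g, dist[u] + 1 - dist[v])
    return g

def direct(D, H):
    (V, A), (W, B) = D, H
    verts = {(x, u) for x in V for u in W}
    return verts, {((x, u), (y, v)) for (x, y) in A for (u, v) in B}

def strong_components(D):
    """Vertex sets of the strong components, via mutual reachability."""
    V, A = D
    reach = {v: set(bfs(A, v)) for v in V}
    return {frozenset(u for u in reach[v] if v in reach[u]) for v in V}

def predicted(ds):
    """Product of the numbers in ds divided by their least common multiple."""
    prod, lcm = 1, 1
    for d in ds:
        prod, lcm = prod * d, lcm * d // gcd(lcm, d)
    return prod // lcm

def cycle(n):
    return set(range(n)), {(i, (i + 1) % n) for i in range(n)}

# A 2-cycle and a 4-cycle sharing the vertex 0, so d = gcd(2, 4) = 2
eight = ({0, 1, 2, 3, 4}, {(0, 1), (1, 0), (0, 2), (2, 3), (3, 4), (4, 0)})
```

For `cycle(4)` and `cycle(6)` the product has 2 strong components, as does the product of `eight` with `cycle(6)`. A three-factor product with d-values 2, 2, 3 also has 2 strong components: these numbers have greatest common divisor 1 but are not pairwise coprime.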

The issue of connectedness of direct products is even more subtle than that of strong connectedness. Despite the contributions [3], [21] and [22], more than 50 years elapsed between McAndrew’s result on strong connectedness (Theorem 10.3.2) and the eventual characterization of connectedness by Chen and Chen [5], which we now examine. To begin the discussion, note that because all projections of \(D=D_1\times \cdots \times D_n\) to factors are homomorphisms, if D is connected, then each factor \(D_i\) is connected too. The converse is generally false, as demonstrated by \(\overrightarrow{P}_2\times \overrightarrow{P}_2\). Laying out the exact additional conditions on the factors that ensure that the product is connected requires several definitions.

A matrix A is chainable if its entries are non-negative, and it has no rows or columns of zeros, and there are no permutation matrices M and N for which MAN has block form

$$ MAN=\begin{bmatrix} A_1 &{} 0\\ 0 &{} A_2 \end{bmatrix}. $$

For a positive integer \(\ell \), we say A is \(\ell \)-chainable if \(A^\ell \) is chainable. A digraph is \(\ell \)-chainable if its adjacency matrix is \(\ell \)-chainable.

Given a walk W from x to y in a digraph D, its weight w(W) is the integer \(m-n\), where in traversing W from x to y, we encounter m arcs in forward orientation and n arcs in reverse orientation. The weight w(D) of the digraph D is the greatest common divisor of the weights of all closed walks in D, or 0 if all closed walks have weight 0.
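The weight \(w(D)\) of a connected digraph can be computed without enumerating closed walks. The sketch below is one possible method, offered as an assumption of this illustration rather than as the method of [5]: assign a potential h to each vertex by traversing a spanning tree of the underlying graph (adding 1 along an arc, subtracting 1 against it), and take the greatest common divisor of \(h(u)+1-h(v)\) over all arcs uv. Each such number is the weight of the closed walk formed by the arc uv and tree paths, and these walks generate all closed-walk weights.

```python
from math import gcd

def weight(D):
    """w(D) for a connected digraph D = (vertices, arcs); 0 if all closed walks have weight 0."""
    V, A = D
    start = next(iter(V))
    h, stack = {start: 0}, [start]
    while stack:
        x = stack.pop()
        for (u, v) in A:
            if u == x and v not in h:      # arc used in forward orientation
                h[v] = h[x] + 1
                stack.append(v)
            elif v == x and u not in h:    # arc used in reverse orientation
                h[u] = h[x] - 1
                stack.append(u)
    g = 0
    for (u, v) in A:
        g = gcd(g, h[u] + 1 - h[v])
    return g

P2 = ({0, 1}, {(0, 1)})                                  # directed path 0 -> 1
C4 = ({0, 1, 2, 3}, {(0, 1), (1, 2), (2, 3), (3, 0)})    # directed 4-cycle
T3 = ({0, 1, 2}, {(0, 1), (0, 2), (1, 2)})               # transitive tournament on 3 vertices
Z4 = ({0, 1, 2, 3}, {(0, 1), (2, 1), (2, 3), (0, 3)})    # 4-cycle with alternating orientations
```

Here `weight(P2)` and `weight(Z4)` are 0, `weight(C4)` is 4, and `weight(T3)` is 1 (the closed walk 0, 1, 2, 0 uses two arcs forward and one in reverse).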

Space limitations prevent inclusion of the proof of the following theorem. It can be found in [5].

Theorem 10.3.3

Suppose \(D_1,\ldots , D_n\) are connected digraphs. Then:

1. If no \(w(D_i)\) is zero, then \(D_1\times \cdots \times D_n\) is connected if and only if both of the following conditions hold:

   - \(\gcd \big (w(D_1), \ldots ,w(D_n) \big )=1\),
   - If some \(D_i\) has a vertex of in-degree 0 (respectively out-degree 0) then no \(D_j\) (\(j\ne i\)) has a vertex of out-degree 0 (respectively in-degree 0).

2. If some \(w(D_i)\) is zero, then \(D_1\times \cdots \times D_n\) is connected if and only if the other \(D_j\) (\(j\ne i\)) are \(\ell \)-chainable, where \(\ell =\text {diam}(D_i)\).

We conclude this section with characterizations of unilateral connectedness of the four products. Recall that a digraph is unilaterally connected if for any two of its vertices x, y there exists an (x, y)-diwalk or a (y, x)-diwalk. (Because this relation on vertices is not transitive, and thus not an equivalence relation, there is no notion of unilateral components.) Note that strongly connected digraphs are unilaterally connected, but not conversely.
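Unilateral connectedness is straightforward to test from reachability sets. The following sketch is an illustration only; the function names and the two small Cartesian products used as examples are assumptions made here.

```python
def reachable(A, s):
    """Set of vertices reachable from s by a diwalk (including s itself)."""
    seen, stack = {s}, [s]
    while stack:
        x = stack.pop()
        for (u, v) in A:
            if u == x and v not in seen:
                seen.add(v)
                stack.append(v)
    return seen

def is_unilateral(D):
    V, A = D
    reach = {v: reachable(A, v) for v in V}
    return all(y in reach[x] or x in reach[y] for x in V for y in V)

def is_strong(D):
    V, A = D
    return all(reachable(A, v) == set(V) for v in V)

def cartesian(D, H):
    (V, A), (W, B) = D, H
    verts = {(x, u) for x in V for u in W}
    arcs = {((x, u), (y, u)) for (x, y) in A for u in W}
    arcs |= {((x, u), (x, v)) for x in V for (u, v) in B}
    return verts, arcs

P2 = ({0, 1}, {(0, 1)})                      # unilaterally but not strongly connected
C3 = ({0, 1, 2}, {(0, 1), (1, 2), (2, 0)})   # strongly connected
```

The product `cartesian(P2, C3)` is unilaterally connected but not strongly connected, while `cartesian(P2, P2)` is not unilaterally connected: neither of the vertices `(0, 1)` and `(1, 0)` can reach the other.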

Theorem 10.3.4

A Cartesian product of digraphs is unilaterally connected if and only if one factor is unilaterally connected and the others are strongly connected. This is also true for the strong product.

For a proof, see the solution of Exercise 32.4 of Hammack, Imrich and Klavžar [18]. See the solution of Exercise 32.5 for a proof of the next result.
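Theorem 10.3.4 can be checked on small Cartesian products. The following Python sketch is illustrative only: the digraph representation (vertex list, arc list) and all function names are our own assumptions. It computes reachability by depth-first search and tests unilateral and strong connectedness directly from the definitions.

```python
from itertools import product


def cartesian_product(D1, D2):
    (V1, A1), (V2, A2) = D1, D2
    V = list(product(V1, V2))
    A = [((u, y), (v, y)) for (u, v) in A1 for y in V2]
    A += [((x, u), (x, v)) for x in V1 for (u, v) in A2]
    return V, A


def reachability(D):
    """Map each vertex to the set of vertices reachable from it by a diwalk."""
    V, A = D
    out = {v: [] for v in V}
    for u, v in A:
        out[u].append(v)
    reach = {}
    for s in V:
        seen, stack = {s}, [s]
        while stack:
            x = stack.pop()
            for y in out[x]:
                if y not in seen:
                    seen.add(y)
                    stack.append(y)
        reach[s] = seen
    return reach


def is_strongly_connected(D):
    return all(len(r) == len(D[0]) for r in reachability(D).values())


def is_unilaterally_connected(D):
    V, _ = D
    reach = reachability(D)
    return all(y in reach[x] or x in reach[y] for x in V for y in V)


def dicycle(n):
    return list(range(n)), [(i, (i + 1) % n) for i in range(n)]


def dipath(n):
    return list(range(n)), [(i, i + 1) for i in range(n - 1)]


# One unilaterally connected factor, one strongly connected factor.
P = cartesian_product(dipath(3), dicycle(3))
print(is_unilaterally_connected(P), is_strongly_connected(P))  # True False

# Two factors that are unilaterally but not strongly connected.
Q = cartesian_product(dipath(2), dipath(2))
print(is_unilaterally_connected(Q))  # False
```

In the second product neither of the vertices (0, 1) and (1, 0) can reach the other, as the theorem predicts.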

Theorem 10.3.5

A lexicographic product of digraphs is unilaterally connected but not strongly connected if and only if each factor is unilaterally connected, and the first factor is not strongly connected.

Finally, we have a characterization of unilaterally connected direct products due to Harary and Trauth [21].

Theorem 10.3.6

A direct product \(D_1\times \cdots \times D_n\) is unilaterally connected if and only if each of the following holds:

- At most one factor \(D_i\) is unilaterally connected but not strongly connected.

- \(D_1\times \cdots \times D_{i-1}\times D_{i+1}\times \cdots \times D_n\) is strongly connected.

- \(D_1\times \cdots \times D_{i-1}\times C \times D_{i+1}\times \cdots \times D_n\) is strongly connected for each strong component C of \(D_i\).

10.4 Neighborhoods, Kings and Kernels

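The structures of vertex neighborhoods in digraph products are clear from the definitions. For instance, \(N^+_{D\,\Box \,D'}(x,y)=\left( N^+_D(x)\times \{y\}\right) \cup \left( \{x\}\times N^+_{D'}(y)\right) \), etc. For future reference we record two particularly useful formulas, namely

\[N^+_{D\times D'}(x,y)=N^+_D(x)\times N^+_{D'}(y)\quad \text {and}\quad N^+_{D{\,\boxtimes \,}D'}[x,y]=N^+_D[x]\times N^+_{D'}[y],\]

where \(N^+[x]=N^+(x)\cup \{x\}\) denotes the closed out-neighborhood.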

These also hold with the out-neighborhoods \(N^+\) replaced by in-neighborhoods \(N^-\), and extend to arbitrarily many factors.

Recall that a k-king in a digraph is a vertex x for which there is an (x, y)-dipath of length no greater than k for all vertices y of the digraph. The next proposition follows from the distance properties in Section 10.2.

Proposition 10.4.1

Let \(D_1\) and \(D_2\) be digraphs. Then:

1. \((x_1,x_2)\) is a k-king in \(D_1\boxtimes D_2\) if and only if each \(x_i\) is a k-king in \(D_i\).

2. \((x_1,x_2)\) is a k-king in \(D_1\,\Box \,D_2\) if and only if each \(x_i\) is a \(k_i\)-king in \(D_i\), where \(k_1+k_2=k\).

3. \((x_1,x_2)\) is a k-king in \(D_1\circ D_2\) if and only if \(x_1\) is a k-king in \(D_1\), and \(x_2\) is a k-king in \(D_2\) or \(\xi _{D_1}(x_1)\le k\) (where \(\xi \) is as defined on page 473).

4. If \((x_1,x_2)\) is a k-king in \(D_1\times D_2\), then each \(x_i\) is a k-king in \(D_i\).

This proposition is due to students P. LaBarr, M. Norge and I. Sanders, directed by D. Taylor [40]. Concerning statement 4, no characterization of kings in direct products is known.
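Statement 1 of Proposition 10.4.1 is easy to test by exhaustive search. The Python sketch below is an illustration under our own assumptions (digraphs as pairs of a vertex list and an arc list, illustrative function names). It finds all k-kings by breadth-first search and compares the kings of a strong product with the pairs of kings of its factors.

```python
from collections import deque
from itertools import product


def strong_product(D1, D2):
    """Strong product: the Cartesian arcs together with the direct arcs."""
    (V1, A1), (V2, A2) = D1, D2
    V = list(product(V1, V2))
    A = [((u, y), (v, y)) for (u, v) in A1 for y in V2]
    A += [((x, u), (x, v)) for x in V1 for (u, v) in A2]
    A += [((u1, u2), (v1, v2)) for (u1, v1) in A1 for (u2, v2) in A2]
    return V, A


def kings(D, k):
    """Set of k-kings of D, found by breadth-first search from each vertex."""
    V, A = D
    out = {v: [] for v in V}
    for u, v in A:
        out[u].append(v)
    result = set()
    for s in V:
        dist = {s: 0}
        queue = deque([s])
        while queue:
            x = queue.popleft()
            for y in out[x]:
                if y not in dist:
                    dist[y] = dist[x] + 1
                    queue.append(y)
        if len(dist) == len(V) and max(dist.values()) <= k:
            result.add(s)
    return result


def dicycle(n):
    return list(range(n)), [(i, (i + 1) % n) for i in range(n)]


def dipath(n):
    return list(range(n)), [(i, i + 1) for i in range(n - 1)]


C3, P3 = dicycle(3), dipath(3)
S = strong_product(C3, P3)
for k in (1, 2, 3):
    expected = set(product(kings(C3, k), kings(P3, k)))
    print(k, kings(S, k) == expected)  # True for each k
```

Here every vertex of the dicycle is a 2-king, while only the initial vertex of the dipath is, so the 2-kings of the product are exactly the pairs whose second coordinate is 0.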

Recall that a (k, l)-kernel of a digraph D is a subset \(J\subseteq V(D)\) for which \(\mathrm{dist}_D(x,y)\ge k\) for all distinct \(x,y\in J\), and to any \(x\notin J\) there is a \(y\in J\) with \(\mathrm{dist}_D(x,y)\le l\). Szumny, Włoch and Włoch [54] explored (k, l)-kernels in so-called D-joins. Their Theorem 8 implies the following characterization for the lexicographic product. (They also enumerate all (k, l)-kernels in \(D_1\circ D_2\).)

Proposition 10.4.2

Let \(l\ge k\ge 2\). Then \(J^*\subseteq V(D_1\circ D_2)\) is a (k, l)-kernel if and only if \(D_1\) has a (k, l)-kernel J with \(J^*=\bigcup _{x\in J}\{x\}\times J_x\), where

- \(J_x\) is a (k, l)-kernel of \(D_2\) if \(\xi _{D_1}(x)>l\) and \(\mathrm{dist}_{D_2}(y,x)> l\) for \(y\ne x\), or

- \(J_x\) is a single vertex of \(D_2\) if \(\xi _{D_1}(x)<k\), or

- \(\mathrm{dist}_{D_2}(x,y)\ge k\) for all distinct \(x,y\in J_x\) otherwise.

The case \(k>l\) is open. No characterizations are known for the other products, though Kwaśnik [33] proved the following.

Proposition 10.4.3

Let \(D_1\) and \(D_2\) be digraphs, and let \(J_i\) be a \((k_i,l_i)\)-kernel of \(D_i\) for each \(i=1,2\).

1. \(J_1\times J_2\) is a \(\big (\min \{k_1,k_2\}, \, l_1+l_2\big )\)-kernel of \(D_1\,\Box \,D_2\) (for \(k_1,k_2\ge 2\)).

2. \(J_1\times J_2\) is a \(\big (\min \{k_1,k_2\},\max \{l_1,l_2\}\big )\)-kernel of \(D_1{\,\boxtimes \,}D_2\).

See [59] for corresponding results for generalized products. Finally, we remark that Lakshmi and Vidhyapriya [34] characterize kernels in Cartesian products of tournaments with directed paths and cycles.
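The definition of a (k, l)-kernel, and the first statement of Proposition 10.4.3, can be tested directly on a small example. The following Python sketch is only illustrative: the representation of digraphs as (vertex list, arc list) and the function names are our own assumptions. It checks that \(\{0,2\}\) is a (2, 1)-kernel of \(\overrightarrow{C}_4\) and that its square is a (2, 2)-kernel of \(\overrightarrow{C}_4\,\Box \,\overrightarrow{C}_4\).

```python
from collections import deque
from itertools import product


def cartesian_product(D1, D2):
    (V1, A1), (V2, A2) = D1, D2
    V = list(product(V1, V2))
    A = [((u, y), (v, y)) for (u, v) in A1 for y in V2]
    A += [((x, u), (x, v)) for x in V1 for (u, v) in A2]
    return V, A


def all_distances(D):
    """dist[x][y] is the directed distance; y is absent if unreachable."""
    V, A = D
    out = {v: [] for v in V}
    for u, v in A:
        out[u].append(v)
    dist = {}
    for s in V:
        d = {s: 0}
        queue = deque([s])
        while queue:
            x = queue.popleft()
            for y in out[x]:
                if y not in d:
                    d[y] = d[x] + 1
                    queue.append(y)
        dist[s] = d
    return dist


def is_kl_kernel(D, J, k, l):
    V, _ = D
    dist = all_distances(D)
    inf = float("inf")
    # Distinct members of J are at distance at least k from one another.
    spread = all(dist[x].get(y, inf) >= k for x in J for y in J if x != y)
    # Every vertex outside J reaches some member of J within distance l.
    absorbing = all(
        any(dist[x].get(y, inf) <= l for y in J) for x in V if x not in J
    )
    return spread and absorbing


def dicycle(n):
    return list(range(n)), [(i, (i + 1) % n) for i in range(n)]


C4 = dicycle(4)
J = {0, 2}
JJ = set(product(J, J))
print(is_kl_kernel(C4, J, 2, 1))                          # True
print(is_kl_kernel(cartesian_product(C4, C4), JJ, 2, 2))  # True
print(is_kl_kernel(cartesian_product(C4, C4), JJ, 2, 1))  # False
```

The last line shows that the value \(l_1+l_2\) cannot be lowered in this example: the vertex (1, 1) is at distance 2 from every member of \(J\times J\) that it can reach most quickly.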

10.5 Hamiltonian Properties

Hamiltonian properties of digraphs have been studied extensively. The following four theorems are among the results proved in the book [44] by Schaar, Sonntag and Teichert.

Theorem 10.5.1

If \(D_1\) and \(D_2\) are Hamiltonian digraphs, then \(D_1\boxtimes D_2\) and \(D_1\circ D_2\) are Hamiltonian. If, in addition, \(D_1\) is Hamiltonian connected, and \(|D_1|\ge 3\) and \(|D_2|\ge 4\), then \(D_1\,\Box \,D_2\) is Hamiltonian.

The above additional conditions on the factors of a Cartesian product are necessary, as evidenced by the next theorem of Erdős and Trotter [57].

Theorem 10.5.2

The Cartesian product \(\overrightarrow{C}_p\,\Box \,\overrightarrow{C}_q\) is Hamiltonian if and only if there are non-negative integers \(d_1,d_2\) for which \(d_1+d_2 =\gcd (p,q)\ge 2\) and \(\gcd (p,d_1)=\gcd (q,d_2)=1\).
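The arithmetic condition of Theorem 10.5.2 is simple to evaluate, and for small p and q it can be compared against an exhaustive search. The Python sketch below is an illustration of this comparison. The function names are our own, and the search is a plain backtracking routine that is practical only for very small products.

```python
from math import gcd


def trotter_erdos_condition(p, q):
    """Arithmetic condition of Theorem 10.5.2 for C_p box C_q."""
    d = gcd(p, q)
    return d >= 2 and any(
        gcd(p, d1) == 1 and gcd(q, d - d1) == 1 for d1 in range(d + 1)
    )


def has_hamiltonian_dicycle(p, q):
    """Exhaustive search in C_p box C_q; only sensible for small p and q."""
    n = p * q
    start = (0, 0)

    def successors(v):
        i, j = v
        return [((i + 1) % p, j), (i, (j + 1) % q)]

    def extend(v, visited):
        if len(visited) == n:
            return start in successors(v)  # can the dipath be closed up?
        for w in successors(v):
            if w not in visited:
                visited.add(w)
                if extend(w, visited):
                    return True
                visited.remove(w)
        return False

    return extend(start, {start})


for p in range(2, 5):
    for q in range(2, 5):
        print(p, q, trotter_erdos_condition(p, q), has_hamiltonian_dicycle(p, q))
```

For instance, the condition fails for \(p=2\), \(q=3\) because \(\gcd (2,3)=1\), and it holds for \(p=q=3\) with \(d_1=1\), \(d_2=2\).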

Recall that a digraph is traceable if it has a Hamiltonian path. It is homogeneously traceable if each of its vertices is the initial point of some Hamiltonian path.

Theorem 10.5.3

If digraphs \(D_1\) and \(D_2\) are homogeneously traceable, then so are \(D_1\,\Box \,D_2\), \(D_1\boxtimes D_2\) and \(D_1\circ D_2\).

Theorem 10.5.4

If \(D_1\) is homogeneously traceable and \(D_2\) is traceable, then \(D_1\,\Box \,D_2\) and \(D_1\boxtimes D_2\) are traceable. If \(D_1\) and \(D_2\) are traceable, then so is \(D_1\circ D_2\).

A digraph is Hamiltonian decomposable if it has a family of Hamiltonian dicycles such that every arc of the digraph belongs to exactly one of the dicycles. Ng [39] gives the most complete result among digraph products.

Theorem 10.5.5

If \(D_1\) and \(D_2\) are Hamiltonian decomposable digraphs, and \(|V(D_1)|\) is odd, then \(D_1\circ D_2\) is Hamiltonian decomposable.

At present it is not known if the assumption of odd order can be removed.

Conjecture 10.5.6

If \(D_1\) and \(D_2\) are Hamiltonian decomposable digraphs, then \(D_1\circ D_2\) is Hamiltonian decomposable.

By Theorem 10.5.2, a Cartesian product of Hamiltonian decomposable digraphs is not necessarily Hamiltonian decomposable. This is also the case for the strong product, as is illustrated by \(\overleftrightarrow {K_2}{\,\boxtimes \,}\overleftrightarrow {K_2}=\overleftrightarrow {K_4}\).

Problem 10.5.7

Determine conditions under which a Cartesian or strong product of digraphs is Hamiltonian decomposable.

A solution to this problem may shed light on the longstanding conjecture that a Cartesian product of Hamiltonian decomposable graphs is Hamiltonian decomposable. See Section 30.2 of [18] and the references therein.

Despite these difficulties, there has been progress on Cartesian products of biorientations of graphs. Stong [52] proved that complete biorientations of odd-dimensional hypercubes decompose into \(2m+1\) Hamiltonian cycles, and the same is true for \(\overleftrightarrow {C}_{n_1}\,\Box \,\cdots \,\Box \,\overleftrightarrow {C}_{n_m}\,\Box \,\overleftrightarrow {K}_{2}\) provided \(n_i\ge 3\) and \(m>2\).

Hamiltonian results for direct products of digraphs are scarce. Keating [28] proves that if \(D_1\) and \(D_2\times \overrightarrow{C}_{|D_1|}\) are Hamiltonian decomposable, then so is \(D_1\times D_2\). Paulraja and Sivasankar [41] establish Hamiltonian decompositions in direct products of biorientations of special classes of graphs.

10.6 Invariants

Here we collect various results on invariants of digraph products, beginning with the chromatic number and proceeding to domination and independence.

The chromatic number \(\chi (D)\) of a digraph D is the chromatic number of the underlying graph of D. For the Cartesian and lexicographic products, the underlying graph of the product is the product of the underlying graph of the factors. Thus for \(\,\Box \,\) and \(\circ \), the chromatic number of products of digraphs coincides with that of products of graphs. This has been well-studied. See Chapter 26 of [18] for a survey.

The situation for the direct and strong products is different. For example, \(\chi (G\times H)\le \min \{\chi (G),\chi (H)\}\) is straightforward, whether G and H are graphs or digraphs. The celebrated Hedetniemi conjecture asserts that \(\chi (G\times H)= \min \{\chi (G),\chi (H)\}\) for all graphs G and H. But if G and H are digraphs, then it is quite possible that \(\chi (G\times H)< \min \{\chi (G),\chi (H)\}\), as was first noted by Poljak and Rödl [43]. More recently, Bessy and Thomassé [2] exhibit a 5-chromatic digraph D for which \(\chi (D\times TT_5)=3\), and Tardif [55] gives digraphs \(G_n\) and \(H_n\) for which \(\chi (G_n)=n\), \(\chi (H_n)=4\) and \(\chi (G_n\times H_n)=3\). Poljak and Rödl introduced the functions

\[f(n)=\min \{\chi (G\times H) \mid G \text { and } H \text { are digraphs with } \chi (G)=\chi (H)=n\},\]

\[g(n)=\min \{\chi (G\times H) \mid G \text { and } H \text { are graphs with } \chi (G)=\chi (H)=n\},\]

and showed that if g is bounded above, then the bound is at most 16. This bound was improved to 9 in [42].

Notice that \(f(n)\le g(n)\le n\), and Hedetniemi’s conjecture is equivalent to the assertion \(g(n)=n\). Certainly if g is bounded, then so is f. Interestingly, the converse is true. Tardif [56] proved that f and g are either both bounded or both unbounded. Thus Hedetniemi’s conjecture is false if f is bounded.

There is an oriented version of the chromatic number, defined on oriented graphs, that is, digraphs with no 2-cycles. An oriented k-coloring of such a digraph D is a map \(c:V(D)\rightarrow [k]\) with the property that \(c(x)\ne c(y)\) whenever \(xy\in A(D)\), and, in addition, the existence of an arc from one color class \(X_1\) to another color class \(X_2\) implies that there are no arcs from \(X_2\) to \(X_1\). The smallest such k is called the oriented chromatic number of D, denoted \(\chi _o(D)\). Equivalently, this is the smallest k for which there is a homomorphism from D to an oriented graph of order k. The oriented chromatic number \(\chi _o(G)\) of a graph G is the maximum oriented chromatic number of all orientations of G. For a survey, see Sopena [51]. Tight bounds on this invariant are rare, even for simple classes of graphs. Aravind, Narayanan and Subramanian [1] show \(\chi _o(G\,\Box \,P_n)\le (2n-1)\chi _o(G)\), and \(\chi _o(G\,\Box \,C_n)\le 2n\chi _o(G)\), as well as \(8\le \chi _o(P_2{\,\boxtimes \,}P_n)\le 11\) and \(10\le \chi _o(P_3{\,\boxtimes \,}P_n)\le 67\). There appears to have been no other work with this invariant on products other than some progress on grids [10, 53].

A dominating set in a digraph D is a subset \(S\subseteq V(D)\) with the property that for any \(y\in V(D)-S\) there exists some \(x\in S\) for which \(xy\in A(D)\). The domination number \(\gamma (D)\) is the size of a smallest dominating set. Domination in digraphs has not been studied as extensively as in graphs. As computing the domination number of a graph is \(\mathcal{NP}\)-hard [13], the same is true for digraphs. (Consider the complete biorientation of an arbitrary graph.) Thus we can expect exact formulas only for products of special classes of digraphs. Liu et al. [35] and Shaheen [45,46,47] consider the case of Cartesian products of directed paths and cycles. For example, Shaheen proves

provided \(m,n>3\), and separate formulas are given for \(m\le 3\). Similar results for the strong product of grid graphs are considered in [48].

Concerning independence, note that (as for the chromatic number) questions of independence in Cartesian and lexicographic products of digraphs coincide with the same questions for graphs. So only the direct and strong product of digraphs are not covered by the theory of graph products. Despite this, there appears to have been little work done with them. But one interesting application deserves mention. The Gallai–Milgram theorem [12] says that the vertices of any digraph with independence number n can be partitioned into n parts, each of which is the vertex set of a directed path (see also Theorem 1.8.4). Hahn and Jackson [14] conjectured that this theorem is the best possible in the sense that for each positive n there is a digraph with independence number n, and such that removing the vertices of any \(n-1\) directed paths still leaves a digraph with independence number n. Bondy, Buchwalder and Mercier [4] used lexicographic products to construct such digraphs for \(n=2^a3^b\). (The general conjecture was proved by Fox and Sudakov [11].)

Finally, we briefly visit the notion of the exponent \(\exp (D)\) of a digraph D, which is the least positive integer k for which any two vertices of D are joined by a diwalk of length k (or \(\infty \) if no such k exists). We say D is primitive if its exponent is finite. Wielandt [58] proved that the exponent of a primitive digraph on n vertices is bounded above by \((n-1)^2+1=n^2-2n+2\), and established a family \(W_n\) of digraphs for which this bound is attained. Kim, Song and Hwang [32] showed that if \(D_1\) and \(D_2\) have order \(n_1\) and \(n_2\), respectively, then \(\exp (D_1\,\Box \,D_2)\le n_1n_2-1\), and this upper bound can be attained only if \(\gcd (n_1,n_2)=1\). Moreover, if \(n_1=n_2=n\), then \(\exp (D_1\,\Box \,D_2)\le n^2-n+1\), and the bound is attained only for \(D_1=\overrightarrow{C}_n\) and \(D_2= W_n\). In [30] they compute the exponents of Cartesian products of cycles, and also show that if \(D_1\) is a primitive graph and \(D_2\) is a strong digraph, then

This work continues in [29], which proves \(\exp (D_1{\,\boxtimes \,}D_2)\le n_1+n_2-2\), with equality for dicycles. Concerning the direct product, the same authors [31] show that for a primitive digraph D there is an integer m for which

10.7 Quotients and Homomorphisms

Here we set up the notions needed in the subsequent sections on cancellation and prime factorization of digraphs. Some of that material is most naturally phrased within the class of digraphs in which loops are allowed. With this in mind, let \(\mathscr {D}\) denote the set of (isomorphism classes of) digraphs without loops, and let \(\mathscr {D}_0\) be the set of digraphs in which loops are allowed. Thus \(\mathscr {D}\subset \mathscr {D}_0\). We admit as an element of \(\mathscr {D}\) the empty digraph O with \(V(O)=\emptyset \).

This section’s main theme is that a digraph is completely determined, up to isomorphism, by the number of homomorphisms into it. Recall that a homomorphism \(f:D\rightarrow D'\) between digraphs \(D,D'\in \mathscr {D}_0\) is a map \(f:V(D)\rightarrow V(D')\) for which \(xy\in A(D)\) implies \(f(x)f(y)\in A(D')\). Also f is a weak homomorphism if \(xy\in A(D)\) implies \(f(x)f(y)\in A(D')\) or \(f(x)=f(y)\).

The set of all homomorphisms \(D\rightarrow D'\) is denoted \(\text {Hom}(D,D')\), and the set of weak homomorphisms \(D\rightarrow D'\) is \(\text {Hom}_w(D,D')\). A homomorphism is injective if it is injective as a map from V(D) to \(V(D')\). We denote the set of all injective homomorphisms \(D\rightarrow D'\) as \(\text {Inj}(D,D')\). (Necessarily \(\text {Inj}(D,D')\) is also the set of injective weak homomorphisms \(D\rightarrow D'\).) Let \(\text {hom}(D,D')=|\text {Hom}(D,D')|\) be the number of homomorphisms \(D\rightarrow D'\). Similarly, \(\text {hom}_w(D,D')=|\text {Hom}_w(D,D')|\), and \(\text {inj}(D,D')=|\text {Inj}(D,D')|\) .

We will need several notions of digraph quotients. For a digraph D in \(\mathscr {D}\) and a partition \(\varOmega \) of V(D), the quotient \(D/\varOmega \) in \(\mathscr {D}\) is the digraph in \(\mathscr {D}\) whose vertices are the partition parts \(U\in \varOmega \), and with an arc from U to V if \(U\ne V\) and D has an arc uv with \(u\in U\) and \(v\in V\). Notice the map \(D\rightarrow D/\varOmega \) sending u to the element \(U\in \varOmega \) with \(u\in U\) is a weak homomorphism.

On the other hand, if \(D\in \mathscr {D}_0\), then the quotient \(D/\varOmega \) in \(\mathscr {D}_0\) is as above, but with a loop \(UU\in A(D/\varOmega )\) whenever D has an arc with both endpoints in U. The map \(D\rightarrow D/\varOmega \) sending u to the element \(U\in \varOmega \) that contains u is a homomorphism. See Figure 10.5.

The remaining results in this section (at least in the class \(\mathscr {D}_0\)) are from Lovász [36]. See also Hell and Nešetřil [23] for a very readable account. The statements concerning weak homomorphisms were developed by Culp in [7].

Lemma 10.7.1

For a digraph D, let \(\mathscr {P}\) be the set of all partitions of V(D).

-

1.

If \(D,G\in \mathscr {D}_0\), then \(\;\displaystyle {\mathrm{hom}(D,G)\,=\,\sum _{\varOmega \in \mathscr {P}}\mathrm{inj}(D/\varOmega ,G)}\) (quotients in \(\mathscr {D}_0\)).

-

2.

If \(D,G\in \mathscr {D}\), then \(\;\displaystyle {\mathrm{hom}_w(D,G)=\sum _{\varOmega \in \mathscr {P}}\mathrm{inj}(D/\varOmega ,G)}\) (quotients in \(\mathscr {D}\)).

Proof:

For the first part, put \(\varUpsilon =\left\{ (\varOmega , f)\ |\ \;\varOmega \in \mathscr {P}, \;f\in \text {Inj}(D/\varOmega , G)\right\} \), so \(|\varUpsilon |=\) \(\sum _{\varOmega \in \mathscr {P}}\text {inj}(D/\varOmega ,G)\). It suffices to exhibit a bijection \(\theta :\text {Hom}(D,G)\rightarrow \) \(\varUpsilon \). Define \(\theta \) to be \(\theta (f)=(\varOmega ,f^*)\), where \(\varOmega =\{f^{-1}(x) \mid x \in f(V(D))\}\in \mathscr {P}\), and \(f^*:D/\varOmega \rightarrow G\) is defined as \(f^*(U)=f(u)\), for \(u\in U\). By construction \(\theta \) is an injective map to \(\varUpsilon \). For surjectivity, take any \((\varOmega ,f)\in \varUpsilon \), and note that \(\theta \) sends the composition \(D\rightarrow D/\varOmega {\mathop {\rightarrow }\limits ^{\small f}}G\) to \((\varOmega ,f)\).

The proof of part 2 is the same, except that \(\text {Hom}(D,G)\) and \(\hom (D,G)\) are replaced by \(\text {Hom}_w(D,G)\) and \(\hom _w(D,G)\), and quotients are in \(\mathscr {D}\). \(\square \)
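Part 1 of Lemma 10.7.1 can be confirmed by brute force on a small example. The Python sketch below is illustrative only: the digraph representation (vertex list, arc list) and all helper names are our own assumptions, and the counting is exhaustive, so it is suitable only for very small digraphs. It enumerates the partitions of V(D), forms each quotient in \(\mathscr {D}_0\) (loops retained), and compares the two sides of the identity.

```python
from itertools import product


def count_hom(X, G, injective=False):
    """Number of (optionally injective) homomorphisms X -> G, by brute force."""
    (VX, AX), (VG, AG) = X, G
    arcs_G = set(AG)
    total = 0
    for image in product(VG, repeat=len(VX)):
        if injective and len(set(image)) < len(VX):
            continue
        f = dict(zip(VX, image))
        if all((f[u], f[v]) in arcs_G for u, v in AX):
            total += 1
    return total


def set_partitions(items):
    """Yield every partition of items as a list of frozensets."""
    items = list(items)
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for part in set_partitions(rest):
        for i in range(len(part)):  # put `first` into an existing block
            yield part[:i] + [part[i] | {first}] + part[i + 1:]
        yield part + [frozenset({first})]  # or into a block of its own


def quotient_with_loops(D, partition):
    """Quotient D/Omega in the class where loops are allowed."""
    V, A = D
    block_of = {v: B for B in partition for v in B}
    return list(partition), {(block_of[u], block_of[v]) for u, v in A}


# D is the directed path 0 -> 1 -> 2; G is a directed 3-cycle with a loop at 0.
D = ([0, 1, 2], [(0, 1), (1, 2)])
G = ([0, 1, 2], [(0, 1), (1, 2), (2, 0), (0, 0)])

lhs = count_hom(D, G)
rhs = sum(
    count_hom(quotient_with_loops(D, omega), G, injective=True)
    for omega in set_partitions(D[0])
)
print(lhs, rhs)  # 6 6
```

In this example the five partitions of V(D) contribute 3, 1, 1, 0 and 1 injective homomorphisms in some order, matching the six diwalks of length 2 in G.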

Proposition 10.7.2

The isomorphism class of a digraph is determined by the number of homomorphisms into it, in the following senses.

-

1.

If \(G,H\in \mathscr {D}_0\) and \(\hom (X,G)=\hom (X,H)\) for all \(X\in \mathscr {D}_0\), then \(G\cong H\).

-

2.

If \(G,H\in \mathscr {D}\) and \(\hom _w(X,G)\!=\!\hom _w(X,H)\) for all \(X\in \mathscr {D}\), then \(G\cong H\).

-

3.

If \(G,H\in \mathscr {D}\) and \(\hom (X,G)\,=\,\hom (X,H)\) for all \(X\in \mathscr {D}\), then \(G\cong H\).

Proof:

For the first statement, say \(\text {hom}(X,G)=\text {hom}(X,H)\) for all \(X\in \mathscr {D}_0\). Our strategy is to show that this implies \(\text {inj}(X,G)=\) \(\text {inj}(X,H)\) for every X. Then the proposition will follow because we get \(\text {inj}(H,G)=\) \(\text {inj}(H,H)>0\) and \(\text {inj}(G,H)=\) \(\text {inj}(G,G)>0\), so there are injective homomorphisms \(G\rightarrow H\) and \(H\rightarrow G\). As G and H are finite, these force \(|V(G)|=|V(H)|\) and \(|A(G)|=|A(H)|\), so each of them is an isomorphism, whence \(G\cong H\).

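We use induction on |X| to show \(\text {inj}(X,G)=\) \(\text {inj}(X,H)\). If \(|X|=1\), then

\[\text {inj}(X,G)=\hom (X,G)=\hom (X,H)=\text {inj}(X,H).\]

If \(|X|>1\), Lemma 10.7.1 (1) applied to \(\hom (X,G)=\hom (X,H)\) yields

\[\sum _{\varOmega \in \mathscr {P}}\text {inj}(X/\varOmega ,G)=\sum _{\varOmega \in \mathscr {P}}\text {inj}(X/\varOmega ,H).\]

Let T be the trivial partition of V(X) consisting of |X| singleton sets. Then \(X/T=X\) and the above equation becomes

\[\text {inj}(X,G)+\sum _{\varOmega \in \mathscr {P}-\{T\}}\text {inj}(X/\varOmega ,G)=\text {inj}(X,H)+\sum _{\varOmega \in \mathscr {P}-\{T\}}\text {inj}(X/\varOmega ,H).\]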

By the induction hypothesis, \(\text {inj}(X/\varOmega ,G)=\text {inj}(X/\varOmega ,H)\) for all non-trivial partitions \(\varOmega \). Consequently \(\text {inj}(X,G)=\) \(\text {inj}(X,H)\), completing the proof.

The second statement is proved in exactly the same way, but using \(\hom _w\) instead of \(\hom \), and part 2 of Lemma 10.7.1 instead of part 1.

Finally, part 3 follows immediately from part 1, because if \(G,H\in \mathscr {D}\) and \(X\in \mathscr {D}_0-\mathscr {D}\), then X has a loop, but neither G nor H has one, so \(\hom (X,G)=0=\hom (X,H)\). \(\square \)

Observe that \(\hom \) and \(\hom _w\) factor neatly over the direct and strong products:

Proposition 10.7.3

Suppose X, D and G are digraphs.

-

1.

If \(X,D,G\in \mathscr {D}_0\), then \(\mathrm{hom}(X,\,D\times G) = \mathrm{hom}(X,D)\cdot \mathrm{hom}(X,G)\).

-

2.

If \(X,D,G\in \mathscr {D}\), then \(\mathrm{hom}_w(X,D{\,\boxtimes \,}G) = \mathrm{hom}_w(X,D)\cdot \mathrm{hom}_w(X,G)\).

Proof:

The map \(\mathrm{Hom}(X,D\times G)\rightarrow \mathrm{Hom}(X,D)\times \mathrm{Hom}(X,G)\) given by \(f\mapsto (\pi _Df,\pi _Gf)\) is injective. And it is surjective because any \((f_D, f_G)\) in the codomain is the image of \(x\mapsto (f_D(x), f_G(x))\), which is a homomorphism by definition of the direct product. This establishes the first statement, and the second follows analogously. \(\square \)
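Both statements of Proposition 10.7.3 can be verified numerically on small digraphs. The following Python sketch is an illustration under our own assumptions: digraphs are pairs (vertex list, arc list), the function names are hypothetical, and homomorphisms are counted by exhaustive enumeration.

```python
from itertools import product


def count_hom(X, G, weak=False):
    """Number of homomorphisms (or weak homomorphisms) X -> G, by brute force."""
    (VX, AX), (VG, AG) = X, G
    arcs_G = set(AG)
    total = 0
    for image in product(VG, repeat=len(VX)):
        f = dict(zip(VX, image))
        if all(
            (f[u], f[v]) in arcs_G or (weak and f[u] == f[v]) for u, v in AX
        ):
            total += 1
    return total


def direct_product(D1, D2):
    (V1, A1), (V2, A2) = D1, D2
    V = list(product(V1, V2))
    A = [((u1, u2), (v1, v2)) for (u1, v1) in A1 for (u2, v2) in A2]
    return V, A


def strong_product(D1, D2):
    (V1, A1), (V2, A2) = D1, D2
    V, A = direct_product(D1, D2)
    A = A + [((u, y), (v, y)) for (u, v) in A1 for y in V2]
    A = A + [((x, u), (x, v)) for x in V1 for (u, v) in A2]
    return V, A


def dicycle(n):
    return list(range(n)), [(i, (i + 1) % n) for i in range(n)]


def dipath(n):
    return list(range(n)), [(i, i + 1) for i in range(n - 1)]


X, D, G = dipath(3), dicycle(3), dicycle(2)

# hom(X, D x G) = hom(X, D) * hom(X, G)
print(count_hom(X, direct_product(D, G)), count_hom(X, D) * count_hom(X, G))

# hom_w(X, D strong G) = hom_w(X, D) * hom_w(X, G)
print(
    count_hom(X, strong_product(D, G), weak=True),
    count_hom(X, D, weak=True) * count_hom(X, G, weak=True),
)
```

With these choices the first line compares 6 with 3 times 2, and the second compares 96 with 12 times 8.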

As an application, we get a quick result for direct and strong powers.

Corollary 10.7.4

If \(D,G\in \mathscr {D}_0\), then \(D^{\times n}\cong G^{\times n}\) if and only if \(D\cong G\). Also, if \(D,G\in \mathscr {D}\), then \(D^{\boxtimes n}\cong G^{\boxtimes n}\) if and only if \(D\cong G\).

Proof:

If \(D\cong G\), then clearly \(D^{\times n}\cong G^{\times n}\). Conversely, if \(D^{\times n}\cong G^{\times n}\), then Proposition 10.7.3 gives \(\hom (X,D)^n=\hom (X,G)^n\), so \(\hom (X,D)=\hom (X,G)\) for any \(X\in \mathscr {D}_0\). Thus \(D\cong G\), by Proposition 10.7.2. Apply a parallel argument to the strong product. \(\square \)

10.8 Cancellation

Given a product \(*\in \{\,\Box \,,{\,\boxtimes \,}, \times , \circ \}\) the cancellation problem seeks the conditions under which \(D*G\cong D*H\) implies \(G\cong H\) for digraphs D, G and H. If this is the case, we say that cancellation holds; otherwise it fails. Obviously cancellation fails if D is the empty digraph, for then \(D*G= O=D*H\) for any G and H. We will see that cancellation holds for each of the products \(\,\Box \,, {\,\boxtimes \,}\) and \(\circ \) provided \(D\ne O\). The situation for the direct product is much more subtle; it is reserved for the end of the section.

As in the previous section, \(\mathscr {D}\) is the class of digraphs (without loops) and \(\mathscr {D}_0\) is the class of digraphs that may have loops. Our first result concerns the strong product. The proof approach is from Culp [7].

Theorem 10.8.1

Let D, G and H be digraphs (without loops), with \(D\ne O\). If \(D{\,\boxtimes \,}G\cong D{\,\boxtimes \,}H\), then \(G\cong H\).

Proof:

Let \(D{\,\boxtimes \,}G\cong D{\,\boxtimes \,}H\). Proposition 10.7.3 says that for any digraph X,

\[\mathrm{hom}_w(X,D)\cdot \mathrm{hom}_w(X,G)=\mathrm{hom}_w(X,D)\cdot \mathrm{hom}_w(X,H).\]

If \(D\ne O\), then \(\mathrm{hom}_w(X,D)>0\) (constant maps are weak homomorphisms), so \(\mathrm{hom}_w(X,G)=\mathrm{hom}_w(X,H)\). Proposition 10.7.2 (2) yields \(G\cong H\). \(\square \)

Theorem 10.8.1 applies only to \(\mathscr {D}\). Indeed, cancellation over \({\,\boxtimes \,}\) fails in \(\mathscr {D}_0\). Consider the case where D is a single vertex with a loop, and \(H=K_1\). Then \(D{\,\boxtimes \,}D=D=D{\,\boxtimes \,}H\), but \(D\not \cong H\).

Echoing Theorem 10.8.1, we get a partial cancellation result for the direct product. The proof is the same, but uses part (1) of Propositions 10.7.2 and 10.7.3 instead of part (2), plus the fact that any constant map from X to a vertex with a loop is a homomorphism. The result is due to Lovász [36].

Theorem 10.8.2

Suppose \(D,G,H\in \mathscr {D}_0\), and D has a loop. If \(D\times G\cong D\times H\), then \(G\cong H\).

Proposition 10.7.3 has no analogue for the Cartesian product, so to deduce cancellation for it we must count our homomorphisms indirectly. The proof of the next theorem is new. A different approach uses unique prime factorization; see the remarks in Chapter 23 of [18].

Theorem 10.8.3

Let D, G and H be digraphs (without loops), with \(D\ne O\). If \(D\,\Box \,G\cong D\,\Box \,H\), then \(G\cong H\).

Proof:

The proof has two parts. First we derive a formula for \(\hom (X, D\,\Box \,G)\). Then we use it to show \(D\,\Box \,G\cong D\,\Box \,H\) implies \(\hom (X,G)=\hom (X,H)\) for all \(X\in \mathscr {D}\), whence Proposition 10.7.2 yields \(G\cong H\).

Our counting formula uses an arc 2-coloring scheme, shown in Figure 10.6. Given a 2-coloring of A(X) by the colors dashed and bold, let \(X_d\) be the spanning subdigraph of X whose arcs are the dashed arcs, and let \(X_b\) be the spanning subdigraph whose arcs are bold. Let \(X_b/X_d\) be the contraction in \(\mathscr {D}_0\) of \(X_b\) in which each connected component of \(X_d\) is collapsed to a vertex. Specifically, \(V(X_b/X_d)\) is the set of connected components of \(X_d\), and

\[A(X_b/X_d)=\{UV \mid X_b \text { has an arc } uv \text { with } u\in U \text { and } v\in V\}.\]

Define \(X_d/X_b\) analogously, as the contraction of \(X_d\) by the connected components of \(X_b\). Note that \(X_b/X_d\) (resp. \(X_d/X_b\)) has a loop at U if and only if the subdigraph of X induced on U has a bold (resp. dashed) arc.

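Let \(\mathscr {C}\) be the set of all arc 2-colorings of X by colors dashed and bold. We claim that there is a disjoint union

\[\text {Hom}(X,D\,\Box \,G)=\bigsqcup _{\mathscr {C}}\text {Hom}(X_d/X_b, D)\times \text {Hom}(X_b/X_d, G).\]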

Indeed, any \(f\in \text {Hom}(X,D\,\Box \,G)\) corresponds to a 2-coloring in \(\mathscr {C}\) and a pair \((\pi _D f,\pi _G f)\in \text {Hom}(X_d/X_b, D)\times \text {Hom}(X_b/X_d, G)\), as follows. For any \(xy\in A(X)\), either \(\pi _Df(x)=\pi _Df(y)\) and \(\pi _Gf(x)\pi _Gf(y)\in A(G)\), or \(\pi _Df(x)\pi _Df(y)\in A(D)\) and \(\pi _Gf(x)=\pi _Gf(y)\). Color xy bold in the first case and dashed in the second. One verifies that \(\pi _Df\) is a well-defined homomorphism \(X_d/X_b\rightarrow D\), and similarly for \(\pi _Gf:X_b/X_d\rightarrow G\), and it is easy to check that \(f\mapsto (\pi _Df,\pi _Gf)\) is injective. For surjectivity, note that for any arc 2-coloring of X and pair \((f_D, f_G)\in \mathrm{Hom}(X_d/X_b, D)\times \mathrm{Hom}(X_b/X_d, G)\), there is an associated \(f\in \text {Hom}(X,D\,\Box \,G)\) defined as \(f(x)=(f_D(U), f_G(V))\), where U is the connected component of \(X_b\) containing x and V is the connected component of \(X_d\) containing x.

It follows that we can count the homomorphisms from X to \(D\,\Box \,G\) as

\[\hom (X,D\,\Box \,G)=\sum _{\mathscr {C}}\hom (X_d/X_b, D)\cdot \hom (X_b/X_d, G). \tag{10.8}\]

This completes the first part of the proof.

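For the second step, suppose \(D\,\Box \,G\cong D\,\Box \,H\) and X is arbitrary. We will show \(\hom (X,G)=\hom (X,H)\) by induction on |X|. If \(|X|=1\), then \(\hom (X,G)=|G|=|H|=\hom (X,H)\). Otherwise, by Equation (10.8),

\[\sum _{\mathscr {C}}\hom (X_d/X_b, D)\cdot \hom (X_b/X_d, G)=\sum _{\mathscr {C}}\hom (X_d/X_b, D)\cdot \hom (X_b/X_d, H).\]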

By induction, \(\hom (X_b/X_d, G)=\hom (X_b/X_d, H)\) for all colorings with at least one dashed arc. Thus, for the coloring where all arcs are bold, we get

\[\hom (X_d/X_b, D)\cdot \hom (X_b/X_d, G)=\hom (X_d/X_b, D)\cdot \hom (X_b/X_d, H).\]

But then \(X_d/X_b\) has no arcs, so \(\hom (X_d/X_b, D)> 0\). Also, \(X_b/X_d=X\), so we get \(\hom (X, G)=\hom (X, H)\). Finally, Proposition 10.7.2 says \(G\cong H\). \(\square \)
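The counting formula from the first part of this proof, which expresses \(\hom (X,D\,\Box \,G)\) as a sum over arc 2-colorings of the products \(\hom (X_d/X_b,D)\cdot \hom (X_b/X_d,G)\), can be compared with a direct count. The Python sketch below is illustrative only. The digraph representation (vertex list, arc list) and every function name are our own assumptions, and all counts are exhaustive, so it is meant for very small digraphs.

```python
from itertools import product


def count_hom(X, G):
    """Number of homomorphisms X -> G, by brute force (loops allowed in X)."""
    (VX, AX), (VG, AG) = X, G
    arcs_G = set(AG)
    total = 0
    for image in product(VG, repeat=len(VX)):
        f = dict(zip(VX, image))
        if all((f[u], f[v]) in arcs_G for u, v in AX):
            total += 1
    return total


def cartesian_product(D1, D2):
    (V1, A1), (V2, A2) = D1, D2
    V = list(product(V1, V2))
    A = [((u, y), (v, y)) for (u, v) in A1 for y in V2]
    A += [((x, u), (x, v)) for x in V1 for (u, v) in A2]
    return V, A


def components(vertices, arcs):
    """Connected components (arc directions ignored), as frozensets."""
    adj = {v: set() for v in vertices}
    for u, v in arcs:
        adj[u].add(v)
        adj[v].add(u)
    seen, comps = set(), []
    for s in vertices:
        if s in seen:
            continue
        comp, stack = {s}, [s]
        while stack:
            x = stack.pop()
            for y in adj[x] - comp:
                comp.add(y)
                stack.append(y)
        seen |= comp
        comps.append(frozenset(comp))
    return comps


def contract(vertices, kept_arcs, collapsing_arcs):
    """Collapse the components spanned by collapsing_arcs; keep loops."""
    comps = components(vertices, collapsing_arcs)
    comp_of = {v: C for C in comps for v in C}
    return comps, {(comp_of[u], comp_of[v]) for u, v in kept_arcs}


def hom_via_colorings(X, D, G):
    VX, AX = X
    arcs = list(AX)
    total = 0
    for colors in product("db", repeat=len(arcs)):
        dashed = [a for a, c in zip(arcs, colors) if c == "d"]
        bold = [a for a, c in zip(arcs, colors) if c == "b"]
        Xd_over_Xb = contract(VX, dashed, bold)
        Xb_over_Xd = contract(VX, bold, dashed)
        total += count_hom(Xd_over_Xb, D) * count_hom(Xb_over_Xd, G)
    return total


X = ([0, 1, 2], [(0, 1), (1, 2)])        # directed path
D = ([0, 1], [(0, 1), (1, 0)])           # directed 2-cycle
G = ([0, 1, 2], [(0, 1), (1, 2), (2, 0)])  # directed 3-cycle
print(count_hom(X, cartesian_product(D, G)), hom_via_colorings(X, D, G))  # 24 24
```

Here each of the four colorings of the two arcs of X contributes 6 to the sum, and the direct count is the number of diwalks of length 2 in the product, which has 6 vertices of out-degree 2.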

Next we aim our homomorphism-counting program at the lexicographic product and bag a particularly strong cancellation law. We use a coloring scheme like that in Figure 10.6. For a homomorphism \(X\rightarrow D\circ G\), arcs mapping to fibers over vertices of D are colored bold, and all other arcs are colored dashed. Equation (10.8) adapts as

\[\hom (X,D\circ G)=\sum _{\mathscr {C}}\hom (X_d/X_b, D)\cdot \hom (X_b, G). \tag{10.9}\]

Verification is left as an exercise. Using this, we can prove right- and left-cancellation for the lexicographic product.

Lemma 10.8.4

Suppose D, G and H are digraphs (without loops) and \(D\ne O\). If \(G\circ D\cong H\circ D\), then \(G\cong H\). If \(D\circ G\cong D\circ H\), then \(G\cong H\).

Proof:

Say \(G\circ D\cong H\circ D\). We will get \(G\cong H\) by showing \(\hom (X,G)=\) \(\hom (X, H)\) for any X. If \(|X|=1\), then \(\hom (X,G)=|G|=|H|=\hom (X, H)\). Let \(|X|>1\) and assume \(\hom (X',G)=\hom (X', H)\) whenever \(|X'|<|X|\). As \(\hom (X,G\circ D)=\hom (X,H\circ D)\), Equation 10.9 gives

\[\sum _{\mathscr {C}}\hom (X_d/X_b, G)\cdot \hom (X_b, D)=\sum _{\mathscr {C}}\hom (X_d/X_b, H)\cdot \hom (X_b, D).\]

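Now, by the induction hypothesis, \(\hom (X_d/X_b, G)=\hom (X_d/X_b, H)\) unless all arcs of X are dashed, in which case \(X_d/X_b=X\) and \(X_b\) is the arcless digraph on V(X). From this, the above equation reduces to \(\hom (X,G)\cdot |D|^{|X|}=\hom (X,H)\cdot |D|^{|X|}\), so \(\hom (X,G)=\hom (X,H)\), and then \(G\cong H\) by Proposition 10.7.2. For the second statement, Equation 10.9 gives

\[\sum _{\mathscr {C}}\hom (X_d/X_b, D)\cdot \hom (X_b, G)=\sum _{\mathscr {C}}\hom (X_d/X_b, D)\cdot \hom (X_b, H),\]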

and we reason as in the first case. \(\square \)

We now discuss a notion that leads to a much stronger cancellation law. A subdigraph X of D is said to be externally related if for each \(b\in V(D)-V(X)\) the following holds: if there is an arc from b to a vertex of X, then there are arcs from b to every vertex in X; and if there is an arc from a vertex of X to b, then there are arcs from every vertex of X to b. (In the context of graphs, see Section 10.2 of [18], and the references therein.)

Given a vertex \(a=(x_1,x_2)\in V(G\circ D)\), let \(D^a\) denote the subdigraph of \(G\circ D\) induced on the vertices \(\{(x_1,x)\mid x\in V(D)\}\). We call \(D^a\) the D-layer through a. The definition of the lexicographic product implies \(D^a\cong D\), and that each \(D^a\) is externally related in \(G\circ D\). Note that each \(D^a\) is also an induced subdigraph of \(G\circ D\). All of these ideas are used in the proof of the next theorem, which was first proved by Dörfler and Imrich [8].

Theorem 10.8.5

Let D, G, H and K be non-empty digraphs (without loops). If \(G\circ D\cong H\circ K\) and \(|D|=|K|\), then \(G\cong H\) and \(D\cong K\).

Proof:

We prove this under the assumption that either D is disconnected, or that both D and its complement \(\overline{D}\) are connected. Once proved, this implies the general result, because if D is connected and \(\overline{D}\) is disconnected, then we can use Equation 10.3 to get \(\overline{G}\circ \overline{D}\cong \overline{H}\circ \overline{K}\). Then \(\overline{G}\cong \overline{H}\) and \(\overline{D}\cong \overline{K}\), and the theorem follows.

Take an isomorphism \(\varphi :G\circ D\rightarrow H\circ K\).

We first claim that for any D-layer \(D^a\), the image \(\pi _H\varphi (D^a)\) is either an arcless subdigraph of H (i.e., one or more vertices of H), or it is a single arc of H. Indeed, suppose it has an arc. Then \(\varphi (D^a)\) has an arc cd with \(\pi _H(c)\ne \pi _H(d)\). We will show that if \(\varphi (D^a)\) has a vertex x with \(\pi _H(x)\notin \{\pi _H(c),\pi _H(d)\}\), then all arcs \(x''y, yx''\) are present in \(\varphi (D^a)\), for any vertex \(y\in \varphi (D^a)\cap (K^c\cup K^d)\) and \(x''\in K^x\). This will contradict our assumption about D, because it implies that \(\varphi (D^a)\) (hence also D) is connected, but its complement is disconnected, for in \(\overline{\varphi (D^a)}\) it is impossible to find a path from x to c or d. Thus let x be as stated above. Select vertices \(c',d',x'\) in \(H\circ K-\varphi (D^a)\) with \(\pi _H(c')=\pi _H(c)\), \(\pi _H(d')=\pi _H(d)\) and \(\pi _H(x')=\pi _H(x)\). (Possible because \(|\varphi (D^a)|=|K|\), and the existence of the arc cd means that no K-layer is contained in \(\varphi (D^a)\).) The definition of \(\circ \) implies \(cd',c'd\in A(H\circ K)\). In turn, \(c'x, xd'\in A(H\circ K)\) because \(\varphi (D^a)\) is externally related. By definition of \(\circ \) we get \(cx',x'd\in A(H\circ K)\), and then also \(x'c, dx'\in A(H\circ K)\) because \(\varphi (D^a)\) is externally related. From this, the definition of \(\circ \) implies that for any vertex \(x''\) of \(K^x\) and y of \(K^c\cup K^d\) we have \(yx'', \,x''y\in A(H\circ K)\). The claim is proved. Now we break into cases.

Case 1. Suppose D is disconnected. Then \(\pi _H\varphi (D^a)\) is never an arc because then every vertex of \(\varphi (D^a)\) in the fiber over the tail of the arc would be adjacent to every vertex in the fiber over the tip, making \(\varphi (D^a)\) connected. It follows that \(\varphi \) maps components of D-layers into components of K-layers. Further, \(\varphi \) maps each component of a D-layer onto a component of a K-layer: Suppose to the contrary that C is a component of \(D^a\) and \(\varphi (C)\) is a proper subgraph of a component of \(K^{\varphi (a)}\). Take a vertex x of \(K^{\varphi (a)}-\varphi (C)\) that is adjacent to or from \(\varphi (C)\). Then \(\varphi ^{-1}(x)\) is adjacent to or from \(C\subseteq D^a\), which is externally related, so \(\varphi ^{-1}(x)\) is adjacent to or from every vertex of \(D^a\). Consequently x is adjacent to or from every vertex of \(\varphi (D^a)\). But then any vertex y of \(\varphi (D^a)\) must be contained in \(K^{\varphi (a)}\), for otherwise it is adjacent to or from \(x\in V(K^{\varphi (a)})\), and hence also to or from \(\varphi (C)\), which is impossible. Thus \(K^{\varphi (a)}\) contains \(\varphi (D^a)\) as well as x, contradicting \(|K^{\varphi (a)}|=|\varphi (D^a)|\).

Thus each component of a D-layer is isomorphic to a component of a K-layer, and conversely, as \(\varphi \) is bijective. As there are |G| D-layers (all isomorphic to D), and just as many K-layers (isomorphic to K), we conclude \(D\cong K\). Thus \(G\circ D\cong H\circ D\), and Lemma 10.8.4 implies \(G\cong H\).

Case 2. Suppose D is connected and its complement is connected. If \(\varphi \) maps a D-layer to a K-layer, then \(D\cong K\) and Lemma 10.8.4 implies \(G\cong H\). Otherwise \(\pi _H\varphi (D^a)\) is an arc for every layer \(D^a\). Thus we can define a map \(f:G\rightarrow H\) by declaring f(x) to be the tail of the arc \(\pi _H\varphi (\pi _G^{-1}(x))\). We will finish the proof by showing f is an isomorphism. (For then \(G\cong H\), and \(D\cong K\), by Lemma 10.8.4.) We will show that f is injective; once this is done the isomorphism properties are simple consequences of the definitions. Suppose to the contrary that f is not injective, which means that for some \(a\ne b\) we have \(\pi _H\varphi (D^a)=wy\) and \(\pi _H\varphi (D^b)=wz\). Say the vertex set of \(\varphi (D^a)\) is \(A_w\cup A_y\) with \(\pi _H(A_w)=w\) and \(\pi _H(A_y)=y\). Likewise the vertex set of \(\varphi (D^b)\) is \(B_w\cup B_z\) with \(\pi _H(B_w)=w\) and \(\pi _H(B_z)=z\). Then there are arcs from each vertex of \(\varphi (B_w)\) to each vertex of \(\varphi (B_z)\), and then by definition of \(\circ \) there are arcs from each vertex of \(\varphi (A_w)\) to each vertex of \(\varphi (B_z)\). For the same reasons there are arcs from \(\varphi (A_w)\) to \(\varphi (A_y)\), and thus from \(\varphi (B_w)\) to \(\varphi (A_y)\). From this we conclude that in \(G\circ D\) there are arcs from every vertex of \(D^a\) to every vertex of \(D^b\), and arcs from every vertex of \(D^b\) to every vertex of \(D^a\). Hence there are arcs from \(\varphi (B_z)\) to \(\varphi (A_w)\), so there is an arc from \(\varphi (B_z)\) to \(\varphi (B_w)\). Thus \(\pi _H\varphi (D^b)\) contains two arcs wz and zw, contradicting the fact that this projection is a single arc. \(\square \)

We get a quick corollary concerning lexicographic powers.

Corollary 10.8.6

If \(G,H\in \mathscr {D}\), then \(G^{\circ n}\cong H^{\circ n}\) if and only if \(G\cong H\).

Having cancellation laws for the strong, Cartesian and lexicographic products, we devote the remainder of this section to the direct product. The next result, due to Lovász [36], is useful in this context.

Proposition 10.8.7

Let D, C, G and H be digraphs in \(\mathscr {D}_0\). If \(C\times G\cong C\times H\) and there is a homomorphism \(D\rightarrow C\), then \(D\times G\cong D\times H\).

Proof:

As \(C\times G\cong C\times H\), Proposition 10.7.3 says \(\hom (X,C)\cdot \hom (X,G)= \hom (X,C)\cdot \hom (X,H)\) for any X. If \(\hom (X,C)\ne 0\), we may cancel it to get \(\hom (X,G)=\hom (X,H)\). On the other hand, the homomorphism \(D\rightarrow C\) guarantees \(\hom (X,D)=0\) whenever \(\hom (X,C)=0\). In either case \(\hom (X,D)\cdot \hom (X,G)= \hom (X,D)\cdot \hom (X,H)\), so \(\hom (X,D\times G)=\hom (X, D\times H)\) by Proposition 10.7.3, and then Proposition 10.7.2 gives \(D\times G\cong D\times H\). \(\square \)

Now observe that cancellation can fail over the direct product. Figure 10.7 shows digraphs \(D,G,H\in \mathscr {D}_0\) for which \(D\times G\cong 3\overrightarrow{C_3} \cong D\times H\), but \(G\not \cong H\). Cancellation can also fail in the class of loopless digraphs. For example, note that for graphs we have \(K_2\times 2C_3 = 2C_6 = K_2\times C_6\), so \(\overleftrightarrow {K_2}\times 2\overleftrightarrow {C_3} \cong \overleftrightarrow {K_2}\times \overleftrightarrow {C_6}\).
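The loopless example can be checked mechanically. The following is a minimal sketch, not part of the original text: the helper names `symmetric_cycle`, `direct_product`, `component_sizes` and `out_degrees` are hypothetical, and digraphs are represented simply as sets of arcs. Since a symmetric digraph in which every vertex has out-degree 2 is a disjoint union of symmetric cycles, comparing component sizes suffices here to decide isomorphism.

```python
from collections import Counter

def symmetric_cycle(n, tag):
    """Arcs of the symmetric cycle on vertices (tag, 0), ..., (tag, n-1)."""
    arcs = set()
    for i in range(n):
        u, v = (tag, i), (tag, (i + 1) % n)
        arcs |= {(u, v), (v, u)}
    return arcs

def direct_product(A, B):
    """Arcs (x,x')(y,y') of the direct product, for xy in A and x'y' in B."""
    return {((x, xp), (y, yp)) for (x, y) in A for (xp, yp) in B}

def component_sizes(arcs):
    """Sorted sizes of the weakly connected components spanned by the arcs."""
    adj = {}
    for u, v in arcs:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    seen, sizes = set(), []
    for s in adj:
        if s in seen:
            continue
        stack, size = [s], 0
        seen.add(s)
        while stack:
            u = stack.pop()
            size += 1
            for w in adj[u] - seen:
                seen.add(w)
                stack.append(w)
        sizes.append(size)
    return sorted(sizes)

def out_degrees(arcs):
    """Out-degree of every vertex that is the tail of some arc."""
    return Counter(u for u, _ in arcs)

K2 = {(0, 1), (1, 0)}                                   # symmetric K_2
G = symmetric_cycle(3, "a") | symmetric_cycle(3, "b")   # 2 C_3
H = symmetric_cycle(6, "c")                             # C_6

KG, KH = direct_product(K2, G), direct_product(K2, H)
print(component_sizes(KG), component_sizes(KH))
print(component_sizes(G), component_sizes(H))
```

Both products consist of two components of size 6 with all out-degrees equal to 2, while the factors G and H have different component sizes.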

A digraph D is called a zero divisor if there are digraphs \(G\not \cong H\) for which \(D\times H\cong D\times G\). For example, Figure 10.7 shows that \(D=\overrightarrow{C_3}\) is a zero divisor, and the equation above shows \(\overleftrightarrow {K_2}\) is a zero divisor. The following characterization of zero divisors is due to Lovász [36].

Theorem 10.8.8

A digraph D is a zero divisor if and only if there exists a homomorphism \(D\rightarrow \overrightarrow{C}_{p_1}+\overrightarrow{C}_{p_2}+ \cdots +\overrightarrow{C}_{p_k}\) into a disjoint union of directed cycles of distinct prime lengths \(p_1,p_2,\ldots , p_k\).

Proof:

We will prove only one (the easier) direction. See [36] for the other.

Suppose there is a homomorphism \(D\rightarrow C=\overrightarrow{C}_{p_1}+\overrightarrow{C}_{p_2}+ \cdots +\overrightarrow{C}_{p_k}\), where the \(p_i\) are distinct primes. Our plan is to produce non-isomorphic digraphs G and H for which \(C\times G=C \times H\), for then Proposition 10.8.7 will ensure \(D\times G\cong D\times H\), showing D is a zero divisor.

Put \(n=p_1p_2\cdots p_k\). Let \(\mathscr {G}\) be the set of positive divisors of n that are products of an even number of the \(p_i\)’s, whereas \(\mathscr {H}\) is the set of divisors that are products of an odd number of the \(p_i\)’s. Let G and H be the disjoint unions

Clearly \(G\not \cong H\). As the direct product distributes over disjoint unions, \(C\times G=C \times H\) will follow provided \(\overrightarrow{C}_{p_i}\times G=\overrightarrow{C}_{p_i} \times H\) for each \(p_i\). We establish this with the aid of Equation (10.4), as follows:

From this, \(C\times G=C\times H\), and hence \(D\times G=D\times H\), as noted above. \(\square \)
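The construction can be tested by machine for small primes. The sketch below is hedged in two ways. First, it assumes the disjoint unions are \(G=\sum _{d\in \mathscr {G}} d\,\overrightarrow{C}_{n/d}\) and \(H=\sum _{d\in \mathscr {H}} d\,\overrightarrow{C}_{n/d}\), which is one choice consistent with the description in the proof and not necessarily the exact formula intended. Second, all function names are hypothetical. A disjoint union of directed cycles is stored as a successor map, and two such digraphs are isomorphic exactly when their multisets of cycle lengths agree.

```python
from collections import Counter
from itertools import combinations
from math import prod

def cycle_union(lengths):
    """Successor map of a disjoint union of directed cycles of the given lengths."""
    succ = {}
    for idx, m in enumerate(lengths):
        for i in range(m):
            succ[(idx, i)] = (idx, (i + 1) % m)
    return succ

def direct_product(s, t):
    """Direct product of two digraphs in which every vertex has out-degree 1."""
    return {(x, y): (s[x], t[y]) for x in s for y in t}

def cycle_type(succ):
    """Multiset of cycle lengths of a digraph given by a bijective successor map."""
    seen, lengths = set(), Counter()
    for v in succ:
        if v in seen:
            continue
        n, w = 0, v
        while w not in seen:
            seen.add(w)
            w = succ[w]
            n += 1
        lengths[n] += 1
    return lengths

def witnesses(primes):
    """Cycle lengths of the ASSUMED G and H: d copies of C_{n/d} for each divisor d."""
    n = prod(primes)
    G, H = [], []
    for r in range(len(primes) + 1):
        for subset in combinations(primes, r):
            d = prod(subset)                     # the empty product is 1
            (G if r % 2 == 0 else H).extend([n // d] * d)
    return G, H

primes = [2, 3]
G_lengths, H_lengths = witnesses(primes)
C = cycle_union(primes)
CG = cycle_type(direct_product(C, cycle_union(G_lengths)))
CH = cycle_type(direct_product(C, cycle_union(H_lengths)))
print(CG == CH, Counter(G_lengths) == Counter(H_lengths))
```

For the primes 2 and 3 the two products have the same cycle type, although G and H themselves do not.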

For example, \(\overrightarrow{C}_n\) is a zero divisor when \(n>1\), as there is a homomorphism \(\overrightarrow{C}_n\rightarrow \overrightarrow{C}_p\) for any prime divisor p of n. Also, each \(\overrightarrow{P}_n\) is a zero divisor, as there are homomorphisms \(\overrightarrow{P}_n\rightarrow \overrightarrow{C}_p\).
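The homomorphisms used in these examples are reduction modulo p. As a minimal sketch (the helper names are hypothetical, and \(\overrightarrow{P}_n\) is assumed to be the directed path on the n vertices \(0,1,\ldots ,n-1\)), one can verify the arc-preservation condition directly:

```python
def directed_cycle(n):
    """Arcs of the directed cycle on vertices 0, ..., n-1."""
    return {(i, (i + 1) % n) for i in range(n)}

def directed_path(n):
    """Arcs of the directed path on vertices 0, ..., n-1 (assumed convention)."""
    return {(i, i + 1) for i in range(n - 1)}

def is_homomorphism(f, A, B):
    """True if the vertex map f sends every arc of A to an arc of B."""
    return all((f(x), f(y)) in B for (x, y) in A)

# Reduction mod 3 maps the directed 6-cycle onto the directed 3-cycle.
print(is_homomorphism(lambda i: i % 3, directed_cycle(6), directed_cycle(3)))
# Reduction mod 2 maps the directed path on 5 vertices into the directed 2-cycle.
print(is_homomorphism(lambda i: i % 2, directed_path(5), directed_cycle(2)))
```

Reduction modulo p preserves arcs of \(\overrightarrow{C}_n\) only when p divides n, which is why a prime divisor of n is needed.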

Paraphrasing Theorem 10.8.8, if there is no homomorphism from D into a disjoint union of directed cycles of distinct prime lengths, then \(D\times G\cong D\times H\) necessarily implies \(G\cong H\). But if there is such a homomorphism, then D is a zero divisor, and there exist non-isomorphic digraphs G and H for which \(D\times G\cong D\times H\), as constructed in the proof of Theorem 10.8.8.

Given a digraph G and a zero divisor D, a natural problem is to determine all digraphs H for which \(G\times D\cong H\times D\). If there is only one such H, then necessarily \(H\cong G\), and cancellation holds. Thus it is meaningful to ask if there are conditions on G and D that force cancellation to hold, even if D is a zero divisor. For example, if \(G=K_1^*\), then \(G\times D\cong H\times D\) implies \(G\cong H\), regardless of whether D is a zero divisor. What other digraphs have this property? We now turn our attention to this type of question, adopting the approach of [15, 19, 20].

For a digraph G, let \(S_{V(G)}\) denote the symmetric group on V(G), that is, the set of bijections from V(G) to itself. For \(\sigma \in S_{V(G)}\), define the permuted digraph \(G^{\sigma }\) by \(V(G^\sigma )=V(G)\) and \(A(G^\sigma )=\{x \sigma (y) \mid xy\in A(G)\}\). Thus \(xy\in A(G)\) if and only if \(x\sigma (y)\in A(G^\sigma )\), and \(xy\in A(G^\sigma )\) if and only if \(x\sigma ^{-1}(y)\in A(G)\). Figure 10.8 shows several examples. The upper part shows a digraph G and two of its permuted digraphs. In the lower part, the cyclic permutation (0245) of the vertices of \(\overrightarrow{C_6}\) yields a permuted digraph \(\overrightarrow{C_6}^{(0245)}=2\overrightarrow{C_3}\). The permuted digraph \(\overrightarrow{C_6}^{(01)}\) is also shown. For another example, note that \(G^{\text {id}}=G\) for any digraph G. It may be possible that \(G^\sigma \cong G\) for some non-identity permutation \(\sigma \). For instance, \(\overrightarrow{{C_6}}^{(024)}\cong \overrightarrow{C_6}\).
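The definition of a permuted digraph translates directly into code. The following is a minimal sketch with hypothetical helper names; a digraph is a set of arcs, a permutation is a dictionary, and `cycle_lengths` assumes every vertex has in-degree and out-degree 1, which holds for any permuted digraph of a directed cycle.

```python
def cycle_to_map(cycle, vertices):
    """Dictionary form of the cyclic permutation written in cycle notation."""
    sigma = {v: v for v in vertices}
    for i, v in enumerate(cycle):
        sigma[v] = cycle[(i + 1) % len(cycle)]
    return sigma

def permuted_digraph(arcs, sigma):
    """Arcs x sigma(y) of the permuted digraph, for xy an arc of the input."""
    return {(x, sigma[y]) for (x, y) in arcs}

def cycle_lengths(arcs):
    """Sorted cycle lengths, assuming all in- and out-degrees equal 1."""
    succ = dict(arcs)
    seen, lengths = set(), []
    for v in succ:
        if v in seen:
            continue
        n, w = 0, v
        while w not in seen:
            seen.add(w)
            w = succ[w]
            n += 1
        lengths.append(n)
    return sorted(lengths)

C6 = {(i, (i + 1) % 6) for i in range(6)}
print(cycle_lengths(permuted_digraph(C6, cycle_to_map((0, 2, 4, 5), range(6)))))
print(cycle_lengths(permuted_digraph(C6, cycle_to_map((0, 2, 4), range(6)))))
```

The first call yields two directed 3-cycles and the second a single directed 6-cycle, in agreement with the two examples stated in the text.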

The significance of permuted digraphs is given by the next proposition, which asserts that \(D\times G\cong D\times H\) implies that H is a permuted digraph of G.

Proposition 10.8.9

Let G, H and D be digraphs, where D has at least one arc. If \(D\times G\cong D\times H\), then \(H\cong G^\sigma \) for some permutation \(\sigma \in S_{V(G)}\). As a partial converse, \(D\times G\cong D\times G^\sigma \) for all \(\sigma \in S_{V(G)}\), provided there is a homomorphism \(D\rightarrow \overrightarrow{P}_2\).

Proof:

Suppose \(D\times G\cong D\times H\), and D has at least one arc. Then there is a homomorphism \(\overrightarrow{P}_2\rightarrow D\), and Proposition 10.8.7 yields an isomorphism \(\varphi : \overrightarrow{P_2}\times G\rightarrow \overrightarrow{P_2}\times H\). We may assume \(\varphi \) has the form \((\varepsilon ,x)\mapsto (\varepsilon , \varphi _\varepsilon (x))\), where \(\varepsilon \in \{0,1\}=\) \(V(\overrightarrow{P_2})\), and each \(\varphi _\varepsilon \) is a bijection \(V(G)\rightarrow V(H)\). (That a \(\varphi \) of such form exists is a consequence of Theorem 3 of [36]. However, it is also easily verified in the present setting, when the common factor is \(\overrightarrow{P}_2\).) Hence \(\varphi _0^{-1}\varphi _1:V(G)\rightarrow V(G)\) is a permutation of V(G). We now show that the map \(\varphi _0:G^{\varphi _0^{-1}\varphi _1}\rightarrow H\) is an isomorphism. Simply observe that

\[\begin{aligned} xy\in A(G^{\varphi _0^{-1}\varphi _1}) &\Longleftrightarrow x\,\varphi _1^{-1}\varphi _0(y)\in A(G)\\ &\Longleftrightarrow (0,x)(1,\varphi _1^{-1}\varphi _0(y))\in A(\overrightarrow{P}_2\times G)\\ &\Longleftrightarrow (0,\varphi _0(x))(1,\varphi _0(y))\in A(\overrightarrow{P}_2\times H)\\ &\Longleftrightarrow \varphi _0(x)\varphi _0(y)\in A(H). \end{aligned}\]

Conversely, let \(\sigma \in S_{V(G)}\). Note that the map \(\varphi \) defined as \(\varphi (0,x)=(0,x)\) and \(\varphi (1,x)=(1,\sigma (x))\) is an isomorphism \(\overrightarrow{P}_2\times G\rightarrow \overrightarrow{P}_2\times G^\sigma \) because \((0,x)(1,y)\in A(\overrightarrow{P}_2\times G)\) if and only if \((0,x)(1,\sigma (y))\in A(\overrightarrow{P}_2\times G^\sigma )\). If there is a homomorphism \(D\rightarrow \overrightarrow{P}_2\), Proposition 10.8.7 gives \(D\times G\cong D\times G^\sigma \). \(\square \)
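The isomorphism used in the converse can be checked on a concrete example. This is a small sketch under stated assumptions: the digraph `G`, the permutation `sigma` and all helper names are hypothetical choices made for illustration, and \(\overrightarrow{P}_2\) is taken to have vertices 0, 1 and the single arc 01.

```python
def direct_product(A, B):
    """Arcs (x,x')(y,y') of the direct product, for xy in A and x'y' in B."""
    return {((x, xp), (y, yp)) for (x, y) in A for (xp, yp) in B}

def permuted_digraph(arcs, sigma):
    """Arcs x sigma(y) of the permuted digraph, for xy an arc of the input."""
    return {(x, sigma[y]) for (x, y) in arcs}

def phi(vertex, sigma):
    """The map (0,x) -> (0,x), (1,x) -> (1,sigma(x)) from the proof."""
    eps, x = vertex
    return (eps, x) if eps == 0 else (eps, sigma[x])

P2 = {(0, 1)}                                   # assumed labelling of the path
G = {(0, 1), (1, 2), (2, 0), (2, 3), (3, 3)}    # an arbitrary example digraph
sigma = {0: 2, 1: 0, 2: 3, 3: 1}                # an arbitrary permutation of V(G)

image = {(phi(u, sigma), phi(v, sigma)) for (u, v) in direct_product(P2, G)}
target = direct_product(P2, permuted_digraph(G, sigma))
print(image == target)
```

Because `phi` is a bijection on the vertex set and carries the arc set of one product exactly onto the arc set of the other, it is an isomorphism for this example.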

In general, the full converse of Proposition 10.8.9 is (as we shall see) false. If there is no homomorphism \(D\rightarrow \overrightarrow{P}_2\), then not every \(\sigma \) will yield a digraph \(H=G^\sigma \) for which \(D\times G\cong D\times H\). In addition, it is possible that \(\sigma \ne \tau \) but \(G^\sigma \cong G^\tau \). Towards clarifying these issues, we next introduce a group action on \(S_{V(G)}\) whose orbits correspond to isomorphism classes of permuted digraphs.

The factorial of a digraph G is a digraph G!, defined as \(V(G!)=S_{V(G)}\), and \(\alpha \beta \in A(G!)\) provided that \(xy\in A(G)\Longleftrightarrow \) \(\alpha (x)\beta (y)\in A(G)\) for all pairs \(x,y\in V(G)\). To avoid confusion with composition, we will denote arcs \(\alpha \beta \) of G! as \([\alpha ,\beta ]\). Note that A(G!) has a group structure as a subgroup of \(S_{V(G)}\times S_{V(G)}\), that is, we can multiply arcs as \([\alpha ,\beta ][\gamma ,\delta ]=\) \([\alpha \gamma , \beta \delta ]\).

Observe that the definition implies that there is a loop \([\alpha , \alpha ]\) at \(\alpha \in V(G!)\) if and only if \(\alpha \) is an automorphism of G. In particular, any G! has a loop at the identity \(\text {id}\).

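Our first example explains the origins of our term “factorial.” Let \(K_n^*\) be the complete symmetric digraph with a loop at each vertex, and note that

\[K_n^*!\cong K_{n!}^*,\]

because every ordered pair of vertices of \(K_n^*\) is an arc, so the defining condition for an arc \([\alpha ,\beta ]\) holds for every pair of permutations \(\alpha ,\beta \).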

For less obvious computations, it is helpful to keep in mind the following interpretation of A(G!). Any arc \([\alpha ,\beta ]\in A(G!)\) is a permutation of the arcs of G, where \([\alpha ,\beta ](xy)=\alpha (x)\beta (y)\). This permutation preserves in-incidences and out-incidences in the following sense: Given two arcs xy, xz of G that have a common tail, \([\alpha ,\beta ]\) carries them to the two arcs \(\alpha (x)\beta (y)\), \(\alpha (x)\beta (z)\) of G with a common tail. Given two arcs xy, zy with a common tip, \([\alpha ,\beta ]\) carries them to the two arcs \(\alpha (x)\beta (y)\), \(\alpha (z)\beta (y)\) of G with a common tip.

Bear in mind, however, that even if the head of xy meets the tail of yz, then the arcs \([\alpha ,\beta ](xy)\) and \([\alpha ,\beta ](yz)\) need not meet; they can be quite far apart in G. To illustrate these ideas, Figure 10.9 shows the effect of a typical \([\alpha ,\beta ]\) on the arcs incident with a typical vertex z of G.

Let’s use these ideas to compute the factorial of the transitive tournament \(TT_n\), which has distinct out- and in-degrees \(0,1,\ldots , n-1\). The above discussion implies that if \([\alpha ,\beta ]\in A(TT_n!)\), then the out-degree of any \(x\in V(TT_n)\) equals the out-degree of \(\alpha (x)\). Hence \(\alpha =\mathrm {id}\). The same argument involving in-degrees gives \(\beta =\mathrm {id}\). Therefore \(TT_n!\) has n! vertices but only one arc \([\mathrm {id},\mathrm {id}]\). Figure 10.10 shows \(TT_3!\), plus two other examples.
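For small digraphs the factorial can be computed by brute force straight from the definition. The sketch below is illustrative only: the function name `factorial_arcs` is hypothetical, vertices are assumed to be \(0,\ldots ,n-1\), and a permutation is a tuple `p` with `p[i]` the image of `i`. The running time grows like \((n!)^2\), so this is practical only for very small n.

```python
from itertools import permutations

def factorial_arcs(n, arcs):
    """All pairs (alpha, beta) with xy in A(G) iff alpha(x)beta(y) in A(G)."""
    perms = list(permutations(range(n)))
    pairs = [(x, y) for x in range(n) for y in range(n)]
    return {
        (a, b)
        for a in perms
        for b in perms
        if all(((x, y) in arcs) == ((a[x], b[y]) in arcs) for x, y in pairs)
    }

TT3 = {(0, 1), (0, 2), (1, 2)}                       # transitive tournament
K3_star = {(x, y) for x in range(3) for y in range(3)}  # complete, with loops
C3 = {(0, 1), (1, 2), (2, 0)}                        # directed 3-cycle

print(factorial_arcs(3, TT3))
print(len(factorial_arcs(3, K3_star)))
# Loops of G! are the pairs (alpha, alpha), i.e. the automorphisms of G.
print(sorted(a for (a, b) in factorial_arcs(3, C3) if a == b))
```

For the transitive tournament on three vertices only the pair of identity permutations survives, while for \(K_3^*\) all \(3!\cdot 3!=36\) pairs are arcs.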

The group A(G!) acts on \(S_{V(G)}\) as \([\alpha ,\beta ]\cdot \sigma =\alpha \sigma \beta ^{-1}\), and this determines the situation in which \(G^\sigma \cong G^\tau \).

Proposition 10.8.10

If \(\sigma ,\tau \) are permutations of the vertices of a digraph G, then \(G^\sigma \cong G^\tau \) if and only if \(\sigma \) and \(\tau \) are in the same A(G!)-orbit.

Proof:

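If there is an isomorphism \(\varphi :G^\sigma \rightarrow G^\tau \), then for any \(x,y\in V(G)\),

\[\begin{aligned} xy\in A(G) &\Longleftrightarrow x\sigma (y)\in A(G^\sigma )\\ &\Longleftrightarrow \varphi (x)\,\varphi \sigma (y)\in A(G^\tau )\\ &\Longleftrightarrow \varphi (x)\,\tau ^{-1}\varphi \sigma (y)\in A(G). \end{aligned}\]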

This means \([\varphi , \tau ^{-1}\varphi \sigma ]\in A(G!)\). Then \([\varphi , \tau ^{-1}\varphi \sigma ]\cdot \sigma =\tau \), so \(\sigma \) and \(\tau \) are indeed in the same orbit.

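Conversely, suppose \(\sigma \) and \(\tau \) are in the same orbit. Take \([\alpha ,\beta ]\in A(G!)\) with \(\tau =[\alpha ,\beta ]\cdot \sigma =\alpha \sigma \beta ^{-1}\). Then \(\alpha :G^\sigma \rightarrow G^{\alpha \sigma \beta ^{-1}}=G^\tau \) is an isomorphism:

\[\begin{aligned} xy\in A(G^\sigma ) &\Longleftrightarrow x\,\sigma ^{-1}(y)\in A(G)\\ &\Longleftrightarrow \alpha (x)\,\beta \sigma ^{-1}(y)\in A(G)\\ &\Longleftrightarrow \alpha (x)\,\alpha \sigma \beta ^{-1}\beta \sigma ^{-1}(y)\in A(G^{\alpha \sigma \beta ^{-1}})\\ &\Longleftrightarrow \alpha (x)\alpha (y)\in A(G^\tau ). \end{aligned}\]

\(\square \)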

Given an arc \([\alpha ,\beta ]\in A(G!)\), we have \([\alpha ,\beta ]\cdot \beta =\alpha \). The previous proposition then assures \(G^\alpha \cong G^\beta \), and therefore yields the following corollary.

Corollary 10.8.11

If two permutations \(\sigma ,\tau \) are in the same component of G!, then \(G^\sigma \cong G^\tau \).
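Proposition 10.8.10 and Corollary 10.8.11 can be confirmed by exhaustive search on a small digraph. The following sketch makes several assumptions for illustration: G is the directed path with arcs 01 and 12, permutations are tuples `p` with `p[i]` the image of `i`, products such as \(\alpha \sigma \beta ^{-1}\) are composed right to left, and all function names are hypothetical.

```python
from itertools import permutations

def compose(a, b):
    """The permutation 'b first, then a'."""
    return tuple(a[j] for j in b)

def inverse(a):
    """Inverse of the permutation a."""
    inv = [0] * len(a)
    for i, j in enumerate(a):
        inv[j] = i
    return tuple(inv)

def permuted(arcs, s):
    """Arcs x s(y) of the permuted digraph."""
    return {(x, s[y]) for (x, y) in arcs}

def factorial_arcs(n, arcs):
    """All pairs (alpha, beta) with xy in A(G) iff alpha(x)beta(y) in A(G)."""
    perms = list(permutations(range(n)))
    pairs = [(x, y) for x in range(n) for y in range(n)]
    return {(a, b) for a in perms for b in perms
            if all(((x, y) in arcs) == ((a[x], b[y]) in arcs) for x, y in pairs)}

def orbit(sigma, fact):
    """Orbit of sigma under [alpha, beta] . sigma = alpha sigma beta^{-1}."""
    return {compose(compose(a, sigma), inverse(b)) for (a, b) in fact}

def isomorphic(n, A, B):
    """Brute-force isomorphism test for digraphs on vertices 0, ..., n-1."""
    return any({(p[x], p[y]) for (x, y) in A} == B
               for p in permutations(range(n)))

n, G = 3, {(0, 1), (1, 2)}
fact = factorial_arcs(n, G)
perms = list(permutations(range(n)))
orbits = {frozenset(orbit(s, fact)) for s in perms}

# Same orbit exactly when the permuted digraphs are isomorphic.
agrees = all((t in orbit(s, fact)) == isomorphic(n, permuted(G, s), permuted(G, t))
             for s in perms for t in perms)
print(len(fact), len(orbits), agrees)
```

Here A(G!) has two elements, the six permutations fall into four orbits, and membership in a common orbit coincides with isomorphism of the permuted digraphs.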