Abstract

The paper presents learning algorithms for a multidimensional adaptive growing neuro-fuzzy system with optimization of a neuron ensemble in every cascade. A building block for this architecture is a multidimensional neo-fuzzy neuron. The demonstrated system is distinguished from the well-recognized cascade systems in its ability to handle multidimensional data sequences in an online fashion, which makes it possible to treat non-stationary stochastic and chaotic data with the demanded accuracy. The most important privilege of the considered hybrid neuro-fuzzy system is its trait to accomplish a procedure of parallel computation for a data stream based on peculiar elements with upgraded approximating properties. The developed system turns out to be rather easy from the effectuation standpoint; it holds a high processing speed and approximating features. Compared to acclaimed countertypes, the developed system guarantees computational simpleness and owns both filtering and tracking aptitudes. The proposed system, which is ultimately a growing (evolving) system of computational intelligence, assures processing the incoming data in an online fashion just unlike the rest of conventional systems.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

- Learning method

- Cascade system

- Ensemble of neurons

- Multidimensional neo-fuzzy neuron

- Computational intelligence

- Adaptive neuro-fuzzy system

1 Introduction

A great combination of different neuro-fuzzy systems is of considerable use nowadays for a large variety of data processing problems. This fact should be highlighted by a number of preferences that neuro-fuzzy systems hold over other existing methods, and that comes from their abilities to get trained as well as their universal approximating capacities.

A degree of the training procedure may be refined by adapting both a network’s set of synaptic weights and its topology [1,2,3,4,5,6,7,8]. This notion is the ground rules for evolving (growing) systems of computational intelligence [9,10,11]. It stands to mention that probably one of the most prosperous actualizations of this attitude is cascade-correlation neural networks [12,13,14] by reason of their high level of efficacy and learning simplicity for both a network scheme and for synaptic weights. In general terms, such sort of a network gets underway with a rather simple architecture containing an ensemble of neurons to be trained irrespectively (a case of the first cascade). Every neuron in an ensemble can possess various activation functions as well as learning procedures. Nodes (neurons) in the ensemble do not intercommunicate while they are being learnt.

Eventually, when all the elements in the ensemble of the first cascade have had their weights adapted, the best neuron in relation to a learning criterion builds up the first cascade, and its synaptic weights are not able of being configured any longer. In the next place, the second cascade is commonly formed by means of akin neurons in the training ensemble. The sole difference is that neurons to be learnt in the ensemble of the second cascade own an additional input (and consequently an additional synaptic weight) which proves to be an output of the first cascade. In similar fashion to the first cascade, the second one withdraws all elements except a single one, which gives the best performance. Its synaptic weights should be fixed afterwards. Nodes in the third cascade hold two additional inputs, namely the outputs of the first and second cascades. The growing network keeps on adding new cascades to its topology until it gains the required quality of the results received over the given training set.

By way of evading multi-epoch learning [15,16,17,18,19,20,21,22,23], various kinds of neurons (preferably their outputs should depend in a linear manner on synaptic weights) may be utilized as the network’s elements. This could give the opportunity to exploit some optimal in speed learning algorithms and handle data as it arrives to the network. In the meantime, if the system is being trained in an online manner, it looks impossible to detect the best neuron in the ensemble. While handling non-stationary data objects, one node in a training ensemble may be confirmed to be the best element for one part of the training data sample (but it cannot be selected as the best one for the other parts). It may be recommended that all the units should be abandoned in the training ensemble, and some specific optimization method (selected in agreement with a general quality criterion for the network) is meant to be used for estimation of an output of the cascade.

It will be observed that the widely recognized cascade neural networks bring into action a non-linear mapping \( R^{n} \to R^{1} \), which means that a common cascade neural network is a system with a single output. By contrast, many problems solved by means of neuro-fuzzy systems demand a multidimensional mapping \( R^{n} \to R^{g} \) to be executed, that finally accounts for the fact that a number of elements to be trained in every cascade is \( g \) times more by contrast to a common neural network, which makes this sort of a system too ponderous. Hence, it seems relevant to operate a specific multidimensional neuron’s topology as the cascade network’s unit with multiple outputs instead of traditional.

The described growing cascade neuro-fuzzy system of computational intelligence is actually an effort to develop a system for handling a data stream that is fed to the system in an online way and that is in possession of a far smaller amount of parameters to be set as opposed to other widely recognized analogues.

2 An Architecture of the Hybrid Growing System

A scheme of the introduced hybrid system is represented in Fig. 1. In fact, it coincides with architecture of the hybrid evolving neural network with an optimized ensemble in every cascade group of elements to have been developed in [24,25,26,27,28,29]. A basic dissimilarity lies in a type of elements utilized and learning procedures respectively.

A network’s input can be described by a vector signal \( x\left( k \right) = \left( {x_{1} \left( k \right),x_{2} \left( k \right), \ldots ,x_{n} \left( k \right)} \right)^{T} \), where \( k = 1,2, \ldots \) stands for either a plurality of observations in the “object-property” table or an index of the current discrete time. These signals are moved to inputs of each neuron \( MN_{j}^{[m]} \) in the system (\( j = 1,2, \ldots ,q \) denotes a number of neurons in a training ensemble, \( m = 1,2, \ldots \) specifies a cascade’s number). A vector output \( \hat{y}^{[m]j} \left( k \right) = \left( {\hat{y}_{1}^{[m]j} \left( k \right),\hat{y}_{2}^{[m]j} \left( k \right), \ldots ,\hat{y}_{d}^{[m]j} \left( k \right), \ldots ,\hat{y}_{g}^{[m]j} \left( k \right)} \right)^{T} \) is eventually produced, \( d = 1,2, \ldots ,g \). These outputs are in the next place fed to a generalizing neuron \( GMN^{[m]} \) to reproduce an optimized vector output \( \hat{y}^{*[m]} \left( k \right) \) for the cascade m. Just as the input of the nodes in the first cascade is \( x\left( k \right) \), elements in the second cascade take \( g \) additional arriving signals for the obtained signal \( \hat{y}^{*[1]} \left( k \right) \), neurons in the third cascade have \( 2g \) additional inputs \( \hat{y}^{*[1]} \left( k \right),\hat{y}^{*[2]} \left( k \right) \), whilst neurons in the m-th cascade own \( \left( {m - 1} \right)g \) additional incoming signals \( \hat{y}^{*[1]} \left( k \right),\hat{y}^{*[2]} \left( k \right), \ldots ,\hat{y}^{*[m - 1]} \left( k \right) \). New cascades are becoming a part of the hybrid system within the learning procedure just as it turns out to be clear that an architecture with a current amount of cascades does not provide the required accuracy.

Since a system signal in a conventional neo-fuzzy neuron [30,31,32] is governed by the synaptic weights in a linear manner, any adaptive identification algorithm [33,34,35] may actually be applied to learning the network’s neo-fuzzy neurons (like either the exponentially-weighted least-squares method in a recurrent form

(here \( y^{d} \left( {k + 1} \right),\,d = 1,2, \ldots ,g \) specifies an external learning signal, \( 0 < \alpha \le 1 \) marks a forgetting factor) or the gradient learning algorithm with both tracking and filtering properties [35])

An architecture of a typical neo-fuzzy neuron (Fig. 2) as part of the multidimensional neuron \( MN_{g}^{[1]} \) in the cascade system is abundant, since a vector of input signals \( x\left( k \right) \) (the first cascade) is sent to same-type non-linear synapses \( NS_{di}^{[1]j} \) of the neo-fuzzy neurons, where each neuron obtains a signal \( \hat{y}_{d}^{[1]j} \left( k \right),\,\,d = 1,2, \ldots ,g \) at its output. As a result, components of the output vector \( \hat{y}^{[1]j} \left( k \right) = \left( {\hat{y}_{1}^{[1]j} \left( k \right),\hat{y}_{2}^{[1]j} \left( k \right), \ldots ,\hat{y}_{g}^{[1]j} \left( k \right)} \right)^{T} \) are computed irrespectively.

This fact can be missed by introducing a multidimensional neo-fuzzy neuron [36], whose architecture is shown in Fig. 3 and is a modification of the system proposed in [37]. Its structural units are composite non-linear synapses \( MNS_{i}^{[1]j} \), where each synapse contains \( h \) membership functions \( \mu_{li}^{[1]j} \) and \( gh \) tunable synaptic weights \( w_{dli}^{[1]j} \). In this way, the multidimensional neo-fuzzy neuron in the first cascade contains \( ghn \) synaptic weights, but only \( hn \) membership functions. That’s \( g \) times smaller in comparison with a situation if the cascade is formed of common neo-fuzzy neurons.

Assuming a \( \left( {hn \times 1} \right) \) – vector of membership functions

\( \mu^{[1]j} \left( k \right) = \left( {\mu_{11}^{[1]j} \left( {x_{1} \left( k \right)} \right),\mu_{21}^{[1]j} \left( {x_{1} \left( k \right)} \right), \ldots ,\mu_{h1}^{[1]j} \left( {x_{1} \left( k \right)} \right), \ldots ,\mu_{li}^{[1]j} \left( {x_{i} \left( k \right)} \right), \ldots ,\mu_{hn}^{[1]j} \left( {x_{n} \left( k \right)} \right)} \right)^{T} \) and a \( \left( {g \times hn} \right) \) – matrix of synaptic weights

the output signal \( MN_{j}^{[1]} \) can be written down at the \( k \) – th time moment in the form of

Learning the multidimensional neo-fuzzy neuron may be carried out applying either a matrix modification of the exponentially-weighted recurrent least squares method (1) in the form of

or a multidimensional version of the algorithm (2) [38]:

here \( y\left( {k + 1} \right) = \left( {y^{1} \left( {k + 1} \right),y^{2} \left( {k + 1} \right), \ldots ,y^{g} \left( {k + 1} \right)} \right)^{T} . \)

The rest of cascades are trained in a similar fashion, while a vector of membership functions \( \mu^{[m]j} \left( {k + 1} \right) \) in the \( m \)-th cascade enlarges its dimensionality by \( \left( {m - 1} \right)g \) elements which are guided by the preceding cascades’ outputs.

3 Output Signals’ Optimization of the Multidimensional Neo-fuzzy Neuron Ensemble

Outputs generated by the neurons in each ensemble are combined by the corresponding neuron \( GN^{[m]} \), whose output accuracy \( \hat{y}^{*[m]} \left( k \right) \) must be higher than the accuracy of any output \( \hat{y}_{j}^{[m]} \left( k \right) \). This task can be solved through the use of the neural networks’ ensembles approach. Although the well-recognized algorithms are not designated for operating in an online fashion, in this case one could use the adaptive generalizing forecasting [39, 40].

Let’s introduce a vector of ensemble inputs for the \( m \)-th cascade

then an optimal output of the neuron \( GN^{[m]} \), which is intrinsically an adaptive linear associator [1,2,3,4,5,6,7,8], can be defined as

or with additional constraints on unbiasedness

where \( c^{\left[ m \right]} = \left( {c_{1}^{\left[ m \right]} ,\,c_{2}^{\left[ m \right]} ,\, \ldots ,\,c_{q}^{\left[ m \right]} } \right)^{T} \) and \( E = \left( {1,1, \ldots ,1} \right)^{T} \) are \( \left( {q \times 1} \right) \) – vectors.

The vector of generalization coefficients \( c^{[m]} \) can be found with the help of the Lagrange undetermined multipliers’ method. For this reason, we’ll introduce a \( \left( {k \times g} \right) \) – matrix of reference signals and a \( \left( {k \times gq} \right) \) – matrix of ensemble’s output signals

a \( \left( {k \times g} \right) - \) matrix of innovations

and the Lagrange function

Here \( I \) is a \( \left( {g \times g} \right) - \) identity matrix, \( \otimes \) is the tensor product symbol, \( \lambda \) stands for an undetermined Lagrange multiplier.

Solving the Karush-Kuhn-Tucker system of equations

allows obtaining the desired vector of generalization coefficients as follows

where

\( c^{*[m]} \left( k \right) \) is an estimate of the traditional least squares method obtained by the previous \( k \) observations.

In order to research vector properties of the obtained generalization coefficients, we should make some obvious transformations. Considering that a vector of learning errors for the neuron \( GMN^{[m]} \) can be written down in the form

the Lagrange function (7) can be also put down in the form

and then solving a system of equations

we receive

where \( R^{[m]} \left( k \right) = \sum\limits_{\tau = 1}^{k} {\upsilon^{[m]} \left( \tau \right)} \upsilon^{[m]T} \left( \tau \right) = V^{[m]T} \left( k \right)V^{[m]} \left( k \right). \)

The Lagrange function’s value can be easily written down at a saddle point

analyzing which by the Cauchy-Schwarz inequality, it can be shown that the generalized output signal \( \hat{y}^{*[m]} \left( k \right) \) is not inferior to accuracy of the best neuron \( \hat{y}^{[m]j} \left( k \right) \), \( j = 1,2, \ldots ,q \) in an ensemble of output signals.

In order to provide information processing in an online manner, the expression (8) should be performed in a recurrent form which acquires the view of (by using the Sherman-Morrison-Woodbery formula)

Unwieldiness of the algorithm (9), that is in fact the Gauss-Newton optimization procedure, has to do with inversion of \( \left( {g \times g} \right) \) – matrices at every time moment \( k \). And when this value \( g \) is large enough, it is much easier to use gradient learning algorithms to tune the weight vector \( c^{[m]} \left( k \right) \). The learning algorithm can be obtained easily enough if the Arrow-Hurwitz gradient algorithm is used for a search of the Lagrange function’s saddle point which takes on the form in this case

or specifically for (10)

where \( \eta_{c} \left( {k + 1} \right) \), \( \eta_{\lambda } \left( {k + 1} \right) \) are some learning rate parameters.

The Arrow-Hurwitz procedure converges to a saddle point of the Lagrange function when a range of learning rate parameters \( \eta_{c} \left( {k + 1} \right) \) and \( \eta_{\lambda } \left( {k + 1} \right) \) is sufficiently wide. However, one could try to optimize these parameters to reduce training time. For this purpose, we should write down the expression (10) in the form

A left side of the expression (12) describes an a posteriori error \( \tilde{e}^{[m]} \left( k \right) \), which is obtained after one cycle of parameters’ tuning, i.e.

Introducing the squared norm of this error

and minimizing it in \( \eta_{c} \left( {k + 1} \right) \), i.e. solving a differential equation

we come to an optimal value for a learning rate parameter

Then the algorithms (10) and (11) can be finally put down as follows

The procedure (13) is computationally much easier than (9), and if there are no constraints (6) it turns into a multidimensional modification of the Kaczmarz-Widrow-Hoff algorithm which is widely spread in the problems of ANNs learning.

Elements of a generalization coefficients’ vector can be interpreted as membership levels, if a constraint on synaptic weights’ non-negativity for the generalizing neuron \( GMN^{[m]} \) is introduced into the Lagrange function to be optimized, i.e.

Introducing the Lagrange function with additional constraints-inequalities

(here \( \rho \) is a \( \left( {q \times 1} \right) \) – vector of non-negative undetermined Lagrange multipliers) and solving the Karush-Kuhn-Tucker system of equations

an analytical solution takes on the form

and having used the Arrow-Hurwicz-Uzawa procedure, we obtain a learning algorithm of the neuron \( GMN^{[m]} \) in the view of

The first ratio (15) can be transformed into the form of

where \( c^{[m]} \left( {k + 1} \right) \) is defined by the ratio (8), \( \left( {I - P^{[m]} \left( {k + 1} \right)EE^{T} \left( {E^{T} P^{[m]} \left( {k + 1} \right)E} \right)^{ - 1} } \right) \) is a projector to the hyperplane \( \tilde{c}^{[m]T} \left( {k + 1} \right)E = 1 \). It can be easily noticed that the vectors \( E \) and \( \left( {I - P^{[m]} \left( {k + 1} \right)EE^{T} \left( {E^{T} P^{[m]} \left( {k + 1} \right)E} \right)^{ - 1} } \right)P^{[m]} \left( {k + 1} \right)\rho \left( k \right) \) are orthogonal, so we can write down the ratios (14) and (15) in a simpler form

Then the learning algorithm of the generalizing neuron with the constraints (14) finally takes on the form

The learning procedure (16) can be considerably simplified similar to the previous one with the help of the gradient algorithm

Carrying out transformations similar to the abovementioned ones, we finally obtain

The algorithm (17) comprises the procedure (13) as a particular case.

4 Experimental Results

To illustrate the effectiveness of the suggested adaptive neuro-fuzzy system and its learning procedures, we have actualized an experimental test by means of handling the chaotic Lorenz attractor identification. The Lorenz attractor is a fractal structure which matches the Lorenz oscillator’s behavior. The Lorenz oscillator is a three-dimensional dynamical system that puts forward a chaotic flow that is also renowned for its lemniscate shape. As a matter of fact, a state of the dynamical system (three variables of the three-dimensional system) is evolving with the course of time in a complex non-repeating pattern.

The Lorenz attractor may be exemplified by a differential equation in the form of

This system of Eq. (18) can be also put down in the recurrent form

where parameter values are: \( \sigma = 10,\,r = 28,\,b = 2.66,\,dt = 0.001 \).

A data set was acquired with the benefit of (19) which comprises 10000 samples, where 7000 points establish a training set, and 3000 samples make up a validation set.

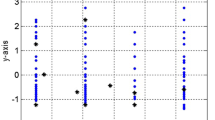

In our system, we had 2 cascades containing 2 multidimensional neurons each and a generalized neuron in each cascade. The first neuron in each cascade involves 2 membership functions. The graphical results are represented in Figs. 4, 5 and 6. One can basically see the forecasting results for the last cascade in Table 1.

5 Conclusion

The hybrid growing neuro-fuzzy architecture and its learning algorithms for the multidimensional growing hybrid cascade neuro-fuzzy system which enables neuron ensemble optimization in every cascade were considered and introduced in the article. The most important privilege of the considered hybrid neuro-fuzzy system is its trait to accomplish a procedure of parallel computation for a data stream based on peculiar elements with upgraded approximating properties. The developed system turns out to be rather easy from the effectuation standpoint; it holds a high processing speed and approximating features. It can be described by a rather high training speed which makes it possible to process online sequential data. The distinctive feature of the introduced system is the fact that every cascade is put together by an ensemble of neurons, and their outputs are joined with the optimization procedure of a specific sort. Thus, every cascade produces an output signal of the optimal accuracy. The proposed system, which is ultimately a growing (evolving) system of computational intelligence, assures processing the incoming data in an online fashion just unlike the rest of conventional systems.

References

da Silva, I.N., Spatti, D.H., Flauzino, R.A., Bartocci Liboni, L.H., dos Reis Alves, S.F.: Artificial Neural Networks: A Practical Course. Springer, Cham (2017)

Cartwright, H.: Artificial Neural Networks. Springer, New York (2015)

Suzuki, K.: Artificial Neural Networks: Architectures and Applications. InTech, New York (2013)

Koprinkova-Hristova, P., Mladenov, V., Kasabov, N.K.: Artificial Neural Networks: Methods and Applications in Bio-/Neuroinformatics. Springer, Cham (2015)

Borowik, G., Klempous, R., Nikodem, J., Jacak, W., Chaczko, Z.: Advanced Methods and Applications in Computational Intelligence. Springer, Cham (2014)

Graupe, D.: Principles of Artificial Neural Networks. Advanced Series in Circuits and Systems. World Scientific Publishing Co. Pte. Ltd., Singapore (2007)

Haykin, S.: Neural Networks and Learning Machines, 3rd edn. Prentice Hall, New Jersey (2009)

Hanrahan, G.: Artificial Neural Networks in Biological and Environmental Analysis. CRC Press, Boca Raton (2011)

Lughofer, E.: Evolving Fuzzy Systems and Methodologies: Advanced Concepts and Applications. Springer, Heidelberg (2011)

Angelov, P., Filev, D., Kasabov, N.: Evolving Intelligent Systems: Methodology and Applications. Willey, Hoboken (2010)

Kasabov, N.: Evolving Connectionist Systems. Springer, London (2003)

Fahlman, S., Lebiere, C.: The cascade-correlation learning architecture. Adv. Neural. Inf. Process. Syst. 2, 524–532 (1990)

Avedjan, E.D., Barkan, G.V., Levin, I.K.: Cascade neural networks. J. Avtomatika i Telemekhanika 3, 38–55 (1999)

Prechelt, L.: Investigation of the CasCor family of learning algorithms. Neural Netw. 10, 885–896 (1997)

Bodyanskiy, Y., Dolotov, A., Pliss, I., Viktorov, Y.: The cascaded orthogonal neural network. Int. J. Inf. Sci. Comput. 2, 13–20 (2008)

Bodyanskiy, Y., Viktorov, Y.: The cascaded neo-fuzzy architecture using cubic-spline activation functions. Int. J. Inf. Theor. Appl. 16(3), 245–259 (2009)

Bodyanskiy, Y., Viktorov, Y.: The cascaded neo-fuzzy architecture and its on-line learning algorithm. Int. J. Intel. Process. 9, 110–116 (2009)

Bodyanskiy, Y., Viktorov, Y., Pliss, I.: The cascade growing neural network using quadratic neurons and its learning algorithms for on-line information processing. Int. J. Intell. Inf. Eng. Syst. 13, 27–34 (2009)

Kolodyazhniy, V., Bodyanskiy, Y.: Cascaded multi-resolution spline-based fuzzy neural network. In: Angelov, P., Filev, D., Kasabov, N. (eds.) Proceedings of the International Symposium on Evolving Intelligent Systems, pp. 26–29. De Montfort University, Leicester (2010)

Bodyanskiy, Y., Kharchenko, O., Vynokurova, O.: Hybrid cascaded neural network based on wavelet-neuron. Int. J. Inf. Theor. Appl. 18(4), 335–343 (2011)

Bodyanskiy, Y., Grimm, P., Teslenko, N.: Evolving cascaded neural network based on multidimensional Epanechnikov’s kernels and its learning algorithm. Int. J. Inf. Technol. Knowl. 5(1), 25–30 (2011)

Bodyanskiy, Y., Vynokurova, O., Teslenko, N.: Cascaded GMDH-wavelet-neuro-fuzzy network. In: Proceedings of the 4th International Workshop on Inductive Modelling, Kyiv, pp. 22–30 (2011)

Bodyanskiy, Y., Vynokurova, O., Dolotov, A., Kharchenko, O.: Wavelet-neuro-fuzzy-network structure optimization using GMDH for the solving forecasting tasks. In: Proceedings of the International Workshop Inductive Modelling, Kyiv, pp. 61–67 (2013)

Bodyanskiy, Y., Tyshchenko, O., Kopaliani, D.: A hybrid cascade neural network with an optimized pool in each cascade. Soft. Comput. 19(12), 3445–3454 (2015)

Bodyanskiy, Y., Tyshchenko, O., Kopaliani, D.: An evolving connectionist system for data stream fuzzy clustering and its online learning. Neurocomputing (2017). http://www.sciencedirect.com/science/article/pii/S0925231217309785

Bodyanskiy, Y., Tyshchenko, O., Kopaliani, D.: Adaptive learning of an evolving cascade neo-fuzzy system in data stream mining tasks. Evolving Syst. 7(2), 107–116 (2016)

Bodyanskiy, Y., Tyshchenko, O., Deineko, A.: An evolving radial basis neural network with adaptive learning of its parameters and architecture. Autom. Control Comput. Sci. 49(5), 255–260 (2015)

Hu, Z., Bodyanskiy, Y.V., Tyshchenko, O.K., Boiko, O.O.: An evolving cascade system based on a set of neo-fuzzy nodes. Int. J. Intell. Syst. Appl. (IJISA) 8(9), 1–7 (2016)

Bodyanskiy, Y., Tyshchenko, O., Kopaliani, D.: A multidimensional cascade neuro-fuzzy system with neuron pool optimization in each cascade. Int. J. Inf. Technol. Comput. Sci. (IJITCS) 6(8), 11–17 (2014)

Miki, T., Yamakawa, T.: Analog implementation of neo-fuzzy neuron and its on-board learning. In: Mastorakis, N.E. (ed.) Computational Intelligence and Applications, pp. 144–149. WSES Press, Piraeus (1999)

Uchino, E., Yamakawa, T.: Soft computing based signal prediction, restoration and filtering. In: Ruan, D. (ed.) Intelligent Hybrid Systems: Fuzzy Logic, Neural Networks, and Genetic Algorithms, pp. 331–349. Kluwer Academic Publishers, Boston (1997)

Yamakawa, T., Uchino, E., Miki, T., Kusanagi, H.: A neo fuzzy neuron and its applications to system identification and prediction of the system behavior. In: Proceedings of the 2nd International Conference on Fuzzy Logic and Neural Networks, pp. 477–483 (1992)

Hoff, M., Widrow, B.: Adaptive switching circuits. In: Neurocomputing: Foundations of Research, pp. 123–134 (1988)

Kaczmarz, S.: Approximate solution of systems of linear equations. Int. J. Control 53, 1269–1271 (1993)

Ljung, L.: System Identification: Theory for the User. Prentice Hall, Upper Saddle River (1999)

Bodyanskiy, Y., Tyshchenko, O., Wojcik, W.: A multivariate non-stationary time series predictor based on an adaptive neuro-fuzzy approach. Elektronika - konstrukcje, technologie, zastosowania 8, 10–13 (2013)

Caminhas, W.M., Silva, S.R., Rodrigues, B., Landim, R.P.: A neo-fuzzy-neuron with real time training applied to flux observer for an induction motor. In: Proceedings 5th Brazilian Symposium on Neural Networks, pp. 67–72 (1998)

Bodyanskiy, Y.V., Pliss, I.P., Solovyova, T.V.: Multistep optimal predictors of multidimensional non-stationary stochastic processes. Doklady AN USSR A(12), 47–49 (1986)

Bodyanskiy, Y.V., Pliss, I.P., Solovyova, T.V.: Adaptive generalized forecasting of multidimensional stochastic sequences. Doklady AN USSR A(9), 73–75 (1989)

Bodyanskiy, Y., Pliss, I.: Adaptive generalized forecasting of multivariate stochastic signals. In: Proceedings of the International Conference on Latvian Signal Processing, vol. 2, pp. 80–83 (1990)

Acknowledgment

This research project is partially subvented by RAMECS and CCNU16A02015.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG

About this paper

Cite this paper

Hu, Z., Bodyanskiy, Y.V., Tyshchenko, O.K. (2018). A Multidimensional Adaptive Growing Neuro-Fuzzy System and Its Online Learning Procedure. In: Shakhovska, N., Stepashko, V. (eds) Advances in Intelligent Systems and Computing II. CSIT 2017. Advances in Intelligent Systems and Computing, vol 689. Springer, Cham. https://doi.org/10.1007/978-3-319-70581-1_13

Download citation

DOI: https://doi.org/10.1007/978-3-319-70581-1_13

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-70580-4

Online ISBN: 978-3-319-70581-1

eBook Packages: EngineeringEngineering (R0)