Abstract

Human motion monitoring is widely used in medical rehabilitation, health surveillance, video effects, virtual reality and natural human–computer interaction. Existing approaches fall into two categories: non-wearable and wearable. A non-wearable system works without contact with the user, so it does not interfere with the user’s daily life, but its monitoring range is constrained. A wearable system removes this constraint. However, existing datasets are incomplete, which hampers research on related algorithms, and many researchers build their own data acquisition platforms. In addition, traditional motion recognition platforms based on inertial features have difficulty identifying static poses, which limits the application of wearable motion recognition technology. In this paper, we design a human motion recognition platform based on inertial features and positional relationships, which provides both a data acquisition platform and a dataset for researchers who study wearable motion recognition algorithms.

1 Introduction

Because human motion monitoring has a wide range of applications in health care [1], film and television special effects, virtual reality, natural human–computer interaction, etc., it has attracted growing interest from researchers and industry. At present, human motion monitoring technology can be divided into two kinds according to the scope of the monitoring system and whether it is movable: non-wearable monitoring technology and wearable monitoring technology.

Non-wearable monitoring means that the primary monitoring device is immovable or does not make contact with the monitored person. Such monitoring techniques are based in part on optical image recognition [2], electromagnetic tracking, or acoustic positioning and tracking.

A wearable monitoring system is worn by the monitored person [3], so the monitoring device is in contact with that person. Wearable monitoring technology can be divided into different monitoring schemes according to the combination of sensors used [4]. Typical wearable monitoring techniques include mechanical tracking and MEMS sensor-based methods [5]. There are also wearable attitude detection systems that use other technologies [6], such as bio-fibers [7].

The two monitoring methods each have advantages and disadvantages. Table 1 compares the two techniques at their current stage.

By comparison, wearable human motion recognition offers strong portability and resistance to interference, but its recognition rate is low and it recognizes few motion types, which limits its application. Therefore, in this paper, we design a motion recognition platform based on positional relationships and inertial features. On the one hand, it improves accuracy by extending the types of sensors used. On the other hand, it provides researchers with a convenient data acquisition platform and thereby facilitates research on recognition algorithms.

The rest of the paper is organized as follows. Section 2 presents the classification model for simple human motions. Section 3 introduces the hardware design of the wearable motion monitoring system. Section 4 verifies the platform from two aspects, data acquisition and motion recognition. Section 5 concludes the paper.

2 Attributes Based Classification Model

2.1 Human Motion Attributes

To distinguish the physical characteristics of different motions, we first define a standard for describing them. This standard is called the motion attribute. The attributes must be abstract enough to describe the characteristics of any motion. The key attributes that describe human motion include rate, intensity, the relative positional relationship between nodes, the absolute positional relationship of a node, etc.

2.2 Absolute Positional Relationship

Some human actions change the position of the body in space, either horizontally or vertically. These changes are reflected by the absolute positional relationship (APR) of a node, which refers to the change in the node’s position relative to a reference outside the system. Some motions are difficult to identify from inertial characteristics alone. Going up and down stairs is an example: in terms of inertial characteristics, it is hard to tell apart from walking. Changes in the absolute position of a node make it possible to distinguish going up and down stairs from walking.
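As an illustration of how a barometer reading could expose vertical movement, the following minimal sketch converts pressure to altitude with the standard international barometric formula and classifies the trend over a short interval. The function names and the 0.1 m/s threshold are assumptions chosen for illustration; they are not taken from the platform described here.

```python
def pressure_to_altitude_m(pressure_hpa, sea_level_hpa=1013.25):
    """International barometric formula: altitude in metres from pressure in hPa."""
    return 44330.0 * (1.0 - (pressure_hpa / sea_level_hpa) ** (1.0 / 5.255))


def vertical_trend(pressures_hpa, duration_s, threshold_m_per_s=0.1):
    """Classify a pressure trace as ascending, descending or level.

    threshold_m_per_s is a hypothetical value; it would need tuning on real data.
    """
    start_m = pressure_to_altitude_m(pressures_hpa[0])
    end_m = pressure_to_altitude_m(pressures_hpa[-1])
    rate = (end_m - start_m) / duration_s  # average vertical speed in m/s
    if rate > threshold_m_per_s:
        return "ascending"
    if rate < -threshold_m_per_s:
        return "descending"
    return "level"


# A drop of 0.6 hPa over 10 s corresponds to a climb of roughly 5 m.
print(vertical_trend([1013.25, 1012.65], duration_s=10.0))
```

Level walking would keep the pressure nearly constant and fall into the "level" branch, which is the distinction the inertial data alone does not provide.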

2.3 Relative Positional Relationship

Relative positional relationship (RPR) refers to the positional relationship between multiple nodes. For some actions, we do not need to know the absolute position of the nodes, but only the relative position of some parts of the body. For instance, when walking needs to be classified, we can use the changes in the distance between the feet. It is easier to identify this type of motion by using the relative positional relationship between nodes. Sensor data that can represent the relative positional relationship of nodes include the distance between nodes, the difference in air pressure between nodes, and so on.
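The air pressure difference between two nodes can be turned into a height difference with the hydrostatic approximation Δh ≈ Δp / (ρg). The sketch below assumes sea-level air density, two barometers that have been calibrated against each other, and hypothetical posture thresholds; none of these values come from the paper.

```python
AIR_DENSITY_KG_M3 = 1.225   # assumed: standard sea-level air density
GRAVITY_M_S2 = 9.80665      # standard gravity


def height_difference_m(lower_pa, upper_pa):
    """Height of the upper node above the lower node, from pressures in pascals."""
    return (lower_pa - upper_pa) / (AIR_DENSITY_KG_M3 * GRAVITY_M_S2)


def posture_from_pressures(foot_pa, shoulder_pa, upright_m=1.0, lying_m=0.4):
    """Rough posture guess; upright_m and lying_m are hypothetical thresholds."""
    dh = height_difference_m(foot_pa, shoulder_pa)
    if dh > upright_m:
        return "upright"
    if dh < lying_m:
        return "lying"
    return "intermediate"


# A 17 Pa difference corresponds to about 1.4 m between foot and shoulder.
print(round(height_difference_m(101325.0, 101308.0), 2))
print(posture_from_pressures(101325.0, 101308.0))
```

Because only the difference matters, slow weather-driven pressure drift affects both nodes equally and largely cancels out.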

3 Platform Design

The goal of the platform is to collect inertial sensor data, the distance between nodes, the pressure values and other data conveniently and accurately. Considering platform cost and data redundancy, the number of nodes should be kept small, and the location information of the nodes should complement each other so that the motion characteristics are captured completely. Because the hardware platform collects human motion data, the design should consider data storage methods and formats and should mark the transitions between different motions. Finally, it should also address synchronization between nodes and minimize power consumption.

3.1 Hardware Design

The motion recognition platform consists of two parts: a handheld device and the wearable nodes. The main functions of the handheld device are to start and stop data collection on the wearable nodes and to send synchronization packets to the nodes at regular intervals so that the clocks of all nodes stay synchronized. The handheld device mainly includes a main control module, buttons, an RF module and a power management module. Each node includes a main control module, a sensor module, a data storage module, an RF module and a power management module. The system block diagram is shown in Fig. 1.
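The paper does not specify the synchronization protocol, so the following is only a minimal sketch of one plausible scheme: each node stores the offset between the handheld timestamp carried in a synchronization packet and its own clock at reception, then applies that offset to later samples. The class and method names are hypothetical, and radio latency is ignored.

```python
class NodeClock:
    """Tracks the offset between a node's local clock and the handheld clock."""

    def __init__(self):
        self.offset_ms = 0  # no correction until the first sync packet arrives

    def on_sync_packet(self, handheld_ms, local_ms):
        """Update the offset when a sync packet is received.

        handheld_ms: timestamp carried in the packet (assumed field)
        local_ms:    node clock reading at reception; transmission delay is ignored
        """
        self.offset_ms = handheld_ms - local_ms

    def to_shared_time(self, local_ms):
        """Convert a local sample timestamp to the shared handheld time base."""
        return local_ms + self.offset_ms


clock = NodeClock()
clock.on_sync_packet(handheld_ms=10_000, local_ms=9_950)  # node runs 50 ms behind
print(clock.to_shared_time(10_050))
```

Re-estimating the offset at every periodic packet bounds the drift between nodes to whatever accumulates within one synchronization interval.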

To make the node comfortable to wear, it should be as small as possible. We choose small components and lay them out as densely as possible. The node circuit board measures 3.4 cm × 3.0 cm. A photograph of the node is shown in Fig. 2.

The platform uses a three-axis accelerometer, a three-axis gyroscope and a three-axis magnetometer. We choose InvenSense’s MPU6050 six-axis sensor, which combines a three-axis accelerometer and a three-axis gyroscope. Compared with multi-component solutions, it eliminates the timing mismatch between a separate gyroscope and accelerometer and saves considerable package space. The three-axis magnetometer is Honeywell’s HMC5883L. For positional relationship monitoring, MEAS’s MS5611 barometer is selected.
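One possible way to store a reading from these sensors is a flat record with a timestamp, nine inertial and magnetic axes, one pressure value and a motion label, serialized as CSV. The field names, units and file format below are assumptions made for illustration; the paper does not describe its actual storage format.

```python
import csv
import io
from dataclasses import astuple, dataclass, fields


@dataclass
class TenAxisSample:
    """One hypothetical ten-axis record: 3 accel + 3 gyro + 3 mag + 1 pressure."""
    t_ms: int            # timestamp in the shared time base
    ax: float            # accelerometer axes (assumed unit: g)
    ay: float
    az: float
    gx: float            # gyroscope axes (assumed unit: deg/s)
    gy: float
    gz: float
    mx: float            # magnetometer axes (assumed unit: gauss)
    my: float
    mz: float
    pressure_hpa: float  # barometer reading
    label: str           # motion label marked during collection


def samples_to_csv(samples):
    """Serialize samples to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow([f.name for f in fields(TenAxisSample)])
    for sample in samples:
        writer.writerow(astuple(sample))
    return buffer.getvalue()


sample = TenAxisSample(0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.2, 0.0, 0.4, 1013.25, "standing")
print(samples_to_csv([sample]))
```

Keeping the label in every row makes each record self-describing, at the cost of some redundancy compared with storing transition markers separately.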

4 Experiments and Result Analysis

4.1 Data Collection

This paper studies the recognition of simple human motions. Data are collected for 10 motions: standing, lying, squatting, sitting, going upstairs, going downstairs, riding an elevator up, riding an elevator down, walking and running. Six sets of data were collected for each motion, and each recording lasts about 5–7 min. During data collection, a second person records the duration and type of each motion. The actual locations of the nodes are shown in Fig. 3.
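The manually recorded durations and motion types can be joined with the sensor stream by assigning each sample the motion whose interval contains its timestamp. The sketch below assumes annotations are stored as non-overlapping (start, end, motion) tuples in seconds; that representation is hypothetical.

```python
def label_samples(timestamps_s, annotations):
    """Assign a motion label to each sample timestamp.

    annotations: list of (start_s, end_s, motion) tuples, assumed non-overlapping.
    Samples outside every interval are marked "unlabeled".
    """
    labels = []
    for t in timestamps_s:
        label = "unlabeled"
        for start_s, end_s, motion in annotations:
            if start_s <= t < end_s:  # half-open interval avoids double matches
                label = motion
                break
        labels.append(label)
    return labels


notes = [(0, 300, "walking"), (300, 660, "running")]
print(label_samples([0.0, 299.0, 300.0, 700.0], notes))
```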

In the ten-axis sensor data, the accelerometer measures acceleration along three axes. During motion, the more intense the movement along an axis, the greater the absolute value of the acceleration along that axis. Fig. 4 shows how the acceleration changes between static and moving states. The gyroscope measures angular velocity, and the difference between moving and static postures also stands out clearly in its data. Air pressure reflects changes in the height of a node: when riding an elevator up or down and when going up or down stairs, the trend in pressure is clearly visible. For a constant posture, the pressure remains essentially constant. In the enlarged part, we can see that the shoulder pressure differs significantly between the lying and standing states, while the foot pressure stays basically the same, which is consistent with the heights of the actual body parts.
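These observations suggest simple per-window features: the mean and spread of the acceleration magnitude to separate static from moving states, and the pressure slope to capture height changes. The following is a sketch under assumed window parameters and feature names, not the feature set actually used in the experiments.

```python
import math
import statistics


def sliding_windows(n_samples, size, step):
    """Yield (start, end) index pairs of fixed-size windows over n_samples."""
    for start in range(0, n_samples - size + 1, step):
        yield start, start + size


def window_features(accel_xyz, pressure_hpa, dt_s):
    """Compute illustrative features for one window.

    accel_xyz:    list of (x, y, z) acceleration tuples
    pressure_hpa: list of pressure readings, same length as accel_xyz
    dt_s:         sampling interval in seconds
    """
    magnitudes = [math.sqrt(x * x + y * y + z * z) for x, y, z in accel_xyz]
    span_s = dt_s * (len(pressure_hpa) - 1)
    return {
        "acc_mean": statistics.fmean(magnitudes),
        "acc_std": statistics.pstdev(magnitudes),  # near zero for static poses
        "pressure_slope": (pressure_hpa[-1] - pressure_hpa[0]) / span_s,  # hPa/s
    }


accel = [(0.0, 0.0, 1.0)] * 4
pressure = [1000.0, 999.9, 999.8, 999.7]  # falling pressure means rising height
print(window_features(accel, pressure, dt_s=1.0))
```

A static pose with changing height, such as standing in a moving elevator, would show a near-zero `acc_std` together with a non-zero `pressure_slope`.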

4.2 Algorithm Validation

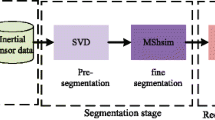

We select an appropriate set of features and use commonly used motion recognition algorithms, such as the BP neural network, the C4.5 decision tree and the SVM classifier, to verify the correctness of the platform. The C4.5 confidence factor is set to 0.25, the SVM uses an RBF kernel, and the BP network uses the default configuration with a single hidden layer. The SVM uses the LibSVM toolbox; C4.5 and BP use the Weka toolbox.

The experimental results in Table 2 show that BP is more accurate than C4.5, and C4.5 is more accurate than SVM. In general, the recognition accuracy is relatively high. This indicates that the platform is suitable for acquiring the inertial feature data needed for motion recognition.

5 Conclusion

In view of the lack of data sources for wearable gesture recognition, this paper designs a more complete data acquisition platform. A handheld device controls node data collection and motion marking, which makes motion data collection more convenient. Through the analysis of human motion attributes, we propose a more reasonable data collection scheme and node deployment scheme. Following this scheme, we can collect data, analyze data characteristics and build datasets. Finally, we use different motion classification algorithms to verify the correctness of the platform.

References

Pantelopoulos, A., Bourbakis, N.G.: A survey on wearable sensor-based systems for health monitoring and prognosis. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 40(1), 1–12 (2010)

Shian-Ru, K., Le Uyen, Thuc H., Yong-Jin, L., et al.: A review on video-based human activity recognition. Computers 2(2), 88–131 (2013)

Moeslund, T.B., Hilton, A., Krüger, V.: A survey of advances in vision-based human motion capture and analysis. Comput. Vis. Image Underst. 104(2), 90–126 (2006)

Lara, O.D., Labrador, M.A.: A survey on human activity recognition using wearable sensors. IEEE Commun. Surv. Tutorials 15(3), 1192–1209 (2013)

Shaolin, W., Luping, X., Weiwei, T., et al.: A low power and high accuracy MEMS sensor based activity recognition algorithm pp. 33–38 (2014)

Tolkiehn, M., Atallah, L., Lo, B., et al.: Direction sensitive fall detection using a triaxial accelerometer and a barometric pressure sensor. In: Annual International Conference of the IEEE Engineering in Medicine and Biology Society, pp. 369–372. IEEE (2011)

Lorincz, K., Chen, B., Challen, G.W., et al.: Mercury: a wearable sensor network platform for high-fidelity motion analysis. SenSys 9, 183–196 (2009)

Acknowledgements

This work is supported by the National Natural Science Foundation of China (NSFC) projects No. 61671056, No. 61302065 and No. 61304257, the Beijing Natural Science Foundation project No. 4152036 and the Fundamental Research Funds for the Central Universities No. FRF-TP-15-026A2.

© 2018 Springer International Publishing AG

He, J., Wang, C., Xu, C., Duan, S. (2018). Human Motion Monitoring Platform Based on Positional Relationship and Inertial Features. In: Xhafa, F., Patnaik, S., Zomaya, A. (eds) Advances in Intelligent Systems and Interactive Applications. IISA 2017. Advances in Intelligent Systems and Computing, vol 686. Springer, Cham. https://doi.org/10.1007/978-3-319-69096-4_52

DOI: https://doi.org/10.1007/978-3-319-69096-4_52

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-69095-7

Online ISBN: 978-3-319-69096-4
