Abstract

In this paper we attempt to analyse the dual-projection approach to weighted 2-mode networks using the tools of \(\mathcal{K}\)-Formal Concept Analysis (\(\mathcal{K}\)-FCA), an extension of FCA for incidences with values in a particular kind of semiring.

For this purpose, we first revisit the isomorphisms between 2-mode networks and formal contexts. Seeking similar relations when the networks have non-Boolean weights, we relate the dual-projection method to both the Singular Value Decomposition and the Eigenvalue Problem of matrices with values in such algebras, as embodied in Kleinberg’s Hubs and Authorities (HITS) algorithm.

To recover a relation with multi-valued extensions of FCA, we introduce extensions of the HITS algorithm to calculate the influence of nodes in a network whose adjacency matrix takes values over dioids, that is, zerosumfree semirings with a natural order. In this way, we show that the original HITS algorithm is a particular instance of the generic construction, and we also show the advantages of working in idempotent semifields, which are instances of dioids.

Subsequently, we make some connections with extended \(\mathcal{K}\)-FCA, where the particular kind of dioid is an idempotent semifield, and provide theoretical reasoning and evidence that the types of knowledge extracted from a matrix by the two procedures are different.



Keywords

- Weighted two-mode networks

- Dual-projection analysis

- HITS

- SVD

- Idempotent semifield

- Idempotent Formal Concept Analysis

- Idempotent HITS

1 Motivation and Introduction

Consider a weighted bipartite graph [Footnote 1] (also called a bipartite network or two-mode network), \((G \cup M, R)\), where \(R \in \mathcal{K}^{G\times M}\) is a relation with values in an algebra \(\mathcal{K}\). This is a pervasive abstraction in Graph Theory [1] and Social Network Analysis (SNA) [2], where such networks are also known as affiliation or membership networks.

The direct or dual-projection approach is one of two competing methodologies for the analysis of two-mode networks, the other being the conversion approach [2]. In the latter, the data are first projected into a one-mode network and then analysed with the tools of (weighted) graph analysis, that is, (standard) network analysis. This raises evident and justified concerns about information loss [3].

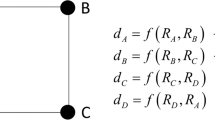

In the dual-projection approach, however, the analysis problem is transformed into two one-mode projection networks that are analysed separately, with the projections on the rows \(P_G\) and on the columns \(P_M\) being the matrices:

$$P_{G} = R\,R^{\text{T}} \qquad P_{M} = R^{\text{T}}R$$

The dual-projection approach postulates that, in terms of the Singular Value Decomposition (SVD) [4], we can provide measures of centrality, core vs. periphery and structural equivalence for each of the projection networks with limited loss of global information. The SVD is a set of results about the decomposition of real- or complex-valued rectangular matrices [5, 6], with applications in data processing, signal theory, machine learning and computer science at large [7], the most important of which is the following:

Theorem 1 (The Singular Value Decomposition Theorem)

Given a matrix \(M \in \mathcal{M}_{m\times n}(\mathcal{K})\), where \(\mathcal{K}\) is the real or the complex field, there is a factorization \(M = U\varSigma V^{\ast}\), where \(\cdot^{\ast}\) stands for conjugate transposition, given in terms of three matrices:

- \(U \in \mathcal{M}_{m\times m}(\mathcal{K})\) is a unitary matrix of left singular vectors.
- \(\varSigma \in \mathcal{M}_{m\times n}(\mathcal{K})\) is a diagonal matrix of non-negative real values called the singular values.
- \(V \in \mathcal{M}_{n\times n}(\mathcal{K})\) is a unitary matrix of right singular vectors.

Often the singular values of a matrix are listed in descending order (with the left and right singular vectors re-ordered accordingly) as a prelude to any of a number of reconstruction theorems aimed at rebuilding the original matrix \(M\) from the triples of singular value, left and right singular vectors \((\sigma_i, u_i, v_i)\) [see 5, 6, for details]. This is particularly useful for model building.
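As a minimal sketch of the reconstruction idea, assuming NumPy is available (the matrix below is made-up illustrative data, and `rank_k_reconstruction` is a hypothetical helper name), the SVD can be computed and the matrix rebuilt from its leading triples \((\sigma_i, u_i, v_i)\):

```python
import numpy as np

# A small real-valued "two-mode" matrix: rows play the role of G, columns of M (illustrative data).
M = np.array([[3.0, 1.0, 0.0],
              [1.0, 3.0, 1.0],
              [0.0, 1.0, 3.0],
              [1.0, 0.0, 1.0]])

# np.linalg.svd returns the singular values already sorted in descending order;
# the rows of Vh are the conjugate-transposed right singular vectors v_i^*.
U, s, Vh = np.linalg.svd(M, full_matrices=False)

def rank_k_reconstruction(U, s, Vh, k):
    """Rebuild M from its first k triples: sum_i sigma_i * u_i * v_i^*."""
    return sum(s[i] * np.outer(U[:, i], Vh[i, :]) for i in range(k))

M_full = rank_k_reconstruction(U, s, Vh, len(s))  # exact reconstruction
M_1 = rank_k_reconstruction(U, s, Vh, 1)          # best rank-1 approximation
```

Keeping only the first triple gives the best rank-1 approximation in the Frobenius norm (the Eckart–Young theorem), whose error is determined by the discarded singular values.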

1.1 The Analysis of Bipartite Networks with Formal Concept Analysis

Formal Concept Analysis (FCA) can be conceived as a data-driven unsupervised learning technique for Boolean data. Its main results can be summarized as follows [8].

Theorem 2 (Basic Theorem of FCA, Extended)

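Let \(G\) be a set of objects, \(M\) a set of attributes and \((G, M, I)\) a formal context with \(I \subseteq G \times M\) and polar operators \(\cdot^{\uparrow}: 2^{G} \rightarrow 2^{M}\) and \(\cdot^{\downarrow}: 2^{M} \rightarrow 2^{G}\),

$$A^{\uparrow} = \{m \in M \mid \forall g \in A,\; gIm\} \qquad B^{\downarrow} = \{g \in G \mid \forall m \in B,\; gIm\}$$

and call formal concepts the pairs \((A, B) \in 2^{G} \times 2^{M}\) with \(A^{\uparrow} = B\) and \(A = B^{\downarrow}\), ordered as

$$(A_{1}, B_{1}) \leq (A_{2}, B_{2}) \Leftrightarrow A_{1} \subseteq A_{2} \Leftrightarrow B_{2} \subseteq B_{1}$$

Then: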

1. The set of formal concepts \(\mathfrak{B}(G,M,I)\) with the hierarchical order is a complete lattice, called the concept lattice of \((G, M, I)\), in which infima and suprema are given by:

$$\bigwedge_{t\in T}(A_{t},B_{t}) = \left(\bigcap_{t\in T}A_{t},\left(\bigcup_{t\in T}B_{t}\right)^{\downarrow\uparrow}\right) \qquad \bigvee_{t\in T}(A_{t},B_{t}) = \left(\left(\bigcup_{t\in T}A_{t}\right)^{\uparrow\downarrow},\bigcap_{t\in T}B_{t}\right)$$(2)

2. Conversely, a complete lattice \(\mathcal{V} = \langle V,\leq\rangle\) is isomorphic to \(\underline{\mathfrak{B}}(G,M,I)\) if and only if there are mappings \(\overline{\gamma}: G \rightarrow V\) and \(\overline{\mu}: M \rightarrow V\) such that \(\overline{\gamma}(G)\) is supremum-dense in \(\mathcal{V}\), \(\overline{\mu}(M)\) is infimum-dense in \(\mathcal{V}\), and \(gIm\) is equivalent to \(\overline{\gamma}(g) \leq \overline{\mu}(m)\) for all \(g \in G\) and all \(m \in M\). In particular, \(\mathcal{V} \cong \underline{\mathfrak{B}}(V,V,\leq)\).
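For a small Boolean context, the concepts of Theorem 2 can be enumerated by brute force, closing every set of objects, and the infimum formula in (2) can be checked directly. The following Python sketch does this; the helper names (`up`, `down`, `concepts`, `meet`) and the toy context are hypothetical, and the enumeration is exponential in \(|G|\), so it is only meant for tiny examples:

```python
from itertools import combinations

def up(A, M, I):
    """A^up: the attributes shared by every object in A."""
    return frozenset(m for m in M if all((g, m) in I for g in A))

def down(B, G, I):
    """B^down: the objects that have every attribute in B."""
    return frozenset(g for g in G if all((g, m) in I for m in B))

def concepts(G, M, I):
    """All formal concepts (A, B): close every subset X of objects as (X^up^down, X^up)."""
    found = set()
    for r in range(len(G) + 1):
        for X in combinations(sorted(G), r):
            B = up(X, M, I)
            found.add((down(B, G, I), B))
    return found

def meet(c1, c2, G, M, I):
    """Infimum as in (2): intersect the extents, close the union of the intents."""
    (A1, B1), (A2, B2) = c1, c2
    return (A1 & A2, up(down(B1 | B2, G, I), M, I))

# Toy context (illustrative data only).
G = {"g1", "g2", "g3"}
M = {"a", "b", "c"}
I = {("g1", "a"), ("g1", "b"), ("g2", "b"), ("g2", "c"), ("g3", "b")}
lattice = concepts(G, M, I)
```

In this toy context there are four concepts: \((\varnothing, \{a,b,c\})\), \((\{g_1\}, \{a,b\})\), \((\{g_2\}, \{b,c\})\) and \((\{g_1,g_2,g_3\}, \{b\})\).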

The ability of FCA to analyse bipartite digraphs (that is, Boolean bipartite networks) comes from the existence of a “cryptomorphism” [11, pp. 155–156] (which we take to mean an “unexpected isomorphism” [Footnote 2]) with formal contexts, possibly first identified in [13]. We adhere here to the advantages, laid out in [14], of using cryptomorphisms to describe apparently different objects, namely reaching a better understanding of the tools being analysed.

The Cryptomorphism of Bipartite Digraphs and Formal Contexts. When \(\mathcal{K}\) is the Boolean set, consider the following two definitions:

- In Graph Theory, let \(G\) and \(M\) be two disjoint sets and consider the graph \((V, E)\) with \(V = G \cup M\) and \(E \in 2^{V\times V}\) such that for every ordered pair \(e = (g_e, m_e) \in E\) its endpoints belong to different subsets of \(V\), \(g_e \in G\) and \(m_e \in M\). Then \((V, E)\) is a bipartite graph.
- In FCA, let \(G\) be a set of objects and \(M\) be a set of attributes, and \(I \in 2^{G\times M}\) be an incidence relation between them. Then \((G, M, I)\) is a formal context.

Clearly, they define cryptomorphic entities whereby I is the restriction of E to G × M [15, Sect. 3.1].

Hence the capabilities of FCA for representing bipartite digraphs follow from the universal representation capabilities of concept lattices expressed in the Basic Theorem. This promises that many fundamental abstractions in each domain will have an important role in the other. Crucially, formal concepts are cryptomorphic to bicliques maximal with respect to inclusion [15, Proposition 1]. This was probably first suggested in [16], clearly stated in [13], and later taken up by a number of researchers [17, 18]. Note that these techniques and concerns predate the appearance of multi-valued extensions to FCA and therefore concentrate on Boolean data.
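The correspondence between formal concepts and maximal bicliques can be checked mechanically on a toy example. The Python sketch below (hypothetical helper names, made-up data) enumerates all bicliques, keeps those maximal under componentwise inclusion, and compares them with the concepts obtained by closure. It allows bicliques with an empty side, which correspond to the top and bottom concepts:

```python
from itertools import combinations

def subsets(S):
    items = sorted(S)
    return [frozenset(c) for r in range(len(items) + 1) for c in combinations(items, r)]

def maximal_bicliques(G, M, I):
    """Brute force: pairs (A, B) with A x B inside I, maximal w.r.t. componentwise inclusion."""
    bicliques = [(A, B) for A in subsets(G) for B in subsets(M)
                 if all((g, m) in I for g in A for m in B)]
    return {(A, B) for (A, B) in bicliques
            if not any(A <= A2 and B <= B2 and (A, B) != (A2, B2) for (A2, B2) in bicliques)}

def concepts(G, M, I):
    """Formal concepts as (A^up^down, A^up) for every subset A of objects."""
    def up(A):
        return frozenset(m for m in M if all((g, m) in I for g in A))

    def down(B):
        return frozenset(g for g in G if all((g, m) in I for m in B))

    return {(down(up(A)), up(A)) for A in subsets(G)}

# Toy bipartite digraph / formal context (illustrative data only).
G = {"g1", "g2", "g3"}
M = {"a", "b", "c"}
I = {("g1", "a"), ("g1", "b"), ("g2", "b"), ("g2", "c"), ("g3", "b")}
```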

Some of the advantages of using FCA for modelling bipartite networks stem from the hierarchical, non-partitional (overlapping) clustering of both domains \(G\) and \(M\) [19]. This approach is contextualized and summarized within the wider field of Social Network Visualization in [20], whose most pressing problem is visual clutter. For concept lattices, this is mostly addressed by means of algebraic information reduction techniques [21] or heuristic pruning [19].

For our present purposes, [22] has already noted that formal concepts define optimal factors for the reconstruction of Boolean matrices (whether incidences or adjacencies), a result that strongly hints that FCA is related to the SVD.

SVD Leads to Non-Boolean Contexts. The previous argumentation would seem to imply that the SVD, as a technique to analyse weighted bipartite digraphs, is also important for the analysis of formal contexts and concept lattices. Alas, although the SVD is routinely applied to bipartite digraphs whose entries belong to \(\{0, 1\}\), it is actually a procedure developed for matrices with entries in the complex field \(\mathbb{C}\). Drawing a parallel with the favourable Boolean situation described above would call for an isomorphism between bipartite digraphs whose edge weights belong to an algebra and multi-valued formal contexts with incidences in said algebra.

Unfortunately, the theory of concept lattices issuing from such multi-valued contexts is not as complete as (standard) FCA. For instance, when the context takes values in a fuzzy semiring, the universal representation capabilities in the Main Theorem [23, Theorem 5.3] have not been cast in terms of (fuzzy) bipartite networks, to the best of our knowledge; and the Main Theorem of \(\mathcal{K}\)-FCA [24, Theorem 2.14], where \(\mathcal{K}\) is a complete idempotent semifield (see below), has as yet unexplored representation capabilities. We note that, despite these limitations, a number of results on the reconstruction of matrices specifically based on fuzzy formal concepts are available [25].

1.2 The Study of Networks Using HITS and the SVD

In this chapter we are interested in laying out the relationship between Formal Concept Analysis [8] and the dual-projection approach to the analysis of bipartite networks. We have argued above that the SVD must figure prominently in this picture, so we detour slightly to show one more instance of its pervasiveness in the analysis of networks: its relation to one of the first well-known approaches to link analysis on the Web, the HITS algorithm.

The Hubs and Authorities algorithm, or Hyperlink-Induced Topic Search (HITS) [26, 27], was designed to solve the problem of ranking the nodes of a dynamic, directed 1-mode network obtained from a query against a search engine. It postulates the existence of two qualities in nodes: their authoritativeness (their quality of being authorities with respect to a pervasive topic in the nodes) and their hubness (their quality of being good pointers to authorities). These scores are now routinely available in software for analysing network data, e.g. [28].

Consider a network, that is, a weighted directed graph, \(N = (V, E, w)\), where \(V = \{v_i\}_{i=1}^{n}\) is a set of nodes, \(E \subseteq V \times V\) is a set of edges, and \(w: V \times V \rightarrow [0, 1]\) is a weight function with \(w(v_i, v_j) > 0\) if \((v_i, v_j) \in E\) and \(w(v_i, v_j) = 0\) otherwise. It can alternatively be defined by an adjacency matrix \(T_N\) with \([0, 1]\)-weights for its edges, \((T_N)_{ij} = w(v_i, v_j)\). The notation tries to suggest that \(T_N\) is a stochastic transition matrix rather than a generic adjacency [Footnote 3].

HITS is solved in terms of the left and right eigenvectors of this matrix \(T_N\), where the vertices and edges of the digraph model the nodes and links in any (social) network. HITS finds an authority score \(a(v_i)\) and a hub score \(h(v_i)\) for each node, aggregated as vectors, based on the following iterative procedure:

- Start with initial vector estimates \(h^{<0>}\) and \(a^{<0>}\) of the hub and authority scores.
- Update the scores with [Footnote 4]:

$$h \leftarrow T a \qquad a \leftarrow T^{\text{T}} h$$(3)

so that in general, for \(k \geq 1\):

$$\begin{array}{lll} h^{<k>} = (TT^{\text{T}})^{k}h^{<0>} & \qquad & a^{<k>} = (T^{\text{T}}T)^{k}a^{<0>} \\ h^{<k>} = (TT^{\text{T}})^{k-1}Ta^{<0>} & \qquad & a^{<k>} = (T^{\text{T}}T)^{k-1}T^{\text{T}}h^{<0>} \end{array}$$(4)

- Since matrix \(T\) is non-negative, the sequences \(\{h^{<k>}\}_k\) and \(\{a^{<k>}\}_k\) would in general diverge, so the next step is to prove that the limits

$$\lim_{k\rightarrow\infty}\frac{h^{<k>}}{c^{k}} = h^{<\ast>} \qquad \lim_{k\rightarrow\infty}\frac{a^{<k>}}{d^{k}} = a^{<\ast>}$$(5)

exist, in which case they are eigenvectors of their respective matrices for seemingly arbitrary \(c\) and \(d\),

$$(TT^{\text{T}})h^{<\ast>} = c\,h^{<\ast>} \qquad (T^{\text{T}}T)a^{<\ast>} = d\,a^{<\ast>}.$$(6)

- As long as the initial estimates are not orthogonal to \(h^{<\ast>}\) and \(a^{<\ast>}\), respectively (as would happen if they lay in the null space of these matrices), the iterative process ends up finding the principal eigenvectors. The proof of this fact requires the initial estimates \(h^{<0>}\) and \(a^{<0>}\) to be non-negative.
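The iteration can be sketched in a few lines of Python. This is a minimal illustration, not Kleinberg's reference implementation: the function name `hits` and the example graph are hypothetical, the hub vector is updated first and the authority vector then uses the new hub vector, and Euclidean normalization at each step stands in for the division by \(c^{k}\) and \(d^{k}\) in (5):

```python
import math

def hits(T, iterations=100):
    """Hub and authority scores by alternating h <- T a and a <- T^T h (illustrative sketch)."""
    n = len(T)
    h = [1.0] * n  # non-negative initial estimate h<0>
    a = [1.0] * n  # non-negative initial estimate a<0>
    for _ in range(iterations):
        h = [sum(T[i][j] * a[j] for j in range(n)) for i in range(n)]  # h <- T a
        a = [sum(T[i][j] * h[i] for i in range(n)) for j in range(n)]  # a <- T^T h
        # Rescale to unit length so that the sequences do not diverge.
        norm_h = math.sqrt(sum(x * x for x in h))
        norm_a = math.sqrt(sum(x * x for x in a))
        h = [x / norm_h for x in h]
        a = [x / norm_a for x in a]
    return h, a

# Adjacency matrix of a small directed graph (illustrative data).
T = [[0, 1, 1, 0],
     [0, 0, 1, 0],
     [1, 0, 0, 1],
     [0, 0, 1, 0]]
hubs, authorities = hits(T)
```

For this graph the principal eigenvalue of both \(TT^{\text{T}}\) and \(T^{\text{T}}T\) is \(2+\sqrt{2}\), and the returned vectors are, up to rounding, the corresponding non-negative unit eigenvectors.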

It is easy to prove the following:

Lemma 1

HITS is a specialized version of the SVD.

Proof

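Since we want to emphasize the mutual dependence of hubness and authoritativeness scores, after [29] we write (3) in matrix form

$$\left[\begin{array}{c} h\\ a\end{array}\right] \leftarrow \left[\begin{array}{cc} \cdot & T\\ T^{\text{T}} & \cdot\end{array}\right] \otimes \left[\begin{array}{c} h\\ a\end{array}\right]$$

where dots stand for zero matrices, as is customary.

The arrows are used to suggest that we are interested in the fixpoint of the iterative update of this matrix equation. But a fixpoint of it is the analytical solution of the following eigenproblem in the variable \(z = [x^{\text{T}}\; y^{\text{T}}]^{\text{T}}\),

$$A \otimes z = z \otimes \sigma \Leftrightarrow \left[\begin{array}{cc} \cdot & T\\ T^{\text{T}} & \cdot\end{array}\right] \otimes \left[\begin{array}{c} x\\ y\end{array}\right] = \left[\begin{array}{c} x\\ y\end{array}\right] \otimes \sigma$$(7)

for eigenvalue \(\sigma = 1\).

To see that the solutions to this problem are of the form \(z = [h^{\text{T}}\; a^{\text{T}}]^{\text{T}}\), that is, pairs of hub and authority vectors, we expand the system (7) into two equations, called by Lanczos the “shifted eigenvalue problem” [29]:

$$T \otimes y = x \otimes \sigma \qquad T^{\text{T}} \otimes x = y \otimes \sigma$$(8)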

Equation (8) shows that HITS is actually trying to solve the singular value-singular vector problem [5, 29], where \(h\) has the role of a left singular vector, \(a\) that of a right singular vector, and \(\sigma = 1\) is the singular value. □

Note that:

- Under the conditions laid out in the original HITS setting, the singular value is not important.
- The projectors appear in the solution of (7) when both sides of the equation are pre-multiplied by \(A\). This yields the decoupled problems below, whose solutions can then be re-coupled with (8).

$$\displaystyle{ A^{2}\otimes z = z\otimes \sigma ^{2} \Leftrightarrow \left [\begin{array}{*{10}c} TT^{\text{T}}& \cdot \\ \cdot &T^{\text{T}}T\end{array} \right ]\otimes \left [\begin{array}{*{10}c} x\\ y\end{array} \right ] = \left [\begin{array}{*{10}c} x\\ y\end{array} \right ]\otimes \sigma ^{2} }$$(9)
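These claims can be checked numerically for a real matrix. The sketch below (assuming NumPy; the matrix \(T\) is illustrative) builds the block matrix \(A\) of (7), confirms that its eigenvalues are the singular values of \(T\) together with their negatives, that \(A^{2}\) is block diagonal as in (9), and that the top singular triple satisfies (8). Here \(\sigma\) is the largest singular value rather than 1, since no normalization is applied:

```python
import numpy as np

T = np.array([[0., 1., 1., 0.],
              [0., 0., 1., 0.],
              [1., 0., 0., 1.],
              [0., 0., 1., 0.]])
n = T.shape[0]
Z = np.zeros((n, n))
A = np.block([[Z, T], [T.T, Z]])               # the block matrix of (7)

eigenvalues = np.sort(np.linalg.eigvalsh(A))   # A is symmetric
U, s, Vt = np.linalg.svd(T)
A2 = A @ A                                     # decoupled form, as in (9)

# Top singular triple: T v = sigma u and T^T u = sigma v, as in (8) with x = u, y = v.
u, sigma, v = U[:, 0], s[0], Vt[0, :]
```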

In light of this, we can see that HITS, although designed for 1-mode networks (and thus, in principle, a conversion-approach procedure), actually follows a direct or dual-projection approach, since it considers both vector spaces associated with \(T\).

1.3 The Problem and Reading Guide

In this paper we develop a tool similar to the SVD for bipartite networks with non-Boolean edge weights, but we develop it as if it were an instance of the better-understood HITS problem.

1. First, as suggested by the form of (4), we consider two sets \(G\) and \(M\), because we want to study the qualities of being a hub and of being an authority separately. This implies passing from directed bipartite graphs to networks, also known as weighted (directed) bipartite graphs.
2. Second, the matrix algorithm HITS requires a “positive” algebra with addition, multiplication and scalar division; in the case of the original HITS, this semifield is \(\mathbb{R}_{0}^{+}\), the non-negative reals. Hence we consider edge weights \(R \in \mathcal{K}^{G\times M}\) in a naturally ordered semiring with division, or positive semifield, \(\mathcal{K}\) (cf. Sect. 2.1). Then \(\mathcal{K}\)-formal contexts, denoted as \((G, M, R)\), are a natural encoding for this type of weighted bipartite digraphs.

Note that the original HITS setting can be recovered by using \(V := G = M\) and \(T := R\) and working in the semifield of non-negative reals \(\mathbb{R}_{0}^{+}\) with \(R_{ij} = 1\) if \((v_i, v_j) \in E\) and \(R_{ij} = 0\) otherwise. Similarly, the original dual-projection approach deals only with the case where \(R\) is actually binary but is considered to be embedded in the complex numbers.

To develop our program, we first introduce in Sect. 2.1 some definitions and notation about semirings in general and about positive semifields in particular. In Sect. 2.2 we introduce the eigenproblem over dioids as a step towards solving the singular value problem in dioids, and in Sects. 2.3 and 2.4 a very general technique to do so. Section 3 presents the bulk of our results, including a short example and a discussion. We finish with a summary and conclusions.

2 Theory and Methods

2.1 Semirings and Semimodules over Semirings

A semiring is an algebra \(\mathcal{S} = \langle S,\oplus,\otimes,\epsilon,e\rangle\) whose additive structure, \(\langle S, \oplus, \epsilon\rangle\), is a commutative monoid and whose multiplicative structure, \(\langle S, \otimes, e\rangle\), is a monoid, with multiplication distributing over addition from the right and the left, and with the additive neutral element absorbing for \(\otimes\), i.e. \(\forall a \in S,\; \epsilon \otimes a = a \otimes \epsilon = \epsilon\). A semiring is:

- Commutative, if its multiplication is commutative.
- Zerosumfree, if it does not have non-null additive factors of zero, \(a \oplus b = \epsilon \Rightarrow a = \epsilon \text{ and } b = \epsilon,\; \forall a,b \in S\).
- Entire, if \(a \otimes b = \epsilon \Rightarrow a = \epsilon \text{ or } b = \epsilon,\; \forall a,b \in S\).
- Idempotent, if its addition is idempotent, \(a \oplus a = a,\; \forall a \in S\).
- Selective, if the result of an addition is always one of its arguments, \(a \oplus b \in \{a, b\},\; \forall a,b \in S\).
- Radicable, if the equation \(a^{b} = c\) can be solved for \(a\).
- Complete [30], if for every (possibly infinite) family of elements \(\{a_i\}_{i\in I} \subseteq S\) we can define an element \(\sum_{i\in I} a_i \in S\) such that
  1. if \(I = \varnothing\), then \(\sum_{i\in I} a_i = \epsilon\),
  2. if \(I = \{1, \ldots, n\}\), then \(\sum_{i\in I} a_i = a_1 \oplus \cdots \oplus a_n\),
  3. if \(b \in S\), then \(b \otimes \left(\sum_{i\in I} a_i\right) = \sum_{i\in I} b \otimes a_i\) and \(\left(\sum_{i\in I} a_i\right) \otimes b = \sum_{i\in I} a_i \otimes b\), and
  4. if \(\{I_j\}_{j\in J}\) is a partition of \(I\), then \(\sum_{i\in I} a_i = \sum_{j\in J}\left(\sum_{i\in I_j} a_i\right)\).

Entire zerosumfree semirings are sometimes called information algebras and have abundant applications [31]. Their importance stems from the fact that they model positive quantities.

Crucially, every commutative semiring admits a canonical preorder, compatible with addition, defined by \(a \leq b\) if and only if there exists \(c \in S\) with \(a \oplus c = b\). A dioid is a commutative semiring where this relation is actually an order. Dioids are zerosumfree. A dioid that is also entire is called a positive dioid.
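In an idempotent semiring the canonical order simplifies to \(a \leq b \Leftrightarrow a \oplus b = b\): take \(c = b\) in one direction, and add \(a\) to both sides of \(a \oplus c = b\) in the other. The Python sketch below checks, on a finite sample of the max-plus semiring \(\langle \mathbb{R}\cup\{-\infty\}, \max, +, -\infty, 0\rangle\), that this relation coincides with the usual order and is antisymmetric (so max-plus is a dioid), and that the semiring is zerosumfree and entire. The sample and function names are illustrative, and a finite sample illustrates rather than proves these properties:

```python
import math
from itertools import product

EPS = -math.inf  # additive zero (epsilon) of max-plus; the unit e is 0.0

def oplus(a, b):
    """Max-plus addition."""
    return max(a, b)

def otimes(a, b):
    """Max-plus multiplication; epsilon is absorbing."""
    return EPS if EPS in (a, b) else a + b

def leq(a, b):
    """Canonical order of an idempotent semiring: a <= b iff a (+) b == b."""
    return oplus(a, b) == b

SAMPLE = [EPS, -2.0, 0.0, 1.5, 3.0]
PAIRS = list(product(SAMPLE, repeat=2))
```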

If \(I\) is countable in the definitions above, then \(\mathcal{S}\) is countably complete and already zerosumfree [32, Proposition 22.28]. What matters to us is that in complete semirings the existence of the transitive closures is guaranteed (see Sect. 2.3). Commutative complete dioids are already complete residuated lattices.

A semiring whose multiplicative structure on \(S \setminus \{\epsilon\}\) is a commutative group will be called a semifield [Footnote 5]. Semifields are all entire, and we will use \(\mathcal{K}\) to refer to them. Therefore a semifield that is also a dioid is both entire and naturally ordered. These are sometimes called positive semifields, examples of which are the positive rationals, the positive reals, or the max-plus and min-plus semifields. Semifields are all incomplete except for the Booleans, but they can be completed as \(\overline{\mathcal{K}}\) [24], and we will not differentiate between complete and completed structures.

A semimodule (over a semiring) is an analogue of a vector space over a field. Semimodules inherit from their defining semirings the qualities of being zerosumfree, complete or having a natural order. In fact, semimodules over complete commutative dioids are also complete lattices. Rectangular matrices over a semiring form a semimodule\(\mathcal{M}_{m\times n}(\mathcal{S})\), and in particular, row- and column-spaces\(\mathcal{S}^{1\times n}\) and\(\mathcal{S}^{n\times 1}\). The set of square matrices\(\mathcal{M}_{n}(\mathcal{S})\) is also a semiring (but non-commutative unless n = 1).
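Matrix operations over a semiring only need its two operations and its zero. The following Python sketch (the `Semiring` record, `matmul` and `identity` are hypothetical names introduced for illustration) implements \((A \otimes B)_{ij} = \sum_k A_{ik} \otimes B_{kj}\) generically, so the same code works over max-plus and over the non-negative reals, and can be used to see that \(\mathcal{M}_2(\mathcal{S})\) is not commutative:

```python
import math
from dataclasses import dataclass
from functools import reduce
from typing import Callable, List

Matrix = List[List[float]]

@dataclass(frozen=True)
class Semiring:
    add: Callable[[float, float], float]  # (+)
    mul: Callable[[float, float], float]  # (x)
    zero: float                           # epsilon
    one: float                            # e

MAX_PLUS = Semiring(max, lambda a, b: a + b, -math.inf, 0.0)
NONNEG_REALS = Semiring(lambda a, b: a + b, lambda a, b: a * b, 0.0, 1.0)

def matmul(S: Semiring, A: Matrix, B: Matrix) -> Matrix:
    """(A (x) B)_ij = sum_k A_ik (x) B_kj, summing with S.add starting from S.zero."""
    return [[reduce(S.add, (S.mul(A[i][k], B[k][j]) for k in range(len(B))), S.zero)
             for j in range(len(B[0]))] for i in range(len(A))]

def identity(S: Semiring, n: int) -> Matrix:
    """Multiplicative unit of M_n(S): e on the diagonal, epsilon elsewhere."""
    return [[S.one if i == j else S.zero for j in range(n)] for i in range(n)]
```

For instance, in max-plus, with \(A = \left[\begin{smallmatrix} 1 & -\infty \\ 2 & 0\end{smallmatrix}\right]\) and \(B = \left[\begin{smallmatrix} 0 & 1 \\ -\infty & 3\end{smallmatrix}\right]\), one gets \((A \otimes B)_{11} = 1\) but \((B \otimes A)_{11} = 3\).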

2.2 The Eigenvalue Problem over Dioids

Given (6), the HITS iteration is easier to understand once the eigenvalue problem in a semiring is understood. So let \(\mathcal{M}_{n}(\mathcal{S})\) be the semiring of square matrices over a semiring \(\mathcal{S}\) with the usual operations. Given \(A \in \mathcal{M}_{n}(\mathcal{S})\), the right (left) eigenproblem is the task of finding the right eigenvectors \(v \in S^{n\times 1}\) and right eigenvalues \(\rho \in S\) (respectively, left eigenvectors \(u \in S^{1\times n}\) and left eigenvalues \(\lambda \in S\)) satisfying:

$$A \otimes v = v \otimes \rho \qquad u \otimes A = \lambda \otimes u$$

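The left and right eigenspaces and spectra are the sets of these solutions:

$$\mathcal{U}_{\lambda}(A) = \{u \in S^{1\times n} \mid u \otimes A = \lambda \otimes u\} \qquad \Lambda(A) = \{\lambda \in S \mid \mathcal{U}_{\lambda}(A) \neq \{\epsilon^{n}\}\}$$
$$\mathcal{V}_{\rho}(A) = \{v \in S^{n\times 1} \mid A \otimes v = v \otimes \rho\} \qquad \mathit{\text{P}}(A) = \{\rho \in S \mid \mathcal{V}_{\rho}(A) \neq \{\epsilon^{n}\}\}$$

Since \(\Lambda(A) = \mathit{\text{P}}(A^{\text{T}})\) and \(\mathcal{U}_{\lambda}(A) = \mathcal{V}_{\lambda}(A^{\text{T}})\) (up to transposition of the vectors), from now on we omit references to left eigenvalues, eigenvectors and spectra, unless we want to emphasize differences.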

In order to solve (7) in dioids, we use the following theorem [33, 34]:

Theorem 3 (Gondran and Minoux [34, Theorem 1])

Let \(A \in \mathcal{S}^{n\times n}\). If \(A^{\ast}\) exists, the following two conditions are equivalent:

1. \(A_{\cdot i}^{+} \otimes \mu = A_{\cdot i}^{\ast} \otimes \mu\) for some \(i \in \{1, \ldots, n\}\) and \(\mu \in S\).
2. \(A_{\cdot i}^{+} \otimes \mu\) (and \(A_{\cdot i}^{\ast} \otimes \mu\)) is an eigenvector of \(A\) for \(e\), \(A_{\cdot i}^{+} \otimes \mu \in \mathcal{V}_{e}(A)\).

Here we define the transitive closure \(A^{+} = \sum_{k=1}^{\infty} A^{k}\) and the reflexive transitive closure \(A^{\ast} = \sum_{k=0}^{\infty} A^{k}\) of \(A\) (also called Kleene's plus and star operators).
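Theorem 3 can be checked on a small max-plus example, where \(e = 0\) and \(\epsilon = -\infty\). The Python sketch below assumes that no cycle has positive weight, so that truncating the series at \(A^{+} = A \oplus A^{2} \oplus \cdots \oplus A^{n}\) is enough; this truncation is an assumption made for the example, not a general algorithm for complete semirings. The helper names and the matrix are illustrative:

```python
import math

EPS, E = -math.inf, 0.0  # max-plus zero (epsilon) and unit (e)

def mp_matmul(A, B):
    return [[max(A[i][k] + B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def mp_add(A, B):
    return [[max(a, b) for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def plus_closure(A):
    """A^+ = A (+) A^2 (+) ... (+) A^n; valid here because no cycle has positive weight."""
    power, closure = A, A
    for _ in range(len(A) - 1):
        power = mp_matmul(power, A)
        closure = mp_add(closure, power)
    return closure

def star_closure(A):
    """A^* = I (+) A^+, with e on the diagonal of I and epsilon elsewhere."""
    n, Ap = len(A), plus_closure(A)
    return [[max(Ap[i][j], E if i == j else EPS) for j in range(n)] for i in range(n)]

def column(X, i):
    return [row[i] for row in X]

def is_eigenvector(A, v, rho=E):
    """Checks A (x) v == v (x) rho in max-plus."""
    return [max(a + x for a, x in zip(row, v)) for row in A] == [x + rho for x in v]

# Cycle 0-1-0 has weight 0 (= e); cycles 1-2-1 and 2-2 have negative weight.
A = [[EPS, 1.0, EPS],
     [-1.0, EPS, 2.0],
     [EPS, -3.0, -1.0]]
Ap, As = plus_closure(A), star_closure(A)
```

Columns 0 and 1 satisfy condition 1 of the theorem and are eigenvectors for \(e\); for column 2 the plus and star columns differ, and the star column is not an eigenvector.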

In [35–37], Gondran and Minoux's theorem was made more specific in two directions. On the one hand, by focusing on particular types of completed idempotent semirings, that is, semirings with a natural order where infinite additions of elements exist, so that transitive closures are guaranteed to exist and sets of generators can be found for the eigenspaces. On the other hand, by considering subsemimodules that are easier to visualize than the whole eigenspace, a better choice for exploratory data analysis.

2.3 Graphs, Matrices and Closures over Dioids

From Theorem 3 it is clear that we need efficient methods to obtain the closures in order to solve the eigenvalue-eigenvector problem (and hence HITS and the SVD) in the general setting of semirings. For this purpose, it is useful to make explicit the cryptomorphism between weighted graphs and square matrices of Sect. 1.2:

- For a matrix \(A \in \mathcal{M}_{n}(\mathcal{S})\), the network or weighted digraph induced by \(A\), \(N_A = (V_A, E_A, w_A)\), consists of a set of vertices \(V_A\), a set of arcs \(E_A = \{(i, j) \mid A_{ij} \neq \epsilon_S\}\), and a weight \(w_A: V_A \times V_A \rightarrow S,\; (i,j) \mapsto w_A(i,j) = a_{ij}\).
- For a weighted directed graph \(N = (V, E, w)\), where \(V = \{v_i\}_{i=1}^{n}\) is a set of nodes, \(E \subseteq V \times V\) is a set of edges, and \(w: V \times V \rightarrow S\) is a weight function with \(w(v_i, v_j) \neq \epsilon\) if \((v_i, v_j) \in E\) and \(w(v_i, v_j) = \epsilon\) otherwise, the matrix \(A_N \in \mathcal{M}_{n}(\mathcal{S})\) is defined as \((A_N)_{ij} = w(v_i, v_j)\).

This allows us to apply, intuitively, all notions from networks to matrices and vice versa, like the underlying graph \(G_A = (V_A, E_A)\) disregarding the weights, the set of paths \(\Pi_A^{+}(i, j)\) between nodes \(i\) and \(j\), or the set of cycles \(C_A^{+}\). The following account is a summary of results in this respect, and we refer the reader to [35, 36] for proofs.

Lemma 2

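Let \(A \in \mathcal{M}_{n}(S)\) be a square matrix over a commutative semiring \(\mathcal{S}\). \(A^{\ast}\) exists if and only if \(A^{+}\) exists, and then:

$$A^{\ast} = I_{n} \oplus A^{+} \qquad A^{+} = A \otimes A^{\ast} = A^{\ast} \otimes A$$

where \(I_n\) denotes the identity matrix of \(\mathcal{M}_{n}(\mathcal{S})\).

Since the existence of the closures is not guaranteed in incomplete semirings, our natural setting should be that of complete semirings.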

On the other hand, in dioids the following lemma holds:

Lemma 3

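Let \(A \in \mathcal{M}_{n}(S)\) be a square matrix over a dioid \(\mathcal{S}\). For a partition \(\bar{n} = \alpha \cup \beta\), let \(\mathit{\text{PER}}(A) = A_{\beta\alpha}A_{\alpha\alpha}^{\ast}A_{\alpha\beta} \oplus A_{\beta\beta}\). Then

$$A^{\ast} = \left[\begin{array}{cc} A_{\alpha\alpha}^{\ast} \oplus A_{\alpha\alpha}^{\ast}A_{\alpha\beta}\,\mathit{\text{PER}}(A)^{\ast}A_{\beta\alpha}A_{\alpha\alpha}^{\ast} & A_{\alpha\alpha}^{\ast}A_{\alpha\beta}\,\mathit{\text{PER}}(A)^{\ast} \\ \mathit{\text{PER}}(A)^{\ast}A_{\beta\alpha}A_{\alpha\alpha}^{\ast} & \mathit{\text{PER}}(A)^{\ast} \end{array}\right]$$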

Proof

Adapted from [38, Lemma 4.101]. □

Notice that closures and simultaneous row and column permutations commute:

Lemma 4

Let \(A, B \in \mathcal{M}_{n}(\mathcal{S})\) and let \(P\) be a permutation matrix such that \(B = P^{\text{T}}AP\). Then \(B^{+} = P^{\text{T}}A^{+}P\) and \(B^{\ast} = P^{\text{T}}A^{\ast}P\).

A square matrix is irreducible if it cannot be simultaneously row- and column-permuted into a block upper (or lower) triangular form. Otherwise we say it is reducible. Irreducibility expresses itself as a graph property of the induced digraph \(D_A\) of Sect. 1.2.

Lemma 5

If \(A \in \mathcal{ M}_{n}(S)\) is irreducible, then:

- The induced digraph \(D_A\) has a single strongly connected component.
- All nodes in its induced digraph \(D_A\) are connected by cycles.
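Lemma 5 suggests a simple test for irreducibility over any semiring: build the digraph of non-null entries and check that every node reaches every node. The Python sketch below uses Warshall's algorithm for the Boolean transitive closure; the helper names are hypothetical, \(\epsilon\) is passed in and defaults to the max-plus zero \(-\infty\), and the test is meant for \(n \geq 2\):

```python
import math

def reachability(A, eps=-math.inf):
    """Boolean transitive closure (Warshall) of the digraph with an arc i -> j when A[i][j] != eps."""
    n = len(A)
    R = [[A[i][j] != eps for j in range(n)] for i in range(n)]
    for k in range(n):
        for i in range(n):
            for j in range(n):
                R[i][j] = R[i][j] or (R[i][k] and R[k][j])
    return R

def is_irreducible(A, eps=-math.inf):
    """For n >= 2: irreducible iff the induced digraph is strongly connected,
    i.e. every node reaches every node (itself included, through a cycle)."""
    return all(all(row) for row in reachability(A, eps))

EPS = -math.inf
A = [[EPS, 1.0, EPS], [-1.0, EPS, 2.0], [EPS, -3.0, -1.0]]  # cycles 0-1-0 and 1-2-1
B = [[0.0, 1.0], [EPS, 0.0]]                                # upper triangular: 1 never reaches 0
```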

The irreducible case serves as the base case in the recursive construction, described next, of the closure of an arbitrary matrix. In this construction, the condensation digraph is built using the classes of the reachability relation in \(D_A\) (the strongly connected components of \(D_A\)) as vertices and their connections as edges:

Lemma 6 (Recursive Upper Frobenius Normal Form, UFNF)

Let \(A \in \mathcal{ M}_{n}(S)\) be a matrix over a semiring and \(\overline{G}_{A}\) its condensation digraph. Then,

1. (\(\mathrm{UFNF}_3\)) If \(A\) has zero lines, it can be transformed by a simultaneous row and column permutation of \(V_A\) into the following form:

$$P_{3}^{\text{T}}\otimes A\otimes P_{3} = \left[\begin{array}{cccc} \mathcal{E}_{\iota\iota} & \cdot & \cdot & \cdot \\ \cdot & \mathcal{E}_{\alpha\alpha} & A_{\alpha\beta} & A_{\alpha\omega} \\ \cdot & \cdot & A_{\beta\beta} & A_{\beta\omega} \\ \cdot & \cdot & \cdot & \mathcal{E}_{\omega\omega} \end{array}\right]$$(12)

where either \(A_{\alpha\beta}\) or \(A_{\alpha\omega}\) or both are non-zero, and either \(A_{\alpha\omega}\) or \(A_{\beta\omega}\) or both are non-zero. Furthermore, \(P_3\) is obtained by concatenating permutations for the indices of simultaneously zero columns and rows \(V_{\iota}\), the indices of zero columns but non-zero rows \(V_{\alpha}\), the indices of zero rows but non-zero columns \(V_{\omega}\), and the rest \(V_{\beta}\), as \(P_3 = P(V_{\iota})P(V_{\alpha})P(V_{\beta})P(V_{\omega})\).

2. (\(\mathrm{UFNF}_2\)) If \(A\) has no zero lines, it can be transformed by a simultaneous row and column permutation \(P_2 = P(A_1)\ldots P(A_K)\) into block diagonal UFNF:

$$P_{2}^{\text{T}}\otimes A\otimes P_{2} = \left[\begin{array}{cccc} A_{1} & \cdot & \ldots & \cdot \\ \cdot & A_{2} & \ldots & \cdot \\ \vdots & \vdots & \ddots & \vdots \\ \cdot & \cdot & \ldots & A_{K} \end{array}\right]$$(13)

where \(\{A_k\}_{k=1}^{K}\), \(K \geq 1\), are the matrices of the connected components of \(\overline{G}_A\).

3. (\(\mathrm{UFNF}_1\)) If \(A\) is reducible with no zero lines and a single connected component, it can be simultaneously row- and column-permuted by \(P_1\) to

$$P_{1}^{\text{T}}\otimes A\otimes P_{1} = \left[\begin{array}{cccc} A_{11} & A_{12} & \cdots & A_{1R} \\ \cdot & A_{22} & \cdots & A_{2R} \\ \vdots & \vdots & \ddots & \vdots \\ \cdot & \cdot & \cdots & A_{RR} \end{array}\right]$$(14)

where \(A_{rr}\) are the matrices associated with each of its \(R\) strongly connected components (sorted in a topological ordering), and \(P_1 = P(A_{11})\ldots P(A_{RR})\).

Note that irreducible blocks are the base case of \(\mathrm{UFNF}_1\), so we sometimes refer to irreducible matrices as being in \(\mathrm{UFNF}_0\).
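The permutation \(P_1\) of (14) amounts to grouping nodes by strongly connected component and ordering the components topologically in the condensation digraph. A minimal Python sketch, assuming max-plus entries with \(\epsilon = -\infty\) and using hypothetical helper names and made-up data, is:

```python
import math

EPS = -math.inf  # max-plus zero

def reach(A):
    """Reflexive-transitive reachability (Warshall) on arcs i -> j with A[i][j] != EPS."""
    n = len(A)
    R = [[i == j or A[i][j] != EPS for j in range(n)] for i in range(n)]
    for k in range(n):
        for i in range(n):
            for j in range(n):
                R[i][j] = R[i][j] or (R[i][k] and R[k][j])
    return R

def ufnf1_order(A):
    """Node order listing strongly connected components in a topological order."""
    n, R = len(A), reach(A)
    components = []
    for i in range(n):
        if not any(i in comp for comp in components):
            components.append([j for j in range(n) if R[i][j] and R[j][i]])

    def ancestors(comp):
        return sum(1 for k in range(n) if R[k][comp[0]])

    components.sort(key=ancestors)
    return [i for comp in components for i in comp], components

def permute(A, order):
    """Simultaneous row and column permutation, i.e. P^T (x) A (x) P."""
    return [[A[i][j] for j in order] for i in order]

# Components {0, 1} and {2, 3}, with an arc 2 -> 0 between them (illustrative data).
A = [[EPS, 1.0, EPS, EPS],
     [1.0, EPS, EPS, EPS],
     [5.0, EPS, EPS, 1.0],
     [EPS, EPS, 1.0, EPS]]
order, components = ufnf1_order(A)
B = permute(A, order)
```

Sorting components by their number of ancestors yields a topological order because a component reachable from another one has strictly more ancestors; the permuted matrix is then block upper triangular, as in (14).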

As a result of this section, we can algorithmically calculate the transitive closure of any matrix \(A\) over any complete semiring.

2.4 Eigenvalues and Eigenvectors of Matrices over Complete Dioids

By the reasoning in previous sections and the cryptomorphism above, eigenvectors of the projection matrices in the dual-projection approach are vectors describing some qualities of the nodes in the weighted bipartite graph, e.g. authoritativeness or hubness in HITS. This is why we need a better characterization of such vectors.

2.4.1 Orthogonality of Eigenvectors

In spectral decomposition, orthogonality of the eigenvectors plays an important role. In zerosumfree semimodules orthogonality cannot be as prevalent as in standard vector spaces. To see this, define the support of a vector as the set of indices of its non-null coordinates, \(\mathop{\mathrm{supp}}(v) = \{i \in \overline{n} \mid v_i \neq \epsilon\}\), and consider the following simple lemma:

Lemma 7

In semimodules over entire, zerosumfree semirings, only vectors with empty intersection of supports are orthogonal.

Proof

Suppose \(v \perp u\); then \(\sum_{i=1}^{n} v_i \otimes u_i = \epsilon\). If \(v_i = \epsilon\) or \(u_i = \epsilon\) for some \(i\), then their product is null, so we need only consider a non-empty \(\mathop{\mathrm{supp}}(v) \cap \mathop{\mathrm{supp}}(u)\). In this case, \(v^{\text{T}} \otimes u = \sum_{i\in \mathop{\mathrm{supp}}(v) \cap \mathop{\mathrm{supp}}(u)} v_i \otimes u_i\). But if \(\mathcal{S}\) is zerosumfree, for the sum to be null every summand has to be null. And for a summand to be null, since \(\mathcal{S}\) is entire, either \(v_i\) is null or \(u_i\) is null, and then \(i\) would not belong to the common support. □
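Lemma 7 can be illustrated in \(\mathbb{R}_{0}^{+}\), which is entire and zerosumfree: two vectors have a null inner product exactly when their supports are disjoint, whereas over the reals cancellation allows orthogonal vectors with overlapping supports. The vectors below are arbitrary examples:

```python
from itertools import product

def inner(u, v):
    return sum(x * y for x, y in zip(u, v))

def support(v):
    """Indices of the non-null coordinates of v."""
    return {i for i, x in enumerate(v) if x != 0}

# Vectors over the non-negative reals (illustrative data).
nonneg_vectors = [[0.0, 2.0, 0.0, 1.0], [3.0, 0.0, 0.5, 0.0],
                  [0.0, 0.0, 4.0, 0.0], [1.0, 1.0, 0.0, 0.0]]

# Over the reals, cancellation gives orthogonality despite identical supports.
real_u, real_v = [1.0, -1.0], [1.0, 1.0]
```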

2.4.2 The Null Eigenspaces

If they exist, the eigenvectors of the null eigenvalue are interesting in that they define the null eigenspace. Also, the particular eigenvalue \(\perp\) can only appear in \(\mathrm{UFNF}_3\). The following proposition describes the null eigenvalue and eigenspace:

Proposition 1

Let \(\overline{\mathcal{S}}\) be a semiring and \(A \in \mathcal{ M}_{n}(\overline{\mathcal{S}})\) . Then:

1. If the \(i\)-th column is zero, then the characteristic vector \(e_i\) is a fundamental eigenvector of \(\epsilon\) for \(A\), and \(\epsilon \in \mathit{\text{P}}^{\mathit{\text{p}}}(A)\).
2. Non-collinear eigenvectors of \(\epsilon\) are orthogonal, so the order of multiplicity of \(\perp \in \mathit{\text{P}}^{\mathit{\text{p}}}(A)\) is the number of empty columns of \(A\).
3. If \(\mathcal{S}\) is entire, then \(G_A\) has no cycles if and only if \(\mathit{\text{P}}^{\mathit{\text{p}}}(A) = \{\epsilon\}\).
4. If \(\mathcal{S}\) is entire and zerosumfree, the null eigenspace is generated by the fundamental eigenvectors of \(\epsilon\) for \(A\), \(\mathcal{V}_{\epsilon}(A) = \langle \mathrm{FEV}_{\epsilon}(A)\rangle\).

Proof

See [35, 3.6 and 3.7]. Claim 2 is a consequence of claim 1 and Lemma 7. □

These results matter inasmuch as they generate \(\perp\) coordinates in the eigenvectors, that is, in the hub and authority vectors.
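Claims 1 and 2 of Proposition 1 can be seen on a small max-plus matrix, where \(\epsilon = -\infty\) and \(e = 0\): each zero column yields a characteristic vector that \(A\) maps to the null vector, i.e. an eigenvector for \(\epsilon\), and distinct such vectors are orthogonal. The matrix and helper names below are illustrative:

```python
import math

EPS, E = -math.inf, 0.0  # max-plus zero (epsilon) and unit (e)

def mp_apply(A, v):
    """Max-plus matrix-vector product A (x) v."""
    return [max(a + x for a, x in zip(row, v)) for row in A]

def characteristic_vector(n, i):
    """e_i: the unit e at coordinate i and epsilon elsewhere."""
    return [E if k == i else EPS for k in range(n)]

def zero_columns(A):
    return [j for j in range(len(A)) if all(row[j] == EPS for row in A)]

# Columns 0 and 2 are zero (all epsilon); illustrative data.
A = [[EPS, 2.0, EPS],
     [EPS, EPS, EPS],
     [EPS, 1.0, EPS]]
```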

2.4.3 Eigenvalues and Eigenvectors of Matrices over Positive Semifields

When\(\mathcal{S}\) has more structure we can improve on the results in the previous section. The first proposition advises us to concentrate on the irreducible blocks:

Proposition 2

If \(\mathcal{K}\) is a positive semifield, \(A \in \mathcal{ M}_{n}(\mathcal{K})\) is irreducible, and \(v \in \mathcal{ V }_{\rho }(A)\) then ρ > ε and \(\forall i \in \overline{n}\,,v_{i}>\epsilon\) .

Proof

See [33, Lemma 4.1.2]. □

Note that these results apply to\(\mathbb{R}_{0}^{+}\), but not to\(\mathbb{R}_{+,\times }\), the reals, or to\(\mathbb{C}_{+,\times }\), the complex numbers, since the latter are not dioids.

For a finite ρ ∈ K in a semifield, let\((\widetilde{A}^{\rho })^{+} = (\rho ^{-1} \otimes A)^{+}\) be the normalized transitive closure of A. The lemma below allows us to change the focus from the transitive closures to the circuit structure of G A and vice versa.

Lemma 8

If \(\mathcal{K}\) is a semifield and \(A \in \mathcal{M}_{n}(\mathcal{K})\), then, if \((\rho^{-1} \otimes A)^{\ast}\) exists and either \(\sum_{c\in C_i} w(c) \otimes (\rho^{-1})^{l(c)} \oplus e = \sum_{c\in C_i} w(c) \otimes (\rho^{-1})^{l(c)}\), where \(C_i\) denotes the set of circuits in \(C_A^{+}\) containing node \(v_i\), or \((\rho^{-1} \otimes A)^{\ast}_{\cdot i} = (\rho^{-1} \otimes A)^{+}_{\cdot i}\), then \((\rho^{-1} \otimes A)^{\ast}_{\cdot i}\) is an eigenvector of \(A\) for eigenvalue \(\rho\).

Proof

See [33, Chapter 6, Corollary 2.4]. □

When \(\mathcal{K}\) is a radicable semifield, the mean of a cycle c is \(\mu_{\oplus}(c) = \sqrt[l(c)]{w(c)}\). If the semifield is (additively) idempotent, the aggregate cycle mean of A is \(\mu_{\oplus}(A) = \sum\{\mu_{\oplus}(c) \mid c \in C_{A}^{+}\}\). If the semiring is idempotent and selective, the nodes in the circuits that attain this mean are called the critical nodes of A, \(V_{A}^{c} = \{ i \in c \mid \mu_{\oplus}(c) = \mu_{\oplus}(A)\}\). Equivalently, the critical nodes are \(V_{A}^{c} = \{ i \in V_{A} \mid ((\widetilde{A}^{\mu_{\oplus}(A)})^{+})_{ii} = e\}\).
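As an illustration only, the sketch below computes the aggregate cycle mean and the critical nodes in the max-plus semifield (ℝ ∪ {−∞}, max, +), which we assume here as a concrete commutative, selective, radicable instance: ⊗ is +, e = 0, ⊥ = −∞, and the l-th root is division by l. It relies on the standard fact that the maximum cycle mean equals the maximum of \((A^{\otimes k})_{ii}/k\) over k = 1, …, n and i. The function names and the example matrix are hypothetical, not the authors' in-house primitives.

```python
import math

NEG_INF = float("-inf")  # bottom element ⊥ of max-plus; the unit e is 0.0


def mp_matmul(A, B):
    """Max-plus product: (A ⊗ B)_ij = max_k (A_ik + B_kj)."""
    return [[max(A[i][k] + B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def max_cycle_mean(A):
    """Aggregate cycle mean: max over k = 1..n and i of (A^k)_ii / k."""
    n, best, power = len(A), NEG_INF, A
    for k in range(1, n + 1):
        for i in range(n):
            if power[i][i] > NEG_INF:
                best = max(best, power[i][i] / k)
        power = mp_matmul(power, A)
    return best


def plus_closure(B):
    """B^+ = B ⊕ B^2 ⊕ ... ⊕ B^n, exact when no cycle of B has positive weight."""
    closure, power = [row[:] for row in B], B
    for _ in range(len(B) - 1):
        power = mp_matmul(power, B)
        closure = [[max(c, p) for c, p in zip(cr, pr)]
                   for cr, pr in zip(closure, power)]
    return closure


def critical_nodes(A, tol=1e-9):
    """Nodes i whose diagonal entry in the normalized closure equals e = 0."""
    mu = max_cycle_mean(A)
    if mu == NEG_INF:  # no cycles at all, hence no critical nodes
        return mu, []
    normalized = [[a - mu for a in row] for row in A]  # mu^{-1} ⊗ A
    closure = plus_closure(normalized)
    crit = [i for i in range(len(A))
            if math.isclose(closure[i][i], 0.0, abs_tol=tol)]
    return mu, crit


A = [[0.0, 2.0, NEG_INF],
     [1.0, NEG_INF, NEG_INF],
     [5.0, NEG_INF, -1.0]]
mu, crit = critical_nodes(A)
print(mu, crit)  # 1.5 [0, 1]
```

Node 2 is not critical: the only cycle through it is its self-loop of weight −1, below the mean 1.5 attained by the circuit 0 → 1 → 0.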

We define the set of (right) fundamental eigenvectors of A for ρ, \(\mathrm{FEV}_{\rho}(A)\), as the columns of the normalized closure \((\widetilde{A}^{\rho})^{+}\) indexed by the critical nodes.
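Under the same max-plus assumption, this sketch extracts the fundamental eigenvectors as the critical columns of the normalized closure and checks the eigen-equation \(A \otimes v = \mu_{\oplus}(A) \otimes v\) numerically. The matrix and all helper names are hypothetical; the helpers are repeated so that the block runs on its own.

```python
NEG_INF = float("-inf")  # ⊥ in max-plus; e = 0.0


def mp_matmul(A, B):
    return [[max(A[i][k] + B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def mp_matvec(A, v):
    """(A ⊗ v)_i = max_j (A_ij + v_j)."""
    return [max(a + x for a, x in zip(row, v)) for row in A]


def max_cycle_mean(A):
    n, best, power = len(A), NEG_INF, A
    for k in range(1, n + 1):
        best = max([best] + [power[i][i] / k for i in range(n)])
        power = mp_matmul(power, A)
    return best


def plus_closure(B):
    closure, power = [row[:] for row in B], B
    for _ in range(len(B) - 1):
        power = mp_matmul(power, B)
        closure = [[max(c, p) for c, p in zip(cr, pr)]
                   for cr, pr in zip(closure, power)]
    return closure


def fundamental_eigenvectors(A, tol=1e-9):
    """Columns of (mu^{-1} ⊗ A)^+ indexed by the critical nodes, keyed by node."""
    mu = max_cycle_mean(A)
    if mu == NEG_INF:
        raise ValueError("A has no cycles, hence no finite proper eigenvalue")
    closure = plus_closure([[a - mu for a in row] for row in A])
    n = len(A)
    return mu, {i: [closure[r][i] for r in range(n)]
                for i in range(n) if abs(closure[i][i]) <= tol}


# Hypothetical irreducible matrix whose critical circuit 0 -> 1 -> 0 has mean 2.5
A = [[NEG_INF, 3.0, 1.0],
     [2.0, NEG_INF, NEG_INF],
     [NEG_INF, 0.0, 0.0]]
mu, fev = fundamental_eigenvectors(A)
for node, v in fev.items():
    assert mp_matvec(A, v) == [mu + x for x in v]  # A ⊗ v = mu ⊗ v
print(mu, fev)  # 2.5 {0: [0.0, -0.5, -3.0], 1: [0.5, 0.0, -2.5]}
```

The two fundamental eigenvectors differ by the constant 0.5, that is, they are collinear in max-plus, as one would expect for critical nodes lying on the same critical circuit.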

The basic building block is the spectrum of irreducible matrices:

Theorem 4 ((Right) Spectral Theory for Irreducible Matrices [35])

Let \(A \in \mathcal{ M}_{n}(\overline{\mathcal{K}})\) be an irreducible matrix over a complete commutative selective radicable semifield. Then:

1. The right spectrum of the matrix is the whole semifield except the bottom:

$$\displaystyle{\mathrm{P}(A) = \overline{\mathcal{K}}\setminus \{\perp\}}$$

2. The right proper spectrum comprises only the aggregate cycle mean:

$$\displaystyle{\mathrm{P}^{\mathrm{p}}(A) = \{\mu_{\oplus}(A)\}}$$

3. If an eigenvalue is improper, \(\rho \in \mathrm{P}(A)\setminus\mathrm{P}^{\mathrm{p}}(A)\), then its eigenspace (and eigenlattice) reduces to two vectors:

$$\displaystyle{\mathcal{V}_{\rho}(A) = \{\perp^{n}, \top^{n}\} = \mathcal{L}_{\rho}(A)}$$

4. The eigenspace for a finite proper eigenvalue \(\rho = \mu_{\oplus}(A) < \top\) is generated by its fundamental eigenvectors over the whole semifield, while the eigenlattice is generated by them over the semifield \(\nVDash = \langle \{\perp, e, \top\}, \oplus, \otimes, \perp, e, \top\rangle\):

$$\displaystyle{\mathcal{V}_{\rho}(A) = \langle \mathrm{FEV}_{\rho}(A)\rangle_{\overline{\mathcal{K}}} \supset \mathcal{L}_{\rho}(A) = \langle \mathrm{FEV}_{\rho}(A)\rangle_{\nVDash}}$$

Note how this theorem introduces the notion of eigenlattices to finitely represent an eigenspace over an idempotent semifield. Refer to [35] for further details.

We will see in our results that the only other UFNF type we need be concerned with is UFNF2. Let the partition of \(V_A\) generating the permutation that renders A in UFNF2 (block-diagonal form) be \(V_A = \{V_k\}_{k=1}^{K}\), and write \(A = \biguplus_{k=1}^{K} A_{k}\), with \(A_{k} = A(V_k, V_k)\).

Lemma 9

Let \(A =\biguplus _{ k=1}^{K}A_{k} \in \mathcal{ M}_{n}(\mathcal{S})\) be a matrix in UFNF 2 , over a semiring, and \(\mathcal{V }_{\rho }(A_{k})\) ( \(\mathcal{U}_{\lambda }(A_{k})\) ) a right (left) eigenspace of A k for ρ (λ). Then,

Proof

See [36] Lemma 3.12. □

Note that this procedure is constructive, and that the combinatorial nature of the proof in [36] makes the claim hold in any semiring. Clearly, if \(\rho \in \mathrm{P}^{\mathrm{p}}(A_k)\) for some k, then \(\rho \in \mathrm{P}^{\mathrm{p}}(A)\). Since \(\mathrm{P}^{\mathrm{p}}(A_k) = \Lambda^{\mathrm{p}}(A_k)\) for matrices admitting a UFNF2, \(\mathrm{P}^{\mathrm{p}}(A) = \Lambda^{\mathrm{p}}(A) = \bigcup_{k=1}^{K}\mathrm{P}^{\mathrm{p}}(A_{k})\).

Corollary 1

Let \(A \in \mathcal{ M}_{n}(\mathcal{S})\) be a matrix in UFNF 2 over a semiring. Then the (left) right eigenspace of A for ρ ∈ P(A) is the product of the (left) right eigenspaces for the blocks, \(\mathcal{U}_{\lambda }(A) = \times _{k=1}^{K}\mathcal{U}_{\lambda }(A_{k})\) and \(\mathcal{V }_{\rho }(A) = \times _{k=1}^{K}\mathcal{V }_{\rho }(A_{k})\).
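Corollary 1 can be checked on a toy instance. The sketch below again assumes the max-plus semifield (⊥ = −∞, ⊗ = +) and uses hypothetical blocks \(A_1\), \(A_2\): vectors assembled block by block from eigenvectors of the blocks for the same ρ are eigenvectors of their direct sum, and the bottom vector belongs to every eigenspace. The helper names are ours.

```python
NEG_INF = float("-inf")  # ⊥


def mp_matvec(A, v):
    return [max(a + x for a, x in zip(row, v)) for row in A]


def block_diag(*blocks):
    """Direct sum A_1 ⊎ ... ⊎ A_K, with ⊥ outside the diagonal blocks."""
    n = sum(len(b) for b in blocks)
    A = [[NEG_INF] * n for _ in range(n)]
    offset = 0
    for b in blocks:
        for i, row in enumerate(b):
            for j, a in enumerate(row):
                A[offset + i][offset + j] = a
        offset += len(b)
    return A


def is_eigenvector(A, v, rho):
    """Check A ⊗ v = rho ⊗ v exactly."""
    return mp_matvec(A, v) == [rho + x for x in v]


A1 = [[NEG_INF, 3.0], [2.0, NEG_INF]]  # circuit 0 -> 1 -> 0, mean 2.5
A2 = [[1.0]]                           # self-loop, mean 1.0
A = block_diag(A1, A2)

# rho = 2.5: an eigenvector of A1 combined with the bottom vector of A2
v = [0.0, -0.5] + [NEG_INF]
# rho = 1.0: the bottom vector of A1 combined with an eigenvector of A2
w = [NEG_INF, NEG_INF] + [0.0]
print(is_eigenvector(A, v, 2.5), is_eigenvector(A, w, 1.0))  # True True
```

Since v and w have disjoint supports, their max-plus inner product is ⊥, a first glimpse of how orthogonality arises only across blocks.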

In complete semirings, looking for generators for the eigenspaces with \(\delta_{k}(k) = e\) and \(\delta_{k}(i) = \perp\) for \(i \neq k\), we define the right fundamental eigenvectors as

Lemma 9 proves that \(\mathrm{FEV}_{\rho}^{2}(A) \subset \mathcal{V}_{\rho}(A)\), but we also have the following:

Lemma 10

Let \(A \in \mathcal{ M}_{n}(\overline{\mathcal{D}})\) be a matrix in UFNF 2 over a complete idempotent semiring with ρ ∈ P(A). Then,

-

1.

If ρ ∈ P p(A), then

.

. -

2.

If ρ ∈ P(A)∖ P p(A) then \(\mathop{\mathrm{FEV}}\nolimits _{\rho }^{2}\!\left (A\right ) =\mathop{ \mathrm{FEV}}\nolimits ^{\mathit{2},\top }\!\!\left (A\right )\).

-

3.

If ρ ∈ P p(A) then

.

. -

4.

.

.

We call \(\mathrm{FEV}^{2,\top}(A)\) the saturated fundamental eigenvectors of A, and define the (right) saturated eigenspace as \(\mathcal{V}^{\top}(A) = \langle \mathrm{FEV}^{2,\top}(A)\rangle_{\overline{\mathcal{D}}}\).

Corollary 2

Let \(A \in \mathcal{ M}_{n}(\mathcal{S})\) be a matrix in UFNF 2 over a complete selective radicable idempotent semifield. Then

1. For \(\rho \in \mathrm{P}^{\mathrm{p}}(A)\), \(\mathcal{V}_{\rho}(A) \supseteq \mathcal{V}^{\top}(A)\).

2. For \(\rho \in \mathrm{P}(A)\setminus\mathrm{P}^{\mathrm{p}}(A)\), \(\mathcal{V}_{\rho}(A) = \mathcal{V}^{\top}(A)\).

Note that the following, very general, proposition holds for all complete dioids.

Proposition 3

Let \(A \in \mathcal{ M}_{n}(\overline{\mathcal{D}})\) be a matrix in UFNF 2 over a complete dioid. Then,

1. For \(\rho \in \mathrm{P}(A)\setminus\mathrm{P}^{\mathrm{p}}(A)\),

$$\displaystyle{\mathcal{U}^{\top}(A) = \langle \mathrm{FEV}^{2,\top}(A^{\mathrm{T}})\rangle_{\nVDash} \qquad \mathcal{V}^{\top}(A) = \langle \mathrm{FEV}^{2,\top}(A)\rangle_{\nVDash}\,.}$$

2. For \(\rho \in \mathrm{P}^{\mathrm{p}}(A)\), \(\rho < \top\),

$$\displaystyle{\mathcal{U}_{\lambda}(A) = \langle \mathrm{FEV}_{\rho}^{2}(A^{\mathrm{T}})\rangle_{\overline{\mathcal{D}}} \qquad \mathcal{V}_{\rho}(A) = \langle \mathrm{FEV}_{\rho}^{2}(A)\rangle_{\overline{\mathcal{D}}}\,.}$$

To better represent eigenspaces, we define the spectral lattices of A,

involving the product of the component lattices, \(\mathcal{L}_{\rho}(A) = \times_{k=1}^{K}\mathcal{L}_{\rho}(A_{k})\).

3 Results

3.1 HITS over Idempotent Semifields: iHITS

Let \(\overline{\mathcal{K}} = \langle K,\oplus,\otimes,\perp\rangle\) be a complete dioid, in general, and let (G, M, R) be a \(\mathcal{K}\)-formal context. Then the space of hub scores is \(\mathcal{X} = K^{G}\), that of authorities is \(\mathcal{Y} = K^{M}\), and they are mapped into each other by two linear functions:

To relate this problem back to the original one, we rewrite (7) in the new spaces,

we premultiply (18) by the new symmetric A to obtain

expressing the solution in terms of the projectors on both modes of (1). This shows that, in order to solve the HITS problem in a dioid, we have to solve the Singular Value Problem (18), which amounts to solving both decoupled Eigenvalue Problems (19).
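For the original setting (nonnegative reals with ordinary + and ×), this can be checked numerically. The sketch below uses NumPy and a small, hypothetical weight matrix R (rows are the hub mode G, columns the authority mode M); it is not the authors' implementation. It obtains hubs and authorities from the two decoupled eigenproblems for \(RR^{\mathrm{T}}\) and \(R^{\mathrm{T}}R\) and compares them with the principal singular vectors of R.

```python
import numpy as np

# Hypothetical weighted 2-mode network: 4 hub-mode nodes (rows), 3 authority-mode nodes (columns)
R = np.array([[3.0, 1.0, 0.0],
              [1.0, 2.0, 1.0],
              [0.0, 1.0, 4.0],
              [2.0, 0.0, 1.0]])

# Decoupled eigenproblems of the two one-mode projections (eigh sorts eigenvalues ascending)
evals_h, evecs_h = np.linalg.eigh(R @ R.T)
evals_a, evecs_a = np.linalg.eigh(R.T @ R)
hubs = np.abs(evecs_h[:, -1])    # principal eigenvector, sign fixed to be nonnegative
auths = np.abs(evecs_a[:, -1])

# Singular Value Problem: the same vectors are the principal singular vectors of R
U, s, Vt = np.linalg.svd(R)
print(np.allclose(hubs, np.abs(U[:, 0])), np.allclose(auths, np.abs(Vt[0])))  # True True
print(np.isclose(s[0] ** 2, evals_h[-1]))  # True: sigma_1^2 is the top eigenvalue of R R^T
# Mutual reinforcement between the two score vectors: R^T hubs = sigma_1 * auths
print(np.allclose(R.T @ hubs, s[0] * auths))  # True
```

Because every entry of \(RR^{\mathrm{T}}\) and \(R^{\mathrm{T}}R\) is positive here, the principal eigenvalue is simple and the comparison up to sign is well defined.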

However, these manipulations overlook the fact that obtaining the original HITS requires a semifield, not just a dioid. In the interest of generality, we develop the calculations below in terms of dioids, but the reader is warned that, after a certain point, we must suppose that \(\mathcal{K}\) is a semifield, and in particular an idempotent semifield, to reach a solution. In this way we obtain the analogue of HITS over idempotent semifields, which we call iHITS.
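A minimal iHITS sketch follows, assuming the max-plus semifield (⊥ = −∞, e = 0, ⊗ = +) as the idempotent semifield and a hypothetical R with finite entries, so that the bipartite graph is connected. It builds the symmetric matrix \(A = \left[\begin{array}{cc}\perp & R\\ R^{\mathrm{t}} & \perp\end{array}\right]\), normalizes it by its cycle mean, which for a symmetric matrix is its largest entry (Proposition 5 below), and reads hub and authority scores off the critical columns of the normalized closure. Only the principal (largest) singular value is handled; the function name ihits is ours.

```python
NEG_INF = float("-inf")  # ⊥ of max-plus; the unit e is 0.0


def mp_matmul(X, Y):
    return [[max(X[i][k] + Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def mp_matvec(X, v):
    return [max(a + b for a, b in zip(row, v)) for row in X]


def plus_closure(B):
    closure, power = [row[:] for row in B], B
    for _ in range(len(B) - 1):
        power = mp_matmul(power, B)
        closure = [[max(c, p) for c, p in zip(cr, pr)]
                   for cr, pr in zip(closure, power)]
    return closure


def ihits(R, tol=1e-9):
    """Hub/authority score pairs for the principal max-plus singular value of R."""
    g, m = len(R), len(R[0])
    # Symmetric bipartite matrix A = [[⊥, R], [R^t, ⊥]] on the node set G + M
    A = ([[NEG_INF] * g + list(R[i]) for i in range(g)] +
         [[R[i][j] for i in range(g)] + [NEG_INF] * m for j in range(m)])
    mu = max(max(row) for row in R)  # cycle mean of symmetric A = its largest entry
    if mu == NEG_INF:
        raise ValueError("R is empty (all entries are bottom)")
    closure = plus_closure([[a - mu for a in row] for row in A])
    pairs = []
    for k in range(g + m):
        if abs(closure[k][k]) <= tol:  # k is a critical node
            v = [closure[r][k] for r in range(g + m)]
            pairs.append((v[:g], v[g:]))  # (hub scores on G, authority scores on M)
    return A, mu, pairs


R = [[3.0, 1.0, 0.0],
     [1.0, 2.0, 1.0],
     [0.0, 1.0, 4.0],
     [2.0, 0.0, 1.0]]
A, mu, pairs = ihits(R)
for hubs, auths in pairs:
    # eigen-equation A ⊗ v = mu ⊗ v for v = (hubs, auths)
    assert mp_matvec(A, hubs + auths) == [mu + t for t in hubs + auths]
    # concept-like pairing: auths = mu^{-1} ⊗ R^t ⊗ hubs
    assert auths == [max(R[i][j] + hubs[i] for i in range(len(R))) - mu
                     for j in range(len(R[0]))]
print(mu, len(pairs))  # 4.0 2
```

The two pairs come from the two endpoints of the single critical edge (hub 2, authority 2) and are collinear; the second assertion is the max-plus reading of the hub-to-authority duality.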

3.2 The Eigenproblem in Symmetric Matrices

Since A is symmetric, we no longer have to worry about the distinction between the left and right eigenproblems:

Lemma 11

If A is symmetric, then \(\Lambda^{\mathrm{p}}(A) = \mathrm{P}^{\mathrm{p}}(A)\) and \(\left(\mathcal{U}_{\rho}(A)\right)^{\mathrm{T}} = \mathcal{V}_{\rho}(A)\).

We can also refine the results in Proposition 1:

Proposition 4

Let \(\overline{\mathcal{S}}\) be a semiring and let \(A \in \mathcal{M}_{n}(\overline{\mathcal{S}})\) be symmetric. Then:

1. The multiplicity of ⊥ ∈ \(\mathrm{P}^{\mathrm{p}}(A)\) is the number of empty rows (equivalently, empty columns) of A.

2. If \(\mathcal{S}\) is entire, \(\mathrm{P}^{\mathrm{p}}(A) = \{\epsilon\}\) if and only if \(A = \mathcal{E}_{n}\).

Proof

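First, if \(A = A^{\mathrm{t}}\), then the numbers of empty rows and of empty columns coincide, and Proposition 1.2 provides the result. Second, by Proposition 1.3, \(\mathrm{P}^{\mathrm{p}}(A) = \{\epsilon\}\) means that \(G_A\) has no cycles. But if \(A_{ij} \neq \epsilon\) for some i, j, then by symmetry \(A_{ji} = A_{ij} \neq \epsilon\), so c = i → j → i is a cycle whose weight \(w(c) = A_{ij} \otimes A_{ji}\) is non-null because \(\mathcal{S}\) is entire, which is a contradiction. Hence \(A = \mathcal{E}_{n}\); conversely, \(\mathcal{E}_{n}\) has no cycles. □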

Note that empty rows of R generate left eigenvalues while empty columns generate right eigenvalues, so the multiplicity of the null singular value may differ between left and right.

To use Lemma 8 and Theorem 4, we need the maximum cycle mean:

Proposition 5

Let \(\mathcal{K}\) be a complete idempotent semifield and let \(A \in \mathcal{M}_{n}(K)\) be symmetric. Then \(\mu_{\oplus}(A) = \sup_{i,j} A_{ij}\), where the supremum, taken in the natural order of the semifield, is attained.

Proof

Since A is symmetric, c = i → j → i is a cycle whenever \(A_{ij} = A_{ji} \neq \perp\), and then \(\mu_{\oplus}(c) = \sqrt{A_{ij} \otimes A_{ji}} = A_{ij}\). Consider a cycle c′ such that \(\mu_{\oplus}(c') = \sup_{i,j} A_{ij} = \max_{i,j} A_{ij}\) in the order of the semiring; it exists because there are finitely many indices i, j. If we extend any such critical cycle with another node k, as in c″ = i → j → k → i, then \(w(c'') = A_{ij} \otimes A_{jk} \otimes A_{ki} \leq A_{ij}^{3} = \mu_{\oplus}(c')^{l(c'')}\), so in the aggregate mean \(\mu_{\oplus}(c') \oplus \mu_{\oplus}(c'') = \mu_{\oplus}(c')\). By induction on the length of the cycles extending c′, we obtain \(\sum_{c\in C_{A}^{+}} \mu_{\oplus}(c) = \mu_{\oplus}(c') = \sup_{i,j} A_{ij}\). □
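Proposition 5 can be spot-checked numerically. The sketch below assumes the max-plus semifield and compares, on random symmetric matrices with integer weights and missing (⊥) entries, the largest entry with the maximum cycle mean computed independently as the maximum of \((A^{\otimes k})_{ii}/k\) for k = 1, …, n. The generator, its parameters, and the seed are arbitrary illustrative choices.

```python
import random

NEG_INF = float("-inf")  # ⊥


def mp_matmul(A, B):
    return [[max(A[i][k] + B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def max_cycle_mean(A):
    """max over k = 1..n and i of (A^k)_ii / k (elementary cycles have length <= n)."""
    n, best, power = len(A), NEG_INF, A
    for k in range(1, n + 1):
        best = max([best] + [power[i][i] / k for i in range(n)])
        power = mp_matmul(power, A)
    return best


def random_symmetric(n, rng, p_missing=0.4):
    """Random symmetric max-plus matrix with integer weights in [-5, 5] and ⊥ gaps."""
    A = [[NEG_INF] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):
            if rng.random() >= p_missing:
                A[i][j] = A[j][i] = float(rng.randint(-5, 5))
    return A


rng = random.Random(0)
mismatches = 0
for _ in range(300):
    A = random_symmetric(rng.randint(1, 6), rng)
    if max_cycle_mean(A) != max(max(row) for row in A):
        mismatches += 1
print(mismatches)  # 0
```

Exact float comparison is safe here because all weights are small integers, so the critical mean k·max/k is computed exactly.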

To find the cycle means easily, we use the UFNF form.

3.3 UFNF Forms of Symmetric Matrices and Their Closures

For symmetric reducible matrices, the feasible UFNF types are simplified:

Proposition 6

Let \(\mathcal{S}\) be a dioid and let \(A \in \mathcal{M}_{n}(\mathcal{S})\) be symmetric. Then:

- (UFNF3) A admits a proper symmetric UFNF3 form if it has zero lines and, in that case, the sets of zero rows and zero columns are the same:

$$\displaystyle{ P_{3}^{\mathrm{T}}\otimes A\otimes P_{3} = \left[\begin{array}{cc} A_{\beta\beta} & \cdot \\ \cdot & \mathcal{E}_{\iota\iota} \end{array}\right] } \tag{20}$$

- (UFNF2) If A has no zero lines, it can be transformed by a simultaneous row and column permutation \(P_{2} = P(A_{1})\ldots P(A_{K})\) into symmetric block-diagonal UFNF:

$$\displaystyle{ P_{2}^{\mathrm{T}}\otimes A\otimes P_{2} = \left[\begin{array}{cccc} A_{1} & \cdot & \ldots & \cdot \\ \cdot & A_{2} & \ldots & \cdot \\ \vdots & \vdots & \ddots & \vdots \\ \cdot & \cdot & \ldots & A_{K} \end{array}\right] = \biguplus_{k=1}^{K} A_{k} } \tag{21}$$

where \(\{A_{k}\}_{k=1}^{K}\), K ≥ 1, are the symmetric matrices of the connected components of \(\overline{G}_{A}\).

- (no UFNF1) A cannot be permuted into a proper UFNF1 form.

Proof

This follows from applying the matrix conformation procedure of Lemma 6 to a symmetric matrix. □
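For a symmetric matrix, the conformation amounts to finding connected components. This sketch, under max-plus conventions (⊥ = −∞ marks a missing entry), computes a simultaneous row and column permutation that groups each connected component into a diagonal block and, following the layout of (20), moves the empty lines last. The function names and the matrix are hypothetical.

```python
NEG_INF = float("-inf")  # ⊥: absent edge


def ufnf_permutation(A, bottom=NEG_INF):
    """Permutation listing each connected component's nodes contiguously, empty lines last."""
    n = len(A)
    empty = [i for i in range(n) if all(a == bottom for a in A[i])]
    seen, blocks = set(empty), []
    for start in range(n):
        if start in seen:
            continue
        component, stack = [], [start]
        seen.add(start)
        while stack:  # depth-first search over the undirected graph of A
            u = stack.pop()
            component.append(u)
            for w in range(n):
                if A[u][w] != bottom and w not in seen:
                    seen.add(w)
                    stack.append(w)
        blocks.append(sorted(component))
    perm = [i for block in blocks for i in block] + empty
    return perm, blocks, empty


def permute(A, perm):
    """Simultaneous row/column permutation: entry (r, c) is A[perm[r]][perm[c]]."""
    return [[A[i][j] for j in perm] for i in perm]


BOT = NEG_INF
A = [[1.0, BOT, BOT, 2.0, BOT],
     [BOT, BOT, BOT, BOT, 3.0],
     [BOT, BOT, BOT, BOT, BOT],
     [2.0, BOT, BOT, BOT, BOT],
     [BOT, 3.0, BOT, BOT, 0.0]]
perm, blocks, empty = ufnf_permutation(A)
print(perm, blocks, empty)  # [0, 3, 1, 4, 2] [[0, 3], [1, 4]] [2]
for row in permute(A, perm):
    print(row)
```

A node with only a self-loop is not an empty line, so it forms its own 1 × 1 block.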

We will see that this is almost the only structure we need to consider to find the eigenvectors. Consider \(R \in \mathcal{M}_{G+M}(S)\), the bipartite network matrix, for instance, of the form:

If \(R_{12}\) and \(R_{21}\) are null, then we can find a permutation P so that

Now, if \(R_{2} = \mathcal{E}_{G_{2}M_{2}}\) is null, then (23) is in UFNF3 with \(\mathcal{E}_{\iota\iota} = \mathcal{E}_{(G_{2}+M_{2})(G_{2}+M_{2})}\), while if \(R_{1}\) and \(R_{2}\) are both full, then (23) is in UFNF2 with blocks \(A_{1}\) and \(A_{2}\), respectively. Note that other blocked forms of R simply generate an irreducible A, since the UFNF1 form is not possible.

So we may assume that A can be simultaneously row- and column-permuted into a block-diagonal form

with the empty rows and columns permuted to the beginning, \(\mathcal{E}_{\iota\iota}\), and irreducible blocks \(A_{k}\). Recall that closures and permutations commute, so the closures of the matrices in the forms above are simple: the closure of (24) is obtained directly from the closures of the blocks:

The solution of this base case depends heavily on the dioid in which the problem is stated. Since we will be solving the problem in idempotent semifields, for the irreducible base case we need only be concerned with the matrix:

where we use the shorthand \(B = \widetilde{R}^{\mu_{\oplus}(A)}\) for the normalization by the cycle mean that is needed to find the eigenvectors. To find the closures of such irreducible matrices, we apply Lemma 3 to (26):

where we have used that \((B^{\mathrm{t}} \otimes B)^{*} \otimes B^{\mathrm{t}} = B^{\mathrm{t}} \otimes (B \otimes B^{\mathrm{t}})^{*}\).
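The identity used above is an instance of the rule \((xy)^{*}x = x(yx)^{*}\), which holds in any semiring where the stars exist, since both sides expand to \(\bigoplus_{k \geq 0} (B^{\mathrm{t}} \otimes B)^{k} \otimes B^{\mathrm{t}}\). A quick spot-check, assuming max-plus and random rectangular B with nonpositive entries (so that no cycle has positive weight and the stars are finite sums), might look like this; the sizes, probabilities, and seed are arbitrary.

```python
import random

NEG_INF = float("-inf")  # ⊥


def mp_matmul(X, Y):
    return [[max(X[i][k] + Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def transpose(X):
    return [list(col) for col in zip(*X)]


def kleene_star(C):
    """C^* = I ⊕ C ⊕ ... ⊕ C^(n-1), exact when no cycle of C has positive weight."""
    n = len(C)
    star = [[0.0 if i == j else NEG_INF for j in range(n)] for i in range(n)]
    power = star
    for _ in range(n - 1):
        power = mp_matmul(power, C)
        star = [[max(s, p) for s, p in zip(sr, pr)] for sr, pr in zip(star, power)]
    return star


rng = random.Random(7)
for _ in range(200):
    g, m = rng.randint(1, 4), rng.randint(1, 4)
    B = [[NEG_INF if rng.random() < 0.3 else float(rng.randint(-4, 0))
          for _ in range(m)] for _ in range(g)]
    Bt = transpose(B)
    lhs = mp_matmul(kleene_star(mp_matmul(Bt, B)), Bt)   # (B^t ⊗ B)^* ⊗ B^t
    rhs = mp_matmul(Bt, kleene_star(mp_matmul(B, Bt)))   # B^t ⊗ (B ⊗ B^t)^*
    assert lhs == rhs
print("identity holds on all samples")
```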

3.4 Pairs of Singular Vectors of Symmetric Matrices over Idempotent Semifields

To extract the eigenvectors corresponding to left singular vectors i ∈ G (respectively, right singular vectors j ∈ M) we need to check Theorem 3 against each block in its diagonal:

So the existence of \(\left(\widetilde{A}^{\mu_{\oplus}(A)}\right)^{+}\) requires the existence of the transitive closures \((B^{\mathrm{t}} \otimes B)^{+}\) and \((B \otimes B^{\mathrm{t}})^{+}\), as could be expected from (19). Note that, by (6), the hub and authority scores are those columns such that:

but, importantly, (27) gives us the form of the authority score related to a particular hub score and vice versa, which is a kind of formal-concept property:

This completely describes the left and right singular vectors. To find the singular values, we note from (19) that they are the square roots of the cycle means of the independent blocks or, equally, the proper eigenvalues of A,

This would include the bottom if and only if one of the blocks is empty.

3.5 Relationship to FCA

In order to interpret the results above in the light of FCA, we need an appropriate multi-valued extension of it. \(\mathcal{K}\)-Formal Concept Analysis is such an extension: it handles formal contexts with entries in an idempotent semifield [24] and has been used for the analysis of confusion matrices and other data with the appropriate characteristics [39–41].

Regarding the formal-concept-like property glimpsed in (28), a cursory analysis with the techniques in [24] shows that the analogue of the FCA polars declared in (17) actually comes from two different generalized Galois connections:

- \(R \otimes \cdot : K^{M} \to K^{G}\) is the right adjunct of a left (Galois) adjunction, while

- \(R^{\mathrm{t}} \otimes \cdot : K^{G} \to K^{M}\) is the left adjunct in a right (Galois) adjunction.
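One standard way to see why \(R \otimes \cdot\) takes part in an adjunction is residuation. In max-plus with finite entries (an assumption made only for this sketch), \(R \otimes y \leq x\) holds exactly when \(y \leq R^{\sharp}x\), where \((R^{\sharp}x)_j = \min_i (x_i - R_{ij})\). The code checks this equivalence and the two derived inequalities on random vectors; the notation \(R^{\sharp}\) and the function names are ours, and how the check maps onto the left/right terminology of [24] is not spelled out here.

```python
import random


def mp_apply(R, y):
    """(R ⊗ y)_i = max_j (R_ij + y_j), a map from K^M to K^G."""
    return [max(r + yj for r, yj in zip(row, y)) for row in R]


def residual(R, x):
    """(R# x)_j = min_i (x_i - R_ij): the largest y with R ⊗ y <= x."""
    return [min(x[i] - R[i][j] for i in range(len(R))) for j in range(len(R[0]))]


def leq(u, v):
    """Componentwise (natural) order."""
    return all(a <= b for a, b in zip(u, v))


rng = random.Random(1)
R = [[float(rng.randint(-3, 3)) for _ in range(3)] for _ in range(4)]
for _ in range(1000):
    y = [float(rng.randint(-6, 6)) for _ in range(3)]
    x = [float(rng.randint(-6, 6)) for _ in range(4)]
    assert leq(mp_apply(R, y), x) == leq(y, residual(R, x))   # adjunction
    assert leq(mp_apply(R, residual(R, x)), x)                   # R ⊗ R#x <= x
    assert leq(y, residual(R, mp_apply(R, y)))                   # y <= R#(R ⊗ y)
print("residuation checks passed")
```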

It is well known that, in the Boolean case, iterating these operators is not concept-forming. Nevertheless, they do lead to closures. For instance, in [15] a discussion of the issue leads to operators achieving transitive closures for endorelations (that is, binary relations with identical domain and codomain) and to finding the strongly connected components in the one-mode projection graphs these endorelations define. This agrees with a technical condition often imposed on graphs before they are studied with HITS: that their adjacency matrices be irreducible, that is, that the graph have a single strongly connected component. Note that the transitive closure of the matrix also figures prominently in that application.
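For the Boolean case, the link between transitive closure and strongly connected components can be sketched with textbook tools: compute the transitive closure with Warshall's algorithm, then group nodes that reach each other. An adjacency matrix is irreducible exactly when this yields a single component. This is a generic construction for illustration, not the operators of [15]; the example graph is hypothetical.

```python
def transitive_closure(adj):
    """Warshall's algorithm: reach[i][j] is True iff a path of length >= 1 leads from i to j."""
    n = len(adj)
    reach = [list(row) for row in adj]
    for k in range(n):
        for i in range(n):
            if reach[i][k]:
                for j in range(n):
                    if reach[k][j]:
                        reach[i][j] = True
    return reach


def strongly_connected_components(adj):
    """Classes of mutual reachability, read off the transitive closure."""
    reach = transitive_closure(adj)
    n, assigned, components = len(adj), set(), []
    for i in range(n):
        if i in assigned:
            continue
        comp = [j for j in range(n) if j == i or (reach[i][j] and reach[j][i])]
        assigned.update(comp)
        components.append(comp)
    return components


def is_irreducible(adj):
    """A single strongly connected component (meaningful for n > 1)."""
    return len(strongly_connected_components(adj)) == 1


T, F = True, False
adj = [[F, T, F, F],   # 0 -> 1
       [T, F, T, F],   # 1 -> 0, 1 -> 2
       [F, F, F, T],   # 2 -> 3
       [F, F, T, F]]   # 3 -> 2
print(strongly_connected_components(adj))  # [[0, 1], [2, 3]]
print(is_irreducible(adj))                  # False
```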

Yet, in this chapter the isomorphism between the ranges of the operators in (17), which defines the duality between hubs and authorities, is clear and holds in a more general context than the one HITS was initially conceived for. Specifically, we consider graphs with any number of strongly connected components, even none (see Sect. 3.3).

In parallel work [42], we also suggest that the “standard” notion of a formal concept of a \(\mathcal{K}\)-context should be enlarged to include not only closures but also interiors of (multi-valued) sets of objects and attributes. It may be that the hub and authority score vectors of the idempotent version of HITS belong to these systems of interiors. In any case, there seems to be no single SVD for matrices with values in an idempotent semifield, and this issue needs further exploration.

3.6 Example

In this section we present a HITS analysis of a weighted two-mode network using both standard HITS and HITS over the max-min-plus idempotent semifield. The data analysed are the worries data from [43, p. 31], a two-mode network relating the type of worry declared most prevalent by 1554 Israeli adults to their place of origin (and, in some cases, that of their parents). The graph of the network is depicted in Fig. 1a.

Fig. 1. Weighted, directed graph of the worries data [43, p. 31] and its weighted idempotent and standard “authority” (worry) and “hub” (procedence) scores. There are clear differences between the two approaches. (a) Worries weighted bipartite network. (b) Principal worry scores. (c) Procedence scores

To make them amenable to max-min-plus processing, the original counts in the contingency matrix were transformed into a joint probability mass function \(P_{WP}\) with marginals \(P_{W}\) over worries and \(P_{P}\) over procedence. The idempotent SVD was carried out on the pointwise mutual information matrix.
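The defining equation of this matrix is not reproduced here. For illustration only, the sketch below assumes the conventional definition \(\mathrm{PMI}(w,p) = \log \frac{P_{WP}(w,p)}{P_{W}(w)\,P_{P}(p)}\) with natural logarithms, and maps empty cells to −∞, the bottom element of the max-plus structure assumed here. The counts are hypothetical and are not the worries data.

```python
import math


def pmi_matrix(counts):
    """Pointwise mutual information log(P(w, p) / (P(w) P(p))); empty cells map to -inf."""
    total = sum(sum(row) for row in counts)
    p_w = [sum(row) / total for row in counts]           # marginal over rows (worries)
    p_p = [sum(col) / total for col in zip(*counts)]     # marginal over columns (procedence)
    return [[math.log((c / total) / (p_w[i] * p_p[j])) if c > 0 else float("-inf")
             for j, c in enumerate(row)]
            for i, row in enumerate(counts)]


counts = [[10, 0],   # hypothetical contingency table
          [5, 5]]
for row in pmi_matrix(counts):
    print([round(v, 4) for v in row])
```

Positive entries mark worry/procedence combinations that co-occur more often than under independence; a table of independent counts yields an all-zero (all-e) matrix.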

The “authority” and “hub” scores are differentiated for each of the modes: they return “type of worry” and “procedence” scores, respectively. HITS and iHITS produce somewhat different results: the actual meaning of these differences is data dependent and a matter for future, more specialized analyses. The idempotent primitives were developed in-house and are available from the authors upon request.

3.7 Discussion

Extensions to Other Semirings. Note that the original HITS problem was set in a positive semifield that is not idempotent; hence, our method of solution does not yet apply to that case, but it does apply to the max-min-plus and max-min-times semifields, examples of which are given in terms of their normalized closures. In the case of \(\mathbb{R}_{0}^{+}\), the Perron-Frobenius theorem is usually invoked to solve HITS iteratively by means of the power method [44].
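The power-method route can be sketched for a nonnegative R as follows: alternate the two HITS updates, normalizing after each, until the vectors stop changing. The Euclidean normalization, the tolerance, the iteration cap, and the example R are our choices for illustration; the result is compared with NumPy's SVD.

```python
import numpy as np


def hits_power(R, max_iter=1000, tol=1e-12):
    """HITS by alternating updates a <- R^T h, h <- R a with L2 normalization."""
    hubs = np.ones(R.shape[0])
    auths = np.ones(R.shape[1])
    for _ in range(max_iter):
        new_auths = R.T @ hubs
        new_auths /= np.linalg.norm(new_auths)
        new_hubs = R @ new_auths
        new_hubs /= np.linalg.norm(new_hubs)
        converged = (np.allclose(new_hubs, hubs, rtol=0.0, atol=tol)
                     and np.allclose(new_auths, auths, rtol=0.0, atol=tol))
        hubs, auths = new_hubs, new_auths
        if converged:
            break
    return hubs, auths


R = np.array([[3.0, 1.0, 0.0],
              [1.0, 2.0, 1.0],
              [0.0, 1.0, 4.0],
              [2.0, 0.0, 1.0]])
hubs, auths = hits_power(R)
U, s, Vt = np.linalg.svd(R)
print(np.allclose(hubs, np.abs(U[:, 0])), np.allclose(auths, np.abs(Vt[0])))  # True True
```

Convergence speed is governed by the ratio of the two largest eigenvalues of \(R^{\mathrm{T}}R\); the all-ones start vector has a nonzero component along the Perron vector because R is nonnegative.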

A reviewer of this paper asked us to consider the solution of the HITS problem for the dioids that are not semifields. The obstacle to further generalizing our scheme is the base case of the recursion over Frobenius normal forms. In the idempotent semifield case, the cycle means of Proposition 5 provide the eigenvalues needed to normalize the matrices, which in turn allows the closures to be calculated in the irreducible case. But in a generic positive semifield, neither the cycle means nor the possibility of choosing the critical circuits to select the eigenvectors in the closures are guaranteed. Also, in inclines (and in the fuzzy semirings included in them) the base, irreducible case is completely different from that of semifields [33]. But since the generic development on dioids for UFNF1 and UFNF2 is based on combinatorial considerations, we believe that a solution of the base case for other dioids could be plugged into this UFNF recursion to obtain results analogous to those presented here. These extensions will be considered in future work.

On the Orthogonality of Solutions. On another note, the SVD in standard algebra relies heavily on the orthogonality of the left and right singular vectors belonging to different singular values, which guarantees certain properties of the bases of singular vectors used in the reconstruction. But in entire zerosumfree semirings, and a fortiori in entire dioids and positive semifields, orthogonality is a rare phenomenon, by Lemma 7.
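To make the rarity concrete: in max-plus (assumed here as an entire, zerosumfree example), the inner product \(x^{\mathrm{t}} \otimes y = \max_i (x_i + y_i)\) equals ⊥ = −∞ exactly when x and y have disjoint supports. The sketch checks this equivalence on random vectors; the helper names are hypothetical.

```python
import random

NEG_INF = float("-inf")  # ⊥


def mp_inner(x, y):
    """x^t ⊗ y = max_i (x_i + y_i)."""
    return max(a + b for a, b in zip(x, y))


def support(x):
    """Indices of the non-⊥ coordinates."""
    return {i for i, a in enumerate(x) if a != NEG_INF}


def orthogonal(x, y):
    return mp_inner(x, y) == NEG_INF


rng = random.Random(3)


def random_vector(n):
    return [NEG_INF if rng.random() < 0.5 else float(rng.randint(-3, 3)) for _ in range(n)]


for _ in range(1000):
    x, y = random_vector(5), random_vector(5)
    # entire: a + b = -inf only if a or b is -inf; zerosumfree: a max is -inf only if all terms are
    assert orthogonal(x, y) == (not (support(x) & support(y)))
print(orthogonal([0.0, NEG_INF], [NEG_INF, 2.0]), orthogonal([0.0, 1.0], [NEG_INF, 2.0]))  # True False
```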

Indeed, irreducible matrices have no orthogonal left or right singular vectors; rather, these are collinear. Regarding reducible matrices, note that (24) accounts for all the possible orthogonality between eigenvectors. In fact, we have:

Corollary 3

Let (G, M, R) be an \(\mathcal{S}\)-formal context over a dioid \(\mathcal{S}\), and let \(A \in \mathcal{M}_{n}(\mathcal{S})\) be as in (27). Then two eigenvectors of A, or two (left or right) singular vectors of R, can be orthogonal only if they arise from different blocks.

Moreover, Proposition 3 shows that even in that case they need not be orthogonal.

However, the work in [22, 25] suggests that orthogonality may not be needed in the case of dioids: using join- and meet-irreducible elements may guarantee perfect reconstruction.

On the Effectiveness of the Dual-Projection Approach for 2-Mode Network Analysis. Another reviewer raised the concern that the work in [3] shows the dual-projection approach to be hopeless. In that work, Latapy and colleagues advocate a more “direct” approach to the study of bipartite networks, collecting and creating measures designed specifically for them rather than adapting those of 1-mode networks. To some extent, they develop a criticism of the projection approach and, indirectly, of the dual-projection approach on Boolean networks.

However, they suggest that their approach and the projection approach are, in general, complementary and, in particular, that none of the criticism of Boolean projection approaches applies to projection approaches on weighted affiliation networks. On these grounds, and in the light of the present work, we believe that, the criticism of [3] notwithstanding, the dual-projection approach is adequate for the study of 2-mode networks and can bring many insights about their behaviour.

4 Summary and Conclusions

In this paper, we related the HITS algorithm to the SVD of the adjacency matrix of a weighted 2-mode network and argued that this supports the dual-projection approach to SNA.

To make the relationship of these techniques to \(\mathcal{K}\)-Formal Concept Analysis evident, we generalized the HITS algorithm to semirings, then instantiated it in dioids, semifields (including the original semifield where it was defined) and finally in idempotent semifields, which are the algebras used by \(\mathcal{K}\)-FCA.

We showed that the projection operators are related to Galois adjunctions, rather than to the polars in Galois connections, and that this approach to weighted graph analysis has affinities with finding strongly connected components in Boolean graphs. What the connected components of weighted graphs might mean is a subject for further work.

We have also provided an example of how to use the new calculations to obtain idempotent authority and hub scores for a weighted bipartite graph, although the interpretation of such scores vis-à-vis the original ones needs further investigation.

Notes

- 1.

We will consider all graphs in this paper as directed graphs unless otherwise stated.

- 2.

- 3.

Birkhoff actually coined “crypto-isomorphism”, but the term seems to have been forced to evolve [12]. We point out that the “surprise” must come from finding that concepts from different subfields are the same. Of course, cryptomorphisms boil down to plain isomorphisms as soon as the surprise fades away, so the notion is of an educational or sociological rather than a formal nature.

- 4.

This procedure will be extended in Sect. 2.3.

- 5.

To lessen the visual clutter, we drop the graph index from the matrix.

- 6.

This term is not standard: for instance, [33] prefers “semiring with a multiplicative group structure”, but we prefer semifield to shorten our statements.

References

[1] Bang-Jensen, J., Gutin, G.: Digraphs. Theory, Algorithms, and Applications, 3rd edn. Springer, Heidelberg (2001)

[2] Agneessens, F., Everett, M.G.: Introduction to the special issue on advances in two-mode social networks. Soc. Netw. 35, 145–147 (2013)

[3] Latapy, M., Magnien, C., Vecchio, N.D.: Basic notions for the analysis of large two-mode networks. Soc. Netw. 30, 31–48 (2008)

[4] Everett, M.G., Borgatti, S.P.: The dual-projection approach for two-mode networks. Soc. Netw. 35, 204–210 (2013)

[5] Strang, G.: The fundamental theorem of linear algebra. Am. Math. Mon. 100, 848–855 (1993)

[6] Golub, G.H., Van Loan, C.F.: Matrix Computations, 3rd edn. JHU Press, Baltimore (2012)

[7] Landauer, T.K., McNamara, D.S., Dennis, S., Kintsch, W.: Handbook of Latent Semantic Analysis. Lawrence Erlbaum Associates, Mahwah (2007)

[8] Ganter, B., Wille, R.: Formal Concept Analysis: Mathematical Foundations. Springer, Berlin/Heidelberg (1999)

[9] Wille, R.: Restructuring lattice theory: an approach based on hierarchies of concepts. In: Ordered Sets (Banff, Alta., 1981), pp. 445–470. Reidel, Boston (1982)

[10] Davey, B., Priestley, H.: Introduction to Lattices and Order, 2nd edn. Cambridge University Press, Cambridge (2002)

[11] Birkhoff, G.: Lattice Theory, 3rd edn. American Mathematical Society, Providence (1967)

[12] Rota, G.C.: Indiscrete Thoughts. Springer, Boston, MA (2009)

[13] Freeman, L.C., White, D.R.: Using Galois lattices to represent network data. Sociol. Methodol. 23, 127–146 (1993)

[14] Domenach, F.: CryptoLat - a pedagogical software on lattice cryptomorphisms and lattice properties. In: Ojeda-Aciego, M., Outrata, J. (eds.) 10th International Conference on Concept Lattices and Their Applications (2013)

[15] Gaume, B., Navarro, E., Prade, H.: A parallel between extended formal concept analysis and bipartite graphs analysis. In: IPMU’10: Proceedings of the Computational Intelligence for Knowledge-Based Systems Design, and 13th International Conference on Information Processing and Management of Uncertainty, Universite Paul Sabatier Toulouse III. Springer, Heidelberg (2010)

[16] Kuznetsov, S.O.: Interpretation on graphs and complexity characteristics of a search for specific patterns. Nauchno-Tekhnicheskaya Informatsiya Seriya - Informationnye i sistemy 1, 23–27 (1989)

[17] Falzon, L.: Determining groups from the clique structure in large social networks. Soc. Netw. 22, 159–172 (2000)

[18] Ali, S.S., Bentayeb, F., Missaoui, R., Boussaid, O.: An efficient method for community detection based on formal concept analysis. In: Foundations of Intelligent Systems, pp. 61–72. Springer, New York (2014)

[19] Roth, C., Bourgine, P.: Epistemic communities: description and hierarchic categorization. Math. Popul. Stud. 12, 107–130 (2005)

[20] Freeman, L.C.: Methods of social network visualization. In: Meyers, R.A. (ed.) Encyclopedia of Complexity and Systems Science, Entry 25, pp. 1–19. Springer, New York (2008)

[21] Duquenne, V.: On lattice approximations: syntactic aspects. Soc. Netw. 18, 189–199 (1996)

[22] Bělohlávek, R., Vychodil, V.: Formal concepts as optimal factors in Boolean factor analysis: implications and experiments. In: Proceedings of the 5th International Conference on Concept Lattices and Their Applications (CLA07), Montpellier, 24–26 October 2007

[23] Bělohlávek, R.: Fuzzy Relational Systems. Foundations and Principles. IFSR International Series on Systems Science and Engineering, vol. 20. Kluwer Academic, Norwell (2002)

[24] Valverde-Albacete, F.J., Peláez-Moreno, C.: Extending conceptualisation modes for generalised Formal Concept Analysis. Inf. Sci. 181, 1888–1909 (2011)

[25] Bělohlávek, R.: Optimal decompositions of matrices with entries from residuated lattices. J. Log. Comput. 22, 1405–1425 (2012)

[26] Kleinberg, J.M.: Authoritative sources in a hyperlinked environment. J. ACM 46, 604–632 (1999)

[27] Easley, D.A., Kleinberg, J.M.: Networks, Crowds, and Markets - Reasoning About a Highly Connected World. Cambridge University Press, Cambridge (2010)

[28] Kolaczyk, E.D., Csárdi, G.: Statistical Analysis of Network Data with R. Use R!, vol. 65. Springer, New York, NY (2014)

[29] Lanczos, C.: Linear Differential Operators. Dover, New York (1997)

[30] Golan, J.S.: Power Algebras over Semirings. With Applications in Mathematics and Computer Science. Mathematics and Its Applications, vol. 488. Kluwer Academic, Dordrecht (1999)

[31] Pouly, M., Kohlas, J.: Generic Inference. A Unifying Theory for Automated Reasoning. Wiley, Hoboken (2012)

[32] Golan, J.S.: Semirings and Their Applications. Kluwer Academic, Dordrecht (1999)

[33] Gondran, M., Minoux, M.: Graphs, Dioids and Semirings. New Models and Algorithms. Operations Research/Computer Science Interfaces. Springer, New York (2008)

[34] Gondran, M., Minoux, M.: Valeurs propres et vecteurs propres dans les dioïdes et leur interprétation en théorie des graphes. EDF, Bulletin de la Direction des Etudes et Recherches, Serie C, Mathématiques Informatique 2, 25–41 (1977)

[35] Valverde-Albacete, F.J., Peláez-Moreno, C.: The spectra of irreducible matrices over completed idempotent semifields. Fuzzy Sets Syst. 271, 46–69 (2015)

[36] Valverde-Albacete, F.J., Peláez-Moreno, C.: The spectra of reducible matrices over complete commutative idempotent semifields and their spectral lattices. Int. J. Gen. Syst. 45, 86–115 (2016)

[37] Valverde-Albacete, F.J., Peláez-Moreno, C.: Spectral lattices of reducible matrices over completed idempotent semifields. In: Ojeda-Aciego, M., Outrata, J. (eds.) Concept Lattices and Applications (CLA 2013), pp. 211–224. Université de la Rochelle, Laboratory L3i, La Rochelle (2013)

[38] Cohen, G., Gaubert, S., Quadrat, J.P.: Duality and separation theorems in idempotent semimodules. Linear Algebra Appl. 379, 395–422 (2004)

[39] Peláez-Moreno, C., García-Moral, A.I., Valverde-Albacete, F.J.: Analyzing phonetic confusions using Formal Concept Analysis. J. Acoust. Soc. Am. 128, 1377–1390 (2010)

[40] Valverde-Albacete, F.J., González-Calabozo, J.M., Peñas, A., Peláez-Moreno, C.: Supporting scientific knowledge discovery with extended, generalized formal concept analysis. Expert Syst. Appl. 44, 198–216 (2016)

[41] González-Calabozo, J.M., Valverde-Albacete, F.J., Peláez-Moreno, C.: Interactive knowledge discovery and data mining on genomic expression data with numeric formal concept analysis. BMC Bioinf. 17, 374 (2016)

[42] Valverde-Albacete, F.J., Peláez-Moreno, C.: The linear algebra in extended formal concept analysis over idempotent semifields. In: Bertet, K., Borchmann, D. (eds.) Formal Concept Analysis, pp. 211–227. Springer, Berlin/Heidelberg (2017)

[43] Mirkin, B.: Mathematical Classification and Clustering. Nonconvex Optimization and Its Applications, vol. 11. Kluwer Academic, Dordrecht (1996)

[44] Akian, M., Gaubert, S., Ninove, L.: Multiple equilibria of nonhomogeneous Markov chains and self-validating web rankings. arXiv:0712.0469 (2007)

Acknowledgements

The authors have been partially supported by the Spanish Government-MinECo projects TEC2014-53390-P and TEC2014-61729-EXP for this work.

We would like to thank the reviewers of earlier versions of this paper for their help in improving it.


Copyright information

© 2017 Springer International Publishing AG

About this chapter

Cite this chapter

Valverde-Albacete, F.J., Peláez-Moreno, C. (2017). A Formal Concept Analysis Look at the Analysis of Affiliation Networks. In: Missaoui, R., Kuznetsov, S., Obiedkov, S. (eds) Formal Concept Analysis of Social Networks. Lecture Notes in Social Networks. Springer, Cham. https://doi.org/10.1007/978-3-319-64167-6_7


DOI: https://doi.org/10.1007/978-3-319-64167-6_7


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-64166-9

Online ISBN: 978-3-319-64167-6
