Abstract

After the introduction, in the first part of the chapter we review some properties of the scalar Hill equation, a second-order linear ordinary differential equation with periodic coefficients. In the second part, we extend these results to the vectorial Hill equation and compare the two cases; most of the results are confined to the case of two degrees of freedom (DOF). In both cases the equations depend on two parameters \( \left( \alpha ,\beta \right) \), and the zones of instability in the \(\alpha -\beta \) plane are called Arnold Tongues. Wherever possible, we illustrate the properties graphically with the aid of the Arnold Tongues.



3.1 Introduction

The Hill equation (3.10) was introduced by George Hill in the 1870s, but it was not published until 1886 [28]. It is a linear second-order ordinary differential equation with a periodic coefficient (originally an even function), used to describe the variations in the lunar orbit. It matched the data available at the time so well that it immediately gained wide diffusion. Between the time Hill obtained his results and their publication, the Floquet Theorem [20] appeared. Nowadays, any study of the Hill equation is based on Floquet’s result. More than half a century later, the Nobel Prize winner Piotr Kapitsa [32] used the then-new Theory of Perturbations to find a condition under which the upper equilibrium point of a pendulum (Footnote 1) can be stabilized by periodically varying its suspension point. Specifically, if the suspension point of a pendulum of mass M and length L varies periodically as \(z=A\cos \omega t\), then the upper equilibrium point becomes stable if \(A\omega > \sqrt{2gL}\), where \(g=9.81\) m/s\(^{2}\) is the acceleration of gravity. A pendulum with a periodically varying suspension point is called Kapitsa’s Pendulum. After this result was published, some authors pointed out that Stephenson [46] had obtained a similar result earlier; in the author’s opinion this is only partially true, because Stephenson’s paper claims that such stabilization is possible but does not state any condition. In 1928, van der Pol and Strutt (Footnote 2) [47] published the first Arnold Tongues (Footnote 3), which they claimed belonged to the Mathieu equation (Eq. 3.10 with \(q\left( t\right) =\cos t\)); in fact, they reproduced the Arnold Tongue diagram of the Meissner Equation (Eq. 3.10 with \(q\left( t\right) = sign\left( \cos t\right) \)).
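Kapitsa’s condition \(A\omega >\sqrt{2gL}\), as quoted above, reduces to simple arithmetic. The Python sketch below only evaluates that inequality; the function names and the sample values (A = 0.05 m, L = 1 m) are hypothetical choices for illustration.

```python
import math

G = 9.81  # acceleration of gravity in m/s^2, as in the text


def kapitsa_threshold(length_m: float, g: float = G) -> float:
    """Right-hand side of Kapitsa's condition, sqrt(2 g L), in m/s."""
    return math.sqrt(2.0 * g * length_m)


def upper_equilibrium_stable(amplitude_m: float, omega: float, length_m: float,
                             g: float = G) -> bool:
    """True when A * omega > sqrt(2 g L) for suspension motion z = A cos(omega t)."""
    return amplitude_m * omega > kapitsa_threshold(length_m, g)


def min_omega(amplitude_m: float, length_m: float, g: float = G) -> float:
    """Angular frequency (rad/s) that omega must strictly exceed for a given A."""
    return kapitsa_threshold(length_m, g) / amplitude_m


if __name__ == "__main__":
    A, L = 0.05, 1.0  # hypothetical: 5 cm excitation amplitude, 1 m pendulum
    w = min_omega(A, L)
    print(f"omega must exceed {w:.1f} rad/s, i.e. {w / (2 * math.pi):.1f} Hz")
```

For these sample values the threshold is about 88.6 rad/s (roughly 14 Hz), which shows why the stabilizing excitation must be fast compared with the natural swing of the pendulum.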

Even more interesting, there is a seven-century tradition at the Cathedral of Santiago de Compostela of operating a Kapitsa’s Pendulum with a large censer (O Botafumeiro), which reaches approximately \(\pm 82{^\circ }\) after 17 cycles, taking approximately 80 s to achieve its maximum excursion [42]. This effect is contained in Kapitsa’s result, because when the condition \(A\omega >\sqrt{2gL}\) is satisfied, the upper equilibrium becomes stable and, simultaneously, the lower equilibrium becomes unstable, as in the Botafumeiro. Around 1940 the Romantic era of the Hill equation ended. From the late 1940s until the 1960s, two prominent Russian academicians, Krein and Yakubovich, established the foundations of the theory of linear Hamiltonian systems with periodic coefficients; we mention two celebrated references, [34, 48]. Other important contributions were made by Gelfand–Lidskii [24], Starzhinskii [45], Bolotin [6], Atkinson [4], and Eastham [19]; above all of them stands Lyapunov himself, who devoted approximately half of his Ph.D. thesis to the problem of stability of linear periodic systems [35]. Relations with the Sturm–Liouville Theory, only for the scalar case, appear in Atkinson [4], Eastham [19], Yakubovich and Starzhinskii [48], and the excellent book of Marchenko [37]; a recent reference is [10]. A recent application of parametric resonance to the roll of ships appeared in [21]. Two excellent surveys are Champneys [11] and Seyranian [43]; encyclopedic and very deep results on the spectrum of Hill’s equation were presented by McKean and van Moerbeke [38].

The Direct Problem is: given a Hill equation, find the associated spectral bands or Arnold Tongues; this chapter deals only with this case. The Inverse Problem consists in: given the spectral data, recover the equation that has this spectrum. The inverse Sturm–Liouville problem related to the scalar Hill equation was solved in the 1960s by Gelfand and Levitan [23] and others, but it was Borg [7] who defined the problem and gave the first key results. Atkinson [4] and Eastham [19] also gave interesting results. In the opinion of the author, the inverse problem associated with the vectorial Hill equation is far from being solved, because it is not equivalent to a vectorial Sturm–Liouville (SL) Problem, although some early attempts are found in [26] and, more recently, in [5]. In the physics literature, the Hill equation is known as the one-dimensional Schrödinger equation with a periodic potential.

This chapter is organized as follows: the first section is an introduction and historical overview; Sect. 3.2 presents mathematical preliminaries, particularly concerning matrices; Sect. 3.3 surveys the results for the scalar Hill equation; Sect. 3.4 presents the 2-DOF, or vectorial, Hill equation; Sect. 3.5 gives a set of open problems and possible generalizations; and, finally, Sect. 3.6 presents some conclusions.

3.2 Preliminaries

In this section we present the main background required subsequently, namely Floquet Theory, which gives the basic properties of the solutions of linear ordinary differential equations with periodic coefficients. Then we review Hamiltonian systems and the associated Hamiltonian and symplectic matrices, together with their main properties.

3.2.1 Floquet Theory

Consider a linear system described by a set of first-order linear ordinary differential equations with periodic coefficients:

\(\dot{x}\left( t\right) =A\left( t\right) x\left( t\right) \)  (3.1)

where \(A\left( t\right) \) is an \(n\times n\) matrix whose entries are piecewise continuous and periodic with minimum period T, i.e., \(A\left( t+T\right) =A\left( t\right) \) for all t; for brevity we will say that \(A\left( t\right) \) is T-periodic. The solutions of (3.1) may be expressed in terms of the state transition matrix (Footnote 4) \(\varPhi \left( t,t_{0}\right) \), which has the following basic properties (see [8] or [12]):

(a) \(\varPhi \left( t,t\right) =I_{n}\) \(\forall t\in \mathbb {R}\);

(b) \(\left[ \varPhi \left( t,t_{0}\right) \right] ^{-1}=\varPhi \left( t_{0},t\right) \);

(c) \(\varPhi \left( t_{2},t_{0}\right) =\varPhi \left( t_{2},t_{1}\right) \varPhi \left( t_{1},t_{0}\right) \) \(\forall t_{0},t_{1},t_{2}\in \mathbb {R}\);

(d) \(\frac{\partial }{\partial t}\varPhi \left( t,t_{0}\right) =A\left( t\right) \varPhi \left( t,t_{0}\right) \); and

(e) for every initial condition \(x\left( t_{0}\right) =x_{0}\in \mathbb {R}^{n}\), the solution of (3.1) is \(x\left( t\right) =\varPhi \left( t,t_{0}\right) x_{0}\).
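Property (d) also suggests a practical way to compute \(\varPhi \left( t,t_{0}\right) \): integrate the matrix ODE starting from the identity. The Python sketch below does this with a fixed-step classical Runge–Kutta (RK4) scheme, which is our choice of integrator, and checks properties (a), (b) and (c) numerically. The example matrix `A_example` (a Mathieu-type system with \(\alpha =1\), \(\beta =0.5\)) and the step count are hypothetical.

```python
import math

Matrix = list[list[float]]


def matmul(X: Matrix, Y: Matrix) -> Matrix:
    """Plain matrix product X @ Y."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def add_scaled(X: Matrix, a: float, Y: Matrix) -> Matrix:
    """Return X + a * Y entrywise."""
    return [[x + a * y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def identity(n: int) -> Matrix:
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def state_transition(A, t: float, t0: float, steps: int = 2000) -> Matrix:
    """Approximate Phi(t, t0) by integrating property (d) with classical RK4.

    Starts from Phi(t0, t0) = I (property (a)); t < t0 is allowed (negative step).
    """
    h = (t - t0) / steps
    Phi = identity(len(A(t0)))
    for i in range(steps):
        s = t0 + i * h
        k1 = matmul(A(s), Phi)
        k2 = matmul(A(s + h / 2), add_scaled(Phi, h / 2, k1))
        k3 = matmul(A(s + h / 2), add_scaled(Phi, h / 2, k2))
        k4 = matmul(A(s + h), add_scaled(Phi, h, k3))
        incr = add_scaled(add_scaled(k1, 2.0, k2), 2.0, k3)   # k1 + 2 k2 + 2 k3
        Phi = add_scaled(Phi, h / 6, add_scaled(incr, 1.0, k4))
    return Phi


def max_abs_diff(X: Matrix, Y: Matrix) -> float:
    return max(abs(x - y) for rx, ry in zip(X, Y) for x, y in zip(rx, ry))


def A_example(t: float) -> Matrix:
    """Hypothetical 2*pi-periodic coefficient matrix (Mathieu-type, alpha=1, beta=0.5)."""
    return [[0.0, 1.0], [-(1.0 + 0.5 * math.cos(t)), 0.0]]


if __name__ == "__main__":
    t0, t1, t2 = 0.0, 1.3, 4.0
    lhs = state_transition(A_example, t2, t0)
    rhs = matmul(state_transition(A_example, t2, t1), state_transition(A_example, t1, t0))
    print("property (c) residual:", max_abs_diff(lhs, rhs))
    back = matmul(state_transition(A_example, t1, t0), state_transition(A_example, t0, t1))
    print("property (b) residual:", max_abs_diff(back, identity(2)))
```

The residuals are limited only by the integration error, so refining `steps` makes them smaller.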

Using the state transition matrix previously reviewed, Floquet Theory [8] asserts:

Theorem 3.1

(Floquet) Given the periodic linear system (3.1), its state transition matrix satisfies

\(\varPhi \left( t,t_{0}\right) =P\left( t\right) e^{R\left( t-t_{0}\right) }P^{-1}\left( t_{0}\right) \)  (3.2)

where \(P\left( t+T\right) =P\left( t\right) \) is an \(n\times n\) periodic matrix with the same period T as the system (3.1), and R is a constant \(n\times n\) matrix, not necessarily real even if (3.1) is real (Footnote 5).

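Setting \(t_{0}=0\) in (3.2) and normalizing P so that \(P^{-1}\left( 0\right) =I_{n}\) (this can always be done by replacing \(P\left( t\right) \) with \(P\left( t\right) P^{-1}\left( 0\right) \) and R with \(P\left( 0\right) RP^{-1}\left( 0\right) \)), we obtain the best-known version: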

Corollary 3.1

(Floquet Theorem) Given the system (3.1), for \(t_{0}=0\) its state transition matrix satisfies

\(\varPhi \left( t,0\right) =P\left( t\right) e^{Rt}\)  (3.3)

where \(P\left( t+T\right) =P\left( t\right) \) is an \(n\times n\) periodic matrix with the same period as the system (3.1), and R is a constant \( n\times n\) matrix.

Now, evaluating (3.3) at \(t=T\) and taking into account that \(P\left( t\right) \) is T-periodic, so that \(P\left( T\right) =P\left( 0\right) =I_{n}\), we get

\(\varPhi \left( T,0\right) =e^{RT}\)  (3.4)

This constant matrix is particularly important; it is called the Monodromy Matrix and will be denoted by M.

Remark 3.1

The Monodromy matrix defined by (3.4) depends on the initial time \( t_{0}\), but its spectrum does not. Let us denote \(M_{t_{0}}=\varPhi \left( T+t_{0},t_{0}\right) \); then, using (3.2) with \(t=T+t_{0}\) and the T-periodicity of \(P\left( t\right) \), \(\varPhi \left( T+t_{0},t_{0}\right) =P\left( T+t_{0}\right) e^{RT}P^{-1}(t_{0})=P\left( t_{0}\right) e^{RT}P^{-1}(t_{0})=P\left( t_{0}\right) MP^{-1}(t_{0})\). This shows that \(M_{t_{0}}\) and M are similar. As long as we only use the spectrum of the Monodromy matrix, there is no difference between using M or \(M_{t_{0}}\).
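Remark 3.1 can be checked numerically. For a \(2\times 2\) system, equal trace and determinant mean equal characteristic polynomials, hence equal spectra. The Python sketch below assumes the Mathieu equation with the arbitrary parameters \(\alpha =1.5\), \(\beta =0.8\), uses a hand-written RK4 integrator (our choice), and compares \(M_{t_{0}}\) for several initial times; the helper names are hypothetical.

```python
import math


def hill_monodromy(alpha, beta, q, t0=0.0, T=2 * math.pi, steps=4000):
    """M_{t0} = Phi(t0 + T, t0) for y'' + (alpha + beta q(t)) y = 0, via RK4.

    Column j is the state [y, y'] at t0 + T of the solution started from the
    j-th unit vector at t0 (the procedure described in Remark 3.9).
    """
    def f(t, y, v):
        return v, -(alpha + beta * q(t)) * y

    h = T / steps
    cols = []
    for y, v in ((1.0, 0.0), (0.0, 1.0)):
        for i in range(steps):
            t = t0 + i * h
            k1 = f(t, y, v)
            k2 = f(t + h / 2, y + h / 2 * k1[0], v + h / 2 * k1[1])
            k3 = f(t + h / 2, y + h / 2 * k2[0], v + h / 2 * k2[1])
            k4 = f(t + h, y + h * k3[0], v + h * k3[1])
            y += h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
            v += h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        cols.append((y, v))
    (m11, m21), (m12, m22) = cols
    return [[m11, m12], [m21, m22]]


def trace_det(M):
    """Trace and determinant of a 2x2 matrix (they fix its spectrum)."""
    return M[0][0] + M[1][1], M[0][0] * M[1][1] - M[0][1] * M[1][0]


if __name__ == "__main__":
    # Mathieu equation (q = cos) with hypothetical parameters alpha = 1.5, beta = 0.8.
    for t0 in (0.0, 1.0, 2.5):
        tr, det = trace_det(hill_monodromy(1.5, 0.8, math.cos, t0=t0))
        print(f"t0 = {t0}: tr M = {tr:.10f}, det M = {det:.10f}")
```

The individual entries of \(M_{t_{0}}\) change with \(t_{0}\), while the trace and determinant agree up to integration error.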

Two consequences of the Floquet Theorem are of great importance: Reducibility and Stability.

I.- Reducibility

Given a system \(\dot{x}=A\left( t\right) x\), consider the change of coordinates \(z\left( t\right) =T\left( t\right) x\left( t\right) \), where the square \(n\times n\) matrix \(T\left( t\right) \) satisfies:

(i) \(T\left( t\right) \) is differentiable and invertible \(\forall t\), and

(ii) the matrices \(T\left( t\right) ,\dot{T}\left( t\right) \), and \(T^{-1}\left( t\right) \) are all bounded.

Then the transformation matrix \(T\left( t\right) \) is called a Lyapunov Transformation (Footnote 6).

Roughly speaking, the system keeps its stability properties in either set of coordinates, x or z, if the matrix \(T\left( t\right) \) relating x and z is a Lyapunov Transformation. For properties of Lyapunov Transformations see [1, 8, 22].

Definition 3.1

A time-varying linear system (not necessarily periodic) \(\dot{x}=A\left( t\right) x\) is said to be ‘reducible’ if there exists a Lyapunov Transformation \(T\left( t\right) \) such that \(z\left( t\right) =T\left( t\right) x\left( t\right) \) satisfies

\(\dot{z}=Rz\)

where \(R=T\left( t\right) A\left( t\right) T^{-1}\left( t\right) +\dot{T}\left( t\right) T^{-1}\left( t\right) \) is a constant matrix.

Every T-periodic system (3.1) is reducible; this result is stated formally in the next theorem, where all the symbols refer to the factorization given in (3.3).

Theorem 3.2

Given a T-periodic linear system \(\dot{x}=A\left( t\right) x\), the change of coordinates \(z\left( t\right) =P^{-1}\left( t\right) x\left( t\right) \) transforms the system into the linear time-invariant system

\(\dot{z}=Rz\)

Remark 3.2

It follows that for every linear periodic system there is a linear periodic transformation that converts the original periodic time-varying system into a linear time-invariant system. Unfortunately, this result, while very useful for analysis, is not so useful for synthesis, because the solution is required in order to perform this change of coordinates.
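A scalar example, chosen here purely for illustration, makes Theorem 3.2 concrete. Take \(n=1\), \(T=2\pi \) and \(A\left( t\right) =a+b\cos t\) with arbitrary real constants a and b, and write the Floquet factorization as \(\varPhi \left( t,0\right) =P\left( t\right) e^{Rt}\) with \(P\left( 0\right) =1\):

```latex
\dot{x} = (a + b\cos t)\,x
\quad\Longrightarrow\quad
\varPhi(t,0) = e^{a t + b\sin t}
            = \underbrace{e^{b\sin t}}_{P(t)}\; e^{a t},
\qquad R = a,\quad P(t + 2\pi) = P(t),\quad P(0) = 1,

z(t) = P^{-1}(t)\,x(t) = e^{-b\sin t}\,x(t)
\quad\Longrightarrow\quad
\dot{z} = -b\cos t\; e^{-b\sin t}\,x + e^{-b\sin t}\,(a + b\cos t)\,x = a\,z .
```

Here \(P\left( t\right) \), \(\dot{P}\left( t\right) =b\cos t\,e^{b\sin t}\) and \(P^{-1}\left( t\right) \) are all bounded, so the change of coordinates is a Lyapunov Transformation; note, however, that \(P\left( t\right) \) was read off from the explicit solution, which is precisely the limitation pointed out in Remark 3.2.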

II.- Stability

Recall the definition of stability in the sense of Lyapunov [35] (or the contemporary reference [33]):

Definition 3.2

The zero solution of \(\dot{x}=A\left( t\right) x\) is said to be:

(a) Stable, if \(\forall \varepsilon >0\) \(\exists \delta >0\) such that \( \left\| x\left( t_{0}\right) \right\| <\delta \Longrightarrow \left\| x\left( t\right) \right\| <\varepsilon \) \(\forall t\ge t_{0}\);

(b) Asymptotically stable, if it is stable and \(\lim_{t\rightarrow \infty }x\left( t\right) =\mathbf {0}\).

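For the system (3.1), \(\dot{x}=A\left( t\right) x\), any \(t\ge 0\) may be written as \(t=kT+\tau \), with k a nonnegative integer and \(\tau \in \left[ 0,T\right) \). Taking \(t_{0}=0\) and \(x(0)=x_{0}\), properties (c) and (e), together with the T-periodicity of \(A\left( t\right) \) (which gives \(\varPhi \left( t+T,s+T\right) =\varPhi \left( t,s\right) \)), yield

\(x\left( kT+\tau \right) =\varPhi \left( kT+\tau ,kT\right) \varPhi \left( kT,0\right) x_{0}=\varPhi \left( \tau ,0\right) M^{k}x_{0}\)

Since \(\varPhi \left( \tau ,0\right) \) is bounded for \(\tau \in \left[ 0,T\right] \), the behavior of x(t) as \(t\rightarrow \infty \) is governed by the powers \(M^{k}\), and we can conclude that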

(a) Asymptotic Stability: \(x(t)\rightarrow \mathbf {0}\) if and only if \(\sigma \left( M\right) \subset \overset{\circ }{D}_{1}\triangleq \left\{ z\in \mathbb {C}:\left| z\right| <1\right\} \);

(b) Stability: x(t) remains bounded \(\forall t\ge 0\) if and only if \( \sigma \left( M\right) \subset \overline{D}_{1}\triangleq \left\{ z\in \mathbb {C}:\left| z\right| \le 1\right\} \) and every \(\lambda \in \sigma \left( M\right) \) with \(\left| \lambda \right| =1\) is a simple root of the minimal polynomial of M.
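Criteria (a) and (b) can be applied mechanically once M is known. The following Python sketch does so for a real \(2\times 2\) monodromy matrix, without assuming any Hamiltonian structure; the function names, the floating-point tolerance `tol` and the example matrices are hypothetical.

```python
import cmath


def multipliers_2x2(M):
    """Eigenvalues (multipliers) of a real 2x2 monodromy matrix M."""
    tr = M[0][0] + M[1][1]
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    disc = cmath.sqrt(tr * tr - 4 * det)
    return (tr + disc) / 2, (tr - disc) / 2


def classify(M, tol=1e-9):
    """Apply criteria (a) and (b) to a 2x2 monodromy matrix M.

    `tol` is an assumed floating-point tolerance for |mu| = 1 and mu1 = mu2.
    """
    mu1, mu2 = multipliers_2x2(M)
    rho = max(abs(mu1), abs(mu2))           # spectral radius of M
    if rho < 1 - tol:
        return "asymptotically stable"
    if rho > 1 + tol:
        return "unstable"
    if abs(mu1 - mu2) > tol:
        return "stable"                     # distinct multipliers: simple roots of the minimal polynomial
    # Double multiplier on the unit circle (necessarily +1 or -1 for a real 2x2 M):
    # it is a simple root of the minimal polynomial only if M is a scalar matrix.
    mu = mu1.real
    is_scalar = max(abs(M[0][0] - mu), abs(M[1][1] - mu), abs(M[0][1]), abs(M[1][0])) < tol
    return "stable" if is_scalar else "unstable"


if __name__ == "__main__":
    examples = {
        "contracting": [[0.5, 0.1], [0.0, 0.3]],
        "saddle": [[2.0, 0.0], [0.0, 0.5]],
        "rotation": [[0.6, 0.8], [-0.8, 0.6]],
        "Jordan block at +1": [[1.0, 1.0], [0.0, 1.0]],
        "minus identity": [[-1.0, 0.0], [0.0, -1.0]],
    }
    for name, M in examples.items():
        print(f"{name:>20}: {classify(M)}")
```

The Jordan-block example shows why criterion (b) needs the minimal polynomial: its only multiplier lies on the unit circle, yet \(M^{k}\) grows linearly in k.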

Remark 3.3

Notice that both properties of linear T-periodic systems, reducibility and stability, follow from the Floquet factorization (3.3).

3.2.2 Hamiltonian Systems

Given a differentiable function \(\mathcal {H}\left( q,p\right) \) of the vectors q and p, called the Hamiltonian function, the system of equations

\(\dot{q}=\dfrac{\partial \mathcal {H}}{\partial p},\qquad \dot{p}=-\dfrac{\partial \mathcal {H}}{\partial q}\)  (3.7)

is called a Hamiltonian System, also known in the Russian literature as a Canonical System. The Hamiltonian function represents the energy of the system; when \(\mathcal {H}\left( q,p\right) \) does not depend explicitly on time, this quantity is preserved along the solutions of (3.7), and the system is called Conservative. This guarantees that the system (3.7) has a first integral [3, 14, 39]. Hamiltonian systems (3.7) are always of even order, namely 2n if \(q,p\in \mathbb {R}^{n}\). For further properties see [3, 39].

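We shall also consider Hamiltonian functions that depend on time, \(\mathcal {H}\left( t,q,p\right) \), in which case the Hamiltonian system is no longer conservative. Moreover, we shall only consider linear Hamiltonian systems, for which the Hamiltonian function is a homogeneous quadratic form,

\(\mathcal {H}\left( t,q,p\right) =\frac{1}{2}\left[ \begin{array}{c} q \\ p \end{array} \right] ^{T}H\left( t\right) \left[ \begin{array}{c} q \\ p \end{array} \right] \)

where \(H\left( t\right) \) is a \(2n\times 2n\) symmetric matrix. In this case the Hamiltonian System (3.7) may be expressed as

\(\dfrac{d}{dt}\left[ \begin{array}{c} q \\ p \end{array} \right] =JH\left( t\right) \left[ \begin{array}{c} q \\ p \end{array} \right] \)  (3.9)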

where \(J=\left[ \begin{array}{cc} 0 &{} I_{n} \\ -I_{n} &{} 0 \end{array} \right] \). Notice that \(J^{-1}=J^{T}=-J\) and \(J^{2}=-I_{2n}\).
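The identities \(J^{-1}=J^{T}=-J\) and \(J^{2}=-I_{2n}\) are easy to confirm by direct computation. The Python sketch below checks them exactly, with integer arithmetic, for small n; the helper names are our own.

```python
def make_J(n):
    """J = [[0, I_n], [-I_n, 0]] as a 2n x 2n integer matrix."""
    J = [[0] * (2 * n) for _ in range(2 * n)]
    for i in range(n):
        J[i][n + i] = 1
        J[n + i][i] = -1
    return J


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def transpose(X):
    return [list(row) for row in zip(*X)]


def scaled(c, X):
    return [[c * x for x in row] for row in X]


def identity(m):
    return [[int(i == j) for j in range(m)] for i in range(m)]


if __name__ == "__main__":
    for n in (1, 2, 3):
        J, Id = make_J(n), identity(2 * n)
        assert transpose(J) == scaled(-1, J)     # J^T = -J
        assert matmul(J, transpose(J)) == Id     # J J^T = I, hence J^{-1} = J^T
        assert matmul(J, J) == scaled(-1, Id)    # J^2 = -I_{2n}
    print("J^{-1} = J^T = -J and J^2 = -I_{2n} verified for n = 1, 2, 3")
```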

Finally, if the linear Hamiltonian system is T-periodic, then \(H\left( t+T\right) =H\left( t\right) =H^{T}\left( t\right) \). We assume that this relation holds from now on.

Definition 3.3

([39]) An even-order matrix \(A\in \mathbb {R}^{2n\times 2n}\) is called a Hamiltonian Matrix if \(A=JH\), where H is a symmetric matrix; equivalently, \(JA+A^{T}J=0\).

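From \(JA+A^{T}J=0\) we get \(A=J^{-1}\left( -A^{T}\right) J\), i.e., A is similar to \(-A^{T}\); therefore they have the same spectrum:

\(\sigma \left( A\right) =\sigma \left( -A^{T}\right) =-\sigma \left( A\right) \)

Since A is real, its spectrum is also symmetric with respect to the real axis. We have thus proven the key property of constant Hamiltonian matrices: their spectrum is symmetric with respect to the imaginary axis.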

Theorem 3.3

Let \(A\in \mathbb {R}^{2n\times 2n}\) be a Hamiltonian matrix; then \(\lambda \in \sigma \left( A\right) \) \(\Longrightarrow \) \(-\lambda \in \sigma \left( A\right) \) (Footnote 7). Equivalently, the characteristic polynomial of a Hamiltonian matrix contains only even powers, i.e., it is an even polynomial.

Remark 3.4

Notice also that the trace of a Hamiltonian matrix is always zero.
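Theorem 3.3 and Remark 3.4 can be verified exactly on an example. The Python sketch below builds \(A=JH\) for a hypothetical integer symmetric H with \(n=2\) and computes \(\det \left( \lambda I-A\right) \) with the Faddeev–LeVerrier algorithm (our choice) in exact rational arithmetic, so the odd-power coefficients, including \(-tr\left( A\right) \), come out exactly zero.

```python
from fractions import Fraction


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def make_J(n):
    """J = [[0, I_n], [-I_n, 0]] of order 2n."""
    J = [[0] * (2 * n) for _ in range(2 * n)]
    for i in range(n):
        J[i][n + i], J[n + i][i] = 1, -1
    return J


def char_poly(A):
    """Coefficients [c_0, ..., c_m] of det(lambda I - A) by Faddeev-LeVerrier (exact)."""
    m = len(A)
    A = [[Fraction(x) for x in row] for row in A]
    c = [Fraction(0)] * m + [Fraction(1)]          # c_m = 1
    Mk = [[Fraction(0)] * m for _ in range(m)]     # M_0 = 0
    for k in range(1, m + 1):
        AM = matmul(A, Mk)
        Mk = [[AM[i][j] + (c[m - k + 1] if i == j else 0) for j in range(m)] for i in range(m)]
        AMk = matmul(A, Mk)
        c[m - k] = -sum(AMk[i][i] for i in range(m)) / k
    return c


if __name__ == "__main__":
    # Hypothetical symmetric H with integer entries, so all arithmetic is exact.
    H = [[2, 1, 0, 3],
         [1, -1, 4, 0],
         [0, 4, 5, -2],
         [3, 0, -2, 1]]
    A = matmul(make_J(2), H)                       # a 4x4 Hamiltonian matrix
    c = char_poly(A)
    print("coefficients c_0..c_4:", [str(x) for x in c])
    print("odd-power coefficients c_1, c_3:", c[1], c[3])
```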

Hamiltonian matrices are closely related to another kind of matrices, the symplectic ones.

Definition 3.4

([39]) An even-order real matrix \(M\in \mathbb {R}^{2n\times 2n}\) is called a Symplectic Matrix, if \(M^{T}JM=J\).

The determinant of a symplectic matrix is \(+1\); moreover, the symplectic matrices of a given order form a Group [39]. The key property of constant symplectic matrices is that their spectrum is symmetric with respect to the unit circle. This is easily proven from the definition: since a symplectic matrix is always invertible, \( M^{T}=JM^{-1}J^{-1}\), so \(M^{T}\) is similar to \(M^{-1}\) and \(\sigma \left( M^{-1}\right) =\sigma \left( M^{T}\right) =\sigma \left( M\right) \); hence, if \(\lambda \in \sigma \left( M\right) \), then \(\lambda ^{-1}\in \sigma \left( M\right) \). Let us state this fact formally in the next theorem:

Theorem 3.4

Let \(M\in \mathbb {R}^{2n\times 2n}\) be a symplectic matrix; then \(\lambda \in \sigma \left( M\right) \) \(\Longrightarrow \) \(\lambda ^{-1}\in \sigma \left( M\right) \). Equivalently, the characteristic polynomial of a symplectic matrix is self-reciprocal [39] or palindromic [31], i.e., \(p_{M}\left( \lambda \right) =\lambda ^{2n}p_{M}\left( \lambda ^{-1}\right) \).
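The palindromic property can be checked exactly in the same way. The Python sketch below assumes a hypothetical \(4\times 4\) symplectic matrix built as a product of the block matrices \(\left[ \begin{array}{cc} I & S_{1} \\ 0 & I \end{array}\right] \), \(\left[ \begin{array}{cc} I & 0 \\ S_{2} & I \end{array}\right] \) (with \(S_{1},S_{2}\) symmetric) and \(\left[ \begin{array}{cc} A & 0 \\ 0 & A^{-T} \end{array}\right] \) (with A invertible), each of which satisfies \(M^{T}JM=J\); by the group property, so does the product. The Faddeev–LeVerrier routine is our choice for computing the characteristic polynomial.

```python
from fractions import Fraction


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def transpose(X):
    return [list(row) for row in zip(*X)]


def make_J(n):
    """J = [[0, I_n], [-I_n, 0]] of order 2n."""
    J = [[0] * (2 * n) for _ in range(2 * n)]
    for i in range(n):
        J[i][n + i], J[n + i][i] = 1, -1
    return J


def char_poly(A):
    """Coefficients [c_0, ..., c_m] of det(lambda I - A) by Faddeev-LeVerrier (exact)."""
    m = len(A)
    A = [[Fraction(x) for x in row] for row in A]
    c = [Fraction(0)] * m + [Fraction(1)]
    Mk = [[Fraction(0)] * m for _ in range(m)]
    for k in range(1, m + 1):
        AM = matmul(A, Mk)
        Mk = [[AM[i][j] + (c[m - k + 1] if i == j else 0) for j in range(m)] for i in range(m)]
        AMk = matmul(A, Mk)
        c[m - k] = -sum(AMk[i][i] for i in range(m)) / k
    return c


def block(A, B, C, D):
    """Assemble the 4x4 matrix [[A, B], [C, D]] from 2x2 blocks."""
    return [ra + rb for ra, rb in zip(A, B)] + [rc + rd for rc, rd in zip(C, D)]


I2, Z2 = [[1, 0], [0, 1]], [[0, 0], [0, 0]]
S1, S2 = [[1, 2], [2, 0]], [[3, -1], [-1, 1]]          # hypothetical symmetric blocks
A2, A2_invT = [[2, 1], [1, 1]], [[1, -1], [-1, 2]]     # det A2 = 1, A2^{-T} = A2^{-1}
M = matmul(matmul(block(I2, S1, Z2, I2), block(I2, Z2, S2, I2)), block(A2, Z2, Z2, A2_invT))

if __name__ == "__main__":
    J = make_J(2)
    print("M^T J M == J:", matmul(matmul(transpose(M), J), M) == J)
    print("char. poly coefficients c_0..c_4:", [str(x) for x in char_poly(M)])
```

The printed coefficient list reads the same forwards and backwards, which is the self-reciprocal property \(p_{M}\left( \lambda \right) =\lambda ^{4}p_{M}\left( \lambda ^{-1}\right) \).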

The following property relates Hamiltonian matrices to symplectic ones in a given Hamiltonian system:

Theorem 3.5

Let \(\dfrac{d}{dt}\left[ \begin{array}{c} q \\ p \end{array} \right] =JH\left( t\right) \left[ \begin{array}{c} q \\ p \end{array} \right] \), with \(H\left( t\right) =H^{T}\left( t\right) \), be a linear time-varying (Footnote 8) Hamiltonian system; then its state transition matrix is a symplectic matrix.
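Theorem 3.5 can be observed numerically for a 2-DOF example. The Python sketch below assumes a hypothetical \(2\pi \)-periodic matrix \(H\left( t\right) =\mathrm{diag}\left( K\left( t\right) ,I_{2}\right) \) with a symmetric \(2\times 2\) block \(K\left( t\right) \), integrates \(\dot{\varPhi }=JH\left( t\right) \varPhi \) with RK4 and measures how far \(\varPhi ^{T}J\varPhi \) is from J. RK4 is not a symplectic integrator, so the residual is small but not exactly zero; it shrinks as the step is refined.

```python
import math

# J = [[0, I_2], [-I_2, 0]] for n = 2 degrees of freedom.
J = [[0, 0, 1, 0], [0, 0, 0, 1], [-1, 0, 0, 0], [0, -1, 0, 0]]


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def transpose(X):
    return [list(row) for row in zip(*X)]


def add_scaled(X, a, Y):
    """Return X + a * Y entrywise."""
    return [[x + a * y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def H_example(t):
    """Hypothetical 2*pi-periodic symmetric H(t) = blockdiag(K(t), I_2)."""
    k11 = 1.0 + 0.4 * math.cos(t)
    k22 = 2.5 + 0.3 * math.cos(t)
    k12 = 0.2 * math.sin(t)
    return [[k11, k12, 0.0, 0.0], [k12, k22, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]


def A_of(t):
    """Coefficient matrix J H(t) of the linear Hamiltonian system (3.9)."""
    return matmul(J, H_example(t))


def state_transition(t, steps=2000):
    """Phi(t, 0) via classical RK4 on dPhi/dt = J H(t) Phi."""
    h = t / steps
    Phi = [[float(i == j) for j in range(4)] for i in range(4)]
    for i in range(steps):
        s = i * h
        k1 = matmul(A_of(s), Phi)
        k2 = matmul(A_of(s + h / 2), add_scaled(Phi, h / 2, k1))
        k3 = matmul(A_of(s + h / 2), add_scaled(Phi, h / 2, k2))
        k4 = matmul(A_of(s + h), add_scaled(Phi, h, k3))
        incr = add_scaled(add_scaled(add_scaled(k1, 2.0, k2), 2.0, k3), 1.0, k4)
        Phi = add_scaled(Phi, h / 6, incr)
    return Phi


def symplectic_residual(Phi):
    """Largest entry of |Phi^T J Phi - J|."""
    R = matmul(matmul(transpose(Phi), J), Phi)
    return max(abs(R[i][j] - J[i][j]) for i in range(4) for j in range(4))


if __name__ == "__main__":
    Phi_T = state_transition(2 * math.pi)        # monodromy matrix of this example
    print(f"max |Phi^T J Phi - J| at t = 2*pi: {symplectic_residual(Phi_T):.2e}")
```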

Remark 3.5

A linear time-invariant Hamiltonian system cannot be asymptotically stable, because of the symmetry of its eigenvalues; this property extends to all Hamiltonian systems, time-invariant or not, linear or not.

Remark 3.6

Hamiltonian systems enjoy another important property. For an arbitrary 2n-dimensional system, \(2n-1\) independent first integrals are required in order to arrive at a first-order one-dimensional ODE, which may then be integrated by quadratures to finally integrate the whole system. The Liouville Theorem ensures that a 2n-dimensional Hamiltonian system can be integrated if only n independent first integrals (in involution) are known [3, 39]: only half of the work!

3.3 Hill Equation: The Scalar Case

In this section we present the main properties of the scalar Hill equation, namely

\(\ddot{y}+\left( \alpha +\beta q\left( t\right) \right) y=0\)  (3.10)

where \(q\left( t\right) \) is T-periodic (Footnote 9). The parameter \(\alpha \) represents the square of the natural frequency when \(\beta =0\); the parameter \(\beta \) is the amplitude of the parametric excitation, and the periodic function \(q\left( t\right) \) is called the excitation function. For comparison, we use three different excitation functions: (a) \(q\left( t\right) =\cos t\), in which case the equation is called the Mathieu equation; (b) \(q\left( t\right) =sign\left( \cos t\right) \), in which case the equation is called the Meissner equation; and (c) \(q\left( t\right) =\cos t+\cos 2t\), which was originally used by Lyapunov [35].

Notice that a linear second-order differential equation with periodic coefficients,

\(\ddot{y}+a\left( t\right) \dot{y}+b\left( t\right) y=0\)

where a(t) and b(t) are T-periodic, can always be transformed into the form (3.10) by the substitution \(y=e^{-\frac{1}{2}\int a\left( \tau \right) d\tau }z\); therefore there is no loss of generality in considering (3.10). Note also that this is not a Lyapunov Transformation in general [27].
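The algebra behind this substitution is short. Assuming a(t) is differentiable, and writing \(E\left( t\right) =e^{-\frac{1}{2}\int a\left( \tau \right) d\tau }\) (so that \(\dot{E}=-\frac{1}{2}aE\)) and \(y=Ez\), one obtains:

```latex
\dot{y}  = E\left(\dot{z} - \tfrac{1}{2}a z\right), \qquad
\ddot{y} = E\left(\ddot{z} - a\dot{z} - \tfrac{1}{2}\dot{a} z + \tfrac{1}{4}a^{2} z\right),

\ddot{y} + a\dot{y} + b y
  = E\left[\ddot{z} + \left(b - \tfrac{1}{2}\dot{a} - \tfrac{1}{4}a^{2}\right) z\right] = 0
\;\Longleftrightarrow\;
\ddot{z} + Q(t)\, z = 0, \qquad Q(t) = b(t) - \tfrac{1}{2}\dot{a}(t) - \tfrac{1}{4}a^{2}(t).
```

The coefficient Q(t) is T-periodic, so z obeys an equation of Hill type; one natural way (our choice) to match the parametrization of (3.10) is to take \(\alpha \) as the mean value of Q over a period and \(\beta q\left( t\right) =Q\left( t\right) -\alpha \). Moreover, when a(t) has nonzero mean, \(\int _{0}^{t}a\left( \tau \right) d\tau \) grows linearly in t, so E(t) or \(E^{-1}\left( t\right) \) is unbounded; this is why the substitution is not a Lyapunov Transformation in general.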

If we define the 2-dimensional vector \(x\triangleq \left[ \begin{array}{c} y \\ \dot{y} \end{array}\right] \), Eq. (3.10) may be rewritten as

\(\dot{x}=\left[ \begin{array}{cc} 0 & 1 \\ -\left( \alpha +\beta q\left( t\right) \right) & 0 \end{array} \right] x=JH\left( t\right) x,\qquad H\left( t\right) =\left[ \begin{array}{cc} \alpha +\beta q\left( t\right) & 0 \\ 0 & 1 \end{array} \right] \)  (3.12)

where J is as in Eq. (3.9) for \(n=1\), and \(H\left( t+T\right) =H\left( t\right) =H^{T}\left( t\right) \) satisfies the condition for linear Hamiltonian systems. Hence the state transition matrix of Hill’s equation in the form (3.12) is a symplectic matrix for all t, and in particular its Monodromy matrix M is symplectic. Since \(\det M=1\), the characteristic polynomial \(p_{M}\left( \mu \right) \) of the Monodromy Matrix M has the form

\(p_{M}\left( \mu \right) =\mu ^{2}-tr\left( M\right) \mu +1\)

Definition 3.5

The eigenvalues of the Monodromy matrix M, equivalently the roots of its characteristic polynomial \(p_{M}\left( \mu \right) \), are called the multipliers of (3.12) or (3.10) and are denoted by \(\mu \). For Hamiltonian systems they are symmetric with respect to the unit circle.

Definition 3.6

Associated with every multiplier \(\mu \) there are infinitely many numbers \(\lambda \), called characteristic exponents, related to the multiplier by \(\mu =e^{\lambda T}\); they differ from one another by integer multiples of \(2\pi i/T\).

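The roots of \(p_{M}\left( \mu \right) \), i.e., the multipliers of (3.10), are

\(\mu _{1,2}=\dfrac{tr\left( M\right) \pm \sqrt{tr^{2}\left( M\right) -4}}{2}\)

and three cases arise: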

- If \(tr^{2}\left( M\right) <4\), the multipliers are complex conjugates and their modulus is \(\left| \mu _{1,2}\right| ^{2}=\dfrac{ tr^{2}\left( M\right) }{4}+\dfrac{4-tr^{2}\left( M\right) }{4}=1\). The two multipliers are different, which implies that the minimal and the characteristic polynomials of M are the same. This case corresponds to a stable system.

- If \(tr^{2}\left( M\right) >4\), the multipliers are real and reciprocal, \(\mu _{1}=\left[ tr\left( M\right) +\sqrt{tr^{2}\left( M\right) -4}\right] /2\) and \(\mu _{2}=\left[ tr\left( M\right) -\sqrt{tr^{2}\left( M\right) -4}\right] /2\). Obviously \(\mu _{1}+\mu _{2}=tr\left( M\right) \) and \(\mu _{1}\mu _{2}=1\), so \(\mu _{2}=\mu _{1}^{-1}\). Since the two multipliers are distinct and their product is one, one of them has modulus greater than one, so this case corresponds to instability.

- If \(tr^{2}\left( M\right) =4\), the multipliers are real and both equal to \(+1\) if \(tr\left( M\right) =+2\), or both equal to \(-1\) if \(tr\left( M\right) =-2\). In this case the Hill equation is stable if and only if M is a scalar matrix, \(M=\pm I_{2}\); otherwise the Hill equation is unstable (Footnote 10).
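Because \(\det M=1\), the three cases above amount to a test on \(tr\left( M\right) \) alone. The Python sketch below encodes them; the tolerance `tol` used to decide \(\left| tr\left( M\right) \right| =2\) in floating point is our own choice, and the example matrices (all with determinant one) are hypothetical.

```python
import math


def hill_multipliers(M, tol=1e-9):
    """Multipliers and stability verdict for Hill's equation from its monodromy M (det M = 1).

    Implements the three trace cases; `tol` is an assumed tolerance for |tr M| = 2.
    """
    tr = M[0][0] + M[1][1]
    if abs(tr) < 2 - tol:                      # complex conjugate pair on the unit circle
        theta = math.acos(tr / 2)
        mu = complex(math.cos(theta), math.sin(theta))
        return (mu, mu.conjugate()), "stable"
    if abs(tr) > 2 + tol:                      # real reciprocal pair, one with |mu| > 1
        root = math.sqrt(tr * tr - 4)
        return ((tr + root) / 2, (tr - root) / 2), "unstable"
    mu = math.copysign(1.0, tr)                # double multiplier at +1 or -1
    scalar = max(abs(M[0][0] - mu), abs(M[1][1] - mu), abs(M[0][1]), abs(M[1][0])) < tol
    return (mu, mu), ("stable" if scalar else "unstable")


if __name__ == "__main__":
    for M in ([[2.0, 1.0], [1.0, 1.0]],
              [[math.cos(1.0), math.sin(1.0)], [-math.sin(1.0), math.cos(1.0)]],
              [[-1.0, 0.5], [0.0, -1.0]],
              [[-1.0, 0.0], [0.0, -1.0]]):
        print(hill_multipliers(M))
```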

The boundaries between stability and instability correspond to this last case, i.e., to \(\left| tr\left( M\right) \right| =2\). Clearly M depends on the parameters \(\alpha ,\beta \). It is customary [36] (Footnote 11) to define \(\phi \left( \alpha ,\beta \right) \triangleq tr\left( M\right) \). Hochstadt [29] was the first to recognize the important properties of \(\phi \left( \alpha ,\beta \right) \).

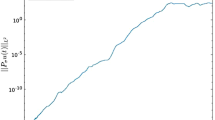

Theorem 3.6

(Hochstadt) For any fixed \(\beta \), the function \(\phi \left( \alpha ,\beta \right) \) is an entire function of \(\alpha \) of order \(\frac{1}{2}\). The equations \(\phi \left( \alpha ,\beta \right) \pm 2=0\) each have an infinite number of roots. For any \(\beta _{0}\) and for \(\alpha _{0}\) sufficiently negative, \(\phi \left( \alpha _{0},\beta _{0}\right) >2\); therefore, as \(\alpha \) increases, the first root to appear is that of \(\phi \left( \alpha ,\beta \right) -2=0\), which corresponds to a double multiplier at \(+1\); after it come two roots (not necessarily different) with multipliers at \(-1\), then two roots with multipliers at \(+1\), and so on, up to infinity.

Due to the Hochstadt Theorem, there are two infinite sequences:

The first sequence corresponds to roots of \(\phi \left( \alpha ,\beta \right) +2=0\), and the second sequence corresponds to \(\phi \left( \alpha ,\beta \right) -2=0\). Moreover they interlace as:

This fact is illustrated in Fig. 3.1.

Remark 3.7

Notice that for the values for which \(\phi \left( \alpha ,\beta _{0}\right) \in \left[ -2,2\right] \) the multipliers lie on the unit circle, and for the values for which \(\left| \phi \left( \alpha ,\beta _{0}\right) \right| >2\) the multipliers are either both positive or both negative, one being the reciprocal of the other. Also, if for some value \(\alpha =\alpha _{1}\) both multipliers lie at \(-1\) and, as \(\alpha \) increases up to \(\alpha =\alpha _{2}\), both multipliers reach \(+1\), then the multipliers must travel from \(-1\) to \(+1\) along arcs of the unit circle; they cannot go from \(-1\) to \(+1\) along the real line, because passing through 0 would violate the nonsingularity of the Monodromy matrix. This property extends to any number of degrees of freedom as long as the system is Hamiltonian. Moreover, even in the non-Hamiltonian case the Monodromy matrix is always nonsingular, because it is the state transition matrix evaluated at the end of a period.

3.3.1 Multipliers of Hamiltonian Systems

Consider, in general, a linear Hamiltonian system

\(\dot{x}=JH\left( t\right) x\)  (3.17)

where J was defined in (3.9) and \(H\left( t\right) \in \mathbb {R} ^{2n\times 2n}\) is a real symmetric matrix. Since a Hamiltonian system cannot be asymptotically stable, the accepted definition of (weak) stability for a Hamiltonian system is that all its solutions are bounded on \(\left( -\infty ,+\infty \right) \). The following definition is also required:

Definition 3.7

The Hamiltonian system (3.17) is strongly stable if it is stable (bounded) and there exists an \(\varepsilon >0\) such that, for every \(2n\times 2n\) symmetric matrix \(\widetilde{H}\left( t\right) \) with \(\left\| \widetilde{H}\left( t\right) -H\left( t\right) \right\| <\varepsilon \), all the Hamiltonian systems

\(\dot{x}=J\widetilde{H}\left( t\right) x\)

are stable.

The condition for strong stability of Hamiltonian systems was formulated more than 50 years ago: the sufficiency by Krein [34] and the necessity by Gelfand and Lidskii [24]. To state it, one more definition is required, namely an indefinite inner product associated with the symplectic geometry of the Hamiltonian system [48].

Definition 3.8

Given the complex vector space \(\mathbb {C}^{2n}\) with the standard inner product \(\left( x,y\right) \triangleq y^{*}x\), and any Hermitian nonsingular matrix \(G\in \mathbb {C}^{2n\times 2n}\), it is possible to define the indefinite inner product as \(\left\langle x,y\right\rangle \triangleq \left( Gx,y\right) \). We are going to use \(G=iJ\) (Footnote 12).

For any simple multiplier \(\mu \) on the unit circle, its associated eigenvector \( v_{\mu }\) satisfies \(\left\langle v_{\mu },v_{\mu }\right\rangle \ne 0\); if \(\left\langle v_{\mu },v_{\mu }\right\rangle >0\), \(\mu \) is called a Multiplier of the First Kind, and if \(\left\langle v_{\mu },v_{\mu }\right\rangle <0\), \(\mu \) is called a Multiplier of the Second Kind [48]. If \(\left| \mu \right| \ne 1\), then \(\left\langle v_{\mu },v_{\mu }\right\rangle =0\); however, if we extend the definition by calling Multipliers of the First Kind those with \(\left| \mu \right| <1\), and Multipliers of the Second Kind those with \(\left| \mu \right| >1\), then every multiplier is of the first or the second kind, and moreover, for a Hamiltonian system of dimension 2n, n multipliers are of the first kind and the remaining n multipliers are of the second kind (Footnote 13).
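For \(n=1\) the kind of a multiplier can be computed directly. The Python sketch below reads Definition 3.8 with the Hermitian matrix \(iJ\), complex vectors and \(\left( x,y\right) =y^{*}x\) (our interpretation), so that \(\left\langle v,v\right\rangle =-2\,\mathrm{Im}\left( \bar{v}_{1}v_{2}\right) \). The example monodromy matrix, that of \(\ddot{y}+\omega ^{2}y=0\) over \(\left[ 0,2\pi \right] \), and the function names are hypothetical.

```python
import cmath
import math


def krein_form(v):
    """<v, v> = (G v, v) = v^* G v with G = iJ, J = [[0, 1], [-1, 0]] (n = 1)."""
    v1, v2 = v
    Gv1, Gv2 = 1j * v2, -1j * v1                 # G v = i J v
    value = v1.conjugate() * Gv1 + v2.conjugate() * Gv2
    return value.real                            # G is Hermitian, so the imaginary part is ~0


def multipliers_with_kind(M):
    """Multipliers of a 2x2 symplectic M with |tr M| < 2, each tagged with its kind."""
    (a, b), (_, d) = M
    tr = a + d
    disc = cmath.sqrt(tr * tr - 4)               # purely imaginary when |tr M| < 2
    out = []
    for mu in ((tr + disc) / 2, (tr - disc) / 2):
        v = (complex(b), mu - a)                 # eigenvector: (a - mu) v1 + b v2 = 0
        out.append((mu, "first" if krein_form(v) > 0 else "second"))
    return out


def oscillator_monodromy(omega, T=2 * math.pi):
    """Monodromy matrix of y'' + omega^2 y = 0 over [0, T]."""
    c, s = math.cos(omega * T), math.sin(omega * T)
    return [[c, s / omega], [-omega * s, c]]


if __name__ == "__main__":
    for mu, kind in multipliers_with_kind(oscillator_monodromy(0.3)):
        print(f"mu = {mu:.4f}, |mu| = {abs(mu):.6f}, kind: {kind}")
```

As the definition requires for \(n=1\), one multiplier of the pair comes out of the first kind and the other of the second kind.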

Remark 3.8

The key property, due to Krein, is that the multipliers, together with their kind, depend continuously on the Hamiltonian function, in our case on the symmetric matrix \(H\left( t\right) \) [34, 48].

By the last remark, if two multipliers of the same kind coincide on the unit circle, they cannot leave the unit circle: after leaving it, one of them would lie inside the circle (first kind) and the other outside (second kind), which would violate the continuity of the kind of multipliers.

Finally, to formulate the Gelfand–Lidskii–Krein Theorem, we require this last definition:

Definition 3.9

A multiplier \(\mu \) with algebraic multiplicity r is said to be definite, of the first or the second kind, if \(\left\langle q,q\right\rangle \) has the same sign for all nonzero q in the eigenspace associated with \(\mu \).

Theorem 3.7

(Krein–Gelfand–Lidskii) The linear periodic Hamiltonian system \(\dot{x}=JH\left( t\right) x\) is strongly stable if and only if all its multipliers lie on the unit circle and those with algebraic multiplicity greater than one are definite, i.e., all of the same kind.

3.3.2 Arnold’s Tongues

If we mark with some color the points of the \(\alpha -\beta \) plane where the system is unstable, which correspond to \(\left| tr\left( M\right) \right| >2\), and leave blank the points of stability, which correspond to \(\left| tr\left( M\right) \right| <2\), the resulting diagram is called the Ince-Strutt diagram. Figure 3.2 shows the Ince-Strutt diagram for the Mathieu equation.

Remark 3.9

We emphasize that the Ince-Strutt diagram was obtained numerically: each of the chosen intervals, \(\alpha \in \left[ -1,10\right] \) and \(\beta \in \left[ 0,10\right] \), was gridded with 1000 points. At each grid point, the differential equation was integrated over the time interval \(\left[ 0,2\pi \right] \) with the initial condition \(\left[ \begin{array}{cc} 1&0 \end{array} \right] ^{T}\), giving the solution \(\mathbf {x}_{1}\left( t\right) \), and with the initial condition \(\left[ \begin{array}{cc} 0&1 \end{array} \right] ^{T}\), giving another solution \(\mathbf {x}_{2}\left( t\right) \); finally, the Monodromy matrix is \(M= \left[ \begin{array}{cc} \mathbf {x}_{1}\left( 2\pi \right)&\mathbf {x}_{2}\left( 2\pi \right) \end{array} \right] \).
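The procedure of Remark 3.9 is easy to reproduce on a small scale. The Python sketch below follows it for the Mathieu equation (\(q\left( t\right) =\cos t\)) with a hand-written RK4 integrator, but on a deliberately coarse grid so that it runs in a few seconds; the grid sizes, the step count and the text rendering (`#` where \(\left| tr\left( M\right) \right| >2\)) are our own choices, not the settings used for Fig. 3.2.

```python
import math


def hill_monodromy(alpha, beta, q=math.cos, T=2 * math.pi, steps=400):
    """Monodromy matrix of y'' + (alpha + beta q(t)) y = 0, following Remark 3.9 (RK4)."""
    def f(t, y, v):
        return v, -(alpha + beta * q(t)) * y

    h = T / steps
    cols = []
    for y, v in ((1.0, 0.0), (0.0, 1.0)):        # initial conditions [1 0]^T and [0 1]^T
        for i in range(steps):
            t = i * h
            k1 = f(t, y, v)
            k2 = f(t + h / 2, y + h / 2 * k1[0], v + h / 2 * k1[1])
            k3 = f(t + h / 2, y + h / 2 * k2[0], v + h / 2 * k2[1])
            k4 = f(t + h, y + h * k3[0], v + h * k3[1])
            y += h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
            v += h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        cols.append((y, v))                       # x_j(2*pi)
    return [[cols[0][0], cols[1][0]], [cols[0][1], cols[1][1]]]


def linspace(lo, hi, n):
    return [lo + (hi - lo) * i / (n - 1) for i in range(n)]


def ince_strutt_rows(alphas, betas, q=math.cos):
    """One text row per beta (largest first): '#' where |tr M| > 2 (unstable), '.' otherwise."""
    rows = []
    for beta in sorted(betas, reverse=True):
        marks = []
        for alpha in alphas:
            M = hill_monodromy(alpha, beta, q)
            marks.append("#" if abs(M[0][0] + M[1][1]) > 2 else ".")
        rows.append((beta, "".join(marks)))
    return rows


if __name__ == "__main__":
    # Coarse grid over the same ranges as the text (which uses 1000 x 1000 points).
    for beta, row in ince_strutt_rows(linspace(-1.0, 10.0, 34), linspace(0.0, 10.0, 6)):
        print(f"beta = {beta:4.1f} | {row}")
```

Passing another excitation function as `q` (for instance \(\cos t+\cos 2t\)) produces the corresponding coarse diagram with the same code.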

3.3.3 Meissner Equation

The exceptional cases in which an analytic solution of the scalar Hill equation may be obtained [40] are: (a) \(q\left( t\right) \) a train of impulses, (b) \(q\left( t\right) \) piecewise constant, (c) \(q\left( t\right) \) piecewise linear, and (d) \(q\left( t\right) \) an elliptic function (Footnote 14). Case (b) with \(q\left( t\right) =sign\left( \cos t\right) \), which corresponds to the Meissner Equation, is particularly simple, and its Monodromy matrix is easy to obtain analytically; full details are in [44, pp. 276–278]. For \(\alpha >\beta \ge 0\), we have

\(M=\left( \begin{array}{cc} \cos \left( \pi \sqrt{\alpha -\beta }\right) & \dfrac{\sin \left( \pi \sqrt{\alpha -\beta }\right) }{\sqrt{\alpha -\beta }} \\ -\sqrt{\alpha -\beta }\,\sin \left( \pi \sqrt{\alpha -\beta }\right) & \cos \left( \pi \sqrt{\alpha -\beta }\right) \end{array} \right) \left( \begin{array}{cc} \cos \left( \pi \sqrt{\alpha +\beta }\right) & \dfrac{\sin \left( \pi \sqrt{\alpha +\beta }\right) }{\sqrt{\alpha +\beta }} \\ -\sqrt{\alpha +\beta }\,\sin \left( \pi \sqrt{\alpha +\beta }\right) & \cos \left( \pi \sqrt{\alpha +\beta }\right) \end{array} \right) \)

whose entries \(M=\left( m_{ij}\right) \) are

\(m_{11}=\cos \pi \sqrt{\alpha +\beta }\cos \pi \sqrt{\alpha -\beta }-\dfrac{\sqrt{\alpha +\beta }}{\sqrt{\alpha -\beta }}\sin \pi \sqrt{\alpha +\beta }\sin \pi \sqrt{\alpha -\beta }\)

\(m_{12}=\dfrac{1}{\sqrt{\alpha +\beta }}\sin \pi \sqrt{\alpha +\beta }\cos \pi \sqrt{\alpha -\beta }+\dfrac{1}{\sqrt{\alpha -\beta }}\cos \pi \sqrt{\alpha +\beta }\sin \pi \sqrt{\alpha -\beta }\)

\(m_{21}=-\sqrt{\alpha +\beta }\sin \pi \sqrt{\alpha +\beta }\cos \pi \sqrt{\alpha -\beta }-\sqrt{\alpha -\beta }\cos \pi \sqrt{\alpha +\beta }\sin \pi \sqrt{\alpha -\beta }\)

\(m_{22}=\cos \pi \sqrt{\alpha +\beta }\cos \pi \sqrt{\alpha -\beta }-\dfrac{\sqrt{\alpha -\beta }}{\sqrt{\alpha +\beta }}\sin \pi \sqrt{\alpha +\beta }\sin \pi \sqrt{\alpha -\beta }\)

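and its trace is

\(tr\left( M\right) =2\cos \left( \pi \sqrt{\alpha +\beta }\right) \cos \left( \pi \sqrt{\alpha -\beta }\right) -\left[ \dfrac{\sqrt{\alpha -\beta }}{\sqrt{\alpha +\beta }}+\dfrac{\sqrt{\alpha +\beta }}{\sqrt{\alpha -\beta }}\right] \sin \left( \pi \sqrt{\alpha +\beta }\right) \sin \left( \pi \sqrt{\alpha -\beta }\right) \)

so the condition \(\left| tr\left( M\right) \right| =2\) reduces to: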

\(\left| 2\cos \left( \pi \sqrt{\alpha +\beta }\right) \cos \left( \pi \sqrt{\alpha -\beta }\right) -\left[ \dfrac{\sqrt{\alpha -\beta }}{\sqrt{\alpha +\beta }}+\dfrac{\sqrt{\alpha +\beta }}{\sqrt{\alpha -\beta }}\right] \sin \left( \pi \sqrt{\alpha +\beta }\right) \sin \left( \pi \sqrt{\alpha -\beta }\right) \right| =2\)
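The product formula for M and the trace expression appearing inside the absolute value above can be cross-checked numerically. The Python sketch below multiplies the two \(2\times 2\) factors, with the \(\alpha -\beta \) factor on the left as written above, and compares the trace of the product with the closed form; it assumes \(\alpha >\beta \ge 0\) as in the text, and the sample parameter values are arbitrary.

```python
import math


def transfer(omega2, duration=math.pi):
    """Transition matrix of y'' + omega2 * y = 0 over `duration`, for omega2 > 0."""
    w = math.sqrt(omega2)
    c, s = math.cos(w * duration), math.sin(w * duration)
    return [[c, s / w], [-w * s, c]]


def meissner_monodromy(alpha, beta):
    """Product of the (alpha - beta) and (alpha + beta) factors (alpha > beta >= 0)."""
    A, B = transfer(alpha - beta), transfer(alpha + beta)
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def meissner_trace(alpha, beta):
    """Closed-form tr(M) for the Meissner equation (alpha > beta >= 0)."""
    sp, sm = math.sqrt(alpha + beta), math.sqrt(alpha - beta)
    return (2 * math.cos(math.pi * sp) * math.cos(math.pi * sm)
            - (sm / sp + sp / sm) * math.sin(math.pi * sp) * math.sin(math.pi * sm))


if __name__ == "__main__":
    for alpha, beta in [(3.0, 1.0), (5.3, 2.2), (10.0, 0.5)]:
        M = meissner_monodromy(alpha, beta)
        tr, det = M[0][0] + M[1][1], M[0][0] * M[1][1] - M[0][1] * M[1][0]
        verdict = "unstable" if abs(tr) > 2 else "stable"
        print(f"alpha={alpha}, beta={beta}: tr={tr:+.6f} "
              f"(closed form {meissner_trace(alpha, beta):+.6f}), det={det:.12f}, {verdict}")
```

Evaluating `abs(meissner_trace(alpha, beta)) > 2` over a grid reproduces the Meissner Ince-Strutt diagram without any numerical integration.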

Setting \(\beta =0\) in this last expression, in order to find the points at which the Arnold Tongues are born, we get

\(\left| 2\cos ^{2}\left( \pi \sqrt{\alpha }\right) -2\sin ^{2}\left( \pi \sqrt{\alpha }\right) \right| =2\;\Longleftrightarrow \;\left| 2\cos \left( 2\pi \sqrt{\alpha }\right) \right| =2\;\Longleftrightarrow \;2\pi \sqrt{\alpha }=k\pi \)

which finally leads us to \(\boxed {\alpha =\dfrac{k^{2}}{4},\quad k=0,1,2,\ldots }\)

It is customary to number the Arnold Tongues according to the rule: the kth Arnold Tongue touches the \(\alpha \)-axis at \(\alpha =\dfrac{k^{2}}{4}\). We may also say that on the boundaries of even-order Arnold Tongues there is at least one T-periodic solution; similarly, on the boundaries of odd-order Arnold Tongues there is at least one 2T-periodic solution.
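At \(\beta =0\) the trace reduces to \(2\cos \left( 2\pi \sqrt{\alpha }\right) \), so evaluating it at \(\alpha =k^{2}/4\) confirms the parity rule: the trace is \(+2\) (multiplier \(+1\), T-periodic boundary solution) for even k and \(-2\) (multiplier \(-1\), 2T-periodic boundary solution) for odd k. The Python sketch below tabulates this; the function names are hypothetical.

```python
import math


def trace_beta0(alpha):
    """tr M of the Meissner equation at beta = 0, i.e. 2 cos(2 pi sqrt(alpha)), alpha >= 0."""
    return 2 * math.cos(2 * math.pi * math.sqrt(alpha))


def tongue_births(k_max):
    """For k = 0..k_max: (k, alpha_k = k^2/4, tr M at alpha_k, period of the boundary solution)."""
    table = []
    for k in range(k_max + 1):
        alpha_k = k * k / 4
        period = "T" if k % 2 == 0 else "2T"     # multiplier +1 (even k) or -1 (odd k)
        table.append((k, alpha_k, round(trace_beta0(alpha_k), 12), period))
    return table


if __name__ == "__main__":
    for k, alpha_k, tr, period in tongue_births(6):
        print(f"tongue {k}: alpha = {alpha_k:5.2f}, tr M = {tr:+.1f}, {period}-periodic solution")
```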

Figure 3.3 shows the Ince-Strutt diagram of the Meissner equation, i.e., the Hill equation with \(q\left( t\right) =sign\left( \cos t\right) \).

Remark 3.10

Notice that in the Ince-Strutt diagram for the Meissner equation, starting from the 3rd Arnold Tongue, intervals of zero length appear in the \( \alpha \) direction; these points are called Coexistence points and correspond to parameter values at which all the solutions are T-periodic, if they belong to an even-order tongue, or 2T-periodic, if they belong to an odd-order tongue. Notice also that coexistence points are exceptional (Footnote 15).
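The closed-form Meissner monodromy matrix given earlier also lets one locate some coexistence points explicitly; this is our own observation derived from that product, not a claim of the source. If \(\sqrt{\alpha +\beta }=m\) and \(\sqrt{\alpha -\beta }=n\) are positive integers with \(m>n\), the two factors reduce to \(\left( -1\right) ^{m}I\) and \(\left( -1\right) ^{n}I\), so \(M=\left( -1\right) ^{m+n}I\) and every solution is T- or 2T-periodic. The Python sketch below checks this numerically; the function names are hypothetical.

```python
import math


def transfer(omega2, duration=math.pi):
    """Transition matrix of y'' + omega2 * y = 0 over `duration`, for omega2 > 0."""
    w = math.sqrt(omega2)
    c, s = math.cos(w * duration), math.sin(w * duration)
    return [[c, s / w], [-w * s, c]]


def meissner_monodromy(alpha, beta):
    """Product of the (alpha - beta) and (alpha + beta) factors (alpha > beta >= 0)."""
    A, B = transfer(alpha - beta), transfer(alpha + beta)
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def integer_root_points(max_m):
    """(m, n, alpha, beta) with alpha + beta = m^2 and alpha - beta = n^2, m > n >= 1."""
    return [(m, n, (m * m + n * n) / 2, (m * m - n * n) / 2)
            for m in range(2, max_m + 1) for n in range(1, m)]


def distance_to_scalar(M, sign):
    """Largest entry of |M - sign * I|."""
    return max(abs(M[i][j] - (sign if i == j else 0.0)) for i in range(2) for j in range(2))


if __name__ == "__main__":
    for m, n, alpha, beta in integer_root_points(4):
        sign = (-1) ** (m + n)
        err = distance_to_scalar(meissner_monodromy(alpha, beta), sign)
        print(f"(alpha, beta) = ({alpha}, {beta}): M = {sign:+d} I up to {err:.1e}")
```

For example, \(m=2\), \(n=1\) gives \(\left( \alpha ,\beta \right) =\left( 2.5,1.5\right) \) with \(M=-I\), i.e., trace \(-2\), as on an odd-order tongue.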

If we introduce the notation

\(Tongue\left( i\right) \triangleq \left\{ \left( \alpha ,\beta \right) :\left( \alpha ,\beta \right) \text { belongs to the }i{\text {th}}\text { Arnold Tongue}\right\} \),

where the tongue boundaries are included in each set. With this notation, the following fundamental property can be stated compactly:

Remark 3.11

(Non-intersecting) The Arnold Tongues are pairwise disjoint, i.e., \(Tongue\left( i\right) \cap Tongue\left( j\right) =\emptyset \) for all \(i\ne j\).

3.3.4 Critical Lines

The following question arises: what happens when we explore large ranges of \(\left( \alpha ,\beta \right) \) [9]? Fig. 3.4 shows the same diagram for the Meissner equation, now over \(\alpha \in \left[ 0,120\right] \) and \(\beta \in \left[ 0,120\right] \).

We may observe from Fig. 3.4 that below the \(45^{\circ }\) line the region is ‘essentially stable’ and above it ‘essentially unstable’. Broer et al. [9] called this line the ‘critical line’; it is independent of the function \(q\left( t\right) \) used, as long as \(q\left( t\right) \) has zero average and \(\left\| q\left( t\right) \right\| _{2}\triangleq \left[ \int _{0}^{T}\left| q\left( t\right) \right| ^{2}dt\right] ^{1/2}=\left\| \cos t\right\| _{2}\).
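The normalization condition on \(q\left( t\right) \) is easy to check numerically. The sketch below (helper names are ours) approximates the average and \(\left\| q\right\| _{2}\) over \(T=2\pi \) with the midpoint rule: \(\left\| \cos t\right\| _{2}=\sqrt{\pi }\), while \(sign\left( \cos t\right) \) has zero average but norm \(\sqrt{2\pi }\), so meeting the condition literally would require the factor \(1/\sqrt{2}\). The rescaling step is our illustration, not a procedure taken from [9].

```python
import math


def midpoint_integral(f, T=2 * math.pi, n=4096):
    """Midpoint-rule approximation of int_0^T f(t) dt (exact for low-order trig polynomials)."""
    h = T / n
    return h * sum(f((j + 0.5) * h) for j in range(n))


def l2_norm(q, T=2 * math.pi):
    return math.sqrt(midpoint_integral(lambda t: abs(q(t)) ** 2, T))


def average(q, T=2 * math.pi):
    return midpoint_integral(q, T) / T


def sign_cos(t):
    c = math.cos(t)
    return (c > 0) - (c < 0)


ref = l2_norm(math.cos)               # sqrt(pi) for T = 2*pi
print(average(sign_cos), l2_norm(sign_cos), ref)
scale = ref / l2_norm(sign_cos)       # hypothetical rescaling factor (1/sqrt(2) here)
print(l2_norm(lambda t: scale * sign_cos(t)))
```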

3.3.5 Forced Hill Equation

In [41] the T-periodically forced Hill equation was analyzed, i.e.,

$$\ddot{y}+\left[ \alpha +\beta q\left( t\right) \right] y=f\left( t\right) \tag{3.18}$$

It is known [36] that, in the stable regions, the homogeneous equation ((3.18) with \(f\left( t\right) =0\)) has kT-periodic solutions, for \(k\ge 3\), along certain curves of \(\left( \alpha ,\beta \right) \); for these parameter values there are two linearly independent kT-periodic solutions. Figure 3.5 shows these kT-periodic lines for \(k=3,5,9\), and 14.

Now apply a periodic forcing term \(f\left( t+T\right) =f\left( t\right) \), with the same period T as the exciting function \(q\left( t\right) \). In [41] we prove that if \(f\left( t\right) \) contains a kT-periodic harmonic, then the corresponding kT-periodic line becomes unstable, due to linear resonance.
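On a kT-periodic line the multipliers are \(e^{\pm 2\pi ij/k}\) for some integer \(j\), so \(tr\left( M\right) =2\cos \left( 2\pi j/k\right) \); since \(\det M=1\) and the two multipliers are distinct, M is diagonalizable and \(M^{k}=I\), so every solution is kT-periodic, consistent with the two independent kT-periodic solutions mentioned above. The sketch below assumes the Mathieu case \(q\left( t\right) =\cos t\), \(T=2\pi \), computes M with velocity Verlet, and locates by bisection the point of the 3T-periodic line at \(\beta =0.1\) near its \(\beta =0\) crossing \(\alpha =4/9\). The bracket \(\left[ 0.32,0.6\right] \), the step count, and all names are our choices.

```python
import math


def monodromy(alpha, beta, q=math.cos, T=2 * math.pi, n=2000):
    """Monodromy matrix of y'' + [alpha + beta*q(t)] y = 0 via velocity Verlet.

    Every Verlet step has unit determinant, so det(M) = 1 up to rounding.
    """
    h = T / n
    cols = []
    for y, v in ((1.0, 0.0), (0.0, 1.0)):    # initial conditions e1, e2
        for k in range(n):
            t = k * h
            v -= 0.5 * h * (alpha + beta * q(t)) * y
            y += h * v
            v -= 0.5 * h * (alpha + beta * q(t + h)) * y
        cols.append((y, v))
    return [[cols[0][0], cols[1][0]], [cols[0][1], cols[1][1]]]


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def find_kT_line(beta, k, lo, hi, j=1, tol=1e-12):
    """Bisection in alpha for tr(M) = 2cos(2*pi*j/k), i.e. multipliers exp(+-2*pi*i*j/k)."""
    target = 2 * math.cos(2 * math.pi * j / k)

    def f(alpha):
        M = monodromy(alpha, beta)
        return M[0][0] + M[1][1] - target

    f_lo = f(lo)
    if f_lo * f(hi) > 0:
        raise ValueError("no sign change on [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if f_lo * f_mid <= 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
    return 0.5 * (lo + hi)


alpha3 = find_kT_line(beta=0.1, k=3, lo=0.32, hi=0.6)
M = monodromy(alpha3, 0.1)
print(alpha3, matmul(M, matmul(M, M)))  # M^3 should be (numerically) the identity
```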

3.3.6 Open-Loop Stabilization of Hill Equation

The last point considered for the scalar Hill equation is the following. Consider a Hill equation \(\ddot{y}+\left[ \alpha _{0}+\beta _{0}q\left( t\right) \right] y=0\) for a fixed parameter pair \(\left( \alpha _{0},\beta _{0}\right) \), where \(q\left( t\right) \) is a T-periodic function. If the equation is unstable for these parameters, the following problem is posed (Fig. 3.6):

Problem 3.1

Does there exist another T-periodic function \(r\left( t\right) \) such that the new Hill equation \(\ddot{y}+\left[ \alpha _{0}+\beta _{0}\left( q\left( t\right) +\gamma r\left( t\right) \right) \right] y=0\) is stable, for some \(\gamma \), with the same parameters \(\left( \alpha _{0},\beta _{0}\right) \)? [16]

Solution 3.1

Suppose \((\alpha _{0},\beta _{0})\) is unstable, i.e., \((\alpha _{0},\beta _{0})\in Tongue\left( i\right) \) for some \(i\ge 1\). Add to \(q\left( t\right) \) a T-periodic function \(r\left( t\right) \) chosen so that, for the equation with \(q\left( t\right) +r\left( t\right) \), \((\alpha _{0},\beta _{0})\in Tongue\left( i+1\right) \). Since the trace is above \(+2\) inside even-order tongues and below \(-2\) inside odd-order tongues, this guarantees that if \(tr\left[ M(\alpha _{0},\beta _{0},q\left( t\right) )\right] >2\), then \(tr\left[ M(\alpha _{0},\beta _{0},q\left( t\right) +r\left( t\right) )\right] <-2\).Footnote 16

Since the trace depends continuously on \(\gamma \), consider the convex combination of \(q\left( t\right) \) and \(q\left( t\right) +r\left( t\right) \), i.e., \(q\left( t\right) \rightarrow q\left( t\right) +\gamma r\left( t\right) \), \(\gamma \in \left[ 0,1\right] \). Then

\(\left. tr\left[ M(\alpha _{0},\beta _{0},q\left( t\right) +\gamma r\left( t\right) )\right] \right| _{\gamma =0}>2\) and similarly

\(\left. tr\left[ M(\alpha _{0},\beta _{0},q\left( t\right) +\gamma r\left( t\right) )\right] \right| _{\gamma =1}<-2,\) \(\Longrightarrow \)

\(\exists \) \(\gamma _{0}\in \left( 0,1\right) :\left. tr\left[ M(\alpha _{0},\beta _{0},q\left( t\right) +\gamma r\left( t\right) )\right] \right| _{\gamma =\gamma _{0}}=0\), which corresponds to a stable system.

Notice that the previous solution rests heavily on Remark 3.11 (Non-intersecting).
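Solution 3.1 is an intermediate value argument, so \(\gamma _{0}\) can be found by bisection once the trace can be evaluated for a given \(\gamma \). In the sketch below, `trace_of_gamma` is a hypothetical user-supplied callable returning \(tr\left[ M(\alpha _{0},\beta _{0},q+\gamma r)\right] \) (for example, from a numerically integrated Monodromy matrix); the usage lines feed it toy trace functions only to exercise the search, not an actual Hill equation.

```python
import math
from typing import Callable


def stabilizing_gamma(trace_of_gamma: Callable[[float], float],
                      tol: float = 1e-10) -> float:
    """Find gamma0 in (0, 1) with tr[M(q + gamma0*r)] = 0 by bisection.

    Requires |tr| > 2 at gamma = 0 and gamma = 1 with opposite signs; continuity
    in gamma then guarantees a zero crossing, and tr = 0 means |tr| < 2 (stable).
    """
    lo, hi = 0.0, 1.0
    f_lo, f_hi = trace_of_gamma(lo), trace_of_gamma(hi)
    if not (abs(f_lo) > 2 and abs(f_hi) > 2 and f_lo * f_hi < 0):
        raise ValueError("need |tr| > 2 at both ends, with opposite signs")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = trace_of_gamma(mid)
        if f_lo * f_mid <= 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
    return 0.5 * (lo + hi)


# Toy stand-ins for the trace (not Hill equations): tr(0) > 2 and tr(1) < -2.
print(stabilizing_gamma(lambda g: 3.0 - 6.5 * g))
print(stabilizing_gamma(lambda g: 3.0 * math.cos(math.pi * g)))
```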

3.4 Hill Equation: Two Degrees of Freedom Case

In the 2 degrees of freedom case, \(y\left( t\right) \in \mathbb {R}^{2}\)

Notice that we have introduced matrices \(A,B\in \mathbb {R}^{2\times 2}\) and kept the two parameters \(\left( \alpha ,\beta \right) \) in order to compare with the 1-DOF case.

Similarly to the 1-DOF case, if we define \(x=\left[ \begin{array}{c} y \\ \overset{\underset{\bullet }{}}{y} \end{array} \right] \in \mathbb {R}^{4}\), we may express (3.19) in state space as:

For the system described by (3.20) to be Hamiltonian (\(H\left( t\right) =H^{T}\left( t\right) \)), the restrictions \(A=A^{T}\) and \(B=B^{T}\) must be satisfied.

Without loss of generality, we may assume that A is diagonal with positive entries, which are the squares of the two natural frequencies of the system without parametric excitation. An early reference is [26], where the author analyzes a pair of coupled Mathieu equations.

Now there are four multipliers (the eigenvalues of the Monodromy matrix). They are symmetric with respect to the real axis, because the matrices are real, and symmetric with respect to the unit circle, because the state transition matrix is symplectic. There are three ways for multipliers to leave the unit circle: (a) a pair of multipliers leaving at the point \(+1\), (b) a pair leaving at the point \(-1\), and (c) two complex-conjugate pairs leaving the unit circle at a point \(1\measuredangle \theta \) (and its conjugate), \(\theta \in \left( 0,\pi \right) \).Footnote 17 Cases (a) and (b) already appear in the 1-DOF case, but (c) is new for systems with at least 2 DOFs; it is called a Krein Collision of the multipliers. Figure 3.7 represents the three cases.

Points where the multipliers of a 2-DOF Hamiltonian system may leave the unit circle. To leave the unit circle at the points \(\pm 1\) only two multipliers are required, but to leave it at \(1\measuredangle \theta \), with \(\theta \ne 0,\pi \), the four multipliers must be in the configuration shown.

3.4.1 Reduction of the Characteristic Polynomial

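Because the characteristic polynomial of the Monodromy Matrix, \(p_{M}\left( \mu \right) =\mu ^{4}-A\mu ^{3}+B\mu ^{2}-A\mu +1\) (here A and B denote scalar coefficients, with \(A=tr\left( M\right) \), not the matrices of (3.19)), is self-reciprocal, Howard and MacKay [30] introduced the new variable \(\rho =\mu +\mu ^{-1}\). Dividing \(p_{M}\left( \mu \right) =0\) by \(\mu ^{2}\) and using \(\mu ^{2}+\mu ^{-2}=\rho ^{2}-2\), the characteristic polynomial of M reduces to degree 2:

$$Q\left( \rho \right) =\rho ^{2}-A\rho +B-2 \tag{3.21}$$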

whose roots are

$$\rho _{1,2}=\frac{A}{2}\pm \sqrt{\frac{A^{2}}{4}-B+2} \tag{3.22}$$

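and the multipliers, i.e., the roots of \(p_{M}\left( \mu \right) \), are recovered from \(\mu ^{2}-\rho _{i}\mu +1=0\):

$$\mu =\frac{\rho _{i}}{2}\pm \sqrt{\frac{\rho _{i}^{2}}{4}-1},\quad i=1,2 \tag{3.23}$$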

Remark 3.12

The symmetry inherited from the Hamiltonian structure allows the order of the analysis to be reduced by half.Footnote 18

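The transition boundaries, where a multiplier leaves the unit circle (equivalently, by (3.22), where a root \(\rho _{i}\) equals \(\pm 2\) or the two roots coincide), are given by two lines and a parabola:

$$B=2A-2,\qquad B=-2A-2,\qquad B=\frac{A^{2}}{4}+2 \tag{3.24}$$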

Figure 3.8 shows the relationships given in (3.24), indicating the typical multiplier positions for the reduced polynomial \(Q\left( \rho \right) =\rho ^{2}-A\rho +B-2\). The white zone represents the parameters A, B for which, through formula (3.23), all the multipliers of \(p_{M}\left( \mu \right) =\mu ^{4}-A\mu ^{3}+B\mu ^{2}-A\mu +1\) lie on the unit circle. Colored regions correspond to unstable zones. On the boundary \(B=+2A-2\) there is at least one T-periodic solution; on the boundary \(B=-2A-2\) there is at least one 2T-periodic solution; on the parabolic boundary \(B=A^{2}/4+2\) there is a pair of double multipliers at some point of the unit circle other than \(\pm 1\), and in general two periodic solutions of arbitrary frequency (Fig. 3.9).
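The reduction (3.21) to (3.23) and the boundaries (3.24) translate directly into a stability test on the coefficients. The Python sketch below (function names are ours) computes \(\rho _{1,2}\) and the four multipliers, reports the white zone of Fig. 3.8 when both \(\rho _{i}\) are real and lie in \(\left[ -2,2\right] \), and obtains A and B from a \(4\times 4\) Monodromy matrix as \(A=tr\left( M\right) \) and \(B=\frac{1}{2}\left[ tr\left( M\right) ^{2}-tr\left( M^{2}\right) \right] \) (the sum of the principal \(2\times 2\) minors). Checking the roots directly, rather than the three inequalities, also excludes the thin regions with \(\left| A\right| >4\) between a line and the parabola, where both \(\rho _{i}\) are real but lie outside \(\left[ -2,2\right] \).

```python
import cmath
import math


def multipliers_2dof(A, B):
    """Roots rho of Q(rho) = rho^2 - A*rho + B - 2, eq. (3.22), and the four
    multipliers mu from mu^2 - rho*mu + 1 = 0, eq. (3.23).

    A and B are the scalar coefficients of p_M (A = tr M), not the matrices of (3.19).
    """
    disc = cmath.sqrt(A * A / 4 - B + 2)
    rhos = (A / 2 + disc, A / 2 - disc)
    mus = []
    for rho in rhos:
        s = cmath.sqrt(rho * rho / 4 - 1)
        mus.extend([rho / 2 + s, rho / 2 - s])
    return rhos, mus


def on_unit_circle(A, B, tol=1e-12):
    """White zone of Fig. 3.8: both rho real and inside [-2, 2]."""
    rhos, _ = multipliers_2dof(A, B)
    return all(abs(r.imag) <= tol and -2 - tol <= r.real <= 2 + tol for r in rhos)


def coefficients_from_monodromy(M):
    """A = tr M and B = sum of principal 2x2 minors = (tr(M)^2 - tr(M^2)) / 2."""
    n = len(M)
    tr = sum(M[i][i] for i in range(n))
    tr2 = sum(M[i][k] * M[k][i] for i in range(n) for k in range(n))
    return tr, (tr * tr - tr2) / 2


# Two uncoupled rotations (angles 0.5 and 1.2): rho_i = 2cos(theta_i), |mu| = 1.
c1, c2, s1, s2 = math.cos(0.5), math.cos(1.2), math.sin(0.5), math.sin(1.2)
M_rot = [[c1, s1, 0, 0], [-s1, c1, 0, 0], [0, 0, c2, s2], [0, 0, -s2, c2]]
A_rot, B_rot = coefficients_from_monodromy(M_rot)
print(on_unit_circle(A_rot, B_rot), on_unit_circle(5.0, 8.1), on_unit_circle(0.0, 3.0))
```

In the last line, \((A,B)=(5,8.1)\) gives two real \(\rho >2\) (a principal-type instability), and \((0,3)\) lies above the parabola, giving complex \(\rho \), i.e., a Krein quadruplet off the unit circle.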

Remark 3.13

Figures 3.10 and 3.11 use the same color code.

Zoom of Fig. 3.8. At point A there are at least one T-periodic and at least one 2T-periodic solution; at point B there are at least two linearly independent 2T-periodic solutions; and at point C there are at least two linearly independent T-periodic solutions.

Using this color code, Fig. 3.10 shows the Arnold Tongues for a 2-DOF Mathieu equation, and Fig. 3.11 shows the Arnold Tongues for a 2-DOF Meissner equation. For comparison, we chose the same matrices A and B in both cases.

For Figs. 3.10 and 3.11 we use the following equation:

with \(A=\left[ \begin{array}{cc} 1 &{} 0 \\ 0 &{} 2 \end{array} \right] \) and \(B=\left[ \begin{array}{cc} 1 &{} -1 \\ -1 &{} 2 \end{array} \right] \), using \(q\left( t\right) =\cos t\) for Fig. 3.10 and \(q\left( t\right) =sign\left( \cos t\right) \) for Fig. 3.11.

Arnold Tongues for a 2-DOF Mathieu equation (3.25) with \(q \left( t\right) =\cos t\). Blue zones correspond to Combination Arnold Tongues

Arnold Tongues for a 2-DOF Meissner equation (3.25) with \(q\left( t\right) =sign\left( \cos t\right) \). Blue zones correspond to Combination Arnold Tongues

Zones of instability occur when a pair of multipliers coincides at the point \(+1\) or \(-1\) and then leaves the unit circle, as in the scalar case; but now there are multipliers associated with each of the natural frequencies of the subsystems, so there are two possible ways to leave at each of the points \(\pm 1\), one for each subsystem. These Tongues are called Principal. The genuinely new feature is that the multipliers may now leave the unit circle at any point \(1\measuredangle \theta \), \(\theta \in \left( 0,\pi \right) \); these Krein Collisions give unstable zones called Combination Arnold Tongues. There are two kinds of Combination Arnold Tongues, sum and difference; see [48] for further information.
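To reproduce individual points of Figs. 3.10 and 3.11 numerically, one needs the \(4\times 4\) Monodromy matrix of (3.25). Since (3.25) is not reproduced in this text, the sketch below assumes the form \(\ddot{y}+\left[ \alpha A+\beta q\left( t\right) B\right] y=0\) with the matrices A and B given above; this form is our assumption. It integrates with velocity Verlet (symplectic here because \(\alpha A+\beta q\left( t\right) B\) is symmetric), computes the coefficients of \(p_{M}\), and labels a point as stable, principal (a real \(\rho \) outside \(\left[ -2,2\right] \)), or combination (complex \(\rho \), i.e., a Krein quadruplet off the unit circle). The step count and labels are our choices.

```python
import math

A_MAT = [[1.0, 0.0], [0.0, 2.0]]    # matrix A of Figs. 3.10 and 3.11
B_MAT = [[1.0, -1.0], [-1.0, 2.0]]  # matrix B of Figs. 3.10 and 3.11


def monodromy_2dof(alpha, beta, q=math.cos, T=2 * math.pi, n=2000):
    """4x4 Monodromy matrix of y'' + [alpha*A + beta*q(t)*B] y = 0 (assumed form),
    computed with velocity Verlet; state ordering x = (y1, y2, v1, v2)."""
    h = T / n

    def accel(t, y):
        qt = q(t)
        return [-sum((alpha * A_MAT[i][j] + beta * qt * B_MAT[i][j]) * y[j]
                     for j in range(2)) for i in range(2)]

    cols = []
    for c in range(4):
        x = [1.0 if i == c else 0.0 for i in range(4)]
        y, v = x[:2], x[2:]
        for k in range(n):
            t = k * h
            a = accel(t, y)
            v = [v[i] + 0.5 * h * a[i] for i in range(2)]   # half kick
            y = [y[i] + h * v[i] for i in range(2)]         # drift
            a = accel(t + h, y)
            v = [v[i] + 0.5 * h * a[i] for i in range(2)]   # half kick
        cols.append(y + v)
    # cols[c] is the image of the c-th basis vector, i.e. column c of M
    return [[cols[c][r] for c in range(4)] for r in range(4)]


def classify(alpha, beta, **kw):
    M = monodromy_2dof(alpha, beta, **kw)
    tr = sum(M[i][i] for i in range(4))
    tr2 = sum(M[i][k] * M[k][i] for i in range(4) for k in range(4))
    a_coef, b_coef = tr, (tr * tr - tr2) / 2   # coefficients A, B of p_M
    disc = a_coef * a_coef / 4 - b_coef + 2
    if disc < 0:
        return "combination"   # complex rho: Krein quadruplet off the circle
    r = math.sqrt(disc)
    if all(-2.0 <= rho <= 2.0 for rho in (a_coef / 2 + r, a_coef / 2 - r)):
        return "stable"
    return "principal"         # a real rho outside [-2, 2]


print(classify(0.3, 0.0), classify(0.3, 0.5))
```

At \(\beta =0\) the subsystems decouple, so the trace must equal \(2\cos \left( 2\pi \sqrt{\alpha }\right) +2\cos \left( 2\pi \sqrt{2\alpha }\right) \); this is a convenient sanity check before scanning a grid.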

Remark 3.14

In the 2-DOF case, some comments are in order. First, there are Arnold Tongues related to the natural frequency of each subsystem, and the Arnold Tongues associated with a single subsystem do not overlap. Nevertheless, when the natural frequencies are rationally independent, the Arnold Tongues of different subsystems generically intersect.

Remark 3.15

(Critical lines for 2-DOF) For Hill equations with at least 2 DOFs, we claim that the critical line generically forms an angle smaller than \(45^{\circ }\) with the horizontal axis; see Fig. 3.12 for the Lyapunov-like equation, i.e., \(q\left( t\right) =\cos t+\cos 2t\) [15].

Remark 3.16

(Forced 2-DOF Hill Equation) The property illustrated in Fig. 3.5, namely having parametric instability (Arnold Tongues) and linear resonance simultaneously in the same Ince-Strutt diagram, cannot be extended to the 2-DOF case.

Remark 3.17

(Open-loop stabilization) In general, 2-DOF systems cannot take advantage of the non-intersecting property, because this property does not hold for n-DOF systems with \(n\ge 2\). Nevertheless, for very small values of \(\beta \) the idea can still be developed; see Fig. 3.6.

3.4.2 Computational Issues

The obvious algorithm to compute the Arnold Tongues is to grid the \(\left( \alpha ,\beta \right) \) plane and integrate the equation at every grid point. For a \(1000\times 1000\) grid this can take on the order of 20 h on a Dell desktop PC (Intel Core 2 Duo, 2.8 GHz, 4 GB RAM, 980 GPUs). Keeping this naive approach but using parallel computation, the run time drops to about 2 min for the same resolution. Using the analytic boundaries of the reduced polynomial (3.21) not only reduces the computation time but also gives better precision. For large scales, such as those required for critical lines, symplectic integrators [25] are needed to preserve the symplecticity of the Monodromy matrix, which is essential for good precision.
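Both the naive gridding approach and the role of symplecticity can be illustrated with a small sketch, assuming the Mathieu case \(q\left( t\right) =\cos t\) and \(T=2\pi \). Velocity (Störmer–)Verlet, a standard symplectic method [25], keeps \(\det M=1\) up to rounding, whereas classical fourth-order Runge–Kutta (RK4), which is not symplectic, lets the determinant drift when the step is coarse relative to the oscillation: at \(\alpha =100\), \(\beta =0\) with 200 steps per period the RK4 determinant drops to about 0.997. Step counts, grid values, and function names are illustrative choices.

```python
import math


def coef(alpha, beta, t):
    """alpha + beta*q(t) for the Mathieu case q(t) = cos t (assumed here)."""
    return alpha + beta * math.cos(t)


def monodromy_verlet(alpha, beta, n, T=2 * math.pi):
    """Symplectic (velocity Verlet) Monodromy matrix of y'' + coef*y = 0."""
    h = T / n
    cols = []
    for y, v in ((1.0, 0.0), (0.0, 1.0)):
        for k in range(n):
            t = k * h
            v -= 0.5 * h * coef(alpha, beta, t) * y   # half kick
            y += h * v                                # drift
            v -= 0.5 * h * coef(alpha, beta, t + h) * y
        cols.append((y, v))
    return [[cols[0][0], cols[1][0]], [cols[0][1], cols[1][1]]]


def monodromy_rk4(alpha, beta, n, T=2 * math.pi):
    """Classical RK4 Monodromy matrix (not symplectic), same equation."""
    h = T / n

    def f(t, y, v):
        return v, -coef(alpha, beta, t) * y

    cols = []
    for y, v in ((1.0, 0.0), (0.0, 1.0)):
        for k in range(n):
            t = k * h
            k1 = f(t, y, v)
            k2 = f(t + h / 2, y + h / 2 * k1[0], v + h / 2 * k1[1])
            k3 = f(t + h / 2, y + h / 2 * k2[0], v + h / 2 * k2[1])
            k4 = f(t + h, y + h * k3[0], v + h * k3[1])
            y += h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
            v += h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        cols.append((y, v))
    return [[cols[0][0], cols[1][0]], [cols[0][1], cols[1][1]]]


def det2(M):
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]


def stability_grid(alphas, betas, n=2000):
    """Naive gridding: one Monodromy matrix per (alpha, beta); True where |tr M| < 2."""
    grid = []
    for b in betas:
        row = []
        for a in alphas:
            M = monodromy_verlet(a, b, n)
            row.append(abs(M[0][0] + M[1][1]) < 2.0)
        grid.append(row)
    return grid


# Coarse step at large alpha: Verlet keeps det M = 1, RK4 drifts below 1.
print(det2(monodromy_verlet(100.0, 0.0, 200)), det2(monodromy_rk4(100.0, 0.0, 200)))
print(stability_grid([0.25, 0.6], [0.2]))
```

Every grid point is independent, which is why this approach parallelizes so well; the point \((0.25,0.2)\) falls inside the first Mathieu tongue and \((0.6,0.2)\) in the stable zone above it.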

3.5 Future Work

We now list possible extensions of the scalar and 2-DOF Hill equations.

3.5.1 Generalizations of the Scalar Case

Given the scalar equation:

it always represents a Hamiltonian system; therefore, the only possible generalization is:

-

Scalar I.- The function \(q\left( t\right) \) is no longer T-periodic; it could be Quasi-Periodic or Almost Periodic. Notice that in this case there is no Floquet Theorem!Footnote 19

A function \(q\left( t\right) \) is Quasi-Periodic, denoted \(q\left( t\right) \in QP\), if it is the sum of a finite number of Periodic functions whose frequencies are not rationally related; for instance, \(q\left( t\right) =\sin t+\sin \pi t\).

Recall that a function \(q\left( t\right) \) is Periodic if it admits a Convergent Fourier Series of the form:

Remark 3.18

Notice that there is a fundamental frequency \(\omega _{0}\), and all the harmonics \(k\omega _{0}\) are rationally related to it.

A function \(q\left( t\right) \) is Almost-Periodic, denoted \( q\left( t\right) \in AP\), if it admits a Convergent Generalized Fourier Series of the form:

where the sequence \(\left\{ \ldots ,\rho _{k},\rho _{k+1},\ldots \right\} \in \ell _{2}\),Footnote 20 a condition that guarantees convergence.

3.5.2 Generalizations of the 2-DOFs

Given the 2-DOFs Hill equation expressed in state variables:

The following open problems are listed in increasing order of complexity:

-

2-DOFs I.- Keep the function \(q\left( t\right) \) T-periodic, but let matrix B be non-symmetric. The problem remains tractable, because the stability consequences of the Floquet Theorem still apply, but the Monodromy Matrix is no longer symplectic. Thus the stability condition is the same (all the multipliers lie on the unit circle), but there is no result on strong stability, and it is unknown how the multipliers leave the unit circle.

-

2-DOFs II.- The function \(q\left( t\right) \) is no longer T-periodic, but \(q\left( t\right) \in QP\) or \(q\left( t\right) \in AP\). Again, there is no Floquet Theorem and no Monodromy Matrix, and therefore no analytic stability condition based on the multipliers.

-

2-DOFs III.- The function \(q\left( t\right) \) is no longer T-periodic, but \(q\left( t\right) \in QP\) or \(q\left( t\right) \in AP\), and B is no longer symmetric. The resulting system is neither Hamiltonian nor T-periodic, so none of the tools used here apply. Very few results exist in this area.

-

IV.- This item lies outside the class of n-DOF Hamiltonian systems, because the dimension of the state equation is odd (such a system is never Hamiltonian), i.e.,

$$\begin{aligned} \dot{x}=A\left( t\right) x,\quad A\left( t\right) =A\left( t+T\right) ,\quad x\left( t\right) \in \mathbb {R}^{2n+1} \end{aligned}$$

The system is still periodic, so the Floquet Theorem applies; but when all the multipliers lie on the unit circle, one of them is necessarily real, i.e., \(+1\) or \(-1\), because an odd-dimensional real matrix always has a real eigenvalue. It is unknown how the multipliers leave the unit circle.

A final comment concerns the relationship between the Hill equation [36] and Sturm–Liouville (SL) theory [14]. In the scalar case, consider the standard SL problem

with spectral parameter \(\alpha \); this Boundary Value Problem recovers the T-periodic boundaries of the Arnold Tongues.

If we replace the boundary conditions by (b) \(y(0)=-y(T)\) and \(\dot{y}\left( 0\right) =-\dot{y}\left( T\right) \), the solutions give the 2T-periodic boundaries of the Arnold Tongues [19, 36].

The 2-DOF case is completely different: boundary conditions (a) and (b) recover the boundaries of the principal Arnold Tongues, but the boundaries of the Combination Arnold Tongues do not correspond to any specific boundary condition.
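For the scalar case, the link with the boundary value problems can be made concrete by shooting: boundary conditions (b) admit a nontrivial solution exactly when \(-1\) is a multiplier, i.e., since \(\det M=1\), when \(tr\left( M\right) =-2\) (conditions (a) correspond in the same way to \(tr\left( M\right) =+2\)). The sketch below assumes the Meissner equation with \(T=2\pi \) and \(\beta =0.1\), and solves \(tr\left( M\right) =-2\) by bisection on either side of \(\alpha =1/4\) using the closed-form Meissner trace given earlier in this section, which yields the two 2T-periodic boundaries of the first Arnold Tongue. The brackets and names are our choices.

```python
import cmath
import math


def meissner_trace(alpha, beta):
    """Closed-form tr(M) of y'' + [alpha + beta*sign(cos t)] y = 0, T = 2*pi."""
    a, b = cmath.sqrt(alpha + beta), cmath.sqrt(alpha - beta)
    if a == 0 or b == 0:
        raise ValueError("formula is singular when alpha + beta == 0 or alpha - beta == 0")
    tr = (2 * cmath.cos(math.pi * a) * cmath.cos(math.pi * b)
          - (b / a + a / b) * cmath.sin(math.pi * a) * cmath.sin(math.pi * b))
    return tr.real


def antiperiodic_eigenvalue(beta, lo, hi, tol=1e-13):
    """Bisection for tr(M) = -2, i.e. boundary conditions (b), on [lo, hi].

    Requires a sign change of tr(M) + 2 on the bracket.
    """
    def f(alpha):
        return meissner_trace(alpha, beta) + 2.0

    f_lo = f(lo)
    if f_lo * f(hi) > 0:
        raise ValueError("no sign change of tr(M) + 2 on [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if f_lo * f_mid <= 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
    return 0.5 * (lo + hi)


beta = 0.1
left = antiperiodic_eigenvalue(beta, 0.15, 0.25)
right = antiperiodic_eigenvalue(beta, 0.25, 0.40)
print(left, right)  # the first tongue at beta = 0.1 is the interval between them
```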

3.6 Conclusions

The differences explained above are summarized in the following table:

| Property | 1-DOF | 2-DOF |
|---|---|---|
| Multipliers leaving the unit circle | only at \(+1\) or \(-1\) | at any \(1\measuredangle \theta \), \(\theta \in [0,\pi ]\) |
| Arnold Tongues | Not intersecting | generically intersecting for high excitation \(\beta \) |
| Boundaries of the Arnold Tongues | \(\exists \) T-periodic or 2T-periodic solutions | a) may have a T-periodic solution; b) may have a 2T-periodic solution; c) may have T- and 2T-periodic solutions; d) a periodic solution noncommensurable with T |
| Combination Tongues | NO | YES |
| Critical Lines | \(45^{\circ }\) | less than \(45^{\circ }\) |
| Equivalent to SL Problem | YES | NO |

Notes

- 1.

The pendulum is assumed to be a mass M hanging from a rigid massless rod.

- 2.

Maximilian Julius Otto Strutt, co-author of [47]; not to be confused with John William Strutt, better known as Lord Rayleigh (3rd Baron Rayleigh), who died in 1919.

- 3.

The name Arnold Tongues was introduced after [2].

- 4.

Called the Matriciant in the Russian literature [1]; also known as the Cauchy Matrix or the Normalized Fundamental Matrix.

- 5.

The necessary and sufficient condition for R to be real is that the negative real eigenvalues of \(\varPhi \left( T,0\right) \) have even algebraic multiplicity [1].

- 6.

- 7.

Given a square matrix A, by \(\sigma \left( A\right) \) we denote its spectrum, i.e., the set of all the eigenvalues.

- 8.

Not necessarily periodic.

- 9.

We assume throughout the paper that \(q\left( t\right) \) is piecewise continuous, integrable on \(\left[ 0,T\right] \), and of zero average, i.e., \( \mathop {\intop }_0^T q\left( t\right) dt=0\).

- 10.

When both multipliers are \(+1\) (or both \(-1\)) and the Monodromy matrix is diagonal, we say that there is a point of Coexistence, because there are two linearly independent periodic solutions of the Hill equation: T-periodic for multipliers equal to \(+1\), and 2T-periodic for multipliers equal to \(-1\).

- 11.

In Magnus [36] the function that we call \(\phi \left( \alpha ,\beta \right) \), is denoted as \(\varDelta \left( \lambda \right) \), because \(\lambda \) is used instead of our \(\alpha \), and the parameter \(\beta \) is not used in the cited work.

- 12.

- 13.

Equivalently, if we increase the Hamiltonian, i.e., \(\widetilde{H}\left( t\right) -H\left( t\right) >0\), and \(\mu \) was an isolated multiplier on the unit circle associated with \(H\left( t\right) \), then when \(H\left( t\right) \) is increased to \(\widetilde{H}\left( t\right) \), \(\mu \) moves on the unit circle to \(\widetilde{\mu }\); if \(\arg \widetilde{\mu }>\arg \mu \), the multiplier \(\mu \) is said to be a Multiplier of the First Kind; otherwise, i.e., if \(\arg \widetilde{\mu }<\arg \mu \), it is a Multiplier of the Second Kind [48].

- 14.

When the periodic function \(q\left( t\right) \) is an elliptic function, the equation is called the Lamé Equation.

- 15.

Chulaevsky [13] justifies the fact that coexistence points are exceptional ones, because: ... ‘From a topological point of view the scalar matrices, which correspond to coexistence points, form a subvariety in the variety of \(2\times 2\) Jordan Cells.’

- 16.

Here \(tr\left[ M(\alpha _{0},\beta _{0},q\left( t\right) +\gamma r\left( t\right) )\right] \) refers to the trace of the Monodromy Matrix associated to \(\overset{\underset{\bullet \,\bullet }{}}{y}+\left[ \overset{}{\alpha _{0}}+\beta _{0}\left( \overset{}{q\left( t\right) }+\gamma r\left( t\right) \right) \right] y=0\).

- 17.

We use \(r\measuredangle \theta \) to represent a complex number with modulus r, and argument \(\theta .\)

- 18.

In [17] this property is extended to Hamiltonian systems with dissipation; strictly speaking, this class of systems is no longer Hamiltonian.

- 19.

There is a reduced form of the Floquet theorem: no factorization is possible, but there is a reducibility part.

- 20.

A doubly infinite sequence \(\left\{ \ldots ,x_{k},x_{k+1},\ldots \right\} \) belongs to \(\ell _{2}\) if \(\sum \limits _{k=-\infty }^{\infty }\left| x_{k}\right| ^{2}<\infty \). See for instance [18].

References

Adrianova, L.Y.: Introduction to Linear Systems of Differential Equations. American Mathematical Society, Providence (1995)

Arnol’d, V.I.: Remarks on the perturbation theory for problems of Mathieu type. Russ. Math. Surv. 38(4), 215–233 (1983)

Arnold, V.I.: Mathematical Methods of Classical Mechanics. Translated from the 1974 Russian original by K. Vogtmann and A. Weinstein. Corrected reprint of the second (1989) edition. Graduate Texts in Mathematics, vol. 60 (1989)

Atkinson, F.V.: Discrete and Continuous Boundary Problems. Academic Press, New York (1964)

Belokolos, E.D., Gesztesy, F., Makarov, K.A., Sakhnovich, L.A.: Matrix-valued generalizations of the theorems of Borg and Hochstadt. Lect. Notes Pure Appl. Math. 234, 1–34 (2003)

Bolotin, V.V.: The Dynamic Stability of Elastic Systems. Holden-Day Inc., San Francisco (1964)

Borg, G.: Eine Umkehrung der Sturm-Liouvilleschen Eigenwertaufgabe (An inverse problem of the self-adjoint Sturm-Liouville equation). Acta Math. 78(1), 1–96 (1946) (in German)

Brockett, R.: Finite Dimensional Linear Systems. Wiley, New York (1969)

Broer, H., Levi, M., Simo, C.: Large scale radial stability density of Hill’s equation. Nonlinearity 26(2), 565–589 (2013)

Brown, B.M., Eastham, M.S., Schmidt, K.M.: Periodic Differential Operators, vol. 228. Springer Science & Business Media, New York (2012)

Champneys, A.: Dynamics of parametric excitation. Mathematics of Complexity and Dynamical Systems, pp. 183–204. Springer, New York (2012)

Chen, C.T.: Linear System Theory and Design. Oxford University Press, Oxford (1998)

Chulaevsky, V.A.: Almost Periodic Operators and Related Nonlinear Integrable Systems. Manchester University Press, Manchester (1989)

Coddington, E.A., Levinson, N.: Theory of Ordinary Differential Equations. Tata McGraw-Hill Education, New York (1955)

Collado, J.: Critical lines in vectorial Hill’s equations. Under preparation (2017)

Collado, J., Jardón-Kojakhmetov, H.: Vibrational stabilization by reshaping Arnold Tongues: a numerical approach. Appl. Math. 7, 2005–2020 (2016)

Collado, J., Ramirez, M., Franco, C.: Hamiltonian systems with dissipation and its application to attenuate vibrations. Under preparation (2017)

Corduneanu, C.: Almost Periodic Oscillations and Waves. Springer, Berlin (2009)

Eastham, M.S.P.: The Spectral Theory of Periodic Differential Equations. Scottish Academic Press, London (1973)

Floquet, G.: Sur les équations différentielles linéaires à coefficients périodiques. Annales scientifiques de l’École normale supérieure 12, 47–88 (1883)

Fossen, T., Nijmeijer, H. (eds.): Parametric Resonance in Dynamical Systems. Springer Science & Business Media, New York (2011)

Gantmacher, F.R.: The Theory of Matrices, vol. 2. Chelsea, Providence (1959)

Gelfand, I.M., Levitan, B.M.: On the determination of a differential equation from its spectral function. Izvestiya Rossiiskoi Akademii Nauk. Seriya Matematicheskaya 15(4), 309–360. English Trans. Am. Math. Soc. Trans. 1(2), 253–304, 1955 (1951)

Gelfand, I.M., Lidskii, V.B.: On the structure of the regions of stability of linear canonical systems of differential equations with periodic coefficients. Am. Math. Soc. Transl. 2(8), 143–181 (1958)

Hairer, E., Lubich, C., Wanner, G.: Geometric Numerical Integration: Structure-Preserving Algorithms for Ordinary Differential Equations, vol. 31. Springer Science & Business Media, New York (2006)

Hansen, J.: Stability diagrams for coupled Mathieu-equations. Ingenieur-Archiv 55(6), 463–473 (1985)

Hayashi, C.: Forced Oscillations in Non-Linear Systems. Nippon Printing and Publishing Co, Osaka (1953)

Hill, G.W.: On the part of the motion of the lunar perigee which is a function of the mean motions of the sun and moon. Acta Math. 8(1), 1–36 (1886)

Hochstadt, H.: Function theoretic properties of the discriminant of Hill’s equation. Mathematische Zeitschrift 82(3), 237–242 (1963)

Howard, J.E., MacKay, R.S.: Linear stability of symplectic maps. J. Math. Phys. 28(5), 1036–1051 (1987)

Kalman, D.: Uncommon Mathematical Excursions: Polynomial and Related Realms. Dolciani Mathematical Expositions, vol. 35. Mathematical Association of America (2009)

Kapitsa, P.L.: Dynamic stability of the pendulum when the point of suspension is oscillating. Sov. Phys. JETP 21, 588 (In Russian). English Translation: In Collected works of P. Kapitsa (1951)

Khalil, H.K.: Nonlinear Systems. Prentice-Hall, Upper Saddle River (2001)

Krein, M.G.: Fundamental aspects of the theory of \(\lambda \)-zones of stability of a canonical system of linear differential equations with periodic coefficients. To the memory of AA Andronov [in Russian], Izd. Akad. Nauk SSSR, Moscow, 414–498. English Translation: Am. Math. Soc. Transl. 120(2), 1–70 (1955)

Lyapunov, A.M.: The general problem of the stability of motion. Int. J. Control 55(3), 531–534. Also: Taylor and Francis (1992). From the original Ph.D. thesis submitted in Kharkov (1882)

Magnus, W., Winkler, S.: Hill’s Equation. Dover Phoenix Editions. Originally published by Wiley, New York 1966 and 1979 (2004)

Marchenko, V.A.: Sturm-Liouville operators and their applications. Kiev Izdatel Naukova Dumka. Engl. Transl. (2011). Sturm-Liouville Operators and Applications, vol. 373. American Mathematical Society (1977)

McKean, H.P., Van Moerbeke, P.: The spectrum of Hill’s equation. Inventiones mathematicae 30(3), 217–274 (1975)

Meyer, K., Hall, G., Offin, D.: Introduction to Hamiltonian Dynamical Systems and the N-Body Problem, vol. 90. Springer Science & Business Media, New York (2008)

Richards, J.A.: Modeling parametric processes–a tutorial review. Proc. IEEE 65(11), 1549–1557 (1977)

Rodriguez, A., Collado, J.: Periodically forced Kapitza’s pendulum. In: American Control Conference (ACC), pp. 2790–2794. IEEE (2016)

Sanmartín Losada, J.R.: O Botafumeiro: parametric pumping in the middle age. Am. J. Phys. 52, 937–945 (1984)

Seyranian, A.P.: Parametric resonance in mechanics: classical problems and new results. J. Syst. Des. Dyn. 2(3), 664–683 (2008)

Seyranian, A.P., Mailybaev, A.A.: Multiparameter Stability Theory with Mechanical Applications, vol. 13. World Scientific, Singapore (2003)

Staržhinskiĭ, V.M.: Survey of works on conditions of stability of the trivial solution of a system of linear differential equations with periodic coefficients. Prik. Mat. Meh. 18, 469–510 (Russian). Engl. Transl. Am. Math. Soc. Transl. Ser. 1(2) (1955)

Stephenson, A.: On induced stability. Lond. Edinb. Dublin Philos. Mag. J. Sci. 15(86), 233–236 (1908)

van der Pol, B., Strutt, M.J.O.: On the stability of the solutions of Mathieu’s equation. Lond. Edinb. Dublin Philos. Mag. J. Sci. 5(27), 18–38 (1928)

Yakubovich, V.A., Starzhinskii, V.M.: Linear Differential Equations with Periodic Coefficients, vol. 1 & 2. Halsted, Jerusalem (1975)

Acknowledgements

The author wishes to thank A. Rodriguez, C. Franco and M. Ramirez for a detailed revision of the first draft, and G. Rodriguez for the computational work.


Copyright information

© 2018 Springer International Publishing AG

About this chapter

Cite this chapter

Joaquin Collado, M. (2018). Hill Equation: From 1 to 2 Degrees of Freedom. In: Clempner, J., Yu, W. (eds) New Perspectives and Applications of Modern Control Theory. Springer, Cham. https://doi.org/10.1007/978-3-319-62464-8_3


DOI: https://doi.org/10.1007/978-3-319-62464-8_3


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-62463-1

Online ISBN: 978-3-319-62464-8
