Abstract

A brief introduction to high-speed cameras is presented. The most frequently asked question is “Why do we need high-speed cameras?” We answer it with three typical situations of an object: (1) it remains still, (2) it is moving, and (3) it is observed under a microscope. For the next question, “How can we acquire high-speed images?”, two important factors (1. illumination and 2. trigger) are discussed. One example is shown in which it is very difficult to find both an appropriate trigger and an appropriate illumination device. The chapter closes with a summary of this book.

1 How Does a Horse Gallop?

Curiosity is one of the most essential elements of the human mind. When we encounter something extraordinary, we want to know “what” first, then “how”, and then “why”. This roughly corresponds to the famous sequence of Copernicus, Kepler and Newton: Copernicus said that the earth revolves around the sun, Kepler showed how it does so, and Newton explained why. Often we have to know the “what” more precisely in order to answer the “how”, and the “how” more precisely in order to understand the “why”. An everyday example is a sudden bang like an explosion that you hear while walking down the street. What was it? You look around. Nothing is broken, but you see an airplane in the sky. So you can guess the “what”: the airplane is flying faster than sound. Knowing the “how” and the “why”, however, is not so simple, and scientists have worked, and are still working, on that.

Without technical help our knowledge of “what” and “how” is very limited. We obtain information through our five senses: sight, hearing, taste, smell and touch. Our eyes see light with wavelengths from about 380 to 750 nm; ultraviolet and infrared light are invisible to us. As detectors, human eyes are limited not only in the range of wavelengths they detect, but also in their spatial resolution, temporal resolution and intensity sensitivity. This kind of limitation is, however, not always a disadvantage in daily life. For example, human eyes cannot follow intensity changes faster than about 15 Hz. Therefore, light from lamps operating at 50/60 Hz looks continuous to us, and classical silent movies were shot at 16–24 Hz to show continuous movement [1]. On the other hand, flicker at about 10 Hz may cause seizures. An example is the Pocket Monster incident that happened in Japan in 1997 [2]: many children who watched an episode of the popular animated series “Pocket Monster” experienced indescribable sensations, and about 700 of them were treated in hospitals for seizure symptoms.

To satisfy our curiosity, a great deal of equipment has been developed. For light with wavelengths shorter than 380 nm or longer than 750 nm we need ultraviolet or infrared detectors. Microscopes and electron microscopes offer better spatial resolution. Photomultipliers and photodiodes can amplify weak signals to detectable levels. For improving temporal resolution we need additional equipment, namely high-speed cameras. In this book we would like to show what high-speed cameras let us see that we cannot see with our own eyes.

The first concrete high-speed images were taken by Eadweard Muybridge in 1878 [3,4,5]. He placed 12 cameras along a racetrack, and the shutter of each camera was connected to a taut wire that released the shutter electrically when the horse passed. The 12 cameras were thus triggered in succession and produced a series of images of a galloping horse. At that time there was a lively debate on whether at least one of the four hooves always touches the ground during a gallop. Muybridge’s photographs (Fig. 1) showed that there is, in fact, a moment when all four legs are in the air. Moreover, they showed that a very complicated coordination of the legs is involved: there is no symmetry between front and hind legs, and no strict synchronization either of the two front legs or of the two hind legs.

Perhaps Gioacchino Rossini knew this before Muybridge. He composed the William Tell Overture in 1829. When you hear the second half of the overture, you vividly picture galloping horses. He managed to express the gallop acoustically from rather simple rhythms by using various musical instruments together (see Fig. 2). If you tap out these rhythms alone, you will probably be disappointed: they do not sound like a gallop. But if you listen to an orchestra playing them, it is a gallop! We hear many additional “noises” between the main rhythms. Interestingly, the temporal resolution of our ears is better than that of our eyes (human ears: 20–100 Hz, depending on the bandwidth, the main frequency, and the amount of noise involved) [6]. Although such psychoacoustics is a very interesting field, we will not discuss it here.

To come back to high-speed imaging: since Muybridge’s work, methods of taking high-speed images have been developed continuously, using strobes and laser pulses on the one hand, and rotating drums, rotating mirrors and streak tubes on the other. The development of light-sensitive semiconductors has drastically changed the world of high-speed imaging. Note that the terms “high-speed camera” and “high-speed imaging” as used in this volume do not follow any specific definition of the SMPTE (Society of Motion Picture and Television Engineers).

2 Why Do We Need High-Speed Cameras?

There are several chapters dealing with this important question in a rather specific way (see, for example, Chapter “Brandaris Ultra High-speed Imaging Facility”: Lajoinie et al., Chapter “High-speed Imaging of Shock Waves and their Flow Fields”: Kleine). However, here we give answers of a more general nature.

2.1 For Temporal Resolution

The Pocket Monster incident [2] indicated that the human eye can certainly detect a frequency of 10 Hz. This corresponds to a camera that takes an image every 0.1 s. To see the light intensity changes of a fluorescent lamp driven by a 50 Hz alternating current, we need a camera with a frame interval shorter than 0.02 s, as long as the lamp itself does not move. (Strictly speaking, such a lamp brightens on both half-cycles, so its intensity varies at 100 Hz and an interval shorter than 0.01 s is needed.) If the light intensity changes faster, we need a correspondingly faster camera: for instance, to observe intensity changes at \( 10^6 \) Hz, we need a camera that records images at intervals shorter than 1 μs.
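
The criterion above can be captured in a few lines of Python. This is a minimal sketch, not a method from this chapter: the function names are hypothetical, and it applies the text's simple rule that the frame interval must be shorter than one period 1/f of the intensity change. Following the shape of a waveform, rather than merely noticing that it changes, needs several frames per period (more than two, by the sampling theorem), which the optional `frames_per_period` parameter expresses.

```python
def max_frame_interval(frequency_hz: float) -> float:
    """Longest frame interval (s) allowed by the text's criterion:
    shorter than one period 1/f of the intensity change."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return 1.0 / frequency_hz


def min_frame_rate(frequency_hz: float, frames_per_period: int = 1) -> float:
    """Frame rate (fps) giving `frames_per_period` images per period.
    frames_per_period=1 reproduces the criterion in the text; values
    above 2 are needed to follow the waveform itself."""
    return frequency_hz * frames_per_period


# The cases discussed above: 10 Hz flicker, 50 Hz mains, 1 MHz changes.
for f in (10, 50, 1e6):
    print(f"{f:g} Hz: frame interval < {max_frame_interval(f):g} s")
```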

2.2 For Spatio-Temporal Resolution

When an object is moving relative to the camera, we have to take a spatial factor into account. To illustrate such a case, we often show a movie of a fast train passing at 180 km/h in front of a camera fixed on the platform. In a movie taken with a conventional video camera at 25–30 fps you see a kind of moving band with interruptions, in which you may make out segments of carriages. The question is: at what frequency do you have to take images in order to recognize the faces of passengers on this train? In our example the train moves in one direction at 50 m/s (= 180 km/h). Within 0.1 s it travels 5 m, so human eyes, with a temporal resolution limit of 10 Hz, can only make out objects on the train longer than 5 m along its length. To detect an object 1 cm long (as required for recognizing human faces), a frequency 500 times higher (5000 Hz, i.e. one image every 0.2 ms) is required.
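
The train example rests on one relation: between two frames the object moves by its speed divided by the frame rate, and details smaller than that distance cannot be followed. A minimal Python sketch of this arithmetic follows; the function names are hypothetical, and it ignores motion blur within a single exposure, which places a similar limit on the exposure time.

```python
def distance_per_frame(speed_m_s: float, frame_rate_hz: float) -> float:
    """Distance (m) the object travels between two consecutive frames."""
    return speed_m_s / frame_rate_hz


def required_frame_rate(speed_m_s: float, detail_m: float) -> float:
    """Frame rate (fps) at which the object moves at most `detail_m` per frame."""
    return speed_m_s / detail_m


train_speed = 50.0                                   # m/s, i.e. 180 km/h
eye_limit = distance_per_frame(train_speed, 10)      # eye at about 10 Hz
needed = required_frame_rate(train_speed, 0.01)      # 1 cm detail for faces
print(f"eye: {eye_limit:g} m per image, faces: {needed:g} fps "
      f"({needed / 10:g} times the eye's rate)")
```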

2.3 For Microscopic Observation

When a particle moves with a velocity of 0.01 m/s, you can observe this movement with your own eyes, provided the particle is large enough to be seen. However, if the particle is so small that you have to magnify it with a microscope, then observing even such a slow movement (0.01 m/s) is no longer straightforward. Under a microscope the apparent velocity is multiplied by the magnification factor. If the magnification along the direction of motion is 100, the apparent velocity of an object moving at 0.01 m/s is 1 m/s. Moreover, the field of view becomes smaller, again depending on the magnification, so the particle leaves it sooner. Therefore, an appropriate high-speed camera is required to observe a moving object under the microscope.
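
Both effects of magnification can be put into numbers with a short Python sketch. It assumes, hypothetically, a field of view of 0.1 mm in the object plane (roughly what a 10 mm wide sensor sees through 100× magnification); the function names are illustrative only.

```python
def apparent_speed(speed_m_s: float, magnification: float) -> float:
    """Speed of the particle's image: object speed times the magnification
    along the direction of motion."""
    return speed_m_s * magnification


def frames_in_view(field_of_view_m: float, speed_m_s: float,
                   frame_rate_hz: float) -> float:
    """Frames recorded while the particle crosses the field of view,
    with the field of view measured in the object plane."""
    return field_of_view_m / speed_m_s * frame_rate_hz


v = 0.01      # particle speed from the text, m/s
fov = 1e-4    # hypothetical 0.1 mm field of view at 100x
print(f"apparent speed at 100x: {apparent_speed(v, 100):g} m/s")
for fps in (30, 1_000, 10_000):
    print(f"{fps:>6} fps: {frames_in_view(fov, v, fps):g} frames in view")
```

Under these assumptions, a video-rate camera records less than one frame while the particle crosses the view, which is why even this slow motion calls for a high-speed camera.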

3 How to Take High-Speed Images

Since automatic cameras were developed, taking pictures in daily life has become very easy. Simply pushing a button produces more or less acceptable photographs. You do not need to think about exposure, aperture, or distance. Even the flash fires automatically when it is dark. If you, as a user of automatic cameras, have an opportunity to use a professional non-automatic camera, you may be frustrated: you have to select the correct aperture and exposure time, and adjust the focus precisely, in order to take pictures worth keeping. The situation is even more complicated if you, as a user of normal video cameras, suddenly get a high-speed camera. In the following I describe my own experience when I had an opportunity to use a high-speed camera for the first time: I was a normal user of automatic cameras and had no experience with high-speed cameras.

The camera I was offered was an ultra high-speed camera that can take 100 successive images at frame rates of up to 1 million frames/s (1 Mfps) [7]. It was a kind of prototype without any instruction manual. The person who brought the camera demonstrated how to take images of hands at the moment of clapping. When he clapped his hands, the acoustic signal triggered the camera and a series of images of the clapping hands was taken. I could see how the hands deformed at the moment of clapping and right after. I tried to follow what he had shown me, and it worked perfectly. The acoustic trigger was very convenient for this purpose. So I told him that I could now manage the camera, and he left the laboratory.

On my own, I was curious and ambitious to see clapping hands at the maximum frame rate. I set the frame rate to 1 Mfps and clapped my hands. The camera was triggered, the captured signals were processed… and I saw 100 successive black pictures. Disappointed, I set the frame rate back to 5000 fps and increased it step by step. I noticed that at 10,000 fps the light I was using was not strong enough even with the aperture fully open. So I brought a stronger lamp and increased the frame rate further. Fine: I could take 100 successive images at 10,000 fps, 20,000 fps, and more. However, since the number of images was limited to 100, at such high frame rates I could see only the very beginning of the hand clapping. How could I see the later phase of hand clapping at a high frame rate? Could I change the timing of the trigger?

Thus, there are two crucial things to consider: how to illuminate the object and how to capture the event at the right moment. If these two factors are not set properly, the images obtained may be completely dark, or may show nothing but an unchanging scene throughout the whole series of frames.

3.1 Illumination

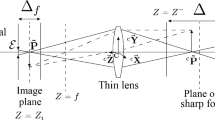

Not only in high-speed photography but also in normal photography, it is important to choose the right illumination device and a corresponding exposure time, depending on the sensitivity of the equipment. In high-speed photography the exposure time must be no longer than the interval between two frames, and consequently a correspondingly strong light source is required. If a flash lamp or pulsed lamp is used as a strong light source, the timing of the flash or pulse has to be synchronized with the course of events.
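
The link between frame rate and required light can be sketched as follows. The assumptions are ours, not the text's: the exposure time is set equal to the frame interval, aperture and sensor sensitivity stay fixed, and the image needs a fixed exposure (illuminance × exposure time). Under these assumptions the light needed grows in proportion to the frame rate. The function names are hypothetical, and the 5000 fps reference is simply the rate at which the author's first lamp still sufficed.

```python
def max_exposure_time(frame_rate_hz: float) -> float:
    """Upper bound on the exposure time (s): one frame interval."""
    return 1.0 / frame_rate_hz


def relative_light_needed(frame_rate_hz: float, reference_rate_hz: float) -> float:
    """Factor by which illumination must rise, relative to the reference
    frame rate, to keep illuminance x exposure time constant when each
    exposure lasts a full frame interval (so the factor is the rate ratio)."""
    return frame_rate_hz / reference_rate_hz


for fps in (10_000, 100_000, 1_000_000):
    print(f"{fps:>9} fps: exposure <= {max_exposure_time(fps):.0e} s, "
          f"about {relative_light_needed(fps, 5_000):g}x the light needed at 5000 fps")
```

At 1 Mfps the sketch asks for about 200 times the light needed at 5000 fps, which helps explain the completely black images described above.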

Note that it is difficult to take high-speed images of light-sensitive objects, since the strong illumination may itself affect them. Care should also be taken to protect the operators’ eyes when strong lamps are used.

3.2 Trigger

An appropriate trigger device is essential for taking images at the right moment, especially when the number of frames is limited. For example, if the number of frames is limited to 100 and the frame rate is 1 Mfps, the total observation time is 100 μs. In such a case a manual trigger is useless. Even for a much slower motion such as a horse’s gallop, Muybridge had to use electrical signals from the taut wires to open the shutters in succession. The trigger signal can be electrical, optical, acoustic, pneumatic, or other. You have to select the most convenient trigger for your experiment and build the corresponding experimental set-up. For example, an acoustic trigger with a microphone is most convenient for observing the moment a balloon ruptures, because a rupturing balloon usually makes a loud bang.
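
The observation window is simply the number of frames divided by the frame rate. A minimal Python sketch (the function name is hypothetical) shows why human reaction, which takes a few tenths of a second, cannot hit a 100 μs window:

```python
def recording_window(n_frames: int, frame_rate_hz: float) -> float:
    """Total time (s) covered by n_frames consecutive images."""
    return n_frames / frame_rate_hz


for fps in (5_000, 32_000, 1_000_000):
    window_ms = recording_window(100, fps) * 1e3
    print(f"{fps:>9} fps: 100 frames cover {window_ms:.3f} ms")
```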

There are two ways to trigger the camera: a trigger that starts image capture, or one that stops it. The latter is possible when the captured signals are stored electronically on the chip [7]. While the camera is recording, images are captured and stored continuously, each new image overwriting the oldest one. When a trigger signal arrives, capturing and overwriting stop. Afterward, the image signals of all storage elements are read out. Recently, this trigger method has become the preferred one, because the timing can be set more flexibly, as explained in the following.
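
The stop-trigger scheme behaves like a ring buffer. The toy Python model below only illustrates that behavior and does not describe any real sensor's circuitry. The class name is hypothetical, and `collections.deque` with `maxlen` stands in for the on-chip storage elements, discarding the oldest frame whenever a new one arrives.

```python
from collections import deque


class RingBufferCamera:
    """Toy model of a camera with n on-chip storage elements: every new
    frame overwrites the oldest one until a stop trigger arrives."""

    def __init__(self, n_frames: int):
        # A deque with maxlen drops its oldest item automatically on append.
        self.storage = deque(maxlen=n_frames)
        self.stopped = False

    def capture(self, frame) -> None:
        """Store a frame unless the stop trigger has already arrived."""
        if not self.stopped:
            self.storage.append(frame)

    def stop_trigger(self) -> None:
        """Freeze the memory: no further frames are stored."""
        self.stopped = True

    def read_out(self) -> list:
        """Return the stored frames, oldest first."""
        return list(self.storage)


cam = RingBufferCamera(n_frames=5)
for t in range(20):          # frames 0..19 arrive continuously
    if t == 12:              # trigger signal arrives just before frame 12
        cam.stop_trigger()
    cam.capture(t)
print(cam.read_out())        # [7, 8, 9, 10, 11]
```

The read-out holds the last five frames before the trigger, which is the pre-trigger situation described next.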

Usually a TTL (transistor-transistor logic) signal from electronic, optical, acoustic or other sensors is used as the trigger signal. It is practical to set a variable delay between the arrival of the TTL signal and the end of image capture. It is also possible to set a number of frames, m, that are still captured after the trigger signal arrives, where m ranges from 0 to n, the total number of frames. If m is set to 0, image capture stops immediately, and all images kept in memory show what happened before the trigger (pre-trigger mode). If m is set to n, image capture continues for n frames after the trigger, and the n recorded images all show what happened after the trigger (post-trigger mode). Capture can also be stopped at any frame in between (middle-trigger mode). Thus, on-chip memory has the additional advantage of allowing such flexible trigger modes.
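
Which frames survive for a given m can be written down directly. In this sketch the indexing convention is our assumption: frame 0 is the first frame captured at or after the trigger, and negative indices lie before it. The function name is hypothetical.

```python
def recorded_frame_indices(n: int, m: int) -> range:
    """Frame indices kept in memory, counted relative to the trigger.

    Assumed convention: frame 0 is the first frame captured at or after
    the trigger. m = 0 gives pre-trigger mode, m = n post-trigger mode,
    and 0 < m < n middle-trigger mode.
    """
    if not 0 <= m <= n:
        raise ValueError("m must satisfy 0 <= m <= n")
    return range(-(n - m), m)


n = 100
for m, mode in ((0, "pre"), (50, "middle"), (100, "post")):
    frames = recorded_frame_indices(n, m)
    print(f"{mode:>6}-trigger (m={m:3}): frames {frames[0]} .. {frames[-1]}")
```

At 1 Mfps, for example, m = 50 corresponds to a post-trigger delay of 50 μs, with the remaining 50 frames covering the 50 μs before the trigger.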

3.3 A Difficult Case for Both Illumination and Trigger: Collapsing Beer Bubbles

Figure 3 presents a series of images showing how a beer bubble starts to collapse. One bubble, marked by a yellow circle, has a heptagonal boundary with 7 adjacent bubbles. Images (a) to (f) show that this heptagonal border is “melting” over time: the borderlines become wider and blurred. The “melting” takes place within 3 ms. It is nice to see such a process. (This series of images is one of the best among about 100 experiments.) But then, what happens afterward? I wished to see images beyond 100 frames.

In search of examples of the later phase, I found one movie that by chance started just after the melting (see Fig. 4). Note that the images in Fig. 4 do not directly follow those of Fig. 3; they were taken at a completely different time: the bubble in Fig. 4 has 9 adjacent bubbles (7 in Fig. 3). The camera starts with Fig. 4a, taking images just after melting, which corresponds to stage (f) of Fig. 3. Since the exact time elapsed since the start of the event in Fig. 4 cannot be measured, Fig. 4a is simply labeled “t = \( \tau \)”, with \( \tau \approx 3 \) ms. It looks as if the fences of 9 houses around a lake were sinking into an abyss. The surrounding bubbles are torn inward by the central collapsing bubble. This process takes another 3 ms. (Videos of the two series in Figs. 3 and 4 are available online; see Appendix.)

In carrying out the experiments to observe collapsing beer bubbles, we could not avoid two big problems: illumination and trigger. The first problem was our illumination system: our fibre optics caused many white dots in the images, visible in Figs. 3 and 4. They are due to light reflected from the surface of the foam. These random dots obscure the events. With more sophisticated illumination devices, the intensity of the reflected light could be reduced.

On the other hand, these dots can be useful. They clearly indicate where an event occurs: no event, no movement. Only the dots inside the yellow circle change their location and intensity, while the dots outside this circle remain unchanged over the 3 ms interval (see Fig. 3a–f). Thus, an illumination device should be selected not only for its intensity but also for what you want to observe.

The second, even more difficult, problem is how to trigger the camera at the right moment. Honestly speaking, obtaining images such as those in Figs. 3 and 4 is a matter of luck. First of all, we do not know which bubble will start to collapse. If we take a large field of view, some of the bubbles in it may collapse at some time while images are being acquired. But then each bubble is too small to show what happens at the border with adjacent bubbles. Therefore, the field of view has to be magnified. Then, of course, the probability of a collapse occurring in the smaller area becomes lower.

Even if a collapse luckily occurs in this field of view, there is no way to trigger the camera in time: the collapse happens spontaneously and provides no detectable signal. Can we wait for further luck? Let us see what kind of luck we need when we do not have an appropriate trigger.

Figure 5a illustrates how long image acquisition lasts, depending on the frame rate of the camera, when the number of consecutive frames is limited to 100. At 100 fps an image is taken every 10 ms, so the total acquisition time is 1000 ms (10 ms × 100 frames). At 1000 fps the total acquisition time is 100 ms, and at 10,000 fps it is reduced to 10 ms. Now let us assume that a bubble collapse occurs once every second within our field of view. Then, theoretically, this event is recorded with a probability of 100% at 100 fps. At 1000 fps the probability of a bubble collapse during the acquisition period is 10%, and at 10,000 fps it is only 1%.

The next assumption is that the event to be observed lasts 3 ms. Figure 5b shows how many frames correspond to 3 ms, depending on the frame rate. At 100 fps images are taken every 10 ms, so an event of 3 ms fits within a single frame interval. This means that among 100 images you obtain at best two consecutive images, one right before and one right after the event. At 1000 fps, 3 ms corresponds to 3 frames, and at 10,000 fps to 30 frames. If you need 30 frames to analyze the 3 ms event in detail, the frame rate of the camera has to be 10,000 fps. So the experiments have to be carried out with a success probability of only 1%.
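
The trade-off of Fig. 5 can be reproduced with a short Python sketch. It follows the text's simple estimate, in which the chance of catching a collapse is the acquisition time divided by the mean time between collapses (here 1 s), capped at 100%. The function names are hypothetical.

```python
def acquisition_time(n_frames: int, frame_rate_hz: float) -> float:
    """Total duration (s) of one recording of n_frames consecutive images."""
    return n_frames / frame_rate_hz


def capture_probability(n_frames: int, frame_rate_hz: float,
                        mean_interval_s: float) -> float:
    """Estimate of Fig. 5a: window length / time between events, capped at 1.
    Assumes one event every mean_interval_s, starting at a random phase."""
    return min(1.0, acquisition_time(n_frames, frame_rate_hz) / mean_interval_s)


def frames_per_event(event_duration_s: float, frame_rate_hz: float) -> float:
    """Number of frame intervals spanned by an event (Fig. 5b)."""
    return event_duration_s * frame_rate_hz


for fps in (100, 1_000, 10_000):
    window_ms = acquisition_time(100, fps) * 1e3
    p = capture_probability(100, fps, mean_interval_s=1.0)
    k = frames_per_event(3e-3, fps)
    print(f"{fps:>6} fps: window {window_ms:6.0f} ms, "
          f"P(capture) {p:5.0%}, event spans {k:4.1f} frames")
```

The sketch shows the conflict directly: the frame rate that gives 30 frames per event has the smallest chance of recording it. If collapses occurred at random (Poisson-distributed) times rather than once per second like clockwork, the capture probability for a window T would be 1 − exp(−T / 1 s). That gives about 63% for the 1 s window at 100 fps and nearly the same values as above at the higher frame rates.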

Images of Fig. 4 were taken at 32,000 fps. Was I really so lucky as to catch a collapse in the field of view within an acquisition period of about 3 ms?

I have to confess that I used some tricks to satisfy my curiosity. The bubbles had to be larger and collapse more readily, so that less magnification would be required and the probability of taking images at the right time would be higher. After several attempts I found that the best way is to add one drop of kitchen detergent to about 10 ml of beer and to blow bubbles into it. These bubbles often collapse when the container (a petri dish in this case) is slightly shaken. Knocking on the table on which the petri dish stands can trigger a collapse and, at the same time, trigger the camera acoustically. In this way I could record image series with a success rate of about 10%. And my initial curiosity was satisfied.

As a scientist, however, I am not yet satisfied with these results and procedures. To analyze such bubble collapses I have to carry out more systematic measurements, and I cannot rely entirely on luck in this endeavor. This is why I stopped working on this subject for a while. An image trigger could solve the problem: a trigger device that gives a signal when parts of the image start changing. Then I could resume my work on this subject…
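
One way such an image trigger could work is frame differencing: compare each new frame with the previous one and fire as soon as any small region changes strongly. The pure-Python sketch below is hypothetical throughout (tile size, threshold, function names) and is not a device described in this book. In practice the comparison would have to run at the camera's frame rate and feed a stop trigger. Comparing tiles rather than whole frames matters for local events such as a single collapsing bubble.

```python
from typing import List

Frame = List[List[int]]  # grayscale image: rows of pixel values (0-255)


def block_changes(prev: Frame, curr: Frame, block: int) -> List[float]:
    """Mean absolute pixel difference in each block x block tile."""
    h, w = len(curr), len(curr[0])
    scores = []
    for y0 in range(0, h, block):
        for x0 in range(0, w, block):
            total, count = 0, 0
            for y in range(y0, min(y0 + block, h)):
                for x in range(x0, min(x0 + block, w)):
                    total += abs(curr[y][x] - prev[y][x])
                    count += 1
            scores.append(total / count)
    return scores


def image_trigger(prev: Frame, curr: Frame, block: int = 4,
                  threshold: float = 20.0) -> bool:
    """Fire when any single tile changes by more than `threshold` grey
    levels on average, so a local event is not averaged away by the
    many tiles that stay still."""
    return max(block_changes(prev, curr, block)) > threshold


# Synthetic 8x8 example: one 4x4 tile brightens, the rest stays unchanged.
still = [[100] * 8 for _ in range(8)]
changed = [row[:] for row in still]
for y in range(4):
    for x in range(4, 8):
        changed[y][x] = 180
print(image_trigger(still, still))    # False
print(image_trigger(still, changed))  # True
```

In the example only one of the four tiles changes, by 80 grey levels. Averaged over the whole 8 × 8 frame the change is exactly 20 grey levels and would not exceed the threshold, so a global comparison would miss the event that the tile-wise comparison catches.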

4 About This Book

Following this introductory chapter, PART II “Pioneering Works on High-Speed Imaging” presents contributions by Lauterborn and Kurz and by Lajoinie et al. Lauterborn and Kurz describe their studies on bubbles and microbubbles alongside the development of high-speed imaging. The principles of various devices for capturing high-speed images developed since the 1960s are explained, especially in the research field of microbubbles. Notably, their work is not finished: they are now developing 3D holography. Lajoinie et al. write about the “Brandaris” camera and its applications. Here the rotating mirror technique and light-sensitive semiconductors are combined, and this combination achieves a high frame rate of up to 25 Mfps together with a high resolution of 500 × 292 pixels and up to 128 consecutive frames. The camera has one disadvantage: it is quite large (150 cm long) and heavy (140 kg). This camera and cameras based on the same principle are now often used for medical applications.

Two articles on light-sensitive semiconductors follow in PART III “Cameras with CCD/CMOS Sensors”. Light-sensitive semiconductors are at the heart of modern high-speed cameras. Two types are involved: the CCD (charge-coupled device) and the CMOS (complementary metal-oxide-semiconductor) sensor. Etoh and Nguyen report on the development of high-speed image sensors, especially a CCD with on-chip memory. Such newly developed CCD sensors make it possible to build handy-sized ultra high-speed cameras that achieve a high frame rate of 1 Mfps without sacrificing two other crucial specifications: a high resolution of 312 × 256 pixels and a hundred consecutive images. Kuroda and Sugawa describe their improvements of CMOS sensors with on-chip memory. A camera with the most recently developed CMOS sensor is extremely light-sensitive and can take 256 consecutive images at up to 10 Mfps even under daylight. The resolution is 400 × 250 pixels.

The theme of PART IV is “Shock Waves”. Kleine gives an overview of visualization techniques for shock waves. He explains the principles of various methods for visualizing transparent media: shadowgraphy, the schlieren technique and interferometry. He compares images taken with different methods and discusses their advantages and disadvantages. While Kleine mainly describes flows in air, Takayama shows the behavior of underwater shock waves: shock waves generated by ultrasound oscillations, in a shock tube, or by underwater explosions, as well as shock/bubble interaction, reflection of shock waves, and many other phenomena. For the visualization he uses holography and interferometry.

In materials research, high-speed cameras have become indispensable. There are three contributions in PART V “Materials Research”: Hild et al. on DIC (digital image correlation), Freitag et al. on laser processing, and Rack et al. on X-ray imaging. Hild et al. present analyses of the mechanical behavior of materials under stress (for example, deformation, damage or fracture) using DIC. Freitag et al. investigate laser processing such as laser welding and laser drilling of CFRP (carbon fiber reinforced plastics). Laser processing could overcome the difficulties of mechanically processing CFRP, which is used wherever a high strength-to-weight ratio and rigidity are required, such as in the aerospace and automotive fields. Rack et al. show how useful hard X-rays combined with high-speed imaging are for visualizing the interior of opaque materials. As application examples, observations of the dynamics of aqueous foam and of laser processing of polystyrene foam are shown.

One of the most important industrial applications of high-speed cameras is combustion research, which can contribute to sustainable and environmentally friendly energy use. The first article of PART VI “Combustion” is written by Aldén and Richter on high-speed laser visualization techniques in combustion research. They developed such techniques by combining a multi-YAG laser with a framing camera, or a high repetition rate laser and a high-power burst laser with CMOS cameras. With these techniques they report on the behavior of turbulent combustion. In the second article of PART VI, Kawahara presents combustion processes in car engines. He visualizes fuel injection, spray impingement on a wall, laser ignition processes, and an engine knocking cycle in order to understand what is going on inside a car engine.

PART VII “Explosions and Safety” is concerned with two subjects that contrast sharply in size: explosions in a free field and explosions of bubbles in a liquid. In order to protect buildings and infrastructure against explosions, Stolz et al. observe the behavior of flying fragments after explosions: their trajectories are calculated by tracking their launch conditions after the explosion. They also study the resistance of materials under highly dynamic loading, which certainly differs from that under gentle loading. Hieronymus observes shock-induced explosions of bubbles and the subsequent interaction of exploding bubbles. The influence of different parameters, such as the composition of the bubbles and the initial pressure, is analyzed. The results are important for assessing explosion risks in systems containing bubbly liquids.

The subject after explosive bubbles, presented in PART VIII, is “Droplets”. There are two contributions: one on a chemically inert droplet impacting on a solid surface, the other on a chemically active droplet impacting on a liquid surface. Using time-resolved interferometry, Langley et al. show in detail what happens at the moment a droplet contacts a solid surface. They discuss the formation and evolution of an air layer between the droplet and the solid surface, compression of the gas, and other newly found phenomena. In contrast, for Müller the droplet is not the object to be observed but a tool to initiate chemical reactions (observed as color changes of a pH indicator, as well as the Belousov-Zhabotinsky reaction). He finds spatial inhomogeneity and instability at the early stage of the color change of the pH indicator bromothymol blue. Furthermore, he suggests that such color reactions can be useful for studying drop behavior.

PART IX “Microbubbles and Medical Applications” is concerned with microbubbles and their medical applications, with three contributions on the physics (Garbin), technical characterization (Stride et al.), and therapeutic applications (Kudo) of coated and uncoated microbubbles. Garbin describes the dynamics of coated microbubbles in ultrasound, including their stability, behavior in ultrasound, optical trapping, buckling and expulsion, and shape oscillations. Stride et al. present a characterization of functionalized microbubbles, which could be applied to ultrasound imaging and targeted drug delivery. They use three complementary methods for characterization (ultra high-speed video microscopy, laser scattering and acoustic scattering/attenuation) and compare the characteristics of coated and uncoated microbubbles. Kudo observes cavitation bubbles generated by an artificial heart valve, which might cause valve failure and valve thrombosis. On the other hand, cavitation bubbles are used in surgery, non-invasive therapy and drug delivery. High-speed imaging makes it possible to visualize cavitation activity in medical fields, so that its effects can be minimized or maximized as required.

Some readers may wonder why “shock waves” appear not only in PART IV “Shock Waves”, and “bubbles” not only in PART IX “Microbubbles and Medical Applications”, but almost everywhere throughout this book. In fact, many subjects are related to each other: for example, when a shock wave interacts with a bubble, the bubble may explode and create another shock wave.

Since each article of this volume has its own theme, each contribution has been placed where it fits best thematically. We think that many readers will learn about new ideas from the chapters of this book and start looking for future challenges in wider fields. They may see what we have not seen before, thereby enlarging the scope of modern science and technology.

References

[1] J. Brown, Audio-visual palimpsests: Resynchronizing silent films with ‘special’ music, in The Oxford Handbook of Film Music Studies, ed. by D. Neumeyer (Oxford University Press, Oxford, 2014), p. 588

[2] T. Takahashi, Y. Tsukahara, Pocket Monster incident and low luminance visual stimuli: Special reference to deep red flicker stimulation. Pediatr. Int. 40, 631–637 (1998)

[3] E. Muybridge, A.V. Mozley, Human and Animal Locomotion (Dover, New York, 1887)

[4] M. Leslie, The man who stopped time. Stanford Magazine (May–June 2001)

[5] P. Prodger, Time Stands Still: Muybridge and the Instantaneous Photography Movement (Oxford University Press and Stanford University, Stanford, 2003)

[6] M. Osaki, T. Ohkawa, T. Tsumura, Temporal resolution of hearing: Detection of temporal gaps as a function of frequency ratio and auditory temporal processing channels. Japan Soc. Physiol. Anthropol. 3, 25–30 (1998)

[7] T.G. Etoh, D. Poggemann, G. Kreider, H. Mutoh, A.J.P. Theuwissen, A. Ruckelshausen, Y. Kondo, H. Maruno, K. Takubo, H. Soya, K. Takehara, T. Okinaka, Y. Takano, An image sensor which captures 100 consecutive frames at 1,000,000 frames/s. IEEE Trans. Electron Devices 50, 144–151 (2003)

1 Electronic supplementary material

Below is the link to the electronic supplementary material.

421713_1_En_1_MOESM1_ESM.avi

Beer bubble collapse observed at 16,000 fps. White dots are random reflections of the illumination light. The black semicircle on the right is the tip of an optical fiber used for illumination (MPG 8087 kb)

Copyright information

© 2018 Springer International Publishing AG

About this chapter

Cite this chapter

Tsuji, K. (2018). History of Curiosity. In: Tsuji, K. (eds) The Micro-World Observed by Ultra High-Speed Cameras. Springer, Cham. https://doi.org/10.1007/978-3-319-61491-5_1

DOI: https://doi.org/10.1007/978-3-319-61491-5_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-61490-8

Online ISBN: 978-3-319-61491-5

eBook Packages: Engineering, Engineering (R0)